🎲 [ICLR 2025] DICE: Data Influence Cascade in Decentralized Learning

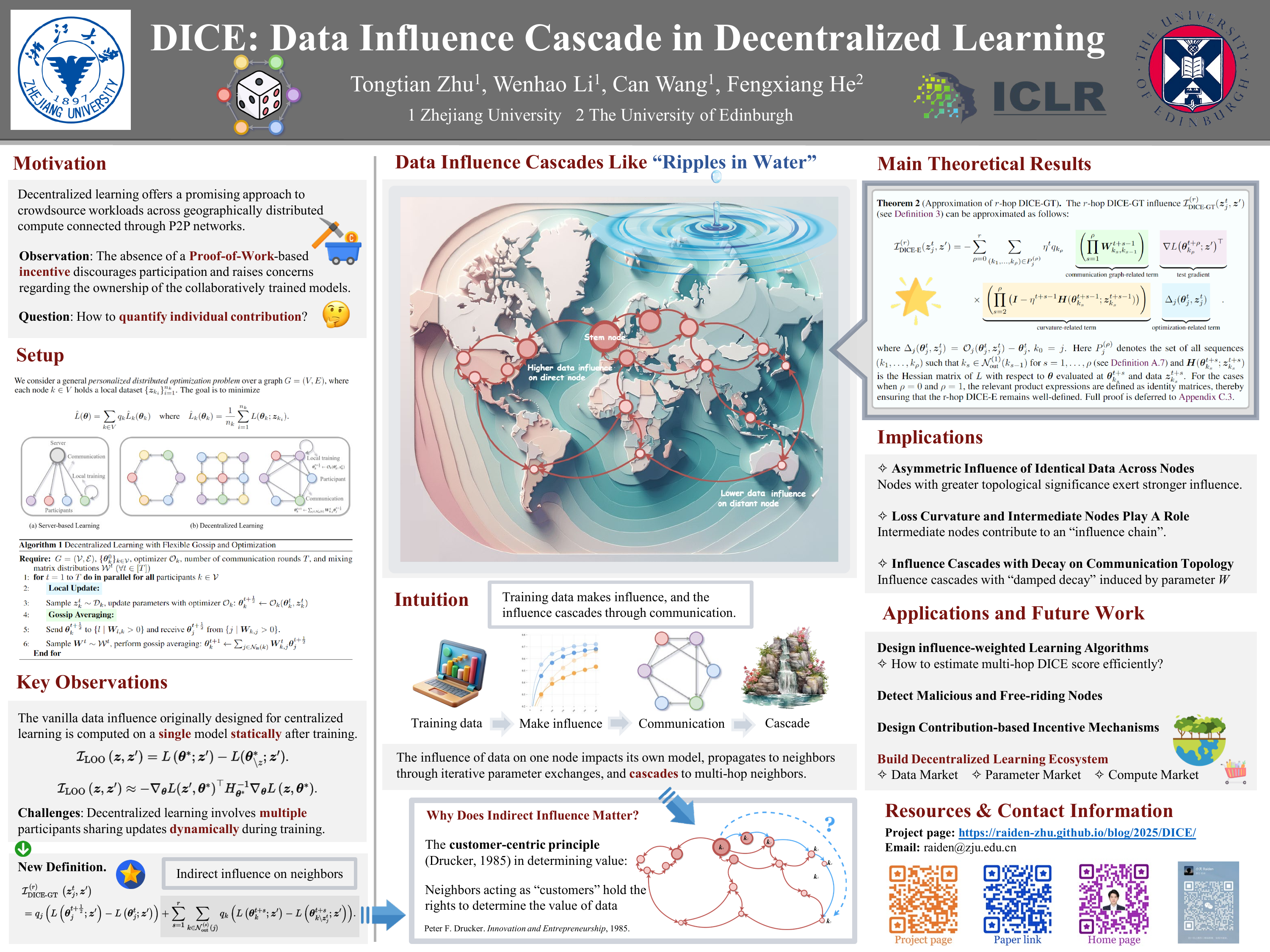

TLDR: We introduce DICE, the first framework for measuring data influence in fully decentralized learning.

Tags: Data_Influence, Decentralized_Learning

Authors: Tongtian Zhu¹, Wenhao Li¹, Can Wang¹, Fengxiang He²

¹ Zhejiang University, ² The University of Edinburgh

📄 OpenReview • 💻 Code • 📚 arXiv • 🔗 Twitter • 🖼️ Poster • 📊 Slides • 🎥 Video (Chinese)

Updates in progress. More coming soon! 😉

🗓️ 2025-07-17 — Updated main results

Main Results

Theorem (Approximation of r-hop DICE-GT)

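The r-hop DICE-GT influence can be approximated by the r-hop DICE-E estimator; the full expression is given in the paper.

The expression ranges over the set of all node sequences that satisfy a per-hop constraint, and it involves the Hessian matrix of the loss with respect to the model parameters, evaluated at the parameters and data of the nodes along each sequence.

In the edge cases where a product in the expression would otherwise be undefined, the relevant product expressions are defined as identity matrices, thereby ensuring that the r-hop DICE-E remains well-defined.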

Key Insights from DICE

Our theory uncovers the intricate interplay of factors that shape data influence in decentralized learning:

1. Asymmetric Influence and Topological Importance: The influence of identical data is not uniform across the network. Instead, nodes with greater topological significance exert stronger influence.

2. The Role of Intermediate Nodes and Loss Landscape: Intermediate nodes actively contribute to an "influence chain". The local loss landscape of these nodes' models also shapes the influence as it propagates through the network.

3. Influence Cascades with Damped Decay: Data influence cascades with a "damped decay" induced by the mixing parameter W. This decay, which can be exponential in the number of hops, keeps influence "localized".
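The topological part of these insights can be illustrated with a small toy sketch. It is not the authors' code and it is not the DICE estimator: it isolates only the communication-graph factor (products of mixing weights along node sequences) and ignores the gradient and curvature terms entirely. The helper names (`hop_weights`, `ring_matrix`, `star_matrix`), the two topologies, the fixed-in-time W, and the convention that `W[k][j]` is the weight node k places on node j's model are all assumptions made for illustration.

```python
def mat_vec(W, v):
    """Return W @ v for a square list-of-lists matrix W."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def hop_weights(W, source, hops):
    """Mixing weight carried from `source` to every node in exactly `hops` steps.

    Entry k is the sum, over all node sequences source -> ... -> k of that
    length, of the product of W[next][prev] along the sequence. This equals
    column `source` of the matrix power W**hops.
    """
    v = [0.0] * len(W)
    v[source] = 1.0
    for _ in range(hops):
        v = mat_vec(W, v)
    return v


def ring_matrix(n, self_weight=0.5):
    """Hypothetical doubly stochastic mixing matrix for a ring of n >= 3 nodes."""
    side = (1.0 - self_weight) / 2.0
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        W[i][i] = self_weight
        W[i][(i - 1) % n] = side
        W[i][(i + 1) % n] = side
    return W


def star_matrix(num_leaves):
    """Hypothetical row-stochastic matrix for a star: node 0 is the hub.

    Every node averages uniformly over itself and its neighbors.
    """
    n = num_leaves + 1
    W = [[0.0] * n for _ in range(n)]
    for j in range(n):
        W[0][j] = 1.0 / n          # hub averages over all nodes
    for leaf in range(1, n):
        W[leaf][leaf] = 0.5        # leaf averages over itself and the hub
        W[leaf][0] = 0.5
    return W


# Damped decay: weight reaching the node r steps away on an 8-node ring.
ring = ring_matrix(8)
ring_decay = [hop_weights(ring, source=0, hops=r)[r] for r in range(1, 4)]

# Asymmetry: total one-hop weight sent out by the hub versus by a leaf.
star = star_matrix(4)
hub_reach = sum(hop_weights(star, source=0, hops=1))
leaf_reach = sum(hop_weights(star, source=1, hops=1))

print("ring weight at distance r = 1, 2, 3:", ring_decay)
print("star one-hop reach (hub, leaf):", hub_reach, leaf_reach)
```

In this toy setup the ring weights shrink by a factor of 0.25 per hop, which is the geometric damping that insight 3 describes, and the hub of the star passes on roughly three times as much one-hop weight as a leaf, which mirrors insight 1. How these weights interact with gradients and Hessians in the actual estimator is outside the scope of the sketch.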

Citation

Cite Our Paper 😀

If you find our work insightful, we would greatly appreciate it if you could cite our paper.

@inproceedings{zhu2025dice,
  title="{DICE: Data Influence Cascade in Decentralized Learning}",
  author="Tongtian Zhu and Wenhao Li and Can Wang and Fengxiang He",
  booktitle="The Thirteenth International Conference on Learning Representations",
  year="2025",
  url="https://openreview.net/forum?id=2TIYkqieKw"
}