dia-gguf

- run it with gguf-connector; simply execute the command below in console/terminal

ggc s6

GGUF file(s) available. Select which one to use:

- dia-1.6b-iq3_xxs.gguf

- dia-1.6b-iq4_nl.gguf

- dia-1.6b-iq4_xs.gguf

Enter your choice (1 to 3): _

- opt for a gguf file in your current directory to interact with; nothing else is needed
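The selection step above can be pictured with a short Python sketch. It is not gguf-connector's actual code; the function names `list_gguf_files`, `format_menu` and `pick` are hypothetical, and the numbered menu layout is an assumption based on the "1 to 3" prompt shown above.

```python
from pathlib import Path


def list_gguf_files(directory="."):
    """Return the sorted names of *.gguf files found directly in `directory`."""
    return sorted(p.name for p in Path(directory).glob("*.gguf") if p.is_file())


def format_menu(files):
    """Build a numbered menu similar to the prompt shown above (layout assumed)."""
    lines = ["GGUF file(s) available. Select which one to use:"]
    lines += [f"{i}. {name}" for i, name in enumerate(files, start=1)]
    lines.append(f"Enter your choice (1 to {len(files)}): ")
    return "\n".join(lines)


def pick(files, choice):
    """Map a 1-based choice typed by the user to a file name, or None if invalid."""
    try:
        index = int(choice.strip())
    except ValueError:
        return None
    if 1 <= index <= len(files):
        return files[index - 1]
    return None
```

For example, `pick(list_gguf_files(), "2")` would return the second GGUF file in the current directory, or `None` if fewer than two are present.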

| Prompt | Audio Sample |

|---|---|

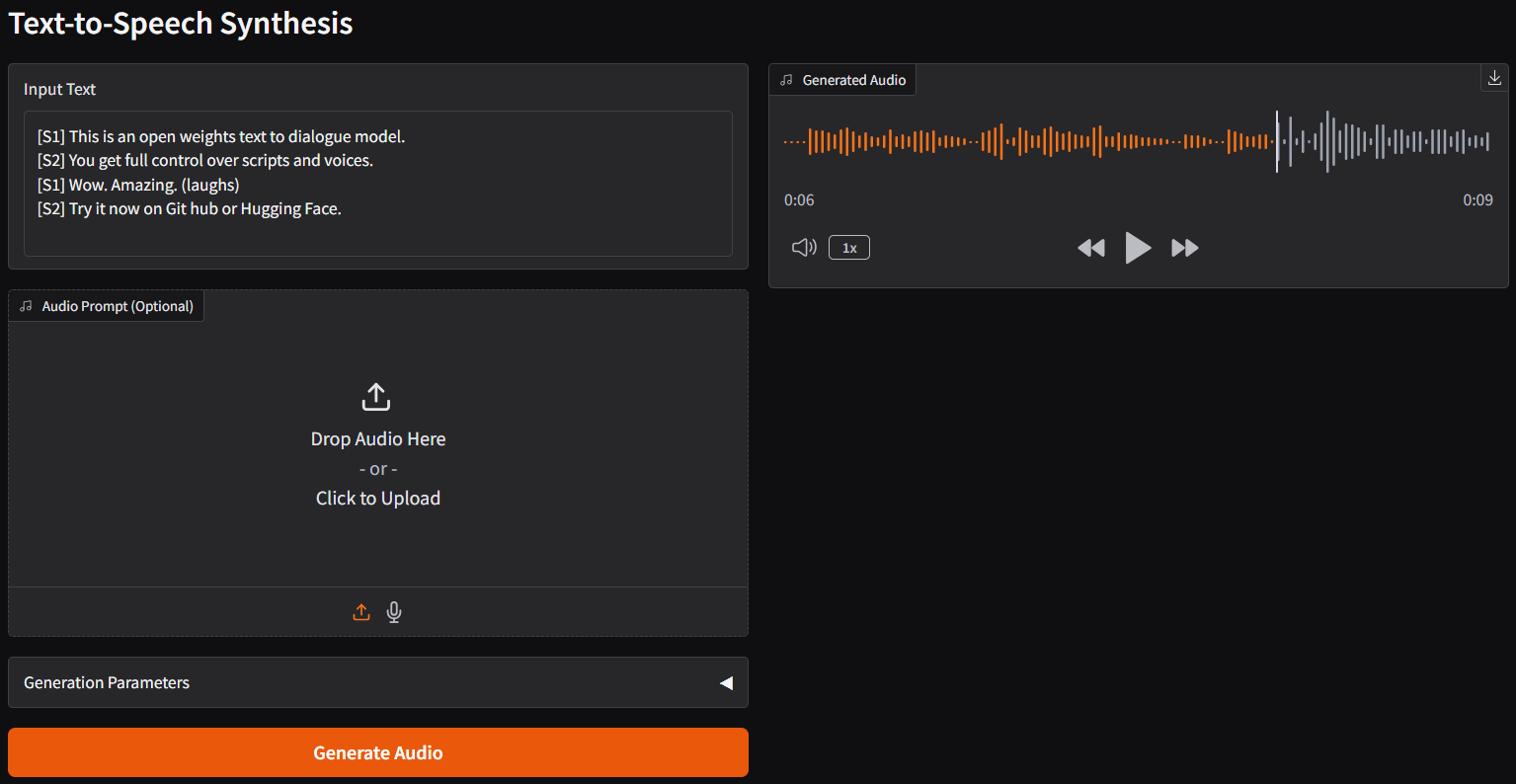

[S1] This is an open weights text to dialogue model.[S2] You get full control over scripts and voices.[S1] Wow. Amazing. (laughs)[S2] Try it now on Git hub or Hugging Face. |

🎧 dia-sample-1 |

[S1] Hey Connector, why your appearance looks so stupid?[S2] Oh, really? maybe I ate too much smart beans.[S1] Wow. Amazing. (laughs)[S2] Let's go to get some more smart beans and you will become stupid as well. |

🎧 dia-sample-2 |
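The prompts in the table mark speakers with `[S1]` / `[S2]` tags and non-verbal cues in parentheses, e.g. `(laughs)`. As a rough illustration of that layout, the sketch below splits a script into speaker turns. It only inspects the text; it is not part of Dia or gguf-connector, and the names `split_turns` and `find_cues` are hypothetical.

```python
import re

# A turn starts at a [S<number>] tag and runs until the next tag or the end of the script.
TURN_PATTERN = re.compile(r"\[(S\d+)\]\s*(.*?)(?=\[S\d+\]|$)", re.DOTALL)

# Non-verbal cues are written in parentheses, e.g. (laughs).
CUE_PATTERN = re.compile(r"\(([^()]+)\)")


def split_turns(script):
    """Return a list of (speaker, text) pairs in the order they appear."""
    return [(speaker, text.strip()) for speaker, text in TURN_PATTERN.findall(script)]


def find_cues(text):
    """Return the parenthesised cues found in one turn."""
    return CUE_PATTERN.findall(text)


script = "[S1] Wow. Amazing. (laughs)[S2] Try it now on Git hub or Hugging Face."
for speaker, text in split_turns(script):
    print(speaker, "|", text, "|", find_cues(text))
```

A check like this can help catch a missing or mistyped speaker tag before a script is sent to the model.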

connector s2 (alternative)

ggc s2

- note: unlike ggc s6, with ggc s2 the model file(s) will be pulled to local cache automatically during the first launch (if you don't have a gguf file in your current directory, it will shift to s2 mode); after that, you can opt to run it entirely offline, i.e., from the local URL http://127.0.0.1:7860 with the lazy webui
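To confirm that the local web UI is reachable after launch, a small check along these lines may help. It is only a sketch using the Python standard library; the URL is the one from the note above, while the function name `webui_is_up` and the timeout value are assumptions.

```python
import urllib.error
import urllib.request


def webui_is_up(url="http://127.0.0.1:7860", timeout=2.0):
    """Return True if something answers HTTP requests at `url`, else False."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied, just with an error status, so it is running.
        return True
    except OSError:
        # Connection refused, timeout, or similar: nothing is listening (yet).
        return False


print("web UI reachable:", webui_is_up())
```

Because the request goes to 127.0.0.1 only, the check works without an internet connection.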

reference


- base model: nari-labs/Dia-1.6B