| modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (list) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |
|---|---|---|---|---|---|---|---|---|---|

trungpq/rlcc-new-taste-upsample_replacement-absa-None

|

trungpq

| 2025-09-17T04:18:14 | 7 | 0 |

transformers

|

[

"transformers",

"safetensors",

"bert_with_absa",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

] | null | 2025-09-10T16:37:21 |

---
library_name: transformers
tags:
- generated_from_trainer
metrics:
- accuracy
model-index:
- name: rlcc-new-taste-upsample_replacement-absa-None
  results: []
---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# rlcc-new-taste-upsample_replacement-absa-None

This model is a fine-tuned version of an unspecified base model on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5802

- Accuracy: 0.5726

- F1 Macro: 0.5792

- Precision Macro: 0.6133

- Recall Macro: 0.5739

- F1 Micro: 0.5726

- Precision Micro: 0.5726

- Recall Micro: 0.5726

- Total Tf: [209, 156, 574, 156]
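The card does not explain the `Total Tf` entry. One reading that is consistent with the reported numbers is that it holds pooled one-vs-rest counts in the order `[TP, FP, TN, FN]`. That ordering is an assumption, not something the card states. Under it, the micro-averaged metrics can be recomputed as a sanity check:

```python
# Assumption: Total Tf = [TP, FP, TN, FN], pooled over all classes (one-vs-rest).
# The card does not document this ordering; the function name is illustrative.

def micro_metrics(total_tf):
    tp, fp, tn, fn = total_tf
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

precision, recall, f1 = micro_metrics([209, 156, 574, 156])
print(round(precision, 4), round(recall, 4), round(f1, 4))
```

With these counts all three values round to 0.5726, which matches the micro-averaged figures listed above and supports (but does not prove) the assumed ordering.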

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 46

- num_epochs: 25
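To make the `linear` scheduler and the 46 warmup steps concrete, here is a minimal sketch of a linear warmup followed by linear decay. It assumes 47 optimizer steps per epoch (the step count logged at epoch 1.0 in the results table) and that all 25 configured epochs run, giving 1175 total steps. The logged run stops at epoch 11, so the true total may differ.

```python
# Sketch of a linear schedule with warmup, using the card's hyperparameters.
# Assumption: 47 steps per epoch and 25 epochs, so 1175 total steps.

BASE_LR = 2e-5
WARMUP_STEPS = 46
TOTAL_STEPS = 47 * 25

def linear_lr(step, base_lr=BASE_LR, warmup=WARMUP_STEPS, total=TOTAL_STEPS):
    """Learning rate after `step` optimizer updates."""
    if step < warmup:
        # Ramp up linearly from 0 to base_lr.
        return base_lr * step / warmup
    # Decay linearly from base_lr to 0 at the final step.
    return base_lr * max(0.0, (total - step) / (total - warmup))

for step in (0, 23, 46, 517, 1175):
    print(step, linear_lr(step))
```

The rate peaks at `2e-05` once warmup ends (step 46, just before the first epoch finishes) and falls to zero at the last scheduled step.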

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 Macro | Precision Macro | Recall Macro | F1 Micro | Precision Micro | Recall Micro | Total Tf |
|:-------------:|:-----:|:----:|:---------------:|:--------:|:--------:|:---------------:|:------------:|:--------:|:---------------:|:------------:|:--------------------:|
| 1.0937 | 1.0 | 47 | 1.0853 | 0.4 | 0.3799 | 0.4504 | 0.4061 | 0.4000 | 0.4 | 0.4 | [146, 219, 511, 219] |
| 0.9512 | 2.0 | 94 | 0.9563 | 0.5178 | 0.4848 | 0.4862 | 0.5114 | 0.5178 | 0.5178 | 0.5178 | [189, 176, 554, 176] |
| 0.7605 | 3.0 | 141 | 0.9415 | 0.5671 | 0.5573 | 0.5636 | 0.5636 | 0.5671 | 0.5671 | 0.5671 | [207, 158, 572, 158] |
| 0.5771 | 4.0 | 188 | 1.0533 | 0.5315 | 0.5234 | 0.5237 | 0.5272 | 0.5315 | 0.5315 | 0.5315 | [194, 171, 559, 171] |
| 0.4679 | 5.0 | 235 | 1.0762 | 0.5753 | 0.5674 | 0.5674 | 0.5719 | 0.5753 | 0.5753 | 0.5753 | [210, 155, 575, 155] |
| 0.3613 | 6.0 | 282 | 1.1967 | 0.5726 | 0.5758 | 0.5866 | 0.5716 | 0.5726 | 0.5726 | 0.5726 | [209, 156, 574, 156] |
| 0.2918 | 7.0 | 329 | 1.2788 | 0.5753 | 0.5789 | 0.5899 | 0.5744 | 0.5753 | 0.5753 | 0.5753 | [210, 155, 575, 155] |
| 0.2011 | 8.0 | 376 | 1.3095 | 0.5753 | 0.5777 | 0.5848 | 0.5737 | 0.5753 | 0.5753 | 0.5753 | [210, 155, 575, 155] |
| 0.1837 | 9.0 | 423 | 1.3831 | 0.5781 | 0.5795 | 0.5851 | 0.5759 | 0.5781 | 0.5781 | 0.5781 | [211, 154, 576, 154] |
| 0.1268 | 10.0 | 470 | 1.5099 | 0.5671 | 0.5709 | 0.5815 | 0.5662 | 0.5671 | 0.5671 | 0.5671 | [207, 158, 572, 158] |
| 0.1098 | 11.0 | 517 | 1.5802 | 0.5726 | 0.5792 | 0.6133 | 0.5739 | 0.5726 | 0.5726 | 0.5726 | [209, 156, 574, 156] |
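In the table, training loss falls steadily while validation loss rises after epoch 3, a pattern usually associated with overfitting. As a small illustration (the card does not describe any checkpoint-selection step), the sketch below picks the epochs with the lowest validation loss and the highest accuracy from the logged values:

```python
# (epoch, training loss, validation loss, accuracy) copied from the results table.
rows = [
    (1, 1.0937, 1.0853, 0.4000),
    (2, 0.9512, 0.9563, 0.5178),
    (3, 0.7605, 0.9415, 0.5671),
    (4, 0.5771, 1.0533, 0.5315),
    (5, 0.4679, 1.0762, 0.5753),
    (6, 0.3613, 1.1967, 0.5726),
    (7, 0.2918, 1.2788, 0.5753),
    (8, 0.2011, 1.3095, 0.5753),
    (9, 0.1837, 1.3831, 0.5781),
    (10, 0.1268, 1.5099, 0.5671),
    (11, 0.1098, 1.5802, 0.5726),
]

best_loss = min(rows, key=lambda r: r[2])  # lowest validation loss
best_acc = max(rows, key=lambda r: r[3])   # highest accuracy
print("lowest validation loss: epoch", best_loss[0])
print("highest accuracy: epoch", best_acc[0])
```

The two criteria disagree here (epoch 3 versus epoch 9), and the headline results at the top of the card correspond to the final logged epoch rather than to either of them.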

### Framework versions

- Transformers 4.52.4

- Pytorch 2.6.0+cu124

- Datasets 3.6.0

- Tokenizers 0.21.2

|

sombochea/qwen2.5-7b-vl-grpo

|

sombochea

| 2025-09-17T03:56:19 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"text-generation-inference",

"unsloth",

"qwen2_5_vl",

"trl",

"en",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2025-09-17T03:55:56 |

---

base_model: unsloth/qwen2.5-vl-7b-instruct-unsloth-bnb-4bit

tags:

- text-generation-inference

- transformers

- unsloth

- qwen2_5_vl

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** sombochea

- **License:** apache-2.0

- **Finetuned from model:** unsloth/qwen2.5-vl-7b-instruct-unsloth-bnb-4bit

This qwen2_5_vl model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

darturi/Qwen2.5-7B-Instruct_bad-medical-advice_mlp.gate_proj_theta_0

|

darturi

| 2025-09-17T02:34:11 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-09-17T02:34:02 |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

hdnfnfn/blockassist-bc-noisy_elusive_grouse_1758073236

|

hdnfnfn

| 2025-09-17T01:40:41 | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"noisy elusive grouse",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-17T01:40:37 |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- noisy elusive grouse

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

1038lab/pixai-tagger

|

1038lab

| 2025-09-17T01:33:42 | 0 | 0 | null |

[

"multi-label",

"anime",

"danbooru",

"safetensors",

"image-classification",

"license:apache-2.0",

"region:us"

] |

image-classification

| 2025-09-17T00:38:51 |

---

license: apache-2.0

pipeline_tag: image-classification

tags:

- multi-label

- anime

- danbooru

- safetensors

---

ℹ️ This is a **safetensors + tag JSON version** of the original model [pixai-labs/pixai-tagger-v0.9](https://huggingface.co/pixai-labs/pixai-tagger-v0.9).

🔄 Converted by [1038lab](https://huggingface.co/1038lab).

📦 No changes were made to model weights or logic — only converted `.pth` → `.safetensors` and bundled with `tags_v0.9_13k.json` for convenience.

🧠 Full credits and model training go to PixAI Labs.

---

<p>

<img src="https://huggingface.co/pixai-labs/pixai-tagger-v0.9/resolve/main/banner_09_cropped.jpg" style="height:240px;" />

<a href="https://huggingface.co/pixai-labs/pixai-tagger-v0.9"><strong>Original Model</strong></a> ·

<a href="#quickstart"><strong>Quickstart</strong></a> ·

<a href="#training-notes"><strong>Training Notes</strong></a> ·

<a href="#credits"><strong>Credits</strong></a>

</p>

---

# PixAI Tagger v0.9

A practical anime **multi-label tagger**. Not trying to win benchmarks; trying to be useful.

**High recall**, updated **character coverage**, trained on a fresh Danbooru snapshot (2025-01).

We’ll keep shipping: **v1.0** (with updated tags) is next.

> TL;DR

>

> - ~**13.5k** Danbooru-style tags (**general**, **character**, **copyright**)

> - Headline: strong **character** performance; recall-leaning defaults

> - Built for search, dataset curation, caption assistance, and text-to-image conditioning

---

## What it is (in one breath)

`pixai-tagger-v0.9` is a multi-label image classifier for anime images. It predicts Danbooru-style tags and aims to **find more of the right stuff** (recall) so you can filter later. We continued training the **classification head** of EVA02 (from WD v3) on a newer dataset, and used **embedding-space MixUp** to help calibration.

- **Last trained:** 2025-04

- **Data snapshot:** Danbooru IDs 1–8,600,750 (2025-01)

- **Finetuned from:** `SmilingWolf/wd-eva02-large-tagger-v3` (encoder frozen)

- **License (weights):** Apache 2.0 *(Note: Danbooru content has its own licenses.)*

---

## Why you might care

- **Newer data.** Catches more recent IPs/characters.

- **Recall-first defaults.** Good for search and curation; dial thresholds for precision.

- **Character focus.** We spent time here; it shows up in evals.

- **Simple to run.** Works as an endpoint or locally; small set of knobs.

---

## Quickstart

**Recommended defaults (balanced):**

- `top_k = 128`

- `threshold_general = 0.30`

- `threshold_character = 0.75`

**Coverage preset (recall-heavier):** `threshold_general = 0.10` (expect more false positives)
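As a rough illustration of how these knobs interact, the sketch below filters tag scores by a per-category threshold and then keeps the `top_k` highest. The function name, the input layout, the made-up scores, and the order of operations (threshold first, then top-k) are all assumptions for illustration. The actual endpoint handler may behave differently.

```python
# Hypothetical post-processing sketch; not the model's actual handler.
def select_tags(scores, top_k=128, threshold_general=0.30, threshold_character=0.75):
    """scores maps tag -> (category, probability).

    Only the 'general' and 'character' categories are handled, because
    those are the two thresholds the card names.
    """
    thresholds = {"general": threshold_general, "character": threshold_character}
    kept = [
        (tag, prob)
        for tag, (category, prob) in scores.items()
        if prob >= thresholds[category]
    ]
    # Highest-probability tags first, then truncate to top_k.
    kept.sort(key=lambda item: item[1], reverse=True)
    return kept[:top_k]

# Made-up scores, purely for demonstration.
example = {
    "1girl": ("general", 0.98),
    "smile": ("general", 0.22),
    "hatsune_miku": ("character", 0.81),
    "kagamine_rin": ("character", 0.40),
}
print(select_tags(example))                          # balanced defaults
print(select_tags(example, threshold_general=0.10))  # coverage preset
```

With the balanced defaults the low-scoring `smile` tag is dropped. Lowering `threshold_general` to 0.10 lets it through, which is the recall-for-precision trade the coverage preset describes.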

### 1) Inference Endpoint

Deploy as an HF Inference Endpoint and test with the following command:

```bash
# Replace with your own endpoint URL
curl "https://YOUR_ENDPOINT_URL.huggingface.cloud" \
  -X POST \
  -H "Accept: application/json" \
  -H "Content-Type: application/json" \
  -d '{
    "inputs": {"url": "https://your.cdn/image.jpg"},
    "parameters": {
      "top_k": 128,
      "threshold_general": 0.10,
      "threshold_character": 0.75
    }
  }'
```

|

mekpro/whisper-large-v3-250916

|

mekpro

| 2025-09-17T01:20:04 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"whisper",

"automatic-speech-recognition",

"text-generation-inference",

"unsloth",

"trl",

"en",

"base_model:mekpro/whisper-large-v3-250911",

"base_model:finetune:mekpro/whisper-large-v3-250911",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2025-09-17T01:18:33 |

---

base_model: mekpro/whisper-large-v3-250911

tags:

- text-generation-inference

- transformers

- unsloth

- whisper

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** mekpro

- **License:** apache-2.0

- **Finetuned from model:** mekpro/whisper-large-v3-250911

This whisper model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

vonmises69/Affine-5G9XPx652tLC4GuWzHytWvj6mJm4YjsXcNucMuDG1ShFUZNS

|

vonmises69

| 2025-09-17T00:06:02 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gpt_oss",

"text-generation",

"vllm",

"conversational",

"arxiv:2508.10925",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"8-bit",

"mxfp4",

"region:us"

] |

text-generation

| 2025-09-17T00:06:02 |

---

license: apache-2.0

pipeline_tag: text-generation

library_name: transformers

tags:

- vllm

---

<p align="center">

<img alt="gpt-oss-120b" src="https://raw.githubusercontent.com/openai/gpt-oss/main/docs/gpt-oss-120b.svg">

</p>

<p align="center">

<a href="https://gpt-oss.com"><strong>Try gpt-oss-120b</strong></a> ·

<a href="https://cookbook.openai.com/topic/gpt-oss"><strong>Guides</strong></a> ·

<a href="https://arxiv.org/abs/2508.10925"><strong>Model card</strong></a> ·

<a href="https://openai.com/index/introducing-gpt-oss/"><strong>OpenAI blog</strong></a>

</p>

<br>

Welcome to the gpt-oss series, [OpenAI’s open-weight models](https://openai.com/open-models) designed for powerful reasoning, agentic tasks, and versatile developer use cases.

We’re releasing two flavors of these open models:

- `gpt-oss-120b` — for production, general purpose, high reasoning use cases that fit into a single 80GB GPU (like NVIDIA H100 or AMD MI300X) (117B parameters with 5.1B active parameters)

- `gpt-oss-20b` — for lower latency, and local or specialized use cases (21B parameters with 3.6B active parameters)

Both models were trained on our [harmony response format](https://github.com/openai/harmony) and should only be used with the harmony format, as they will not work correctly otherwise.

> [!NOTE]

> This model card is dedicated to the larger `gpt-oss-120b` model. Check out [`gpt-oss-20b`](https://huggingface.co/openai/gpt-oss-20b) for the smaller model.

# Highlights

* **Permissive Apache 2.0 license:** Build freely without copyleft restrictions or patent risk—ideal for experimentation, customization, and commercial deployment.

* **Configurable reasoning effort:** Easily adjust the reasoning effort (low, medium, high) based on your specific use case and latency needs.

* **Full chain-of-thought:** Gain complete access to the model’s reasoning process, facilitating easier debugging and increased trust in outputs. It’s not intended to be shown to end users.

* **Fine-tunable:** Fully customize models to your specific use case through parameter fine-tuning.

* **Agentic capabilities:** Use the models’ native capabilities for function calling, [web browsing](https://github.com/openai/gpt-oss/tree/main?tab=readme-ov-file#browser), [Python code execution](https://github.com/openai/gpt-oss/tree/main?tab=readme-ov-file#python), and Structured Outputs.

* **MXFP4 quantization:** The models were post-trained with MXFP4 quantization of the MoE weights, making `gpt-oss-120b` run on a single 80GB GPU (like NVIDIA H100 or AMD MI300X) and the `gpt-oss-20b` model run within 16GB of memory. All evals were performed with the same MXFP4 quantization.

---

# Inference examples

## Transformers

You can use `gpt-oss-120b` and `gpt-oss-20b` with Transformers. If you use the Transformers chat template, it will automatically apply the [harmony response format](https://github.com/openai/harmony). If you use `model.generate` directly, you need to apply the harmony format manually using the chat template or use our [openai-harmony](https://github.com/openai/harmony) package.

To get started, install the necessary dependencies to set up your environment:

```

pip install -U transformers kernels torch

```

Once set up, you can run the model with the snippet below:

```py
from transformers import pipeline
import torch

model_id = "openai/gpt-oss-120b"

pipe = pipeline(
    "text-generation",
    model=model_id,
    torch_dtype="auto",
    device_map="auto",
)

messages = [
    {"role": "user", "content": "Explain quantum mechanics clearly and concisely."},
]

outputs = pipe(
    messages,
    max_new_tokens=256,
)
print(outputs[0]["generated_text"][-1])
```

Alternatively, you can run the model via [`Transformers Serve`](https://huggingface.co/docs/transformers/main/serving) to spin up an OpenAI-compatible webserver:

```

transformers serve

transformers chat localhost:8000 --model-name-or-path openai/gpt-oss-120b

```

[Learn more about how to use gpt-oss with Transformers.](https://cookbook.openai.com/articles/gpt-oss/run-transformers)

## vLLM

vLLM recommends using [uv](https://docs.astral.sh/uv/) for Python dependency management. You can use vLLM to spin up an OpenAI-compatible webserver. The following command will automatically download the model and start the server.

```bash
uv pip install --pre vllm==0.10.1+gptoss \
    --extra-index-url https://wheels.vllm.ai/gpt-oss/ \
    --extra-index-url https://download.pytorch.org/whl/nightly/cu128 \
    --index-strategy unsafe-best-match

vllm serve openai/gpt-oss-120b
```

[Learn more about how to use gpt-oss with vLLM.](https://cookbook.openai.com/articles/gpt-oss/run-vllm)
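Once an OpenAI-compatible server is running, it can be queried over HTTP. The following is a minimal sketch using only the Python standard library. It assumes the server listens on `localhost:8000` and exposes the OpenAI-style `/v1/chat/completions` route, and the helper names `build_chat_request` and `ask` are hypothetical. Adjust the URL and model name for your deployment.

```python
import json
import urllib.request

# Assumptions: server at localhost:8000 with an OpenAI-style
# /v1/chat/completions route; neither is taken from the card itself.
def build_chat_request(prompt, model="openai/gpt-oss-120b",
                       base_url="http://localhost:8000"):
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt):
    """Send the request and return the reply text; requires a running server."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

# Building the request does not touch the network; calling ask() does.
request = build_chat_request("Explain quantum mechanics clearly and concisely.")
print(request.full_url)
```

Separating request construction from sending makes the payload easy to inspect before any traffic reaches the server.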

## PyTorch / Triton

To learn about how to use this model with PyTorch and Triton, check out our [reference implementations in the gpt-oss repository](https://github.com/openai/gpt-oss?tab=readme-ov-file#reference-pytorch-implementation).

## Ollama

If you are trying to run gpt-oss on consumer hardware, you can use Ollama by running the following commands after [installing Ollama](https://ollama.com/download).

```bash

# gpt-oss-120b

ollama pull gpt-oss:120b

ollama run gpt-oss:120b

```

[Learn more about how to use gpt-oss with Ollama.](https://cookbook.openai.com/articles/gpt-oss/run-locally-ollama)

## LM Studio

If you are using [LM Studio](https://lmstudio.ai/), you can use the following command to download the model.

```bash

# gpt-oss-120b

lms get openai/gpt-oss-120b

```

Check out our [awesome list](https://github.com/openai/gpt-oss/blob/main/awesome-gpt-oss.md) for a broader collection of gpt-oss resources and inference partners.

---

# Download the model

You can download the model weights from the [Hugging Face Hub](https://huggingface.co/collections/openai/gpt-oss-68911959590a1634ba11c7a4) directly with the Hugging Face CLI:

```shell

# gpt-oss-120b

huggingface-cli download openai/gpt-oss-120b --include "original/*" --local-dir gpt-oss-120b/

pip install gpt-oss

python -m gpt_oss.chat gpt-oss-120b/original/

```

# Reasoning levels

You can set the reasoning level to suit your task. Three levels are available:

* **Low:** Fast responses for general dialogue.

* **Medium:** Balanced speed and detail.

* **High:** Deep and detailed analysis.

The reasoning level can be set in the system prompt, e.g., "Reasoning: high".
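A minimal sketch of how such a system prompt could be attached to a chat message list follows. The `"Reasoning: <level>"` string comes from the text above. The helper name `with_reasoning` and the validation step are hypothetical additions for illustration.

```python
# Sketch: prepend a system message carrying the reasoning level.
# The helper name and the level check are illustrative, not part of any library.
VALID_LEVELS = ("low", "medium", "high")

def with_reasoning(messages, level="medium"):
    if level not in VALID_LEVELS:
        raise ValueError(f"level must be one of {VALID_LEVELS}")
    system = {"role": "system", "content": f"Reasoning: {level}"}
    return [system, *messages]

messages = with_reasoning(
    [{"role": "user", "content": "Explain quantum mechanics clearly and concisely."}],
    level="high",
)
print(messages[0])
```

The resulting list has the same shape as the `messages` argument in the Transformers example, so it should be usable there in place of the plain user message.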

# Tool use

The gpt-oss models are excellent for:

* Web browsing (using built-in browsing tools)

* Function calling with defined schemas

* Agentic operations like browser tasks

# Fine-tuning

Both gpt-oss models can be fine-tuned for a variety of specialized use cases.

This larger model `gpt-oss-120b` can be fine-tuned on a single H100 node, whereas the smaller [`gpt-oss-20b`](https://huggingface.co/openai/gpt-oss-20b) can even be fine-tuned on consumer hardware.

# Citation

```bibtex

@misc{openai2025gptoss120bgptoss20bmodel,

title={gpt-oss-120b & gpt-oss-20b Model Card},

author={OpenAI},

year={2025},

eprint={2508.10925},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2508.10925},

}

```

|

Noore573/llama32-3b-cookbot-adapter

|

Noore573

| 2025-09-16T23:37:16 | 17 | 0 |

peft

|

[

"peft",

"safetensors",

"base_model:adapter:unsloth/llama-3.2-3b-instruct-unsloth-bnb-4bit",

"lora",

"sft",

"transformers",

"trl",

"unsloth",

"text-generation",

"conversational",

"arxiv:1910.09700",

"region:us"

] |

text-generation

| 2025-09-16T01:39:56 |

---

base_model: unsloth/llama-3.2-3b-instruct-unsloth-bnb-4bit

library_name: peft

pipeline_tag: text-generation

tags:

- base_model:adapter:unsloth/llama-3.2-3b-instruct-unsloth-bnb-4bit

- lora

- sft

- transformers

- trl

- unsloth

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.17.1

|

nofunstudio/jimmy

|

nofunstudio

| 2025-09-16T23:02:23 | 4 | 0 |

diffusers

|

[

"diffusers",

"flux",

"text-to-image",

"lora",

"fal",

"license:other",

"region:us"

] |

text-to-image

| 2024-11-27T22:01:01 |

---

tags:

- flux

- text-to-image

- lora

- diffusers

- fal

base_model: undefined

instance_prompt: JIMMY

license: other

---

# jimmy

<Gallery />

## Model description

Jimmy Face Training

## Trigger words

You should use `JIMMY` to trigger the image generation.

## Download model

Weights for this model are available in Safetensors format.

[Download](/nofunstudio/jimmy/tree/main) them in the Files & versions tab.

## Training at fal.ai

Training was done using [fal.ai/models/fal-ai/flux-lora-portrait-trainer](https://fal.ai/models/fal-ai/flux-lora-portrait-trainer).

|

sonspeed/vit5-vietgpt-cpo-newest2

|

sonspeed

| 2025-09-16T22:57:10 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"t5",

"text2text-generation",

"arxiv:1910.09700",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | null | 2025-09-16T22:56:45 |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

EMBO/SourceData_RolesMulti_v1_0_0_BioLinkBERT_large

|

EMBO

| 2025-09-16T22:53:39 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:source_data",

"base_model:michiyasunaga/BioLinkBERT-large",

"base_model:finetune:michiyasunaga/BioLinkBERT-large",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2025-09-16T21:26:49 |

---
library_name: transformers
license: apache-2.0
base_model: michiyasunaga/BioLinkBERT-large
tags:
- generated_from_trainer
datasets:
- source_data
metrics:
- precision
- recall
- f1
model-index:
- name: SourceData_RolesMulti_v1_0_0_BioLinkBERT_large
  results:
  - task:
      name: Token Classification
      type: token-classification
    dataset:
      name: source_data
      type: source_data
      config: ROLES_MULTI
      split: validation
      args: ROLES_MULTI
    metrics:
    - name: Precision
      type: precision
      value: 0.9572504708097929
    - name: Recall
      type: recall
      value: 0.9683749285578206
    - name: F1
      type: f1
      value: 0.9627805663415098
---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# SourceData_RolesMulti_v1_0_0_BioLinkBERT_large

This model is a fine-tuned version of [michiyasunaga/BioLinkBERT-large](https://huggingface.co/michiyasunaga/BioLinkBERT-large) on the source_data dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0066

- Accuracy Score: 0.9981

- Precision: 0.9573

- Recall: 0.9684

- F1: 0.9628
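
The reported F1 can be checked against the precision and recall stored in the card metadata. The sketch below assumes F1 is the usual harmonic mean of precision and recall; the variable names are illustrative and not taken from the training script.

```python
# Precision and recall as reported in the model-index metadata of this card.
precision = 0.9572504708097929
recall = 0.9683749285578206

# Harmonic mean of precision and recall (assumed definition of F1).
f1 = 2 * precision * recall / (precision + recall)

print(round(f1, 4))  # 0.9628, matching the value listed above
```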

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 64

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: adafactor (no additional optimizer arguments)

- lr_scheduler_type: linear

- num_epochs: 1.0
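
The relationship between the batch-size hyperparameters above can be written out explicitly. This is a minimal sketch that assumes a single training device (the card does not state the device count) and that every batch was full; the step count is taken from the training results table of this card.

```python
train_batch_size = 16            # per-device batch size from the list above
gradient_accumulation_steps = 4  # from the list above
num_devices = 1                  # assumption: not stated in the card

# Effective batch size per optimizer step.
total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices
print(total_train_batch_size)  # 64

# Upper bound on examples consumed by the final logged step (863),
# assuming every accumulated batch was full.
final_step = 863
print(final_step * total_train_batch_size)  # 55232
```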

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy Score | Precision | Recall | F1 |
|:-------------:|:------:|:----:|:---------------:|:--------------:|:---------:|:------:|:------:|
| 0.0044 | 0.9994 | 863 | 0.0066 | 0.9981 | 0.9573 | 0.9684 | 0.9628 |

### Framework versions

- Transformers 4.46.3

- Pytorch 1.13.1+cu117

- Datasets 3.1.0

- Tokenizers 0.20.3

|

Cheeeeeeeeky/Affine-5C8JPiLuLKQAeBhZ6rXVJLtmY3PdAYxarRnSYvmyjJaQMZxe_4

|

Cheeeeeeeeky

| 2025-09-16T22:51:10 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3_next",

"text-generation",

"conversational",

"arxiv:2309.00071",

"arxiv:2404.06654",

"arxiv:2505.09388",

"arxiv:2501.15383",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-16T13:58:34 |

---

library_name: transformers

license: apache-2.0

license_link: https://huggingface.co/Qwen/Qwen3-Next-80B-A3B-Instruct/blob/main/LICENSE

pipeline_tag: text-generation

---

# Qwen3-Next-80B-A3B-Instruct

<a href="https://chat.qwen.ai/" target="_blank" style="margin: 2px;">

<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>

</a>

Over the past few months, we have observed increasingly clear trends toward scaling both total parameters and context lengths in the pursuit of more powerful and agentic artificial intelligence (AI).

We are excited to share our latest advancements in addressing these demands, centered on improving scaling efficiency through innovative model architecture.

We call this next-generation series of foundation models **Qwen3-Next**.

## Highlights

**Qwen3-Next-80B-A3B** is the first installment in the Qwen3-Next series and features the following key enhancements:

- **Hybrid Attention**: Replaces standard attention with the combination of **Gated DeltaNet** and **Gated Attention**, enabling efficient context modeling for ultra-long context length.

- **High-Sparsity Mixture-of-Experts (MoE)**: Achieves an extremely low activation ratio in MoE layers, drastically reducing FLOPs per token while preserving model capacity.

- **Stability Optimizations**: Includes techniques such as **zero-centered and weight-decayed layernorm**, and other stabilizing enhancements for robust pre-training and post-training.

- **Multi-Token Prediction (MTP)**: Boosts pretraining model performance and accelerates inference.

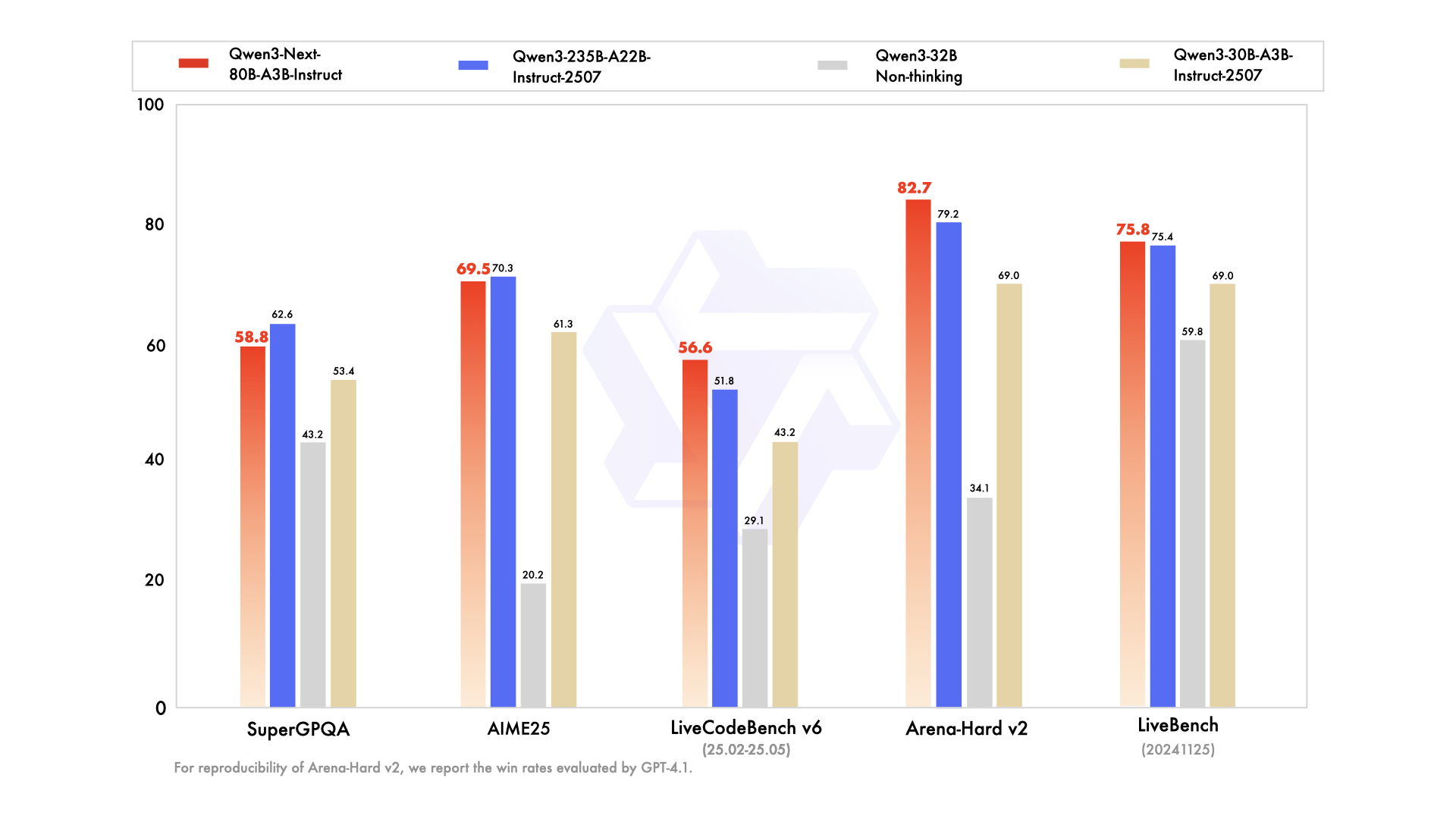

We are seeing strong performance in terms of both parameter efficiency and inference speed for Qwen3-Next-80B-A3B:

- Qwen3-Next-80B-A3B-Base outperforms Qwen3-32B-Base on downstream tasks at 10% of the total training cost and with 10 times the inference throughput for contexts over 32K tokens.

- Qwen3-Next-80B-A3B-Instruct performs on par with Qwen3-235B-A22B-Instruct-2507 on certain benchmarks, while demonstrating significant advantages in handling ultra-long-context tasks up to 256K tokens.

For more details, please refer to our blog post [Qwen3-Next](https://qwen.ai/blog?id=4074cca80393150c248e508aa62983f9cb7d27cd&from=research.latest-advancements-list).

## Model Overview

> [!Note]

> **Qwen3-Next-80B-A3B-Instruct** supports only instruct (non-thinking) mode and does not generate ``<think></think>`` blocks in its output.

**Qwen3-Next-80B-A3B-Instruct** has the following features:

- Type: Causal Language Models

- Training Stage: Pretraining (15T tokens) & Post-training

- Number of Parameters: 80B in total and 3B activated

- Number of Parameters (Non-Embedding): 79B

- Hidden Dimension: 2048

- Number of Layers: 48

- Hybrid Layout: 12 \* (3 \* (Gated DeltaNet -> MoE) -> 1 \* (Gated Attention -> MoE))

- Gated Attention:

  - Number of Attention Heads: 16 for Q and 2 for KV

  - Head Dimension: 256

  - Rotary Position Embedding Dimension: 64

- Gated DeltaNet:

  - Number of Linear Attention Heads: 32 for V and 16 for QK

  - Head Dimension: 128

- Mixture of Experts:

  - Number of Experts: 512

  - Number of Activated Experts: 10

  - Number of Shared Experts: 1

  - Expert Intermediate Dimension: 512

- Context Length: 262,144 natively and extensible up to 1,010,000 tokens
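
To make the hybrid layout string concrete, the sketch below expands it into an ordered list of per-layer token mixers and checks that it adds up to the 48 layers listed above. The labels `gated_deltanet` and `gated_attention` and the helper `build_layout` are illustrative names, not identifiers from the model code. Every layer is followed by an MoE block, so only the mixer type is tracked.

```python
# Expand "12 * (3 * (Gated DeltaNet -> MoE) -> 1 * (Gated Attention -> MoE))".
NUM_BLOCKS = 12          # outer repetition count
DELTANET_PER_BLOCK = 3   # Gated DeltaNet layers per block
ATTENTION_PER_BLOCK = 1  # Gated Attention layers per block


def build_layout() -> list[str]:
    """Return the token-mixer type of each layer, in order."""
    block = (["gated_deltanet"] * DELTANET_PER_BLOCK
             + ["gated_attention"] * ATTENTION_PER_BLOCK)
    return block * NUM_BLOCKS


layout = build_layout()
num_layers = len(layout)
num_deltanet = layout.count("gated_deltanet")
num_attention = layout.count("gated_attention")

# Share of routed experts selected per token (shared expert not counted).
routed_activation_ratio = 10 / 512

print(num_layers, num_deltanet, num_attention)  # 48 36 12
print(f"{routed_activation_ratio:.2%}")         # 1.95%
```

Three out of every four layers use linear attention, and each token is routed to fewer than 2% of the experts.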

<img src="https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-Next/model_architecture.png" height="384px" title="Qwen3-Next Model Architecture" />

## Performance

| | Qwen3-30B-A3B-Instruct-2507 | Qwen3-32B Non-Thinking | Qwen3-235B-A22B-Instruct-2507 | Qwen3-Next-80B-A3B-Instruct |
|--- | --- | --- | --- | --- |
| **Knowledge** | | | | |
| MMLU-Pro | 78.4 | 71.9 | **83.0** | 80.6 |
| MMLU-Redux | 89.3 | 85.7 | **93.1** | 90.9 |
| GPQA | 70.4 | 54.6 | **77.5** | 72.9 |
| SuperGPQA | 53.4 | 43.2 | **62.6** | 58.8 |
| **Reasoning** | | | | |
| AIME25 | 61.3 | 20.2 | **70.3** | 69.5 |
| HMMT25 | 43.0 | 9.8 | **55.4** | 54.1 |
| LiveBench 20241125 | 69.0 | 59.8 | 75.4 | **75.8** |
| **Coding** | | | | |
| LiveCodeBench v6 (25.02-25.05) | 43.2 | 29.1 | 51.8 | **56.6** |
| MultiPL-E | 83.8 | 76.9 | **87.9** | 87.8 |
| Aider-Polyglot | 35.6 | 40.0 | **57.3** | 49.8 |
| **Alignment** | | | | |
| IFEval | 84.7 | 83.2 | **88.7** | 87.6 |
| Arena-Hard v2* | 69.0 | 34.1 | 79.2 | **82.7** |
| Creative Writing v3 | 86.0 | 78.3 | **87.5** | 85.3 |
| WritingBench | 85.5 | 75.4 | 85.2 | **87.3** |
| **Agent** | | | | |
| BFCL-v3 | 65.1 | 63.0 | **70.9** | 70.3 |
| TAU1-Retail | 59.1 | 40.1 | **71.3** | 60.9 |
| TAU1-Airline | 40.0 | 17.0 | **44.0** | 44.0 |
| TAU2-Retail | 57.0 | 48.8 | **74.6** | 57.3 |
| TAU2-Airline | 38.0 | 24.0 | **50.0** | 45.5 |
| TAU2-Telecom | 12.3 | 24.6 | **32.5** | 13.2 |
| **Multilingualism** | | | | |
| MultiIF | 67.9 | 70.7 | **77.5** | 75.8 |
| MMLU-ProX | 72.0 | 69.3 | **79.4** | 76.7 |
| INCLUDE | 71.9 | 70.9 | **79.5** | 78.9 |
| PolyMATH | 43.1 | 22.5 | **50.2** | 45.9 |

*: For reproducibility, we report the win rates evaluated by GPT-4.1.

## Quickstart

The code for Qwen3-Next has been merged into the main branch of Hugging Face `transformers`.

```shell

pip install git+https://github.com/huggingface/transformers.git@main

```

With earlier versions, you will encounter the following error:

```

KeyError: 'qwen3_next'

```

The following code snippet illustrates how to use the model to generate content based on given inputs.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-Next-80B-A3B-Instruct"

# load the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    dtype="auto",
    device_map="auto",
)

# prepare the model input
prompt = "Give me a short introduction to large language model."
messages = [
    {"role": "user", "content": prompt},
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# conduct text completion
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=16384,
)
# keep only the newly generated tokens (drop the prompt tokens)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

content = tokenizer.decode(output_ids, skip_special_tokens=True)

print("content:", content)
```

> [!Note]

> Multi-Token Prediction (MTP) is not generally available in Hugging Face Transformers.

> [!Note]

> The efficiency or throughput improvement depends highly on the implementation.

> It is recommended to adopt a dedicated inference framework, e.g., SGLang and vLLM, for inference tasks.

> [!Tip]

> Depending on the inference settings, you may observe better efficiency with [`flash-linear-attention`](https://github.com/fla-org/flash-linear-attention#installation) and [`causal-conv1d`](https://github.com/Dao-AILab/causal-conv1d).

> See the links for detailed instructions and requirements.

## Deployment

For deployment, you can use the latest `sglang` or `vllm` to create an OpenAI-compatible API endpoint.

### SGLang

[SGLang](https://github.com/sgl-project/sglang) is a fast serving framework for large language models and vision language models.

SGLang can be used to launch a server with an OpenAI-compatible API service.

`sglang>=0.5.2` is required for Qwen3-Next, which can be installed using:

```shell

pip install 'sglang[all]>=0.5.2'

```

See [its documentation](https://docs.sglang.ai/get_started/install.html) for more details.

The following command can be used to create an API endpoint at `http://localhost:30000/v1` with a maximum context length of 256K tokens, using tensor parallelism across 4 GPUs.

```shell

python -m sglang.launch_server --model-path Qwen/Qwen3-Next-80B-A3B-Instruct --port 30000 --tp-size 4 --context-length 262144 --mem-fraction-static 0.8

```

The following command is recommended for MTP, with the remaining settings the same as above:

```shell

python -m sglang.launch_server --model-path Qwen/Qwen3-Next-80B-A3B-Instruct --port 30000 --tp-size 4 --context-length 262144 --mem-fraction-static 0.8 --speculative-algo NEXTN --speculative-num-steps 3 --speculative-eagle-topk 1 --speculative-num-draft-tokens 4

```

> [!Note]

> The default context length is 256K. Consider reducing the context length to a smaller value, e.g., `32768`, if the server fails to start.

Please also refer to SGLang's usage guide on [Qwen3-Next](https://docs.sglang.ai/basic_usage/qwen3.html).

### vLLM

[vLLM](https://github.com/vllm-project/vllm) is a high-throughput and memory-efficient inference and serving engine for LLMs.

vLLM can be used to launch a server with an OpenAI-compatible API service.

`vllm>=0.10.2` is required for Qwen3-Next, which can be installed using:

```shell

pip install 'vllm>=0.10.2'

```

See [its documentation](https://docs.vllm.ai/en/stable/getting_started/installation/index.html) for more details.

The following command can be used to create an API endpoint at `http://localhost:8000/v1` with a maximum context length of 256K tokens, using tensor parallelism across 4 GPUs.

```shell

vllm serve Qwen/Qwen3-Next-80B-A3B-Instruct --port 8000 --tensor-parallel-size 4 --max-model-len 262144

```

The following command is recommended for MTP, with the remaining settings the same as above:

```shell

vllm serve Qwen/Qwen3-Next-80B-A3B-Instruct --port 8000 --tensor-parallel-size 4 --max-model-len 262144 --speculative-config '{"method":"qwen3_next_mtp","num_speculative_tokens":2}'

```

> [!Note]

> The default context length is 256K. Consider reducing the context length to a smaller value, e.g., `32768`, if the server fails to start.

Please also refer to vLLM's usage guide on [Qwen3-Next](https://docs.vllm.ai/projects/recipes/en/latest/Qwen/Qwen3-Next.html).
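
Once either server is running, the endpoint can be queried like any OpenAI-compatible chat completions API. The sketch below uses only the Python standard library and builds the request without sending it; `build_chat_request` is an illustrative helper, and the model name and `Bearer EMPTY` header are assumptions based on the serve commands above (adjust them if your server uses a different served model name or API key).

```python
import json
import urllib.request

# vLLM example above; the SGLang example listens on port 30000 instead.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build a POST request for the /chat/completions route."""
    payload = {
        "model": "Qwen/Qwen3-Next-80B-A3B-Instruct",  # assumed served model name
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer EMPTY",  # placeholder key for a local server
        },
        method="POST",
    )


request = build_chat_request("Give me a short introduction to large language models.")
print(request.full_url)

# Sending requires a running server, so it is left commented out:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```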

## Agentic Use

Qwen3 excels at tool calling. We recommend using [Qwen-Agent](https://github.com/QwenLM/Qwen-Agent) to make the best use of Qwen3's agentic abilities. Qwen-Agent encapsulates tool-calling templates and tool-calling parsers internally, greatly reducing coding complexity.

To define the available tools, you can use an MCP configuration file, use the tools integrated into Qwen-Agent, or integrate other tools yourself.

```python
from qwen_agent.agents import Assistant

# Define LLM
llm_cfg = {
    'model': 'Qwen3-Next-80B-A3B-Instruct',

    # Use a custom endpoint compatible with OpenAI API:
    'model_server': 'http://localhost:8000/v1',  # api_base
    'api_key': 'EMPTY',
}

# Define Tools
tools = [
    {'mcpServers': {  # You can specify the MCP configuration file
            'time': {
                'command': 'uvx',
                'args': ['mcp-server-time', '--local-timezone=Asia/Shanghai']
            },
            "fetch": {
                "command": "uvx",
                "args": ["mcp-server-fetch"]
            }
        }
    },
    'code_interpreter',  # Built-in tools
]

# Define Agent
bot = Assistant(llm=llm_cfg, function_list=tools)

# Streaming generation: iterate to the end and keep the final response
messages = [{'role': 'user', 'content': 'https://qwenlm.github.io/blog/ Introduce the latest developments of Qwen'}]
for responses in bot.run(messages=messages):
    pass
print(responses)
```

## Processing Ultra-Long Texts

Qwen3-Next natively supports context lengths of up to 262,144 tokens.

For conversations where the total length (including both input and output) significantly exceeds this limit, we recommend using RoPE scaling techniques to handle long texts effectively.

We have validated the model's performance on context lengths of up to 1 million tokens using the [YaRN](https://arxiv.org/abs/2309.00071) method.

YaRN is currently supported by several inference frameworks, e.g., `transformers`, `vllm` and `sglang`.

In general, there are two approaches to enabling YaRN for supported frameworks:

- Modifying the model files:

In the `config.json` file, add the `rope_scaling` fields:

```json

{

...,

"rope_scaling": {

"rope_type": "yarn",

"factor": 4.0,

"original_max_position_embeddings": 262144

}

}

```

- Passing command line arguments:

For `vllm`, you can use

```shell

VLLM_ALLOW_LONG_MAX_MODEL_LEN=1 vllm serve ... --rope-scaling '{"rope_type":"yarn","factor":4.0,"original_max_position_embeddings":262144}' --max-model-len 1010000

```

For `sglang`, you can use

```shell

SGLANG_ALLOW_OVERWRITE_LONGER_CONTEXT_LEN=1 python -m sglang.launch_server ... --json-model-override-args '{"rope_scaling":{"rope_type":"yarn","factor":4.0,"original_max_position_embeddings":262144}}' --context-length 1010000

```

> [!NOTE]

> All the notable open-source frameworks implement static YaRN, which means the scaling factor remains constant regardless of input length, **potentially impacting performance on shorter texts.**

> We advise adding the `rope_scaling` configuration only when processing long contexts is required.

> It is also recommended to modify the `factor` as needed. For example, if the typical context length for your application is 524,288 tokens, it would be better to set `factor` as 2.0.
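
The advice about adjusting `factor` amounts to dividing the target context length by the native one. The sketch below is a minimal illustration under that reading; `yarn_rope_scaling` is a hypothetical helper, not part of any framework, and it returns the exact ratio without rounding.

```python
import json

NATIVE_CONTEXT = 262_144  # original_max_position_embeddings from the config above


def yarn_rope_scaling(target_context: int) -> dict:
    """Build a rope_scaling entry sized for the given target context length."""
    if target_context <= NATIVE_CONTEXT:
        raise ValueError("YaRN is only needed beyond the native context length")
    return {
        "rope_type": "yarn",
        "factor": target_context / NATIVE_CONTEXT,
        "original_max_position_embeddings": NATIVE_CONTEXT,
    }


rope_scaling = yarn_rope_scaling(524_288)
print(json.dumps(rope_scaling))
```

For 524,288 tokens this gives a factor of 2.0, matching the example in the note. For 1,010,000 tokens the exact ratio is about 3.85, while the commands above use 4.0, so rounding up to a convenient value appears to be acceptable.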

#### Long-Context Performance

We test the model on a 1M version of the [RULER](https://arxiv.org/abs/2404.06654) benchmark.

| Model Name | Acc avg | 4k | 8k | 16k | 32k | 64k | 96k | 128k | 192k | 256k | 384k | 512k | 640k | 768k | 896k | 1000k |
|---------------------------------------------|---------|------|------|------|------|------|------|------|------|------|------|------|------|------|------|-------|
| Qwen3-30B-A3B-Instruct-2507 | 86.8 | 98.0 | 96.7 | 96.9 | 97.2 | 93.4 | 91.0 | 89.1 | 89.8 | 82.5 | 83.6 | 78.4 | 79.7 | 77.6 | 75.7 | 72.8 |
| Qwen3-235B-A22B-Instruct-2507 | 92.5 | 98.5 | 97.6 | 96.9 | 97.3 | 95.8 | 94.9 | 93.9 | 94.5 | 91.0 | 92.2 | 90.9 | 87.8 | 84.8 | 86.5 | 84.5 |
| Qwen3-Next-80B-A3B-Instruct | 91.8 | 98.5 | 99.0 | 98.0 | 98.7 | 97.6 | 95.0 | 96.0 | 94.0 | 93.5 | 91.7 | 86.9 | 85.5 | 81.7 | 80.3 | 80.3 |

* Qwen3-Next is evaluated with YaRN enabled. Qwen3-2507 models are evaluated with Dual Chunk Attention enabled.

* Since the evaluation is time-consuming, we use 260 samples for each length (13 sub-tasks, 20 samples for each).
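
The `Acc avg` column is consistent with an unweighted mean over the 15 context lengths, which can be checked directly from the table. The sketch below copies the per-length scores from the rows above; treating the average as unweighted is an assumption that the numbers happen to confirm.

```python
# Per-length accuracies copied from the table above (4k ... 1000k).
ruler_scores = {
    "Qwen3-30B-A3B-Instruct-2507": [98.0, 96.7, 96.9, 97.2, 93.4, 91.0, 89.1, 89.8,
                                    82.5, 83.6, 78.4, 79.7, 77.6, 75.7, 72.8],
    "Qwen3-235B-A22B-Instruct-2507": [98.5, 97.6, 96.9, 97.3, 95.8, 94.9, 93.9, 94.5,
                                      91.0, 92.2, 90.9, 87.8, 84.8, 86.5, 84.5],
    "Qwen3-Next-80B-A3B-Instruct": [98.5, 99.0, 98.0, 98.7, 97.6, 95.0, 96.0, 94.0,
                                    93.5, 91.7, 86.9, 85.5, 81.7, 80.3, 80.3],
}

# Unweighted mean over all context lengths, rounded to one decimal place.
averages = {name: round(sum(s) / len(s), 1) for name, s in ruler_scores.items()}

for name, avg in averages.items():
    print(f"{name}: {avg}")

# Each length uses 13 sub-tasks with 20 samples each.
samples_per_length = 13 * 20
print(samples_per_length)  # 260
```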

## Best Practices

To achieve optimal performance, we recommend the following settings:

1. **Sampling Parameters**:

   - We suggest using `Temperature=0.7`, `TopP=0.8`, `TopK=20`, and `MinP=0`.

   - For supported frameworks, you can adjust the `presence_penalty` parameter between 0 and 2 to reduce endless repetitions. However, using a higher value may occasionally result in language mixing and a slight decrease in model performance.

2. **Adequate Output Length**: We recommend using an output length of 16,384 tokens for most queries, which is adequate for instruct models.

3. **Standardize Output Format**: We recommend using prompts to standardize model outputs when benchmarking.

   - **Math Problems**: Include "Please reason step by step, and put your final answer within \boxed{}." in the prompt.

   - **Multiple-Choice Questions**: Add the following JSON structure to the prompt to standardize responses: "Please show your choice in the `answer` field with only the choice letter, e.g., `"answer": "C"`."
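
The settings above can be collected into a single request payload. The sketch below is one way to do it for an OpenAI-compatible endpoint; `build_payload` and the `kind` argument are illustrative names. Passing `top_k` and `min_p` as top-level fields is an assumption: they are not part of the OpenAI API itself, so check how your serving framework expects them.

```python
# Recommended sampling parameters from the list above.
SAMPLING = {"temperature": 0.7, "top_p": 0.8, "top_k": 20, "min_p": 0.0}

# Prompt suffixes recommended for benchmarking.
MATH_SUFFIX = "Please reason step by step, and put your final answer within \\boxed{}."
MCQ_SUFFIX = ('Please show your choice in the `answer` field with only the '
              'choice letter, e.g., `"answer": "C"`.')


def build_payload(question: str, kind: str = "plain") -> dict:
    """Return a chat completions payload using the recommended settings."""
    suffix = {"plain": "", "math": MATH_SUFFIX, "mcq": MCQ_SUFFIX}[kind]
    content = f"{question}\n{suffix}" if suffix else question
    return {
        "model": "Qwen/Qwen3-Next-80B-A3B-Instruct",  # assumed served model name
        "messages": [{"role": "user", "content": content}],
        "max_tokens": 16384,  # recommended output length
        **SAMPLING,
    }


payload = build_payload("What is 17 * 24?", kind="math")
print(payload["messages"][0]["content"])
```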

### Citation

If you find our work helpful, feel free to cite us.

```

@misc{qwen3technicalreport,

title={Qwen3 Technical Report},

author={Qwen Team},

year={2025},

eprint={2505.09388},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2505.09388},

}

@article{qwen2.5-1m,

title={Qwen2.5-1M Technical Report},

author={An Yang and Bowen Yu and Chengyuan Li and Dayiheng Liu and Fei Huang and Haoyan Huang and Jiandong Jiang and Jianhong Tu and Jianwei Zhang and Jingren Zhou and Junyang Lin and Kai Dang and Kexin Yang and Le Yu and Mei Li and Minmin Sun and Qin Zhu and Rui Men and Tao He and Weijia Xu and Wenbiao Yin and Wenyuan Yu and Xiafei Qiu and Xingzhang Ren and Xinlong Yang and Yong Li and Zhiying Xu and Zipeng Zhang},

journal={arXiv preprint arXiv:2501.15383},

year={2025}

}

```

|

nartaobas/blockassist

|

nartaobas

| 2025-09-16T22:32:40 | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"thick bipedal lion",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-16T22:22:26 |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- thick bipedal lion

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

luckeciano/Qwen-2.5-7B-DrGRPO-Adam-FisherMaskToken-1e-6-v3_6607

|

luckeciano

| 2025-09-16T22:26:22 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2",

"text-generation",

"generated_from_trainer",

"open-r1",

"trl",

"grpo",

"conversational",

"dataset:DigitalLearningGmbH/MATH-lighteval",

"arxiv:2402.03300",

"base_model:Qwen/Qwen2.5-Math-7B",

"base_model:finetune:Qwen/Qwen2.5-Math-7B",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-16T21:29:13 |

---

base_model: Qwen/Qwen2.5-Math-7B

datasets: DigitalLearningGmbH/MATH-lighteval

library_name: transformers

model_name: Qwen-2.5-7B-DrGRPO-Adam-FisherMaskToken-1e-6-v3_4058

tags:

- generated_from_trainer

- open-r1

- trl

- grpo

licence: license

---

# Model Card for Qwen-2.5-7B-DrGRPO-Adam-FisherMaskToken-1e-6-v3_4058

This model is a fine-tuned version of [Qwen/Qwen2.5-Math-7B](https://huggingface.co/Qwen/Qwen2.5-Math-7B) on the [DigitalLearningGmbH/MATH-lighteval](https://huggingface.co/datasets/DigitalLearningGmbH/MATH-lighteval) dataset.

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="luckeciano/Qwen-2.5-7B-DrGRPO-Adam-FisherMaskToken-1e-6-v3_4058", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/max-ent-llms/PolicyGradientStability/runs/34z3dhfr)

This model was trained with GRPO, a method introduced in [DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models](https://huggingface.co/papers/2402.03300).

### Framework versions

- TRL: 0.16.0.dev0

- Transformers: 4.49.0

- Pytorch: 2.5.1

- Datasets: 3.4.1

- Tokenizers: 0.21.2

## Citations

Cite GRPO as:

```bibtex

@article{zhihong2024deepseekmath,

title = {{DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models}},

author = {Zhihong Shao and Peiyi Wang and Qihao Zhu and Runxin Xu and Junxiao Song and Mingchuan Zhang and Y. K. Li and Y. Wu and Daya Guo},

year = 2024,

eprint = {arXiv:2402.03300},

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

Max8678/Qwen3-0.6B-Gensyn-Swarm-tough_prehistoric_alpaca

|

Max8678

| 2025-09-16T22:10:29 | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3",

"text-generation",

"rl-swarm",

"genrl-swarm",

"grpo",

"gensyn",

"I am tough_prehistoric_alpaca",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-16T22:10:14 |

---

library_name: transformers

tags:

- rl-swarm

- genrl-swarm

- grpo

- gensyn

- I am tough_prehistoric_alpaca

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

hdnfnfn/blockassist-bc-shaggy_melodic_cobra_1758058535

|

hdnfnfn

| 2025-09-16T21:35:38 | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"shaggy melodic cobra",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-16T21:35:35 |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- shaggy melodic cobra

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

msuribec/imdbreviews_classification_deberta_v3_base_lora_v03

|

msuribec

| 2025-09-16T21:18:45 | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-09-16T19:27:54 |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases, and limitations of the model. More information is needed to make further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
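As a rough illustration of the kind of estimate the fields above feed into, the sketch below multiplies hardware power draw, hours used, and the carbon intensity of the electricity grid to get grams of CO2eq. It is a simplified approximation, not the calculator's actual implementation: the function name `estimate_co2eq_grams`, the optional `pue` (data-center overhead) factor, and every number in the example are hypothetical and are not taken from this model card.

```python
def estimate_co2eq_grams(
    power_watts: float,
    hours: float,
    grid_g_per_kwh: float,
    pue: float = 1.0,
) -> float:
    """Estimate emissions in grams of CO2eq.

    power_watts    -- average power draw of the hardware (assumed value)
    hours          -- total hours the hardware was used
    grid_g_per_kwh -- carbon intensity of the compute region's grid
    pue            -- optional data-center overhead multiplier (assumption)
    """
    if min(power_watts, hours, grid_g_per_kwh) < 0 or pue < 1.0:
        raise ValueError("inputs must be non-negative and pue must be >= 1.0")
    # Energy consumed in kilowatt-hours, including facility overhead.
    energy_kwh = power_watts / 1000.0 * hours * pue
    # Convert energy to emissions using the grid's carbon intensity.
    return energy_kwh * grid_g_per_kwh


if __name__ == "__main__":
    # Hypothetical run: a 300 W accelerator used for 2 hours on a grid
    # emitting 400 gCO2eq per kWh.
    print(f"{estimate_co2eq_grams(300, 2, 400):.1f} g CO2eq")
```

The result is only as good as its inputs, so the hardware type, hours used, and compute region should be recorded in the fields above before any figure is reported under "Carbon Emitted".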

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

hdnfnfn/blockassist-bc-armored_climbing_rooster_1758057012 | hdnfnfn | 2025-09-16T21:10:15 | 0 | 0 | null | ["gensyn", "blockassist", "gensyn-blockassist", "minecraft", "armored climbing rooster", "arxiv:2504.07091", "region:us"] | null | 2025-09-16T21:10:13 |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- armored climbing rooster

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

doddycz/Qwen3-0.6B-Gensyn-Swarm-finicky_horned_tortoise | doddycz | 2025-09-16T20:52:57 | 0 | 0 | transformers | ["transformers", "safetensors", "qwen3", "text-generation", "rl-swarm", "genrl-swarm", "grpo", "gensyn", "I am finicky_horned_tortoise", "arxiv:1910.09700", "autotrain_compatible", "text-generation-inference", "endpoints_compatible", "region:us"] | text-generation | 2025-09-16T15:03:00 |

---

library_name: transformers

tags:

- rl-swarm

- genrl-swarm

- grpo

- gensyn

- I am finicky_horned_tortoise

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card was generated automatically.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses