Datasets:

Improve dataset card for PhysToolBench: Add task category, description, and usage instructions

Browse filesThis PR significantly enhances the dataset card for `PhysToolBench` by:

* Adding `task_categories: ['image-text-to-text']` to the metadata for better discoverability, reflecting its nature as a Visual Question Answering (VQA) dataset.

* Including a direct link to the Hugging Face paper page (`https://huggingface.co/papers/2510.09507`).

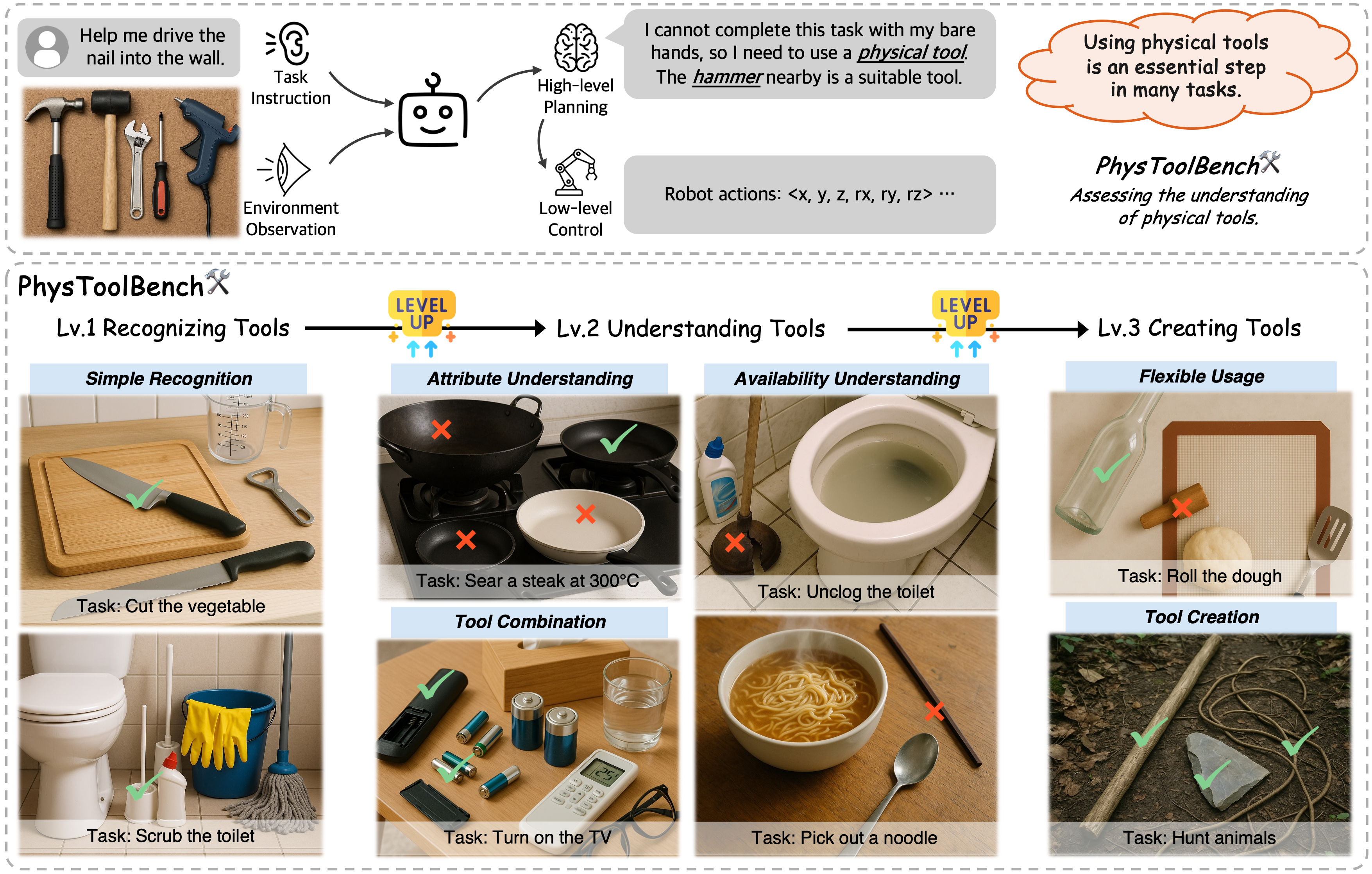

* Providing a comprehensive description of the dataset, including its motivation, structure (three difficulty levels), and purpose, derived from the paper's abstract and the GitHub README.

* Embedding the teaser image from the GitHub repository to visually represent the benchmark.

* Incorporating a detailed "Sample Usage" section with instructions and code snippets for environment setup, dataset download, model inference (proprietary and open-source), and evaluation, directly sourced from the official GitHub repository.

* Adding the BibTeX citation for proper attribution and an Acknowledgement section.

|

@@ -1,4 +1,6 @@

|

|

| 1 |

---

|

|

|

|

|

|

|

| 2 |

dataset_info:

|

| 3 |

features:

|

| 4 |

- name: id

|

|

@@ -28,6 +30,92 @@ configs:

|

|

| 28 |

path: data/train-*

|

| 29 |

---

|

| 30 |

|

| 31 |

-

|

| 32 |

|

| 33 |

-

[arXiv Paper](https://arxiv.org/abs/2510.09507)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

task_categories:

|

| 3 |

+

- image-text-to-text

|

| 4 |

dataset_info:

|

| 5 |

features:

|

| 6 |

- name: id

|

|

|

|

| 30 |

path: data/train-*

|

| 31 |

---

|

| 32 |

|

| 33 |

+

# PhysToolBench: Benchmarking Physical Tool Understanding for MLLMs

|

| 34 |

|

| 35 |

+

[Hugging Face Paper](https://huggingface.co/papers/2510.09507) | [arXiv Paper](https://arxiv.org/abs/2510.09507) | [GitHub Repo](https://github.com/EnVision-Research/PhysToolBench)

|

| 36 |

+

|

| 37 |

+

## 📢 News

|

| 38 |

+

- **[2025.10.13]** The paper is now available on arXiv!

|

| 39 |

+

- **[2025.10.10]** We release the dataset and the code! Welcome to use and star our project!

|

| 40 |

+

|

| 41 |

+

## Introduction

|

| 42 |

+

> **"Man is a Tool-using Animal; without tools he is nothing, with tools he is all." --Thomas Carlyle**

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

*For an Embodied Agent, using physical tools is crucial in many tasks. The understanding of physical tools significantly impacts the task's success rate and execution efficiency (Top). PhysToolBench (Bottom) systematically evaluates the understanding of physical tools of multimodal LLMs. The benchmark is designed with three progressive levels of difficulty and employs a Visual Question Answering (VQA) format. Notice that in the actual benchmark, tools in the images are numerically labeled. Images here are for illustrative purposes only.*

|

| 46 |

+

|

| 47 |

+

The ability to use, understand, and create tools is a hallmark of human intelligence, enabling sophisticated interaction with the physical world. For any general-purpose intelligent agent to achieve true versatility, it must also master these fundamental skills. While modern Multimodal Large Language Models (MLLMs) leverage their extensive common knowledge for high-level planning in embodied AI and in downstream Vision-Language-Action (VLA) models, the extent of their true understanding of physical tools remains unquantified.

|

| 48 |

+

|

| 49 |

+

To bridge this gap, we present **PhysToolBench**, the first benchmark dedicated to evaluating the comprehension of physical tools by MLLMs. Our benchmark is structured as a Visual Question Answering (VQA) dataset comprising over 1,000 image-text pairs. It assesses capabilities across three distinct difficulty levels:

|

| 50 |

+

1. **Tool Recognition**: Requiring the recognition of a tool's primary function.

|

| 51 |

+

2. **Tool Understanding**: Testing the ability to grasp the underlying principles of a tool's operation.

|

| 52 |

+

3. **Tool Creation**: Challenging the model to fashion a new tool from surrounding objects when conventional options are unavailable.

|

| 53 |

+

|

| 54 |

+

Our comprehensive evaluation of 32 MLLMs—spanning proprietary, open-source, specialized embodied, and backbones in VLAs—reveals a significant deficiency in tool understanding. Furthermore, we provide an in-depth analysis and propose preliminary solutions.

|

| 55 |

+

|

| 56 |

+

## Sample Usage

|

| 57 |

+

|

| 58 |

+

### Set up

|

| 59 |

+

|

| 60 |

+

Environment setup:

|

| 61 |

+

```shell

|

| 62 |

+

git clone https://github.com/PhysToolBench/PhysToolBench.git

|

| 63 |

+

cd PhysToolBench

|

| 64 |

+

conda create phystoolbench

|

| 65 |

+

pip install -r requirements.txt

|

| 66 |

+

```

|

| 67 |

+

Download the dataset:

|

| 68 |

+

|

| 69 |

+

Dataset are available at [Huggingface Repo](https://huggingface.co/datasets/zhangzixin02/PhysToolBench).

|

| 70 |

+

```shell

|

| 71 |

+

huggingface-cli download --repo-type dataset zhangzixin02/PhysToolBench

|

| 72 |

+

```

|

| 73 |

+

|

| 74 |

+

### Inference

|

| 75 |

+

You can run MLLMs in two ways to evaluate it on the PhysToolBench:

|

| 76 |

+

1. Use the API of the proprietory MLLMs:

|

| 77 |

+

|

| 78 |

+

Our code will automatically choose the appropriate API interface based on the model name.

|

| 79 |

+

For example, to evaluate the gpt-5 model, you can run:

|

| 80 |

+

``` shell

|

| 81 |

+

python src/inference.py --model_name gpt-5 --api_url https://xxxxxx --api_key "sk-xxxxxx" --resume # Put your own API URL and API key here

|

| 82 |

+

```

|

| 83 |

+

We recommand using multiple threads to speed up the inference for proprietory models. For example, to use 8 threads, you can run:

|

| 84 |

+

``` shell

|

| 85 |

+

python src/inference.py --model_name gpt-5 --api_url https://xxxxxx --api_key "sk-xxxxxx" --resume --num_threads 8

|

| 86 |

+

```

|

| 87 |

+

You can modify the logic in `src/model_api.py` to support more models or use different API interfaces. Currently, OpenAI, Claude, Gemini format are supported.

|

| 88 |

+

2. Deploy the Open-Source models and run them locally:

|

| 89 |

+

|

| 90 |

+

To facilitate large-scale inference, we deployed open-source models used in our paper as servers with FastAPI so that they can be accessed via API for flexible evaluation. Note that more dependencies need to be installed for local inference. You can refer to the requirements.txt file for the dependencies, we recommand following the original repository of the MLLM you use for dependency installation.

|

| 91 |

+

|

| 92 |

+

2.1. Start the server:

|

| 93 |

+

```shell

|

| 94 |

+

python vlm_local/Qwen-2.5VL/qwen_2_5vl_server.py --port 8004 # deploy the qwen-2.5-vl server on port 8004, you can change the port to other available ports

|

| 95 |

+

```

|

| 96 |

+

2.2. Run the local MLLM:

|

| 97 |

+

```shell

|

| 98 |

+

python src/inference.py --model_name qwen-2.5-vl-7B --api_url http://localhost:8004 --api_key "" --resume # Evaluate the qwen-2.5-vl-7B model

|

| 99 |

+

```

|

| 100 |

+

Since the [lmdeploy](https://github.com/InternLM/lmdeploy/blob/main/docs/en/multi_modal/api_server_vl.md) and [vllm](https://docs.vllm.ai/en/latest/getting_started/quickstart.html#openai-compatible-server) are also compatible with the OpenAi-API format, you can easily deploy other models using lmdeploy and vllm as long as they are supported by these tools.

|

| 101 |

+

|

| 102 |

+

### Evaluate

|

| 103 |

+

You can evaluate the results and calculate the scores with the following command:

|

| 104 |

+

```shell

|

| 105 |

+

python src/metric.py

|

| 106 |

+

```

|

| 107 |

+

|

| 108 |

+

## Citation

|

| 109 |

+

If you use PhysToolBench in your research, please cite the following paper:

|

| 110 |

+

```bibtex

|

| 111 |

+

@article{zhang2025phystoolbench,

|

| 112 |

+

title={PhysToolBench: Benchmarking Physical Tool Understanding for MLLMs},

|

| 113 |

+

author={Zhang, Zixin and Chen, Kanghao and Lin, Xingwang and Jiang, Lutao and Zheng, Xu and Lyu, Yuanhuiyi and Guo, Litao and Li, Yinchuan and Chen, Ying-Cong},

|

| 114 |

+

journal={arXiv preprint arXiv:2510.09507},

|

| 115 |

+

year={2025}

|

| 116 |

+

}

|

| 117 |

+

```

|

| 118 |

+

|

| 119 |

+

## Acknowledgement

|

| 120 |

+

Our code is built upon the following repositories, and we thank the authors for their contributions:

|

| 121 |

+

- [VGRP-Bench](https://github.com/ryf1123/VGRP-Bench)

|