---

license: apache-2.0

base_model:

- google/siglip2-base-patch32-256

datasets:

- strangerguardhf/DSE1

language:

- en

pipeline_tag: image-classification

library_name: transformers

tags:

- siglip2

- '384'

- explicit-content

- adult-content

- classification

---

# **siglip2-x256p32-explicit-content**

> **siglip2-x256p32-explicit-content** is a vision-language encoder model fine-tuned from **google/siglip2-base-patch32-256** for **multi-class image classification**. Built on the **SiglipForImageClassification** architecture, it assigns each image to one of five content categories, ranging from safe to explicit, and is intended for content moderation and media filtering.

> [!note]
> *SigLIP 2: Multilingual Vision-Language Encoders with Improved Semantic Understanding, Localization, and Dense Features* https://arxiv.org/pdf/2502.14786

---

```py

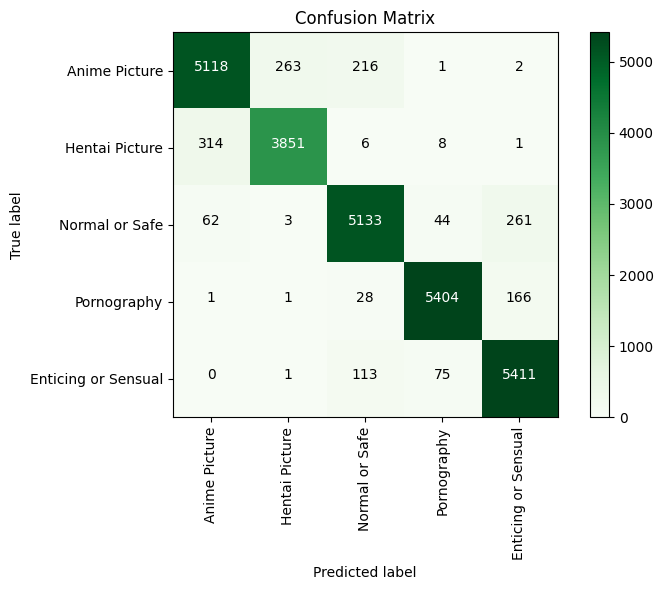

Classification Report:

precision recall f1-score support

Anime Picture 0.9314 0.9139 0.9226 5600

Hentai Picture 0.9349 0.9213 0.9281 4180

Normal or Safe 0.9340 0.9328 0.9334 5503

Pornography 0.9769 0.9650 0.9709 5600

Enticing or Sensual 0.9264 0.9663 0.9459 5600

accuracy 0.9409 26483

macro avg 0.9407 0.9398 0.9402 26483

weighted avg 0.9410 0.9409 0.9408 26483

```
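As a rough sanity check on the report above, the aggregate rows can be recomputed from the per-class rows. The sketch below assumes the report follows the usual conventions (macro average is the unweighted mean over classes, weighted average is the support-weighted mean, and F1 is the harmonic mean of precision and recall). Because it works from the rounded four-decimal values, results may differ from the reported figures in the last digit.

```python
# Per-class rows copied from the report: (precision, recall, f1, support)
report = {
    "Anime Picture": (0.9314, 0.9139, 0.9226, 5600),
    "Hentai Picture": (0.9349, 0.9213, 0.9281, 4180),
    "Normal or Safe": (0.9340, 0.9328, 0.9334, 5503),
    "Pornography": (0.9769, 0.9650, 0.9709, 5600),
    "Enticing or Sensual": (0.9264, 0.9663, 0.9459, 5600),
}

SUPPORT = 3  # column index of the support count


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def macro_avg(col):
    """Unweighted mean of one metric column over all classes."""
    return sum(row[col] for row in report.values()) / len(report)


def weighted_avg(col):
    """Mean of one metric column, weighted by each class's support."""
    total = sum(row[SUPPORT] for row in report.values())
    return sum(row[col] * row[SUPPORT] for row in report.values()) / total


total_support = sum(row[SUPPORT] for row in report.values())

print("total support:", total_support)
for name, col in (("precision", 0), ("recall", 1), ("f1-score", 2)):
    print(f"{name:>9}  macro={macro_avg(col):.4f}  weighted={weighted_avg(col):.4f}")
```

Note that the support-weighted recall is the same quantity as overall accuracy, which is why those two entries in the report agree.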

---

## **Label Space: 5 Classes**

This model classifies each image into one of the following content types:

```

Class 0: "Anime Picture"

Class 1: "Hentai Picture"

Class 2: "Normal or Safe"

Class 3: "Pornography"

Class 4: "Enticing or Sensual"

```

---

## **Install Dependencies**

```bash

pip install -q transformers torch pillow gradio

```

---

## **Inference Code**

```python
import gradio as gr
from transformers import AutoImageProcessor, SiglipForImageClassification
from PIL import Image
import torch

# Load model and processor
model_name = "prithivMLmods/siglip2-x256p32-explicit-content"  # Replace with your HF model path if needed
model = SiglipForImageClassification.from_pretrained(model_name)
processor = AutoImageProcessor.from_pretrained(model_name)

# ID to Label mapping
id2label = {
    "0": "Anime Picture",
    "1": "Hentai Picture",
    "2": "Normal or Safe",
    "3": "Pornography",
    "4": "Enticing or Sensual"
}


def classify_explicit_content(image):
    # Gradio passes the image as a NumPy array; convert it to an RGB PIL image
    image = Image.fromarray(image).convert("RGB")
    inputs = processor(images=image, return_tensors="pt")

    # Run inference without tracking gradients
    with torch.no_grad():
        outputs = model(**inputs)
        logits = outputs.logits
        probs = torch.nn.functional.softmax(logits, dim=1).squeeze().tolist()

    # Map each class index to its label and rounded probability
    prediction = {
        id2label[str(i)]: round(probs[i], 3) for i in range(len(probs))
    }
    return prediction


# Gradio Interface
iface = gr.Interface(
    fn=classify_explicit_content,
    inputs=gr.Image(type="numpy"),
    outputs=gr.Label(num_top_classes=5, label="Predicted Content Type"),
    title="siglip2-x256p32-explicit-content",
    description="Classifies images as Anime, Hentai, Pornography, Enticing, or Safe for use in moderation systems."
)

if __name__ == "__main__":
    iface.launch()
```
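The post-processing step in `classify_explicit_content` (softmax over the logits, then a label-to-score dictionary) can be illustrated without loading the model. The sketch below uses only the standard library; the logit values and the `logits_to_prediction` helper are made up for illustration and are not real model output or part of the model's API.

```python
import math

# Same label order as the mapping used in the inference code
ID2LABEL = {
    0: "Anime Picture",
    1: "Hentai Picture",
    2: "Normal or Safe",
    3: "Pornography",
    4: "Enticing or Sensual",
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_to_prediction(logits):
    """Turn raw logits into a {label: probability} dict, rounded to 3 places."""
    probs = softmax(logits)
    return {ID2LABEL[i]: round(p, 3) for i, p in enumerate(probs)}


# Hypothetical logits for a single image (assumption, not model output)
example_logits = [0.2, -1.3, 3.1, -2.0, 0.5]

prediction = logits_to_prediction(example_logits)
top_label = max(prediction, key=prediction.get)

print(prediction)
print("Top label:", top_label)
```

Subtracting the maximum logit before exponentiating does not change the result but avoids overflow for large logits.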

---

## **Intended Use**

This model is intended for:

- **AI-Powered Content Moderation**

- **NSFW and Explicit Media Detection**

- **Content Filtering in Social Media Platforms**

- **Image Dataset Cleaning & Annotation**

- **Parental Control Solutions**
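For the moderation and filtering uses listed above, the per-class scores usually need to be turned into an allow/flag decision. A minimal sketch of one way to do that follows. The set of flagged labels, the `0.5` threshold, and the `moderate` helper are illustrative assumptions, not part of the model or this card, and would need tuning against a platform's own policy. The example score dictionaries are invented.

```python
# Assumption: which labels count as "flagged" is a policy choice, not defined by the model
FLAGGED_LABELS = {"Hentai Picture", "Pornography", "Enticing or Sensual"}


def moderate(prediction, threshold=0.5):
    """Flag an image when the combined score of flagged labels reaches the threshold.

    `prediction` is a {label: probability} dict shaped like the output of the
    inference function in this card. `threshold` is a hypothetical default.
    """
    flagged_score = sum(
        score for label, score in prediction.items() if label in FLAGGED_LABELS
    )
    top_label = max(prediction, key=prediction.get)
    return {
        "flag": flagged_score >= threshold,
        "flagged_score": round(flagged_score, 3),
        "top_label": top_label,
    }


# Invented score dictionaries for illustration only
safe_example = {
    "Anime Picture": 0.04,
    "Hentai Picture": 0.01,
    "Normal or Safe": 0.90,
    "Pornography": 0.02,
    "Enticing or Sensual": 0.03,
}
explicit_example = {
    "Anime Picture": 0.01,
    "Hentai Picture": 0.05,
    "Normal or Safe": 0.04,
    "Pornography": 0.85,
    "Enticing or Sensual": 0.05,
}

print(moderate(safe_example))
print(moderate(explicit_example))
```

Summing the flagged classes rather than looking only at the top label makes the decision less sensitive to probability mass being split between two explicit categories; a stricter or looser policy could change the label set or threshold.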