Update README.md

README.md

CHANGED

|

@@ -17,11 +17,11 @@ pipeline_tag: text-generation

|

|

| 17 |

<br>

|

| 18 |

📖 Check out the GLM-4.6 <a href="https://z.ai/blog/glm-4.6" target="_blank">technical blog</a>, <a href="https://arxiv.org/abs/2508.06471" target="_blank">technical report(GLM-4.5)</a>, and <a href="https://zhipu-ai.feishu.cn/wiki/Gv3swM0Yci7w7Zke9E0crhU7n7D" target="_blank">Zhipu AI technical documentation</a>.

|

| 19 |

<br>

|

| 20 |

-

📍 Use GLM-4.6 API services on <a href="https://docs.z.ai/guides/llm/glm-4.6">Z.ai API Platform

|

| 21 |

<br>

|

| 22 |

👉 One click to <a href="https://chat.z.ai">GLM-4.6</a>.

|

| 23 |

</p>

|

| 24 |

-

|

| 25 |

## Model Introduction

|

| 26 |

|

| 27 |

Compared with GLM-4.5, **GLM-4.6** brings several key improvements:

|

|

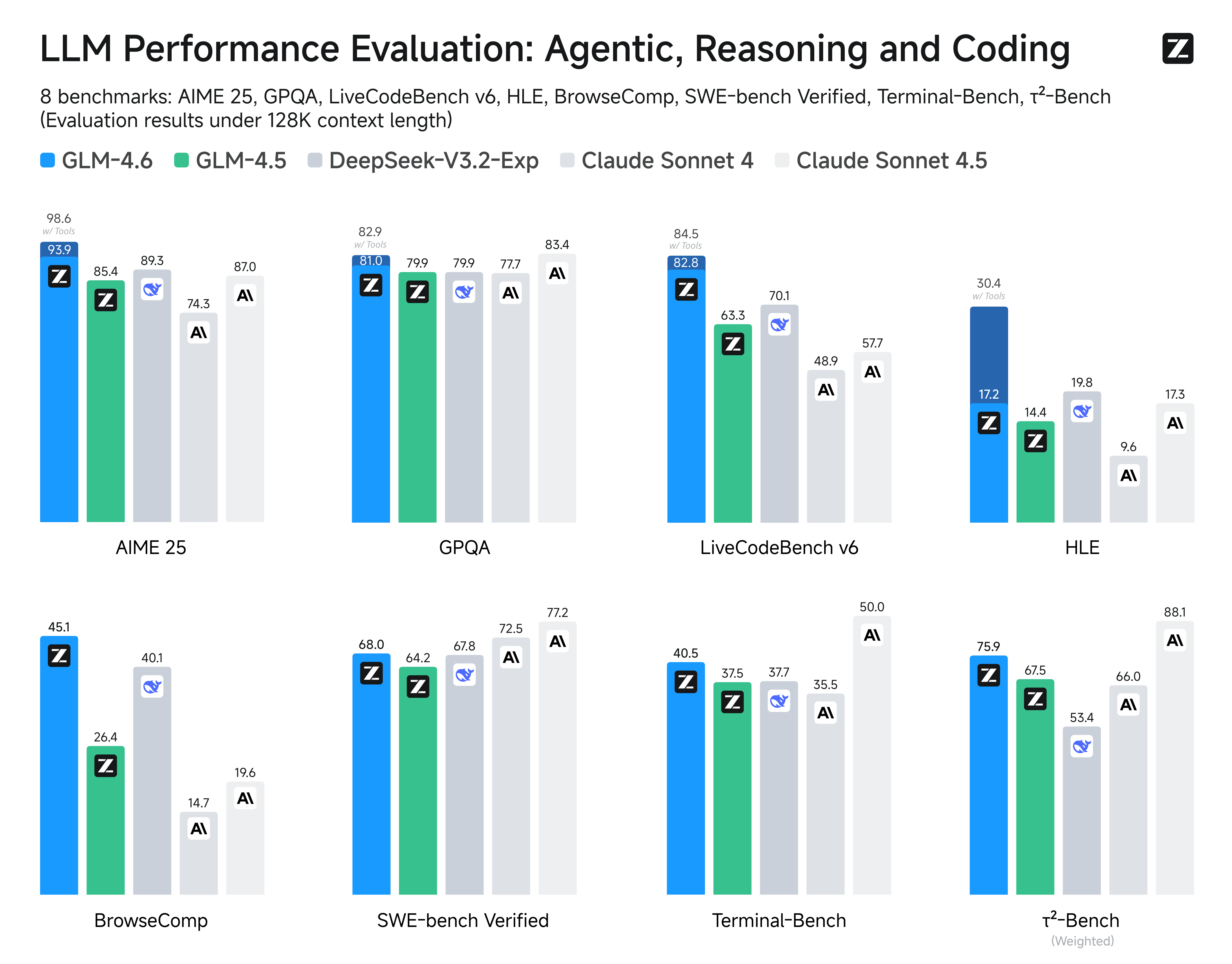

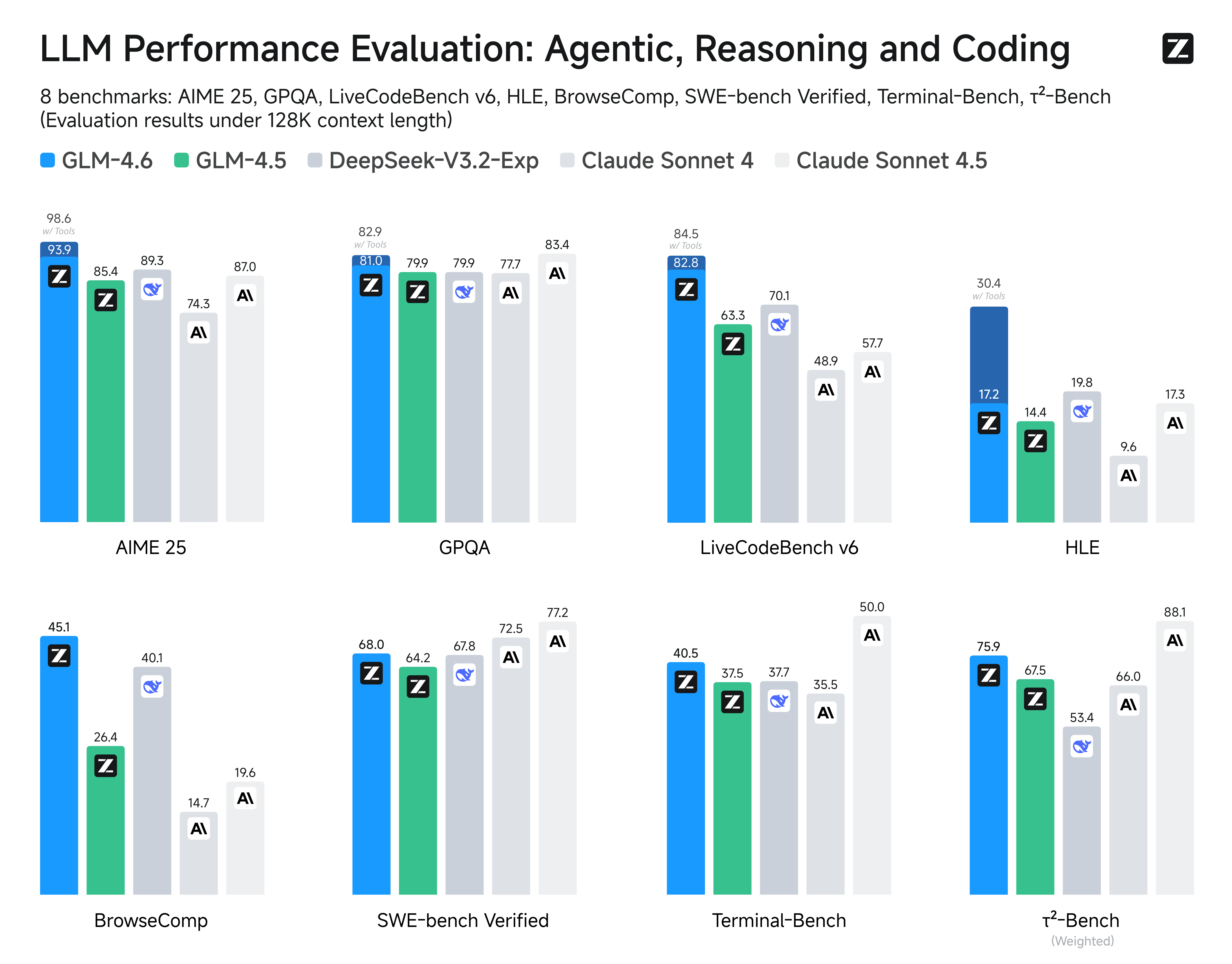

@@ -36,10 +36,17 @@ We evaluated GLM-4.6 across eight public benchmarks covering agents, reasoning,

|

|

| 36 |

|

| 37 |

|

| 38 |

|

| 39 |

-

|

| 40 |

## Inference

|

| 41 |

|

| 42 |

|

| 43 |

-

**

|

| 44 |

|

| 45 |

-

|

| 17 |

<br>

|

| 18 |

📖 Check out the GLM-4.6 <a href="https://z.ai/blog/glm-4.6" target="_blank">technical blog</a>, <a href="https://arxiv.org/abs/2508.06471" target="_blank">technical report(GLM-4.5)</a>, and <a href="https://zhipu-ai.feishu.cn/wiki/Gv3swM0Yci7w7Zke9E0crhU7n7D" target="_blank">Zhipu AI technical documentation</a>.

|

| 19 |

<br>

|

| 20 |

+

📍 Use GLM-4.6 API services on <a href="https://docs.z.ai/guides/llm/glm-4.6">Z.ai API Platform. </a>

|

| 21 |

<br>

|

| 22 |

👉 One click to <a href="https://chat.z.ai">GLM-4.6</a>.

|

| 23 |

</p>

|

| 24 |

+

|

| 25 |

## Model Introduction

|

| 26 |

|

| 27 |

Compared with GLM-4.5, **GLM-4.6** brings several key improvements:

|

| 36 |

|

| 37 |

|

| 38 |

|

| 39 |

## Inference

|

| 40 |

|

| 41 |

+

**Both GLM-4.5 and GLM-4.6 use the same inference method.**

|

| 42 |

+

|

| 43 |

+

You can check our [GitHub](https://github.com/zai-org/GLM-4.5) repository for more details.

|

| 44 |

+

|

| 45 |

+

## Recommended Evaluation Parameters

|

| 46 |

+

|

| 47 |

+

For general evaluations, we recommend using a **sampling temperature of 1.0**.

|

| 48 |

|

| 49 |

+

For **code-related evaluation tasks** (such as LCB), it is further recommended to set:

|

| 50 |

|

| 51 |

+

- `top_p = 0.95`

|

| 52 |

+

- `top_k = 40`

|
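
The recommended evaluation parameters added in this change can be collected into a small helper. This is a minimal sketch, not part of the README or the GLM codebase: the function name `recommended_sampling_params` and the `code_task` flag are hypothetical, and only the three values (`temperature`, `top_p`, `top_k`) come from the text above. The key names are chosen to match the sampling arguments that common inference engines accept.

```python
def recommended_sampling_params(code_task: bool = False) -> dict:
    """Return the sampling settings recommended for GLM-4.6 evaluation.

    Hypothetical helper: only the numeric values are taken from the README.
    """
    # General evaluations: sampling temperature of 1.0.
    params = {"temperature": 1.0}
    if code_task:
        # Code-related evaluations (such as LCB) additionally set top_p and top_k.
        params.update({"top_p": 0.95, "top_k": 40})
    return params


general = recommended_sampling_params()
code_eval = recommended_sampling_params(code_task=True)
print(general)
print(code_eval)
```

The returned dictionary can be passed as keyword arguments to whichever sampling configuration object your inference engine provides, assuming it uses the same parameter names.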