add AIBOM #6

opened by fatima113

bigcode_starcoder2-15b-instruct-v0.1.json

ADDED

|

@@ -0,0 +1,164 @@

| 1 |

+

{

|

| 2 |

+

"bomFormat": "CycloneDX",

|

| 3 |

+

"specVersion": "1.6",

|

| 4 |

+

"serialNumber": "urn:uuid:19c1f27e-d53a-45dc-94ba-a0f9fb30f79a",

|

| 5 |

+

"version": 1,

|

| 6 |

+

"metadata": {

|

| 7 |

+

"timestamp": "2025-07-10T08:49:17.278628+00:00",

|

| 8 |

+

"component": {

|

| 9 |

+

"type": "machine-learning-model",

|

| 10 |

+

"bom-ref": "bigcode/starcoder2-15b-instruct-v0.1-e7aea605-679e-5203-beb5-6bb2732ebe54",

|

| 11 |

+

"name": "bigcode/starcoder2-15b-instruct-v0.1",

|

| 12 |

+

"externalReferences": [

|

| 13 |

+

{

|

| 14 |

+

"url": "https://huggingface.co/bigcode/starcoder2-15b-instruct-v0.1",

|

| 15 |

+

"type": "documentation"

|

| 16 |

+

}

|

| 17 |

+

],

|

| 18 |

+

"modelCard": {

|

| 19 |

+

"modelParameters": {

|

| 20 |

+

"task": "text-generation",

|

| 21 |

+

"architectureFamily": "starcoder2",

|

| 22 |

+

"modelArchitecture": "Starcoder2ForCausalLM",

|

| 23 |

+

"datasets": [

|

| 24 |

+

{

|

| 25 |

+

"ref": "bigcode/self-oss-instruct-sc2-exec-filter-50k-87afcdf4-328e-5009-ba04-8150239e25f2"

|

| 26 |

+

}

|

| 27 |

+

]

|

| 28 |

+

},

|

| 29 |

+

"properties": [

|

| 30 |

+

{

|

| 31 |

+

"name": "library_name",

|

| 32 |

+

"value": "transformers"

|

| 33 |

+

},

|

| 34 |

+

{

|

| 35 |

+

"name": "base_model",

|

| 36 |

+

"value": "bigcode/starcoder2-15b"

|

| 37 |

+

}

|

| 38 |

+

],

|

| 39 |

+

"quantitativeAnalysis": {

|

| 40 |

+

"performanceMetrics": [

|

| 41 |

+

{

|

| 42 |

+

"slice": "dataset: livecodebench-codegeneration",

|

| 43 |

+

"type": "pass@1",

|

| 44 |

+

"value": 20.4

|

| 45 |

+

},

|

| 46 |

+

{

|

| 47 |

+

"slice": "dataset: livecodebench-selfrepair",

|

| 48 |

+

"type": "pass@1",

|

| 49 |

+

"value": 20.9

|

| 50 |

+

},

|

| 51 |

+

{

|

| 52 |

+

"slice": "dataset: livecodebench-testoutputprediction",

|

| 53 |

+

"type": "pass@1",

|

| 54 |

+

"value": 29.8

|

| 55 |

+

},

|

| 56 |

+

{

|

| 57 |

+

"slice": "dataset: livecodebench-codeexecution",

|

| 58 |

+

"type": "pass@1",

|

| 59 |

+

"value": 28.1

|

| 60 |

+

},

|

| 61 |

+

{

|

| 62 |

+

"slice": "dataset: humaneval",

|

| 63 |

+

"type": "pass@1",

|

| 64 |

+

"value": 72.6

|

| 65 |

+

},

|

| 66 |

+

{

|

| 67 |

+

"slice": "dataset: humanevalplus",

|

| 68 |

+

"type": "pass@1",

|

| 69 |

+

"value": 63.4

|

| 70 |

+

},

|

| 71 |

+

{

|

| 72 |

+

"slice": "dataset: mbpp",

|

| 73 |

+

"type": "pass@1",

|

| 74 |

+

"value": 75.2

|

| 75 |

+

},

|

| 76 |

+

{

|

| 77 |

+

"slice": "dataset: mbppplus",

|

| 78 |

+

"type": "pass@1",

|

| 79 |

+

"value": 61.2

|

| 80 |

+

},

|

| 81 |

+

{

|

| 82 |

+

"slice": "dataset: ds-1000",

|

| 83 |

+

"type": "pass@1",

|

| 84 |

+

"value": 40.6

|

| 85 |

+

}

|

| 86 |

+

]

|

| 87 |

+

}

|

| 88 |

+

},

|

| 89 |

+

"authors": [

|

| 90 |

+

{

|

| 91 |

+

"name": "bigcode"

|

| 92 |

+

}

|

| 93 |

+

],

|

| 94 |

+

"licenses": [

|

| 95 |

+

{

|

| 96 |

+

"license": {

|

| 97 |

+

"name": "bigcode-openrail-m"

|

| 98 |

+

}

|

| 99 |

+

}

|

| 100 |

+

],

|

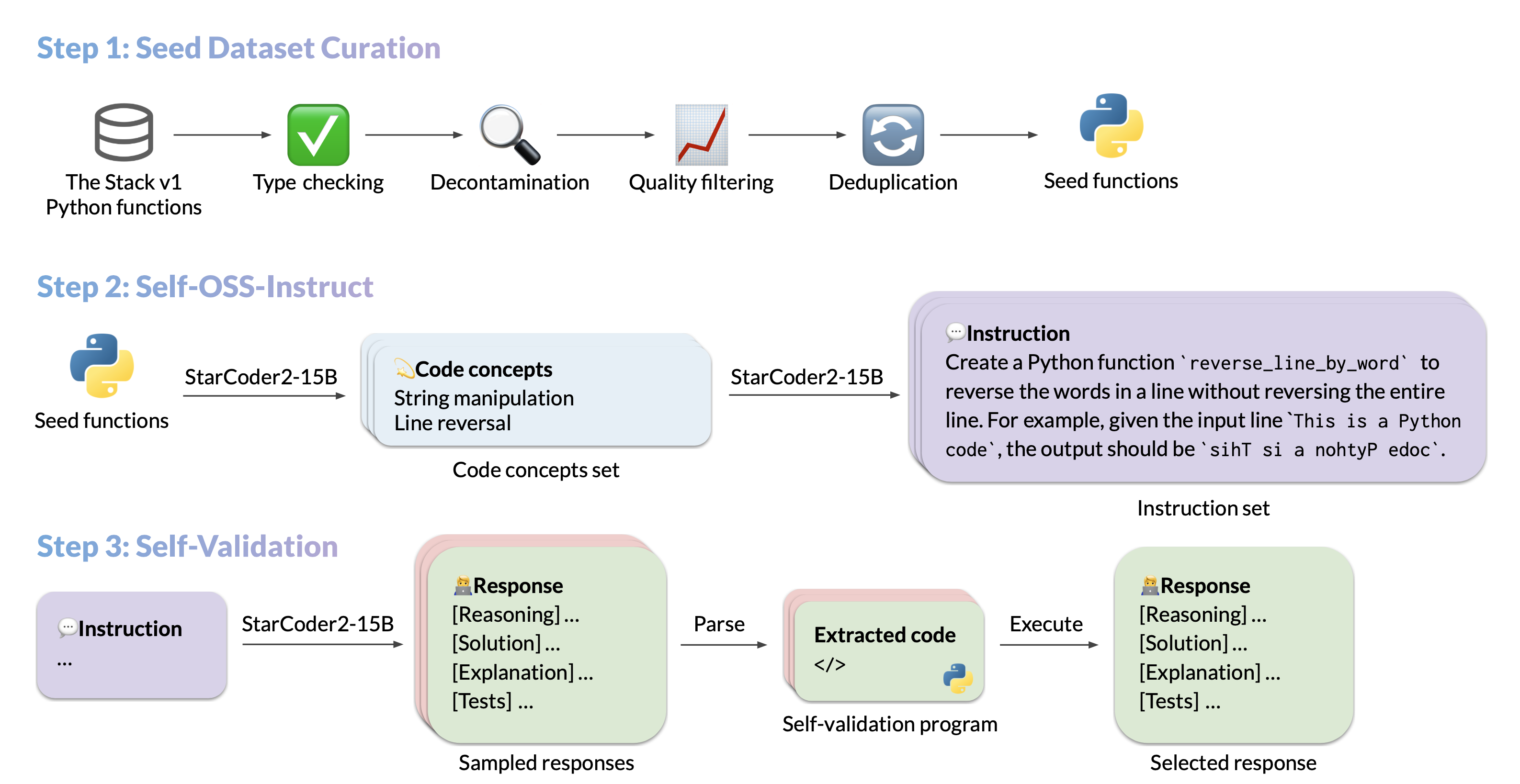

| 101 |

+

"description": "We introduce StarCoder2-15B-Instruct-v0.1, the very first entirely self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. Our open-source pipeline uses StarCoder2-15B to generate thousands of instruction-response pairs, which are then used to fine-tune StarCoder-15B itself without any human annotations or distilled data from huge and proprietary LLMs.- **Model:** [bigcode/starcoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-instruct-15b-v0.1)- **Code:** [bigcode-project/starcoder2-self-align](https://github.com/bigcode-project/starcoder2-self-align)- **Dataset:** [bigcode/self-oss-instruct-sc2-exec-filter-50k](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k/)- **Authors:**[Yuxiang Wei](https://yuxiang.cs.illinois.edu),[Federico Cassano](https://federico.codes/),[Jiawei Liu](https://jw-liu.xyz),[Yifeng Ding](https://yifeng-ding.com),[Naman Jain](https://naman-ntc.github.io),[Harm de Vries](https://www.harmdevries.com),[Leandro von Werra](https://twitter.com/lvwerra),[Arjun Guha](https://www.khoury.northeastern.edu/home/arjunguha/main/home/),[Lingming Zhang](https://lingming.cs.illinois.edu).",

|

| 102 |

+

"tags": [

|

| 103 |

+

"transformers",

|

| 104 |

+

"safetensors",

|

| 105 |

+

"starcoder2",

|

| 106 |

+

"text-generation",

|

| 107 |

+

"code",

|

| 108 |

+

"conversational",

|

| 109 |

+

"dataset:bigcode/self-oss-instruct-sc2-exec-filter-50k",

|

| 110 |

+

"arxiv:2410.24198",

|

| 111 |

+

"base_model:bigcode/starcoder2-15b",

|

| 112 |

+

"base_model:finetune:bigcode/starcoder2-15b",

|

| 113 |

+

"license:bigcode-openrail-m",

|

| 114 |

+

"model-index",

|

| 115 |

+

"autotrain_compatible",

|

| 116 |

+

"text-generation-inference",

|

| 117 |

+

"endpoints_compatible",

|

| 118 |

+

"region:us"

|

| 119 |

+

]

|

| 120 |

+

}

|

| 121 |

+

},

|

| 122 |

+

"components": [

|

| 123 |

+

{

|

| 124 |

+

"type": "data",

|

| 125 |

+

"bom-ref": "bigcode/self-oss-instruct-sc2-exec-filter-50k-87afcdf4-328e-5009-ba04-8150239e25f2",

|

| 126 |

+

"name": "bigcode/self-oss-instruct-sc2-exec-filter-50k",

|

| 127 |

+

"data": [

|

| 128 |

+

{

|

| 129 |

+

"type": "dataset",

|

| 130 |

+

"bom-ref": "bigcode/self-oss-instruct-sc2-exec-filter-50k-87afcdf4-328e-5009-ba04-8150239e25f2",

|

| 131 |

+

"name": "bigcode/self-oss-instruct-sc2-exec-filter-50k",

|

| 132 |

+

"contents": {

|

| 133 |

+

"url": "https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k",

|

| 134 |

+

"properties": [

|

| 135 |

+

{

|

| 136 |

+

"name": "pretty_name",

|

| 137 |

+

"value": "StarCoder2-15b Self-Alignment Dataset (50K)"

|

| 138 |

+

},

|

| 139 |

+

{

|

| 140 |

+

"name": "configs",

|

| 141 |

+

"value": "Name of the dataset subset: default {\"split\": \"train\", \"path\": \"data/train-*\"}"

|

| 142 |

+

},

|

| 143 |

+

{

|

| 144 |

+

"name": "license",

|

| 145 |

+

"value": "odc-by"

|

| 146 |

+

}

|

| 147 |

+

]

|

| 148 |

+

},

|

| 149 |

+

"governance": {

|

| 150 |

+

"owners": [

|

| 151 |

+

{

|

| 152 |

+

"organization": {

|

| 153 |

+

"name": "bigcode",

|

| 154 |

+

"url": "https://huggingface.co/bigcode"

|

| 155 |

+

}

|

| 156 |

+

}

|

| 157 |

+

]

|

| 158 |

+

},

|

| 159 |

+

"description": "Final self-alignment training dataset for StarCoder2-Instruct. \n\nseed: Contains the seed Python function\nconcepts: Contains the concepts generated from the seed\ninstruction: Contains the instruction generated from the concepts\nresponse: Contains the execution-validated response to the instruction\n\nThis dataset utilizes seed Python functions derived from the MultiPL-T pipeline.\n"

|

| 160 |

+

}

|

| 161 |

+

]

|

| 162 |

+

}

|

| 163 |

+

]

|

| 164 |

+

}

|
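A reviewer may want to confirm that the dataset `ref` in `modelCard.modelParameters.datasets` resolves to a `bom-ref` under `components`, and to read the reported `pass@1` values in one place. The following is a minimal sketch of such a check, not part of this PR: the helper names `unresolved_dataset_refs` and `metrics_by_slice` are hypothetical, and `SAMPLE` is a trimmed copy of the added file that keeps only the fields the check needs.

```python
# Trimmed copy of the AIBOM added in this PR (only the fields used below).
DATASET_REF = (
    "bigcode/self-oss-instruct-sc2-exec-filter-50k-"
    "87afcdf4-328e-5009-ba04-8150239e25f2"
)

SAMPLE = {
    "bomFormat": "CycloneDX",
    "specVersion": "1.6",
    "metadata": {
        "component": {
            "type": "machine-learning-model",
            "name": "bigcode/starcoder2-15b-instruct-v0.1",
            "modelCard": {
                "modelParameters": {"datasets": [{"ref": DATASET_REF}]},
                "quantitativeAnalysis": {
                    "performanceMetrics": [
                        {"slice": "dataset: humaneval", "type": "pass@1", "value": 72.6},
                        {"slice": "dataset: mbpp", "type": "pass@1", "value": 75.2},
                    ]
                },
            },
        }
    },
    "components": [{"type": "data", "bom-ref": DATASET_REF}],
}


def _model_card(bom: dict) -> dict:
    """Return the model card of the BOM's primary component, or {} if absent."""
    return bom.get("metadata", {}).get("component", {}).get("modelCard", {})


def unresolved_dataset_refs(bom: dict) -> list:
    """List dataset refs in the model card that match no component bom-ref."""
    known = {c.get("bom-ref") for c in bom.get("components", [])}
    datasets = _model_card(bom).get("modelParameters", {}).get("datasets", [])
    return [d["ref"] for d in datasets if "ref" in d and d["ref"] not in known]


def metrics_by_slice(bom: dict) -> dict:
    """Map each performance metric's slice label to its reported value."""
    metrics = _model_card(bom).get("quantitativeAnalysis", {}).get(
        "performanceMetrics", []
    )
    return {m["slice"]: m["value"] for m in metrics}


if __name__ == "__main__":
    print("unresolved refs:", unresolved_dataset_refs(SAMPLE))
    for slice_name, value in metrics_by_slice(SAMPLE).items():
        print(slice_name, value)
```

To run the same check against the full file, load it with `json.load` and pass the resulting dict in place of `SAMPLE`.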