---
library_name: transformers
license: cc-by-nc-4.0
pipeline_tag: text-generation
tags:
- text-to-sql
- reinforcement-learning
---

SLM-SQL: An Exploration of Small Language Models for Text-to-SQL

Important Links

📖 arXiv Paper | 💻 GitHub | 🤗 HuggingFace | 🤖 ModelScope

News

- July 31, 2025: Uploaded the models to ModelScope and HuggingFace.
- July 30, 2025: Published the paper on arXiv.

Introduction

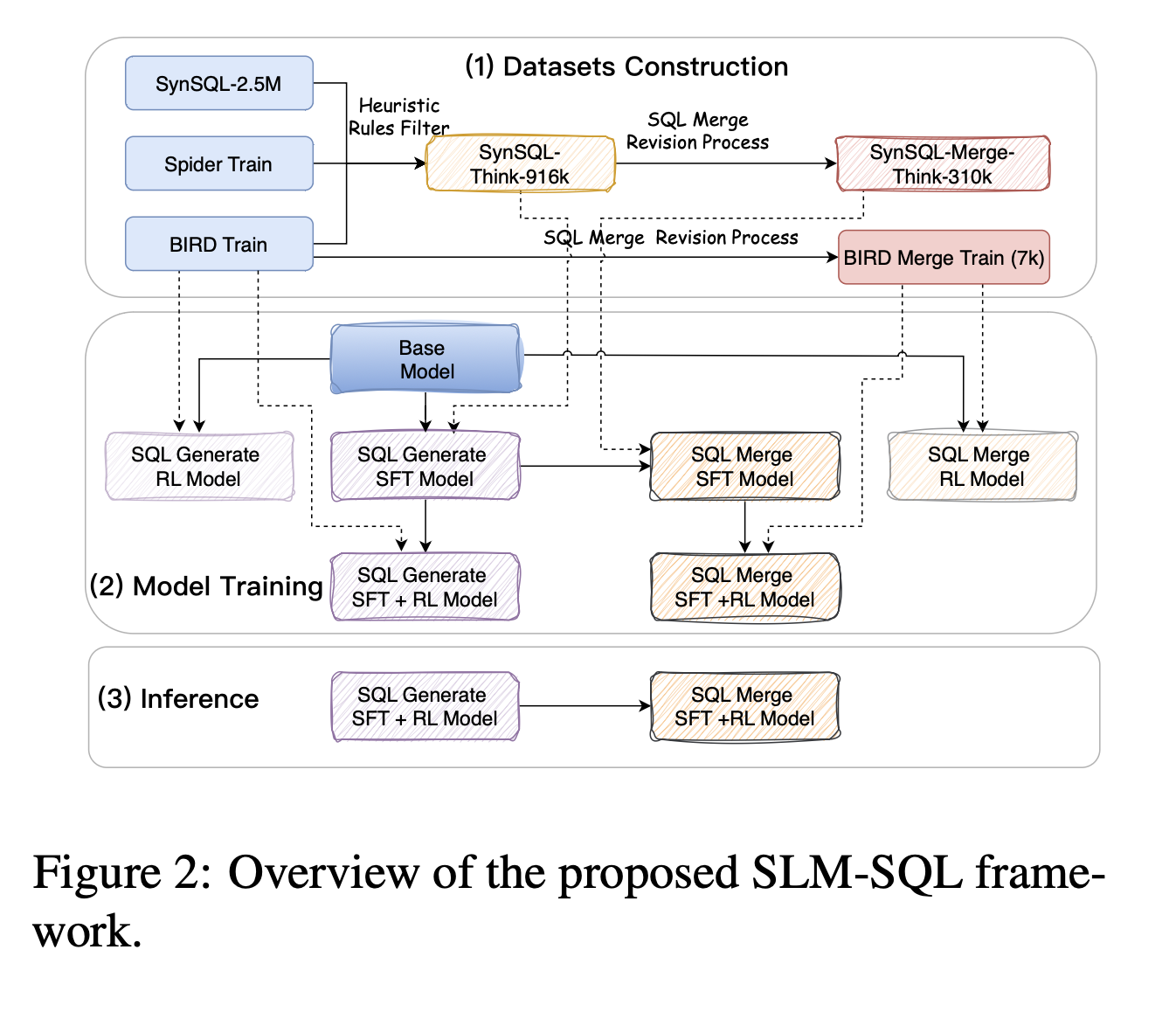

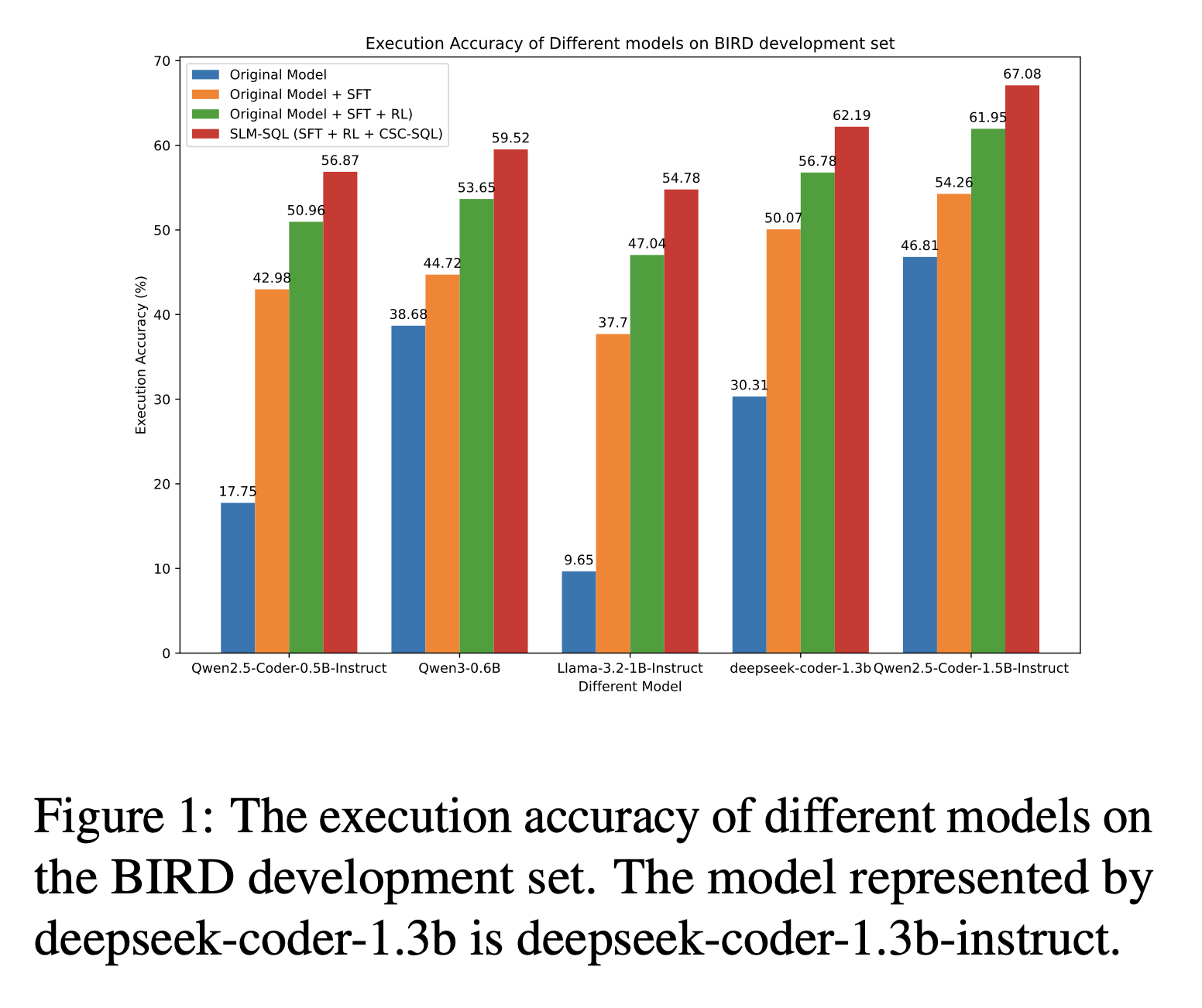

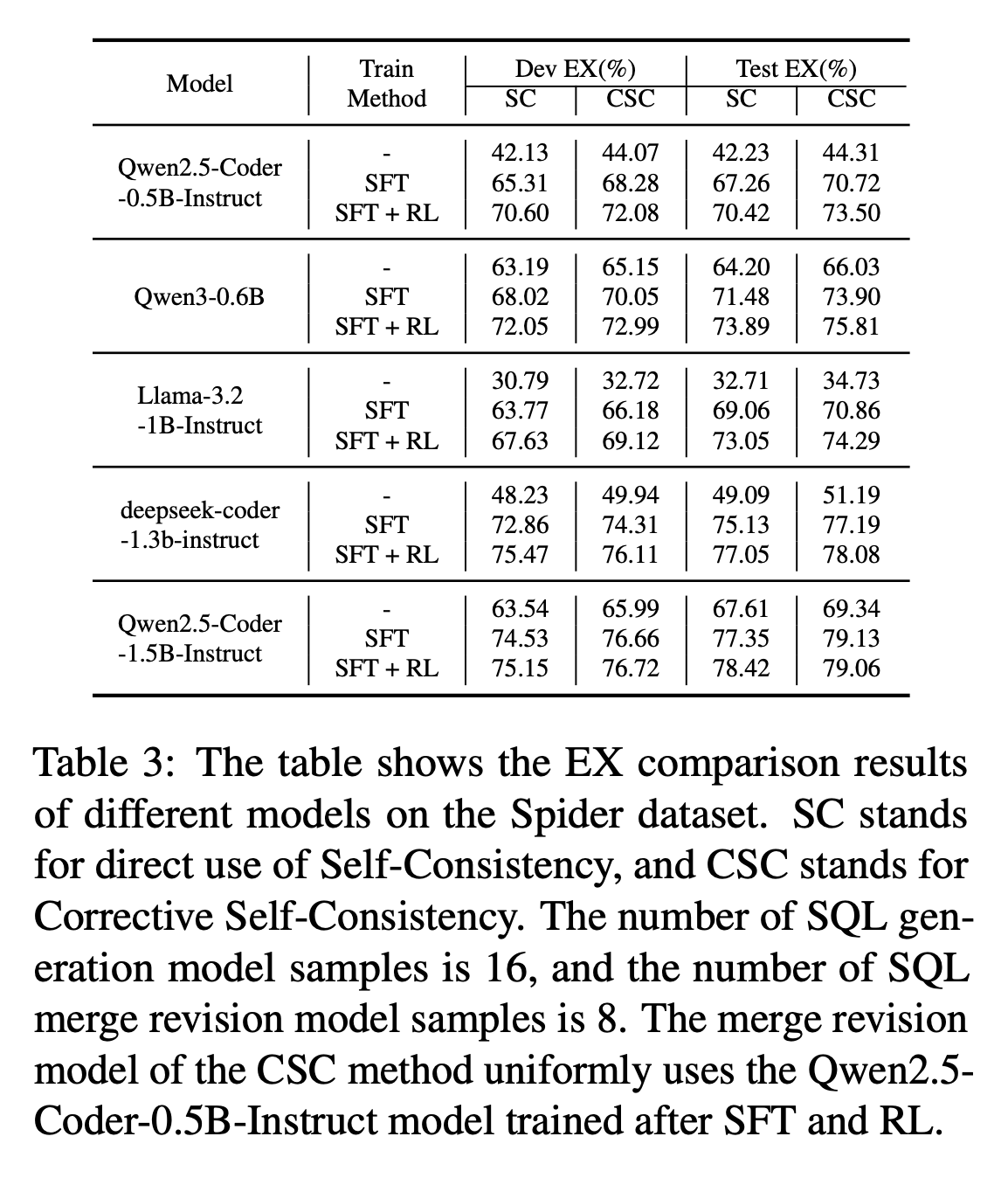

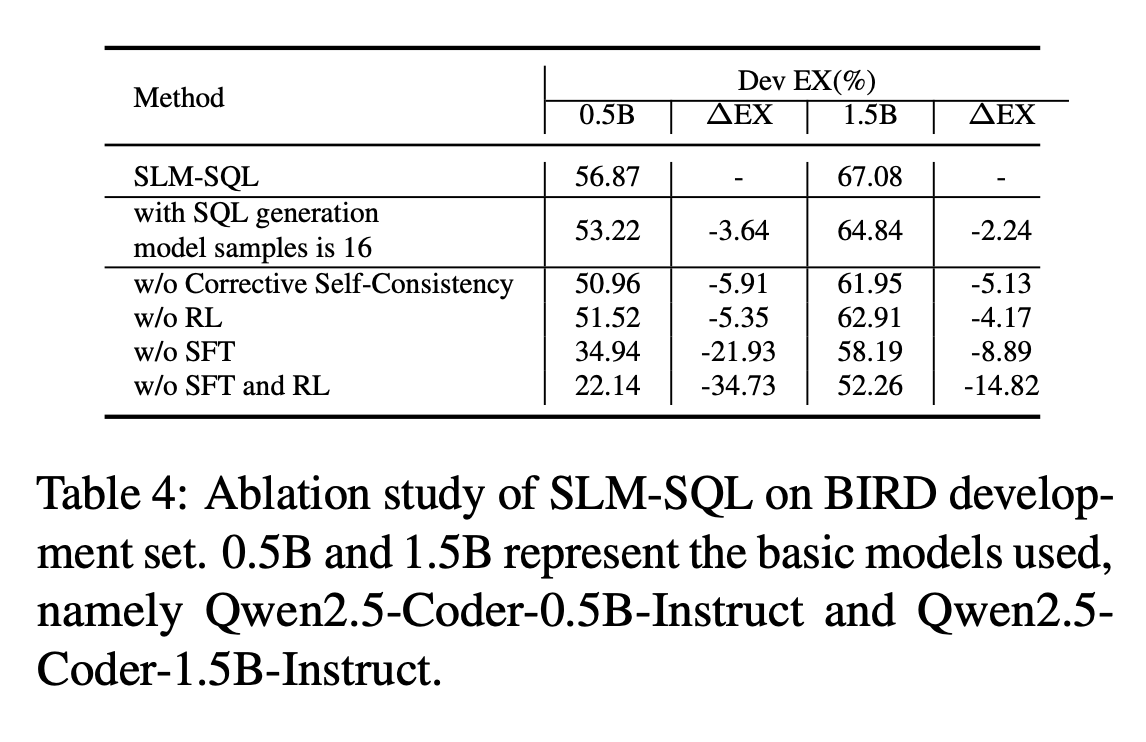

Large language models (LLMs) have demonstrated strong performance in translating natural language questions into SQL queries (Text-to-SQL). In contrast, small language models (SLMs) ranging from 0.5B to 1.5B parameters currently underperform on Text-to-SQL tasks due to their limited logical reasoning capabilities. However, SLMs offer inherent advantages in inference speed and suitability for edge deployment. To explore their potential in Text-to-SQL applications, we leverage recent advancements in post-training techniques. Specifically, we use the open-source SynSQL-2.5M dataset to construct two derived datasets: SynSQL-Think-916K for SQL generation and SynSQL-Merge-Think-310K for SQL merge revision. We then apply supervised fine-tuning and reinforcement learning-based post-training to the SLMs, followed by inference using a corrective self-consistency approach. Experimental results validate the effectiveness and generalizability of our method, SLM-SQL. On the BIRD development set, the five evaluated models achieve an average improvement of 31.4 points. Notably, the 0.5B model reaches 56.87% execution accuracy (EX), while the 1.5B model achieves 67.08% EX. We will release our dataset, model, and code on GitHub: https://github.com/CycloneBoy/slm_sql.
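As a rough illustration of the self-consistency idea behind the inference step, the sketch below executes several candidate queries on a SQLite database, groups them by execution result, and keeps a query from the largest group. It is a simplified sketch under these assumptions, not the released implementation: the corrective merge-revision stage is left out, and the helper names `execute_sql` and `vote_by_execution` are hypothetical.

```python
import sqlite3
from collections import defaultdict


def execute_sql(conn, sql):
    """Run a query and return an order-insensitive, hashable result, or None on error."""
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.Error:
        return None
    return tuple(sorted(rows, key=repr))


def vote_by_execution(conn, candidates):
    """Return one query from the largest group of candidates with identical results."""
    groups = defaultdict(list)
    for sql in candidates:
        result = execute_sql(conn, sql)
        if result is not None:  # candidates that fail to execute get no vote
            groups[result].append(sql)
    if not groups:
        return None
    best_group = max(groups.values(), key=len)
    return best_group[0]


# Toy database and candidate queries, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE member (id INTEGER, department TEXT)")
conn.executemany(
    "INSERT INTO member VALUES (?, ?)", [(1, "Sales"), (2, "Sales"), (3, "HR")]
)
candidates = [
    "SELECT COUNT(*) FROM member WHERE department = 'Sales'",
    "SELECT COUNT(id) FROM member WHERE department = 'Sales'",
    "SELECT COUNT(*) FROM member",
    "SELECT COUNT(*) FROM members",  # invalid table name, filtered out
]
print(vote_by_execution(conn, candidates))
```

Voting on execution results rather than on query text lets syntactically different but equivalent queries support each other, as the first two candidates do here.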

Framework

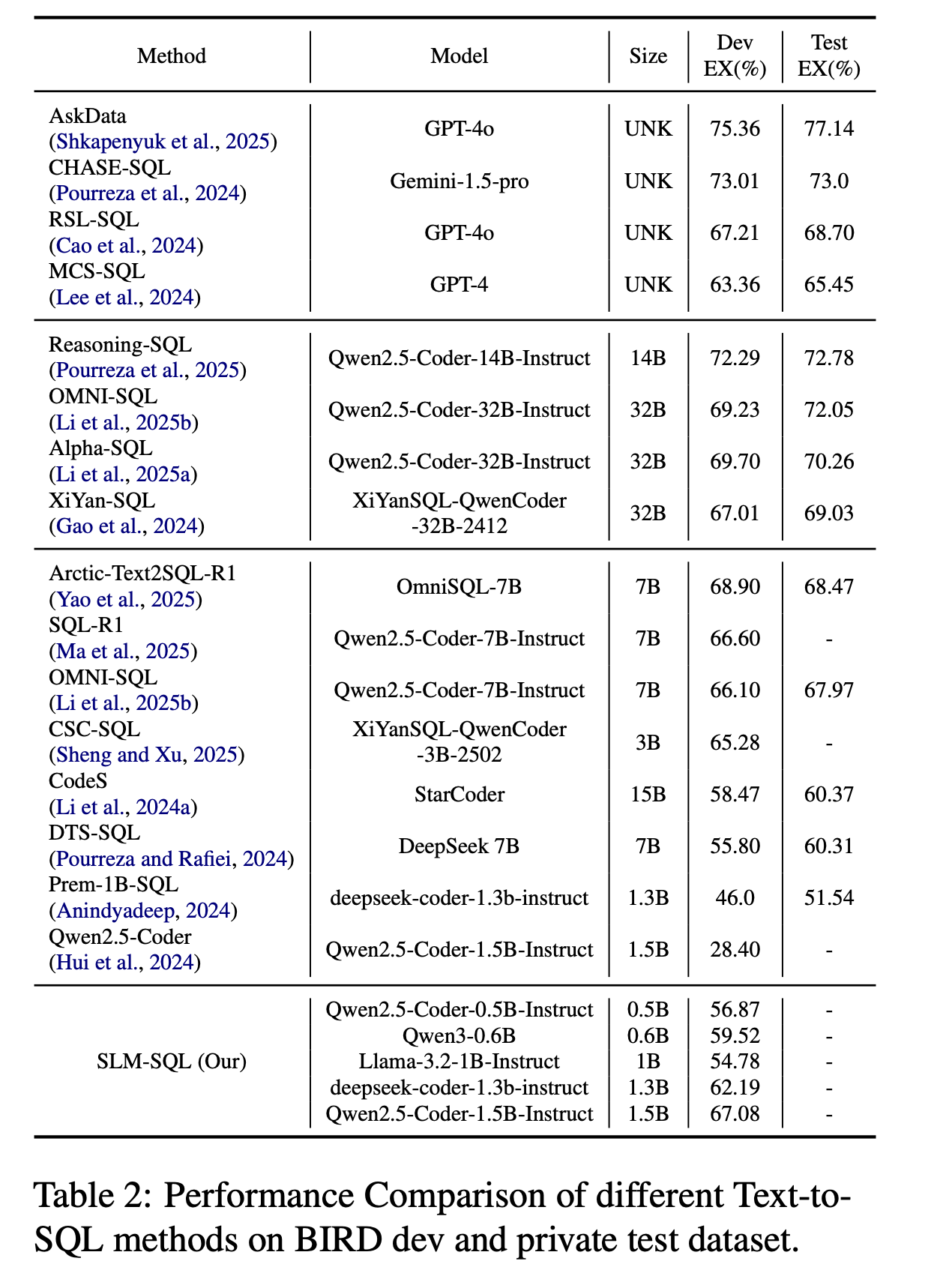

Main Results

Performance comparison of different Text-to-SQL methods on the BIRD dev and test sets.
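For readers unfamiliar with the metric, execution accuracy (EX) counts a prediction as correct when it returns the same rows as the reference query on the target database. The sketch below is a minimal illustration under that definition, comparing results as unordered sets of rows (an assumption of this sketch). It is not the official BIRD evaluation script, and the function name `execution_accuracy` is hypothetical.

```python
import sqlite3


def execution_accuracy(conn, pairs):
    """Return the percentage of (predicted_sql, gold_sql) pairs whose results match."""
    correct = 0
    for predicted_sql, gold_sql in pairs:
        gold_rows = set(conn.execute(gold_sql).fetchall())
        try:
            predicted_rows = set(conn.execute(predicted_sql).fetchall())
        except sqlite3.Error:
            continue  # a prediction that fails to execute counts as wrong
        if predicted_rows == gold_rows:
            correct += 1
    return 100.0 * correct / len(pairs) if pairs else 0.0


# Toy database and query pairs, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE member (id INTEGER, department TEXT)")
conn.executemany(
    "INSERT INTO member VALUES (?, ?)", [(1, "Sales"), (2, "Sales"), (3, "HR")]
)
pairs = [
    ("SELECT COUNT(id) FROM member WHERE department = 'Sales'",
     "SELECT COUNT(*) FROM member WHERE department = 'Sales'"),
    ("SELECT COUNT(*) FROM member",
     "SELECT COUNT(*) FROM member WHERE department = 'HR'"),
]
print(f"EX = {execution_accuracy(conn, pairs):.2f}%")  # prints EX = 50.00%
```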

Usage

Here's how to use the model for Text-to-SQL generation.

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "cycloneboy/SLM-SQL-0.5B"  # Or choose another model from the Model table below

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(
    model_id,
    torch_dtype=torch.bfloat16,
    device_map="auto",
)

# Example query

question = "How many members are there in the department of 'Sales'?"

# The chat template is critical for proper inference as the model is instruction-tuned.

messages = [
    {"role": "system", "content": "You are an AI programming assistant, utilizing the Deepseek Coder model, developed by Deepseek Company, and you only answer questions related to computer science. For politically sensitive questions, security and privacy issues, and other non-computer science questions, you will refuse to answer\n"},
    {"role": "user", "content": f"### Instruction:\nGenerate a SQL query for the following question:\n{question}\n"},
]

# Apply the chat template to get the formatted prompt string

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

# Tokenize and generate

input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(model.device)

outputs = model.generate(input_ids, max_new_tokens=256, do_sample=True, temperature=0.01, top_p=0.95)

# Decode the generated text, skipping special tokens

generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)

# Extract only the model's response based on the chat template's structure.
# The response should start after "### Response:\n" and end before "<|EOT|>\n".
# These markers follow the DeepSeek Coder chat template; if they are absent,
# the fallback branches below print the decoded text instead.
response_marker = "### Response:\n"
response_start = generated_text.find(response_marker)
if response_start != -1:
    response_content = generated_text[response_start + len(response_marker):]
    response_end = response_content.find("<|EOT|>")
    if response_end != -1:
        sql_query = response_content[:response_end].strip()
        print(f"Generated SQL: {sql_query}")
    else:
        print(f"Generated text (full): {response_content.strip()}")
else:
    print(f"Generated text (full): {generated_text.strip()}")
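The example above passes only the question. In practice a Text-to-SQL prompt usually also needs the database schema, and the reply may contain more than the final query. The sketch below shows one way to handle both with the standard library. The prompt layout, the assumption that the model wraps its final query in a Markdown `sql` code fence, and the helper names `schema_from_sqlite`, `build_user_prompt` and `extract_sql` are illustrative and not taken from the released code.

```python
import re
import sqlite3

FENCE = "`" * 3  # Markdown code-fence marker (three backticks)


def schema_from_sqlite(conn):
    """Return the CREATE TABLE statements stored in the database, separated by blank lines."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        "WHERE type = 'table' AND sql IS NOT NULL ORDER BY name"
    ).fetchall()
    return "\n\n".join(row[0] for row in rows)


def build_user_prompt(schema, question):
    """Combine schema and question into one user message (hypothetical layout)."""
    return (
        "Database schema:\n"
        f"{schema}\n\n"
        "Question:\n"
        f"{question}\n\n"
        "Generate a SQL query for the question."
    )


def extract_sql(response):
    """Return the last fenced SQL block in the response, or the stripped text if none."""
    pattern = re.escape(FENCE) + r"(?:sql)?\s*(.*?)" + re.escape(FENCE)
    blocks = re.findall(pattern, response, flags=re.DOTALL | re.IGNORECASE)
    return blocks[-1].strip() if blocks else response.strip()


# Toy database and a hand-written reply, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE member (id INTEGER PRIMARY KEY, department TEXT)")
question = "How many members are there in the department of 'Sales'?"
print(build_user_prompt(schema_from_sqlite(conn), question))

reply = (
    "The question only needs the member table.\n"
    f"{FENCE}sql\nSELECT COUNT(*) FROM member WHERE department = 'Sales';\n{FENCE}"
)
print(extract_sql(reply))
```

The string returned by `build_user_prompt` could replace the `content` of the user message in `messages`, and `extract_sql` could be applied to the decoded response before the query is executed.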

Model

| Model | Base Model | Train Method | Modelscope | HuggingFace |
|---|---|---|---|---|
| SLM-SQL-Base-0.5B | Qwen2.5-Coder-0.5B-Instruct | SFT | 🤖 Modelscope | 🤗 HuggingFace |
| SLM-SQL-0.5B | Qwen2.5-Coder-0.5B-Instruct | SFT + GRPO | 🤖 Modelscope | 🤗 HuggingFace |
| CscSQL-Merge-Qwen2.5-Coder-0.5B-Instruct | Qwen2.5-Coder-0.5B-Instruct | SFT + GRPO | 🤖 Modelscope | 🤗 HuggingFace |
| SLM-SQL-Base-1.5B | Qwen2.5-Coder-1.5B-Instruct | SFT | 🤖 Modelscope | 🤗 HuggingFace |
| SLM-SQL-1.5B | Qwen2.5-Coder-1.5B-Instruct | SFT + GRPO | 🤖 Modelscope | 🤗 HuggingFace |
| CscSQL-Merge-Qwen2.5-Coder-1.5B-Instruct | Qwen2.5-Coder-1.5B-Instruct | SFT + GRPO | 🤖 Modelscope | 🤗 HuggingFace |
| SLM-SQL-Base-0.6B | Qwen3-0.6B | SFT | 🤖 Modelscope | 🤗 HuggingFace |
| SLM-SQL-0.6B | Qwen3-0.6B | SFT + GRPO | 🤖 Modelscope | 🤗 HuggingFace |
| SLM-SQL-Base-1.3B | deepseek-coder-1.3b-instruct | SFT | 🤖 Modelscope | 🤗 HuggingFace |
| SLM-SQL-1.3B | deepseek-coder-1.3b-instruct | SFT + GRPO | 🤖 Modelscope | 🤗 HuggingFace |
| SLM-SQL-Base-1B | Llama-3.2-1B-Instruct | SFT | 🤖 Modelscope | 🤗 HuggingFace |

Dataset

| Dataset | Modelscope | HuggingFace |
|---|---|---|
| SynsQL-Think-916k | 🤖 Modelscope | 🤗 HuggingFace |
| SynsQL-Merge-Think-310k | 🤖 Modelscope | 🤗 HuggingFace |
| BIRD train and dev datasets | 🤖 Modelscope | 🤗 HuggingFace |

TODO

- Release inference code

- Upload Model

- Release training code

- Fix bug

- Update doc

Thanks to the following projects

Citation

@misc{sheng2025slmsqlexplorationsmalllanguage,
      title={SLM-SQL: An Exploration of Small Language Models for Text-to-SQL},
      author={Lei Sheng and Shuai-Shuai Xu},
      year={2025},
      eprint={2507.22478},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2507.22478},
}

@misc{sheng2025cscsqlcorrectiveselfconsistencytexttosql,
      title={CSC-SQL: Corrective Self-Consistency in Text-to-SQL via Reinforcement Learning},
      author={Lei Sheng and Shuai-Shuai Xu},
      year={2025},
      eprint={2505.13271},
      archivePrefix={arXiv},
      primaryClass={cs.CL},
      url={https://arxiv.org/abs/2505.13271},
}