url

stringlengths 62

66

| repository_url

stringclasses 1

value | labels_url

stringlengths 76

80

| comments_url

stringlengths 71

75

| events_url

stringlengths 69

73

| html_url

stringlengths 50

56

| id

int64 377M

2.15B

| node_id

stringlengths 18

32

| number

int64 1

29.2k

| title

stringlengths 1

487

| user

dict | labels

list | state

stringclasses 2

values | locked

bool 2

classes | assignee

dict | assignees

list | comments

list | created_at

int64 1.54k

1.71k

| updated_at

int64 1.54k

1.71k

| closed_at

int64 1.54k

1.71k

⌀ | author_association

stringclasses 4

values | active_lock_reason

stringclasses 2

values | body

stringlengths 0

234k

⌀ | reactions

dict | timeline_url

stringlengths 71

75

| state_reason

stringclasses 3

values | draft

bool 2

classes | pull_request

dict |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/26230

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26230/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26230/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26230/events

|

https://github.com/huggingface/transformers/issues/26230

| 1,901,481,216 |

I_kwDOCUB6oc5xVk0A

| 26,230 |

git-base-vatex: input pixel_value dimension mismatch (blocking issue)

|

{

"login": "shreyaskar123",

"id": 47864384,

"node_id": "MDQ6VXNlcjQ3ODY0Mzg0",

"avatar_url": "https://avatars.githubusercontent.com/u/47864384?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/shreyaskar123",

"html_url": "https://github.com/shreyaskar123",

"followers_url": "https://api.github.com/users/shreyaskar123/followers",

"following_url": "https://api.github.com/users/shreyaskar123/following{/other_user}",

"gists_url": "https://api.github.com/users/shreyaskar123/gists{/gist_id}",

"starred_url": "https://api.github.com/users/shreyaskar123/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/shreyaskar123/subscriptions",

"organizations_url": "https://api.github.com/users/shreyaskar123/orgs",

"repos_url": "https://api.github.com/users/shreyaskar123/repos",

"events_url": "https://api.github.com/users/shreyaskar123/events{/privacy}",

"received_events_url": "https://api.github.com/users/shreyaskar123/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hey! I would suggest you to try to isolate the bug as we have limited timeframe to debug your custom code. If this is indeed a bug we can help you, otherwise the [community forum](https://discuss.huggingface.co/) is a good place to ask this! ",

"@ArthurZucker: I believe this is a bug because most of the code in` _get_item_` is from the provided example. Could you please look into this? I believe this has something to do with the git-base-vatex processor. Specifically, inside ` _get_item_` pixel_values is of shape torch.Size([1, 6, 3, 224, 224]) (dim = 5) and then `torch_default_data_collator` is increasing the dimension to 6 via `batch[k] = torch.stack([f[k] for f in features])`, causing the error. I tried to combat this by squeezing the first dimension in `_get_item` and make the tensor of size torch.Size([6, 3, 224, 224]) but then for some reason inside `_call_impl` in `module.py` pixel_values isn't even a part of `kwargs `when doing the `forward_call`, causing an error. I get the exact same error when trying to squeeze the extra dimension inside the `torch_default_data_collator` in `data_collator.py` via the following code. \r\n\r\n```\r\n for k, v in first.items():\r\n if k not in (\"label\", \"label_ids\") and v is not None and not isinstance(v, str):\r\n if isinstance(v, torch.Tensor):\r\n if k == 'pixel_values' and v.shape[0] == 1: # Add this condition\r\n batch[k] = torch.stack([f[k].squeeze(0) for f in features])\r\n else:\r\n batch[k] = torch.stack([f[k] for f in features])\r\n```\r\n\r\n Any help would be greatly appreciated Thanks! ",

"Hi @shreyaskar123 this is not a bug on our side, it's a bug on the data preparation side. You can fix it by removing the batch dimension which the processor creates by default.",

"@NielsRogge: I did try to remove the batch dimension (see https://github.com/huggingface/transformers/issues/26230#issuecomment-1724807694), but I get a error that pixel_values isn't part of kwargs anymore. Could you please take a look? ",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored."

] | 1,695 | 1,698 | 1,698 |

NONE

| null |

### System Info

- `transformers` version: 4.30.2

- Platform: Linux-4.19.0-25-cloud-amd64-x86_64-with-debian-10.13

- Python version: 3.7.12

- Huggingface_hub version: 0.15.1

- Safetensors version: 0.3.1 but is ignored because of PyTorch version too old.

- PyTorch version (GPU?): 1.9.0+cu111 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help?

@NielsRogge

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

```

from torch.utils.data import Dataset

import av

import numpy as np

import torch

from PIL import Image

from huggingface_hub import hf_hub_download

from transformers import AutoProcessor, AutoModelForCausalLM

from generativeimage2text.make_dataset import create_video_captions

from transformers import AutoProcessor, AutoModelForCausalLM, TrainingArguments, Trainer

from typing import Union, List

import json

import glob

import os

import math

from datasets import load_dataset

import shutil

from tqdm import tqdm

from moviepy.editor import VideoFileClip

from evaluate import load

processor = AutoProcessor.from_pretrained("microsoft/git-base-vatex")

model = AutoModelForCausalLM.from_pretrained("microsoft/git-base-vatex")

np.random.seed(45)

def read_video_pyav(container, indices):

'''

Decode the video with PyAV decoder.

Args:

container (`av.container.input.InputContainer`): PyAV container.

indices (`List[int]`): List of frame indices to decode.

Returns:

result (np.ndarray): np array of decoded frames of shape (num_frames, height, width, 3).

'''

frames = []

container.seek(0)

start_index = indices[0]

end_index = indices[-1]

for i, frame in enumerate(container.decode(video=0)):

if i > end_index:

break

if i >= start_index and i in indices:

frames.append(frame)

return np.stack([x.to_ndarray(format="rgb24") for x in frames])

def sample_frame_indices(clip_len, seg_len):

'''

Sample a given number of frame indices from the video.

Args:

clip_len (`int`): Total number of frames to sample.

seg_len (`int`): Maximum allowed index of sample's last frame.

Returns:

indices (`List[int]`): List of sampled frame indices

'''

frame_sample_rate = (seg_len / clip_len) - 1

converted_len = int(clip_len * frame_sample_rate)

end_idx = np.random.randint(converted_len, seg_len)

start_idx = end_idx - converted_len

indices = np.linspace(start_idx, end_idx, num=clip_len)

indices = np.clip(indices, start_idx, end_idx - 1).astype(np.int64)

return indices

class VideoCaptioningDataset(Dataset):

def __init__(self, videos, captions, processor, num_frames):

self.videos = videos

self.captions = captions

self.processor = processor

self.num_frames = num_frames

self.cache = {} # to store processed samples

def __len__(self):

return len(self.videos)

def __getitem__(self, idx):

if idx in self.cache:

return self.cache[idx]

video_file = list(self.videos)[idx]

caption = self.captions[idx]

container = av.open(video_file)

indices = sample_frame_indices(

clip_len=self.num_frames, seg_len=container.streams.video[0].frames

)

frames = read_video_pyav(container, indices)

# process the pixel values and caption with the processor

pixel_values = self.processor(images=list(frames), return_tensors="pt").pixel_values

# pixel_values = pixel_values.squeeze(0)

inputs = self.processor(text=caption, return_tensors="pt", padding="max_length", max_length=50)

sample = {

"pixel_values": pixel_values,

"input_ids": inputs["input_ids"],

"attention_mask": inputs["attention_mask"],

"labels": inputs["input_ids"],

}

return sample

from sklearn.model_selection import train_test_split

videos = ['/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_03_segment_0.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_0.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_1.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_2.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_3.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_4.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_5.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_6.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_7.mp4',

'/home/name/GenerativeImage2Text/generativeimage2text/output_videos/clip_07_segment_8.mp4']

captions = ['hi', 'hi', 'hi', 'hi', 'hi', 'hi', 'hi', 'hi', 'hi', 'hi'] # for demo -- the real data is PHI but I can confirm that the video files exist and is in the same format so that isn't the issue.

dataset = VideoCaptioningDataset(videos, captions, processor, 6)

train_dataset, val_dataset = train_test_split(dataset, test_size=0.1)

training_args = TrainingArguments(

output_dir=f"video_finetune_1",

learning_rate=5e-5,

num_train_epochs=50,

fp16=True,

per_device_train_batch_size=4,

per_device_eval_batch_size=4,

gradient_accumulation_steps=16,

save_total_limit=3,

evaluation_strategy="steps",

eval_steps=50,

save_strategy="steps",

save_steps=50,

logging_steps=50,

remove_unused_columns=False,

push_to_hub=False,

label_names=["labels"],

load_best_model_at_end=True

)

def compute_metrics(eval_pred):

logits, labels = eval_pred

predicted = logits.argmax(-1)

decoded_labels = processor.batch_decode(labels, skip_special_tokens=True)

decoded_predictions = processor.batch_decode(predicted, skip_special_tokens=True)

wer_score = wer.compute(predictions=decoded_predictions, references=decoded_labels)

bleu_score = bleu.compute(predictions=decoded_predictions, references=decoded_labels)

return {"wer_score": wer_score, "bleu_score": bleu_score}

wer = load("wer")

bleu = load("bleu")

trainer = Trainer(

model=model,

args=training_args,

train_dataset=train_dataset,

eval_dataset=val_dataset,

compute_metrics=compute_metrics,

)

val = train_dataset[0]["pixel_values"].ndim

print(f"the dim is {val}")

trainer.train()

```

At this point when I do the print the dimension is 5 (as expected). But when I print the dimension of ```pixel_values``` in the first line in ``` forward ``` in file ``` modeling_git.py" ``` the dimension is 6. Because of this I get error

``` raise ValueError("pixel_values must be of rank 4 or 5") ValueError: pixel_values must be of rank 4 or 5 ```

This is the full stack trace for reference:

```

File "generativeimage2text/video_finetune.py", line 231, in <module>

trainer.train()

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/transformers/trainer.py", line 1649, in train

ignore_keys_for_eval=ignore_keys_for_eval,

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/transformers/trainer.py", line 1938, in _inner_training_loop

tr_loss_step = self.training_step(model, inputs)

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/transformers/trainer.py", line 2759, in training_step

loss = self.compute_loss(model, inputs)

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/transformers/trainer.py", line 2784, in compute_loss

outputs = model(**inputs)

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1051, in _call_impl

return forward_call(*input, **kwargs)

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/accelerate/utils/operations.py", line 553, in forward

return model_forward(*args, **kwargs)

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/accelerate/utils/operations.py", line 541, in __call__

return convert_to_fp32(self.model_forward(*args, **kwargs))

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/torch/cuda/amp/autocast_mode.py", line 141, in decorate_autocast

return func(*args, **kwargs)

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/transformers/models/git/modeling_git.py", line 1507, in forward

return_dict=return_dict,

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1051, in _call_impl

return forward_call(*input, **kwargs)

File "/home/name/GenerativeImage2Text/git2/lib/python3.7/site-packages/transformers/models/git/modeling_git.py", line 1250, in forward

raise ValueError("pixel_values must be of rank 4 or 5")

ValueError: pixel_values must be of rank 4 or 5

```

### Expected behavior

Ideally the dimension of ```pixel_values``` inside ```forward``` would also be 5 and the finetuning of git-base-vatex on video would work This is a blocking issue and any help would be really appreciated!

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26230/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26230/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/26229

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26229/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26229/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26229/events

|

https://github.com/huggingface/transformers/pull/26229

| 1,901,444,669 |

PR_kwDOCUB6oc5amL20

| 26,229 |

refactor: change default block_size

|

{

"login": "pphuc25",

"id": 81808312,

"node_id": "MDQ6VXNlcjgxODA4MzEy",

"avatar_url": "https://avatars.githubusercontent.com/u/81808312?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/pphuc25",

"html_url": "https://github.com/pphuc25",

"followers_url": "https://api.github.com/users/pphuc25/followers",

"following_url": "https://api.github.com/users/pphuc25/following{/other_user}",

"gists_url": "https://api.github.com/users/pphuc25/gists{/gist_id}",

"starred_url": "https://api.github.com/users/pphuc25/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/pphuc25/subscriptions",

"organizations_url": "https://api.github.com/users/pphuc25/orgs",

"repos_url": "https://api.github.com/users/pphuc25/repos",

"events_url": "https://api.github.com/users/pphuc25/events{/privacy}",

"received_events_url": "https://api.github.com/users/pphuc25/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"however, I have some confuse because why I can not pass the test case",

"thank you @ArthurZucker, but I have some confuse why I just change 1024 to min(1024, config.max_position_embeddings) and docs and then I can not pass the test case, still stuck, can you help me",

"Hey @pphuc25 - don't worry, your PR is perfect! All you need to do is rebase onto `main`:\r\n\r\n```\r\ngit fetch upstream\r\ngit rebase upstream main\r\n```\r\n\r\nAnd then force push:\r\n```\r\ngit commit -m \"rebase\" --allow-empty\r\ngit push -f origin flax_min_block_size\r\n```\r\n\r\nThis should fix your CI issues! The test that's failing isn't related to your PR and was fixed yesterday on `main`.",

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_26229). All of your documentation changes will be reflected on that endpoint.",

"thanks @sanchit-gandhi so much on very helpful information, but this seem to still bug.",

"Hmm could you try running: `make fix-copies` and pushing? Does this change the code for you at all?",

"Thank you for really helpful support @sanchit-gandhi, this help me so much and gain me more knowledge, thank you really much.",

"but @sanchit-gandhi, I think you should review the code to merge PR",

"Shall we merge this one @ArthurZucker since it protects against an edge case, and then add follow-up features to your own codebase / the HF Hub @pphuc25?",

"Sure! "

] | 1,695 | 1,696 | 1,696 |

CONTRIBUTOR

| null |

Hi,

As mentioned in issue #26069, I have created a new PR with the aim of modifying the block_size for all files. The goal is to set the block size = min(1024, config.max_position_embeddings). This change is intended to ensure synchronization and prevent errors that can occur when the block size exceeds the maximum position embeddings value.

I would like to cc @sanchit-gandhi to review my PR, thank you so much

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26229/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26229/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/26229",

"html_url": "https://github.com/huggingface/transformers/pull/26229",

"diff_url": "https://github.com/huggingface/transformers/pull/26229.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/26229.patch",

"merged_at": 1696429898000

}

|

https://api.github.com/repos/huggingface/transformers/issues/26228

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26228/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26228/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26228/events

|

https://github.com/huggingface/transformers/pull/26228

| 1,901,406,750 |

PR_kwDOCUB6oc5amDqi

| 26,228 |

[Permisson] Style fix

|

{

"login": "sanchit-gandhi",

"id": 93869735,

"node_id": "U_kgDOBZhWpw",

"avatar_url": "https://avatars.githubusercontent.com/u/93869735?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sanchit-gandhi",

"html_url": "https://github.com/sanchit-gandhi",

"followers_url": "https://api.github.com/users/sanchit-gandhi/followers",

"following_url": "https://api.github.com/users/sanchit-gandhi/following{/other_user}",

"gists_url": "https://api.github.com/users/sanchit-gandhi/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sanchit-gandhi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sanchit-gandhi/subscriptions",

"organizations_url": "https://api.github.com/users/sanchit-gandhi/orgs",

"repos_url": "https://api.github.com/users/sanchit-gandhi/repos",

"events_url": "https://api.github.com/users/sanchit-gandhi/events{/privacy}",

"received_events_url": "https://api.github.com/users/sanchit-gandhi/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._",

"Merging as you are probably out! ",

"Thanks! Beat me to it 😉"

] | 1,695 | 1,695 | 1,695 |

CONTRIBUTOR

| null |

# What does this PR do?

Rebases and runs `make fix-copies` to fix the red CI on `main`

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26228/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26228/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/26228",

"html_url": "https://github.com/huggingface/transformers/pull/26228",

"diff_url": "https://github.com/huggingface/transformers/pull/26228.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/26228.patch",

"merged_at": 1695059391000

}

|

https://api.github.com/repos/huggingface/transformers/issues/26227

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26227/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26227/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26227/events

|

https://github.com/huggingface/transformers/pull/26227

| 1,901,374,445 |

PR_kwDOCUB6oc5al8q6

| 26,227 |

add custom RMSNorm to `ALL_LAYERNORM_LAYERS`

|

{

"login": "shijie-wu",

"id": 2987758,

"node_id": "MDQ6VXNlcjI5ODc3NTg=",

"avatar_url": "https://avatars.githubusercontent.com/u/2987758?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/shijie-wu",

"html_url": "https://github.com/shijie-wu",

"followers_url": "https://api.github.com/users/shijie-wu/followers",

"following_url": "https://api.github.com/users/shijie-wu/following{/other_user}",

"gists_url": "https://api.github.com/users/shijie-wu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/shijie-wu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/shijie-wu/subscriptions",

"organizations_url": "https://api.github.com/users/shijie-wu/orgs",

"repos_url": "https://api.github.com/users/shijie-wu/repos",

"events_url": "https://api.github.com/users/shijie-wu/events{/privacy}",

"received_events_url": "https://api.github.com/users/shijie-wu/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"@ArthurZucker done! `MegaRMSNorm` - a part of `MegaSequenceNorm` - is already in `ALL_LAYERNORM_LAYERS` https://github.com/huggingface/transformers/blob/493b24ba109ed680f40ec81ef73e4cc303e810ee/src/transformers/models/mega/modeling_mega.py#L317\r\n\r\ndo we want to add all these to `ALL_LAYERNORM_LAYERS`?\r\n\r\nhttps://github.com/huggingface/transformers/blob/493b24ba109ed680f40ec81ef73e4cc303e810ee/src/transformers/models/mega/modeling_mega.py#L294-L301",

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_26227). All of your documentation changes will be reflected on that endpoint.",

"after discussion with @ArthurZucker, it seems best to limit to scope of this PR to adding custom RMSNorm to `ALL_LAYERNORM_LAYERS`. Adding nn.BatchNorm1d might create unintentional impact."

] | 1,695 | 1,695 | 1,695 |

CONTRIBUTOR

| null |

# What does this PR do?

It fixed a issue discovered during discussion of PR https://github.com/huggingface/transformers/pull/26152.

> @ArthurZucker: the `ALL_LAYERNORM_LAYERS` should contain all the custom layer norm classes (from `transformers` modeling files) and should be updated if that is not the case

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@ArthurZucker

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26227/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26227/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/26227",

"html_url": "https://github.com/huggingface/transformers/pull/26227",

"diff_url": "https://github.com/huggingface/transformers/pull/26227.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/26227.patch",

"merged_at": 1695228717000

}

|

https://api.github.com/repos/huggingface/transformers/issues/26226

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26226/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26226/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26226/events

|

https://github.com/huggingface/transformers/pull/26226

| 1,901,326,695 |

PR_kwDOCUB6oc5alx_5

| 26,226 |

APEX: use MixedFusedRMSNorm instead of FusedRMSNorm for numerical consistency

|

{

"login": "fxmarty",

"id": 9808326,

"node_id": "MDQ6VXNlcjk4MDgzMjY=",

"avatar_url": "https://avatars.githubusercontent.com/u/9808326?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/fxmarty",

"html_url": "https://github.com/fxmarty",

"followers_url": "https://api.github.com/users/fxmarty/followers",

"following_url": "https://api.github.com/users/fxmarty/following{/other_user}",

"gists_url": "https://api.github.com/users/fxmarty/gists{/gist_id}",

"starred_url": "https://api.github.com/users/fxmarty/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/fxmarty/subscriptions",

"organizations_url": "https://api.github.com/users/fxmarty/orgs",

"repos_url": "https://api.github.com/users/fxmarty/repos",

"events_url": "https://api.github.com/users/fxmarty/events{/privacy}",

"received_events_url": "https://api.github.com/users/fxmarty/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_26226). All of your documentation changes will be reflected on that endpoint.",

"Good for me, but let's wait for @mfuntowicz 's response.\r\n\r\nBut why we can't just uninstall apex on the AMD docker image? The goal here is not to test the functionality of APEX.",

"> why we can't just uninstall apex on the AMD docker image\r\n\r\nIMO it makes sense to test both paths, the APEX path is broken as well on NVIDIA GPUs. APEX is installed by default in the PyTorch docker image provided by AMD, and it makes sense to expect Transformers to work with the image.",

"Those 3rd party libraries cause problems quite frequently especially when they change versions or torch get a new version.\r\n\r\nThere is `docker/transformers-pytorch-deepspeed-latest-gpu/Dockerfile` used by the job `run_all_tests_torch_cuda_extensions_gpu` - this has apex installed, but this is a separate job, in particular for DeepSpeed.\r\n\r\nLet's not spend too much time but just make the **usual** model and common tests run on AMD CI for now.",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored."

] | 1,695 | 1,701 | 1,701 |

COLLABORATOR

| null |

As per title.

APEX `FusedRMSNorm` initialize the returned tensor to the `input` dtype, which raises an error in case the model is set on fp16, where we may have `fp32` input to the layer norm layer:

```

File "/opt/conda/envs/py_3.8/lib/python3.8/site-packages/apex-0.1-py3.8-linux-x86_64.egg/apex/normalization/fused_layer_norm.py", line 189, in fused_rms_norm_affine

return FusedRMSNormAffineFunction.apply(*args)

File "/opt/conda/envs/py_3.8/lib/python3.8/site-packages/torch/autograd/function.py", line 506, in apply

return super().apply(*args, **kwargs) # type: ignore[misc]

File "/opt/conda/envs/py_3.8/lib/python3.8/site-packages/apex-0.1-py3.8-linux-x86_64.egg/apex/normalization/fused_layer_norm.py", line 69, in forward

output, invvar = fused_layer_norm_cuda.rms_forward_affine(

```

Comparing to [T5LayerNorm](https://github.com/huggingface/transformers/blob/820c46a707ddd033975bc3b0549eea200e64c7da/src/transformers/models/t5/modeling_t5.py#L247-L261) where the output is on the weight dtype. That is exactly what `MixedFusedRMSNorm` is for, see https://github.com/NVIDIA/apex/blob/52e18c894223800cb611682dce27d88050edf1de/apex/normalization/fused_layer_norm.py#L420 and https://github.com/NVIDIA/apex/blob/52e18c894223800cb611682dce27d88050edf1de/csrc/layer_norm_cuda.cpp#L205

For example, the test `pytest tests/test_pipeline_mixin.py::VisualQuestionAnsweringPipelineTests::test_small_model_pt_blip2 -s -vvvvv` fails when `apex` is available (and accelerate is installed). This error was never detected because the docker images used for testing do not have APEX installed (e.g. `nvidia/cuda:11.8.0-cudnn8-devel-ubuntu20.04`)

This is an issue for the AMD CI as the image used `rocm/pytorch:rocm5.6_ubuntu20.04_py3.8_pytorch_2.0.1` has RoCm APEX installed by default.

---

Slightly out of topic: something I don't get is why [neither t5x](https://github.com/google-research/t5x/blob/ea66ec835a5b413ca9d211de96aa899900a84c13/t5x/examples/t5/layers.py#L445) nor transformers seem to recast to fp16 after the FFN. Due to the `keep_in_fp32` attribute, [this weight](https://github.com/huggingface/transformers/blob/820c46a707ddd033975bc3b0549eea200e64c7da/src/transformers/models/t5/modeling_t5.py#L284C14-L284C14) is always in fp32 and then fp32 is propagated in the model. t5x seem to do the same.

Related: https://github.com/huggingface/transformers/pull/26225

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26226/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26226/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/26226",

"html_url": "https://github.com/huggingface/transformers/pull/26226",

"diff_url": "https://github.com/huggingface/transformers/pull/26226.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/26226.patch",

"merged_at": null

}

|

https://api.github.com/repos/huggingface/transformers/issues/26225

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26225/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26225/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26225/events

|

https://github.com/huggingface/transformers/pull/26225

| 1,901,317,071 |

PR_kwDOCUB6oc5alv42

| 26,225 |

Keep relevant weights in fp32 when `model._keep_in_fp32_modules` is set even when `accelerate` is not installed

|

{

"login": "fxmarty",

"id": 9808326,

"node_id": "MDQ6VXNlcjk4MDgzMjY=",

"avatar_url": "https://avatars.githubusercontent.com/u/9808326?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/fxmarty",

"html_url": "https://github.com/fxmarty",

"followers_url": "https://api.github.com/users/fxmarty/followers",

"following_url": "https://api.github.com/users/fxmarty/following{/other_user}",

"gists_url": "https://api.github.com/users/fxmarty/gists{/gist_id}",

"starred_url": "https://api.github.com/users/fxmarty/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/fxmarty/subscriptions",

"organizations_url": "https://api.github.com/users/fxmarty/orgs",

"repos_url": "https://api.github.com/users/fxmarty/repos",

"events_url": "https://api.github.com/users/fxmarty/events{/privacy}",

"received_events_url": "https://api.github.com/users/fxmarty/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._",

"sounds good",

"I fixed the original test that was not actually testing the case where `accelerate` is not available.",

"nice! \r\n"

] | 1,695 | 1,706 | 1,695 |

COLLABORATOR

| null |

As per title, aligns the behavior of `PreTrainedModel.from_pretrained(..., torch_dtype=torch.float16)` when accelerate is installed and when it is not.

Previously,

```python

import torch

from transformers import AutoModelForSeq2SeqLM

model = AutoModelForSeq2SeqLM.from_pretrained("t5-small", torch_dtype=torch.float16)

print(model.encoder.block[0].layer[1].DenseReluDense.wo.weight.dtype)

```

would print `torch.float16` when accelerate was not installed, and `torch.float32` when installed. Having different dtype depending on an external package being installed or not is bug prone. [As `accelerate` is a hard requirement](https://github.com/huggingface/transformers/blob/e4e55af79c9b3dfd15cc2224f8f5b80680d83f03/setup.py#L260), it could be also reasonable to simply raise an error in `from_pretrained` if using pytorch & accelerate is not installed.

Note:

```python

for name, param in model.named_parameters():

param = param.to(torch.float32)

```

does nothing out of the loop scope.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26225/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26225/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/26225",

"html_url": "https://github.com/huggingface/transformers/pull/26225",

"diff_url": "https://github.com/huggingface/transformers/pull/26225.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/26225.patch",

"merged_at": 1695290403000

}

|

https://api.github.com/repos/huggingface/transformers/issues/26224

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26224/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26224/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26224/events

|

https://github.com/huggingface/transformers/pull/26224

| 1,901,292,887 |

PR_kwDOCUB6oc5alqkR

| 26,224 |

Add Keras Core (Keras 3.0) support

|

{

"login": "Rocketknight1",

"id": 12866554,

"node_id": "MDQ6VXNlcjEyODY2NTU0",

"avatar_url": "https://avatars.githubusercontent.com/u/12866554?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Rocketknight1",

"html_url": "https://github.com/Rocketknight1",

"followers_url": "https://api.github.com/users/Rocketknight1/followers",

"following_url": "https://api.github.com/users/Rocketknight1/following{/other_user}",

"gists_url": "https://api.github.com/users/Rocketknight1/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Rocketknight1/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Rocketknight1/subscriptions",

"organizations_url": "https://api.github.com/users/Rocketknight1/orgs",

"repos_url": "https://api.github.com/users/Rocketknight1/repos",

"events_url": "https://api.github.com/users/Rocketknight1/events{/privacy}",

"received_events_url": "https://api.github.com/users/Rocketknight1/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_26224). All of your documentation changes will be reflected on that endpoint.",

"Don't stale yet!",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored."

] | 1,695 | 1,706 | 1,706 |

MEMBER

| null |

This PR is a very-very-very work in progress effort to add Keras Core support, and to prepare for the transition to Keras 3.0. Because Keras Core is still a beta/preview, this PR is likely to be fairly slow and cautious, we don't want to lock ourselves into an API that may change under our feet!

The goal of this PR is to create a "minimum viable port(duct)" of our `tf.keras` code to `keras-core` to assess how difficult it will be to support Keras Core. Therefore, this port has the following properties:

- Only loading from `safetensors` will be supported for now, so I don't have to support every possible combination of (weights_format, model_framework)

- All mixins like `GenerationMixin` will be inherited directly from the framework that corresponds to the active `keras-core` framework. This means that `keras-core` classes will change their inheritance depending on which framework is live, which is risky but greatly reduces the amount of code I need to write.

- We will mainly be supporting TF and JAX as Keras Core frameworks. All of our models have PyTorch code already, which will probably be more stable and better-tested than using Keras Core + PyTorch, so I doubt we'd see much usage there. In addition, TF and JAX fit Keras Core's assumptions much more naturally than PyTorch does.

The plan for this PR is:

- [x] Create `modeling_keras_outputs.py` (ported from `modeling_tf_outputs.py`)

- [ ] Create `modeling_keras_utils.py` (ported from `modeling_tf_utils.py`)

- [ ] Port a single model to keras-core (probably BERT or DistilBERT)

- [ ] Add model tests to ensure that outputs match

- [ ] Improve support for our data classes, like supporting BatchEncoding in `fit()` or auto-wrapping HF datasets in a Keras `PyDataset`

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26224/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26224/timeline

| null | true |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/26224",

"html_url": "https://github.com/huggingface/transformers/pull/26224",

"diff_url": "https://github.com/huggingface/transformers/pull/26224.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/26224.patch",

"merged_at": null

}

|

https://api.github.com/repos/huggingface/transformers/issues/26223

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26223/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26223/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26223/events

|

https://github.com/huggingface/transformers/pull/26223

| 1,901,281,211 |

PR_kwDOCUB6oc5aloAS

| 26,223 |

Use CircleCI `store_test_results`

|

{

"login": "ydshieh",

"id": 2521628,

"node_id": "MDQ6VXNlcjI1MjE2Mjg=",

"avatar_url": "https://avatars.githubusercontent.com/u/2521628?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ydshieh",

"html_url": "https://github.com/ydshieh",

"followers_url": "https://api.github.com/users/ydshieh/followers",

"following_url": "https://api.github.com/users/ydshieh/following{/other_user}",

"gists_url": "https://api.github.com/users/ydshieh/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ydshieh/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ydshieh/subscriptions",

"organizations_url": "https://api.github.com/users/ydshieh/orgs",

"repos_url": "https://api.github.com/users/ydshieh/repos",

"events_url": "https://api.github.com/users/ydshieh/events{/privacy}",

"received_events_url": "https://api.github.com/users/ydshieh/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._",

"Very cool! Thanks @ydshieh and @ArthurZucker, this looks cool!",

"> More than me loving it, it gives us [access to the slow tests, the flaky tests, the tests that usually fail](https://app.circleci.com/insights/github/huggingface/transformers/workflows/run_tests/tests?branch=make_arthur_eye_happy) etc. Let's add this to all CI jobs!\r\n\r\nOK!",

"@ArthurZucker I think all pytest jobs have this feature enabled. `.circleci/config.yml` doesn't have pytest but just some usual python scripts. Could you elaborate a bit more what you suggest? Thanks!",

"I mean `check_repository_consistency`, `check_code_quality`, `pr_documentation_test` if possible! ",

"Hi,\r\n\r\n`pr_documentation_test` is already included in the current change.\r\n\r\nThe other 2 jobs are not `pytest`, and I am not sure if there is anything we can do similar to `pytest --junitxml=test-results/junit.xml`",

"We could make them use pytest, but only if you think it’s relevant! ",

"IMO, those are not really tests but checks of formatting :-). They won't be flaky, and those could not be splitted into individual test **methods** but each script as a whole.\r\n\r\nI would rather move forward and take the new feature already available (with this PR) for us even only for the pytest jobs.\r\n\r\nThank you for asking to add this, Sir!"

] | 1,695 | 1,695 | 1,695 |

COLLABORATOR

| null |

# What does this PR do?

@ArthurZucker loves it.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26223/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26223/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/26223",

"html_url": "https://github.com/huggingface/transformers/pull/26223",

"diff_url": "https://github.com/huggingface/transformers/pull/26223.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/26223.patch",

"merged_at": 1695365814000

}

|

https://api.github.com/repos/huggingface/transformers/issues/26222

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26222/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26222/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26222/events

|

https://github.com/huggingface/transformers/pull/26222

| 1,901,252,313 |

PR_kwDOCUB6oc5alhsB

| 26,222 |

[Check] Fix config docstring

|

{

"login": "sanchit-gandhi",

"id": 93869735,

"node_id": "U_kgDOBZhWpw",

"avatar_url": "https://avatars.githubusercontent.com/u/93869735?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sanchit-gandhi",

"html_url": "https://github.com/sanchit-gandhi",

"followers_url": "https://api.github.com/users/sanchit-gandhi/followers",

"following_url": "https://api.github.com/users/sanchit-gandhi/following{/other_user}",

"gists_url": "https://api.github.com/users/sanchit-gandhi/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sanchit-gandhi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sanchit-gandhi/subscriptions",

"organizations_url": "https://api.github.com/users/sanchit-gandhi/orgs",

"repos_url": "https://api.github.com/users/sanchit-gandhi/repos",

"events_url": "https://api.github.com/users/sanchit-gandhi/events{/privacy}",

"received_events_url": "https://api.github.com/users/sanchit-gandhi/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._"

] | 1,695 | 1,695 | 1,695 |

CONTRIBUTOR

| null |

# What does this PR do?

Fixes https://github.com/huggingface/transformers/pull/26183/files#r1328944428 by removing the debugging statements in the config docstring checker.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26222/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26222/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/26222",

"html_url": "https://github.com/huggingface/transformers/pull/26222",

"diff_url": "https://github.com/huggingface/transformers/pull/26222.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/26222.patch",

"merged_at": 1695059882000

}

|

https://api.github.com/repos/huggingface/transformers/issues/26221

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26221/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26221/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26221/events

|

https://github.com/huggingface/transformers/issues/26221

| 1,901,183,746 |

I_kwDOCUB6oc5xUcMC

| 26,221 |

Gradient checkpointing should have no functional impact

|

{

"login": "marianokamp",

"id": 3245189,

"node_id": "MDQ6VXNlcjMyNDUxODk=",

"avatar_url": "https://avatars.githubusercontent.com/u/3245189?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/marianokamp",

"html_url": "https://github.com/marianokamp",

"followers_url": "https://api.github.com/users/marianokamp/followers",

"following_url": "https://api.github.com/users/marianokamp/following{/other_user}",

"gists_url": "https://api.github.com/users/marianokamp/gists{/gist_id}",

"starred_url": "https://api.github.com/users/marianokamp/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/marianokamp/subscriptions",

"organizations_url": "https://api.github.com/users/marianokamp/orgs",

"repos_url": "https://api.github.com/users/marianokamp/repos",

"events_url": "https://api.github.com/users/marianokamp/events{/privacy}",

"received_events_url": "https://api.github.com/users/marianokamp/received_events",

"type": "User",

"site_admin": false

}

|

[] |

open

| false | null |

[] |

[

"No answer or re-action yet, but not stale either.",

"Gentle ping @muellerzr @pacman100 ",

"@pacman100, @muellerz \r\nJust re-ran with transformers 4.36.0, same result: \r\n\r\n\r\n",

"@pacman100, @muellerzr, @younesbelkada. Anything I can do here to help you acknowledge the ticket? If I am hearing nothing I will let it auto-close. ",

"Hello @marianokamp, Thank you for your patience. As I don't have a clear minimal reproducer here, I ran the below experiments and don't see a diff in performance with and without gradient checkpointing.\r\n\r\n1. Code: https://github.com/huggingface/peft/blob/main/examples/sequence_classification/LoRA.ipynb\r\n2. Use the `set_seed` for deterministic runs:\r\n```diff\r\nimport argparse\r\nimport os\r\n\r\nimport torch\r\nfrom torch.optim import AdamW\r\nfrom torch.utils.data import DataLoader\r\nfrom peft import (\r\n get_peft_config,\r\n get_peft_model,\r\n get_peft_model_state_dict,\r\n set_peft_model_state_dict,\r\n LoraConfig,\r\n PeftType,\r\n PrefixTuningConfig,\r\n PromptEncoderConfig,\r\n)\r\n\r\nimport evaluate\r\nfrom datasets import load_dataset\r\nfrom transformers import AutoModelForSequenceClassification, AutoTokenizer, get_linear_schedule_with_warmup, set_seed\r\nfrom tqdm import tqdm\r\n\r\n+ set_seed(100)\r\n```\r\n3. In gradient ckpt run, add the `model.gradient_checkpointing_enable` command:\r\n```diff\r\nmodel = AutoModelForSequenceClassification.from_pretrained(model_name_or_path, return_dict=True)\r\nmodel = get_peft_model(model, peft_config)\r\nmodel.print_trainable_parameters()\r\nmodel\r\n+ model.gradient_checkpointing_enable(gradient_checkpointing_kwargs={\"use_reentrant\":False})\r\n```\r\n4. Run the notebooks with and without gradient ckpt.\r\n5. mem usage:\r\n\r\n6. Without gradient ckpt output logs:\r\n```\r\n0%| | 0/115 [00:00<?, ?it/s]You're using a RobertaTokenizerFast tokenizer. Please note that with a fast tokenizer, using the `__call__` method is faster than using a method to encode the text followed by a call to the `pad` method to get a padded encoding.\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:27<00:00, 4.18it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.53it/s]\r\nepoch 0: {'accuracy': 0.7083333333333334, 'f1': 0.8210526315789474}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.30it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.52it/s]\r\nepoch 1: {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.31it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.53it/s]\r\nepoch 2: {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.29it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.52it/s]\r\nepoch 3: {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.27it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.52it/s]\r\nepoch 4: {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.30it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.54it/s]\r\nepoch 5: {'accuracy': 0.8186274509803921, 'f1': 0.8766666666666666}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.26it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.54it/s]\r\nepoch 6: {'accuracy': 0.8333333333333334, 'f1': 0.8885245901639344}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.26it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.50it/s]\r\nepoch 7: {'accuracy': 0.875, 'f1': 0.9109947643979057}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.30it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.52it/s]\r\nepoch 8: {'accuracy': 0.8872549019607843, 'f1': 0.9184397163120569}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.30it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.51it/s]\r\nepoch 9: {'accuracy': 0.8872549019607843, 'f1': 0.9201388888888888}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.29it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.49it/s]\r\nepoch 10: {'accuracy': 0.8921568627450981, 'f1': 0.9225352112676057}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.29it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.49it/s]\r\nepoch 11: {'accuracy': 0.8897058823529411, 'f1': 0.9220103986135182}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.28it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.49it/s]\r\nepoch 12: {'accuracy': 0.8946078431372549, 'f1': 0.9241622574955909}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.27it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.46it/s]\r\nepoch 13: {'accuracy': 0.8970588235294118, 'f1': 0.926056338028169}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.27it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.43it/s]\r\nepoch 14: {'accuracy': 0.8921568627450981, 'f1': 0.9225352112676057}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.28it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.43it/s]\r\nepoch 15: {'accuracy': 0.8872549019607843, 'f1': 0.9181494661921709}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.28it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.49it/s]\r\nepoch 16: {'accuracy': 0.8897058823529411, 'f1': 0.9211908931698775}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.27it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.48it/s]\r\nepoch 17: {'accuracy': 0.8897058823529411, 'f1': 0.9203539823008849}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.26it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.44it/s]\r\nepoch 18: {'accuracy': 0.8872549019607843, 'f1': 0.9195804195804195}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:26<00:00, 4.26it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.43it/s]\r\nepoch 19: {'accuracy': 0.8921568627450981, 'f1': 0.923076923076923}\r\n```\r\n7. with gradient checkpointing output logs:\r\n```\r\n0%| | 0/115 [00:00<?, ?it/s]You're using a RobertaTokenizerFast tokenizer. Please note that with a fast tokenizer, using the `__call__` method is faster than using a method to encode the text followed by a call to the `pad` method to get a padded encoding.\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:41<00:00, 2.77it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.37it/s]\r\nepoch 0: {'accuracy': 0.7083333333333334, 'f1': 0.8210526315789474}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.82it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.37it/s]\r\nepoch 1: {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.82it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.39it/s]\r\nepoch 2: {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.82it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.41it/s]\r\nepoch 3: {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.81it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.40it/s]\r\nepoch 4: {'accuracy': 0.6838235294117647, 'f1': 0.8122270742358079}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.83it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.40it/s]\r\nepoch 5: {'accuracy': 0.8186274509803921, 'f1': 0.8766666666666666}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:41<00:00, 2.79it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.44it/s]\r\nepoch 6: {'accuracy': 0.8333333333333334, 'f1': 0.8885245901639344}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.81it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.42it/s]\r\nepoch 7: {'accuracy': 0.875, 'f1': 0.9109947643979057}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.83it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.40it/s]\r\nepoch 8: {'accuracy': 0.8872549019607843, 'f1': 0.9184397163120569}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.84it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.39it/s]\r\nepoch 9: {'accuracy': 0.8872549019607843, 'f1': 0.9201388888888888}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.82it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.40it/s]\r\nepoch 10: {'accuracy': 0.8921568627450981, 'f1': 0.9225352112676057}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.83it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.39it/s]\r\nepoch 11: {'accuracy': 0.8897058823529411, 'f1': 0.9220103986135182}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.81it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.40it/s]\r\nepoch 12: {'accuracy': 0.8946078431372549, 'f1': 0.9241622574955909}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.82it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.39it/s]\r\nepoch 13: {'accuracy': 0.8970588235294118, 'f1': 0.926056338028169}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.82it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.34it/s]\r\nepoch 14: {'accuracy': 0.8921568627450981, 'f1': 0.9225352112676057}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.83it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.33it/s]\r\nepoch 15: {'accuracy': 0.8872549019607843, 'f1': 0.9181494661921709}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.81it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.41it/s]\r\nepoch 16: {'accuracy': 0.8897058823529411, 'f1': 0.9211908931698775}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.82it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.42it/s]\r\nepoch 17: {'accuracy': 0.8897058823529411, 'f1': 0.9203539823008849}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.81it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.35it/s]\r\nepoch 18: {'accuracy': 0.8872549019607843, 'f1': 0.9195804195804195}\r\n100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 115/115 [00:40<00:00, 2.83it/s]\r\n100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 13/13 [00:01<00:00, 8.39it/s]\r\nepoch 19: {'accuracy': 0.8921568627450981, 'f1': 0.923076923076923}\r\n```\r\n\r\nObservations: No performance gap between runs with gradient checkpointing and without gradient checkpointing.",

"Thanks @pacman100. I got it now - a minimalist example is needed. I will try to create one over the weekend. \r\n",

"@pacman100. Hi Sourab, thanks for investing the time!\r\n\r\nYou didn't say otherwise, so it's confirmed that using gradient checkpointing should not change the functional impact of the model, correct?\r\n\r\nI now have a minimal implementation [sample notebook](https://github.com/marianokamp/export/blob/main/hf2/gradient_checkpointing.ipynb) that shows the issue.\r\n\r\nBackground: The [original code](https://github.com/marianokamp/peft_lora/blob/main/src/lora.py) is from an [article](https://towardsdatascience.com/dive-into-lora-adapters-38f4da488ede) that illustrates for educational purposes how a simple LoRA implementation looks like. It's just Python code and worked fine, until I tried gradient checkpointing in the [2nd article](https://towardsdatascience.com/a-winding-road-to-parameter-efficiency-12448e64524d). \r\n\r\nI am not aware of specific expectations that the transformers lib has on code. But there are two things I do in my example that may be worth pointing out as not being in the middle of the road. (a) Freezing modules and (b) overwriting the forward function in the module to be adapted to point it to the adapter implementation in the forward pass. Both work fine without gradient checkpointing, but maybe they are problematic with gradient checkpointing? The code is in the example I linked above, but for easier consumption I reproduce this method here:\r\n\r\n```Python\r\ndef adapt_model(model):\r\n\r\n class MinimalLoRAAdapter(nn.Module): \r\n def __init__(self, \r\n adaptee):\r\n super().__init__()\r\n\r\n self.adaptee = adaptee\r\n\r\n self.orig_forward = adaptee.forward\r\n adaptee.forward = self.forward # <-----------------\r\n \r\n r = 1\r\n adaptee.lora_A = nn.Parameter(\r\n torch.randn(adaptee.in_features, r) / math.sqrt(adaptee.in_features)\r\n )\r\n adaptee.lora_B = nn.Parameter(torch.zeros(r, adaptee.out_features))\r\n\r\n def forward(self, x, *args, **kwargs):\r\n return (\r\n self.orig_forward(x, *args, **kwargs) # <-----------------\r\n + F.dropout(x, 0.1) @ self.adaptee.lora_A @ self.adaptee.lora_B\r\n )\r\n \r\n # freeze all layers, incl. embeddings, except for the classifier\r\n for m in model.roberta.modules(): \r\n m.requires_grad_(False) # <-----------------\r\n\r\n # Adapt linear modules in transformer layers\r\n for m in model.roberta.encoder.modules(): \r\n if isinstance(m, nn.Linear):\r\n MinimalLoRAAdapter(m)\r\n```\r\n\r\nHere is an excerpt from the output. Full output in the linked notebook (check eval_accuracy):\r\n\r\n```\r\n---- without gradient checkpointing ----\r\n\r\n[..]\r\nmodel.is_gradient_checkpointing=False\r\n[..]\r\n{'train_runtime': 457.1886, 'train_samples_per_second': 489.951, 'train_steps_per_second': 2.187, 'train_loss': 0.38296363830566404, 'epoch': 3.32}\r\n{'eval_loss': 0.23593959212303162, 'eval_accuracy': 0.908256880733945, 'eval_runtime': 1.6902, 'eval_samples_per_second': 515.919, 'eval_steps_per_second': 64.49, 'epoch': 3.32}\r\n\r\n---- with gradient checkpointing ----\r\n\r\n[..]\r\nmodel.is_gradient_checkpointing=True\r\n[..]\r\n{'train_runtime': 227.8506, 'train_samples_per_second': 983.101, 'train_steps_per_second': 4.389, 'train_loss': 0.6675097045898437, 'epoch': 3.32}\r\n{'eval_loss': 0.6635248064994812, 'eval_accuracy': 0.5194954128440367, 'eval_runtime': 1.6397, 'eval_samples_per_second': 531.808, 'eval_steps_per_second': 66.476, 'epoch': 3.32}\r\n[..]\r\n```\r\nI tried the above with both GPU and CPU and I can observe the same behavior. Hope that helps to narrow it down. "

] | 1,695 | 1,707 | null |

NONE

| null |

### System Info

Latest released and py3.10.

accelerate-0.21.0 aiohttp-3.8.5 aiosignal-1.3.1 async-timeout-4.0.3 bitsandbytes-0.41.0 datasets-2.14.5 evaluate-0.4.0 frozenlist-1.4.0 huggingface-hub-0.17.1 multidict-6.0.4 peft-0.4.0 pynvml-11.5.0 regex-2023.8.8 responses-0.18.0 safetensors-0.3.3 sagemaker-inference-1.10.0 tensorboardX-2.6.2.2 tokenizers-0.13.3 transformers-4.33.2 xxhash-3.3.0 yarl-1.9.2

### Who can help?

@pacman100, @muellerzr

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

Hi @pacman100, @muellerzr.

I was wondering about the memory use of LoRA. Specifically what happens if I adapt modules that are

- (top) closer to the head of the network than to the inputs, as opposed to

- (bottom) the other way around.

Given that the number of parameters to train remains the same in both cases, the memory usage should be the same, except that to calculate the gradients for (bottom) we would need to keep more activations around from the forward pass. If that were the case, then turning on gradient checkpointing should make (top) and (bottom) use the same memory, as we are discarding the activations and recalculating them on the backward pass. That is correct, no (@younesbelkada)?

Trying this out, I can see that behavior as expected. However, the accuracy also changed.

My understanding would be that with gradient checkpointing we would now need less memory, more time, but the functional aspects, here model performance, should be unchanged. Hence the issue.

### Details

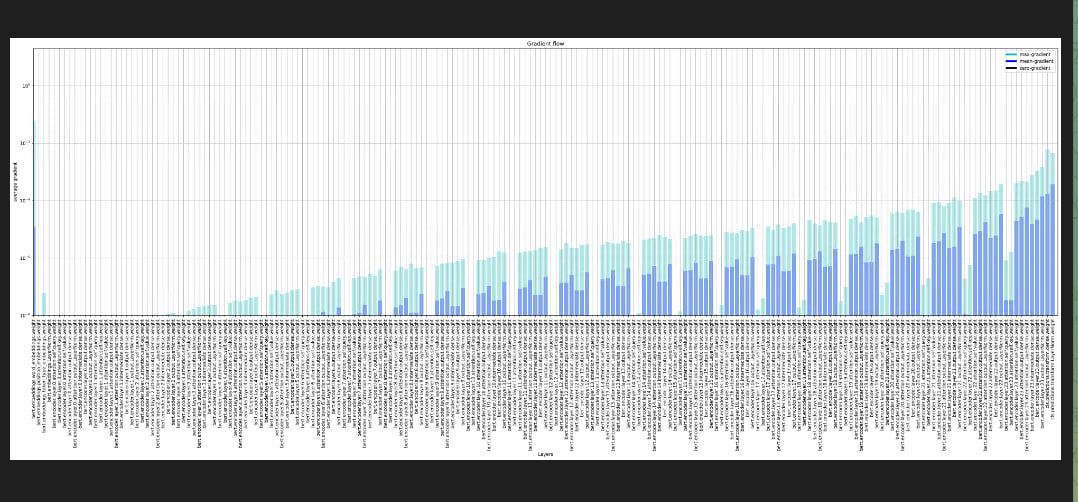

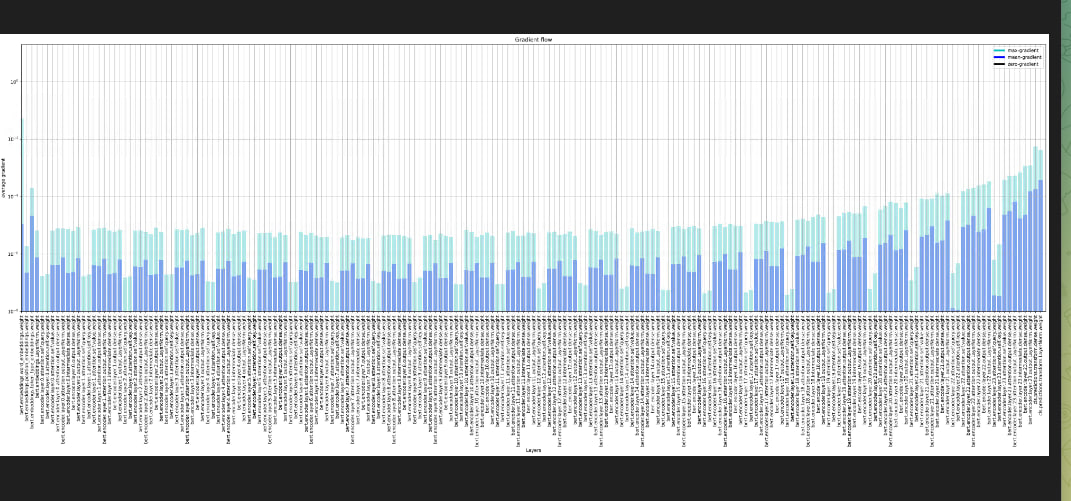

Below you can see on the x-axis on which layer of a 12 layer RoBERTa Base the adapters were applied. As you can see the memory for (bottom - lower layer numbers, closer to the embeddings) are higher than for (top - higher layer numbers, closer to the head), when not using gradient checkpointing, and they are same when using gradient checkpointing.

However, when looking at the model performance we can see that we have a difference of 0.1 between using and not using checkpointing.

Not that it matters, but this is using the glue/sst-2 dataset. I am not changing anything, but passing 0 or 1 as an argument to Trainer's gradient_checkpointing attribute (and 0 and 1 to empty-cuda-cache every 30 seconds).

### Expected behavior

No functional change when using gradient_checkpointing.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/26221/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/26221/timeline

| null | null | null |

https://api.github.com/repos/huggingface/transformers/issues/26220

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/26220/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/26220/comments

|

https://api.github.com/repos/huggingface/transformers/issues/26220/events

|

https://github.com/huggingface/transformers/issues/26220

| 1,901,080,392 |

I_kwDOCUB6oc5xUC9I

| 26,220 |

How does one preprocess a pdf file before passing it into pipeline for summarization?

|

{

"login": "pythonvijay",

"id": 144582559,

"node_id": "U_kgDOCJ4nnw",

"avatar_url": "https://avatars.githubusercontent.com/u/144582559?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/pythonvijay",

"html_url": "https://github.com/pythonvijay",

"followers_url": "https://api.github.com/users/pythonvijay/followers",

"following_url": "https://api.github.com/users/pythonvijay/following{/other_user}",

"gists_url": "https://api.github.com/users/pythonvijay/gists{/gist_id}",

"starred_url": "https://api.github.com/users/pythonvijay/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/pythonvijay/subscriptions",

"organizations_url": "https://api.github.com/users/pythonvijay/orgs",