| pipeline_tag (stringclasses, 48 values) | library_name (stringclasses, 198 values) | text (stringlengths 1 to 900k) | metadata (stringlengths 2 to 438k) | id (stringlengths 5 to 122) | last_modified (null) | tags (listlengths 1 to 1.84k) | sha (null) | created_at (stringlengths 25 to 25) | arxiv (listlengths 0 to 201) | languages (listlengths 0 to 1.83k) | tags_str (stringlengths 17 to 9.34k) | text_str (stringlengths 0 to 389k) | text_lists (listlengths 0 to 722) | processed_texts (listlengths 1 to 723) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

fill-mask

|

transformers

|

# PaloBERT

## Model description

A Greek language model based on [RoBERTa](https://arxiv.org/abs/1907.11692).

## Training data

The training data is a corpus of 458,293 documents collected from Greek social media accounts. The model also includes a GPT-2 tokenizer trained from scratch on the same corpus.

The training corpus was collected and provided by [Palo LTD](http://www.paloservices.com/).

## Eval results

### BibTeX entry and citation info

```bibtex

@Article{info12080331,

AUTHOR = {Alexandridis, Georgios and Varlamis, Iraklis and Korovesis, Konstantinos and Caridakis, George and Tsantilas, Panagiotis},

TITLE = {A Survey on Sentiment Analysis and Opinion Mining in Greek Social Media},

JOURNAL = {Information},

VOLUME = {12},

YEAR = {2021},

NUMBER = {8},

ARTICLE-NUMBER = {331},

URL = {https://www.mdpi.com/2078-2489/12/8/331},

ISSN = {2078-2489},

DOI = {10.3390/info12080331}

}

```

|

{"language": "el"}

|

gealexandri/palobert-base-greek-uncased-v1

| null |

[

"transformers",

"pytorch",

"tf",

"roberta",

"fill-mask",

"el",

"arxiv:1907.11692",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[

"1907.11692"

] |

[

"el"

] |

TAGS

#transformers #pytorch #tf #roberta #fill-mask #el #arxiv-1907.11692 #autotrain_compatible #endpoints_compatible #region-us

|

# PaloBERT

## Model description

A Greek language model based on RoBERTa

## Training data

The training data is a corpus of 458,293 documents collected from Greek social media accounts. It also contains a GPT-2 tokenizer trained from scratch on the same corpus.

The training corpus has been collected and provided by Palo LTD

## Eval results

### BibTeX entry and citation info

|

[

"# PaloBERT",

"## Model description\n\nA Greek language model based on RoBERTa",

"## Training data\n\nThe training data is a corpus of 458,293 documents collected from Greek social media accounts. It also contains a GTP-2 tokenizer trained from scratch on the same corpus.\n\nThe training corpus has been collected and provided by Palo LTD",

"## Eval results",

"### BibTeX entry and citation info"

] |

[

"TAGS\n#transformers #pytorch #tf #roberta #fill-mask #el #arxiv-1907.11692 #autotrain_compatible #endpoints_compatible #region-us \n",

"# PaloBERT",

"## Model description\n\nA Greek language model based on RoBERTa",

"## Training data\n\nThe training data is a corpus of 458,293 documents collected from Greek social media accounts. It also contains a GTP-2 tokenizer trained from scratch on the same corpus.\n\nThe training corpus has been collected and provided by Palo LTD",

"## Eval results",

"### BibTeX entry and citation info"

] |

feature-extraction

|

transformers

|

hello

|

{}

|

geekfeed/gpt2_ja

| null |

[

"transformers",

"pytorch",

"jax",

"gpt2",

"feature-extraction",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #jax #gpt2 #feature-extraction #endpoints_compatible #text-generation-inference #region-us

|

hello

|

[] |

[

"TAGS\n#transformers #pytorch #jax #gpt2 #feature-extraction #endpoints_compatible #text-generation-inference #region-us \n"

] |

null | null |

https://dl.fbaipublicfiles.com/avhubert/model/lrs3_vox/vsr/base_vox_433h.pt

|

{}

|

g30rv17ys/avhubert

| null |

[

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#region-us

|

URL

|

[] |

[

"TAGS\n#region-us \n"

] |

fill-mask

|

transformers

|

# Please use 'Bert' related functions to load this model!

## Chinese BERT with Whole Word Masking Fix MLM Parameters

Parameters are initialized from https://huggingface.co/hfl/chinese-roberta-wwm-ext-large

This addresses the missing MLM parameters issue: https://github.com/ymcui/Chinese-BERT-wwm/issues/98

Only the MLM parameters are trained; all other parameters are frozen.

More information is available on GitHub: https://github.com/genggui001/chinese_roberta_wwm_large_ext_fix_mlm

|

{"language": ["zh"], "license": "apache-2.0", "tags": ["bert"]}

|

genggui001/chinese_roberta_wwm_large_ext_fix_mlm

| null |

[

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"bert",

"fill-mask",

"zh",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"zh"

] |

TAGS

#transformers #pytorch #tf #jax #safetensors #bert #fill-mask #zh #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us

|

# Please use 'Bert' related functions to load this model!

## Chinese BERT with Whole Word Masking Fix MLM Parameters

Init parameters by URL

miss mlm parameters issue URL

Only train MLM parameters and freeze other parameters

More info in github URL

|

[

"# Please use 'Bert' related functions to load this model!",

"## Chinese BERT with Whole Word Masking Fix MLM Parameters\n\nInit parameters by URL\n\nmiss mlm parameters issue URL\n\nOnly train MLM parameters and freeze other parameters\n\nMore info in github URL"

] |

[

"TAGS\n#transformers #pytorch #tf #jax #safetensors #bert #fill-mask #zh #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us \n",

"# Please use 'Bert' related functions to load this model!",

"## Chinese BERT with Whole Word Masking Fix MLM Parameters\n\nInit parameters by URL\n\nmiss mlm parameters issue URL\n\nOnly train MLM parameters and freeze other parameters\n\nMore info in github URL"

] |

automatic-speech-recognition

|

transformers

|

# xls-asr-vi-40h-1B

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-1b](https://huggingface.co/facebook/wav2vec2-xls-r-1b) on 40 hours of FPT Open Speech Dataset (FOSD) and Common Voice 7.0.

### Benchmark WER result:

| | [VIVOS](https://huggingface.co/datasets/vivos) | [COMMON VOICE 7.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0) | [COMMON VOICE 8.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_8_0) |
|---|---|---|---|
| without LM | 25.93 | 34.21 | |
| with 4-gram LM | 24.11 | 25.84 | 31.158 |

### Benchmark CER result:

| | [VIVOS](https://huggingface.co/datasets/vivos) | [COMMON VOICE 7.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0) | [COMMON VOICE 8.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_8_0) |
|---|---|---|---|
| without LM | 9.24 | 19.94 | |
| with 4-gram LM | 10.37 | 12.96 | 16.179 |
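For readers unfamiliar with the two metrics: WER and CER are conventionally the Levenshtein edit distance between the reference and the hypothesis, divided by the reference length, counted over words and characters respectively. The sketch below is a minimal pure-Python illustration of that definition. It is not the scoring code in `eval.py`, and the function names and sample sentences are made up for the example.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or lists of words)."""
    # prev[j] is the distance between the first i-1 items of ref and the first j items of hyp.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate as a fraction; the reference must contain at least one word."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate as a fraction; the reference must be non-empty."""
    return edit_distance(reference, hypothesis) / len(reference)


# One inserted word against a three-word reference, reported as a percentage.
print(round(100 * wer("the cat sat", "the cat sat down"), 2))  # 33.33
```

The tables above report percentages, so a fraction returned by these functions would be multiplied by 100 for comparison.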

## Evaluation

Please use the eval.py file to run the evaluation

```bash

python eval.py --model_id geninhu/xls-asr-vi-40h-1B --dataset mozilla-foundation/common_voice_7_0 --config vi --split test --log_outputs

```

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1500

- num_epochs: 10.0

- mixed_precision_training: Native AMP
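The `total_train_batch_size` listed above is consistent with the per-device batch size multiplied by the gradient accumulation steps. A small sketch of that arithmetic follows; it assumes a single training device, which the card does not state, and `num_devices` is an illustrative parameter rather than a reported setting.

```python
def effective_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples that contribute to each optimizer step."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# train_batch_size = 16 and gradient_accumulation_steps = 2, as listed above.
total = effective_batch_size(16, 2)
print(total)  # 32, matching total_train_batch_size
```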

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 4.6222 | 1.85 | 1500 | 5.9479 | 0.5474 |

| 1.1362 | 3.7 | 3000 | 7.9799 | 0.5094 |

| 0.7814 | 5.56 | 4500 | 5.0330 | 0.4724 |

| 0.6281 | 7.41 | 6000 | 2.3484 | 0.5020 |

| 0.5472 | 9.26 | 7500 | 2.2495 | 0.4793 |

| 0.4827 | 11.11 | 9000 | 1.1530 | 0.4768 |

| 0.4327 | 12.96 | 10500 | 1.6160 | 0.4646 |

| 0.3989 | 14.81 | 12000 | 3.2633 | 0.4703 |

| 0.3522 | 16.67 | 13500 | 2.2337 | 0.4708 |

| 0.3201 | 18.52 | 15000 | 3.6879 | 0.4565 |

| 0.2899 | 20.37 | 16500 | 5.4389 | 0.4599 |

| 0.2776 | 22.22 | 18000 | 3.5284 | 0.4537 |

| 0.2574 | 24.07 | 19500 | 2.1759 | 0.4649 |

| 0.2378 | 25.93 | 21000 | 3.3901 | 0.4448 |

| 0.217 | 27.78 | 22500 | 1.1632 | 0.4565 |

| 0.2115 | 29.63 | 24000 | 1.7441 | 0.4232 |

| 0.1959 | 31.48 | 25500 | 3.4992 | 0.4304 |

| 0.187 | 33.33 | 27000 | 3.6163 | 0.4369 |

| 0.1748 | 35.19 | 28500 | 3.6038 | 0.4467 |

| 0.17 | 37.04 | 30000 | 2.9708 | 0.4362 |

| 0.159 | 38.89 | 31500 | 3.2045 | 0.4279 |

| 0.153 | 40.74 | 33000 | 3.2427 | 0.4287 |

| 0.1463 | 42.59 | 34500 | 3.5439 | 0.4270 |

| 0.139 | 44.44 | 36000 | 3.9381 | 0.4150 |

| 0.1352 | 46.3 | 37500 | 4.1744 | 0.4092 |

| 0.1369 | 48.15 | 39000 | 4.2279 | 0.4154 |

| 0.1273 | 50.0 | 40500 | 4.1691 | 0.4133 |

### Framework versions

- Transformers 4.16.0.dev0

- Pytorch 1.10.1+cu102

- Datasets 1.17.1.dev0

- Tokenizers 0.11.0

|

{"language": ["vi"], "license": "apache-2.0", "tags": ["automatic-speech-recognition", "common-voice", "hf-asr-leaderboard", "robust-speech-event"], "datasets": ["mozilla-foundation/common_voice_7_0"], "model-index": [{"name": "xls-asr-vi-40h-1B", "results": [{"task": {"type": "automatic-speech-recognition", "name": "Speech Recognition"}, "dataset": {"name": "Common Voice 7.0", "type": "mozilla-foundation/common_voice_7_0", "args": "vi"}, "metrics": [{"type": "wer", "value": 25.846, "name": "Test WER (with LM)"}, {"type": "cer", "value": 12.961, "name": "Test CER (with LM)"}]}, {"task": {"type": "automatic-speech-recognition", "name": "Speech Recognition"}, "dataset": {"name": "Common Voice 8.0", "type": "mozilla-foundation/common_voice_8_0", "args": "vi"}, "metrics": [{"type": "wer", "value": 31.158, "name": "Test WER (with LM)"}, {"type": "cer", "value": 16.179, "name": "Test CER (with LM)"}]}]}]}

|

geninhu/xls-asr-vi-40h-1B

| null |

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"common-voice",

"hf-asr-leaderboard",

"robust-speech-event",

"vi",

"dataset:mozilla-foundation/common_voice_7_0",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"vi"

] |

TAGS

#transformers #pytorch #tensorboard #wav2vec2 #automatic-speech-recognition #common-voice #hf-asr-leaderboard #robust-speech-event #vi #dataset-mozilla-foundation/common_voice_7_0 #license-apache-2.0 #model-index #endpoints_compatible #region-us

|

xls-asr-vi-40h-1B

=================

This model is a fine-tuned version of facebook/wav2vec2-xls-r-1b on 40 hours of FPT Open Speech Dataset (FOSD) and Common Voice 7.0.

### Benchmark WER result:

### Benchmark CER result:

Evaluation

----------

Please use the URL file to run the evaluation

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 5e-05

* train\_batch\_size: 16

* eval\_batch\_size: 16

* seed: 42

* gradient\_accumulation\_steps: 2

* total\_train\_batch\_size: 32

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* lr\_scheduler\_warmup\_steps: 1500

* num\_epochs: 10.0

* mixed\_precision\_training: Native AMP

### Training results

### Framework versions

* Transformers 4.16.0.dev0

* Pytorch 1.10.1+cu102

* Datasets 1.17.1.dev0

* Tokenizers 0.11.0

|

[

"### Benchmark WER result:",

"### Benchmark CER result:\n\n\n\nEvaluation\n----------\n\n\nPlease use the URL file to run the evaluation\n\n\nTraining procedure\n------------------",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* gradient\\_accumulation\\_steps: 2\n* total\\_train\\_batch\\_size: 32\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_steps: 1500\n* num\\_epochs: 10.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.16.0.dev0\n* Pytorch 1.10.1+cu102\n* Datasets 1.17.1.dev0\n* Tokenizers 0.11.0"

] |

[

"TAGS\n#transformers #pytorch #tensorboard #wav2vec2 #automatic-speech-recognition #common-voice #hf-asr-leaderboard #robust-speech-event #vi #dataset-mozilla-foundation/common_voice_7_0 #license-apache-2.0 #model-index #endpoints_compatible #region-us \n",

"### Benchmark WER result:",

"### Benchmark CER result:\n\n\n\nEvaluation\n----------\n\n\nPlease use the URL file to run the evaluation\n\n\nTraining procedure\n------------------",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* gradient\\_accumulation\\_steps: 2\n* total\\_train\\_batch\\_size: 32\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_steps: 1500\n* num\\_epochs: 10.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.16.0.dev0\n* Pytorch 1.10.1+cu102\n* Datasets 1.17.1.dev0\n* Tokenizers 0.11.0"

] |

automatic-speech-recognition

|

transformers

|

# xls-asr-vi-40h

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the Vietnamese (`vi`) subset of Common Voice 7.0 and a private dataset.

It achieves the following results on the evaluation set (Without Language Model):

- Loss: 1.1177

- Wer: 60.58

## Evaluation

Please run the eval.py file

```bash

python eval_custom.py --model_id geninhu/xls-asr-vi-40h --dataset mozilla-foundation/common_voice_7_0 --config vi --split test

```

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1500

- num_epochs: 50.0

- mixed_precision_training: Native AMP
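To make the `linear` scheduler with 1500 warmup steps concrete, here is a pure-Python sketch of the usual linear warmup followed by linear decay. It assumes the common shape (ramp from 0 to the base rate, then decay to 0 at the final step) and takes 81000 total steps from the last row of the training results for this model. The function name is illustrative, and the sketch is not taken from the training script.

```python
def linear_warmup_decay_lr(step, base_lr=5e-6, warmup_steps=1500, total_steps=81000):
    """Learning rate at a given optimizer step under linear warmup and linear decay."""
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr over the warmup period.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr at the end of warmup to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 750, 1500, 41250, 81000):
    print(step, linear_warmup_decay_lr(step))
```

With these values the rate peaks at step 1500 and falls to half the base rate midway through the decay phase (step 41250).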

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 23.3878 | 0.93 | 1500 | 21.9179 | 1.0 |

| 8.8862 | 1.85 | 3000 | 6.0599 | 1.0 |

| 4.3701 | 2.78 | 4500 | 4.3837 | 1.0 |

| 4.113 | 3.7 | 6000 | 4.2698 | 0.9982 |

| 3.9666 | 4.63 | 7500 | 3.9726 | 0.9989 |

| 3.5965 | 5.56 | 9000 | 3.7124 | 0.9975 |

| 3.3944 | 6.48 | 10500 | 3.5005 | 1.0057 |

| 3.304 | 7.41 | 12000 | 3.3710 | 1.0043 |

| 3.2482 | 8.33 | 13500 | 3.4201 | 1.0155 |

| 3.212 | 9.26 | 15000 | 3.3732 | 1.0151 |

| 3.1778 | 10.19 | 16500 | 3.2763 | 1.0009 |

| 3.1027 | 11.11 | 18000 | 3.1943 | 1.0025 |

| 2.9905 | 12.04 | 19500 | 2.8082 | 0.9703 |

| 2.7095 | 12.96 | 21000 | 2.4993 | 0.9302 |

| 2.4862 | 13.89 | 22500 | 2.3072 | 0.9140 |

| 2.3271 | 14.81 | 24000 | 2.1398 | 0.8949 |

| 2.1968 | 15.74 | 25500 | 2.0594 | 0.8817 |

| 2.111 | 16.67 | 27000 | 1.9404 | 0.8630 |

| 2.0387 | 17.59 | 28500 | 1.8895 | 0.8497 |

| 1.9504 | 18.52 | 30000 | 1.7961 | 0.8315 |

| 1.9039 | 19.44 | 31500 | 1.7433 | 0.8213 |

| 1.8342 | 20.37 | 33000 | 1.6790 | 0.7994 |

| 1.7824 | 21.3 | 34500 | 1.6291 | 0.7825 |

| 1.7359 | 22.22 | 36000 | 1.5783 | 0.7706 |

| 1.7053 | 23.15 | 37500 | 1.5248 | 0.7492 |

| 1.6504 | 24.07 | 39000 | 1.4930 | 0.7406 |

| 1.6263 | 25.0 | 40500 | 1.4572 | 0.7348 |

| 1.5893 | 25.93 | 42000 | 1.4202 | 0.7161 |

| 1.5669 | 26.85 | 43500 | 1.3987 | 0.7143 |

| 1.5277 | 27.78 | 45000 | 1.3512 | 0.6991 |

| 1.501 | 28.7 | 46500 | 1.3320 | 0.6879 |

| 1.4781 | 29.63 | 48000 | 1.3112 | 0.6788 |

| 1.4477 | 30.56 | 49500 | 1.2850 | 0.6657 |

| 1.4483 | 31.48 | 51000 | 1.2813 | 0.6633 |

| 1.4065 | 32.41 | 52500 | 1.2475 | 0.6541 |

| 1.3779 | 33.33 | 54000 | 1.2244 | 0.6503 |

| 1.3788 | 34.26 | 55500 | 1.2116 | 0.6407 |

| 1.3428 | 35.19 | 57000 | 1.1938 | 0.6352 |

| 1.3453 | 36.11 | 58500 | 1.1927 | 0.6340 |

| 1.3137 | 37.04 | 60000 | 1.1699 | 0.6252 |

| 1.2984 | 37.96 | 61500 | 1.1666 | 0.6229 |

| 1.2927 | 38.89 | 63000 | 1.1585 | 0.6188 |

| 1.2919 | 39.81 | 64500 | 1.1618 | 0.6190 |

| 1.293 | 40.74 | 66000 | 1.1479 | 0.6181 |

| 1.2853 | 41.67 | 67500 | 1.1423 | 0.6202 |

| 1.2687 | 42.59 | 69000 | 1.1315 | 0.6131 |

| 1.2603 | 43.52 | 70500 | 1.1333 | 0.6128 |

| 1.2577 | 44.44 | 72000 | 1.1191 | 0.6079 |

| 1.2435 | 45.37 | 73500 | 1.1177 | 0.6079 |

| 1.251 | 46.3 | 75000 | 1.1211 | 0.6092 |

| 1.2482 | 47.22 | 76500 | 1.1177 | 0.6060 |

| 1.2422 | 48.15 | 78000 | 1.1227 | 0.6097 |

| 1.2485 | 49.07 | 79500 | 1.1187 | 0.6071 |

| 1.2425 | 50.0 | 81000 | 1.1177 | 0.6058 |
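The step and epoch columns allow a rough estimate of the training-set size. The sketch below divides the final step by the final epoch and multiplies by the batch size. It assumes one device and no gradient accumulation (none is listed for this model), so the example count is only an estimate and not a figure reported by the card.

```python
final_step, final_epoch = 81000, 50.0  # last row of the table above
train_batch_size = 16                  # from the hyperparameter list

steps_per_epoch = final_step / final_epoch
approx_examples = steps_per_epoch * train_batch_size

print(steps_per_epoch)   # 1620.0
print(approx_examples)   # 25920.0
```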

### Framework versions

- Transformers 4.16.0.dev0

- Pytorch 1.10.1+cu102

- Datasets 1.17.1.dev0

- Tokenizers 0.11.0

|

{"language": ["vi"], "license": "apache-2.0", "tags": ["automatic-speech-recognition", "common-voice", "hf-asr-leaderboard", "robust-speech-event"], "datasets": ["mozilla-foundation/common_voice_7_0"], "model-index": [{"name": "xls-asr-vi-40h", "results": [{"task": {"type": "automatic-speech-recognition", "name": "Speech Recognition"}, "dataset": {"name": "Common Voice 7.0", "type": "mozilla-foundation/common_voice_7_0", "args": "vi"}, "metrics": [{"type": "wer", "value": 56.57, "name": "Test WER (with Language model)"}]}]}]}

|

geninhu/xls-asr-vi-40h

| null |

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"common-voice",

"hf-asr-leaderboard",

"robust-speech-event",

"vi",

"dataset:mozilla-foundation/common_voice_7_0",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"vi"

] |

TAGS

#transformers #pytorch #tensorboard #wav2vec2 #automatic-speech-recognition #common-voice #hf-asr-leaderboard #robust-speech-event #vi #dataset-mozilla-foundation/common_voice_7_0 #license-apache-2.0 #model-index #endpoints_compatible #region-us

|

xls-asr-vi-40h

==============

This model is a fine-tuned version of facebook/wav2vec2-xls-r-300m on the common voice 7.0 vi & private dataset.

It achieves the following results on the evaluation set (Without Language Model):

* Loss: 1.1177

* Wer: 60.58

Evaluation

----------

Please run the URL file

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 5e-06

* train\_batch\_size: 16

* eval\_batch\_size: 8

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* lr\_scheduler\_warmup\_steps: 1500

* num\_epochs: 50.0

* mixed\_precision\_training: Native AMP

### Training results

### Framework versions

* Transformers 4.16.0.dev0

* Pytorch 1.10.1+cu102

* Datasets 1.17.1.dev0

* Tokenizers 0.11.0

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-06\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_steps: 1500\n* num\\_epochs: 50.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.16.0.dev0\n* Pytorch 1.10.1+cu102\n* Datasets 1.17.1.dev0\n* Tokenizers 0.11.0"

] |

[

"TAGS\n#transformers #pytorch #tensorboard #wav2vec2 #automatic-speech-recognition #common-voice #hf-asr-leaderboard #robust-speech-event #vi #dataset-mozilla-foundation/common_voice_7_0 #license-apache-2.0 #model-index #endpoints_compatible #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-06\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_steps: 1500\n* num\\_epochs: 50.0\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.16.0.dev0\n* Pytorch 1.10.1+cu102\n* Datasets 1.17.1.dev0\n* Tokenizers 0.11.0"

] |

text-generation

|

transformers

|

# MechDistilGPT2

## Table of Contents

- [Model Details](#model-details)

- [Uses](#uses)

- [Risks, Limitations and Biases](#risks-limitations-and-biases)

- [Training](#training)

- [Environmental Impact](#environmental-impact)

- [How to Get Started With the Model](#how-to-get-started-with-the-model)

## Model Details

- **Model Description:**

This model is fine-tuned on text scraped from 100+ Mechanical/Automotive PDF books.

- **Developed by:** [Ashwin](https://huggingface.co/geralt)

- **Model Type:** Causal Language modeling

- **Language(s):** English

- **License:** [More Information Needed]

- **Parent Model:** See the [DistilGPT2 model](https://huggingface.co/distilgpt2) for more information about the Distilled-GPT2 base model.

- **Resources for more information:**

- [Research Paper](https://arxiv.org/abs/2105.09680)

- [GitHub Repo](https://github.com/huggingface/notebooks/blob/master/examples/language_modeling.ipynb)

## Uses

#### Direct Use

The model can be used for tasks including topic classification, causal language modeling, and text generation.

#### Misuse and Out-of-scope Use

The model should not be used to intentionally create hostile or alienating environments for people. In addition, the model was not trained to produce factual or true representations of people or events, so using it to generate such content is out of scope for its abilities.

## Risks, Limitations and Biases

**CONTENT WARNING: Readers should be aware this section contains content that is disturbing, offensive, and can propagate historical and current stereotypes.**

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)).

## Training

#### Training Data

This model is fine-tuned on text scraped from 100+ Mechanical/Automotive PDF books.

#### Training Procedure

###### Fine-Tuning

* Default Training Args

* Epochs = 3

* Training set = 200k sentences

* Validation set = 40k sentences

###### Framework versions

* Transformers 4.7.0.dev0

* Pytorch 1.8.1+cu111

* Datasets 1.6.2

* Tokenizers 0.10.2

## Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More information needed]

- **Hours used:** [More information needed]

- **Cloud Provider:** [More information needed]

- **Compute Region:** [More information needed]

- **Carbon Emitted:** [More information needed]

## How to Get Started With the Model

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("geralt/MechDistilGPT2")

model = AutoModelForCausalLM.from_pretrained("geralt/MechDistilGPT2")

```

|

{"tags": ["Causal Language modeling", "text-generation", "CLM"], "model_index": [{"name": "MechDistilGPT2", "results": [{"task": {"name": "Causal Language modeling", "type": "Causal Language modeling"}}]}]}

|

geralt/MechDistilGPT2

| null |

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"Causal Language modeling",

"CLM",

"arxiv:2105.09680",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[

"2105.09680",

"1910.09700"

] |

[] |

TAGS

#transformers #pytorch #gpt2 #text-generation #Causal Language modeling #CLM #arxiv-2105.09680 #arxiv-1910.09700 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# MechDistilGPT2

## Table of Contents

- Model Details

- Uses

- Risks, Limitations and Biases

- Training

- Environmental Impact

- How to Get Started With the Model

## Model Details

- Model Description:

This model is fine-tuned on text scraped from 100+ Mechanical/Automotive pdf books.

- Developed by: Ashwin

- Model Type: Causal Language modeling

- Language(s): English

- License:

- Parent Model: See the DistilGPT2 model for more information about the Distilled-GPT2 base model.

- Resources for more information:

- Research Paper

- GitHub Repo

## Uses

#### Direct Use

The model can be used for tasks including topic classification, Causal Language modeling and text generation

#### Misuse and Out-of-scope Use

The model should not be used to intentionally create hostile or alienating environments for people. In addition, the model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

## Risks, Limitations and Biases

CONTENT WARNING: Readers should be aware this section contains content that is disturbing, offensive, and can propagate historical and current stereotypes.

Significant research has explored bias and fairness issues with language models (see, e.g., Sheng et al. (2021) and Bender et al. (2021)).

## Training

#### Training Data

This model is fine-tuned on text scraped from 100+ Mechanical/Automotive pdf books.

#### Training Procedure

###### Fine-Tuning

* Default Training Args

* Epochs = 3

* Training set = 200k sentences

* Validation set = 40k sentences

###### Framework versions

* Transformers 4.7.0.dev0

* Pytorch 1.8.1+cu111

* Datasets 1.6.2

* Tokenizers 0.10.2

## Environmental Impact

Carbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).

- Hardware Type: [More information needed]

- Hours used: [More information needed]

- Cloud Provider: [More information needed]

- Compute Region: [More information needed]

- Carbon Emitted: [More information needed]

## How to Get Started With the Model

|

[

"# MechDistilGPT2",

"## Table of Contents\n- Model Details \n- Uses\n- Risks, Limitations and Biases\n- Training\n- Environmental Impact\n- How to Get Started With the Model",

"## Model Details\n- Model Description: \nThis model is fine-tuned on text scraped from 100+ Mechanical/Automotive pdf books.\n\n\n- Developed by: Ashwin\n\n- Model Type: Causal Language modeling\n- Language(s): English\n- License: \n- Parent Model: See the DistilGPT2model for more information about the Distilled-GPT2 base model.\n- Resources for more information:\n - Research Paper\n - GitHub Repo",

"## Uses",

"#### Direct Use\n\nThe model can be used for tasks including topic classification, Causal Language modeling and text generation",

"#### Misuse and Out-of-scope Use\n\nThe model should not be used to intentionally create hostile or alienating environments for people. In addition, the model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.",

"## Risks, Limitations and Biases\n\nCONTENT WARNING: Readers should be aware this section contains content that is disturbing, offensive, and can propagate historical and current stereotypes.\n\nSignificant research has explored bias and fairness issues with language models (see, e.g., Sheng et al. (2021) and Bender et al. (2021)).",

"## Training",

"#### Training Data\n\nThis model is fine-tuned on text scraped from 100+ Mechanical/Automotive pdf books.",

"#### Training Procedure",

"###### Fine-Tuning\n\n* Default Training Args\n* Epochs = 3\n* Training set = 200k sentences\n* Validation set = 40k sentences",

"###### Framework versions\n\n* Transformers 4.7.0.dev0\n* Pytorch 1.8.1+cu111\n* Datasets 1.6.2\n* Tokenizers 0.10.2",

"# Environmental Impact\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: [More information needed]\n- Hours used: [More information needed]\n- Cloud Provider: [More information needed]\n- Compute Region: [More information needed\"]\n- Carbon Emitted: [More information needed]\n",

"## How to Get Started With the Model"

] |

[

"TAGS\n#transformers #pytorch #gpt2 #text-generation #Causal Language modeling #CLM #arxiv-2105.09680 #arxiv-1910.09700 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# MechDistilGPT2",

"## Table of Contents\n- Model Details \n- Uses\n- Risks, Limitations and Biases\n- Training\n- Environmental Impact\n- How to Get Started With the Model",

"## Model Details\n- Model Description: \nThis model is fine-tuned on text scraped from 100+ Mechanical/Automotive pdf books.\n\n\n- Developed by: Ashwin\n\n- Model Type: Causal Language modeling\n- Language(s): English\n- License: \n- Parent Model: See the DistilGPT2model for more information about the Distilled-GPT2 base model.\n- Resources for more information:\n - Research Paper\n - GitHub Repo",

"## Uses",

"#### Direct Use\n\nThe model can be used for tasks including topic classification, Causal Language modeling and text generation",

"#### Misuse and Out-of-scope Use\n\nThe model should not be used to intentionally create hostile or alienating environments for people. In addition, the model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.",

"## Risks, Limitations and Biases\n\nCONTENT WARNING: Readers should be aware this section contains content that is disturbing, offensive, and can propagate historical and current stereotypes.\n\nSignificant research has explored bias and fairness issues with language models (see, e.g., Sheng et al. (2021) and Bender et al. (2021)).",

"## Training",

"#### Training Data\n\nThis model is fine-tuned on text scraped from 100+ Mechanical/Automotive pdf books.",

"#### Training Procedure",

"###### Fine-Tuning\n\n* Default Training Args\n* Epochs = 3\n* Training set = 200k sentences\n* Validation set = 40k sentences",

"###### Framework versions\n\n* Transformers 4.7.0.dev0\n* Pytorch 1.8.1+cu111\n* Datasets 1.6.2\n* Tokenizers 0.10.2",

"# Environmental Impact\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: [More information needed]\n- Hours used: [More information needed]\n- Cloud Provider: [More information needed]\n- Compute Region: [More information needed\"]\n- Carbon Emitted: [More information needed]\n",

"## How to Get Started With the Model"

] |

question-answering

|

transformers

|


# biobert_v1.1_pubmed-finetuned-squad

This model is a fine-tuned version of [gerardozq/biobert_v1.1_pubmed-finetuned-squad](https://huggingface.co/gerardozq/biobert_v1.1_pubmed-finetuned-squad) on the squad_v2 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1
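The `lr_scheduler_type: linear` setting above can be illustrated with a small sketch. It assumes the usual meaning of a linear schedule (optional linear warmup, then linear decay to zero); the card states neither the warmup length nor the total number of optimizer steps, so `warmup_steps=0` and `total_steps=1000` below are hypothetical values chosen only for illustration.

```python
def linear_lr(step: int, total_steps: int, base_lr: float = 2e-05,
              warmup_steps: int = 0) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero.

    base_lr matches the card (2e-05); total_steps and warmup_steps are
    assumptions, since the card does not state them.
    """
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * (step / max(1, warmup_steps))
    remaining = max(0, total_steps - step)
    # Linear decay from base_lr down to 0 at total_steps.
    return base_lr * (remaining / max(1, total_steps - warmup_steps))


total_steps = 1000  # hypothetical; depends on dataset size and batch size
for step in (0, 500, 1000):
    print(step, linear_lr(step, total_steps))
```

With these assumed values the rate starts at the configured 2e-05, is halved at the midpoint, and reaches zero at the final step.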

### Framework versions

- Transformers 4.12.3

- Pytorch 1.9.0+cu111

- Datasets 1.15.1

- Tokenizers 0.10.3

|

{"tags": ["generated_from_trainer"], "datasets": ["squad_v2"], "model-index": [{"name": "biobert_v1.1_pubmed-finetuned-squad", "results": []}]}

|

gerardozq/biobert_v1.1_pubmed-finetuned-squad

| null |

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"question-answering",

"generated_from_trainer",

"dataset:squad_v2",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[] |

TAGS

#transformers #pytorch #tensorboard #bert #question-answering #generated_from_trainer #dataset-squad_v2 #endpoints_compatible #region-us

|

# biobert_v1.1_pubmed-finetuned-squad

This model is a fine-tuned version of gerardozq/biobert_v1.1_pubmed-finetuned-squad on the squad_v2 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Framework versions

- Transformers 4.12.3

- Pytorch 1.9.0+cu111

- Datasets 1.15.1

- Tokenizers 0.10.3

|

[

"# biobert_v1.1_pubmed-finetuned-squad\n\nThis model is a fine-tuned version of gerardozq/biobert_v1.1_pubmed-finetuned-squad on the squad_v2 dataset.",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 2e-05\n- train_batch_size: 16\n- eval_batch_size: 16\n- seed: 42\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 1",

"### Framework versions\n\n- Transformers 4.12.3\n- Pytorch 1.9.0+cu111\n- Datasets 1.15.1\n- Tokenizers 0.10.3"

] |

[

"TAGS\n#transformers #pytorch #tensorboard #bert #question-answering #generated_from_trainer #dataset-squad_v2 #endpoints_compatible #region-us \n",

"# biobert_v1.1_pubmed-finetuned-squad\n\nThis model is a fine-tuned version of gerardozq/biobert_v1.1_pubmed-finetuned-squad on the squad_v2 dataset.",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 2e-05\n- train_batch_size: 16\n- eval_batch_size: 16\n- seed: 42\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 1",

"### Framework versions\n\n- Transformers 4.12.3\n- Pytorch 1.9.0+cu111\n- Datasets 1.15.1\n- Tokenizers 0.10.3"

] |

null |

transformers

|

# German Electra Uncased

<img width="300px" src="https://raw.githubusercontent.com/German-NLP-Group/german-transformer-training/master/model_cards/german-electra-logo.png">

[¹]

## Version 2 Release

We released an improved version of this model. Version 1 was trained for 766,000 steps. For this new version we continued the training for an additional 734,000 steps, so version 2 was trained for a total of 1,500,000 steps. See "Evaluation of Version 2: GermEval18 Coarse" below for details.

## Model Info

This Model is suitable for training on many downstream tasks in German (Q&A, Sentiment Analysis, etc.).

It can be used as a drop-in replacement for **BERT** in most down-stream tasks (**ELECTRA** is even implemented as an extended **BERT** Class).

At the time of release (August 2020) this model is the best performing publicly available German NLP model on various German evaluation metrics (CONLL03-DE, GermEval18 Coarse, GermEval18 Fine). For GermEval18 Coarse results see below. More will be published soon.

## Installation

This model has the special feature that it is **uncased** but does **not strip accents**.

This possibility was added by us with [PR #6280](https://github.com/huggingface/transformers/pull/6280).

To use it you have to use Transformers version 3.1.0 or newer.

```bash

pip install transformers -U

```

## Uncase and Umlauts ('Ö', 'Ä', 'Ü')

This model is uncased. This helps especially in domains where colloquial terms with incorrect capitalization are common.

The special characters 'ö', 'ü', 'ä' are preserved through the `strip_accents=False` option, as this leads to improved precision.
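To make the difference between lowercasing and accent stripping concrete, here is a minimal standard-library sketch. It is not the tokenizer's actual code; it only assumes that accent stripping means Unicode NFD decomposition followed by removal of combining marks, and the helper names `strip_accents` and `normalize` are hypothetical.

```python
import unicodedata


def strip_accents(text: str) -> str:
    """Drop combining marks after NFD decomposition (assumed accent-stripping behaviour)."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


def normalize(text: str, do_lower_case: bool = True, strip: bool = False) -> str:
    """Hypothetical helper mirroring the two independent options."""
    if do_lower_case:
        text = text.lower()
    if strip:
        text = strip_accents(text)
    return text


word = "Mütter"  # "mothers"; without the umlaut it becomes "Mutter" ("mother")
print(normalize(word))              # mütter  (uncased, umlaut kept)
print(normalize(word, strip=True))  # mutter  (uncased, umlaut lost)
```

Keeping the umlaut preserves the distinction between the two words, which is the motivation for an uncased model that does not strip accents.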

## Creators

This model was trained and open sourced in conjunction with the [**German NLP Group**](https://github.com/German-NLP-Group) in equal parts by:

- [**Philip May**](https://May.la) - [Deutsche Telekom](https://www.telekom.de/)

- [**Philipp Reißel**](https://www.linkedin.com/in/philipp-reissel/) - [ambeRoad](https://amberoad.de/)

## Evaluation of Version 2: GermEval18 Coarse

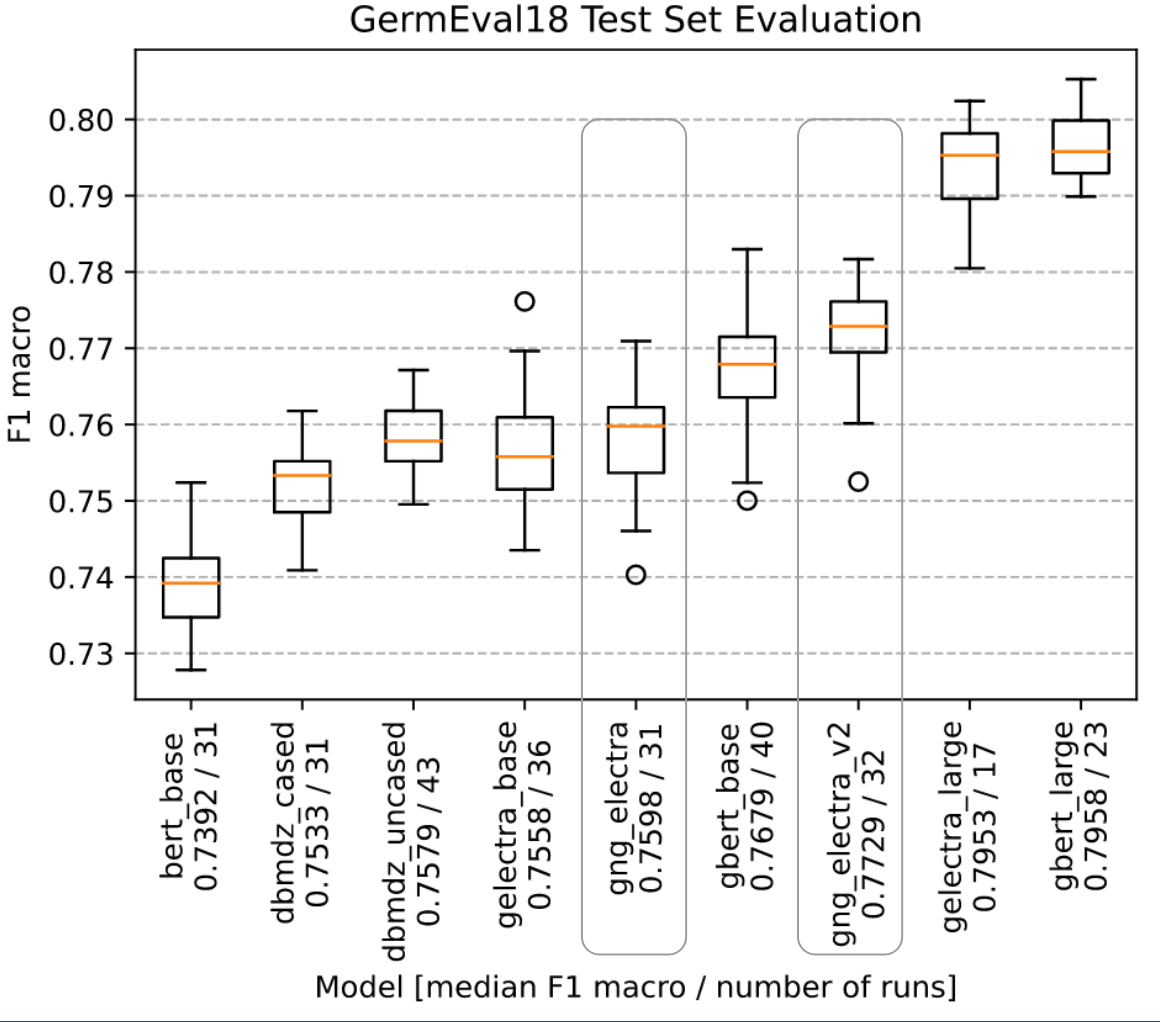

We evaluated all language models on GermEval18 with the F1 macro score. For each model we ran an extensive automated hyperparameter search. With the best hyperparameters we fit the model multiple times on GermEval18. This is done to cancel out random effects and obtain statistically meaningful results.
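For readers unfamiliar with the metric, the following is a small pure-Python sketch of a macro-averaged F1 score: F1 is computed per class and then averaged without weighting by class frequency. The function name and the tiny label lists are illustrative only and are not taken from the evaluation code or the GermEval18 data.

```python
from collections import Counter


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gets a false positive
            fn[truth] += 1  # true class gets a false negative
    scores = []
    for label in labels:
        denom = 2 * tp[label] + fp[label] + fn[label]
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Illustrative toy labels only.
y_true = ["OFFENSE", "OTHER", "OTHER", "OFFENSE"]
y_pred = ["OFFENSE", "OTHER", "OFFENSE", "OTHER"]
print(macro_f1(y_true, y_pred))  # 0.5
```

Because each class contributes equally, a model cannot score well by only predicting the majority class.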

## Checkpoint evaluation

Since it is not guaranteed that the last checkpoint is the best, we evaluated the checkpoints on GermEval18. We found that the last checkpoint is indeed the best. The training was stable and did not overfit the text corpus.

## Pre-training details

### Data

- Cleaned Common Crawl Corpus 2019-09 German: [CC_net](https://github.com/facebookresearch/cc_net) (Only head corpus and filtered for language_score > 0.98) - 62 GB

- German Wikipedia Article Pages Dump (20200701) - 5.5 GB

- German Wikipedia Talk Pages Dump (20200620) - 1.1 GB

- Subtitles - 823 MB

- News 2018 - 4.1 GB

The sentences were split with [SoMaJo](https://github.com/tsproisl/SoMaJo). We took the German Wikipedia Article Pages Dump 3x to oversample it. A similar approach was used for GPT-3 (Table 2.2).

More details can be found here: [Preparing Datasets for German Electra on GitHub](https://github.com/German-NLP-Group/german-transformer-training)
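The effect of taking the Wikipedia article dump three times can be worked out from the sizes listed above. This is only a back-of-the-envelope sketch: it assumes 823 MB is about 0.823 GB and that oversampling simply repeats the data; the dictionary keys are made-up names.

```python
# Sizes in GB as listed in the data section (823 MB taken as 0.823 GB).
corpus_gb = {
    "common_crawl_head": 62.0,
    "wikipedia_articles": 5.5,
    "wikipedia_talk": 1.1,
    "subtitles": 0.823,
    "news_2018": 4.1,
}
# The Wikipedia article dump is used three times; everything else once.
repeats = {"wikipedia_articles": 3}


def effective_size_gb(sizes, repeat_factors):
    """Total size after repeating each source by its oversampling factor."""
    return sum(size * repeat_factors.get(name, 1) for name, size in sizes.items())


raw_total = sum(corpus_gb.values())
effective_total = effective_size_gb(corpus_gb, repeats)
wiki_share_raw = corpus_gb["wikipedia_articles"] / raw_total
wiki_share_oversampled = 3 * corpus_gb["wikipedia_articles"] / effective_total

print(round(raw_total, 3))        # 73.523
print(round(effective_total, 3))  # 84.523
print(f"{wiki_share_raw:.1%} -> {wiki_share_oversampled:.1%}")
```

Under these assumptions, oversampling raises the share of Wikipedia article text from roughly 7% to roughly 20% of the training mix.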

### Electra Branch no_strip_accents

Because we do not want to strip accents in our training data, we made a change to Electra and used this repo: [Electra no_strip_accents](https://github.com/PhilipMay/electra/tree/no_strip_accents) (branch `no_strip_accents`). We then created the TF dataset with:

```bash

python build_pretraining_dataset.py --corpus-dir <corpus_dir> --vocab-file <dir>/vocab.txt --output-dir ./tf_data --max-seq-length 512 --num-processes 8 --do-lower-case --no-strip-accents

```

### The training

The training itself can be performed with the Original Electra Repo (No special case for this needed).

We ran it with the following config:

<details>

<summary>The exact Training Config</summary>

<br/>debug False

<br/>disallow_correct False

<br/>disc_weight 50.0

<br/>do_eval False

<br/>do_lower_case True

<br/>do_train True

<br/>electra_objective True

<br/>embedding_size 768

<br/>eval_batch_size 128

<br/>gcp_project None

<br/>gen_weight 1.0

<br/>generator_hidden_size 0.33333

<br/>generator_layers 1.0

<br/>iterations_per_loop 200

<br/>keep_checkpoint_max 0

<br/>learning_rate 0.0002

<br/>lr_decay_power 1.0

<br/>mask_prob 0.15

<br/>max_predictions_per_seq 79

<br/>max_seq_length 512

<br/>model_dir gs://XXX

<br/>model_hparam_overrides {}

<br/>model_name 02_Electra_Checkpoints_32k_766k_Combined

<br/>model_size base

<br/>num_eval_steps 100

<br/>num_tpu_cores 8

<br/>num_train_steps 766000

<br/>num_warmup_steps 10000

<br/>pretrain_tfrecords gs://XXX

<br/>results_pkl gs://XXX

<br/>results_txt gs://XXX

<br/>save_checkpoints_steps 5000

<br/>temperature 1.0

<br/>tpu_job_name None

<br/>tpu_name electrav5

<br/>tpu_zone None

<br/>train_batch_size 256

<br/>uniform_generator False

<br/>untied_generator True

<br/>untied_generator_embeddings False

<br/>use_tpu True

<br/>vocab_file gs://XXX

<br/>vocab_size 32767

<br/>weight_decay_rate 0.01

</details>

Please note: *Due to the GAN-like structure of Electra the loss is not that meaningful*

It took about 7 days on a preemptible TPU V3-8. In total, the model went through approximately 10 epochs. To automatically recreate preempted TPUs we used [tpunicorn](https://github.com/shawwn/tpunicorn). The total cost of training came to about $450 for one run. Data preprocessing and vocab creation needed approximately 500-1000 CPU hours. Servers were fully provided by [T-Systems on site services GmbH](https://www.t-systems-onsite.de/) and [ambeRoad](https://amberoad.de/).
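As a rough sanity check on the figures in the training config, the per-core batch size and the number of sequences processed can be derived from values already given in this card. The token count is only an upper bound, since it assumes every sequence is filled to `max_seq_length`.

```python
# Values taken from the training config and the version notes in this card.
train_batch_size = 256
num_tpu_cores = 8
max_seq_length = 512
num_train_steps_v1 = 766_000
additional_steps_v2 = 734_000

per_core_batch = train_batch_size // num_tpu_cores        # batch size per TPU core
sequences_v1 = num_train_steps_v1 * train_batch_size      # sequences seen in version 1
total_steps_v2 = num_train_steps_v1 + additional_steps_v2
# Upper bound only: assumes every sequence is exactly max_seq_length tokens long.
max_tokens_v1 = sequences_v1 * max_seq_length

print(per_core_batch)   # 32
print(sequences_v1)     # 196096000
print(total_steps_v2)   # 1500000
print(max_tokens_v1)    # 100401152000
```

The derived 32 sequences per core agrees with the per-core batch size quoted under "Negative Results".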

Special thanks to [Stefan Schweter](https://github.com/stefan-it) for his feedback and for providing parts of the text corpus.

[¹]: Source for the picture [Pinterest](https://www.pinterest.cl/pin/371828512984142193/)

### Negative Results

We tried the following approaches which we found had no positive influence:

- **Increased Vocab Size**: Leads to more parameters and thus reduced examples/sec while no visible Performance gains were measured

- **Decreased Batch-Size**: The original Electra was trained with a Batch Size per TPU Core of 16 whereas this Model was trained with 32 BS / TPU Core. We found out that 32 BS leads to better results when you compare metrics over computation time

## License - The MIT License

Copyright 2020-2021 Philip May<br>

Copyright 2020-2021 Philipp Reissel

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

{"language": "de", "license": "mit", "tags": ["electra", "commoncrawl", "uncased", "umlaute", "umlauts", "german", "deutsch"], "thumbnail": "https://raw.githubusercontent.com/German-NLP-Group/german-transformer-training/master/model_cards/german-electra-logo.png"}

|

german-nlp-group/electra-base-german-uncased

| null |

[

"transformers",

"pytorch",

"electra",

"pretraining",

"commoncrawl",

"uncased",

"umlaute",

"umlauts",

"german",

"deutsch",

"de",

"license:mit",

"endpoints_compatible",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[] |

[

"de"

] |

TAGS

#transformers #pytorch #electra #pretraining #commoncrawl #uncased #umlaute #umlauts #german #deutsch #de #license-mit #endpoints_compatible #region-us

|

# German Electra Uncased

<img width="300px" src="URL

[¹]

## Version 2 Release

We released an improved version of this model. Version 1 was trained for 766,000 steps. For this new version we continued the training for an additional 734,000 steps, so version 2 was trained for a total of 1,500,000 steps. See "Evaluation of Version 2: GermEval18 Coarse" below for details.

## Model Info

This Model is suitable for training on many downstream tasks in German (Q&A, Sentiment Analysis, etc.).

It can be used as a drop-in replacement for BERT in most down-stream tasks (ELECTRA is even implemented as an extended BERT Class).

At the time of release (August 2020) this model is the best performing publicly available German NLP model on various German evaluation metrics (CONLL03-DE, GermEval18 Coarse, GermEval18 Fine). For GermEval18 Coarse results see below. More will be published soon.

## Installation

This model has the special feature that it is uncased but does not strip accents.

This possibility was added by us with PR #6280.

To use it you have to use Transformers version 3.1.0 or newer.

## Uncase and Umlauts ('Ö', 'Ä', 'Ü')

This model is uncased. This helps especially in domains where colloquial terms with incorrect capitalization are common.

The special characters 'ö', 'ü', 'ä' are preserved through the 'strip_accents=False' option, as this leads to improved precision.

## Creators

This model was trained and open sourced in conjunction with the German NLP Group in equal parts by:

- Philip May - Deutsche Telekom

- Philipp Reißel - ambeRoad

## Evaluation of Version 2: GermEval18 Coarse

We evaluated all language models on GermEval18 with the F1 macro score. For each model we ran an extensive automated hyperparameter search. With the best hyperparameters we fit the model multiple times on GermEval18. This is done to cancel out random effects and obtain statistically meaningful results.

!GermEval18 Coarse Model Evaluation for Version 2

## Checkpoint evaluation

Since it is not guaranteed that the last checkpoint is the best, we evaluated the checkpoints on GermEval18. We found that the last checkpoint is indeed the best. The training was stable and did not overfit the text corpus.

## Pre-training details

### Data

- Cleaned Common Crawl Corpus 2019-09 German: CC_net (Only head corpus and filtered for language_score > 0.98) - 62 GB

- German Wikipedia Article Pages Dump (20200701) - 5.5 GB

- German Wikipedia Talk Pages Dump (20200620) - 1.1 GB

- Subtitles - 823 MB

- News 2018 - 4.1 GB

The sentences were split with SoMaJo. We took the German Wikipedia Article Pages Dump 3x to oversample it. A similar approach was used for GPT-3 (Table 2.2).

More details can be found here: Preparing Datasets for German Electra on GitHub

### Electra Branch no_strip_accents

Because we do not want to strip accents in our training data, we made a change to Electra and used this repo: Electra no_strip_accents (branch 'no_strip_accents'). We then created the TF dataset with:

### The training

The training itself can be performed with the Original Electra Repo (No special case for this needed).

We ran it with the following config:

<details>

<summary>The exact Training Config</summary>

<br/>debug False

<br/>disallow_correct False

<br/>disc_weight 50.0

<br/>do_eval False

<br/>do_lower_case True

<br/>do_train True

<br/>electra_objective True

<br/>embedding_size 768

<br/>eval_batch_size 128

<br/>gcp_project None

<br/>gen_weight 1.0

<br/>generator_hidden_size 0.33333

<br/>generator_layers 1.0

<br/>iterations_per_loop 200

<br/>keep_checkpoint_max 0

<br/>learning_rate 0.0002

<br/>lr_decay_power 1.0

<br/>mask_prob 0.15

<br/>max_predictions_per_seq 79

<br/>max_seq_length 512

<br/>model_dir gs://XXX

<br/>model_hparam_overrides {}

<br/>model_name 02_Electra_Checkpoints_32k_766k_Combined

<br/>model_size base

<br/>num_eval_steps 100

<br/>num_tpu_cores 8

<br/>num_train_steps 766000

<br/>num_warmup_steps 10000

<br/>pretrain_tfrecords gs://XXX

<br/>results_pkl gs://XXX

<br/>results_txt gs://XXX

<br/>save_checkpoints_steps 5000

<br/>temperature 1.0

<br/>tpu_job_name None

<br/>tpu_name electrav5

<br/>tpu_zone None

<br/>train_batch_size 256

<br/>uniform_generator False

<br/>untied_generator True

<br/>untied_generator_embeddings False

<br/>use_tpu True

<br/>vocab_file gs://XXX

<br/>vocab_size 32767

<br/>weight_decay_rate 0.01

</details>

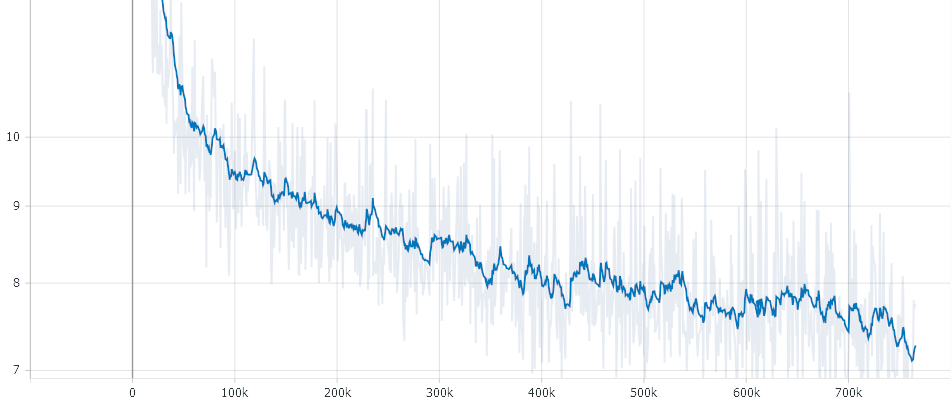

!Training Loss

Please note: *Due to the GAN-like structure of Electra the loss is not that meaningful*

It took about 7 days on a preemptible TPU V3-8. In total, the model went through approximately 10 epochs. To automatically recreate preempted TPUs we used tpunicorn. The total cost of training came to about $450 for one run. Data preprocessing and vocab creation needed approximately 500-1000 CPU hours. Servers were fully provided by T-Systems on site services GmbH and ambeRoad.

Special thanks to Stefan Schweter for his feedback and for providing parts of the text corpus.

[¹]: Source for the picture Pinterest

### Negative Results

We tried the following approaches which we found had no positive influence:

- Increased Vocab Size: Leads to more parameters and thus reduced examples/sec while no visible Performance gains were measured

- Decreased Batch-Size: The original Electra was trained with a Batch Size per TPU Core of 16 whereas this Model was trained with 32 BS / TPU Core. We found out that 32 BS leads to better results when you compare metrics over computation time

## License - The MIT License

Copyright 2020-2021 Philip May<br>

Copyright 2020-2021 Philipp Reissel

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

[

"# German Electra Uncased\n<img width=\"300px\" src=\"URL\n[¹]",

"## Version 2 Release\nWe released an improved version of this model. Version 1 was trained for 766,000 steps. For this new version we continued the training for an additional 734,000 steps. It therefore follows that version 2 was trained on a total of 1,500,000 steps. See \"Evaluation of Version 2: GermEval18 Coarse\" below for details.",

"## Model Info\nThis Model is suitable for training on many downstream tasks in German (Q&A, Sentiment Analysis, etc.).\n\nIt can be used as a drop-in replacement for BERT in most down-stream tasks (ELECTRA is even implemented as an extended BERT Class).\n\nAt the time of release (August 2020) this model is the best performing publicly available German NLP model on various German evaluation metrics (CONLL03-DE, GermEval18 Coarse, GermEval18 Fine). For GermEval18 Coarse results see below. More will be published soon.",

"## Installation\nThis model has the special feature that it is uncased but does not strip accents.\nThis possibility was added by us with PR #6280.\nTo use it you have to use Transformers version 3.1.0 or newer.",

"## Uncase and Umlauts ('Ö', 'Ä', 'Ü')\nThis model is uncased. This helps especially for domains where colloquial terms with uncorrect capitalization is often used.\n\nThe special characters 'ö', 'ü', 'ä' are included through the 'strip_accent=False' option, as this leads to an improved precision.",

"## Creators\nThis model was trained and open sourced in conjunction with the German NLP Group in equal parts by:\n- Philip May - Deutsche Telekom\n- Philipp Reißel - ambeRoad",

"## Evaluation of Version 2: GermEval18 Coarse\nWe evaluated all language models on GermEval18 with the F1 macro score. For each model we did an extensive automated hyperparameter search. With the best hyperparmeters we did fit the moodel multiple times on GermEval18. This is done to cancel random effects and get results of statistical relevance.\n\n!GermEval18 Coarse Model Evaluation for Version 2",

"## Checkpoint evaluation\nSince it it not guaranteed that the last checkpoint is the best, we evaluated the checkpoints on GermEval18. We found that the last checkpoint is indeed the best. The training was stable and did not overfit the text corpus.",

"## Pre-training details",

"### Data\n- Cleaned Common Crawl Corpus 2019-09 German: CC_net (Only head coprus and filtered for language_score > 0.98) - 62 GB\n- German Wikipedia Article Pages Dump (20200701) - 5.5 GB\n- German Wikipedia Talk Pages Dump (20200620) - 1.1 GB\n- Subtitles - 823 MB\n- News 2018 - 4.1 GB\n\nThe sentences were split with SojaMo. We took the German Wikipedia Article Pages Dump 3x to oversample. This approach was also used in a similar way in GPT-3 (Table 2.2).\n\nMore Details can be found here Preperaing Datasets for German Electra Github",

"### Electra Branch no_strip_accents\nBecause we do not want to stip accents in our training data we made a change to Electra and used this repo Electra no_strip_accents (branch 'no_strip_accents'). Then created the tf dataset with:",

"### The training\nThe training itself can be performed with the Original Electra Repo (No special case for this needed).\nWe run it with the following Config:\n\n<details>\n<summary>The exact Training Config</summary>\n<br/>debug False\n<br/>disallow_correct False\n<br/>disc_weight 50.0\n<br/>do_eval False\n<br/>do_lower_case True\n<br/>do_train True\n<br/>electra_objective True\n<br/>embedding_size 768\n<br/>eval_batch_size 128\n<br/>gcp_project None\n<br/>gen_weight 1.0\n<br/>generator_hidden_size 0.33333\n<br/>generator_layers 1.0\n<br/>iterations_per_loop 200\n<br/>keep_checkpoint_max 0\n<br/>learning_rate 0.0002\n<br/>lr_decay_power 1.0\n<br/>mask_prob 0.15\n<br/>max_predictions_per_seq 79\n<br/>max_seq_length 512\n<br/>model_dir gs://XXX\n<br/>model_hparam_overrides {}\n<br/>model_name 02_Electra_Checkpoints_32k_766k_Combined\n<br/>model_size base\n<br/>num_eval_steps 100\n<br/>num_tpu_cores 8\n<br/>num_train_steps 766000\n<br/>num_warmup_steps 10000\n<br/>pretrain_tfrecords gs://XXX\n<br/>results_pkl gs://XXX\n<br/>results_txt gs://XXX\n<br/>save_checkpoints_steps 5000\n<br/>temperature 1.0\n<br/>tpu_job_name None\n<br/>tpu_name electrav5\n<br/>tpu_zone None\n<br/>train_batch_size 256\n<br/>uniform_generator False\n<br/>untied_generator True\n<br/>untied_generator_embeddings False\n<br/>use_tpu True\n<br/>vocab_file gs://XXX\n<br/>vocab_size 32767\n<br/>weight_decay_rate 0.01\n </details>\n\n!Training Loss\n\nPlease Note: *Due to the GAN like strucutre of Electra the loss is not that meaningful*\n\nIt took about 7 Days on a preemtible TPU V3-8. In total, the Model went through approximately 10 Epochs. For an automatically recreation of a cancelled TPUs we used tpunicorn. The total cost of training summed up to about 450 $ for one run. The Data-pre processing and Vocab Creation needed approximately 500-1000 CPU hours. 
Servers were fully provided by T-Systems on site services GmbH, ambeRoad.\nSpecial thanks to Stefan Schweter for your feedback and providing parts of the text corpus.\n\n[¹]: Source for the picture Pinterest",

"### Negative Results\nWe tried the following approaches which we found had no positive influence:\n\n- Increased Vocab Size: Leads to more parameters and thus reduced examples/sec while no visible Performance gains were measured\n- Decreased Batch-Size: The original Electra was trained with a Batch Size per TPU Core of 16 whereas this Model was trained with 32 BS / TPU Core. We found out that 32 BS leads to better results when you compare metrics over computation time",

"## License - The MIT License\nCopyright 2020-2021 Philip May<br>\nCopyright 2020-2021 Philipp Reissel\n\nPermission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the \"Software\"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:\n\nThe above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.\n\nTHE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE."

] |

[

"TAGS\n#transformers #pytorch #electra #pretraining #commoncrawl #uncased #umlaute #umlauts #german #deutsch #de #license-mit #endpoints_compatible #region-us \n",

"# German Electra Uncased\n<img width=\"300px\" src=\"URL\n[¹]",

"## Version 2 Release\nWe released an improved version of this model. Version 1 was trained for 766,000 steps. For this new version we continued the training for an additional 734,000 steps. It therefore follows that version 2 was trained on a total of 1,500,000 steps. See \"Evaluation of Version 2: GermEval18 Coarse\" below for details.",

"## Model Info\nThis Model is suitable for training on many downstream tasks in German (Q&A, Sentiment Analysis, etc.).\n\nIt can be used as a drop-in replacement for BERT in most down-stream tasks (ELECTRA is even implemented as an extended BERT Class).\n\nAt the time of release (August 2020) this model is the best performing publicly available German NLP model on various German evaluation metrics (CONLL03-DE, GermEval18 Coarse, GermEval18 Fine). For GermEval18 Coarse results see below. More will be published soon.",

"## Installation\nThis model has the special feature that it is uncased but does not strip accents.\nThis possibility was added by us with PR #6280.\nTo use it you have to use Transformers version 3.1.0 or newer.",

"## Uncase and Umlauts ('Ö', 'Ä', 'Ü')\nThis model is uncased. This helps especially for domains where colloquial terms with uncorrect capitalization is often used.\n\nThe special characters 'ö', 'ü', 'ä' are included through the 'strip_accent=False' option, as this leads to an improved precision.",

"## Creators\nThis model was trained and open sourced in conjunction with the German NLP Group in equal parts by:\n- Philip May - Deutsche Telekom\n- Philipp Reißel - ambeRoad",

"## Evaluation of Version 2: GermEval18 Coarse\nWe evaluated all language models on GermEval18 with the F1 macro score. For each model we did an extensive automated hyperparameter search. With the best hyperparmeters we did fit the moodel multiple times on GermEval18. This is done to cancel random effects and get results of statistical relevance.\n\n!GermEval18 Coarse Model Evaluation for Version 2",

"## Checkpoint evaluation\nSince it it not guaranteed that the last checkpoint is the best, we evaluated the checkpoints on GermEval18. We found that the last checkpoint is indeed the best. The training was stable and did not overfit the text corpus.",

"## Pre-training details",

"### Data\n- Cleaned Common Crawl Corpus 2019-09 German: CC_net (Only head coprus and filtered for language_score > 0.98) - 62 GB\n- German Wikipedia Article Pages Dump (20200701) - 5.5 GB\n- German Wikipedia Talk Pages Dump (20200620) - 1.1 GB\n- Subtitles - 823 MB\n- News 2018 - 4.1 GB\n\nThe sentences were split with SojaMo. We took the German Wikipedia Article Pages Dump 3x to oversample. This approach was also used in a similar way in GPT-3 (Table 2.2).\n\nMore Details can be found here Preperaing Datasets for German Electra Github",

"### Electra Branch no_strip_accents\nBecause we do not want to stip accents in our training data we made a change to Electra and used this repo Electra no_strip_accents (branch 'no_strip_accents'). Then created the tf dataset with:",

"### The training\nThe training itself can be performed with the Original Electra Repo (No special case for this needed).\nWe run it with the following Config:\n\n<details>\n<summary>The exact Training Config</summary>\n<br/>debug False\n<br/>disallow_correct False\n<br/>disc_weight 50.0\n<br/>do_eval False\n<br/>do_lower_case True\n<br/>do_train True\n<br/>electra_objective True\n<br/>embedding_size 768\n<br/>eval_batch_size 128\n<br/>gcp_project None\n<br/>gen_weight 1.0\n<br/>generator_hidden_size 0.33333\n<br/>generator_layers 1.0\n<br/>iterations_per_loop 200\n<br/>keep_checkpoint_max 0\n<br/>learning_rate 0.0002\n<br/>lr_decay_power 1.0\n<br/>mask_prob 0.15\n<br/>max_predictions_per_seq 79\n<br/>max_seq_length 512\n<br/>model_dir gs://XXX\n<br/>model_hparam_overrides {}\n<br/>model_name 02_Electra_Checkpoints_32k_766k_Combined\n<br/>model_size base\n<br/>num_eval_steps 100\n<br/>num_tpu_cores 8\n<br/>num_train_steps 766000\n<br/>num_warmup_steps 10000\n<br/>pretrain_tfrecords gs://XXX\n<br/>results_pkl gs://XXX\n<br/>results_txt gs://XXX\n<br/>save_checkpoints_steps 5000\n<br/>temperature 1.0\n<br/>tpu_job_name None\n<br/>tpu_name electrav5\n<br/>tpu_zone None\n<br/>train_batch_size 256\n<br/>uniform_generator False\n<br/>untied_generator True\n<br/>untied_generator_embeddings False\n<br/>use_tpu True\n<br/>vocab_file gs://XXX\n<br/>vocab_size 32767\n<br/>weight_decay_rate 0.01\n </details>\n\n!Training Loss\n\nPlease Note: *Due to the GAN like strucutre of Electra the loss is not that meaningful*\n\nIt took about 7 Days on a preemtible TPU V3-8. In total, the Model went through approximately 10 Epochs. For an automatically recreation of a cancelled TPUs we used tpunicorn. The total cost of training summed up to about 450 $ for one run. The Data-pre processing and Vocab Creation needed approximately 500-1000 CPU hours. 
Servers were fully provided by T-Systems on site services GmbH, ambeRoad.\nSpecial thanks to Stefan Schweter for your feedback and providing parts of the text corpus.\n\n[¹]: Source for the picture Pinterest",

"### Negative Results\nWe tried the following approaches which we found had no positive influence:\n\n- Increased Vocab Size: Leads to more parameters and thus reduced examples/sec while no visible Performance gains were measured\n- Decreased Batch-Size: The original Electra was trained with a Batch Size per TPU Core of 16 whereas this Model was trained with 32 BS / TPU Core. We found out that 32 BS leads to better results when you compare metrics over computation time",

"## License - The MIT License\nCopyright 2020-2021 Philip May<br>\nCopyright 2020-2021 Philipp Reissel\n\nPermission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the \"Software\"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:\n\nThe above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.\n\nTHE SOFTWARE IS PROVIDED \"AS IS\", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE."

] |

fill-mask

|

transformers

|

# SlovakBERT (base-sized model)

SlovakBERT is a model pretrained on the Slovak language using a masked language modeling (MLM) objective. The model is case-sensitive: it distinguishes between slovensko and Slovensko.

## Intended uses & limitations

You can use the raw model for masked language modeling, but it's mostly intended to be fine-tuned on a downstream task.

**IMPORTANT**: The model was not trained on the “ and ” (curly quotation mark) characters, so before tokenizing the text it is advisable to replace every “ and ” with a straight double quotation mark (").
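The quote replacement advised above can be sketched as a small pre-tokenization helper. This is only an illustration: the function name `normalize_quotes` is hypothetical and not part of the model or of `transformers`, and it handles only the two characters named in the note.

```python
# Map the two curly double quotes named in the note to the ASCII double quote.
# U+201C is the left curly quote, U+201D is the right curly quote.
QUOTE_TABLE = str.maketrans({"\u201c": '"', "\u201d": '"'})


def normalize_quotes(text: str) -> str:
    """Return `text` with curly double quotes replaced by straight ones."""
    return text.translate(QUOTE_TABLE)


print(normalize_quotes("Povedal: \u201cDobrý deň.\u201d"))
```

The normalized string can then be passed to the tokenizer or to the `fill-mask` pipeline in place of the raw text.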

### How to use

You can use this model directly with a pipeline for masked language modeling:

```python

from transformers import pipeline

unmasker = pipeline('fill-mask', model='gerulata/slovakbert')

unmasker("Deti sa <mask> na ihrisku.")

[{'sequence': 'Deti sa hrali na ihrisku.',

'score': 0.6355380415916443,

'token': 5949,

'token_str': ' hrali'},

{'sequence': 'Deti sa hrajú na ihrisku.',

'score': 0.14731724560260773,

'token': 9081,

'token_str': ' hrajú'},

{'sequence': 'Deti sa zahrali na ihrisku.',

'score': 0.05016357824206352,

'token': 32553,

'token_str': ' zahrali'},

{'sequence': 'Deti sa stretli na ihrisku.',

'score': 0.041727423667907715,

'token': 5964,

'token_str': ' stretli'},

{'sequence': 'Deti sa učia na ihrisku.',

'score': 0.01886524073779583,

'token': 18099,

'token_str': ' učia'}]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import RobertaTokenizer, RobertaModel

tokenizer = RobertaTokenizer.from_pretrained('gerulata/slovakbert')

model = RobertaModel.from_pretrained('gerulata/slovakbert')

text = "Text ktorý sa má embedovať."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import RobertaTokenizer, TFRobertaModel

tokenizer = RobertaTokenizer.from_pretrained('gerulata/slovakbert')

model = TFRobertaModel.from_pretrained('gerulata/slovakbert')

text = "Text ktorý sa má embedovať."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

Or extract information from the model like this:

```python

from transformers import pipeline

unmasker = pipeline('fill-mask', model='gerulata/slovakbert')

unmasker("Slovenské národne povstanie sa uskutočnilo v roku <mask>.")

[{'sequence': 'Slovenske narodne povstanie sa uskutočnilo v roku 1944.',

'score': 0.7383289933204651,

'token': 16621,

'token_str': ' 1944'},...]

```

# Training data

The SlovakBERT model was pretrained on these datasets:

- Wikipedia (326MB of text),

- OpenSubtitles (415MB of text),

- Oscar (4.6GB of text),

- Gerulata WebCrawl (12.7GB of text),

- Gerulata Monitoring (214MB of text),

- blbec.online (4.5GB of text)

The text was then processed with the following steps:

- URL and email addresses were replaced with special tokens ("url", "email").

- Elongated punctuation was reduced (e.g. -- to -).

- Markdown syntax was deleted.

- All text content in braces `{.*}` was eliminated to reduce the amount of markup and programming-language text.
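The cleaning steps listed above could look roughly like the following sketch. The regular expressions are assumptions made for illustration, not the patterns the authors used, and the Markdown-removal step is left out because the card does not say how it was done.

```python
import re

# All patterns below are illustrative assumptions, not the authors' originals.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
BRACES_RE = re.compile(r"\{[^{}]*\}")
REPEATED_PUNCT_RE = re.compile(r"([^\w\s])\1+")


def preprocess(text: str) -> str:
    """Apply the URL, email, brace, and punctuation steps to one string."""
    text = URL_RE.sub("url", text)             # URLs -> special token "url"
    text = EMAIL_RE.sub("email", text)         # emails -> special token "email"
    text = BRACES_RE.sub("", text)             # drop content in braces
    text = REPEATED_PUNCT_RE.sub(r"\1", text)  # "--" -> "-", "!!!" -> "!"
    return text


print(preprocess("Pozri https://example.com -- napíš na jan@example.sk {x: 1}!!!"))
```

URLs are replaced before the punctuation step so that characters inside a link are not collapsed first.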

We segmented the resulting corpus into sentences and removed duplicates to get 181.6M unique sentences. In total, the final corpus has 19.35GB of text.
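A minimal sketch of the segmentation and deduplication step follows. The card does not name the sentence segmenter that was used, so the regex-based splitter here is a naive stand-in, and `unique_sentences` is a hypothetical helper name.

```python
import re

# Naive splitter (assumption): break after ., ! or ? followed by whitespace.
SENTENCE_END_RE = re.compile(r"(?<=[.!?])\s+")


def unique_sentences(documents):
    """Split documents into sentences and drop exact duplicates, keeping order."""
    seen = {}
    for document in documents:
        for sentence in SENTENCE_END_RE.split(document.strip()):
            if sentence:
                seen.setdefault(sentence, None)  # dict preserves insertion order
    return list(seen)


print(unique_sentences(["Ahoj svet. Ako sa máš?", "Ahoj svet. Dobre."]))
```

For a corpus of this size the set of seen sentences would not fit comfortably in memory, so a real pipeline would more likely deduplicate on hashes or with an external sort.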

# Pretraining

The model was trained in **fairseq** on 4 x Nvidia A100 GPUs for 300K steps with a batch size of 512 and a sequence length of 512. The optimizer was Adam with a learning rate of 5e-4, \\(\beta_{1} = 0.9\\), \\(\beta_{2} = 0.98\\) and \\(\epsilon = 1e-6\\), a weight decay of 0.01, a dropout rate of 0.1, learning rate warmup for 10k steps, and linear decay of the learning rate afterwards. We used 16-bit float precision.
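The learning rate schedule described above can be sketched as a plain function of the step number. Two details are assumptions, since the card does not state them: the warmup is taken to rise linearly from zero, and the decay is taken to reach zero at the final step (300K). This is not the fairseq implementation.

```python
PEAK_LR = 5e-4          # learning rate stated in the card
WARMUP_STEPS = 10_000   # warmup length stated in the card
TOTAL_STEPS = 300_000   # total training steps stated in the card


def learning_rate(step: int, end_lr: float = 0.0) -> float:
    """Linear warmup to PEAK_LR, then linear decay to `end_lr` (assumed 0)."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    # Fraction of the decay phase that is still ahead, clipped at 0.
    remaining = max((TOTAL_STEPS - step) / (TOTAL_STEPS - WARMUP_STEPS), 0.0)
    return end_lr + (PEAK_LR - end_lr) * remaining


for step in (0, 5_000, 10_000, 155_000, 300_000):
    print(step, learning_rate(step))
```

Under these assumptions the rate peaks at step 10,000 and is back to half of the peak at step 155,000, the midpoint of the decay phase.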

## About us

<a href="https://www.gerulata.com/">

<img width="300px" src="https://www.gerulata.com/assets/images/Logo_Blue.svg">

</a>

Gerulata Technologies is a tech company on a mission to provide tools for fighting disinformation and hostile propaganda.

At Gerulata, we focus on providing state-of-the-art AI-powered tools that empower human analysts and provide them with the ability to make informed decisions.

Our tools allow for the monitoring and analysis of online activity, as well as the detection and tracking of disinformation and hostile propaganda campaigns. With our products, our clients are better equipped to identify and respond to threats in real-time.

### BibTeX entry and citation info

If you find our resource or paper useful, please consider including the following citation in your paper.

- https://arxiv.org/abs/2109.15254

```

@misc{pikuliak2021slovakbert,

title={SlovakBERT: Slovak Masked Language Model},

author={Matúš Pikuliak and Štefan Grivalský and Martin Konôpka and Miroslav Blšták and Martin Tamajka and Viktor Bachratý and Marián Šimko and Pavol Balážik and Michal Trnka and Filip Uhlárik},

year={2021},

eprint={2109.15254},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

{"language": "sk", "license": "mit", "tags": ["SlovakBERT"], "datasets": ["wikipedia", "opensubtitles", "oscar", "gerulatawebcrawl", "gerulatamonitoring", "blbec.online"]}

|

gerulata/slovakbert

| null |

[

"transformers",

"pytorch",

"tf",

"safetensors",

"roberta",

"fill-mask",

"SlovakBERT",

"sk",

"dataset:wikipedia",

"dataset:opensubtitles",

"dataset:oscar",

"dataset:gerulatawebcrawl",

"dataset:gerulatamonitoring",

"dataset:blbec.online",

"arxiv:2109.15254",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"has_space",

"region:us"

] | null |

2022-03-02T23:29:05+00:00

|

[

"2109.15254"

] |

[

"sk"

] |

TAGS

#transformers #pytorch #tf #safetensors #roberta #fill-mask #SlovakBERT #sk #dataset-wikipedia #dataset-opensubtitles #dataset-oscar #dataset-gerulatawebcrawl #dataset-gerulatamonitoring #dataset-blbec.online #arxiv-2109.15254 #license-mit #autotrain_compatible #endpoints_compatible #has_space #region-us

|

# SlovakBERT (base-sized model)

SlovakBERT is a model pretrained on the Slovak language using a masked language modeling (MLM) objective. The model is case-sensitive: it distinguishes between slovensko and Slovensko.

## Intended uses & limitations

You can use the raw model for masked language modeling, but it's mostly intended to be fine-tuned on a downstream task.

IMPORTANT: The model was not trained on the “ and ” (curly quotation mark) characters, so before tokenizing the text it is advisable to replace every “ and ” with a straight double quotation mark (").

### How to use

You can use this model directly with a pipeline for masked language modeling:

Here is how to use this model to get the features of a given text in PyTorch:

and in TensorFlow:

Or extract information from the model like this:

# Training data

The SlovakBERT model was pretrained on these datasets:

- Wikipedia (326MB of text),

- OpenSubtitles (415MB of text),

- Oscar (4.6GB of text),

- Gerulata WebCrawl (12.7GB of text),

- Gerulata Monitoring (214MB of text),

- URL (4.5GB of text)

The text was then processed with the following steps:

- URL and email addresses were replaced with special tokens ("url", "email").

- Elongated punctuation was reduced (e.g. -- to -).

- Markdown syntax was deleted.

- All text content in braces `{.*}` was eliminated to reduce the amount of markup and programming-language text.

We segmented the resulting corpus into sentences and removed duplicates to get 181.6M unique sentences. In total, the final corpus has 19.35GB of text.

# Pretraining

The model was trained in fairseq on 4 x Nvidia A100 GPUs for 300K steps with a batch size of 512 and a sequence length of 512. The optimizer was Adam with a learning rate of 5e-4, \\(\beta_{1} = 0.9\\), \\(\beta_{2} = 0.98\\) and \\(\epsilon = 1e-6\\), a weight decay of 0.01, a dropout rate of 0.1, learning rate warmup for 10k steps, and linear decay of the learning rate afterwards. We used 16-bit float precision.

## About us


Gerulata Technologies is a tech company on a mission to provide tools for fighting disinformation and hostile propaganda.

At Gerulata, we focus on providing state-of-the-art AI-powered tools that empower human analysts and provide them with the ability to make informed decisions.

Our tools allow for the monitoring and analysis of online activity, as well as the detection and tracking of disinformation and hostile propaganda campaigns. With our products, our clients are better equipped to identify and respond to threats in real-time.

### BibTeX entry and citation info

If you find our resource or paper useful, please consider including the following citation in your paper.

- URL

|

[

"# SlovakBERT (base-sized model)\nSlovakBERT pretrained model on Slovak language using a masked language modeling (MLM) objective. This model is case-sensitive: it makes a difference between slovensko and Slovensko.",

"## Intended uses & limitations\nYou can use the raw model for masked language modeling, but it's mostly intended to be fine-tuned on a downstream task.\nIMPORTANT: The model was not trained on the “ and ” (direct quote) character -> so before tokenizing the text, it is advised to replace all “ and ” (direct quote marks) with a single \"(double quote marks).",

"### How to use\nYou can use this model directly with a pipeline for masked language modeling:\n\n\n\nHere is how to use this model to get the features of a given text in PyTorch:\n\nand in TensorFlow:\n\nOr extract information from the model like this:",