| Serial Number | Issue Number | Title | Labels | Body | Comments |

|---|---|---|---|---|---|

5,601 | 78,413 |

torch.angle differs from np.angle for -0.

|

triaged, module: numpy, module: primTorch

|

### 🐛 Describe the bug

Discovered in https://github.com/pytorch/pytorch/pull/78349

```python
>>> import torch
>>> import numpy as np
>>> t = torch.tensor(-0.)
>>> torch.angle(t)
tensor(0.)
>>> np.angle(t.numpy())
3.141592653589793
```
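The difference comes down to how the sign bit of zero is treated. `np.angle` computes `arctan2(imag, real)`, and for a real input the imaginary part is 0, so `-0.` gives `atan2(0, -0.) = pi`. If `torch.angle` instead classifies real inputs with a `< 0` comparison (an assumption; we have not checked the kernel), then `-0.` is not less than zero and maps to 0. The pure-Python sketch below contrasts the two conventions, plus a sign-bit-aware comparison that would match NumPy. All function names are hypothetical.

```python
import math


def angle_atan2(x: float) -> float:
    # NumPy-style convention for real input: angle(x) = atan2(0, x).
    # atan2 honors the sign of zero, so atan2(0.0, -0.0) == pi.
    return math.atan2(0.0, x)


def angle_compare(x: float) -> float:
    # Comparison-based convention (assumed for torch's real-input path):
    # -0.0 < 0 is False, so -0.0 maps to 0.
    if math.isnan(x):
        return x
    return math.pi if x < 0 else 0.0


def angle_signbit(x: float) -> float:
    # Checks the sign bit instead of comparing, so -0.0 maps to pi like atan2.
    if math.isnan(x):
        return x
    return math.pi if math.copysign(1.0, x) < 0 else 0.0
```

The three agree on every input except `-0.0`, which is exactly the case in this issue.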

cc: @mruberry

### Versions

master

cc @mruberry @rgommers @ezyang @ngimel

| 2 |

5,602 | 93,756 |

Torchdynamo for Deepspeed and FSDP

|

triaged, enhancement, module: fsdp, oncall: pt2

|

At AWS we are working with customers to enable training of very large transformer models on EC2. We are also applying compiler techniques to optimize PyTorch workloads, given PyTorch's wide adoption. For example, we recently open-sourced [RAF](https://github.com/awslabs/raf), a deep learning compiler for training that shows promising training speedups on a set of models, and we verified that it works with TorchDynamo. However, we see a gap when converting PyTorch programs to the IR we use, especially for complex strategies such as distributed training.

One example is [DeepSpeed](https://github.com/microsoft/DeepSpeed). It implements data parallelism (ZeRO) on top of PyTorch, sharding optimizer states, gradients, and parameters (FSDP is another approach to ZeRO). The idea itself is straightforward, but converting the DeepSpeed implementation to RAF via lazy tensor does not work well. For instance, the [NaN check for gradients](https://github.com/microsoft/DeepSpeed/blob/master/deepspeed/runtime/zero/stage3.py#L2461) breaks the graph and degrades performance, and CUDA stream usage is not captured.

As I understand it, those issues could potentially be resolved with TorchDynamo, since it can extract program structure and call information from Python bytecode, operating at a higher level than the lazy tensor tracing mechanism.

So we'd like suggestions from the TorchDynamo community: what do you think about supporting such scenarios in TorchDynamo? Specifically, 1) how should TorchDynamo support ZeRO tracing? 2) is this likely to land in TorchDynamo soon, or later? Given our current roadmap and goals, we at AWS would be interested in collaborating in this direction if possible.
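To make the ZeRO sharding mentioned above concrete, here is a toy sketch of the core partitioning idea: flatten the parameters, pad to a multiple of the world size, and give each rank one contiguous shard. It is not DeepSpeed's or FSDP's code; the helper names are hypothetical, and the all-gather is simulated by concatenation rather than a real collective.

```python
from typing import List


def shard_flat_params(flat: List[float], world_size: int) -> List[List[float]]:
    """Split a flattened parameter buffer into equal per-rank shards.

    The buffer is zero-padded so its length divides evenly by world_size,
    mirroring the idea (not the exact code) of ZeRO-style partitioning.
    """
    if world_size <= 0:
        raise ValueError("world_size must be positive")
    shard_len = -(-len(flat) // world_size)  # ceiling division
    padded = flat + [0.0] * (shard_len * world_size - len(flat))
    return [padded[r * shard_len:(r + 1) * shard_len] for r in range(world_size)]


def all_gather(shards: List[List[float]]) -> List[float]:
    # Stand-in for a collective: every rank would receive all shards.
    gathered: List[float] = []
    for shard in shards:
        gathered.extend(shard)
    return gathered
```

Each rank would keep optimizer state only for its own shard and gather full parameters just before they are needed; capturing that gather/compute pattern in a graph is the tracing problem this issue raises.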

cc @zhaojuanmao @mrshenli @rohan-varma @awgu @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @comaniac @szhengac @yidawang @mli @hzfan @zachzzc

| 2 |

5,603 | 78,367 |

Split up and reorganize RPC tests

|

oncall: distributed, triaged, better-engineering, module: rpc

|

## Issue description

Right now all RPC tests are in https://github.com/pytorch/pytorch/blob/master/torch/testing/_internal/distributed/rpc/rpc_test.py, a 6500+ line file containing the test classes `CudaRpcTest`, `FaultyAgentRpcTest`, `RpcTest`, `RpcTestCommon`, `TensorPipeAgentRpcTest`, and `TensorPipeAgentCudaRpcTest`. These classes are then imported by various files such as https://github.com/pytorch/pytorch/blob/master/torch/testing/_internal/distributed/rpc_utils.py. It would be helpful to split these tests up so it is easier to know which suite you are adding to. (I ran into this when I accidentally added a test to `RpcTest` and it began to run internally, which I did not want.)
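The pitfall above comes from shared test classes being mixed into several concrete suites. The sketch below illustrates the mechanism with plain `unittest`. The class names `SharedRpcTests`, `AgentATests`, and `AgentBTests` are hypothetical stand-ins, not the real RPC test classes. A test added to the shared class is silently collected by every suite that mixes it in.

```python
import unittest


class SharedRpcTests:
    """Hypothetical stand-in for a shared suite such as RpcTest.

    It does not inherit from unittest.TestCase, so its tests only run
    when a concrete TestCase mixes it in.
    """

    def test_echo(self):
        self.assertEqual(self.echo("hi"), "hi")


class AgentATests(SharedRpcTests, unittest.TestCase):
    # Hypothetical backend-specific suite #1.
    def echo(self, msg):
        return msg


class AgentBTests(SharedRpcTests, unittest.TestCase):
    # Hypothetical backend-specific suite #2, e.g. one run in another CI config.
    def echo(self, msg):
        return msg


def collected_test_ids():
    """Return 'Class.method' for every test the loader finds in both suites."""
    loader = unittest.TestLoader()
    ids = []
    for cls in (AgentATests, AgentBTests):
        for test in loader.loadTestsFromTestCase(cls):
            ids.append(".".join(test.id().split(".")[-2:]))
    return sorted(ids)
```

One `test_echo` definition produces two collected tests. With a single large file, it is hard to see which concrete suites (and which CI configurations) a new test will land in.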

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @jjlilley @mrzzd

| 2 |

5,604 | 78,346 |

`gradcheck` fails for `torch.distributions.transforms` APIs in forward mode

|

module: distributions, module: autograd, triaged, module: forward ad

|

### 🐛 Describe the bug

`gradcheck` fails for `torch.distributions.transforms` APIs in forward mode when `cache_size` is not 0.

Take `AbsTransform` as an example:

```python
import torch

def get_fn():
    cache_size = 1
    arg_class = torch.distributions.transforms.AbsTransform(cache_size=cache_size)

    def fn(input):
        fn_res = arg_class(input)
        return fn_res

    return fn

fn = get_fn()
input = torch.rand([3], dtype=torch.float64, requires_grad=True)
torch.autograd.gradcheck(fn, (input,), check_forward_ad=True)
# torch.autograd.gradcheck.GradcheckError: Jacobian mismatch for output 0 with respect to input 0,
# numerical:tensor([[0., 0., 0.],
#         [0., 0., 0.],
#         [0., 0., 0.]], dtype=torch.float64)
# analytical:tensor([[1., 0., 0.],
#         [0., 1., 0.],
#         [0., 0., 1.]], dtype=torch.float64)
```

Other APIs such as `SigmoidTransform`, `TanhTransform`, and `SoftmaxTransform` have the same issue.
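As we read the source, a transform created with `cache_size=1` remembers its last `(x, y)` pair and returns the cached `y` whenever it is called again with the *same object* `x`. Finite-difference checkers typically perturb the input in place, so one plausible explanation for the all-zero numerical Jacobian is that every perturbed evaluation hits the stale cache. We have not traced `gradcheck`'s internals to confirm this. The toy sketch below (hypothetical class and helper, lists instead of tensors) shows how an identity-keyed cache defeats an in-place central difference.

```python
class IdentityCachedAbs:
    """Toy |x| transform caching its last (x, y) pair by object identity.

    Loosely modeled on cache_size=1; an assumption, not the torch code.
    """

    def __init__(self):
        self._cached = None  # (x, y) pair

    def __call__(self, x):
        if self._cached is not None and self._cached[0] is x:
            return self._cached[1]  # stale if x was mutated in place
        y = [abs(v) for v in x]
        self._cached = (x, y)
        return y


def central_difference(fn, x, i, eps=1e-6):
    """Column i of a numerical Jacobian, perturbing x[i] in place."""
    orig = x[i]
    x[i] = orig + eps
    plus = list(fn(x))
    x[i] = orig - eps
    minus = list(fn(x))
    x[i] = orig  # restore the input
    return [(p - m) / (2 * eps) for p, m in zip(plus, minus)]
```

With the cached transform both evaluations return the same list, so the column is all zeros; an uncached `abs` gives the expected `[1, 0, 0]`, matching the "analytical" column in the report.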

### Versions

pytorch: 1.11.0

cc @fritzo @neerajprad @alicanb @nikitaved @ezyang @albanD @zou3519 @gqchen @pearu @soulitzer @Lezcano @Varal7

| 0 |

5,605 | 78,332 |

TRACK: integral + floating inputs to an op with floating requiring grad result in INTERNAL_ASSERT

|

module: autograd, triaged, actionable

|

### 🐛 Describe the bug

This issue tracks ops for which integral + floating inputs, with the floating input requiring grad, result in an INTERNAL_ASSERT failure:

- tensordot #77517

- addmv #77814

- mv #77814

- bilinear #78087

- matmul #78141

- mm #78141

- baddbmm #78143

- index_fill #78443

- layer_norm #78444
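Until the listed ops are fixed, one possible user-side workaround is to promote all inputs to a common floating dtype before calling the op. This is our assumption, not an official recommendation. The sketch uses `torch.promote_types` to find that dtype; `call_with_common_dtype` is a hypothetical helper name.

```python
import functools

import torch


def call_with_common_dtype(op, *tensors):
    """Cast every tensor argument to their promoted dtype, then call op.

    E.g. int64 + float64 promotes to float64, so the op never sees mixed
    integral/floating inputs. Casting a tensor that already has the target
    dtype returns it unchanged, so gradients still reach the original leaf.
    """
    common = functools.reduce(torch.promote_types, (t.dtype for t in tensors))
    return op(*(t.to(common) for t in tensors))
```

For example, `call_with_common_dtype(torch.mv, int_matrix, float_vector)` runs `torch.mv` on two float tensors, and backward populates `float_vector.grad` as usual.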

### Versions

pytorch: 1.11.0

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 2 |

5,606 | 78,274 |

Memory allocation errors when attempting to initialize a large number of small feed-forward networks in RAM with shared memory despite having enough memory

|

module: memory usage, triaged

|

### 🐛 Describe the bug

Hello,

I am attempting to initialize and allocate space for ~10,000 small MLPs in shared memory in RAM. Here is how the models are created:

```python
import torch
import psutil
import torch.nn as nn

class AntNN(nn.Module):
    # init_type is accepted but not used in this snippet
    def __init__(self, input_dim=60, hidden_size=256, action_dim=8, init_type='xavier_uniform'):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(input_dim, hidden_size),
            nn.Tanh(),
            nn.Linear(hidden_size, hidden_size),
            nn.Tanh(),
            nn.Linear(hidden_size, hidden_size),
            nn.Tanh(),
            nn.Linear(hidden_size, action_dim)
        )

    def forward(self, obs):
        return self.layers(obs)

def model_factory(device, hidden_size=128, init_type='xavier_uniform', share_memory=True):
    model = AntNN(hidden_size=hidden_size, init_type=init_type).to(device)
    if share_memory:
        # moves every parameter's storage into shared memory
        model.share_memory()
    return model

if __name__ == '__main__':
    num_policies = 10000
    mlps = []
    device = torch.device('cpu')
    for _ in range(num_policies):
        mlp = model_factory(device, 128, share_memory=True)
        mlps.append(mlp)
        # index 2 of psutil.virtual_memory() is the percent of RAM in use
        print(f'RAM Memory % used: {psutil.virtual_memory()[2]}')
```

I’m keeping track of the total RAM usage and this is the last statement that printed before the error:

> RAM Memory % used: 56.8

So I clearly have more than enough memory available. Here is the “cannot allocate memory” error:

```
Traceback (most recent call last):
  File "/home/user/map-elites/testing.py", line 164, in <module>
    mlp = model_factory(device, 128, share_memory=True)
  File "/home/user/map-elites/models/ant_model.py", line 11, in model_factory
    model.to(device).share_memory()
  File "/home/user/miniconda3/envs/map-elites/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1805, in share_memory
    return self._apply(lambda t: t.share_memory_())
  File "/home/user/miniconda3/envs/map-elites/lib/python3.8/site-packages/torch/nn/modules/module.py", line 578, in _apply
    module._apply(fn)
  File "/home/user/miniconda3/envs/map-elites/lib/python3.8/site-packages/torch/nn/modules/module.py", line 578, in _apply
    module._apply(fn)
  File "/home/user/miniconda3/envs/map-elites/lib/python3.8/site-packages/torch/nn/modules/module.py", line 601, in _apply
    param_applied = fn(param)
  File "/home/user/miniconda3/envs/map-elites/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1805, in <lambda>
    return self._apply(lambda t: t.share_memory_())
  File "/home/user/miniconda3/envs/map-elites/lib/python3.8/site-packages/torch/_tensor.py", line 482, in share_memory_
    self.storage().share_memory_()
  File "/home/user/miniconda3/envs/map-elites/lib/python3.8/site-packages/torch/storage.py", line 480, in share_memory_
    self._storage.share_memory_()
  File "/home/user/miniconda3/envs/map-elites/lib/python3.8/site-packages/torch/storage.py", line 160, in share_memory_
    self._share_filename_()
RuntimeError: unable to mmap 65600 bytes from file </torch_6936_3098808190_62696>: Cannot allocate memory (12)

Process finished with exit code 1
```

With `share_memory=False` this works fine, but for my application it is critical that these tensors live in shared memory because they are modified by different processes. Is this a bug or a fundamental limitation of how shared memory works in PyTorch? Is there any way around this problem?

Also, I noticed that PyTorch's default sharing strategy, `file_descriptor`, opens a lot of file descriptors, and that I might be reaching my system's soft (or hard) limit on open files. So I increased that limit from 1024 to 1,000,000 and was able to get to

> Num mlps: 6966 / 10,000
> RAM Memory % used: 62.0

before running into the mmap error. I tried various values for the maximum number of open file descriptors allowed by the system and couldn't get past that number, so I don't think the number of allowed file descriptors is the bottleneck anymore. I also tried

`torch.multiprocessing.set_sharing_strategy('file_system')`

since it seems to keep track of fewer file descriptors per tensor, but this didn't help either.
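One plausible explanation, not confirmed in this issue, is Linux's per-process limit on memory mappings, `vm.max_map_count` (65530 by default on many systems), rather than RAM. `mmap` fails with `ENOMEM` (errno 12, the code in the error message) when that limit would be exceeded. The traceback shows `share_memory_()` being applied to each parameter's storage separately, so each model adds roughly one mapping per parameter. The arithmetic below counts them for the model in this report (four `nn.Linear` layers, each with a weight and a bias). Both helper names are hypothetical.

```python
from typing import Optional


def shared_param_tensors(num_models: int, linear_layers_per_model: int = 4) -> int:
    # Each nn.Linear owns two parameters (weight and bias); we assume each
    # parameter storage gets its own shared-memory mapping.
    return num_models * linear_layers_per_model * 2


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> Optional[int]:
    # Linux-only; returns None where the file is missing or unreadable.
    try:
        with open(path) as f:
            return int(f.read().strip())
    except (OSError, ValueError):
        return None
```

At the 6,966 models reached above that is already 55,728 parameter mappings, before counting the mappings the interpreter and the CUDA libraries already hold, which is close to the default limit. If this is the cause, raising `vm.max_map_count` with `sysctl`, or packing many parameters into fewer shared storages, would be the directions to investigate.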

### Versions

Collecting environment information...

PyTorch version: 1.11.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.5 | packaged by conda-forge | (default, Jul 31 2020, 02:39:48) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.13.0-44-generic-x86_64-with-glibc2.10

Is CUDA available: True

CUDA runtime version: 10.1.243

GPU models and configuration: GPU 0: NVIDIA GeForce GTX 1080

Nvidia driver version: 510.47.03

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl conda-forge

[conda] cudatoolkit 10.2.89 hfd86e86_1

[conda] libblas 3.8.0 16_mkl conda-forge

[conda] libcblas 3.8.0 16_mkl conda-forge

[conda] liblapack 3.8.0 16_mkl conda-forge

[conda] liblapacke 3.8.0 16_mkl conda-forge

[conda] mkl 2020.1 217

[conda] mkl-service 2.3.0 py38he904b0f_0

[conda] mkl_fft 1.1.0 py38h23d657b_0

[conda] mkl_random 1.1.1 py38h0573a6f_0

[conda] numpy 1.22.3 pypi_0 pypi

[conda] torch 1.11.0 pypi_0 pypi

[conda] torchvision 0.12.0 pypi_0 pypi

| 4 |

5,607 | 78,262 |

Request: support training on sparse tensors

|

module: sparse, triaged

|

### 🚀 The feature, motivation and pitch

I'm working with tensors in which most cells are 0 and a few are 1, for example 100 ones among `50^3` (125,000) cells.
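For scale, a tensor like this is extremely sparse. The arithmetic below compares dense storage with COO storage, assuming float32 values and the int64 indices that PyTorch's COO layout uses. It quantifies the memory argument only; it does not by itself make dense layers accept sparse inputs. The helper names are hypothetical.

```python
import math
from typing import Sequence


def dense_bytes(shape: Sequence[int], value_bytes: int = 4) -> int:
    # Every cell is stored, zero or not.
    return math.prod(shape) * value_bytes


def coo_bytes(nnz: int, ndim: int, value_bytes: int = 4, index_bytes: int = 8) -> int:
    # COO keeps an (ndim x nnz) index matrix plus one value per nonzero.
    return ndim * nnz * index_bytes + nnz * value_bytes


def density(nnz: int, shape: Sequence[int]) -> float:
    return nnz / math.prod(shape)
```

For a 50×50×50 tensor with 100 nonzeros this gives 500,000 dense bytes versus 2,800 COO bytes (a density of 0.08%), roughly 180 times smaller.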

I tried using [`Tensor.to_sparse`](https://pytorch.org/docs/stable/generated/torch.Tensor.to_sparse.html#torch-tensor-to-sparse) to convert my tensors to sparse ones.

The problem is that I can't pass them through a neural network for training; I get the error `RuntimeError: Input type (torch.cuda.sparse.FloatTensor) and weight type (torch.cuda.FloatTensor) should be the same`.

It would be great if training on sparse tensors were supported too.

Thank you

### Alternatives

_No response_

### Additional context

_No response_

cc @nikitaved @pearu @cpuhrsch @amjames

| 3 |

5,608 | 78,261 |

pytorch-android-lite uses its own libfbjni.so, which is not compatible with any other version

|

oncall: binaries, triaged, module: android

|

### 🐛 Describe the bug

I wanted to use React Native and PyTorch Lite together in one project.

I have already spent two full working days on this, only to find the root cause (well, there are two).

The first problem is the strange "0.0.3" version of fbjni, which never existed (and never should have). If you check the fbjni releases, you will see there was never a 0.0.3 release. Yet someone uploaded it to Maven, and enough packages reference it.

0.2.2 should be the version with which React Native >= 0.65 and PyTorch (Lite) work together, if PyTorch Lite didn't modify the library itself and thereby break everything else: you cannot tell Gradle which .so it should use, only `pickFirst` (really, Android Gradle?).

To reproduce, create a small demo PyTorch Android Lite project and change its dependencies in `build.gradle` to:

```groovy
implementation 'com.facebook.fbjni:fbjni:0.2.2' // not java-only, really this one

implementation('org.pytorch:pytorch_android_lite:1.12') {
    exclude module: 'fbjni'
    exclude module: 'fbjni-java-only'
    exclude module: 'soloader'
    exclude module: 'nativeloader'
}
```

Also, don't forget to tell Gradle which .so to pick:

```groovy
android {
    packagingOptions {
        pickFirst "**/*.so"
    }
}
```

Now start the demo project, and it crashes with:

```
cannot locate symbol "_Unwind_Resume" referenced by "/data/app/com.planradar.demoML-IK4F5CYrCuaaR7Csu8YHzQ==/lib/arm64/libpytorch_jni_lite.so"
```

Then remove the `exclude` lines and the fbjni 0.2.2 dependency, rebuild the app, and it works again.

In short, your dependency declares that it needs fbjni 0.2.2 (which is true), but you modify the library itself, which results in a larger .so file.

The problem is that when React Native is used alongside, Gradle always picks the "wrong" one, i.e., the 170 KB one. React Native starts fine, but PyTorch crashes.

I replaced the .so file manually (with apktool and re-signing), and then both React Native and PyTorch start fine.
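To check which `libfbjni.so` builds are involved, one can compare the sizes of same-named native libraries across the AARs and the final APK, since both are ordinary zip archives. A minimal sketch using Python's `zipfile` follows. It assumes libraries live under `jni/<abi>/` (AAR) or `lib/<abi>/` (APK) and keys them by `<abi>/<name>` so different ABIs are not compared with each other. `find_size_conflicts` is a hypothetical helper.

```python
import zipfile
from typing import Dict, Iterable, Tuple


def find_size_conflicts(
    archive_paths: Iterable[str],
) -> Dict[str, Dict[Tuple[str, str], int]]:
    """Report '<abi>/<lib>.so' names whose sizes differ between archives.

    Returns {key: {(archive, entry_path): uncompressed_size}} for every
    library name that appears with more than one distinct size.
    """
    sizes: Dict[str, Dict[Tuple[str, str], int]] = {}
    for path in archive_paths:
        with zipfile.ZipFile(path) as zf:
            for info in zf.infolist():
                if not info.filename.endswith(".so"):
                    continue
                key = "/".join(info.filename.split("/")[-2:])  # "<abi>/<lib>.so"
                sizes.setdefault(key, {})[(path, info.filename)] = info.file_size
    return {k: v for k, v in sizes.items() if len(set(v.values())) > 1}
```

Pointing it at the fbjni 0.2.2 AAR, the pytorch_android_lite AAR, and the built APK would show which of the differing `libfbjni.so` builds `pickFirst` actually kept.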

### Versions

Collecting environment information...

PyTorch version: N/A

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: version 3.23.1

Libc version: glibc-2.26

Python version: 3.7.5 (default, Feb 23 2021, 13:22:40) [GCC 8.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-74-generic-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.20.3

[conda] Could not collect

PS: I tested with PyTorch Android Lite 1.11 and 1.12.

cc @ezyang @seemethere @malfet

| 1 |

5,609 | 78,260 |

[CI] Detect when tests are no longer running from CI

|

module: ci, triaged

|

### 🐛 Describe the bug

A few days ago (as of 5/25), the macOS CI tests stopped running because a YAML change broke GitHub Actions (GHA) workflow parsing (see https://github.com/pytorch/pytorch/pull/78000).

The scary part is that we only noticed days later; no automated mechanism warned us that the tests were missing. We should have one.

How we could detect this the next time it happens:

1. We could warn when the number of test cases run for a commit drops significantly even though no jobs are pending

2. We could warn when the number of workflow jobs run for a commit drops compared with the previous workflow run
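Both checks above can be sketched as a pure function over two consecutive runs. The job names, the 10% threshold, and the function name are illustrative assumptions, not an existing PyTorch CI tool. The test-count comparison is skipped while jobs are still pending, as the first check requires.

```python
from typing import List, Set


def detect_ci_regressions(
    prev_jobs: Set[str],
    curr_jobs: Set[str],
    prev_test_count: int,
    curr_test_count: int,
    has_pending_jobs: bool,
    max_drop_fraction: float = 0.1,
) -> List[str]:
    """Return human-readable warnings comparing a commit with the previous run."""
    warnings: List[str] = []
    # Check 2: jobs that ran last time but not this time.
    missing = sorted(prev_jobs - curr_jobs)
    if missing:
        warnings.append("jobs missing vs. previous run: " + ", ".join(missing))
    # Check 1: only compare test counts once nothing is pending.
    if not has_pending_jobs and prev_test_count > 0:
        drop = (prev_test_count - curr_test_count) / prev_test_count
        if drop > max_drop_fraction:
            warnings.append(
                f"test count dropped {drop:.0%} ({prev_test_count} -> {curr_test_count})"
            )
    return warnings
```

Any non-empty result could then be routed to whichever alerting channel is chosen below.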

How should we warn?

- Send an email to OSS CI oncall

- Auto-create an issue tagged `module: ci` and `high-priority`

- Configure an alert that looks different from our other alerts

### Versions

CI

cc @seemethere @malfet @pytorch/pytorch-dev-infra

| 0 |

5,610 | 78,255 |

Floating point exception in _conv_depthwise2d

|

triaged, module: edge cases

|

### 🐛 Describe the bug

Floating point exception in `_conv_depthwise2d`.

### Example to reproduce

```python

import torch

torch.cuda.init()

gpu_dev = torch.device('cuda')

tensor_0 = torch.full((4, 1, 3, 3,), 1, dtype=torch.float64, requires_grad=False, device=gpu_dev)

tensor_1 = torch.full((4, 1, 3, 3,), 0.5, dtype=torch.float64, requires_grad=False, device=gpu_dev)

intarrayref_2 = [1, 1]

tensor_3 = torch.full((4,), 1, dtype=torch.float64, requires_grad=False, device=gpu_dev)

intarrayref_4 = [0, 0]

intarrayref_5 = [0, 0]

intarrayref_6 = [0, 0]

torch._C._nn._conv_depthwise2d(tensor_0, tensor_1, intarrayref_2, tensor_3, intarrayref_4, intarrayref_5, intarrayref_6)

```

### Result

```floating point exception```

### Expected behavior

Graceful termination, e.g. a `RuntimeError` being raised, instead of a crash.
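Reading the private op's positional arguments as `(input, weight, kernel_size, bias, stride, padding, dilation)` (our assumption from its signature), the repro passes `stride=[0, 0]` and `dilation=[0, 0]`. A zero stride makes the output-size computation divide by zero, and integer division by zero in C++ typically raises SIGFPE on x86, which would explain the crash. The sketch below shows the standard output-size formula with the kind of up-front argument check that would turn this into a catchable error; the helper names are hypothetical.

```python
from typing import Sequence, Tuple


def conv_out_size(in_size: int, kernel: int, stride: int, padding: int, dilation: int) -> int:
    """floor((in + 2*pad - dil*(k-1) - 1) / stride) + 1, with argument checks."""
    if stride <= 0:
        raise ValueError(f"stride must be positive, got {stride}")
    if dilation <= 0:
        raise ValueError(f"dilation must be positive, got {dilation}")
    if padding < 0:
        raise ValueError(f"padding must be non-negative, got {padding}")
    effective_kernel = dilation * (kernel - 1) + 1
    span = in_size + 2 * padding - effective_kernel
    if span < 0:
        raise ValueError("input is smaller than the (dilated) kernel")
    return span // stride + 1


def conv2d_out_hw(
    input_hw: Sequence[int],
    kernel_size: Sequence[int],
    stride: Sequence[int],
    padding: Sequence[int],
    dilation: Sequence[int],
) -> Tuple[int, ...]:
    # Apply the 1-D formula independently to height and width.
    return tuple(
        conv_out_size(*args) for args in zip(input_hw, kernel_size, stride, padding, dilation)
    )
```

With the repro's arguments (`input_hw=(3, 3)`, `kernel_size=(1, 1)`, zero stride), this raises `ValueError` instead of dividing by zero.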

### Note

This bug was discovered using IvySyn, a fuzz testing tool which is currently being developed at Secure Systems Labs at Brown University.

### Versions

PyTorch version: 1.11.0a0+git1efeb37

Is debug build: False

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: 4.3.21300-5bbc51d8

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: Could not collect

CMake version: version 3.22.1

Libc version: glibc-2.31

Python version: 3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-14-amd64-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: Lexa XT [Radeon PRO WX 3100]

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: 40321.30

MIOpen runtime version: 2.12.0

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0a0+gitfdec0bf

[conda] mkl 2022.0.1 h06a4308_117

[conda] mkl-include 2022.0.1 h06a4308_117

[conda] numpy 1.22.3 pypi_0 pypi

[conda] torch 1.11.0a0+gitcb6fe03 pypi_0 pypi

| 1 |

5,611 | 78,253 |

Any plan to add Noam scheduling?

|

triaged, module: LrScheduler

|

### 🚀 The feature, motivation and pitch

I have found Noam scheduling (from the "Attention Is All You Need" paper) important for training transformers.

But it is not included in PyTorch.

Do you plan to add it, or would you like someone else to contribute it?
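For reference, the paper's schedule is `lrate = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5)`: linear warmup for `warmup_steps`, then inverse-square-root decay, peaking at `step == warmup_steps`. A minimal sketch follows. The defaults `d_model=512` and `warmup_steps=4000` are the paper's base-model values, and the `factor` multiplier is an optional extra.

```python
def noam_lr(step: int, d_model: int = 512, warmup_steps: int = 4000, factor: float = 1.0) -> float:
    """Noam learning rate for a 1-based optimizer step.

    Step 0 is clamped to 1 so the first call does not evaluate 0 ** -0.5.
    """
    step = max(step, 1)
    return factor * d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)
```

Without a built-in scheduler, one way to use it today is `torch.optim.lr_scheduler.LambdaLR`, which multiplies each parameter group's initial learning rate by `lr_lambda(count)`. Setting the optimizer's `lr=1.0` and passing `lambda s: noam_lr(s)`, with `scheduler.step()` called once per batch, would reproduce the schedule.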

### Alternatives

_No response_

### Additional context

_No response_

| 2 |

5,612 | 78,249 |

`max_unpool2d` is not deterministic

|

module: numerical-stability, module: nn, triaged, module: determinism, module: pooling

|

### 🐛 Describe the bug

`max_unpool2d` is not deterministic

```python
import torch

def test():
    kernel_size = 2
    stride = 2
    unpool = torch.nn.MaxUnpool2d(kernel_size, stride=stride)
    input0_tensor = torch.rand([1, 1, 2, 2], dtype=torch.float32)
    input1_tensor = torch.randint(-2, 8, [1, 1, 2, 2], dtype=torch.int64)

    input0 = input0_tensor.clone()
    input1 = input1_tensor.clone()
    try:
        res1 = unpool(input0, input1)
    except Exception:
        res1 = torch.tensor(0)

    input0 = input0_tensor.clone()
    input1 = input1_tensor.clone()
    try:
        res2 = unpool(input0, input1)
    except Exception:
        res2 = torch.tensor(0)

    if not torch.allclose(res1, res2):
        print(input0_tensor)
        print(input1_tensor)
        print(res1)
        print(res2)
        return True
    else:
        return False

for _ in range(1000):
    if test():
        break

# tensor([[[[0.8725, 0.3154],
#           [0.7132, 0.8304]]]])
# tensor([[[[1, 6],
#           [1, 5]]]])
# tensor([[[[0.0000, 0.7132, 0.0000, 0.0000],
#           [0.0000, 0.8304, 0.3154, 0.0000],
#           [0.0000, 0.0000, 0.0000, 0.0000],
#           [0.0000, 0.0000, 0.0000, 0.0000]]]])
# tensor([[[[0.0000, 0.8725, 0.0000, 0.0000],
#           [0.0000, 0.8304, 0.3154, 0.0000],
#           [0.0000, 0.0000, 0.0000, 0.0000],
#           [0.0000, 0.0000, 0.0000, 0.0000]]]])
```
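In the failing case the printed indices are `[[1, 6], [1, 5]]`. Index 1 appears twice, so two input values (0.8725 and 0.7132) target the same output cell, and whichever write lands last wins. The pure-Python sketch below models unpooling as a scatter into the flattened 4×4 output and shows that the result depends on write order when indices collide. It is a toy model, not PyTorch's kernel, and the helper names are hypothetical. Note also that `torch.randint(-2, 8, ...)` can produce negative (invalid) indices, which the repro's `try`/`except` silently turns into `torch.tensor(0)`.

```python
from typing import Iterable, List, Sequence


def scatter_unpool(
    values: Sequence[float], indices: Sequence[int], out_size: int, order: Iterable[int]
) -> List[float]:
    """Write values[i] to out[indices[i]], visiting i in the given order."""
    out = [0.0] * out_size
    for i in order:
        idx = indices[i]
        # Python would silently wrap negative indices, so reject them explicitly.
        if not 0 <= idx < out_size:
            raise IndexError(f"index {idx} out of range for output of size {out_size}")
        out[idx] = values[i]
    return out


def has_duplicate_indices(indices: Sequence[int]) -> bool:
    # Duplicate targets are what make the result order-dependent.
    return len(set(indices)) != len(indices)
```

Visiting the four values forward puts 0.7132 at flat position 1 (the first result above), while visiting them in reverse puts 0.8725 there (the second result). Positions 5 and 6 agree either way.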

### Versions

pytorch: 1.11.0

cc @albanD @mruberry @jbschlosser @walterddr @kshitij12345 @kurtamohler

| 3 |

5,613 | 78,248 |

USE_NATIVE_ARCH flag causes nvcc build failure due to "'arch=native': expected a number"

|

module: build, triaged

|

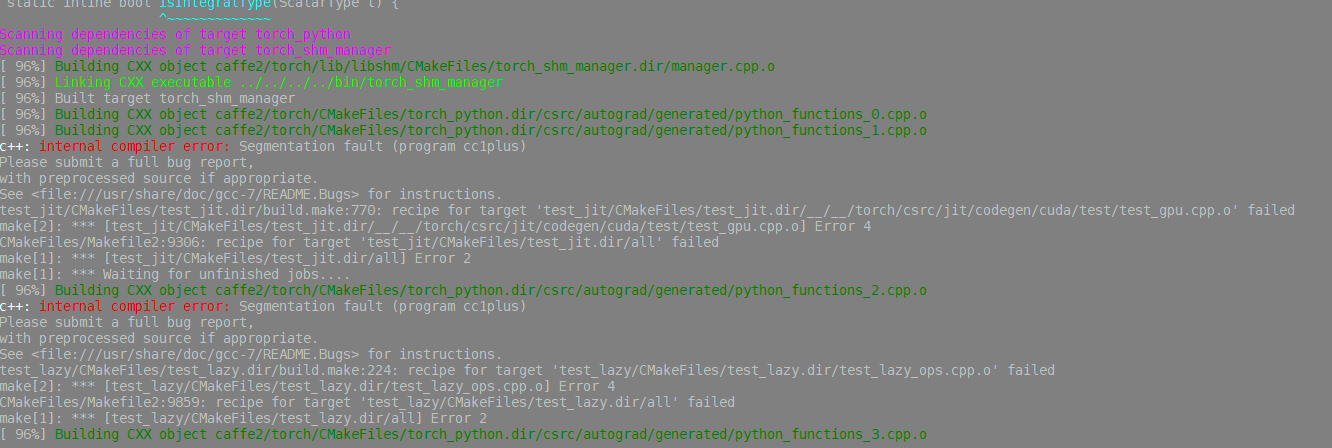

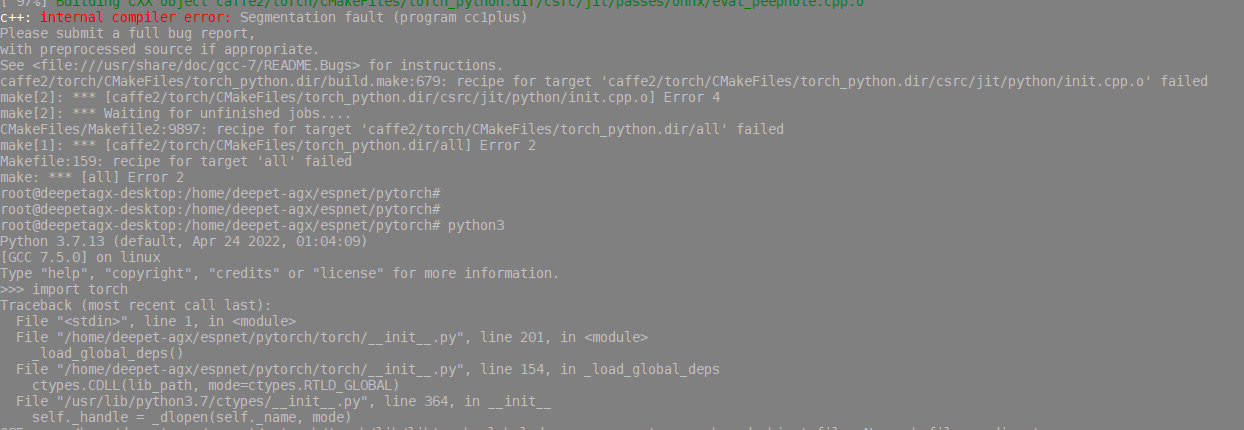

### 🐛 Describe the bug

I'm attempting to build pytorch wheels on a Jetson AGX Xavier DevKit running Ubuntu 18.04.

The script below works fine when the `USE_NATIVE_ARCH` option is not set, and fails when `USE_NATIVE_ARCH=1`.

<details>

<summary>

Script (click to expand)

</summary>

```bash

#!/bin/bash

set -e

set -o pipefail

python3.10 -m venv wheel_builder

source wheel_builder/bin/activate

PYTORCH_VERSION="1.11.0"

cd "${HOME}"

git clone --depth 1 --recursive --branch "v$PYTORCH_VERSION" https://github.com/pytorch/pytorch.git

cd pytorch

export BUILD_TEST=0

export USE_NCCL=0

export USE_DISTRIBUTED=0

export USE_QNNPACK=0

export USE_PYTORCH_QNNPACK=0

export TORCH_CUDA_ARCH_LIST="5.3;6.2;7.2"

export USE_NUMPY=1

export USE_CUDNN=1

export USE_NATIVE_ARCH=1

export PYTORCH_BUILD_VERSION="$PYTORCH_VERSION"

export PYTORCH_BUILD_NUMBER=1

export CC=$(which gcc-8)

export CXX=$(which g++-8)

pip install numpy==1.22.3

pip install wheel

pip install -r requirements.txt

pip install scikit-build

pip install ninja

python setup.py bdist_wheel

```

</details>

The configuration and CMake outputs can be found here: [Summaries.txt](https://github.com/pytorch/pytorch/files/8768732/Summaries.txt)

The build target that fails is `[2888/3297] Building CUDA object caffe2/CMakeFiles/torch_cuda.dir/__/aten/src/ATen/native/cuda/CrossKernel.cu.o`. It fails because `nvcc` is passed the `-march=native` option, which it doesn't seem to understand or expect. `-march` is a well-known GCC flag and works fine for host (CPU) code, but it may not be a supported option for nvcc.

nvcc does have an `-arch` option (`--gpu-architecture`), but it selects CUDA compute capabilities, and `native` isn't an accepted value for it here.

Error output:

```

FAILED: caffe2/CMakeFiles/torch_cuda.dir/__/aten/src/ATen/native/cuda/CrossKernel.cu.o
/usr/local/cuda-10.2/bin/nvcc -DAT_PER_OPERATOR_HEADERS -DHAVE_MALLOC_USABLE_SIZE=1 -DHAVE_MMAP=1 -DHAVE_SHM_OPEN=1 -DHAVE_SHM_UNLINK=1 -DMINIZ_DISABLE_ZIP_READER_CRC32_CHECKS -DONNXIFI_ENABLE_EXT=1 -DONNX_ML=1 -DONNX_NAMESPACE=onnx_torch -DTORCH_CUDA_BUILD_MAIN_LIB -DUSE_CUDA -DUSE_EXTERNAL_MZCRC -D_FILE_OFFSET_BITS=64 -Dtorch_cuda_EXPORTS -Iaten/src -I../aten/src -I. -I../ -I../cmake/../third_party/cudnn_frontend/include -I../third_party/onnx -Ithird_party/onnx -I../third_party/foxi -Ithird_party/foxi -Iinclude -I../torch/csrc/distributed -I../aten/src/THC -I../aten/src/ATen/cuda -Icaffe2/aten/src -I../aten/../third_party/catch/single_include -I../aten/src/ATen/.. -I../c10/cuda/../.. -I../c10/.. -I../torch/csrc/api -I../torch/csrc/api/include -isystem=../third_party/protobuf/src -isystem=../third_party/XNNPACK/include -isystem=../cmake/../third_party/eigen -isystem=/usr/include/python3.10 -isystem=/home/aruw/jetson-setup/asset-builds/wheel_builder/lib/python3.10/site-packages/numpy/core/include -isystem=../cmake/../third_party/pybind11/include -isystem=../cmake/../third_party/cub -isystem=/usr/local/cuda-10.2/include -Xfatbin -compress-all -DONNX_NAMESPACE=onnx_torch -gencode arch=compute_53,code=sm_53 -gencode arch=compute_62,code=sm_62 -gencode arch=compute_72,code=sm_72 -Xcudafe --diag_suppress=cc_clobber_ignored,--diag_suppress=integer_sign_change,--diag_suppress=useless_using_declaration,--diag_suppress=set_but_not_used,--diag_suppress=field_without_dll_interface,--diag_suppress=base_class_has_different_dll_interface,--diag_suppress=dll_interface_conflict_none_assumed,--diag_suppress=dll_interface_conflict_dllexport_assumed,--diag_suppress=implicit_return_from_non_void_function,--diag_suppress=unsigned_compare_with_zero,--diag_suppress=declared_but_not_referenced,--diag_suppress=bad_friend_decl --expt-relaxed-constexpr --expt-extended-lambda -Wno-deprecated-gpu-targets --expt-extended-lambda -DCUDA_HAS_FP16=1 -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -O3 -DNDEBUG -Xcompiler=-fPIC -march=native -D__NEON__ -DTH_HAVE_THREAD -Xcompiler=-Wall,-Wextra,-Wno-unused-parameter,-Wno-unused-variable,-Wno-unused-function,-Wno-unused-result,-Wno-unused-local-typedefs,-Wno-missing-field-initializers,-Wno-write-strings,-Wno-unknown-pragmas,-Wno-type-limits,-Wno-array-bounds,-Wno-unknown-pragmas,-Wno-sign-compare,-Wno-strict-overflow,-Wno-strict-aliasing,-Wno-error=deprecated-declarations,-Wno-missing-braces,-Wno-maybe-uninitialized -DTORCH_CUDA_BUILD_MAIN_LIB -Xcompiler -pthread -std=c++14 -x cu -c ../aten/src/ATen/native/cuda/CrossKernel.cu -o caffe2/CMakeFiles/torch_cuda.dir/__/aten/src/ATen/native/cuda/CrossKernel.cu.o && /usr/local/cuda-10.2/bin/nvcc -DAT_PER_OPERATOR_HEADERS -DHAVE_MALLOC_USABLE_SIZE=1 -DHAVE_MMAP=1 -DHAVE_SHM_OPEN=1 -DHAVE_SHM_UNLINK=1 -DMINIZ_DISABLE_ZIP_READER_CRC32_CHECKS -DONNXIFI_ENABLE_EXT=1 -DONNX_ML=1 -DONNX_NAMESPACE=onnx_torch -DTORCH_CUDA_BUILD_MAIN_LIB -DUSE_CUDA -DUSE_EXTERNAL_MZCRC -D_FILE_OFFSET_BITS=64 -Dtorch_cuda_EXPORTS -Iaten/src -I../aten/src -I. -I../ -I../cmake/../third_party/cudnn_frontend/include -I../third_party/onnx -Ithird_party/onnx -I../third_party/foxi -Ithird_party/foxi -Iinclude -I../torch/csrc/distributed -I../aten/src/THC -I../aten/src/ATen/cuda -Icaffe2/aten/src -I../aten/../third_party/catch/single_include -I../aten/src/ATen/.. -I../c10/cuda/../.. -I../c10/.. -I../torch/csrc/api -I../torch/csrc/api/include -isystem=../third_party/protobuf/src -isystem=../third_party/XNNPACK/include -isystem=../cmake/../third_party/eigen -isystem=/usr/include/python3.10 -isystem=/home/aruw/jetson-setup/asset-builds/wheel_builder/lib/python3.10/site-packages/numpy/core/include -isystem=../cmake/../third_party/pybind11/include -isystem=../cmake/../third_party/cub -isystem=/usr/local/cuda-10.2/include -Xfatbin -compress-all -DONNX_NAMESPACE=onnx_torch -gencode arch=compute_53,code=sm_53 -gencode arch=compute_62,code=sm_62 -gencode arch=compute_72,code=sm_72 -Xcudafe --diag_suppress=cc_clobber_ignored,--diag_suppress=integer_sign_change,--diag_suppress=useless_using_declaration,--diag_suppress=set_but_not_used,--diag_suppress=field_without_dll_interface,--diag_suppress=base_class_has_different_dll_interface,--diag_suppress=dll_interface_conflict_none_assumed,--diag_suppress=dll_interface_conflict_dllexport_assumed,--diag_suppress=implicit_return_from_non_void_function,--diag_suppress=unsigned_compare_with_zero,--diag_suppress=declared_but_not_referenced,--diag_suppress=bad_friend_decl --expt-relaxed-constexpr --expt-extended-lambda -Wno-deprecated-gpu-targets --expt-extended-lambda -DCUDA_HAS_FP16=1 -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -O3 -DNDEBUG -Xcompiler=-fPIC -march=native -D__NEON__ -DTH_HAVE_THREAD -Xcompiler=-Wall,-Wextra,-Wno-unused-parameter,-Wno-unused-variable,-Wno-unused-function,-Wno-unused-result,-Wno-unused-local-typedefs,-Wno-missing-field-initializers,-Wno-write-strings,-Wno-unknown-pragmas,-Wno-type-limits,-Wno-array-bounds,-Wno-unknown-pragmas,-Wno-sign-compare,-Wno-strict-overflow,-Wno-strict-aliasing,-Wno-error=deprecated-declarations,-Wno-missing-braces,-Wno-maybe-uninitialized -DTORCH_CUDA_BUILD_MAIN_LIB -Xcompiler -pthread -std=c++14 -x cu -M ../aten/src/ATen/native/cuda/CrossKernel.cu -MT caffe2/CMakeFiles/torch_cuda.dir/__/aten/src/ATen/native/cuda/CrossKernel.cu.o -o caffe2/CMakeFiles/torch_cuda.dir/__/aten/src/ATen/native/cuda/CrossKernel.cu.o.d

nvcc fatal : 'arch=native': expected a number

```

I would expect the `USE_NATIVE_ARCH` option to work for CUDA-enabled builds.
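The error text fits a hypothesis we have not confirmed against nvcc's documentation for this version: nvcc parses `-march=native` as its own `-m` (`--machine`) option with the value `arch=native`, and that option expects a number such as 64. The failing command line already shows the usual way to route host-compiler flags through nvcc, `-Xcompiler=-fPIC`. One possible fix is therefore to wrap host-only architecture flags the same way before they reach CUDA compile commands. A minimal sketch of that rewrite follows; the prefix list and function name are assumptions, not PyTorch's CMake logic.

```python
from typing import Iterable, List

# Host-only GCC flags that nvcc should pass to the host compiler rather than
# interpret itself (an assumed, non-exhaustive list).
HOST_ONLY_PREFIXES = ("-march=", "-mtune=", "-mcpu=")


def wrap_host_flags_for_nvcc(flags: Iterable[str]) -> List[str]:
    """Prefix host-only flags with -Xcompiler= so nvcc forwards them verbatim."""
    wrapped: List[str] = []
    for flag in flags:
        if flag.startswith(HOST_ONLY_PREFIXES):
            wrapped.append("-Xcompiler=" + flag)
        else:
            wrapped.append(flag)
    return wrapped
```

Applied to the flags around the failure, `-march=native` becomes `-Xcompiler=-march=native`, while `-O3` and the already-wrapped `-Xcompiler=-fPIC` are left unchanged.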

### Versions

Some of the below data is unhelpful because it attempts to use `nvidia-smi`, which isn't available on the Jetson line. However, CUDA 10.2 is available and known to work with other wheels we have built.

```

Collecting environment information...

PyTorch version: N/A

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (aarch64)

GCC version: (Ubuntu/Linaro 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: version 3.13.2

Libc version: glibc-2.27

Python version: 3.10.4 (main, Apr 9 2022, 21:27:52) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-4.9.253-tegra-aarch64-with-glibc2.27

Is CUDA available: N/A

CUDA runtime version: 10.2.300

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Probably one of the following:

/usr/lib/aarch64-linux-gnu/libcudnn.so.8.2.1

/usr/lib/aarch64-linux-gnu/libcudnn_adv_infer.so.8.2.1

/usr/lib/aarch64-linux-gnu/libcudnn_adv_train.so.8.2.1

/usr/lib/aarch64-linux-gnu/libcudnn_cnn_infer.so.8.2.1

/usr/lib/aarch64-linux-gnu/libcudnn_cnn_train.so.8.2.1

/usr/lib/aarch64-linux-gnu/libcudnn_ops_infer.so.8.2.1

/usr/lib/aarch64-linux-gnu/libcudnn_ops_train.so.8.2.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[conda] Could not collect

```

```

aruw@jetson-aruw-lambda:~/jetson-setup/asset-builds$ nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2021 NVIDIA Corporation

Built on Sun_Feb_28_22:34:44_PST_2021

Cuda compilation tools, release 10.2, V10.2.300

Build cuda_10.2_r440.TC440_70.29663091_0

```

cc @malfet @seemethere

| 1 |

5,614 | 78,210 |

Performance with MPS on AMD GPUs is worse than on CPU

|

module: performance, triaged, module: mps

|

### 🐛 Describe the bug

I tried running some experiments on the RX5300M 4GB GPU, and everything seems to work correctly. The problem is that performance is worse than on the CPU of the same Mac.

To reproduce, clone the tests in https://github.com/lucadiliello/pytorch-apple-silicon-benchmarks and run either

```bash

python tests/transformers_sequence_classification.py --device mps --pre_trained_name bert-base-cased --mode inference --steps 100 --sequence_length 128 --batch_size 16

```

or

```bash

python tests/transformers_sequence_classification.py --device cpu --pre_trained_name bert-base-cased --mode inference --steps 100 --sequence_length 128 --batch_size 16

```

The CPU run took `143s`, while the MPS run took `228s`. I'm sure the GPU was being used because I monitored its usage with Activity Monitor throughout.
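To put the two timings on a common scale, the arithmetic below converts them to sequences per second. It assumes each of the 100 steps processes one batch of 16 sequences, which is our reading of the `--steps` and `--batch_size` flags; the helper name is hypothetical.

```python
from typing import Dict


def compare_runs(cpu_seconds: float, mps_seconds: float, steps: int, batch_size: int) -> Dict[str, float]:
    # Total sequences processed, assuming one batch per step.
    samples = steps * batch_size
    return {
        "cpu_samples_per_s": samples / cpu_seconds,
        "mps_samples_per_s": samples / mps_seconds,
        "mps_slowdown": mps_seconds / cpu_seconds,
    }
```

With the reported numbers, `compare_runs(143, 228, 100, 16)` gives about 11.19 sequences/s on CPU versus 7.02 on MPS, i.e. the MPS run is roughly 1.59× slower.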

### Versions

PyTorch version: 1.13.0.dev20220524

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.3.1 (x86_64)

GCC version: Could not collect

Clang version: 13.1.6 (clang-1316.0.21.2.5)

CMake version: Could not collect

Libc version: N/A

Python version: 3.8.12 (default, Oct 12 2021, 06:23:56) [Clang 10.0.0 ] (64-bit runtime)

Python platform: macOS-10.16-x86_64-i386-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.4

[pip3] torch==1.13.0.dev20220524

[pip3] torchaudio==0.12.0.dev20220524

[pip3] torchvision==0.13.0.dev20220524

[conda] numpy 1.22.4 pypi_0 pypi

[conda] torch 1.13.0.dev20220524 pypi_0 pypi

[conda] torchaudio 0.12.0.dev20220524 pypi_0 pypi

[conda] torchvision 0.13.0.dev20220524 pypi_0 pypi

cc @VitalyFedyunin @ngimel

| 8 |

5,615 | 78,205 |

DISABLED test_complex_half_reference_testing_as_strided_scatter_cuda_complex32 (__main__.TestCommonCUDA)

|

module: rocm, triaged, skipped

|

Platforms: rocm

This test was disabled because it is failing on master ([recent examples](http://torch-ci.com/failure/test_complex_half_reference_testing_as_strided_scatter_cuda_complex32%2C%20TestCommonCUDA)).

cc @jeffdaily @sunway513 @jithunnair-amd @ROCmSupport @KyleCZH

| 1 |

5,616 | 78,201 |

nn.Sequential causes fx.replace_pattern to not find any match.

|

triaged, module: fx

|

### 🐛 Describe the bug

Having nn.Sequential in the model code (e.g., the torchvision resnet50 model has it) causes fx.replace_pattern to not find any matches. However, it does find a match if nn.Sequential is removed. Overall, I am looking for a way to match all Bottleneck patterns in the resnet50 model.

The following code results in no matches.

```python
import torch
import torch.nn as nn
from torchvision import models
from torch.fx import symbolic_trace, replace_pattern


class replacement_pattern(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, x):
        return x


# no matching pattern found with Sequential
mod = nn.Sequential(models.resnet.Bottleneck(2048, 512))
# The following works
# mod = models.resnet.Bottleneck(2048, 512)

traced = symbolic_trace(mod)
pattern = models.resnet.Bottleneck(2048, 512)
rep_pattern = replacement_pattern()
matches = replace_pattern(traced, pattern, rep_pattern)
print(f"matches: {matches}")
# matches: []
```

I think the cause is the `target` comparison in https://github.com/pytorch/pytorch/blob/master/torch/fx/subgraph_rewriter.py#L59: under nn.Sequential the targets carry a `0.` prefix (e.g. `0.conv1` instead of `conv1`), so they differ from the pattern's targets. See below.

```
traced.graph.print_tabular()
"""
opcode         name       target                   args               kwargs
-------------  ---------  -----------------------  -----------------  --------
placeholder    input_1    input                    ()                 {}
call_module    _0_conv1   0.conv1                  (input_1,)         {}
call_module    _0_bn1     0.bn1                    (_0_conv1,)        {}
call_module    _0_relu    0.relu                   (_0_bn1,)          {}
call_module    _0_conv2   0.conv2                  (_0_relu,)         {}
call_module    _0_bn2     0.bn2                    (_0_conv2,)        {}
call_module    _0_relu_1  0.relu                   (_0_bn2,)          {}
call_module    _0_conv3   0.conv3                  (_0_relu_1,)       {}
call_module    _0_bn3     0.bn3                    (_0_conv3,)        {}
call_function  add        <built-in function add>  (_0_bn3, input_1)  {}
call_module    _0_relu_2  0.relu                   (add,)             {}
output         output     output                   (_0_relu_2,)       {}
"""
```

```
symbolic_trace(pattern).graph.print_tabular()
"""
opcode         name    target                   args       kwargs
-------------  ------  -----------------------  ---------  --------
placeholder    x       x                        ()         {}
call_module    conv1   conv1                    (x,)       {}
call_module    bn1     bn1                      (conv1,)   {}
call_module    relu    relu                     (bn1,)     {}
call_module    conv2   conv2                    (relu,)    {}
call_module    bn2     bn2                      (conv2,)   {}
call_module    relu_1  relu                     (bn2,)     {}
call_module    conv3   conv3                    (relu_1,)  {}
call_module    bn3     bn3                      (conv3,)   {}
call_function  add     <built-in function add>  (bn3, x)   {}
call_module    relu_2  relu                     (add,)     {}
output         output  output                   (relu_2,)  {}
"""
```
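The target mismatch can be reproduced without torchvision. The sketch below uses a small hypothetical `Block` module as a stand-in for `Bottleneck` and only compares the `call_module` targets of the two traces. It does not call `replace_pattern`, so it says nothing about how a particular PyTorch version's matcher treats the difference.

```python
import torch.nn as nn
from torch.fx import symbolic_trace


class Block(nn.Module):
    """Hypothetical stand-in for a submodule such as torchvision's Bottleneck."""

    def __init__(self):
        super().__init__()
        self.lin = nn.Linear(4, 4)
        self.relu = nn.ReLU()

    def forward(self, x):
        return self.relu(self.lin(x))


def module_targets(root: nn.Module) -> list:
    """Return the `target` of every call_module node in the traced graph."""
    graph = symbolic_trace(root).graph
    return [node.target for node in graph.nodes if node.op == "call_module"]


def strip_prefix(targets: list, prefix: str) -> list:
    """Drop a qualified-name prefix such as '0.' that nn.Sequential introduces."""
    return [t[len(prefix):] if t.startswith(prefix) else t for t in targets]


pattern_targets = module_targets(Block())                 # ['lin', 'relu']
wrapped_targets = module_targets(nn.Sequential(Block()))  # ['0.lin', '0.relu']
print(pattern_targets, wrapped_targets)
print(strip_prefix(wrapped_targets, "0.") == pattern_targets)
```

Whether normalizing targets like this is enough for `replace_pattern` depends on the matcher's implementation; tracing and rewriting each submodule separately (for example `symbolic_trace(mod[0])`) may be a simpler workaround to try.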

### Versions

PyTorch version: 1.11.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: version 3.10.2

Libc version: glibc-2.27

Python version: 3.8.12 (default, Sep 10 2021, 00:16:05) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.0-107-generic-x86_64-with-glibc2.27

Is CUDA available: True

CUDA runtime version: 11.1.105

GPU models and configuration:

GPU 0: NVIDIA A100-SXM4-40GB

GPU 1: NVIDIA A100-SXM4-40GB

Nvidia driver version: 510.47.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.4

[pip3] torch==1.11.0+cu113

[pip3] torchaudio==0.11.0+cu113

[pip3] torchvision==0.12.0+cu113

[conda] Could not collect

| 0 |

5,617 | 78,185 |

test_to (__main__.TestTorch) fails with multiple gpus

|

module: multi-gpu, triaged, actionable

|

### 🐛 Describe the bug

The code below:

https://github.com/pytorch/pytorch/blob/664bb4de490198f1d68b16b21407090daa995ba8/test/test_torch.py#L7645

sets the device to device 1 when multiple GPUs are found, then compares against a tensor on device 0 and fails. This test is part of the single-GPU tests and should not rely on a distributed setup.

```
======================================================================
FAIL: test_to (__main__.TestTorch)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "test_torch.py", line 7662, in test_to
    self._test_to_with_layout(torch.sparse_csr)
  File "test_torch.py", line 7654, in _test_to_with_layout
    self.assertEqual(b.device, a.to(cuda, non_blocking=non_blocking).device)
  File "/opt/conda/lib/python3.7/site-packages/torch/testing/_internal/common_utils.py", line 2258, in assertEqual
    msg=msg,
  File "/opt/conda/lib/python3.7/site-packages/torch/testing/_comparison.py", line 1086, in assert_equal
    raise error_metas[0].to_error()
AssertionError: Object comparison failed: device(type='cuda', index=1) != device(type='cuda', index=0)
```

### Versions

Collecting environment information...

PyTorch version: N/A

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: 14.0.0 (https://github.com/RadeonOpenCompute/llvm-project roc-5.1.1 22114 5cba46feb6af367b1cafaa183ec42dbfb8207b14)

CMake version: version 3.22.1

Libc version: glibc-2.17

Python version: 3.7.13 (default, Mar 29 2022, 02:18:16) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.0-99-generic-x86_64-with-debian-bullseye-sid

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] mypy==0.812

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.20.3

[pip3] torch==1.12.0a0+git0bf2bb0

[conda] mkl 2022.0.1 h06a4308_117

[conda] mkl-include 2022.0.1 h06a4308_117

[conda] numpy 1.20.3 pypi_0 pypi

[conda] torch 1.12.0a0+git0bf2bb0 pypi_0 pypi

| 1 |

5,618 | 78,172 |

Allow specifying pickle module for torch.package

|

enhancement, oncall: package/deploy, imported

|

### 🚀 The feature, motivation and pitch

I'm working on a service that receives files created with `torch.package` and uses them to instantiate `torch.nn.Module`s created by a different service. Some of the modules use, somewhere in their structure, lambda functions and other constructs that are not supported by `pickle` but are supported by the `dill` package.

Since `torch.save` and `torch.load` both accept a `pickle_module` argument, would it be possible to support specifying one when instantiating `torch.package.PackageExporter` and `torch.package.PackageImporter`, so that I could take advantage of the dependency bundling they provide?

### Alternatives

I can use `torch.save` and `torch.load` with `dill` as the `pickle_module`, but that in and of itself doesn't bundle any dependencies needed to instantiate the module later on. Both services would need to have the classes and methods, and updates on one side won't be reflected on the other.

I could also copy the source code of `torch.package`, adjust it to use `dill`, and use that code instead, but I'd have to keep it up to date with any changes made in this repository, and this code would again need to exist in both services.

### Additional context

_No response_

| 0 |

5,619 | 78,170 |

[chalf] reference_testing: low quality test for fast growing ops

|

triaged, module: complex, module: half

|

### 🐛 Describe the bug

In PR https://github.com/pytorch/pytorch/pull/77640:

Since the range of chalf is much smaller than that of cfloat, we easily get `inf`s (e.g., with `pow` and `exp`), so we cast the `cfloat` result back to `chalf`.

However, this might mask an actual issue, since we don't control what percentage of the inputs will be valid. The correct approach would be to sample inputs that are valid given the range of `chalf`.

One approach would be to add extra metadata to OpInfo.
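One way to choose such ranges is to derive them from the largest finite half-precision value, 65504. For `exp(a + bi)` both components are bounded in magnitude by `e**a`, so real parts up to `ln(65504)` keep the result finite; for `z ** n` with integer `n >= 1`, `|z| <= 65504 ** (1 / n)` keeps `|z**n|` finite. This is a sketch with hypothetical helper names, not an OpInfo API, and it bounds only the final result: intermediate computations done in half precision could still overflow.

```python
import math

# Largest finite IEEE 754 binary16 value; chalf stores two of these per element.
FLOAT16_MAX = 65504.0


def max_real_part_for_exp(limit: float = FLOAT16_MAX) -> float:
    """Largest real part `a` for which |exp(a + bi)| = e**a stays <= limit."""
    return math.log(limit)


def max_magnitude_for_pow(n: int, limit: float = FLOAT16_MAX) -> float:
    """Largest |z| for which |z**n| = |z|**n stays <= limit (integer n >= 1)."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return limit ** (1.0 / n)


def clamp_magnitude(z: complex, max_abs: float) -> complex:
    """Rescale z onto the disk |z| <= max_abs, keeping its phase."""
    r = abs(z)
    return z if r <= max_abs else z * (max_abs / r)


print(round(max_real_part_for_exp(), 4))  # 11.0899
print(max_magnitude_for_pow(2))           # roughly 255.94
```

A sampler could then draw `cfloat` inputs and pass them through `clamp_magnitude` (or cap their real parts) before casting to `chalf`, so that every sample is valid by construction.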

cc: @ngimel @mruberry @anjali411

### Versions

master

cc @ezyang @anjali411 @dylanbespalko @mruberry @Lezcano @nikitaved

| 4 |

5,620 | 78,159 |

[Optimizer Overlap] Parameter group support

|

oncall: distributed, triaged, module: ddp

|

### 🚀 The feature, motivation and pitch

We should investigate and fill any gaps in parameter-group support for DDP's overlapped optimizers. Currently, there are no unit tests for optimizer overlap with parameter groups.

We will probably scope this to specifying parameter groups at construction time only, without supporting the `add_param_group` API. The reason is that these functional optimizers are meant to be mostly transparent to users: users do not construct or access them directly, and they work through DDP communication hook internals.
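A minimal sketch of what construction-time-only parameter groups could look like for a functional optimizer that a communication hook drives one parameter at a time. The class, its methods, and the use of plain floats keyed by name as parameters are all hypothetical. It only illustrates resolving per-group hyperparameters once at construction and rejecting `add_param_group` afterwards.

```python
from typing import Dict, List, Optional


class FunctionalSGDSketch:
    """Hypothetical functional SGD whose param groups are fixed at construction."""

    def __init__(self, param_groups: List[dict], lr: float):
        self.param_groups = []
        self._lr_for: Dict[str, float] = {}
        for group in param_groups:
            group_lr = group.get("lr", lr)  # per-group value overrides the default
            names = list(group["params"])
            for name in names:
                if name in self._lr_for:
                    raise ValueError(f"parameter {name!r} appears in more than one group")
                self._lr_for[name] = group_lr
            self.param_groups.append({"params": names, "lr": group_lr})

    def step_param(self, name: str, value: float, grad: Optional[float]) -> float:
        """Update one parameter, e.g. once its gradient bucket has been reduced."""
        if grad is None:
            return value
        return value - self._lr_for[name] * grad

    def add_param_group(self, param_group: dict) -> None:
        raise NotImplementedError(
            "param groups must be given at construction for overlapped optimizers"
        )
```

Resolving each parameter's learning rate into a flat lookup at construction keeps the per-parameter step cheap, which matters when it runs inside a communication hook.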

### Alternatives

_No response_

### Additional context

_No response_

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 0 |

5,621 | 78,158 |

[Optimizer Overlap] Proper checkpointing support

|

oncall: distributed, triaged, module: ddp

|

### 🚀 The feature, motivation and pitch

Currently, optimizer overlap with DDP does not support saving or loading checkpoints. The optimizer is not readily accessible from DDP, so there is no way to save or load the optimizer's `state_dict`.

Even if we could access the optimizer from DDP, it is a functional optimizer rather than a regular `torch.optim.Optimizer`, so the regular `state_dict` APIs cannot be called.

One potential solution is to make these functional optimizer instances inherit from `torch.optim.Optimizer` so that `state_dict` and `load_state_dict` can be reused out of the box. We would override methods that a regular `torch.optim.Optimizer` supports but the functional optimizer does not (such as `add_param_group`), so that users cannot accidentally rely on unsupported behavior.

This is a feature request from torchrec.
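A sketch of the inheritance idea, assuming a plain SGD update and a hypothetical class name. One detail matters here: `torch.optim.Optimizer.__init__` itself calls `add_param_group` for every group passed in, so an override that always raises would break construction. The sketch therefore rejects only calls made after `__init__` has finished, while `state_dict()` and `load_state_dict()` are inherited unchanged.

```python
import torch


class OverlappableSGDSketch(torch.optim.Optimizer):
    """Hypothetical functional-style SGD that reuses Optimizer's checkpoint APIs."""

    def __init__(self, params, lr: float = 0.01):
        self._constructing = True  # Optimizer.__init__ calls add_param_group itself
        super().__init__(params, defaults={"lr": lr})
        self._constructing = False

    def add_param_group(self, param_group):
        if not getattr(self, "_constructing", False):
            raise RuntimeError("add_param_group is not supported for overlapped optimizers")
        super().add_param_group(param_group)

    @torch.no_grad()
    def step(self, closure=None):
        for group in self.param_groups:
            for p in group["params"]:
                if p.grad is not None:
                    p.add_(p.grad, alpha=-group["lr"])  # plain SGD update
        return None
```

Checkpointing would then look like any other optimizer, e.g. `torch.save(opt.state_dict(), path)`; how DDP would expose the instance to the user is a separate question the issue leaves open.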

### Alternatives

_No response_

### Additional context

_No response_

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 1 |

5,622 | 78,157 |

[Optimizer Overlap] Custom optimizer registration

|

oncall: distributed, triaged, module: ddp

|

### 🚀 The feature, motivation and pitch

Currently, DDP optimizer overlap supports only a subset of commonly used `torch.optim.Optimizer`s. However, torchrec uses its own custom optimizer implementations, so we should add a registry, plus an API for adding entries to it, so that users can register their own custom optimizers to be overlapped with DDP.

### Alternatives

_No response_

### Additional context

_No response_

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 0 |

5,623 | 78,153 |

`pack_sequence` crash

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`pack_sequence` crashes with a segmentation fault on the following input:

```python

import torch

sequences_0 = torch.rand([1, 16, 86], dtype=torch.float32)

sequences_1 = torch.rand([1, 85, 0], dtype=torch.float16)

sequences_2 = torch.randint(0, 2, [2, 84, 85], dtype=torch.bool)

sequences_3 = torch.randint(-8, 2, [0, 4, 85], dtype=torch.int8)

sequences = [sequences_0,sequences_1,sequences_2,sequences_3,]

enforce_sorted = 0

torch.nn.utils.rnn.pack_sequence(sequences, enforce_sorted=enforce_sorted, )

# Segmentation fault (core dumped)

```
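`pack_sequence` pads its inputs via `pad_sequence`, whose documentation assumes that the trailing dimensions and dtype of all sequences are the same. The tensors above violate both, and one of them also has length 0, which packing cannot represent. Below is a hypothetical pure-Python pre-check over `(shape, dtype)` pairs, sketching the kind of validation that would turn this crash into an error:

```python
from typing import Sequence, Tuple

Shape = Tuple[int, ...]


def check_packable(specs: Sequence[Tuple[Shape, str]]) -> None:
    """Raise ValueError unless the (shape, dtype) pairs could be padded and packed."""
    if not specs:
        raise ValueError("need at least one sequence")
    first_shape, first_dtype = specs[0]
    for i, (shape, dtype) in enumerate(specs):
        if len(shape) < 1 or shape[0] <= 0:
            raise ValueError(f"sequence {i} must have a positive leading length, got {shape}")
        if tuple(shape[1:]) != tuple(first_shape[1:]):
            raise ValueError(
                f"sequence {i} has trailing dims {tuple(shape[1:])}, "
                f"expected {tuple(first_shape[1:])}"
            )
        if dtype != first_dtype:
            raise ValueError(f"sequence {i} has dtype {dtype}, expected {first_dtype}")


# The shapes and dtypes from the repro above:
issue_specs = [((1, 16, 86), "float32"), ((1, 85, 0), "float16"),
               ((2, 84, 85), "bool"), ((0, 4, 85), "int8")]
try:
    check_packable(issue_specs)
except ValueError as err:
    print("rejected:", err)
```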

### Versions

pytorch: 1.11.0

| 0 |

5,624 | 78,151 |

`ctc_loss` will backward crash

|

module: autograd, triaged, module: edge cases

|

### 🐛 Describe the bug

`ctc_loss` crashes in the backward pass.

The forward pass succeeds:

```python

import torch

log_probs = torch.rand([50, 16, 20], dtype=torch.float32).requires_grad_()

targets = torch.randint(-2, 2, [16, 30], dtype=torch.int64)

input_lengths = torch.randint(-4, 1, [16], dtype=torch.int64)

target_lengths = torch.randint(-4, 4, [16], dtype=torch.int64)

blank = 0

reduction = "mean"

zero_infinity = False

res = torch.nn.functional.ctc_loss(log_probs, targets, input_lengths, target_lengths, blank=blank, reduction=reduction, zero_infinity=zero_infinity, )

# succeed

```

but the backward pass crashes:

```python

res.sum().backward()

# Segmentation fault (core dumped)

```
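Several of the inputs above fall outside the ranges the `ctc_loss` documentation describes: the lengths are drawn from ranges that include negative values, and the target labels can be negative or equal to the blank index 0. Below is a hypothetical pure-Python validator for the padded `(N, S)` target layout, sketching checks that would reject these inputs before any kernel runs:

```python
from typing import List


def check_ctc_inputs(T: int, C: int, targets: List[List[int]],
                     input_lengths: List[int], target_lengths: List[int],
                     blank: int = 0) -> None:
    """Raise ValueError for inputs outside the documented ctc_loss ranges.

    Assumes log_probs of shape (T, N, C) and padded targets of shape (N, S).
    """
    N = len(targets)
    if len(input_lengths) != N or len(target_lengths) != N:
        raise ValueError("need one input length and one target length per batch entry")
    for n, (row, in_len, tgt_len) in enumerate(zip(targets, input_lengths, target_lengths)):
        if not 0 <= in_len <= T:
            raise ValueError(f"input_lengths[{n}]={in_len} not in [0, {T}]")
        if not 0 <= tgt_len <= len(row):
            raise ValueError(f"target_lengths[{n}]={tgt_len} not in [0, {len(row)}]")
        for label in row[:tgt_len]:  # only the first tgt_len entries are used
            if not 0 <= label < C or label == blank:
                raise ValueError(f"targets[{n}] contains invalid label {label}")
```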

### Versions

pytorch: 1.11.0

Ubuntu: 20.04

Python: 3.9.5

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 0 |

5,625 | 78,143 |

`baddmm` triggers INTERNAL ASSERT FAILED when input requires grad

|

module: autograd, triaged, actionable

|

### 🐛 Describe the bug

`baddbmm` triggers INTERNAL ASSERT FAILED when an input requires grad

```python

import torch

input_tensor = torch.rand([10, 3, 5], dtype=torch.complex64)

batch1_tensor = torch.randint(-4, 2, [10, 3, 4], dtype=torch.int8)

batch2_tensor = torch.randint(-4, 1, [10, 4, 5], dtype=torch.int8)

input = input_tensor.clone()

batch1 = batch1_tensor.clone()

batch2 = batch2_tensor.clone()

res1 = torch.baddbmm(input, batch1, batch2, )

# Normal Pass

input = input_tensor.clone().requires_grad_()

batch1 = batch1_tensor.clone()

batch2 = batch2_tensor.clone()

res2 = torch.baddbmm(input, batch1, batch2, )

# RuntimeError: isDifferentiableType(variable.scalar_type())INTERNAL ASSERT FAILED at "/Users/distiller/project/pytorch/torch/csrc/autograd/functions/utils.h":65, please report a bug to PyTorch.

```

### Versions

pytorch: 1.11.0

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 0 |

5,626 | 78,141 |

`matmul, mm` triggers INTERNAL ASSERT FAILED when input requires grad

|

module: autograd, triaged, actionable

|

### 🐛 Describe the bug

`matmul` triggers INTERNAL ASSERT FAILED when input requires grad

```python

import torch

input_tensor = torch.randint(-8, 2, [11, 0, 4], dtype=torch.int8)

other_tensor = torch.rand([4], dtype=torch.float32)

input = input_tensor.clone()

other = other_tensor.clone()

res1 = torch.matmul(input, other, )

# Normal Pass

input = input_tensor.clone()

other = other_tensor.clone().requires_grad_()

res2 = torch.matmul(input, other, )

# RuntimeError: isDifferentiableType(variable.scalar_type())INTERNAL ASSERT FAILED at "/Users/distiller/project/pytorch/torch/csrc/autograd/functions/utils.h":65, please report a bug to PyTorch.

```

`mm` has the same issue:

```python

import torch

input_tensor = torch.randint(0, 2, [0, 3], dtype=torch.uint8)

mat2_tensor = torch.rand([3, 2], dtype=torch.float32)

input = input_tensor.clone()

mat2 = mat2_tensor.clone()

res1 = torch.mm(input, mat2, )

input = input_tensor.clone()

mat2 = mat2_tensor.clone().requires_grad_()

res2 = torch.mm(input, mat2, )

# RuntimeError: isDifferentiableType(variable.scalar_type())INTERNAL ASSERT FAILED at "/Users/distiller/project/pytorch/torch/csrc/autograd/functions/utils.h":65, please report a bug to PyTorch.

```
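Both reports mix an integer tensor with a floating-point or complex one. Until the assert itself is fixed, one possible workaround (a sketch, not the fix) is to promote all operands to a common dtype with `torch.promote_types` before calling the op, so that autograd only sees differentiable dtypes. `call_promoted` is a hypothetical helper name.

```python
import functools

import torch


def call_promoted(op, *tensors, **kwargs):
    """Call `op` after casting every tensor argument to their common promoted dtype."""
    common = functools.reduce(torch.promote_types, (t.dtype for t in tensors))
    # .to() returns the same tensor when the dtype already matches, so a leaf
    # that requires grad stays connected to the autograd graph.
    return op(*(t.to(common) for t in tensors), **kwargs)


inp = torch.rand(10, 3, 5, dtype=torch.complex64, requires_grad=True)
batch1 = torch.randint(-4, 2, (10, 3, 4), dtype=torch.int8)
batch2 = torch.randint(-4, 1, (10, 4, 5), dtype=torch.int8)
res = call_promoted(torch.baddbmm, inp, batch1, batch2)  # int8 operands become complex64
res.real.sum().backward()
```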

### Versions

pytorch: 1.11.0

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 0 |

5,627 | 78,133 |

Enhancements to AliasDB to handle in-place operations

|

oncall: jit

|

### 🚀 The feature, motivation and pitch

https://github.com/pytorch/pytorch/blob/master/torch/csrc/jit/OVERVIEW.md#handling-mutability

`The intention is that if you only mutate the graph through AliasDb, you don't have to think about mutability/aliasing at all in your pass. As we write more passes, the interface to AliasDb will get richer (one example is transforming an in-place operation to its pure equivalent if we can prove it's safe).`

Is the work called out here to enhance AliasDB currently planned or in progress? I've hit a few issues around in-place container mutations (e.g., `aten::_set_item`), and they don't seem to be handled well in TorchScript generally (e.g., parseIR does not support reading back ops that do not produce a value). Expanding AliasDB to provide more tools to understand and resolve these ops would be helpful.

As an example, this function in torch-tensorrt currently misses in-place container operations as dependencies of an input value. Adding a pass to remove these ops or providing access to the underlying graph in AliasDB to identify dependencies correctly would help resolve these issues.

https://github.com/pytorch/TensorRT/blob/e9e824c0ef0a4704826a390fe7d2ef90272a56b7/core/partitioning/partitioning.cpp#L60

### Alternatives

_No response_

### Additional context

_No response_

| 0 |

5,628 | 78,131 |

Segfault in _pad_packed_sequence

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`torch._pad_packed_sequence` crashes with a segmentation fault.

### Example to reproduce

```

import torch

data = torch.full([1, 1, 1, 1], -10000, dtype=torch.float16, requires_grad=False)

batch_sizes = torch.full([0], 978, dtype=torch.int64, requires_grad=False)

batch_first = True

padding_value = False

total_length = torch.full([], -9937, dtype=torch.int64, requires_grad=False)

torch._pad_packed_sequence(data, batch_sizes, batch_first, padding_value, total_length)

```

### Result

Segmentation fault

### Expected Behavior

Throwing a Python Exception
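Because a segfault kills the interpreter, checking for the expected behavior (an exception rather than a crash) requires running the call in a separate process. Below is a stdlib-only sketch, with a hypothetical helper name, that classifies a snippet by how its child interpreter exits. On POSIX systems a process terminated by a signal reports a negative return code.

```python
import signal
import subprocess
import sys


def classify_snippet(code: str, timeout: float = 60.0) -> str:
    """Run `code` in a fresh interpreter; return 'ok', 'exception', or 'crash:<signal>'."""
    proc = subprocess.run([sys.executable, "-c", code],
                          capture_output=True, timeout=timeout)
    if proc.returncode == 0:
        return "ok"
    if proc.returncode < 0:  # POSIX: terminated by signal number -returncode
        return f"crash:{signal.Signals(-proc.returncode).name}"
    return "exception"  # non-zero exit status, 1 for an uncaught Python exception
```

Passing the repro above as the `code` string would be expected to return `'crash:SIGSEGV'` on an affected build and `'exception'` once the input is validated.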

### Notes

This bug was discovered using [Atheris](https://github.com/google/atheris).

### Versions

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: 11.0.1-2

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-11-amd64-x86_64-with-glibc2.31

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.11.0 py39_cpu pytorch

[conda] torchvision 0.12.0 py39_cpu pytorch

| 0 |

5,629 | 78,130 |

Segfault in _grid_sampler_2d_cpu_fallback

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`torch._grid_sampler_2d_cpu_fallback` crashes with a segmentation fault.

### Example to reproduce

```

import torch

input = torch.full([3, 3, 3, 2], 9490, dtype=torch.float32, requires_grad=False)

grid = torch.full([0, 3, 8, 2, 4, 1], -9545, dtype=torch.float32, requires_grad=False)

interpolation_mode = 8330

padding_mode = 5934

align_corners = False

torch._grid_sampler_2d_cpu_fallback(input, grid, interpolation_mode, padding_mode, align_corners)

```

### Result

Segmentation fault

### Expected Behavior

Throwing a Python Exception
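The repro passes out-of-range integer mode codes and a grid whose rank does not match the input; the `grid_sampler_3d` report below has the same pattern. Below is a pure-Python sketch of argument checks that would raise an exception instead. It assumes the integer codes that `torch.nn.functional.grid_sample` passes down (interpolation: 0 bilinear, 1 nearest, 2 bicubic; padding: 0 zeros, 1 border, 2 reflection) and grids shaped `(N, H_out, W_out, 2)` or `(N, D_out, H_out, W_out, 3)`. The function name is hypothetical.

```python
from typing import Sequence

INTERPOLATION_CODES = {0: "bilinear", 1: "nearest", 2: "bicubic"}
PADDING_CODES = {0: "zeros", 1: "border", 2: "reflection"}


def check_grid_sampler_args(input_shape: Sequence[int], grid_shape: Sequence[int],
                            interpolation_mode: int, padding_mode: int) -> None:
    """Raise ValueError for arguments a 2-D or 3-D grid sampler cannot handle."""
    spatial = len(input_shape) - 2  # 2 for (N, C, H, W), 3 for (N, C, D, H, W)
    if spatial not in (2, 3):
        raise ValueError(f"input must be 4-D or 5-D, got {len(input_shape)}-D")
    if len(grid_shape) != len(input_shape):
        raise ValueError(f"grid must be {len(input_shape)}-D, got {len(grid_shape)}-D")
    if grid_shape[0] != input_shape[0]:
        raise ValueError("input and grid must have the same batch size")
    if grid_shape[-1] != spatial:
        raise ValueError(f"grid's last dimension must be {spatial}, got {grid_shape[-1]}")
    if interpolation_mode not in INTERPOLATION_CODES:
        raise ValueError(f"unknown interpolation_mode {interpolation_mode}")
    if spatial == 3 and INTERPOLATION_CODES[interpolation_mode] == "bicubic":
        raise ValueError("bicubic interpolation is only supported for 4-D input")
    if padding_mode not in PADDING_CODES:
        raise ValueError(f"unknown padding_mode {padding_mode}")
```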

### Notes

This bug was discovered using [Atheris](https://github.com/google/atheris).

### Versions

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: 11.0.1-2

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-11-amd64-x86_64-with-glibc2.31

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.11.0 py39_cpu pytorch

[conda] torchvision 0.12.0 py39_cpu pytorch

| 0 |

5,630 | 78,129 |

Segfault in _embedding_bag_forward_only

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`torch._embedding_bag_forward_only` crashes with a segmentation fault.

### Example to reproduce

```

import torch

weight = torch.full([2, 2, 5, 3, 3], -8670, dtype=torch.float64, requires_grad=False)

indices = torch.full([3, 0, 1], -4468, dtype=torch.int32, requires_grad=False)

offsets = torch.full([7, 1, 0], -7226, dtype=torch.int64, requires_grad=False)

scale_grad_by_freq = True

mode = torch.full([], 6318, dtype=torch.int64, requires_grad=False)

sparse = False

per_sample_weights = torch.full([3], -8750, dtype=torch.int64, requires_grad=False)

include_last_offset = False

padding_idx = torch.full([], 6383, dtype=torch.int64, requires_grad=False)

torch._embedding_bag_forward_only(weight, indices, offsets, scale_grad_by_freq, mode,

sparse, per_sample_weights, include_last_offset, padding_idx)

```

### Result

Segmentation fault

### Expected Behavior

Throwing a Python Exception

### Notes

This bug was discovered using [Atheris](https://github.com/google/atheris).

### Versions

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: 11.0.1-2

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-11-amd64-x86_64-with-glibc2.31

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.11.0 py39_cpu pytorch

[conda] torchvision 0.12.0 py39_cpu pytorch

| 0 |

5,631 | 78,128 |

Segfault in torch._C._nn.thnn_conv2d

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`torch._C._nn.thnn_conv2d` crashes with a segmentation fault.

### Example to reproduce

```

import torch

tensor_0 = torch.full([3, 3, 3], -4398, dtype=torch.float64, requires_grad=False)

tensor_1 = torch.full([6, 7], -4532, dtype=torch.int32, requires_grad=False)

intarrayref_2 = -10000

tensor_3 = torch.full([3, 3, 3, 6, 7], -2321, dtype=torch.float16, requires_grad=False)

intarrayref_4 = -2807

intarrayref_5 = []

torch._C._nn.thnn_conv2d(tensor_0, tensor_1, intarrayref_2, tensor_3, intarrayref_4, intarrayref_5)

```

### Result

Segmentation fault

### Expected Behavior

Throwing a Python Exception

### Notes

This bug was discovered using [Atheris](https://github.com/google/atheris).

### Versions

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: 11.0.1-2

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-11-amd64-x86_64-with-glibc2.31

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.11.0 py39_cpu pytorch

[conda] torchvision 0.12.0 py39_cpu pytorch

| 0 |

5,632 | 78,127 |

Segfault in torch._C._nn.reflection_pad2d

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`torch._C._nn.reflection_pad2d` crashes with a segmentation fault.

### Example to reproduce

```

import torch

tensor_0 = torch.full([6, 5, 7], -8754, dtype=torch.int32, requires_grad=False)

intarrayref_1 = []

torch._C._nn.reflection_pad2d(tensor_0, intarrayref_1)

```

### Result

Segmentation fault

### Expected Behavior

Throwing a Python Exception
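The repro passes an empty padding list, whereas 2-D reflection padding takes four values `(left, right, top, bottom)`, and each must be smaller than the matching input dimension. Below is a hypothetical pure-Python pre-check sketching the validation that would raise instead of crashing. It does not attempt to model every rule the real kernel enforces.

```python
from typing import Sequence


def check_reflection_pad2d(input_shape: Sequence[int], padding: Sequence[int]) -> None:
    """Raise ValueError unless reflection_pad2d could plausibly accept these arguments."""
    if len(input_shape) not in (3, 4):
        raise ValueError(
            f"expected 3-D (C, H, W) or 4-D (N, C, H, W) input, got {len(input_shape)}-D"
        )
    if len(padding) != 4:
        raise ValueError(
            f"padding must have 4 values (left, right, top, bottom), got {len(padding)}"
        )
    left, right, top, bottom = padding
    height, width = input_shape[-2], input_shape[-1]
    if max(left, right) >= width or max(top, bottom) >= height:
        raise ValueError("each padding value must be smaller than the matching input dimension")
```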

### Notes

This bug was discovered using [Atheris](https://github.com/google/atheris).

### Versions

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: 11.0.1-2

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-11-amd64-x86_64-with-glibc2.31

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.11.0 py39_cpu pytorch

[conda] torchvision 0.12.0 py39_cpu pytorch

| 0 |

5,633 | 78,126 |

Segfault in max_unpool3d

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`torch._C._nn.max_unpool3d` crashes with a segmentation fault.

### Example to reproduce

```

import torch

tensor_0 = torch.full([], -10000, dtype=torch.int64, requires_grad=False)

tensor_1 = torch.full([7, 7, 7, 4, 4, 4, 7, 7], -8695, dtype=torch.float16, requires_grad=False)

intarrayref_2 = []

intarrayref_3 = 7052

intarrayref_4 = -9995

torch._C._nn.max_unpool3d(tensor_0, tensor_1, intarrayref_2, intarrayref_3, intarrayref_4)

```

### Result

Segmentation fault

### Expected Behavior

Throwing a Python Exception

### Notes

This bug was discovered using [Atheris](https://github.com/google/atheris).

### Versions

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: 11.0.1-2

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-11-amd64-x86_64-with-glibc2.31

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.11.0 py39_cpu pytorch

[conda] torchvision 0.12.0 py39_cpu pytorch

| 0 |

5,634 | 78,125 |

Segfault in grid_sampler_3d

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`torch.grid_sampler_3d` crashes with a segmentation fault.

### Example to reproduce

```

import torch

input = torch.full([4, 2, 0, 0, 4, 0, 0, 3], -1480, dtype=torch.float64, requires_grad=False)

grid = torch.full([1, 6, 3, 5, 3, 4, 0, 6], -2024, dtype=torch.float64, requires_grad=False)

interpolation_mode = -3278

padding_mode = -1469

align_corners = True

torch.grid_sampler_3d(input, grid, interpolation_mode, padding_mode, align_corners)

```

### Result

Segmentation fault

### Expected Behavior

Throwing a Python Exception

### Notes

This bug was discovered using [Atheris](https://github.com/google/atheris).

### Versions

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: 11.0.1-2

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-11-amd64-x86_64-with-glibc2.31

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.11.0 py39_cpu pytorch

[conda] torchvision 0.12.0 py39_cpu pytorch

| 0 |

5,635 | 78,122 |

Segfault in bincount

|

triaged, module: edge cases

|

### 🐛 Describe the bug

`torch.bincount` crashes with a segmentation fault.

### Example to reproduce

```python
import torch

self = torch.full([3], 2550, dtype=torch.int64, requires_grad=False)
weights = torch.full([3, 1, 3, 0, 0, 0, 1, 1], -4620, dtype=torch.int64, requires_grad=False)
minlength = 9711
torch.bincount(self, weights, minlength)
```

### Result

Segmentation fault

### Expected Behavior

Throwing a Python Exception
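For comparison, the `torch.bincount` documentation describes `input` as a 1-D tensor of non-negative integers and says `weights`, if given, should be the same size as `input`; the `weights` above (shape `[3, 1, 3, 0, 0, 0, 1, 1]`, zero elements) violate that. The following pure-Python reference, `reference_bincount` (a hypothetical name, not a PyTorch API), is a minimal sketch of those semantics with the argument checks up front, so the same inputs raise `ValueError` instead of crashing. It works on flat sequences, so it only models the size check, not the dimensionality check.

```python
# Hypothetical pure-Python reference for bincount semantics; not PyTorch's kernel.
from typing import List, Optional, Sequence


def reference_bincount(
    values: Sequence[int],
    weights: Optional[Sequence[float]] = None,
    minlength: int = 0,
) -> List[float]:
    """Count occurrences of each non-negative integer (or sum their weights)."""
    # Validate every argument before allocating anything sized from the data.
    if minlength < 0:
        raise ValueError(f"minlength must be non-negative, got {minlength}")
    if any(v < 0 for v in values):
        raise ValueError("values must be non-negative integers")
    if weights is not None and len(weights) != len(values):
        raise ValueError(
            f"weights has {len(weights)} elements but input has {len(values)}"
        )
    # Number of bins: max(values) + 1, but at least minlength.
    size = max(max(values, default=-1) + 1, minlength)
    counts: List[float] = [0] * size
    for i, v in enumerate(values):
        counts[v] += 1 if weights is None else weights[i]
    return counts


# Flattened, the fuzzer's weights tensor has zero elements against three input values.
try:
    reference_bincount([2550, 2550, 2550], weights=[], minlength=9711)
except ValueError as err:
    print(err)  # weights has 0 elements but input has 3
```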

### Notes

This bug was discovered using [Atheris](https://github.com/google/atheris).

### Versions

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Debian GNU/Linux 11 (bullseye) (x86_64)

GCC version: (Debian 10.2.1-6) 10.2.1 20210110

Clang version: 11.0.1-2

CMake version: version 3.18.4

Libc version: glibc-2.31

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.0-11-amd64-x86_64-with-glibc2.31

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.11.0 py39_cpu pytorch

[conda] torchvision 0.12.0 py39_cpu pytorch

| 1 |

5,636 | 78,109 |

Doesn't work when register hook to torch.nn.MultiheadAttention.out_proj

|

oncall: transformer/mha

|

### 🐛 Describe the bug

I am not sure whether this is a bug or a feature request. The problem: when you register a hook on `out_proj` inside `nn.MultiheadAttention`, it is never called. This can cause unexpected behavior in tools built on hooks, e.g. `torch.nn.utils.prune` (#69353).

This happens because `MultiheadAttention.forward` passes `out_proj.weight` (and `out_proj.bias`) directly to `torch._native_multi_head_attention` or `F.multi_head_attention_forward` instead of calling `out_proj` as a module (see [#L1113](https://github.com/pytorch/pytorch/blob/cac16e2ee293964033dffa6616f78b68603cd565/torch/nn/modules/activation.py#L1113), [#L1142](https://github.com/pytorch/pytorch/blob/cac16e2ee293964033dffa6616f78b68603cd565/torch/nn/modules/activation.py#L1142), and [#L1153](https://github.com/pytorch/pytorch/blob/cac16e2ee293964033dffa6616f78b68603cd565/torch/nn/modules/activation.py#L1153)). To keep hook behavior consistent, I suggest calling those hooks at an appropriate point, perhaps by passing them into `F.multi_head_attention_forward`?
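The mechanism described above can be shown without PyTorch. The toy classes below are hypothetical stand-ins that mimic only one aspect of `nn.Module`: calling a module runs its forward pre-hooks and then `forward`. When the parent reads `out_proj.weight` directly, as the issue describes for `MultiheadAttention`, the submodule is never called, so its hook never runs even though the numerical result is the same.

```python
# Toy stand-ins that mimic only nn.Module's pre-hook dispatch; not PyTorch code.
from typing import Callable, List


class ToyModule:
    def __init__(self) -> None:
        self._forward_pre_hooks: List[Callable] = []

    def register_forward_pre_hook(self, hook: Callable) -> None:
        self._forward_pre_hooks.append(hook)

    def __call__(self, *inputs):
        # Calling the module runs every pre-hook, then forward().
        for hook in self._forward_pre_hooks:
            hook(self, inputs)
        return self.forward(*inputs)

    def forward(self, *inputs):
        raise NotImplementedError


class ToyLinear(ToyModule):
    def __init__(self, weight: float) -> None:
        super().__init__()
        self.weight = weight

    def forward(self, x: float) -> float:
        return self.weight * x


class ToyAttention(ToyModule):
    def __init__(self, call_submodule: bool) -> None:
        super().__init__()
        self.out_proj = ToyLinear(2.0)
        self.call_submodule = call_submodule

    def forward(self, x: float) -> float:
        if self.call_submodule:
            return self.out_proj(x)  # goes through ToyModule.__call__, so hooks run
        return self.out_proj.weight * x  # reads the parameter directly, hooks are skipped


def count_out_proj_pre_hooks(call_submodule: bool) -> int:
    """Return how many times a pre-hook on out_proj fires during one forward pass."""
    calls = []
    attn = ToyAttention(call_submodule)
    attn.out_proj.register_forward_pre_hook(lambda module, inputs: calls.append(module))
    attn(3.0)  # output is 6.0 either way
    return len(calls)


print(count_out_proj_pre_hooks(call_submodule=False))  # 0
print(count_out_proj_pre_hooks(call_submodule=True))   # 1
```

This is why a fix would need to either call `out_proj` as a module or invoke its hooks explicitly; reading the parameter alone never enters the hook dispatch.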

Thanks!

### To Reproduce

```python
import torch
import torch.nn as nn
from functools import partial

test = {}

def hook(name, module, inputs):
    global test
    test[name] = test.get(name, 0) + 1

class net(nn.Module):
    def __init__(self):
        super().__init__()
        self.attn = nn.MultiheadAttention(100, 2)
        self.proj = nn.Linear(100, 10)
        # register hooks
        self.attn.register_forward_pre_hook(partial(hook, "attn"))
        self.attn.out_proj.register_forward_pre_hook(partial(hook, "attn.out_proj"))
        self.proj.register_forward_pre_hook(partial(hook, "proj"))

    def forward(self, x):
        attn_output, _ = self.attn(x, x, x)
        logits = self.proj(attn_output)
        return logits

model = net().eval()
x = torch.randn((16, 50, 100))
logits = model(x)
print(test)
```

### Expected behavior

```bash
{'attn': 1, 'attn.out_proj': 1, 'proj': 1}
```

### Real behavior

```bash
{'attn': 1, 'proj': 1}
```

### Versions


PyTorch version: 1.9.1+cu111

Is debug build: False

CUDA used to build PyTorch: 11.1

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.31

Python version: 3.8.11 (default, Aug 3 2021, 15:09:35) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.0-110-generic-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: NVIDIA GeForce GTX 1080

Nvidia driver version: 470.129.06

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.20.3

[pip3] pytorch-lightning==1.5.9

[pip3] pytorch-ranger==0.1.1

[pip3] torch==1.9.1+cu111

[pip3] torch-optimizer==0.3.0

[pip3] torch-stoi==0.1.2

[pip3] torch-tb-profiler==0.2.1

[pip3] torchaudio==0.9.1

[pip3] torchmetrics==0.7.0

[pip3] torchvision==0.10.1+cu111

[conda] cudatoolkit 10.2.89 hfd86e86_1 defaults

[conda] numpy 1.20.3 py38h9894fe3_1 conda-forge

[conda] pytorch-lightning 1.5.9 pypi_0 pypi

[conda] pytorch-qrnn 0.2.1 pypi_0 pypi

[conda] pytorch-ranger 0.1.1 pypi_0 pypi

[conda] torch 1.9.1+cu111 pypi_0 pypi

[conda] torch-optimizer 0.3.0 pypi_0 pypi

[conda] torch-stoi 0.1.2 pypi_0 pypi

[conda] torch-tb-profiler 0.2.1 pypi_0 pypi

[conda] torchaudio 0.9.1 pypi_0 pypi

[conda] torchmetrics 0.7.0 pypi_0 pypi

[conda] torchvision 0.10.1+cu111 pypi_0 pypi

cc @jbschlosser @bhosmer @cpuhrsch

| 7 |

5,637 | 78,102 |

[ONNX] Support tensors as scale and zero_point arguments

|

module: onnx, triaged, OSS contribution wanted, onnx-triaged

|

### 🚀 The feature, motivation and pitch

PyTorch accepts tensors as the `scale` and `zero_point` arguments of quantization ops such as `quantize_per_tensor`, but the ONNX exporter currently expects them to be constants. Currently, in `test_pytorch_onnx_caffe2_quantized.py`, export fails with:

```
RuntimeError: Expected node type 'onnx::Constant' for argument 'scale' of node 'quantize_per_tensor', got 'prim::Param'.
```

Related:

- https://github.com/pytorch/pytorch/pull/77772

### Alternatives

_No response_

### Additional context

_No response_

| 1 |

5,638 | 78,082 |

RFC: Move functorch into pytorch/pytorch

|

triaged

|

We (some folks from the functorch team, @samdow and @Chillee) met with members of the PyTorch GitHub First and Dev Infra teams (@malfet, @osalpekar, @seemethere) to discuss the pains of co-developing pytorch/pytorch and functorch.

In that meeting, we identified the solution below and the following stakeholders: out-of-tree development (@suo), PyTorch core development (@gchanan), and CI and developer velocity (@suo, @mruberry). We are posting this issue for discussion and to ask the stakeholders for sign-off.

## Motivation 1: internal build failures

pytorch/pytorch and pytorch/functorch are synced internally at different cadences. In practice, this means that, for example, deleting a C++ function in pytorch/pytorch can break the pytorch/functorch build until functorch is fixed, which leads to downstream failures.

## Motivation 2: co-development is difficult

functorch's CI breaks almost daily due to changes to pytorch/pytorch. There are a number of reasons for this:

- a pytorch/pytorch C++ function is deleted or its signature changes (see Motivation 1)

- an operator implementation is updated

- a developer is co-developing a feature between pytorch/pytorch and pytorch/functorch and is unable to atomically commit to both repos

## Proposal: move functorch into pytorch/pytorch

Our proposal is to "cut-and-paste" (with adjustments as necessary) functorch from pytorch/functorch into a top-level folder in pytorch/pytorch named functorch.