modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-09-08 19:17:42) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 549 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-09-08 18:30:19) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

dhyay/mistral_slerp_dpo3k

|

dhyay

| 2024-03-24T22:42:21Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-03-24T22:42:17Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

betterMateusz/llama-2-7b-hf

|

betterMateusz

| 2024-03-24T22:39:54Z | 78 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"bitsandbytes",

"region:us"

] |

text-generation

| 2024-03-24T22:35:58Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

tee-oh-double-dee/social-orientation-multilingual

|

tee-oh-double-dee

| 2024-03-24T22:36:45Z | 106 | 0 |

transformers

|

[

"transformers",

"safetensors",

"xlm-roberta",

"text-classification",

"social-orientation",

"classification",

"multilingual",

"dataset:tee-oh-double-dee/social-orientation",

"arxiv:2403.04770",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2024-03-24T15:14:20Z |

---

library_name: transformers

tags:

- social-orientation

- xlm-roberta

- classification

- multilingual

license: mit

datasets:

- tee-oh-double-dee/social-orientation

metrics:

- accuracy

pipeline_tag: text-classification

widget:

- text: "Speaker 1: These edits are terrible. Please review my comments above again.</s>Speaker 2: I reviewed your comments, which were not helpful. Roll up your sleeves and do some work."

---

# Model Card for the Social Orientation Tagger

This multilingual social orientation tagger is an [XLM-RoBERTa](https://huggingface.co/FacebookAI/xlm-roberta-base) base model trained on the [Conversations Gone Awry](https://convokit.cornell.edu/documentation/awry.html) (CGA) dataset with [social orientation labels](https://huggingface.co/datasets/tee-oh-double-dee/social-orientation) collected using GPT-4. This model can be used to predict social orientation labels for new conversations. See the example usage below, or our GitHub repo for more extensive examples: [examples/single_prediction.py](https://github.com/ToddMorrill/social-orientation/blob/master/examples/single_prediction.py) or [examples/evaluate.py](https://github.com/ToddMorrill/social-orientation/blob/master/examples/evaluate.py).

See the **English version** of this model here: [tee-oh-double-dee/social-orientation](https://huggingface.co/tee-oh-double-dee/social-orientation)

This model was created as part of the work described in [Social Orientation: A New Feature for Dialogue Analysis](https://arxiv.org/abs/2403.04770), which was accepted to LREC-COLING 2024.


## Usage

You can make direct use of this social orientation tagger as follows:

```python
import pprint

import torch
from transformers import AutoModelForSequenceClassification, AutoTokenizer

sample_input = 'Speaker 1: This is really terrific work!'

# Load the fine-tuned classifier and its tokenizer from the Hub.
model = AutoModelForSequenceClassification.from_pretrained('tee-oh-double-dee/social-orientation-multilingual')
model.eval()
tokenizer = AutoTokenizer.from_pretrained('tee-oh-double-dee/social-orientation-multilingual')

model_input = tokenizer(sample_input, return_tensors='pt')
# Inference only, so gradient tracking is not needed.
with torch.no_grad():
    output = model(**model_input)
output_probs = output.logits.softmax(dim=1)

# Map each class index to its label and predicted probability.
id2label = model.config.id2label
pred_dict = {
    id2label[i]: output_probs[0][i].item()
    for i in range(len(id2label))
}
pprint.pprint(pred_dict)
```
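The widget example in this card's metadata joins the previous and the current utterance with the `</s>` separator, which matches the two-utterance window described under Training Procedure. Below is a minimal sketch of building such an input, assuming the same format applies at inference time. `build_window_input` is a hypothetical helper for illustration and is not part of the released code.

```python
def build_window_input(utterances, index, sep='</s>'):
    """Join the previous and current utterance into one model input string.

    `utterances` is a list of (speaker, text) pairs and `index` selects the
    utterance to tag. The '</s>' separator is assumed from the widget example.
    """
    start = max(0, index - 1)  # window of two: previous + current utterance
    window = utterances[start:index + 1]
    return sep.join(f'{speaker}: {text}' for speaker, text in window)


conversation = [
    ('Speaker 1', 'These edits are terrible. Please review my comments above again.'),
    ('Speaker 2', 'I reviewed your comments, which were not helpful.'),
]
first_input = build_window_input(conversation, 0)   # no previous utterance
second_input = build_window_input(conversation, 1)  # previous + current
print(second_input)
```

The resulting string can be passed to the tokenizer in place of `sample_input` above.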

### Downstream Use

Predicted social orientation tags can be prepended to dialog utterances to assist downstream models. For instance, you could convert

```

Speaker 1: This is really terrific work!

```

to

```

Speaker 1 (Gregarious-Extraverted): This is really terrific work!

```

and then feed these new utterances to a model that predicts if a conversation will succeed or fail. We showed the effectiveness of this strategy in our [paper](https://arxiv.org/abs/2403.04770).
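The tag-prepending step can be sketched as follows. This is a minimal illustration rather than the pipeline used in the paper; `prepend_tag` is a hypothetical helper and assumes utterances of the form `Speaker N: text`.

```python
def prepend_tag(utterance, tag):
    """Insert a predicted social orientation tag after the speaker name."""
    speaker, sep, text = utterance.partition(': ')
    if not sep:
        # No 'Speaker: ' prefix found, so leave the utterance unchanged.
        return utterance
    return f'{speaker} ({tag}): {text}'


tagged = prepend_tag('Speaker 1: This is really terrific work!', 'Gregarious-Extraverted')
print(tagged)
```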

## Model Details

### Model Description

There are many settings where it is useful to predict and explain the success or failure of a dialogue. Circumplex theory from psychology models the social orientations (e.g., Warm-Agreeable, Arrogant-Calculating) of conversation participants, which can in turn be used to predict and explain the outcome of social interactions, such as in online debates over Wikipedia page edits or on the Reddit ChangeMyView forum. This model enables social orientation tagging of dialog utterances.

The prediction set includes: {Assured-Dominant, Gregarious-Extraverted, Warm-Agreeable, Unassuming-Ingenuous, Unassured-Submissive, Aloof-Introverted, Cold, Arrogant-Calculating, Not Available}

- **Developed by:** Todd Morrill

- **Funded by [optional]:** DARPA

- **Model type:** classification model

- **Language(s) (NLP):** Multilingual

- **Finetuned from model [optional]:** [XLM-RoBERTa](https://huggingface.co/FacebookAI/xlm-roberta-base) base model

### Model Sources

- **Repository:** [GitHub repository](https://github.com/ToddMorrill/social-orientation)

- **Paper [optional]:** [Social Orientation: A New Feature for Dialogue Analysis](https://arxiv.org/abs/2403.04770)

## Training Details

### Training Data

See [tee-oh-double-dee/social-orientation](https://huggingface.co/datasets/tee-oh-double-dee/social-orientation) for details on the training dataset.

### Training Procedure

We initialize our social orientation tagger weights from the [XLM-RoBERTa](https://huggingface.co/FacebookAI/xlm-roberta-base) base pre-trained checkpoint from Hugging Face. We use the following hyperparameter settings: a batch size of 32 and a learning rate of 1e-6. We include speaker names before each utterance, train in 16-bit floating point representation, and use a window size of two utterances (i.e., we use the previous utterance's text and the current utterance's text to predict the current utterance's social orientation tag). We also use a weighted loss function to address class imbalance and improve prediction set diversity. The weight \\(w'_c\\) assigned to each class \\(c\\) is defined by

$$

w'_c = C \cdot \frac{w_c}{\sum_{c'=1}^C w_{c'}}

$$

where \\(w_c = \frac{N}{N_c}\\), \\(N\\) denotes the number of examples in the training set, \\(N_c\\) denotes the number of examples in class \\(c\\) in the training set, and \\(C\\) is the number of classes. In our case, \\(C=9\\), including the `Not Available` class, which is used for all empty utterances.
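The class-weight formula above can be sketched in plain Python. This is an illustrative computation on toy labels, not the training code. `class_weights` is a hypothetical helper, and it assumes every class appears at least once, so \\(C\\) is taken as the number of observed classes.

```python
from collections import Counter


def class_weights(labels):
    """Compute w'_c = C * w_c / sum over classes of w_c, with w_c = N / N_c."""
    counts = Counter(labels)                          # N_c for each class
    n = len(labels)                                   # N
    raw = {c: n / n_c for c, n_c in counts.items()}   # w_c
    total = sum(raw.values())
    num_classes = len(counts)                         # C (observed classes only)
    return {c: num_classes * w / total for c, w in raw.items()}


# Toy label distribution, not taken from the training set.
toy_labels = ['Warm-Agreeable'] * 6 + ['Cold'] * 3 + ['Not Available'] * 1
weights = class_weights(toy_labels)
print(weights)
```

By construction the normalized weights sum to \\(C\\), so the average weight is 1 and rarer classes receive proportionally larger weights.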

## Evaluation

We evaluate accuracy at the individual utterance level and report the following results:

| Split | Accuracy |

|---|---|

| Train | 39.21% |

| Validation | 35.04% |

| Test | 37.25% |

Without loss weighting, it is possible to achieve an accuracy of 45%.

## Citation

**BibTeX:**

```

@misc{morrill2024social,

title={Social Orientation: A New Feature for Dialogue Analysis},

author={Todd Morrill and Zhaoyuan Deng and Yanda Chen and Amith Ananthram and Colin Wayne Leach and Kathleen McKeown},

year={2024},

eprint={2403.04770},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

JasperGrant/ASTBERT-cb-25k-methods

|

JasperGrant

| 2024-03-24T22:32:23Z | 71 | 0 |

transformers

|

[

"transformers",

"tf",

"bert",

"fill-mask",

"generated_from_keras_callback",

"base_model:microsoft/codebert-base-mlm",

"base_model:finetune:microsoft/codebert-base-mlm",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2024-03-24T22:18:44Z |

---

base_model: microsoft/codebert-base-mlm

tags:

- generated_from_keras_callback

model-index:

- name: ASTcodeBERT-mlm

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# ASTcodeBERT-mlm

This model is a fine-tuned version of [microsoft/codebert-base-mlm](https://huggingface.co/microsoft/codebert-base-mlm) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0771

- Train Accuracy: 0.9810

- Epoch: 9

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': 1e-04, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Train Accuracy | Epoch |

|:----------:|:--------------:|:-----:|

| 0.6706 | 0.9211 | 0 |

| 0.3946 | 0.9394 | 1 |

| 0.3184 | 0.9457 | 2 |

| 0.2629 | 0.9513 | 3 |

| 0.2173 | 0.9566 | 4 |

| 0.1694 | 0.9633 | 5 |

| 0.1439 | 0.9673 | 6 |

| 0.1158 | 0.9726 | 7 |

| 0.0928 | 0.9774 | 8 |

| 0.0771 | 0.9810 | 9 |

### Framework versions

- Transformers 4.31.0

- TensorFlow 2.10.0

- Datasets 2.18.0

- Tokenizers 0.13.3

|

rajevan123/STS-Lora-Fine-Tuning-Capstone-bert-testing-23-with-lower-r-mid

|

rajevan123

| 2024-03-24T22:32:19Z | 2 | 0 |

peft

|

[

"peft",

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:dslim/bert-base-NER",

"base_model:adapter:dslim/bert-base-NER",

"license:mit",

"region:us"

] | null | 2024-03-24T21:55:36Z |

---

license: mit

library_name: peft

tags:

- generated_from_trainer

metrics:

- accuracy

base_model: dslim/bert-base-NER

model-index:

- name: STS-Lora-Fine-Tuning-Capstone-bert-testing-23-with-lower-r-mid

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# STS-Lora-Fine-Tuning-Capstone-bert-testing-23-with-lower-r-mid

This model is a fine-tuned version of [dslim/bert-base-NER](https://huggingface.co/dslim/bert-base-NER) on an unspecified dataset.

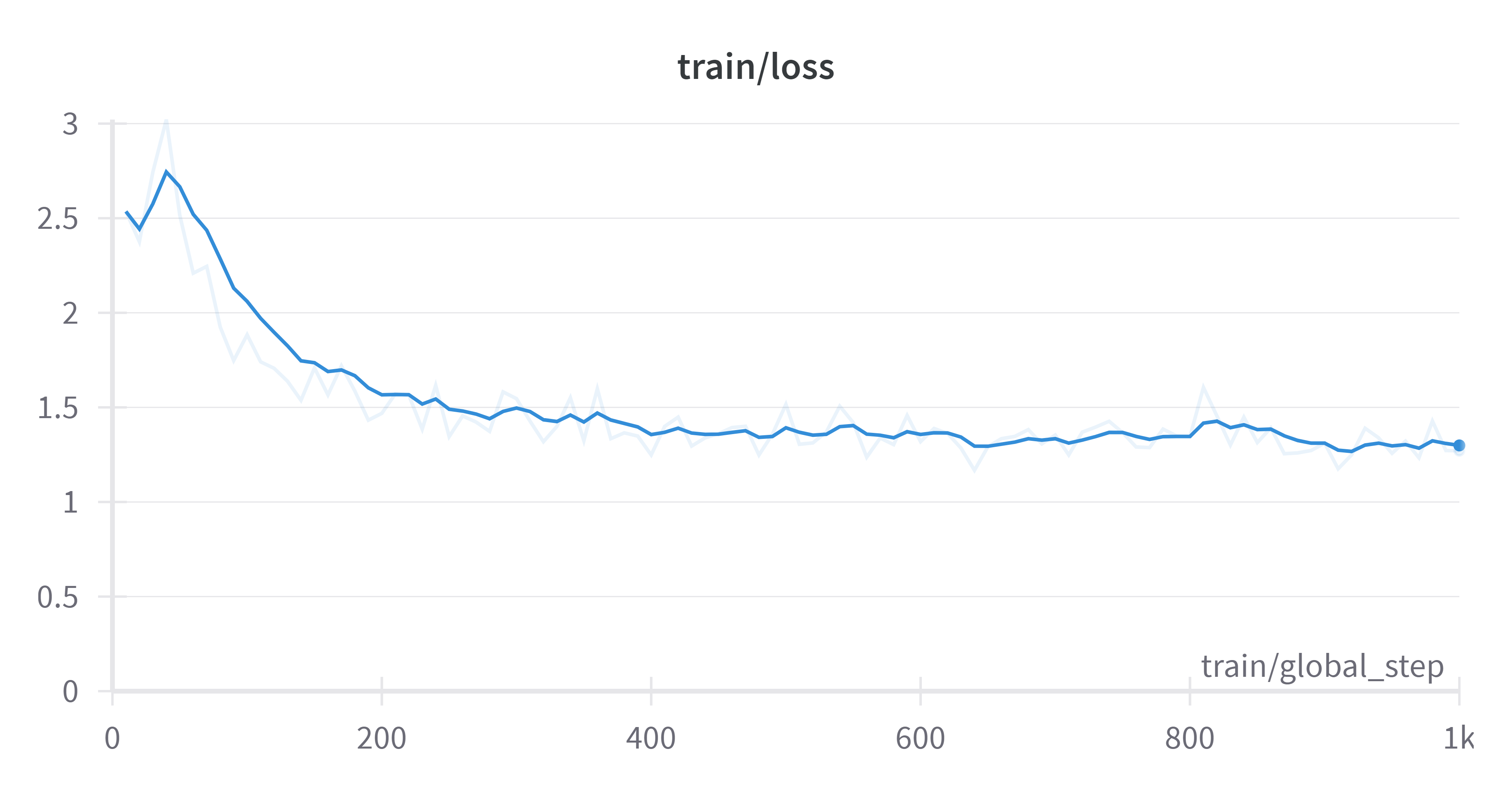

It achieves the following results on the evaluation set:

- Loss: 1.3610

- Accuracy: 0.4300

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 40

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 180 | 1.7491 | 0.2429 |

| No log | 2.0 | 360 | 1.7395 | 0.2451 |

| 1.7055 | 3.0 | 540 | 1.7242 | 0.2451 |

| 1.7055 | 4.0 | 720 | 1.6937 | 0.2980 |

| 1.7055 | 5.0 | 900 | 1.6446 | 0.3038 |

| 1.6419 | 6.0 | 1080 | 1.6173 | 0.3176 |

| 1.6419 | 7.0 | 1260 | 1.5638 | 0.3401 |

| 1.6419 | 8.0 | 1440 | 1.5355 | 0.3524 |

| 1.5258 | 9.0 | 1620 | 1.5112 | 0.3590 |

| 1.5258 | 10.0 | 1800 | 1.4870 | 0.3742 |

| 1.5258 | 11.0 | 1980 | 1.4729 | 0.3749 |

| 1.4424 | 12.0 | 2160 | 1.4664 | 0.3938 |

| 1.4424 | 13.0 | 2340 | 1.4524 | 0.4003 |

| 1.4002 | 14.0 | 2520 | 1.4390 | 0.4061 |

| 1.4002 | 15.0 | 2700 | 1.4317 | 0.4090 |

| 1.4002 | 16.0 | 2880 | 1.4241 | 0.4155 |

| 1.376 | 17.0 | 3060 | 1.4201 | 0.4148 |

| 1.376 | 18.0 | 3240 | 1.4069 | 0.4083 |

| 1.376 | 19.0 | 3420 | 1.4000 | 0.4184 |

| 1.3533 | 20.0 | 3600 | 1.3978 | 0.4235 |

| 1.3533 | 21.0 | 3780 | 1.3929 | 0.4329 |

| 1.3533 | 22.0 | 3960 | 1.3896 | 0.4329 |

| 1.3336 | 23.0 | 4140 | 1.3856 | 0.4264 |

| 1.3336 | 24.0 | 4320 | 1.3833 | 0.4322 |

| 1.3254 | 25.0 | 4500 | 1.3787 | 0.4235 |

| 1.3254 | 26.0 | 4680 | 1.3744 | 0.4329 |

| 1.3254 | 27.0 | 4860 | 1.3751 | 0.4300 |

| 1.3082 | 28.0 | 5040 | 1.3720 | 0.4336 |

| 1.3082 | 29.0 | 5220 | 1.3687 | 0.4300 |

| 1.3082 | 30.0 | 5400 | 1.3674 | 0.4293 |

| 1.3105 | 31.0 | 5580 | 1.3663 | 0.4373 |

| 1.3105 | 32.0 | 5760 | 1.3643 | 0.4351 |

| 1.3105 | 33.0 | 5940 | 1.3630 | 0.4271 |

| 1.295 | 34.0 | 6120 | 1.3628 | 0.4322 |

| 1.295 | 35.0 | 6300 | 1.3625 | 0.4300 |

| 1.295 | 36.0 | 6480 | 1.3623 | 0.4307 |

| 1.2919 | 37.0 | 6660 | 1.3617 | 0.4322 |

| 1.2919 | 38.0 | 6840 | 1.3613 | 0.4315 |

| 1.2905 | 39.0 | 7020 | 1.3610 | 0.4300 |

| 1.2905 | 40.0 | 7200 | 1.3610 | 0.4300 |
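The step counts in the table can be cross-checked against the listed hyperparameters. The sketch below is a worked arithmetic example, assuming a single device, no gradient accumulation, a kept final partial batch, and zero warmup steps; none of these are stated in the card. `linear_lr` is a hypothetical helper that mirrors a linear decay schedule.

```python
train_batch_size = 32
steps_per_epoch = 180   # step count after epoch 1.0 in the table
num_epochs = 40
learning_rate = 3e-05

# Total optimizer steps over the run; the table ends at step 7200.
total_steps = steps_per_epoch * num_epochs

# 180 steps per epoch at batch size 32 bounds the training set size.
max_examples = steps_per_epoch * train_batch_size
min_examples = (steps_per_epoch - 1) * train_batch_size + 1


def linear_lr(step, base_lr=learning_rate, total=total_steps):
    """Linear decay from base_lr to 0 over `total` steps (zero warmup assumed)."""
    return base_lr * max(0.0, (total - step) / total)


print(total_steps, min_examples, max_examples)
print(linear_lr(3600))
```

Under these assumptions the training set holds between 5,729 and 5,760 examples, and the learning rate falls to half its initial value at step 3600.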

### Framework versions

- PEFT 0.10.0

- Transformers 4.38.2

- Pytorch 2.2.1+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

dhyay/mistral_dpo3k

|

dhyay

| 2024-03-24T22:31:32Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-03-24T03:45:09Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

gotchachurchkhela/SN6-20

|

gotchachurchkhela

| 2024-03-24T22:23:43Z | 4 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-03-24T22:18:49Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

Kukedlc/NeuralMergeTest-003

|

Kukedlc

| 2024-03-24T22:20:07Z | 5 | 0 |

transformers

|

[

"transformers",

"safetensors",

"mistral",

"text-generation",

"merge",

"mergekit",

"lazymergekit",

"chihoonlee10/T3Q-Mistral-Orca-Math-DPO",

"automerger/OgnoExperiment27-7B",

"base_model:automerger/OgnoExperiment27-7B",

"base_model:merge:automerger/OgnoExperiment27-7B",

"base_model:chihoonlee10/T3Q-Mistral-Orca-Math-DPO",

"base_model:merge:chihoonlee10/T3Q-Mistral-Orca-Math-DPO",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-03-24T22:15:20Z |

---

tags:

- merge

- mergekit

- lazymergekit

- chihoonlee10/T3Q-Mistral-Orca-Math-DPO

- automerger/OgnoExperiment27-7B

base_model:

- chihoonlee10/T3Q-Mistral-Orca-Math-DPO

- automerger/OgnoExperiment27-7B

---

# NeuralMergeTest-003

NeuralMergeTest-003 is a merge of the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [chihoonlee10/T3Q-Mistral-Orca-Math-DPO](https://huggingface.co/chihoonlee10/T3Q-Mistral-Orca-Math-DPO)

* [automerger/OgnoExperiment27-7B](https://huggingface.co/automerger/OgnoExperiment27-7B)

## 🧩 Configuration

```yaml
slices:
  - sources:
      - model: chihoonlee10/T3Q-Mistral-Orca-Math-DPO
        layer_range: [0, 32]
      - model: automerger/OgnoExperiment27-7B
        layer_range: [0, 32]
merge_method: slerp
base_model: chihoonlee10/T3Q-Mistral-Orca-Math-DPO
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16
```
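The `slerp` merge method named in the configuration interpolates along the arc between two weight vectors rather than along the straight line between them. The following is a minimal sketch in plain Python, assuming flat lists of floats stand in for weight tensors. The function name `slerp`, the `eps` guard, and the colinearity threshold are illustrative choices, not taken from mergekit's source.

```python
import math

def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two equal-length vectors.

    t=0 returns v0 and t=1 returns v1. Falls back to plain linear
    interpolation when the vectors are (nearly) colinear.
    """
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the two vectors, clamped for acos
    dot = sum(a * b for a, b in zip(v0, v1)) / max(norm0 * norm1, eps)
    dot = max(-1.0, min(1.0, dot))
    if abs(dot) > 1.0 - 1e-6:
        # Nearly parallel: the arc degenerates, so interpolate linearly
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]

# Halfway between two orthogonal unit vectors stays on the unit circle
mid = slerp(0.5, [1.0, 0.0], [0.0, 1.0])
```

In a real merge the same interpolation would be applied tensor by tensor, with `t` chosen per layer and per module type as the `parameters.t` block describes.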

## 💻 Usage

```python
# In a notebook, install the dependencies first:
#   !pip install -qU transformers accelerate

from transformers import AutoTokenizer
import transformers
import torch

model = "Kukedlc/NeuralMergeTest-003"
messages = [{"role": "user", "content": "What is a large language model?"}]

# Format the conversation with the model's chat template
tokenizer = AutoTokenizer.from_pretrained(model)
prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

# Build a text-generation pipeline, placing weights on the available devices
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    torch_dtype=torch.float16,
    device_map="auto",
)

# Sample up to 256 new tokens and print the generated text
outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)
print(outputs[0]["generated_text"])
```

|

pawkanarek/gemmatron2

|

pawkanarek

| 2024-03-24T22:18:19Z | 139 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gemma",

"text-generation",

"conversational",

"base_model:google/gemma-2b-it",

"base_model:finetune:google/gemma-2b-it",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-03-24T22:16:08Z |

---

license: other

base_model: google/gemma-2b-it

model-index:

- name: gemmatron2

results: []

---

This model is a fine-tuned version of [google/gemma-2b-it](https://huggingface.co/google/gemma-2b-it).

|

jgkym/colbert-in-domain

|

jgkym

| 2024-03-24T22:18:03Z | 34 | 0 |

transformers

|

[

"transformers",

"safetensors",

"bert",

"endpoints_compatible",

"region:us"

] | null | 2024-03-23T00:44:44Z |

A retriever for content related to the Beotimmok jeonse fund loan (버팀목전세자금대출).

|

flammenai/flammen12-mistral-7B

|

flammenai

| 2024-03-24T22:10:25Z | 9 | 1 |

transformers

|

[

"transformers",

"safetensors",

"mistral",

"text-generation",

"mergekit",

"merge",

"base_model:flammenai/flammen11X-mistral-7B",

"base_model:merge:flammenai/flammen11X-mistral-7B",

"base_model:nbeerbower/bruphin-iota",

"base_model:merge:nbeerbower/bruphin-iota",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-03-24T22:07:18Z |

---

license: apache-2.0

base_model:

- nbeerbower/bruphin-iota

- nbeerbower/flammen11X-mistral-7B

library_name: transformers

tags:

- mergekit

- merge

---

# flammen12-mistral-7B

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the SLERP merge method.

### Models Merged

The following models were included in the merge:

* [nbeerbower/bruphin-iota](https://huggingface.co/nbeerbower/bruphin-iota)

* [nbeerbower/flammen11X-mistral-7B](https://huggingface.co/nbeerbower/flammen11X-mistral-7B)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: nbeerbower/flammen11X-mistral-7B
    layer_range: [0, 32]
  - model: nbeerbower/bruphin-iota
    layer_range: [0, 32]
merge_method: slerp
base_model: nbeerbower/flammen11X-mistral-7B
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16
```
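The list-valued `t` entries in the configuration read as gradients: a few anchor values spread across the 32 layers. The sketch below shows one plausible reading, assuming the anchors are evenly spaced over the layer range and linearly interpolated in between, and assuming `t = 0` corresponds to the base model. The helper name `gradient_value` is hypothetical and this is not mergekit's actual code.

```python
def gradient_value(anchors, layer_idx, num_layers):
    """Linearly interpolate a list of anchor values across layer indices."""
    if num_layers <= 1 or len(anchors) == 1:
        return float(anchors[0])
    # Map the layer index to a fractional position in anchor-index space
    pos = layer_idx / (num_layers - 1) * (len(anchors) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(anchors) - 1)
    frac = pos - lo
    return (1 - frac) * anchors[lo] + frac * anchors[hi]

# Per-layer interpolation factors for the two filters in the config
self_attn_t = [gradient_value([0, 0.5, 0.3, 0.7, 1], i, 32) for i in range(32)]
mlp_t = [gradient_value([1, 0.5, 0.7, 0.3, 0], i, 32) for i in range(32)]
```

Under these assumptions the two schedules mirror each other: wherever the attention weights lean toward one model, the MLP weights lean toward the other, and all remaining tensors use the constant `0.5`.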

|

B2111797/recipe_v1_lr1e-4_wu200_epo2

|

B2111797

| 2024-03-24T22:06:30Z | 140 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gpt2",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-03-24T17:39:01Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

therealchefdave/llama-2-slerp

|

therealchefdave

| 2024-03-24T22:05:00Z | 5 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"mergekit",

"merge",

"arxiv:2306.01708",

"base_model:NousResearch/Llama-2-7b-chat-hf",

"base_model:merge:NousResearch/Llama-2-7b-chat-hf",

"base_model:georgesung/llama2_7b_chat_uncensored",

"base_model:merge:georgesung/llama2_7b_chat_uncensored",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-03-24T19:50:18Z |

---

base_model:

- georgesung/llama2_7b_chat_uncensored

- NousResearch/Llama-2-7b-chat-hf

library_name: transformers

tags:

- mergekit

- merge

---

# LlamaKinda

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [TIES](https://arxiv.org/abs/2306.01708) merge method, with [NousResearch/Llama-2-7b-chat-hf](https://huggingface.co/NousResearch/Llama-2-7b-chat-hf) as the base.

### Models Merged

The following models were included in the merge:

* [georgesung/llama2_7b_chat_uncensored](https://huggingface.co/georgesung/llama2_7b_chat_uncensored)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: georgesung/llama2_7b_chat_uncensored
    parameters:
      density: [1, 0.7, 0.1] # density gradient
      weight: 1.0
  - model: NousResearch/Llama-2-7b-chat-hf
    parameters:
      density: 0.5
      weight: [0, 0.3, 0.7, 1] # weight gradient
merge_method: ties
base_model: NousResearch/Llama-2-7b-chat-hf
parameters:
  normalize: true
  int8_mask: true
dtype: bfloat16
```
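The `density`, `weight`, and `normalize` settings map onto the three steps of TIES merging: trim each task vector, elect a sign per parameter, and average only the values that agree with it. The following is a simplified sketch in plain Python, assuming flat lists of floats in place of tensors and one scalar density and weight per model instead of the per-layer gradients in the config. The function names `trim` and `ties_merge` are illustrative and not mergekit's API.

```python
def trim(delta, density):
    """Keep the largest-magnitude fraction `density` of entries, zero the rest."""
    k = int(round(density * len(delta)))
    if k <= 0:
        return [0.0] * len(delta)
    order = sorted(range(len(delta)), key=lambda i: abs(delta[i]), reverse=True)
    keep = set(order[:k])
    return [d if i in keep else 0.0 for i, d in enumerate(delta)]

def ties_merge(base, finetuned, densities, weights):
    # 1. Task vectors: difference between each fine-tuned model and the base
    deltas = [[f - b for f, b in zip(model, base)] for model in finetuned]
    # 2. Trim each task vector to its density
    deltas = [trim(delta, dens) for delta, dens in zip(deltas, densities)]
    merged = []
    for j, b in enumerate(base):
        column = [(w, delta[j]) for w, delta in zip(weights, deltas)]
        # 3. Elect the sign carrying the larger weighted mass
        total = sum(w * d for w, d in column)
        sign = 1.0 if total >= 0 else -1.0
        # 4. Weighted average of the entries agreeing with the elected sign
        agreeing = [(w, d) for w, d in column if w > 0 and d * sign > 0]
        if agreeing:
            update = sum(w * d for w, d in agreeing) / sum(w for w, _ in agreeing)
        else:
            update = 0.0
        merged.append(b + update)
    return merged

# Toy example: two "models" with four parameters each, on a zero base
base = [0.0, 0.0, 0.0, 0.0]
model_a = [1.0, -2.0, 0.1, 0.0]
model_b = [0.5, 3.0, -0.2, 0.0]
merged = ties_merge(base, [model_a, model_b], densities=[0.5, 0.5], weights=[1.0, 1.0])
```

In the toy example the second parameter shows the sign election at work: the models disagree (`-2.0` versus `3.0`), the positive side wins, and only `3.0` contributes to the merged value.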

|

gonzalezrostani/my_awesome_wnut_all_JGTg

|

gonzalezrostani

| 2024-03-24T22:02:33Z | 110 | 0 |

transformers

|

[

"transformers",

"safetensors",

"distilbert",

"token-classification",

"generated_from_trainer",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2024-03-24T21:39:05Z |

---

license: apache-2.0

base_model: distilbert/distilbert-base-uncased

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: my_awesome_wnut_all_JGTg

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_awesome_wnut_all_JGTg

This model is a fine-tuned version of [distilbert/distilbert-base-uncased](https://huggingface.co/distilbert/distilbert-base-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0394

- Precision: 0.5149

- Recall: 0.4094

- F1: 0.4561

- Accuracy: 0.9907
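The reported F1 can be checked against the precision and recall above, since F1 is their harmonic mean. A short worked example follows; the helper name `f1_score` is illustrative, and the inputs are the rounded values from this card.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

f1 = f1_score(0.5149, 0.4094)
print(round(f1, 4))  # 0.4561, matching the reported F1
```

The gap between the high accuracy and the much lower F1 is typical of token classification, where most tokens likely belong to the majority "no entity" class and inflate accuracy.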

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4
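With `lr_scheduler_type: linear`, the learning rate decays from its initial value to zero over the whole run. The sketch below reproduces that shape in plain Python, assuming no warmup steps (the card lists none) and 251 optimizer steps per epoch, as the training results table reports. The function name `linear_lr` is hypothetical.

```python
def linear_lr(step, total_steps, base_lr=2e-05, warmup_steps=0):
    """Linear warmup (if any) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)

# 4 epochs at 251 steps per epoch
total_steps = 251 * 4
# Learning rate at the start of training and at each epoch boundary
lr_by_epoch = [linear_lr(251 * epoch, total_steps) for epoch in range(5)]
```

Under these assumptions the rate is `2e-05` at step 0, half that after two epochs, and zero at step 1004.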

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| No log | 1.0 | 251 | 0.0342 | 0.4082 | 0.3150 | 0.3556 | 0.9893 |
| 0.0405 | 2.0 | 502 | 0.0365 | 0.5116 | 0.3465 | 0.4131 | 0.9906 |
| 0.0405 | 3.0 | 753 | 0.0354 | 0.5 | 0.4016 | 0.4454 | 0.9905 |
| 0.0136 | 4.0 | 1004 | 0.0394 | 0.5149 | 0.4094 | 0.4561 | 0.9907 |

### Framework versions

- Transformers 4.38.2

- Pytorch 2.2.1+cpu

- Datasets 2.18.0

- Tokenizers 0.15.2

|

balakhonoff/solidity_security_model_merged

|

balakhonoff

| 2024-03-24T21:59:31Z | 5 | 0 |

transformers

|

[

"transformers",

"safetensors",

"mistral",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"8-bit",

"region:us"

] |

text-generation

| 2024-03-24T21:14:41Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

rajevan123/STS-Lora-Fine-Tuning-Capstone-bert-testing-22-with-lower-r

|

rajevan123

| 2024-03-24T21:49:16Z | 6 | 0 |

peft

|

[

"peft",

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:dslim/bert-base-NER",

"base_model:adapter:dslim/bert-base-NER",

"license:mit",

"region:us"

] | null | 2024-03-24T21:30:52Z |

---

license: mit

library_name: peft

tags:

- generated_from_trainer

metrics:

- accuracy

base_model: dslim/bert-base-NER

model-index:

- name: STS-Lora-Fine-Tuning-Capstone-bert-testing-22-with-lower-r

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# STS-Lora-Fine-Tuning-Capstone-bert-testing-22-with-lower-r

This model is a fine-tuned version of [dslim/bert-base-NER](https://huggingface.co/dslim/bert-base-NER) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4650

- Accuracy: 0.3843

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| No log | 1.0 | 180 | 1.7491 | 0.2429 |
| No log | 2.0 | 360 | 1.7398 | 0.2451 |
| 1.7057 | 3.0 | 540 | 1.7266 | 0.2408 |
| 1.7057 | 4.0 | 720 | 1.6996 | 0.2922 |
| 1.7057 | 5.0 | 900 | 1.6538 | 0.2988 |
| 1.6492 | 6.0 | 1080 | 1.6283 | 0.3118 |
| 1.6492 | 7.0 | 1260 | 1.5879 | 0.3270 |
| 1.6492 | 8.0 | 1440 | 1.5578 | 0.3387 |
| 1.5479 | 9.0 | 1620 | 1.5355 | 0.3503 |
| 1.5479 | 10.0 | 1800 | 1.5148 | 0.3561 |
| 1.5479 | 11.0 | 1980 | 1.5062 | 0.3561 |
| 1.4735 | 12.0 | 2160 | 1.5005 | 0.3691 |
| 1.4735 | 13.0 | 2340 | 1.4876 | 0.3843 |
| 1.437 | 14.0 | 2520 | 1.4799 | 0.3800 |
| 1.437 | 15.0 | 2700 | 1.4768 | 0.3785 |
| 1.437 | 16.0 | 2880 | 1.4732 | 0.3851 |
| 1.4223 | 17.0 | 3060 | 1.4689 | 0.3800 |
| 1.4223 | 18.0 | 3240 | 1.4684 | 0.3822 |
| 1.4223 | 19.0 | 3420 | 1.4657 | 0.3822 |
| 1.4123 | 20.0 | 3600 | 1.4650 | 0.3843 |
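The final checkpoint is not necessarily the best one by every metric. As a small illustration, the snippet below picks the best epoch by validation loss and by accuracy from the table above. The values are copied from the table, and the variable names are illustrative.

```python
# (epoch, validation_loss, accuracy) rows copied from the training results table
history = [
    (1, 1.7491, 0.2429), (2, 1.7398, 0.2451), (3, 1.7266, 0.2408),
    (4, 1.6996, 0.2922), (5, 1.6538, 0.2988), (6, 1.6283, 0.3118),
    (7, 1.5879, 0.3270), (8, 1.5578, 0.3387), (9, 1.5355, 0.3503),
    (10, 1.5148, 0.3561), (11, 1.5062, 0.3561), (12, 1.5005, 0.3691),
    (13, 1.4876, 0.3843), (14, 1.4799, 0.3800), (15, 1.4768, 0.3785),
    (16, 1.4732, 0.3851), (17, 1.4689, 0.3800), (18, 1.4684, 0.3822),
    (19, 1.4657, 0.3822), (20, 1.4650, 0.3843),
]

# Lowest validation loss and highest accuracy may come from different epochs
best_by_loss = min(history, key=lambda row: row[1])
best_by_accuracy = max(history, key=lambda row: row[2])
```

Here validation loss is lowest at epoch 20, while accuracy peaks slightly earlier at epoch 16 (0.3851 versus 0.3843 at the end), so the choice of checkpoint depends on which metric matters.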

### Framework versions

- PEFT 0.10.0

- Transformers 4.38.2

- Pytorch 2.2.1+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

sosoai/hansoldeco-interior-defective-model-13.6B-v0.1-mlx

|

sosoai

| 2024-03-24T21:45:39Z | 5 | 0 |

mlx

|

[

"mlx",

"safetensors",

"mistral",

"en",

"ko",

"license:apache-2.0",

"region:us"

] | null | 2024-03-24T20:43:27Z |

---

language:

- en

- ko

license: apache-2.0

tags:

- mlx

---

# sosoai/hansoldeco-interior-defective-model-13.6B-v0.1-mlx

This model was converted to MLX format from [`sosoai/hansoldeco-interior-defective-model-13.6B-v0.1`](https://huggingface.co/sosoai/hansoldeco-interior-defective-model-13.6B-v0.1).

Refer to the [original model card](https://huggingface.co/sosoai/hansoldeco-interior-defective-model-13.6B-v0.1) for more details on the model.

## Use with mlx

```bash
pip install mlx-lm
```

```python
from mlx_lm import load, generate

model, tokenizer = load("sosoai/hansoldeco-interior-defective-model-13.6B-v0.1-mlx")
response = generate(model, tokenizer, prompt="hello", verbose=True)
```

|

readingrocket/clip-vit-base-patch32-002

|

readingrocket

| 2024-03-24T21:39:36Z | 0 | 0 |

transformers

|

[

"transformers",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-03-24T21:39:35Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

javijer/lora-phi2-alpaca

|

javijer

| 2024-03-24T21:34:54Z | 56 | 0 |

transformers

|

[

"transformers",

"safetensors",

"phi",

"text-generation",

"custom_code",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-03-24T21:01:09Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases, and limitations of the model. More information is needed before further recommendations can be made.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

qwp4w3hyb/Cerebrum-1.0-8x7b-iMat-GGUF

|

qwp4w3hyb

| 2024-03-24T21:33:44Z | 5 | 0 | null |

[

"gguf",

"Mixtral",

"instruct",

"finetune",

"imatrix",

"base_model:AetherResearch/Cerebrum-1.0-8x7b",

"base_model:quantized:AetherResearch/Cerebrum-1.0-8x7b",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2024-03-24T18:45:31Z |

---

base_model: AetherResearch/Cerebrum-1.0-8x7b

tags:

- Mixtral

- instruct

- finetune

- imatrix

model-index:

- name: Cerebrum-1.0-8x7b-iMat-GGUF

results: []

license: apache-2.0

---

# Cerebrum-1.0-8x7b-iMat-GGUF

Source Model: [AetherResearch/Cerebrum-1.0-8x7b](https://huggingface.co/AetherResearch/Cerebrum-1.0-8x7b)

Quantized with [llama.cpp](https://github.com/ggerganov/llama.cpp) commit [46acb3676718b983157058aecf729a2064fc7d34](https://github.com/ggerganov/llama.cpp/commit/46acb3676718b983157058aecf729a2064fc7d34)

Imatrix was generated from the f16 gguf via this command:

`./imatrix -c 512 -m $out_path/$base_quant_name -f $llama_cpp_path/groups_merged.txt -o $out_path/imat-f16-gmerged.dat`

The calibration text, `groups_merged.txt`, is the dataset from [this llama.cpp discussion comment](https://github.com/ggerganov/llama.cpp/discussions/5263#discussioncomment-8395384).
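To make the pieces of the imatrix command easier to see, here is a minimal Python sketch that assembles the same argument list from its three shell variables. The helper name `build_imatrix_command` and the example paths are hypothetical and not part of the original card. The flag descriptions in the comments reflect my reading of the llama.cpp `imatrix` tool and should be checked against the commit linked above.

```python
import shlex
from pathlib import PurePosixPath


def build_imatrix_command(out_path, base_quant_name, llama_cpp_path, ctx_size=512):
    """Return the argument list for the imatrix call quoted in this card.

    Hypothetical helper for illustration; it only builds the command,
    it does not run llama.cpp.
    """
    out_dir = PurePosixPath(out_path)
    return [
        "./imatrix",
        "-c", str(ctx_size),                    # context size (assumed meaning of -c)
        "-m", str(out_dir / base_quant_name),   # the f16 GGUF used as input
        "-f", str(PurePosixPath(llama_cpp_path) / "groups_merged.txt"),  # calibration text
        "-o", str(out_dir / "imat-f16-gmerged.dat"),  # importance matrix output file
    ]


# Example paths are placeholders, not the ones used for this model.
cmd = build_imatrix_command("out", "model-f16.gguf", "llama.cpp")
print(shlex.join(cmd))
```

Building the command as a list rather than a single string keeps each path a separate argument, so paths containing spaces would still be passed correctly if the list were handed to `subprocess.run`.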

|

rajevan123/STS-Lora-Fine-Tuning-Capstone-bert-testing-21-with-lower-r

|

rajevan123

| 2024-03-24T21:23:36Z | 2 | 0 |

peft

|

[

"peft",

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:dslim/bert-base-NER",

"base_model:adapter:dslim/bert-base-NER",

"license:mit",

"region:us"

] | null | 2024-03-24T21:05:07Z |

---

license: mit

library_name: peft

tags:

- generated_from_trainer

metrics:

- accuracy

base_model: dslim/bert-base-NER

model-index:

- name: STS-Lora-Fine-Tuning-Capstone-bert-testing-21-with-lower-r

results: []

---