| modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-08-27 18:28:06) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 523 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-08-27 18:27:40) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

rahul77/pegasus-large-finetuned-rahulver-summarization-pegasus-model

|

rahul77

| 2022-12-09T21:07:11Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-12-09T19:27:29Z |

---

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: pegasus-large-finetuned-rahulver-summarization-pegasus-model

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-large-finetuned-rahulver-summarization-pegasus-model

This model is a fine-tuned version of [google/pegasus-large](https://huggingface.co/google/pegasus-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0906

- Rouge1: 61.2393

- Rouge2: 43.8277

- Rougel: 50.0054

- Rougelsum: 57.4674

- Gen Len: 114.6

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:--------:|

| 1.3648 | 1.0 | 140 | 0.7201 | 50.0081 | 32.6454 | 39.3021 | 45.1602 | 125.7333 |

| 0.8502 | 2.0 | 280 | 0.6067 | 57.8678 | 41.5251 | 46.0694 | 54.1055 | 128.3333 |

| 0.5053 | 3.0 | 420 | 0.6642 | 58.3644 | 41.8619 | 47.6199 | 54.1639 | 108.9667 |

| 0.3469 | 4.0 | 560 | 0.7318 | 61.8988 | 45.7303 | 51.1928 | 57.9306 | 123.1667 |

| 0.2779 | 5.0 | 700 | 0.7274 | 62.9354 | 46.5 | 51.6431 | 59.2443 | 99.6333 |

| 0.2124 | 6.0 | 840 | 0.8618 | 63.8552 | 48.3846 | 53.3804 | 60.2718 | 111.2333 |

| 0.1864 | 7.0 | 980 | 1.0058 | 59.5675 | 42.4324 | 48.462 | 55.3498 | 108.4667 |

| 0.1691 | 8.0 | 1120 | 0.9984 | 60.1063 | 43.6022 | 49.7163 | 56.9865 | 130.2 |

| 0.1603 | 9.0 | 1260 | 1.0062 | 61.398 | 44.4507 | 50.2044 | 57.4447 | 99.0333 |

| 0.1674 | 10.0 | 1400 | 1.0906 | 61.2393 | 43.8277 | 50.0054 | 57.4674 | 114.6 |
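The table shows validation loss bottoming out early while ROUGE keeps improving for several more epochs, so the final-epoch numbers reported at the top of the card are not the best by either measure. A minimal sketch of picking the best epoch from the logged values follows; the numbers are copied from the table, while the `rows` layout and the `best_epoch` helper are illustrative and not part of the Trainer API.

```python
# (epoch, validation_loss, rouge1) copied from the training results table.
rows = [
    (1, 0.7201, 50.0081),
    (2, 0.6067, 57.8678),
    (3, 0.6642, 58.3644),
    (4, 0.7318, 61.8988),
    (5, 0.7274, 62.9354),
    (6, 0.8618, 63.8552),
    (7, 1.0058, 59.5675),
    (8, 0.9984, 60.1063),
    (9, 1.0062, 61.3980),
    (10, 1.0906, 61.2393),
]


def best_epoch(rows, column, higher_is_better):
    """Return the epoch whose value in `column` is best."""
    pick = max if higher_is_better else min
    return pick(rows, key=lambda row: row[column])[0]


best_by_loss = best_epoch(rows, column=1, higher_is_better=False)
best_by_rouge1 = best_epoch(rows, column=2, higher_is_better=True)
print(best_by_loss, best_by_rouge1)
```

If the goal is the best checkpoint rather than the last one, `load_best_model_at_end` together with `metric_for_best_model` in `TrainingArguments` is the usual route.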

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

jennirocket/ppo-LunarLander-v2

|

jennirocket

| 2022-12-09T20:56:17Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T19:20:15Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 268.41 +/- 17.97

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
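The template above was left unfilled. A hedged sketch of how such an agent is typically loaded with `huggingface_sb3` follows; the checkpoint filename is not given in the card, so it is a required argument here, and the helper name `load_agent` is hypothetical.

```python
def load_agent(repo_id: str, filename: str):
    """Download a Stable-Baselines3 checkpoint from the Hub and load it as a PPO model.

    `filename` is the .zip checkpoint inside the repo; the card does not name it,
    so the caller has to look it up in the repo's file list.
    """
    # Imported lazily so this module can be imported without the RL stack installed.
    from huggingface_sb3 import load_from_hub
    from stable_baselines3 import PPO

    checkpoint_path = load_from_hub(repo_id=repo_id, filename=filename)
    return PPO.load(checkpoint_path)


# Example call (the filename is a placeholder, not taken from the card):
# model = load_agent("jennirocket/ppo-LunarLander-v2", "<checkpoint>.zip")
```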

|

Gladiator/albert-large-v2_ner_wikiann

|

Gladiator

| 2022-12-09T20:43:01Z | 15 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"albert",

"token-classification",

"generated_from_trainer",

"dataset:wikiann",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-12-09T16:16:16Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- wikiann

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: albert-large-v2_ner_wikiann

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: wikiann

type: wikiann

args: en

metrics:

- name: Precision

type: precision

value: 0.8239671720684378

- name: Recall

type: recall

value: 0.8374805598755832

- name: F1

type: f1

value: 0.8306689103912495

- name: Accuracy

type: accuracy

value: 0.926951922121784

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# albert-large-v2_ner_wikiann

This model is a fine-tuned version of [albert-large-v2](https://huggingface.co/albert-large-v2) on the wikiann dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3416

- Precision: 0.8240

- Recall: 0.8375

- F1: 0.8307

- Accuracy: 0.9270
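The reported F1 is the harmonic mean of precision and recall, which can be checked against the unrounded values in the card metadata. A small sketch (the function name is illustrative):

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Unrounded evaluation values from the model-index metadata.
precision = 0.8239671720684378
recall = 0.8374805598755832
f1 = f1_score(precision, recall)
print(round(f1, 4))
```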

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- num_epochs: 5
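With `lr_scheduler_type: cosine`, the learning rate decays from its peak to zero along half a cosine wave. The sketch below reproduces the shape of that schedule in plain Python, assuming no warmup (none is listed) and 12,500 total steps (5 epochs of 2,500 steps, per the results table). The function name is illustrative and this is not the library's own code.

```python
import math


def cosine_lr(step: int, peak_lr: float = 1e-5, total_steps: int = 12500,
              warmup_steps: int = 0) -> float:
    """Learning rate at `step`: linear warmup followed by half-cosine decay."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


# Start, end of epoch 1, midpoint, and end of training.
for step in (0, 2500, 6250, 12500):
    print(step, cosine_lr(step))
```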

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.3451 | 1.0 | 2500 | 0.3555 | 0.7745 | 0.7850 | 0.7797 | 0.9067 |

| 0.2995 | 2.0 | 5000 | 0.2927 | 0.7932 | 0.8240 | 0.8083 | 0.9205 |

| 0.252 | 3.0 | 7500 | 0.2936 | 0.8094 | 0.8236 | 0.8164 | 0.9239 |

| 0.1676 | 4.0 | 10000 | 0.3302 | 0.8256 | 0.8359 | 0.8307 | 0.9268 |

| 0.1489 | 5.0 | 12500 | 0.3416 | 0.8240 | 0.8375 | 0.8307 | 0.9270 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

SergejSchweizer/ppo-LunarLander-v2

|

SergejSchweizer

| 2022-12-09T20:37:16Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T20:36:53Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 246.49 +/- 46.47

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
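The `mean_reward` in the metadata (246.49 +/- 46.47) is a mean and standard deviation of episode returns over a set of evaluation episodes. A sketch of how such a figure is usually produced with Stable-Baselines3's `evaluate_policy` follows; the episode count and both helper names are assumptions, since the card does not say how the evaluation was run.

```python
def format_result(mean_reward: float, std_reward: float) -> str:
    """Format a reward summary the way the card metadata does."""
    return f"{mean_reward:.2f} +/- {std_reward:.2f}"


def summarize_agent(model, env, n_eval_episodes: int = 10) -> str:
    """Evaluate `model` on `env` and return 'mean +/- std' of the episode returns."""
    # Imported lazily so this module loads without stable-baselines3 installed.
    from stable_baselines3.common.evaluation import evaluate_policy

    mean_reward, std_reward = evaluate_policy(
        model, env, n_eval_episodes=n_eval_episodes, deterministic=True
    )
    return format_result(mean_reward, std_reward)


print(format_result(246.49, 46.47))
```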

|

shreyasharma/t5-small-ret-conceptnet2

|

shreyasharma

| 2022-12-09T20:26:54Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-11-28T08:04:37Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

model-index:

- name: t5-small-ret-conceptnet2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-ret-conceptnet2

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1709

- Accuracy: 0.8700980392156863

- Precision: 0.811340206185567

- Recall: 0.9644607843137255

- F1: 0.8812989921612542

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |
|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:--:|
| 0.1989 | 1.0 | 721 | 0.1709 | 0.8700980392156863 | 0.811340206185567 | 0.9644607843137255 | 0.8812989921612542 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

graydient/diffusers-mattthew-technicolor-50s-diffusion

|

graydient

| 2022-12-09T20:12:14Z | 3 | 1 |

diffusers

|

[

"diffusers",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-09T19:31:04Z |

---

license: cc-by-sa-4.0

---

# 🌈 Diffusers Adaptation: Technicolor-50s Diffusion

## Style Description

- This is a port of [Mattthew's excellent Technicolor 50s Diffusion](https://huggingface.co/mattthew/technicolor-50s-diffusion/tree/main) model to Hugging Face Diffusers.

- Please see the original for details. Style: highly saturated, postcard-like colors; flat high-key lighting; strong rim lighting; 40s and 50s lifestyle.

|

thegovind/pills1testmodel

|

thegovind

| 2022-12-09T20:12:07Z | 10 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"text-to-image",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-07T21:46:05Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

widget:

- text: pills

---

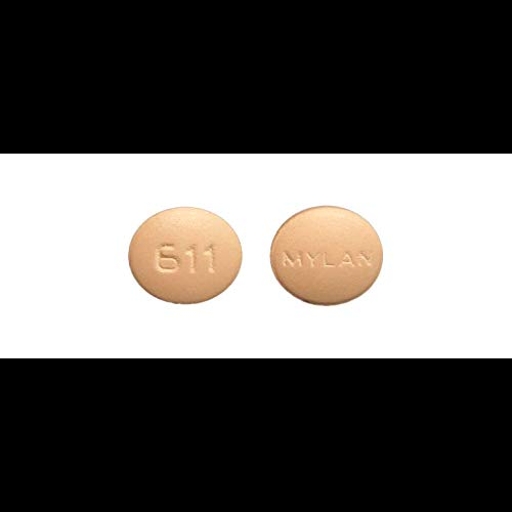

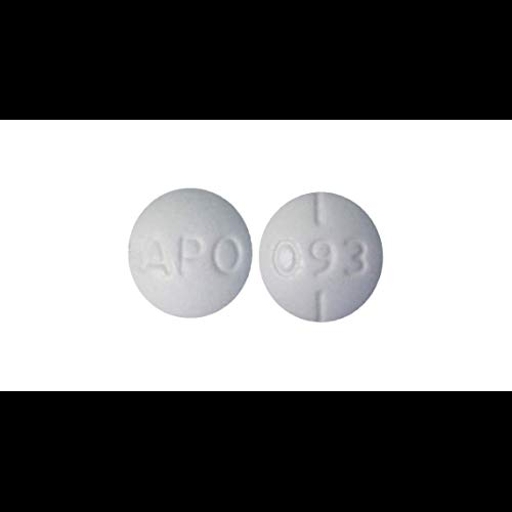

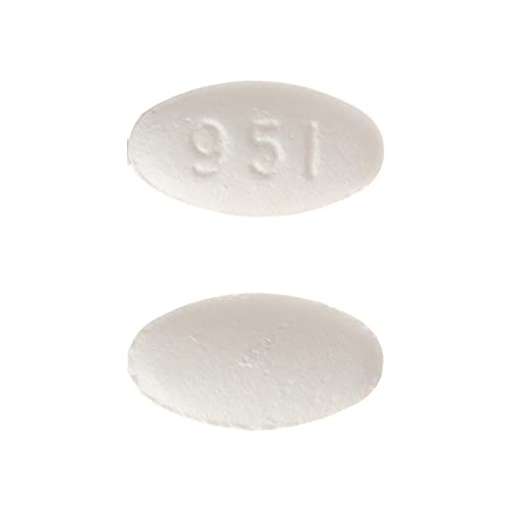

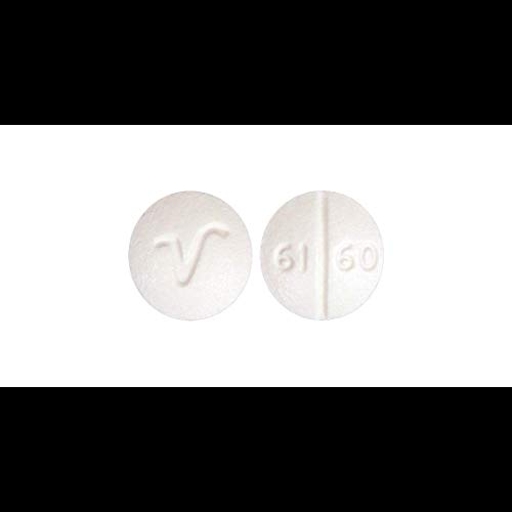

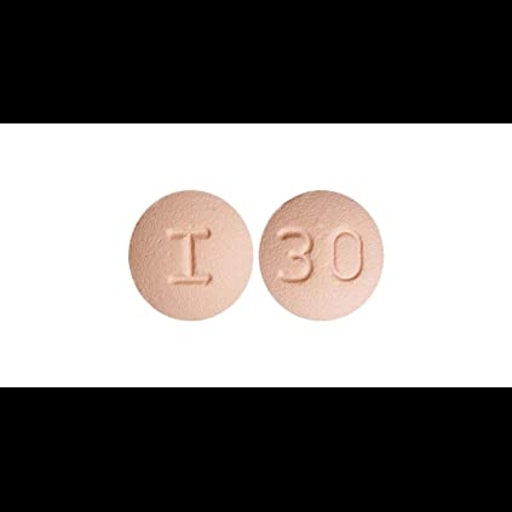

### pills1testmodel: DreamBooth model fine-tuned from the v2-1-512 base model

Sample pictures of:

pills (use that in your prompt)

|

Cbdlt/unit1-LunarLander-1

|

Cbdlt

| 2022-12-09T20:00:29Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T19:59:01Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 275.72 +/- 20.44

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

rakeshjohny/PPO_LunarLanderV2

|

rakeshjohny

| 2022-12-09T19:51:29Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T19:50:59Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 230.53 +/- 18.37

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Sandipan1994/t5-small-entailement-Writer

|

Sandipan1994

| 2022-12-09T19:34:46Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-12-09T19:10:22Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: t5-small-entailement-Writer

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-entailement-Writer

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5958

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 150

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 42 | 1.8511 |

| No log | 2.0 | 84 | 1.2249 |

| No log | 3.0 | 126 | 0.9976 |

| No log | 4.0 | 168 | 0.9108 |

| No log | 5.0 | 210 | 0.8478 |

| No log | 6.0 | 252 | 0.8186 |

| No log | 7.0 | 294 | 0.7965 |

| No log | 8.0 | 336 | 0.7815 |

| No log | 9.0 | 378 | 0.7634 |

| No log | 10.0 | 420 | 0.7544 |

| No log | 11.0 | 462 | 0.7408 |

| 1.2198 | 12.0 | 504 | 0.7298 |

| 1.2198 | 13.0 | 546 | 0.7240 |

| 1.2198 | 14.0 | 588 | 0.7139 |

| 1.2198 | 15.0 | 630 | 0.7070 |

| 1.2198 | 16.0 | 672 | 0.7028 |

| 1.2198 | 17.0 | 714 | 0.6977 |

| 1.2198 | 18.0 | 756 | 0.6926 |

| 1.2198 | 19.0 | 798 | 0.6906 |

| 1.2198 | 20.0 | 840 | 0.6846 |

| 1.2198 | 21.0 | 882 | 0.6822 |

| 1.2198 | 22.0 | 924 | 0.6760 |

| 1.2198 | 23.0 | 966 | 0.6710 |

| 0.7403 | 24.0 | 1008 | 0.6667 |

| 0.7403 | 25.0 | 1050 | 0.6657 |

| 0.7403 | 26.0 | 1092 | 0.6653 |

| 0.7403 | 27.0 | 1134 | 0.6588 |

| 0.7403 | 28.0 | 1176 | 0.6584 |

| 0.7403 | 29.0 | 1218 | 0.6573 |

| 0.7403 | 30.0 | 1260 | 0.6520 |

| 0.7403 | 31.0 | 1302 | 0.6522 |

| 0.7403 | 32.0 | 1344 | 0.6525 |

| 0.7403 | 33.0 | 1386 | 0.6463 |

| 0.7403 | 34.0 | 1428 | 0.6453 |

| 0.7403 | 35.0 | 1470 | 0.6437 |

| 0.6642 | 36.0 | 1512 | 0.6397 |

| 0.6642 | 37.0 | 1554 | 0.6382 |

| 0.6642 | 38.0 | 1596 | 0.6365 |

| 0.6642 | 39.0 | 1638 | 0.6332 |

| 0.6642 | 40.0 | 1680 | 0.6335 |

| 0.6642 | 41.0 | 1722 | 0.6325 |

| 0.6642 | 42.0 | 1764 | 0.6295 |

| 0.6642 | 43.0 | 1806 | 0.6304 |

| 0.6642 | 44.0 | 1848 | 0.6287 |

| 0.6642 | 45.0 | 1890 | 0.6272 |

| 0.6642 | 46.0 | 1932 | 0.6267 |

| 0.6642 | 47.0 | 1974 | 0.6242 |

| 0.6127 | 48.0 | 2016 | 0.6232 |

| 0.6127 | 49.0 | 2058 | 0.6225 |

| 0.6127 | 50.0 | 2100 | 0.6211 |

| 0.6127 | 51.0 | 2142 | 0.6204 |

| 0.6127 | 52.0 | 2184 | 0.6196 |

| 0.6127 | 53.0 | 2226 | 0.6183 |

| 0.6127 | 54.0 | 2268 | 0.6168 |

| 0.6127 | 55.0 | 2310 | 0.6175 |

| 0.6127 | 56.0 | 2352 | 0.6160 |

| 0.6127 | 57.0 | 2394 | 0.6154 |

| 0.6127 | 58.0 | 2436 | 0.6143 |

| 0.6127 | 59.0 | 2478 | 0.6142 |

| 0.5799 | 60.0 | 2520 | 0.6131 |

| 0.5799 | 61.0 | 2562 | 0.6122 |

| 0.5799 | 62.0 | 2604 | 0.6120 |

| 0.5799 | 63.0 | 2646 | 0.6115 |

| 0.5799 | 64.0 | 2688 | 0.6119 |

| 0.5799 | 65.0 | 2730 | 0.6112 |

| 0.5799 | 66.0 | 2772 | 0.6099 |

| 0.5799 | 67.0 | 2814 | 0.6094 |

| 0.5799 | 68.0 | 2856 | 0.6082 |

| 0.5799 | 69.0 | 2898 | 0.6092 |

| 0.5799 | 70.0 | 2940 | 0.6081 |

| 0.5799 | 71.0 | 2982 | 0.6071 |

| 0.5558 | 72.0 | 3024 | 0.6062 |

| 0.5558 | 73.0 | 3066 | 0.6079 |

| 0.5558 | 74.0 | 3108 | 0.6072 |

| 0.5558 | 75.0 | 3150 | 0.6052 |

| 0.5558 | 76.0 | 3192 | 0.6066 |

| 0.5558 | 77.0 | 3234 | 0.6049 |

| 0.5558 | 78.0 | 3276 | 0.6042 |

| 0.5558 | 79.0 | 3318 | 0.6039 |

| 0.5558 | 80.0 | 3360 | 0.6050 |

| 0.5558 | 81.0 | 3402 | 0.6042 |

| 0.5558 | 82.0 | 3444 | 0.6040 |

| 0.5558 | 83.0 | 3486 | 0.6029 |

| 0.5292 | 84.0 | 3528 | 0.6032 |

| 0.5292 | 85.0 | 3570 | 0.6039 |

| 0.5292 | 86.0 | 3612 | 0.6036 |

| 0.5292 | 87.0 | 3654 | 0.6019 |

| 0.5292 | 88.0 | 3696 | 0.6014 |

| 0.5292 | 89.0 | 3738 | 0.6022 |

| 0.5292 | 90.0 | 3780 | 0.6014 |

| 0.5292 | 91.0 | 3822 | 0.6020 |

| 0.5292 | 92.0 | 3864 | 0.6028 |

| 0.5292 | 93.0 | 3906 | 0.5994 |

| 0.5292 | 94.0 | 3948 | 0.6004 |

| 0.5292 | 95.0 | 3990 | 0.5987 |

| 0.5159 | 96.0 | 4032 | 0.5992 |

| 0.5159 | 97.0 | 4074 | 0.5993 |

| 0.5159 | 98.0 | 4116 | 0.5989 |

| 0.5159 | 99.0 | 4158 | 0.6004 |

| 0.5159 | 100.0 | 4200 | 0.6001 |

| 0.5159 | 101.0 | 4242 | 0.6008 |

| 0.5159 | 102.0 | 4284 | 0.6006 |

| 0.5159 | 103.0 | 4326 | 0.5999 |

| 0.5159 | 104.0 | 4368 | 0.5994 |

| 0.5159 | 105.0 | 4410 | 0.5996 |

| 0.5159 | 106.0 | 4452 | 0.5991 |

| 0.5159 | 107.0 | 4494 | 0.5990 |

| 0.5004 | 108.0 | 4536 | 0.5996 |

| 0.5004 | 109.0 | 4578 | 0.5988 |

| 0.5004 | 110.0 | 4620 | 0.5992 |

| 0.5004 | 111.0 | 4662 | 0.5984 |

| 0.5004 | 112.0 | 4704 | 0.5982 |

| 0.5004 | 113.0 | 4746 | 0.5973 |

| 0.5004 | 114.0 | 4788 | 0.5984 |

| 0.5004 | 115.0 | 4830 | 0.5973 |

| 0.5004 | 116.0 | 4872 | 0.5977 |

| 0.5004 | 117.0 | 4914 | 0.5970 |

| 0.5004 | 118.0 | 4956 | 0.5976 |

| 0.5004 | 119.0 | 4998 | 0.5962 |

| 0.488 | 120.0 | 5040 | 0.5969 |

| 0.488 | 121.0 | 5082 | 0.5965 |

| 0.488 | 122.0 | 5124 | 0.5969 |

| 0.488 | 123.0 | 5166 | 0.5972 |

| 0.488 | 124.0 | 5208 | 0.5966 |

| 0.488 | 125.0 | 5250 | 0.5962 |

| 0.488 | 126.0 | 5292 | 0.5966 |

| 0.488 | 127.0 | 5334 | 0.5960 |

| 0.488 | 128.0 | 5376 | 0.5969 |

| 0.488 | 129.0 | 5418 | 0.5960 |

| 0.488 | 130.0 | 5460 | 0.5960 |

| 0.483 | 131.0 | 5502 | 0.5960 |

| 0.483 | 132.0 | 5544 | 0.5965 |

| 0.483 | 133.0 | 5586 | 0.5965 |

| 0.483 | 134.0 | 5628 | 0.5963 |

| 0.483 | 135.0 | 5670 | 0.5965 |

| 0.483 | 136.0 | 5712 | 0.5962 |

| 0.483 | 137.0 | 5754 | 0.5963 |

| 0.483 | 138.0 | 5796 | 0.5961 |

| 0.483 | 139.0 | 5838 | 0.5963 |

| 0.483 | 140.0 | 5880 | 0.5964 |

| 0.483 | 141.0 | 5922 | 0.5957 |

| 0.483 | 142.0 | 5964 | 0.5957 |

| 0.4809 | 143.0 | 6006 | 0.5957 |

| 0.4809 | 144.0 | 6048 | 0.5956 |

| 0.4809 | 145.0 | 6090 | 0.5958 |

| 0.4809 | 146.0 | 6132 | 0.5958 |

| 0.4809 | 147.0 | 6174 | 0.5959 |

| 0.4809 | 148.0 | 6216 | 0.5958 |

| 0.4809 | 149.0 | 6258 | 0.5958 |

| 0.4809 | 150.0 | 6300 | 0.5958 |
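The `No log` entries and the stepwise training-loss column are consistent with the training loss being logged every 500 optimizer steps while evaluation runs once per epoch (42 steps). A short sketch of that arithmetic, assuming a logging interval of 500 steps (the Trainer default; the card does not state it) and a single device with no gradient accumulation:

```python
import math

STEPS_PER_EPOCH = 42    # from the Step column: 42, 84, 126, ...
LOGGING_INTERVAL = 500  # assumption: not listed in the hyperparameters
TOTAL_STEPS = 6300      # 150 epochs * 42 steps
TRAIN_BATCH_SIZE = 32   # from the hyperparameters

# Epoch whose evaluation row is the first to show each newly logged training loss.
first_epoch_with_log = [
    math.ceil(step / STEPS_PER_EPOCH)
    for step in range(LOGGING_INTERVAL, TOTAL_STEPS + 1, LOGGING_INTERVAL)
]
print(first_epoch_with_log)

# 42 steps per epoch at batch size 32 bounds the training-set size
# (assuming the last partial batch is kept).
min_examples = (STEPS_PER_EPOCH - 1) * TRAIN_BATCH_SIZE + 1
max_examples = STEPS_PER_EPOCH * TRAIN_BATCH_SIZE
print(min_examples, max_examples)
```

The computed epochs line up with the rows where the Training Loss column changes value, which supports the assumed interval.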

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

lysandre/dum

|

lysandre

| 2022-12-09T19:34:24Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"OpenCLIP",

"en",

"dataset:sst2",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

---

language: en

license: apache-2.0

datasets:

- sst2

tags:

- OpenCLIP

---

# Sentiment Analysis

This is a BERT model fine-tuned for sentiment analysis.

|

nbonaker/ddpm-celeb-face-32

|

nbonaker

| 2022-12-09T19:26:57Z | 1 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:ddpm-celeb-face-32",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-12-09T16:24:53Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: ddpm-celeb-face-32

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-celeb-face-32

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `ddpm-celeb-face-32` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```
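The snippet above is still a placeholder. Since the repo is tagged `diffusers:DDPMPipeline`, a plausible way to sample from it is sketched below; the helper name, the output path, and the choice to generate a single image are illustrative assumptions.

```python
def sample_image(repo_id: str = "nbonaker/ddpm-celeb-face-32",
                 output_path: str = "sample.png") -> str:
    """Generate one unconditional sample with a DDPM pipeline and save it."""
    # Imported lazily so this module loads without diffusers installed.
    from diffusers import DDPMPipeline

    pipeline = DDPMPipeline.from_pretrained(repo_id)
    image = pipeline(batch_size=1).images[0]  # a PIL image
    image.save(output_path)
    return output_path
```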

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 32

- eval_batch_size: 32

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 50

- ema_inv_gamma: None

- ema_power: None

- ema_max_decay: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/nbonaker/ddpm-celeb-face-32/tensorboard?#scalars)

|

Alexao/whisper-small-swe2

|

Alexao

| 2022-12-09T19:24:47Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"whisper",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"generated_from_trainer",

"swe",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-09T19:11:59Z |

---

language:

- swe

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

model-index:

- name: Whisper Small swe - Swedish

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small swe - Swedish

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

- mixed_precision_training: Native AMP
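Taken together, `lr_scheduler_type: linear`, `lr_scheduler_warmup_steps: 500` and `training_steps: 4000` describe a learning rate that ramps up linearly to the peak over the first 500 steps and then decays linearly to zero. A plain-Python sketch of that shape, using the values listed above (the function name is illustrative, and this is not the library's own implementation):

```python
def linear_warmup_lr(step: int, peak_lr: float = 1e-6,
                     warmup_steps: int = 500, total_steps: int = 4000) -> float:
    """Learning rate at `step`: linear ramp to `peak_lr`, then linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


# Start, mid-warmup, peak, mid-decay, and end of training.
for step in (0, 250, 500, 2250, 4000):
    print(step, linear_warmup_lr(step))
```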

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

DimiNim/ppo-LunarLander-v2

|

DimiNim

| 2022-12-09T18:32:09Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T18:31:41Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 258.91 +/- 21.16

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

romc57/PPO_LunarLanderV2

|

romc57

| 2022-12-09T18:28:42Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T18:28:22Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 270.65 +/- 16.76

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

tripplyons/flan-t5-base-xsum

|

tripplyons

| 2022-12-09T18:23:33Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-12-05T02:21:16Z |

---

license: apache-2.0

---

# google/flan-t5-base finetuned on xsum using LoRA with adapter-transformers

## Usage

Use the original flan-t5-base tokenizer:

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

# The tokenizer comes from the base model; the fine-tuned weights come from this repo.
tokenizer = AutoTokenizer.from_pretrained("google/flan-t5-base")
model = AutoModelForSeq2SeqLM.from_pretrained("tripplyons/flan-t5-base-xsum")

# Task prefix "summarize: " followed by the article text.
input_text = "summarize: The ex-Reading defender denied fraudulent trading charges relating to the Sodje Sports Foundation - a charity to raise money for Nigerian sport. Mr Sodje, 37, is jointly charged with elder brothers Efe, 44, Bright, 50 and Stephen, 42. Appearing at the Old Bailey earlier, all four denied the offence. The charge relates to offences which allegedly took place between 2008 and 2014. Sam, from Kent, Efe and Bright, of Greater Manchester, and Stephen, from Bexley, are due to stand trial in July. They were all released on bail."

# Tokenize, truncating the input to at most 512 tokens.
input_ids = tokenizer([input_text], max_length=512, truncation=True, padding=True, return_tensors='pt')['input_ids']

# Generate the summary and decode it back to plain text.
output = model.generate(input_ids, max_length=512)
output_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(output_text)

```

|

Sanjay-Papaiahgari/ppo-LunarLander-v2

|

Sanjay-Papaiahgari

| 2022-12-09T17:41:10Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T17:40:48Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: MlpPolicy

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 231.53 +/- 72.30

name: mean_reward

verified: false

---

# **MlpPolicy** Agent playing **LunarLander-v2**

This is a trained model of a **MlpPolicy** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

deepdml/whisper-small-eu

|

deepdml

| 2022-12-09T17:26:01Z | 5 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"whisper-event",

"generated_from_trainer",

"eu",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-08T21:19:49Z |

---

license: apache-2.0

language:

- eu

tags:

- whisper-event

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

metrics:

- wer

model-index:

- name: openai/whisper-small

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: mozilla-foundation/common_voice_11_0 eu

type: mozilla-foundation/common_voice_11_0

config: eu

split: test

args: eu

metrics:

- name: Wer

type: wer

value: 19.766305675433596

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# openai/whisper-small Basque-Euskera

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the common_voice_11_0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4485

- Wer: 19.7663
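Wer is the word error rate, reported here as a percentage: the number of word substitutions, deletions and insertions needed to turn the model's transcript into the reference, divided by the number of reference words. A self-contained sketch of that computation follows. The card's figure was presumably produced by a metrics library, possibly with text normalization, which this toy version omits.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER in percent: word-level edit distance divided by reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j] is the edit distance between the reference prefix seen so far and hyp[:j].
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return 100.0 * previous[-1] / len(ref)


# Toy example (not from the evaluation set): one substitution and one deletion.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

On this scale, a Wer of 19.7663 means roughly one word in five differs from the reference.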

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.048 | 4.04 | 1000 | 0.3402 | 21.7816 |

| 0.0047 | 9.03 | 2000 | 0.3862 | 20.1694 |

| 0.0012 | 14.02 | 3000 | 0.4221 | 19.7419 |

| 0.0008 | 19.02 | 4000 | 0.4411 | 19.7174 |

| 0.0006 | 24.01 | 5000 | 0.4485 | 19.7663 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

EmileEsmaili/ddpm-sheetmusic-clean-l2loss-colabVM

|

EmileEsmaili

| 2022-12-09T17:01:45Z | 3 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:EmileEsmaili/sheet_music_clean",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-12-09T07:16:34Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: EmileEsmaili/sheet_music_clean

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-sheetmusic-clean-l2loss-colabVM

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `EmileEsmaili/sheet_music_clean` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- mixed_precision: no

### Training results

📈 [TensorBoard logs](https://huggingface.co/EmileEsmaili/ddpm-sheetmusic-clean-l2loss-colabVM/tensorboard?#scalars)

|

AbyelT/Whisper-models

|

AbyelT

| 2022-12-09T16:41:03Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"generated_from_trainer",

"hi",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-05T20:59:34Z |

---

language:

- hi

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

model-index:

- name: Whisper Small - Swedish

results: []

metrics:

- wer

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Hi - Swedish

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

parinzee/whisper-small-th-newmm-old

|

parinzee

| 2022-12-09T16:10:33Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"whisper-event",

"generated_from_trainer",

"th",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-08T15:14:14Z |

---

language:

- th

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

model-index:

- name: Whisper Small Thai Newmm Tokenized - Parinthapat Pengpun

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Thai Newmm Tokenized - Parinthapat Pengpun

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset.

It achieves the following results on the evaluation set:

- eval_loss: 0.2095

- eval_wer: 26.6533

- eval_cer: 8.0405

- eval_runtime: 5652.2819

- eval_samples_per_second: 1.934

- eval_steps_per_second: 0.061

- epoch: 5.06

- step: 2000
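
The throughput figures above are related by simple arithmetic: runtime multiplied by samples per second gives the approximate number of evaluation samples. A quick check using only the numbers reported in the list; the variable names are illustrative, and the results are approximate because the reported rates are rounded.

```python
eval_runtime = 5652.2819          # seconds
samples_per_second = 1.934
steps_per_second = 0.061

approx_samples = eval_runtime * samples_per_second
approx_steps = eval_runtime * steps_per_second

print(round(approx_samples))  # approximate number of evaluation samples
print(round(approx_steps))    # approximate number of evaluation batches
```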

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

huggingtweets/thechosenberg

|

huggingtweets

| 2022-12-09T15:58:44Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-12-09T15:47:40Z |

---

language: en

thumbnail: http://www.huggingtweets.com/thechosenberg/1670601518761/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1600957831880097793/TxYmGY8n_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">rosey🌹</div>

<div style="text-align: center; font-size: 14px;">@thechosenberg</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from rosey🌹.

| Data | rosey🌹 |

| --- | --- |

| Tweets downloaded | 3239 |

| Retweets | 3 |

| Short tweets | 310 |

| Tweets kept | 2926 |
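
The counts in the table are consistent with dropping retweets and short tweets from the downloaded set. A minimal check, assuming that is how "Tweets kept" was derived (the card does not spell out the filtering rule):

```python
downloaded = 3239
retweets = 3
short_tweets = 310

# Assumed rule: keep everything that is neither a retweet nor a short tweet.
kept = downloaded - retweets - short_tweets
print(kept)  # 2926, matching "Tweets kept"
```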

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1a0vfvx2/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @thechosenberg's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/387zccfj) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/387zccfj/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/thechosenberg')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

adisomani/distilbert-base-uncased-finetuned-sqaud

|

adisomani

| 2022-12-09T15:45:03Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-12-09T11:01:37Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilbert-base-uncased-finetuned-sqaud

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-sqaud

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2831

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 14 | 0.9851 |

| No log | 2.0 | 28 | 0.6955 |

| No log | 3.0 | 42 | 0.5781 |

| No log | 4.0 | 56 | 0.4548 |

| No log | 5.0 | 70 | 0.4208 |

| No log | 6.0 | 84 | 0.3592 |

| No log | 7.0 | 98 | 0.3422 |

| No log | 8.0 | 112 | 0.3424 |

| No log | 9.0 | 126 | 0.4046 |

| No log | 10.0 | 140 | 0.3142 |

| No log | 11.0 | 154 | 0.3262 |

| No log | 12.0 | 168 | 0.2879 |

| No log | 13.0 | 182 | 0.3376 |

| No log | 14.0 | 196 | 0.2870 |

| No log | 15.0 | 210 | 0.2984 |

| No log | 16.0 | 224 | 0.2807 |

| No log | 17.0 | 238 | 0.2889 |

| No log | 18.0 | 252 | 0.2877 |

| No log | 19.0 | 266 | 0.2820 |

| No log | 20.0 | 280 | 0.2831 |
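
Each epoch takes 14 optimizer steps at `train_batch_size: 16`, which bounds the size of the training set. A minimal sketch, assuming a single device, no gradient accumulation, and that the last partial batch is kept (none of which the card states):

```python
import math

batch_size = 16
steps_per_epoch = 14

# ceil(n / batch_size) == steps_per_epoch holds for n in this range.
min_examples = (steps_per_epoch - 1) * batch_size + 1
max_examples = steps_per_epoch * batch_size

assert math.ceil(min_examples / batch_size) == steps_per_epoch
assert math.ceil(max_examples / batch_size) == steps_per_epoch
print(min_examples, max_examples)
```

Under those assumptions the training set holds only a couple of hundred examples, which is worth keeping in mind when reading the validation losses.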

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

Yuyang2022/yue

|

Yuyang2022

| 2022-12-09T15:27:06Z | 16 | 0 |

transformers

|

[

"transformers",

"pytorch",

"whisper",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"generated_from_trainer",

"yue",

"dataset:mozilla-foundation/common_voice_11",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-09T15:17:55Z |

---

language:

- yue

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11

metrics:

- wer

model-index:

- name: Whisper Base Yue

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 11.0 yue

type: mozilla-foundation/common_voice_11

config: unclear

split: None

args: 'config: yue, split: train'

metrics:

- name: Wer

type: wer

value: 69.58637469586375

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Base Yue

This model is a fine-tuned version of [openai/whisper-base](https://huggingface.co/openai/whisper-base) on the Common Voice 11.0 yue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3671

- Wer: 69.5864

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- training_steps: 1000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0998 | 2.78 | 500 | 0.3500 | 71.4517 |

| 0.0085 | 5.56 | 1000 | 0.3671 | 69.5864 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

nandovallec/whisper-tiny-bg-l

|

nandovallec

| 2022-12-09T15:05:19Z | 12 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"generated_from_trainer",

"bg",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-09T09:44:44Z |

---

language:

- bg

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

metrics:

- wer

model-index:

- name: Whisper Small Bg - Yonchevisky_tes2t

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 11.0

type: mozilla-foundation/common_voice_11_0

config: bg

split: test

args: 'config: bg, split: test'

metrics:

- name: Wer

type: wer

value: 61.83524504692388

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Bg - Yonchevisky_tes2t

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on the Common Voice 11.0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7377

- Wer: 61.8352

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 1000

- mixed_precision_training: Native AMP
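
`total_train_batch_size` is the per-device batch size multiplied by the gradient accumulation steps, and by the number of devices, which is assumed to be 1 here because the card does not list one. A short check:

```python
train_batch_size = 2
gradient_accumulation_steps = 8
num_devices = 1  # assumption: not stated in the card

total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices
print(total_train_batch_size)  # 16
```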

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.8067 | 0.37 | 100 | 1.6916 | 137.6897 |

| 0.9737 | 0.73 | 200 | 1.1197 | 78.3571 |

| 0.7747 | 1.1 | 300 | 0.9763 | 73.8906 |

| 0.6672 | 1.47 | 400 | 0.8972 | 70.7102 |

| 0.6196 | 1.84 | 500 | 0.8329 | 67.4545 |

| 0.4849 | 2.21 | 600 | 0.7968 | 66.6029 |

| 0.4402 | 2.57 | 700 | 0.7597 | 62.7795 |

| 0.4601 | 2.94 | 800 | 0.7385 | 61.8642 |

| 0.3545 | 3.31 | 900 | 0.7394 | 61.5050 |

| 0.3596 | 3.68 | 1000 | 0.7377 | 61.8352 |
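
The first row reports a WER above 100. That is possible because insertions count as errors, so a hypothesis much longer than the reference can accumulate more errors than the reference has words. A minimal sketch of word error rate as word-level edit distance divided by reference length; the function `wer` is an illustrative helper, not the implementation used to produce the table, and it assumes a non-empty reference.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent: edit distance over reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return 100.0 * prev[-1] / len(ref)


print(wer("good morning", "good morning"))             # 0.0
print(wer("good morning", "a very good morning all"))  # 150.0
```

The second call has three inserted words against a two-word reference, which gives a rate above 100.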

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1

- Tokenizers 0.13.2

|

kurianbenoy/whisper-ml-first-model

|

kurianbenoy

| 2022-12-09T14:49:38Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"whisper-event",

"ml",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-09T13:59:15Z |

---

language:

- ml

license: apache-2.0

tags:

- whisper-event

datasets:

- mozilla-foundation/common_voice_11_0

---

# whisper-ml-first-model

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on the Common Voice 11.0 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 64

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 50

- training_steps: 500

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1

- Tokenizers 0.13.2

|

ViktorDo/DistilBERT-POWO_Lifecycle_Finetuned

|

ViktorDo

| 2022-12-09T14:31:05Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-10-20T11:22:36Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: DistilBERT-POWO_Lifecycle_Finetuned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# DistilBERT-POWO_Lifecycle_Finetuned

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0785

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.0875 | 1.0 | 1704 | 0.0806 |

| 0.079 | 2.0 | 3408 | 0.0784 |

| 0.0663 | 3.0 | 5112 | 0.0785 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

ybutsik/ppo-LunarLander-v2-test

|

ybutsik

| 2022-12-09T14:28:18Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T14:27:41Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: -93.15 +/- 20.45

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

shashank89aiml/ppo-LunarLander-v2

|

shashank89aiml

| 2022-12-09T14:16:35Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T14:09:47Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 265.61 +/- 21.48

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

klashenrik/ppo-Huggy

|

klashenrik

| 2022-12-09T14:05:55Z | 7 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"unity-ml-agents",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] |

reinforcement-learning

| 2022-12-09T14:05:47Z |

---

tags:

- unity-ml-agents

- ml-agents

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

library_name: ml-agents

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Huggy

2. Step 1: Write your model_id: klashenrik/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

Kuaaangwen/SMM-classifier-1

|

Kuaaangwen

| 2022-12-09T13:54:52Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-12-09T13:37:29Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: SMM-classifier-1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# SMM-classifier-1

This model is a fine-tuned version of [Kuaaangwen/bert-base-cased-finetuned-chemistry](https://huggingface.co/Kuaaangwen/bert-base-cased-finetuned-chemistry) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5506

- Accuracy: 0.8333

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 7 | 0.2044 | 0.8333 |

| No log | 2.0 | 14 | 0.3574 | 0.8333 |

| No log | 3.0 | 21 | 0.1551 | 0.8333 |

| No log | 4.0 | 28 | 0.9122 | 0.8333 |

| No log | 5.0 | 35 | 0.9043 | 0.8333 |

| No log | 6.0 | 42 | 0.7262 | 0.8333 |

| No log | 7.0 | 49 | 0.5977 | 0.8333 |

| No log | 8.0 | 56 | 0.5567 | 0.8333 |

| No log | 9.0 | 63 | 0.5484 | 0.8333 |

| No log | 10.0 | 70 | 0.5506 | 0.8333 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

torileatherman/whisper_small_sv

|

torileatherman

| 2022-12-09T13:47:05Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"whisper",

"automatic-speech-recognition",

"hf-asr-leaderboard",

"generated_from_trainer",

"sv",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-05T23:03:29Z |

---

language:

- sv

license: apache-2.0

tags:

- hf-asr-leaderboard

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

metrics:

- wer

model-index:

- name: Whisper Small Sv

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 11.0

type: mozilla-foundation/common_voice_11_0

config: sv

split: test[:10%]

args: 'config: sv, split: test'

metrics:

- name: Wer

type: wer

value: 19.76284584980237

---

# Whisper Small Swedish

This model is an adapted version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset in Swedish.

It achieves the following results on the evaluation set:

- Wer: 19.8166

## Model description & uses

This model is OpenAI's Whisper small transformer adapted for Swedish audio-to-text transcription.

The model is available through its [Hugging Face web app](https://huggingface.co/spaces/torileatherman/whisper_small_sv).

## Training and evaluation data

The training data is the first 10% of the train and validation splits of [Swedish Common Voice](https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0/viewer/sv/train) 11.0 from the Mozilla Foundation.

The evaluation data is the first 10% of the test split of Swedish Common Voice.

The training data was augmented with random noise, random pitch shifts, and changes to the speaking speed.
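
A rough sketch of two of the augmentations mentioned above, additive random noise and a speed change, applied to a raw waveform stored as a list of floats. This is an illustration under stated assumptions, not the author's pipeline: the function names, the noise level, and the linear-interpolation resampling are all hypothetical choices, and pitch shifting is omitted.

```python
import math
import random


def add_noise(samples, noise_std=0.005, seed=0):
    """Return a copy of `samples` with Gaussian noise added."""
    rng = random.Random(seed)
    return [s + rng.gauss(0.0, noise_std) for s in samples]


def change_speed(samples, factor):
    """Resample by linear interpolation; factor > 1 plays faster (shorter)."""
    n_out = max(1, int(len(samples) / factor))
    out = []
    for i in range(n_out):
        pos = i * factor
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[hi] * frac)
    return out


# One second of a 440 Hz tone at 16 kHz as stand-in audio.
sr = 16000
tone = [math.sin(2 * math.pi * 440 * t / sr) for t in range(sr)]

noisy = add_noise(tone)
faster = change_speed(tone, 1.1)
print(len(tone), len(noisy), len(faster))
```

Adding noise keeps the length unchanged, while a speed factor above 1 shortens the clip.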

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- lr_scheduler_type: constant

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

- weight_decay: 0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.1379 | 0.95 | 1000 | 0.295811 | 21.467 |

| 0.0245 | 2.86 | 3000 | 0.300059 | 20.160 |

| 0.0060 | 3.82 | 4000 | 0.320301 | 19.762 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

hr16/ira-olympus-4000

|

hr16

| 2022-12-09T13:46:47Z | 1 | 0 |

diffusers

|

[

"diffusers",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-09T13:43:11Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### Dreambooth concept model /content/Ira_Olympus/CRHTMJX/4000, trained by hr16 using the [Shinja Zero SoTA DreamBooth_Stable_Diffusion](https://colab.research.google.com/drive/1G7qx6M_S1PDDlsWIMdbZXwdZik6sUlEh) notebook <br>

Test the concept with the [Shinja Zero no Notebook](https://colab.research.google.com/drive/1Hp1ZIjPbsZKlCtomJVmt2oX7733W44b0) <br>

Or test with the `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

Sample images of the concept: WIP

|

ZDaPlaY/strawmaryarts_style

|

ZDaPlaY

| 2022-12-09T13:32:45Z | 0 | 1 | null |

[

"region:us"

] | null | 2022-12-09T12:55:19Z |

Contains:

strawmaryarts style - model with anime style

Trigger Words: strawmaryarts style

|

lily-phoo-95/sd-class-butterflies-35

|

lily-phoo-95

| 2022-12-09T13:28:31Z | 6 | 0 |

diffusers

|

[

"diffusers",

"pytorch",

"unconditional-image-generation",

"diffusion-models-class",

"license:mit",

"diffusers:DDPMPipeline",

"region:us"

] |

unconditional-image-generation

| 2022-12-08T14:59:17Z |

---

license: mit

tags:

- pytorch

- diffusers

- unconditional-image-generation

- diffusion-models-class

---

# Model Card for Unit 1 of the [Diffusion Models Class 🧨](https://github.com/huggingface/diffusion-models-class)

This model is a diffusion model for unconditional image generation of cute 🦋.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained("lily-phoo-95/sd-class-butterflies-35")

image = pipeline().images[0]

image

```

|

nbonaker/ddpm-celeb-face

|

nbonaker

| 2022-12-09T13:26:14Z | 12 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:ddpm-celeb-face",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-12-08T17:21:14Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: ddpm-celeb-face

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-celeb-face

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `ddpm-celeb-face` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 32

- eval_batch_size: 32

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 50

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/nbonaker/ddpm-celeb-face/tensorboard?#scalars)

|

geninhu/whisper-medium-vi

|

geninhu

| 2022-12-09T13:09:46Z | 4 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"generated_from_trainer",

"vi",

"dataset:mozilla-foundation/common_voice_11_0",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-08T05:27:05Z |

---

language:

- vi

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- mozilla-foundation/common_voice_11_0

metrics:

- wer

model-index:

- name: openai/whisper-medium

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: mozilla-foundation/common_voice_11_0 vi

type: mozilla-foundation/common_voice_11_0

config: vi

split: test

args: vi

metrics:

- name: Wer

type: wer

value: 19.92761570519851

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# openai/whisper-medium

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the common_voice_11_0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7599

- Wer: 19.9276

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0001 | 62.0 | 1000 | 0.6531 | 19.3463 |

| 0.0001 | 124.0 | 2000 | 0.6964 | 19.6973 |

| 0.0 | 187.0 | 3000 | 0.7282 | 19.8947 |

| 0.0 | 249.0 | 4000 | 0.7481 | 19.8837 |

| 0.0 | 312.0 | 5000 | 0.7599 | 19.9276 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

klashenrik/ppo-lunarlander-v2

|

klashenrik

| 2022-12-09T13:02:15Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-12-09T10:27:01Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 277.75 +/- 27.70

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
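The card metadata reports `mean_reward` as `277.75 +/- 27.70`, that is, a mean and a spread over evaluation episodes. As a hedged illustration of how such a figure can be derived, the sketch below summarizes a list of per-episode returns as mean and population standard deviation. The function name `summarize_rewards` and the sample returns are hypothetical; the card does not say how many episodes were evaluated or which values were observed.

```python
import statistics


def summarize_rewards(episode_rewards):
    """Return (mean, population standard deviation) of per-episode returns."""
    if not episode_rewards:
        raise ValueError("need at least one episode reward")
    mean_reward = statistics.fmean(episode_rewards)
    # Population standard deviation; assumed here to match the reported spread.
    std_reward = statistics.pstdev(episode_rewards)
    return mean_reward, std_reward


# Hypothetical returns from two evaluation episodes.
mean_reward, std_reward = summarize_rewards([250.0, 300.0])
summary = f"{mean_reward:.2f} +/- {std_reward:.2f}"
```

The resulting string follows the same `mean +/- std` layout as the value in the card metadata.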

|

Gladiator/bert-large-uncased_ner_wikiann

|

Gladiator

| 2022-12-09T12:54:43Z | 16 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:wikiann",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-12-09T12:12:06Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- wikiann

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-large-uncased_ner_wikiann

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: wikiann

type: wikiann

args: en

metrics:

- name: Precision

type: precision

value: 0.8383588049015558

- name: Recall

type: recall

value: 0.8608794005372543

- name: F1

type: f1

value: 0.8494698660714285

- name: Accuracy

type: accuracy

value: 0.9379407966623622

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-large-uncased_ner_wikiann

This model is a fine-tuned version of [bert-large-uncased](https://huggingface.co/bert-large-uncased) on the wikiann dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3373

- Precision: 0.8384

- Recall: 0.8609

- F1: 0.8495