| modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (list) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |
|---|---|---|---|---|---|---|---|---|---|

Muapi/lip-bite-concept-flux.1d

|

Muapi

| 2025-08-19T20:00:47Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-19T20:00:39Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Lip Bite Concept FLUX.1D

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

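```python
import os

import requests

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

# Fail early with a clear message if the key is missing
api_key = os.getenv("MUAPIAPP_API_KEY")
if not api_key:
    raise RuntimeError("Set the MUAPIAPP_API_KEY environment variable")

headers = {"Content-Type": "application/json", "x-api-key": api_key}

payload = {
    "prompt": "masterpiece, best quality, 1girl, looking at viewer",
    # LoRA reference and the weight it is blended in with
    "model_id": [{"model": "civitai:735399@1977332", "weight": 1.0}],
    "width": 1024,
    "height": 1024,
    "num_images": 1,
}

response = requests.post(url, headers=headers, json=payload, timeout=120)
response.raise_for_status()  # surface HTTP errors instead of printing an error body
print(response.json())
```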

|

hasdal/a51ae003-de10-4a7c-80ea-f24dbec64122

|

hasdal

| 2025-08-19T18:27:46Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"base_model:adapter:unsloth/SmolLM2-135M",

"dpo",

"lora",

"transformers",

"trl",

"unsloth",

"text-generation",

"arxiv:1910.09700",

"base_model:unsloth/SmolLM2-135M",

"region:us"

] |

text-generation

| 2025-08-19T18:27:44Z |

---

base_model: unsloth/SmolLM2-135M

library_name: peft

pipeline_tag: text-generation

tags:

- base_model:adapter:unsloth/SmolLM2-135M

- dpo

- lora

- transformers

- trl

- unsloth

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
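The arithmetic behind such an estimate is simple: energy drawn (power × hours, optionally scaled by the data centre's power usage effectiveness, PUE) multiplied by the grid's carbon intensity. A minimal sketch, assuming a hypothetical helper `estimate_co2_grams` and made-up inputs; the calculator linked above may apply additional factors.

```python
def estimate_co2_grams(power_watts: float, hours: float,
                       grid_g_per_kwh: float, pue: float = 1.0) -> float:
    """Rough CO2eq estimate in grams: energy used (kWh) times grid carbon intensity.

    pue: power usage effectiveness of the data centre (1.0 means no overhead).
    """
    energy_kwh = power_watts / 1000 * hours * pue
    return energy_kwh * grid_g_per_kwh


# Hypothetical inputs: one 300 W accelerator for 2 hours on a 400 gCO2eq/kWh grid.
print(f"{estimate_co2_grams(300, 2, 400):.1f} g CO2eq")  # → 240.0 g CO2eq
```

The real inputs (hardware, hours, region) are exactly the fields listed above that this card leaves empty.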

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.17.0

|

BootesVoid/cme1nlmc40afpgwtcpc42gvjm_cme7g43p30bf96aq1sh548pe8

|

BootesVoid

| 2025-08-19T18:26:14Z | 0 | 0 |

diffusers

|

[

"diffusers",

"flux",

"lora",

"replicate",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] |

text-to-image

| 2025-08-19T18:26:12Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

language:

- en

tags:

- flux

- diffusers

- lora

- replicate

base_model: "black-forest-labs/FLUX.1-dev"

pipeline_tag: text-to-image

# widget:

# - text: >-

# prompt

# output:

# url: https://...

instance_prompt: OFMODEL

---

# Cme1Nlmc40Afpgwtcpc42Gvjm_Cme7G43P30Bf96Aq1Sh548Pe8

<Gallery />

## About this LoRA

This is a [LoRA](https://replicate.com/docs/guides/working-with-loras) for the FLUX.1-dev text-to-image model. It can be used with diffusers or ComfyUI.

It was trained on [Replicate](https://replicate.com/) using AI toolkit: https://replicate.com/ostris/flux-dev-lora-trainer/train

## Trigger words

You should use `OFMODEL` to trigger the image generation.

## Run this LoRA with an API using Replicate

```py
import replicate

# The trigger word OFMODEL must appear in the prompt
input = {
    "prompt": "OFMODEL",
    "lora_weights": "https://huggingface.co/BootesVoid/cme1nlmc40afpgwtcpc42gvjm_cme7g43p30bf96aq1sh548pe8/resolve/main/lora.safetensors"
}

output = replicate.run(
    "black-forest-labs/flux-dev-lora",
    input=input
)

# Save each generated image to disk
for index, item in enumerate(output):
    with open(f"output_{index}.webp", "wb") as file:
        file.write(item.read())
```

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('black-forest-labs/FLUX.1-dev', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('BootesVoid/cme1nlmc40afpgwtcpc42gvjm_cme7g43p30bf96aq1sh548pe8', weight_name='lora.safetensors')

image = pipeline('OFMODEL').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

## Training details

- Steps: 2000

- Learning rate: 0.0004

- LoRA rank: 16
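The LoRA rank controls how many trainable parameters the adapter adds: for a linear layer with weight of shape `d_out × d_in`, LoRA learns a low-rank update `B @ A` with `B` of shape `d_out × r` and `A` of shape `r × d_in`, i.e. `r × (d_out + d_in)` parameters. A short worked sketch for rank 16, assuming a hypothetical square 3072 × 3072 projection (this is an illustration, not a claim about which FLUX layers the trainer adapted):

```python
def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Extra trainable parameters LoRA adds to one d_out x d_in linear layer.

    The update is B @ A with B: (d_out, rank) and A: (rank, d_in).
    """
    return rank * (d_out + d_in)


# Hypothetical layer shape for illustration only.
d = 3072
full = d * d
lora = lora_param_count(d, d, rank=16)
print(f"full: {full:,}  LoRA r=16: {lora:,}  ratio: {lora / full:.2%}")
# → full: 9,437,184  LoRA r=16: 98,304  ratio: 1.04%
```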

## Contribute your own examples

You can use the [community tab](https://huggingface.co/BootesVoid/cme1nlmc40afpgwtcpc42gvjm_cme7g43p30bf96aq1sh548pe8/discussions) to add images that show off what you’ve made with this LoRA.

|

Dejiat/blockassist-bc-savage_unseen_bobcat_1755627285

|

Dejiat

| 2025-08-19T18:15:34Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"savage unseen bobcat",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T18:15:18Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- savage unseen bobcat

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

ElizabethMohan1872002/t5-sum-colab

|

ElizabethMohan1872002

| 2025-08-19T18:13:31Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"t5",

"text2text-generation",

"generated_from_trainer",

"base_model:google/flan-t5-small",

"base_model:finetune:google/flan-t5-small",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] | null | 2025-08-19T18:13:20Z |

---

library_name: transformers

license: apache-2.0

base_model: google/flan-t5-small

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: t5-sum-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-sum-colab

This model is a fine-tuned version of [google/flan-t5-small](https://huggingface.co/google/flan-t5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.3480

- Rouge1: 41.6552

- Rouge2: 17.0275

- Rougel: 36.2755

- Rougelsum: 36.2924
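ROUGE-1 measures unigram overlap between a generated summary and a reference; the scores above appear to be F-measures scaled by 100. A simplified sketch, assuming a hypothetical helper `rouge1_f1` with whitespace tokenization, lowercasing and no stemming; libraries such as `rouge_score` tokenize differently, so their numbers will not match this exactly.

```python
from collections import Counter


def rouge1_f1(prediction: str, reference: str) -> float:
    """Unigram-overlap F1 between a prediction and a reference (simplified ROUGE-1)."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    # Clipped overlap: each reference token can be matched at most as often as it occurs
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


score = rouge1_f1("the cat sat on the mat", "the cat lay on the mat")
print(f"{100 * score:.2f}")  # → 83.33
```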

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH_FUSED with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |
|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|
| 1.3505 | 1.0 | 3115 | 1.1838 | 40.3409 | 17.4956 | 35.7888 | 35.8149 |
| 1.3026 | 2.0 | 6230 | 1.1448 | 41.1275 | 18.0429 | 36.1663 | 36.2066 |
| 1.2295 | 3.0 | 9345 | 1.1389 | 41.3104 | 18.0181 | 36.2726 | 36.2897 |
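The card lists a linear scheduler and reports no warmup. Assuming zero warmup, the learning rate decays from 3e-05 to 0 over the 9,345 steps in the table above (3 epochs × 3,115 steps). The sketch below mirrors the formula of Transformers' `get_linear_schedule_with_warmup`, written as a standalone function `linear_lr` (our name, not a library API):

```python
def linear_lr(step: int, base_lr: float, total_steps: int, warmup_steps: int = 0) -> float:
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 3e-05
TOTAL_STEPS = 3 * 3115  # 9345, matching the last row of the results table

for step in (0, 3115, 6230, 9345):
    print(step, f"{linear_lr(step, BASE_LR, TOTAL_STEPS):.2e}")
# prints 3.00e-05, 2.00e-05, 1.00e-05 and 0.00e+00 at the end of each epoch boundary
```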

### Framework versions

- Transformers 4.55.2

- Pytorch 2.8.0+cu126

- Datasets 4.0.0

- Tokenizers 0.21.4

|

AnonymousCS/xlmr_swedish_immigration3

|

AnonymousCS

| 2025-08-19T18:00:16Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"base_model:FacebookAI/xlm-roberta-large",

"base_model:finetune:FacebookAI/xlm-roberta-large",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2025-08-19T17:55:05Z |

---

library_name: transformers

license: mit

base_model: FacebookAI/xlm-roberta-large

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: xlmr_swedish_immigration3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlmr_swedish_immigration3

This model is a fine-tuned version of [FacebookAI/xlm-roberta-large](https://huggingface.co/FacebookAI/xlm-roberta-large) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3970

- Accuracy: 0.8615

- 1-f1: 0.7857

- 1-recall: 0.7674

- 1-precision: 0.8049

- Balanced Acc: 0.8377
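All of the class-1 metrics and the balanced accuracy follow from a 2×2 confusion matrix. A minimal sketch, assuming "Balanced Acc" is the mean of per-class recall (as in scikit-learn's `balanced_accuracy_score`). The counts below are hypothetical, not reported in the card; they were chosen because they reproduce the figures above.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy, class-1 F1/recall/precision and balanced accuracy from confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)          # recall for class 1
    specificity = tn / (tn + fp)     # recall for class 0
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "1-f1": 2 * precision * recall / (precision + recall),
        "1-recall": recall,
        "1-precision": precision,
        "balanced_acc": (recall + specificity) / 2,
    }


# Hypothetical counts (130 examples, 43 of them class 1).
metrics = binary_metrics(tp=33, fp=8, fn=10, tn=79)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
# accuracy 0.8615, 1-f1 0.7857, 1-recall 0.7674, 1-precision 0.8049, balanced_acc 0.8377
```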

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH_FUSED with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 15

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | 1-f1 | 1-recall | 1-precision | Balanced Acc |
|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:--------:|:-----------:|:------------:|
| 0.4995 | 1.0 | 5 | 0.4609 | 0.8538 | 0.7397 | 0.6279 | 0.9 | 0.7967 |
| 0.361 | 2.0 | 10 | 0.4179 | 0.8231 | 0.7473 | 0.7907 | 0.7083 | 0.8149 |
| 0.3011 | 3.0 | 15 | 0.3806 | 0.8692 | 0.7792 | 0.6977 | 0.8824 | 0.8258 |
| 0.3618 | 4.0 | 20 | 0.4251 | 0.8154 | 0.7391 | 0.7907 | 0.6939 | 0.8091 |
| 0.2286 | 5.0 | 25 | 0.3762 | 0.8692 | 0.7901 | 0.7442 | 0.8421 | 0.8376 |
| 0.5345 | 6.0 | 30 | 0.3777 | 0.8692 | 0.7848 | 0.7209 | 0.8611 | 0.8317 |
| 0.1878 | 7.0 | 35 | 0.3679 | 0.8769 | 0.8 | 0.7442 | 0.8649 | 0.8434 |
| 0.1607 | 8.0 | 40 | 0.3851 | 0.8692 | 0.7901 | 0.7442 | 0.8421 | 0.8376 |
| 0.1597 | 9.0 | 45 | 0.3970 | 0.8615 | 0.7857 | 0.7674 | 0.8049 | 0.8377 |

### Framework versions

- Transformers 4.56.0.dev0

- Pytorch 2.8.0+cu126

- Datasets 4.0.0

- Tokenizers 0.21.4

|

Najin06/esp32-misterius

|

Najin06

| 2025-08-19T17:57:16Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gpt2",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-18T06:10:24Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

vwzyrraz7l/blockassist-bc-tall_hunting_vulture_1755624371

|

vwzyrraz7l

| 2025-08-19T17:54:47Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tall hunting vulture",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T17:54:44Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tall hunting vulture

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

AnonymousCS/xlmr_spanish_immigration3

|

AnonymousCS

| 2025-08-19T17:54:32Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"base_model:FacebookAI/xlm-roberta-large",

"base_model:finetune:FacebookAI/xlm-roberta-large",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2025-08-19T17:32:26Z |

---

library_name: transformers

license: mit

base_model: FacebookAI/xlm-roberta-large

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: xlmr_spanish_immigration3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlmr_spanish_immigration3

This model is a fine-tuned version of [FacebookAI/xlm-roberta-large](https://huggingface.co/FacebookAI/xlm-roberta-large) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3622

- Accuracy: 0.9

- 1-f1: 0.8267

- 1-recall: 0.7209

- 1-precision: 0.9688

- Balanced Acc: 0.8547

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 42

- optimizer: Use OptimizerNames.ADAMW_TORCH_FUSED with betas=(0.9,0.999) and epsilon=1e-08 and optimizer_args=No additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 15

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | 1-f1 | 1-recall | 1-precision | Balanced Acc |
|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:--------:|:-----------:|:------------:|
| 0.4144 | 1.0 | 5 | 0.4054 | 0.8769 | 0.7838 | 0.6744 | 0.9355 | 0.8257 |
| 0.2497 | 2.0 | 10 | 0.3461 | 0.8923 | 0.825 | 0.7674 | 0.8919 | 0.8607 |
| 0.2908 | 3.0 | 15 | 0.3786 | 0.9 | 0.8267 | 0.7209 | 0.9688 | 0.8547 |
| 0.1783 | 4.0 | 20 | 0.3622 | 0.9 | 0.8267 | 0.7209 | 0.9688 | 0.8547 |
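The headline evaluation numbers match the final (epoch 4) row, although epoch 2 has both the lowest validation loss and the highest balanced accuracy. A small sketch that parses the rows of the table above and picks the best epoch by a chosen metric; the column names and `parse_rows` helper are our own, and the Trainer's actual checkpoint selection depends on settings such as `load_best_model_at_end`, which the card does not report.

```python
TABLE = """\
| 0.4144 | 1.0 | 5 | 0.4054 | 0.8769 | 0.7838 | 0.6744 | 0.9355 | 0.8257 |
| 0.2497 | 2.0 | 10 | 0.3461 | 0.8923 | 0.825 | 0.7674 | 0.8919 | 0.8607 |
| 0.2908 | 3.0 | 15 | 0.3786 | 0.9 | 0.8267 | 0.7209 | 0.9688 | 0.8547 |
| 0.1783 | 4.0 | 20 | 0.3622 | 0.9 | 0.8267 | 0.7209 | 0.9688 | 0.8547 |
"""

COLUMNS = ["train_loss", "epoch", "step", "val_loss", "accuracy",
           "f1_1", "recall_1", "precision_1", "balanced_acc"]


def parse_rows(table: str) -> list:
    """Turn Markdown table rows (without header) into dicts of floats."""
    rows = []
    for line in table.strip().splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        rows.append(dict(zip(COLUMNS, map(float, cells))))
    return rows


rows = parse_rows(TABLE)
best_loss = min(rows, key=lambda r: r["val_loss"])
best_bacc = max(rows, key=lambda r: r["balanced_acc"])
print(f"lowest validation loss: epoch {best_loss['epoch']:g} ({best_loss['val_loss']})")
print(f"highest balanced accuracy: epoch {best_bacc['epoch']:g} ({best_bacc['balanced_acc']})")
```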

### Framework versions

- Transformers 4.56.0.dev0

- Pytorch 2.8.0+cu126

- Datasets 4.0.0

- Tokenizers 0.21.4

|

lilTAT/blockassist-bc-gentle_rugged_hare_1755625924

|

lilTAT

| 2025-08-19T17:52:45Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"gentle rugged hare",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T17:52:35Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- gentle rugged hare

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

chainway9/blockassist-bc-untamed_quick_eel_1755624212

|

chainway9

| 2025-08-19T17:49:50Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"untamed quick eel",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T17:49:46Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- untamed quick eel

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

numind/NuExtract-2.0-8B-GPTQ

|

numind

| 2025-08-19T17:42:43Z | 346 | 4 |

transformers

|

[

"transformers",

"safetensors",

"qwen2_5_vl",

"image-to-text",

"image-text-to-text",

"conversational",

"base_model:numind/NuExtract-2.0-8B",

"base_model:quantized:numind/NuExtract-2.0-8B",

"license:mit",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"gptq",

"region:us"

] |

image-text-to-text

| 2025-06-06T08:38:54Z |

---

library_name: transformers

license: mit

base_model:

- numind/NuExtract-2.0-8B

pipeline_tag: image-text-to-text

---

<p align="center">

<a href="https://nuextract.ai/">

<img src="logo_nuextract.svg" width="200"/>

</a>

</p>

<p align="center">

🖥️ <a href="https://nuextract.ai/">API / Platform</a>   |   📑 <a href="https://numind.ai/blog">Blog</a>   |   🗣️ <a href="https://discord.gg/3tsEtJNCDe">Discord</a>

</p>

# NuExtract 2.0 8B by NuMind 🔥

NuExtract 2.0 is a family of models trained specifically for structured information extraction tasks. It supports multimodal (text and image) inputs and is multilingual.

We provide several versions of different sizes, all based on pre-trained models from the QwenVL family.

| Model Size | Model Name | Base Model | License | Huggingface Link |
|------------|------------|------------|---------|------------------|
| 2B | NuExtract-2.0-2B | [Qwen2-VL-2B-Instruct](https://huggingface.co/Qwen/Qwen2-VL-2B-Instruct) | MIT | 🤗 [NuExtract-2.0-2B](https://huggingface.co/numind/NuExtract-2.0-2B) |
| 4B | NuExtract-2.0-4B | [Qwen2.5-VL-3B-Instruct](https://huggingface.co/Qwen/Qwen2.5-VL-3B-Instruct) | Qwen Research License | 🤗 [NuExtract-2.0-4B](https://huggingface.co/numind/NuExtract-2.0-4B) |
| 8B | NuExtract-2.0-8B | [Qwen2.5-VL-7B-Instruct](https://huggingface.co/Qwen/Qwen2.5-VL-7B-Instruct) | MIT | 🤗 [NuExtract-2.0-8B](https://huggingface.co/numind/NuExtract-2.0-8B) |

❗️Note: `NuExtract-2.0-2B` is based on Qwen2-VL rather than Qwen2.5-VL because the smallest Qwen2.5-VL model (3B) has a more restrictive, non-commercial license. We therefore include `NuExtract-2.0-2B` as a small model option that can be used commercially.

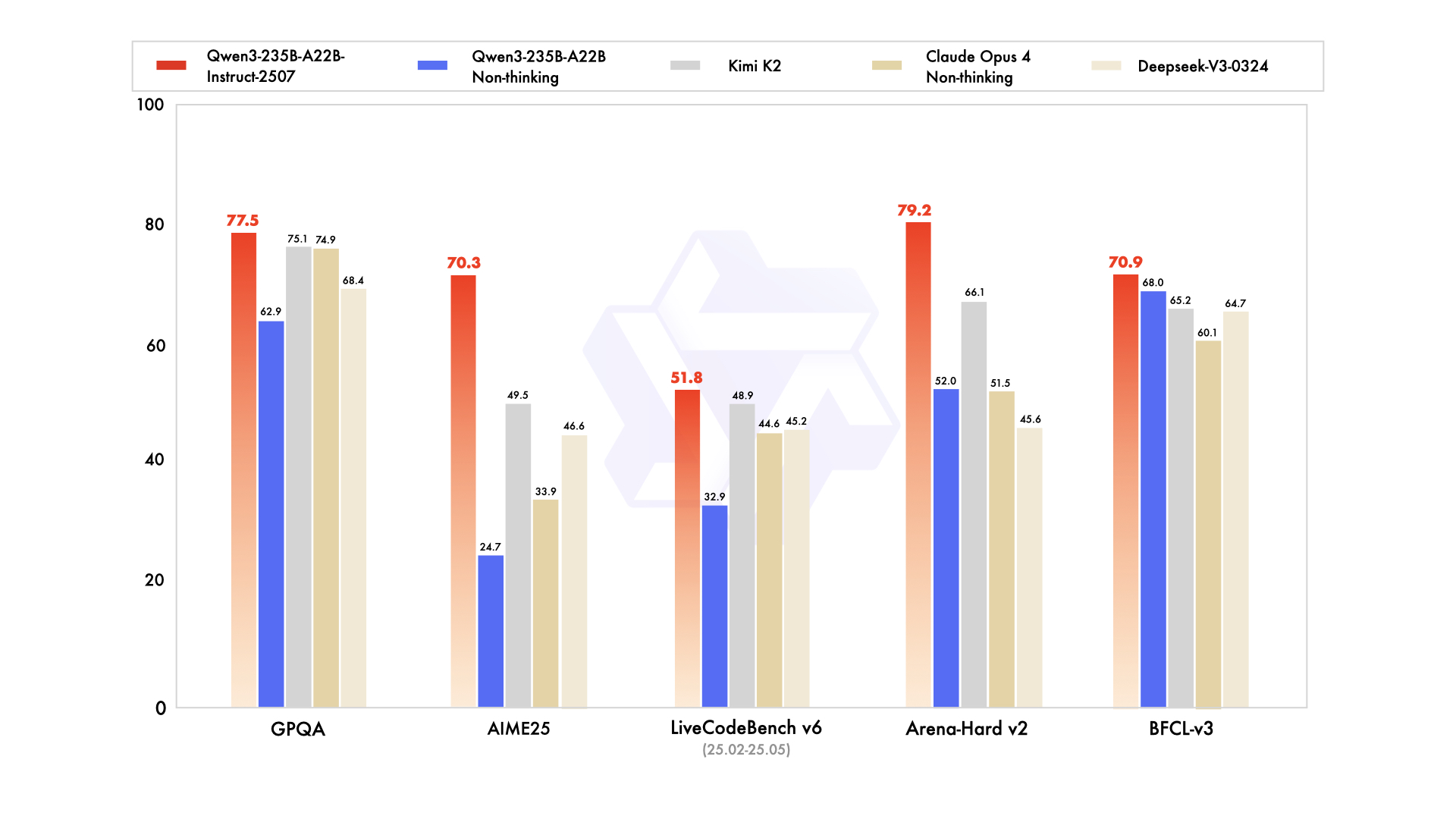

## Benchmark

Performance on a collection of ~1,000 diverse extraction examples containing both text and image inputs.

<a href="https://nuextract.ai/">

<img src="nuextract2_bench.png" width="500"/>

</a>

## Overview

To use the model, provide an input text or image and a JSON template describing the information you need to extract. The template should be a JSON object specifying field names and their expected types.

Supported types include:

* `verbatim-string` - instructs the model to extract text that is present verbatim in the input.

* `string` - a generic string field that can incorporate paraphrasing/abstraction.

* `integer` - a whole number.

* `number` - a whole or decimal number.

* `date-time` - ISO formatted date.

* Array of any of the above types (e.g. `["string"]`)

* `enum` - a choice from a set of possible answers (represented in the template as an array of options, e.g. `["yes", "no", "maybe"]`).

* `multi-label` - an enum that can have multiple possible answers (represented in the template as a double-wrapped array, e.g. `[["A", "B", "C"]]`).

If the model does not identify relevant information for a field, it will return `null` or `[]` (for arrays and multi-labels).

The following is an example template:

```json

{

"first_name": "verbatim-string",

"last_name": "verbatim-string",

"description": "string",

"age": "integer",

"gpa": "number",

"birth_date": "date-time",

"nationality": ["France", "England", "Japan", "USA", "China"],

"languages_spoken": [["English", "French", "Japanese", "Mandarin", "Spanish"]]

}

```

An example output:

```json

{

"first_name": "Susan",

"last_name": "Smith",

"description": "A student studying computer science.",

"age": 20,

"gpa": 3.7,

"birth_date": "2005-03-01",

"nationality": "England",

"languages_spoken": ["English", "French"]

}

```
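Because the template fixes each field's type, a parsed output can be checked against it before use. The sketch below is not part of NuExtract or `transformers`; `mismatched_fields` is a hypothetical helper that applies the type rules listed above (nulls allowed for scalars, arrays and multi-labels returned as lists). One assumption to note: a single-item list whose item is a type name (e.g. `["string"]`) is read as an array, any other list as an enum.

```python
from datetime import datetime

SCALAR_TYPES = {"verbatim-string", "string", "integer", "number", "date-time"}


def scalar_ok(kind: str, value) -> bool:
    """Check one value against a scalar type name; null is accepted for any scalar."""
    if value is None:
        return True
    if kind in ("verbatim-string", "string"):
        return isinstance(value, str)
    if kind == "integer":
        return isinstance(value, int) and not isinstance(value, bool)
    if kind == "number":
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    if kind == "date-time":
        if not isinstance(value, str):
            return False
        try:
            datetime.fromisoformat(value)
        except ValueError:
            return False
        return True
    raise ValueError(f"unknown scalar type: {kind!r}")


def field_ok(spec, value) -> bool:
    """Check one output value against its template entry."""
    if isinstance(spec, str):  # scalar type name
        return scalar_ok(spec, value)
    if isinstance(spec, list) and len(spec) == 1 and isinstance(spec[0], list):
        options = spec[0]  # multi-label: [[...options...]]
        return isinstance(value, list) and all(v in options for v in value)
    if isinstance(spec, list) and len(spec) == 1 and isinstance(spec[0], str) and spec[0] in SCALAR_TYPES:
        return isinstance(value, list) and all(scalar_ok(spec[0], v) for v in value)  # array
    if isinstance(spec, list):  # enum: [...options...]
        return value is None or value in spec
    raise ValueError(f"unsupported template entry: {spec!r}")


def mismatched_fields(template: dict, output: dict) -> list:
    """Names of fields whose values do not fit the template, in template order."""
    return [name for name, spec in template.items() if not field_ok(spec, output.get(name))]


template = {
    "first_name": "verbatim-string", "last_name": "verbatim-string",
    "description": "string", "age": "integer", "gpa": "number",
    "birth_date": "date-time",
    "nationality": ["France", "England", "Japan", "USA", "China"],
    "languages_spoken": [["English", "French", "Japanese", "Mandarin", "Spanish"]],
}
output = {
    "first_name": "Susan", "last_name": "Smith",
    "description": "A student studying computer science.",
    "age": 20, "gpa": 3.7, "birth_date": "2005-03-01",
    "nationality": "England", "languages_spoken": ["English", "French"],
}
print(mismatched_fields(template, output))  # → []
print(mismatched_fields(template, {**output, "age": "twenty", "nationality": "Mars"}))  # → ['age', 'nationality']
```

In practice the model returns a JSON string, so you would first parse it with `json.loads` before calling the checker.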

⚠️ We recommend using NuExtract with a temperature at or very close to 0. Some inference frameworks, such as Ollama, use a default of 0.7, which is not well suited to many extraction tasks.

## Using NuExtract with 🤗 Transformers

```python

import torch

from transformers import AutoProcessor

from gptqmodel import GPTQModel

model_name = "numind/NuExtract-2.0-8B-GPTQ"

# model_name = "numind/NuExtract-2.0-4B-GPTQ"

model = GPTQModel.load(model_name)

processor = AutoProcessor.from_pretrained(model_name,

trust_remote_code=True,

padding_side='left',

use_fast=True)

# You can set min_pixels and max_pixels according to your needs, such as a token range of 256-1280, to balance performance and cost.

# min_pixels = 256*28*28

# max_pixels = 1280*28*28

# processor = AutoProcessor.from_pretrained(model_name, min_pixels=min_pixels, max_pixels=max_pixels)

```
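The `min_pixels`/`max_pixels` comment above budgets image tokens in units of 28×28 pixels. The sketch below estimates how many visual tokens an image of a given size would use under that budget. It is modelled on the resizing step in `qwen_vl_utils` as we understand it (round each side to a multiple of 28, then rescale while keeping the aspect ratio so the pixel count lands between the minimum and maximum); `estimate_visual_tokens` is a hypothetical name and the library's exact rounding may differ.

```python
import math

PATCH = 28  # one visual token per 28x28 pixel block, as the card's comment implies


def round_by(n: float, f: int) -> int:
    return round(n / f) * f


def floor_by(n: float, f: int) -> int:
    return math.floor(n / f) * f


def ceil_by(n: float, f: int) -> int:
    return math.ceil(n / f) * f


def estimate_visual_tokens(height: int, width: int,
                           min_pixels: int = 256 * PATCH * PATCH,
                           max_pixels: int = 1280 * PATCH * PATCH) -> int:
    """Approximate visual-token count after resizing into the pixel budget."""
    h = max(PATCH, round_by(height, PATCH))
    w = max(PATCH, round_by(width, PATCH))
    if h * w > max_pixels:
        # Shrink, keeping the aspect ratio; round down to stay under the budget
        beta = math.sqrt(height * width / max_pixels)
        h = floor_by(height / beta, PATCH)
        w = floor_by(width / beta, PATCH)
    elif h * w < min_pixels:
        # Enlarge, keeping the aspect ratio; round up to reach the minimum
        beta = math.sqrt(min_pixels / (height * width))
        h = ceil_by(height * beta, PATCH)
        w = ceil_by(width * beta, PATCH)
    return (h // PATCH) * (w // PATCH)


for size in [(100, 100), (1024, 1024), (2480, 3508)]:
    print(size, estimate_visual_tokens(*size))
```

Lowering `max_pixels` caps the token cost of large scans at the price of detail; raising `min_pixels` upsamples small images.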

You will need the following function to handle loading of image input data:

```python
def process_all_vision_info(messages, examples=None):
    """
    Process vision information from both messages and in-context examples, supporting batch processing.

    Args:
        messages: List of message dictionaries (single input) OR list of message lists (batch input)
        examples: Optional list of example dictionaries (single input) OR list of example lists (batch)

    Returns:
        A flat list of all images in the correct order:
        - For single input: example images followed by message images
        - For batch input: interleaved as (item1 examples, item1 input, item2 examples, item2 input, etc.)
        - Returns None if no images were found
    """
    from qwen_vl_utils import process_vision_info, fetch_image

    # Helper function to extract images from examples
    def extract_example_images(example_item):
        if not example_item:
            return []

        # Handle both list of examples and single example
        examples_to_process = example_item if isinstance(example_item, list) else [example_item]
        images = []

        for example in examples_to_process:
            if isinstance(example.get('input'), dict) and example['input'].get('type') == 'image':
                images.append(fetch_image(example['input']))

        return images

    # Normalize inputs to always be batched format
    is_batch = messages and isinstance(messages[0], list)
    messages_batch = messages if is_batch else [messages]
    is_batch_examples = examples and isinstance(examples, list) and (isinstance(examples[0], list) or examples[0] is None)
    examples_batch = examples if is_batch_examples else ([examples] if examples is not None else None)

    # Ensure examples batch matches messages batch if provided
    if examples and len(examples_batch) != len(messages_batch):
        if not is_batch and len(examples_batch) == 1:
            # Single example set for a single input is fine
            pass
        else:
            raise ValueError("Examples batch length must match messages batch length")

    # Process all inputs, maintaining correct order
    all_images = []
    for i, message_group in enumerate(messages_batch):
        # Get example images for this input
        if examples and i < len(examples_batch):
            input_example_images = extract_example_images(examples_batch[i])
            all_images.extend(input_example_images)

        # Get message images for this input
        input_message_images = process_vision_info(message_group)[0] or []
        all_images.extend(input_message_images)

    return all_images if all_images else None
```

For example, to perform a basic extraction of names from a text document:

```python
template = """{"names": ["string"]}"""
document = "John went to the restaurant with Mary. James went to the cinema."

# prepare the user message content
messages = [{"role": "user", "content": document}]
text = processor.tokenizer.apply_chat_template(
    messages,
    template=template,  # template is specified here
    tokenize=False,
    add_generation_prompt=True,
)

print(text)
# <|im_start|>user
# # Template:
# {"names": ["string"]}
# # Context:
# John went to the restaurant with Mary. James went to the cinema.<|im_end|>
# <|im_start|>assistant

image_inputs = process_all_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    padding=True,
    return_tensors="pt",
).to("cuda")

# we choose greedy sampling here, which works well for most information extraction tasks
generation_config = {"do_sample": False, "num_beams": 1, "max_new_tokens": 2048}

# Inference: Generation of the output
generated_ids = model.generate(
    **inputs,
    **generation_config
)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)

print(output_text)
# ['{"names": ["John", "Mary", "James"]}']
```
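Because the model returns its answer as a JSON string, it can be worth checking that string before using it. The sketch below is a hypothetical helper (`parse_against_template` is not a NuExtract API) that parses the output with the standard `json` module and lists any top-level template keys missing from it. It only compares top-level keys and does not validate nested structure or value types.

```python
import json
from typing import Any, Dict, List, Tuple


def parse_against_template(template: str, output: str) -> Tuple[Dict[str, Any], List[str]]:
    """Parse a model output and list the top-level template keys it lacks.

    Raises json.JSONDecodeError if the output is not valid JSON.
    Nested structure and value types are not checked.
    """
    expected = json.loads(template)
    parsed = json.loads(output)
    missing = [key for key in expected if key not in parsed]
    return parsed, missing


template = """{"names": ["string"]}"""
parsed, missing = parse_against_template(template, '{"names": ["John", "Mary", "James"]}')
print(parsed["names"], missing)  # ['John', 'Mary', 'James'] []
```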

<details>

<summary>In-Context Examples</summary>

Sometimes the model might not perform as well as we want because our task is challenging or involves some degree of ambiguity. Alternatively, we may want the model to follow some specific formatting, or just give it a bit more help. In cases like this it can be valuable to provide "in-context examples" to help NuExtract better understand the task.

To do so, we can provide a list of examples (dictionaries of input/output pairs). In the example below, we show the model that we want the extracted names in capital letters with `-` on either side (for the sake of illustration). Providing multiple examples usually leads to better results.

```python
template = """{"names": ["string"]}"""
document = "John went to the restaurant with Mary. James went to the cinema."
examples = [
    {
        "input": "Stephen is the manager at Susan's store.",
        "output": """{"names": ["-STEPHEN-", "-SUSAN-"]}"""
    }
]

messages = [{"role": "user", "content": document}]
text = processor.tokenizer.apply_chat_template(
    messages,
    template=template,
    examples=examples,  # examples provided here
    tokenize=False,
    add_generation_prompt=True,
)

image_inputs = process_all_vision_info(messages, examples)
inputs = processor(
    text=[text],
    images=image_inputs,
    padding=True,
    return_tensors="pt",
).to("cuda")

# we choose greedy sampling here, which works well for most information extraction tasks
generation_config = {"do_sample": False, "num_beams": 1, "max_new_tokens": 2048}

# Inference: Generation of the output
generated_ids = model.generate(
    **inputs,
    **generation_config
)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)

print(output_text)
# ['{"names": ["-JOHN-", "-MARY-", "-JAMES-"]}']
```
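Hand-writing example outputs as JSON strings, as above, is easy to get wrong. One option, sketched here under the assumption that each example only needs `input` and `output` keys with `output` given as a JSON string (as in the example above), is to keep the outputs as Python dicts and serialize them with `json.dumps`. `make_examples` is a hypothetical helper, not part of NuExtract.

```python
import json
from typing import Any, Dict, List, Tuple


def make_examples(pairs: List[Tuple[Any, Dict[str, Any]]]) -> List[Dict[str, Any]]:
    """Turn (input, output_dict) pairs into the examples format used above.

    The input is passed through unchanged (a string or an image dict);
    the output dict is serialized to a JSON string.
    """
    return [
        {"input": example_input, "output": json.dumps(example_output)}
        for example_input, example_output in pairs
    ]


examples = make_examples([
    ("Stephen is the manager at Susan's store.", {"names": ["-STEPHEN-", "-SUSAN-"]}),
])
print(examples[0]["output"])  # {"names": ["-STEPHEN-", "-SUSAN-"]}
```

The resulting list can be passed as `examples=` to `apply_chat_template` in the same way as the hand-written one.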

</details>

<details>

<summary>Image Inputs</summary>

To give NuExtract an image input instead of text, provide a dictionary specifying the image file as the message content instead of a string (e.g. `{"type": "image", "image": "file://image.jpg"}`).

You can also specify an image URL (e.g. `{"type": "image", "image": "http://path/to/your/image.jpg"}`) or base64 encoding (e.g. `{"type": "image", "image": "data:image;base64,/9j/..."}`).

```python
template = """{"store": "verbatim-string"}"""
document = {"type": "image", "image": "file://1.jpg"}
messages = [{"role": "user", "content": [document]}]

text = processor.tokenizer.apply_chat_template(
    messages,
    template=template,
    tokenize=False,
    add_generation_prompt=True,
)

image_inputs = process_all_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    padding=True,
    return_tensors="pt",
).to("cuda")

generation_config = {"do_sample": False, "num_beams": 1, "max_new_tokens": 2048}

# Inference: Generation of the output
generated_ids = model.generate(
    **inputs,
    **generation_config
)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)

print(output_text)
# ['{"store": "Trader Joe\'s"}']
```
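The base64 form mentioned above can be built from a local file with the standard library alone. The sketch below assumes the `data:image;base64,` prefix shown in the text is accepted as-is; `image_content_from_file` is a hypothetical helper, not part of NuExtract or `qwen_vl_utils`.

```python
import base64
import os
import tempfile
from pathlib import Path
from typing import Dict


def image_content_from_file(path: str) -> Dict[str, str]:
    """Build an image content dict whose "image" field is a base64 data URI."""
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return {"type": "image", "image": f"data:image;base64,{encoded}"}


# Demo with a throwaway file holding only the first three bytes of a JPEG header.
with tempfile.NamedTemporaryFile(suffix=".jpg", delete=False) as tmp:
    tmp.write(b"\xff\xd8\xff")
content = image_content_from_file(tmp.name)
os.remove(tmp.name)
print(content["image"])  # data:image;base64,/9j/
```

The returned dict could then take the place of `document` above, e.g. `messages = [{"role": "user", "content": [image_content_from_file("1.jpg")]}]`.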

</details>

<details>

<summary>Batch Inference</summary>

```python
inputs = [
    # image input with no ICL examples
    {
        "document": {"type": "image", "image": "file://0.jpg"},
        "template": """{"store_name": "verbatim-string"}""",
    },
    # image input with 1 ICL example
    {
        "document": {"type": "image", "image": "file://0.jpg"},
        "template": """{"store_name": "verbatim-string"}""",
        "examples": [
            {
                "input": {"type": "image", "image": "file://1.jpg"},
                "output": """{"store_name": "Trader Joe's"}""",
            }
        ],
    },
    # text input with no ICL examples
    {
        "document": {"type": "text", "text": "John went to the restaurant with Mary. James went to the cinema."},
        "template": """{"names": ["string"]}""",
    },
    # text input with ICL example
    {
        "document": {"type": "text", "text": "John went to the restaurant with Mary. James went to the cinema."},
        "template": """{"names": ["string"]}""",
        "examples": [
            {
                "input": "Stephen is the manager at Susan's store.",
                "output": """{"names": ["STEPHEN", "SUSAN"]}"""
            }
        ],
    },
]

# messages should be a list of lists for batch processing
messages = [
    [
        {
            "role": "user",
            "content": [x['document']],
        }
    ]
    for x in inputs
]

# apply chat template to each example individually
texts = [
    processor.tokenizer.apply_chat_template(
        messages[i],  # Now this is a list containing one message
        template=x['template'],
        examples=x.get('examples', None),
        tokenize=False,
        add_generation_prompt=True)
    for i, x in enumerate(inputs)
]

image_inputs = process_all_vision_info(messages, [x.get('examples') for x in inputs])
inputs = processor(
    text=texts,
    images=image_inputs,
    padding=True,
    return_tensors="pt",
).to("cuda")

generation_config = {"do_sample": False, "num_beams": 1, "max_new_tokens": 2048}

# Batch Inference
generated_ids = model.generate(**inputs, **generation_config)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_texts = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)

for y in output_texts:
    print(y)
# {"store_name": "WAL-MART"}
# {"store_name": "Walmart"}
# {"names": ["John", "Mary", "James"]}
# {"names": ["JOHN", "MARY", "JAMES"]}
```
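With `padding=True`, every item in a batch is padded to the longest one and processed together, so a very long list of inputs may not fit in GPU memory at once. A minimal sketch, assuming you want fixed-size slices that are each run through the batch code above; `chunked` is a hypothetical helper, not a library function. Note also that the example above rebinds `inputs` to the processor output, so keep the original list under another name if you need it afterwards.

```python
from typing import Iterator, List, TypeVar

T = TypeVar("T")


def chunked(items: List[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive slices of at most batch_size items, preserving order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


batches = list(chunked(["a", "b", "c", "d", "e"], 2))
print(batches)  # [['a', 'b'], ['c', 'd'], ['e']]
```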

</details>

<details>

<summary>Template Generation</summary>

If you want to convert existing schema files in other formats (e.g. XML, YAML) or start from an example, NuExtract 2.0 models can automatically generate a template for you.

For example, to convert XML into a NuExtract template:

```python
xml_template = """<SportResult>
    <Date></Date>
    <Sport></Sport>
    <Venue></Venue>
    <HomeTeam></HomeTeam>
    <AwayTeam></AwayTeam>
    <HomeScore></HomeScore>
    <AwayScore></AwayScore>
    <TopScorer></TopScorer>
</SportResult>"""

messages = [
    {
        "role": "user",
        "content": [{"type": "text", "text": xml_template}],
    }
]
text = processor.apply_chat_template(
    messages, tokenize=False, add_generation_prompt=True,
)
image_inputs = process_all_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    padding=True,
    return_tensors="pt",
).to("cuda")

# reuses generation_config defined in the examples above
generated_ids = model.generate(
    **inputs,
    **generation_config
)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)

print(output_text[0])
# {
#     "Date": "date-time",
#     "Sport": "verbatim-string",
#     "Venue": "verbatim-string",
#     "HomeTeam": "verbatim-string",
#     "AwayTeam": "verbatim-string",
#     "HomeScore": "integer",
#     "AwayScore": "integer",
#     "TopScorer": "verbatim-string"
# }
```
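For simple, flat XML schemas like the one above, a rough starting template can also be derived without the model. The hypothetical sketch below (`xml_to_skeleton_template` is illustrative only) maps each child element of the root to `"verbatim-string"`. Unlike the model output shown above, it cannot infer more specific types such as `"integer"` or `"date-time"`, so those would need to be edited by hand.

```python
import json
import xml.etree.ElementTree as ET


def xml_to_skeleton_template(xml_text: str, default_type: str = "verbatim-string") -> str:
    """Map each direct child element of the XML root to default_type, in document order."""
    root = ET.fromstring(xml_text)
    skeleton = {child.tag: default_type for child in root}
    return json.dumps(skeleton, indent=4)


xml_snippet = """<SportResult>
    <Date></Date>
    <HomeScore></HomeScore>
</SportResult>"""
print(xml_to_skeleton_template(xml_snippet))
```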

For example, to generate a template from a natural-language description:

```python
description = "I would like to extract important details from the contract."

messages = [
    {
        "role": "user",
        "content": [{"type": "text", "text": description}],
    }
]
text = processor.apply_chat_template(
    messages, tokenize=False, add_generation_prompt=True,
)
image_inputs = process_all_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    padding=True,
    return_tensors="pt",
).to("cuda")

generated_ids = model.generate(
    **inputs,
    **generation_config
)
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)

print(output_text[0])
# {
#     "Contract": {
#         "Title": "verbatim-string",
#         "Description": "verbatim-string",
#         "Terms": [
#             {
#                 "Term": "verbatim-string",
#                 "Description": "verbatim-string"
#             }
#         ],
#         "Date": "date-time",
#         "Signatory": "verbatim-string"
#     }
# }
```
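A generated template is worth reviewing before use. The hypothetical sketch below (`leaf_types` and `KNOWN_TYPES` are illustrative names) walks a template made of nested dicts and lists and collects the type strings at its leaves, so they can be compared with the types you expect. The set of known types is an assumption taken from the examples in this card (`"string"`, `"verbatim-string"`, `"integer"`, `"date-time"`); NuExtract may support others.

```python
import json
from typing import Any, Set

# Assumption: the type names seen in this card's examples; NuExtract may accept more.
KNOWN_TYPES = {"string", "verbatim-string", "integer", "date-time"}


def leaf_types(node: Any) -> Set[str]:
    """Recursively collect the type strings at the leaves of a template."""
    if isinstance(node, dict):
        return set().union(*(leaf_types(value) for value in node.values()))
    if isinstance(node, list):
        return set().union(*(leaf_types(item) for item in node))
    return {node}


generated = json.loads("""{
    "Contract": {
        "Title": "verbatim-string",
        "Terms": [{"Term": "verbatim-string", "Description": "verbatim-string"}],
        "Date": "date-time"
    }
}""")
types = leaf_types(generated)
print(sorted(types))        # ['date-time', 'verbatim-string']
print(types - KNOWN_TYPES)  # set()
```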

</details>

## Fine-Tuning

You can find a fine-tuning tutorial notebook in the [cookbooks](https://github.com/numindai/nuextract/tree/main/cookbooks) folder of the [GitHub repo](https://github.com/numindai/nuextract/tree/main).

## vLLM Deployment

Run the command below to serve an OpenAI-compatible API:

```bash

vllm serve numind/NuExtract-2.0-8B --trust_remote_code --limit-mm-per-prompt image=6 --chat-template-content-format openai

```

If you run into out-of-memory errors, lower the maximum context length with `--max-model-len`.

Send requests to the model as follows:

```python
import json
from openai import OpenAI

openai_api_key = "EMPTY"
openai_api_base = "http://localhost:8000/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

chat_response = client.chat.completions.create(
    model="numind/NuExtract-2.0-8B",
    temperature=0,
    messages=[
        {
            "role": "user",
            "content": [{"type": "text", "text": "Yesterday I went shopping at Bunnings"}],
        },
    ],
    extra_body={
        "chat_template_kwargs": {
            "template": json.dumps(json.loads("""{\"store\": \"verbatim-string\"}"""), indent=4)
        },
    }
)
print("Chat response:", chat_response)
```
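The request above passes the template through `chat_template_kwargs` as a JSON string. A small hypothetical helper (`nuextract_extra_body` is not part of the `openai` client or vLLM) can build that `extra_body` from a Python dict, which avoids hand-escaping quotes. It assumes the same `template` and `examples` keyword names used in this card.

```python
import json
from typing import Any, Dict, List, Optional


def nuextract_extra_body(
    template: Dict[str, Any],
    examples: Optional[List[Dict[str, Any]]] = None,
) -> Dict[str, Any]:
    """Build the extra_body payload carrying NuExtract chat-template kwargs."""
    kwargs: Dict[str, Any] = {"template": json.dumps(template, indent=4)}
    if examples is not None:
        kwargs["examples"] = examples
    return {"chat_template_kwargs": kwargs}


body = nuextract_extra_body({"store": "verbatim-string"})
print(body["chat_template_kwargs"]["template"])
```

It could then be passed as `extra_body=nuextract_extra_body({"store": "verbatim-string"})` in `client.chat.completions.create`.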

For image inputs, structure requests as shown below. Make sure to order the images in `"content"` as they appear in the prompt (i.e. any in-context examples before the main input).

```python
import base64

def encode_image(image_path):
    """
    Encode the image file to base64 string
    """
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode('utf-8')

base64_image = encode_image("0.jpg")
base64_image2 = encode_image("1.jpg")

chat_response = client.chat.completions.create(
    model="numind/NuExtract-2.0-8B",
    temperature=0,
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{base64_image}"}},  # first ICL example image
                {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{base64_image2}"}},  # real input image
            ],
        },
    ],
    extra_body={
        "chat_template_kwargs": {
            "template": json.dumps(json.loads("""{\"store\": \"verbatim-string\"}"""), indent=4),
            "examples": [
                {
                    "input": "<image>",
                    "output": """{\"store\": \"Walmart\"}"""
                }
            ]
        },
    }
)
print("Chat response:", chat_response)
```
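The ordering requirement above can be captured in a small hypothetical helper (`build_image_content` is illustrative only, not a vLLM or OpenAI API). It puts the in-context example images first and the real input image last, each as an OpenAI-style `image_url` part with a JPEG data URI as in the request above.

```python
import base64
from typing import Dict, List


def build_image_content(example_images: List[bytes], input_image: bytes) -> List[Dict]:
    """Return content parts: ICL example images first, then the real input image."""
    parts = []
    for raw in [*example_images, input_image]:
        encoded = base64.b64encode(raw).decode("utf-8")
        parts.append({"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{encoded}"}})
    return parts


# Placeholder bytes stand in for real JPEG files here.
content = build_image_content([b"example-bytes"], b"input-bytes")
print(len(content))  # 2
```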

|

yookty/blockassist-bc-whistling_exotic_chicken_1755625296

|

yookty

| 2025-08-19T17:41:44Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"whistling exotic chicken",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T17:41:37Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- whistling exotic chicken

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

allisterb/gemma3_270m_tools_test

|

allisterb

| 2025-08-19T17:38:42Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"gguf",

"gemma3_text",

"text-generation",

"text-generation-inference",

"unsloth",

"conversational",

"en",

"base_model:unsloth/gemma-3-270m-it-unsloth-bnb-4bit",

"base_model:quantized:unsloth/gemma-3-270m-it-unsloth-bnb-4bit",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-19T15:13:57Z |

---

base_model: unsloth/gemma-3-270m-it-unsloth-bnb-4bit

tags:

- text-generation-inference

- transformers

- unsloth

- gemma3_text

license: apache-2.0

language:

- en

---

# Uploaded finetuned model

- **Developed by:** allisterb

- **License:** apache-2.0

- **Finetuned from model:** unsloth/gemma-3-270m-it-unsloth-bnb-4bit

This gemma3_text model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

Huseyin/teknofest-2025-turkish-edu

|

Huseyin

| 2025-08-19T17:38:35Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-08-19T17:32:35Z |

# 🚀 TEKNOFEST 2025 - Turkish Education Model

This model was developed for the **TEKNOFEST 2025 Action-Based Turkish Large Language Model Competition** (Eylem Temelli Türkçe Büyük Dil Modeli Yarışması).

## 📋 Model Information

- **Base Model:** Qwen/Qwen3-8B

- **Fine-tuning:** LoRA Adapter (Huseyin/qwen3-8b-turkish-teknofest2025-private)

- **Created:** 2025-08-19 17:37

- **Domain:** Educational Technology

- **Language:** Turkish

## 🎯 Use Cases

- Creating Turkish educational materials

- Generating content suited to the student's level

- Question-answering systems

- Summarizing educational content

- Preparing lesson plans

## 💻 Usage

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the model and tokenizer
model_name = "Huseyin/teknofest-2025-turkish-edu"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto")

# Example usage
# Prompt (Turkish): "A creative activity suggestion for Turkish education:"
prompt = "Türkçe eğitimi için yaratıcı bir etkinlik önerisi:"
# Move the inputs to the device chosen by device_map="auto"
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
# temperature only takes effect when sampling is enabled
outputs = model.generate(**inputs, max_length=200, do_sample=True, temperature=0.7)
response = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(response)
```

## 🏆 TEKNOFEST 2025

This model was developed as part of the TEKNOFEST 2025 Turkish Large Language Model Competition.

### Competition Category

**Action-Based Turkish Large Language Model**

### Team

TEKNOFEST 2025 Competition Team

## 📊 Performance Metrics

- **Perplexity:** [To be evaluated]

- **BLEU Score:** [To be evaluated]

- **Human Evaluation:** [To be evaluated]

## 📄 License

Apache 2.0

## 🙏 Acknowledgments

We thank everyone who contributed to the development of this model.

---

*TEKNOFEST 2025 - Türkiye's Technology Festival*

|

coelacanthxyz/blockassist-bc-finicky_thriving_grouse_1755623215

|

coelacanthxyz

| 2025-08-19T17:35:48Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"finicky thriving grouse",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T17:35:42Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- finicky thriving grouse

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

shihotan/Silende_Real

|

shihotan

| 2025-08-19T17:33:09Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-05-10T05:54:11Z |

(realistic:1.1),(photorealistic:1.1),(cosplay photo:1.1),(masterpiece, best quality, newest, absurdres, highres:1.4),(real life:1.6),

|

AppliedLucent/nemo-phase5

|

AppliedLucent

| 2025-08-19T17:23:30Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"mistral",

"text-generation",

"text-generation-inference",

"unsloth",

"conversational",

"en",

"base_model:AppliedLucent/nemo-phase4",

"base_model:finetune:AppliedLucent/nemo-phase4",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-19T17:10:38Z |

---

base_model: AppliedLucent/nemo-phase4

tags:

- text-generation-inference

- transformers

- unsloth

- mistral

license: apache-2.0

language:

- en

---

# Uploaded finetuned model

- **Developed by:** AppliedLucent

- **License:** apache-2.0

- **Finetuned from model:** AppliedLucent/nemo-phase4

This mistral model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

Orginal-Bindura-University-viral-video-Cli/New.full.videos.Bindura.University.Viral.Video.Official.Tutorial

|

Orginal-Bindura-University-viral-video-Cli

| 2025-08-19T17:22:49Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-08-19T17:22:36Z |


|

Ver-full-videos-maria-b-Clips/Ver.Viral.video.maria.b.polemica.viral.en.twitter.y.telegram

|

Ver-full-videos-maria-b-Clips

| 2025-08-19T17:14:02Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-08-19T17:13:54Z |


|

kevinshin/test-run-fsdp-v1-full-state-dict

|

kevinshin

| 2025-08-19T17:09:34Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3",

"text-generation",

"generated_from_trainer",

"sft",

"trl",

"conversational",

"base_model:Qwen/Qwen3-1.7B",

"base_model:finetune:Qwen/Qwen3-1.7B",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-19T16:40:15Z |

---

base_model: Qwen/Qwen3-1.7B

library_name: transformers

model_name: test-run-fsdp-v1-full-state-dict

tags:

- generated_from_trainer

- sft

- trl

licence: license

---

# Model Card for test-run-fsdp-v1-full-state-dict

This model is a fine-tuned version of [Qwen/Qwen3-1.7B](https://huggingface.co/Qwen/Qwen3-1.7B).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="kevinshin/test-run-fsdp-v1-full-state-dict", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/myungjune-sogang-university/general_remo_train/runs/3dzoaavc)

This model was trained with SFT.

### Framework versions

- TRL: 0.19.1

- Transformers: 4.54.0

- Pytorch: 2.6.0+cu126

- Datasets: 4.0.0

- Tokenizers: 0.21.2

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

EZCon/Qwen2-VL-7B-Instruct-4bit-mlx

|

EZCon

| 2025-08-19T17:03:10Z | 29 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2_vl",

"image-to-text",

"multimodal",

"qwen",

"qwen2",

"unsloth",

"vision",

"mlx",

"image-text-to-text",

"conversational",

"en",

"base_model:Qwen/Qwen2-VL-7B-Instruct",

"base_model:quantized:Qwen/Qwen2-VL-7B-Instruct",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"region:us"

] |

image-text-to-text

| 2025-08-05T06:38:42Z |

---

base_model: Qwen/Qwen2-VL-7B-Instruct

language:

- en

library_name: transformers

pipeline_tag: image-text-to-text

license: apache-2.0

tags:

- multimodal

- qwen

- qwen2

- unsloth

- transformers

- vision

- mlx

---

# EZCon/Qwen2-VL-7B-Instruct-4bit-mlx

This model was converted to MLX format from [`unsloth/Qwen2-VL-7B-Instruct`](https://huggingface.co/unsloth/Qwen2-VL-7B-Instruct) using mlx-vlm version **0.3.2**.

Refer to the [original model card](https://huggingface.co/unsloth/Qwen2-VL-7B-Instruct) for more details on the model.

## Use with mlx

```bash

pip install -U mlx-vlm

```

```bash

python -m mlx_vlm.generate --model EZCon/Qwen2-VL-7B-Instruct-4bit-mlx --max-tokens 100 --temperature 0.0 --prompt "Describe this image." --image <path_to_image>

```

|

yaelahnal/blockassist-bc-mute_clawed_crab_1755622908

|

yaelahnal

| 2025-08-19T17:03:00Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"mute clawed crab",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T17:02:41Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- mute clawed crab

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

EZCon/Qwen2-VL-2B-Instruct-abliterated-8bit-mlx

|

EZCon

| 2025-08-19T16:58:14Z | 47 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2_vl",

"image-to-text",

"chat",

"abliterated",

"uncensored",

"mlx",

"image-text-to-text",

"conversational",

"en",

"base_model:Qwen/Qwen2-VL-2B-Instruct",

"base_model:quantized:Qwen/Qwen2-VL-2B-Instruct",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"8-bit",

"region:us"

] |

image-text-to-text

| 2025-08-06T03:44:27Z |

---

library_name: transformers

license: apache-2.0

license_link: https://huggingface.co/huihui-ai/Qwen2-VL-2B-Instruct-abliterated/blob/main/LICENSE

language:

- en

pipeline_tag: image-text-to-text

base_model: Qwen/Qwen2-VL-2B-Instruct

tags:

- chat

- abliterated

- uncensored

- mlx

---

# EZCon/Qwen2-VL-2B-Instruct-abliterated-8bit-mlx

This model was converted to MLX format from [`huihui-ai/Qwen2-VL-2B-Instruct-abliterated`](https://huggingface.co/huihui-ai/Qwen2-VL-2B-Instruct-abliterated) using mlx-vlm version **0.3.2**.

Refer to the [original model card](https://huggingface.co/huihui-ai/Qwen2-VL-2B-Instruct-abliterated) for more details on the model.

## Use with mlx

```bash

pip install -U mlx-vlm

```

```bash

python -m mlx_vlm.generate --model EZCon/Qwen2-VL-2B-Instruct-abliterated-8bit-mlx --max-tokens 100 --temperature 0.0 --prompt "Describe this image." --image <path_to_image>

```

|

mang3dd/blockassist-bc-tangled_slithering_alligator_1755620734

|

mang3dd

| 2025-08-19T16:52:32Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tangled slithering alligator",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T16:52:29Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tangled slithering alligator

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

New-Clip-prabh-viral-videos/New.full.videos.prabh.Viral.Video.Official.Tutorial

|

New-Clip-prabh-viral-videos

| 2025-08-19T16:52:15Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-08-19T16:51:29Z |


|

Prathyusha101/tldr-ppco-g0p95-l1p0

|

Prathyusha101

| 2025-08-19T16:44:46Z | 0 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt_neox",

"text-classification",

"generated_from_trainer",

"dataset:trl-internal-testing/tldr-preference-sft-trl-style",

"arxiv:1909.08593",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2025-08-19T11:17:59Z |

---

datasets: trl-internal-testing/tldr-preference-sft-trl-style

library_name: transformers

model_name: tldr-ppco-g0p95-l1p0

tags:

- generated_from_trainer

licence: license

---

# Model Card for tldr-ppco-g0p95-l1p0

This model was fine-tuned on the [trl-internal-testing/tldr-preference-sft-trl-style](https://huggingface.co/datasets/trl-internal-testing/tldr-preference-sft-trl-style) dataset; the base model is not specified in this card.

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="Prathyusha101/tldr-ppco-g0p95-l1p0", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/prathyusha1-the-university-of-texas-at-austin/huggingface/runs/poeo9cdz)

This model was trained with PPO, a method introduced in [Fine-Tuning Language Models from Human Preferences](https://huggingface.co/papers/1909.08593).

### Framework versions

- TRL: 0.15.0.dev0

- Transformers: 4.53.1

- Pytorch: 2.5.1

- Datasets: 3.6.0

- Tokenizers: 0.21.2

## Citations

Cite PPO as:

```bibtex

@article{mziegler2019fine-tuning,

title = {{Fine-Tuning Language Models from Human Preferences}},

author = {Daniel M. Ziegler and Nisan Stiennon and Jeffrey Wu and Tom B. Brown and Alec Radford and Dario Amodei and Paul F. Christiano and Geoffrey Irving},

year = 2019,

eprint = {arXiv:1909.08593}

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

indoempatnol/blockassist-bc-fishy_wary_swan_1755619122

|

indoempatnol

| 2025-08-19T16:25:41Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"fishy wary swan",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T16:25:36Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- fishy wary swan

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

lguaman/MyManufacturingData

|

lguaman

| 2025-08-19T16:24:42Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"gemma3_text",

"text-generation",

"generated_from_trainer",

"sft",

"trl",

"conversational",

"base_model:google/gemma-3-270m-it",

"base_model:finetune:google/gemma-3-270m-it",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-08-19T14:09:08Z |

---

base_model: google/gemma-3-270m-it

library_name: transformers

model_name: MyManufacturingData

tags:

- generated_from_trainer

- sft

- trl

licence: license

---

# Model Card for MyManufacturingData

This model is a fine-tuned version of [google/gemma-3-270m-it](https://huggingface.co/google/gemma-3-270m-it).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="lguaman/MyManufacturingData", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

This model was trained with SFT.

### Framework versions

- TRL: 0.21.0

- Transformers: 4.55.2

- Pytorch: 2.6.0+cu124

- Datasets: 4.0.0

- Tokenizers: 0.21.4

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

mang3dd/blockassist-bc-tangled_slithering_alligator_1755618934

|

mang3dd

| 2025-08-19T16:22:08Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tangled slithering alligator",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-19T16:22:05Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tangled slithering alligator

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

oceanfish/intent_classify_slot

|

oceanfish

| 2025-08-19T16:20:03Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:Qwen/Qwen2.5-7B-Instruct",

"base_model:adapter:Qwen/Qwen2.5-7B-Instruct",

"region:us"

] | null | 2025-08-19T16:15:20Z |

---

base_model: Qwen/Qwen2.5-7B-Instruct

library_name: peft

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]
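The card gives no starter code, but its metadata names `Qwen/Qwen2.5-7B-Instruct` as the base model and `peft` as the library. The sketch below shows one way to load such an adapter and run a single chat turn. It assumes the adapter targets causal language modeling, which fits an instruct base model but is not confirmed by the card. `ADAPTER_ID` is a hypothetical placeholder because the adapter's own repository ID is not shown here. The helper names `load_adapter` and `chat` are illustrative.

```python
# Minimal sketch, assuming this is a causal-LM PEFT adapter for Qwen2.5-7B-Instruct.
# Requires `transformers`, `peft`, and `torch`. The imports sit inside the function
# so this file can still be imported where those packages are not installed.

BASE_MODEL_ID = "Qwen/Qwen2.5-7B-Instruct"  # base model named in the card metadata
ADAPTER_ID = "your-namespace/your-adapter"  # hypothetical placeholder: use the adapter's real Hub ID


def load_adapter(base_id: str = BASE_MODEL_ID, adapter_id: str = ADAPTER_ID):
    """Load the base model, apply the adapter weights, and return (model, tokenizer)."""
    from transformers import AutoModelForCausalLM, AutoTokenizer
    from peft import PeftModel

    tokenizer = AutoTokenizer.from_pretrained(base_id)
    base = AutoModelForCausalLM.from_pretrained(base_id, torch_dtype="auto")
    model = PeftModel.from_pretrained(base, adapter_id)  # wraps the base model with the adapter
    model.eval()
    return model, tokenizer


def chat(model, tokenizer, prompt: str, max_new_tokens: int = 128) -> str:
    """Run one user turn through the base model's chat template and return the reply."""
    messages = [{"role": "user", "content": prompt}]
    device = next(model.parameters()).device
    input_ids = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt"
    ).to(device)
    output = model.generate(input_ids=input_ids, max_new_tokens=max_new_tokens)
    # Slice off the prompt tokens so only newly generated text is decoded.
    return tokenizer.decode(output[0, input_ids.shape[-1]:], skip_special_tokens=True)
```

Typical use is `model, tok = load_adapter()` followed by `chat(model, tok, "Hello")`. Loading a 7B model in full precision needs substantial GPU or CPU memory, so a quantized or `device_map`-based load may be preferable in practice.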

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
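The fields above feed a back-of-envelope estimate: energy drawn (kWh) multiplied by the grid's carbon intensity. The sketch below assumes that common approach and reports grams of CO2eq, matching the unit in the section comment. The optional `pue` (data-center power usage effectiveness) factor is an added assumption and may not match exactly what the linked calculator does. The function name and every input value are hypothetical and are not data for this model.

```python
def estimate_emissions_g(gpu_power_watts: float, num_gpus: int, hours: float,
                         grid_g_per_kwh: float, pue: float = 1.0) -> float:
    """Rough CO2eq estimate in grams: hardware energy (kWh) x PUE x grid intensity (g/kWh).

    Hypothetical helper for illustration; inputs should come from the Hardware Type,
    Hours used, and Compute Region fields above.
    """
    if gpu_power_watts < 0 or num_gpus < 0 or hours < 0 or grid_g_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    energy_kwh = gpu_power_watts * num_gpus * hours / 1000.0  # W * h -> kWh
    return energy_kwh * pue * grid_g_per_kwh


# Illustrative numbers only: one 300 W GPU for 10 hours on a 400 g/kWh grid.
print(estimate_emissions_g(300, 1, 10, 400))  # 3 kWh * 400 g/kWh -> 1200.0
```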

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.15.2

|

exala/db_auto_6.1.2

|

exala

| 2025-08-19T16:16:59Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"distilbert",

"text-classification",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2025-08-19T16:16:43Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]
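This row's metadata identifies the repository as `exala/db_auto_6.1.2`, tagged `transformers`, `distilbert`, and `text-classification`. The sketch below uses the generic `transformers` `pipeline` to query it. The card does not document the label set, so the returned `label` strings are whatever the model's config defines. The helper name `classify` is illustrative.

```python
# Minimal sketch, assuming the checkpoint loads with the standard text-classification
# pipeline, as its tags suggest. Requires `transformers` plus a backend such as `torch`.

MODEL_ID = "exala/db_auto_6.1.2"  # repository ID from this card's row


def classify(texts, model_id: str = MODEL_ID):
    """Return one {'label': ..., 'score': ...} dict per input string."""
    from transformers import pipeline  # deferred so the module imports without transformers

    clf = pipeline("text-classification", model=model_id)
    return clf(list(texts))
```

For example, `classify(["first example", "second example"])` returns a list of two dictionaries. The meaning of each label has to be checked against the model's `config.json` (`id2label`), because the card leaves it unspecified.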

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]