| problem_id (string, 18–22 chars) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, 13–58 chars) | prompt (string, 1.1k–25.4k chars) | golden_diff (string, 145–5.13k chars) | verification_info (string, 582–39.1k chars) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

| gh_patches_debug_3272 | rasdani/github-patches | git_diff | dbt-labs__dbt-core-4192 |

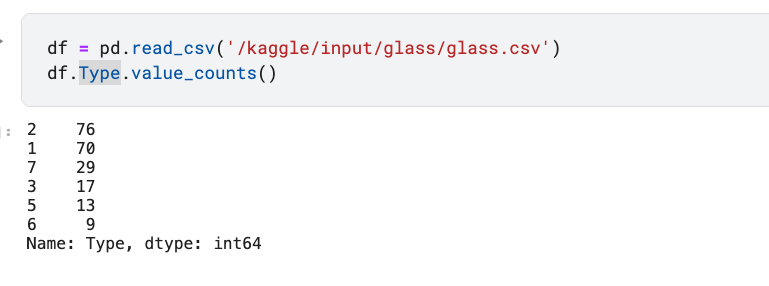

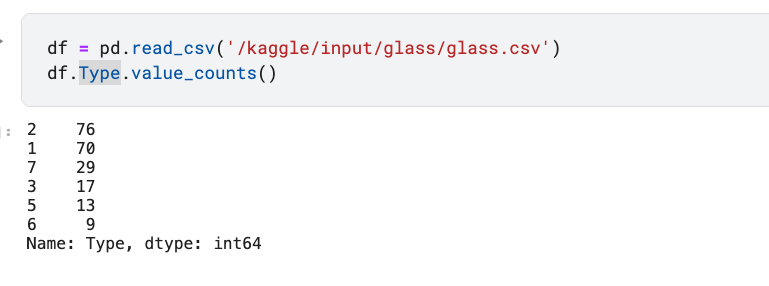

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[Bug] Incompatible versions of `protobuf` required in sub-dependencies

### Is there an existing issue for this?

- [X] I have searched the existing issues

### Current Behavior

It is not currently possible to install dbt through Pipenv because of incompatible versions of `protobuf` in sub-dependencies used in `dbt-bigquery`.

Specifically:

- `google-api-core[grpc]==1.31.3` requires `protobuf<3.18.0`

- `proto-plus==1.19.7` (used by `google-cloud-bigquery==2.29.0`) requires `protobuf>=3.19.0`

Alternatively, installing dbt through `pip` may appear successful, but the issue of incompatible versions remains -- pip simply installs the version of `protobuf` that satisfies whichever sub-dependency is installed last in the sequence, ignoring that previous sub-dependencies required an incompatible version.
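The conflict can be checked mechanically. Below is a minimal pure-Python sketch that tests candidate `protobuf` versions against the constraints listed in the resolver output. It is not pip's or Pipenv's actual resolver. The `parse` and `satisfies` helpers are hypothetical and handle only plain `X.Y.Z` release strings.

```python
import operator

# Comparison operators used by the simple (operator, version) constraints below.
OPS = {
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
    "==": operator.eq,
}


def parse(version):
    """Hypothetical helper: turn a plain 'X.Y.Z' release string into a
    3-tuple of ints, padding missing parts with zeros ('4' -> (4, 0, 0)).
    Pre-release and post-release tags are NOT supported."""
    parts = [int(p) for p in version.split(".")]
    return tuple(parts + [0] * (3 - len(parts)))


def satisfies(version, constraints):
    """Return True if `version` meets every (operator, bound) constraint."""
    v = parse(version)
    return all(OPS[op](v, parse(bound)) for op, bound in constraints)


# Constraints on protobuf as reported in the resolver output of the issue.
constraints = [
    ("<", "3.18.0"),   # google-api-core[grpc]==1.31.3
    ("<", "4"),        # dbt-bigquery itself
    (">=", "3.12.0"),  # google-cloud-bigquery / googleapis-common-protos
    (">=", "3.13.0"),  # dbt-bigquery itself
    (">=", "3.19.0"),  # proto-plus==1.19.7
]

# A few of the final releases the resolver reported trying.
candidates = ["3.13.0", "3.17.3", "3.18.0", "3.18.1", "3.19.0", "3.19.1"]

compatible = [v for v in candidates if satisfies(v, constraints)]
print(compatible)  # [] because '<3.18.0' and '>=3.19.0' cannot both hold

# Dropping only the proto-plus lower bound leaves older releases eligible.
without_proto_plus = [c for c in constraints if c != (">=", "3.19.0")]
print([v for v in candidates if satisfies(v, without_proto_plus)])
# ['3.13.0', '3.17.3']
```

A real resolver also has to handle pre-releases, post-releases and environment markers. `packaging.specifiers.SpecifierSet` is the usual tool for that. The tuple comparison here is only meant to make the empty intersection visible.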

### Expected Behavior

dbt should ideally use non-conflicting versions of `google-api-core[grpc]` and `google-cloud-bigquery` or allow installation of dbt without the `dbt-bigquery` extra.

### Steps To Reproduce

1. `pip install pipenv`

2. `pipenv install dbt`

### Relevant log output

```shell

[pipenv.exceptions.ResolutionFailure]: Warning: Your dependencies could not be resolved. You likely have a mismatch in your sub-dependencies.

First try clearing your dependency cache with $ pipenv lock --clear, then try the original command again.

Alternatively, you can use $ pipenv install --skip-lock to bypass this mechanism, then run $ pipenv graph to inspect the situation.

Hint: try $ pipenv lock --pre if it is a pre-release dependency.

ERROR: Could not find a version that matches protobuf<3.18.0,<4,>=3.12.0,>=3.13.0,>=3.19.0 (from dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))

Tried: 2.0.3, 2.3.0, 2.4.1, 2.5.0, 2.6.0, 2.6.1, 3.0.0, 3.0.0, 3.1.0, 3.1.0.post1, 3.1.0.post1, 3.2.0, 3.2.0, 3.3.0, 3.4.0, 3.4.0, 3.5.0.post1, 3.5.0.post1, 3.5.1, 3.5.1, 3.5.2, 3.5.2, 3.5.2.post1, 3.5.2.post1, 3.6.0, 3.6.0, 3.6.1, 3.6.1, 3.7.0, 3.7.0, 3.7.1, 3.7.1, 3.8.0, 3.8.0, 3.9.0, 3.9.0, 3.9.1, 3.9.1, 3.9.2, 3.9.2, 3.10.0, 3.10.0, 3.11.0, 3.11.0, 3.11.1, 3.11.1, 3.11.2, 3.11.2, 3.11.3, 3.11.3, 3.12.2, 3.12.2, 3.12.4, 3.12.4, 3.13.0, 3.13.0, 3.14.0, 3.14.0, 3.15.0, 3.15.0, 3.15.0, 3.15.1, 3.15.1, 3.15.1, 3.15.2, 3.15.2, 3.15.2, 3.15.3, 3.15.3, 3.15.3, 3.15.4, 3.15.4, 3.15.4, 3.15.5, 3.15.5, 3.15.5, 3.15.6, 3.15.6, 3.15.6, 3.15.7, 3.15.7, 3.15.7, 3.15.8, 3.15.8, 3.15.8, 3.16.0, 3.16.0, 3.16.0, 3.17.0, 3.17.0, 3.17.0, 3.17.1, 3.17.1, 3.17.1, 3.17.2, 3.17.2, 3.17.2, 3.17.3, 3.17.3, 3.17.3, 3.18.0, 3.18.0, 3.18.0, 3.18.1, 3.18.1, 3.18.1, 3.19.0, 3.19.0, 3.19.0, 3.19.1, 3.19.1, 3.19.1

Skipped pre-versions: 2.0.0b0, 3.0.0a2, 3.0.0a3, 3.0.0b1, 3.0.0b1.post1, 3.0.0b1.post2, 3.0.0b2, 3.0.0b2, 3.0.0b2.post1, 3.0.0b2.post1, 3.0.0b2.post2, 3.0.0b2.post2, 3.0.0b3, 3.0.0b4, 3.0.0b4, 3.2.0rc1, 3.2.0rc1, 3.2.0rc1.post1, 3.2.0rc1.post1, 3.2.0rc2, 3.2.0rc2, 3.7.0rc2, 3.7.0rc2, 3.7.0rc3, 3.7.0rc3, 3.8.0rc1, 3.8.0rc1, 3.9.0rc1, 3.9.0rc1, 3.10.0rc1, 3.10.0rc1, 3.11.0rc1, 3.11.0rc1, 3.11.0rc2, 3.11.0rc2, 3.13.0rc3, 3.13.0rc3, 3.14.0rc1, 3.14.0rc1, 3.14.0rc2, 3.14.0rc2, 3.14.0rc3, 3.14.0rc3, 3.15.0rc1, 3.15.0rc1, 3.15.0rc1, 3.15.0rc2, 3.15.0rc2, 3.15.0rc2, 3.16.0rc1, 3.16.0rc1, 3.16.0rc1, 3.16.0rc2, 3.16.0rc2, 3.16.0rc2, 3.17.0rc1, 3.17.0rc1, 3.17.0rc1, 3.17.0rc2, 3.17.0rc2, 3.17.0rc2, 3.18.0rc1, 3.18.0rc1, 3.18.0rc1, 3.18.0rc2, 3.18.0rc2, 3.18.0rc2, 3.19.0rc1, 3.19.0rc1, 3.19.0rc1, 3.19.0rc2, 3.19.0rc2, 3.19.0rc2, 4.0.0rc1, 4.0.0rc1, 4.0.0rc2, 4.0.0rc2

There are incompatible versions in the resolved dependencies:

protobuf<3.18.0,>=3.12.0 (from google-api-core[grpc]==1.31.3->dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))

protobuf<4,>=3.13.0 (from dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))

protobuf>=3.12.0 (from google-cloud-bigquery==2.29.0->dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))

protobuf>=3.12.0 (from googleapis-common-protos==1.53.0->dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))

protobuf>=3.19.0 (from proto-plus==1.19.7->google-cloud-bigquery==2.29.0->dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))

```

### Environment

```markdown

- OS: macOS 11.6

- Python: Python 3.7

- dbt: 0.21 (same error with 0.20)

```

### What database are you using dbt with?

_No response_

### Additional Context

_No response_

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `plugins/bigquery/setup.py`

Content:

```
1 #!/usr/bin/env python
2 import os
3 import sys
4
5 if sys.version_info < (3, 6):
6     print('Error: dbt does not support this version of Python.')
7     print('Please upgrade to Python 3.6 or higher.')
8     sys.exit(1)
9
10
11 from setuptools import setup
12 try:
13     from setuptools import find_namespace_packages
14 except ImportError:
15     # the user has a downlevel version of setuptools.
16     print('Error: dbt requires setuptools v40.1.0 or higher.')
17     print('Please upgrade setuptools with "pip install --upgrade setuptools" '
18           'and try again')
19     sys.exit(1)
20
21
22 package_name = "dbt-bigquery"
23 package_version = "0.21.0"
24 description = """The bigquery adapter plugin for dbt (data build tool)"""
25
26 this_directory = os.path.abspath(os.path.dirname(__file__))
27 with open(os.path.join(this_directory, 'README.md')) as f:
28     long_description = f.read()
29
30 setup(
31     name=package_name,
32     version=package_version,
33     description=description,
34     long_description=long_description,
35     long_description_content_type='text/markdown',
36     author="dbt Labs",
37     author_email="info@dbtlabs.com",
38     url="https://github.com/dbt-labs/dbt",
39     packages=find_namespace_packages(include=['dbt', 'dbt.*']),
40     package_data={
41         'dbt': [
42             'include/bigquery/dbt_project.yml',
43             'include/bigquery/sample_profiles.yml',
44             'include/bigquery/macros/*.sql',
45             'include/bigquery/macros/**/*.sql',
46         ]
47     },
48     install_requires=[
49         'dbt-core=={}'.format(package_version),
50         'protobuf>=3.13.0,<4',
51         'google-cloud-core>=1.3.0,<2',
52         'google-cloud-bigquery>=1.25.0,<3',
53         'google-api-core>=1.16.0,<2',
54         'googleapis-common-protos>=1.6.0,<2',
55         'six>=1.14.0',
56     ],
57     zip_safe=False,
58     classifiers=[
59         'Development Status :: 5 - Production/Stable',
60
61         'License :: OSI Approved :: Apache Software License',
62
63         'Operating System :: Microsoft :: Windows',
64         'Operating System :: MacOS :: MacOS X',
65         'Operating System :: POSIX :: Linux',
66
67         'Programming Language :: Python :: 3.6',
68         'Programming Language :: Python :: 3.7',
69         'Programming Language :: Python :: 3.8',
70         'Programming Language :: Python :: 3.9',
71     ],
72     python_requires=">=3.6.2",
73 )
74
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/plugins/bigquery/setup.py b/plugins/bigquery/setup.py
--- a/plugins/bigquery/setup.py
+++ b/plugins/bigquery/setup.py
@@ -50,7 +50,7 @@
         'protobuf>=3.13.0,<4',
         'google-cloud-core>=1.3.0,<2',
         'google-cloud-bigquery>=1.25.0,<3',
-        'google-api-core>=1.16.0,<2',
+        'google-api-core>=1.16.0,<1.31.3',
         'googleapis-common-protos>=1.6.0,<2',
         'six>=1.14.0',
     ],
```

|

{"golden_diff": "diff --git a/plugins/bigquery/setup.py b/plugins/bigquery/setup.py\n--- a/plugins/bigquery/setup.py\n+++ b/plugins/bigquery/setup.py\n@@ -50,7 +50,7 @@\n 'protobuf>=3.13.0,<4',\n 'google-cloud-core>=1.3.0,<2',\n 'google-cloud-bigquery>=1.25.0,<3',\n- 'google-api-core>=1.16.0,<2',\n+ 'google-api-core>=1.16.0,<1.31.3',\n 'googleapis-common-protos>=1.6.0,<2',\n 'six>=1.14.0',\n ],\n", "issue": "[Bug] Incompatible versions of `protobuf` required in sub-dependencies\n### Is there an existing issue for this?\r\n\r\n- [X] I have searched the existing issues\r\n\r\n### Current Behavior\r\n\r\nIt is not currently possible to install dbt through Pipenv because of incompatible versions of `protobuf` in sub-dependencies used in `dbt-bigquery`. \r\n\r\nSpecifically;\r\n\r\n- `google-api-core[grpc]==1.31.3` requires `protobuf<3.18.0`\r\n- `proto-plus==1.19.7` (used by `google-cloud-bigquery==2.29.0`) requires `protobuf>=3.19.0`\r\n\r\nAlternatively, installing dbt through `pip` may appear successful, but the issue of incompatible versions remains -- pip simply installs the version of `protobuf` that satisfies whichever sub-dependency is installed last in the sequence, ignoring that previous sub-dependencies required an incompatible version.\r\n\r\n### Expected Behavior\r\n\r\ndbt should ideally use non-conflicting versions of `google-api-core[grpc]` and `google-cloud-bigquery` or allow installation of dbt without the `dbt-bigquery` extra.\r\n\r\n### Steps To Reproduce\r\n\r\n1. `pip install pipenv`\r\n2. `pipenv install dbt`\r\n\r\n### Relevant log output\r\n\r\n```shell\r\n[pipenv.exceptions.ResolutionFailure]: Warning: Your dependencies could not be resolved. 
You likely have a mismatch in your sub-dependencies.\r\n First try clearing your dependency cache with $ pipenv lock --clear, then try the original command again.\r\n Alternatively, you can use $ pipenv install --skip-lock to bypass this mechanism, then run $ pipenv graph to inspect the situation.\r\n Hint: try $ pipenv lock --pre if it is a pre-release dependency.\r\nERROR: Could not find a version that matches protobuf<3.18.0,<4,>=3.12.0,>=3.13.0,>=3.19.0 (from dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))\r\nTried: 2.0.3, 2.3.0, 2.4.1, 2.5.0, 2.6.0, 2.6.1, 3.0.0, 3.0.0, 3.1.0, 3.1.0.post1, 3.1.0.post1, 3.2.0, 3.2.0, 3.3.0, 3.4.0, 3.4.0, 3.5.0.post1, 3.5.0.post1, 3.5.1, 3.5.1, 3.5.2, 3.5.2, 3.5.2.post1, 3.5.2.post1, 3.6.0, 3.6.0, 3.6.1, 3.6.1, 3.7.0, 3.7.0, 3.7.1, 3.7.1, 3.8.0, 3.8.0, 3.9.0, 3.9.0, 3.9.1, 3.9.1, 3.9.2, 3.9.2, 3.10.0, 3.10.0, 3.11.0, 3.11.0, 3.11.1, 3.11.1, 3.11.2, 3.11.2, 3.11.3, 3.11.3, 3.12.2, 3.12.2, 3.12.4, 3.12.4, 3.13.0, 3.13.0, 3.14.0, 3.14.0, 3.15.0, 3.15.0, 3.15.0, 3.15.1, 3.15.1, 3.15.1, 3.15.2, 3.15.2, 3.15.2, 3.15.3, 3.15.3, 3.15.3, 3.15.4, 3.15.4, 3.15.4, 3.15.5, 3.15.5, 3.15.5, 3.15.6, 3.15.6, 3.15.6, 3.15.7, 3.15.7, 3.15.7, 3.15.8, 3.15.8, 3.15.8, 3.16.0, 3.16.0, 3.16.0, 3.17.0, 3.17.0, 3.17.0, 3.17.1, 3.17.1, 3.17.1, 3.17.2, 3.17.2, 3.17.2, 3.17.3, 3.17.3, 3.17.3, 3.18.0, 3.18.0, 3.18.0, 3.18.1, 3.18.1, 3.18.1, 3.19.0, 3.19.0, 3.19.0, 3.19.1, 3.19.1, 3.19.1\r\nSkipped pre-versions: 2.0.0b0, 3.0.0a2, 3.0.0a3, 3.0.0b1, 3.0.0b1.post1, 3.0.0b1.post2, 3.0.0b2, 3.0.0b2, 3.0.0b2.post1, 3.0.0b2.post1, 3.0.0b2.post2, 3.0.0b2.post2, 3.0.0b3, 3.0.0b4, 3.0.0b4, 3.2.0rc1, 3.2.0rc1, 3.2.0rc1.post1, 3.2.0rc1.post1, 3.2.0rc2, 3.2.0rc2, 3.7.0rc2, 3.7.0rc2, 3.7.0rc3, 3.7.0rc3, 3.8.0rc1, 3.8.0rc1, 3.9.0rc1, 3.9.0rc1, 3.10.0rc1, 3.10.0rc1, 3.11.0rc1, 3.11.0rc1, 3.11.0rc2, 3.11.0rc2, 3.13.0rc3, 3.13.0rc3, 3.14.0rc1, 3.14.0rc1, 3.14.0rc2, 
3.14.0rc2, 3.14.0rc3, 3.14.0rc3, 3.15.0rc1, 3.15.0rc1, 3.15.0rc1, 3.15.0rc2, 3.15.0rc2, 3.15.0rc2, 3.16.0rc1, 3.16.0rc1, 3.16.0rc1, 3.16.0rc2, 3.16.0rc2, 3.16.0rc2, 3.17.0rc1, 3.17.0rc1, 3.17.0rc1, 3.17.0rc2, 3.17.0rc2, 3.17.0rc2, 3.18.0rc1, 3.18.0rc1, 3.18.0rc1, 3.18.0rc2, 3.18.0rc2, 3.18.0rc2, 3.19.0rc1, 3.19.0rc1, 3.19.0rc1, 3.19.0rc2, 3.19.0rc2, 3.19.0rc2, 4.0.0rc1, 4.0.0rc1, 4.0.0rc2, 4.0.0rc2\r\nThere are incompatible versions in the resolved dependencies:\r\n protobuf<3.18.0,>=3.12.0 (from google-api-core[grpc]==1.31.3->dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))\r\n protobuf<4,>=3.13.0 (from dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))\r\n protobuf>=3.12.0 (from google-cloud-bigquery==2.29.0->dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))\r\n protobuf>=3.12.0 (from googleapis-common-protos==1.53.0->dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))\r\n protobuf>=3.19.0 (from proto-plus==1.19.7->google-cloud-bigquery==2.29.0->dbt-bigquery==0.20.0->dbt==0.20->-r /var/folders/rq/bvjd2wms54j2m16rt180h5th0000gp/T/pipenvg3nqu30irequirements/pipenv-ng7tsiwu-constraints.txt (line 2))\r\n```\r\n\r\n\r\n### Environment\r\n\r\n```markdown\r\n- OS: macOS 11.6\r\n- Python: Python 3.7\r\n- dbt: 0.21 (same error with 0.20)\r\n```\r\n\r\n\r\n### What database are you using dbt with?\r\n\r\n_No response_\r\n\r\n### Additional Context\r\n\r\n_No response_\n", "before_files": [{"content": "#!/usr/bin/env python\nimport os\nimport sys\n\nif sys.version_info < (3, 6):\n print('Error: dbt does not support this version of Python.')\n print('Please upgrade to 
Python 3.6 or higher.')\n sys.exit(1)\n\n\nfrom setuptools import setup\ntry:\n from setuptools import find_namespace_packages\nexcept ImportError:\n # the user has a downlevel version of setuptools.\n print('Error: dbt requires setuptools v40.1.0 or higher.')\n print('Please upgrade setuptools with \"pip install --upgrade setuptools\" '\n 'and try again')\n sys.exit(1)\n\n\npackage_name = \"dbt-bigquery\"\npackage_version = \"0.21.0\"\ndescription = \"\"\"The bigquery adapter plugin for dbt (data build tool)\"\"\"\n\nthis_directory = os.path.abspath(os.path.dirname(__file__))\nwith open(os.path.join(this_directory, 'README.md')) as f:\n long_description = f.read()\n\nsetup(\n name=package_name,\n version=package_version,\n description=description,\n long_description=long_description,\n long_description_content_type='text/markdown',\n author=\"dbt Labs\",\n author_email=\"info@dbtlabs.com\",\n url=\"https://github.com/dbt-labs/dbt\",\n packages=find_namespace_packages(include=['dbt', 'dbt.*']),\n package_data={\n 'dbt': [\n 'include/bigquery/dbt_project.yml',\n 'include/bigquery/sample_profiles.yml',\n 'include/bigquery/macros/*.sql',\n 'include/bigquery/macros/**/*.sql',\n ]\n },\n install_requires=[\n 'dbt-core=={}'.format(package_version),\n 'protobuf>=3.13.0,<4',\n 'google-cloud-core>=1.3.0,<2',\n 'google-cloud-bigquery>=1.25.0,<3',\n 'google-api-core>=1.16.0,<2',\n 'googleapis-common-protos>=1.6.0,<2',\n 'six>=1.14.0',\n ],\n zip_safe=False,\n classifiers=[\n 'Development Status :: 5 - Production/Stable',\n\n 'License :: OSI Approved :: Apache Software License',\n\n 'Operating System :: Microsoft :: Windows',\n 'Operating System :: MacOS :: MacOS X',\n 'Operating System :: POSIX :: Linux',\n\n 'Programming Language :: Python :: 3.6',\n 'Programming Language :: Python :: 3.7',\n 'Programming Language :: Python :: 3.8',\n 'Programming Language :: Python :: 3.9',\n ],\n python_requires=\">=3.6.2\",\n)\n", "path": "plugins/bigquery/setup.py"}], "after_files": 
[{"content": "#!/usr/bin/env python\nimport os\nimport sys\n\nif sys.version_info < (3, 6):\n print('Error: dbt does not support this version of Python.')\n print('Please upgrade to Python 3.6 or higher.')\n sys.exit(1)\n\n\nfrom setuptools import setup\ntry:\n from setuptools import find_namespace_packages\nexcept ImportError:\n # the user has a downlevel version of setuptools.\n print('Error: dbt requires setuptools v40.1.0 or higher.')\n print('Please upgrade setuptools with \"pip install --upgrade setuptools\" '\n 'and try again')\n sys.exit(1)\n\n\npackage_name = \"dbt-bigquery\"\npackage_version = \"0.21.0\"\ndescription = \"\"\"The bigquery adapter plugin for dbt (data build tool)\"\"\"\n\nthis_directory = os.path.abspath(os.path.dirname(__file__))\nwith open(os.path.join(this_directory, 'README.md')) as f:\n long_description = f.read()\n\nsetup(\n name=package_name,\n version=package_version,\n description=description,\n long_description=long_description,\n long_description_content_type='text/markdown',\n author=\"dbt Labs\",\n author_email=\"info@dbtlabs.com\",\n url=\"https://github.com/dbt-labs/dbt\",\n packages=find_namespace_packages(include=['dbt', 'dbt.*']),\n package_data={\n 'dbt': [\n 'include/bigquery/dbt_project.yml',\n 'include/bigquery/sample_profiles.yml',\n 'include/bigquery/macros/*.sql',\n 'include/bigquery/macros/**/*.sql',\n ]\n },\n install_requires=[\n 'dbt-core=={}'.format(package_version),\n 'protobuf>=3.13.0,<4',\n 'google-cloud-core>=1.3.0,<2',\n 'google-cloud-bigquery>=1.25.0,<3',\n 'google-api-core>=1.16.0,<1.31.3',\n 'googleapis-common-protos>=1.6.0,<2',\n 'six>=1.14.0',\n ],\n zip_safe=False,\n classifiers=[\n 'Development Status :: 5 - Production/Stable',\n\n 'License :: OSI Approved :: Apache Software License',\n\n 'Operating System :: Microsoft :: Windows',\n 'Operating System :: MacOS :: MacOS X',\n 'Operating System :: POSIX :: Linux',\n\n 'Programming Language :: Python :: 3.6',\n 'Programming Language :: Python :: 
3.7',\n 'Programming Language :: Python :: 3.8',\n 'Programming Language :: Python :: 3.9',\n ],\n python_requires=\">=3.6.2\",\n)\n", "path": "plugins/bigquery/setup.py"}]}

| 3,710 | 154 |

| gh_patches_debug_26755 | rasdani/github-patches | git_diff | dask__dask-8213 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

⚠️ Upstream CI failed ⚠️

[Workflow Run URL](https://github.com/dask/dask/actions/runs/1304259797)

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `dask/dataframe/tseries/resample.py`

Content:

```
1 import numpy as np
2 import pandas as pd
3 from pandas.core.resample import Resampler as pd_Resampler
4
5 from ...base import tokenize
6 from ...highlevelgraph import HighLevelGraph
7 from ...utils import derived_from
8 from .. import methods
9 from ..core import DataFrame, Series
10
11
12 def getnanos(rule):
13     try:
14         return getattr(rule, "nanos", None)
15     except ValueError:
16         return None
17
18
19 def _resample_series(
20     series,
21     start,
22     end,
23     reindex_closed,
24     rule,
25     resample_kwargs,
26     how,
27     fill_value,
28     how_args,
29     how_kwargs,
30 ):
31     out = getattr(series.resample(rule, **resample_kwargs), how)(
32         *how_args, **how_kwargs
33     )
34
35     new_index = pd.date_range(
36         start.tz_localize(None),
37         end.tz_localize(None),
38         freq=rule,
39         closed=reindex_closed,
40         name=out.index.name,
41     ).tz_localize(start.tz, nonexistent="shift_forward")
42
43     if not out.index.isin(new_index).all():
44         raise ValueError(
45             "Index is not contained within new index. This can often be "
46             "resolved by using larger partitions, or unambiguous "
47             "frequencies: 'Q', 'A'..."
48         )
49
50     return out.reindex(new_index, fill_value=fill_value)
51
52
53 def _resample_bin_and_out_divs(divisions, rule, closed="left", label="left"):
54     rule = pd.tseries.frequencies.to_offset(rule)
55     g = pd.Grouper(freq=rule, how="count", closed=closed, label=label)
56
57     # Determine bins to apply `how` to. Disregard labeling scheme.
58     divs = pd.Series(range(len(divisions)), index=divisions)
59     temp = divs.resample(rule, closed=closed, label="left").count()
60     tempdivs = temp.loc[temp > 0].index
61
62     # Cleanup closed == 'right' and label == 'right'
63     res = pd.offsets.Nano() if hasattr(rule, "delta") else pd.offsets.Day()
64     if g.closed == "right":
65         newdivs = tempdivs + res
66     else:
67         newdivs = tempdivs
68     if g.label == "right":
69         outdivs = tempdivs + rule
70     else:
71         outdivs = tempdivs
72
73     newdivs = methods.tolist(newdivs)
74     outdivs = methods.tolist(outdivs)
75
76     # Adjust ends
77     if newdivs[0] < divisions[0]:
78         newdivs[0] = divisions[0]
79     if newdivs[-1] < divisions[-1]:
80         if len(newdivs) < len(divs):
81             setter = lambda a, val: a.append(val)
82         else:
83             setter = lambda a, val: a.__setitem__(-1, val)
84         setter(newdivs, divisions[-1] + res)
85         if outdivs[-1] > divisions[-1]:
86             setter(outdivs, outdivs[-1])
87         elif outdivs[-1] < divisions[-1]:
88             setter(outdivs, temp.index[-1])
89
90     return tuple(map(pd.Timestamp, newdivs)), tuple(map(pd.Timestamp, outdivs))
91
92
93 class Resampler:
94     """Class for resampling timeseries data.
95
96     This class is commonly encountered when using ``obj.resample(...)`` which
97     return ``Resampler`` objects.
98
99     Parameters
100     ----------
101     obj : Dask DataFrame or Series
102         Data to be resampled.
103     rule : str, tuple, datetime.timedelta, DateOffset or None
104         The offset string or object representing the target conversion.
105     kwargs : optional
106         Keyword arguments passed to underlying pandas resampling function.
107
108     Returns
109     -------
110     Resampler instance of the appropriate type
111     """
112
113     def __init__(self, obj, rule, **kwargs):
114         if not obj.known_divisions:
115             msg = (
116                 "Can only resample dataframes with known divisions\n"
117                 "See https://docs.dask.org/en/latest/dataframe-design.html#partitions\n"
118                 "for more information."
119             )
120             raise ValueError(msg)
121         self.obj = obj
122         self._rule = pd.tseries.frequencies.to_offset(rule)
123         self._kwargs = kwargs
124
125     def _agg(self, how, meta=None, fill_value=np.nan, how_args=(), how_kwargs={}):
126         """Aggregate using one or more operations
127
128         Parameters
129         ----------
130         how : str
131             Name of aggregation operation
132         fill_value : scalar, optional
133             Value to use for missing values, applied during upsampling.
134             Default is NaN.
135         how_args : optional
136             Positional arguments for aggregation operation.
137         how_kwargs : optional
138             Keyword arguments for aggregation operation.
139
140         Returns
141         -------
142         Dask DataFrame or Series
143         """
144         rule = self._rule
145         kwargs = self._kwargs
146         name = "resample-" + tokenize(
147             self.obj, rule, kwargs, how, *how_args, **how_kwargs
148         )
149
150         # Create a grouper to determine closed and label conventions
151         newdivs, outdivs = _resample_bin_and_out_divs(
152             self.obj.divisions, rule, **kwargs
153         )
154
155         # Repartition divs into bins. These won't match labels after mapping
156         partitioned = self.obj.repartition(newdivs, force=True)
157
158         keys = partitioned.__dask_keys__()
159         dsk = {}
160
161         args = zip(keys, outdivs, outdivs[1:], ["left"] * (len(keys) - 1) + [None])
162         for i, (k, s, e, c) in enumerate(args):
163             dsk[(name, i)] = (
164                 _resample_series,
165                 k,
166                 s,
167                 e,
168                 c,
169                 rule,
170                 kwargs,
171                 how,
172                 fill_value,
173                 list(how_args),
174                 how_kwargs,
175             )
176
177         # Infer output metadata
178         meta_r = self.obj._meta_nonempty.resample(self._rule, **self._kwargs)
179         meta = getattr(meta_r, how)(*how_args, **how_kwargs)
180
181         graph = HighLevelGraph.from_collections(name, dsk, dependencies=[partitioned])
182         if isinstance(meta, pd.DataFrame):
183             return DataFrame(graph, name, meta, outdivs)
184         return Series(graph, name, meta, outdivs)
185
186     @derived_from(pd_Resampler)
187     def agg(self, agg_funcs, *args, **kwargs):
188         return self._agg("agg", how_args=(agg_funcs,) + args, how_kwargs=kwargs)
189
190     @derived_from(pd_Resampler)
191     def count(self):
192         return self._agg("count", fill_value=0)
193
194     @derived_from(pd_Resampler)
195     def first(self):
196         return self._agg("first")
197
198     @derived_from(pd_Resampler)
199     def last(self):
200         return self._agg("last")
201
202     @derived_from(pd_Resampler)
203     def mean(self):
204         return self._agg("mean")
205
206     @derived_from(pd_Resampler)
207     def min(self):
208         return self._agg("min")
209
210     @derived_from(pd_Resampler)
211     def median(self):
212         return self._agg("median")
213
214     @derived_from(pd_Resampler)
215     def max(self):
216         return self._agg("max")
217
218     @derived_from(pd_Resampler)
219     def nunique(self):
220         return self._agg("nunique", fill_value=0)
221
222     @derived_from(pd_Resampler)
223     def ohlc(self):
224         return self._agg("ohlc")
225
226     @derived_from(pd_Resampler)
227     def prod(self):
228         return self._agg("prod")
229
230     @derived_from(pd_Resampler)
231     def sem(self):
232         return self._agg("sem")
233
234     @derived_from(pd_Resampler)
235     def std(self):
236         return self._agg("std")
237
238     @derived_from(pd_Resampler)
239     def size(self):
240         return self._agg("size", fill_value=0)
241
242     @derived_from(pd_Resampler)
243     def sum(self):
244         return self._agg("sum", fill_value=0)
245
246     @derived_from(pd_Resampler)
247     def var(self):
248         return self._agg("var")
249
250     @derived_from(pd_Resampler)
251     def quantile(self):
252         return self._agg("quantile")
253
```

Path: `dask/dataframe/_compat.py`

Content:

```
1 import string
2
3 import numpy as np
4 import pandas as pd
5 from packaging.version import parse as parse_version
6
7 PANDAS_VERSION = parse_version(pd.__version__)
8 PANDAS_GT_104 = PANDAS_VERSION >= parse_version("1.0.4")
9 PANDAS_GT_110 = PANDAS_VERSION >= parse_version("1.1.0")
10 PANDAS_GT_120 = PANDAS_VERSION >= parse_version("1.2.0")
11 PANDAS_GT_121 = PANDAS_VERSION >= parse_version("1.2.1")
12 PANDAS_GT_130 = PANDAS_VERSION >= parse_version("1.3.0")
13 PANDAS_GT_131 = PANDAS_VERSION >= parse_version("1.3.1")
14 PANDAS_GT_133 = PANDAS_VERSION >= parse_version("1.3.3")
15
16 import pandas.testing as tm
17
18
19 def assert_categorical_equal(left, right, *args, **kwargs):
20     tm.assert_extension_array_equal(left, right, *args, **kwargs)
21     assert pd.api.types.is_categorical_dtype(
22         left.dtype
23     ), "{} is not categorical dtype".format(left)
24     assert pd.api.types.is_categorical_dtype(
25         right.dtype
26     ), "{} is not categorical dtype".format(right)
27
28
29 def assert_numpy_array_equal(left, right):
30     left_na = pd.isna(left)
31     right_na = pd.isna(right)
32     np.testing.assert_array_equal(left_na, right_na)
33
34     left_valid = left[~left_na]
35     right_valid = right[~right_na]
36     np.testing.assert_array_equal(left_valid, right_valid)
37
38
39 def makeDataFrame():
40     data = np.random.randn(30, 4)
41     index = list(string.ascii_letters)[:30]
42     return pd.DataFrame(data, index=index, columns=list("ABCD"))
43
44
45 def makeTimeDataFrame():
46     data = makeDataFrame()
47     data.index = makeDateIndex()
48     return data
49
50
51 def makeTimeSeries():
52     return makeTimeDataFrame()["A"]
53
54
55 def makeDateIndex(k=30, freq="B"):
56     return pd.date_range("2000", periods=k, freq=freq)
57
58
59 def makeTimedeltaIndex(k=30, freq="D"):
60     return pd.timedelta_range("1 day", periods=k, freq=freq)
61
62
63 def makeMissingDataframe():
64     df = makeDataFrame()
65     data = df.values
66     data = np.where(data > 1, np.nan, data)
67     return pd.DataFrame(data, index=df.index, columns=df.columns)
68
69
70 def makeMixedDataFrame():
71     df = pd.DataFrame(
72         {
73             "A": [0.0, 1, 2, 3, 4],
74             "B": [0.0, 1, 0, 1, 0],
75             "C": ["foo{}".format(i) for i in range(5)],
76             "D": pd.date_range("2009-01-01", periods=5),
77         }
78     )
79     return df
80
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

```diff
diff --git a/dask/dataframe/_compat.py b/dask/dataframe/_compat.py
--- a/dask/dataframe/_compat.py
+++ b/dask/dataframe/_compat.py
@@ -12,6 +12,7 @@
 PANDAS_GT_130 = PANDAS_VERSION >= parse_version("1.3.0")
 PANDAS_GT_131 = PANDAS_VERSION >= parse_version("1.3.1")
 PANDAS_GT_133 = PANDAS_VERSION >= parse_version("1.3.3")
+PANDAS_GT_140 = PANDAS_VERSION.release == (1, 4, 0)  # include pre-release
 
 import pandas.testing as tm
 
diff --git a/dask/dataframe/tseries/resample.py b/dask/dataframe/tseries/resample.py
--- a/dask/dataframe/tseries/resample.py
+++ b/dask/dataframe/tseries/resample.py
@@ -6,6 +6,7 @@
 from ...highlevelgraph import HighLevelGraph
 from ...utils import derived_from
 from .. import methods
+from .._compat import PANDAS_GT_140
 from ..core import DataFrame, Series
 
 
@@ -32,11 +33,20 @@
         *how_args, **how_kwargs
     )
 
+    if PANDAS_GT_140:
+        if reindex_closed is None:
+            inclusive = "both"
+        else:
+            inclusive = reindex_closed
+        closed_kwargs = {"inclusive": inclusive}
+    else:
+        closed_kwargs = {"closed": reindex_closed}
+
     new_index = pd.date_range(
         start.tz_localize(None),
         end.tz_localize(None),
         freq=rule,
-        closed=reindex_closed,
+        **closed_kwargs,
         name=out.index.name,
     ).tz_localize(start.tz, nonexistent="shift_forward")
```
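The patch selects between two spellings of the same `pd.date_range` option. pandas 1.4.0 deprecated `closed` in favour of `inclusive`. The old `closed=None`, meaning keep both endpoints, becomes `inclusive="both"`.

The `# include pre-release` comment refers to how `packaging` compares versions. `Version("1.4.0rc0") >= Version("1.4.0")` is `False`, but `Version("1.4.0rc0").release == (1, 4, 0)` is `True`.

Below is a minimal, pandas-free sketch of that mapping. The helper name `closed_kwargs` is hypothetical. The function compares release tuples with `>=` instead of the patch's `==`, so later 1.4.x and 2.x releases also take the `inclusive` path. That is a choice made for this sketch, not part of the patch.

```python
def closed_kwargs(pandas_release, reindex_closed):
    """Hypothetical helper: pick the pd.date_range keyword that controls
    which endpoints are kept.

    pandas_release: three-part tuple such as (1, 3, 5) or (1, 4, 0), e.g.
        packaging's Version(pd.__version__).release, which drops any
        pre-release tag, so '1.4.0rc0' also yields (1, 4, 0).
    reindex_closed: 'left', 'right' or None, as passed by dask.
    """
    if pandas_release >= (1, 4, 0):
        # pandas >= 1.4 spells this option `inclusive`; the old
        # `closed=None` (keep both ends) becomes `inclusive="both"`.
        inclusive = "both" if reindex_closed is None else reindex_closed
        return {"inclusive": inclusive}
    # Older pandas only understands `closed`.
    return {"closed": reindex_closed}


print(closed_kwargs((1, 3, 5), "left"))  # {'closed': 'left'}
print(closed_kwargs((1, 4, 0), None))    # {'inclusive': 'both'}
print(closed_kwargs((2, 0, 0), "left"))  # {'inclusive': 'left'}
```

The returned dict is meant to be splatted into the call, as in `pd.date_range(start, end, freq=rule, **closed_kwargs(release, reindex_closed))`. This keeps one call site valid on both sides of the deprecation.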

|

{"golden_diff": "diff --git a/dask/dataframe/_compat.py b/dask/dataframe/_compat.py\n--- a/dask/dataframe/_compat.py\n+++ b/dask/dataframe/_compat.py\n@@ -12,6 +12,7 @@\n PANDAS_GT_130 = PANDAS_VERSION >= parse_version(\"1.3.0\")\n PANDAS_GT_131 = PANDAS_VERSION >= parse_version(\"1.3.1\")\n PANDAS_GT_133 = PANDAS_VERSION >= parse_version(\"1.3.3\")\n+PANDAS_GT_140 = PANDAS_VERSION.release == (1, 4, 0) # include pre-release\n \n import pandas.testing as tm\n \ndiff --git a/dask/dataframe/tseries/resample.py b/dask/dataframe/tseries/resample.py\n--- a/dask/dataframe/tseries/resample.py\n+++ b/dask/dataframe/tseries/resample.py\n@@ -6,6 +6,7 @@\n from ...highlevelgraph import HighLevelGraph\n from ...utils import derived_from\n from .. import methods\n+from .._compat import PANDAS_GT_140\n from ..core import DataFrame, Series\n \n \n@@ -32,11 +33,20 @@\n *how_args, **how_kwargs\n )\n \n+ if PANDAS_GT_140:\n+ if reindex_closed is None:\n+ inclusive = \"both\"\n+ else:\n+ inclusive = reindex_closed\n+ closed_kwargs = {\"inclusive\": inclusive}\n+ else:\n+ closed_kwargs = {\"closed\": reindex_closed}\n+\n new_index = pd.date_range(\n start.tz_localize(None),\n end.tz_localize(None),\n freq=rule,\n- closed=reindex_closed,\n+ **closed_kwargs,\n name=out.index.name,\n ).tz_localize(start.tz, nonexistent=\"shift_forward\")\n", "issue": "\u26a0\ufe0f Upstream CI failed \u26a0\ufe0f\n[Workflow Run URL](https://github.com/dask/dask/actions/runs/1304259797)\n", "before_files": [{"content": "import numpy as np\nimport pandas as pd\nfrom pandas.core.resample import Resampler as pd_Resampler\n\nfrom ...base import tokenize\nfrom ...highlevelgraph import HighLevelGraph\nfrom ...utils import derived_from\nfrom .. 
import methods\nfrom ..core import DataFrame, Series\n\n\ndef getnanos(rule):\n try:\n return getattr(rule, \"nanos\", None)\n except ValueError:\n return None\n\n\ndef _resample_series(\n series,\n start,\n end,\n reindex_closed,\n rule,\n resample_kwargs,\n how,\n fill_value,\n how_args,\n how_kwargs,\n):\n out = getattr(series.resample(rule, **resample_kwargs), how)(\n *how_args, **how_kwargs\n )\n\n new_index = pd.date_range(\n start.tz_localize(None),\n end.tz_localize(None),\n freq=rule,\n closed=reindex_closed,\n name=out.index.name,\n ).tz_localize(start.tz, nonexistent=\"shift_forward\")\n\n if not out.index.isin(new_index).all():\n raise ValueError(\n \"Index is not contained within new index. This can often be \"\n \"resolved by using larger partitions, or unambiguous \"\n \"frequencies: 'Q', 'A'...\"\n )\n\n return out.reindex(new_index, fill_value=fill_value)\n\n\ndef _resample_bin_and_out_divs(divisions, rule, closed=\"left\", label=\"left\"):\n rule = pd.tseries.frequencies.to_offset(rule)\n g = pd.Grouper(freq=rule, how=\"count\", closed=closed, label=label)\n\n # Determine bins to apply `how` to. 
Disregard labeling scheme.\n divs = pd.Series(range(len(divisions)), index=divisions)\n temp = divs.resample(rule, closed=closed, label=\"left\").count()\n tempdivs = temp.loc[temp > 0].index\n\n # Cleanup closed == 'right' and label == 'right'\n res = pd.offsets.Nano() if hasattr(rule, \"delta\") else pd.offsets.Day()\n if g.closed == \"right\":\n newdivs = tempdivs + res\n else:\n newdivs = tempdivs\n if g.label == \"right\":\n outdivs = tempdivs + rule\n else:\n outdivs = tempdivs\n\n newdivs = methods.tolist(newdivs)\n outdivs = methods.tolist(outdivs)\n\n # Adjust ends\n if newdivs[0] < divisions[0]:\n newdivs[0] = divisions[0]\n if newdivs[-1] < divisions[-1]:\n if len(newdivs) < len(divs):\n setter = lambda a, val: a.append(val)\n else:\n setter = lambda a, val: a.__setitem__(-1, val)\n setter(newdivs, divisions[-1] + res)\n if outdivs[-1] > divisions[-1]:\n setter(outdivs, outdivs[-1])\n elif outdivs[-1] < divisions[-1]:\n setter(outdivs, temp.index[-1])\n\n return tuple(map(pd.Timestamp, newdivs)), tuple(map(pd.Timestamp, outdivs))\n\n\nclass Resampler:\n \"\"\"Class for resampling timeseries data.\n\n This class is commonly encountered when using ``obj.resample(...)`` which\n return ``Resampler`` objects.\n\n Parameters\n ----------\n obj : Dask DataFrame or Series\n Data to be resampled.\n rule : str, tuple, datetime.timedelta, DateOffset or None\n The offset string or object representing the target conversion.\n kwargs : optional\n Keyword arguments passed to underlying pandas resampling function.\n\n Returns\n -------\n Resampler instance of the appropriate type\n \"\"\"\n\n def __init__(self, obj, rule, **kwargs):\n if not obj.known_divisions:\n msg = (\n \"Can only resample dataframes with known divisions\\n\"\n \"See https://docs.dask.org/en/latest/dataframe-design.html#partitions\\n\"\n \"for more information.\"\n )\n raise ValueError(msg)\n self.obj = obj\n self._rule = pd.tseries.frequencies.to_offset(rule)\n self._kwargs = kwargs\n\n def 
_agg(self, how, meta=None, fill_value=np.nan, how_args=(), how_kwargs={}):\n \"\"\"Aggregate using one or more operations\n\n Parameters\n ----------\n how : str\n Name of aggregation operation\n fill_value : scalar, optional\n Value to use for missing values, applied during upsampling.\n Default is NaN.\n how_args : optional\n Positional arguments for aggregation operation.\n how_kwargs : optional\n Keyword arguments for aggregation operation.\n\n Returns\n -------\n Dask DataFrame or Series\n \"\"\"\n rule = self._rule\n kwargs = self._kwargs\n name = \"resample-\" + tokenize(\n self.obj, rule, kwargs, how, *how_args, **how_kwargs\n )\n\n # Create a grouper to determine closed and label conventions\n newdivs, outdivs = _resample_bin_and_out_divs(\n self.obj.divisions, rule, **kwargs\n )\n\n # Repartition divs into bins. These won't match labels after mapping\n partitioned = self.obj.repartition(newdivs, force=True)\n\n keys = partitioned.__dask_keys__()\n dsk = {}\n\n args = zip(keys, outdivs, outdivs[1:], [\"left\"] * (len(keys) - 1) + [None])\n for i, (k, s, e, c) in enumerate(args):\n dsk[(name, i)] = (\n _resample_series,\n k,\n s,\n e,\n c,\n rule,\n kwargs,\n how,\n fill_value,\n list(how_args),\n how_kwargs,\n )\n\n # Infer output metadata\n meta_r = self.obj._meta_nonempty.resample(self._rule, **self._kwargs)\n meta = getattr(meta_r, how)(*how_args, **how_kwargs)\n\n graph = HighLevelGraph.from_collections(name, dsk, dependencies=[partitioned])\n if isinstance(meta, pd.DataFrame):\n return DataFrame(graph, name, meta, outdivs)\n return Series(graph, name, meta, outdivs)\n\n @derived_from(pd_Resampler)\n def agg(self, agg_funcs, *args, **kwargs):\n return self._agg(\"agg\", how_args=(agg_funcs,) + args, how_kwargs=kwargs)\n\n @derived_from(pd_Resampler)\n def count(self):\n return self._agg(\"count\", fill_value=0)\n\n @derived_from(pd_Resampler)\n def first(self):\n return self._agg(\"first\")\n\n @derived_from(pd_Resampler)\n def last(self):\n return 
self._agg(\"last\")\n\n @derived_from(pd_Resampler)\n def mean(self):\n return self._agg(\"mean\")\n\n @derived_from(pd_Resampler)\n def min(self):\n return self._agg(\"min\")\n\n @derived_from(pd_Resampler)\n def median(self):\n return self._agg(\"median\")\n\n @derived_from(pd_Resampler)\n def max(self):\n return self._agg(\"max\")\n\n @derived_from(pd_Resampler)\n def nunique(self):\n return self._agg(\"nunique\", fill_value=0)\n\n @derived_from(pd_Resampler)\n def ohlc(self):\n return self._agg(\"ohlc\")\n\n @derived_from(pd_Resampler)\n def prod(self):\n return self._agg(\"prod\")\n\n @derived_from(pd_Resampler)\n def sem(self):\n return self._agg(\"sem\")\n\n @derived_from(pd_Resampler)\n def std(self):\n return self._agg(\"std\")\n\n @derived_from(pd_Resampler)\n def size(self):\n return self._agg(\"size\", fill_value=0)\n\n @derived_from(pd_Resampler)\n def sum(self):\n return self._agg(\"sum\", fill_value=0)\n\n @derived_from(pd_Resampler)\n def var(self):\n return self._agg(\"var\")\n\n @derived_from(pd_Resampler)\n def quantile(self):\n return self._agg(\"quantile\")\n", "path": "dask/dataframe/tseries/resample.py"}, {"content": "import string\n\nimport numpy as np\nimport pandas as pd\nfrom packaging.version import parse as parse_version\n\nPANDAS_VERSION = parse_version(pd.__version__)\nPANDAS_GT_104 = PANDAS_VERSION >= parse_version(\"1.0.4\")\nPANDAS_GT_110 = PANDAS_VERSION >= parse_version(\"1.1.0\")\nPANDAS_GT_120 = PANDAS_VERSION >= parse_version(\"1.2.0\")\nPANDAS_GT_121 = PANDAS_VERSION >= parse_version(\"1.2.1\")\nPANDAS_GT_130 = PANDAS_VERSION >= parse_version(\"1.3.0\")\nPANDAS_GT_131 = PANDAS_VERSION >= parse_version(\"1.3.1\")\nPANDAS_GT_133 = PANDAS_VERSION >= parse_version(\"1.3.3\")\n\nimport pandas.testing as tm\n\n\ndef assert_categorical_equal(left, right, *args, **kwargs):\n tm.assert_extension_array_equal(left, right, *args, **kwargs)\n assert pd.api.types.is_categorical_dtype(\n left.dtype\n ), \"{} is not categorical 
dtype\".format(left)\n assert pd.api.types.is_categorical_dtype(\n right.dtype\n ), \"{} is not categorical dtype\".format(right)\n\n\ndef assert_numpy_array_equal(left, right):\n left_na = pd.isna(left)\n right_na = pd.isna(right)\n np.testing.assert_array_equal(left_na, right_na)\n\n left_valid = left[~left_na]\n right_valid = right[~right_na]\n np.testing.assert_array_equal(left_valid, right_valid)\n\n\ndef makeDataFrame():\n data = np.random.randn(30, 4)\n index = list(string.ascii_letters)[:30]\n return pd.DataFrame(data, index=index, columns=list(\"ABCD\"))\n\n\ndef makeTimeDataFrame():\n data = makeDataFrame()\n data.index = makeDateIndex()\n return data\n\n\ndef makeTimeSeries():\n return makeTimeDataFrame()[\"A\"]\n\n\ndef makeDateIndex(k=30, freq=\"B\"):\n return pd.date_range(\"2000\", periods=k, freq=freq)\n\n\ndef makeTimedeltaIndex(k=30, freq=\"D\"):\n return pd.timedelta_range(\"1 day\", periods=k, freq=freq)\n\n\ndef makeMissingDataframe():\n df = makeDataFrame()\n data = df.values\n data = np.where(data > 1, np.nan, data)\n return pd.DataFrame(data, index=df.index, columns=df.columns)\n\n\ndef makeMixedDataFrame():\n df = pd.DataFrame(\n {\n \"A\": [0.0, 1, 2, 3, 4],\n \"B\": [0.0, 1, 0, 1, 0],\n \"C\": [\"foo{}\".format(i) for i in range(5)],\n \"D\": pd.date_range(\"2009-01-01\", periods=5),\n }\n )\n return df\n", "path": "dask/dataframe/_compat.py"}], "after_files": [{"content": "import numpy as np\nimport pandas as pd\nfrom pandas.core.resample import Resampler as pd_Resampler\n\nfrom ...base import tokenize\nfrom ...highlevelgraph import HighLevelGraph\nfrom ...utils import derived_from\nfrom .. 
import methods\nfrom .._compat import PANDAS_GT_140\nfrom ..core import DataFrame, Series\n\n\ndef getnanos(rule):\n try:\n return getattr(rule, \"nanos\", None)\n except ValueError:\n return None\n\n\ndef _resample_series(\n series,\n start,\n end,\n reindex_closed,\n rule,\n resample_kwargs,\n how,\n fill_value,\n how_args,\n how_kwargs,\n):\n out = getattr(series.resample(rule, **resample_kwargs), how)(\n *how_args, **how_kwargs\n )\n\n if PANDAS_GT_140:\n if reindex_closed is None:\n inclusive = \"both\"\n else:\n inclusive = reindex_closed\n closed_kwargs = {\"inclusive\": inclusive}\n else:\n closed_kwargs = {\"closed\": reindex_closed}\n\n new_index = pd.date_range(\n start.tz_localize(None),\n end.tz_localize(None),\n freq=rule,\n **closed_kwargs,\n name=out.index.name,\n ).tz_localize(start.tz, nonexistent=\"shift_forward\")\n\n if not out.index.isin(new_index).all():\n raise ValueError(\n \"Index is not contained within new index. This can often be \"\n \"resolved by using larger partitions, or unambiguous \"\n \"frequencies: 'Q', 'A'...\"\n )\n\n return out.reindex(new_index, fill_value=fill_value)\n\n\ndef _resample_bin_and_out_divs(divisions, rule, closed=\"left\", label=\"left\"):\n rule = pd.tseries.frequencies.to_offset(rule)\n g = pd.Grouper(freq=rule, how=\"count\", closed=closed, label=label)\n\n # Determine bins to apply `how` to. 
Disregard labeling scheme.\n divs = pd.Series(range(len(divisions)), index=divisions)\n temp = divs.resample(rule, closed=closed, label=\"left\").count()\n tempdivs = temp.loc[temp > 0].index\n\n # Cleanup closed == 'right' and label == 'right'\n res = pd.offsets.Nano() if hasattr(rule, \"delta\") else pd.offsets.Day()\n if g.closed == \"right\":\n newdivs = tempdivs + res\n else:\n newdivs = tempdivs\n if g.label == \"right\":\n outdivs = tempdivs + rule\n else:\n outdivs = tempdivs\n\n newdivs = methods.tolist(newdivs)\n outdivs = methods.tolist(outdivs)\n\n # Adjust ends\n if newdivs[0] < divisions[0]:\n newdivs[0] = divisions[0]\n if newdivs[-1] < divisions[-1]:\n if len(newdivs) < len(divs):\n setter = lambda a, val: a.append(val)\n else:\n setter = lambda a, val: a.__setitem__(-1, val)\n setter(newdivs, divisions[-1] + res)\n if outdivs[-1] > divisions[-1]:\n setter(outdivs, outdivs[-1])\n elif outdivs[-1] < divisions[-1]:\n setter(outdivs, temp.index[-1])\n\n return tuple(map(pd.Timestamp, newdivs)), tuple(map(pd.Timestamp, outdivs))\n\n\nclass Resampler:\n \"\"\"Class for resampling timeseries data.\n\n This class is commonly encountered when using ``obj.resample(...)`` which\n return ``Resampler`` objects.\n\n Parameters\n ----------\n obj : Dask DataFrame or Series\n Data to be resampled.\n rule : str, tuple, datetime.timedelta, DateOffset or None\n The offset string or object representing the target conversion.\n kwargs : optional\n Keyword arguments passed to underlying pandas resampling function.\n\n Returns\n -------\n Resampler instance of the appropriate type\n \"\"\"\n\n def __init__(self, obj, rule, **kwargs):\n if not obj.known_divisions:\n msg = (\n \"Can only resample dataframes with known divisions\\n\"\n \"See https://docs.dask.org/en/latest/dataframe-design.html#partitions\\n\"\n \"for more information.\"\n )\n raise ValueError(msg)\n self.obj = obj\n self._rule = pd.tseries.frequencies.to_offset(rule)\n self._kwargs = kwargs\n\n def 
_agg(self, how, meta=None, fill_value=np.nan, how_args=(), how_kwargs={}):\n \"\"\"Aggregate using one or more operations\n\n Parameters\n ----------\n how : str\n Name of aggregation operation\n fill_value : scalar, optional\n Value to use for missing values, applied during upsampling.\n Default is NaN.\n how_args : optional\n Positional arguments for aggregation operation.\n how_kwargs : optional\n Keyword arguments for aggregation operation.\n\n Returns\n -------\n Dask DataFrame or Series\n \"\"\"\n rule = self._rule\n kwargs = self._kwargs\n name = \"resample-\" + tokenize(\n self.obj, rule, kwargs, how, *how_args, **how_kwargs\n )\n\n # Create a grouper to determine closed and label conventions\n newdivs, outdivs = _resample_bin_and_out_divs(\n self.obj.divisions, rule, **kwargs\n )\n\n # Repartition divs into bins. These won't match labels after mapping\n partitioned = self.obj.repartition(newdivs, force=True)\n\n keys = partitioned.__dask_keys__()\n dsk = {}\n\n args = zip(keys, outdivs, outdivs[1:], [\"left\"] * (len(keys) - 1) + [None])\n for i, (k, s, e, c) in enumerate(args):\n dsk[(name, i)] = (\n _resample_series,\n k,\n s,\n e,\n c,\n rule,\n kwargs,\n how,\n fill_value,\n list(how_args),\n how_kwargs,\n )\n\n # Infer output metadata\n meta_r = self.obj._meta_nonempty.resample(self._rule, **self._kwargs)\n meta = getattr(meta_r, how)(*how_args, **how_kwargs)\n\n graph = HighLevelGraph.from_collections(name, dsk, dependencies=[partitioned])\n if isinstance(meta, pd.DataFrame):\n return DataFrame(graph, name, meta, outdivs)\n return Series(graph, name, meta, outdivs)\n\n @derived_from(pd_Resampler)\n def agg(self, agg_funcs, *args, **kwargs):\n return self._agg(\"agg\", how_args=(agg_funcs,) + args, how_kwargs=kwargs)\n\n @derived_from(pd_Resampler)\n def count(self):\n return self._agg(\"count\", fill_value=0)\n\n @derived_from(pd_Resampler)\n def first(self):\n return self._agg(\"first\")\n\n @derived_from(pd_Resampler)\n def last(self):\n return 
self._agg(\"last\")\n\n @derived_from(pd_Resampler)\n def mean(self):\n return self._agg(\"mean\")\n\n @derived_from(pd_Resampler)\n def min(self):\n return self._agg(\"min\")\n\n @derived_from(pd_Resampler)\n def median(self):\n return self._agg(\"median\")\n\n @derived_from(pd_Resampler)\n def max(self):\n return self._agg(\"max\")\n\n @derived_from(pd_Resampler)\n def nunique(self):\n return self._agg(\"nunique\", fill_value=0)\n\n @derived_from(pd_Resampler)\n def ohlc(self):\n return self._agg(\"ohlc\")\n\n @derived_from(pd_Resampler)\n def prod(self):\n return self._agg(\"prod\")\n\n @derived_from(pd_Resampler)\n def sem(self):\n return self._agg(\"sem\")\n\n @derived_from(pd_Resampler)\n def std(self):\n return self._agg(\"std\")\n\n @derived_from(pd_Resampler)\n def size(self):\n return self._agg(\"size\", fill_value=0)\n\n @derived_from(pd_Resampler)\n def sum(self):\n return self._agg(\"sum\", fill_value=0)\n\n @derived_from(pd_Resampler)\n def var(self):\n return self._agg(\"var\")\n\n @derived_from(pd_Resampler)\n def quantile(self):\n return self._agg(\"quantile\")\n", "path": "dask/dataframe/tseries/resample.py"}, {"content": "import string\n\nimport numpy as np\nimport pandas as pd\nfrom packaging.version import parse as parse_version\n\nPANDAS_VERSION = parse_version(pd.__version__)\nPANDAS_GT_104 = PANDAS_VERSION >= parse_version(\"1.0.4\")\nPANDAS_GT_110 = PANDAS_VERSION >= parse_version(\"1.1.0\")\nPANDAS_GT_120 = PANDAS_VERSION >= parse_version(\"1.2.0\")\nPANDAS_GT_121 = PANDAS_VERSION >= parse_version(\"1.2.1\")\nPANDAS_GT_130 = PANDAS_VERSION >= parse_version(\"1.3.0\")\nPANDAS_GT_131 = PANDAS_VERSION >= parse_version(\"1.3.1\")\nPANDAS_GT_133 = PANDAS_VERSION >= parse_version(\"1.3.3\")\nPANDAS_GT_140 = PANDAS_VERSION.release == (1, 4, 0) # include pre-release\n\nimport pandas.testing as tm\n\n\ndef assert_categorical_equal(left, right, *args, **kwargs):\n tm.assert_extension_array_equal(left, right, *args, **kwargs)\n assert 
pd.api.types.is_categorical_dtype(\n left.dtype\n ), \"{} is not categorical dtype\".format(left)\n assert pd.api.types.is_categorical_dtype(\n right.dtype\n ), \"{} is not categorical dtype\".format(right)\n\n\ndef assert_numpy_array_equal(left, right):\n left_na = pd.isna(left)\n right_na = pd.isna(right)\n np.testing.assert_array_equal(left_na, right_na)\n\n left_valid = left[~left_na]\n right_valid = right[~right_na]\n np.testing.assert_array_equal(left_valid, right_valid)\n\n\ndef makeDataFrame():\n data = np.random.randn(30, 4)\n index = list(string.ascii_letters)[:30]\n return pd.DataFrame(data, index=index, columns=list(\"ABCD\"))\n\n\ndef makeTimeDataFrame():\n data = makeDataFrame()\n data.index = makeDateIndex()\n return data\n\n\ndef makeTimeSeries():\n return makeTimeDataFrame()[\"A\"]\n\n\ndef makeDateIndex(k=30, freq=\"B\"):\n return pd.date_range(\"2000\", periods=k, freq=freq)\n\n\ndef makeTimedeltaIndex(k=30, freq=\"D\"):\n return pd.timedelta_range(\"1 day\", periods=k, freq=freq)\n\n\ndef makeMissingDataframe():\n df = makeDataFrame()\n data = df.values\n data = np.where(data > 1, np.nan, data)\n return pd.DataFrame(data, index=df.index, columns=df.columns)\n\n\ndef makeMixedDataFrame():\n df = pd.DataFrame(\n {\n \"A\": [0.0, 1, 2, 3, 4],\n \"B\": [0.0, 1, 0, 1, 0],\n \"C\": [\"foo{}\".format(i) for i in range(5)],\n \"D\": pd.date_range(\"2009-01-01\", periods=5),\n }\n )\n return df\n", "path": "dask/dataframe/_compat.py"}]}

| 3,598 | 417 |
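The golden diff above works around a pandas API change. Starting with pandas 1.4, `pd.date_range` takes `inclusive=` ("both", "neither", "left", "right") in place of `closed=` (None, "left", "right"), and `closed=None` corresponds to `inclusive="both"`. The sketch below is a standalone illustration of that shim, not dask's code. The helper names `pandas_version_tuple` and `date_range_closed_kwargs` are hypothetical. It compares `>= (1, 4)` rather than testing equality with `(1, 4, 0)`, so releases after 1.4.0 also get the new keyword.

```python
import re


def pandas_version_tuple(version):
    """Parse the leading 'major.minor' of a version string such as '1.4.0rc0'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def date_range_closed_kwargs(reindex_closed, version):
    """Build the endpoint keyword for pd.date_range across pandas versions.

    Hypothetical helper: pandas >= 1.4 takes `inclusive`, older releases take
    `closed`, where None means both endpoints are kept.
    """
    if pandas_version_tuple(version) >= (1, 4):
        # Map the old "keep both ends" default (None) to its new spelling.
        inclusive = "both" if reindex_closed is None else reindex_closed
        return {"inclusive": inclusive}
    return {"closed": reindex_closed}
```

At the call site, the result would be splatted into the call, e.g. `pd.date_range(start, end, freq=rule, **date_range_closed_kwargs(reindex_closed, pd.__version__))`.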

gh_patches_debug_26197

|

rasdani/github-patches

|

git_diff

|

automl__auto-sklearn-1508

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Fix timeouts related to metalearnings tests

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `scripts/03_calculate_metafeatures.py`

Content:

```

1 from argparse import ArgumentParser

2 from collections import defaultdict, OrderedDict

3 import copy

4 import logging

5 import os

6 import sys

7 import unittest.mock

8

9 import arff

10 import joblib

11 import numpy as np

12 import pandas as pd

13

14 from autosklearn.constants import (

15 BINARY_CLASSIFICATION,

16 MULTICLASS_CLASSIFICATION,

17 REGRESSION,

18 )

19 from autosklearn.metalearning.metafeatures import metafeatures

20 from autosklearn.smbo import (

21 _calculate_metafeatures,

22 _calculate_metafeatures_encoded,

23 EXCLUDE_META_FEATURES_REGRESSION,

24 EXCLUDE_META_FEATURES_CLASSIFICATION,

25 )

26 from autosklearn.util.stopwatch import StopWatch

27

28 sys.path.append(".")

29 from update_metadata_util import load_task, classification_tasks, regression_tasks

30

31 logger = logging.getLogger("03_calculate_metafeatures")

32

33

34 def calculate_metafeatures(task_id):

35 X_train, y_train, X_test, y_test, cat, task_type, dataset_name = load_task(task_id)

36 stopwatch = StopWatch()

37

38 if task_type == "classification":

39 if len(np.unique(y_train)) == 2:

40 task_type = BINARY_CLASSIFICATION

41 else:

42 task_type = MULTICLASS_CLASSIFICATION

43 else:

44 task_type = REGRESSION

45

46 _metafeatures_labels = _calculate_metafeatures(

47 x_train=X_train,

48 y_train=y_train,

49 data_feat_type=cat,

50 data_info_task=task_type,

51 basename=dataset_name,

52 logger_=logger,

53 stopwatch=stopwatch,

54 )

55

56 _metafeatures_encoded_labels = _calculate_metafeatures_encoded(

57 x_train=X_train,

58 y_train=y_train,

59 data_feat_type=cat,

60 task=task_type,

61 basename=dataset_name,

62 logger_=logger,

63 stopwatch=stopwatch,

64 )

65

66 mf = _metafeatures_labels

67 mf.metafeature_values.update(_metafeatures_encoded_labels.metafeature_values)

68

69 return mf

70

71

72 if __name__ == "__main__":

73 parser = ArgumentParser()

74 parser.add_argument("--working-directory", type=str, required=True)

75 parser.add_argument("--memory-limit", type=int, default=3072)

76 parser.add_argument("--test-mode", action="store_true")

77

78 args = parser.parse_args()

79 working_directory = args.working_directory

80 memory_limit = args.memory_limit

81 test_mode = args.test_mode

82

83 for task_type in ("classification", "regression"):

84 output_directory = os.path.join(working_directory, "metafeatures", task_type)

85 try:

86 os.makedirs(output_directory)

87 except:

88 pass

89

90 all_metafeatures = {}

91

92 if task_type == "classification":

93 tasks = classification_tasks

94 else:

95 tasks = regression_tasks

96

97 if test_mode:

98 tasks = [tasks[0]]

99

100 tasks = copy.deepcopy(tasks)

101 np.random.shuffle(tasks)

102

103 def producer():

104 for task_id in tasks:

105 yield task_id

106

107 memory = joblib.Memory(location="/tmp/joblib", verbose=10)

108 cached_calculate_metafeatures = memory.cache(calculate_metafeatures)

109 mfs = [cached_calculate_metafeatures(task_id) for task_id in producer()]

110

111 for mf in mfs:

112 if mf is not None:

113 all_metafeatures[mf.dataset_name] = mf

114

115 # Write the calculation times as a csv file to disc (can be viewed in

116 # LibreOffice calc afterwards)

117 calculation_times = defaultdict(dict)

118 metafeature_values = defaultdict(dict)

119 helperfunction_values = defaultdict(dict)

120

121 for i, task_id in enumerate(all_metafeatures):

122 calculation_times[task_id] = dict()

123 for metafeature_name in sorted(

124 all_metafeatures[task_id].metafeature_values

125 ):

126 metafeature_value = all_metafeatures[task_id].metafeature_values[

127 metafeature_name

128 ]

129 calculation_times[task_id][metafeature_name] = metafeature_value.time

130 if metafeature_value.type_ == "HELPERFUNCTION":

131 helperfunction_values[task_id][

132 metafeature_name

133 ] = metafeature_value.value

134 else:

135 metafeature_values[task_id][

136 metafeature_name

137 ] = metafeature_value.value

138

139 calculation_times = pd.DataFrame(calculation_times).transpose()

140 calculation_times = calculation_times.sort_index()

141 with open(os.path.join(output_directory, "calculation_times.csv"), "w") as fh:

142 fh.write(calculation_times.to_csv())

143

144 # Write all metafeatures in the aslib1.0 format

145 metafeature_values = metafeature_values = pd.DataFrame(

146 metafeature_values

147 ).transpose()

148 metafeature_values = metafeature_values.sort_index()

149 arff_object = dict()

150 arff_object["attributes"] = [

151 ("instance_id", "STRING"),

152 ("repetition", "NUMERIC"),

153 ] + [("%s" % name, "NUMERIC") for name in metafeature_values.columns]

154 arff_object["relation"] = "FEATURE_VALUES"

155 arff_object["description"] = ""

156

157 data = []

158 for idx in metafeature_values.index:

159 line = [idx, 1]

160 line += [

161 value if np.isfinite(value) else None

162 for value in metafeature_values.loc[idx, :].values

163 ]

164 data.append(line)

165 arff_object["data"] = data

166

167 with open(os.path.join(output_directory, "feature_values.arff"), "w") as fh:

168 arff.dump(arff_object, fh)

169

170 # Feature steps and runtimes according to the aslib1.0 format

171 feature_steps = defaultdict(list)

172 metafeature_names = list()

173

174 exclude_metafeatures = (

175 EXCLUDE_META_FEATURES_CLASSIFICATION

176 if task_type == "classification"

177 else EXCLUDE_META_FEATURES_REGRESSION

178 )

179

180 for metafeature_name in metafeatures.metafeatures.functions:

181

182 if metafeature_name in exclude_metafeatures:

183 continue

184

185 dependency = metafeatures.metafeatures.get_dependency(metafeature_name)

186 if dependency is not None:

187 feature_steps[dependency].append(metafeature_name)

188 feature_steps[metafeature_name].append(metafeature_name)

189

190 metafeature_names.append(metafeature_name)

191

192 # Write the feature runstatus in the aslib1.0 format

193 arff_object = dict()

194 arff_object["attributes"] = [

195 ("instance_id", "STRING"),

196 ("repetition", "NUMERIC"),

197 ] + [

198 ("%s" % name, ["ok", "timeout", "memout", "presolved", "crash", "other"])

199 for name in feature_steps

200 ]

201 arff_object["relation"] = "FEATURE_RUNSTATUS"

202 arff_object["description"] = ""

203

204 data = []

205 for idx in metafeature_values.index:

206 line = [idx, 1]

207 for feature_step in feature_steps:

208 if feature_step in helperfunction_values[idx]:

209 line.append(

210 "ok"

211 if helperfunction_values[feature_step] is not None

212 else "other"

213 )

214 elif feature_step in metafeature_values.loc[idx]:

215 line.append(

216 "ok"

217 if np.isfinite(metafeature_values.loc[idx][feature_step])

218 else "other"

219 )

220 else:

221 line.append("other")

222

223 data.append(line)

224 arff_object["data"] = data

225

226 with open(os.path.join(output_directory, "feature_runstatus.arff"), "w") as fh:

227 arff.dump(arff_object, fh)

228

229 arff_object = dict()

230 arff_object["attributes"] = [

231 ("instance_id", "STRING"),

232 ("repetition", "NUMERIC"),

233 ] + [("%s" % feature_step, "NUMERIC") for feature_step in feature_steps]

234 arff_object["relation"] = "FEATURE_COSTS"

235 arff_object["description"] = ""

236

237 data = []

238 for instance_id in calculation_times.index:

239 calculation_times_per_group = dict()

240 line = [instance_id, 1]

241 for feature_step in feature_steps:

242 time_ = 0.0

243 for feature in feature_steps[feature_step]:

244 time_ += calculation_times[feature][instance_id]

245 if not np.isfinite(time_):

246 raise ValueError(

247 "Feature cost %s for instance %s and feature "

248 "step %s not finite" % (time_, instance_id, feature)

249 )

250 line.append(time_)

251 data.append(line)

252 arff_object["data"] = data

253

254 with open(os.path.join(output_directory, "feature_costs.arff"), "w") as fh:

255 arff.dump(arff_object, fh)

256

257 # Write the features part of the description.txt to a file

258 description = OrderedDict()

259 description["features_cutoff_time"] = "3600"

260 description["features_cutoff_memory"] = args.memory_limit

261 description["number_of_feature_steps"] = str(len(feature_steps))

262

263 for feature_step in feature_steps:

264 description["feature_step %s" % feature_step] = ", ".join(

265 feature_steps[feature_step]

266 )

267 description["features_deterministic"] = ", ".join(

268 [metafeature_name for metafeature_name in metafeature_names]

269 )

270 description["features_stochastic"] = ""

271 description["default_steps"] = ", ".join(feature_steps)

272

273 with open(

274 os.path.join(output_directory, "description.features.txt"), "w"

275 ) as fh:

276 for entry in description:

277 fh.write("%s: %s\n" % (entry, description[entry]))

278

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/scripts/03_calculate_metafeatures.py b/scripts/03_calculate_metafeatures.py

--- a/scripts/03_calculate_metafeatures.py

+++ b/scripts/03_calculate_metafeatures.py

@@ -5,6 +5,7 @@

import os

import sys

import unittest.mock

+import tempfile

import arff

import joblib

@@ -82,10 +83,7 @@

for task_type in ("classification", "regression"):

output_directory = os.path.join(working_directory, "metafeatures", task_type)

- try:

- os.makedirs(output_directory)

- except:

- pass

+ os.makedirs(output_directory, exist_ok=True)

all_metafeatures = {}

@@ -100,13 +98,10 @@

tasks = copy.deepcopy(tasks)

np.random.shuffle(tasks)

- def producer():

- for task_id in tasks:

- yield task_id

-

- memory = joblib.Memory(location="/tmp/joblib", verbose=10)

+ tmpdir = os.path.join(tempfile.gettempdir(), "joblib")

+ memory = joblib.Memory(location=tmpdir, verbose=10)

cached_calculate_metafeatures = memory.cache(calculate_metafeatures)

- mfs = [cached_calculate_metafeatures(task_id) for task_id in producer()]

+ mfs = [cached_calculate_metafeatures(task_id) for task_id in tasks]

for mf in mfs:

if mf is not None:

|
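The patch above makes two changes. It replaces the hard-coded `/tmp/joblib` cache path with a directory under `tempfile.gettempdir()`. It also replaces a bare `try/except` around `os.makedirs` with `exist_ok=True`. The sketch below isolates that pattern using only the standard library. The helper `portable_cache_dir` and its `name` parameter are hypothetical. The resulting path would then be passed to `joblib.Memory(location=...)`, as the patch does.

```python
import os
import tempfile


def portable_cache_dir(name="joblib"):
    """Return a cache directory under the platform's temp dir, creating it if needed.

    Hypothetical helper: the patch itself hard-codes the name "joblib".
    """
    path = os.path.join(tempfile.gettempdir(), name)
    # exist_ok=True makes repeated calls safe without a bare `except:`,
    # which would also hide unrelated failures such as permission errors.
    os.makedirs(path, exist_ok=True)
    return path
```

One design note on the old code: a bare `except: pass` silently swallows every exception. With `exist_ok=True`, only the "directory already exists" case is tolerated.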