| problem_id (string, length 18 to 22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, length 13 to 58) | prompt (string, length 1.71k to 18.9k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_19299

|

rasdani/github-patches

|

git_diff

|

bentoml__BentoML-4685

|

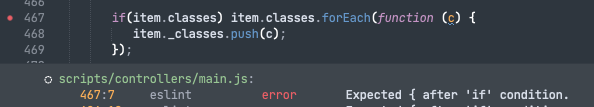

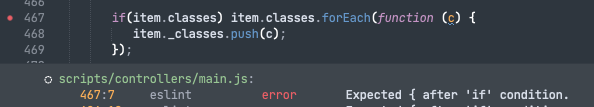

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

bug: module 'socket' has no attribute 'AF_UNIX'

### Describe the bug

Hello,

I'm trying to use Bentoml by playing with the quick start examples. When running the Iris classification example on a windows machine, I have this error message:

```

File "C:\Users\Path\lib\site-packages\uvicorn\server.py", line 140, in startup

sock = socket.fromfd(config.fd, socket.AF_UNIX, socket.SOCK_STREAM)

AttributeError: module 'socket' has no attribute 'AF_UNIX'

```

I tried to change the socket attribute to AF_INET, the error messages disappear but the client cannot connect to the bentoml server.

Thanks,

### To reproduce

_No response_

### Expected behavior

_No response_

### Environment

bentoml:1.2.12

python:3.9.18

uvicorn:0.29.0

Windows: 11 Pro 22H2

</issue>

<code>

[start of src/_bentoml_impl/worker/service.py]

1 from __future__ import annotations

2

3 import json

4 import os

5 import typing as t

6

7 import click

8

9

10 @click.command()

11 @click.argument("bento_identifier", type=click.STRING, required=False, default=".")

12 @click.option("--service-name", type=click.STRING, required=False, default="")

13 @click.option(

14 "--fd",

15 type=click.INT,

16 required=True,

17 help="File descriptor of the socket to listen on",

18 )

19 @click.option(

20 "--runner-map",

21 type=click.STRING,

22 envvar="BENTOML_RUNNER_MAP",

23 help="JSON string of runners map, default sets to envars `BENTOML_RUNNER_MAP`",

24 )

25 @click.option(

26 "--backlog", type=click.INT, default=2048, help="Backlog size for the socket"

27 )

28 @click.option(

29 "--prometheus-dir",

30 type=click.Path(exists=True),

31 help="Required by prometheus to pass the metrics in multi-process mode",

32 )

33 @click.option(

34 "--worker-env", type=click.STRING, default=None, help="Environment variables"

35 )

36 @click.option(

37 "--worker-id",

38 required=False,

39 type=click.INT,

40 default=None,

41 help="If set, start the server as a bare worker with the given worker ID. Otherwise start a standalone server with a supervisor process.",

42 )

43 @click.option(

44 "--ssl-certfile",

45 type=str,

46 default=None,

47 help="SSL certificate file",

48 )

49 @click.option(

50 "--ssl-keyfile",

51 type=str,

52 default=None,

53 help="SSL key file",

54 )

55 @click.option(

56 "--ssl-keyfile-password",

57 type=str,

58 default=None,

59 help="SSL keyfile password",

60 )

61 @click.option(

62 "--ssl-version",

63 type=int,

64 default=None,

65 help="SSL version to use (see stdlib 'ssl' module)",

66 )

67 @click.option(

68 "--ssl-cert-reqs",

69 type=int,

70 default=None,

71 help="Whether client certificate is required (see stdlib 'ssl' module)",

72 )

73 @click.option(

74 "--ssl-ca-certs",

75 type=str,

76 default=None,

77 help="CA certificates file",

78 )

79 @click.option(

80 "--ssl-ciphers",

81 type=str,

82 default=None,

83 help="Ciphers to use (see stdlib 'ssl' module)",

84 )

85 @click.option(

86 "--development-mode",

87 type=click.BOOL,

88 help="Run the API server in development mode",

89 is_flag=True,

90 default=False,

91 show_default=True,

92 )

93 @click.option(

94 "--timeout",

95 type=click.INT,

96 help="Specify the timeout for API server",

97 )

98 def main(

99 bento_identifier: str,

100 service_name: str,

101 fd: int,

102 runner_map: str | None,

103 backlog: int,

104 worker_env: str | None,

105 worker_id: int | None,

106 prometheus_dir: str | None,

107 ssl_certfile: str | None,

108 ssl_keyfile: str | None,

109 ssl_keyfile_password: str | None,

110 ssl_version: int | None,

111 ssl_cert_reqs: int | None,

112 ssl_ca_certs: str | None,

113 ssl_ciphers: str | None,

114 development_mode: bool,

115 timeout: int,

116 ):

117 """

118 Start a HTTP server worker for given service.

119 """

120 import psutil

121 import uvicorn

122

123 if worker_env:

124 env_list: list[dict[str, t.Any]] = json.loads(worker_env)

125 if worker_id is not None:

126 # worker id from circus starts from 1

127 worker_key = worker_id - 1

128 if worker_key >= len(env_list):

129 raise IndexError(

130 f"Worker ID {worker_id} is out of range, "

131 f"the maximum worker ID is {len(env_list)}"

132 )

133 os.environ.update(env_list[worker_key])

134

135 from _bentoml_impl.loader import import_service

136 from bentoml._internal.container import BentoMLContainer

137 from bentoml._internal.context import server_context

138 from bentoml._internal.log import configure_server_logging

139

140 if runner_map:

141 BentoMLContainer.remote_runner_mapping.set(

142 t.cast(t.Dict[str, str], json.loads(runner_map))

143 )

144

145 service = import_service(bento_identifier)

146

147 if service_name and service_name != service.name:

148 service = service.find_dependent(service_name)

149 server_context.service_type = "service"

150 else:

151 server_context.service_type = "entry_service"

152

153 if worker_id is not None:

154 server_context.worker_index = worker_id

155

156 configure_server_logging()

157 BentoMLContainer.development_mode.set(development_mode)

158

159 if prometheus_dir is not None:

160 BentoMLContainer.prometheus_multiproc_dir.set(prometheus_dir)

161 server_context.service_name = service.name

162

163 asgi_app = service.to_asgi(

164 is_main=server_context.service_type == "entry_service", init=False

165 )

166

167 uvicorn_extra_options: dict[str, t.Any] = {}

168 if ssl_version is not None:

169 uvicorn_extra_options["ssl_version"] = ssl_version

170 if ssl_cert_reqs is not None:

171 uvicorn_extra_options["ssl_cert_reqs"] = ssl_cert_reqs

172 if ssl_ciphers is not None:

173 uvicorn_extra_options["ssl_ciphers"] = ssl_ciphers

174

175 if psutil.WINDOWS:

176 # 1. uvloop is not supported on Windows

177 # 2. the default policy for Python > 3.8 on Windows is ProactorEventLoop, which doesn't

178 # support listen on a existing socket file descriptors

179 # See https://docs.python.org/3.8/library/asyncio-platforms.html#windows

180 uvicorn_extra_options["loop"] = "asyncio"

181 import asyncio

182

183 asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy()) # type: ignore

184

185 uvicorn.run(

186 app=asgi_app,

187 fd=fd,

188 backlog=backlog,

189 log_config=None,

190 workers=1,

191 ssl_certfile=ssl_certfile,

192 ssl_keyfile=ssl_keyfile,

193 ssl_keyfile_password=ssl_keyfile_password,

194 ssl_ca_certs=ssl_ca_certs,

195 server_header=False,

196 **uvicorn_extra_options,

197 )

198

199

200 if __name__ == "__main__":

201 main() # pylint: disable=no-value-for-parameter

202

[end of src/_bentoml_impl/worker/service.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/src/_bentoml_impl/worker/service.py b/src/_bentoml_impl/worker/service.py

--- a/src/_bentoml_impl/worker/service.py

+++ b/src/_bentoml_impl/worker/service.py

@@ -117,6 +117,8 @@

"""

Start a HTTP server worker for given service.

"""

+ import socket

+

import psutil

import uvicorn

@@ -182,9 +184,8 @@

asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy()) # type: ignore

- uvicorn.run(

+ config = uvicorn.Config(

app=asgi_app,

- fd=fd,

backlog=backlog,

log_config=None,

workers=1,

@@ -195,6 +196,8 @@

server_header=False,

**uvicorn_extra_options,

)

+ socket = socket.socket(fileno=fd)

+ uvicorn.Server(config).run(sockets=[socket])

if __name__ == "__main__":

|

{"golden_diff": "diff --git a/src/_bentoml_impl/worker/service.py b/src/_bentoml_impl/worker/service.py\n--- a/src/_bentoml_impl/worker/service.py\n+++ b/src/_bentoml_impl/worker/service.py\n@@ -117,6 +117,8 @@\n \"\"\"\n Start a HTTP server worker for given service.\n \"\"\"\n+ import socket\n+\n import psutil\n import uvicorn\n \n@@ -182,9 +184,8 @@\n \n asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy()) # type: ignore\n \n- uvicorn.run(\n+ config = uvicorn.Config(\n app=asgi_app,\n- fd=fd,\n backlog=backlog,\n log_config=None,\n workers=1,\n@@ -195,6 +196,8 @@\n server_header=False,\n **uvicorn_extra_options,\n )\n+ socket = socket.socket(fileno=fd)\n+ uvicorn.Server(config).run(sockets=[socket])\n \n \n if __name__ == \"__main__\":\n", "issue": "bug: module 'socket' has no attribute 'AF_UNIX'\n### Describe the bug\r\n\r\nHello,\r\nI'm trying to use Bentoml by playing with the quick start examples. When running the Iris classification example on a windows machine, I have this error message:\r\n```\r\nFile \"C:\\Users\\Path\\lib\\site-packages\\uvicorn\\server.py\", line 140, in startup\r\n sock = socket.fromfd(config.fd, socket.AF_UNIX, socket.SOCK_STREAM)\r\nAttributeError: module 'socket' has no attribute 'AF_UNIX'\r\n```\r\n\r\nI tried to change the socket attribute to AF_INET, the error messages disappear but the client cannot connect to the bentoml server.\r\n\r\nThanks,\r\n\r\n\r\n### To reproduce\r\n\r\n_No response_\r\n\r\n### Expected behavior\r\n\r\n_No response_\r\n\r\n### Environment\r\n\r\nbentoml:1.2.12\r\npython:3.9.18\r\nuvicorn:0.29.0\r\nWindows: 11 Pro 22H2\n", "before_files": [{"content": "from __future__ import annotations\n\nimport json\nimport os\nimport typing as t\n\nimport click\n\n\n@click.command()\n@click.argument(\"bento_identifier\", type=click.STRING, required=False, default=\".\")\n@click.option(\"--service-name\", type=click.STRING, required=False, default=\"\")\n@click.option(\n \"--fd\",\n type=click.INT,\n 
required=True,\n help=\"File descriptor of the socket to listen on\",\n)\n@click.option(\n \"--runner-map\",\n type=click.STRING,\n envvar=\"BENTOML_RUNNER_MAP\",\n help=\"JSON string of runners map, default sets to envars `BENTOML_RUNNER_MAP`\",\n)\n@click.option(\n \"--backlog\", type=click.INT, default=2048, help=\"Backlog size for the socket\"\n)\n@click.option(\n \"--prometheus-dir\",\n type=click.Path(exists=True),\n help=\"Required by prometheus to pass the metrics in multi-process mode\",\n)\n@click.option(\n \"--worker-env\", type=click.STRING, default=None, help=\"Environment variables\"\n)\n@click.option(\n \"--worker-id\",\n required=False,\n type=click.INT,\n default=None,\n help=\"If set, start the server as a bare worker with the given worker ID. Otherwise start a standalone server with a supervisor process.\",\n)\n@click.option(\n \"--ssl-certfile\",\n type=str,\n default=None,\n help=\"SSL certificate file\",\n)\n@click.option(\n \"--ssl-keyfile\",\n type=str,\n default=None,\n help=\"SSL key file\",\n)\n@click.option(\n \"--ssl-keyfile-password\",\n type=str,\n default=None,\n help=\"SSL keyfile password\",\n)\n@click.option(\n \"--ssl-version\",\n type=int,\n default=None,\n help=\"SSL version to use (see stdlib 'ssl' module)\",\n)\n@click.option(\n \"--ssl-cert-reqs\",\n type=int,\n default=None,\n help=\"Whether client certificate is required (see stdlib 'ssl' module)\",\n)\n@click.option(\n \"--ssl-ca-certs\",\n type=str,\n default=None,\n help=\"CA certificates file\",\n)\n@click.option(\n \"--ssl-ciphers\",\n type=str,\n default=None,\n help=\"Ciphers to use (see stdlib 'ssl' module)\",\n)\n@click.option(\n \"--development-mode\",\n type=click.BOOL,\n help=\"Run the API server in development mode\",\n is_flag=True,\n default=False,\n show_default=True,\n)\n@click.option(\n \"--timeout\",\n type=click.INT,\n help=\"Specify the timeout for API server\",\n)\ndef main(\n bento_identifier: str,\n service_name: str,\n fd: int,\n runner_map: str | 
None,\n backlog: int,\n worker_env: str | None,\n worker_id: int | None,\n prometheus_dir: str | None,\n ssl_certfile: str | None,\n ssl_keyfile: str | None,\n ssl_keyfile_password: str | None,\n ssl_version: int | None,\n ssl_cert_reqs: int | None,\n ssl_ca_certs: str | None,\n ssl_ciphers: str | None,\n development_mode: bool,\n timeout: int,\n):\n \"\"\"\n Start a HTTP server worker for given service.\n \"\"\"\n import psutil\n import uvicorn\n\n if worker_env:\n env_list: list[dict[str, t.Any]] = json.loads(worker_env)\n if worker_id is not None:\n # worker id from circus starts from 1\n worker_key = worker_id - 1\n if worker_key >= len(env_list):\n raise IndexError(\n f\"Worker ID {worker_id} is out of range, \"\n f\"the maximum worker ID is {len(env_list)}\"\n )\n os.environ.update(env_list[worker_key])\n\n from _bentoml_impl.loader import import_service\n from bentoml._internal.container import BentoMLContainer\n from bentoml._internal.context import server_context\n from bentoml._internal.log import configure_server_logging\n\n if runner_map:\n BentoMLContainer.remote_runner_mapping.set(\n t.cast(t.Dict[str, str], json.loads(runner_map))\n )\n\n service = import_service(bento_identifier)\n\n if service_name and service_name != service.name:\n service = service.find_dependent(service_name)\n server_context.service_type = \"service\"\n else:\n server_context.service_type = \"entry_service\"\n\n if worker_id is not None:\n server_context.worker_index = worker_id\n\n configure_server_logging()\n BentoMLContainer.development_mode.set(development_mode)\n\n if prometheus_dir is not None:\n BentoMLContainer.prometheus_multiproc_dir.set(prometheus_dir)\n server_context.service_name = service.name\n\n asgi_app = service.to_asgi(\n is_main=server_context.service_type == \"entry_service\", init=False\n )\n\n uvicorn_extra_options: dict[str, t.Any] = {}\n if ssl_version is not None:\n uvicorn_extra_options[\"ssl_version\"] = ssl_version\n if ssl_cert_reqs is not None:\n 
uvicorn_extra_options[\"ssl_cert_reqs\"] = ssl_cert_reqs\n if ssl_ciphers is not None:\n uvicorn_extra_options[\"ssl_ciphers\"] = ssl_ciphers\n\n if psutil.WINDOWS:\n # 1. uvloop is not supported on Windows\n # 2. the default policy for Python > 3.8 on Windows is ProactorEventLoop, which doesn't\n # support listen on a existing socket file descriptors\n # See https://docs.python.org/3.8/library/asyncio-platforms.html#windows\n uvicorn_extra_options[\"loop\"] = \"asyncio\"\n import asyncio\n\n asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy()) # type: ignore\n\n uvicorn.run(\n app=asgi_app,\n fd=fd,\n backlog=backlog,\n log_config=None,\n workers=1,\n ssl_certfile=ssl_certfile,\n ssl_keyfile=ssl_keyfile,\n ssl_keyfile_password=ssl_keyfile_password,\n ssl_ca_certs=ssl_ca_certs,\n server_header=False,\n **uvicorn_extra_options,\n )\n\n\nif __name__ == \"__main__\":\n main() # pylint: disable=no-value-for-parameter\n", "path": "src/_bentoml_impl/worker/service.py"}]}

| 2,647 | 238 |
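The traceback in the BentoML row fails because uvicorn's `fd=` path calls `socket.fromfd(config.fd, socket.AF_UNIX, ...)`, and `AF_UNIX` is missing from the Windows build of the stdlib `socket` module. The golden diff sidesteps this with `socket.socket(fileno=fd)`, which (per the Python docs, since 3.7) auto-detects family and type from the descriptor, and then hands the socket to `uvicorn.Server(config).run(sockets=[...])`. The stdlib-only sketch below illustrates just the descriptor-wrapping step. The helper name `socket_from_inherited_fd` and the simulated supervisor are hypothetical; uvicorn is deliberately left out so the snippet runs on its own.

```python
import socket


def socket_from_inherited_fd(fd: int) -> socket.socket:
    """Wrap an already-open socket descriptor without naming its family.

    socket.fromfd() needs the family spelled out (uvicorn hard-codes
    AF_UNIX there). Passing fileno= to socket.socket() lets Python
    detect the family and type from the descriptor itself.
    """
    return socket.socket(fileno=fd)


# Hypothetical supervisor: bind a TCP listener, then give up ownership of
# its descriptor (detach() returns the fd without closing it), as a
# process manager would before handing the fd to a worker.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen()
worker_sock = socket_from_inherited_fd(listener.detach())
print(worker_sock.family, worker_sock.getsockname())
worker_sock.close()
```

One design note: the golden diff rebinds the local name `socket` to the new socket object (`socket = socket.socket(fileno=fd)`). That works because the right-hand side is evaluated while `socket` still refers to the module, but a distinct variable name avoids shadowing the module.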

gh_patches_debug_18185

|

rasdani/github-patches

|

git_diff

|

mozilla__bugbug-214

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Use the bug snapshot transform in the "uplift" model

Depends on #5.

</issue>

<code>

[start of bugbug/models/uplift.py]

1 # -*- coding: utf-8 -*-

2 # This Source Code Form is subject to the terms of the Mozilla Public

3 # License, v. 2.0. If a copy of the MPL was not distributed with this file,

4 # You can obtain one at http://mozilla.org/MPL/2.0/.

5

6 import xgboost

7 from imblearn.under_sampling import RandomUnderSampler

8 from sklearn.compose import ColumnTransformer

9 from sklearn.feature_extraction import DictVectorizer

10 from sklearn.pipeline import Pipeline

11

12 from bugbug import bug_features

13 from bugbug import bugzilla

14 from bugbug.model import Model

15

16

17 class UpliftModel(Model):

18 def __init__(self, lemmatization=False):

19 Model.__init__(self, lemmatization)

20

21 self.sampler = RandomUnderSampler(random_state=0)

22

23 feature_extractors = [

24 bug_features.has_str(),

25 bug_features.has_regression_range(),

26 bug_features.severity(),

27 bug_features.keywords(),

28 bug_features.is_coverity_issue(),

29 bug_features.has_crash_signature(),

30 bug_features.has_url(),

31 bug_features.has_w3c_url(),

32 bug_features.has_github_url(),

33 bug_features.whiteboard(),

34 bug_features.patches(),

35 bug_features.landings(),

36 bug_features.title(),

37 ]

38

39 cleanup_functions = [

40 bug_features.cleanup_fileref,

41 bug_features.cleanup_url,

42 bug_features.cleanup_synonyms,

43 ]

44

45 self.extraction_pipeline = Pipeline([

46 ('bug_extractor', bug_features.BugExtractor(feature_extractors, cleanup_functions)),

47 ('union', ColumnTransformer([

48 ('data', DictVectorizer(), 'data'),

49

50 ('title', self.text_vectorizer(), 'title'),

51

52 ('comments', self.text_vectorizer(), 'comments'),

53 ])),

54 ])

55

56 self.clf = xgboost.XGBClassifier(n_jobs=16)

57 self.clf.set_params(predictor='cpu_predictor')

58

59 def get_labels(self):

60 classes = {}

61

62 for bug_data in bugzilla.get_bugs():

63 bug_id = int(bug_data['id'])

64

65 for attachment in bug_data['attachments']:

66 for flag in attachment['flags']:

67 if not flag['name'].startswith('approval-mozilla-') or flag['status'] not in ['+', '-']:

68 continue

69

70 if flag['status'] == '+':

71 classes[bug_id] = 1

72 elif flag['status'] == '-':

73 classes[bug_id] = 0

74

75 return classes

76

77 def get_feature_names(self):

78 return self.extraction_pipeline.named_steps['union'].get_feature_names()

79

[end of bugbug/models/uplift.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/bugbug/models/uplift.py b/bugbug/models/uplift.py

--- a/bugbug/models/uplift.py

+++ b/bugbug/models/uplift.py

@@ -43,7 +43,7 @@

]

self.extraction_pipeline = Pipeline([

- ('bug_extractor', bug_features.BugExtractor(feature_extractors, cleanup_functions)),

+ ('bug_extractor', bug_features.BugExtractor(feature_extractors, cleanup_functions, rollback=True, rollback_when=self.rollback)),

('union', ColumnTransformer([

('data', DictVectorizer(), 'data'),

@@ -56,6 +56,9 @@

self.clf = xgboost.XGBClassifier(n_jobs=16)

self.clf.set_params(predictor='cpu_predictor')

+ def rollback(self, change):

+ return (change['field_name'] == 'flagtypes.name' and change['added'].startswith('approval-mozilla-') and (change['added'].endswith('+') or change['added'].endswith('-')))

+

def get_labels(self):

classes = {}

|

{"golden_diff": "diff --git a/bugbug/models/uplift.py b/bugbug/models/uplift.py\n--- a/bugbug/models/uplift.py\n+++ b/bugbug/models/uplift.py\n@@ -43,7 +43,7 @@\n ]\n \n self.extraction_pipeline = Pipeline([\n- ('bug_extractor', bug_features.BugExtractor(feature_extractors, cleanup_functions)),\n+ ('bug_extractor', bug_features.BugExtractor(feature_extractors, cleanup_functions, rollback=True, rollback_when=self.rollback)),\n ('union', ColumnTransformer([\n ('data', DictVectorizer(), 'data'),\n \n@@ -56,6 +56,9 @@\n self.clf = xgboost.XGBClassifier(n_jobs=16)\n self.clf.set_params(predictor='cpu_predictor')\n \n+ def rollback(self, change):\n+ return (change['field_name'] == 'flagtypes.name' and change['added'].startswith('approval-mozilla-') and (change['added'].endswith('+') or change['added'].endswith('-')))\n+\n def get_labels(self):\n classes = {}\n", "issue": "Use the bug snapshot transform in the \"uplift\" model\nDepends on #5.\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n# This Source Code Form is subject to the terms of the Mozilla Public\n# License, v. 2.0. 
If a copy of the MPL was not distributed with this file,\n# You can obtain one at http://mozilla.org/MPL/2.0/.\n\nimport xgboost\nfrom imblearn.under_sampling import RandomUnderSampler\nfrom sklearn.compose import ColumnTransformer\nfrom sklearn.feature_extraction import DictVectorizer\nfrom sklearn.pipeline import Pipeline\n\nfrom bugbug import bug_features\nfrom bugbug import bugzilla\nfrom bugbug.model import Model\n\n\nclass UpliftModel(Model):\n def __init__(self, lemmatization=False):\n Model.__init__(self, lemmatization)\n\n self.sampler = RandomUnderSampler(random_state=0)\n\n feature_extractors = [\n bug_features.has_str(),\n bug_features.has_regression_range(),\n bug_features.severity(),\n bug_features.keywords(),\n bug_features.is_coverity_issue(),\n bug_features.has_crash_signature(),\n bug_features.has_url(),\n bug_features.has_w3c_url(),\n bug_features.has_github_url(),\n bug_features.whiteboard(),\n bug_features.patches(),\n bug_features.landings(),\n bug_features.title(),\n ]\n\n cleanup_functions = [\n bug_features.cleanup_fileref,\n bug_features.cleanup_url,\n bug_features.cleanup_synonyms,\n ]\n\n self.extraction_pipeline = Pipeline([\n ('bug_extractor', bug_features.BugExtractor(feature_extractors, cleanup_functions)),\n ('union', ColumnTransformer([\n ('data', DictVectorizer(), 'data'),\n\n ('title', self.text_vectorizer(), 'title'),\n\n ('comments', self.text_vectorizer(), 'comments'),\n ])),\n ])\n\n self.clf = xgboost.XGBClassifier(n_jobs=16)\n self.clf.set_params(predictor='cpu_predictor')\n\n def get_labels(self):\n classes = {}\n\n for bug_data in bugzilla.get_bugs():\n bug_id = int(bug_data['id'])\n\n for attachment in bug_data['attachments']:\n for flag in attachment['flags']:\n if not flag['name'].startswith('approval-mozilla-') or flag['status'] not in ['+', '-']:\n continue\n\n if flag['status'] == '+':\n classes[bug_id] = 1\n elif flag['status'] == '-':\n classes[bug_id] = 0\n\n return classes\n\n def get_feature_names(self):\n 
return self.extraction_pipeline.named_steps['union'].get_feature_names()\n", "path": "bugbug/models/uplift.py"}]}

| 1,249 | 231 |
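In the bugbug row, the golden diff builds `BugExtractor(..., rollback=True, rollback_when=self.rollback)`. The predicate it passes flags a Bugzilla history change that sets an `approval-mozilla-*` flag to `+` or `-`. This presumably lets the extractor roll each bug back to a snapshot taken before the uplift decision, so the features do not leak the label. The standalone sketch below reproduces only that predicate. The name `is_uplift_decision` and the sample history entries are hypothetical; the dict keys (`field_name`, `added`) are the ones the golden diff reads.

```python
def is_uplift_decision(change: dict) -> bool:
    """Return True if a history change records an uplift approval or denial.

    Mirrors the golden-diff predicate: the change must touch the
    "flagtypes.name" field and add an "approval-mozilla-*" flag ending in
    "+" (approved) or "-" (denied). Pending "?" requests do not match.
    """
    added = change.get("added", "")
    return (
        change.get("field_name") == "flagtypes.name"
        and added.startswith("approval-mozilla-")
        and added.endswith(("+", "-"))
    )


history = [
    {"field_name": "flagtypes.name", "added": "approval-mozilla-beta+"},
    {"field_name": "flagtypes.name", "added": "approval-mozilla-release?"},
    {"field_name": "status", "added": "RESOLVED"},
]
print([is_uplift_decision(c) for c in history])  # [True, False, False]
```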

gh_patches_debug_35982

|

rasdani/github-patches

|

git_diff

|

open-telemetry__opentelemetry-python-contrib-260

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

gRPC server instrumentation creates multiple traces on streaming requests

**Environment**

Current `master` code, basically the sample code in the documentation, testing with a unary request vs. a streaming request.

**Steps to reproduce**

Create a simple gRPC servicer with two RPCs, one which returns a single message (the unary response), and one which yields items in a list for a streaming response.

The key here is to make an instrumented request within the primary request handler (I'm using a simple HTTP get with the Requests instrumentation), so you get an _additional_ span which should be attached to the same trace.

**What is the expected behavior?**

A single trace with the main span, and a second child span for the HTTP request.

**What is the actual behavior?**

Two separate traces, each containing a single span.

**Additional context**

The problem _only_ occurs on streaming requests - I'm sure the reworking I did as part of https://github.com/open-telemetry/opentelemetry-python/pull/1171 is where the problem started, I didn't take into account the streaming case specifically with multiple spans, and naturally, there are no tests for anything streaming, only unary responses.

So as part of this, we'll need some useful tests as well. I'll see if I can write up my test case as an actual test case.

And again, I've got a vested interest in this working, so I'll have a PR up soon.

</issue>

<code>

[start of instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py]

1 # Copyright The OpenTelemetry Authors

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 # pylint:disable=relative-beyond-top-level

16 # pylint:disable=arguments-differ

17 # pylint:disable=no-member

18 # pylint:disable=signature-differs

19

20 """

21 Implementation of the service-side open-telemetry interceptor.

22 """

23

24 import logging

25 from contextlib import contextmanager

26

27 import grpc

28

29 from opentelemetry import propagators, trace

30 from opentelemetry.context import attach, detach

31 from opentelemetry.trace.propagation.textmap import DictGetter

32 from opentelemetry.trace.status import Status, StatusCode

33

34 logger = logging.getLogger(__name__)

35

36

37 # wrap an RPC call

38 # see https://github.com/grpc/grpc/issues/18191

39 def _wrap_rpc_behavior(handler, continuation):

40 if handler is None:

41 return None

42

43 if handler.request_streaming and handler.response_streaming:

44 behavior_fn = handler.stream_stream

45 handler_factory = grpc.stream_stream_rpc_method_handler

46 elif handler.request_streaming and not handler.response_streaming:

47 behavior_fn = handler.stream_unary

48 handler_factory = grpc.stream_unary_rpc_method_handler

49 elif not handler.request_streaming and handler.response_streaming:

50 behavior_fn = handler.unary_stream

51 handler_factory = grpc.unary_stream_rpc_method_handler

52 else:

53 behavior_fn = handler.unary_unary

54 handler_factory = grpc.unary_unary_rpc_method_handler

55

56 return handler_factory(

57 continuation(

58 behavior_fn, handler.request_streaming, handler.response_streaming

59 ),

60 request_deserializer=handler.request_deserializer,

61 response_serializer=handler.response_serializer,

62 )

63

64

65 # pylint:disable=abstract-method

66 class _OpenTelemetryServicerContext(grpc.ServicerContext):

67 def __init__(self, servicer_context, active_span):

68 self._servicer_context = servicer_context

69 self._active_span = active_span

70 self.code = grpc.StatusCode.OK

71 self.details = None

72 super().__init__()

73

74 def is_active(self, *args, **kwargs):

75 return self._servicer_context.is_active(*args, **kwargs)

76

77 def time_remaining(self, *args, **kwargs):

78 return self._servicer_context.time_remaining(*args, **kwargs)

79

80 def cancel(self, *args, **kwargs):

81 return self._servicer_context.cancel(*args, **kwargs)

82

83 def add_callback(self, *args, **kwargs):

84 return self._servicer_context.add_callback(*args, **kwargs)

85

86 def disable_next_message_compression(self):

87 return self._service_context.disable_next_message_compression()

88

89 def invocation_metadata(self, *args, **kwargs):

90 return self._servicer_context.invocation_metadata(*args, **kwargs)

91

92 def peer(self):

93 return self._servicer_context.peer()

94

95 def peer_identities(self):

96 return self._servicer_context.peer_identities()

97

98 def peer_identity_key(self):

99 return self._servicer_context.peer_identity_key()

100

101 def auth_context(self):

102 return self._servicer_context.auth_context()

103

104 def set_compression(self, compression):

105 return self._servicer_context.set_compression(compression)

106

107 def send_initial_metadata(self, *args, **kwargs):

108 return self._servicer_context.send_initial_metadata(*args, **kwargs)

109

110 def set_trailing_metadata(self, *args, **kwargs):

111 return self._servicer_context.set_trailing_metadata(*args, **kwargs)

112

113 def abort(self, code, details):

114 self.code = code

115 self.details = details

116 self._active_span.set_attribute("rpc.grpc.status_code", code.value[0])

117 self._active_span.set_status(

118             Status(
119                 status_code=StatusCode.ERROR,
120                 description="{}:{}".format(code, details),
121             )
122         )
123         return self._servicer_context.abort(code, details)
124
125     def abort_with_status(self, status):
126         return self._servicer_context.abort_with_status(status)
127
128     def set_code(self, code):
129         self.code = code
130         # use details if we already have it, otherwise the status description
131         details = self.details or code.value[1]
132         self._active_span.set_attribute("rpc.grpc.status_code", code.value[0])
133         if code != grpc.StatusCode.OK:
134             self._active_span.set_status(
135                 Status(
136                     status_code=StatusCode.ERROR,
137                     description="{}:{}".format(code, details),
138                 )
139             )
140         return self._servicer_context.set_code(code)
141
142     def set_details(self, details):
143         self.details = details
144         if self.code != grpc.StatusCode.OK:
145             self._active_span.set_status(
146                 Status(
147                     status_code=StatusCode.ERROR,
148                     description="{}:{}".format(self.code, details),
149                 )
150             )
151         return self._servicer_context.set_details(details)
152
153
154 # pylint:disable=abstract-method
155 # pylint:disable=no-self-use
156 # pylint:disable=unused-argument
157 class OpenTelemetryServerInterceptor(grpc.ServerInterceptor):
158     """
159     A gRPC server interceptor, to add OpenTelemetry.
160
161     Usage::
162
163         tracer = some OpenTelemetry tracer
164
165         interceptors = [
166             OpenTelemetryServerInterceptor(tracer),
167         ]
168
169         server = grpc.server(
170             futures.ThreadPoolExecutor(max_workers=concurrency),
171             interceptors = interceptors)
172
173     """
174
175     def __init__(self, tracer):
176         self._tracer = tracer
177         self._carrier_getter = DictGetter()
178
179     @contextmanager
180     def _set_remote_context(self, servicer_context):
181         metadata = servicer_context.invocation_metadata()
182         if metadata:
183             md_dict = {md.key: md.value for md in metadata}
184             ctx = propagators.extract(self._carrier_getter, md_dict)
185             token = attach(ctx)
186             try:
187                 yield
188             finally:
189                 detach(token)
190         else:
191             yield
192
193     def _start_span(self, handler_call_details, context):
194
195         # standard attributes
196         attributes = {
197             "rpc.system": "grpc",
198             "rpc.grpc.status_code": grpc.StatusCode.OK.value[0],
199         }
200
201         # if we have details about the call, split into service and method
202         if handler_call_details.method:
203             service, method = handler_call_details.method.lstrip("/").split(
204                 "/", 1
205             )
206             attributes.update({"rpc.method": method, "rpc.service": service})
207
208         # add some attributes from the metadata
209         metadata = dict(context.invocation_metadata())
210         if "user-agent" in metadata:
211             attributes["rpc.user_agent"] = metadata["user-agent"]
212
213         # Split up the peer to keep with how other telemetry sources
214         # do it. This looks like:
215         # * ipv6:[::1]:57284
216         # * ipv4:127.0.0.1:57284
217         # * ipv4:10.2.1.1:57284,127.0.0.1:57284
218         #
219         try:
220             ip, port = (
221                 context.peer().split(",")[0].split(":", 1)[1].rsplit(":", 1)
222             )
223             attributes.update({"net.peer.ip": ip, "net.peer.port": port})
224
225             # other telemetry sources add this, so we will too
226             if ip in ("[::1]", "127.0.0.1"):
227                 attributes["net.peer.name"] = "localhost"
228
229         except IndexError:
230             logger.warning("Failed to parse peer address '%s'", context.peer())
231
232         return self._tracer.start_as_current_span(
233             name=handler_call_details.method,
234             kind=trace.SpanKind.SERVER,
235             attributes=attributes,
236         )
237
238     def intercept_service(self, continuation, handler_call_details):
239         def telemetry_wrapper(behavior, request_streaming, response_streaming):
240             def telemetry_interceptor(request_or_iterator, context):
241
242                 with self._set_remote_context(context):
243                     with self._start_span(
244                         handler_call_details, context
245                     ) as span:
246                         # wrap the context
247                         context = _OpenTelemetryServicerContext(context, span)
248
249                         # And now we run the actual RPC.
250                         try:
251                             return behavior(request_or_iterator, context)
252                         except Exception as error:
253                             # Bare exceptions are likely to be gRPC aborts, which
254                             # we handle in our context wrapper.
255                             # Here, we're interested in uncaught exceptions.
256                             # pylint:disable=unidiomatic-typecheck
257                             if type(error) != Exception:
258                                 span.record_exception(error)
259                             raise error
260
261             return telemetry_interceptor
262
263         return _wrap_rpc_behavior(
264             continuation(handler_call_details), telemetry_wrapper
265         )
266
[end of instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py]

</code>
I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.
<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py b/instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py
--- a/instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py
+++ b/instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py
@@ -239,6 +239,15 @@
         def telemetry_wrapper(behavior, request_streaming, response_streaming):
             def telemetry_interceptor(request_or_iterator, context):
 
+                # handle streaming responses specially
+                if response_streaming:
+                    return self._intercept_server_stream(
+                        behavior,
+                        handler_call_details,
+                        request_or_iterator,
+                        context,
+                    )
+
                 with self._set_remote_context(context):
                     with self._start_span(
                         handler_call_details, context
@@ -249,6 +258,7 @@
                         # And now we run the actual RPC.
                         try:
                             return behavior(request_or_iterator, context)
+
                         except Exception as error:
                             # Bare exceptions are likely to be gRPC aborts, which
                             # we handle in our context wrapper.
@@ -263,3 +273,23 @@
         return _wrap_rpc_behavior(
             continuation(handler_call_details), telemetry_wrapper
         )
+
+    # Handle streaming responses separately - we have to do this
+    # to return a *new* generator or various upstream things
+    # get confused, or we'll lose the consistent trace
+    def _intercept_server_stream(
+        self, behavior, handler_call_details, request_or_iterator, context
+    ):
+
+        with self._set_remote_context(context):
+            with self._start_span(handler_call_details, context) as span:
+                context = _OpenTelemetryServicerContext(context, span)
+
+                try:
+                    yield from behavior(request_or_iterator, context)
+
+                except Exception as error:
+                    # pylint:disable=unidiomatic-typecheck
+                    if type(error) != Exception:
+                        span.record_exception(error)
+                    raise error

|

{"golden_diff": "diff --git a/instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py b/instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py\n--- a/instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py\n+++ b/instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py\n@@ -239,6 +239,15 @@\n def telemetry_wrapper(behavior, request_streaming, response_streaming):\n def telemetry_interceptor(request_or_iterator, context):\n \n+ # handle streaming responses specially\n+ if response_streaming:\n+ return self._intercept_server_stream(\n+ behavior,\n+ handler_call_details,\n+ request_or_iterator,\n+ context,\n+ )\n+\n with self._set_remote_context(context):\n with self._start_span(\n handler_call_details, context\n@@ -249,6 +258,7 @@\n # And now we run the actual RPC.\n try:\n return behavior(request_or_iterator, context)\n+\n except Exception as error:\n # Bare exceptions are likely to be gRPC aborts, which\n # we handle in our context wrapper.\n@@ -263,3 +273,23 @@\n return _wrap_rpc_behavior(\n continuation(handler_call_details), telemetry_wrapper\n )\n+\n+ # Handle streaming responses separately - we have to do this\n+ # to return a *new* generator or various upstream things\n+ # get confused, or we'll lose the consistent trace\n+ def _intercept_server_stream(\n+ self, behavior, handler_call_details, request_or_iterator, context\n+ ):\n+\n+ with self._set_remote_context(context):\n+ with self._start_span(handler_call_details, context) as span:\n+ context = _OpenTelemetryServicerContext(context, span)\n+\n+ try:\n+ yield from behavior(request_or_iterator, context)\n+\n+ except Exception as error:\n+ # pylint:disable=unidiomatic-typecheck\n+ if type(error) != Exception:\n+ span.record_exception(error)\n+ raise error\n", "issue": "gRPC server instrumentation creates multiple traces on 
streaming requests\n**Environment**\r\nCurrent `master` code, basically the sample code in the documentation, testing with a unary request vs. a streaming request.\r\n\r\n**Steps to reproduce**\r\nCreate a simple gRPC servicer with two RPCs, one which returns a single message (the unary response), and one which yields items in a list for a streaming response.\r\n\r\nThe key here is to make an instrumented request within the primary request handler (I'm using a simple HTTP get with the Requests instrumentation), so you get an _additional_ span which should be attached to the same trace.\r\n\r\n**What is the expected behavior?**\r\nA single trace with the main span, and a second child span for the HTTP request.\r\n\r\n**What is the actual behavior?**\r\nTwo separate traces, each containing a single span.\r\n\r\n**Additional context**\r\nThe problem _only_ occurs on streaming requests - I'm sure the reworking I did as part of https://github.com/open-telemetry/opentelemetry-python/pull/1171 is where the problem started, I didn't take into account the streaming case specifically with multiple spans, and naturally, there are no tests for anything streaming, only unary responses.\r\n\r\nSo as part of this, we'll need some useful tests as well. 
I'll see if I can write up my test case as an actual test case.\r\n\r\nAnd again, I've got a vested interest in this working, so I'll have a PR up soon.\n", "before_files": [{"content": "# Copyright The OpenTelemetry Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n# pylint:disable=relative-beyond-top-level\n# pylint:disable=arguments-differ\n# pylint:disable=no-member\n# pylint:disable=signature-differs\n\n\"\"\"\nImplementation of the service-side open-telemetry interceptor.\n\"\"\"\n\nimport logging\nfrom contextlib import contextmanager\n\nimport grpc\n\nfrom opentelemetry import propagators, trace\nfrom opentelemetry.context import attach, detach\nfrom opentelemetry.trace.propagation.textmap import DictGetter\nfrom opentelemetry.trace.status import Status, StatusCode\n\nlogger = logging.getLogger(__name__)\n\n\n# wrap an RPC call\n# see https://github.com/grpc/grpc/issues/18191\ndef _wrap_rpc_behavior(handler, continuation):\n if handler is None:\n return None\n\n if handler.request_streaming and handler.response_streaming:\n behavior_fn = handler.stream_stream\n handler_factory = grpc.stream_stream_rpc_method_handler\n elif handler.request_streaming and not handler.response_streaming:\n behavior_fn = handler.stream_unary\n handler_factory = grpc.stream_unary_rpc_method_handler\n elif not handler.request_streaming and handler.response_streaming:\n behavior_fn = handler.unary_stream\n handler_factory = grpc.unary_stream_rpc_method_handler\n else:\n 
behavior_fn = handler.unary_unary\n handler_factory = grpc.unary_unary_rpc_method_handler\n\n return handler_factory(\n continuation(\n behavior_fn, handler.request_streaming, handler.response_streaming\n ),\n request_deserializer=handler.request_deserializer,\n response_serializer=handler.response_serializer,\n )\n\n\n# pylint:disable=abstract-method\nclass _OpenTelemetryServicerContext(grpc.ServicerContext):\n def __init__(self, servicer_context, active_span):\n self._servicer_context = servicer_context\n self._active_span = active_span\n self.code = grpc.StatusCode.OK\n self.details = None\n super().__init__()\n\n def is_active(self, *args, **kwargs):\n return self._servicer_context.is_active(*args, **kwargs)\n\n def time_remaining(self, *args, **kwargs):\n return self._servicer_context.time_remaining(*args, **kwargs)\n\n def cancel(self, *args, **kwargs):\n return self._servicer_context.cancel(*args, **kwargs)\n\n def add_callback(self, *args, **kwargs):\n return self._servicer_context.add_callback(*args, **kwargs)\n\n def disable_next_message_compression(self):\n return self._service_context.disable_next_message_compression()\n\n def invocation_metadata(self, *args, **kwargs):\n return self._servicer_context.invocation_metadata(*args, **kwargs)\n\n def peer(self):\n return self._servicer_context.peer()\n\n def peer_identities(self):\n return self._servicer_context.peer_identities()\n\n def peer_identity_key(self):\n return self._servicer_context.peer_identity_key()\n\n def auth_context(self):\n return self._servicer_context.auth_context()\n\n def set_compression(self, compression):\n return self._servicer_context.set_compression(compression)\n\n def send_initial_metadata(self, *args, **kwargs):\n return self._servicer_context.send_initial_metadata(*args, **kwargs)\n\n def set_trailing_metadata(self, *args, **kwargs):\n return self._servicer_context.set_trailing_metadata(*args, **kwargs)\n\n def abort(self, code, details):\n self.code = code\n self.details = 
details\n self._active_span.set_attribute(\"rpc.grpc.status_code\", code.value[0])\n self._active_span.set_status(\n Status(\n status_code=StatusCode.ERROR,\n description=\"{}:{}\".format(code, details),\n )\n )\n return self._servicer_context.abort(code, details)\n\n def abort_with_status(self, status):\n return self._servicer_context.abort_with_status(status)\n\n def set_code(self, code):\n self.code = code\n # use details if we already have it, otherwise the status description\n details = self.details or code.value[1]\n self._active_span.set_attribute(\"rpc.grpc.status_code\", code.value[0])\n if code != grpc.StatusCode.OK:\n self._active_span.set_status(\n Status(\n status_code=StatusCode.ERROR,\n description=\"{}:{}\".format(code, details),\n )\n )\n return self._servicer_context.set_code(code)\n\n def set_details(self, details):\n self.details = details\n if self.code != grpc.StatusCode.OK:\n self._active_span.set_status(\n Status(\n status_code=StatusCode.ERROR,\n description=\"{}:{}\".format(self.code, details),\n )\n )\n return self._servicer_context.set_details(details)\n\n\n# pylint:disable=abstract-method\n# pylint:disable=no-self-use\n# pylint:disable=unused-argument\nclass OpenTelemetryServerInterceptor(grpc.ServerInterceptor):\n \"\"\"\n A gRPC server interceptor, to add OpenTelemetry.\n\n Usage::\n\n tracer = some OpenTelemetry tracer\n\n interceptors = [\n OpenTelemetryServerInterceptor(tracer),\n ]\n\n server = grpc.server(\n futures.ThreadPoolExecutor(max_workers=concurrency),\n interceptors = interceptors)\n\n \"\"\"\n\n def __init__(self, tracer):\n self._tracer = tracer\n self._carrier_getter = DictGetter()\n\n @contextmanager\n def _set_remote_context(self, servicer_context):\n metadata = servicer_context.invocation_metadata()\n if metadata:\n md_dict = {md.key: md.value for md in metadata}\n ctx = propagators.extract(self._carrier_getter, md_dict)\n token = attach(ctx)\n try:\n yield\n finally:\n detach(token)\n else:\n yield\n\n def 
_start_span(self, handler_call_details, context):\n\n # standard attributes\n attributes = {\n \"rpc.system\": \"grpc\",\n \"rpc.grpc.status_code\": grpc.StatusCode.OK.value[0],\n }\n\n # if we have details about the call, split into service and method\n if handler_call_details.method:\n service, method = handler_call_details.method.lstrip(\"/\").split(\n \"/\", 1\n )\n attributes.update({\"rpc.method\": method, \"rpc.service\": service})\n\n # add some attributes from the metadata\n metadata = dict(context.invocation_metadata())\n if \"user-agent\" in metadata:\n attributes[\"rpc.user_agent\"] = metadata[\"user-agent\"]\n\n # Split up the peer to keep with how other telemetry sources\n # do it. This looks like:\n # * ipv6:[::1]:57284\n # * ipv4:127.0.0.1:57284\n # * ipv4:10.2.1.1:57284,127.0.0.1:57284\n #\n try:\n ip, port = (\n context.peer().split(\",\")[0].split(\":\", 1)[1].rsplit(\":\", 1)\n )\n attributes.update({\"net.peer.ip\": ip, \"net.peer.port\": port})\n\n # other telemetry sources add this, so we will too\n if ip in (\"[::1]\", \"127.0.0.1\"):\n attributes[\"net.peer.name\"] = \"localhost\"\n\n except IndexError:\n logger.warning(\"Failed to parse peer address '%s'\", context.peer())\n\n return self._tracer.start_as_current_span(\n name=handler_call_details.method,\n kind=trace.SpanKind.SERVER,\n attributes=attributes,\n )\n\n def intercept_service(self, continuation, handler_call_details):\n def telemetry_wrapper(behavior, request_streaming, response_streaming):\n def telemetry_interceptor(request_or_iterator, context):\n\n with self._set_remote_context(context):\n with self._start_span(\n handler_call_details, context\n ) as span:\n # wrap the context\n context = _OpenTelemetryServicerContext(context, span)\n\n # And now we run the actual RPC.\n try:\n return behavior(request_or_iterator, context)\n except Exception as error:\n # Bare exceptions are likely to be gRPC aborts, which\n # we handle in our context wrapper.\n # Here, we're interested in 
uncaught exceptions.\n # pylint:disable=unidiomatic-typecheck\n if type(error) != Exception:\n span.record_exception(error)\n raise error\n\n return telemetry_interceptor\n\n return _wrap_rpc_behavior(\n continuation(handler_call_details), telemetry_wrapper\n )\n", "path": "instrumentation/opentelemetry-instrumentation-grpc/src/opentelemetry/instrumentation/grpc/_server.py"}]}

| 3,527 | 485 |
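The streaming fix in the row above depends on a Python subtlety. If an interceptor returns a generator from inside a `with` block, the context manager exits before the caller consumes any items. The span is therefore closed (and the remote context detached) while the handler is still producing responses. The minimal stdlib-only sketch below assumes a hypothetical `span` context manager standing in for `tracer.start_as_current_span` and records the order of events:

```python
from contextlib import contextmanager

events = []


@contextmanager
def span(name):
    # Hypothetical stand-in for tracer.start_as_current_span: records open/close.
    events.append("open " + name)
    try:
        yield
    finally:
        events.append("close " + name)


def handler():
    # A streaming handler: a generator producing two responses.
    for i in range(2):
        events.append("yield %d" % i)
        yield i


def returning_interceptor():
    # Returns the generator from inside the `with`: the span closes
    # before the caller pulls the first item.
    with span("rpc"):
        return handler()


def yielding_interceptor():
    # Is itself a generator, so the span stays open while items flow.
    with span("rpc"):
        yield from handler()


def run(interceptor):
    # Consume the stream the way a server would and report the event order.
    events.clear()
    items = list(interceptor())
    return items, list(events)


if __name__ == "__main__":
    print(run(returning_interceptor))
    print(run(yielding_interceptor))
```

The returning variant mirrors the pre-patch interceptor for response-streaming RPCs. The patch's `_intercept_server_stream` follows the yielding variant, so work done while streaming stays inside the server span.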

gh_patches_debug_349

|

rasdani/github-patches

|

git_diff

|

google__turbinia-1070

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.
<issue>
Missing sys module import in logger.py
Logger module is missing an import statement for 'sys'
</issue>
<code>

[start of turbinia/config/logger.py]
1 # -*- coding: utf-8 -*-
2 # Copyright 2017 Google Inc.
3 #
4 # Licensed under the Apache License, Version 2.0 (the "License");
5 # you may not use this file except in compliance with the License.
6 # You may obtain a copy of the License at
7 #
8 #     http://www.apache.org/licenses/LICENSE-2.0
9 #
10 # Unless required by applicable law or agreed to in writing, software
11 # distributed under the License is distributed on an "AS IS" BASIS,
12 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
13 # See the License for the specific language governing permissions and
14 # limitations under the License.
15 """Sets up logging."""
16
17 from __future__ import unicode_literals
18 import logging
19
20 import warnings
21 import logging.handlers
22 import os
23
24 from turbinia import config
25 from turbinia import TurbiniaException
26
27 # Environment variable to look for node name in
28 ENVNODENAME = 'NODE_NAME'
29
30
31 def setup(need_file_handler=True, need_stream_handler=True, log_file_path=None):
32   """Set up logging parameters.
33
34   This will also set the root logger, which is the default logger when a named
35   logger is not specified. We currently use 'turbinia' as the named logger,
36   however some external modules that are called by Turbinia can use the root
37   logger, so we want to be able to optionally configure that as well.
38   """
39   # Remove known warning about credentials
40   warnings.filterwarnings(
41       'ignore', 'Your application has authenticated using end user credentials')
42
43   logger = logging.getLogger('turbinia')
44   # Eliminate double logging from root logger
45   logger.propagate = False
46
47   # We only need a handler if one of that type doesn't exist already
48   if logger.handlers:
49     for handler in logger.handlers:
50       # Want to do strict type-checking here because is instance will include
51       # subclasses and so won't distinguish between StreamHandlers and
52       # FileHandlers.
53       # pylint: disable=unidiomatic-typecheck
54       if type(handler) == logging.FileHandler:
55         need_file_handler = False
56
57       # pylint: disable=unidiomatic-typecheck
58       if type(handler) == logging.StreamHandler:
59         need_stream_handler = False
60
61   if need_file_handler:
62     try:
63       config.LoadConfig()
64     except TurbiniaException as exception:
65       print(
66           'Could not load config file ({0!s}).\n{1:s}'.format(
67               exception, config.CONFIG_MSG))
68       sys.exit(1)
69
70     # Check if a user specified log path was provided else create default path
71     if not log_file_path:
72       log_name = os.uname().nodename
73       # Check if NODE_NAME available for GKE setups
74       if ENVNODENAME in os.environ:
75         log_name = log_name + '.{0!s}'.format(os.environ[ENVNODENAME])
76       log_file_path = os.path.join(config.LOG_DIR, log_name) + '.log'
77
78     file_handler = logging.FileHandler(log_file_path)
79     formatter = logging.Formatter('%(asctime)s:%(levelname)s:%(message)s')
80     file_handler.setFormatter(formatter)
81     file_handler.setLevel(logging.DEBUG)
82     logger.addHandler(file_handler)
83
84   console_handler = logging.StreamHandler()
85   formatter = logging.Formatter(
86       '%(asctime)s [%(levelname)s] %(message)s', "%Y-%m-%d %H:%M:%S")
87   console_handler.setFormatter(formatter)
88   if need_stream_handler:
89     logger.addHandler(console_handler)
90
91   # Configure the root logger to use exactly our handlers because other modules
92   # like PSQ use this, and we want to see log messages from it when executing
93   # from CLI.
94   root_log = logging.getLogger()
95   for handler in root_log.handlers:
96     root_log.removeHandler(handler)
97   root_log.addHandler(console_handler)
98   if need_file_handler:
99     root_log.addHandler(file_handler)
100
101   # Set filelock logging to ERROR due to log spam
102   logging.getLogger("filelock").setLevel(logging.ERROR)
103
[end of turbinia/config/logger.py]

</code>
I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.
<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/turbinia/config/logger.py b/turbinia/config/logger.py
--- a/turbinia/config/logger.py
+++ b/turbinia/config/logger.py
@@ -20,6 +20,7 @@
 import warnings
 import logging.handlers
 import os
+import sys
 
 from turbinia import config
 from turbinia import TurbiniaException

|

{"golden_diff": "diff --git a/turbinia/config/logger.py b/turbinia/config/logger.py\n--- a/turbinia/config/logger.py\n+++ b/turbinia/config/logger.py\n@@ -20,6 +20,7 @@\n import warnings\n import logging.handlers\n import os\n+import sys\n \n from turbinia import config\n from turbinia import TurbiniaException\n", "issue": "Missing sys module import in logger.py\nLogger module is missing an import statement for 'sys'\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n# Copyright 2017 Google Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"Sets up logging.\"\"\"\n\nfrom __future__ import unicode_literals\nimport logging\n\nimport warnings\nimport logging.handlers\nimport os\n\nfrom turbinia import config\nfrom turbinia import TurbiniaException\n\n# Environment variable to look for node name in\nENVNODENAME = 'NODE_NAME'\n\n\ndef setup(need_file_handler=True, need_stream_handler=True, log_file_path=None):\n \"\"\"Set up logging parameters.\n\n This will also set the root logger, which is the default logger when a named\n logger is not specified. 
We currently use 'turbinia' as the named logger,\n however some external modules that are called by Turbinia can use the root\n logger, so we want to be able to optionally configure that as well.\n \"\"\"\n # Remove known warning about credentials\n warnings.filterwarnings(\n 'ignore', 'Your application has authenticated using end user credentials')\n\n logger = logging.getLogger('turbinia')\n # Eliminate double logging from root logger\n logger.propagate = False\n\n # We only need a handler if one of that type doesn't exist already\n if logger.handlers:\n for handler in logger.handlers:\n # Want to do strict type-checking here because is instance will include\n # subclasses and so won't distinguish between StreamHandlers and\n # FileHandlers.\n # pylint: disable=unidiomatic-typecheck\n if type(handler) == logging.FileHandler:\n need_file_handler = False\n\n # pylint: disable=unidiomatic-typecheck\n if type(handler) == logging.StreamHandler:\n need_stream_handler = False\n\n if need_file_handler:\n try:\n config.LoadConfig()\n except TurbiniaException as exception:\n print(\n 'Could not load config file ({0!s}).\\n{1:s}'.format(\n exception, config.CONFIG_MSG))\n sys.exit(1)\n\n # Check if a user specified log path was provided else create default path\n if not log_file_path:\n log_name = os.uname().nodename\n # Check if NODE_NAME available for GKE setups\n if ENVNODENAME in os.environ:\n log_name = log_name + '.{0!s}'.format(os.environ[ENVNODENAME])\n log_file_path = os.path.join(config.LOG_DIR, log_name) + '.log'\n\n file_handler = logging.FileHandler(log_file_path)\n formatter = logging.Formatter('%(asctime)s:%(levelname)s:%(message)s')\n file_handler.setFormatter(formatter)\n file_handler.setLevel(logging.DEBUG)\n logger.addHandler(file_handler)\n\n console_handler = logging.StreamHandler()\n formatter = logging.Formatter(\n '%(asctime)s [%(levelname)s] %(message)s', \"%Y-%m-%d %H:%M:%S\")\n console_handler.setFormatter(formatter)\n if need_stream_handler:\n 
logger.addHandler(console_handler)\n\n # Configure the root logger to use exactly our handlers because other modules\n # like PSQ use this, and we want to see log messages from it when executing\n # from CLI.\n root_log = logging.getLogger()\n for handler in root_log.handlers:\n root_log.removeHandler(handler)\n root_log.addHandler(console_handler)\n if need_file_handler:\n root_log.addHandler(file_handler)\n\n # Set filelock logging to ERROR due to log spam\n logging.getLogger(\"filelock\").setLevel(logging.ERROR)\n", "path": "turbinia/config/logger.py"}]}

| 1,618 | 83 |

gh_patches_debug_26789

|

rasdani/github-patches

|

git_diff

|

cloud-custodian__cloud-custodian-5796

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.
<issue>
aws.elasticsearch Error Scanning More Than 5 domains
**Describe the bug**
When running any elasticsearch policy on an account region with more than 5 elasticsearch domains the policy now bombs out with the error - ```error:An error occurred (ValidationException) when calling the DescribeElasticsearchDomains operation: Please provide a maximum of 5 Elasticsearch domain names to describe.```

**To Reproduce**
Create 6 es domains and run an elasticsearch c7n policy, error will occur

**Expected behavior**
It should chunk the calls into domains of 5 or less

**Background (please complete the following information):**
- OS: Ubuntu v20
- Python Version: 3.8
- Custodian Version: 0.9.2.0
- Tool Version: [if applicable]
- Cloud Provider: aws
- Policy: any policy which queries ES
- Traceback:
```
[ERROR] 2020-05-22T14:51:25.978Z 9ef7929b-b494-434e-9f9f-dfdfdfdfdfdfd Error while executing policy
Traceback (most recent call last):
  File "/var/task/c7n/policy.py", line 291, in run
    resources = self.policy.resource_manager.resources()
  File "/var/task/c7n/query.py", line 466, in resources
    resources = self.augment(resources)
  File "/var/task/c7n/query.py", line 521, in augment
    return self.source.augment(resources)
  File "/var/task/c7n/resources/elasticsearch.py", line 48, in augment
    return _augment(domains)
  File "/var/task/c7n/resources/elasticsearch.py", line 39, in _augment
    resources = self.manager.retry(
  File "/var/task/c7n/utils.py", line 373, in _retry
    return func(*args, **kw)
  File "/var/task/botocore/client.py", line 316, in _api_call
    return self._make_api_call(operation_name, kwargs)
  File "/var/task/botocore/client.py", line 635, in _make_api_call
    raise error_class(parsed_response, operation_name)
botocore.errorfactory.ValidationException: An error occurred (ValidationException) when calling the DescribeElasticsearchDomains operation: Please provide a maximum of 5 Elasticsearch domain names to describe.
```
- `custodian version --debug` output

**Additional context**
Seems to be introduced with 0.9.2.0
</issue>
<code>

[start of c7n/resources/elasticsearch.py]

1 # Copyright 2016-2017 Capital One Services, LLC

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14 import jmespath

15

16 from c7n.actions import Action, ModifyVpcSecurityGroupsAction

17 from c7n.filters import MetricsFilter

18 from c7n.filters.vpc import SecurityGroupFilter, SubnetFilter, VpcFilter

19 from c7n.manager import resources

20 from c7n.query import ConfigSource, DescribeSource, QueryResourceManager, TypeInfo

21 from c7n.utils import local_session, type_schema

22 from c7n.tags import Tag, RemoveTag, TagActionFilter, TagDelayedAction

23

24 from .securityhub import PostFinding

25

26

27 class DescribeDomain(DescribeSource):

28

29 def get_resources(self, resource_ids):

30 client = local_session(self.manager.session_factory).client('es')

31 return client.describe_elasticsearch_domains(

32 DomainNames=resource_ids)['DomainStatusList']

33

34 def augment(self, domains):

35 client = local_session(self.manager.session_factory).client('es')

36 model = self.manager.get_model()

37

38 def _augment(resource_set):

39 resources = self.manager.retry(

40 client.describe_elasticsearch_domains,

41 DomainNames=resource_set)['DomainStatusList']

42 for r in resources:

43 rarn = self.manager.generate_arn(r[model.id])

44 r['Tags'] = self.manager.retry(

45 client.list_tags, ARN=rarn).get('TagList', [])

46 return resources

47

48 return _augment(domains)

49

50

51 @resources.register('elasticsearch')
52 class ElasticSearchDomain(QueryResourceManager):
53
54     class resource_type(TypeInfo):
55         service = 'es'
56         arn = 'ARN'
57         arn_type = 'domain'
58         enum_spec = (
59             'list_domain_names', 'DomainNames[].DomainName', None)
60         id = 'DomainName'
61         name = 'Name'
62         dimension = "DomainName"
63         cfn_type = config_type = 'AWS::Elasticsearch::Domain'
64
65     source_mapping = {
66         'describe': DescribeDomain,
67         'config': ConfigSource
68     }
69
70
71 ElasticSearchDomain.filter_registry.register('marked-for-op', TagActionFilter)
72
73
74 @ElasticSearchDomain.filter_registry.register('subnet')
75 class Subnet(SubnetFilter):
76
77     RelatedIdsExpression = "VPCOptions.SubnetIds[]"
78
79
80 @ElasticSearchDomain.filter_registry.register('security-group')
81 class SecurityGroup(SecurityGroupFilter):
82
83     RelatedIdsExpression = "VPCOptions.SecurityGroupIds[]"
84
85
86 @ElasticSearchDomain.filter_registry.register('vpc')
87 class Vpc(VpcFilter):
88
89     RelatedIdsExpression = "VPCOptions.VPCId"
90
91
92 @ElasticSearchDomain.filter_registry.register('metrics')
93 class Metrics(MetricsFilter):
94
95     def get_dimensions(self, resource):
96         return [{'Name': 'ClientId',
97                  'Value': self.manager.account_id},
98                 {'Name': 'DomainName',
99                  'Value': resource['DomainName']}]
100
101
102 @ElasticSearchDomain.action_registry.register('post-finding')
103 class ElasticSearchPostFinding(PostFinding):
104
105     resource_type = 'AwsElasticsearchDomain'
106
107     def format_resource(self, r):
108         envelope, payload = self.format_envelope(r)
109         payload.update(self.filter_empty({
110             'AccessPolicies': r.get('AccessPolicies'),
111             'DomainId': r['DomainId'],
112             'DomainName': r['DomainName'],
113             'Endpoint': r.get('Endpoint'),
114             'Endpoints': r.get('Endpoints'),
115             'DomainEndpointOptions': self.filter_empty({
116                 'EnforceHTTPS': jmespath.search(
117                     'DomainEndpointOptions.EnforceHTTPS', r),
118                 'TLSSecurityPolicy': jmespath.search(
119                     'DomainEndpointOptions.TLSSecurityPolicy', r)
120             }),
121             'ElasticsearchVersion': r['ElasticsearchVersion'],
122             'EncryptionAtRestOptions': self.filter_empty({
123                 'Enabled': jmespath.search(
124                     'EncryptionAtRestOptions.Enabled', r),
125                 'KmsKeyId': jmespath.search(
126                     'EncryptionAtRestOptions.KmsKeyId', r)
127             }),
128             'NodeToNodeEncryptionOptions': self.filter_empty({
129                 'Enabled': jmespath.search(
130                     'NodeToNodeEncryptionOptions.Enabled', r)
131             }),
132             'VPCOptions': self.filter_empty({
133                 'AvailabilityZones': jmespath.search(
134                     'VPCOptions.AvailabilityZones', r),
135                 'SecurityGroupIds': jmespath.search(
136                     'VPCOptions.SecurityGroupIds', r),
137                 'SubnetIds': jmespath.search('VPCOptions.SubnetIds', r),
138                 'VPCId': jmespath.search('VPCOptions.VPCId', r)
139             })
140         }))
141         return envelope
142
143
144 @ElasticSearchDomain.action_registry.register('modify-security-groups')
145 class ElasticSearchModifySG(ModifyVpcSecurityGroupsAction):
146     """Modify security groups on an Elasticsearch domain"""
147
148     permissions = ('es:UpdateElasticsearchDomainConfig',)
149
150     def process(self, domains):
151         groups = super(ElasticSearchModifySG, self).get_groups(domains)
152         client = local_session(self.manager.session_factory).client('es')
153
154         for dx, d in enumerate(domains):
155             client.update_elasticsearch_domain_config(
156                 DomainName=d['DomainName'],
157                 VPCOptions={
158                     'SecurityGroupIds': groups[dx]})
159
160
161 @ElasticSearchDomain.action_registry.register('delete')
162 class Delete(Action):
163
164     schema = type_schema('delete')
165     permissions = ('es:DeleteElasticsearchDomain',)
166
167     def process(self, resources):
168         client = local_session(self.manager.session_factory).client('es')
169         for r in resources:
170             client.delete_elasticsearch_domain(DomainName=r['DomainName'])
171
172
173 @ElasticSearchDomain.action_registry.register('tag')
174 class ElasticSearchAddTag(Tag):
175     """Action to create tag(s) on an existing elasticsearch domain
176
177     :example:
178
179     .. code-block:: yaml
180
181             policies:
182               - name: es-add-tag
183                 resource: elasticsearch
184                 filters:
185                   - "tag:DesiredTag": absent
186                 actions:
187                   - type: tag
188                     key: DesiredTag
189                     value: DesiredValue
190     """
191     permissions = ('es:AddTags',)
192
193     def process_resource_set(self, client, domains, tags):
194         for d in domains:
195             try:
196                 client.add_tags(ARN=d['ARN'], TagList=tags)
197             except client.exceptions.ResourceNotFoundExecption:
198                 continue
199
200
201 @ElasticSearchDomain.action_registry.register('remove-tag')
202 class ElasticSearchRemoveTag(RemoveTag):
203     """Removes tag(s) on an existing elasticsearch domain
204
205     :example:
206
207     .. code-block:: yaml
208
209             policies:
210               - name: es-remove-tag
211                 resource: elasticsearch
212                 filters:
213                   - "tag:ExpiredTag": present
214                 actions:
215                   - type: remove-tag
216                     tags: ['ExpiredTag']
217     """
218     permissions = ('es:RemoveTags',)
219
220     def process_resource_set(self, client, domains, tags):
221         for d in domains:
222             try:
223                 client.remove_tags(ARN=d['ARN'], TagKeys=tags)
224             except client.exceptions.ResourceNotFoundExecption:
225                 continue
226
227
228 @ElasticSearchDomain.action_registry.register('mark-for-op')
229 class ElasticSearchMarkForOp(TagDelayedAction):
230     """Tag an elasticsearch domain for action later
231
232     :example:
233
234     .. code-block:: yaml
235
236             policies:
237               - name: es-delete-missing
238                 resource: elasticsearch
239                 filters:
240                   - "tag:DesiredTag": absent
241                 actions:
242                   - type: mark-for-op
243                     days: 7
244                     op: delete
245                     tag: c7n_es_delete
246     """
247
[end of c7n/resources/elasticsearch.py]
</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/c7n/resources/elasticsearch.py b/c7n/resources/elasticsearch.py
--- a/c7n/resources/elasticsearch.py
+++ b/c7n/resources/elasticsearch.py
@@ -18,7 +18,7 @@
 from c7n.filters.vpc import SecurityGroupFilter, SubnetFilter, VpcFilter
 from c7n.manager import resources
 from c7n.query import ConfigSource, DescribeSource, QueryResourceManager, TypeInfo
-from c7n.utils import local_session, type_schema
+from c7n.utils import chunks, local_session, type_schema
 from c7n.tags import Tag, RemoveTag, TagActionFilter, TagDelayedAction
 
 from .securityhub import PostFinding
@@ -34,6 +34,7 @@
     def augment(self, domains):
         client = local_session(self.manager.session_factory).client('es')
         model = self.manager.get_model()
+        results = []
 
         def _augment(resource_set):
             resources = self.manager.retry(
@@ -45,7 +46,10 @@
                     client.list_tags, ARN=rarn).get('TagList', [])
             return resources
 
-        return _augment(domains)
+        for resource_set in chunks(domains, 5):
+            results.extend(_augment(resource_set))
+
+        return results
 
 
 @resources.register('elasticsearch')

|
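The golden diff changes `DescribeDomain.augment`. Instead of passing every domain name to a single `describe_elasticsearch_domains` call, it now calls `_augment` on batches of five names and concatenates the results. The likely reason is that the API accepts only a small number of `DomainNames` per request. The sketch below shows that batching pattern in isolation, under stated assumptions:

- `chunks` is an illustrative stand-in written here. c7n's real helper is `c7n.utils.chunks`.
- `fake_describe` is a hypothetical stub in place of the boto3 client. It enforces an assumed five-name limit.

```python
from itertools import islice
from typing import Callable, Dict, Iterable, Iterator, List


def chunks(items: Iterable, size: int) -> Iterator[List]:
    """Yield successive lists of at most `size` items
    (illustrative stand-in for c7n.utils.chunks)."""
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def augment(domain_names: List[str],
            describe: Callable[[List[str]], List[Dict]],
            batch_size: int = 5) -> List[Dict]:
    """Call `describe` once per batch and concatenate the results,
    mirroring the loop the golden diff adds to DescribeDomain.augment."""
    results: List[Dict] = []
    for resource_set in chunks(domain_names, batch_size):
        results.extend(describe(resource_set))
    return results


# Hypothetical stub for client.describe_elasticsearch_domains: it records
# each request and rejects more than five names (an assumed limit).
calls: List[List[str]] = []


def fake_describe(names: List[str]) -> List[Dict]:
    if len(names) > 5:
        raise ValueError("too many domain names in one request")
    calls.append(list(names))
    return [{"DomainName": n} for n in names]


domains = [f"domain-{i}" for i in range(12)]
described = augment(domains, fake_describe)
print(len(described), [len(c) for c in calls])  # 12 [5, 5, 2]
```

Calling the stub with all twelve names at once would raise. Batching keeps each request within the limit and preserves the input order in the combined result.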