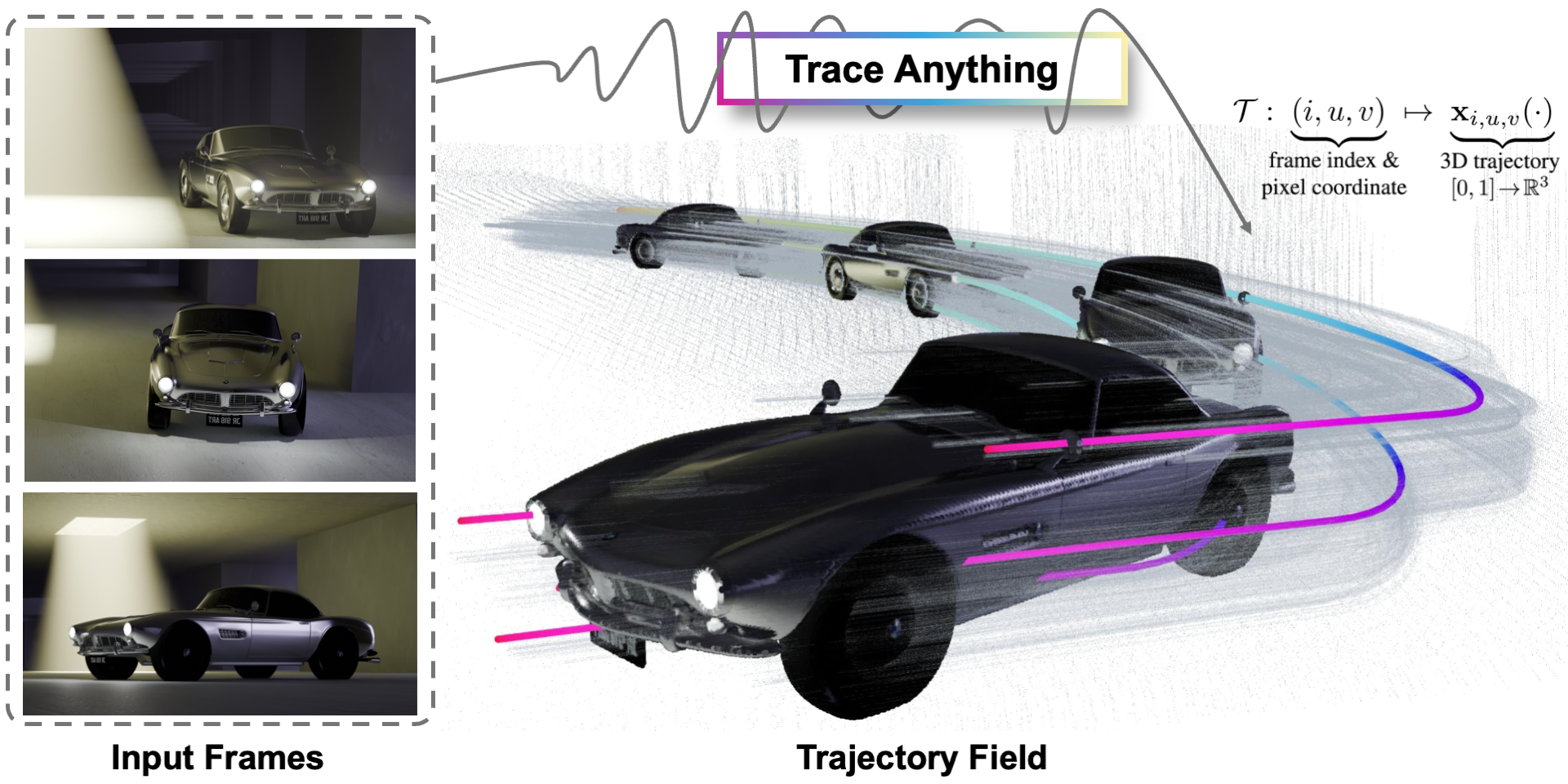

Trace Anything: Representing Any Video in 4D via Trajectory Fields

This repository contains the official implementation of the paper Trace Anything: Representing Any Video in 4D via Trajectory Fields.

Trace Anything represents any video as a Trajectory Field: a dense mapping that assigns a continuous 3D trajectory, expressed as a function of time, to each pixel in every frame. The model predicts the entire trajectory field in a single feed-forward pass, enabling applications such as goal-conditioned manipulation, motion forecasting, and spatio-temporal fusion.
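To make the idea of a per-pixel trajectory concrete, here is a minimal sketch of how a handful of 3D control points can define a continuous curve over time. This README only says that the model outputs 3D control points; the curve basis is not stated here, so the sketch assumes a Bezier curve evaluated with de Casteljau's algorithm purely for illustration. The function name `eval_trajectory` and the toy `field` dictionary are hypothetical and are not part of the repository.

```python
from typing import List, Sequence

Point3 = List[float]


def eval_trajectory(control_points: Sequence[Sequence[float]], t: float) -> Point3:
    """Evaluate a Bezier curve at t in [0, 1] using de Casteljau's algorithm.

    ASSUMPTION: the Bezier basis is chosen for illustration only; the actual
    parameterization used by Trace Anything is defined in the paper and code.
    """
    if not control_points:
        raise ValueError("need at least one control point")
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    pts = [list(p) for p in control_points]
    # Repeatedly interpolate between neighbouring points until one remains.
    while len(pts) > 1:
        pts = [
            [(1.0 - t) * a + t * b for a, b in zip(p, q)]
            for p, q in zip(pts, pts[1:])
        ]
    return pts[0]


# A toy "trajectory field": one set of control points per pixel of a 1x2 frame.
field = {
    (0, 0): [[0.0, 0.0, 1.0], [1.0, 0.0, 1.0], [2.0, 0.0, 1.0]],  # moving point
    (0, 1): [[0.0, 1.0, 2.0]] * 3,                                # static point
}

for pixel, ctrl in field.items():
    print(pixel, eval_trajectory(ctrl, 0.5))
```

In this picture, a static scene point has identical control points, while a moving point has control points that differ, and querying the curve at any time `t` yields its 3D position.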

Project Page: https://trace-anything.github.io/

Code: https://github.com/ByteDance-Seed/TraceAnything

Overview

Installation

For detailed installation instructions, please refer to the GitHub repository.

Sample Usage

To run inference with the Trace Anything model, first download the pretrained weights (see the GitHub repository for details), then run the provided script:

```bash
# Download the model weights to checkpoints/trace_anything.pt
# Place your input video/image sequence in examples/input/<scene_name>/
python scripts/infer.py \
  --input_dir examples/input \
  --output_dir examples/output \
  --ckpt checkpoints/trace_anything.pt
```

Results, including 3D control points and confidence maps, will be saved to <output_dir>/<scene>/output.pt.
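The layout of `output.pt` is not documented in this README, so a reasonable first step is to load the file and list what it contains. The sketch below assumes the file was written with `torch.save` and holds a nested container (dicts or lists) of tensors; the helper names `summarize` and `load_and_summarize` are hypothetical and are not part of the repository.

```python
from typing import Any, List


def summarize(obj: Any, name: str = "output", indent: int = 0) -> List[str]:
    """Describe nested dicts/lists, reporting the shape of array-like leaves."""
    pad = "  " * indent
    lines = []
    if isinstance(obj, dict):
        lines.append(f"{pad}{name}: dict with {len(obj)} keys")
        for key, value in obj.items():
            lines.extend(summarize(value, str(key), indent + 1))
    elif isinstance(obj, (list, tuple)):
        lines.append(f"{pad}{name}: {type(obj).__name__} of length {len(obj)}")
        # Only descend into the first few items to keep the summary short.
        for i, value in enumerate(obj[:3]):
            lines.extend(summarize(value, f"[{i}]", indent + 1))
    elif hasattr(obj, "shape"):
        lines.append(f"{pad}{name}: {type(obj).__name__} shape={tuple(obj.shape)}")
    else:
        lines.append(f"{pad}{name}: {type(obj).__name__}")
    return lines


def load_and_summarize(path: str) -> None:
    """Load a saved result on the CPU and print its structure."""
    import torch  # imported lazily so summarize() works without PyTorch

    # ASSUMPTION: the file is a torch.save() archive. Depending on your
    # PyTorch version and the file contents, torch.load may also need
    # weights_only=False; only use that for files you trust.
    data = torch.load(path, map_location="cpu")
    print("\n".join(summarize(data)))
```

For example, `load_and_summarize("examples/output/<scene>/output.pt")` would print one line per entry. Which key names correspond to the control points and confidence maps should be checked against the GitHub repository.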

Interactive Visualization

An interactive 3D viewer is available to explore the generated trajectory fields. Run it using:

```bash
python scripts/view.py --output examples/output/<scene>/output.pt
```

For more options and remote usage, check the GitHub repository.

Citation

If you find this work useful, please consider citing the paper:

```bibtex
@misc{liu2025traceanythingrepresentingvideo,
      title={Trace Anything: Representing Any Video in 4D via Trajectory Fields},
      author={Xinhang Liu and Yuxi Xiao and Donny Y. Chen and Jiashi Feng and Yu-Wing Tai and Chi-Keung Tang and Bingyi Kang},
      year={2025},
      eprint={2510.13802},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2510.13802},
}
```