🛡️ Threefold Robustness Improvement Design for Terramind: Adversarial Training, Guardrails, and External Verifiers

B. Wang, X. Huang and Y. Dong, Department of Computer Science, University of Liverpool

Contact: Boxuan.Wang@liverpool.ac.uk

1. Motivation

While Terramind demonstrates impressive performance in multi-modal geospatial generation, its inherent uncertainty poses challenges for deployment in critical, high-precision scenarios. Tasks such as ship detection in busy ports demand precise and consistent inference, where even small variations in generation outputs can undermine practical reliability.

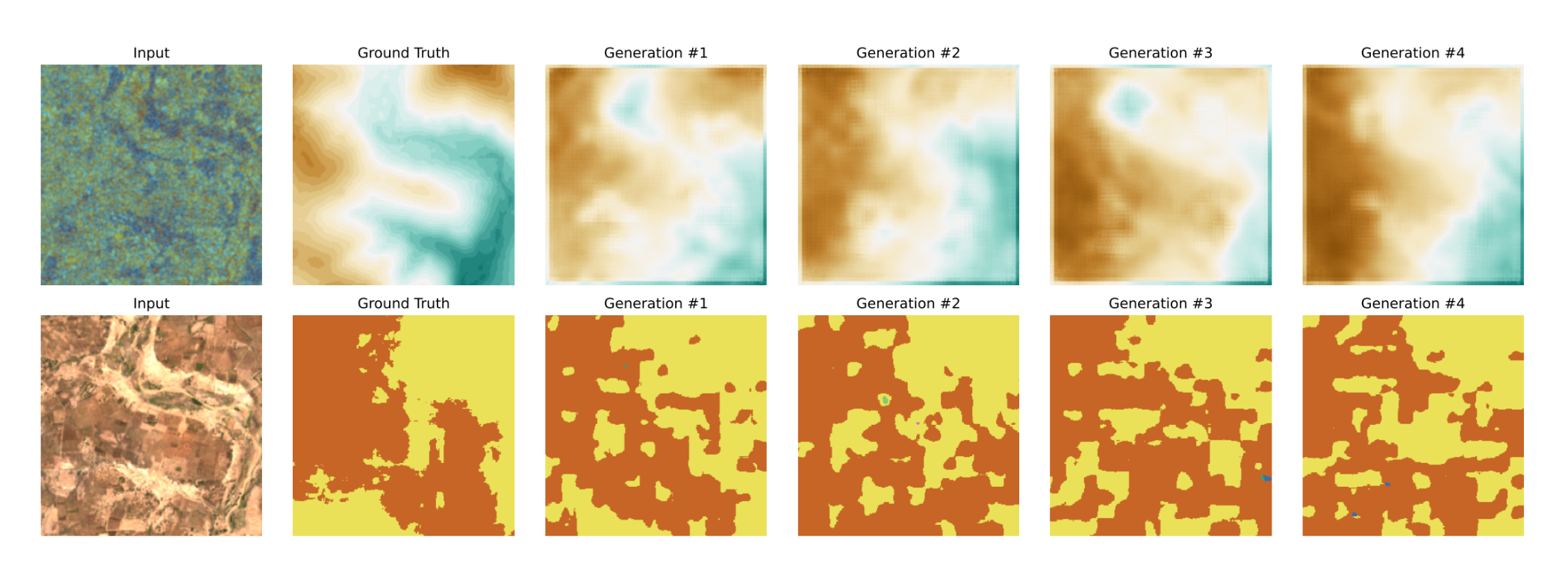

As illustrated in the figure above, given the same input image and the same ground truth for the target modality, repeated generation runs produce noticeably different results. This variability highlights the need for systematic robustness enhancement.

To this end, we propose a systematic, three-layered defense strategy to significantly enhance Terramind's robustness at the model, output, and system levels.

2. Threefold Robustness Design

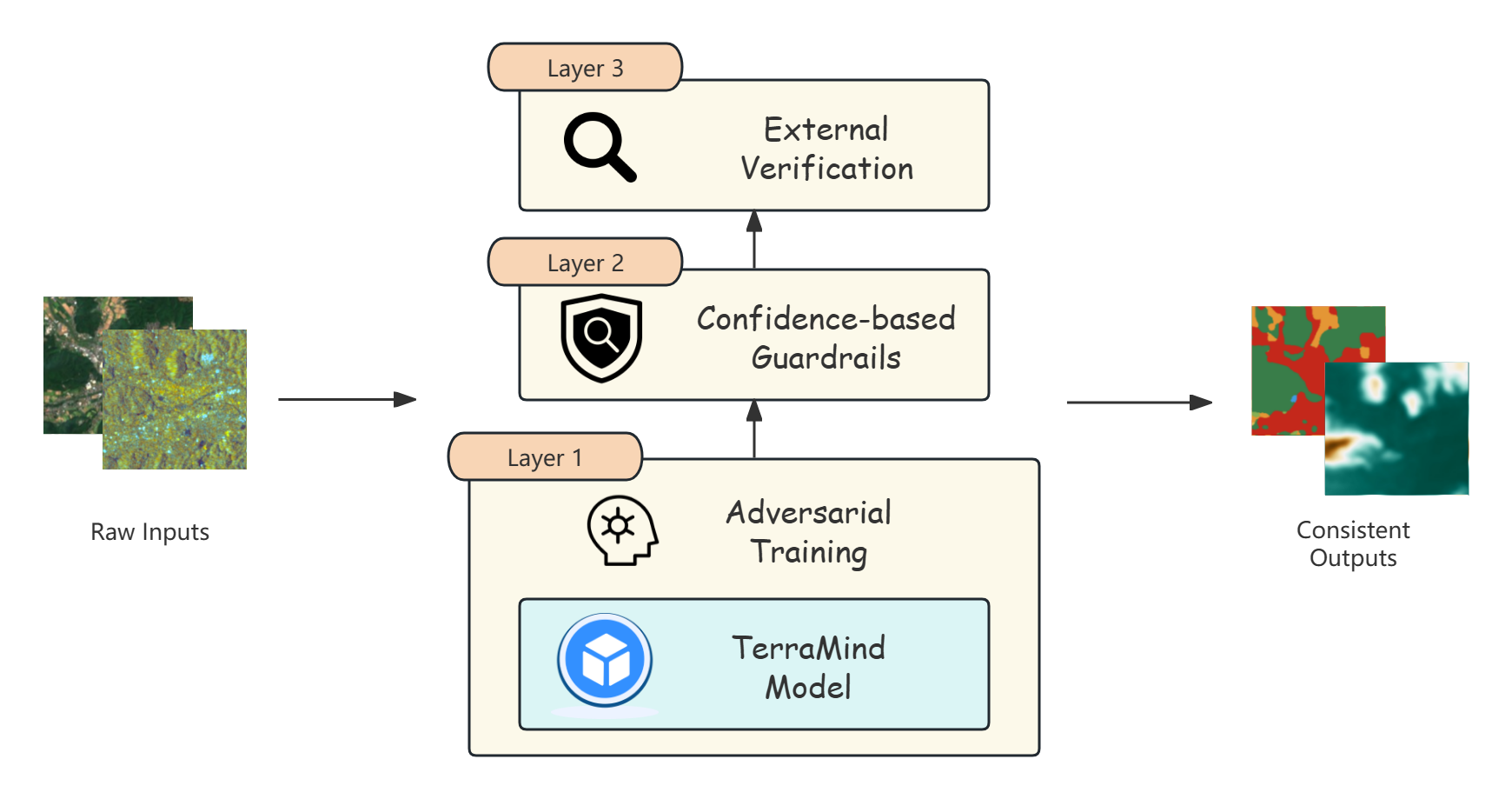

To systematically enhance Terramind's robustness, we adopt a three-layered defense strategy, as illustrated in the figure above. The design improves reliability from the model level through the output level to the system level, so that outputs remain stable and trustworthy under challenging real-world conditions.

Formal Definition of Robustness

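We define the robustness objective of Terramind as the expected worst-case loss:

$$
R = \mathbb{E}_{x \sim D} \left[ \max_{\delta \in S} L\big(f(x + \delta),\, y\big) \right]
$$

Where: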

- $x$ is the input from the data distribution $D$

- $\delta$ is the admissible perturbation within the constraint set $S$

- $f(\cdot)$ is the Terramind generation model

- $y$ is the ground truth output

- $L$ is the task-specific loss function (e.g., pixel-wise loss for segmentation)

Our goal is to minimize R, ensuring stable outputs under worst-case perturbations.
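To make the objective concrete, the following is a minimal sketch of how R could be estimated empirically. It assumes that the constraint set S is an L-infinity ball of radius `eps` and that the inner maximum is approximated by random search, so the result is only a lower bound on the true worst case. The names `empirical_robust_risk`, `mean_model`, and `squared_error` are hypothetical stand-ins and not part of Terramind.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def empirical_robust_risk(
    f: Callable[[Vector], float],
    loss: Callable[[float, float], float],
    data: List[Tuple[Vector, float]],
    eps: float,
    n_trials: int = 64,
    seed: int = 0,
) -> float:
    """Estimate R = E_x[max_delta L(f(x + delta), y)] by random search.

    Assumption: S = {delta : |delta_i| <= eps for all i}. Random search only
    lower-bounds the inner maximum; a gradient-based attack would be tighter.
    """
    rng = random.Random(seed)
    total = 0.0
    for x, y in data:
        worst = loss(f(x), y)  # delta = 0 is always admissible
        for _ in range(n_trials):
            x_adv = [xi + rng.uniform(-eps, eps) for xi in x]
            worst = max(worst, loss(f(x_adv), y))
        total += worst
    return total / len(data)


# Toy stand-ins for the model and the loss (illustrative only).
def mean_model(x: Vector) -> float:
    return sum(x) / len(x)


def squared_error(pred: float, target: float) -> float:
    return (pred - target) ** 2


if __name__ == "__main__":
    data = [([0.0, 1.0], 0.5), ([1.0, 1.0], 0.0)]
    print("clean risk :", empirical_robust_risk(mean_model, squared_error, data, eps=0.0))
    print("robust risk:", empirical_robust_risk(mean_model, squared_error, data, eps=0.1))
```

Because the unperturbed input is always included as a candidate, the estimate can never fall below the clean risk, which matches the intent of the definition.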

Layer 1 — Intrinsic Robustness via Adversarial Training

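We enhance model-level robustness through adversarial training, formulated as the min-max problem:

$$
\min_{\theta} \; \mathbb{E}_{x \sim D} \left[ \max_{\delta \in S} L\big(f_{\theta}(x + \delta),\, y\big) \right]
$$

Here $\theta$ denotes the trainable parameters of the generation model $f$.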

Key techniques:

- Introduce carefully crafted adversarial perturbations to the input modalities

- Optionally apply latent-space perturbations for stability

- Train Terramind to maintain consistent, high-quality outputs under such conditions
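The training loop implied by these techniques can be sketched on a toy problem. The example below is not Terramind's training code: it assumes a linear model with squared loss, an L-infinity perturbation budget `eps`, and a single-step sign-gradient (FGSM-style) attack on the input. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def predict(w: Vector, x: Vector) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def squared_loss(w: Vector, x: Vector, y: float) -> float:
    return (predict(w, x) - y) ** 2


def fgsm_perturb(w: Vector, x: Vector, y: float, eps: float) -> List[float]:
    """One sign-gradient step on the input, staying inside the L-inf ball."""
    residual = predict(w, x) - y
    # d/dx (w.x - y)^2 = 2 * residual * w
    return [xi + eps * sign(2.0 * residual * wi) for xi, wi in zip(x, w)]


def adversarial_train(
    data: List[Tuple[Vector, float]],
    eps: float,
    lr: float = 0.05,
    epochs: int = 200,
) -> List[float]:
    """SGD where every update uses the perturbed input instead of the clean one."""
    w = [0.0] * len(data[0][0])
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm_perturb(w, x, y, eps)   # inner maximisation (approximate)
            residual = predict(w, x_adv) - y
            # outer minimisation: gradient step on the adversarial loss
            w = [wi - lr * 2.0 * residual * xa for wi, xa in zip(w, x_adv)]
    return w


if __name__ == "__main__":
    data = [([1.0, 0.0], 1.0), ([0.0, 1.0], -1.0)]
    print("weights after adversarial training:", adversarial_train(data, eps=0.1))
```

For a real multi-modal model the inner step would typically be computed with automatic differentiation and possibly several iterations, and the same pattern could be applied to latent representations rather than raw inputs.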

Layer 2 — Output Reliability with Confidence-based Guardrails

We integrate inference-time guardrails based on predictive confidence:

- Estimate uncertainty via techniques such as Monte Carlo Dropout, Ensemble Variance, or Evidential Deep Learning

- Define a confidence threshold $\tau$

- Filter outputs where the estimated confidence $c(x)$ is below $\tau$

This prevents unreliable or misleading generations from propagating to downstream tasks.
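A guardrail of this kind might look like the sketch below. It assumes that several stochastic generations of the same input are available (for example from an ensemble or repeated sampling) and that confidence is derived from their per-pixel variance as `1 / (1 + mean variance)`. That mapping is an illustrative choice, not one prescribed by the design, and the function names are hypothetical.

```python
import statistics
from typing import List, Optional, Sequence, Tuple


def confidence(samples: Sequence[Sequence[float]]) -> float:
    """Map disagreement between generations to a score in (0, 1].

    Assumption: c(x) = 1 / (1 + mean per-pixel variance). Identical
    generations give 1.0; larger disagreement pushes the score toward 0.
    """
    per_pixel_var = [statistics.pvariance(values) for values in zip(*samples)]
    return 1.0 / (1.0 + statistics.fmean(per_pixel_var))


def guarded_output(
    samples: Sequence[Sequence[float]], tau: float
) -> Tuple[Optional[List[float]], float]:
    """Return the mean generation if c(x) >= tau, otherwise withhold it."""
    c = confidence(samples)
    if c < tau:
        return None, c  # filtered: too uncertain to pass downstream
    mean = [statistics.fmean(values) for values in zip(*samples)]
    return mean, c


if __name__ == "__main__":
    stable = [[0.25, 0.75]] * 4               # generations agree
    unstable = [[0.0, 1.0], [1.0, 0.0]]       # generations disagree
    print(guarded_output(stable, tau=0.9))
    print(guarded_output(unstable, tau=0.9))
```

In practice the threshold would need to be calibrated on held-out data so that the rejected fraction matches the tolerance of the downstream task.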

Layer 3 — External Verification for System-level Assurance

We introduce independent, domain-specific verification mechanisms:

- Use land cover classifiers or DEM consistency checks as external validators

- Given Terramind's output $\hat{y}$ and an external validator $g(\cdot)$, enforce that $g(\hat{y})$ is consistent with $y$, where $y$ is the ground truth or a trusted reference

Inconsistencies trigger user review or automated rejection.
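One possible reading of this check is sketched below. It assumes the validator maps a generated output to discrete labels (here a simple threshold stands in for a land cover classifier) and that consistency means label agreement with the trusted reference above a chosen ratio. The names `threshold_validator` and `verify`, and the `min_agreement` parameter, are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def threshold_validator(y_hat: Sequence[float], cutoff: float = 0.5) -> List[int]:
    """Toy stand-in for an external validator g: binarise each output value."""
    return [1 if value >= cutoff else 0 for value in y_hat]


def verify(
    y_hat: Sequence[float],
    reference: Sequence[int],
    validator: Callable[[Sequence[float]], List[int]],
    min_agreement: float = 0.9,
) -> Tuple[str, float]:
    """Compare g(y_hat) with the trusted reference and decide what to do.

    Returns ("accept", ratio) when agreement is high enough, otherwise
    ("review", ratio) so the output can be escalated or rejected.
    """
    predicted = validator(y_hat)
    if len(predicted) != len(reference):
        raise ValueError("validator output and reference differ in length")
    matches = sum(p == r for p, r in zip(predicted, reference))
    agreement = matches / len(reference)
    decision = "accept" if agreement >= min_agreement else "review"
    return decision, agreement


if __name__ == "__main__":
    generated = [0.9, 0.1, 0.8, 0.2]
    print(verify(generated, [1, 0, 1, 0], threshold_validator))
    print(verify(generated, [1, 1, 1, 1], threshold_validator))
```

Keeping the validator as a separate callable reflects the intent that verification stays independent of the generation model itself.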

3. Expected Benefits

The proposed design offers:

- Improved resilience to noisy, incomplete, or corrupted inputs

- Reliable output filtering based on uncertainty estimates

- Independent, complementary validation of generated results

- A principled framework for safe, trustworthy deployment

4. Conclusion

Robustness is not an afterthought — it demands systematic, multi-layered design. By integrating model-level defenses, output guardrails, and external verification, Terramind moves closer to practical, resilient, and trustworthy real-world deployment.