repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

sd-concepts-library/carlitos-el-mago

|

sd-concepts-library

| null | 9 | 0 | null | 1 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,085 | false |

### carlitos el mago on Stable Diffusion

This is the `<carloscarbonell>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

b6ff16262f9c5abad876050d0a78c25d

|

sd-concepts-library/ingmar-bergman

|

sd-concepts-library

| null | 10 | 0 | null | 6 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,184 | false |

### ingmar-bergman on Stable Diffusion

This is the `<ingmar-bergman>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

0e7c79b6812c4c21d9b407cfba93d8b3

|

jonatasgrosman/exp_w2v2t_uk_hubert_s878

|

jonatasgrosman

|

hubert

| 10 | 4 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['uk']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'uk']

| false | true | true | 452 | false |

# exp_w2v2t_uk_hubert_s878

Fine-tuned [facebook/hubert-large-ll60k](https://huggingface.co/facebook/hubert-large-ll60k) for speech recognition using the train split of [Common Voice 7.0 (uk)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

ef822685940eee51935ef6ad0295dfa2

|

nmb-paperspace-hf/roberta-base-finetuned-swag

|

nmb-paperspace-hf

|

roberta

| 12 | 0 |

transformers

| 0 | null | true | false | false |

mit

| null |

['swag']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,467 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-swag

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the swag dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5161

- Accuracy: 0.8266

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- distributed_type: IPU

- gradient_accumulation_steps: 16

- total_train_batch_size: 32

- total_eval_batch_size: 10

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- training precision: Mixed Precision

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.1273 | 1.0 | 2298 | 0.5415 | 0.7898 |

| 0.2373 | 2.0 | 4596 | 0.4756 | 0.8175 |

| 0.1788 | 3.0 | 6894 | 0.5161 | 0.8266 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.0+cpu

- Datasets 2.7.1

- Tokenizers 0.12.1

|

3d67e58f42e4574c7910c80afc6063b8

|

vamads/distilbert-base-uncased-finetuned-preprint_full

|

vamads

|

distilbert

| 31 | 2 |

transformers

| 1 |

fill-mask

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,328 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-preprint_full

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.3258

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.7315 | 1.0 | 47 | 2.4462 |

| 2.577 | 2.0 | 94 | 2.3715 |

| 2.5386 | 3.0 | 141 | 2.3692 |

### Framework versions

- Transformers 4.25.0.dev0

- Pytorch 1.12.1

- Datasets 2.7.0

- Tokenizers 0.13.2

|

f21c09895cf0757659c4d67c94b64a74

|

gokuls/mobilebert_sa_GLUE_Experiment_logit_kd_wnli_256

|

gokuls

|

mobilebert

| 17 | 2 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,592 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mobilebert_sa_GLUE_Experiment_logit_kd_wnli_256

This model is a fine-tuned version of [google/mobilebert-uncased](https://huggingface.co/google/mobilebert-uncased) on the GLUE WNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3453

- Accuracy: 0.5634

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.3472 | 1.0 | 5 | 0.3453 | 0.5634 |

| 0.3469 | 2.0 | 10 | 0.3464 | 0.5634 |

| 0.3467 | 3.0 | 15 | 0.3465 | 0.5634 |

| 0.3465 | 4.0 | 20 | 0.3457 | 0.5634 |

| 0.3466 | 5.0 | 25 | 0.3453 | 0.5634 |

| 0.3466 | 6.0 | 30 | 0.3454 | 0.5634 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

|

92ec65ee171c7c22055c5a47601bc8df

|

IDEA-CCNL/Randeng-BART-139M

|

IDEA-CCNL

|

bart

| 9 | 166 |

transformers

| 2 |

text2text-generation

| true | false | false |

apache-2.0

|

['zh']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 2,703 | false |

# Randeng-BART-139M

- Github: [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM)

- Docs: [Fengshenbang-Docs](https://fengshenbang-doc.readthedocs.io/)

## 简介 Brief Introduction

善于处理NLT任务,中文版的BART-base。

Good at solving NLT tasks, Chinese BART-base.

## 模型分类 Model Taxonomy

| 需求 Demand | 任务 Task | 系列 Series | 模型 Model | 参数 Parameter | 额外 Extra |

| :----: | :----: | :----: | :----: | :----: | :----: |

| 通用 General | 自然语言转换 NLT | 燃灯 Randeng | BART | 139M | 中文-Chinese |

## 模型信息 Model Information

参考论文:[BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension](https://arxiv.org/pdf/1910.13461.pdf)

为了得到一个中文版的BART-base,我们用悟道语料库(180G版本)进行预训练。具体地,我们在预训练阶段中使用了[封神框架](https://github.com/IDEA-CCNL/Fengshenbang-LM/tree/main/fengshen)大概花费了8张A100约3天。

To get a Chinese BART-base, we use WuDao Corpora (180 GB version) for pre-training. Specifically, we use the [fengshen framework](https://github.com/IDEA-CCNL/Fengshenbang-LM/tree/main/fengshen) in the pre-training phase which cost about 3 days with 8 A100 GPUs.

## 使用 Usage

```python

from transformers import BartForConditionalGeneration, AutoTokenizer, Text2TextGenerationPipeline

import torch

tokenizer=AutoTokenizer.from_pretrained('IDEA-CCNL/Randeng-BART-139M', use_fast=false)

model=BartForConditionalGeneration.from_pretrained('IDEA-CCNL/Randeng-BART-139M')

text = '桂林市是世界闻名<mask> ,它有悠久的<mask>'

text2text_generator = Text2TextGenerationPipeline(model, tokenizer)

print(text2text_generator(text, max_length=50, do_sample=False))

```

## 引用 Citation

如果您在您的工作中使用了我们的模型,可以引用我们的[论文](https://arxiv.org/abs/2209.02970):

If you are using the resource for your work, please cite the our [paper](https://arxiv.org/abs/2209.02970):

```text

@article{fengshenbang,

author = {Junjie Wang and Yuxiang Zhang and Lin Zhang and Ping Yang and Xinyu Gao and Ziwei Wu and Xiaoqun Dong and Junqing He and Jianheng Zhuo and Qi Yang and Yongfeng Huang and Xiayu Li and Yanghan Wu and Junyu Lu and Xinyu Zhu and Weifeng Chen and Ting Han and Kunhao Pan and Rui Wang and Hao Wang and Xiaojun Wu and Zhongshen Zeng and Chongpei Chen and Ruyi Gan and Jiaxing Zhang},

title = {Fengshenbang 1.0: Being the Foundation of Chinese Cognitive Intelligence},

journal = {CoRR},

volume = {abs/2209.02970},

year = {2022}

}

```

也可以引用我们的[网站](https://github.com/IDEA-CCNL/Fengshenbang-LM/):

You can also cite our [website](https://github.com/IDEA-CCNL/Fengshenbang-LM/):

```text

@misc{Fengshenbang-LM,

title={Fengshenbang-LM},

author={IDEA-CCNL},

year={2021},

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

}

```

|

660fc2b70146570a120537b48081c430

|

likejazz/xlm-roberta-base-finetuned-panx-de

|

likejazz

|

xlm-roberta

| 39 | 1 |

transformers

| 0 |

token-classification

| true | false | false |

mit

| null |

['xtreme']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,320 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1351

- F1: 0.8516

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 96

- eval_batch_size: 96

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| No log | 1.0 | 132 | 0.1641 | 0.8141 |

| No log | 2.0 | 264 | 0.1410 | 0.8399 |

| No log | 3.0 | 396 | 0.1351 | 0.8516 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.13.1+cu117

- Datasets 1.16.1

- Tokenizers 0.10.3

|

a4055cf8befa3cf2d9b6be08a2be911b

|

FredMath/distilbert-base-uncased-finetuned-ner

|

FredMath

|

distilbert

| 12 | 9 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

| null |

['conll2003']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,555 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0625

- Precision: 0.9243

- Recall: 0.9361

- F1: 0.9302

- Accuracy: 0.9835

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2424 | 1.0 | 878 | 0.0685 | 0.9152 | 0.9235 | 0.9193 | 0.9813 |

| 0.0539 | 2.0 | 1756 | 0.0621 | 0.9225 | 0.9333 | 0.9279 | 0.9828 |

| 0.0298 | 3.0 | 2634 | 0.0625 | 0.9243 | 0.9361 | 0.9302 | 0.9835 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

51e6594152a1c6d15d68944f6eeeb749

|

kejian/mighty-filtering

|

kejian

|

gpt2

| 36 | 4 |

transformers

| 0 | null | true | false | false |

apache-2.0

|

['en']

|

['kejian/codeparrot-train-more-filter-3.3b-cleaned']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 4,332 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mighty-filtering

This model was trained from scratch on the kejian/codeparrot-train-more-filter-3.3b-cleaned dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0008

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 50354

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.23.0

- Pytorch 1.13.0+cu116

- Datasets 2.0.0

- Tokenizers 0.12.1

# Full config

{'dataset': {'datasets': ['kejian/codeparrot-train-more-filter-3.3b-cleaned'],

'filter_threshold': 0.002361,

'is_split_by_sentences': True},

'generation': {'batch_size': 128,

'metrics_configs': [{}, {'n': 1}, {}],

'scenario_configs': [{'display_as_html': True,

'generate_kwargs': {'do_sample': True,

'eos_token_id': 0,

'max_length': 640,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_hits_threshold': 0,

'num_samples': 2048},

{'display_as_html': True,

'generate_kwargs': {'do_sample': True,

'eos_token_id': 0,

'max_length': 272,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'functions',

'num_hits_threshold': 0,

'num_samples': 2048,

'prompts_path': 'resources/functions_csnet.jsonl',

'use_prompt_for_scoring': True}],

'scorer_config': {}},

'kl_gpt3_callback': {'gpt3_kwargs': {'model_name': 'code-cushman-001'},

'max_tokens': 64,

'num_samples': 4096},

'model': {'from_scratch': True,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'path_or_name': 'codeparrot/codeparrot-small'},

'objective': {'name': 'MLE'},

'tokenizer': {'path_or_name': 'codeparrot/codeparrot-small'},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 64,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'mighty-filtering',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.0008,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000.0,

'output_dir': 'training_output',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25177,

'save_strategy': 'steps',

'seed': 42,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}

# Wandb URL:

https://wandb.ai/kejian/uncategorized/runs/zk4rbxx0

|

c05203d9c3cb86c5758554a84dfd7035

|

Helsinki-NLP/opus-mt-fr-mt

|

Helsinki-NLP

|

marian

| 10 | 15 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 768 | false |

### opus-mt-fr-mt

* source languages: fr

* target languages: mt

* OPUS readme: [fr-mt](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/fr-mt/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-09.zip](https://object.pouta.csc.fi/OPUS-MT-models/fr-mt/opus-2020-01-09.zip)

* test set translations: [opus-2020-01-09.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/fr-mt/opus-2020-01-09.test.txt)

* test set scores: [opus-2020-01-09.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/fr-mt/opus-2020-01-09.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.fr.mt | 28.7 | 0.466 |

|

ee9b50ff1b5224148594871dffd8d953

|

racai/e4a-covid-bert-base-romanian-cased-v1

|

racai

|

bert

| 9 | 4 |

transformers

| 0 |

fill-mask

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,791 | false |

The model generated in the Enrich4All project.<br>

Evaluated the perplexity of MLM Task fine-tuned for COVID-related corpus.<br>

Baseline model: https://huggingface.co/dumitrescustefan/bert-base-romanian-cased-v1 <br>

Scripts and corpus used for training: https://github.com/racai-ai/e4all-models

Corpus

---------------

The COVID-19 datasets we designed are a small corpus and a question-answer dataset. The targeted sources were official websites of Romanian institutions involved in managing the COVID-19 pandemic, like The Ministry of Health, Bucharest Public Health Directorate, The National Information Platform on Vaccination against COVID-19, The Ministry of Foreign Affairs, as well as of the European Union. We also harvested the website of a non-profit organization initiative, in partnership with the Romanian Government through the Romanian Digitization Authority, that developed an ample platform with different sections dedicated to COVID-19 official news and recommendations. News websites were avoided due to the volatile character of the continuously changing pandemic situation, but a reliable source of information was a major private medical clinic website (Regina Maria), which provided detailed medical articles on important subjects of immediate interest to the readers and patients, like immunity, the emergent treating protocols or the new Omicron variant of the virus.

The corpus dataset was manually collected and revised. Data were checked for grammatical correctness, and missing diacritics were introduced.

<br><br>

The corpus is structured in 55 UTF-8 documents and contains 147,297 words.

Results

-----------------

| MLM Task | Perplexity |

| ------------- | ------------- |

| Baseline | 5.13 |

| COVID Fine-tuning| 2.74 |

|

cf9bc0b7d216501f87651daf1797ec43

|

huggingnft/nftrex

|

huggingnft

| null | 5 | 19 |

transformers

| 1 |

unconditional-image-generation

| false | false | false |

mit

| null |

['huggingnft/nftrex']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['huggingnft', 'nft', 'huggan', 'gan', 'image', 'images', 'unconditional-image-generation']

| false | true | true | 2,166 | false |

# Hugging NFT: nftrex

## Disclaimer

All rights belong to their owners. Models and datasets can be removed from the site at the request of the copyright

holder.

## Model description

LightWeight GAN model for unconditional generation.

NFT collection available [here](https://opensea.io/collection/nftrex).

Dataset is available [here](https://huggingface.co/datasets/huggingnft/nftrex).

Check Space: [link](https://huggingface.co/spaces/AlekseyKorshuk/huggingnft).

Project repository: [link](https://github.com/AlekseyKorshuk/huggingnft).

[](https://github.com/AlekseyKorshuk/huggingnft)

## Intended uses & limitations

#### How to use

Check project repository: [link](https://github.com/AlekseyKorshuk/huggingnft).

#### Limitations and bias

Check project repository: [link](https://github.com/AlekseyKorshuk/huggingnft).

## Training data

Dataset is available [here](https://huggingface.co/datasets/huggingnft/nftrex).

## Training procedure

Training script is available [here](https://github.com/AlekseyKorshuk/huggingnft).

## Generated Images

Check results with Space: [link](https://huggingface.co/spaces/AlekseyKorshuk/huggingnft).

## About

*Built by Aleksey Korshuk*

[](https://github.com/AlekseyKorshuk)

[](https://twitter.com/intent/follow?screen_name=alekseykorshuk)

[](https://t.me/joinchat/_CQ04KjcJ-4yZTky)

For more details, visit the project repository.

[](https://github.com/AlekseyKorshuk/huggingnft)

### BibTeX entry and citation info

```bibtex

@InProceedings{huggingnft,

author={Aleksey Korshuk}

year=2022

}

```

|

a2bd66f446d154e09747382c687d806d

|

jonatasgrosman/exp_w2v2t_en_xls-r_s468

|

jonatasgrosman

|

wav2vec2

| 10 | 3 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['en']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'en']

| false | true | true | 459 | false |

# exp_w2v2t_en_xls-r_s468

Fine-tuned [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) for speech recognition on English using the train split of [Common Voice 7.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

8d9c38df256e5f0953102f2a39b863c9

|

google/t5-efficient-small-el8-dl2

|

google

|

t5

| 12 | 7 |

transformers

| 0 |

text2text-generation

| true | true | true |

apache-2.0

|

['en']

|

['c4']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['deep-narrow']

| false | true | true | 6,281 | false |

# T5-Efficient-SMALL-EL8-DL2 (Deep-Narrow version)

T5-Efficient-SMALL-EL8-DL2 is a variation of [Google's original T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) following the [T5 model architecture](https://huggingface.co/docs/transformers/model_doc/t5).

It is a *pretrained-only* checkpoint and was released with the

paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)**

by *Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler*.

In a nutshell, the paper indicates that a **Deep-Narrow** model architecture is favorable for **downstream** performance compared to other model architectures

of similar parameter count.

To quote the paper:

> We generally recommend a DeepNarrow strategy where the model’s depth is preferentially increased

> before considering any other forms of uniform scaling across other dimensions. This is largely due to

> how much depth influences the Pareto-frontier as shown in earlier sections of the paper. Specifically, a

> tall small (deep and narrow) model is generally more efficient compared to the base model. Likewise,

> a tall base model might also generally more efficient compared to a large model. We generally find

> that, regardless of size, even if absolute performance might increase as we continue to stack layers,

> the relative gain of Pareto-efficiency diminishes as we increase the layers, converging at 32 to 36

> layers. Finally, we note that our notion of efficiency here relates to any one compute dimension, i.e.,

> params, FLOPs or throughput (speed). We report all three key efficiency metrics (number of params,

> FLOPS and speed) and leave this decision to the practitioner to decide which compute dimension to

> consider.

To be more precise, *model depth* is defined as the number of transformer blocks that are stacked sequentially.

A sequence of word embeddings is therefore processed sequentially by each transformer block.

## Details model architecture

This model checkpoint - **t5-efficient-small-el8-dl2** - is of model type **Small** with the following variations:

- **el** is **8**

- **dl** is **2**

It has **50.03** million parameters and thus requires *ca.* **200.11 MB** of memory in full precision (*fp32*)

or **100.05 MB** of memory in half precision (*fp16* or *bf16*).

A summary of the *original* T5 model architectures can be seen here:

| Model | nl (el/dl) | ff | dm | kv | nh | #Params|

| ----| ---- | ---- | ---- | ---- | ---- | ----|

| Tiny | 4/4 | 1024 | 256 | 32 | 4 | 16M|

| Mini | 4/4 | 1536 | 384 | 32 | 8 | 31M|

| Small | 6/6 | 2048 | 512 | 32 | 8 | 60M|

| Base | 12/12 | 3072 | 768 | 64 | 12 | 220M|

| Large | 24/24 | 4096 | 1024 | 64 | 16 | 738M|

| Xl | 24/24 | 16384 | 1024 | 128 | 32 | 3B|

| XXl | 24/24 | 65536 | 1024 | 128 | 128 | 11B|

whereas the following abbreviations are used:

| Abbreviation | Definition |

| ----| ---- |

| nl | Number of transformer blocks (depth) |

| dm | Dimension of embedding vector (output vector of transformers block) |

| kv | Dimension of key/value projection matrix |

| nh | Number of attention heads |

| ff | Dimension of intermediate vector within transformer block (size of feed-forward projection matrix) |

| el | Number of transformer blocks in the encoder (encoder depth) |

| dl | Number of transformer blocks in the decoder (decoder depth) |

| sh | Signifies that attention heads are shared |

| skv | Signifies that key-values projection matrices are tied |

If a model checkpoint has no specific, *el* or *dl* than both the number of encoder- and decoder layers correspond to *nl*.

## Pre-Training

The checkpoint was pretrained on the [Colossal, Cleaned version of Common Crawl (C4)](https://huggingface.co/datasets/c4) for 524288 steps using

the span-based masked language modeling (MLM) objective.

## Fine-Tuning

**Note**: This model is a **pretrained** checkpoint and has to be fine-tuned for practical usage.

The checkpoint was pretrained in English and is therefore only useful for English NLP tasks.

You can follow on of the following examples on how to fine-tune the model:

*PyTorch*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization)

- [Question Answering](https://github.com/huggingface/transformers/blob/master/examples/pytorch/question-answering/run_seq2seq_qa.py)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/pytorch/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*Tensorflow*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*JAX/Flax*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/flax/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/flax/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

## Downstream Performance

TODO: Add table if available

## Computational Complexity

TODO: Add table if available

## More information

We strongly recommend the reader to go carefully through the original paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** to get a more nuanced understanding of this model checkpoint.

As explained in the following [issue](https://github.com/google-research/google-research/issues/986#issuecomment-1035051145), checkpoints including the *sh* or *skv*

model architecture variations have *not* been ported to Transformers as they are probably of limited practical usage and are lacking a more detailed description. Those checkpoints are kept [here](https://huggingface.co/NewT5SharedHeadsSharedKeyValues) as they might be ported potentially in the future.

|

f2d7609c090e479af8fcd242783b441f

|

anuragshas/wav2vec2-large-xlsr-53-rm-vallader

|

anuragshas

|

wav2vec2

| 9 | 9 |

transformers

| 0 |

automatic-speech-recognition

| true | false | true |

apache-2.0

|

['rm-vallader']

|

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week']

| true | true | true | 3,527 | false |

# Wav2Vec2-Large-XLSR-53-Romansh Vallader

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Romansh Vallader using the [Common Voice](https://huggingface.co/datasets/common_voice).

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "rm-vallader", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("anuragshas/wav2vec2-large-xlsr-53-rm-vallader")

model = Wav2Vec2ForCTC.from_pretrained("anuragshas/wav2vec2-large-xlsr-53-rm-vallader")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the aduio files as arrays

def speech_file_to_array_fn(batch):

speech_array, sampling_rate = torchaudio.load(batch["path"])

batch["speech"] = resampler(speech_array).squeeze().numpy()

return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():

logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))

print("Reference:", test_dataset["sentence"][:2])

```

## Evaluation

The model can be evaluated as follows on the Romansh Vallader test data of Common Voice.

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

test_dataset = load_dataset("common_voice", "rm-vallader", split="test")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("anuragshas/wav2vec2-large-xlsr-53-rm-vallader")

model = Wav2Vec2ForCTC.from_pretrained("anuragshas/wav2vec2-large-xlsr-53-rm-vallader")

model.to("cuda")

chars_to_ignore_regex = '[\,\?\.\!\-\;\:\"\“\%\”\„\–\…\«\»]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the aduio files as arrays

def speech_file_to_array_fn(batch):

batch["sentence"] = re.sub('’ ',' ',batch["sentence"])

batch["sentence"] = re.sub(' ‘',' ',batch["sentence"])

batch["sentence"] = re.sub('’|‘','\'',batch["sentence"])

batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()

speech_array, sampling_rate = torchaudio.load(batch["path"])

batch["speech"] = resampler(speech_array).squeeze().numpy()

return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Preprocessing the datasets.

# We need to read the aduio files as arrays

def evaluate(batch):

inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():

logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

pred_ids = torch.argmax(logits, dim=-1)

batch["pred_strings"] = processor.batch_decode(pred_ids)

return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 32.89 %

## Training

The Common Voice `train` and `validation` datasets were used for training.

|

90e2f95ff3f3656395b7874e929794db

|

aapot/wav2vec2-xlsr-1b-finnish-lm

|

aapot

|

wav2vec2

| 21 | 4 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['fi']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'fi', 'finnish', 'generated_from_trainer', 'hf-asr-leaderboard', 'robust-speech-event']

| true | true | true | 9,461 | false |

# Wav2Vec2 XLS-R for Finnish ASR

This acoustic model is a fine-tuned version of [facebook/wav2vec2-xls-r-1b](https://huggingface.co/facebook/wav2vec2-xls-r-1b) for Finnish ASR. The model has been fine-tuned with 259.57 hours of Finnish transcribed speech data. Wav2Vec2 XLS-R was introduced in

[this paper](https://arxiv.org/abs/2111.09296) and first released at [this page](https://github.com/pytorch/fairseq/tree/main/examples/wav2vec#wav2vec-20).

This repository also includes Finnish KenLM language model used in the decoding phase with the acoustic model.

**Note**: this model is exactly the same as the [Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm](https://huggingface.co/Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm) model so this model has just been copied/moved to the `Finnish-NLP` Hugging Face organization.

**Note**: there is a better V2 version of this model which has been fine-tuned longer with 16 hours of more data: [Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2](https://huggingface.co/Finnish-NLP/wav2vec2-xlsr-1b-finnish-lm-v2)

## Model description

Wav2Vec2 XLS-R is Facebook AI's large-scale multilingual pretrained model for speech. It is pretrained on 436k hours of unlabeled speech, including VoxPopuli, MLS, CommonVoice, BABEL, and VoxLingua107. It uses the wav2vec 2.0 objective, in 128 languages.

You can read more about the pretrained model from [this blog](https://ai.facebook.com/blog/xls-r-self-supervised-speech-processing-for-128-languages) and [this paper](https://arxiv.org/abs/2111.09296).

This model is fine-tuned version of the pretrained model (1 billion parameter variant) for Finnish ASR.

## Intended uses & limitations

You can use this model for Finnish ASR (speech-to-text) task.

### How to use

Check the [run-finnish-asr-models.ipynb](https://huggingface.co/aapot/wav2vec2-xlsr-1b-finnish-lm/blob/main/run-finnish-asr-models.ipynb) notebook in this repository for an detailed example on how to use this model.

### Limitations and bias

This model was fine-tuned with audio samples which maximum length was 20 seconds so this model most likely works the best for quite short audios of similar length. However, you can try this model with a lot longer audios too and see how it works. If you encounter out of memory errors with very long audio files you can use the audio chunking method introduced in [this blog post](https://huggingface.co/blog/asr-chunking).

A vast majority of the data used for fine-tuning was from the Finnish Parliament dataset so this model may not generalize so well to very different domains like common daily spoken Finnish with dialects etc. In addition, audios of the datasets tend to be adult male dominated so this model may not work as well for speeches of children and women, for example.

The Finnish KenLM language model used in the decoding phase has been trained with text data from the audio transcriptions. Thus, the decoder's language model may not generalize to very different language, for example to spoken daily language with dialects. It may be beneficial to train your own KenLM language model for your domain language and use that in the decoding.

## Training data

This model was fine-tuned with 259.57 hours of Finnish transcribed speech data from following datasets:

| Dataset | Hours | % of total hours |

|:----------------------------------------------------------------------------------------------------------------------------------|:--------:|:----------------:|

| [Common Voice 7.0 Finnish train + evaluation + other splits](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0) | 9.70 h | 3.74 % |

| [Finnish parliament session 2](https://b2share.eudat.eu/records/4df422d631544ce682d6af1d4714b2d4) | 0.24 h | 0.09 % |

| [VoxPopuli Finnish](https://github.com/facebookresearch/voxpopuli) | 5.94 h | 2.29 % |

| [CSS10 Finnish](https://github.com/kyubyong/css10) | 10.32 h | 3.98 % |

| [Aalto Finnish Parliament ASR Corpus](http://urn.fi/urn:nbn:fi:lb-2021051903) | 228.00 h | 87.84 % |

| [Finnish Broadcast Corpus](http://urn.fi/urn:nbn:fi:lb-2016042502) | 5.37 h | 2.07 % |

Datasets were filtered to include maximum length of 20 seconds long audio samples.

## Training procedure

This model was trained during [Robust Speech Challenge Event](https://discuss.huggingface.co/t/open-to-the-community-robust-speech-recognition-challenge/13614) organized by Hugging Face. Training was done on a Tesla V100 GPU, sponsored by OVHcloud.

Training script was provided by Hugging Face and it is available [here](https://github.com/huggingface/transformers/blob/main/examples/research_projects/robust-speech-event/run_speech_recognition_ctc_bnb.py). We only modified its data loading for our custom datasets.

For the KenLM language model training, we followed the [blog post tutorial](https://huggingface.co/blog/wav2vec2-with-ngram) provided by Hugging Face. Training data for the 5-gram KenLM were text transcriptions of the audio training data.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: [8-bit Adam](https://github.com/facebookresearch/bitsandbytes) with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 5

- mixed_precision_training: Native AMP

The pretrained `facebook/wav2vec2-xls-r-1b` model was initialized with following hyperparameters:

- attention_dropout: 0.094

- hidden_dropout: 0.047

- feat_proj_dropout: 0.04

- mask_time_prob: 0.082

- layerdrop: 0.041

- activation_dropout: 0.055

- ctc_loss_reduction: "mean"

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 0.968 | 0.18 | 500 | 0.4870 | 0.4720 |

| 0.6557 | 0.36 | 1000 | 0.2450 | 0.2931 |

| 0.647 | 0.54 | 1500 | 0.1818 | 0.2255 |

| 0.5297 | 0.72 | 2000 | 0.1698 | 0.2354 |

| 0.5802 | 0.9 | 2500 | 0.1581 | 0.2355 |

| 0.6351 | 1.07 | 3000 | 0.1689 | 0.2336 |

| 0.4626 | 1.25 | 3500 | 0.1719 | 0.3099 |

| 0.4526 | 1.43 | 4000 | 0.1434 | 0.2069 |

| 0.4692 | 1.61 | 4500 | 0.1645 | 0.2192 |

| 0.4584 | 1.79 | 5000 | 0.1483 | 0.1987 |

| 0.4234 | 1.97 | 5500 | 0.1499 | 0.2178 |

| 0.4243 | 2.15 | 6000 | 0.1345 | 0.2070 |

| 0.4108 | 2.33 | 6500 | 0.1383 | 0.1850 |

| 0.4048 | 2.51 | 7000 | 0.1338 | 0.1811 |

| 0.4085 | 2.69 | 7500 | 0.1290 | 0.1780 |

| 0.4026 | 2.87 | 8000 | 0.1239 | 0.1650 |

| 0.4033 | 3.04 | 8500 | 0.1346 | 0.1657 |

| 0.3986 | 3.22 | 9000 | 0.1310 | 0.1850 |

| 0.3867 | 3.4 | 9500 | 0.1273 | 0.1741 |

| 0.3658 | 3.58 | 10000 | 0.1219 | 0.1672 |

| 0.382 | 3.76 | 10500 | 0.1306 | 0.1698 |

| 0.3847 | 3.94 | 11000 | 0.1230 | 0.1577 |

| 0.3691 | 4.12 | 11500 | 0.1310 | 0.1615 |

| 0.3593 | 4.3 | 12000 | 0.1296 | 0.1622 |

| 0.3619 | 4.48 | 12500 | 0.1285 | 0.1601 |

| 0.3361 | 4.66 | 13000 | 0.1261 | 0.1569 |

| 0.3603 | 4.84 | 13500 | 0.1235 | 0.1533 |

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.3

- Tokenizers 0.11.0

## Evaluation results

Evaluation was done with the [Common Voice 7.0 Finnish test split](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

To evaluate this model, run the `eval.py` script in this repository:

```bash

python3 eval.py --model_id aapot/wav2vec2-xlsr-1b-finnish-lm --dataset mozilla-foundation/common_voice_7_0 --config fi --split test

```

This model (the second row of the table) achieves the following WER (Word Error Rate) and CER (Character Error Rate) results compared to our other models:

| | WER (with LM) | WER (without LM) | CER (with LM) | CER (without LM) |

|-----------------------------------------|---------------|------------------|---------------|------------------|

|aapot/wav2vec2-xlsr-1b-finnish-lm-v2 |**4.09** |**9.73** |**0.88** |**1.65** |

|aapot/wav2vec2-xlsr-1b-finnish-lm |5.65 |13.11 |1.20 |2.23 |

|aapot/wav2vec2-xlsr-300m-finnish-lm |8.16 |17.92 |1.97 |3.36 |

## Team Members

- Aapo Tanskanen, [Hugging Face profile](https://huggingface.co/aapot), [LinkedIn profile](https://www.linkedin.com/in/aapotanskanen/)

- Rasmus Toivanen, [Hugging Face profile](https://huggingface.co/RASMUS), [LinkedIn profile](https://www.linkedin.com/in/rasmustoivanen/)

Feel free to contact us for more details 🤗

|

d2612bbc04ae5ef76b3e637a86f3d84b

|

jgammack/roberta-base-squad

|

jgammack

|

roberta

| 15 | 6 |

transformers

| 0 |

question-answering

| true | false | false |

mit

| null |

['squad']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 947 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-squad

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.0+cu111

- Datasets 1.18.3

- Tokenizers 0.11.0

|

970cd456f7ce21b118ac7fc86adbb360

|

SEUNGWON1/distilgpt2-finetuned-wikitext2

|

SEUNGWON1

|

gpt2

| 9 | 4 |

transformers

| 0 |

text-generation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,243 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilgpt2-finetuned-wikitext2

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.6421

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 3.7602 | 1.0 | 2334 | 3.6669 |

| 3.653 | 2.0 | 4668 | 3.6472 |

| 3.6006 | 3.0 | 7002 | 3.6421 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

5aa385f2eeb9ea184e37123362c16e6b

|

garyw/clinical-embeddings-100d-w2v-cr

|

garyw

| null | 5 | 0 | null | 1 | null | false | false | false |

gpl-3.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,547 | false |

Pre-trained word embeddings using the text of published clinical case reports. These embeddings use 100 dimensions and were trained using the word2vec algorithm on published clinical case reports found in the [PMC Open Access Subset](https://www.ncbi.nlm.nih.gov/pmc/tools/openftlist/). See the paper here: https://pubmed.ncbi.nlm.nih.gov/34920127/

Citation:

```

@article{flamholz2022word,

title={Word embeddings trained on published case reports are lightweight, effective for clinical tasks, and free of protected health information},

author={Flamholz, Zachary N and Crane-Droesch, Andrew and Ungar, Lyle H and Weissman, Gary E},

journal={Journal of Biomedical Informatics},

volume={125},

pages={103971},

year={2022},

publisher={Elsevier}

}

```

## Quick start

Word embeddings are compatible with the [`gensim` Python package](https://radimrehurek.com/gensim/) format.

First download the files from this archive. Then load the embeddings into Python.

```python

from gensim.models import FastText, Word2Vec, KeyedVectors # KeyedVectors are used to load the GloVe models

# Load the model

model = Word2Vec.load('w2v_oa_cr_100d.bin')

# Return 100-dimensional vector representations of each word

model.wv.word_vec('diabetes')

model.wv.word_vec('cardiac_arrest')

model.wv.word_vec('lymphangioleiomyomatosis')

# Try out cosine similarity

model.wv.similarity('copd', 'chronic_obstructive_pulmonary_disease')

model.wv.similarity('myocardial_infarction', 'heart_attack')

model.wv.similarity('lymphangioleiomyomatosis', 'lam')

```

|

e3317287015960343723950784a550a8

|

beyond/genius-large-k2t

|

beyond

|

bart

| 9 | 4 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

|

['en']

|

['wikipedia']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['GENIUS', 'conditional text generation', 'sketch-based text generation', 'keywords-to-text generation', 'data augmentation']

| false | true | true | 1,710 | false |

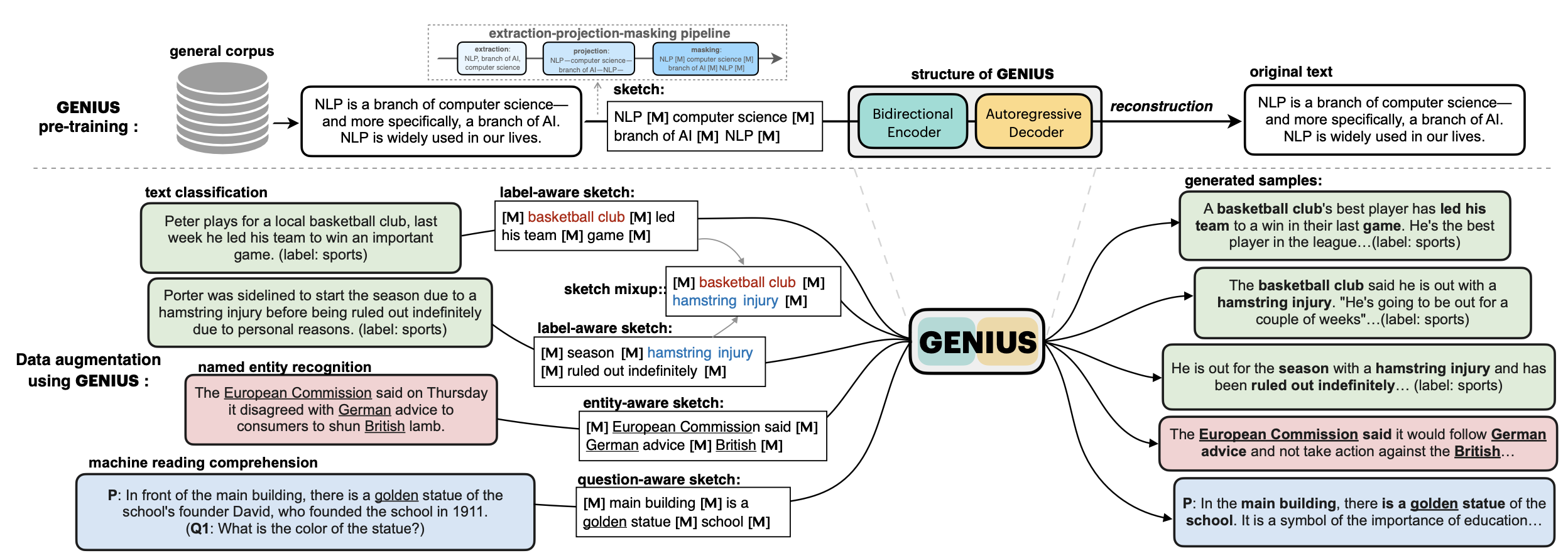

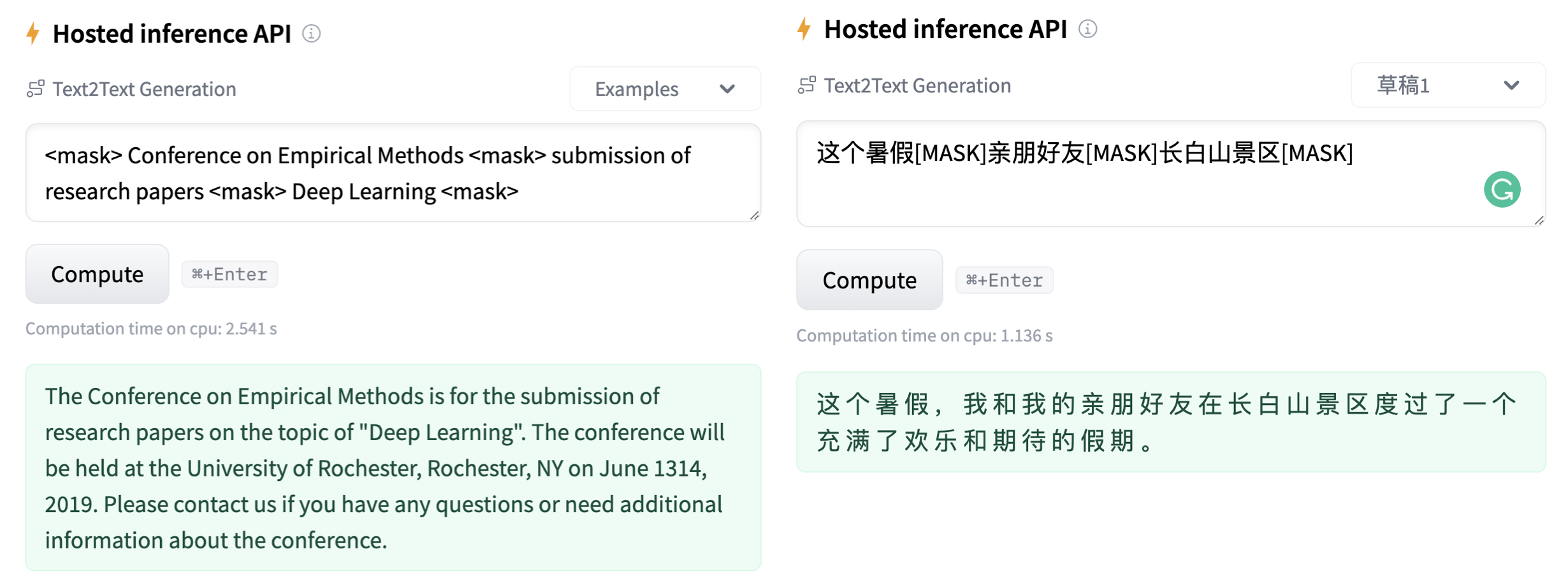

# 💡GENIUS – generating text using sketches!

- **Paper: [GENIUS: Sketch-based Language Model Pre-training via Extreme and Selective Masking for Text Generation and Augmentation](https://github.com/beyondguo/genius/blob/master/GENIUS_gby_arxiv.pdf)**

💡**GENIUS** is a powerful conditional text generation model using sketches as input, which can fill in the missing contexts for a given **sketch** (key information consisting of textual spans, phrases, or words, concatenated by mask tokens). GENIUS is pre-trained on a large- scale textual corpus with a novel *reconstruction from sketch* objective using an *extreme and selective masking* strategy, enabling it to generate diverse and high-quality texts given sketches.

- Models hosted in 🤗 Huggingface:

**Model variations:**

| Model | #params | Language | comment|

|------------------------|--------------------------------|-------|---------|

| [`genius-large`](https://huggingface.co/beyond/genius-large) | 406M | English | The version used in **paper** (recommend) |

| [`genius-large-k2t`](https://huggingface.co/beyond/genius-large-k2t) | 406M | English | keywords-to-text |

| [`genius-base`](https://huggingface.co/beyond/genius-base) | 139M | English | smaller version |

| [`genius-base-ps`](https://huggingface.co/beyond/genius-base) | 139M | English | pre-trained both in paragraphs and short sentences |

| [`genius-base-chinese`](https://huggingface.co/beyond/genius-base-chinese) | 116M | 中文 | 在一千万纯净中文段落上预训练|

|

b2ef5692dd12757b03402d8a7bce21ee

|

sd-concepts-library/eastward

|

sd-concepts-library

| null | 20 | 0 | null | 3 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 2,162 | false |

### Eastward on Stable Diffusion

This is the `<eastward>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

0a2f80e8cbd7757ca3445d9f95583e43

|

BN87/sample

|

BN87

| null | 2 | 0 | null | 0 | null | false | false | false |

openrail

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 4,739 | false |

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

# Model Details

## Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

## Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

# Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

## Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

## Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

## Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

# Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

## Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

# Training Details

## Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

## Training Procedure [optional]

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

### Preprocessing

[More Information Needed]

### Speeds, Sizes, Times

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

# Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

## Testing Data, Factors & Metrics

### Testing Data

<!-- This should link to a Data Card if possible. -->

[More Information Needed]

### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

## Results

[More Information Needed]

### Summary

# Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

# Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

# Technical Specifications [optional]

## Model Architecture and Objective

[More Information Needed]

## Compute Infrastructure

[More Information Needed]

### Hardware

[More Information Needed]

### Software

[More Information Needed]

# Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

# Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

# More Information [optional]

[More Information Needed]

# Model Card Authors [optional]

[More Information Needed]

# Model Card Contact

[More Information Needed]

# How to Get Started with the Model

Use the code below to get started with the model.

<details>

<summary> Click to expand </summary>

[More Information Needed]

</details>

|

8c16c88e1125fe4658d8e75ce6d9fbea

|

jonatasgrosman/exp_w2v2t_pl_vp-es_s840

|

jonatasgrosman

|

wav2vec2

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['pl']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'pl']

| false | true | true | 469 | false |

# exp_w2v2t_pl_vp-es_s840

Fine-tuned [facebook/wav2vec2-large-es-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-es-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (pl)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

a9dd3fdf9afbf47f3ee7335bfc80d10b

|

kyo/distilbert-base-uncased-finetuned-imdb

|

kyo

|

distilbert

| 12 | 4 |

transformers

| 0 |

fill-mask

| true | false | false |

apache-2.0

| null |

['imdb']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,319 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 2.4718

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.707 | 1.0 | 157 | 2.4883 |

| 2.572 | 2.0 | 314 | 2.4240 |

| 2.5377 | 3.0 | 471 | 2.4355 |

### Framework versions

- Transformers 4.12.5

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

b53e956660a729299fcb42621c1a188f

|

eduardopds/mt5-small-finetuned-amazon-en-es

|

eduardopds

|

mt5

| 8 | 1 |

transformers

| 0 |

text2text-generation

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,651 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# eduardopds/mt5-small-finetuned-amazon-en-es

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 4.0870

- Validation Loss: 3.3925

- Epoch: 7

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5.6e-05, 'decay_steps': 9672, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 9.8646 | 4.3778 | 0 |

| 5.9307 | 3.8057 | 1 |

| 5.1494 | 3.6458 | 2 |

| 4.7430 | 3.5501 | 3 |

| 4.4782 | 3.4870 | 4 |

| 4.2922 | 3.4339 | 5 |

| 4.1536 | 3.4037 | 6 |

| 4.0870 | 3.3925 | 7 |

### Framework versions

- Transformers 4.18.0

- TensorFlow 2.8.0

- Datasets 2.2.1

- Tokenizers 0.12.1

|

a5a12122524bd20a37dbe9b117f8141b

|

huiziy/my_awesome_qa_model

|

huiziy

|

distilbert

| 12 | 1 |

transformers

| 0 |

question-answering

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,279 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_awesome_qa_model

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an High School Health Science dataset.

It achieves the following results on the evaluation set:

- Loss: 5.2683

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 3 | 5.6569 |

| No log | 2.0 | 6 | 5.3967 |

| No log | 3.0 | 9 | 5.2683 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

dafdc56c0ad3508daadc75bc4cc28d1c

|

KoichiYasuoka/roberta-base-vietnamese-ud-goeswith

|

KoichiYasuoka

|

roberta

| 10 | 4 |

transformers

| 0 |

token-classification

| true | false | false |

cc-by-sa-4.0

|

['vi']

|

['universal_dependencies']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['vietnamese', 'token-classification', 'pos', 'dependency-parsing']

| false | true | true | 2,759 | false |

# roberta-base-vietnamese-ud-goeswith

## Model Description

This is a RoBERTa model pre-trained on Vietnamese texts for POS-tagging and dependency-parsing (using `goeswith` for subwords), derived from [roberta-base-vietnamese-upos](https://huggingface.co/KoichiYasuoka/roberta-base-vietnamese-upos).

## How to Use

```py

class UDgoeswith(object):

def __init__(self,bert):

from transformers import AutoTokenizer,AutoModelForTokenClassification

self.tokenizer=AutoTokenizer.from_pretrained(bert)

self.model=AutoModelForTokenClassification.from_pretrained(bert)

def __call__(self,text):

import numpy,torch,ufal.chu_liu_edmonds

w=self.tokenizer(text,return_offsets_mapping=True)

v=w["input_ids"]

x=[v[0:i]+[self.tokenizer.mask_token_id]+v[i+1:]+[j] for i,j in enumerate(v[1:-1],1)]

with torch.no_grad():

e=self.model(input_ids=torch.tensor(x)).logits.numpy()[:,1:-2,:]

r=[1 if i==0 else -1 if j.endswith("|root") else 0 for i,j in sorted(self.model.config.id2label.items())]

e+=numpy.where(numpy.add.outer(numpy.identity(e.shape[0]),r)==0,0,numpy.nan)

g=self.model.config.label2id["X|_|goeswith"]

r=numpy.tri(e.shape[0])

for i in range(e.shape[0]):

for j in range(i+2,e.shape[1]):

r[i,j]=r[i,j-1] if numpy.nanargmax(e[i,j-1])==g else 1

e[:,:,g]+=numpy.where(r==0,0,numpy.nan)

m=numpy.full((e.shape[0]+1,e.shape[1]+1),numpy.nan)

m[1:,1:]=numpy.nanmax(e,axis=2).transpose()

p=numpy.zeros(m.shape)

p[1:,1:]=numpy.nanargmax(e,axis=2).transpose()

for i in range(1,m.shape[0]):

m[i,0],m[i,i],p[i,0]=m[i,i],numpy.nan,p[i,i]

h=ufal.chu_liu_edmonds.chu_liu_edmonds(m)[0]

if [0 for i in h if i==0]!=[0]:

m[:,0]+=numpy.where(m[:,0]==numpy.nanmax(m[[i for i,j in enumerate(h) if j==0],0]),0,numpy.nan)

m[[i for i,j in enumerate(h) if j==0]]+=[0 if i==0 or j==0 else numpy.nan for i,j in enumerate(h)]

h=ufal.chu_liu_edmonds.chu_liu_edmonds(m)[0]

u="# text = "+text+"\n"

v=[(s,e) for s,e in w["offset_mapping"] if s<e]

for i,(s,e) in enumerate(v,1):

q=self.model.config.id2label[p[i,h[i]]].split("|")

u+="\t".join([str(i),text[s:e],"_",q[0],"_","|".join(q[1:-1]),str(h[i]),q[-1],"_","_" if i<len(v) and e<v[i][0] else "SpaceAfter=No"])+"\n"

return u+"\n"

nlp=UDgoeswith("KoichiYasuoka/roberta-base-vietnamese-ud-goeswith")

print(nlp("Hai cái đầu thì tốt hơn một."))

```

with [ufal.chu-liu-edmonds](https://pypi.org/project/ufal.chu-liu-edmonds/).

Or without ufal.chu-liu-edmonds:

```

from transformers import pipeline

nlp=pipeline("universal-dependencies","KoichiYasuoka/roberta-base-vietnamese-ud-goeswith",trust_remote_code=True,aggregation_strategy="simple")

print(nlp("Hai cái đầu thì tốt hơn một."))

```

|

62a24c63e77528313450ebf9ce8c82f3

|

IDEA-CCNL/Erlangshen-TCBert-1.3B-Classification-Chinese

|

IDEA-CCNL

|

bert

| 5 | 34 |

transformers

| 1 | null | true | false | false |

apache-2.0

|

['zh']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['classification']

| false | true | true | 8,644 | false |

# Erlangshen-TCBert-1.3B-Classification-Chinese

- Github: [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM)

- Docs: [Fengshenbang-Docs](https://fengshenbang-doc.readthedocs.io/)

## 简介 Brief Introduction

1.3BM参数的Topic Classification BERT (TCBert)。

The TCBert with 1.3BM parameters is pre-trained for, not limited to, Chinese topic classification tasks.

## 模型分类 Model Taxonomy

| 需求 Demand | 任务 Task | 系列 Series | 模型 Model | 参数 Parameter | 额外 Extra |

| :----: | :----: | :----: | :----: | :----: | :----: |

| 通用 General | 自然语言理解 NLU | 二郎神 Erlangshen | TCBert | 1.3BM | Chinese |

## 模型信息 Model Information

为了提高模型在话题分类上的效果,我们收集了大量话题分类数据进行基于prompts的预训练。

To improve the model performance on the topic classification task, we collected numerous topic classification datasets for pre-training based on general prompts.

### 下游效果 Performance

我们为每个数据集设计了两个prompt模板。

We customize two prompts templates for each dataset.

第一个prompt模板:

For ***prompt template 1***:

| Dataset | Prompt template 1 |

|---------|:------------------------:|

| TNEWS | 下面是一则关于__的新闻: |

| CSLDCP | 这一句描述__的内容如下: |

| IFLYTEK | 这一句描述__的内容如下: |

第一个prompt模板的微调实验结果:

The **fine-tuning** results for prompt template 1:

| Model | TNEWS | CLSDCP | IFLYTEK |

|-----------------|:------:|:------:|:-------:|

| Macbert-base | 55.02 | 57.37 | 51.34 |

| Macbert-large | 55.77 | 58.99 | 50.31 |

| Erlangshen-1.3B | 57.36 | 62.35 | 53.23 |

| TCBert-base<sub>110M-Classification-Chinese | 55.57 | 58.60 | 49.63 |

| TCBert-large<sub>330M-Classification-Chinese | 56.17 | 60.06 | 51.34 |

| TCBert-1.3B<sub>1.3B-Classification-Chinese | 57.41 | 65.10 | 53.75 |

| TCBert-base<sub>110M-Sentence-Embedding-Chinese | 54.68 | 59.78 | 49.40 |

| TCBert-large<sub>330M-Sentence-Embedding-Chinese | 55.32 | 62.07 | 51.11 |

| TCBert-1.3B<sub>1.3B-Sentence-Embedding-Chinese | 57.46 | 65.04 | 53.06 |

第一个prompt模板的句子相似度结果:

The **sentence similarity** results for prompt template 1:

| | TNEWS | | CSLDCP | | IFLYTEK | |

|-----------------|:--------:|:---------:|:---------:|:---------:|:---------:|:---------:|

| Model | referece | whitening | reference | whitening | reference | whitening |

| Macbert-base | 43.53 | 47.16 | 33.50 | 36.53 | 28.99 | 33.85 |

| Macbert-large | 46.17 | 49.35 | 37.65 | 39.38 | 32.36 | 35.33 |

| Erlangshen-1.3B | 45.72 | 49.60 | 40.56 | 44.26 | 29.33 | 36.48 |

| TCBert-base<sub>110M-Classification-Chinese | 48.61 | 51.99 | 43.31 | 45.15 | 33.45 | 37.28 |

| TCBert-large<sub>330M-Classification-Chinese | 50.50 | 52.79 | 52.89 | 53.89 | 34.93 | 38.31 |

| TCBert-1.3B<sub>1.3B-Classification-Chinese | 50.80 | 51.59 | 51.93 | 54.12 | 33.96 | 38.08 |

| TCBert-base<sub>110M-Sentence-Embedding-Chinese | 45.82 | 47.06 | 42.91 | 43.87 | 33.28 | 34.76 |

| TCBert-large<sub>330M-Sentence-Embedding-Chinese | 50.10 | 50.90 | 53.78 | 53.33 | 37.62 | 36.94 |

| TCBert-1.3B<sub>1.3B-Sentence-Embedding-Chinese | 50.70 | 53.48 | 52.66 | 54.40 | 36.88 | 38.48 |

第二个prompt模板:

For ***prompt template 2***:

| Dataset | Prompt template 2 |

|---------|:------------------------:|

| TNEWS | 接下来的新闻,是跟__相关的内容: |

| CSLDCP | 接下来的学科,是跟__相关: |

| IFLYTEK | 接下来的生活内容,是跟__相关: |

第二个prompt模板的微调结果:

The **fine-tuning** results for prompt template 2:

| Model | TNEWS | CLSDCP | IFLYTEK |

|-----------------|:------:|:------:|:-------:|

| Macbert-base | 54.78 | 58.38 | 50.83 |

| Macbert-large | 56.77 | 60.22 | 51.63 |

| Erlangshen-1.3B | 57.81 | 62.80 | 52.77 |

| TCBert-base<sub>110M-Classification-Chinese | 54.58 | 59.16 | 49.80 |

| TCBert-large<sub>330M-Classification-Chinese | 56.22 | 61.23 | 50.77 |

| TCBert-1.3B<sub>1.3B-Classification-Chinese | 57.41 | 64.82 | 53.34 |

| TCBert-base<sub>110M-Sentence-Embedding-Chinese | 54.68 | 59.78 | 49.40 |

| TCBert-large<sub>330M-Sentence-Embedding-Chinese | 55.32 | 62.07 | 51.11 |

| TCBert-1.3B<sub>1.3B-Sentence-Embedding-Chinese | 56.87 | 65.83 | 52.94 |

第二个prompt模板的句子相似度结果:

The **sentence similarity** results for prompt template 2:

| | TNEWS | | CSLDCP | | IFLYTEK | |

|-----------------|:--------:|:---------:|:---------:|:---------:|:---------:|:---------:|

| Model | referece | whitening | reference | whitening | reference | whitening |

| Macbert-base | 42.29 | 45.22 | 34.23 | 37.48 | 29.62 | 34.13 |

| Macbert-large | 46.22 | 49.60 | 40.11 | 44.26 | 32.36 | 35.16 |

| Erlangshen-1.3B | 46.17 | 49.10 | 40.45 | 45.88 | 30.36 | 36.88 |

| TCBert-base<sub>110M-Classification-Chinese | 48.31 | 51.34 | 43.42 | 45.27 | 33.10 | 36.19 |

| TCBert-large<sub>330M-Classification-Chinese | 51.19 | 51.69 | 52.55 | 53.28 | 34.31 | 37.45 |

| TCBert-1.3B<sub>1.3B-Classification-Chinese | 52.14 | 52.39 | 51.71 | 53.89 | 33.62 | 38.14 |

| TCBert-base<sub>110M-Sentence-Embedding-Chinese | 46.72 | 48.86 | 43.19 | 43.53 | 34.08 | 35.79 |

| TCBert-large<sub>330M-Sentence-Embedding-Chinese | 50.65 | 51.94 | 53.84 | 53.67 | 37.74 | 36.65 |

| TCBert-1.3B<sub>1.3B-Sentence-Embedding-Chinese | 50.75 | 54.78 | 51.43 | 54.34 | 36.48 | 38.36 |

更多关于TCBERTs的细节,请参考我们的技术报告。基于新的数据,我们会更新TCBERTs,请留意我们仓库的更新。

For more details about TCBERTs, please refer to our paper. We may regularly update TCBERTs upon new coming data, please keep an eye on the repo!

## 使用 Usage

### 使用示例 Usage Examples

```python

# Prompt-based MLM fine-tuning

from transformers import BertForMaskedLM, BertTokenizer

import torch

# Loading models

tokenizer=BertTokenizer.from_pretrained("IDEA-CCNL/Erlangshen-TCBert-1.3B-Classification-Chinese")

model=BertForMaskedLM.from_pretrained("IDEA-CCNL/Erlangshen-TCBert-1.3B-Classification-Chinese")

# Prepare the data

inputs = tokenizer("下面是一则关于[MASK][MASK]的新闻:怎样的房子才算户型方正?", return_tensors="pt")

labels = tokenizer("下面是一则关于房产的新闻:怎样的房子才算户型方正?", return_tensors="pt")["input_ids"]