| repo_id (string, length 4 to 110) | author (string, length 2 to 27, nullable) | model_type (string, length 2 to 29, nullable) | files_per_repo (int64, 2 to 15.4k) | downloads_30d (int64, 0 to 19.9M) | library (string, length 2 to 37, nullable) | likes (int64, 0 to 4.34k) | pipeline (string, length 5 to 30, nullable) | pytorch (bool, 2 classes) | tensorflow (bool, 2 classes) | jax (bool, 2 classes) | license (string, length 2 to 30) | languages (string, length 4 to 1.63k, nullable) | datasets (string, length 2 to 2.58k, nullable) | co2 (string, 29 classes) | prs_count (int64, 0 to 125) | prs_open (int64, 0 to 120) | prs_merged (int64, 0 to 15) | prs_closed (int64, 0 to 28) | discussions_count (int64, 0 to 218) | discussions_open (int64, 0 to 148) | discussions_closed (int64, 0 to 70) | tags (string, length 2 to 513) | has_model_index (bool, 2 classes) | has_metadata (bool, 1 class) | has_text (bool, 1 class) | text_length (int64, 401 to 598k) | is_nc (bool, 1 class) | readme (string, length 0 to 598k) | hash (string, length 32) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

spacy/en_core_web_trf

|

spacy

| null | 26 | 699 |

spacy

| 10 |

token-classification

| false | false | false |

mit

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['spacy', 'token-classification']

| false | true | true | 2,830 | false |

### Details: https://spacy.io/models/en#en_core_web_trf

English transformer pipeline (roberta-base). Components: transformer, tagger, parser, ner, attribute_ruler, lemmatizer.

| Feature | Description |

| --- | --- |

| **Name** | `en_core_web_trf` |

| **Version** | `3.5.0` |

| **spaCy** | `>=3.5.0,<3.6.0` |

| **Default Pipeline** | `transformer`, `tagger`, `parser`, `attribute_ruler`, `lemmatizer`, `ner` |

| **Components** | `transformer`, `tagger`, `parser`, `attribute_ruler`, `lemmatizer`, `ner` |

| **Vectors** | 0 keys, 0 unique vectors (0 dimensions) |

| **Sources** | [OntoNotes 5](https://catalog.ldc.upenn.edu/LDC2013T19) (Ralph Weischedel, Martha Palmer, Mitchell Marcus, Eduard Hovy, Sameer Pradhan, Lance Ramshaw, Nianwen Xue, Ann Taylor, Jeff Kaufman, Michelle Franchini, Mohammed El-Bachouti, Robert Belvin, Ann Houston)<br />[ClearNLP Constituent-to-Dependency Conversion](https://github.com/clir/clearnlp-guidelines/blob/master/md/components/dependency_conversion.md) (Emory University)<br />[WordNet 3.0](https://wordnet.princeton.edu/) (Princeton University)<br />[roberta-base](https://github.com/pytorch/fairseq/tree/master/examples/roberta) (Yinhan Liu and Myle Ott and Naman Goyal and Jingfei Du and Mandar Joshi and Danqi Chen and Omer Levy and Mike Lewis and Luke Zettlemoyer and Veselin Stoyanov) |

| **License** | `MIT` |

| **Author** | [Explosion](https://explosion.ai) |

### Label Scheme

<details>

<summary>View label scheme (112 labels for 3 components)</summary>

| Component | Labels |

| --- | --- |

| **`tagger`** | `$`, `''`, `,`, `-LRB-`, `-RRB-`, `.`, `:`, `ADD`, `AFX`, `CC`, `CD`, `DT`, `EX`, `FW`, `HYPH`, `IN`, `JJ`, `JJR`, `JJS`, `LS`, `MD`, `NFP`, `NN`, `NNP`, `NNPS`, `NNS`, `PDT`, `POS`, `PRP`, `PRP$`, `RB`, `RBR`, `RBS`, `RP`, `SYM`, `TO`, `UH`, `VB`, `VBD`, `VBG`, `VBN`, `VBP`, `VBZ`, `WDT`, `WP`, `WP$`, `WRB`, `XX`, ```` |

| **`parser`** | `ROOT`, `acl`, `acomp`, `advcl`, `advmod`, `agent`, `amod`, `appos`, `attr`, `aux`, `auxpass`, `case`, `cc`, `ccomp`, `compound`, `conj`, `csubj`, `csubjpass`, `dative`, `dep`, `det`, `dobj`, `expl`, `intj`, `mark`, `meta`, `neg`, `nmod`, `npadvmod`, `nsubj`, `nsubjpass`, `nummod`, `oprd`, `parataxis`, `pcomp`, `pobj`, `poss`, `preconj`, `predet`, `prep`, `prt`, `punct`, `quantmod`, `relcl`, `xcomp` |

| **`ner`** | `CARDINAL`, `DATE`, `EVENT`, `FAC`, `GPE`, `LANGUAGE`, `LAW`, `LOC`, `MONEY`, `NORP`, `ORDINAL`, `ORG`, `PERCENT`, `PERSON`, `PRODUCT`, `QUANTITY`, `TIME`, `WORK_OF_ART` |

</details>

### Accuracy

| Type | Score |

| --- | --- |

| `TOKEN_ACC` | 99.86 |

| `TOKEN_P` | 99.57 |

| `TOKEN_R` | 99.58 |

| `TOKEN_F` | 99.57 |

| `TAG_ACC` | 97.79 |

| `SENTS_P` | 95.04 |

| `SENTS_R` | 84.92 |

| `SENTS_F` | 89.69 |

| `DEP_UAS` | 95.27 |

| `DEP_LAS` | 93.95 |

| `ENTS_P` | 89.78 |

| `ENTS_R` | 90.49 |

| `ENTS_F` | 90.13 |
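The `*_F` rows are the harmonic mean of the matching precision and recall rows. The sketch below re-derives two of them from the table; the helper name `f_score` is illustrative and not part of spaCy, and because the table values are already rounded, the last digit may differ slightly.

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall values copied from the accuracy table above.
ents_f = f_score(89.78, 90.49)
sents_f = f_score(95.04, 84.92)
print(f"ENTS_F ~ {ents_f:.2f}, SENTS_F ~ {sents_f:.2f}")
```

The large gap between `SENTS_P` and `SENTS_R` is what pulls `SENTS_F` well below the precision value.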

|

d3bb3f6ccc7732cce4ce4544f36046db

|

netsvetaev/netsvetaev-black

|

netsvetaev

| null | 12 | 0 | null | 0 |

text-to-image

| false | false | false |

mit

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['diffusion', 'netsvetaev', 'dreambooth', 'stable-diffusion', 'text-to-image']

| false | true | true | 1,885 | false |

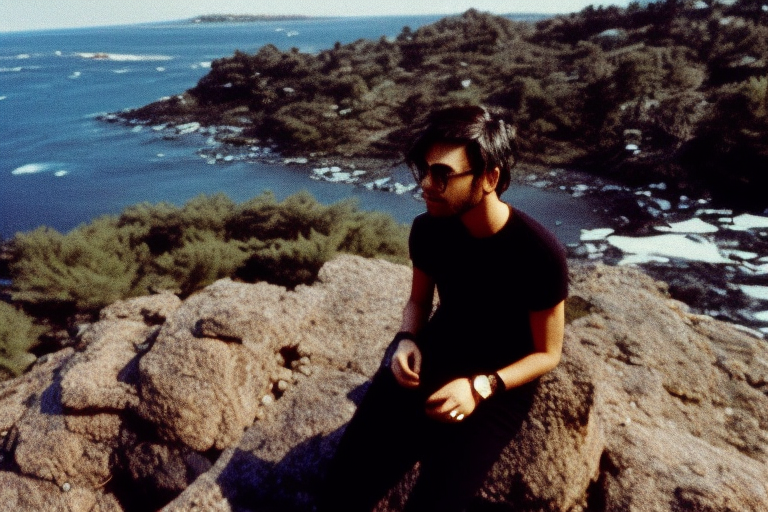

Hello!

This model is based on my paintings on a black background and on SD 1.5. This is the second one, trained with 29 images for 2900 steps.

The token is «netsvetaev black style».

Best suited for: abstract seamless patterns, images similar to my original paintings with blue triangles, and large objects like «cat face» or «girl face».

It works well with landscape orientation and embiggen.

It is released under the MIT license, so you can use it for free.

Best used with Invoke AI: https://github.com/invoke-ai/InvokeAI (The examples below contain metadata for it)

________________________

Artur Netsvetaev, 2022

https://netsvetaev.com

|

095ceb5e80f95d76f1b4a8cb782848d7

|

flood/distilbert-base-uncased-finetuned-clinc

|

flood

|

distilbert

| 10 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['clinc_oos']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,481 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-clinc

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the clinc_oos dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7793

- Accuracy: 0.9161

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5
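To make the `linear` scheduler setting concrete, here is a minimal sketch of a linear decay from the listed learning rate down to zero. It assumes no warmup (none is listed) and a total of 1590 optimizer steps, the final step count in the training results table; `linear_lr` is an illustrative helper, not a Transformers function.

```python
def linear_lr(step: int, base_lr: float = 2e-5, total_steps: int = 1590) -> float:
    """Learning rate after `step` optimizer steps under linear decay to zero."""
    remaining = max(0, total_steps - step)
    return base_lr * remaining / total_steps


# Learning rate at the end of each epoch (318 steps per epoch in this run).
for epoch in range(6):
    print(epoch, linear_lr(epoch * 318))
```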

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 4.2926 | 1.0 | 318 | 3.2834 | 0.7374 |

| 2.6259 | 2.0 | 636 | 1.8736 | 0.8303 |

| 1.5511 | 3.0 | 954 | 1.1612 | 0.8913 |

| 1.0185 | 4.0 | 1272 | 0.8625 | 0.91 |

| 0.8046 | 5.0 | 1590 | 0.7793 | 0.9161 |

### Framework versions

- Transformers 4.19.3

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

5486ae3503f9737683e6ae1ef1399064

|

ychu4/distilbert-base-uncased-finetuned-cola

|

ychu4

|

distilbert

| 10 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['glue']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,571 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7512

- Matthews Correlation: 0.5097
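For readers unfamiliar with the metric, the Matthews correlation coefficient for a binary task can be computed from the confusion-matrix counts. This is a minimal sketch, with `mcc` as an illustrative helper name; it returns 0 when the denominator is zero, which is a common convention and an assumption here.

```python
import math


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient from binary confusion counts."""
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Convention (assumed): report 0 when any marginal count is zero.
    return numerator / denominator if denominator else 0.0


print(mcc(tp=50, tn=50, fp=0, fn=0))    # perfect agreement
print(mcc(tp=25, tn=25, fp=25, fn=25))  # no better than chance
```

The value ranges from -1 (total disagreement) through 0 (chance level) to 1 (perfect prediction).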

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.5237 | 1.0 | 535 | 0.5117 | 0.4469 |

| 0.3496 | 2.0 | 1070 | 0.5538 | 0.4965 |

| 0.2377 | 3.0 | 1605 | 0.6350 | 0.4963 |

| 0.1767 | 4.0 | 2140 | 0.7512 | 0.5097 |

| 0.1383 | 5.0 | 2675 | 0.8647 | 0.5056 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.8.1+cu102

- Datasets 1.15.1

- Tokenizers 0.10.1

|

b9b875b63ab2df93af7c92c6910bbb29

|

Helsinki-NLP/opus-mt-de-hr

|

Helsinki-NLP

|

marian

| 10 | 102 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 770 | false |

### opus-mt-de-hr

* source languages: de

* target languages: hr

* OPUS readme: [de-hr](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/de-hr/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-26.zip](https://object.pouta.csc.fi/OPUS-MT-models/de-hr/opus-2020-01-26.zip)

* test set translations: [opus-2020-01-26.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/de-hr/opus-2020-01-26.test.txt)

* test set scores: [opus-2020-01-26.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/de-hr/opus-2020-01-26.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.de.hr | 42.6 | 0.643 |

|

d0c270513d89d9b266baea2a48da2e0e

|

lizaboiarchuk/bert-tiny-oa-finetuned

|

lizaboiarchuk

|

bert

| 8 | 2 |

transformers

| 0 |

fill-mask

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,823 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# lizaboiarchuk/bert-tiny-oa-finetuned

This model is a fine-tuned version of [prajjwal1/bert-tiny](https://huggingface.co/prajjwal1/bert-tiny) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 4.0626

- Validation Loss: 3.7514

- Epoch: 4

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -525, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 4.6311 | 4.1088 | 0 |

| 4.2579 | 3.7859 | 1 |

| 4.0635 | 3.7253 | 2 |

| 4.0658 | 3.6842 | 3 |

| 4.0626 | 3.7514 | 4 |

### Framework versions

- Transformers 4.22.1

- TensorFlow 2.8.2

- Tokenizers 0.12.1

|

31a99ed4917ff2e63d6cc3366110de3c

|

google/t5-efficient-large-nh12

|

google

|

t5

| 12 | 7 |

transformers

| 0 |

text2text-generation

| true | true | true |

apache-2.0

|

['en']

|

['c4']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['deep-narrow']

| false | true | true | 6,258 | false |

# T5-Efficient-LARGE-NH12 (Deep-Narrow version)

T5-Efficient-LARGE-NH12 is a variation of [Google's original T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) following the [T5 model architecture](https://huggingface.co/docs/transformers/model_doc/t5).

It is a *pretrained-only* checkpoint and was released with the

paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)**

by *Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler*.

In a nutshell, the paper indicates that a **Deep-Narrow** model architecture is favorable for **downstream** performance compared to other model architectures

of similar parameter count.

To quote the paper:

> We generally recommend a DeepNarrow strategy where the model’s depth is preferentially increased

> before considering any other forms of uniform scaling across other dimensions. This is largely due to

> how much depth influences the Pareto-frontier as shown in earlier sections of the paper. Specifically, a

> tall small (deep and narrow) model is generally more efficient compared to the base model. Likewise,

> a tall base model might also generally more efficient compared to a large model. We generally find

> that, regardless of size, even if absolute performance might increase as we continue to stack layers,

> the relative gain of Pareto-efficiency diminishes as we increase the layers, converging at 32 to 36

> layers. Finally, we note that our notion of efficiency here relates to any one compute dimension, i.e.,

> params, FLOPs or throughput (speed). We report all three key efficiency metrics (number of params,

> FLOPS and speed) and leave this decision to the practitioner to decide which compute dimension to

> consider.

To be more precise, *model depth* is defined as the number of transformer blocks that are stacked sequentially.

A sequence of word embeddings is therefore processed sequentially by each transformer block.

## Model architecture details

This model checkpoint - **t5-efficient-large-nh12** - is of model type **Large** with the following variations:

- **nh** is **12**

It has **662.23** million parameters and thus requires *ca.* **2648.91 MB** of memory in full precision (*fp32*)

or **1324.45 MB** of memory in half precision (*fp16* or *bf16*).
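The memory figures follow directly from the parameter count: 4 bytes per parameter in fp32 and 2 bytes in fp16 or bf16. A minimal sketch of that arithmetic, assuming 1 MB = 10^6 bytes and using the rounded parameter count from the text (so the last digit can differ from the quoted figures); `weight_memory_mb` is an illustrative helper.

```python
def weight_memory_mb(n_params_millions: float, bytes_per_param: int) -> float:
    """Approximate storage for the weights alone, in MB (1 MB = 10**6 bytes)."""
    return n_params_millions * bytes_per_param


fp32_mb = weight_memory_mb(662.23, 4)  # full precision
fp16_mb = weight_memory_mb(662.23, 2)  # half precision (fp16 or bf16)
print(f"fp32: {fp32_mb:.2f} MB, fp16/bf16: {fp16_mb:.2f} MB")
```

This covers only the weights; activations, gradients, and optimizer state during fine-tuning need additional memory.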

A summary of the *original* T5 model architectures can be seen here:

| Model | nl (el/dl) | ff | dm | kv | nh | #Params|

| ----| ---- | ---- | ---- | ---- | ---- | ----|

| Tiny | 4/4 | 1024 | 256 | 32 | 4 | 16M|

| Mini | 4/4 | 1536 | 384 | 32 | 8 | 31M|

| Small | 6/6 | 2048 | 512 | 32 | 8 | 60M|

| Base | 12/12 | 3072 | 768 | 64 | 12 | 220M|

| Large | 24/24 | 4096 | 1024 | 64 | 16 | 738M|

| Xl | 24/24 | 16384 | 1024 | 128 | 32 | 3B|

| XXl | 24/24 | 65536 | 1024 | 128 | 128 | 11B|

whereas the following abbreviations are used:

| Abbreviation | Definition |

| ----| ---- |

| nl | Number of transformer blocks (depth) |

| dm | Dimension of embedding vector (output vector of transformers block) |

| kv | Dimension of key/value projection matrix |

| nh | Number of attention heads |

| ff | Dimension of intermediate vector within transformer block (size of feed-forward projection matrix) |

| el | Number of transformer blocks in the encoder (encoder depth) |

| dl | Number of transformer blocks in the decoder (decoder depth) |

| sh | Signifies that attention heads are shared |

| skv | Signifies that key-values projection matrices are tied |

If a model checkpoint has no specific *el* or *dl*, then both the number of encoder layers and the number of decoder layers correspond to *nl*.

## Pre-Training

The checkpoint was pretrained on the [Colossal, Cleaned version of Common Crawl (C4)](https://huggingface.co/datasets/c4) for 524288 steps using

the span-based masked language modeling (MLM) objective.

## Fine-Tuning

**Note**: This model is a **pretrained** checkpoint and has to be fine-tuned for practical usage.

The checkpoint was pretrained in English and is therefore only useful for English NLP tasks.

You can follow one of the following examples on how to fine-tune the model:

*PyTorch*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization)

- [Question Answering](https://github.com/huggingface/transformers/blob/master/examples/pytorch/question-answering/run_seq2seq_qa.py)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/pytorch/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*Tensorflow*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*JAX/Flax*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/flax/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/flax/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

## Downstream Performance

TODO: Add table if available

## Computational Complexity

TODO: Add table if available

## More information

We strongly recommend that the reader go carefully through the original paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** to get a more nuanced understanding of this model checkpoint.

As explained in the following [issue](https://github.com/google-research/google-research/issues/986#issuecomment-1035051145), checkpoints including the *sh* or *skv*

model architecture variations have *not* been ported to Transformers as they are probably of limited practical usage and are lacking a more detailed description. Those checkpoints are kept [here](https://huggingface.co/NewT5SharedHeadsSharedKeyValues) as they might be ported potentially in the future.

|

4ec890fd73f0c992b43a7913e18eeb2b

|

sd-concepts-library/female-kpop-singer

|

sd-concepts-library

| null | 10 | 0 | null | 5 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,436 | false |

### female kpop singer on Stable Diffusion

This is the `<female-kpop-star>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

A simple test model I made with images of Choerry, Hwasa, and Nancy; the last 2 images are of Hyuna.

Placeholder token: <female-kpop-star>

Initializer token: musician

Here is the new concept you will be able to use as an `object`:

Feel free to modify / further train this model without credit.

|

98fd16d131ba13128ff02d5ae5397111

|

cahya/whisper-large-id

|

cahya

|

whisper

| 27 | 32 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['id']

|

['mozilla-foundation/common_voice_11_0', 'magic_data', 'TITML']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['whisper-event', 'generated_from_trainer']

| true | true | true | 1,891 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Large Indonesian

This model is a fine-tuned version of [openai/whisper-large](https://huggingface.co/openai/whisper-large) on the mozilla-foundation/common_voice_11_0, magic_data, titml id dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2034

- Wer: 6.2483

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 12

- eval_batch_size: 12

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 10000

- mixed_precision_training: Native AMP
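The combination of `lr_scheduler_type: linear` with 500 warmup steps can be sketched as a linear ramp from zero to the peak learning rate, followed by a linear decay to zero at step 10000. This is a plain-Python illustration of that shape under those assumptions; `warmup_linear_lr` is a hypothetical helper, not a Transformers function.

```python
def warmup_linear_lr(
    step: int,
    base_lr: float = 1e-6,
    warmup_steps: int = 500,
    total_steps: int = 10000,
) -> float:
    """Linear warmup to `base_lr`, then linear decay to zero at `total_steps`."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 250, 500, 5250, 10000):
    print(step, warmup_linear_lr(step))
```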

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 0.1516 | 0.5 | 1000 | 0.1730 | 6.5664 |

| 0.1081 | 1.0 | 2000 | 0.1638 | 6.3682 |

| 0.0715 | 1.49 | 3000 | 0.1803 | 6.2713 |

| 0.1009 | 1.99 | 4000 | 0.1796 | 6.2667 |

| 0.0387 | 2.49 | 5000 | 0.2054 | 6.4927 |

| 0.0494 | 2.99 | 6000 | 0.2034 | 6.2483 |

| 0.0259 | 3.48 | 7000 | 0.2226 | 6.3497 |

| 0.0265 | 3.98 | 8000 | 0.2274 | 6.4004 |

| 0.0232 | 4.48 | 9000 | 0.2443 | 6.5618 |

| 0.015 | 4.98 | 10000 | 0.2413 | 6.4927 |
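The headline results (Loss 0.2034, Wer 6.2483) match the step-6000 row rather than the final step, which suggests the checkpoint with the lowest WER was the one reported. A minimal sketch of that selection over the rows of the table above (the tuple layout is an illustrative choice):

```python
# (step, validation_loss, wer) rows copied from the training results table.
rows = [
    (1000, 0.1730, 6.5664),
    (2000, 0.1638, 6.3682),
    (3000, 0.1803, 6.2713),
    (4000, 0.1796, 6.2667),
    (5000, 0.2054, 6.4927),
    (6000, 0.2034, 6.2483),
    (7000, 0.2226, 6.3497),
    (8000, 0.2274, 6.4004),
    (9000, 0.2443, 6.5618),
    (10000, 0.2413, 6.4927),
]

# Pick the evaluation step with the lowest word error rate.
best_step, best_loss, best_wer = min(rows, key=lambda row: row[2])
print(best_step, best_loss, best_wer)
```

Note that validation loss is lowest much earlier (step 2000), so selecting by loss instead of WER would pick a different checkpoint.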

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

|

74b8270fc623df3b7fea9f8ea5a476cf

|

sd-concepts-library/gram-tops

|

sd-concepts-library

| null | 12 | 0 | null | 0 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,338 | false |

### gram-tops on Stable Diffusion

This is the `<gram-tops>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

af6f1a1fddfc382747711f1fd157184a

|

AndyChiang/cdgp-csg-bart-cloth

|

AndyChiang

|

bart

| 9 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

mit

|

['en']

|

['cloth']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['bart', 'cloze', 'distractor', 'generation']

| false | true | true | 3,710 | false |

# cdgp-csg-bart-cloth

## Model description

This model is a Candidate Set Generator in **"CDGP: Automatic Cloze Distractor Generation based on Pre-trained Language Model", Findings of EMNLP 2022**.

Its inputs are the stem and the answer, and its output is a candidate set of distractors. It is fine-tuned on the [**CLOTH**](https://www.cs.cmu.edu/~glai1/data/cloth/) dataset, starting from the [**facebook/bart-base**](https://huggingface.co/facebook/bart-base) model.

For more details, you can see our **paper** or [**GitHub**](https://github.com/AndyChiangSH/CDGP).

## How to use?

1. Download the model with Hugging Face Transformers.

```python

from transformers import BartTokenizer, BartForConditionalGeneration, pipeline

tokenizer = BartTokenizer.from_pretrained("AndyChiang/cdgp-csg-bart-cloth")

csg_model = BartForConditionalGeneration.from_pretrained("AndyChiang/cdgp-csg-bart-cloth")

```

2. Create an unmasker.

```python

unmasker = pipeline("fill-mask", tokenizer=tokenizer, model=csg_model, top_k=10)

```

3. Use the unmasker to generate the candidate set of distractors.

```python

sent = "I feel <mask> now. </s> happy"

cs = unmasker(sent)

print(cs)

```
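The fill-mask pipeline returns a list of dictionaries, each with a `token_str` and a `score`. As a sketch of how the candidate set might be post-processed, the hypothetical helper below strips whitespace from the predicted tokens and drops the answer itself. This filtering step is an assumption for illustration, not necessarily what CDGP does, and the predictions shown are placeholders, not real model output.

```python
def candidate_words(predictions, answer):
    """Return predicted tokens in ranked order, without the answer itself."""
    words = []
    for pred in predictions:
        word = pred["token_str"].strip()
        if word.lower() != answer.lower() and word not in words:
            words.append(word)
    return words


# Placeholder predictions with made-up scores, NOT real model output.
mock_cs = [
    {"token_str": " happy", "score": 0.30},
    {"token_str": " sad", "score": 0.20},
    {"token_str": " tired", "score": 0.10},
]
print(candidate_words(mock_cs, "happy"))  # ['sad', 'tired']
```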

## Dataset

This model is fine-tuned on the [CLOTH](https://www.cs.cmu.edu/~glai1/data/cloth/) dataset, a collection of nearly 100,000 cloze questions from middle school and high school English exams. The details of the CLOTH dataset are shown below.

| Number of questions | Train | Valid | Test |

| ------------------- | ----- | ----- | ----- |

| **Middle school** | 22056 | 3273 | 3198 |

| **High school** | 54794 | 7794 | 8318 |

| **Total** | 76850 | 11067 | 11516 |

You can also use the [dataset](https://huggingface.co/datasets/AndyChiang/cloth) we have already cleaned.

## Training

We fine-tune the model with a special procedure called **"Answer-Relating Fine-Tune"**. More details are in our paper.

### Training hyperparameters

The following hyperparameters were used during training:

- Pre-train language model: [facebook/bart-base](https://huggingface.co/facebook/bart-base)

- Optimizer: adam

- Learning rate: 0.0001

- Max length of input: 64

- Batch size: 64

- Epoch: 1

- Device: NVIDIA® Tesla T4 in Google Colab

## Testing

The evaluation results of this model as a Candidate Set Generator in CDGP are as follows:

| P@1 | F1@3 | F1@10 | MRR | NDCG@10 |

| ----- | ----- | ----- | ----- | ------- |

| 14.20 | 11.07 | 11.37 | 24.29 | 31.74 |

## Other models

### Candidate Set Generator

| Models | CLOTH | DGen |

| ----------- | ----------------------------------------------------------------------------------- | -------------------------------------------------------------------------------- |

| **BERT** | [cdgp-csg-bert-cloth](https://huggingface.co/AndyChiang/cdgp-csg-bert-cloth) | [cdgp-csg-bert-dgen](https://huggingface.co/AndyChiang/cdgp-csg-bert-dgen) |

| **SciBERT** | [cdgp-csg-scibert-cloth](https://huggingface.co/AndyChiang/cdgp-csg-scibert-cloth) | [cdgp-csg-scibert-dgen](https://huggingface.co/AndyChiang/cdgp-csg-scibert-dgen) |

| **RoBERTa** | [cdgp-csg-roberta-cloth](https://huggingface.co/AndyChiang/cdgp-csg-roberta-cloth) | [cdgp-csg-roberta-dgen](https://huggingface.co/AndyChiang/cdgp-csg-roberta-dgen) |

| **BART** | [*cdgp-csg-bart-cloth*](https://huggingface.co/AndyChiang/cdgp-csg-bart-cloth) | [cdgp-csg-bart-dgen](https://huggingface.co/AndyChiang/cdgp-csg-bart-dgen) |

### Distractor Selector

**fastText**: [cdgp-ds-fasttext](https://huggingface.co/AndyChiang/cdgp-ds-fasttext)

## Citation

None

|

538cd8db3aa5cc4b6e121358f763768b

|

deepiit98/Pub-clustered

|

deepiit98

|

distilbert

| 8 | 10 |

transformers

| 0 |

question-answering

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,855 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# deepiit98/Pub-clustered

This model is a fine-tuned version of [nandysoham16/16-clustered_aug](https://huggingface.co/nandysoham16/16-clustered_aug) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.3787

- Train End Logits Accuracy: 0.8715

- Train Start Logits Accuracy: 0.8924

- Validation Loss: 0.1505

- Validation End Logits Accuracy: 1.0

- Validation Start Logits Accuracy: 0.9231

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 18, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32
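The `PolynomialDecay` entry in the optimizer config describes a learning rate that falls from 2e-05 to 0.0 over 18 steps; with `power` set to 1.0 this is a straight line. A plain-Python sketch of that schedule, assuming the non-cycling form in which the step is clamped at `decay_steps` (the function name is illustrative, not the Keras class):

```python
def polynomial_decay_lr(
    step: int,
    initial_lr: float = 2e-5,
    end_lr: float = 0.0,
    decay_steps: int = 18,
    power: float = 1.0,
) -> float:
    """Polynomial decay without cycling: the step is clamped at `decay_steps`."""
    clamped = min(step, decay_steps)
    fraction_left = 1 - clamped / decay_steps
    return (initial_lr - end_lr) * fraction_left**power + end_lr


for step in (0, 9, 18, 30):
    print(step, polynomial_decay_lr(step))
```

With only 18 decay steps, the learning rate reaches zero almost immediately, which is consistent with the single epoch reported in the results table.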

### Training results

| Train Loss | Train End Logits Accuracy | Train Start Logits Accuracy | Validation Loss | Validation End Logits Accuracy | Validation Start Logits Accuracy | Epoch |

|:----------:|:-------------------------:|:---------------------------:|:---------------:|:------------------------------:|:--------------------------------:|:-----:|

| 0.3787 | 0.8715 | 0.8924 | 0.1505 | 1.0 | 0.9231 | 0 |

### Framework versions

- Transformers 4.26.0

- TensorFlow 2.9.2

- Datasets 2.9.0

- Tokenizers 0.13.2

|

07b0c2b3af54d701409d6043048ff3be

|

rishabhjain16/whisper_medium_to_pf10h

|

rishabhjain16

|

whisper

| 23 | 0 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,725 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# openai/whisper-medium

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1594

- Wer: 21.8343

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0269 | 5.0 | 500 | 0.1069 | 118.0302 |

| 0.0049 | 10.01 | 1000 | 0.1263 | 135.2788 |

| 0.0009 | 15.01 | 1500 | 0.1355 | 94.5731 |

| 0.0001 | 20.01 | 2000 | 0.1413 | 7.5188 |

| 0.0001 | 25.01 | 2500 | 0.1515 | 7.2508 |

| 0.0001 | 30.02 | 3000 | 0.1568 | 24.8493 |

| 0.0 | 35.02 | 3500 | 0.1588 | 22.1470 |

| 0.0 | 40.02 | 4000 | 0.1594 | 21.8343 |
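Several WER values in the table exceed 100. That is possible because word error rate counts insertions as errors but divides only by the number of reference words, so a hypothesis much longer than the reference can score above 100%. A minimal sketch using word-level edit distance (the `wer` helper and whitespace tokenization are illustrative assumptions, not the evaluation code used for this model):

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent, via word-level Levenshtein distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return 100.0 * prev[-1] / len(ref)


print(wer("hello world", "hello world"))
print(wer("hello world", "hello there big wide world"))  # three insertions
```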

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.9.1.dev0

- Tokenizers 0.13.2

|

bd7d5d769b59f9440273c7bb88d63ecf

|

sd-concepts-library/kodakvision500t

|

sd-concepts-library

| null | 9 | 0 | null | 13 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,222 | false |

### KodakVision500T on Stable Diffusion

This is the `<kodakvision_500T>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

This concept was trained on **6** photographs taken with **Kodak Vision 3 500T**, through **1800** steps.

Here are some generated images from the concept that you will be able to use as a `style`:

|

75d2bdca72eef586f7ff1c3b8150ab78

|

Robertooo/ELL_pretrained

|

Robertooo

|

roberta

| 6 | 7 |

transformers

| 0 |

fill-mask

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,243 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ELL_pretrained

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.9006

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.1542 | 1.0 | 1627 | 2.1101 |

| 2.0739 | 2.0 | 3254 | 2.0006 |

| 2.0241 | 3.0 | 4881 | 1.7874 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu102

- Datasets 2.6.1

- Tokenizers 0.13.1

|

d8f5079101ebdef7f79abbdf0b8f4f1b

|

shashank2123/t5-finetuned-for-GEC

|

shashank2123

|

t5

| 10 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| false | true | true | 1,537 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-finetuned-for-GEC

This model is a fine-tuned version of [t5-base](https://huggingface.co/t5-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3949

- Bleu: 0.3571

- Gen Len: 19.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:-------:|

| 0.3958 | 1.0 | 4053 | 0.4236 | 0.3493 | 19.0 |

| 0.3488 | 2.0 | 8106 | 0.4076 | 0.3518 | 19.0 |

| 0.319 | 3.0 | 12159 | 0.3962 | 0.3523 | 19.0 |

| 0.3105 | 4.0 | 16212 | 0.3951 | 0.3567 | 19.0 |

| 0.3016 | 5.0 | 20265 | 0.3949 | 0.3571 | 19.0 |

### Framework versions

- Transformers 4.9.1

- Pytorch 1.9.0+cu102

- Datasets 1.11.0

- Tokenizers 0.10.3

|

e9a98bc915e3de98fef4984774dd17a9

|

ncats/EpiExtract4GARD-v2

|

ncats

|

bert

| 17 | 114 |

transformers

| 0 |

token-classification

| true | false | false |

other

|

['en']

|

['ncats/EpiSet4NER']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['token-classification', 'ncats']

| false | true | true | 9,493 | false |

## DOCUMENTATION UPDATES IN PROGRESS

## Model description

**EpiExtract4GARD-v2** is a fine-tuned [BioBERT-base-cased](https://huggingface.co/dmis-lab/biobert-base-cased-v1.1) model that is ready to use for **Named Entity Recognition** of locations (LOC), epidemiologic types (EPI), and epidemiologic rates (STAT). This model was fine-tuned on EpiSet4NER-v2 for epidemiological information from rare disease abstracts. See dataset documentation for details on the weakly supervised teaching methods and dataset biases and limitations. See [EpiExtract4GARD on GitHub](https://github.com/ncats/epi4GARD/tree/master/EpiExtract4GARD#epiextract4gard) for details on the entire pipeline.

#### How to use

You can use this model with the Hosted inference API to the right with this [test sentence](https://pubmed.ncbi.nlm.nih.gov/21659675/): "27 patients have been diagnosed with PKU in Iceland since 1947. Incidence 1972-2008 is 1/8400 living births."

See the code below for use with the Transformers *pipeline* for NER:

~~~

from transformers import pipeline, AutoModelForTokenClassification, AutoTokenizer

model = AutoModelForTokenClassification.from_pretrained("ncats/EpiExtract4GARD")

tokenizer = AutoTokenizer.from_pretrained("ncats/EpiExtract4GARD")

NER_pipeline = pipeline('ner', model=model, tokenizer=tokenizer,aggregation_strategy='simple')

sample = "The live-birth prevalence of mucopolysaccharidoses in Estonia. Previous studies on the prevalence of mucopolysaccharidoses (MPS) in different populations have shown considerable variations. There are, however, few data with regard to the prevalence of MPSs in Fenno-Ugric populations or in north-eastern Europe, except for a report about Scandinavian countries. A retrospective epidemiological study of MPSs in Estonia was undertaken, and live-birth prevalence of MPS patients born between 1985 and 2006 was estimated. The live-birth prevalence for all MPS subtypes was found to be 4.05 per 100,000 live births, which is consistent with most other European studies. MPS II had the highest calculated incidence, with 2.16 per 100,000 live births (4.2 per 100,000 male live births), forming 53% of all diagnosed MPS cases, and was twice as high as in other studied European populations. The second most common subtype was MPS IIIA, with a live-birth prevalence of 1.62 in 100,000 live births. With 0.27 out of 100,000 live births, MPS VI had the third-highest live-birth prevalence. No cases of MPS I were diagnosed in Estonia, making the prevalence of MPS I in Estonia much lower than in other European populations. MPSs are the third most frequent inborn error of metabolism in Estonia after phenylketonuria and galactosemia."

sample2 = "Early Diagnosis of Classic Homocystinuria in Kuwait through Newborn Screening: A 6-Year Experience. Kuwait is a small Arabian Gulf country with a high rate of consanguinity and where a national newborn screening program was expanded in October 2014 to include a wide range of endocrine and metabolic disorders. A retrospective study conducted between January 2015 and December 2020 revealed a total of 304,086 newborns have been screened in Kuwait. Six newborns were diagnosed with classic homocystinuria with an incidence of 1:50,000, which is not as high as in Qatar but higher than the global incidence. Molecular testing for five of them has revealed three previously reported pathogenic variants in the <i>CBS</i> gene, c.969G>A, p.(Trp323Ter); c.982G>A, p.(Asp328Asn); and the Qatari founder variant c.1006C>T, p.(Arg336Cys). This is the first study to review the screening of newborns in Kuwait for classic homocystinuria, starting with the detection of elevated blood methionine and providing a follow-up strategy for positive results, including plasma total homocysteine and amino acid analyses. Further, we have demonstrated an increase in the specificity of the current newborn screening test for classic homocystinuria by including the methionine to phenylalanine ratio along with the elevated methionine blood levels in first-tier testing. Here, we provide evidence that the newborn screening in Kuwait has led to the early detection of classic homocystinuria cases and enabled the affected individuals to lead active and productive lives."

#Sample 1 is from: Krabbi K, Joost K, Zordania R, Talvik I, Rein R, Huijmans JG, Verheijen FV, Õunap K. The live-birth prevalence of mucopolysaccharidoses in Estonia. Genet Test Mol Biomarkers. 2012 Aug;16(8):846-9. doi: 10.1089/gtmb.2011.0307. Epub 2012 Apr 5. PMID: 22480138; PMCID: PMC3422553.

#Sample 2 is from: Alsharhan H, Ahmed AA, Ali NM, Alahmad A, Albash B, Elshafie RM, Alkanderi S, Elkazzaz UM, Cyril PX, Abdelrahman RM, Elmonairy AA, Ibrahim SM, Elfeky YME, Sadik DI, Al-Enezi SD, Salloum AM, Girish Y, Al-Ali M, Ramadan DG, Alsafi R, Al-Rushood M, Bastaki L. Early Diagnosis of Classic Homocystinuria in Kuwait through Newborn Screening: A 6-Year Experience. Int J Neonatal Screen. 2021 Aug 17;7(3):56. doi: 10.3390/ijns7030056. PMID: 34449519; PMCID: PMC8395821.

NER_pipeline(sample)

NER_pipeline(sample2)

~~~
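With `aggregation_strategy='simple'`, the pipeline returns a list of dictionaries, one per merged entity, with keys such as `entity_group`, `word`, and `score`. The following is a minimal sketch of collecting those results by label. The helper name `group_entities`, the `min_score` threshold, and the values in `example_output` are assumptions made for illustration; they are not real model output.

```python
from collections import defaultdict


def group_entities(ner_output, min_score=0.0):
    """Collect entity texts by label from aggregated NER pipeline output."""
    grouped = defaultdict(list)
    for entity in ner_output:
        # Skip low-confidence predictions (threshold is a hypothetical choice).
        if entity["score"] >= min_score:
            grouped[entity["entity_group"]].append(entity["word"])
    return dict(grouped)


# Hypothetical output, shaped like the result of NER_pipeline(sample).
example_output = [
    {"entity_group": "EPI", "score": 0.99, "word": "live-birth prevalence"},
    {"entity_group": "LOC", "score": 0.98, "word": "Estonia"},
    {"entity_group": "STAT", "score": 0.61, "word": "4.05 per 100,000 live births"},
]

print(group_entities(example_output, min_score=0.5))
```

Raising `min_score` trades recall for precision, which may matter most for the STAT label.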

Or if you download [*classify_abs.py*](https://github.com/ncats/epi4GARD/blob/master/EpiExtract4GARD/classify_abs.py), [*extract_abs.py*](https://github.com/ncats/epi4GARD/blob/master/EpiExtract4GARD/extract_abs.py), and [*gard-id-name-synonyms.json*](https://github.com/ncats/epi4GARD/blob/master/EpiExtract4GARD/gard-id-name-synonyms.json) from GitHub then you can test with this [*additional* code](https://github.com/ncats/epi4GARD/blob/master/EpiExtract4GARD/Case%20Study.ipynb):

~~~

import pandas as pd

import extract_abs

import classify_abs

pd.set_option('display.max_colwidth', None)

NER_pipeline = extract_abs.init_NER_pipeline()

GARD_dict, max_length = extract_abs.load_GARD_diseases()

nlp, nlpSci, nlpSci2, classify_model, classify_tokenizer = classify_abs.init_classify_model()

def search(term,num_results = 50):

    return extract_abs.search_term_extraction(term, num_results, NER_pipeline, GARD_dict, max_length,nlp, nlpSci, nlpSci2, classify_model, classify_tokenizer)

a = search(7058)

a

b = search('Santos Mateus Leal syndrome')

b

c = search('Fellman syndrome')

c

d = search('GARD:0009941')

d

e = search('Homocystinuria')

e

~~~

#### Limitations and bias

## Training data

It was trained on [EpiSet4NER](https://huggingface.co/datasets/ncats/EpiSet4NER). See dataset documentation for details on the weakly supervised teaching methods and dataset biases and limitations. The training dataset distinguishes between the beginning and continuation of an entity so that if there are back-to-back entities of the same type, the model can output where the second entity begins. As in the dataset, each token will be classified as one of the following classes:

Abbreviation|Description

---------|--------------

O |Outside of a named entity

B-LOC | Beginning of a location

I-LOC | Inside of a location

B-EPI | Beginning of an epidemiologic type (e.g. "incidence", "prevalence", "occurrence")

I-EPI | Epidemiologic type that is not the beginning token.

B-STAT | Beginning of an epidemiologic rate

I-STAT | Inside of an epidemiologic rate

+More | Description pending
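The B-/I- distinction in the table above is what allows two adjacent entities of the same type to be separated. A minimal sketch of decoding token-level tags into entity spans is shown below. The function name `bio_to_spans` and the example tokens are assumptions made for illustration, and a stray `I-` tag with no open entity is leniently treated as the start of a new span.

```python
def bio_to_spans(tokens, tags):
    """Merge BIO-tagged tokens into (label, text) spans."""
    spans = []
    current_label, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        prefix, _, label = tag.partition("-")
        # An I- tag with the same label continues the open entity.
        if prefix == "I" and label == current_label:
            current_tokens.append(token)
            continue
        # Anything else closes the open entity, if there is one.
        if current_label is not None:
            spans.append((current_label, " ".join(current_tokens)))
        if prefix in ("B", "I"):
            current_label, current_tokens = label, [token]
        else:
            current_label, current_tokens = None, []
    if current_label is not None:
        spans.append((current_label, " ".join(current_tokens)))
    return spans


# Hypothetical example: two back-to-back locations with no "O" token between them.
tokens = ["Cases", "in", "Costa", "Rica", "Puerto", "Rico", "rose"]
tags = ["O", "O", "B-LOC", "I-LOC", "B-LOC", "I-LOC", "O"]
print(bio_to_spans(tokens, tags))
```

Without the second `B-LOC`, the four location tokens would be merged into a single span.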

### EpiSet Statistics

Beyond any limitations due to the EpiSet4NER dataset, this model is limited in numeracy because BERT-based models use subword embeddings. Numeracy is crucial for epidemiologic rate identification, so this limits the entity-level results. Recent techniques in numeracy could be used to improve the performance of the model without improving the underlying dataset.

## Training procedure

This model was trained on an [AWS EC2 p3.2xlarge](https://aws.amazon.com/ec2/instance-types/), which utilized a single Tesla V100 GPU, with these hyperparameters:

4 epochs of training (AdamW weight decay = 0.05) with a batch size of 16. Maximum sequence length = 192. Model was fed one sentence at a time.

<!--- Full config [here](https://wandb.ai/wzkariampuzha/huggingface/runs/353prhts/files/config.yaml). --->

<!--- THIS IS NOT THE UPDATED RESULTS --->

<!--- ## Hold-out validation results --->

<!--- metric| entity-level result --->

<!--- -|- --->

<!--- f1 | 83.8 --->

<!--- precision | 83.2 --->

<!--- recall | 84.5 --->

<!--- ## Test results --->

<!--- | Dataset for Model Training | Evaluation Level | Entity | Precision | Recall | F1 | --->

<!--- |:--------------------------:|:----------------:|:------------------:|:---------:|:------:|:-----:| --->

<!--- | EpiSet | Entity-Level | Overall | 0.556 | 0.662 | 0.605 | --->

<!--- | | | Location | 0.661 | 0.696 | 0.678 | --->

<!--- | | | Epidemiologic Type | 0.854 | 0.911 | 0.882 | --->

<!--- | | | Epidemiologic Rate | 0.143 | 0.218 | 0.173 | --->

<!--- | | Token-Level | Overall | 0.811 | 0.713 | 0.759 | --->

<!--- | | | Location | 0.949 | 0.742 | 0.833 | --->

<!--- | | | Epidemiologic Type | 0.9 | 0.917 | 0.908 | --->

<!--- | | | Epidemiologic Rate | 0.724 | 0.636 | 0.677 | --->

Thanks to [@William Kariampuzha](https://github.com/wzkariampuzha) at Axle Informatics/NCATS for contributing this model.

|

bf4976e91400ee73838550905b8a7e0d

|

Khalsuu/english-filipino-wav2vec2-l-xls-r-test-08

|

Khalsuu

|

wav2vec2

| 13 | 8 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null |

['filipino_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,888 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# english-filipino-wav2vec2-l-xls-r-test-08

This model is a fine-tuned version of [jonatasgrosman/wav2vec2-large-xlsr-53-english](https://huggingface.co/jonatasgrosman/wav2vec2-large-xlsr-53-english) on the filipino_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5968

- Wer: 0.4255

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.3434 | 2.09 | 400 | 2.2857 | 0.9625 |

| 1.6304 | 4.19 | 800 | 1.1547 | 0.7268 |

| 0.9231 | 6.28 | 1200 | 1.0252 | 0.6186 |

| 0.6098 | 8.38 | 1600 | 0.9371 | 0.5494 |

| 0.4922 | 10.47 | 2000 | 0.7092 | 0.5478 |

| 0.3652 | 12.57 | 2400 | 0.7358 | 0.5149 |

| 0.2735 | 14.66 | 2800 | 0.6270 | 0.4646 |

| 0.2038 | 16.75 | 3200 | 0.5717 | 0.4506 |

| 0.1552 | 18.85 | 3600 | 0.5968 | 0.4255 |
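The Wer column above is a fraction, so 0.4255 corresponds to a word error rate of 42.55%. As a rough sketch of the standard definition (word-level edit distance divided by the number of reference words), and not necessarily the exact implementation used to produce this table, the metric can be computed as follows. The function name `word_error_rate` and the example sentences are assumptions.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference
    # prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One dropped word out of six reference words.
print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

Because insertions count as errors, the value can exceed 1.0 when the hypothesis is much longer than the reference.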

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

5eda547dc16e02d5cbf848c5bb79cbd5

|

dweb/deberta-base-CoLA

|

dweb

|

deberta

| 13 | 9 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,180 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deberta-base-CoLA

This model is a fine-tuned version of [microsoft/deberta-base](https://huggingface.co/microsoft/deberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1655

- Accuracy: 0.8482

- F1: 0.8961

- Roc Auc: 0.8987

- Mcc: 0.6288

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.05

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Roc Auc | Mcc |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:-------:|:------:|

| 0.5266 | 1.0 | 535 | 0.4138 | 0.8159 | 0.8698 | 0.8627 | 0.5576 |

| 0.3523 | 2.0 | 1070 | 0.3852 | 0.8387 | 0.8880 | 0.9041 | 0.6070 |

| 0.2479 | 3.0 | 1605 | 0.3981 | 0.8482 | 0.8901 | 0.9120 | 0.6447 |

| 0.1712 | 4.0 | 2140 | 0.4732 | 0.8558 | 0.9008 | 0.9160 | 0.6486 |

| 0.1354 | 5.0 | 2675 | 0.7181 | 0.8463 | 0.8938 | 0.9024 | 0.6250 |

| 0.0876 | 6.0 | 3210 | 0.8453 | 0.8520 | 0.8992 | 0.9123 | 0.6385 |

| 0.0682 | 7.0 | 3745 | 1.0282 | 0.8444 | 0.8938 | 0.9061 | 0.6189 |

| 0.0431 | 8.0 | 4280 | 1.1114 | 0.8463 | 0.8960 | 0.9010 | 0.6239 |

| 0.0323 | 9.0 | 4815 | 1.1663 | 0.8501 | 0.8970 | 0.8967 | 0.6340 |

| 0.0163 | 10.0 | 5350 | 1.1655 | 0.8482 | 0.8961 | 0.8987 | 0.6288 |

### Framework versions

- Transformers 4.11.0

- Pytorch 1.9.0+cu102

- Datasets 1.12.1

- Tokenizers 0.10.3

|

ac8fa12d2c41b12d5aad9e72086b5748

|

espnet/kan-bayashi_jsut_tts_train_transformer_raw_phn_jaconv_pyopenjtalk_prosody_train.loss.ave

|

espnet

| null | 20 | 0 |

espnet

| 0 |

text-to-speech

| false | false | false |

cc-by-4.0

|

['ja']

|

['jsut']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['espnet', 'audio', 'text-to-speech']

| false | true | true | 1,859 | false |

## ESPnet2 TTS pretrained model

### `kan-bayashi/jsut_tts_train_transformer_raw_phn_jaconv_pyopenjtalk_prosody_train.loss.ave`

♻️ Imported from https://zenodo.org/record/5499040/

This model was trained by kan-bayashi using jsut/tts1 recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```python

# coming soon

```

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson {Enrique Yalta Soplin} and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

@inproceedings{hayashi2020espnet,

title={{Espnet-TTS}: Unified, reproducible, and integratable open source end-to-end text-to-speech toolkit},

author={Hayashi, Tomoki and Yamamoto, Ryuichi and Inoue, Katsuki and Yoshimura, Takenori and Watanabe, Shinji and Toda, Tomoki and Takeda, Kazuya and Zhang, Yu and Tan, Xu},

booktitle={Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={7654--7658},

year={2020},

organization={IEEE}

}

```

or arXiv:

```bibtex

@misc{watanabe2018espnet,

title={ESPnet: End-to-End Speech Processing Toolkit},

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Enrique Yalta Soplin and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

year={2018},

eprint={1804.00015},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

0eb689d7bfc3433a0acf40fbb681bf82

|

Helsinki-NLP/opus-mt-en-kwn

|

Helsinki-NLP

|

marian

| 10 | 7 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 776 | false |

### opus-mt-en-kwn

* source languages: en

* target languages: kwn

* OPUS readme: [en-kwn](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/en-kwn/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-08.zip](https://object.pouta.csc.fi/OPUS-MT-models/en-kwn/opus-2020-01-08.zip)

* test set translations: [opus-2020-01-08.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-kwn/opus-2020-01-08.test.txt)

* test set scores: [opus-2020-01-08.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-kwn/opus-2020-01-08.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.en.kwn | 27.6 | 0.513 |

|

aeee6eb87d1d6b766b4a8a2a0bd16b87

|

jonatasgrosman/exp_w2v2t_ar_vp-nl_s103

|

jonatasgrosman

|

wav2vec2

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['ar']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'ar']

| false | true | true | 469 | false |

# exp_w2v2t_ar_vp-nl_s103

Fine-tuned [facebook/wav2vec2-large-nl-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-nl-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (ar)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.
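The 16 kHz requirement means that audio recorded at another rate has to be resampled before inference. The sketch below illustrates the idea with plain linear interpolation in pure Python. It is an assumption-laden illustration only: `resample_linear` is a hypothetical helper, it applies no anti-aliasing filter, and in practice a dedicated audio library would be a better choice.

```python
def resample_linear(samples, orig_rate, target_rate=16_000):
    """Resample a mono signal to target_rate using linear interpolation."""
    if orig_rate == target_rate or len(samples) == 0:
        return list(samples)
    n_out = int(round(len(samples) * target_rate / orig_rate))
    ratio = orig_rate / target_rate
    last = len(samples) - 1
    out = []
    for i in range(n_out):
        pos = i * ratio                # position in the original signal
        left = min(int(pos), last)     # sample at or before pos
        right = min(left + 1, last)    # next sample, clamped at the end
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out


# 48 samples at 48 kHz become 16 samples at 16 kHz.
ramp = list(range(48))
print(len(resample_linear(ramp, orig_rate=48_000)))
```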

|

90d0f3a6a25dc6430040c3df00e40823

|

jonatasgrosman/exp_w2v2t_de_hubert_s921

|

jonatasgrosman

|

hubert

| 10 | 3 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['de']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'de']

| false | true | true | 452 | false |

# exp_w2v2t_de_hubert_s921

Fine-tuned [facebook/hubert-large-ll60k](https://huggingface.co/facebook/hubert-large-ll60k) for speech recognition using the train split of [Common Voice 7.0 (de)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

b88c8a04b69f8ebac733f1b04d5e89e9

|

sd-concepts-library/karl-s-lzx-1

|

sd-concepts-library

| null | 9 | 0 | null | 0 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,003 | false |

### karl's lzx 1 on Stable Diffusion

This is the `<lzx>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

2d522dfb442f6f2e2524168113891e96

|

jonatasgrosman/exp_w2v2r_de_xls-r_age_teens-0_sixties-10_s113

|

jonatasgrosman

|

wav2vec2

| 10 | 0 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['de']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'de']

| false | true | true | 476 | false |

# exp_w2v2r_de_xls-r_age_teens-0_sixties-10_s113

Fine-tuned [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) for speech recognition using the train split of [Common Voice 7.0 (de)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

2df71a7511737286b41d7e63aa418607

|

maesneako/ES_corlec_DeepESP-gpt2-spanish

|

maesneako

|

gpt2

| 8 | 2 |

transformers

| 0 |

text-generation

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,029 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ES_corlec_DeepESP-gpt2-spanish

This model is a fine-tuned version of [DeepESP/gpt2-spanish](https://huggingface.co/DeepESP/gpt2-spanish) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 4.0360

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 200

- num_epochs: 7

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 4.2471 | 0.4 | 2000 | 4.2111 |

| 4.1503 | 0.79 | 4000 | 4.1438 |

| 4.0749 | 1.19 | 6000 | 4.1077 |

| 4.024 | 1.59 | 8000 | 4.0857 |

| 3.9855 | 1.98 | 10000 | 4.0707 |

| 3.9465 | 2.38 | 12000 | 4.0605 |

| 3.9277 | 2.78 | 14000 | 4.0533 |

| 3.9159 | 3.17 | 16000 | 4.0482 |

| 3.8918 | 3.57 | 18000 | 4.0448 |

| 3.8789 | 3.97 | 20000 | 4.0421 |

| 3.8589 | 4.36 | 22000 | 4.0402 |

| 3.8554 | 4.76 | 24000 | 4.0387 |

| 3.8509 | 5.15 | 26000 | 4.0377 |

| 3.8389 | 5.55 | 28000 | 4.0370 |

| 3.8288 | 5.95 | 30000 | 4.0365 |

| 3.8293 | 6.34 | 32000 | 4.0362 |

| 3.8202 | 6.74 | 34000 | 4.0360 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.1+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

3777a0b48e15aa83fbfcc3a8d58367f1

|

kadirnar/RRDB_ESRGAN_x4

|

kadirnar

| null | 3 | 0 | null | 0 | null | false | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['Super-Resolution', 'computer-vision', 'ESRGAN', 'gan']

| false | true | true | 1,229 | false |

### Model Description

[ESRGAN](https://arxiv.org/abs/2107.10833): ECCV18 Workshops - Enhanced SRGAN. Champion PIRM Challenge on Perceptual Super-Resolution

[Paper Repo](https://github.com/xinntao/ESRGAN): Implementation of paper.

### Installation

```

pip install bsrgan

```

### BSRGAN Usage

```python

from bsrgan import BSRGAN

model = BSRGAN(weights='kadirnar/RRDB_ESRGAN_x4', device='cuda:0', hf_model=True)

model.save = True

pred = model.predict(img_path='data/image/test.png')

```

### BibTeX Entry and Citation Info

```

@inproceedings{zhang2021designing,

title={Designing a Practical Degradation Model for Deep Blind Image Super-Resolution},

author={Zhang, Kai and Liang, Jingyun and Van Gool, Luc and Timofte, Radu},

booktitle={IEEE International Conference on Computer Vision},

pages={4791--4800},

year={2021}

}

```

```

@InProceedings{wang2018esrgan,

author = {Wang, Xintao and Yu, Ke and Wu, Shixiang and Gu, Jinjin and Liu, Yihao and Dong, Chao and Qiao, Yu and Loy, Chen Change},

title = {ESRGAN: Enhanced super-resolution generative adversarial networks},

booktitle = {The European Conference on Computer Vision Workshops (ECCVW)},

month = {September},

year = {2018}

}

```

|

7d1bdaa4ecfd1abdd5fd07d706828baf

|

gchhablani/bert-base-cased-finetuned-qnli

|

gchhablani

|

bert

| 52 | 75 |

transformers

| 1 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer', 'fnet-bert-base-comparison']

| true | true | true | 2,215 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-finetuned-qnli

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the GLUE QNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3986

- Accuracy: 0.9099

The model was fine-tuned to compare [google/fnet-base](https://huggingface.co/google/fnet-base) as introduced in [this paper](https://arxiv.org/abs/2105.03824) against [bert-base-cased](https://huggingface.co/bert-base-cased).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

This model is trained using the [run_glue](https://github.com/huggingface/transformers/blob/master/examples/pytorch/text-classification/run_glue.py) script. The following command was used:

```bash

#!/usr/bin/bash

python ../run_glue.py \
  --model_name_or_path bert-base-cased \
  --task_name qnli \
  --do_train \
  --do_eval \
  --max_seq_length 512 \
  --per_device_train_batch_size 16 \
  --learning_rate 2e-5 \
  --num_train_epochs 3 \
  --output_dir bert-base-cased-finetuned-qnli \
  --push_to_hub \
  --hub_strategy all_checkpoints \
  --logging_strategy epoch \
  --save_strategy epoch \
  --evaluation_strategy epoch
```

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Accuracy | Validation Loss |

|:-------------:|:-----:|:-----:|:--------:|:---------------:|

| 0.337 | 1.0 | 6547 | 0.9013 | 0.2448 |

| 0.1971 | 2.0 | 13094 | 0.9143 | 0.2839 |

| 0.1175 | 3.0 | 19641 | 0.9099 | 0.3986 |

### Framework versions

- Transformers 4.11.0.dev0

- Pytorch 1.9.0

- Datasets 1.12.1

- Tokenizers 0.10.3

|

3cdfb6d834695135d2121ce917efadfe

|

kamilali/distilbert-base-uncased-finetuned-custom

|

kamilali

|

bert

| 12 | 5 |

transformers

| 0 |

question-answering

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,338 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-custom

This model is a fine-tuned version of [bert-large-uncased-whole-word-masking-finetuned-squad](https://huggingface.co/bert-large-uncased-whole-word-masking-finetuned-squad) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7808

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 368 | 1.1128 |

| 2.1622 | 2.0 | 736 | 0.8494 |

| 1.2688 | 3.0 | 1104 | 0.7808 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 1.18.4

- Tokenizers 0.11.6

|

d2e58228e74b9ef3f6fe20b0f21751c6

|

vitorgrs/MyModel

|

vitorgrs

| null | 20 | 15 |

diffusers

| 1 |

text-to-image

| false | false | false |

openrail

| null | null | null | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

['text-to-image', 'stable-diffusion']

| false | true | true | 1,400 | false |

# Welcome to MY MODEL!

Hi! This is a Dreambooth model trained on me. Use it as you want, and respect the model license.

## How do I use it?

The keyword for this model is **vitordeluccagrs**.

Try to use "*portrait of vitordeluccagrs shirtless reading a book in a chair, art by artgerm*".

Be creative. Specify the facial features you want, the hair style, the environment. If it is art, in which artist's style? Want it realistic? Say it! Also say which ISO, camera, etc. Want it to look like 500px and Unsplash pictures? Say that too! Want it to look like Blade Runner? Say it. **Be creative**.

USE A NEGATIVE PROMPT! Stable Diffusion works best if you use a negative prompt. With a negative prompt, you say which things you don't want in the picture, like, you know... extra fingers!

# My social networks

[Mastodon](https://bolha.us/vitordelucca)

[Instagram](https://instagram.com/vitor_dlucca)

[Twitter](https://twitter.com/vitor_dlucca)

[Telegram](https://vitordelucca.t.me)

# Sample pictures

|

666eb99b6a7ebe5c40c670b750a35b96

|

sd-concepts-library/bob-dobbs

|

sd-concepts-library

| null | 19 | 0 | null | 2 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 2,128 | false |

### Bob Dobbs on Stable Diffusion

This is the `<bob>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

19c82a393e0dae7c5f0b7004ba42f542

|

figfig/local_test_model

|

figfig

|

whisper

| 10 | 1 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['en']

|

['figfig/restaurant_order_local_test']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['hf-asr-leaderboard', 'generated_from_trainer']

| true | true | true | 1,476 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# restaurant_local_test_model

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the local_test_data dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5435

- Wer: 78.5714
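For readers unfamiliar with the metric, here is a minimal sketch of word error rate (WER) in plain Python, assuming the usual definition: word-level edit distance divided by the number of reference words, reported as a percentage. The function name `word_error_rate` and the sample sentences are hypothetical; the card does not say which implementation produced its numbers.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER as a percentage: word-level edit distance / reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Levenshtein distance over words, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr

    return 100.0 * prev[-1] / len(ref)


# Hypothetical transcripts, not taken from the evaluation set.
print(word_error_rate("one large pizza please", "one pizza please"))
```

Every WER value reported in this card is a multiple of 1/14 (for example, 11/14 gives 78.5714), which would be consistent with an evaluation set of only 14 reference words, although the card does not state this.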

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 5

- training_steps: 40

- mixed_precision_training: Native AMP
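As a rough illustration of how `lr_scheduler_type: linear` combines with `lr_scheduler_warmup_steps: 5` and `training_steps: 40`, the sketch below computes the learning rate at each step in plain Python. It follows the common rule of linear warmup followed by linear decay to zero; the function name `linear_lr` is made up for this example, and the Trainer's own scheduler may differ in details.

```python
def linear_lr(step, base_lr=1e-5, warmup_steps=5, total_steps=40):
    """Learning rate at `step` under linear warmup, then linear decay to zero."""
    if step < warmup_steps:
        # Ramp up from 0 to base_lr over the warmup steps.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr (at the end of warmup) to 0 (at total_steps).
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Print the schedule at the evaluation steps listed in the results table.
for step in (0, 5, 10, 20, 30, 40):
    print(step, linear_lr(step))
```

With only 40 steps in total, the rate peaks at step 5 and is already decaying by the first evaluation at step 10.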

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:-------:|
| No log | 10.0 | 10 | 2.2425 | 7.1429 |
| No log | 20.0 | 20 | 0.6651 | 0.0 |
| 2.4375 | 30.0 | 30 | 0.5776 | 35.7143 |
| 2.4375 | 40.0 | 40 | 0.5435 | 78.5714 |

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

|

9b7c2631cbd37143750ea1036e1c2bea

|

sd-concepts-library/lego-astronaut

|

sd-concepts-library

| null | 9 | 0 | null | 3 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,070 | false |

### Lego astronaut on Stable Diffusion

This is the `<lego-astronaut>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

44a2655b30c3724d1ab3d54e61eb1e24

|

sd-concepts-library/monster-toy

|

sd-concepts-library

| null | 9 | 0 | null | 4 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,042 | false |

### `<monster-toy>` on Stable Diffusion

This is the `<monster-toy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

381ec11a3c238585e8dd29a5918888b6

|

gustavecortal/distilcamembert-cae-feeling

|

gustavecortal

|

camembert

| 6 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,673 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilcamembert-cae-feeling

This model is a fine-tuned version of [cmarkea/distilcamembert-base](https://huggingface.co/cmarkea/distilcamembert-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9307

- Precision: 0.6783

- Recall: 0.6835

- F1: 0.6767
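Note that the reported F1 (0.6767) is lower than the harmonic mean of the reported precision and recall (about 0.6809), which suggests the three numbers were averaged over classes separately. The card does not say which averaging mode was used, so the sketch below assumes macro averaging purely for illustration; the labels and predictions are hypothetical.

```python
from collections import Counter


def macro_prf(y_true, y_pred):
    """Return macro-averaged (precision, recall, f1) over all observed labels."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    precisions, recalls, f1s = [], [], []
    for label in labels:
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        p = tp[label] / p_den if p_den else 0.0
        r = tp[label] / r_den if r_den else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)

    n = len(labels)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Hypothetical three-class example, not data from this model.
y_true = ["pos", "pos", "pos", "neg", "neg", "neu"]
y_pred = ["pos", "pos", "neg", "neg", "neu", "neu"]
precision, recall, f1 = macro_prf(y_true, y_pred)
harmonic = 2 * precision * recall / (precision + recall)
print(precision, recall, f1, harmonic)
```

In this toy example the macro F1 also differs from the harmonic mean of macro precision and macro recall, because F1 is computed per class before averaging.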

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|
| 1.1901 | 1.0 | 40 | 1.0935 | 0.1963 | 0.4430 | 0.2720 |
| 1.0584 | 2.0 | 80 | 0.8978 | 0.6304 | 0.6076 | 0.5776 |
| 0.6805 | 3.0 | 120 | 0.8577 | 0.6918 | 0.6709 | 0.6759 |
| 0.3938 | 4.0 | 160 | 1.0034 | 0.6966 | 0.6582 | 0.6586 |
| 0.2713 | 5.0 | 200 | 0.9307 | 0.6783 | 0.6835 | 0.6767 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

16f29d1e63eb3b0a6a8b8d93e0d6a15c

|

plai-edp-test/distilbert_base_uncased

|

plai-edp-test

|

distilbert

| 8 | 0 |

transformers

| 0 |

fill-mask

| false | true | false |

apache-2.0

|

['en']

|

['bookcorpus', 'wikipedia']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['exbert']

| false | true | true | 8,470 | false |

# DistilBERT base model (uncased)

This model is a distilled version of the [BERT base model](https://huggingface.co/bert-base-uncased). It was

introduced in [this paper](https://arxiv.org/abs/1910.01108). The code for the distillation process can be found

[here](https://github.com/huggingface/transformers/tree/main/examples/research_projects/distillation). This model is uncased: it does

not distinguish between english and English.
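To make "uncased" concrete, here is a small sketch of the kind of normalization an uncased BERT-style tokenizer applies before splitting text into word pieces: lowercasing, and (as an assumption about the default configuration) stripping accent marks. The function name `uncased_normalize` is hypothetical and this is not the tokenizer's actual code.

```python
import unicodedata


def uncased_normalize(text: str) -> str:
    """Lowercase the text and drop combining accent marks."""
    lowered = text.lower()
    # NFD splits accented characters into a base letter plus combining marks.
    decomposed = unicodedata.normalize("NFD", lowered)
    # Category "Mn" (nonspacing mark) covers the combining accents.
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


print(uncased_normalize("English") == uncased_normalize("english"))
```

After this step "English" and "english" are the same string, so the model sees identical input for both.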