repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

DrishtiSharma/wav2vec2-large-xls-r-300m-mr-v2

|

DrishtiSharma

|

wav2vec2

| 12 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['mr']

|

['mozilla-foundation/common_voice_8_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'mozilla-foundation/common_voice_8_0', 'generated_from_trainer', 'mr', 'robust-speech-event', 'hf-asr-leaderboard']

| true | true | true | 3,083 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-mr-v2

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the MOZILLA-FOUNDATION/COMMON_VOICE_8_0 - MR dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8729

- Wer: 0.4942
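The WER figure above is a word error rate. As a rough illustration of how such a number is usually obtained, the sketch below computes a word-level edit distance and divides it by the reference length. The function name `word_error_rate` is hypothetical, and the actual `eval.py` script may normalize text differently before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Illustrative sketch only; real evaluation scripts usually normalize
    casing and punctuation before scoring.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the processed reference prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substitution and one deletion against a four-word reference.
    print(word_error_rate("a b c d", "a x c"))
```

A value of 0.4942 on this scale would mean roughly one word-level error for every two reference words.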

### Evaluation Commands

1. To evaluate on `mozilla-foundation/common_voice_8_0` with the `test` split:

`python eval.py --model_id DrishtiSharma/wav2vec2-large-xls-r-300m-mr-v2 --dataset mozilla-foundation/common_voice_8_0 --config mr --split test --log_outputs`

2. To evaluate on `speech-recognition-community-v2/dev_data`:

`python eval.py --model_id DrishtiSharma/wav2vec2-large-xls-r-300m-mr-v2 --dataset speech-recognition-community-v2/dev_data --config mr --split validation --chunk_length_s 10 --stride_length_s 1`

Note: Marathi language not found in speech-recognition-community-v2/dev_data!

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.000333

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 200

- mixed_precision_training: Native AMP
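Two of the hyperparameters above are related by simple arithmetic, and the scheduler settings imply a specific learning-rate curve. The sketch below is an illustration under stated assumptions: a single training device, 4,400 total optimizer steps (the last step in the results table), and a schedule that rises linearly during warmup and then decays linearly to zero. The helper names are hypothetical.

```python
def effective_batch_size(per_device: int, grad_accum_steps: int, num_devices: int = 1) -> int:
    """Samples contributing to one optimizer step (num_devices=1 is an assumption)."""
    return per_device * grad_accum_steps * num_devices


def linear_warmup_lr(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    # 16 samples per step x 2 accumulation steps = 32, matching total_train_batch_size.
    print(effective_batch_size(16, 2))
    for step in (0, 500, 1000, 2700, 4400):
        print(step, linear_warmup_lr(step, 0.000333, 1000, 4400))
```

Under these assumptions the learning rate peaks at step 1000 and falls back to zero at step 4400.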

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:------:|:----:|:---------------:|:------:|

| 8.4934 | 9.09 | 200 | 3.7326 | 1.0 |

| 3.4234 | 18.18 | 400 | 3.3383 | 0.9996 |

| 3.2628 | 27.27 | 600 | 2.7482 | 0.9992 |

| 1.7743 | 36.36 | 800 | 0.6755 | 0.6787 |

| 1.0346 | 45.45 | 1000 | 0.6067 | 0.6193 |

| 0.8137 | 54.55 | 1200 | 0.6228 | 0.5612 |

| 0.6637 | 63.64 | 1400 | 0.5976 | 0.5495 |

| 0.5563 | 72.73 | 1600 | 0.7009 | 0.5383 |

| 0.4844 | 81.82 | 1800 | 0.6662 | 0.5287 |

| 0.4057 | 90.91 | 2000 | 0.6911 | 0.5303 |

| 0.3582 | 100.0 | 2200 | 0.7207 | 0.5327 |

| 0.3163 | 109.09 | 2400 | 0.7107 | 0.5118 |

| 0.2761 | 118.18 | 2600 | 0.7538 | 0.5118 |

| 0.2415 | 127.27 | 2800 | 0.7850 | 0.5178 |

| 0.2127 | 136.36 | 3000 | 0.8016 | 0.5034 |

| 0.1873 | 145.45 | 3200 | 0.8302 | 0.5187 |

| 0.1723 | 154.55 | 3400 | 0.9085 | 0.5223 |

| 0.1498 | 163.64 | 3600 | 0.8396 | 0.5126 |

| 0.1425 | 172.73 | 3800 | 0.8776 | 0.5094 |

| 0.1258 | 181.82 | 4000 | 0.8651 | 0.5014 |

| 0.117 | 190.91 | 4200 | 0.8772 | 0.4970 |

| 0.1093 | 200.0 | 4400 | 0.8729 | 0.4942 |

### Framework versions

- Transformers 4.16.1

- Pytorch 1.10.0+cu111

- Datasets 1.18.2

- Tokenizers 0.11.0

|

6e83d3525f7b641e6503765a14894a23

|

theojolliffe/bart-cnn-science-v3-e1-v4-e4-manual

|

theojolliffe

|

bart

| 13 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,790 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-cnn-science-v3-e1-v4-e4-manual

This model is a fine-tuned version of [theojolliffe/bart-cnn-science-v3-e1](https://huggingface.co/theojolliffe/bart-cnn-science-v3-e1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2615

- Rouge1: 53.36

- Rouge2: 32.0237

- Rougel: 33.2835

- Rougelsum: 50.7455

- Gen Len: 142.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| No log | 1.0 | 42 | 1.0675 | 51.743 | 31.3774 | 34.1939 | 48.7234 | 142.0 |

| No log | 2.0 | 84 | 1.0669 | 49.4166 | 28.1438 | 30.188 | 46.0289 | 142.0 |

| No log | 3.0 | 126 | 1.1799 | 52.6909 | 31.0174 | 35.441 | 50.0351 | 142.0 |

| No log | 4.0 | 168 | 1.2615 | 53.36 | 32.0237 | 33.2835 | 50.7455 | 142.0 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

ff8ab05385267dd05553bcb7c7e386d3

|

sujathass/distilbert-base-uncased-finetuned-cola

|

sujathass

|

distilbert

| 19 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,497 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5532

- Matthews Correlation: 0.5452
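For readers unfamiliar with the metric, the Matthews correlation coefficient for a binary task can be computed from the four confusion-matrix counts. The sketch below is illustrative only: the function name `mcc_from_counts` is hypothetical and the counts in the usage lines are made up, not taken from this model's evaluation.

```python
import math


def mcc_from_counts(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient from binary confusion-matrix counts.

    Returns 0.0 when any marginal total is zero, a common convention
    for the otherwise undefined case.
    """
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return numerator / denominator if denominator else 0.0


if __name__ == "__main__":
    print(mcc_from_counts(50, 50, 0, 0))    # perfect agreement
    print(mcc_from_counts(25, 25, 25, 25))  # no better than chance
```

The score ranges from -1 (total disagreement) through 0 (chance level) to 1 (perfect prediction), which is why it is often preferred over accuracy on imbalanced data such as CoLA.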

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.5248 | 1.0 | 535 | 0.5479 | 0.3922 |

| 0.3503 | 2.0 | 1070 | 0.5148 | 0.4822 |

| 0.2386 | 3.0 | 1605 | 0.5532 | 0.5452 |

| 0.1773 | 4.0 | 2140 | 0.6818 | 0.5282 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

130df59874821f2d9ebf48d538947de5

|

jonatasgrosman/exp_w2v2t_es_unispeech_s767

|

jonatasgrosman

|

unispeech

| 10 | 3 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['es']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'es']

| false | true | true | 469 | false |

# exp_w2v2t_es_unispeech_s767

Fine-tuned [microsoft/unispeech-large-1500h-cv](https://huggingface.co/microsoft/unispeech-large-1500h-cv) for speech recognition using the train split of [Common Voice 7.0 (es)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.
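The 16 kHz requirement above can be checked before any audio reaches the model. The following is a minimal sketch that uses only Python's standard `wave` module, so it applies to uncompressed WAV files only; the helper names and the constant are hypothetical, and files at another rate would still need resampling with an audio library of your choice.

```python
import wave

EXPECTED_SAMPLE_RATE = 16_000  # Hz, as required by the model card


def wav_sample_rate(path: str) -> int:
    """Return the sample rate stored in a WAV file header."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def has_expected_rate(path: str, expected: int = EXPECTED_SAMPLE_RATE) -> bool:
    """True when the file is already sampled at the expected rate."""
    return wav_sample_rate(path) == expected
```

Calling `has_expected_rate("clip.wav")` on a local file (the filename is a placeholder) returns `False` when the clip must be resampled first.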

|

063f4b69e511feb90556fe95384c590c

|

rishabhjain16/whisper_large_to_pf10h

|

rishabhjain16

|

whisper

| 23 | 2 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,711 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# openai/whisper-large

This model is a fine-tuned version of [openai/whisper-large](https://huggingface.co/openai/whisper-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1412

- Wer: 6.7893

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0475 | 2.03 | 500 | 0.1095 | 62.6591 |

| 0.0201 | 5.01 | 1000 | 0.1225 | 16.9285 |

| 0.0044 | 7.03 | 1500 | 0.1312 | 3.6701 |

| 0.0026 | 10.01 | 2000 | 0.1278 | 7.9506 |

| 0.0001 | 12.04 | 2500 | 0.1323 | 17.9186 |

| 0.0001 | 15.02 | 3000 | 0.1386 | 16.3031 |

| 0.0001 | 17.05 | 3500 | 0.1403 | 6.7074 |

| 0.0 | 20.02 | 4000 | 0.1412 | 6.7893 |

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.9.1.dev0

- Tokenizers 0.13.2

|

75ea4a3b12ef02bafcfa00fcab57474a

|

jayantapaul888/vit-base-patch16-224-finetuned-memes-v3

|

jayantapaul888

|

vit

| 12 | 9 |

transformers

| 0 |

image-classification

| true | false | false |

apache-2.0

| null |

['imagefolder']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,527 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-base-patch16-224-finetuned-memes-v3

This model is a fine-tuned version of [google/vit-base-patch16-224](https://huggingface.co/google/vit-base-patch16-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3862

- Accuracy: 0.8478

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00012

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 4
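The warmup ratio and accumulation settings above translate into concrete step counts. The sketch below assumes the ratio is applied to the total number of optimizer steps and rounded up, that training ran on a single device, and that the run had 160 optimizer steps (the final step in the results table). The helper names are hypothetical.

```python
import math


def warmup_steps_from_ratio(total_steps: int, warmup_ratio: float) -> int:
    """Number of warmup steps, assuming the ratio is rounded up."""
    return math.ceil(total_steps * warmup_ratio)


def total_train_batch_size(per_device: int, grad_accum_steps: int, num_devices: int = 1) -> int:
    """Samples per optimizer step (num_devices=1 is an assumption)."""
    return per_device * grad_accum_steps * num_devices


if __name__ == "__main__":
    print(warmup_steps_from_ratio(160, 0.1))  # warmup length in optimizer steps
    print(total_train_batch_size(32, 4))      # matches total_train_batch_size: 128
```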

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.5649 | 0.99 | 40 | 0.6342 | 0.7488 |

| 0.3083 | 1.99 | 80 | 0.4146 | 0.8423 |

| 0.1563 | 2.99 | 120 | 0.3900 | 0.8547 |

| 0.0827 | 3.99 | 160 | 0.3862 | 0.8478 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

9c7e55749f4cdff0a07234ac3edfa792

|

ali2066/finetuned_token_3e-05_all_16_02_2022-16_25_56

|

ali2066

|

distilbert

| 13 | 10 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,791 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned_token_3e-05_all_16_02_2022-16_25_56

This model is a fine-tuned version of [distilbert-base-uncased-finetuned-sst-2-english](https://huggingface.co/distilbert-base-uncased-finetuned-sst-2-english) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1630

- Precision: 0.3684

- Recall: 0.3714

- F1: 0.3699

- Accuracy: 0.9482
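The F1 value above is the harmonic mean of the reported precision and recall. The short sketch below recomputes it from the rounded figures in the list; because the inputs are already rounded to four decimals, agreement is only expected to about that precision. The function name `f1_from_precision_recall` is hypothetical.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Precision and recall as reported on the evaluation set.
    print(round(f1_from_precision_recall(0.3684, 0.3714), 4))
```

The large gap between accuracy (0.9482) and F1 is typical of token classification, where most tokens belong to the majority "outside" class.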

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 38 | 0.3339 | 0.1075 | 0.2324 | 0.1470 | 0.8379 |

| No log | 2.0 | 76 | 0.3074 | 0.1589 | 0.2926 | 0.2060 | 0.8489 |

| No log | 3.0 | 114 | 0.2914 | 0.2142 | 0.3278 | 0.2591 | 0.8591 |

| No log | 4.0 | 152 | 0.2983 | 0.1951 | 0.3595 | 0.2529 | 0.8454 |

| No log | 5.0 | 190 | 0.2997 | 0.1851 | 0.3528 | 0.2428 | 0.8487 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.1+cu113

- Datasets 1.18.0

- Tokenizers 0.10.3

|

af79ee7cbeb622fde1968682e8e9da46

|

osanseviero/tips

|

osanseviero

| null | 3 | 0 |

sklearn

| 0 | null | false | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 8,875 | false |

## Baseline Model trained on tips to predict sex

Metrics of the best model:

accuracy 0.647364

average_precision 0.481257

roc_auc 0.608805

recall_macro 0.588751

f1_macro 0.588435

Name: MultinomialNB(), dtype: float64

See model plot below:

<style>#sk-container-id-2 {color: black;background-color: white;}#sk-container-id-2 pre{padding: 0;}#sk-container-id-2 div.sk-toggleable {background-color: white;}#sk-container-id-2 label.sk-toggleable__label {cursor: pointer;display: block;width: 100%;margin-bottom: 0;padding: 0.3em;box-sizing: border-box;text-align: center;}#sk-container-id-2 label.sk-toggleable__label-arrow:before {content: "▸";float: left;margin-right: 0.25em;color: #696969;}#sk-container-id-2 label.sk-toggleable__label-arrow:hover:before {color: black;}#sk-container-id-2 div.sk-estimator:hover label.sk-toggleable__label-arrow:before {color: black;}#sk-container-id-2 div.sk-toggleable__content {max-height: 0;max-width: 0;overflow: hidden;text-align: left;background-color: #f0f8ff;}#sk-container-id-2 div.sk-toggleable__content pre {margin: 0.2em;color: black;border-radius: 0.25em;background-color: #f0f8ff;}#sk-container-id-2 input.sk-toggleable__control:checked~div.sk-toggleable__content {max-height: 200px;max-width: 100%;overflow: auto;}#sk-container-id-2 input.sk-toggleable__control:checked~label.sk-toggleable__label-arrow:before {content: "▾";}#sk-container-id-2 div.sk-estimator input.sk-toggleable__control:checked~label.sk-toggleable__label {background-color: #d4ebff;}#sk-container-id-2 div.sk-label input.sk-toggleable__control:checked~label.sk-toggleable__label {background-color: #d4ebff;}#sk-container-id-2 input.sk-hidden--visually {border: 0;clip: rect(1px 1px 1px 1px);clip: rect(1px, 1px, 1px, 1px);height: 1px;margin: -1px;overflow: hidden;padding: 0;position: absolute;width: 1px;}#sk-container-id-2 div.sk-estimator {font-family: monospace;background-color: #f0f8ff;border: 1px dotted black;border-radius: 0.25em;box-sizing: border-box;margin-bottom: 0.5em;}#sk-container-id-2 div.sk-estimator:hover {background-color: #d4ebff;}#sk-container-id-2 div.sk-parallel-item::after {content: "";width: 100%;border-bottom: 1px solid gray;flex-grow: 1;}#sk-container-id-2 div.sk-label:hover 
label.sk-toggleable__label {background-color: #d4ebff;}#sk-container-id-2 div.sk-serial::before {content: "";position: absolute;border-left: 1px solid gray;box-sizing: border-box;top: 0;bottom: 0;left: 50%;z-index: 0;}#sk-container-id-2 div.sk-serial {display: flex;flex-direction: column;align-items: center;background-color: white;padding-right: 0.2em;padding-left: 0.2em;position: relative;}#sk-container-id-2 div.sk-item {position: relative;z-index: 1;}#sk-container-id-2 div.sk-parallel {display: flex;align-items: stretch;justify-content: center;background-color: white;position: relative;}#sk-container-id-2 div.sk-item::before, #sk-container-id-2 div.sk-parallel-item::before {content: "";position: absolute;border-left: 1px solid gray;box-sizing: border-box;top: 0;bottom: 0;left: 50%;z-index: -1;}#sk-container-id-2 div.sk-parallel-item {display: flex;flex-direction: column;z-index: 1;position: relative;background-color: white;}#sk-container-id-2 div.sk-parallel-item:first-child::after {align-self: flex-end;width: 50%;}#sk-container-id-2 div.sk-parallel-item:last-child::after {align-self: flex-start;width: 50%;}#sk-container-id-2 div.sk-parallel-item:only-child::after {width: 0;}#sk-container-id-2 div.sk-dashed-wrapped {border: 1px dashed gray;margin: 0 0.4em 0.5em 0.4em;box-sizing: border-box;padding-bottom: 0.4em;background-color: white;}#sk-container-id-2 div.sk-label label {font-family: monospace;font-weight: bold;display: inline-block;line-height: 1.2em;}#sk-container-id-2 div.sk-label-container {text-align: center;}#sk-container-id-2 div.sk-container {/* jupyter's `normalize.less` sets `[hidden] { display: none; }` but bootstrap.min.css set `[hidden] { display: none !important; }` so we also need the `!important` here to be able to override the default hidden behavior on the sphinx rendered scikit-learn.org. 
See: https://github.com/scikit-learn/scikit-learn/issues/21755 */display: inline-block !important;position: relative;}#sk-container-id-2 div.sk-text-repr-fallback {display: none;}</style><div id="sk-container-id-2" class="sk-top-container"><div class="sk-text-repr-fallback"><pre>Pipeline(steps=[('easypreprocessor',EasyPreprocessor(types= continuous dirty_float low_card_int ... date free_string useless

total_bill True False False ... False False False

tip True False False ... False False False

smoker False False False ... False False False

day False False False ... False False False

time False False False ... False False False

size False False True ... False False False[6 rows x 7 columns])),('pipeline',Pipeline(steps=[('minmaxscaler', MinMaxScaler()),('multinomialnb', MultinomialNB())]))])</pre><b>In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. <br />On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.</b></div><div class="sk-container" hidden><div class="sk-item sk-dashed-wrapped"><div class="sk-label-container"><div class="sk-label sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="sk-estimator-id-4" type="checkbox" ><label for="sk-estimator-id-4" class="sk-toggleable__label sk-toggleable__label-arrow">Pipeline</label><div class="sk-toggleable__content"><pre>Pipeline(steps=[('easypreprocessor',EasyPreprocessor(types= continuous dirty_float low_card_int ... date free_string useless

total_bill True False False ... False False False

tip True False False ... False False False

smoker False False False ... False False False

day False False False ... False False False

time False False False ... False False False

size False False True ... False False False[6 rows x 7 columns])),('pipeline',Pipeline(steps=[('minmaxscaler', MinMaxScaler()),('multinomialnb', MultinomialNB())]))])</pre></div></div></div><div class="sk-serial"><div class="sk-item"><div class="sk-estimator sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="sk-estimator-id-5" type="checkbox" ><label for="sk-estimator-id-5" class="sk-toggleable__label sk-toggleable__label-arrow">EasyPreprocessor</label><div class="sk-toggleable__content"><pre>EasyPreprocessor(types= continuous dirty_float low_card_int ... date free_string useless

total_bill True False False ... False False False

tip True False False ... False False False

smoker False False False ... False False False

day False False False ... False False False

time False False False ... False False False

size False False True ... False False False[6 rows x 7 columns])</pre></div></div></div><div class="sk-item"><div class="sk-label-container"><div class="sk-label sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="sk-estimator-id-6" type="checkbox" ><label for="sk-estimator-id-6" class="sk-toggleable__label sk-toggleable__label-arrow">pipeline: Pipeline</label><div class="sk-toggleable__content"><pre>Pipeline(steps=[('minmaxscaler', MinMaxScaler()),('multinomialnb', MultinomialNB())])</pre></div></div></div><div class="sk-serial"><div class="sk-item"><div class="sk-estimator sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="sk-estimator-id-7" type="checkbox" ><label for="sk-estimator-id-7" class="sk-toggleable__label sk-toggleable__label-arrow">MinMaxScaler</label><div class="sk-toggleable__content"><pre>MinMaxScaler()</pre></div></div></div><div class="sk-item"><div class="sk-estimator sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="sk-estimator-id-8" type="checkbox" ><label for="sk-estimator-id-8" class="sk-toggleable__label sk-toggleable__label-arrow">MultinomialNB</label><div class="sk-toggleable__content"><pre>MultinomialNB()</pre></div></div></div></div></div></div></div></div></div>

|

a714059d8d88f26113ba49c67eafc6a1

|

varadhbhatnagar/fc-claim-det-DPEGASUS

|

varadhbhatnagar

|

pegasus

| 10 | 6 |

transformers

| 0 |

summarization

| true | false | false |

apache-2.0

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 6,250 | false |

# Model Card for Pegasus for Claim Summarization

<!-- Provide a quick summary of what the model is/does. -->

This model can be used to summarize noisy claims on social media into clean and concise claims which can be used for downstream tasks in a fact-checking pipeline.

# Model Details

This is the fine-tuned D PEGASUS model with 'No Preprocessing (NP)' detailed in Table 2 in the paper.

This was the best performing model at the time of experimentation.

## Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** Varad Bhatnagar, Diptesh Kanojia and Kameswari Chebrolu

- **Model type:** Summarization

- **Language(s) (NLP):** English

- **Finetuned from model:** https://huggingface.co/sshleifer/distill-pegasus-cnn-16-4

## Model Sources

<!-- Provide the basic links for the model. -->

- **Repository:** https://github.com/varadhbhatnagar/FC-Claim-Det

- **Paper:** https://aclanthology.org/2022.coling-1.259/

## Tokenizer

Same as https://huggingface.co/sshleifer/distill-pegasus-cnn-16-4

# Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

## Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

English-to-English summarization of noisy, check-worthy claims found on social media.

## Downstream Use

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

Can be used for other tasks in a fact-checking pipeline such as claim matching and evidence retrieval.

# Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

Because the [Google Fact Check Explorer](https://toolbox.google.com/factcheck/explorer) is an ever-growing and evolving system, current Retrieval@k results may not exactly match those in the corresponding paper, whose experiments were conducted in April and May 2022.

# Training Details

## Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[Data](https://github.com/varadhbhatnagar/FC-Claim-Det/blob/main/public_data/released_data.csv)

## Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

The pretrained Distilled PEGASUS model was fine-tuned on the 567 pairs released in our paper.

### Preprocessing

No preprocessing of input is done while fine-tuning this model.

# Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

Retrieval@5 and Mean Reciprocal Rank (MRR) scores are reported.

## Results

Retrieval@5 = 34.91

MRR = 0.3

Further details can be found in the paper.
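As a rough guide to how the two metrics above are commonly defined, the sketch below scores a list of queries, each paired with a ranked list of retrieved IDs and the single relevant ID. This is a generic illustration with made-up data and hypothetical function names; the paper's exact evaluation protocol may differ.

```python
from typing import List, Sequence, Tuple

Run = Tuple[Sequence[str], str]  # (ranked retrieved IDs, relevant ID)


def mean_reciprocal_rank(runs: List[Run]) -> float:
    """Average of 1/rank of the relevant item (0 when it is not retrieved)."""
    total = 0.0
    for ranked_ids, relevant_id in runs:
        for rank, candidate in enumerate(ranked_ids, start=1):
            if candidate == relevant_id:
                total += 1.0 / rank
                break
    return total / len(runs)


def retrieval_at_k(runs: List[Run], k: int) -> float:
    """Fraction of queries whose relevant item appears in the top k results."""
    hits = sum(1 for ranked_ids, relevant_id in runs if relevant_id in ranked_ids[:k])
    return hits / len(runs)


if __name__ == "__main__":
    toy_runs = [
        (["a", "b", "c"], "a"),  # relevant item ranked first
        (["a", "b", "c"], "b"),  # relevant item ranked second
        (["a", "b", "c"], "z"),  # relevant item not retrieved
    ]
    print(mean_reciprocal_rank(toy_runs))
    print(retrieval_at_k(toy_runs, 1))
```

Retrieval@5 in the results above is reported as a percentage, so the fraction returned by `retrieval_at_k` would be multiplied by 100 for comparison.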

# Other Models from same work

[DBART](https://huggingface.co/varadhbhatnagar/fc-claim-det-DBART)

[T5-Base](https://huggingface.co/varadhbhatnagar/fc-claim-det-T5-base)

# Citation

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

```

@inproceedings{bhatnagar-etal-2022-harnessing,

title = "Harnessing Abstractive Summarization for Fact-Checked Claim Detection",

author = "Bhatnagar, Varad and

Kanojia, Diptesh and

Chebrolu, Kameswari",

booktitle = "Proceedings of the 29th International Conference on Computational Linguistics",

month = oct,

year = "2022",

address = "Gyeongju, Republic of Korea",

publisher = "International Committee on Computational Linguistics",

url = "https://aclanthology.org/2022.coling-1.259",

pages = "2934--2945",

abstract = "Social media platforms have become new battlegrounds for anti-social elements, with misinformation being the weapon of choice. Fact-checking organizations try to debunk as many claims as possible while staying true to their journalistic processes but cannot cope with its rapid dissemination. We believe that the solution lies in partial automation of the fact-checking life cycle, saving human time for tasks which require high cognition. We propose a new workflow for efficiently detecting previously fact-checked claims that uses abstractive summarization to generate crisp queries. These queries can then be executed on a general-purpose retrieval system associated with a collection of previously fact-checked claims. We curate an abstractive text summarization dataset comprising noisy claims from Twitter and their gold summaries. It is shown that retrieval performance improves 2x by using popular out-of-the-box summarization models and 3x by fine-tuning them on the accompanying dataset compared to verbatim querying. Our approach achieves Recall@5 and MRR of 35{\%} and 0.3, compared to baseline values of 10{\%} and 0.1, respectively. Our dataset, code, and models are available publicly: https://github.com/varadhbhatnagar/FC-Claim-Det/.",

}

```

# Model Card Authors

Varad Bhatnagar

# Model Card Contact

Email: varadhbhatnagar@gmail.com

# How to Get Started with the Model

Use the code below to get started with the model.

```

from transformers import PegasusForConditionalGeneration, PegasusTokenizerFast

# Load the fine-tuned tokenizer and model from the Hugging Face Hub
tokenizer = PegasusTokenizerFast.from_pretrained('varadhbhatnagar/fc-claim-det-DPEGASUS')
model = PegasusForConditionalGeneration.from_pretrained('varadhbhatnagar/fc-claim-det-DPEGASUS')

# A noisy social-media claim to summarize
text = 'world health organisation has taken a complete u turn and said that corona patients neither need isolate nor quarantine nor social distance and it can not even transmit from one patient to another'

# Encode the claim as a tensor of input IDs
tokenized_text = tokenizer.encode(text, return_tensors="pt")

# Generate a short summary with beam search
summary_ids = model.generate(tokenized_text,
                             num_beams=6,
                             no_repeat_ngram_size=2,
                             min_length=5,
                             max_length=15,
                             early_stopping=True)

# Decode the generated IDs back into text
output = tokenizer.decode(summary_ids[0], skip_special_tokens=True)
print(output)

```

|

5b7c94c80f74d25dfe6e1045cdb944b5

|

yoavgur/gpt2-bash-history-baseline2

|

yoavgur

|

gpt2

| 13 | 4 |

transformers

| 0 |

text-generation

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,333 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-bash-history-baseline2

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6480
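For a language model, an evaluation loss like the one above is often easier to interpret as perplexity. The sketch below assumes the loss is the mean per-token cross-entropy measured in nats (natural logarithm), which is the usual convention for PyTorch-based training; the function name is hypothetical.

```python
import math


def perplexity_from_loss(mean_cross_entropy: float) -> float:
    """Perplexity = exp(mean per-token cross-entropy in nats)."""
    return math.exp(mean_cross_entropy)


if __name__ == "__main__":
    # Evaluation loss reported for this model.
    print(perplexity_from_loss(1.6480))
```

Under that assumption, a loss of 1.6480 corresponds to a perplexity of a little over 5, meaning the model is on average about as uncertain as a uniform choice among five tokens.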

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 158 | 1.8653 |

| No log | 2.0 | 316 | 1.7574 |

| No log | 3.0 | 474 | 1.6939 |

| 1.9705 | 4.0 | 632 | 1.6597 |

| 1.9705 | 5.0 | 790 | 1.6480 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.0+cu111

- Datasets 1.18.3

- Tokenizers 0.11.6

|

ed795f6ccd0045793f6ba5368b572a09

|

microsoft/beit-base-patch16-384

|

microsoft

|

beit

| 6 | 387 |

transformers

| 0 |

image-classification

| true | false | true |

apache-2.0

| null |

['imagenet', 'imagenet-21k']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['image-classification', 'vision']

| false | true | true | 5,476 | false |

# BEiT (base-sized model, fine-tuned on ImageNet-1k)

BEiT model pre-trained in a self-supervised fashion on ImageNet-21k (14 million images, 21,841 classes) at resolution 224x224, and fine-tuned on ImageNet 2012 (1 million images, 1,000 classes) at resolution 384x384. It was introduced in the paper [BEIT: BERT Pre-Training of Image Transformers](https://arxiv.org/abs/2106.08254) by Hangbo Bao, Li Dong and Furu Wei and first released in [this repository](https://github.com/microsoft/unilm/tree/master/beit).

Disclaimer: The team releasing BEiT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The BEiT model is a Vision Transformer (ViT), which is a transformer encoder model (BERT-like). In contrast to the original ViT model, BEiT is pretrained on a large collection of images in a self-supervised fashion, namely ImageNet-21k, at a resolution of 224x224 pixels. The pre-training objective for the model is to predict visual tokens from the encoder of OpenAI's DALL-E's VQ-VAE, based on masked patches.

Next, the model was fine-tuned in a supervised fashion on ImageNet (also referred to as ILSVRC2012), a dataset comprising 1 million images and 1,000 classes, at resolution 384x384.

Images are presented to the model as a sequence of fixed-size patches (resolution 16x16), which are linearly embedded. Contrary to the original ViT models, BEiT models do use relative position embeddings (similar to T5) instead of absolute position embeddings, and perform classification of images by mean-pooling the final hidden states of the patches, instead of placing a linear layer on top of the final hidden state of the [CLS] token.
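
To make the patch arithmetic concrete, here is a minimal sketch of how many 16x16 patches an image yields at a given resolution. The helper name `num_patches` is hypothetical and not part of any library.

```python
def num_patches(resolution: int, patch_size: int = 16) -> int:
    """Number of non-overlapping square patches in a square image."""
    if resolution % patch_size != 0:
        raise ValueError("resolution must be a multiple of patch_size")
    per_side = resolution // patch_size
    return per_side * per_side


print(num_patches(224))  # 14 * 14 = 196 patches
print(num_patches(384))  # 24 * 24 = 576 patches
```

The sequence length the encoder sees therefore grows roughly threefold when moving from 224x224 to 384x384 inputs.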

By pre-training the model, it learns an inner representation of images that can then be used to extract features useful for downstream tasks: if you have a dataset of labeled images for instance, you can train a standard classifier by placing a linear layer on top of the pre-trained encoder. One typically places a linear layer on top of the [CLS] token, as the last hidden state of this token can be seen as a representation of an entire image. Alternatively, one can mean-pool the final hidden states of the patch embeddings, and place a linear layer on top of that.
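
The two pooling options described above can be sketched in plain Python. This is an illustration only: it assumes the final hidden states are a list of token vectors with the [CLS] vector at index 0, and the function names are hypothetical.

```python
def cls_representation(hidden_states):
    """Use the [CLS] token's final hidden state as the image representation."""
    return hidden_states[0]


def mean_pool_patches(hidden_states):
    """Average the final hidden states of the patch tokens (everything after [CLS])."""
    patches = hidden_states[1:]
    dim = len(patches[0])
    return [sum(vec[i] for vec in patches) / len(patches) for i in range(dim)]


# Toy example: one [CLS] vector followed by two patch vectors of size 2.
toy_hidden_states = [[9.0, 9.0], [1.0, 2.0], [3.0, 4.0]]
print(cls_representation(toy_hidden_states))
print(mean_pool_patches(toy_hidden_states))
```

In either case, a linear classification layer would then be applied to the resulting vector.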

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=microsoft/beit) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import BeitFeatureExtractor, BeitForImageClassification

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = BeitFeatureExtractor.from_pretrained('microsoft/beit-base-patch16-384')

model = BeitForImageClassification.from_pretrained('microsoft/beit-base-patch16-384')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

```

Currently, both the feature extractor and model support PyTorch.

## Training data

The BEiT model was pretrained on [ImageNet-21k](http://www.image-net.org/), a dataset consisting of 14 million images and 21k classes, and fine-tuned on [ImageNet](http://www.image-net.org/challenges/LSVRC/2012/), a dataset consisting of 1 million images and 1k classes.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/microsoft/unilm/blob/master/beit/datasets.py).

Images are resized/rescaled to the same resolution (384x384 for this checkpoint) and normalized across the RGB channels with mean (0.5, 0.5, 0.5) and standard deviation (0.5, 0.5, 0.5).
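
As a rough sketch of the normalization step, the snippet below maps an 8-bit pixel value to the normalized range. It assumes pixels are first rescaled to [0, 1] by dividing by 255, which is not stated above; the function name is hypothetical.

```python
MEAN = 0.5  # per-channel mean from the text
STD = 0.5   # per-channel standard deviation from the text


def normalize_pixel(value_8bit: int) -> float:
    """Rescale an 8-bit value to [0, 1] (assumed), then normalize with mean/std 0.5."""
    rescaled = value_8bit / 255.0
    return (rescaled - MEAN) / STD


print(normalize_pixel(0))    # darkest value maps to -1.0
print(normalize_pixel(255))  # brightest value maps to 1.0
```

With mean and standard deviation both 0.5, every channel ends up in the range [-1, 1].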

### Pretraining

For all pre-training related hyperparameters, we refer to page 15 of the [original paper](https://arxiv.org/abs/2106.08254).

## Evaluation results

For evaluation results on several image classification benchmarks, we refer to tables 1 and 2 of the original paper. Note that for fine-tuning, the best results are obtained with a higher resolution (384x384). Increasing the model size also tends to improve performance.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2106-08254,

author = {Hangbo Bao and

Li Dong and

Furu Wei},

title = {BEiT: {BERT} Pre-Training of Image Transformers},

journal = {CoRR},

volume = {abs/2106.08254},

year = {2021},

url = {https://arxiv.org/abs/2106.08254},

archivePrefix = {arXiv},

eprint = {2106.08254},

timestamp = {Tue, 29 Jun 2021 16:55:04 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2106-08254.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

```bibtex

@inproceedings{deng2009imagenet,

title={Imagenet: A large-scale hierarchical image database},

author={Deng, Jia and Dong, Wei and Socher, Richard and Li, Li-Jia and Li, Kai and Fei-Fei, Li},

booktitle={2009 IEEE conference on computer vision and pattern recognition},

pages={248--255},

year={2009},

organization={Ieee}

}

```

|

1dbbc509c03ae588eaa3dffd2e241f61

|

WillHeld/bert-base-cased-stsb

|

WillHeld

|

bert

| 14 | 6 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,896 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-stsb

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the GLUE STSB dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4322

- Pearson: 0.9007

- Spearmanr: 0.8963

- Combined Score: 0.8985
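
The combined score reported above is consistent with the arithmetic mean of the Pearson and Spearman correlations. The sketch below checks that, and shows one way to compute both correlations in plain Python on hypothetical toy data (ties in the ranking are not handled, for brevity).

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def ranks(values):
    """Rank positions (1-based); assumes no tied values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


def combined_score(pearson_value, spearman_value):
    """Mean of the two correlations."""
    return (pearson_value + spearman_value) / 2


# Hypothetical gold similarity scores and model predictions.
gold = [0.0, 1.5, 3.0, 4.2, 5.0]
predicted = [0.3, 1.1, 2.7, 4.6, 4.9]
print(pearson(gold, predicted), spearman(gold, predicted))

# Reproduces the reported combined score from the reported correlations.
print(round(combined_score(0.9007, 0.8963), 4))
```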

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.06

- num_epochs: 10.0
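
To illustrate how a linear schedule with a warmup ratio behaves, here is a minimal pure-Python sketch. The total of 3,600 optimizer steps is an assumption (roughly 360 steps per epoch over 10 epochs, estimated from the results table), and the function is a hypothetical stand-in rather than the Trainer's own implementation.

```python
import math


def linear_schedule_with_warmup(step, base_lr, total_steps, warmup_ratio):
    """Learning rate at `step`: linear ramp-up during warmup, then linear decay to 0."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 2e-05       # from the hyperparameter list
WARMUP_RATIO = 0.06   # from the hyperparameter list
TOTAL_STEPS = 3600    # assumption, see above

for step in (0, 100, 216, 1800, 3600):
    print(step, linear_schedule_with_warmup(step, BASE_LR, TOTAL_STEPS, WARMUP_RATIO))
```

Under these assumptions the learning rate climbs for roughly the first 6% of training, peaks at the configured value, and then decays linearly to zero.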

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson | Spearmanr | Combined Score |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:---------:|:--------------:|

| 1.6464 | 1.39 | 500 | 0.5662 | 0.8820 | 0.8814 | 0.8817 |

| 0.3329 | 2.78 | 1000 | 0.5070 | 0.8913 | 0.8883 | 0.8898 |

| 0.173 | 4.17 | 1500 | 0.4465 | 0.8988 | 0.8943 | 0.8966 |

| 0.1085 | 5.56 | 2000 | 0.4537 | 0.8958 | 0.8917 | 0.8937 |

| 0.0816 | 6.94 | 2500 | 0.4594 | 0.8977 | 0.8933 | 0.8955 |

| 0.0621 | 8.33 | 3000 | 0.4450 | 0.8997 | 0.8950 | 0.8974 |

| 0.0519 | 9.72 | 3500 | 0.4322 | 0.9007 | 0.8963 | 0.8985 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.7.1

- Datasets 1.18.3

- Tokenizers 0.11.6

|

1e0e5f307fccadc9cfac79f68b689af7

|

KoboldAI/fairseq-dense-6.7B-Shinen

|

KoboldAI

|

xglm

| 8 | 2,536 |

transformers

| 0 |

text-generation

| true | false | false |

mit

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,270 | false |

# Fairseq-dense 6.7B - Shinen

## Model Description

Fairseq-dense 6.7B-Shinen is a finetune created using Fairseq's MoE dense model. Compared to GPT-Neo-2.7-Horni, this model is much heavier on the sexual content.

**Warning: THIS model is NOT suitable for use by minors. The model will output X-rated content.**

## Training data

The training data contains user-generated stories from sexstories.com. All stories are tagged in the following way:

```

[Theme: <theme1>, <theme2> ,<theme3>]

<Story goes here>

```

### How to use

You can use this model directly with a pipeline for text generation. This example generates a different sequence each time it's run:

```py

>>> from transformers import pipeline

>>> generator = pipeline('text-generation', model='KoboldAI/fairseq-dense-6.7B-Shinen')

>>> generator("She was staring at me", do_sample=True, min_length=50)

[{'generated_text': 'She was staring at me with a look that said it all. She wanted me so badly tonight that I wanted'}]

```

### Limitations and Biases

Based on known problems with NLP technology, potential relevant factors include bias (gender, profession, race and religion).

### BibTeX entry and citation info

```

Artetxe et al. (2021): Efficient Large Scale Language Modeling with Mixtures of Experts

```

|

e298e6401a1be407af94527004b4109f

|

Yuang/unilm-base-chinese-news-sum

|

Yuang

|

bert

| 7 | 19 |

transformers

| 1 |

text2text-generation

| true | false | false |

apache-2.0

|

['zh']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['unilm']

| false | true | true | 858 | false |

# unilm-base-chinese-news-sum

```sh

pip install git+https://github.com/Liadrinz/transformers-unilm # install the HuggingFace-compatible UniLM model code

```

```py

from unilm import UniLMTokenizer, UniLMForConditionalGeneration

news_article = (

"12月23日,河北石家庄。8岁哥哥轻车熟路哄睡弟弟,姿势标准动作熟练。"

"妈妈杨女士表示:哥哥很喜欢弟弟,因为心思比较细,自己平时带孩子的习惯他都会跟着学习,"

"哄睡孩子也都会争着来,技巧很娴熟,两人在一块很有爱,自己感到很幸福,平时帮了自己很大的忙,感恩有这么乖的宝宝。"

)

tokenizer = UniLMTokenizer.from_pretrained("Yuang/unilm-base-chinese-news-sum")

model = UniLMForConditionalGeneration.from_pretrained("Yuang/unilm-base-chinese-news-sum")

inputs = tokenizer(news_article, return_tensors="pt")

output_ids = model.generate(**inputs, max_new_tokens=16)

output_text = tokenizer.decode(output_ids[0])

print(output_text) # "[CLS] <news_article> [SEP] <news_summary> [SEP]"

news_summary = output_text.split("[SEP]")[1].strip()

print(news_summary)

```
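
The last step of the example above splits the decoded string on `[SEP]`. The sketch below isolates that parsing step and adds a guard for outputs without a separator; the helper name and the sample strings are hypothetical.

```python
def extract_summary(output_text: str) -> str:
    """Return the text between the first and second [SEP], or '' if there is no [SEP]."""
    parts = output_text.split("[SEP]")
    if len(parts) < 2:
        return ""
    return parts[1].strip()


# Complete output: article and summary are both terminated by [SEP].
print(extract_summary("[CLS] article text [SEP] summary text [SEP]"))
# Truncated output: generation stopped before the closing [SEP].
print(extract_summary("[CLS] article text [SEP] partial summary"))
```

The guard matters mainly when generation is cut off by `max_new_tokens` or when the decoded text is malformed.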

|

16675c0877927c8da4182bfdfa3700ae

|

deblagoj/distilbert-base-uncased-finetuned-emotion

|

deblagoj

|

distilbert

| 28 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['emotion']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,343 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2225

- Accuracy: 0.919

- F1: 0.9191

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.814 | 1.0 | 250 | 0.3153 | 0.904 | 0.9016 |

| 0.2515 | 2.0 | 500 | 0.2225 | 0.919 | 0.9191 |
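
The step counts in the table follow from the batch size. A small sketch, assuming a 16,000-example training split and a single device (both assumptions, though they are consistent with 250 steps per epoch at batch size 64):

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Optimizer steps per epoch when the last partial batch is kept."""
    return math.ceil(num_examples / batch_size)


NUM_EXAMPLES = 16_000  # assumption, see above
BATCH_SIZE = 64        # train_batch_size from the hyperparameter list
NUM_EPOCHS = 2         # num_epochs from the hyperparameter list

per_epoch = steps_per_epoch(NUM_EXAMPLES, BATCH_SIZE)
print(per_epoch, per_epoch * NUM_EPOCHS)  # 250 steps per epoch, 500 in total
```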

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu116

- Datasets 2.6.1

- Tokenizers 0.13.1

|

e4ff784545aac7f2f902a872bc7c6536

|

mrm8488/t5-small-finetuned-text-simplification

|

mrm8488

|

t5

| 11 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null |

['wiki_auto_asset_turk']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,859 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-text-simplification

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the wiki_auto_asset_turk dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1217

- Rouge2 Precision: 0.5537

- Rouge2 Recall: 0.4251

- Rouge2 Fmeasure: 0.4616
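
For readers unfamiliar with the metric, the following is a simplified sketch of ROUGE-2 for a single reference/prediction pair, using whitespace tokenization and clipped bigram counts. It is not the evaluation code used for this model; reported scores are typically averaged per example, which is why the aggregate F-measure need not equal the harmonic mean of the aggregate precision and recall.

```python
from collections import Counter


def bigrams(tokens):
    """Multiset of adjacent token pairs."""
    return Counter(zip(tokens, tokens[1:]))


def rouge2(reference: str, prediction: str):
    """Return (precision, recall, f_measure) based on clipped bigram overlap."""
    ref = bigrams(reference.split())
    pred = bigrams(prediction.split())
    overlap = sum((ref & pred).values())
    precision = overlap / max(1, sum(pred.values()))
    recall = overlap / max(1, sum(ref.values()))
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical sentences: the prediction is a short prefix of the reference.
print(rouge2("the cat sat on the mat", "the cat sat"))
```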

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge2 Precision | Rouge2 Recall | Rouge2 Fmeasure |

|:-------------:|:-----:|:-----:|:---------------:|:----------------:|:-------------:|:---------------:|

| 0.1604 | 1.0 | 15119 | 0.1156 | 0.5567 | 0.4266 | 0.4633 |

| 0.1573 | 2.0 | 30238 | 0.1163 | 0.5534 | 0.4258 | 0.462 |

| 0.1552 | 3.0 | 45357 | 0.1197 | 0.5527 | 0.4244 | 0.4608 |

| 0.1514 | 4.0 | 60476 | 0.1214 | 0.5528 | 0.4257 | 0.4617 |

| 0.1524 | 5.0 | 75595 | 0.1217 | 0.5537 | 0.4251 | 0.4616 |

### Framework versions

- Transformers 4.22.0

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

91b5322cd79ec24f9edbb93e41baa655

|

an303042/Jocelyn_Hobbie_Diffusion_v1

|

an303042

| null | 23 | 3 |

diffusers

| 1 |

text-to-image

| false | false | false |

creativeml-openrail-m

|

['en']

| null | null | 2 | 1 | 1 | 0 | 0 | 0 | 0 |

['stable-diffusion', 'text-to-image', 'image-to-image', 'diffusers']

| false | true | true | 1,643 | false |

### Jocelyn Hobbie Diffusion v1

This model was created to celebrate the works of Jocelyn Hobbie, a wonderful contemporary artist.

Check out her work at www.jocelynhobbie.com and @jocelynhobbie.

**Token to use is "jclnhbe style"**

### 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion documentation](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce or share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

### Examples

|

9188b06f898687a1aba05a90eb0493f3

|

Kaku0o0/distilbert-base-uncased-finetuned-squad

|

Kaku0o0

|

distilbert

| 16 | 3 |

transformers

| 0 |

question-answering

| true | false | false |

apache-2.0

| null |

['squad']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,279 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6090

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 274 | 1.5943 |

| 0.9165 | 2.0 | 548 | 1.5836 |

| 0.9165 | 3.0 | 822 | 1.6090 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

d72b717e9045dd10b1935091cc228aee

|

jonatasgrosman/exp_w2v2r_de_xls-r_accent_germany-8_austria-2_s452

|

jonatasgrosman

|

wav2vec2

| 10 | 3 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['de']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'de']

| false | true | true | 480 | false |

# exp_w2v2r_de_xls-r_accent_germany-8_austria-2_s452

Fine-tuned [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) for speech recognition using the train split of [Common Voice 7.0 (de)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
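
One way to verify the sampling rate of a WAV file before transcription is the standard-library `wave` module. The sketch below builds a short silent clip in memory purely for demonstration; the helper names are hypothetical, and actual resampling would need an audio library that is not shown here.

```python
import io
import wave

EXPECTED_RATE = 16_000  # 16 kHz, as required above


def make_silent_wav(rate: int, n_frames: int = 160) -> bytes:
    """Create a mono 16-bit silent WAV clip in memory (demo data only)."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(rate)
        wav_file.writeframes(b"\x00\x00" * n_frames)
    return buffer.getvalue()


def wav_sample_rate(wav_bytes: bytes) -> int:
    """Read the sampling rate from WAV data."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav_file:
        return wav_file.getframerate()


def needs_resampling(wav_bytes: bytes) -> bool:
    """True if the clip is not sampled at the expected 16 kHz."""
    return wav_sample_rate(wav_bytes) != EXPECTED_RATE


print(needs_resampling(make_silent_wav(16_000)))
print(needs_resampling(make_silent_wav(8_000)))
```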

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

ace2107aff97558bfdd0b20289eec12f

|

luccazen/finetuning-sentiment-model-3000-samples

|

luccazen

|

distilbert

| 19 | 11 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['imdb']

| null | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,055 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3026

- Accuracy: 0.8667

- F1: 0.8667

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

b0c2608194605d909e3c6fefc09b6c05

|

jonatasgrosman/exp_w2v2t_zh-cn_no-pretraining_s805

|

jonatasgrosman

|

wav2vec2

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['zh-CN']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'zh-CN']

| false | true | true | 420 | false |

# exp_w2v2t_zh-cn_no-pretraining_s805

Fine-tuned randomly initialized wav2vec2 model for speech recognition using the train split of [Common Voice 7.0 (zh-CN)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

8a91a3682d09b46388d94c6315ab861a

|

nandysoham/Human_Development_Index-clustered

|

nandysoham

|

distilbert

| 8 | 0 |

transformers

| 0 |

question-answering

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,877 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# nandysoham/Human_Development_Index-clustered

This model is a fine-tuned version of [nandysoham16/4-clustered_aug](https://huggingface.co/nandysoham16/4-clustered_aug) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.2234

- Train End Logits Accuracy: 0.9410

- Train Start Logits Accuracy: 0.9479

- Validation Loss: 1.1060

- Validation End Logits Accuracy: 0.6667

- Validation Start Logits Accuracy: 0.6667

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 18, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32
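
The `PolynomialDecay` configuration above (power 1.0, no cycling) amounts to a linear decay from 2e-05 to 0 over 18 steps. A plain-Python sketch of that formula, written as a stand-alone function rather than the Keras class itself:

```python
def polynomial_decay(step, initial_lr=2e-05, decay_steps=18, end_lr=0.0, power=1.0):
    """Polynomial decay without cycling: the rate stays at end_lr after decay_steps."""
    step = min(step, decay_steps)
    fraction = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * fraction ** power + end_lr


for step in (0, 9, 18, 30):
    print(step, polynomial_decay(step))
```

With only 18 decay steps, the learning rate reaches zero very early, which matches the single logged epoch.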

### Training results

| Train Loss | Train End Logits Accuracy | Train Start Logits Accuracy | Validation Loss | Validation End Logits Accuracy | Validation Start Logits Accuracy | Epoch |

|:----------:|:-------------------------:|:---------------------------:|:---------------:|:------------------------------:|:--------------------------------:|:-----:|

| 0.2234 | 0.9410 | 0.9479 | 1.1060 | 0.6667 | 0.6667 | 0 |

### Framework versions

- Transformers 4.26.0

- TensorFlow 2.9.2

- Datasets 2.9.0

- Tokenizers 0.13.2

|

84fceefdce7b88d4c94bcbc34365dc34

|

cwkeam/mctct-large

|

cwkeam

|

mctct

| 9 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['en']

|

['librispeech_asr', 'common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['speech']

| false | true | true | 2,641 | false |

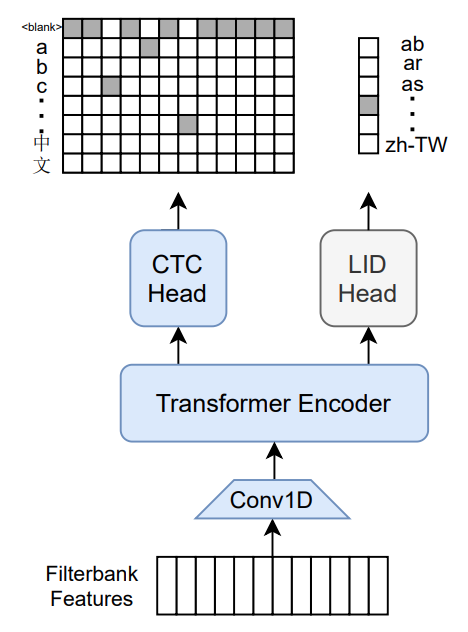

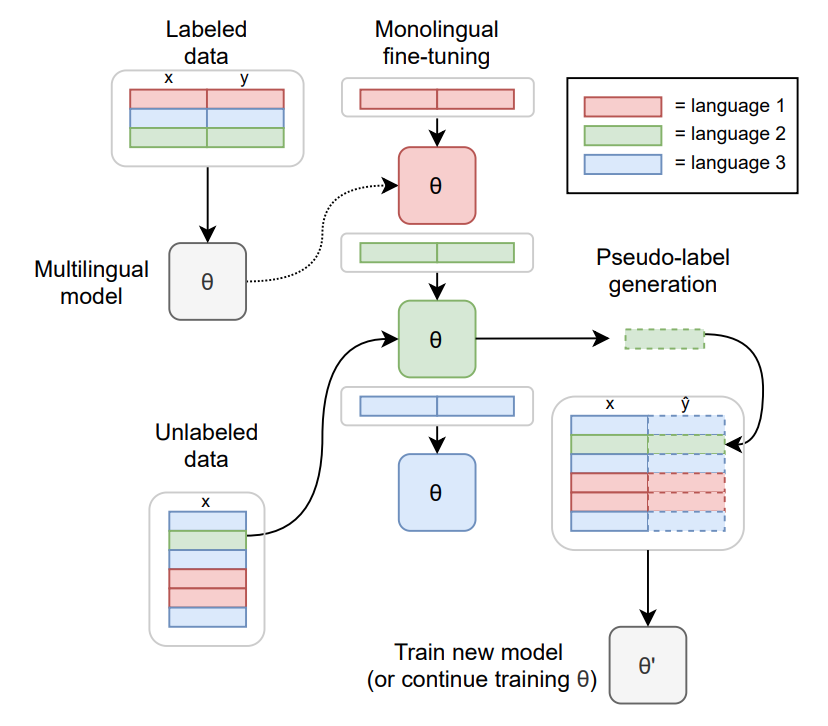

# M-CTC-T

Massively multilingual speech recognizer from Meta AI. The model is a 1B-param transformer encoder, with a CTC head over 8065 character labels and a language identification head over 60 language ID labels. It is trained on Common Voice (version 6.1, December 2020 release) and VoxPopuli. After training on Common Voice and VoxPopuli, the model is trained on Common Voice only. The labels are unnormalized character-level transcripts (punctuation and capitalization are not removed). The model takes as input Mel filterbank features from a 16 kHz audio signal.

The original Flashlight code, model checkpoints, and Colab notebook can be found at https://github.com/flashlight/wav2letter/tree/main/recipes/mling_pl .

## Citation

[Paper](https://arxiv.org/abs/2111.00161)

Authors: Loren Lugosch, Tatiana Likhomanenko, Gabriel Synnaeve, Ronan Collobert

```

@article{lugosch2021pseudo,

title={Pseudo-Labeling for Massively Multilingual Speech Recognition},

author={Lugosch, Loren and Likhomanenko, Tatiana and Synnaeve, Gabriel and Collobert, Ronan},

journal={ICASSP},

year={2022}

}

```

Additional thanks to [Chan Woo Kim](https://huggingface.co/cwkeam) and [Patrick von Platen](https://huggingface.co/patrickvonplaten) for porting the model from Flashlight to PyTorch.

# Training method

TO-DO: replace with the training diagram from paper

For more information on how the model was trained, please take a look at the [official paper](https://arxiv.org/abs/2111.00161).

# Usage

To transcribe audio files the model can be used as a standalone acoustic model as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import MCTCTForCTC, MCTCTProcessor

model = MCTCTForCTC.from_pretrained("speechbrain/mctct-large")

processor = MCTCTProcessor.from_pretrained("speechbrain/mctct-large")

# load dummy dataset and read soundfiles

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# tokenize

input_features = processor(ds[0]["audio"]["array"], return_tensors="pt").input_features

# retrieve logits

logits = model(input_features).logits

# take argmax and decode

predicted_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(predicted_ids)

```
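
In the example above, the argmax over the logits yields one label per frame, and decoding collapses that frame sequence into text. The sketch below shows the usual greedy CTC collapse rule on integer IDs. The blank ID of 0 and the sample sequence are assumptions for illustration; in practice `processor.batch_decode` handles this step.

```python
def ctc_greedy_collapse(frame_ids, blank_id=0):
    """Merge consecutive repeats, then drop blanks (standard greedy CTC decoding)."""
    collapsed = []
    previous = None
    for label in frame_ids:
        if label != previous and label != blank_id:
            collapsed.append(label)
        previous = label
    return collapsed


# Hypothetical per-frame argmax output: blank, 3, 3, blank, 3, 5, 5, blank.
print(ctc_greedy_collapse([0, 3, 3, 0, 3, 5, 5, 0]))
```

The blank between the two runs of 3 is what allows a genuinely repeated character to survive the merge.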

Results for Common Voice, averaged over all languages:

*Character error rate (CER)*:

| Valid | Test |

|-------|------|

| 21.4 | 23.3 |
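
Character error rate is commonly defined as the character-level edit distance between hypothesis and reference, divided by the reference length; the table values appear to be percentages. A minimal sketch with hypothetical strings, not the evaluation script behind the reported numbers:

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance (insertions, deletions, substitutions) over characters."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            cost = 0 if ref_char == hyp_char else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution or match
        previous = current
    return previous[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate as a fraction of the reference length."""
    return edit_distance(reference, hypothesis) / max(1, len(reference))


print(100 * cer("kitten", "sitting"))  # 3 edits over 6 reference characters
```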

|

ed65c127da6e0aa38494aefb4897f005

|

Helsinki-NLP/opus-mt-lue-en

|

Helsinki-NLP

|

marian

| 10 | 14 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 776 | false |

### opus-mt-lue-en

* source languages: lue

* target languages: en

* OPUS readme: [lue-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/lue-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-09.zip](https://object.pouta.csc.fi/OPUS-MT-models/lue-en/opus-2020-01-09.zip)

* test set translations: [opus-2020-01-09.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/lue-en/opus-2020-01-09.test.txt)

* test set scores: [opus-2020-01-09.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/lue-en/opus-2020-01-09.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.lue.en | 31.7 | 0.469 |

|

f491a004b92f2843883c300950a40c6a

|

IIIT-L/hing-mbert-finetuned-code-mixed-DS

|

IIIT-L

|

bert

| 10 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

cc-by-4.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,380 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hing-mbert-finetuned-code-mixed-DS

This model is a fine-tuned version of [l3cube-pune/hing-mbert](https://huggingface.co/l3cube-pune/hing-mbert) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7248

- Accuracy: 0.7364

- Precision: 0.6847

- Recall: 0.7048

- F1: 0.6901

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2.7277800745684633e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 43

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 0.6977 | 2.0 | 497 | 0.7248 | 0.7364 | 0.6847 | 0.7048 | 0.6901 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.1+cu111

- Datasets 2.3.2

- Tokenizers 0.12.1

|

e21f506810cb337315a23c35890a68f0

|

BAAI/AltCLIP-m9

|

BAAI

|

altclip

| 10 | 68 |

transformers

| 5 |

text-to-image

| true | false | false |

creativeml-openrail-m

|

['zh']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['stable-diffusion', 'stable-diffusion-diffusers', 'text-to-image', 'zh', 'Chinese', 'multilingual', 'English(En)', 'Chinese(Zh)', 'Spanish(Es)', 'French(Fr)', 'Russian(Ru)', 'Japanese(Ja)', 'Korean(Ko)', 'Arabic(Ar)', 'Italian(It)']

| false | true | true | 4,172 | false |

# AltCLIP-m9

It supports English(En), Chinese(Zh), Spanish(Es), French(Fr), Russian(Ru), Japanese(Ja), Korean(Ko), Arabic(Ar) and Italian(It) languages.

| 名称 Name | 任务 Task | 语言 Language(s) | 模型 Model | Github |

|:------------------:|:----------:|:-------------------:|:--------:|:------:|

| AltCLIP-m9 | Text-Image | Multilingual | CLIP | [FlagAI](https://github.com/FlagAI-Open/FlagAI) |

## 简介 Brief Introduction

我们提出了一个简单高效的方法去训练更加优秀的九语CLIP模型。命名为AltCLIP-m9。AltCLIP训练数据来自 [WuDao数据集](https://data.baai.ac.cn/details/WuDaoCorporaText) 和 [LAION](https://huggingface.co/datasets/ChristophSchuhmann/improved_aesthetics_6plus)

AltCLIP-m9模型可以为本项目中的AltDiffusion-m9模型提供支持,关于AltDiffusion-m9模型的具体信息可查看[此教程](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltDiffusion/README.md) 。

模型代码已经在 [FlagAI](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltCLIP) 上开源,权重位于我们搭建的 [modelhub](https://model.baai.ac.cn/model-detail/100077) 上。我们还提供了微调,推理,验证的脚本,欢迎试用。

We propose a simple and efficient method to train a better multilingual CLIP model, named AltCLIP-m9. AltCLIP-m9 is trained on data from the [WuDao dataset](https://data.baai.ac.cn/details/WuDaoCorporaText) and [LAION](https://huggingface.co/datasets/laion/laion2B-en).

The AltCLIP-m9 model can provide support for the AltDiffusion-m9 model in this project. Specific information on the AltDiffusion model can be found in [this tutorial](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltDiffusion/README.md).

The model code has been open sourced on [FlagAI](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltCLIP) and the weights are located on [modelhub](https://model.baai.ac.cn/model-detail/100077). We also provide scripts for fine-tuning, inference, and validation, so feel free to try them out.

## 引用 Citation

关于AltCLIP,我们已经推出了相关报告,有更多细节可以查阅,如对您的工作有帮助,欢迎引用。

If you find this work helpful, please consider citing it:

```

@article{https://doi.org/10.48550/arxiv.2211.06679,

doi = {10.48550/ARXIV.2211.06679},

url = {https://arxiv.org/abs/2211.06679},

author = {Chen, Zhongzhi and Liu, Guang and Zhang, Bo-Wen and Ye, Fulong and Yang, Qinghong and Wu, Ledell},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences},

title = {AltCLIP: Altering the Language Encoder in CLIP for Extended Language Capabilities},

publisher = {arXiv},

year = {2022},

copyright = {arXiv.org perpetual, non-exclusive license}

}

```

## 训练 Training

训练共有两个阶段。

在平行知识蒸馏阶段,我们只是使用平行语料文本来进行蒸馏(平行语料相对于图文对更容易获取且数量更大)。在多语对比学习阶段,我们使用少量的中-英 图像-文本对(每种语言6百万)来训练我们的文本编码器以更好地适应图像编码器。

There are two phases of training.

In the parallel knowledge distillation phase, we use only parallel corpus texts for distillation (parallel corpora are easier to obtain and larger than image-text pair datasets). In the multilingual contrastive learning phase, we use a small number of text-image pairs (about 6 million in each language) to train our text encoder to better fit the image encoder.

## 下游效果 Performance

## 可视化效果 Visualization effects

基于AltCLIP,我们还开发了AltDiffusion模型,可视化效果如下。

Based on AltCLIP, we have also developed the AltDiffusion model, visualized as follows.

## 模型推理 Inference

Please download the code from [FlagAI AltCLIP](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltCLIP)

```python

from PIL import Image

import requests

# transformers version >= 4.21.0

from modeling_altclip import AltCLIP

from processing_altclip import AltCLIPProcessor

# if the repo is private, pass `use_auth_token=True` to `from_pretrained`

model = AltCLIP.from_pretrained("BAAI/AltCLIP-m9")

processor = AltCLIPProcessor.from_pretrained("BAAI/AltCLIP-m9")

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

inputs = processor(text=["a photo of a cat", "a photo of a dog"], images=image, return_tensors="pt", padding=True)

outputs = model(**inputs)

logits_per_image = outputs.logits_per_image # this is the image-text similarity score

probs = logits_per_image.softmax(dim=1) # we can take the softmax to get the label probabilities

```
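
The final softmax in the example above turns the image-text similarity logits into probabilities over the candidate captions. A plain-Python sketch of that step, using hypothetical logit values:

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    highest = max(logits)
    exps = [math.exp(value - highest) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical similarity scores for ["a photo of a cat", "a photo of a dog"].
probabilities = softmax([24.5, 19.0])
print(probabilities)
```

The caption with the larger logit receives the larger probability, and the probabilities sum to 1.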

|

68750b38809d102196dd00ad93645524

|

gabrielgmendonca/bert-base-portuguese-cased-finetuned-chico-xavier

|

gabrielgmendonca

|

bert

| 17 | 10 |

transformers

| 0 |

fill-mask

| true | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,357 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-portuguese-cased-finetuned-chico-xavier

This model is a fine-tuned version of [neuralmind/bert-base-portuguese-cased](https://huggingface.co/neuralmind/bert-base-portuguese-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7196
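For a fill-mask model, the evaluation loss is often easier to read as a perplexity. The sketch below assumes the reported loss is the mean cross-entropy (in nats) over masked tokens, which is how the Trainer usually computes it for masked language modeling; the card itself does not state this.

```python
import math

# Evaluation loss reported in this card (assumed to be mean cross-entropy in nats).
eval_loss = 1.7196

# Perplexity is the exponential of the mean cross-entropy.
perplexity = math.exp(eval_loss)
print(f"perplexity ≈ {perplexity:.2f}")
```

Under that assumption, a lower value means the model spreads less probability mass over wrong tokens at each masked position.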

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 2.0733 | 1.0 | 561 | 1.8147 |
| 1.8779 | 2.0 | 1122 | 1.7624 |
| 1.8345 | 3.0 | 1683 | 1.7206 |
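The step counts in the table follow from the hyperparameters listed above. As a rough sketch, assuming one optimizer step per batch on a single device, no gradient accumulation, and no warmup (none of which the card states explicitly), the training set size and the linearly decayed learning rate can be estimated like this:

```python
# Values taken from the card: 1683 steps over 3 epochs, batch size 64, peak LR 2e-05.
TOTAL_STEPS = 1683
NUM_EPOCHS = 3
BATCH_SIZE = 64
PEAK_LR = 2e-05

steps_per_epoch = TOTAL_STEPS // NUM_EPOCHS  # 561, matching the table

# Upper bound on the number of training examples (the last batch may be partial).
max_train_examples = steps_per_epoch * BATCH_SIZE


def linear_lr(step, peak_lr=PEAK_LR, total_steps=TOTAL_STEPS):
    """Learning rate under a linear decay to zero with no warmup (assumed)."""
    return peak_lr * max(0.0, 1.0 - step / total_steps)


for step in (0, 561, 1122, 1683):
    print(f"step {step:4d}: lr = {linear_lr(step):.2e}")
```

Under these assumptions the training set holds at most 561 × 64 examples, and the learning rate has dropped to two thirds of its peak by the end of the first epoch.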

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

|

d5d143c8259c4d79cce030265b0aa0fc

|

Yagorka/ddpm-butterflies-256_new_data

|

Yagorka

| null | 13 | 0 |

diffusers

| 0 | null | false | false | false |

apache-2.0

|

['en']

|

['imagefolder']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,219 | false |


# ddpm-butterflies-256_new_data

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library on the `imagefolder` dataset.

## Intended uses & limitations

#### How to use

```python
# TODO: add an example code snippet for running this diffusion pipeline
```
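Until the author fills in the snippet above, the following is a minimal sketch of how such a checkpoint is typically sampled. It assumes the repository contains a standard unconditional `DDPMPipeline` saved by the Diffusers training script, which the card does not confirm; the helper name `sample_butterflies` and its defaults are illustrative only.

```python
def sample_butterflies(
    repo_id="Yagorka/ddpm-butterflies-256_new_data",
    num_images=1,
    num_inference_steps=1000,
    seed=0,
):
    """Generate images with an unconditional DDPM pipeline (hypothetical helper).

    Assumes `repo_id` hosts a checkpoint loadable by `DDPMPipeline`.
    """
    # Imported lazily so that defining the helper does not require the libraries.
    import torch
    from diffusers import DDPMPipeline

    pipeline = DDPMPipeline.from_pretrained(repo_id)

    # A seeded generator makes the sampled images reproducible.
    generator = torch.Generator().manual_seed(seed)
    result = pipeline(
        batch_size=num_images,
        generator=generator,
        num_inference_steps=num_inference_steps,
    )
    return result.images  # list of PIL images
```

Calling `sample_butterflies()[0].save("butterfly.png")` would write one sample to disk. Sampling with 1000 denoising steps is slow on CPU, so a GPU or a smaller `num_inference_steps` may be preferable for quick checks.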

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 2

- eval_batch_size: 2

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_power: None

- ema_max_decay: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/Yagorka/ddpm-butterflies-256_new_data/tensorboard?#scalars)

|

1c9932db46508cc533b9cde330e7af62

|

DunnBC22/distilbert-base-uncased_research_articles_multilabel

|

DunnBC22

|

distilbert

| 10 | 2 |

transformers

| 1 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,647 | false |


# distilbert-base-uncased_research_articles_multilabel

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unspecified dataset (the Trainer recorded the dataset name as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.1956

- F1: 0.8395

- Roc Auc: 0.8909

- Accuracy: 0.6977

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Roc Auc | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:------:|:-------:|:--------:|
| 0.3043 | 1.0 | 263 | 0.2199 | 0.8198 | 0.8686 | 0.6829 |
| 0.2037 | 2.0 | 526 | 0.1988 | 0.8355 | 0.8845 | 0.7010 |
| 0.1756 | 3.0 | 789 | 0.1956 | 0.8395 | 0.8909 | 0.6977 |
| 0.1579 | 4.0 | 1052 | 0.1964 | 0.8371 | 0.8902 | 0.6919 |
| 0.1461 | 5.0 | 1315 | 0.1991 | 0.8353 | 0.8874 | 0.6953 |
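The evaluation results reported at the top of this card (loss 0.1956, F1 0.8395) coincide with the epoch 3 row rather than the final epoch, while the training loss keeps falling through epoch 5. The card does not say how the checkpoint was selected; the sketch below simply shows which row wins under two plausible criteria, using values copied from the table.

```python
# Rows copied from the training results table: (epoch, validation loss, F1).
rows = [
    (1, 0.2199, 0.8198),
    (2, 0.1988, 0.8355),
    (3, 0.1956, 0.8395),
    (4, 0.1964, 0.8371),
    (5, 0.1991, 0.8353),
]

# Pick the best epoch under each criterion.
best_by_loss = min(rows, key=lambda row: row[1])
best_by_f1 = max(rows, key=lambda row: row[2])

print("lowest validation loss at epoch", best_by_loss[0])
print("highest F1 at epoch", best_by_f1[0])
```

Both criteria point to epoch 3, which is consistent with mild overfitting in the last two epochs. Accuracy is the exception: it peaks at epoch 2 (0.7010).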

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1

- Datasets 2.4.0

- Tokenizers 0.12.1

|

c76d52c9647733727a993ed42ba09e51

|

Helsinki-NLP/opus-mt-tc-big-en-cat_oci_spa

|

Helsinki-NLP

|

marian

| 13 | 7 |

transformers

| 1 |

translation

| true | true | false |

cc-by-4.0

|

['ca', 'en', 'es', 'oc']

| null | null | 2 | 1 | 1 | 0 | 0 | 0 | 0 |

['translation', 'opus-mt-tc']

| true | true | true | 6,454 | false |

# opus-mt-tc-big-en-cat_oci_spa

Neural machine translation model for translating from English (en) to Catalan, Occitan and Spanish (cat+oci+spa).

This model is part of the [OPUS-MT project](https://github.com/Helsinki-NLP/Opus-MT), an effort to make neural machine translation models widely available and accessible for many languages in the world. All models were originally trained using the [Marian NMT](https://marian-nmt.github.io/) framework, an efficient NMT implementation written in pure C++. The models have been converted to PyTorch using the Hugging Face transformers library. Training data is taken from [OPUS](https://opus.nlpl.eu/) and training pipelines use the procedures of [OPUS-MT-train](https://github.com/Helsinki-NLP/Opus-MT-train).

* Publications: [OPUS-MT – Building open translation services for the World](https://aclanthology.org/2020.eamt-1.61/) and [The Tatoeba Translation Challenge – Realistic Data Sets for Low Resource and Multilingual MT](https://aclanthology.org/2020.wmt-1.139/) (Please cite them if you use this model.)

```
@inproceedings{tiedemann-thottingal-2020-opus,
    title = "{OPUS}-{MT} {--} Building open translation services for the World",
    author = {Tiedemann, J{\"o}rg and Thottingal, Santhosh},
    booktitle = "Proceedings of the 22nd Annual Conference of the European Association for Machine Translation",
    month = nov,
    year = "2020",
    address = "Lisboa, Portugal",
    publisher = "European Association for Machine Translation",
    url = "https://aclanthology.org/2020.eamt-1.61",
    pages = "479--480",
}

@inproceedings{tiedemann-2020-tatoeba,
    title = "The Tatoeba Translation Challenge {--} Realistic Data Sets for Low Resource and Multilingual {MT}",
    author = {Tiedemann, J{\"o}rg},
    booktitle = "Proceedings of the Fifth Conference on Machine Translation",
    month = nov,
    year = "2020",
    address = "Online",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2020.wmt-1.139",
    pages = "1174--1182",
}
```

## Model info

* Release: 2022-03-13

* source language(s): eng

* target language(s): cat spa

* valid target language labels: >>cat<< >>spa<<