| Column | Type | Range / classes | Nullable (⌀) |
|---|---|---|---|
| repo_id | string | length 4 to 110 | |
| author | string | length 2 to 27 | yes |
| model_type | string | length 2 to 29 | yes |
| files_per_repo | int64 | 2 to 15.4k | |
| downloads_30d | int64 | 0 to 19.9M | |
| library | string | length 2 to 37 | yes |
| likes | int64 | 0 to 4.34k | |
| pipeline | string | length 5 to 30 | yes |
| pytorch | bool | 2 classes | |
| tensorflow | bool | 2 classes | |
| jax | bool | 2 classes | |
| license | string | length 2 to 30 | |
| languages | string | length 4 to 1.63k | yes |
| datasets | string | length 2 to 2.58k | yes |
| co2 | string (categorical) | 29 values | |
| prs_count | int64 | 0 to 125 | |
| prs_open | int64 | 0 to 120 | |
| prs_merged | int64 | 0 to 15 | |
| prs_closed | int64 | 0 to 28 | |
| discussions_count | int64 | 0 to 218 | |
| discussions_open | int64 | 0 to 148 | |
| discussions_closed | int64 | 0 to 70 | |
| tags | string | length 2 to 513 | |
| has_model_index | bool | 2 classes | |
| has_metadata | bool | 1 class | |
| has_text | bool | 1 class | |
| text_length | int64 | 401 to 598k | |
| is_nc | bool | 1 class | |
| readme | string | length 0 to 598k | |
| hash | string | length 32 | |

Each record below lists these fields in this order, separated by `|`, with the `readme` field rendered inline before the record's `hash`.

| oskarandrsson/mt-uk-sv-finetuned | oskarandrsson | marian | 11 | 19 | transformers | 0 | translation | true | false | false | apache-2.0 | ['uk', 'sv'] | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer', 'translation'] | true | true | true | 1,171 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt-uk-sv-finetuned

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-uk-sv](https://huggingface.co/Helsinki-NLP/opus-mt-uk-sv) on an unspecified dataset.

It achieves the following results on the evaluation set:

- eval_loss: 1.4210

- eval_bleu: 40.6634

- eval_runtime: 966.5303

- eval_samples_per_second: 18.744

- eval_steps_per_second: 4.687

- epoch: 6.0

- step: 40764
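As a rough consistency check, the throughput figures above imply the size of the evaluation set, and the step count implies the number of optimizer steps per epoch. The sketch below assumes that both throughput numbers are averaged over the whole `eval_runtime` (in seconds), that no gradient accumulation was used, and that `step` was logged exactly at the end of epoch 6; the card itself does not state the dataset sizes.

```python
# Values copied from the evaluation results and hyperparameters on this card
eval_runtime_s = 966.5303
eval_samples_per_second = 18.744
eval_steps_per_second = 4.687
eval_batch_size = 4
train_batch_size = 24
epoch = 6.0
step = 40764

# Two independent estimates of the evaluation-set size
eval_samples = eval_samples_per_second * eval_runtime_s
eval_steps = eval_steps_per_second * eval_runtime_s

# Optimizer steps per epoch and an upper bound on the training-set size
# (assumes no gradient accumulation, which the card does not list)
steps_per_epoch = step / epoch
approx_train_examples = steps_per_epoch * train_batch_size

print(f"~{eval_samples:.0f} eval samples over ~{eval_steps:.0f} eval steps")
print(f"{steps_per_epoch:.0f} steps/epoch, up to ~{approx_train_examples:.0f} training examples")
```

Under these assumptions the evaluation set has about 18,117 examples (the samples-per-second and steps-per-second estimates differ by less than one batch of 4), and training ran 6,794 steps per epoch, or at most about 163,056 training pairs at batch size 24.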

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 24

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.25.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.6.1

- Tokenizers 0.13.1

| 163e9e496198d44a5e1edf8af147c856 |

| sayakpaul/glpn-nyu-finetuned-diode-221116-110652 | sayakpaul | glpn | 7 | 1 | transformers | 0 | depth-estimation | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['vision', 'depth-estimation', 'generated_from_trainer'] | true | true | true | 2,737 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# glpn-nyu-finetuned-diode-221116-110652

This model is a fine-tuned version of [vinvino02/glpn-nyu](https://huggingface.co/vinvino02/glpn-nyu) on the diode-subset dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4018

- Mae: 0.3272

- Rmse: 0.4546

- Abs Rel: 0.3934

- Log Mae: 0.1380

- Log Rmse: 0.1907

- Delta1: 0.4598

- Delta2: 0.7659

- Delta3: 0.9082
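The metrics above are the usual monocular depth-estimation metrics, but the card does not define them. The sketch below uses the common definitions, which are an assumption rather than a copy of the training script: MAE and RMSE on raw depth, Abs Rel as the mean of |pred - gt| / gt, Log MAE and Log RMSE on log depth (natural log here; some implementations use log10), and Delta_i as the fraction of pixels whose ratio max(pred/gt, gt/pred) is below 1.25^i. `depth_metrics` is a hypothetical helper that works on flat lists of positive depths.

```python
import math

def depth_metrics(pred, gt):
    """Common depth-estimation metrics for flat lists of positive depths (pred vs. ground truth)."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and of equal length")
    n = len(gt)
    abs_err = [abs(p - g) for p, g in zip(pred, gt)]
    log_err = [abs(math.log(p) - math.log(g)) for p, g in zip(pred, gt)]
    # Per-pixel ratio max(p/g, g/p) >= 1, compared against the delta thresholds
    ratio = [max(p / g, g / p) for p, g in zip(pred, gt)]
    return {
        "mae": sum(abs_err) / n,
        "rmse": math.sqrt(sum(e * e for e in abs_err) / n),
        "abs_rel": sum(e / g for e, g in zip(abs_err, gt)) / n,
        "log_mae": sum(log_err) / n,
        "log_rmse": math.sqrt(sum(e * e for e in log_err) / n),
        "delta1": sum(r < 1.25 for r in ratio) / n,
        "delta2": sum(r < 1.25 ** 2 for r in ratio) / n,
        "delta3": sum(r < 1.25 ** 3 for r in ratio) / n,
    }

print(depth_metrics([1.0, 2.2, 3.0, 5.0], [1.0, 2.0, 4.0, 2.0]))
```

Lower is better for the error metrics and higher is better for the Delta thresholds, which matches the trends in the training-results table.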

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 24

- eval_batch_size: 48

- seed: 2022

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Mae | Rmse | Abs Rel | Log Mae | Log Rmse | Delta1 | Delta2 | Delta3 |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:-------:|:-------:|:--------:|:------:|:------:|:------:|

| 1.3984 | 1.0 | 72 | 1.1606 | 3.2154 | 3.2710 | 4.6927 | 0.6627 | 0.7082 | 0.0 | 0.0053 | 0.0893 |

| 0.8305 | 2.0 | 144 | 0.5445 | 0.6035 | 0.8404 | 0.8013 | 0.2102 | 0.2726 | 0.2747 | 0.5358 | 0.7609 |

| 0.4601 | 3.0 | 216 | 0.4484 | 0.4041 | 0.5376 | 0.5417 | 0.1617 | 0.2188 | 0.3771 | 0.6932 | 0.8692 |

| 0.4211 | 4.0 | 288 | 0.4251 | 0.3634 | 0.4914 | 0.4800 | 0.1499 | 0.2069 | 0.4136 | 0.7270 | 0.8931 |

| 0.4162 | 5.0 | 360 | 0.4170 | 0.3537 | 0.4833 | 0.4483 | 0.1455 | 0.2005 | 0.4303 | 0.7444 | 0.8992 |

| 0.3776 | 6.0 | 432 | 0.4115 | 0.3491 | 0.4692 | 0.4558 | 0.1449 | 0.1999 | 0.4281 | 0.7471 | 0.9018 |

| 0.3729 | 7.0 | 504 | 0.4058 | 0.3337 | 0.4590 | 0.4135 | 0.1396 | 0.1935 | 0.4517 | 0.7652 | 0.9072 |

| 0.3235 | 8.0 | 576 | 0.4035 | 0.3304 | 0.4602 | 0.4043 | 0.1383 | 0.1929 | 0.4613 | 0.7679 | 0.9073 |

| 0.3382 | 9.0 | 648 | 0.3990 | 0.3254 | 0.4546 | 0.3937 | 0.1365 | 0.1900 | 0.4671 | 0.7717 | 0.9102 |

| 0.3265 | 10.0 | 720 | 0.4018 | 0.3272 | 0.4546 | 0.3934 | 0.1380 | 0.1907 | 0.4598 | 0.7659 | 0.9082 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu116

- Tokenizers 0.13.2

| 89a55d0c3aab1c17acfe84c0f8818285 |

| gokuls/distilbert_add_GLUE_Experiment_logit_kd_pretrain_qnli | gokuls | distilbert | 17 | 3 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,859 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert_add_GLUE_Experiment_logit_kd_pretrain_qnli

This model is a fine-tuned version of [gokuls/distilbert_add_pre-training-complete](https://huggingface.co/gokuls/distilbert_add_pre-training-complete) on the GLUE QNLI dataset.

It achieves the following results on the evaluation set (epoch 4, the epoch with the lowest validation loss in the training results below):

- Loss: 0.3579

- Accuracy: 0.6522

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 256

- eval_batch_size: 256

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.4059 | 1.0 | 410 | 0.4016 | 0.5585 |

| 0.3907 | 2.0 | 820 | 0.3735 | 0.6094 |

| 0.3715 | 3.0 | 1230 | 0.3602 | 0.6480 |

| 0.352 | 4.0 | 1640 | 0.3579 | 0.6522 |

| 0.3314 | 5.0 | 2050 | 0.3626 | 0.6670 |

| 0.309 | 6.0 | 2460 | 0.3650 | 0.6776 |

| 0.2865 | 7.0 | 2870 | 0.3799 | 0.6776 |

| 0.2679 | 8.0 | 3280 | 0.3817 | 0.6903 |

| 0.2525 | 9.0 | 3690 | 0.3942 | 0.6822 |
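The summary metrics at the top of this card match epoch 4, the epoch with the lowest validation loss, and the log stops at epoch 9 even though `num_epochs` is 50. One possible reading, assumed here and not stated in the card, is best-model selection on validation loss combined with early stopping after 5 epochs without improvement. The sketch below replays that rule over the logged validation losses; `replay_early_stopping` and `patience=5` are hypothetical, chosen only to match the log.

```python
# (epoch, validation loss) pairs copied from the training-results table
val_losses = [
    (1, 0.4016), (2, 0.3735), (3, 0.3602), (4, 0.3579), (5, 0.3626),
    (6, 0.3650), (7, 0.3799), (8, 0.3817), (9, 0.3942),
]

def replay_early_stopping(history, patience):
    """Return (best_epoch, stop_epoch) for minimum-loss selection with a patience counter."""
    best_epoch, best_loss, stale = None, float("inf"), 0
    for epoch, loss in history:
        if loss < best_loss:
            best_epoch, best_loss, stale = epoch, loss, 0
        else:
            stale += 1
            if stale >= patience:
                return best_epoch, epoch
    # Patience never ran out: training would continue past the logged epochs
    return best_epoch, history[-1][0]

best, stopped = replay_early_stopping(val_losses, patience=5)
print(f"best epoch: {best}, stopped after epoch: {stopped}")
```

With a patience of 5, the best checkpoint is epoch 4 and the run stops after epoch 9, which is consistent with the table; a different patience would stop at a different epoch.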

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

| 622a8c8599de7e9698fb0935712226d7 |

| Zhaohui/finetuning-misinfo-model-700-Zhaohui-1_misinfo | Zhaohui | distilbert | 13 | 3 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,063 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-misinfo-model-700-Zhaohui-1_misinfo

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5343

- Accuracy: 0.8571

- F1: 0.8571

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

| 2d0305e6347a7c0796af99e8a3c4e4f6 |

| vasista22/whisper-kannada-tiny | vasista22 | whisper | 12 | 0 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['kn'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['whisper-event'] | true | true | true | 1,317 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Kannada Tiny

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on Kannada data from multiple publicly available ASR corpora.

It has been fine-tuned as a part of the Whisper fine-tuning sprint.

## Training and evaluation data

Training Data: MILE ASR Corpus, ULCA ASR Corpus, Shrutilipi ASR Corpus, Google/Fleurs Train+Dev set.

Evaluation Data: Google/Fleurs Test set, MILE Test set, OpenSLR.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 88

- eval_batch_size: 88

- seed: 22

- optimizer: adamw_bnb_8bit

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 10000

- training_steps: 15008 (terminated upon convergence; initially set to 51570 steps)

- mixed_precision_training: True
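With `lr_scheduler_type: linear` and 10000 warmup steps, the learning rate ramps linearly from 0 to 5e-05 and then decays linearly to 0 at the scheduled end of training. The sketch below follows the formula of the linear warmup schedule in Transformers (`get_linear_schedule_with_warmup`). It assumes the schedule was built for the initial 51570 steps, so training stopped at step 15008 while the learning rate was still well above 0; `linear_warmup_lr` is a hypothetical helper.

```python
def linear_warmup_lr(step, peak_lr=5e-5, warmup_steps=10_000, total_steps=51_570):
    """Learning rate at `step` for linear warmup followed by linear decay to 0."""
    if step < warmup_steps:
        # Warmup phase: scale linearly from 0 up to peak_lr
        return peak_lr * step / max(1, warmup_steps)
    # Decay phase: scale linearly from peak_lr down to 0 at total_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)

# Learning rate at the step where training was actually stopped
print(f"{linear_warmup_lr(15_008):.3e}")
```

Under this assumption the learning rate at step 15008 was still about 88% of its peak.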

## Acknowledgement

This work was done at Speech Lab, IITM.

The compute resources for this work were funded by the "Bhashini: National Language Translation Mission" project of the Ministry of Electronics and Information Technology (MeitY), Government of India.

| 8427c3083f00cb9306407779b3a688cb |

| Helsinki-NLP/opus-mt-fse-fi | Helsinki-NLP | marian | 10 | 7 | transformers | 0 | translation | true | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 776 | false |

### opus-mt-fse-fi

* source languages: fse

* target languages: fi

* OPUS readme: [fse-fi](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/fse-fi/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-09.zip](https://object.pouta.csc.fi/OPUS-MT-models/fse-fi/opus-2020-01-09.zip)

* test set translations: [opus-2020-01-09.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/fse-fi/opus-2020-01-09.test.txt)

* test set scores: [opus-2020-01-09.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/fse-fi/opus-2020-01-09.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.fse.fi | 90.2 | 0.943 |

| 660644bdfeefc4e0c3ac5bc28bf8a9cb |

| tosin/dialogpt_mwoz_idioms | tosin | gpt2 | 9 | 3 | transformers | 0 | conversational | true | false | false | cc-by-4.0 | ['en'] | ['multi_woz_v22 and PIE-English idioms corpus'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['conversational', 'transformers'] | false | true | true | 2,300 | false |

## DialoGPT_MWOZ_Idioms

This is a fine-tuned version of DialoGPT (medium)-MultiWOZ on the PIE-English idioms corpus. It is intended to be used as an idiom-aware conversational system.

The dataset it was trained on is limited in scope, as it covers only 10 classes of idioms (metaphor, simile, euphemism, parallelism, personification, oxymoron, paradox, hyperbole, irony, and literal).

The perplexities achieved on the test and validation sets are 160.9 and 160.46, respectively. More information about the original pre-trained model can be found [here](https://huggingface.co/tosin/dialogpt_mwoz).
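Perplexity is the exponential of the average per-token negative log-likelihood (cross-entropy in nats). The card does not say exactly how its figures were computed, so the sketch below only illustrates the standard definition; `perplexity` is a hypothetical helper. A test perplexity of 160.9 corresponds to a mean token loss of ln(160.9), roughly 5.08 nats.

```python
import math

def perplexity(token_nlls):
    """Perplexity from per-token negative log-likelihoods (natural log)."""
    if not token_nlls:
        raise ValueError("need at least one token")
    return math.exp(sum(token_nlls) / len(token_nlls))

# Mean cross-entropy that corresponds to the reported test perplexity
mean_loss = math.log(160.9)
print(f"mean loss {mean_loss:.3f} nats -> perplexity {perplexity([mean_loss] * 10):.1f}")
```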

* Generation example from an interactive environment:

|Role | Response |

|---------|------------|

|User | remind her to take it off the hook. |

|Bot | the bookie took it on the nod |

|User | A lengthy break is in order ; so make sure you keep your nose clean |

|Bot | they are under a cloud because of fears about US President's wife Hillary Clinton |

|User | Some families breed like rabbits and send us all their male heirs |

|Bot | he was out for the count |

Please find information about preprocessing, training, and full details of DialoGPT in the [original DialoGPT repository](https://github.com/microsoft/DialoGPT).

### How to use

Now we are ready to try out how the model works as a chatting partner!

```python
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

tokenizer = AutoTokenizer.from_pretrained("tosin/dialogpt_mwoz_idioms")
model = AutoModelForCausalLM.from_pretrained("tosin/dialogpt_mwoz_idioms")

# Let's chat for 5 lines
for step in range(5):
    # encode the new user input, add the eos_token and return a tensor in PyTorch
    new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

    # append the new user input tokens to the chat history
    bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

    # generate a response while limiting the total chat history to 1000 tokens
    chat_history_ids = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id)

    # pretty print the last output tokens from the bot
    print("DialoGPT_MWOZ_Bot: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))
```

| a6bf7cbd1df03ea0c772d94bb707b830 |

| jonatasgrosman/exp_w2v2t_sv-se_vp-it_s817 | jonatasgrosman | wav2vec2 | 10 | 7 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['sv-SE'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'sv-SE'] | false | true | true | 475 | false |

# exp_w2v2t_sv-se_vp-it_s817

Fine-tuned [facebook/wav2vec2-large-it-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-it-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (sv-SE)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
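Because the model expects 16kHz input, it can help to check the sample rate before transcription. The sketch below uses Python's standard `wave` module, so it only covers uncompressed WAV files; resampling audio at other rates (for example with a dedicated audio library) is outside its scope. `is_16khz_wav` and `make_tone` are hypothetical helpers, and the in-memory tone is only test data.

```python
import io
import math
import struct
import wave

TARGET_RATE = 16_000  # sampling rate this model card asks for

def is_16khz_wav(source):
    """Return True if the WAV file (path or file-like object) is sampled at 16kHz."""
    with wave.open(source, "rb") as wav:
        return wav.getframerate() == TARGET_RATE

def make_tone(rate, seconds=0.1, freq=440.0):
    """Build a mono 16-bit PCM sine tone in memory (illustrative test data only)."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        n = int(rate * seconds)
        frames = b"".join(
            struct.pack("<h", int(32767 * 0.3 * math.sin(2 * math.pi * freq * i / rate)))
            for i in range(n)
        )
        wav.writeframes(frames)
    buf.seek(0)  # rewind so the buffer can be read back
    return buf

print(is_16khz_wav(make_tone(16_000)), is_16khz_wav(make_tone(8_000)))
```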

This model has been fine-tuned with the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 062c36861549d27668a59ac9ff7ca776 |

| henryjiang/distilbert-base-uncased-finetuned-emotion | henryjiang | distilbert | 12 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['emotion'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,343 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2223

- Accuracy: 0.927

- F1: 0.9271

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8412 | 1.0 | 250 | 0.3215 | 0.904 | 0.9010 |

| 0.2535 | 2.0 | 500 | 0.2223 | 0.927 | 0.9271 |
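The step counts in the table follow from the batch size: with `train_batch_size: 64` on a single device and no gradient accumulation, one epoch takes ceil(N / 64) optimizer steps. Assuming the usual 16,000-example training split of the emotion dataset (this card does not state the split size), that gives exactly the 250 steps per epoch logged above. `steps_per_epoch` is a hypothetical helper.

```python
import math

def steps_per_epoch(num_examples, batch_size):
    """Optimizer steps per epoch on one device when the last partial batch is kept."""
    return math.ceil(num_examples / batch_size)

per_epoch = steps_per_epoch(16_000, 64)
print(per_epoch, 2 * per_epoch)  # cumulative steps after epochs 1 and 2
```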

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu113

- Datasets 2.5.1

- Tokenizers 0.12.1

| b22436f7da3fbd5e41a5f218e45a449a |

| josetapia/hygpt2-cml | josetapia | gpt2 | 29 | 2 | transformers | 0 | text-generation | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 978 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hygpt2-cml

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unspecified dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 256

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 15
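With `lr_scheduler_type: cosine` and 1000 warmup steps, the learning rate rises linearly to 0.0005 and then follows a half cosine down to 0. The sketch below mirrors the shape of the cosine-with-warmup schedule in Transformers (a single half cycle), clamped at 0 after the last step. The card does not report the total number of optimizer steps, so `TOTAL_STEPS` is a hypothetical value. The effective batch of 256 is 32 × 8 gradient-accumulation steps, assuming a single device.

```python
import math

PEAK_LR = 5e-4        # learning_rate from the card
WARMUP_STEPS = 1_000  # lr_scheduler_warmup_steps from the card
TOTAL_STEPS = 10_000  # hypothetical: the total number of optimizer steps is not reported

def cosine_warmup_lr(step, peak_lr=PEAK_LR, warmup=WARMUP_STEPS, total=TOTAL_STEPS):
    """Linear warmup to peak_lr, then half-cosine decay reaching 0 at `total` steps."""
    if step < warmup:
        return peak_lr * step / max(1, warmup)
    progress = min(1.0, (step - warmup) / max(1, total - warmup))
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))

# Effective batch per optimizer step: per-device batch x gradient-accumulation steps
effective_batch = 32 * 8
print(effective_batch, [f"{cosine_warmup_lr(s):.2e}" for s in (0, 500, 1_000, 5_500, 10_000)])
```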

### Training results

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.2

- Datasets 1.18.4

- Tokenizers 0.11.6

| 596740ff975b17d062dcf9f4cb64fa6c |

| Helsinki-NLP/opus-mt-sem-sem | Helsinki-NLP | marian | 11 | 7 | transformers | 0 | translation | true | true | false | apache-2.0 | ['mt', 'ar', 'he', 'ti', 'am', 'sem'] | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 2,578 | false |

### sem-sem

* source group: Semitic languages

* target group: Semitic languages

* OPUS readme: [sem-sem](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/sem-sem/README.md)

* model: transformer

* source language(s): apc ara arq arz heb mlt

* target language(s): apc ara arq arz heb mlt

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* a sentence-initial language token is required in the form `>>id<<` (where id is a valid target language ID)

* download original weights: [opus-2020-07-27.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/sem-sem/opus-2020-07-27.zip)

* test set translations: [opus-2020-07-27.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/sem-sem/opus-2020-07-27.test.txt)

* test set scores: [opus-2020-07-27.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/sem-sem/opus-2020-07-27.eval.txt)
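Because this model has multiple target languages, every input must begin with the target-language token. The helper below is a hypothetical sketch that prepends `>>id<<` and rejects IDs outside the target languages listed above (apc ara arq arz heb mlt); the resulting strings would then be passed to the Marian tokenizer as usual.

```python
# Target-language IDs listed on this card
TARGET_LANGS = {"apc", "ara", "arq", "arz", "heb", "mlt"}

def add_target_token(sentences, target_lang):
    """Prefix each source sentence with the >>id<< token that selects the target language."""
    if target_lang not in TARGET_LANGS:
        raise ValueError(f"unsupported target language: {target_lang!r}")
    return [f">>{target_lang}<< {s}" for s in sentences]

# Hebrew source sentence, Arabic requested as the output language
print(add_target_token(["שלום עולם"], "ara"))
```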

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.ara-ara.ara.ara | 4.2 | 0.200 |

| Tatoeba-test.ara-heb.ara.heb | 34.0 | 0.542 |

| Tatoeba-test.ara-mlt.ara.mlt | 16.6 | 0.513 |

| Tatoeba-test.heb-ara.heb.ara | 18.8 | 0.477 |

| Tatoeba-test.mlt-ara.mlt.ara | 20.7 | 0.388 |

| Tatoeba-test.multi.multi | 27.1 | 0.507 |

### System Info:

- hf_name: sem-sem

- source_languages: sem

- target_languages: sem

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/sem-sem/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['mt', 'ar', 'he', 'ti', 'am', 'sem']

- src_constituents: {'apc', 'mlt', 'arz', 'ara', 'heb', 'tir', 'arq', 'afb', 'amh', 'acm', 'ary'}

- tgt_constituents: {'apc', 'mlt', 'arz', 'ara', 'heb', 'tir', 'arq', 'afb', 'amh', 'acm', 'ary'}

- src_multilingual: True

- tgt_multilingual: True

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/sem-sem/opus-2020-07-27.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/sem-sem/opus-2020-07-27.test.txt

- src_alpha3: sem

- tgt_alpha3: sem

- short_pair: sem-sem

- chrF2_score: 0.507

- bleu: 27.1

- brevity_penalty: 0.972

- ref_len: 13472.0

- src_name: Semitic languages

- tgt_name: Semitic languages

- train_date: 2020-07-27

- src_alpha2: sem

- tgt_alpha2: sem

- prefer_old: False

- long_pair: sem-sem

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41

| c9a37e0ab4fa857c319bbeed3508aee3 |

| annahaz/roberta-large-mnli-misogyny-sexism-4tweets-3e-05-0.05 | annahaz | roberta | 11 | 1 | transformers | 0 | text-classification | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,899 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-large-mnli-misogyny-sexism-4tweets-3e-05-0.05

This model is a fine-tuned version of [roberta-large-mnli](https://huggingface.co/roberta-large-mnli) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9069

- Accuracy: 0.6914

- F1: 0.7061

- Precision: 0.6293

- Recall: 0.8043

- Mae: 0.3086

- Tn: 320

- Fp: 218

- Fn: 90

- Tp: 370
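All of the summary metrics above follow from the four confusion-matrix counts, assuming binary 0/1 labels with Tp and Fn counting the positive class. The sketch below recomputes them. It also shows that the reported Mae equals the error rate (1 - accuracy), which is what mean absolute error reduces to for 0/1 predictions. `binary_metrics` is a hypothetical helper.

```python
def binary_metrics(tn, fp, fn, tp):
    """Accuracy, precision, recall, F1 and 0/1 mean absolute error from confusion counts."""
    total = tn + fp + fn + tp
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    mae = (fp + fn) / total  # with 0/1 labels, |pred - label| is 1 exactly on errors
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1, "mae": mae}

# Counts reported on this card for the final (epoch 4) checkpoint
for name, value in binary_metrics(tn=320, fp=218, fn=90, tp=370).items():
    print(f"{name}: {value:.4f}")
```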

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall | Mae | Tn | Fp | Fn | Tp |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:---------:|:------:|:------:|:---:|:---:|:---:|:---:|

| 0.5248 | 1.0 | 1346 | 0.7245 | 0.6513 | 0.6234 | 0.6207 | 0.6261 | 0.3487 | 362 | 176 | 172 | 288 |

| 0.4553 | 2.0 | 2692 | 0.6894 | 0.6693 | 0.7043 | 0.5991 | 0.8543 | 0.3307 | 275 | 263 | 67 | 393 |

| 0.3753 | 3.0 | 4038 | 0.6966 | 0.7234 | 0.7326 | 0.6608 | 0.8217 | 0.2766 | 344 | 194 | 82 | 378 |

| 0.2986 | 4.0 | 5384 | 0.9069 | 0.6914 | 0.7061 | 0.6293 | 0.8043 | 0.3086 | 320 | 218 | 90 | 370 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

| 4c2fd564125fbd37a8e6448c11ec6372 |

| google/t5-efficient-small-nl36 | google | t5 | 12 | 32 | transformers | 3 | text2text-generation | true | true | true | apache-2.0 | ['en'] | ['c4'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['deep-narrow'] | false | true | true | 6,257 | false |

# T5-Efficient-SMALL-NL36 (Deep-Narrow version)

T5-Efficient-SMALL-NL36 is a variation of [Google's original T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) following the [T5 model architecture](https://huggingface.co/docs/transformers/model_doc/t5).

It is a *pretrained-only* checkpoint and was released with the paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** by *Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler*.

In a nutshell, the paper indicates that a **Deep-Narrow** model architecture is favorable for **downstream** performance compared to other model architectures of similar parameter count.

To quote the paper:

> We generally recommend a DeepNarrow strategy where the model’s depth is preferentially increased before considering any other forms of uniform scaling across other dimensions. This is largely due to how much depth influences the Pareto-frontier as shown in earlier sections of the paper. Specifically, a tall small (deep and narrow) model is generally more efficient compared to the base model. Likewise, a tall base model might also generally more efficient compared to a large model. We generally find that, regardless of size, even if absolute performance might increase as we continue to stack layers, the relative gain of Pareto-efficiency diminishes as we increase the layers, converging at 32 to 36 layers. Finally, we note that our notion of efficiency here relates to any one compute dimension, i.e., params, FLOPs or throughput (speed). We report all three key efficiency metrics (number of params, FLOPS and speed) and leave this decision to the practitioner to decide which compute dimension to consider.

To be more precise, *model depth* is defined as the number of transformer blocks that are stacked sequentially.

A sequence of word embeddings is therefore processed sequentially by each transformer block.

## Model architecture details

This model checkpoint - **t5-efficient-small-nl36** - is of model type **Small** with the following variations:

- **nl** is **36**

It has **280.87** million parameters and thus requires *ca.* **1123.47 MB** of memory in full precision (*fp32*) or **561.74 MB** of memory in half precision (*fp16* or *bf16*).
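The memory figures are the parameter count times the bytes per parameter (4 bytes for fp32, 2 for fp16 or bf16), using 1 MB = 10^6 bytes; they cover the weights only, not activations or optimizer state. A minimal sketch, with `weight_memory_mb` as a hypothetical helper:

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2}

def weight_memory_mb(num_params, dtype):
    """Memory needed to hold the weights alone, in decimal megabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6

params = 280.87e6  # parameter count stated on this card (rounded to 0.01M)
for dtype in ("fp32", "fp16"):
    print(dtype, f"{weight_memory_mb(params, dtype):.2f} MB")
```

With the rounded count this gives 1123.48 MB and 561.74 MB; the card's 1123.47 MB presumably comes from the unrounded parameter count.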

A summary of the *original* T5 model architectures can be seen here:

| Model | nl (el/dl) | ff | dm | kv | nh | #Params|

| ----| ---- | ---- | ---- | ---- | ---- | ----|

| Tiny | 4/4 | 1024 | 256 | 32 | 4 | 16M|

| Mini | 4/4 | 1536 | 384 | 32 | 8 | 31M|

| Small | 6/6 | 2048 | 512 | 32 | 8 | 60M|

| Base | 12/12 | 3072 | 768 | 64 | 12 | 220M|

| Large | 24/24 | 4096 | 1024 | 64 | 16 | 738M|

| Xl | 24/24 | 16384 | 1024 | 128 | 32 | 3B|

| XXl | 24/24 | 65536 | 1024 | 128 | 128 | 11B|

The following abbreviations are used:

| Abbreviation | Definition |

| ----| ---- |

| nl | Number of transformer blocks (depth) |

| dm | Dimension of embedding vector (output vector of a transformer block) |

| kv | Dimension of key/value projection matrix |

| nh | Number of attention heads |

| ff | Dimension of intermediate vector within transformer block (size of feed-forward projection matrix) |

| el | Number of transformer blocks in the encoder (encoder depth) |

| dl | Number of transformer blocks in the decoder (decoder depth) |

| sh | Signifies that attention heads are shared |

| skv | Signifies that key-values projection matrices are tied |

If a model checkpoint does not specify *el* or *dl*, then both the number of encoder layers and the number of decoder layers correspond to *nl*.

## Pre-Training

The checkpoint was pretrained on the [Colossal, Cleaned version of Common Crawl (C4)](https://huggingface.co/datasets/c4) for 524288 steps using the span-based masked language modeling (MLM) objective.

## Fine-Tuning

**Note**: This model is a **pretrained** checkpoint and has to be fine-tuned for practical usage.

The checkpoint was pretrained in English and is therefore only useful for English NLP tasks.

You can follow one of the following examples to fine-tune the model:

*PyTorch*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization)

- [Question Answering](https://github.com/huggingface/transformers/blob/master/examples/pytorch/question-answering/run_seq2seq_qa.py)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/pytorch/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*TensorFlow*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*JAX/Flax*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/flax/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/flax/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

## Downstream Performance

TODO: Add table if available

## Computational Complexity

TODO: Add table if available

## More information

We strongly recommend that the reader go carefully through the original paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** to get a more nuanced understanding of this model checkpoint.

As explained in the following [issue](https://github.com/google-research/google-research/issues/986#issuecomment-1035051145), checkpoints including the *sh* or *skv* model architecture variations have *not* been ported to Transformers, as they are probably of limited practical use and lack a more detailed description. Those checkpoints are kept [here](https://huggingface.co/NewT5SharedHeadsSharedKeyValues), as they might be ported in the future.

| 3fc50de70a2ca161c3393e012cadca62 |

| tkubotake/xlm-roberta-base-finetuned-panx-en | tkubotake | xlm-roberta | 9 | 8 | transformers | 0 | token-classification | true | false | false | mit | null | ['xtreme'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,375 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-en

This model is a fine-tuned version of [tkubotake/xlm-roberta-base-finetuned-panx-de](https://huggingface.co/tkubotake/xlm-roberta-base-finetuned-panx-de) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5430

- F1: 0.7580

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.1318 | 1.0 | 50 | 0.4145 | 0.7557 |

| 0.0589 | 2.0 | 100 | 0.5016 | 0.7524 |

| 0.0314 | 3.0 | 150 | 0.5430 | 0.7580 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

| 5255d1d2d19580e965c3d35d1e080141 |

| geevegeorge/customdbmodelv7 | geevegeorge | null | 8 | 3 | diffusers | 0 | null | false | false | false | apache-2.0 | ['en'] | ['geevegeorge/customdbv7'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,210 | false |

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# customdbmodelv7

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library on the `geevegeorge/customdbv7` dataset.

## Intended uses & limitations

#### How to use

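```python
# Sketch only: assumes the repository holds a standard Diffusers pipeline that
# DiffusionPipeline can load automatically (e.g. an unconditional DDPMPipeline
# saved by the Diffusers training script); this is not confirmed by the card.
from diffusers import DiffusionPipeline

pipeline = DiffusionPipeline.from_pretrained("geevegeorge/customdbmodelv7")
image = pipeline().images[0]
image.save("sample.png")
```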

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 2

- eval_batch_size: 2

- gradient_accumulation_steps: 8

- optimizer: AdamW with betas=(0.95, 0.999), weight_decay=1e-06 and epsilon=1e-08

- lr_scheduler: cosine

- lr_warmup_steps: 500

- ema_inv_gamma: 1.0

- ema_power: 0.75

- ema_max_decay: 0.9999

- mixed_precision: no
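The EMA settings above (inverse gamma 1.0, power 0.75, maximum decay 0.9999) define a warm-up schedule for the exponential moving average of the model weights. The mapping assumes the usual parameter order of the Diffusers training script. Below is a minimal sketch of that schedule. It assumes the decay formula used by Diffusers' `EMAModel`, which is `1 - (1 + step / inv_gamma) ** -power` clamped to `max_decay`. The helper names and the `min_decay` floor of 0.0 are illustrative assumptions, not taken from this card.

```python
def ema_decay(step: int, inv_gamma: float = 1.0, power: float = 0.75,
              max_decay: float = 0.9999, min_decay: float = 0.0) -> float:
    """EMA decay factor at a given optimization step (assumed warm-up schedule)."""
    if step <= 0:
        return 0.0  # no averaging before the first update
    value = 1.0 - (1.0 + step / inv_gamma) ** -power
    # Clamp the warm-up curve into [min_decay, max_decay]
    return max(min_decay, min(value, max_decay))


def ema_update(ema_params, params, decay):
    """One EMA step: ema <- decay * ema + (1 - decay) * param (hypothetical helper)."""
    return [decay * e + (1.0 - decay) * p for e, p in zip(ema_params, params)]


# The decay starts low so early weights are not over-weighted, then approaches 0.9999
for step in (1, 10, 100, 1_000, 10_000):
    print(step, round(ema_decay(step), 4))
```

With `power=0.75`, the decay reaches roughly 0.999 after about 10k steps. The EMA weights therefore track the raw weights closely early in training and smooth them heavily later.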

### Training results

📈 [TensorBoard logs](https://huggingface.co/geevegeorge/customdbmodelv7/tensorboard?#scalars)

|

b458654ef066d0441e09770b9ed641bf

|

ishaankul67/2008_Sichuan_earthquake-clustered

|

ishaankul67

|

distilbert

| 8 | 0 |

transformers

| 0 |

question-answering

| false | true | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,880 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# ishaankul67/2008_Sichuan_earthquake-clustered

This model is a fine-tuned version of [nandysoham16/12-clustered_aug](https://huggingface.co/nandysoham16/12-clustered_aug) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.4882

- Train End Logits Accuracy: 0.8924

- Train Start Logits Accuracy: 0.7882

- Validation Loss: 0.2788

- Validation End Logits Accuracy: 0.8947

- Validation Start Logits Accuracy: 0.8947

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 18, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32
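With `power=1.0` and `cycle=False`, the Keras `PolynomialDecay` schedule in the optimizer config reduces to a linear ramp from 2e-05 down to 0.0 over 18 steps. Below is a minimal plain-Python sketch of that formula. The function name is illustrative. It mirrors the formula documented for `tf.keras.optimizers.schedules.PolynomialDecay` and is not the Keras implementation itself.

```python
def polynomial_decay(step: int, initial_lr: float = 2e-05, decay_steps: int = 18,
                     end_lr: float = 0.0, power: float = 1.0) -> float:
    """Learning rate at `step` for PolynomialDecay with cycle=False."""
    step = min(step, decay_steps)  # the rate stays at end_lr after decay_steps
    fraction_left = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * fraction_left ** power + end_lr


for step in (0, 6, 12, 18, 30):
    print(step, polynomial_decay(step))
```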

### Training results

| Train Loss | Train End Logits Accuracy | Train Start Logits Accuracy | Validation Loss | Validation End Logits Accuracy | Validation Start Logits Accuracy | Epoch |
|:----------:|:-------------------------:|:---------------------------:|:---------------:|:------------------------------:|:--------------------------------:|:-----:|
| 0.4882     | 0.8924                    | 0.7882                      | 0.2788          | 0.8947                         | 0.8947                           | 0     |

### Framework versions

- Transformers 4.26.0

- TensorFlow 2.9.2

- Datasets 2.9.0

- Tokenizers 0.13.2

|

a6174c4800ee0a8bcbc021f3fa50cb71

|

hrdipto/wav2vec2-base-timit-demo-colab

|

hrdipto

|

wav2vec2

| 12 | 8 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,641 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4241

- Wer: 0.3381

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer    |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 3.7749        | 4.0   | 500  | 2.0639          | 1.0018 |
| 0.9252        | 8.0   | 1000 | 0.4853          | 0.4821 |
| 0.3076        | 12.0  | 1500 | 0.4507          | 0.4044 |
| 0.1732        | 16.0  | 2000 | 0.4315          | 0.3688 |
| 0.1269        | 20.0  | 2500 | 0.4481          | 0.3559 |
| 0.1087        | 24.0  | 3000 | 0.4354          | 0.3464 |
| 0.0832        | 28.0  | 3500 | 0.4241          | 0.3381 |
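WER counts word substitutions, deletions and insertions relative to the number of reference words. It can therefore exceed 1.0 when the model emits extra words, as in the first row of the table (1.0018). Below is a minimal sketch of word-level WER computed via edit distance, for illustration only. The training run presumably used a library metric, and `word_error_rate` is a hypothetical helper.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = edit distance between ref[:i] and hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)


print(word_error_rate("she had your dark suit", "she had a dark suit in"))
```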

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.13.3

- Tokenizers 0.10.3

|

b6f85e1e1b2faad70c5a106927e1d6b9

|

microsoft/conditional-detr-resnet-50

|

microsoft

|

conditional_detr

| 5 | 1,007 |

transformers

| 1 |

object-detection

| true | false | false |

apache-2.0

| null |

['coco']

| null | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

['object-detection', 'vision']

| false | true | true | 4,237 | false |

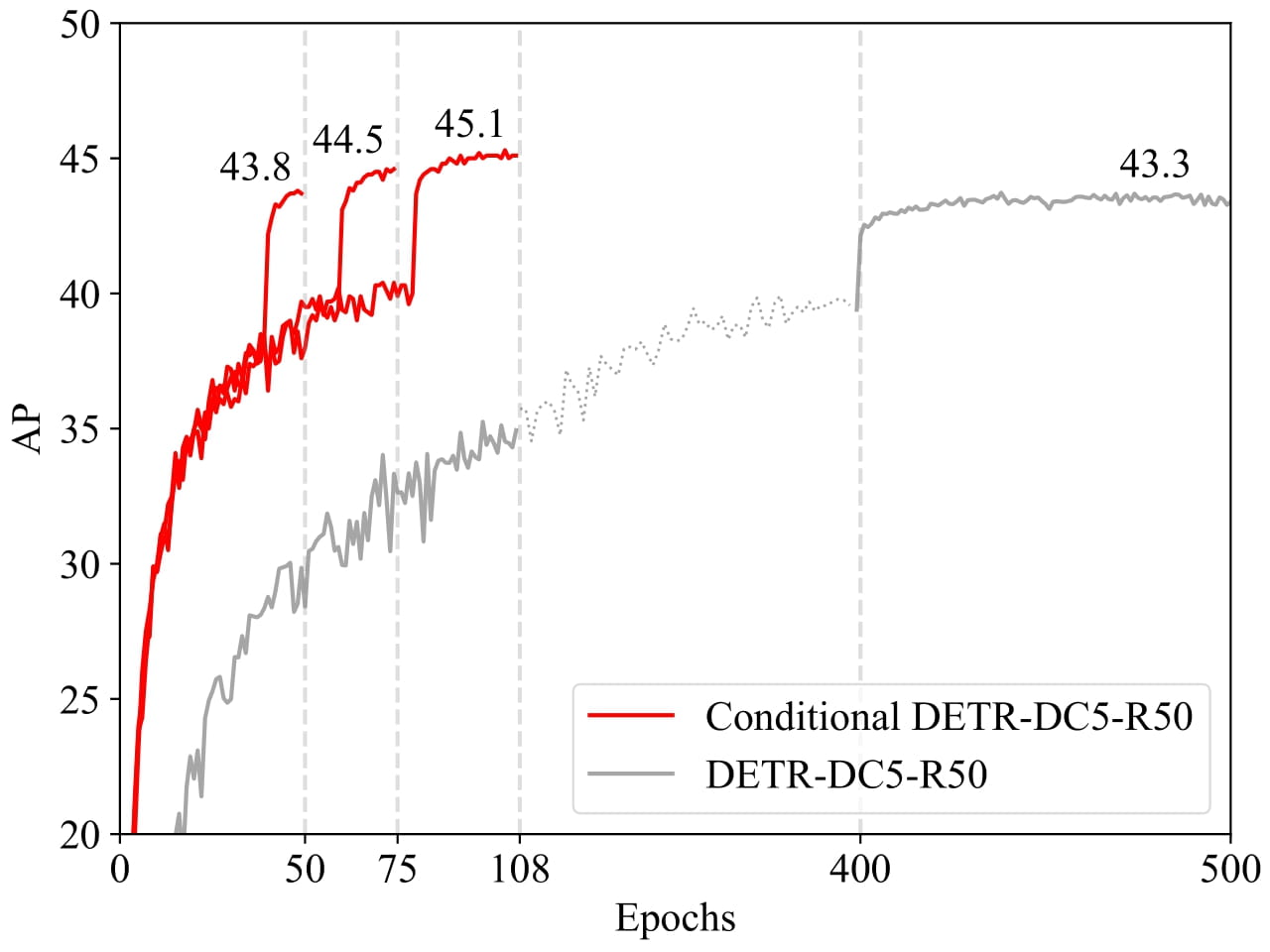

# Conditional DETR model with ResNet-50 backbone

Conditional DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Conditional DETR for Fast Training Convergence](https://arxiv.org/abs/2108.06152) by Meng et al. and first released in [this repository](https://github.com/Atten4Vis/ConditionalDETR).

## Model description

The recently developed DETR approach applies the transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we handle the critical issue, slow training convergence, and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by the observation that the cross-attention in DETR relies heavily on the content embeddings for localizing the four extremities and predicting the box, which increases the need for high-quality content embeddings and thus the training difficulty. Our approach, named conditional DETR, learns a conditional spatial query from the decoder embedding for decoder multi-head cross-attention. The benefit is that through the conditional spatial query, each cross-attention head is able to attend to a band containing a distinct region, e.g., one object extremity or a region inside the object box. This narrows down the spatial range for localizing the distinct regions for object classification and box regression, thus relaxing the dependence on the content embeddings and easing the training. Empirical results show that conditional DETR converges 6.7× faster for the backbones R50 and R101 and 10× faster for stronger backbones DC5-R50 and DC5-R101.

## Intended uses & limitations

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=microsoft/conditional-detr) to look for all available Conditional DETR models.

### How to use

Here is how to use this model:

```python
from transformers import AutoImageProcessor, ConditionalDetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("microsoft/conditional-detr-resnet-50")
model = ConditionalDetrForObjectDetection.from_pretrained("microsoft/conditional-detr-resnet-50")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to (xmin, ymin, xmax, ymax) boxes in image coordinates
# let's only keep detections with score > 0.7
target_sizes = torch.tensor([image.size[::-1]])  # (height, width)
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )
```

This should output:

```
Detected remote with confidence 0.833 at location [38.31, 72.1, 177.63, 118.45]
Detected cat with confidence 0.831 at location [9.2, 51.38, 321.13, 469.0]
Detected cat with confidence 0.804 at location [340.3, 16.85, 642.93, 370.95]
```
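`post_process_object_detection` returns boxes as absolute `(xmin, ymin, xmax, ymax)` pixel coordinates, whereas DETR-style heads predict normalized `(center_x, center_y, width, height)` boxes. Below is a minimal plain-Python sketch of that conversion. It assumes the normalized center format and a `(height, width)` target size like `target_sizes` above. The helper name is illustrative and is not the library's internal function.

```python
def center_to_corners(box, image_height, image_width):
    """Convert a normalized (cx, cy, w, h) box to absolute (xmin, ymin, xmax, ymax)."""
    cx, cy, w, h = box
    return (
        (cx - w / 2) * image_width,   # xmin
        (cy - h / 2) * image_height,  # ymin
        (cx + w / 2) * image_width,   # xmax
        (cy + h / 2) * image_height,  # ymax
    )


# e.g. a box centred in a 640x480 (width x height) image, a quarter as wide and half as tall
print(center_to_corners((0.5, 0.5, 0.25, 0.5), image_height=480, image_width=640))
```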

Currently, both the image processor and the model support PyTorch.

## Training data

The Conditional DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

### BibTeX entry and citation info

```bibtex

@inproceedings{MengCFZLYS021,

author = {Depu Meng and

Xiaokang Chen and

Zejia Fan and

Gang Zeng and

Houqiang Li and

Yuhui Yuan and

Lei Sun and

Jingdong Wang},

title = {Conditional {DETR} for Fast Training Convergence},

booktitle = {2021 {IEEE/CVF} International Conference on Computer Vision, {ICCV}

2021, Montreal, QC, Canada, October 10-17, 2021},

}

```

|

45f3301dbac25d6bb39c97dd7c33cd14

|

wyu1/FiD-NQ

|

wyu1

|

t5

| 6 | 0 |

transformers

| 1 | null | true | false | false |

cc-by-4.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 747 | false |

# FiD model trained on NQ

- This is the model checkpoint of FiD [2], based on T5-large (770M parameters) and trained on the Natural Questions (NQ) dataset [1].

- Hyperparameters: 8 × 40GB A100 GPUs; batch size 8; AdamW; LR 3e-5; 50,000 steps.
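FiD encodes each retrieved passage separately, paired with the question, and the decoder then attends over all encoded passages jointly. Below is a minimal sketch of how the per-passage encoder inputs might be built. It assumes the `question: <q> title: <t> context: <c>` format of the original FiD reference implementation. The card does not state which format this checkpoint expects, and `build_fid_inputs` is a hypothetical helper.

```python
def build_fid_inputs(question, passages, n_passages=None):
    """Return one encoder input string per (title, text) passage."""
    if n_passages is not None:
        passages = passages[:n_passages]  # FiD uses a fixed number of top-ranked passages
    return [f"question: {question} title: {title} context: {text}"
            for title, text in passages]


inputs = build_fid_inputs(
    "who wrote the origin of species",
    [("On the Origin of Species", "On the Origin of Species is a work by Charles Darwin."),
     ("Charles Darwin", "Charles Darwin was an English naturalist.")],
)
for text in inputs:
    print(text)
```

Each string would then be tokenized and encoded independently. The encoder outputs are concatenated before decoding, which is the "fusion" in Fusion-in-Decoder.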

References:

[1] Natural Questions: A Benchmark for Question Answering Research. TACL 2019.

[2] Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering. EACL 2021.

## Model performance

We evaluated it on the NQ dataset: the EM score is 51.3, which is 0.1 lower than the original performance reported in the paper [2].


|

b3b8e6e356edf3d566328022e047557b

|

OpenMatch/t5-ance

|

OpenMatch

|

t5

| 7 | 2 |

transformers

| 0 |

feature-extraction

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 597 | false |

# T5-ANCE

T5-ANCE generally follows the training procedure described in [this page](https://openmatch.readthedocs.io/en/latest/dr-msmarco-passage.html), but uses a much larger batch size.

Dataset used for training:

- MS MARCO Passage

Evaluation result:

|Dataset|Metric|Result|
|---|---|---|
|MS MARCO Passage (dev) | MRR@10 | 0.3570|
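MRR@10 averages, over queries, the reciprocal rank of the first relevant passage within the top 10 results, scoring 0 when none appears. Below is a minimal sketch for illustration. It assumes rankings are lists of passage IDs and relevance judgments are sets of IDs, and it is not the official MS MARCO evaluation script.

```python
def mrr_at_k(rankings, relevant, k=10):
    """rankings: {qid: [pid, ...]} best first; relevant: {qid: {pid, ...}}."""
    total = 0.0
    for qid, ranked in rankings.items():
        for rank, pid in enumerate(ranked[:k], start=1):
            if pid in relevant.get(qid, set()):
                total += 1.0 / rank  # only the first relevant hit counts
                break
    return total / len(rankings)


rankings = {"q1": ["p3", "p1", "p2"], "q2": ["p5", "p6"]}
relevant = {"q1": {"p1"}, "q2": {"p7"}}
print(mrr_at_k(rankings, relevant))
```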

Important hyper-parameters:

|Name|Value|
|---|---|
|Global batch size|256|
|Learning rate|5e-6|
|Maximum length of query|32|
|Maximum length of document|128|
|Template for query|`<text>`|
|Template for document|`Title: <title> Text: <text>`|
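The two templates describe how raw text is wrapped before tokenization. Below is a minimal sketch of applying them. It assumes plain string substitution, and the helper names are hypothetical. Truncation to the 32- and 128-length limits would happen afterwards in the tokenizer; this sketch does not perform it.

```python
def format_query(text: str) -> str:
    """Query template `<text>`: the query text is used as-is."""
    return text


def format_document(title: str, text: str) -> str:
    """Document template `Title: <title> Text: <text>`."""
    return f"Title: {title} Text: {text}"


print(format_query("capital of france"))
print(format_document("Paris", "Paris is the capital of France."))
```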

### Paper

\-

|

38561e01fe2690d63f701d179786a2cd

|

shreeshaaithal/DialoGPT-small-Michael-Scott

|

shreeshaaithal

|

gpt2

| 9 | 4 |

transformers

| 0 |

conversational

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['conversational']

| false | true | true | 1,643 | false |

# DialoGPT Trained on WhatsApp chats

This is an instance of [microsoft/DialoGPT-medium](https://huggingface.co/microsoft/DialoGPT-medium) trained on WhatsApp chats. You can also train this model on [a Kaggle game script dataset](https://www.kaggle.com/ruolinzheng/twewy-game-script).

Feel free to ask me questions on the [Discord server](https://discord.gg/Gqhje8Z7DX).

Chat with the model:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("harrydonni/DialoGPT-small-Michael-Scott")
model = AutoModelForCausalLM.from_pretrained("harrydonni/DialoGPT-small-Michael-Scott")

# Let's chat for 4 lines
for step in range(4):
    # encode the new user input, add the eos_token and return a PyTorch tensor
    new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

    # append the new user input tokens to the chat history
    bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

    # generate a response while limiting the total chat history (prompt + reply) to 200 tokens
    chat_history_ids = model.generate(
        bot_input_ids,
        max_length=200,
        pad_token_id=tokenizer.eos_token_id,
        no_repeat_ngram_size=3,
        do_sample=True,
        top_k=100,
        top_p=0.7,
        temperature=0.8,
    )

    # pretty print the last output tokens from the bot
    print("Michael: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))
```

This model was made by Shreesha. Thank you!

|

1fc68a1eb8946e0738b753a1206c67da

|

it5/mt5-small-repubblica-to-ilgiornale

|

it5

|

mt5

| 11 | 4 |

transformers

| 0 |

text2text-generation

| true | true | true |

apache-2.0

|

['it']

|

['gsarti/change_it']

|

{'emissions': '17g', 'source': 'Google Cloud Platform Carbon Footprint', 'training_type': 'fine-tuning', 'geographical_location': 'Eemshaven, Netherlands, Europe', 'hardware_used': '1 TPU v3-8 VM'}

| 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['italian', 'sequence-to-sequence', 'newspaper', 'ilgiornale', 'repubblica', 'style-transfer']

| true | true | true | 3,264 | false |

# mT5 Small for News Headline Style Transfer (Repubblica to Il Giornale) 🗞️➡️🗞️ 🇮🇹

This repository contains the checkpoint for the [mT5 Small](https://huggingface.co/google/mt5-small) model fine-tuned on news headline style transfer in the Repubblica to Il Giornale direction on the Italian CHANGE-IT dataset as part of the experiments of the paper [IT5: Large-scale Text-to-text Pretraining for Italian Language Understanding and Generation](https://arxiv.org/abs/2203.03759) by [Gabriele Sarti](https://gsarti.com) and [Malvina Nissim](https://malvinanissim.github.io).

A comprehensive overview of other released materials is provided in the [gsarti/it5](https://github.com/gsarti/it5) repository. Refer to the paper for additional details concerning the reported scores and the evaluation approach.

## Using the model

The model is trained to generate a headline in the style of Il Giornale from the full body of an article written in the style of Repubblica. Model checkpoints are available for use in TensorFlow, PyTorch and JAX. They can be used directly with pipelines as:

```python

from transformers import pipeline

r2g = pipeline("text2text-generation", model='it5/mt5-small-repubblica-to-ilgiornale')

r2g("Arriva dal Partito nazionalista basco (Pnv) la conferma che i cinque deputati che siedono in parlamento voteranno la sfiducia al governo guidato da Mariano Rajoy. Pochi voti, ma significativi quelli della formazione politica di Aitor Esteban, che interverrà nel pomeriggio. Pur con dimensioni molto ridotte, il partito basco si è trovato a fare da ago della bilancia in aula. E il sostegno alla mozione presentata dai Socialisti potrebbe significare per il primo ministro non trovare quei 176 voti che gli servono per continuare a governare. \" Perché dovrei dimettermi io che per il momento ho la fiducia della Camera e quella che mi è stato data alle urne \", ha detto oggi Rajoy nel suo intervento in aula, mentre procedeva la discussione sulla mozione di sfiducia. Il voto dei baschi ora cambia le carte in tavola e fa crescere ulteriormente la pressione sul premier perché rassegni le sue dimissioni. La sfiducia al premier, o un'eventuale scelta di dimettersi, porterebbe alle estreme conseguenze lo scandalo per corruzione che ha investito il Partito popolare. Ma per ora sembra pensare a tutt'altro. \"Non ha intenzione di dimettersi - ha detto il segretario generale del Partito popolare , María Dolores de Cospedal - Non gioverebbe all'interesse generale o agli interessi del Pp\".")

# [{"generated_text": "il nazionalista rajoy: 'voteremo la sfiducia'"}]

```

or loaded using autoclasses:

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("it5/mt5-small-repubblica-to-ilgiornale")

model = AutoModelForSeq2SeqLM.from_pretrained("it5/mt5-small-repubblica-to-ilgiornale")

```

If you use this model in your research, please cite our work as:

```bibtex

@article{sarti-nissim-2022-it5,

title={{IT5}: Large-scale Text-to-text Pretraining for Italian Language Understanding and Generation},

author={Sarti, Gabriele and Nissim, Malvina},

journal={ArXiv preprint 2203.03759},

url={https://arxiv.org/abs/2203.03759},

year={2022},

month={mar}

}

```

|

8ebe2663939cc869a7c7a1838b2cff9e

|

Lvxue/distilled-mt5-small-010099

|

Lvxue

|

mt5

| 14 | 2 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

|

['en', 'ro']

|

['wmt16']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,037 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilled-mt5-small-010099

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the wmt16 ro-en dataset.

It achieves the following results on the evaluation set:

- Loss: 2.9787

- Bleu: 5.9209

- Gen Len: 50.1856

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

4d6ead1af09698b62bee7ec0279bbbdd

|

aXhyra/presentation_hate_1234567

|

aXhyra

|

distilbert

| 10 | 5 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['tweet_eval']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,403 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# presentation_hate_1234567

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the tweet_eval dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8438

- F1: 0.7680

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.436235805743952e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 1234567

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1     |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 0.6027        | 1.0   | 282  | 0.5186          | 0.7209 |
| 0.3537        | 2.0   | 564  | 0.4989          | 0.7619 |
| 0.0969        | 3.0   | 846  | 0.6405          | 0.7697 |
| 0.0514        | 4.0   | 1128 | 0.8438          | 0.7680 |

### Framework versions

- Transformers 4.12.5

- Pytorch 1.9.1

- Datasets 1.16.1

- Tokenizers 0.10.3

|

6474939a362aaf6d43fd44d86802e47b

|

sd-concepts-library/cortana

|

sd-concepts-library

| null | 12 | 0 | null | 0 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,306 | false |

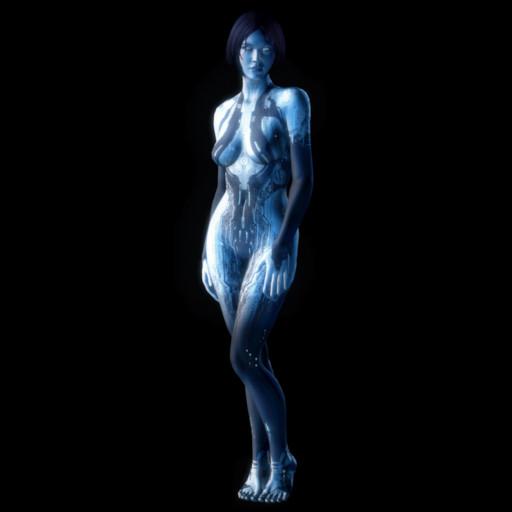

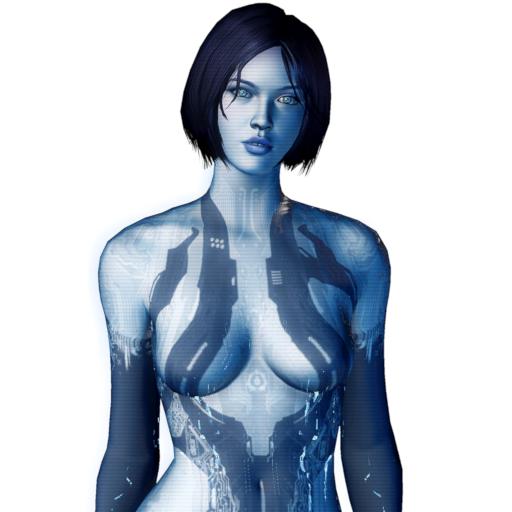

### cortana on Stable Diffusion

This is the `<cortana>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

cda8285610d93c8d879c929285372443

|

gchhablani/wav2vec2-large-xlsr-eo

|

gchhablani

|

wav2vec2

| 10 | 8 |

transformers

| 0 |

automatic-speech-recognition

| true | false | true |

apache-2.0

|

['eo']

|

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week']

| true | true | true | 4,414 | false |

# Wav2Vec2-Large-XLSR-53-Esperanto

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Esperanto using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python
import torch
import torchaudio
from datasets import load_dataset
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "eo", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained('gchhablani/wav2vec2-large-xlsr-eo')
model = Wav2Vec2ForCTC.from_pretrained('gchhablani/wav2vec2-large-xlsr-eo')

# Common Voice clips are 48 kHz; the model expects 16 kHz input
resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)
inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))
print("Reference:", test_dataset["sentence"][:2])
```
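After the `argmax`, `processor.batch_decode(predicted_ids)` turns the per-frame token IDs into text using the CTC collapse rule. The rule merges consecutive repeats, drops the blank (padding) token, and maps the word delimiter to a space. Below is a minimal plain-Python sketch of that rule. It assumes a toy, hypothetical vocabulary in which ID 0 is the blank and `|` marks word boundaries. The real vocabulary comes from the processor's tokenizer.

```python
from itertools import groupby


def ctc_greedy_decode(frame_ids, id_to_char, blank_id=0, word_delimiter="|"):
    """Collapse repeated frame IDs, drop blanks, and map the delimiter to spaces."""
    collapsed = [k for k, _ in groupby(frame_ids)]  # merge consecutive duplicates
    chars = [id_to_char[i] for i in collapsed if i != blank_id]
    return "".join(chars).replace(word_delimiter, " ").strip()


# Toy vocabulary (hypothetical): 0 is the blank/pad token, 6 is the word delimiter
vocab = {0: "<pad>", 1: "s", 2: "a", 3: "l", 4: "u", 5: "t", 6: "|"}
frames = [1, 1, 0, 2, 3, 3, 0, 4, 5, 5, 0]
print(ctc_greedy_decode(frames, vocab))
```

A blank between two identical IDs is what allows doubled letters: `[3, 0, 3]` decodes to `ll`, while `[3, 3]` decodes to a single `l`.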

## Evaluation

The model can be evaluated as follows on the Esperanto test data of Common Voice.

```python
import re

import jiwer
import torch
import torchaudio
from datasets import load_dataset
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor


def chunked_wer(targets, predictions, chunk_size=None):
    # Accumulate hits/substitutions/deletions/insertions chunk by chunk to limit memory use
    if chunk_size is None:
        return jiwer.wer(targets, predictions)
    start = 0
    end = chunk_size
    H, S, D, I = 0, 0, 0, 0
    while start < len(targets):
        chunk_metrics = jiwer.compute_measures(targets[start:end], predictions[start:end])
        H = H + chunk_metrics["hits"]
        S = S + chunk_metrics["substitutions"]
        D = D + chunk_metrics["deletions"]
        I = I + chunk_metrics["insertions"]
        start += chunk_size
        end += chunk_size
    return float(S + D + I) / float(H + S + D)


test_dataset = load_dataset("common_voice", "eo", split="test")

processor = Wav2Vec2Processor.from_pretrained('gchhablani/wav2vec2-large-xlsr-eo')
model = Wav2Vec2ForCTC.from_pretrained('gchhablani/wav2vec2-large-xlsr-eo')
model.to("cuda")

chars_to_ignore_regex = r"""[,?.!\-;:"“%‘”�„«(»)’']"""

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays and normalize the reference transcripts
def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower().replace('—', ' ').replace('–', ' ')
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference on the GPU and decode the predictions
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)
    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * chunked_wer(predictions=result["pred_strings"], targets=result["sentence"], chunk_size=5000)))
```

**Test Result**: 10.13 %

## Training

The Common Voice `train` and `validation` datasets were used for training. The code can be found [here](https://github.com/gchhablani/wav2vec2-week/blob/main/fine-tune-xlsr-wav2vec2-on-esperanto-asr-with-transformers-final.ipynb).

|

e8503a345651d80c47d7e06114314d20

|

Helsinki-NLP/opus-mt-de-hil

|

Helsinki-NLP

|

marian

| 10 | 8 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 776 | false |

### opus-mt-de-hil

* source languages: de

* target languages: hil

* OPUS readme: [de-hil](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/de-hil/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-20.zip](https://object.pouta.csc.fi/OPUS-MT-models/de-hil/opus-2020-01-20.zip)

* test set translations: [opus-2020-01-20.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/de-hil/opus-2020-01-20.test.txt)

* test set scores: [opus-2020-01-20.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/de-hil/opus-2020-01-20.eval.txt)

## Benchmarks

| testset               | BLEU  | chr-F |
|-----------------------|-------|-------|
| JW300.de.hil          | 33.9  | 0.563 |

|

5073fce856762ba8521956a93424dd0b

|

espnet/YushiUeda_mini_librispeech_diar_train_diar_raw_valid.acc.best

|

espnet

| null | 24 | 2 |

espnet

| 0 | null | false | false | false |

cc-by-4.0

|

['noinfo']

|

['mini_librispeech']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['espnet', 'audio', 'diarization']

| false | true | true | 5,382 | false |

## ESPnet2 DIAR model

### `espnet/YushiUeda_mini_librispeech_diar_train_diar_raw_valid.acc.best`

This model was trained by YushiUeda using the mini_librispeech recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```bash

cd espnet

git checkout 650472b45a67612eaac09c7fbd61dc25f8ff2405

pip install -e .

cd egs2/mini_librispeech/diar1

./run.sh --skip_data_prep false --skip_train true --download_model espnet/YushiUeda_mini_librispeech_diar_train_diar_raw_valid.acc.best

```

<!-- Generated by scripts/utils/show_diar_result.sh -->

# RESULTS

## Environments

- date: `Tue Jan 4 16:43:34 EST 2022`

- python version: `3.7.11 (default, Jul 27 2021, 14:32:16) [GCC 7.5.0]`

- espnet version: `espnet 0.10.5a1`

- pytorch version: `pytorch 1.9.0+cu102`

- Git hash: `0b2a6786b6f627f47defaee22911b3c2dc04af2a`

- Commit date: `Thu Dec 23 12:22:49 2021 -0500`

## diar_train_diar_raw

### DER

dev_clean_2_ns2_beta2_500

|threshold_median_collar|DER|
|---|---|
|result_th0.3_med11_collar0.0|32.28|
|result_th0.3_med1_collar0.0|32.64|
|result_th0.4_med11_collar0.0|30.43|
|result_th0.4_med1_collar0.0|31.15|
|result_th0.5_med11_collar0.0|29.45|
|result_th0.5_med1_collar0.0|30.53|
|result_th0.6_med11_collar0.0|29.52|
|result_th0.6_med1_collar0.0|30.95|
|result_th0.7_med11_collar0.0|30.92|
|result_th0.7_med1_collar0.0|32.69|
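DER sums missed speech, false-alarm speech and speaker confusion, then divides by the total reference speech; the table above reports it in percent. Below is a minimal frame-level sketch for illustration. It assumes per-frame 0/1 speaker activity for both the reference and the system output, and it searches over speaker permutations to find the best label mapping. It ignores the scoring collar (0.0 in every row above) and is not the scoring script used by the recipe.

```python
from itertools import permutations
from typing import Sequence


def frame_der(ref: Sequence[Sequence[int]], hyp: Sequence[Sequence[int]]) -> float:
    """Frame-level DER; each frame is a 0/1 activity tuple with one entry per speaker."""
    num_spk = len(ref[0])
    best_errors = None
    for perm in permutations(range(num_spk)):
        errors = 0
        for r, h in zip(ref, hyp):
            h = [h[p] for p in perm]  # relabel system speakers with this mapping
            n_ref, n_sys = sum(r), sum(h)
            n_match = sum(1 for a, b in zip(r, h) if a and b)
            miss = max(0, n_ref - n_sys)
            false_alarm = max(0, n_sys - n_ref)
            confusion = min(n_ref, n_sys) - n_match
            errors += miss + false_alarm + confusion
        if best_errors is None or errors < best_errors:
            best_errors = errors
    total_speech = sum(sum(r) for r in ref)
    return best_errors / total_speech


ref = [(1, 0), (1, 0), (0, 1), (1, 1)]
hyp = [(0, 1), (0, 0), (1, 0), (0, 1)]  # speakers labelled the other way round, two misses
print(f"DER = {100 * frame_der(ref, hyp):.2f}%")
```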

## DIAR config

<details><summary>expand</summary>

```
config: conf/train_diar.yaml
print_config: false
log_level: INFO
dry_run: false
iterator_type: chunk
output_dir: exp/diar_train_diar_raw
ngpu: 1
seed: 0
num_workers: 1
num_att_plot: 3
dist_backend: nccl
dist_init_method: env://
dist_world_size: 4
dist_rank: 0
local_rank: 0
dist_master_addr: localhost
dist_master_port: 33757
dist_launcher: null
multiprocessing_distributed: true
unused_parameters: false
sharded_ddp: false
cudnn_enabled: true
cudnn_benchmark: false
cudnn_deterministic: true
collect_stats: false
write_collected_feats: false
max_epoch: 100
patience: 3
val_scheduler_criterion:
- valid
- loss
early_stopping_criterion:
- valid
- loss
- min
best_model_criterion:
-   - valid
    - acc
    - max
keep_nbest_models: 3
nbest_averaging_interval: 0
grad_clip: 5
grad_clip_type: 2.0
grad_noise: false
accum_grad: 2
no_forward_run: false
resume: true
train_dtype: float32
use_amp: false
log_interval: null
use_matplotlib: true
use_tensorboard: true
use_wandb: false
wandb_project: null
wandb_id: null
wandb_entity: null
wandb_name: null
wandb_model_log_interval: -1
detect_anomaly: false
pretrain_path: null
init_param: []
ignore_init_mismatch: false
freeze_param: []
num_iters_per_epoch: null
batch_size: 16
valid_batch_size: null
batch_bins: 1000000
valid_batch_bins: null
train_shape_file:
- exp/diar_stats_8k/train/speech_shape
- exp/diar_stats_8k/train/spk_labels_shape
valid_shape_file:
- exp/diar_stats_8k/valid/speech_shape
- exp/diar_stats_8k/valid/spk_labels_shape
batch_type: folded
valid_batch_type: null
fold_length:
- 80000
- 800
sort_in_batch: descending
sort_batch: descending
multiple_iterator: false
chunk_length: 200000
chunk_shift_ratio: 0.5
num_cache_chunks: 64
train_data_path_and_name_and_type:
-   - dump/raw/simu/data/train_clean_5_ns2_beta2_500/wav.scp
    - speech
    - sound
-   - dump/raw/simu/data/train_clean_5_ns2_beta2_500/espnet_rttm
    - spk_labels
    - rttm
valid_data_path_and_name_and_type:
-   - dump/raw/simu/data/dev_clean_2_ns2_beta2_500/wav.scp
    - speech
    - sound
-   - dump/raw/simu/data/dev_clean_2_ns2_beta2_500/espnet_rttm
    - spk_labels
    - rttm
allow_variable_data_keys: false
max_cache_size: 0.0
max_cache_fd: 32
valid_max_cache_size: null
optim: adam
optim_conf:
    lr: 0.01
scheduler: noamlr
scheduler_conf:
    warmup_steps: 1000
num_spk: 2
init: xavier_uniform
input_size: null
model_conf:
    attractor_weight: 1.0
use_preprocessor: true
frontend: default
frontend_conf:
    fs: 8k
    hop_length: 128
specaug: null
specaug_conf: {}
normalize: global_mvn
normalize_conf:
    stats_file: exp/diar_stats_8k/train/feats_stats.npz
encoder: transformer
encoder_conf:
    input_layer: linear
    num_blocks: 2
    linear_units: 512
    dropout_rate: 0.1
    output_size: 256
    attention_heads: 4
    attention_dropout_rate: 0.0
decoder: linear
decoder_conf: {}
label_aggregator: label_aggregator
label_aggregator_conf: {}
attractor: null
attractor_conf: {}
required:
- output_dir
version: 0.10.5a1
distributed: true
```

</details>

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

```

or arXiv:

```bibtex
@misc{watanabe2018espnet,
  title={ESPnet: End-to-End Speech Processing Toolkit},
  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
  year={2018},
  eprint={1804.00015},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```

|

1b8fcad247fa5591f9e0586e668e8d44

|

k4black/edos-2023-baseline-bert-base-uncased-label_vector

|

k4black

|

bert

| 10 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,706 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# edos-2023-baseline-bert-base-uncased-label_vector

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1312

- F1: 0.4311

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 5

- num_epochs: 5

- mixed_precision_training: Native AMP
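With `lr_scheduler_type: linear` and `lr_scheduler_warmup_steps: 5`, the learning rate follows the shape of Transformers' `get_linear_schedule_with_warmup`. It ramps linearly from 0 to `learning_rate` (1e-05) over the first 5 optimizer steps, then decays linearly to 0 at the final step. The plain-Python sketch below reproduces that shape. The card does not state the total number of steps, so `total_steps=845` is an estimate from the results table: step 800 falls at epoch 4.73, which gives about 169 steps per epoch over 5 epochs.

```python
def linear_warmup_lr(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Linear warmup to peak_lr, then linear decay to 0 at total_steps (sketch)."""
    if step < warmup_steps:
        # Warmup phase: scale up proportionally to the step count.
        return peak_lr * step / max(1, warmup_steps)
    # Decay phase: fraction of the post-warmup budget that remains, clipped at 0.
    return peak_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


# Hyperparameters from the card; total_steps=845 is an estimate, not a reported value.
for step in (0, 2, 5, 400, 800, 845):
    print(step, linear_warmup_lr(step, peak_lr=1e-5, warmup_steps=5, total_steps=845))
```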

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 2.1453 | 0.59 | 100 | 1.9401 | 0.1077 |
| 1.8818 | 1.18 | 200 | 1.7312 | 0.1350 |
| 1.7149 | 1.78 | 300 | 1.5556 | 0.2047 |
| 1.5769 | 2.37 | 400 | 1.4030 | 0.2815 |
| 1.4909 | 2.96 | 500 | 1.3020 | 0.3217 |
| 1.3472 | 3.55 | 600 | 1.2238 | 0.3872 |
| 1.2856 | 4.14 | 700 | 1.1584 | 0.4162 |
| 1.2455 | 4.73 | 800 | 1.1312 | 0.4311 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1

- Tokenizers 0.13.2

|

28620131ac8aa21dac8ca7369237143d

|

Evgeneus/distilbert-base-uncased-finetuned-ner

|

Evgeneus

|

distilbert

| 15 | 4 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

| null |

['conll2003']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,372 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0845

- Precision: 0.8754

- Recall: 0.9058

- F1: 0.8904

- Accuracy: 0.9763
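Assuming F1 here is the harmonic mean of the reported precision and recall (as in seqeval's overall entity-level scores), the three numbers can be cross-checked directly. With the rounded values from the card, the result is about 0.8903, which agrees with the reported 0.8904 up to rounding of the inputs:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.8754, 0.9058), 4))
```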

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| 0.2529 | 1.0 | 878 | 0.0845 | 0.8754 | 0.9058 | 0.8904 | 0.9763 |
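The 878 steps reported for epoch 1.0 can be checked against the batch size. This check rests on several assumptions not stated on the card: the public CoNLL-2003 English training split has 14,041 sentences, training ran on a single device without gradient accumulation, and the last partial batch was kept. Under those assumptions, one epoch at `train_batch_size: 16` comes to exactly 878 optimizer steps:

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Optimizer steps in one epoch when the final partial batch is kept."""
    return math.ceil(num_examples / batch_size)


# 14,041 training sentences is an assumption about the conll2003 split used.
print(steps_per_epoch(14041, 16))
```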

### Framework versions

- Transformers 4.13.0

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

a43473c2c3ba5110311d451e6536cf46

|

yhavinga/ul2-small-dutch

|

yhavinga

|

t5

| 18 | 37 |

transformers

| 0 |

text2text-generation

| true | false | true |

apache-2.0

|

['nl']

|

['yhavinga/mc4_nl_cleaned', 'yhavinga/nedd_wiki_news']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['dutch', 't5', 't5x', 'ul2', 'seq2seq']

| false | true | true | 10,354 | false |

# ul2-small-dutch for Dutch

Pretrained T5 model on Dutch using a UL2 (Mixture-of-Denoisers) objective.

The T5 model was introduced in [this paper](https://arxiv.org/abs/1910.10683) and first released at [this page](https://github.com/google-research/text-to-text-transfer-transformer).

The UL2 objective was introduced in [this paper](https://arxiv.org/abs/2205.05131) and first released at [this page](https://github.com/google-research/google-research/tree/master/ul2).

**Note:** The Hugging Face inference widget is deactivated because this model needs text-to-text fine-tuning on a specific downstream task to be useful in practice.

## Model description

T5 is an encoder-decoder model and treats all NLP problems in a text-to-text format.

`ul2-small-dutch` is a T5 transformers model pretrained on a very large corpus of Dutch data in a self-supervised fashion. This means it was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of publicly available data), using an automatic process to generate inputs and outputs from those texts.
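One concrete form of "an automatic process to generate inputs and outputs" is T5-style span corruption, one of the denoising variants that UL2 mixes. The minimal sketch below applies it to a word list. Masked spans are passed in explicitly to keep the example deterministic; in real pretraining they are sampled at random. The `<extra_id_N>` sentinel names follow the original T5 vocabulary and are an assumption for this Dutch model's tokenizer.

```python
from typing import List, Tuple


def span_corrupt(tokens: List[str], spans: List[Tuple[int, int]]) -> Tuple[str, str]:
    """Build a (input, target) pair by masking [start, end) token spans.

    `spans` must be sorted and non-overlapping. Each masked span is replaced by
    a sentinel in the input; the target lists each sentinel followed by the
    tokens it hides, and ends with one extra closing sentinel.
    """
    inputs: List[str] = []
    targets: List[str] = []
    prev = 0
    for k, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{k}>"
        inputs.extend(tokens[prev:start])  # keep unmasked tokens before this span
        inputs.append(sentinel)
        targets.append(sentinel)
        targets.extend(tokens[start:end])  # the hidden span goes to the target
        prev = end
    inputs.extend(tokens[prev:])
    targets.append(f"<extra_id_{len(spans)}>")
    return " ".join(inputs), " ".join(targets)


src, tgt = span_corrupt("De kat zit op de mat in de zon".split(), [(1, 2), (4, 6)])
print(src)  # De <extra_id_0> zit op <extra_id_1> in de zon
print(tgt)  # <extra_id_0> kat <extra_id_1> de mat <extra_id_2>
```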

During pretraining, this model used the [T5 v1.1](https://github.com/google-research/text-to-text-transfer-transformer/blob/main/released_checkpoints.md#t511) improvements over the original T5 model:

- GEGLU activation in the feed-forward hidden layer, rather than ReLU (see [here](https://arxiv.org/abs/2002.05202))

- Dropout was turned off during pre-training. Dropout should be re-enabled during fine-tuning.

- Pre-trained on the self-supervised objective only, without mixing in the downstream tasks

- No parameter sharing between embedding and classifier layer
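The GEGLU bullet refers to a gated feed-forward layer. The input is projected twice, the GELU of one projection gates the other element-wise, and a final matrix projects the result back. The pure-Python sketch below uses lists as matrices at toy sizes. The weight names `w_gate`, `w_up` and `w_out` are hypothetical. The sketch uses the exact erf-based GELU; some implementations use a tanh approximation instead.

```python
import math
from typing import List

Matrix = List[List[float]]  # row-major, shape (d_in, d_out)


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def matvec(x: List[float], w: Matrix) -> List[float]:
    """Row vector x (length d_in) times matrix w (d_in x d_out)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def geglu_ffn(x: List[float], w_gate: Matrix, w_up: Matrix, w_out: Matrix) -> List[float]:
    """GEGLU feed-forward: (GELU(x W_gate) * (x W_up)) W_out, element-wise gating."""
    gate = [gelu(v) for v in matvec(x, w_gate)]
    up = matvec(x, w_up)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(hidden, w_out)


# Toy 1-dimensional example: output equals gelu(1.0) * 2.0.
print(geglu_ffn([1.0], [[1.0]], [[2.0]], [[1.0]]))
```

The ReLU layer of the original T5 uses a single input projection. GEGLU adds a second one (`w_up`), so T5 v1.1 style layers carry an extra weight matrix per feed-forward block.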

### UL2 pretraining objective

This model was pretrained with the UL2's Mixture-of-Denoisers (MoD) objective, that combines diverse pre-training