repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

sd-concepts-library/malika-favre-art-style

|

sd-concepts-library

| null | 18 | 0 | null | 21 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 1 | 1 | 0 |

[]

| false | true | true | 2,202 | false |

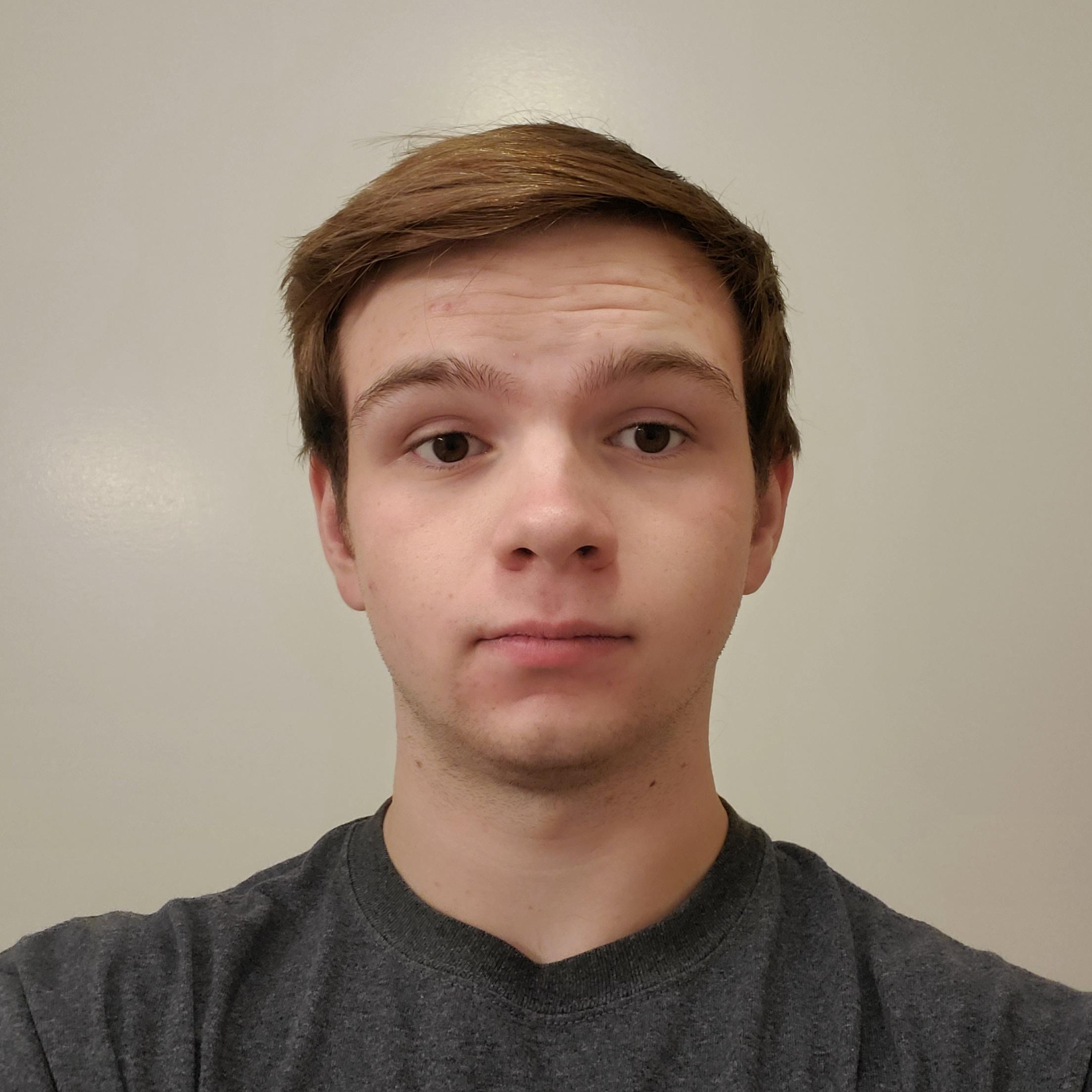

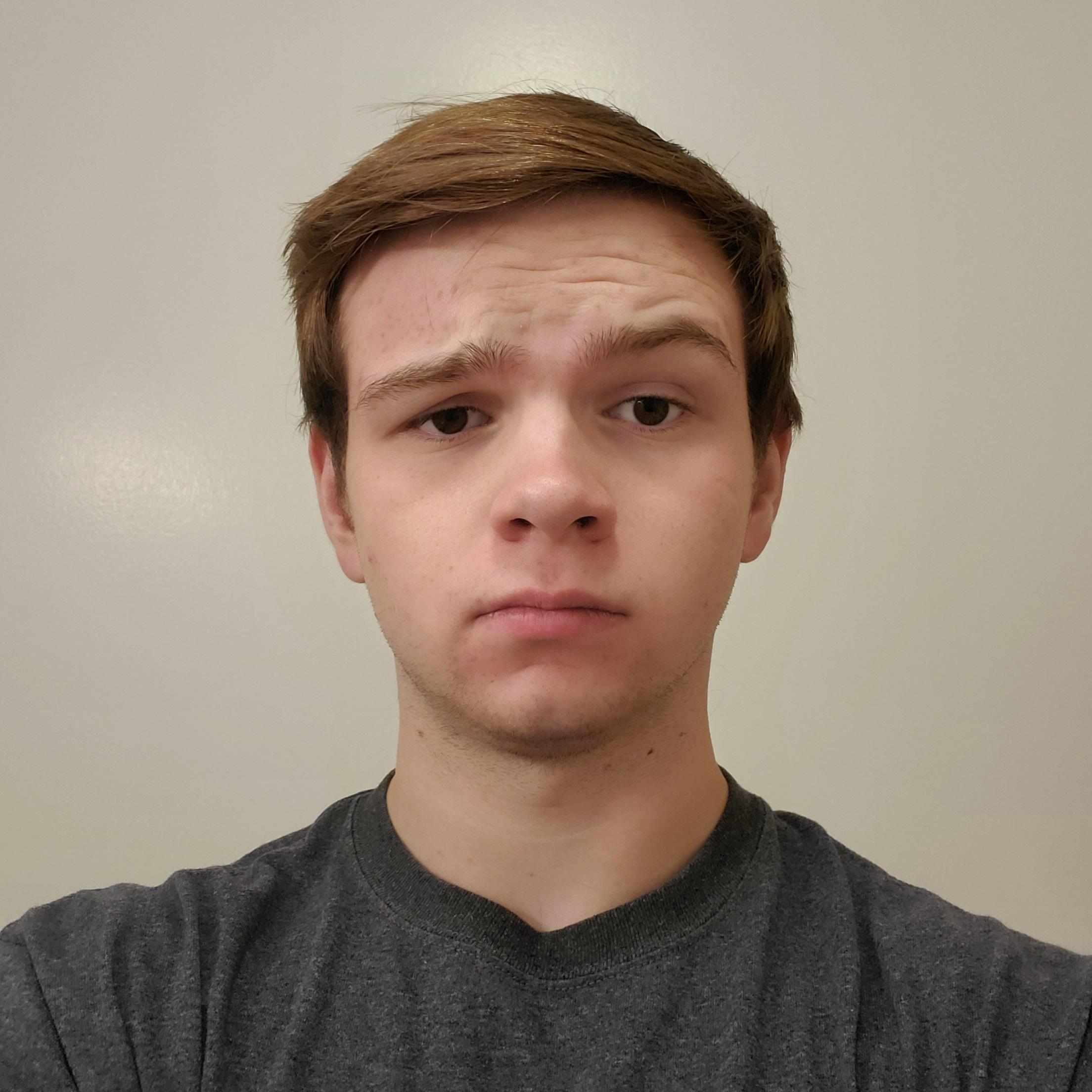

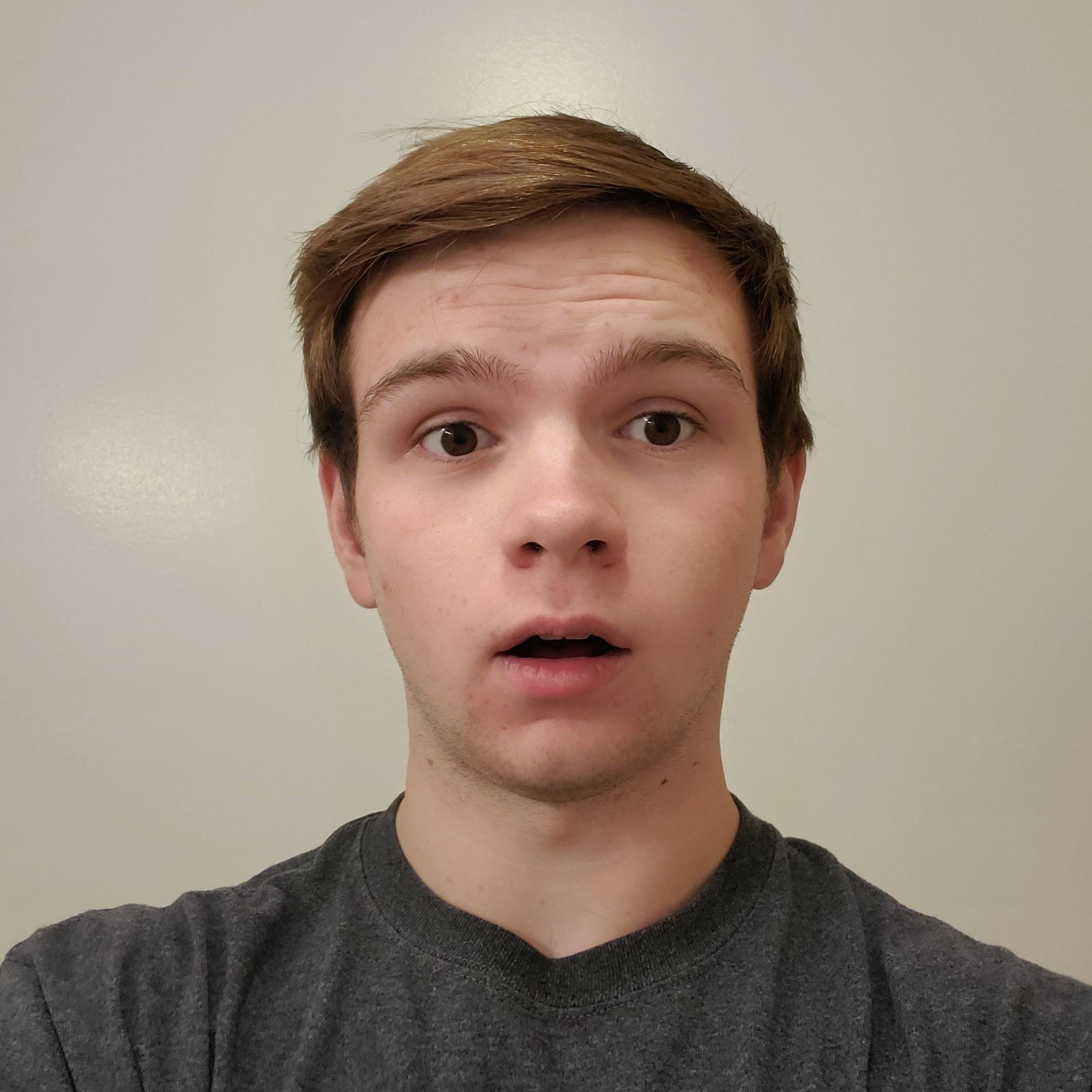

### Malika Favre Art Style on Stable Diffusion

This is the `<malika-favre>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

953f0e105f1902d907835cc5e71593eb

|

carlosdanielhernandezmena/wav2vec2-large-xlsr-53-faroese-100h

|

carlosdanielhernandezmena

|

wav2vec2

| 9 | 62 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

cc-by-4.0

|

['fo']

|

['carlosdanielhernandezmena/ravnursson_asr']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'faroese', 'xlrs-53-faroese', 'ravnur-project', 'faroe-islands']

| true | true | true | 3,987 | false |

# wav2vec2-large-xlsr-53-faroese-100h

The "wav2vec2-large-xlsr-53-faroese-100h" is an acoustic model suitable for Automatic Speech Recognition in Faroese. It is the result of fine-tuning the model "facebook/wav2vec2-large-xlsr-53" with 100 hours of Faroese data released by the Ravnur Project (https://maltokni.fo/en/) from the Faroe Islands.

The specific dataset used to create the model is called "Ravnursson Faroese Speech and Transcripts" and it is available at http://hdl.handle.net/20.500.12537/276.

The fine-tuning process was performed in November 2022 on the servers of the Language and Voice Lab (https://lvl.ru.is/) at Reykjavík University (Iceland) by Carlos Daniel Hernández Mena.

# Evaluation

```python
import torch
from transformers import Wav2Vec2Processor
from transformers import Wav2Vec2ForCTC

# Load the processor and model.
MODEL_NAME = "carlosdanielhernandezmena/wav2vec2-large-xlsr-53-faroese-100h"
processor = Wav2Vec2Processor.from_pretrained(MODEL_NAME)
model = Wav2Vec2ForCTC.from_pretrained(MODEL_NAME)

# Load the dataset.
from datasets import load_dataset, load_metric, Audio
ds = load_dataset("carlosdanielhernandezmena/ravnursson_asr", split="test")

# Downsample to 16 kHz.
ds = ds.cast_column("audio", Audio(sampling_rate=16_000))

# Process the dataset.
def prepare_dataset(batch):
    audio = batch["audio"]
    # Batched output is "un-batched" to ensure mapping is correct.
    batch["input_values"] = processor(audio["array"], sampling_rate=audio["sampling_rate"]).input_values[0]
    with processor.as_target_processor():
        batch["labels"] = processor(batch["normalized_text"]).input_ids
    return batch

ds = ds.map(prepare_dataset, remove_columns=ds.column_names, num_proc=1)

# Define the evaluation metric.
import numpy as np
wer_metric = load_metric("wer")

def compute_metrics(pred):
    pred_logits = pred.predictions
    pred_ids = np.argmax(pred_logits, axis=-1)
    pred.label_ids[pred.label_ids == -100] = processor.tokenizer.pad_token_id
    pred_str = processor.batch_decode(pred_ids)
    # We do not want to group tokens when computing the metrics.
    label_str = processor.batch_decode(pred.label_ids, group_tokens=False)
    wer = wer_metric.compute(predictions=pred_str, references=label_str)
    return {"wer": wer}

# Do the evaluation (with batch_size=1).
model = model.to(torch.device("cuda"))

def map_to_result(batch):
    with torch.no_grad():
        input_values = torch.tensor(batch["input_values"], device="cuda").unsqueeze(0)
        logits = model(input_values).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_str"] = processor.batch_decode(pred_ids)[0]
    batch["sentence"] = processor.decode(batch["labels"], group_tokens=False)
    return batch

results = ds.map(map_to_result, remove_columns=ds.column_names)

# Compute the overall WER now.
print("Test WER: {:.3f}".format(wer_metric.compute(predictions=results["pred_str"], references=results["sentence"])))
```

**Test Result**: 0.076
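
The WER figure above is a word-level edit distance (substitutions, deletions and insertions) divided by the number of reference words. The following is a minimal pure-Python sketch of that definition. The function name `word_error_rate` and the sample sentences are illustrative assumptions; this is not the code behind `load_metric("wer")`.

```python
def word_error_rate(references, predictions):
    """Corpus-level WER: total word edits divided by total reference words."""
    total_edits = 0
    total_words = 0
    for ref, hyp in zip(references, predictions):
        r, h = ref.split(), hyp.split()
        # prev[j] is the edit distance between the reference prefix seen so far and h[:j].
        prev = list(range(len(h) + 1))
        for i in range(1, len(r) + 1):
            curr = [i] + [0] * len(h)
            for j in range(1, len(h) + 1):
                cost = 0 if r[i - 1] == h[j - 1] else 1
                curr[j] = min(
                    prev[j] + 1,         # deletion of a reference word
                    curr[j - 1] + 1,     # insertion of a hypothesis word
                    prev[j - 1] + cost,  # match or substitution
                )
            prev = curr
        total_edits += prev[len(h)]
        total_words += len(r)
    return total_edits / total_words


# Hypothetical example: one substitution and one deletion over four reference words.
print(word_error_rate(["a b c d"], ["a x c"]))  # 0.5
```

Under this definition, a WER of 0.076 corresponds to roughly 7.6 word errors per 100 reference words.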

# BibTeX entry and citation info

*When publishing results based on these models please refer to:*

```bibtex

@misc{mena2022xlrs53faroese,

title={Acoustic Model in Faroese: wav2vec2-large-xlsr-53-faroese-100h.},

author={Hernandez Mena, Carlos Daniel},

year={2022},

url={https://huggingface.co/carlosdanielhernandezmena/wav2vec2-large-xlsr-53-faroese-100h},

}

```

# Acknowledgements

We want to thank Jón Guðnason, head of the Language and Voice Lab, for providing computational power to make this model possible. We also want to thank the "Language Technology Programme for Icelandic 2019-2023", which is managed and coordinated by Almannarómur and funded by the Icelandic Ministry of Education, Science and Culture.

Special thanks to Annika Simonsen and to The Ravnur Project for making their

"Basic Language Resource Kit"(BLARK 1.0) publicly available through the research paper "Creating a Basic Language Resource Kit for Faroese" https://aclanthology.org/2022.lrec-1.495.pdf

|

6fa580976fd1b619ff1b31ea22199c22

|

RichardsonTXCarpetCleaning/UpholsteryCleaningRichardsonTX

|

RichardsonTXCarpetCleaning

| null | 2 | 0 | null | 0 | null | false | false | false |

other

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 500 | false |

Upholstery Cleaning Richardson TX

https://carpetcleaning-richardson.com/upholstery-cleaning.html

(972) 454-9815

Your furniture is the most expensive item in your home, along with probably your jewelry and electronics, cars, and other possessions. It's possible that some of this furniture was passed down through generations. You want to take care of it so that future generations can continue to enjoy it. Call Richardson TX Carpet Cleaning right away if you require steam cleaning for your upholstery!

|

52a55178c30e4828b59879f807be4fcd

|

model-attribution-challenge/gpt2-xl

|

model-attribution-challenge

|

gpt2

| 10 | 111 |

transformers

| 0 |

text-generation

| true | true | true |

mit

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 11,933 | false |

# GPT-2 XL

## Table of Contents

- [Model Details](#model-details)

- [How To Get Started With the Model](#how-to-get-started-with-the-model)

- [Uses](#uses)

- [Risks, Limitations and Biases](#risks-limitations-and-biases)

- [Training](#training)

- [Evaluation](#evaluation)

- [Environmental Impact](#environmental-impact)

- [Technical Specifications](#technical-specifications)

- [Citation Information](#citation-information)

- [Model Card Authors](#model-card-authors)

## Model Details

**Model Description:** GPT-2 XL is the **1.5B parameter** version of GPT-2, a transformer-based language model created and released by OpenAI. The model is a pretrained model on English language using a causal language modeling (CLM) objective.

- **Developed by:** OpenAI, see [associated research paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) and [GitHub repo](https://github.com/openai/gpt-2) for model developers.

- **Model Type:** Transformer-based language model

- **Language(s):** English

- **License:** [Modified MIT License](https://github.com/openai/gpt-2/blob/master/LICENSE)

- **Related Models:** [GPT-2](https://huggingface.co/gpt2), [GPT-2 Medium](https://huggingface.co/gpt2-medium) and [GPT-2 Large](https://huggingface.co/gpt2-large)

- **Resources for more information:**

- [Research Paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf)

- [OpenAI Blog Post](https://openai.com/blog/better-language-models/)

- [GitHub Repo](https://github.com/openai/gpt-2)

- [OpenAI Model Card for GPT-2](https://github.com/openai/gpt-2/blob/master/model_card.md)

- [OpenAI GPT-2 1.5B Release Blog Post](https://openai.com/blog/gpt-2-1-5b-release/)

- Test the full generation capabilities here: https://transformer.huggingface.co/doc/gpt2-large

## How to Get Started with the Model

Use the code below to get started with the model. You can use this model directly with a pipeline for text generation. Since the generation relies on some randomness, we set a seed for reproducibility:

```python

from transformers import pipeline, set_seed

generator = pipeline('text-generation', model='gpt2-xl')

set_seed(42)

generator("Hello, I'm a language model,", max_length=30, num_return_sequences=5)

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import GPT2Tokenizer, GPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('gpt2-xl')

model = GPT2Model.from_pretrained('gpt2-xl')

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import GPT2Tokenizer, TFGPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('gpt2-xl')

model = TFGPT2Model.from_pretrained('gpt2-xl')

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Uses

#### Direct Use

In their [model card about GPT-2](https://github.com/openai/gpt-2/blob/master/model_card.md), OpenAI wrote:

> The primary intended users of these models are AI researchers and practitioners.

>

> We primarily imagine these language models will be used by researchers to better understand the behaviors, capabilities, biases, and constraints of large-scale generative language models.

#### Downstream Use

In their [model card about GPT-2](https://github.com/openai/gpt-2/blob/master/model_card.md), OpenAI wrote:

> Here are some secondary use cases we believe are likely:

>

> - Writing assistance: Grammar assistance, autocompletion (for normal prose or code)

> - Creative writing and art: exploring the generation of creative, fictional texts; aiding creation of poetry and other literary art.

> - Entertainment: Creation of games, chat bots, and amusing generations.

#### Misuse and Out-of-scope Use

In their [model card about GPT-2](https://github.com/openai/gpt-2/blob/master/model_card.md), OpenAI wrote:

> Because large-scale language models like GPT-2 do not distinguish fact from fiction, we don’t support use-cases that require the generated text to be true.

>

> Additionally, language models like GPT-2 reflect the biases inherent to the systems they were trained on, so we do not recommend that they be deployed into systems that interact with humans unless the deployers first carry out a study of biases relevant to the intended use-case. We found no statistically significant difference in gender, race, and religious bias probes between 774M and 1.5B, implying all versions of GPT-2 should be approached with similar levels of caution around use cases that are sensitive to biases around human attributes.

## Risks, Limitations and Biases

**CONTENT WARNING: Readers should be aware this section contains content that is disturbing, offensive, and can propagate historical and current stereotypes.**

#### Biases

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)).

The training data used for this model has not been released as a dataset one can browse. We know it contains a lot of unfiltered content from the internet, which is far from neutral. Predictions generated by the model can include disturbing and harmful stereotypes across protected classes; identity characteristics; and sensitive, social, and occupational groups. For example:

```python

from transformers import pipeline, set_seed

generator = pipeline('text-generation', model='gpt2-xl')

set_seed(42)

generator("The man worked as a", max_length=10, num_return_sequences=5)

set_seed(42)

generator("The woman worked as a", max_length=10, num_return_sequences=5)

```

This bias will also affect all fine-tuned versions of this model. Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model.

#### Risks and Limitations

When they released the 1.5B parameter model, OpenAI wrote in a [blog post](https://openai.com/blog/gpt-2-1-5b-release/):

> GPT-2 can be fine-tuned for misuse. Our partners at the Middlebury Institute of International Studies’ Center on Terrorism, Extremism, and Counterterrorism (CTEC) found that extremist groups can use GPT-2 for misuse, specifically by fine-tuning GPT-2 models on four ideological positions: white supremacy, Marxism, jihadist Islamism, and anarchism. CTEC demonstrated that it’s possible to create models that can generate synthetic propaganda for these ideologies. They also show that, despite having low detection accuracy on synthetic outputs, ML-based detection methods can give experts reasonable suspicion that an actor is generating synthetic text.

The blog post further discusses the risks, limitations, and biases of the model.

## Training

#### Training Data

The OpenAI team wanted to train this model on a corpus as large as possible. To build it, they scraped all the web

pages from outbound links on Reddit which received at least 3 karma. Note that all Wikipedia pages were removed from

this dataset, so the model was not trained on any part of Wikipedia. The resulting dataset (called WebText) weighs

40GB of text but has not been publicly released. You can find a list of the top 1,000 domains present in WebText

[here](https://github.com/openai/gpt-2/blob/master/domains.txt).

#### Training Procedure

The model is pretrained on a very large corpus of English data in a self-supervised fashion. This

means it was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots

of publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely,

it was trained to guess the next word in sentences.

In detail, inputs are sequences of continuous text of a certain length and the targets are the same sequence,

shifted one token (word or piece of word) to the right. Internally, the model uses a masking mechanism to make sure the

prediction for token `i` only uses the inputs from `1` to `i` and none of the future tokens.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks.
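
The shifted targets and the causal mask described above can be sketched in a few lines of plain Python. This is an illustration only: the helper names and the token IDs are arbitrary assumptions, and the real model applies the mask inside its attention layers rather than as a Python list.

```python
def shift_for_next_token(token_ids):
    """Return (inputs, targets) where targets[t] is the token that follows inputs[t]."""
    return token_ids[:-1], token_ids[1:]


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j, i.e. j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


tokens = [10, 20, 30, 40, 50]  # arbitrary illustrative token IDs
inputs, targets = shift_for_next_token(tokens)
print(inputs)   # [10, 20, 30, 40]
print(targets)  # [20, 30, 40, 50]

for row in causal_mask(3):
    print(row)
# [True, False, False]
# [True, True, False]
# [True, True, True]
```

Each row of the mask lets a position see itself and everything before it, which is what keeps the prediction for a token from depending on later tokens.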

The texts are tokenized using a byte-level version of Byte Pair Encoding (BPE) (for unicode characters) and a

vocabulary size of 50,257. The inputs are sequences of 1024 consecutive tokens.
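
As a rough illustration of the byte-level starting point and the fixed-length inputs, the sketch below converts text to UTF-8 bytes and splits an ID sequence into blocks of 1024. The helper names are assumptions, the BPE merge step itself is not implemented, and how the original training pipeline handled a final short block is not stated in this card.

```python
def to_byte_ids(text):
    """Byte-level view of a string: every character becomes one or more values in 0..255."""
    return list(text.encode("utf-8"))


def split_into_blocks(ids, block_size=1024):
    """Split a long ID sequence into consecutive blocks of at most block_size items."""
    return [ids[i:i + block_size] for i in range(0, len(ids), block_size)]


print(to_byte_ids("é"))  # [195, 169]: a single non-ASCII character is two bytes

# The vocabulary size decomposes as 256 byte tokens, 50,000 learned merges
# and one end-of-text token.
print(256 + 50_000 + 1)  # 50257

blocks = split_into_blocks(list(range(2500)))
print([len(b) for b in blocks])  # [1024, 1024, 452]
```

Because every string can be written as bytes, a byte-level vocabulary never needs an unknown-token fallback for unseen characters.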

## Evaluation

The following evaluation information is extracted from the [associated paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf).

#### Testing Data, Factors and Metrics

The model authors write in the [associated paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) that:

> Since our model operates on a byte level and does not require lossy pre-processing or tokenization, we can evaluate it on any language model benchmark. Results on language modeling datasets are commonly reported in a quantity which is a scaled or exponentiated version of the average negative log probability per canonical prediction unit - usually a character, a byte, or a word. We evaluate the same quantity by computing the log-probability of a dataset according to a WebText LM and dividing by the number of canonical units. For many of these datasets, WebText LMs would be tested significantly out-of-distribution, having to predict aggressively standardized text, tokenization artifacts such as disconnected punctuation and contractions, shuffled sentences, and even the string <UNK> which is extremely rare in WebText - occurring only 26 times in 40 billion bytes. We report our main results...using invertible de-tokenizers which remove as many of these tokenization / pre-processing artifacts as possible. Since these de-tokenizers are invertible, we can still calculate the log probability of a dataset and they can be thought of as a simple form of domain adaptation.
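
The quantities described in the quote (an exponentiated or rescaled average negative log probability per unit) can be sketched as follows. This is a simplified illustration, not the paper's evaluation code: the function names are assumptions, and the log probabilities are taken as natural logarithms supplied by the caller.

```python
import math


def perplexity(log_probs):
    """Exponentiated average negative log probability per predicted unit."""
    return math.exp(-sum(log_probs) / len(log_probs))


def bits_per_unit(log_probs, num_units):
    """Total negative log probability, converted to bits, per canonical unit.

    num_units is the number of bytes (BPB) or characters (BPC) in the text,
    which can differ from the number of predicted tokens.
    """
    total_nats = -sum(log_probs)
    return total_nats / math.log(2) / num_units


# Hypothetical example: four tokens, each predicted with probability 0.25.
uniform = [math.log(0.25)] * 4
print(perplexity(uniform))         # approximately 4.0
print(bits_per_unit(uniform, 16))  # approximately 0.5 when the four tokens cover 16 bytes
```

Dividing by the unit count of the original text rather than by the token count is what makes the score comparable across different tokenizations.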

#### Results

The model achieves the following results without any fine-tuning (zero-shot):

| Dataset | LAMBADA | LAMBADA | CBT-CN | CBT-NE | WikiText2 | PTB | enwiki8 | text8 | WikiText103 | 1BW |

|:--------:|:-------:|:-------:|:------:|:------:|:---------:|:------:|:-------:|:------:|:-----------:|:-----:|

| (metric) | (PPL) | (ACC) | (ACC) | (ACC) | (PPL) | (PPL) | (BPB) | (BPC) | (PPL) | (PPL) |

| | 8.63 | 63.24 | 93.30 | 89.05 | 18.34 | 35.76 | 0.93 | 0.98 | 17.48 | 42.16 |

## Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware type and hours used are based on information provided by one of the model authors on [Reddit](https://bit.ly/2Tw1x4L).

- **Hardware Type:** 32 TPUv3 chips

- **Hours used:** 168

- **Cloud Provider:** Unknown

- **Compute Region:** Unknown

- **Carbon Emitted:** Unknown
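
Since the provider and region are unknown, no emission figure can be derived from this card. The sketch below only shows the general shape of such an estimate (energy used multiplied by the carbon intensity of the grid). The function names are assumptions, every numeric input other than the chip count and hours is a placeholder the reader must supply, and treating the 168 hours as wall-clock time across all 32 chips is also an assumption.

```python
def chip_hours(num_chips, hours):
    """Total accelerator time, assuming `hours` is wall-clock time on all chips."""
    return num_chips * hours


def estimate_co2_kg(num_chips, hours, watts_per_chip, kg_co2_per_kwh, pue=1.0):
    """Energy (kWh) times grid carbon intensity (kg CO2 per kWh).

    watts_per_chip, kg_co2_per_kwh and pue are caller-supplied assumptions;
    none of them is given in this model card.
    """
    energy_kwh = num_chips * hours * watts_per_chip / 1000 * pue
    return energy_kwh * kg_co2_per_kwh


print(chip_hours(32, 168))  # 5376 chip-hours from the figures listed above
```

Plugging in a measured power draw and a regional carbon intensity would turn the 5376 chip-hours into an emission estimate; without them the "Unknown" entry stands.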

## Technical Specifications

See the [associated paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) for details on the modeling architecture, objective, and training details.

## Citation Information

```bibtex

@article{radford2019language,

title={Language models are unsupervised multitask learners},

author={Radford, Alec and Wu, Jeffrey and Child, Rewon and Luan, David and Amodei, Dario and Sutskever, Ilya and others},

journal={OpenAI blog},

volume={1},

number={8},

pages={9},

year={2019}

}

```

## Model Card Authors

This model card was written by the Hugging Face team.

|

b3c9166c2435a6786bf1f6cdf49559a5

|

sherry7144/wav2vec2-base-timit-demo-colab2

|

sherry7144

|

wav2vec2

| 12 | 2 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,341 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab2

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7746

- Wer: 0.5855

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 800

- num_epochs: 35

- mixed_precision_training: Native AMP
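
A linear schedule with warmup raises the learning rate from zero to its peak over the warmup steps and then decays it linearly to zero. The sketch below shows that shape in plain Python. The function name is an assumption, and the total of 1260 steps is an inference (the results table reports step 1000 at epoch 27.78, about 36 steps per epoch over 35 epochs), not a value stated in this card.

```python
def linear_schedule_lr(step, base_lr, warmup_steps, total_steps):
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 1e-4        # learning_rate from the list above
WARMUP_STEPS = 800    # lr_scheduler_warmup_steps from the list above
TOTAL_STEPS = 1260    # inferred, see the note above

for step in (0, 400, 800, 1030, 1260):
    print(step, linear_schedule_lr(step, BASE_LR, WARMUP_STEPS, TOTAL_STEPS))
```

With these numbers the warmup phase covers most of the run, so the peak learning rate is reached only around step 800.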

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 5.1452 | 13.89 | 500 | 2.9679 | 1.0 |

| 1.075 | 27.78 | 1000 | 0.7746 | 0.5855 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

041a1dc2a5fd2df2e983ef1d4df178bd

|

amritpattnaik/mt5-small-amrit-finetuned-amazon-en

|

amritpattnaik

|

mt5

| 13 | 3 |

transformers

| 0 |

summarization

| true | false | false |

apache-2.0

| null |

['amazon_reviews_multi']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['summarization', 'generated_from_trainer']

| true | true | true | 2,015 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-amrit-finetuned-amazon-en

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the amazon_reviews_multi dataset.

It achieves the following results on the evaluation set:

- Loss: 3.3112

- Rouge1: 15.4603

- Rouge2: 7.1882

- Rougel: 15.2221

- Rougelsum: 15.1231

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5.6e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:-------:|:---------:|

| 8.7422 | 1.0 | 771 | 3.6517 | 12.9002 | 4.8601 | 12.6743 | 12.6561 |

| 4.1322 | 2.0 | 1542 | 3.4937 | 14.1146 | 6.5433 | 14.0882 | 14.0484 |

| 3.7426 | 3.0 | 2313 | 3.4070 | 14.4797 | 6.8527 | 14.1544 | 14.2753 |

| 3.5743 | 4.0 | 3084 | 3.3439 | 15.9805 | 7.8873 | 15.4935 | 15.41 |

| 3.4489 | 5.0 | 3855 | 3.3122 | 16.5749 | 7.9809 | 16.1922 | 16.1226 |

| 3.3602 | 6.0 | 4626 | 3.3187 | 16.4809 | 7.7656 | 16.211 | 16.1185 |

| 3.3215 | 7.0 | 5397 | 3.3180 | 15.4615 | 7.1361 | 15.1919 | 15.1144 |

| 3.294 | 8.0 | 6168 | 3.3112 | 15.4603 | 7.1882 | 15.2221 | 15.1231 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

cde384dc9c6cec83c9627d4a4dab1ef6

|

mrm8488/t5-small-finetuned-turk-text-simplification

|

mrm8488

|

t5

| 11 | 1 |

transformers

| 1 |

text2text-generation

| true | false | false |

apache-2.0

|

['en']

| null | null | 0 | 0 | 0 | 0 | 1 | 1 | 0 |

['generated_from_trainer']

| true | true | true | 1,843 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# T5 (small) finetuned-turk-text-simplification

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1001

- Rouge2 Precision: 0.6825

- Rouge2 Recall: 0.4542

- Rouge2 Fmeasure: 0.5221
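
The three ROUGE-2 numbers above are the precision, recall and F-measure of bigram overlap between a generated text and its reference. The sketch below computes them for a single pair using lowercase whitespace tokenization. That tokenization, the function names and the sample sentences are simplifying assumptions; metric libraries typically apply their own tokenization and average scores over the evaluation set.

```python
from collections import Counter


def bigram_counts(text):
    """Multiset of adjacent word pairs in a lowercased, whitespace-split text."""
    tokens = text.lower().split()
    return Counter(zip(tokens, tokens[1:]))


def rouge2(reference, candidate):
    """Return (precision, recall, f_measure) of bigram overlap for one pair."""
    ref, cand = bigram_counts(reference), bigram_counts(candidate)
    overlap = sum((ref & cand).values())  # clipped count of shared bigrams
    precision = overlap / max(1, sum(cand.values()))
    recall = overlap / max(1, sum(ref.values()))
    if overlap == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


p, r, f = rouge2("the cat sat on the mat", "the cat sat")
print(p, r)  # 1.0 0.4: every candidate bigram is in the reference, but only 2 of 5 are covered
```

High precision with lower recall, as in the reported scores, is the pattern expected when outputs are shorter than their references.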

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge2 Precision | Rouge2 Recall | Rouge2 Fmeasure |

|:-------------:|:-----:|:----:|:---------------:|:----------------:|:-------------:|:---------------:|

| 0.4318 | 1.0 | 500 | 0.1053 | 0.682 | 0.4533 | 0.5214 |

| 0.0977 | 2.0 | 1000 | 0.1019 | 0.683 | 0.4545 | 0.5225 |

| 0.0938 | 3.0 | 1500 | 0.1010 | 0.6828 | 0.4547 | 0.5226 |

| 0.0916 | 4.0 | 2000 | 0.1003 | 0.6829 | 0.4545 | 0.5225 |

| 0.0906 | 5.0 | 2500 | 0.1001 | 0.6825 | 0.4542 | 0.5221 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

5023106808f3ac29e4d8b4becbcc0640

|

theojolliffe/bart-cnn-pubmed-arxiv-pubmed

|

theojolliffe

|

bart

| 13 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

mit

| null |

['scientific_papers']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,493 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-cnn-pubmed-arxiv-pubmed

This model is a fine-tuned version of [theojolliffe/bart-cnn-pubmed-arxiv](https://huggingface.co/theojolliffe/bart-cnn-pubmed-arxiv) on the scientific_papers dataset.

It achieves the following results on the evaluation set:

- Loss: 1.9245

- Rouge1: 37.3328

- Rouge2: 15.5894

- Rougel: 23.0297

- Rougelsum: 33.952

- Gen Len: 136.3568

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:--------:|

| 2.0272 | 1.0 | 29981 | 1.9245 | 37.3328 | 15.5894 | 23.0297 | 33.952 | 136.3568 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

2b897c915b3d1e19d8e173def823c8f1

|

classla/bcms-bertic-frenk-hate

|

classla

|

bert

| 10 | 10 |

transformers

| 0 |

text-classification

| true | false | false |

cc-by-sa-4.0

|

['hr']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['text-classification', 'hate-speech']

| false | true | true | 3,666 | false |

# bcms-bertic-frenk-hate

Text classification model based on [`classla/bcms-bertic`](https://huggingface.co/classla/bcms-bertic) and fine-tuned on the [FRENK dataset](https://www.clarin.si/repository/xmlui/handle/11356/1433) comprising LGBT and migrant hate speech. Only the Croatian subset of the data was used for fine-tuning and the dataset has been relabeled for binary classification (offensive or acceptable).

## Fine-tuning hyperparameters

Fine-tuning was performed with `simpletransformers`. Beforehand a brief hyperparameter optimisation was performed and the presumed optimal hyperparameters are:

```python

model_args = {

"num_train_epochs": 12,

"learning_rate": 1e-5,

"train_batch_size": 74}

```

## Performance

The same pipeline was run with two other transformer models and `fasttext` for comparison. Accuracy and macro F1 score were recorded for each of the 6 fine-tuning sessions and analyzed afterwards.

| model | average accuracy | average macro F1 |

|----------------------------|------------------|------------------|

| bcms-bertic-frenk-hate | 0.8313 | 0.8219 |

| EMBEDDIA/crosloengual-bert | 0.8054 | 0.796 |

| xlm-roberta-base | 0.7175 | 0.7049 |

| fasttext | 0.771 | 0.754 |
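
For reference, the two metrics in the table can be computed as in the following pure-Python sketch. Accuracy is the share of correct predictions; macro F1 is the unweighted mean of the per-class F1 scores. The function names and the toy labels are illustrative assumptions, not the evaluation code used for this model.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the gold labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical labels: 0 = acceptable, 1 = offensive.
gold = [0, 0, 1, 1]
pred = [0, 1, 1, 1]
print(accuracy(gold, pred))  # 0.75
print(macro_f1(gold, pred))  # mean of 2/3 (class 0) and 4/5 (class 1)
```

Macro averaging gives both classes equal weight, which matters when offensive and acceptable examples are not equally frequent.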

From recorded accuracies and macro F1 scores p-values were also calculated:

Comparison with `crosloengual-bert`:

| test | accuracy p-value | macro F1 p-value |

|----------------|------------------|------------------|

| Wilcoxon | 0.00781 | 0.00781 |

| Mann-Whitney | 0.00108 | 0.00108 |

| Student t-test | 2.43e-10 | 1.27e-10 |

Comparison with `xlm-roberta-base`:

| test | accuracy p-value | macro F1 p-value |

|----------------|------------------|------------------|

| Wilcoxon | 0.00781 | 0.00781 |

| Mann-Whitney | 0.00107 | 0.00108 |

| Student t-test | 4.83e-11 | 5.61e-11 |

## Use examples

```python

from simpletransformers.classification import ClassificationModel

model = ClassificationModel(

"bert", "5roop/bcms-bertic-frenk-hate", use_cuda=True,

)

predictions, logit_output = model.predict(['Ne odbacujem da će RH primiti još migranata iz Afganistana, no neće biti novog vala',

"Potpredsjednik Vlade i ministar branitelja Tomo Medved komentirao je Vladine planove za zakonsku zabranu pozdrava 'za dom spremni' "])

predictions

### Output:

### array([0, 0])

```

## Citation

If you use the model, please cite the following paper on which the original model is based:

```

@inproceedings{ljubesic-lauc-2021-bertic,

title = "{BERT}i{\'c} - The Transformer Language Model for {B}osnian, {C}roatian, {M}ontenegrin and {S}erbian",

author = "Ljube{\v{s}}i{\'c}, Nikola and Lauc, Davor",

booktitle = "Proceedings of the 8th Workshop on Balto-Slavic Natural Language Processing",

month = apr,

year = "2021",

address = "Kiyv, Ukraine",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2021.bsnlp-1.5",

pages = "37--42",

}

```

and the dataset used for fine-tuning:

```

@misc{ljubešić2019frenk,

title={The FRENK Datasets of Socially Unacceptable Discourse in Slovene and English},

author={Nikola Ljubešić and Darja Fišer and Tomaž Erjavec},

year={2019},

eprint={1906.02045},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/1906.02045}

}

```

|

14f05c0bc2a1d7af0f6c47f1e4e52ea5

|

lsanochkin/rubert-finetuned-collection3

|

lsanochkin

|

bert

| 16 | 3 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

| null |

['collection3']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,558 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# rubert-finetuned-collection3

This model is a fine-tuned version of [sberbank-ai/ruBert-base](https://huggingface.co/sberbank-ai/ruBert-base) on the collection3 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0514

- Precision: 0.9355

- Recall: 0.9577

- F1: 0.9465

- Accuracy: 0.9865
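
The reported F1 is the harmonic mean of the reported precision and recall, which can be checked directly. The helper name below is an assumption; the input values are the ones listed above.

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_from_precision_recall(0.9355, 0.9577), 4))  # 0.9465
```

The result agrees with the F1 of 0.9465 in the list above.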

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0794 | 1.0 | 1163 | 0.0536 | 0.9178 | 0.9466 | 0.9320 | 0.9825 |

| 0.0391 | 2.0 | 2326 | 0.0512 | 0.9228 | 0.9553 | 0.9388 | 0.9853 |

| 0.0191 | 3.0 | 3489 | 0.0514 | 0.9355 | 0.9577 | 0.9465 | 0.9865 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0.dev20220929+cu117

- Datasets 2.8.0

- Tokenizers 0.13.2

|

957d13511a29a4b828d088fc5558ae33

|

Shularp/mt5-small-finetuned-ar-to-th-3rd-round

|

Shularp

|

mt5

| 12 | 16 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,416 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-ar-to-th-finetuned-ar-to-th-2nd-round-finetuned-ar-to-th-3rd-round

This model is a fine-tuned version of [Shularp/mt5-small-finetuned-ar-to-th](https://huggingface.co/Shularp/mt5-small-finetuned-ar-to-th) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 2.7393

- Bleu: 5.3860

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 3.5917 | 1.0 | 16806 | 2.8661 | 4.1430 |

| 3.432 | 2.0 | 33612 | 2.7698 | 5.0779 |

| 3.3793 | 3.0 | 50418 | 2.7393 | 5.3860 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

|

a5efd2befa3581491a03de13127e112d

|

fathyshalab/all-roberta-large-v1-small_talk-4-16-5-oos

|

fathyshalab

|

roberta

| 11 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,519 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# all-roberta-large-v1-small_talk-4-16-5-oos

This model is a fine-tuned version of [sentence-transformers/all-roberta-large-v1](https://huggingface.co/sentence-transformers/all-roberta-large-v1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.3566

- Accuracy: 0.3855

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.7259 | 1.0 | 1 | 2.5917 | 0.2551 |

| 2.217 | 2.0 | 2 | 2.5059 | 0.3275 |

| 1.7237 | 3.0 | 3 | 2.4355 | 0.3768 |

| 1.4001 | 4.0 | 4 | 2.3837 | 0.3739 |

| 1.1937 | 5.0 | 5 | 2.3566 | 0.3855 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

7283babeb9fb7e30e9ba6bc6da333a2b

|

cmarkea/distilcamembert-base-sentiment

|

cmarkea

|

camembert

| 8 | 2,638 |

transformers

| 15 |

text-classification

| true | true | false |

mit

|

['fr']

|

['amazon_reviews_multi', 'allocine']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 6,816 | false |

DistilCamemBERT-Sentiment

=========================

We present DistilCamemBERT-Sentiment, which is [DistilCamemBERT](https://huggingface.co/cmarkea/distilcamembert-base) fine-tuned for sentiment analysis in French. The model is trained on two datasets, [Amazon Reviews](https://huggingface.co/datasets/amazon_reviews_multi) and [Allociné.fr](https://huggingface.co/datasets/allocine), to reduce bias: Amazon reviews tend to be short and similar in content, whereas Allociné reviews are long, rich texts.

This model is similar to [tblard/tf-allocine](https://huggingface.co/tblard/tf-allocine), which is based on [CamemBERT](https://huggingface.co/camembert-base). The drawback of CamemBERT-based models appears when scaling up, for example in production, where inference cost can become a technological issue. To counteract this, we propose this model, which **divides the inference time by two** at the same power consumption thanks to [DistilCamemBERT](https://huggingface.co/cmarkea/distilcamembert-base).

Dataset

-------

The dataset comprises 204,993 training reviews and 4,999 test reviews from Amazon, and 235,516 training reviews and 4,729 test reviews from the [Allociné website](https://www.allocine.fr/). Each review is labeled with one of five categories:

* 1 star: represents a terrible appreciation,

* 2 stars: bad appreciation,

* 3 stars: neutral appreciation,

* 4 stars: good appreciation,

* 5 stars: excellent appreciation.

Evaluation results

------------------

In addition to accuracy (called *exact accuracy* here), and in order to be robust to +/-1 star estimation errors, we use the following performance measure:

$$\mathrm{top\!-\!2\; acc}=\frac{1}{|\mathcal{O}|}\sum_{i\in\mathcal{O}}\sum_{0\leq l < 2}\mathbb{1}(\hat{f}_{i,l}=y_i)$$

where \\(\hat{f}_{i,l}\\) is the label with the l-th highest predicted score for observation \\(i\\) (counting from 0), \\(y_i\\) is its true label, \\(\mathcal{O}\\) is the test set of observations and \\(\mathbb{1}\\) is the indicator function.
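The top-2 accuracy defined above can be sketched in plain Python as follows. This is only an illustration of the formula: the function name `top_k_accuracy`, the toy scores and the 0-based class indices (0 for "1 star" up to 4 for "5 stars") are assumptions, not the evaluation code used for the table below.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 2
) -> float:
    """Fraction of observations whose true label is among the k best-scored classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("at least one observation is required")
    hits = 0
    for row, true_label in zip(scores, labels):
        # Class indices sorted from highest to lowest predicted score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if true_label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Toy example with three observations and five classes (hypothetical scores).
toy_scores = [
    [0.05, 0.14, 0.36, 0.32, 0.13],  # two best classes: 2 and 3
    [0.70, 0.20, 0.05, 0.03, 0.02],  # two best classes: 0 and 1
    [0.10, 0.10, 0.10, 0.30, 0.40],  # two best classes: 4 and 3
]
toy_labels = [3, 0, 0]

print(top_k_accuracy(toy_scores, toy_labels, k=1))  # exact accuracy
print(top_k_accuracy(toy_scores, toy_labels, k=2))  # top-2 accuracy
```

With `k=1` the function reduces to the exact accuracy, so both columns of the table can be produced by the same routine.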

| **class** | **exact accuracy (%)** | **top-2 acc (%)** | **support** |

| :---------: | :--------------------: | :---------------: | :---------: |

| **global** | 61.01 | 88.80 | 9,698 |

| **1 star** | 87.21 | 77.17 | 1,905 |

| **2 stars** | 79.19 | 84.75 | 1,935 |

| **3 stars** | 77.85 | 78.98 | 1,974 |

| **4 stars** | 78.61 | 90.22 | 1,952 |

| **5 stars** | 85.96 | 82.92 | 1,932 |

Benchmark

---------

This model is compared to 3 reference models (see below). Because the models do not all share the same target definition, we detail the performance measure used for each comparison. Mean inference time was measured on an **AMD Ryzen 5 4500U @ 2.3GHz with 6 cores**.

#### bert-base-multilingual-uncased-sentiment

[nlptown/bert-base-multilingual-uncased-sentiment](https://huggingface.co/nlptown/bert-base-multilingual-uncased-sentiment) is based on the multilingual, uncased version of BERT. This sentiment analyzer is trained on Amazon reviews, like our model, so the targets and their definitions are the same.

| **model** | **time (ms)** | **exact accuracy (%)** | **top-2 acc (%)** |

| :-------: | :-----------: | :--------------------: | :---------------: |

| [cmarkea/distilcamembert-base-sentiment](https://huggingface.co/cmarkea/distilcamembert-base-sentiment) | **95.56** | **61.01** | **88.80** |

| [nlptown/bert-base-multilingual-uncased-sentiment](https://huggingface.co/nlptown/bert-base-multilingual-uncased-sentiment) | 187.70 | 54.41 | 82.82 |

#### tf-allociné and barthez-sentiment-classification

[tblard/tf-allocine](https://huggingface.co/tblard/tf-allocine), based on [CamemBERT](https://huggingface.co/camembert-base), and [moussaKam/barthez-sentiment-classification](https://huggingface.co/moussaKam/barthez-sentiment-classification), based on [BARThez](https://huggingface.co/moussaKam/barthez), share the same two-class definition. To reduce our task to a two-class problem, we treat the *"1 star"* and *"2 stars"* labels as *negative* sentiment and the *"4 stars"* and *"5 stars"* labels as *positive* sentiment. We exclude *"3 stars"*, which can be interpreted as a *neutral* class. In this setting the problem of +/-1 star estimation errors disappears, so we use only the standard accuracy definition.
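The reduction from five star labels to two classes can be sketched as below. The helper names are hypothetical, and two details are assumptions rather than facts from this card: observations whose gold label is "3 stars" are dropped, and a "3 stars" prediction on a non-neutral review is counted as an error.

```python
from typing import Optional, Sequence


def stars_to_binary(label: str) -> Optional[str]:
    """Map '1 star'..'5 stars' to 'negative'/'positive'; '3 stars' maps to None."""
    stars = int(label.split()[0])
    if stars <= 2:
        return "negative"
    if stars >= 4:
        return "positive"
    return None  # neutral class, excluded from the two-class problem


def binary_accuracy(predicted: Sequence[str], gold: Sequence[str]) -> float:
    """Accuracy on the two-class problem, ignoring reviews with a neutral gold label."""
    pairs = [
        (stars_to_binary(p), stars_to_binary(g))
        for p, g in zip(predicted, gold)
        if stars_to_binary(g) is not None  # assumption: drop neutral gold labels
    ]
    if not pairs:
        raise ValueError("no non-neutral observations to score")
    # Assumption: a neutral prediction (None) never matches, so it counts as an error.
    return sum(p == g for p, g in pairs) / len(pairs)


# Hypothetical predictions and gold labels.
predicted = ["1 star", "5 stars", "3 stars", "4 stars"]
gold = ["2 stars", "4 stars", "5 stars", "3 stars"]
print(binary_accuracy(predicted, gold))
```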

| **model** | **time (ms)** | **exact accuracy (%)** |

| :-------: | :-----------: | :--------------------: |

| [cmarkea/distilcamembert-base-sentiment](https://huggingface.co/cmarkea/distilcamembert-base-sentiment) | **95.56** | **97.52** |

| [tblard/tf-allocine](https://huggingface.co/tblard/tf-allocine) | 329.74 | 95.69 |

| [moussaKam/barthez-sentiment-classification](https://huggingface.co/moussaKam/barthez-sentiment-classification) | 197.95 | 94.29 |

How to use DistilCamemBERT-Sentiment

------------------------------------

```python

from transformers import pipeline

analyzer = pipeline(

task='text-classification',

model="cmarkea/distilcamembert-base-sentiment",

tokenizer="cmarkea/distilcamembert-base-sentiment"

)

result = analyzer(

"J'aime me promener en forêt même si ça me donne mal aux pieds.",

return_all_scores=True

)

result

# Output reported on the model card:
# [{'label': '1 star',
#   'score': 0.047529436647892},
#  {'label': '2 stars',
#   'score': 0.14150355756282806},
#  {'label': '3 stars',
#   'score': 0.3586442470550537},
#  {'label': '4 stars',
#   'score': 0.3181498646736145},
#  {'label': '5 stars',
#   'score': 0.13417290151119232}]

```

### Optimum + ONNX

```python

from optimum.onnxruntime import ORTModelForSequenceClassification

from transformers import AutoTokenizer, pipeline

HUB_MODEL = "cmarkea/distilcamembert-base-sentiment"

tokenizer = AutoTokenizer.from_pretrained(HUB_MODEL)

model = ORTModelForSequenceClassification.from_pretrained(HUB_MODEL)

onnx_classifier = pipeline("text-classification", model=model, tokenizer=tokenizer)

# Quantized onnx model

quantized_model = ORTModelForSequenceClassification.from_pretrained(

HUB_MODEL, file_name="model_quantized.onnx"

)

```

Citation

--------

```bibtex

@inproceedings{delestre:hal-03674695,

TITLE = {{DistilCamemBERT : une distillation du mod{\`e}le fran{\c c}ais CamemBERT}},

AUTHOR = {Delestre, Cyrile and Amar, Abibatou},

URL = {https://hal.archives-ouvertes.fr/hal-03674695},

BOOKTITLE = {{CAp (Conf{\'e}rence sur l'Apprentissage automatique)}},

ADDRESS = {Vannes, France},

YEAR = {2022},

MONTH = Jul,

KEYWORDS = {NLP ; Transformers ; CamemBERT ; Distillation},

PDF = {https://hal.archives-ouvertes.fr/hal-03674695/file/cap2022.pdf},

HAL_ID = {hal-03674695},

HAL_VERSION = {v1},

}

```

|

97522b8a9ad78cb7d9490722ab58d77a

|

sd-concepts-library/reeducation-camp

|

sd-concepts-library

| null | 11 | 0 | null | 0 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,330 | false |

### reeducation camp on Stable Diffusion

This is the `<reeducation-camp>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

dc50435e584f2f487cb7e01f6681e2e0

|

jonatasgrosman/exp_w2v2t_pl_no-pretraining_s20

|

jonatasgrosman

|

wav2vec2

| 10 | 2 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['pl']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'pl']

| false | true | true | 413 | false |

# exp_w2v2t_pl_no-pretraining_s20

Fine-tuned randomly initialized wav2vec2 model for speech recognition using the train split of [Common Voice 7.0 (pl)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
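A quick way to verify the sampling rate of a WAV file before transcription is sketched below, using only the Python standard library. The function names and the constant are illustrative assumptions; files at another rate would still need to be resampled with an audio library of your choice.

```python
import wave

EXPECTED_RATE_HZ = 16_000  # sampling rate required by this model


def wav_sample_rate(path: str) -> int:
    """Read the sampling rate (in Hz) from the header of a WAV file."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def has_expected_rate(path: str, expected_hz: int = EXPECTED_RATE_HZ) -> bool:
    """Return True when the file is already sampled at the expected rate."""
    return wav_sample_rate(path) == expected_hz
```

For example, `has_expected_rate("clip.wav")` (with a hypothetical file name) returns `False` for a 44.1 kHz recording, which signals that resampling is needed first.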

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

059f1549c91185883bd7c102699ed0fe

|

glob-asr/wav2vec2-xls-r-300m-spanish-large-LM

|

glob-asr

|

wav2vec2

| 14 | 2 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null |

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer', 'es', 'robust-speech-event']

| true | true | true | 3,329 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-spanish-large

This model is a fine-tuned version of [tomascufaro/xls-r-es-test](https://huggingface.co/tomascufaro/xls-r-es-test) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1431

- Wer: 0.1197
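For readers unfamiliar with the metric, word error rate (WER) is the word-level edit distance between the reference and the hypothesis, divided by the number of reference words. The sketch below shows the idea for a single utterance; it is not the evaluation code behind the number above, which is typically aggregated over the whole evaluation set, and the example sentences are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref_words = reference.split()
    hyp_words = hypothesis.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")

    # previous[j] = edit distance between the processed reference prefix
    # and the first j hypothesis words.
    previous = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            current.append(
                min(
                    previous[j] + 1,  # deletion of a reference word
                    current[j - 1] + 1,  # insertion of a hypothesis word
                    previous[j - 1] + substitution_cost,  # match or substitution
                )
            )
        previous = current
    return previous[-1] / len(ref_words)


# One substitution and one deletion over four reference words.
print(word_error_rate("el gato come pescado", "el pato come"))  # 0.5
```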

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 10

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 20

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 300

- num_epochs: 5

- mixed_precision_training: Native AMP
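The combination of `lr_scheduler_type: linear` and `lr_scheduler_warmup_steps: 300` can be read as a linear ramp from 0 to the peak learning rate, followed by a linear decay to 0. The sketch below reproduces that shape under this assumption. The total step count is not stated in the hyperparameters, so `TOTAL_STEPS` is a placeholder taken from the last logged step in the results table.

```python
BASE_LR = 0.0002      # learning_rate from the list above
WARMUP_STEPS = 300    # lr_scheduler_warmup_steps from the list above
TOTAL_STEPS = 13_200  # assumption: last logged step, not an exact total


def linear_warmup_decay_lr(
    step: int, base_lr: float, warmup_steps: int, total_steps: int
) -> float:
    """Learning rate after `step` optimizer updates for linear warmup plus linear decay."""
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 150, 300, 6_750, 13_200):
    print(step, linear_warmup_decay_lr(step, BASE_LR, WARMUP_STEPS, TOTAL_STEPS))
```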

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 0.1769 | 0.15 | 400 | 0.1795 | 0.1698 |

| 0.217 | 0.3 | 800 | 0.2000 | 0.1945 |

| 0.2372 | 0.45 | 1200 | 0.1985 | 0.1859 |

| 0.2351 | 0.6 | 1600 | 0.1901 | 0.1772 |

| 0.2269 | 0.75 | 2000 | 0.1968 | 0.1783 |

| 0.2284 | 0.9 | 2400 | 0.1873 | 0.1771 |

| 0.2014 | 1.06 | 2800 | 0.1840 | 0.1696 |

| 0.1988 | 1.21 | 3200 | 0.1904 | 0.1730 |

| 0.1919 | 1.36 | 3600 | 0.1827 | 0.1630 |

| 0.1919 | 1.51 | 4000 | 0.1788 | 0.1629 |

| 0.1817 | 1.66 | 4400 | 0.1755 | 0.1558 |

| 0.1812 | 1.81 | 4800 | 0.1795 | 0.1638 |

| 0.1808 | 1.96 | 5200 | 0.1762 | 0.1603 |

| 0.1625 | 2.11 | 5600 | 0.1721 | 0.1557 |

| 0.1477 | 2.26 | 6000 | 0.1735 | 0.1504 |

| 0.1508 | 2.41 | 6400 | 0.1708 | 0.1478 |

| 0.157 | 2.56 | 6800 | 0.1644 | 0.1466 |

| 0.1491 | 2.71 | 7200 | 0.1638 | 0.1445 |

| 0.1458 | 2.86 | 7600 | 0.1582 | 0.1426 |

| 0.1387 | 3.02 | 8000 | 0.1607 | 0.1376 |

| 0.1269 | 3.17 | 8400 | 0.1559 | 0.1364 |

| 0.1172 | 3.32 | 8800 | 0.1521 | 0.1335 |

| 0.1203 | 3.47 | 9200 | 0.1534 | 0.1330 |

| 0.1177 | 3.62 | 9600 | 0.1485 | 0.1304 |

| 0.1167 | 3.77 | 10000 | 0.1498 | 0.1302 |

| 0.1194 | 3.92 | 10400 | 0.1463 | 0.1287 |

| 0.1053 | 4.07 | 10800 | 0.1483 | 0.1282 |

| 0.098 | 4.22 | 11200 | 0.1498 | 0.1267 |

| 0.0958 | 4.37 | 11600 | 0.1461 | 0.1233 |

| 0.0946 | 4.52 | 12000 | 0.1444 | 0.1218 |

| 0.094 | 4.67 | 12400 | 0.1434 | 0.1206 |

| 0.0932 | 4.82 | 12800 | 0.1424 | 0.1206 |

| 0.0912 | 4.98 | 13200 | 0.1431 | 0.1197 |

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.2.dev0

- Tokenizers 0.11.0

|

372d17eabe2b466794ac0c17ed476ec8

|

jayanta/distilbert-base-uncased-sentiment-finetuned-memes

|

jayanta

|

distilbert

| 13 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,030 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-sentiment-finetuned-memes

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1824

- Accuracy: 0.8270

- Precision: 0.8270

- Recall: 0.8270

- F1: 0.8270

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 0.5224 | 1.0 | 4293 | 0.5321 | 0.7720 | 0.8084 | 0.7720 | 0.7721 |

| 0.4386 | 2.0 | 8586 | 0.4930 | 0.7961 | 0.7980 | 0.7961 | 0.7967 |

| 0.3722 | 3.0 | 12879 | 0.7652 | 0.7925 | 0.7955 | 0.7925 | 0.7932 |

| 0.3248 | 4.0 | 17172 | 0.9827 | 0.8045 | 0.8047 | 0.8045 | 0.8023 |

| 0.308 | 5.0 | 21465 | 0.9518 | 0.8244 | 0.8260 | 0.8244 | 0.8249 |

| 0.2906 | 6.0 | 25758 | 1.0971 | 0.8155 | 0.8166 | 0.8155 | 0.8159 |

| 0.2036 | 7.0 | 30051 | 1.1457 | 0.8260 | 0.8271 | 0.8260 | 0.8264 |

| 0.1747 | 8.0 | 34344 | 1.1824 | 0.8270 | 0.8270 | 0.8270 | 0.8270 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

72a0b3f02ce675776ed1e069bc586a6f

|

Krishadow/biobert-finetuned-ner-K

|

Krishadow

|

bert

| 8 | 9 |

transformers

| 0 |

token-classification

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,518 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Krishadow/biobert-finetuned-ner-K

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0099

- Validation Loss: 0.0676

- Epoch: 4

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 1695, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16
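The `PolynomialDecay` entry in the optimizer configuration describes how the learning rate shrinks over training. With `power: 1.0` and `cycle: False` it amounts to a straight line from `initial_learning_rate` to `end_learning_rate` over `decay_steps`. The plain-Python sketch below mirrors that rule with the values from the configuration; it is an illustration, not the Keras implementation itself.

```python
def polynomial_decay_lr(
    step: int,
    initial_lr: float = 2e-05,  # initial_learning_rate from the config
    decay_steps: int = 1695,    # decay_steps from the config
    end_lr: float = 0.0,        # end_learning_rate from the config
    power: float = 1.0,         # power from the config
) -> float:
    """Learning rate at `step` for a non-cycling polynomial decay schedule."""
    # Steps outside [0, decay_steps] are clipped, so the rate stays at end_lr afterwards.
    clipped_step = min(max(step, 0), decay_steps)
    fraction_left = 1.0 - clipped_step / decay_steps
    return (initial_lr - end_lr) * fraction_left**power + end_lr


for step in (0, 339, 1695, 5000):
    print(step, polynomial_decay_lr(step))
```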

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 0.1222 | 0.0604 | 0 |

| 0.0398 | 0.0531 | 1 |

| 0.0220 | 0.0616 | 2 |

| 0.0134 | 0.0653 | 3 |

| 0.0099 | 0.0676 | 4 |

### Framework versions

- Transformers 4.18.0

- TensorFlow 2.8.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

c0cac851ff8881f121e3fb6b9b8e3fff

|

AaronMarker/my-awesome-model

|

AaronMarker

|

distilbert

| 4 | 1 |

transformers

| 0 |

text-classification

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,304 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# my-awesome-model

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.8153

- Validation Loss: 0.4165

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 500, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 0.8153 | 0.4165 | 0 |

### Framework versions

- Transformers 4.21.2

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

|

6688ba9bc3b8996185995039182f37d4

|

TheLastBen/rick-roll-style

|

TheLastBen

| null | 38 | 51 |

diffusers

| 11 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

['text-to-image', 'stable-diffusion']

| false | true | true | 2,906 | false |

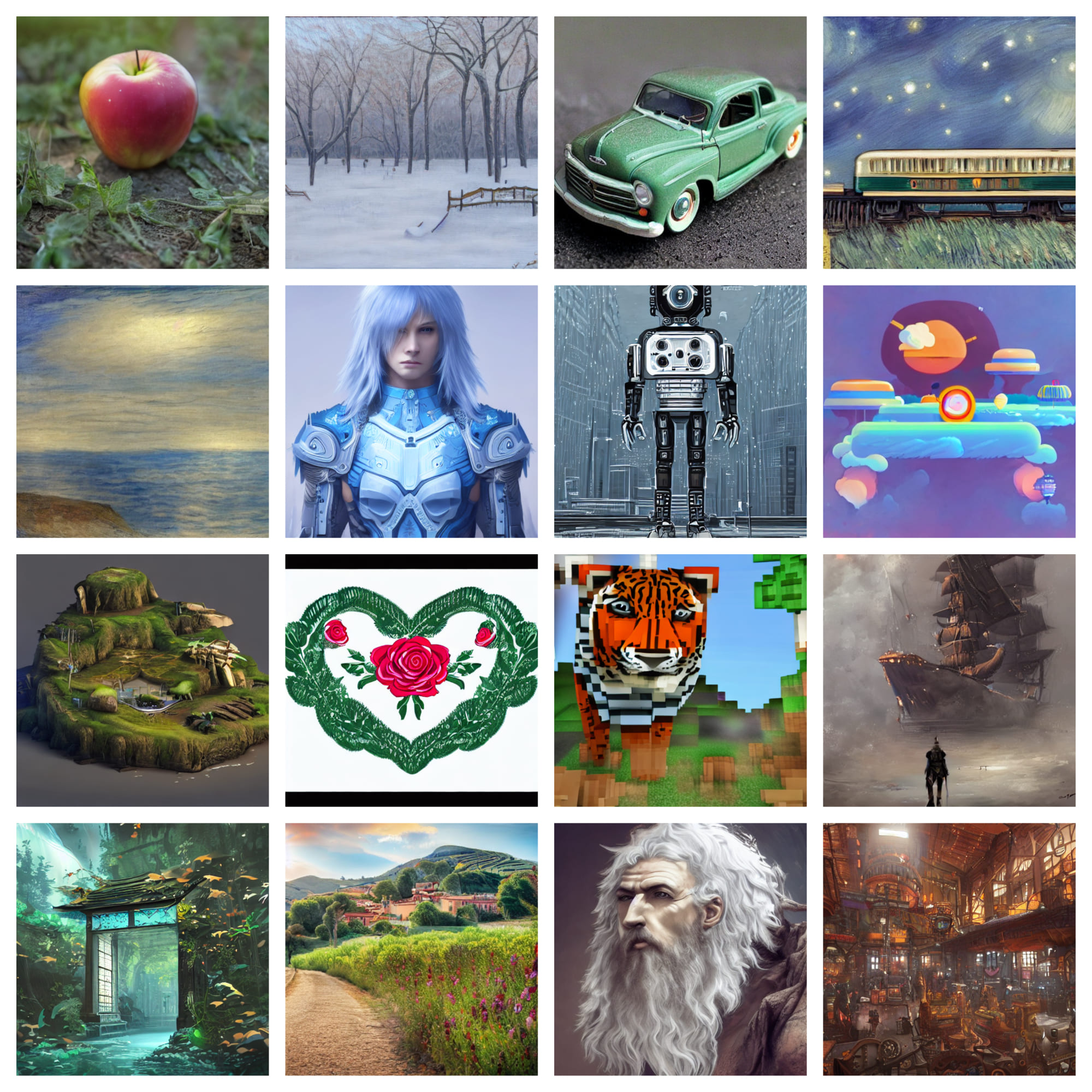

### Rick Roll Style V2.1

#### V2.1-768 Model by TheLastBen

This model was trained on 130 images, with 1200 UNet steps and 400 text_encoder steps.

#### Prompt example :

(anthropomorphic) chicken rckrll, closeup

Negative : painting, fake, drawing

768x768

Changing the step count by as little as 5 can make a big difference in the output, even with the same seed.

You can use the images below to load the full settings in A1111

A1111 Colab: [fast-stable-diffusion-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

#### Sample pictures of this concept:


|

ad7e41a77227327d7c08d4377a2f307d

|

ahmad573/wav2vec2-base-timit-demo-colab2

|

ahmad573

|

wav2vec2

| 12 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,438 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab2

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 3.1914

- Wer: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 700

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:---:|

| 3.8196 | 7.04 | 500 | 3.2201 | 1.0 |

| 3.1517 | 14.08 | 1000 | 3.1876 | 1.0 |

| 3.1493 | 21.13 | 1500 | 3.1837 | 1.0 |

| 3.1438 | 28.17 | 2000 | 3.1914 | 1.0 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

41d4fbaca3f624785d223b0126e007e1

|

sayakpaul/glpn-nyu-finetuned-diode-221221-102136

|

sayakpaul

|

glpn

| 7 | 1 |

transformers

| 0 |

depth-estimation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['vision', 'depth-estimation', 'generated_from_trainer']

| true | true | true | 2,756 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# glpn-nyu-finetuned-diode-221221-102136

This model is a fine-tuned version of [vinvino02/glpn-nyu](https://huggingface.co/vinvino02/glpn-nyu) on the diode-subset dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4222

- Mae: 0.4110

- Rmse: 0.6292

- Abs Rel: 0.3778

- Log Mae: 0.1636

- Log Rmse: 0.2240

- Delta1: 0.4320

- Delta2: 0.6806

- Delta3: 0.8068
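The card does not define these metrics. The sketch below uses the definitions that are common in monocular depth estimation (an assumption, not something confirmed by this card): absolute relative error divides each error by the ground-truth depth, and Delta-k is the fraction of pixels whose ratio `max(pred/gt, gt/pred)` is below `1.25**k`. The log-based metrics are left out, and the sample values are made up.

```python
import math
from typing import Dict, Sequence


def depth_metrics(pred: Sequence[float], gt: Sequence[float]) -> Dict[str, float]:
    """MAE, RMSE, absolute relative error and delta accuracies for positive depths."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and of equal length")
    n = len(gt)
    abs_errors = [abs(p - g) for p, g in zip(pred, gt)]
    ratios = [max(p / g, g / p) for p, g in zip(pred, gt)]
    return {
        "mae": sum(abs_errors) / n,
        "rmse": math.sqrt(sum(e * e for e in abs_errors) / n),
        "abs_rel": sum(e / g for e, g in zip(abs_errors, gt)) / n,
        # Assumed thresholds: 1.25, 1.25^2 and 1.25^3.
        "delta1": sum(r < 1.25 for r in ratios) / n,
        "delta2": sum(r < 1.25**2 for r in ratios) / n,
        "delta3": sum(r < 1.25**3 for r in ratios) / n,
    }


# Hypothetical predicted and ground-truth depths in metres.
example = depth_metrics(pred=[1.0, 2.0, 3.0], gt=[1.0, 2.4, 2.0])
for name, value in example.items():
    print(name, round(value, 4))
```

Under these definitions lower is better for the error metrics and higher is better for the delta accuracies.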

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 24

- eval_batch_size: 48

- seed: 2022

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.15

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Mae | Rmse | Abs Rel | Log Mae | Log Rmse | Delta1 | Delta2 | Delta3 |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:-------:|:-------:|:--------:|:------:|:------:|:------:|

| 0.4953 | 1.0 | 72 | 0.4281 | 0.4216 | 0.6448 | 0.3539 | 0.1696 | 0.2312 | 0.4427 | 0.6625 | 0.7765 |

| 0.3855 | 2.0 | 144 | 0.4749 | 0.4444 | 0.6498 | 0.4156 | 0.1846 | 0.2408 | 0.3612 | 0.6027 | 0.7728 |

| 0.4158 | 3.0 | 216 | 0.5042 | 0.5122 | 0.7196 | 0.4385 | 0.2264 | 0.2834 | 0.2797 | 0.4837 | 0.6699 |

| 0.388 | 4.0 | 288 | 0.4418 | 0.4304 | 0.6473 | 0.4030 | 0.1745 | 0.2378 | 0.4027 | 0.6497 | 0.7900 |

| 0.4595 | 5.0 | 360 | 0.4394 | 0.4154 | 0.6292 | 0.4012 | 0.1664 | 0.2285 | 0.4262 | 0.6613 | 0.8021 |

| 0.393 | 6.0 | 432 | 0.4252 | 0.4060 | 0.6153 | 0.3944 | 0.1617 | 0.2215 | 0.4318 | 0.6747 | 0.8128 |

| 0.3468 | 7.0 | 504 | 0.4413 | 0.4366 | 0.6479 | 0.3835 | 0.1818 | 0.2385 | 0.3778 | 0.6248 | 0.7770 |

| 0.316 | 8.0 | 576 | 0.4218 | 0.4048 | 0.6192 | 0.3844 | 0.1606 | 0.2215 | 0.4374 | 0.6896 | 0.8119 |

| 0.3123 | 9.0 | 648 | 0.4263 | 0.4168 | 0.6295 | 0.3765 | 0.1689 | 0.2267 | 0.4139 | 0.6612 | 0.7976 |

| 0.2973 | 10.0 | 720 | 0.4222 | 0.4110 | 0.6292 | 0.3778 | 0.1636 | 0.2240 | 0.4320 | 0.6806 | 0.8068 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

ab8834659ac9df8bece2a2aee441edeb

|

thaonguyen274/resnet-50-finetuned-eurosat

|

thaonguyen274

|

resnet

| 18 | 4 |

transformers

| 0 |

image-classification

| true | false | false |

apache-2.0

| null |

['imagefolder']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,871 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# resnet-50-finetuned-eurosat

This model is a fine-tuned version of [microsoft/resnet-50](https://huggingface.co/microsoft/resnet-50) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9095

- Accuracy: 0.8240

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10
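The `total_train_batch_size` above follows from the per-device batch size and the gradient accumulation steps. A minimal sketch of that arithmetic is shown below; the single-device default is an assumption, since the number of devices is not listed.

```python
def effective_batch_size(
    per_device_batch_size: int, accumulation_steps: int, num_devices: int = 1
) -> int:
    """Number of examples contributing to each optimizer update."""
    # num_devices defaults to 1, which is an assumption for this card.
    return per_device_batch_size * accumulation_steps * num_devices


# train_batch_size: 32 and gradient_accumulation_steps: 4, as listed above.
print(effective_batch_size(32, 4))  # 128
```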

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.78 | 0.96 | 17 | 1.7432 | 0.4321 |

| 1.7105 | 1.96 | 34 | 1.6596 | 0.6307 |

| 1.6045 | 2.96 | 51 | 1.5369 | 0.6758 |

| 1.6526 | 3.96 | 68 | 1.4111 | 0.7139 |

| 1.4018 | 4.96 | 85 | 1.2686 | 0.7602 |

| 1.2812 | 5.96 | 102 | 1.1433 | 0.7714 |

| 1.3282 | 6.96 | 119 | 1.0643 | 0.7910 |

| 1.1246 | 7.96 | 136 | 0.9794 | 0.8133 |

| 1.0731 | 8.96 | 153 | 0.9279 | 0.8087 |

| 1.0531 | 9.96 | 170 | 0.9095 | 0.8240 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

1f6467ab1b7be0be4c76c2bbec031142

|

sd-concepts-library/nathan-wyatt

|

sd-concepts-library

| null | 12 | 0 | null | 0 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,386 | false |

### Nathan-Wyatt on Stable Diffusion

This is the `<Nathan-Wyatt>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

1470469ed599bdfa0e0f96d05e48f8f3

|

baruga/hideous-blobfish

|

baruga

| null | 17 | 4 |

diffusers

| 1 |

text-to-image

| true | false | false |

creativeml-openrail-m

| null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['pytorch', 'diffusers', 'stable-diffusion', 'text-to-image', 'diffusion-models-class', 'dreambooth-hackathon', 'animal']

| false | true | true | 1,396 | false |

## Description

This is a Stable Diffusion model fine-tuned on the infamous blobfish (often described as the ugliest animal in the world) for the DreamBooth Hackathon 🔥 animal theme. To participate or learn more, visit [this page](https://huggingface.co/dreambooth-hackathon).

To generate blobfish images, use **a photo of blofi fish in [your choice]** or experiment with other variations. For some reason, a CFG scale of 5 seems to give the best results; at 7, images start to get "overcooked". Despite multiple training runs with various settings, I couldn't fully solve this problem. Additional modifiers and negative prompts may also improve results.

## Examples

*a photo of blofi fish wearing a beautiful flower crown.*

*a photo of blofi fish in nerdy glasses.*

*a photo of blofi fish at the Arctic in a fluffy hat.*

*top rated surrealist painting of blofi fish by Salvador Dalí, intricate details.*

*top rated colorful origami photo of blofi fish.*

## Usage

```python

from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained('baruga/hideous-blobfish')

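# The pipeline needs a prompt; this one is taken from the examples above.
image = pipeline('a photo of blofi fish in nerdy glasses').images[0]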

image

```

|

cde52202a5c05730729097b78fa5387b

|

espnet/simpleoier_chime6_asr_transformer_wavlm_lr1e-3

|

espnet

| null | 23 | 22 |

espnet

| 0 |

automatic-speech-recognition

| false | false | false |

cc-by-4.0

|

['en']

|

['chime6']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['espnet', 'audio', 'automatic-speech-recognition']

| false | true | true | 17,649 | false |

## ESPnet2 ASR model

### `espnet/simpleoier_chime6_asr_transformer_wavlm_lr1e-3`

This model was trained by simpleoier using the chime6 recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```bash

cd espnet

git checkout b757b89d45d5574cebf44e225cbe32e3e9e4f522

pip install -e .

cd egs2/chime6/asr1

./run.sh --skip_data_prep false --skip_train true --download_model espnet/simpleoier_chime6_asr_transformer_wavlm_lr1e-3

```

<!-- Generated by scripts/utils/show_asr_result.sh -->

# RESULTS

## Environments

- date: `Tue May 3 16:47:10 EDT 2022`

- python version: `3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0]`

- espnet version: `espnet 202204`

- pytorch version: `pytorch 1.10.1`

- Git hash: `b757b89d45d5574cebf44e225cbe32e3e9e4f522`

- Commit date: `Mon May 2 09:21:08 2022 -0400`

## asr_train_asr_transformer_wavlm_lr1e-3_specaug_accum1_preenc128_warmup20k_raw_en_bpe1000_sp

### WER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_asr_transformer_asr_model_1epoch/dev_gss_multiarray|7437|58881|66.5|21.3|12.2|8.8|42.3|77.4|

|decode_asr_transformer_asr_model_2epoch/dev_gss_multiarray|7437|58881|68.6|20.7|10.6|8.4|39.8|77.5|

|decode_asr_transformer_asr_model_3epoch/dev_gss_multiarray|7437|58881|67.5|20.3|12.2|8.0|40.5|76.5|

|decode_asr_transformer_asr_model_5epoch/dev_gss_multiarray|7437|58881|67.7|21.4|10.9|8.6|40.9|77.9|

|decode_asr_transformer_asr_model_7epoch/dev_gss_multiarray|7437|58881|66.6|20.9|12.5|8.2|41.6|77.8|

|decode_asr_transformer_asr_model_valid.acc.ave/dev_gss_multiarray|0|0|0.0|0.0|0.0|0.0|0.0|0.0|

|decode_asr_transformer_asr_model_valid.acc.ave_5best/dev_gss_multiarray|7437|58881|69.4|20.2|10.4|8.6|39.1|75.8|

|decode_asr_transformer_lw0.5_lm_lm_train_lm_en_bpe1000_valid.loss.ave_asr_model_valid.acc.ave_5best/dev_gss_multiarray|7437|58881|65.7|20.2|14.1|7.5|41.8|77.8|

|decode_asr_transformer_lw0.5_ngram_ngram_3gram_asr_model_valid.acc.ave/dev_gss_multiarray|7437|58881|65.7|19.0|15.3|6.2|40.6|78.8|
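
The columns of the WER table above appear to follow the usual sclite-style convention, in which `Err` is the sum of `Sub`, `Del`, and `Ins` (all percentages of the reference words). The sketch below checks that assumption against a few rows copied from the table and picks the lowest-error configuration; `error_rate` and `best_row` are hypothetical helper names, and small mismatches are expected because the table values are rounded.

```python
# (Sub, Del, Ins, reported Err) copied from the WER table above.
WER_ROWS = {
    "1epoch": (21.3, 12.2, 8.8, 42.3),
    "2epoch": (20.7, 10.6, 8.4, 39.8),
    "valid.acc.ave_5best": (20.2, 10.4, 8.6, 39.1),
    "lw0.5_lm_5best": (20.2, 14.1, 7.5, 41.8),
}


def error_rate(sub: float, dele: float, ins: float) -> float:
    """Assumed definition: Err = Sub + Del + Ins, rounded to one decimal."""
    return round(sub + dele + ins, 1)


def best_row(rows: dict) -> str:
    """Return the name of the row with the lowest reported Err."""
    return min(rows, key=lambda name: rows[name][3])


for name, (sub, dele, ins, reported) in WER_ROWS.items():
    # Differences of about 0.1 come from rounding in the published table.
    print(f"{name}: recomputed={error_rate(sub, dele, ins)} reported={reported}")

print("lowest reported WER:", best_row(WER_ROWS))
```

Among the rows copied here, the 5-best averaged checkpoint without a language model has the lowest reported error.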

### CER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_asr_transformer_asr_model_1epoch/dev_gss_multiarray|7437|280767|78.1|7.7|14.1|9.1|31.0|77.9|

|decode_asr_transformer_asr_model_2epoch/dev_gss_multiarray|7437|280767|80.0|7.6|12.5|8.7|28.8|78.1|

|decode_asr_transformer_asr_model_3epoch/dev_gss_multiarray|7437|280767|78.6|7.3|14.1|8.1|29.5|77.5|

|decode_asr_transformer_asr_model_5epoch/dev_gss_multiarray|7437|280767|79.5|7.7|12.8|9.1|29.6|78.8|

|decode_asr_transformer_asr_model_7epoch/dev_gss_multiarray|7437|280767|77.9|7.6|14.5|8.3|30.3|78.6|

|decode_asr_transformer_asr_model_valid.acc.ave/dev_gss_multiarray|0|0|0.0|0.0|0.0|0.0|0.0|0.0|

|decode_asr_transformer_asr_model_valid.acc.ave_5best/dev_gss_multiarray|7437|280767|80.6|7.4|12.0|8.9|28.3|76.6|

|decode_asr_transformer_lw0.5_lm_lm_train_lm_en_bpe1000_valid.loss.ave_asr_model_valid.acc.ave_5best/dev_gss_multiarray|7437|280767|76.5|7.4|16.1|7.7|31.2|78.5|

|decode_asr_transformer_lw0.5_ngram_ngram_3gram_asr_model_valid.acc.ave/dev_gss_multiarray|7437|280767|77.0|7.6|15.4|7.2|30.2|79.8|

### TER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_asr_transformer_asr_model_1epoch/dev_gss_multiarray|7437|92680|65.8|18.8|15.4|8.7|42.9|78.0|

|decode_asr_transformer_asr_model_2epoch/dev_gss_multiarray|7437|92680|67.9|18.1|13.9|8.2|40.3|78.2|

|decode_asr_transformer_asr_model_3epoch/dev_gss_multiarray|7437|92680|66.9|17.8|15.2|8.0|41.1|77.7|

|decode_asr_transformer_asr_model_5epoch/dev_gss_multiarray|7437|92680|67.2|18.5|14.3|8.2|40.9|78.9|

|decode_asr_transformer_asr_model_7epoch/dev_gss_multiarray|7437|92680|66.1|18.2|15.7|7.8|41.7|78.6|

|decode_asr_transformer_asr_model_valid.acc.ave/dev_gss_multiarray|0|0|0.0|0.0|0.0|0.0|0.0|0.0|

|decode_asr_transformer_asr_model_valid.acc.ave_5best/dev_gss_multiarray|7437|92680|68.9|17.7|13.4|8.2|39.3|76.6|

|decode_asr_transformer_lw0.5_lm_lm_train_lm_en_bpe1000_valid.loss.ave_asr_model_valid.acc.ave_5best/dev_gss_multiarray|7437|92680|66.1|19.1|14.8|10.2|44.1|78.6|

|decode_asr_transformer_lw0.5_ngram_ngram_3gram_asr_model_valid.acc.ave/dev_gss_multiarray|7437|92680|66.0|19.9|14.1|9.5|43.6|79.8|

## ASR config

<details><summary>expand</summary>

```

config: conf/tuning/train_asr_transformer_wavlm_lr1e-3_specaug_accum1_preenc128_warmup20k.yaml

print_config: false

log_level: INFO

dry_run: false

iterator_type: sequence

output_dir: exp/asr_train_asr_transformer_wavlm_lr1e-3_specaug_accum1_preenc128_warmup20k_raw_en_bpe1000_sp

ngpu: 0

seed: 0

num_workers: 1

num_att_plot: 3

dist_backend: nccl

dist_init_method: env://

dist_world_size: null

dist_rank: null

local_rank: null

dist_master_addr: null

dist_master_port: null

dist_launcher: null

multiprocessing_distributed: false

unused_parameters: true

sharded_ddp: false

cudnn_enabled: true

cudnn_benchmark: false

cudnn_deterministic: true

collect_stats: false

write_collected_feats: false

max_epoch: 8

patience: null

val_scheduler_criterion:

- valid

- loss

early_stopping_criterion:

- valid

- loss

- min

best_model_criterion:

- - valid

- acc

- max

keep_nbest_models: 5

nbest_averaging_interval: 0

grad_clip: 5

grad_clip_type: 2.0

grad_noise: false

accum_grad: 1

no_forward_run: false

resume: true

train_dtype: float32

use_amp: false

log_interval: null

use_matplotlib: true

use_tensorboard: true

use_wandb: false

wandb_project: null

wandb_id: null

wandb_entity: null

wandb_name: null

wandb_model_log_interval: -1

detect_anomaly: false

pretrain_path: null

init_param: []

ignore_init_mismatch: false

freeze_param:

- frontend.upstream

num_iters_per_epoch: null

batch_size: 48

valid_batch_size: null

batch_bins: 1000000

valid_batch_bins: null

train_shape_file:

- exp/asr_stats_raw_en_bpe1000_sp/train/speech_shape

- exp/asr_stats_raw_en_bpe1000_sp/train/text_shape.bpe

valid_shape_file:

- exp/asr_stats_raw_en_bpe1000_sp/valid/speech_shape

- exp/asr_stats_raw_en_bpe1000_sp/valid/text_shape.bpe

batch_type: folded

valid_batch_type: null

fold_length:

- 80000

- 150

sort_in_batch: descending

sort_batch: descending

multiple_iterator: false

chunk_length: 500

chunk_shift_ratio: 0.5

num_cache_chunks: 1024

train_data_path_and_name_and_type:

- - dump/raw/train_worn_simu_u400k_cleaned_sp/wav.scp

- speech

- kaldi_ark

- - dump/raw/train_worn_simu_u400k_cleaned_sp/text

- text

- text

valid_data_path_and_name_and_type:

- - dump/raw/dev_gss_multiarray/wav.scp

- speech

- kaldi_ark

- - dump/raw/dev_gss_multiarray/text

- text

- text

allow_variable_data_keys: false

max_cache_size: 0.0

max_cache_fd: 32

valid_max_cache_size: null

optim: adam

optim_conf:

lr: 0.001

scheduler: warmuplr

scheduler_conf:

warmup_steps: 20000

token_list:

- <blank>

- <unk>

- '[inaudible]'

- '[laughs]'

- '[noise]'

- ▁

- s

- ''''

- ▁i

- ▁it

- t

- ▁you

- ▁the

- ▁yeah

- ▁a

- ▁like

- ▁that

- ▁and

- ▁to

- m

- ▁oh

- ▁so

- '-'