repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

deepset/gelectra-base-germanquad

|

deepset

|

electra

| 9 | 10,283 |

transformers

| 13 |

question-answering

| true | true | false |

mit

|

['de']

|

['deepset/germanquad']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['exbert']

| false | true | true | 3,697 | false |

## Overview

**Language model:** gelectra-base-germanquad

**Language:** German

**Training data:** GermanQuAD train set (~ 12MB)

**Eval data:** GermanQuAD test set (~ 5MB)

**Infrastructure**: 1x V100 GPU

**Published**: Apr 21st, 2021

## Details

- We trained a German question answering model with a gelectra-base model as its basis.

- The dataset is GermanQuAD, a new German-language dataset that we hand-annotated and published [online](https://deepset.ai/germanquad).

- The training dataset is one-way annotated and contains 11518 questions and 11518 answers, while the test dataset is three-way annotated and contains 2204 questions with 2204·3−76 = 6536 answers, because we removed 76 wrong answers.

See https://deepset.ai/germanquad for more details and dataset download in SQuAD format.
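
The test-set answer count stated above follows from simple arithmetic. A minimal sketch that reproduces it (the variable names are illustrative and not part of any dataset tooling):

```python
# Answer count of the three-way annotated GermanQuAD test set,
# using only the numbers stated in the model card.
n_questions = 2204          # questions in the test set
annotations_per_question = 3  # three-way annotation
removed_answers = 76        # answers removed as wrong

n_answers = n_questions * annotations_per_question - removed_answers
print(n_answers)  # 6536
```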

## Hyperparameters

```

batch_size = 24

n_epochs = 2

max_seq_len = 384

learning_rate = 3e-5

lr_schedule = LinearWarmup

embeds_dropout_prob = 0.1

```

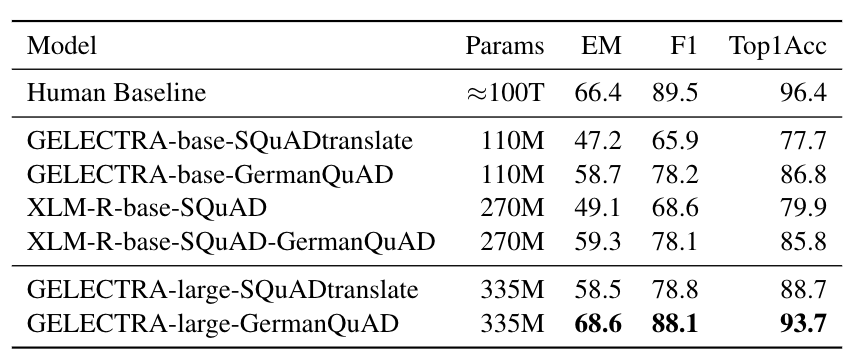

## Performance

We evaluated the extractive question answering performance on our GermanQuAD test set.

Model types and training data are included in the model name.

For finetuning XLM-Roberta, we use the English SQuAD v2.0 dataset.

The GELECTRA models are warm started on the German translation of SQuAD v1.1 and finetuned on [GermanQuAD](https://deepset.ai/germanquad).

The human baseline was computed for the 3-way test set by taking one answer as prediction and the other two as ground truth.
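
The leave-one-out idea behind the human baseline can be sketched as follows. This is only an illustration under stated assumptions: it uses SQuAD-style exact match and token-level F1 with plain whitespace tokenization and lowercasing, and the function names `token_f1` and `human_baseline` are hypothetical. The card does not specify the exact normalization that was used.

```python
from collections import Counter


def token_f1(prediction: str, truth: str) -> float:
    """Token-level F1 between two answer strings (whitespace tokens, lowercased)."""
    pred_tokens = prediction.lower().split()
    truth_tokens = truth.lower().split()
    overlap = sum((Counter(pred_tokens) & Counter(truth_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(truth_tokens)
    return 2 * precision * recall / (precision + recall)


def human_baseline(answers):
    """Treat answers[0] as the prediction and the remaining answers as ground truth.

    Returns (exact_match, f1), each taken as the maximum over the ground truths.
    """
    prediction, truths = answers[0], answers[1:]
    em = max(float(prediction.lower() == t.lower()) for t in truths)
    f1 = max(token_f1(prediction, t) for t in truths)
    return em, f1


# Made-up three-way annotation for a single question.
em, f1 = human_baseline(["in Berlin", "Berlin", "in Berlin"])
print(em, f1)
```

Averaging these per-question scores over the test set would give a baseline figure comparable to the model scores.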

## Authors

**Timo Möller:** timo.moeller@deepset.ai

**Julian Risch:** julian.risch@deepset.ai

**Malte Pietsch:** malte.pietsch@deepset.ai

## About us

<div class="grid lg:grid-cols-2 gap-x-4 gap-y-3">

<div class="w-full h-40 object-cover mb-2 rounded-lg flex items-center justify-center">

<img alt="" src="https://raw.githubusercontent.com/deepset-ai/.github/main/deepset-logo-colored.png" class="w-40"/>

</div>

<div class="w-full h-40 object-cover mb-2 rounded-lg flex items-center justify-center">

<img alt="" src="https://raw.githubusercontent.com/deepset-ai/.github/main/haystack-logo-colored.png" class="w-40"/>

</div>

</div>

[deepset](http://deepset.ai/) is the company behind the open-source NLP framework [Haystack](https://haystack.deepset.ai/), which is designed to help you build production-ready NLP systems for question answering, summarization, ranking, etc.

Some of our other work:

- [Distilled roberta-base-squad2 (aka "tinyroberta-squad2")](https://huggingface.co/deepset/tinyroberta-squad2)

- [German BERT (aka "bert-base-german-cased")](https://deepset.ai/german-bert)

- [GermanQuAD and GermanDPR datasets and models (aka "gelectra-base-germanquad", "gbert-base-germandpr")](https://deepset.ai/germanquad)

## Get in touch and join the Haystack community

<p>For more info on Haystack, visit our <strong><a href="https://github.com/deepset-ai/haystack">GitHub</a></strong> repo and <strong><a href="https://docs.haystack.deepset.ai">Documentation</a></strong>.

We also have a <strong><a class="h-7" href="https://haystack.deepset.ai/community">Discord community open to everyone!</a></strong></p>

[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) | [Discord](https://haystack.deepset.ai/community) | [GitHub Discussions](https://github.com/deepset-ai/haystack/discussions) | [Website](https://deepset.ai)

By the way: [we're hiring!](http://www.deepset.ai/jobs)

|

04d0a31bec6c69bcbab602d6cb4d59e6

|

MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-3

|

MartinoMensio

|

bert

| 4 | 4 |

transformers

| 0 |

text-classification

| true | false | false |

mit

|

['es']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 4,106 | false |

### Description

This model is a fine-tuned version of [BETO (Spanish BERT)](https://huggingface.co/dccuchile/bert-base-spanish-wwm-uncased) that has been trained on the *Datathon Against Racism* dataset (2022).

We performed several experiments that will be described in the upcoming paper "Estimating Ground Truth in a Low-labelled Data Regime: A Study of Racism Detection in Spanish" (NEATClasS 2022).

We applied 6 different ground-truth estimation methods, and for each one we performed 4 epochs of fine-tuning. The result is a set of 24 models:

| method | epoch 1 | epoch 2 | epoch 3 | epoch 4 |

|--- |--- |--- |--- |--- |

| raw-label | [raw-label-epoch-1](https://huggingface.co/MartinoMensio/racism-models-raw-label-epoch-1) | [raw-label-epoch-2](https://huggingface.co/MartinoMensio/racism-models-raw-label-epoch-2) | [raw-label-epoch-3](https://huggingface.co/MartinoMensio/racism-models-raw-label-epoch-3) | [raw-label-epoch-4](https://huggingface.co/MartinoMensio/racism-models-raw-label-epoch-4) |

| m-vote-strict | [m-vote-strict-epoch-1](https://huggingface.co/MartinoMensio/racism-models-m-vote-strict-epoch-1) | [m-vote-strict-epoch-2](https://huggingface.co/MartinoMensio/racism-models-m-vote-strict-epoch-2) | [m-vote-strict-epoch-3](https://huggingface.co/MartinoMensio/racism-models-m-vote-strict-epoch-3) | [m-vote-strict-epoch-4](https://huggingface.co/MartinoMensio/racism-models-m-vote-strict-epoch-4) |

| m-vote-nonstrict | [m-vote-nonstrict-epoch-1](https://huggingface.co/MartinoMensio/racism-models-m-vote-nonstrict-epoch-1) | [m-vote-nonstrict-epoch-2](https://huggingface.co/MartinoMensio/racism-models-m-vote-nonstrict-epoch-2) | [m-vote-nonstrict-epoch-3](https://huggingface.co/MartinoMensio/racism-models-m-vote-nonstrict-epoch-3) | [m-vote-nonstrict-epoch-4](https://huggingface.co/MartinoMensio/racism-models-m-vote-nonstrict-epoch-4) |

| regression-w-m-vote | [regression-w-m-vote-epoch-1](https://huggingface.co/MartinoMensio/racism-models-regression-w-m-vote-epoch-1) | [regression-w-m-vote-epoch-2](https://huggingface.co/MartinoMensio/racism-models-regression-w-m-vote-epoch-2) | [regression-w-m-vote-epoch-3](https://huggingface.co/MartinoMensio/racism-models-regression-w-m-vote-epoch-3) | [regression-w-m-vote-epoch-4](https://huggingface.co/MartinoMensio/racism-models-regression-w-m-vote-epoch-4) |

| w-m-vote-strict | [w-m-vote-strict-epoch-1](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-strict-epoch-1) | [w-m-vote-strict-epoch-2](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-strict-epoch-2) | [w-m-vote-strict-epoch-3](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-strict-epoch-3) | [w-m-vote-strict-epoch-4](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-strict-epoch-4) |

| w-m-vote-nonstrict | [w-m-vote-nonstrict-epoch-1](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-1) | [w-m-vote-nonstrict-epoch-2](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-2) | [w-m-vote-nonstrict-epoch-3](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-3) | [w-m-vote-nonstrict-epoch-4](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-4) |

This model is `w-m-vote-nonstrict-epoch-3`
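
The 24 repository names in the table follow a regular pattern, so they can be enumerated programmatically. A small sketch, assuming only the naming scheme visible in the links above:

```python
# Six ground-truth estimation methods, as listed in the table.
methods = [
    "raw-label",
    "m-vote-strict",
    "m-vote-nonstrict",
    "regression-w-m-vote",
    "w-m-vote-strict",
    "w-m-vote-nonstrict",
]
epochs = range(1, 5)  # four fine-tuning epochs per method

# Repository ids follow the pattern used in the table links.
repo_ids = [
    f"MartinoMensio/racism-models-{method}-epoch-{epoch}"
    for method in methods
    for epoch in epochs
]
print(len(repo_ids))  # 24
```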

### Usage

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification, pipeline

model_name = 'w-m-vote-nonstrict-epoch-3'

tokenizer = AutoTokenizer.from_pretrained("dccuchile/bert-base-spanish-wwm-uncased")

full_model_path = f'MartinoMensio/racism-models-{model_name}'

model = AutoModelForSequenceClassification.from_pretrained(full_model_path)

pipe = pipeline("text-classification", model = model, tokenizer = tokenizer)

texts = [

'y porqué es lo que hay que hacer con los menas y con los adultos también!!!! NO a los inmigrantes ilegales!!!!',

'Es que los judíos controlan el mundo'

]

print(pipe(texts))

# [{'label': 'racist', 'score': 0.9937393665313721}, {'label': 'non-racist', 'score': 0.9902436137199402}]

```

For more details, see https://github.com/preyero/neatclass22

|

bc20e0c52d81f9b81064e3b900880c2b

|

mollypak/distilbert-base-uncased-finetuned-cola

|

mollypak

|

distilbert

| 10 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['glue']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,565 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7629

- Matthews Correlation: 0.5556
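
For reference, the Matthews correlation coefficient for a binary task such as CoLA can be computed from the confusion-matrix counts. The sketch below uses made-up counts, not this model's actual predictions, and the function name `matthews_corrcoef_binary` is illustrative:

```python
import math


def matthews_corrcoef_binary(tp: int, tn: int, fp: int, fn: int) -> float:
    """MCC = (TP*TN - FP*FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN))."""
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        # Convention: return 0 when any marginal count is zero.
        return 0.0
    return (tp * tn - fp * fn) / denominator


# Hypothetical confusion-matrix counts for illustration only.
mcc = matthews_corrcoef_binary(tp=6, tn=3, fp=1, fn=2)
print(round(mcc, 4))
```

The score ranges from -1 (total disagreement) through 0 (chance level) to 1 (perfect prediction).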

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.538 | 1.0 | 535 | 0.5812 | 0.3250 |

| 0.3669 | 2.0 | 1070 | 0.5216 | 0.4993 |

| 0.2461 | 3.0 | 1605 | 0.6071 | 0.5016 |

| 0.1811 | 4.0 | 2140 | 0.7629 | 0.5556 |

| 0.1347 | 5.0 | 2675 | 0.8480 | 0.5547 |
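
The step counts in the table can be related to the batch size. The sketch below assumes the standard GLUE CoLA training split of 8,551 sentences, a single device, and no gradient accumulation; none of these are stated in the card.

```python
import math

train_examples = 8551   # assumption: size of the standard GLUE CoLA training split
train_batch_size = 16   # from the hyperparameters above
num_epochs = 5          # from the hyperparameters above

# The last, partially filled batch of each epoch still counts as one step.
steps_per_epoch = math.ceil(train_examples / train_batch_size)
total_steps = steps_per_epoch * num_epochs
print(steps_per_epoch, total_steps)  # 535 2675
```

Under these assumptions the result agrees with the Step column, which advances by 535 per epoch and ends at 2675.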

### Framework versions

- Transformers 4.12.3

- Pytorch 1.9.1

- Datasets 1.15.1

- Tokenizers 0.10.3

|

1f745898edd34c0a7240c1db10e16568

|

cohogain/whisper-medium-ga-IE-cv11-fleurs-livaud

|

cohogain

|

whisper

| 23 | 17 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null |

['common_voice_11_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['whisper-event', 'generated_from_trainer']

| true | true | true | 1,666 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# openai/whisper-medium

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the common_voice_11_0 dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1422

- Wer: 35.2207
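
The WER figure is a percentage. As a rough illustration of the metric, the sketch below computes the word-level edit distance between a reference and a hypothesis and divides by the reference length. It assumes whitespace tokenization and no text normalization, the sentences are made up, and the actual evaluation script may differ.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER in percent: (substitutions + deletions + insertions) / reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Levenshtein distance over words, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return 100.0 * prev[-1] / len(ref)


wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(round(wer, 2))
```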

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 7000

- mixed_precision_training: Native AMP
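
The learning-rate values implied by these settings can be sketched in plain Python. This assumes the usual behaviour of a linear scheduler with warmup (linear ramp from 0 to the peak rate, then linear decay to 0); the function name `linear_lr` is illustrative.

```python
def linear_lr(step: int,
              base_lr: float = 1e-05,
              warmup_steps: int = 500,
              total_steps: int = 7000) -> float:
    """Learning rate at a given optimizer step for linear warmup plus linear decay."""
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 up to base_lr.
        return base_lr * step / warmup_steps
    # Decay: fall linearly from base_lr at the end of warmup to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 3750, 7000):
    print(step, linear_lr(step))
```

With these defaults the rate peaks at step 500 and has fallen to half the peak by step 3750.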

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.1137 | 4.02 | 1000 | 0.9072 | 40.0987 |

| 0.0153 | 9.02 | 2000 | 1.0351 | 38.7631 |

| 0.0042 | 14.01 | 3000 | 1.0507 | 36.4402 |

| 0.0013 | 19.0 | 4000 | 1.0924 | 36.2660 |

| 0.0003 | 23.02 | 5000 | 1.1422 | 35.2207 |

| 0.0001 | 28.02 | 6000 | 1.1688 | 35.3368 |

| 0.0001 | 33.01 | 7000 | 1.1768 | 35.5110 |
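
The headline numbers (Loss 1.1422, Wer 35.2207) match the row at step 5000, which has the lowest WER, rather than the final step. The card does not say how the checkpoint was chosen; picking the row with the lowest validation WER is an assumption that happens to be consistent with the table:

```python
# (step, validation_loss, wer) rows copied from the training results table.
results = [
    (1000, 0.9072, 40.0987),
    (2000, 1.0351, 38.7631),
    (3000, 1.0507, 36.4402),
    (4000, 1.0924, 36.2660),
    (5000, 1.1422, 35.2207),
    (6000, 1.1688, 35.3368),
    (7000, 1.1768, 35.5110),
]

# Select the evaluation point with the lowest word error rate.
best_step, best_loss, best_wer = min(results, key=lambda row: row[2])
print(best_step, best_loss, best_wer)  # 5000 1.1422 35.2207
```

Note that validation loss keeps rising while WER improves, so selecting by loss would pick a different row (step 1000).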

### Framework versions

- Transformers 4.27.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.9.1.dev0

- Tokenizers 0.13.2

|

5cf800a15d2dbeb3731c6fee2066a61b

|

ali2066/finetuned_sentence_itr0_2e-05_all_27_02_2022-22_25_09

|

ali2066

|

distilbert

| 13 | 6 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,615 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned_sentence_itr0_2e-05_all_27_02_2022-22_25_09

This model is a fine-tuned version of [distilbert-base-uncased-finetuned-sst-2-english](https://huggingface.co/distilbert-base-uncased-finetuned-sst-2-english) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4638

- Accuracy: 0.8247

- F1: 0.8867

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| No log | 1.0 | 195 | 0.4069 | 0.7976 | 0.875 |

| No log | 2.0 | 390 | 0.4061 | 0.8134 | 0.8838 |

| 0.4074 | 3.0 | 585 | 0.4075 | 0.8134 | 0.8798 |

| 0.4074 | 4.0 | 780 | 0.4746 | 0.8256 | 0.8885 |

| 0.4074 | 5.0 | 975 | 0.4881 | 0.8220 | 0.8845 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.1+cu113

- Datasets 1.18.0

- Tokenizers 0.10.3

|

9d086d956391db3fa5b7087fd62911cf

|

henryscheible/rte_bert-base-uncased_144_v2

|

henryscheible

| null | 13 | 0 | null | 0 | null | true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,018 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# rte_bert-base-uncased_144_v2

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the GLUE RTE dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7639

- Accuracy: 0.6498

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1

- Datasets 2.6.1

- Tokenizers 0.13.1

|

79ef22ef1305aee280d340bf8cd50c64

|

Xinrui/t5-small-finetuned-eli5

|

Xinrui

|

t5

| 15 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null |

['eli5']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,410 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-eli5

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the eli5 dataset.

It achieves the following results on the evaluation set:

- Loss: 3.7555

- Rouge1: 11.8922

- Rouge2: 1.88

- Rougel: 9.6595

- Rougelsum: 10.8308

- Gen Len: 18.9911
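
The ROUGE scores above are reported on a 0 to 100 scale. As a rough illustration of what ROUGE-1 and ROUGE-2 measure, the sketch below computes an n-gram overlap F-measure. It assumes lowercased whitespace tokens and no stemming, so it will not reproduce the exact values of the evaluation library; the example sentences are made up.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(reference: str, candidate: str, n: int = 1) -> float:
    """ROUGE-N F-measure on a 0-100 scale (simplified: no stemming)."""
    ref = ngrams(reference.lower().split(), n)
    cand = ngrams(candidate.lower().split(), n)
    overlap = sum((ref & cand).values())  # clipped n-gram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 100.0 * 2 * precision * recall / (precision + recall)


reference = "the sky is blue because of scattering"
candidate = "the sky is blue"
r1 = rouge_n(reference, candidate, n=1)
r2 = rouge_n(reference, candidate, n=2)
print(round(r1, 2), round(r2, 2))
```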

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:------:|:------:|:---------:|:-------:|

| 3.9546 | 1.0 | 34080 | 3.7555 | 11.8922 | 1.88 | 9.6595 | 10.8308 | 18.9911 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

caa0971fe85b28501dcb787fc97fb6eb

|

SkyR/roberta-base-ours-run-4

|

SkyR

|

roberta

| 11 | 6 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 3,045 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# run-4

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.6296

- Accuracy: 0.685

- Precision: 0.6248

- Recall: 0.6164

- F1: 0.6188
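
The card does not state how precision, recall and F1 were averaged across classes. The reported F1 (0.6188) differs from the harmonic mean of the reported precision and recall (about 0.6206), which suggests, but does not prove, per-class averaging. The sketch below shows macro averaging on made-up labels, purely as an assumption about how such numbers are typically produced:

```python
def macro_scores(y_true, y_pred):
    """Return (accuracy, macro precision, macro recall, macro F1)."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    n = len(labels)
    return accuracy, sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Hypothetical three-class labels for illustration only.
accuracy, precision, recall, f1 = macro_scores(
    y_true=[0, 0, 1, 1, 2, 2],
    y_pred=[0, 1, 1, 1, 2, 0],
)
print(round(accuracy, 4), round(precision, 4), round(recall, 4), round(f1, 4))
```

Because macro F1 is the mean of per-class F1 scores, it generally differs from the harmonic mean of macro precision and macro recall.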

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 1.0195 | 1.0 | 50 | 0.8393 | 0.615 | 0.4126 | 0.5619 | 0.4606 |

| 0.7594 | 2.0 | 100 | 0.7077 | 0.7 | 0.6896 | 0.6663 | 0.6178 |

| 0.5515 | 3.0 | 150 | 0.9342 | 0.68 | 0.6334 | 0.5989 | 0.6016 |

| 0.3739 | 4.0 | 200 | 0.7755 | 0.735 | 0.7032 | 0.7164 | 0.7063 |

| 0.2648 | 5.0 | 250 | 0.9200 | 0.7 | 0.6584 | 0.6677 | 0.6611 |

| 0.1726 | 6.0 | 300 | 1.1898 | 0.71 | 0.6653 | 0.6550 | 0.6570 |

| 0.1452 | 7.0 | 350 | 1.5086 | 0.73 | 0.6884 | 0.6768 | 0.6812 |

| 0.0856 | 8.0 | 400 | 2.6159 | 0.68 | 0.6754 | 0.5863 | 0.5951 |

| 0.1329 | 9.0 | 450 | 1.9491 | 0.71 | 0.6692 | 0.6442 | 0.6463 |

| 0.0322 | 10.0 | 500 | 1.7897 | 0.74 | 0.6977 | 0.6939 | 0.6946 |

| 0.0345 | 11.0 | 550 | 1.9100 | 0.725 | 0.6827 | 0.6853 | 0.6781 |

| 0.026 | 12.0 | 600 | 2.5041 | 0.68 | 0.6246 | 0.6115 | 0.6137 |

| 0.0084 | 13.0 | 650 | 2.5343 | 0.715 | 0.6708 | 0.6617 | 0.6637 |

| 0.0145 | 14.0 | 700 | 2.4112 | 0.715 | 0.6643 | 0.6595 | 0.6614 |

| 0.0119 | 15.0 | 750 | 2.5303 | 0.705 | 0.6479 | 0.6359 | 0.6390 |

| 0.0026 | 16.0 | 800 | 2.6299 | 0.705 | 0.6552 | 0.6447 | 0.6455 |

| 0.0077 | 17.0 | 850 | 2.4044 | 0.715 | 0.6667 | 0.6576 | 0.6596 |

| 0.0055 | 18.0 | 900 | 2.8077 | 0.68 | 0.6208 | 0.6065 | 0.6098 |

| 0.0078 | 19.0 | 950 | 2.5608 | 0.68 | 0.6200 | 0.6104 | 0.6129 |

| 0.0018 | 20.0 | 1000 | 2.6296 | 0.685 | 0.6248 | 0.6164 | 0.6188 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Tokenizers 0.13.2

|

c6c2452e74e841a72fdaead3025f6608

|

espnet/pt_commonvoice_blstm

|

espnet

| null | 22 | 0 |

espnet

| 1 |

automatic-speech-recognition

| false | false | false |

cc-by-4.0

|

['pt']

|

['commonvoice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['espnet', 'audio', 'automatic-speech-recognition']

| false | true | true | 6,942 | false |

## ESPnet2 ASR model

### `espnet/pt_commonvoice_blstm`

This model was trained by dzeinali using the commonvoice recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```bash

cd espnet

git checkout 716eb8f92e19708acfd08ba3bd39d40890d3a84b

pip install -e .

cd egs2/commonvoice/asr1

./run.sh --skip_data_prep false --skip_train true --download_model espnet/pt_commonvoice_blstm

```

<!-- Generated by scripts/utils/show_asr_result.sh -->

# RESULTS

## Environments

- date: `Mon Apr 11 18:55:23 EDT 2022`

- python version: `3.9.5 (default, Jun 4 2021, 12:28:51) [GCC 7.5.0]`

- espnet version: `espnet 0.10.6a1`

- pytorch version: `pytorch 1.8.1+cu102`

- Git hash: `5e6e95d087af8a7a4c33c4248b75114237eae64b`

- Commit date: `Mon Apr 4 21:04:45 2022 -0400`

## asr_train_asr_rnn_raw_pt_bpe150_sp

### WER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_rnn_asr_model_valid.acc.best/test_pt|4334|33716|84.7|12.4|2.9|1.3|16.6|46.8|

### CER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_rnn_asr_model_valid.acc.best/test_pt|4334|191499|93.4|3.0|3.6|1.2|7.8|46.9|

### TER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|

|---|---|---|---|---|---|---|---|---|

|decode_rnn_asr_model_valid.acc.best/test_pt|4334|116003|90.4|5.7|3.9|1.5|11.1|46.9|
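
The columns in these tables follow the usual sclite-style convention: Err is the sum of substitutions, deletions and insertions, and Corr, Sub and Del add up to 100%. A quick consistency check on the three rows above (values copied from the tables; the tolerance allows for rounding to one decimal place):

```python
# (Corr, Sub, Del, Ins, Err) percentages copied from the WER, CER and TER tables.
rows = {
    "WER": (84.7, 12.4, 2.9, 1.3, 16.6),
    "CER": (93.4, 3.0, 3.6, 1.2, 7.8),
    "TER": (90.4, 5.7, 3.9, 1.5, 11.1),
}


def is_consistent(corr, sub, dele, ins, err, tol=0.15):
    """Check Err == Sub + Del + Ins and Corr + Sub + Del == 100, up to rounding."""
    error_ok = abs(sub + dele + ins - err) <= tol
    total_ok = abs(corr + sub + dele - 100.0) <= tol
    return error_ok and total_ok


checks = {name: is_consistent(*values) for name, values in rows.items()}
print(checks)
```

Insertions are not part of the 100% total because they do not correspond to any reference token, which is also why Err can exceed 100 − Corr.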

## ASR config

<details><summary>expand</summary>

```

config: conf/tuning/train_asr_rnn.yaml

print_config: false

log_level: INFO

dry_run: false

iterator_type: sequence

output_dir: exp/asr_train_asr_rnn_raw_pt_bpe150_sp

ngpu: 1

seed: 0

num_workers: 1

num_att_plot: 3

dist_backend: nccl

dist_init_method: env://

dist_world_size: null

dist_rank: null

local_rank: 0

dist_master_addr: null

dist_master_port: null

dist_launcher: null

multiprocessing_distributed: false

unused_parameters: false

sharded_ddp: false

cudnn_enabled: true

cudnn_benchmark: false

cudnn_deterministic: true

collect_stats: false

write_collected_feats: false

max_epoch: 15

patience: 3

val_scheduler_criterion:

- valid

- loss

early_stopping_criterion:

- valid

- loss

- min

best_model_criterion:

- - train

- loss

- min

- - valid

- loss

- min

- - train

- acc

- max

- - valid

- acc

- max

keep_nbest_models:

- 10

nbest_averaging_interval: 0

grad_clip: 5.0

grad_clip_type: 2.0

grad_noise: false

accum_grad: 1

no_forward_run: false

resume: true

train_dtype: float32

use_amp: false

log_interval: null

use_matplotlib: true

use_tensorboard: true

use_wandb: false

wandb_project: null

wandb_id: null

wandb_entity: null

wandb_name: null

wandb_model_log_interval: -1

detect_anomaly: false

pretrain_path: null

init_param: []

ignore_init_mismatch: false

freeze_param: []

num_iters_per_epoch: null

batch_size: 30

valid_batch_size: null

batch_bins: 1000000

valid_batch_bins: null

train_shape_file:

- exp/asr_stats_raw_pt_bpe150_sp/train/speech_shape

- exp/asr_stats_raw_pt_bpe150_sp/train/text_shape.bpe

valid_shape_file:

- exp/asr_stats_raw_pt_bpe150_sp/valid/speech_shape

- exp/asr_stats_raw_pt_bpe150_sp/valid/text_shape.bpe

batch_type: folded

valid_batch_type: null

fold_length:

- 80000

- 150

sort_in_batch: descending

sort_batch: descending

multiple_iterator: false

chunk_length: 500

chunk_shift_ratio: 0.5

num_cache_chunks: 1024

train_data_path_and_name_and_type:

- - dump/raw/train_pt_sp/wav.scp

- speech

- sound

- - dump/raw/train_pt_sp/text

- text

- text

valid_data_path_and_name_and_type:

- - dump/raw/dev_pt/wav.scp

- speech

- sound

- - dump/raw/dev_pt/text

- text

- text

allow_variable_data_keys: false

max_cache_size: 0.0

max_cache_fd: 32

valid_max_cache_size: null

optim: adadelta

optim_conf:

lr: 0.1

scheduler: null

scheduler_conf: {}

token_list:

- <blank>

- <unk>

- ▁

- S

- R

- I

- U

- E

- O

- A

- .

- N

- M

- L

- ▁A

- ▁DE

- RA

- ▁O

- T

- ▁E

- ▁UM

- C

- TA

- DO

- G

- TO

- TE

- DA

- VE

- B

- NDO

- ▁SE

- ▁QUE

- P

- ▁UMA

- LA

- D

- ▁COM

- CA

- á

- '?'

- ▁PE

- ▁EM

- IN

- TI

- IS

- ▁C

- H

- HO

- ▁CA

- ▁P

- CO

- ','

- ▁NO

- MA

- NTE

- PA

- ▁NãO

- DE

- ãO

- ▁ME

- ▁PARA

- Z

- ▁MA

- VA

- PO

- ▁DO

- ▁VOCê

- RI

- ▁DI

- GA

- VI

- ▁é

- LO

- IA

- ▁ELE

- ▁EU

- ▁ESTá

- HA

- ▁M

- X

- ▁NA

- NA

- é

- CE

- LE

- GO

- VO

- ▁RE

- ▁FO

- ▁FA

- ▁CO

- QUE

- ▁EST

- BE

- ▁CON

- ó

- SE

- ▁POR

- ê

- í

- çãO

- ▁DA

- RES

- ▁QUA

- ▁HOMEM

- RIA

- çA

- ▁SA

- V

- ▁PRE

- MENTE

- ZE

- NHA

- '-'

- ▁BA

- MOS

- ▁SO

- ▁BO

- ç

- '"'

- '!'

- ú

- ã

- K

- Y

- É

- W

- ô

- Á

- ':'

- ;

- ''''

- ”

- Ô

- ñ

- “

- Ú

- Í

- Ó

- ü

- À

- â

- à

- õ

- J

- Q

- F

- Â

- <sos/eos>

init: null

input_size: null

ctc_conf:

dropout_rate: 0.0

ctc_type: builtin

reduce: true

ignore_nan_grad: true

joint_net_conf: null

model_conf:

ctc_weight: 0.5

use_preprocessor: true

token_type: bpe

bpemodel: data/pt_token_list/bpe_unigram150/bpe.model

non_linguistic_symbols: null

cleaner: null

g2p: null

speech_volume_normalize: null

rir_scp: null

rir_apply_prob: 1.0

noise_scp: null

noise_apply_prob: 1.0

noise_db_range: '13_15'

frontend: default

frontend_conf:

fs: 16k

specaug: specaug

specaug_conf:

apply_time_warp: true

time_warp_window: 5

time_warp_mode: bicubic

apply_freq_mask: true

freq_mask_width_range:

- 0

- 27

num_freq_mask: 2

apply_time_mask: true

time_mask_width_ratio_range:

- 0.0

- 0.05

num_time_mask: 2

normalize: global_mvn

normalize_conf:

stats_file: exp/asr_stats_raw_pt_bpe150_sp/train/feats_stats.npz

preencoder: null

preencoder_conf: {}

encoder: vgg_rnn

encoder_conf:

rnn_type: lstm

bidirectional: true

use_projection: true

num_layers: 4

hidden_size: 1024

output_size: 1024

postencoder: null

postencoder_conf: {}

decoder: rnn

decoder_conf:

num_layers: 2

hidden_size: 1024

sampling_probability: 0

att_conf:

atype: location

adim: 1024

aconv_chans: 10

aconv_filts: 100

required:

- output_dir

- token_list

version: 0.10.6a1

distributed: false

```

</details>
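
The `ctc_weight: 0.5` entry in `model_conf` controls how the CTC and attention-decoder losses are combined during training. A minimal sketch of that interpolation, with made-up loss values and an illustrative function name:

```python
def joint_ctc_attention_loss(loss_ctc: float, loss_att: float, ctc_weight: float = 0.5) -> float:
    """Hybrid objective: ctc_weight * L_ctc + (1 - ctc_weight) * L_att."""
    if not 0.0 <= ctc_weight <= 1.0:
        raise ValueError("ctc_weight must lie in [0, 1]")
    return ctc_weight * loss_ctc + (1.0 - ctc_weight) * loss_att


# Hypothetical per-batch loss values for illustration only.
loss = joint_ctc_attention_loss(loss_ctc=2.0, loss_att=4.0, ctc_weight=0.5)
print(loss)  # 3.0
```

With a weight of 0.5 both objectives contribute equally; a weight of 0 would train the attention decoder alone and a weight of 1 would train with CTC alone.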

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

```

or arXiv:

```bibtex

@misc{watanabe2018espnet,

title={ESPnet: End-to-End Speech Processing Toolkit},

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

year={2018},

eprint={1804.00015},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

7092c9bb205dd5269e3a881672537ba4

|

ssharm87/t5-small-finetuned-xsum-ss

|

ssharm87

|

t5

| 13 | 0 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null |

['xsum']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,417 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-xsum-ss

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the xsum dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5823

- Rouge1: 26.3663

- Rouge2: 6.4727

- Rougel: 20.538

- Rougelsum: 20.5411

- Gen Len: 18.8006

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 0.25

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:------:|:---------:|:-------:|

| 2.8125 | 0.25 | 3189 | 2.5823 | 26.3663 | 6.4727 | 20.538 | 20.5411 | 18.8006 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

ccea3564ded49d9e71afaeb34e61cf50

|

xhyi/CodeGen-350M-Multi

|

xhyi

|

codegen

| 11 | 0 |

transformers

| 0 |

text-generation

| true | false | false |

bsd-3-clause

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['codegen', 'text generation', 'pytorch', 'causal-lm']

| false | true | true | 2,323 | false |

# Salesforce CodeGen

Salesforce CodeGen models ported to work with Hugging Face Transformers without any extra code (the model-specific code is bundled).

## Overview

The CodeGen model was proposed by Erik Nijkamp, Bo Pang, Hiroaki Hayashi, Lifu Tu, Huan Wang, Yingbo Zhou, Silvio Savarese, and Caiming Xiong of Salesforce Research.

The abstract from the paper is the following: Program synthesis strives to generate a computer program as a solution to a given problem specification. We propose a conversational program synthesis approach via large language models, which addresses the challenges of searching over a vast program space and user intent specification faced in prior approaches. Our new approach casts the process of writing a specification and program as a multi-turn conversation between a user and a system. It treats program synthesis as a sequence prediction problem, in which the specification is expressed in natural language and the desired program is conditionally sampled. We train a family of large language models, called CodeGen, on natural language and programming language data. With weak supervision in the data and the scaling up of data size and model size, conversational capacities emerge from the simple autoregressive language modeling. To study the model behavior on conversational program synthesis, we develop a multi-turn programming benchmark (MTPB), where solving each problem requires multi-step synthesis via multi-turn conversation between the user and the model. Our findings show the emergence of conversational capabilities and the effectiveness of the proposed conversational program synthesis paradigm. In addition, our model CodeGen (with up to 16B parameters trained on TPU-v4) outperforms OpenAI's Codex on the HumanEval benchmark. We plan to make the training library JaxFormer including checkpoints available as open source.

## Usage

`trust_remote_code` is needed because the [torch modules](https://github.com/salesforce/CodeGen/tree/main/jaxformer/hf/codegen) for the custom CodeGen model are bundled.

```python

from transformers import AutoModelForCausalLM, GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained(model_folder, local_files_only=True)

model = AutoModelForCausalLM.from_pretrained(model_folder, local_files_only=True, trust_remote_code=True)

```

|

ec59c285a25348f321779684c7c94c71

|

SiddharthaM/hasoc19-xlm-roberta-base-targinsult1

|

SiddharthaM

|

xlm-roberta

| 12 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,185 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hasoc19-xlm-roberta-base-targinsult1

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7512

- Accuracy: 0.7096

- Precision: 0.6720

- Recall: 0.6675

- F1: 0.6695

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| No log | 1.0 | 263 | 0.5619 | 0.6996 | 0.6660 | 0.6717 | 0.6684 |

| 0.5931 | 2.0 | 526 | 0.5350 | 0.7239 | 0.6880 | 0.6576 | 0.6655 |

| 0.5931 | 3.0 | 789 | 0.5438 | 0.7239 | 0.6872 | 0.6644 | 0.6714 |

| 0.5101 | 4.0 | 1052 | 0.5595 | 0.7196 | 0.6866 | 0.6909 | 0.6886 |

| 0.5101 | 5.0 | 1315 | 0.5580 | 0.7186 | 0.6818 | 0.6743 | 0.6774 |

| 0.4313 | 6.0 | 1578 | 0.6000 | 0.7039 | 0.6679 | 0.6692 | 0.6686 |

| 0.4313 | 7.0 | 1841 | 0.6429 | 0.7082 | 0.6765 | 0.6841 | 0.6794 |

| 0.3591 | 8.0 | 2104 | 0.6626 | 0.7115 | 0.6772 | 0.6803 | 0.6786 |

| 0.3591 | 9.0 | 2367 | 0.7231 | 0.7139 | 0.6764 | 0.6700 | 0.6727 |

| 0.3016 | 10.0 | 2630 | 0.7512 | 0.7096 | 0.6720 | 0.6675 | 0.6695 |

### Framework versions

- Transformers 4.24.0.dev0

- Pytorch 1.11.0+cu102

- Datasets 2.6.1

- Tokenizers 0.13.1

|

cbced0ba70f9521fec1432e6be732489

|

theojolliffe/bart-cnn-pubmed-arxiv-v3-e4

|

theojolliffe

|

bart

| 13 | 3 |

transformers

| 0 |

text2text-generation

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,791 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-cnn-pubmed-arxiv-v3-e4

This model is a fine-tuned version of [theojolliffe/bart-cnn-pubmed-arxiv](https://huggingface.co/theojolliffe/bart-cnn-pubmed-arxiv) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7934

- Rouge1: 54.2624

- Rouge2: 35.6024

- Rougel: 37.1697

- Rougelsum: 51.5144

- Gen Len: 141.9815

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:--------:|

| No log | 1.0 | 398 | 0.9533 | 52.3191 | 32.4576 | 33.2016 | 49.6502 | 142.0 |

| 1.1154 | 2.0 | 796 | 0.8407 | 53.6639 | 34.3433 | 36.1893 | 50.9077 | 142.0 |

| 0.6856 | 3.0 | 1194 | 0.7978 | 54.4723 | 36.1315 | 37.7891 | 51.902 | 142.0 |

| 0.4943 | 4.0 | 1592 | 0.7934 | 54.2624 | 35.6024 | 37.1697 | 51.5144 | 141.9815 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

9dd1f71700707e68223d77f99051a63f

|

mehnaazasad/swin-tiny-patch4-window7-224-finetuned-eurosat

|

mehnaazasad

|

swin

| 14 | 3 |

transformers

| 0 |

image-classification

| true | false | false |

apache-2.0

| null |

['image_folder']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,493 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-finetuned-eurosat

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the image_folder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0703

- Accuracy: 0.9770

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3
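
The relationship between `train_batch_size`, `gradient_accumulation_steps`, and `total_train_batch_size` in the list above can be checked with a short sketch. The helper name `effective_batch_size` and the single-device default are assumptions for illustration; the card does not state how many devices were used.

```python
def effective_batch_size(per_device_batch_size: int,
                         gradient_accumulation_steps: int,
                         num_devices: int = 1) -> int:
    """Number of samples that contribute to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# Values from the hyperparameter list above; one device is an assumption.
total = effective_batch_size(per_device_batch_size=32, gradient_accumulation_steps=4)
print(total)  # 128
```

Under that assumption the product reproduces the reported `total_train_batch_size` of 128.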

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.2369 | 1.0 | 190 | 0.1683 | 0.9433 |

| 0.1812 | 2.0 | 380 | 0.0972 | 0.9670 |

| 0.1246 | 3.0 | 570 | 0.0703 | 0.9770 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

cd1ed1587988b40fe3077a3bfef0859c

|

speechbrain/asr-wav2vec2-dvoice-fongbe

|

speechbrain

|

wav2vec2

| 9 | 5 |

speechbrain

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['fon']

|

['Dvoice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['CTC', 'pytorch', 'speechbrain', 'Transformer']

| false | true | true | 6,368 | false |


# wav2vec 2.0 with CTC/Attention trained on DVoice Fongbe (No LM)

This repository provides all the necessary tools to perform automatic speech

recognition from an end-to-end system pretrained on the [ALFFA](https://github.com/besacier/ALFFA_PUBLIC) Fongbe dataset within

SpeechBrain. For a better experience, we encourage you to learn more about

[SpeechBrain](https://speechbrain.github.io).

| DVoice Release | Val. CER | Val. WER | Test CER | Test WER |

|:-------------:|:---------------------------:| -----:| -----:| -----:|

| v2.0 | 4.16 | 9.19 | 3.98 | 9.00 |

# Pipeline description

This ASR system is composed of 2 different but linked blocks:

- Tokenizer (unigram) that transforms words into subword units and is trained with the train transcriptions.

- Acoustic model (wav2vec2.0 + CTC). A pretrained wav2vec 2.0 model ([facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53)) is combined with two DNN layers and fine-tuned on the Fongbe dataset.

The obtained final acoustic representation is given to the CTC greedy decoder.

The system is trained with recordings sampled at 16kHz (single channel).

The code will automatically normalize your audio (i.e., resampling + mono channel selection) when calling *transcribe_file* if needed.
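
To make the CTC greedy decoding step mentioned above concrete, here is a minimal pure-Python sketch. It is not SpeechBrain's implementation: the function name `ctc_greedy_decode`, the toy three-token vocabulary, and the use of index 0 as the blank symbol are assumptions chosen for illustration.

```python
from typing import List, Sequence


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]], blank_id: int = 0) -> List[int]:
    """Pick the best token per frame, collapse repeats, then drop blanks."""
    decoded: List[int] = []
    previous = None
    for scores in frame_scores:
        # Greedy step: index of the highest-scoring token for this frame.
        best = max(range(len(scores)), key=lambda i: scores[i])
        # Emit only when the token changes and is not the blank symbol.
        if best != previous and best != blank_id:
            decoded.append(best)
        previous = best
    return decoded


# Toy example: 3 tokens (0 = blank, 1 = "a", 2 = "b") over 6 frames.
toy_scores = [
    [0.1, 0.8, 0.1],    # a
    [0.1, 0.7, 0.2],    # a (repeat, collapsed)
    [0.9, 0.05, 0.05],  # blank
    [0.2, 0.7, 0.1],    # a (new emission after the blank)
    [0.1, 0.2, 0.7],    # b
    [0.8, 0.1, 0.1],    # blank
]
token_ids = ctc_greedy_decode(toy_scores)
print(token_ids)  # [1, 1, 2]
```

The blank frame between the two runs of token 1 is what lets the decoder emit the same token twice in a row.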

# Install SpeechBrain

First of all, please install transformers and SpeechBrain with the following command:

```

pip install speechbrain transformers

```

Please note that we encourage you to read the SpeechBrain tutorials and learn more about

[SpeechBrain](https://speechbrain.github.io).

# Transcribing your own audio files (in Fongbe)

```python

from speechbrain.pretrained import EncoderASR

asr_model = EncoderASR.from_hparams(source="speechbrain/asr-wav2vec2-dvoice-fongbe", savedir="pretrained_models/asr-wav2vec2-dvoice-fongbe")

asr_model.transcribe_file('speechbrain/asr-wav2vec2-dvoice-fongbe/example_fongbe.wav')

```

# Inference on GPU

To perform inference on the GPU, add `run_opts={"device":"cuda"}` when calling the `from_hparams` method.

# Training

The model was trained with SpeechBrain.

To train it from scratch follow these steps:

1. Clone SpeechBrain:

```bash

git clone https://github.com/speechbrain/speechbrain/

```

2. Install it:

```bash

cd speechbrain

pip install -r requirements.txt

pip install -e .

```

3. Run Training:

```bash

cd recipes/DVoice/ASR/CTC

python train_with_wav2vec2.py hparams/train_fon_with_wav2vec.yaml --data_folder=/localscratch/ALFFA_PUBLIC/ASR/FONGBE/data/

```

You can find our training results (models, logs, etc) [here](https://drive.google.com/drive/folders/1vNT7RjRuELs7pumBHmfYsrOp9m46D0ym?usp=sharing).

# Limitations

The SpeechBrain team does not provide any warranty on the performance achieved by this model when used on other datasets.

# About DVoice

DVoice is a community initiative that aims to provide low-resource African languages with data and models to facilitate their use of voice technologies. The lack of data on these languages makes it necessary to collect data using methods that are specific to each one. Two different approaches are currently used: the DVoice platforms ([https://dvoice.ma](https://dvoice.ma) and [https://dvoice.sn](https://dvoice.sn)), which are based on Mozilla Common Voice, for collecting authentic recordings from the community, and transfer learning techniques for automatically labeling recordings that are retrieved from social media. The DVoice platform currently manages 7 languages: Darija (Moroccan Arabic dialect), whose dataset appears in this version, Wolof, Mandingo, Serere, Pular, Diola, and Soninke.

For this project, AIOX Labs and the SI2M Laboratory are joining forces to build the future of technologies together.

# About AIOX Labs

Based in Rabat, London, and Paris, AIOX-Labs mobilizes artificial intelligence technologies to meet the business needs and data projects of companies.

- It serves the growth of groups, the optimization of processes, and the improvement of the customer experience.

- AIOX-Labs is multi-sector, from fintech to industry, including retail and consumer goods.

- Business-ready data products with a solid algorithmic base and adaptability for the specific needs of each client.

- A complementary team made up of PhDs in AI and business experts with a solid scientific base and international publications.

Website: [https://www.aiox-labs.com/](https://www.aiox-labs.com/)

# SI2M Laboratory

The Information Systems, Intelligent Systems, and Mathematical Modeling Research Laboratory (SI2M) is an academic research laboratory of the National Institute of Statistics and Applied Economics (INSEA). The research areas of the laboratory are Information Systems, Intelligent Systems, Artificial Intelligence, Decision Support, Network and System Security, and Mathematical Modelling.

Website: [SI2M Laboratory](https://insea.ac.ma/index.php/pole-recherche/equipe-de-recherche/150-laboratoire-de-recherche-en-systemes-d-information-systemes-intelligents-et-modelisation-mathematique)

# About SpeechBrain

SpeechBrain is an open-source and all-in-one speech toolkit. It is designed to be simple, extremely flexible, and user-friendly. Competitive or state-of-the-art performance is obtained in various domains.

Website: https://speechbrain.github.io/

GitHub: https://github.com/speechbrain/speechbrain

# Referencing SpeechBrain

```

@misc{SB2021,

author = {Ravanelli, Mirco and Parcollet, Titouan and Rouhe, Aku and Plantinga, Peter and Rastorgueva, Elena and Lugosch, Loren and Dawalatabad, Nauman and Ju-Chieh, Chou and Heba, Abdel and Grondin, Francois and Aris, William and Liao, Chien-Feng and Cornell, Samuele and Yeh, Sung-Lin and Na, Hwidong and Gao, Yan and Fu, Szu-Wei and Subakan, Cem and De Mori, Renato and Bengio, Yoshua },

title = {SpeechBrain},

year = {2021},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/speechbrain/speechbrain}},

}

```

# Acknowledgements

This research was supported through computational resources of HPC-MARWAN (www.marwan.ma/hpc) provided by CNRST, Rabat, Morocco. We deeply thank this institution.

|

97a4ab154eec2598b4ca3949955a141e

|

MehdiHosseiniMoghadam/wav2vec2-large-xlsr-53-German

|

MehdiHosseiniMoghadam

|

wav2vec2

| 11 | 9 |

transformers

| 0 |

automatic-speech-recognition

| true | false | true |

apache-2.0

|

['de']

|

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week']

| true | true | true | 3,496 | false |

# wav2vec2-large-xlsr-53-German

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on German using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "de", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("MehdiHosseiniMoghadam/wav2vec2-large-xlsr-53-German")

model = Wav2Vec2ForCTC.from_pretrained("MehdiHosseiniMoghadam/wav2vec2-large-xlsr-53-German")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays.
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    # Resample from 48 kHz to the 16 kHz rate the model expects.
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))

print("Reference:", test_dataset["sentence"][:2])

```

## Evaluation

The model can be evaluated as follows on the German test data of Common Voice.

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

test_dataset = load_dataset("common_voice", "de", split="test[:15%]")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("MehdiHosseiniMoghadam/wav2vec2-large-xlsr-53-German")

model = Wav2Vec2ForCTC.from_pretrained("MehdiHosseiniMoghadam/wav2vec2-large-xlsr-53-German")

model.to("cuda")

chars_to_ignore_regex = '[\,\?\.\!\-\;\:\"\“\%\‘\”\�]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays and normalize the transcripts.
def speech_file_to_array_fn(batch):
    # Strip punctuation and lowercase the reference sentence.
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    # Resample from 48 kHz to the 16 kHz rate the model expects.
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference and decode the predicted token IDs into strings.
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)
    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 25.284593 %
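
For readers unfamiliar with the metric, the word error rate reported above can be illustrated with a small pure-Python sketch. This is not the implementation behind `load_metric("wer")`; the function name `word_error_rate` and the toy sentences are assumptions for illustration, and the sketch presumes at least one reference word.

```python
from typing import List


def word_error_rate(references: List[str], predictions: List[str]) -> float:
    """Corpus-level WER: total word edits divided by total reference words."""
    total_edits = 0
    total_words = 0
    for ref, hyp in zip(references, predictions):
        ref_words, hyp_words = ref.split(), hyp.split()
        # One-row dynamic-programming Levenshtein distance over words.
        row = list(range(len(hyp_words) + 1))
        for i, ref_word in enumerate(ref_words, start=1):
            diagonal, row[0] = row[0], i
            for j, hyp_word in enumerate(hyp_words, start=1):
                substitution = diagonal + (ref_word != hyp_word)
                diagonal = row[j]
                row[j] = min(substitution, row[j] + 1, row[j - 1] + 1)
        total_edits += row[-1]
        total_words += len(ref_words)
    return total_edits / total_words


# Toy data: one dropped word out of six reference words.
refs = ["das ist ein test", "guten morgen"]
hyps = ["das ist test", "guten morgen"]
wer_value = word_error_rate(refs, hyps)
print("WER: {:.2f} %".format(100 * wer_value))  # WER: 16.67 %
```

Substitutions, insertions, and deletions each count as one edit, so a WER above 100 % is possible when the prediction is much longer than the reference.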

## Training

10% of the Common Voice `train` and `validation` splits was used for training.

## Testing

15% of the Common Voice `test` split was used for evaluation.

|

52282c5a30de01fc3e0c22690c473f9d

|

Helsinki-NLP/opus-mt-crs-fr

|

Helsinki-NLP

|

marian

| 10 | 10 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 776 | false |

### opus-mt-crs-fr

* source languages: crs

* target languages: fr

* OPUS readme: [crs-fr](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/crs-fr/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-08.zip](https://object.pouta.csc.fi/OPUS-MT-models/crs-fr/opus-2020-01-08.zip)

* test set translations: [opus-2020-01-08.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/crs-fr/opus-2020-01-08.test.txt)

* test set scores: [opus-2020-01-08.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/crs-fr/opus-2020-01-08.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.crs.fr | 29.4 | 0.475 |

|

c9b725012cf5eb6cc156873b059e461b

|

aliosm/ai-soco-cpp-roberta-tiny-clas

|

aliosm

| null | 2 | 0 | null | 0 | null | false | false | false |

mit

|

['c++']

|

['ai-soco']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['exbert', 'authorship-identification', 'fire2020', 'pan2020', 'ai-soco', 'classification']

| false | true | true | 1,684 | false |

# ai-soco-c++-roberta-tiny-clas

## Model description

`ai-soco-c++-roberta-tiny` model fine-tuned on [AI-SOCO](https://sites.google.com/view/ai-soco-2020) task.

#### How to use

You can use the model directly after tokenizing the text using the provided tokenizer with the model files.

#### Limitations and bias

The model is limited to the C++ programming language.

## Training data

The model was initialized from the [`ai-soco-c++-roberta-tiny`](https://github.com/huggingface/transformers/blob/master/model_cards/aliosm/ai-soco-c++-roberta-tiny) model and trained on the [AI-SOCO](https://sites.google.com/view/ai-soco-2020) dataset for text classification.

## Training procedure

The model was trained on the Google Colab platform using a V100 GPU for 10 epochs, with a batch size of 32 and a maximum sequence length of 512 (longer sequences were truncated). Each run of 4 consecutive spaces was converted to a single tab character (`\t`) before tokenization.
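
The whitespace preprocessing described above can be sketched as follows. The card does not show the original preprocessing code, so the helper name `spaces_to_tabs` and the left-to-right, non-overlapping replacement are assumptions.

```python
def spaces_to_tabs(source_code: str, width: int = 4) -> str:
    """Replace each run of `width` consecutive spaces with one tab character."""
    return source_code.replace(" " * width, "\t")


snippet = "int main() {\n    return 0;\n}"
converted = spaces_to_tabs(snippet)
print(repr(converted))  # 'int main() {\n\treturn 0;\n}'
```

Runs shorter than four spaces are left untouched, and eight spaces become two tabs.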

## Eval results

The model achieved 87.66%/87.46% accuracy on the AI-SOCO task and ranked 9th.

### BibTeX entry and citation info

```bibtex

@inproceedings{ai-soco-2020-fire,

title = "Overview of the {PAN@FIRE} 2020 Task on {Authorship Identification of SOurce COde (AI-SOCO)}",

author = "Fadel, Ali and Musleh, Husam and Tuffaha, Ibraheem and Al-Ayyoub, Mahmoud and Jararweh, Yaser and Benkhelifa, Elhadj and Rosso, Paolo",

booktitle = "Proceedings of The 12th meeting of the Forum for Information Retrieval Evaluation (FIRE 2020)",

year = "2020"

}

```

<a href="https://huggingface.co/exbert/?model=aliosm/ai-soco-c++-roberta-tiny-clas">

<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">

</a>

|

253a7d1a3deda77661d5f8bf1e72063e

|

lucafrost/whispQuote-ChunkDQ-DistilBERT

|

lucafrost

|

distilbert

| 10 | 0 |

transformers

| 0 |

token-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,722 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# whispQuote-ChunkDQ-DistilBERT

This model is a fine-tuned version of [distilbert-base-cased](https://huggingface.co/distilbert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2582

- Precision: 0.5816

- Recall: 0.8129

- F1: 0.6780

- Accuracy: 0.9126
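
As a sanity check on the metrics above, F1 can be recomputed from precision and recall. This sketch assumes the reported values are micro-averaged, so that F1 is the harmonic mean of the two; the card does not state the averaging mode, and the helper name `f1_score` is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the list above.
f1 = f1_score(0.5816, 0.8129)
print(f1)
```

The result is approximately 0.678, which agrees with the reported F1 of 0.6780 up to rounding of the inputs.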

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 164 | 0.3432 | 0.4477 | 0.5795 | 0.5052 | 0.8796 |

| No log | 2.0 | 328 | 0.3053 | 0.4308 | 0.6985 | 0.5329 | 0.8952 |

| No log | 3.0 | 492 | 0.2602 | 0.5716 | 0.7775 | 0.6588 | 0.9097 |

| 0.3826 | 4.0 | 656 | 0.2607 | 0.5664 | 0.8070 | 0.6656 | 0.9114 |

| 0.3826 | 5.0 | 820 | 0.2582 | 0.5816 | 0.8129 | 0.6780 | 0.9126 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.10.2+cu113

- Datasets 2.9.0

- Tokenizers 0.13.2

|

3f451d0d05d044e20d96a33eb7730d97

|

cammy/bart-large-cnn-finetune

|

cammy

|

bart

| 15 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,434 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-large-cnn-finetune

This model is a fine-tuned version of [facebook/bart-large-cnn](https://huggingface.co/facebook/bart-large-cnn) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5677

- Rouge1: 9.9893

- Rouge2: 5.2818

- Rougel: 9.7766

- Rougelsum: 9.7951

- Gen Len: 58.1672

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|:-------:|

| 0.2639 | 1.0 | 4774 | 1.5677 | 9.9893 | 5.2818 | 9.7766 | 9.7951 | 58.1672 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0+cu116

- Datasets 2.7.0

- Tokenizers 0.13.2

|

20620f427c371587c728fe6ab4114ff6

|

Rerare/distilbert-base-uncased-finetuned-cola

|

Rerare

|

distilbert

| 13 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['glue']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,571 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7643

- Matthews Correlation: 0.5291
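
The Matthews correlation reported above is the standard metric for CoLA. A minimal sketch of the binary-classification formula follows; the confusion-matrix counts are hypothetical and are not taken from this model's evaluation, and the helper name `matthews_correlation` is illustrative.

```python
import math


def matthews_correlation(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient from binary confusion-matrix counts."""
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention the score is 0.0 when any marginal count is zero.
    return numerator / denominator if denominator else 0.0


# Hypothetical counts, chosen only to illustrate the formula.
mcc = matthews_correlation(tp=40, tn=40, fp=10, fn=10)
print(mcc)  # 0.6
```

The score ranges from -1 (total disagreement) through 0 (chance level) to 1 (perfect prediction), which makes it more informative than accuracy on imbalanced label sets.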

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.5288 | 1.0 | 535 | 0.5111 | 0.4154 |

| 0.3546 | 2.0 | 1070 | 0.5285 | 0.4887 |

| 0.235 | 3.0 | 1605 | 0.5950 | 0.5153 |

| 0.1722 | 4.0 | 2140 | 0.7643 | 0.5291 |

| 0.1346 | 5.0 | 2675 | 0.8441 | 0.5185 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

deefda695d2ad082c15092bb3fb7f8d6

|

S2312dal/M7_MLM_final

|

S2312dal

|

roberta

| 14 | 4 |

transformers

| 0 |

fill-mask

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,332 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# M7_MLM_final

This model is a fine-tuned version of [sentence-transformers/all-distilroberta-v1](https://huggingface.co/sentence-transformers/all-distilroberta-v1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 5.4732

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 8.769 | 1.0 | 92 | 6.6861 |

| 6.3549 | 2.0 | 184 | 5.7455 |

| 5.826 | 3.0 | 276 | 5.5610 |

### Framework versions

- Transformers 4.20.0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

|

d5851cec739c21d477d6b97d3d0864ef

|

jonatasgrosman/exp_w2v2t_id_unispeech-ml_s418

|

jonatasgrosman

|

unispeech

| 10 | 7 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['id']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'id']

| false | true | true | 500 | false |

# exp_w2v2t_id_unispeech-ml_s418

Fine-tuned [microsoft/unispeech-large-multi-lingual-1500h-cv](https://huggingface.co/microsoft/unispeech-large-multi-lingual-1500h-cv) for speech recognition using the train split of [Common Voice 7.0 (id)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

d982d4eeec4cc3549391b882edcd4a44

|

espnet/kan-bayashi_jsut_fastspeech2

|

espnet

| null | 21 | 3 |

espnet

| 0 |

text-to-speech

| false | false | false |

cc-by-4.0

|

['ja']

|

['jsut']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['espnet', 'audio', 'text-to-speech']

| false | true | true | 1,796 | false |

## Example ESPnet2 TTS model

### `kan-bayashi/jsut_fastspeech2`

♻️ Imported from https://zenodo.org/record/4032224/

This model was trained by kan-bayashi using jsut/tts1 recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

```python

# coming soon

```

### Citing ESPnet

```BibTex

@inproceedings{watanabe2018espnet,

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson {Enrique Yalta Soplin} and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

title={{ESPnet}: End-to-End Speech Processing Toolkit},

year={2018},

booktitle={Proceedings of Interspeech},

pages={2207--2211},

doi={10.21437/Interspeech.2018-1456},

url={http://dx.doi.org/10.21437/Interspeech.2018-1456}

}

@inproceedings{hayashi2020espnet,

title={{Espnet-TTS}: Unified, reproducible, and integratable open source end-to-end text-to-speech toolkit},

author={Hayashi, Tomoki and Yamamoto, Ryuichi and Inoue, Katsuki and Yoshimura, Takenori and Watanabe, Shinji and Toda, Tomoki and Takeda, Kazuya and Zhang, Yu and Tan, Xu},

booktitle={Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={7654--7658},

year={2020},

organization={IEEE}

}

```

or arXiv:

```bibtex

@misc{watanabe2018espnet,

title={ESPnet: End-to-End Speech Processing Toolkit},

author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Enrique Yalta Soplin and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},

year={2018},

eprint={1804.00015},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

ebc4cf6340df0d702073bdc24ad0d215

|

minoosh/wav2vec2-base-finetuned-ie

|

minoosh

|

wav2vec2

| 27 | 2 |

transformers

| 0 |

audio-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,219 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-finetuned-ie

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- eval_loss: 1.5355

- eval_accuracy: 0.4318

- eval_runtime: 111.662

- eval_samples_per_second: 17.983

- eval_steps_per_second: 0.564

- epoch: 8.38

- step: 520

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10
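
To illustrate how `lr_scheduler_type: linear` combines with `lr_scheduler_warmup_ratio: 0.1`, here is a small sketch of a linear warmup followed by linear decay. The function name `linear_schedule_lr` is illustrative, and `total_steps` is hypothetical because the card does not state the total number of optimizer steps.

```python
def linear_schedule_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Linear warmup from 0 to base_lr, then linear decay back to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


base_lr = 3e-05      # learning_rate from the list above
total_steps = 1000   # hypothetical; not stated in the card
warmup_steps = 100   # 10% of total_steps, matching the warmup ratio of 0.1

for step in (0, 50, 100, 550, 1000):
    print(step, linear_schedule_lr(step, base_lr, warmup_steps, total_steps))
```

The learning rate peaks at `base_lr` exactly when warmup ends and reaches zero at the final step.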

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

e368d2caf65de24e0ae4f8e438ac8f0c

|

Geotrend/distilbert-base-el-cased

|

Geotrend

|

distilbert

| 6 | 5 |

transformers

| 0 |

fill-mask

| true | false | false |

apache-2.0

|

['el']

|

['wikipedia']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,215 | false |

# distilbert-base-el-cased

We are sharing smaller versions of [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased) that handle a custom number of languages.

Our versions give exactly the same representations as the original model, which preserves the original accuracy.

For more information please visit our paper: [Load What You Need: Smaller Versions of Multilingual BERT](https://www.aclweb.org/anthology/2020.sustainlp-1.16.pdf).

## How to use

```python

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("Geotrend/distilbert-base-el-cased")

model = AutoModel.from_pretrained("Geotrend/distilbert-base-el-cased")

```

To generate other smaller versions of multilingual transformers, please visit [our GitHub repo](https://github.com/Geotrend-research/smaller-transformers).

### How to cite

```bibtex

@inproceedings{smallermdistilbert,

title={Load What You Need: Smaller Versions of Multilingual BERT},

author={Abdaoui, Amine and Pradel, Camille and Sigel, Grégoire},

booktitle={SustaiNLP / EMNLP},

year={2020}

}

```

## Contact

Please contact amine@geotrend.fr for any question, feedback or request.

|

75c244c71751457da4b52eb472d723f4

|

Jaspal/distilbert-base-uncased-finetuned-cola

|

Jaspal

|

distilbert

| 10 | 3 |

transformers

| 0 |

text-classification

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,598 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Jaspal/distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1904

- Validation Loss: 0.5593

- Train Matthews Correlation: 0.5189

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 2670, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32
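
The optimizer entry above describes a `PolynomialDecay` learning-rate schedule with `power` 1.0, which amounts to a straight-line decay from 2e-05 to 0 over 2670 steps. The following is a minimal plain-Python sketch of that formula, written for illustration rather than taken from Keras; the function name `polynomial_decay` is hypothetical.

```python
def polynomial_decay(step, initial_lr=2e-05, decay_steps=2670,
                     end_lr=0.0, power=1.0):
    """Learning rate at `step` for a non-cycling polynomial decay schedule."""
    # With cycle=False the schedule stays at end_lr once decay_steps is reached.
    step = min(step, decay_steps)
    fraction_left = 1 - step / decay_steps
    return (initial_lr - end_lr) * fraction_left ** power + end_lr


for step in (0, 1335, 2670, 5000):
    print(step, polynomial_decay(step))
```

With `power` set to 1.0 the rate is halved exactly at the midpoint (step 1335) and stays at `end_learning_rate` for any step past 2670.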

### Training results

| Train Loss | Validation Loss | Train Matthews Correlation | Epoch |

|:----------:|:---------------:|:--------------------------:|:-----:|

| 0.5175 | 0.4542 | 0.4684 | 0 |

| 0.3255 | 0.4617 | 0.5007 | 1 |

| 0.1904 | 0.5593 | 0.5189 | 2 |
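
For readers unfamiliar with the Matthews correlation column, here is a small self-contained sketch of how the coefficient is computed for binary labels. It is an illustration only, not the metric code used during training, and the label lists are made-up toy data.

```python
import math


def matthews_corrcoef(y_true, y_pred):
    """Matthews correlation coefficient for binary labels (0 or 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention the score is 0 when any marginal count is zero.
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Toy data (hypothetical): 3 true positives, 3 true negatives,
# 1 false positive, 1 false negative.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
print(matthews_corrcoef(y_true, y_pred))
```

The score ranges from -1 to 1, with 0 meaning no better than chance, which makes it more informative than accuracy on imbalanced label sets.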

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.8.2

- Datasets 2.3.2

- Tokenizers 0.12.1

|

d4478e476f2b654fda0b15f730a61219

|

Helsinki-NLP/opus-mt-en-kg

|

Helsinki-NLP

|

marian

| 10 | 41 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 768 | false |

### opus-mt-en-kg

* source languages: en

* target languages: kg

* OPUS readme: [en-kg](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/en-kg/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-08.zip](https://object.pouta.csc.fi/OPUS-MT-models/en-kg/opus-2020-01-08.zip)

* test set translations: [opus-2020-01-08.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-kg/opus-2020-01-08.test.txt)

* test set scores: [opus-2020-01-08.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-kg/opus-2020-01-08.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.en.kg | 39.6 | 0.613 |

|

a1903abb8066b98b10420ee1f7f95bb0

|

MultiversexPeeps/wave-concepts

|

MultiversexPeeps

| null | 21 | 9 |

diffusers

| 0 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['text-to-image']

| false | true | true | 863 | false |

### Wave Concepts Dreambooth model trained by Duskfallcrew with [Hugging Face Dreambooth Training Space](https://huggingface.co/spaces/multimodalart/dreambooth-training) with the v1-5 base model

You can run your new concept via the `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb). Don't forget to use the concept prompts!

Information on this model will be here: https://civitai.com/user/duskfallcrew

If you want to donate towards costs and don't want to subscribe:

https://ko-fi.com/DUSKFALLcrew

If you want to support the EARTH & DUSK media projects monthly, and not just AI:

https://www.patreon.com/earthndusk