repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

jonatasgrosman/exp_w2v2t_et_vp-nl_s353

|

jonatasgrosman

|

wav2vec2

| 10 | 5 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['et']

|

['mozilla-foundation/common_voice_7_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['automatic-speech-recognition', 'et']

| false | true | true | 469 | false |

# exp_w2v2t_et_vp-nl_s353

Fine-tuned [facebook/wav2vec2-large-nl-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-nl-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (et)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

|

0518ff5feed68accbdb7ccaee0c6b303

|

gokuls/distilbert_sa_GLUE_Experiment_rte_96

|

gokuls

|

distilbert

| 17 | 4 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

|

['en']

|

['glue']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,173 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert_sa_GLUE_Experiment_rte_96

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the GLUE RTE dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6925

- Accuracy: 0.5271

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 256

- eval_batch_size: 256

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6932 | 1.0 | 10 | 0.6928 | 0.5271 |

| 0.6934 | 2.0 | 20 | 0.6927 | 0.5271 |

| 0.6934 | 3.0 | 30 | 0.6932 | 0.4729 |

| 0.6931 | 4.0 | 40 | 0.6930 | 0.5271 |

| 0.6936 | 5.0 | 50 | 0.6932 | 0.4440 |

| 0.6932 | 6.0 | 60 | 0.6927 | 0.5271 |

| 0.6932 | 7.0 | 70 | 0.6926 | 0.5271 |

| 0.6928 | 8.0 | 80 | 0.6932 | 0.4477 |

| 0.6935 | 9.0 | 90 | 0.6932 | 0.4260 |

| 0.6933 | 10.0 | 100 | 0.6925 | 0.5271 |

| 0.6929 | 11.0 | 110 | 0.6932 | 0.4440 |

| 0.693 | 12.0 | 120 | 0.6935 | 0.4729 |

| 0.6926 | 13.0 | 130 | 0.6931 | 0.5307 |

| 0.6916 | 14.0 | 140 | 0.6932 | 0.5199 |

| 0.6903 | 15.0 | 150 | 0.6943 | 0.4440 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.8.0

- Tokenizers 0.13.2

|

5523ef341e57bf40aafef8306964c645

|

Lvxue/distilled-mt5-small-1-1

|

Lvxue

|

mt5

| 14 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

|

['en', 'ro']

|

['wmt16']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,034 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilled-mt5-small-1-1

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the wmt16 ro-en dataset.

It achieves the following results on the evaluation set:

- Loss: 2.8289

- Bleu: 6.6959

- Gen Len: 45.7539

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

|

da0ec09716f568c416953b30498c529c

|

flamesbob/Yuko_model

|

flamesbob

| null | 4 | 0 | null | 0 | null | false | false | false |

creativeml-openrail-m

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 955 | false |

To use draw emphasis from the training model include the word `m_yukoring` in your prompt.

Yukoring is an artists that does a lot of anime watercolor style art.

License This embedding is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage. The CreativeML OpenRAIL License specifies:

You can't use the embedding to deliberately produce nor share illegal or harmful outputs or content The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license You may re-distribute the weights and use the embedding commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully) Please read the full license here

|

96d4e1e1910aeb40ab1df3ecff18724b

|

zendiode69/electra-base-squad2-finetuned-squad-12-trainedfor-3

|

zendiode69

|

electra

| 12 | 0 |

transformers

| 0 |

question-answering

| true | false | false |

cc-by-4.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,298 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# electra-base-squad2-finetuned-squad-12-trainedfor-3

This model is a fine-tuned version of [deepset/electra-base-squad2](https://huggingface.co/deepset/electra-base-squad2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3064

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.6128 | 1.0 | 578 | 0.3142 |

| 0.4583 | 2.0 | 1156 | 0.3072 |

| 0.415 | 3.0 | 1734 | 0.3064 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

|

9a70bfbf6fe35b88a7e8f27c4bc33795

|

Martha-987/whisper-small-Arabic

|

Martha-987

|

whisper

| 24 | 2 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

|

['ar']

|

['mozilla-foundation/common_voice_11_0']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['hf-asr-leaderboard', 'generated_from_trainer']

| true | true | true | 1,296 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Ar- Martha

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Common Voice 11.0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3837

- Wer: 51.1854

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 1000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.2726 | 0.42 | 1000 | 0.3837 | 51.1854 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

|

c3e578f6ad21da408cbae02f45d61a7d

|

asapp/sew-d-tiny-100k

|

asapp

|

sew-d

| 5 | 126 |

transformers

| 0 |

feature-extraction

| true | false | false |

apache-2.0

|

['en']

|

['librispeech_asr']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['speech']

| false | true | true | 1,699 | false |

# SEW-D-tiny

[SEW-D by ASAPP Research](https://github.com/asappresearch/sew)

The base model pretrained on 16kHz sampled speech audio. When using the model make sure that your speech input is also sampled at 16Khz. Note that this model should be fine-tuned on a downstream task, like Automatic Speech Recognition, Speaker Identification, Intent Classification, Emotion Recognition, etc...

Paper: [Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition](https://arxiv.org/abs/2109.06870)

Authors: Felix Wu, Kwangyoun Kim, Jing Pan, Kyu Han, Kilian Q. Weinberger, Yoav Artzi

**Abstract**

This paper is a study of performance-efficiency trade-offs in pre-trained models for automatic speech recognition (ASR). We focus on wav2vec 2.0, and formalize several architecture designs that influence both the model performance and its efficiency. Putting together all our observations, we introduce SEW (Squeezed and Efficient Wav2vec), a pre-trained model architecture with significant improvements along both performance and efficiency dimensions across a variety of training setups. For example, under the 100h-960h semi-supervised setup on LibriSpeech, SEW achieves a 1.9x inference speedup compared to wav2vec 2.0, with a 13.5% relative reduction in word error rate. With a similar inference time, SEW reduces word error rate by 25-50% across different model sizes.

The original model can be found under https://github.com/asappresearch/sew#model-checkpoints .

# Usage

See [this blog](https://huggingface.co/blog/fine-tune-wav2vec2-english) for more information on how to fine-tune the model. Note that the class `Wav2Vec2ForCTC` has to be replaced by `SEWDForCTC`.

|

71cb2d3f37d9bbb03327f419eeb83e88

|

KoichiYasuoka/roberta-base-korean-hanja

|

KoichiYasuoka

|

roberta

| 7 | 73 |

transformers

| 1 |

fill-mask

| true | false | false |

cc-by-sa-4.0

|

['ko']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['korean', 'masked-lm']

| false | true | true | 775 | false |

# roberta-base-korean-hanja

## Model Description

This is a RoBERTa model pre-trained on Korean texts, derived from [klue/roberta-base](https://huggingface.co/klue/roberta-base). Token-embeddings are enhanced to include all 한문 교육용 기초 한자 and 인명용 한자 characters. You can fine-tune `roberta-base-korean-hanja` for downstream tasks, such as [POS-tagging](https://huggingface.co/KoichiYasuoka/roberta-base-korean-upos), [dependency-parsing](https://huggingface.co/KoichiYasuoka/roberta-base-korean-ud-goeswith), and so on.

## How to Use

```py

from transformers import AutoTokenizer,AutoModelForMaskedLM

tokenizer=AutoTokenizer.from_pretrained("KoichiYasuoka/roberta-base-korean-hanja")

model=AutoModelForMaskedLM.from_pretrained("KoichiYasuoka/roberta-base-korean-hanja")

```

|

9444e26a24aa70e106eba1ad42e105d0

|

sukhendrasingh/finetuning-sentiment-model-3000-samples

|

sukhendrasingh

|

distilbert

| 13 | 11 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['imdb']

| null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,056 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3323

- Accuracy: 0.8733

- F1: 0.8797

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.0+cu111

- Datasets 1.18.3

- Tokenizers 0.11.0

|

212f80c1139b3a3b41ef73daafc2f5df

|

EleutherAI/pythia-1b

|

EleutherAI

|

gpt_neox

| 7 | 7,595 |

transformers

| 3 |

text-generation

| true | false | false |

apache-2.0

|

['en']

|

['the_pile']

| null | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

['pytorch', 'causal-lm', 'pythia']

| false | true | true | 10,783 | false |

The *Pythia Scaling Suite* is a collection of models developed to facilitate

interpretability research. It contains two sets of eight models of sizes

70M, 160M, 410M, 1B, 1.4B, 2.8B, 6.9B, and 12B. For each size, there are two

models: one trained on the Pile, and one trained on the Pile after the dataset

has been globally deduplicated. All 8 model sizes are trained on the exact

same data, in the exact same order. All Pythia models are available

[on Hugging Face](https://huggingface.co/EleutherAI).

The Pythia model suite was deliberately designed to promote scientific

research on large language models, especially interpretability research.

Despite not centering downstream performance as a design goal, we find the

models match or exceed the performance of similar and same-sized models,

such as those in the OPT and GPT-Neo suites.

Please note that all models in the *Pythia* suite were renamed in January

2023. For clarity, a <a href="#naming-convention-and-parameter-count">table

comparing the old and new names</a> is provided in this model card, together

with exact model parameter counts.

## Pythia-1B

### Model Details

- Developed by: [EleutherAI](http://eleuther.ai)

- Model type: Transformer-based Language Model

- Language: English

- Learn more: [Pythia's GitHub repository](https://github.com/EleutherAI/pythia)

for training procedure, config files, and details on how to use.

- Library: [GPT-NeoX](https://github.com/EleutherAI/gpt-neox)

- License: Apache 2.0

- Contact: to ask questions about this model, join the [EleutherAI

Discord](https://discord.gg/zBGx3azzUn), and post them in `#release-discussion`.

Please read the existing *Pythia* documentation before asking about it in the

EleutherAI Discord. For general correspondence: [contact@eleuther.

ai](mailto:contact@eleuther.ai).

<figure>

| Pythia model | Non-Embedding Params | Layers | Model Dim | Heads | Batch Size | Learning Rate | Equivalent Models |

| -----------: | -------------------: | :----: | :-------: | :---: | :--------: | :-------------------: | :--------------------: |

| 70M | 18,915,328 | 6 | 512 | 8 | 2M | 1.0 x 10<sup>-3</sup> | — |

| 160M | 85,056,000 | 12 | 768 | 12 | 4M | 6.0 x 10<sup>-4</sup> | GPT-Neo 125M, OPT-125M |

| 410M | 302,311,424 | 24 | 1024 | 16 | 4M | 3.0 x 10<sup>-4</sup> | OPT-350M |

| 1.0B | 805,736,448 | 16 | 2048 | 8 | 2M | 3.0 x 10<sup>-4</sup> | — |

| 1.4B | 1,208,602,624 | 24 | 2048 | 16 | 4M | 2.0 x 10<sup>-4</sup> | GPT-Neo 1.3B, OPT-1.3B |

| 2.8B | 2,517,652,480 | 32 | 2560 | 32 | 2M | 1.6 x 10<sup>-4</sup> | GPT-Neo 2.7B, OPT-2.7B |

| 6.9B | 6,444,163,072 | 32 | 4096 | 32 | 2M | 1.2 x 10<sup>-4</sup> | OPT-6.7B |

| 12B | 11,327,027,200 | 36 | 5120 | 40 | 2M | 1.2 x 10<sup>-4</sup> | — |

<figcaption>Engineering details for the <i>Pythia Suite</i>. Deduped and

non-deduped models of a given size have the same hyperparameters. “Equivalent”

models have <b>exactly</b> the same architecture, and the same number of

non-embedding parameters.</figcaption>

</figure>

### Uses and Limitations

#### Intended Use

The primary intended use of Pythia is research on the behavior, functionality,

and limitations of large language models. This suite is intended to provide

a controlled setting for performing scientific experiments. To enable the

study of how language models change over the course of training, we provide

143 evenly spaced intermediate checkpoints per model. These checkpoints are

hosted on Hugging Face as branches. Note that branch `143000` corresponds

exactly to the model checkpoint on the `main` branch of each model.

You may also further fine-tune and adapt Pythia-1B for deployment,

as long as your use is in accordance with the Apache 2.0 license. Pythia

models work with the Hugging Face [Transformers

Library](https://huggingface.co/docs/transformers/index). If you decide to use

pre-trained Pythia-1B as a basis for your fine-tuned model, please

conduct your own risk and bias assessment.

#### Out-of-scope use

The Pythia Suite is **not** intended for deployment. It is not a in itself

a product and cannot be used for human-facing interactions.

Pythia models are English-language only, and are not suitable for translation

or generating text in other languages.

Pythia-1B has not been fine-tuned for downstream contexts in which

language models are commonly deployed, such as writing genre prose,

or commercial chatbots. This means Pythia-1B will **not**

respond to a given prompt the way a product like ChatGPT does. This is because,

unlike this model, ChatGPT was fine-tuned using methods such as Reinforcement

Learning from Human Feedback (RLHF) to better “understand” human instructions.

#### Limitations and biases

The core functionality of a large language model is to take a string of text

and predict the next token. The token deemed statistically most likely by the

model need not produce the most “accurate” text. Never rely on

Pythia-1B to produce factually accurate output.

This model was trained on [the Pile](https://pile.eleuther.ai/), a dataset

known to contain profanity and texts that are lewd or otherwise offensive.

See [Section 6 of the Pile paper](https://arxiv.org/abs/2101.00027) for a

discussion of documented biases with regards to gender, religion, and race.

Pythia-1B may produce socially unacceptable or undesirable text, *even if*

the prompt itself does not include anything explicitly offensive.

If you plan on using text generated through, for example, the Hosted Inference

API, we recommend having a human curate the outputs of this language model

before presenting it to other people. Please inform your audience that the

text was generated by Pythia-1B.

### Quickstart

Pythia models can be loaded and used via the following code, demonstrated here

for the third `pythia-70m-deduped` checkpoint:

```python

from transformers import GPTNeoXForCausalLM, AutoTokenizer

model = GPTNeoXForCausalLM.from_pretrained(

"EleutherAI/pythia-70m-deduped",

revision="step3000",

cache_dir="./pythia-70m-deduped/step3000",

)

tokenizer = AutoTokenizer.from_pretrained(

"EleutherAI/pythia-70m-deduped",

revision="step3000",

cache_dir="./pythia-70m-deduped/step3000",

)

inputs = tokenizer("Hello, I am", return_tensors="pt")

tokens = model.generate(**inputs)

tokenizer.decode(tokens[0])

```

Revision/branch `step143000` corresponds exactly to the model checkpoint on

the `main` branch of each model.

For more information on how to use all Pythia models, see [documentation on

GitHub](https://github.com/EleutherAI/pythia).

### Training

#### Training data

[The Pile](https://pile.eleuther.ai/) is a 825GiB general-purpose dataset in

English. It was created by EleutherAI specifically for training large language

models. It contains texts from 22 diverse sources, roughly broken down into

five categories: academic writing (e.g. arXiv), internet (e.g. CommonCrawl),

prose (e.g. Project Gutenberg), dialogue (e.g. YouTube subtitles), and

miscellaneous (e.g. GitHub, Enron Emails). See [the Pile

paper](https://arxiv.org/abs/2101.00027) for a breakdown of all data sources,

methodology, and a discussion of ethical implications. Consult [the

datasheet](https://arxiv.org/abs/2201.07311) for more detailed documentation

about the Pile and its component datasets. The Pile can be downloaded from

the [official website](https://pile.eleuther.ai/), or from a [community

mirror](https://the-eye.eu/public/AI/pile/).

The Pile was **not** deduplicated before being used to train Pythia-1B.

#### Training procedure

Pythia uses the same tokenizer as [GPT-NeoX-

20B](https://huggingface.co/EleutherAI/gpt-neox-20b).

All models were trained on the exact same data, in the exact same order. Each

model saw 299,892,736,000 tokens during training, and 143 checkpoints for each

model are saved every 2,097,152,000 tokens, spaced evenly throughout training.

This corresponds to training for just under 1 epoch on the Pile for

non-deduplicated models, and about 1.5 epochs on the deduplicated Pile.

All *Pythia* models trained for the equivalent of 143000 steps at a batch size

of 2,097,152 tokens. Two batch sizes were used: 2M and 4M. Models with a batch

size of 4M tokens listed were originally trained for 71500 steps instead, with

checkpoints every 500 steps. The checkpoints on Hugging Face are renamed for

consistency with all 2M batch models, so `step1000` is the first checkpoint

for `pythia-1.4b` that was saved (corresponding to step 500 in training), and

`step1000` is likewise the first `pythia-6.9b` checkpoint that was saved

(corresponding to 1000 “actual” steps).

See [GitHub](https://github.com/EleutherAI/pythia) for more details on training

procedure, including [how to reproduce

it](https://github.com/EleutherAI/pythia/blob/main/README.md#reproducing-training).

### Evaluations

All 16 *Pythia* models were evaluated using the [LM Evaluation

Harness](https://github.com/EleutherAI/lm-evaluation-harness). You can access

the results by model and step at `results/json/*` in the [GitHub

repository](https://github.com/EleutherAI/pythia/tree/main/results/json).

February 2023 note: select evaluations and comparison with OPT and BLOOM

models will be added here at a later date.

### Naming convention and parameter count

*Pythia* models were renamed in January 2023. It is possible that the old

naming convention still persists in some documentation by accident. The

current naming convention (70M, 160M, etc.) is based on total parameter count.

<figure style="width:32em">

| current Pythia suffix | old suffix | total params | non-embedding params |

| --------------------: | ---------: | -------------: | -------------------: |

| 70M | 19M | 70,426,624 | 18,915,328 |

| 160M | 125M | 162,322,944 | 85,056,000 |

| 410M | 350M | 405,334,016 | 302,311,424 |

| 1B | 800M | 1,011,781,632 | 805,736,448 |

| 1.4B | 1.3B | 1,414,647,808 | 1,208,602,624 |

| 2.8B | 2.7B | 2,775,208,960 | 2,517,652,480 |

| 6.9B | 6.7B | 6,857,302,016 | 6,444,163,072 |

| 12B | 13B | 11,846,072,320 | 11,327,027,200 |

</figure>

|

b7fa2eb99d59ee6a3ecec8cb473eedda

|

sd-concepts-library/scratch-project

|

sd-concepts-library

| null | 16 | 0 | null | 0 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 1,906 | false |

### Scratch project on Stable Diffusion

This is the `<scratch-project>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

9025fb3f45049e252c9eb387ce2468cd

|

Haakf/allsides_left_text_padded_overfit

|

Haakf

|

distilbert

| 8 | 4 |

transformers

| 0 |

fill-mask

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 2,467 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Haakf/allsides_left_text_padded_overfit

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 1.9591

- Validation Loss: 1.9856

- Epoch: 19

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -712, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 2.0625 | 1.8988 | 0 |

| 2.0063 | 1.9757 | 1 |

| 2.0061 | 1.9345 | 2 |

| 1.9730 | 1.9248 | 3 |

| 1.9572 | 1.8433 | 4 |

| 1.9645 | 1.9104 | 5 |

| 1.9584 | 1.9017 | 6 |

| 1.9508 | 1.9430 | 7 |

| 1.9716 | 1.9498 | 8 |

| 1.9613 | 1.9312 | 9 |

| 1.9625 | 1.8820 | 10 |

| 1.9573 | 1.8768 | 11 |

| 1.9612 | 1.8837 | 12 |

| 1.9501 | 1.9325 | 13 |

| 1.9471 | 1.9231 | 14 |

| 1.9567 | 1.8987 | 15 |

| 1.9605 | 1.9159 | 16 |

| 1.9661 | 1.9157 | 17 |

| 1.9513 | 1.8840 | 18 |

| 1.9591 | 1.9856 | 19 |

### Framework versions

- Transformers 4.24.0

- TensorFlow 2.9.2

- Datasets 2.7.1

- Tokenizers 0.13.2

|

c5dc687e358f92e689cc89c35172278c

|

research-backup/bart-base-squadshifts-vanilla-reddit-qg

|

research-backup

|

bart

| 15 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

cc-by-4.0

|

['en']

|

['lmqg/qg_squadshifts']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['question generation']

| true | true | true | 4,148 | false |

# Model Card of `research-backup/bart-base-squadshifts-vanilla-reddit-qg`

This model is fine-tuned version of [facebook/bart-base](https://huggingface.co/facebook/bart-base) for question generation task on the [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) (dataset_name: reddit) via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [facebook/bart-base](https://huggingface.co/facebook/bart-base)

- **Language:** en

- **Training data:** [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) (reddit)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="en", model="research-backup/bart-base-squadshifts-vanilla-reddit-qg")

# model prediction

questions = model.generate_q(list_context="William Turner was an English painter who specialised in watercolour landscapes", list_answer="William Turner")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "research-backup/bart-base-squadshifts-vanilla-reddit-qg")

output = pipe("<hl> Beyonce <hl> further expanded her acting career, starring as blues singer Etta James in the 2008 musical biopic, Cadillac Records.")

```

## Evaluation

- ***Metric (Question Generation)***: [raw metric file](https://huggingface.co/research-backup/bart-base-squadshifts-vanilla-reddit-qg/raw/main/eval/metric.first.sentence.paragraph_answer.question.lmqg_qg_squadshifts.reddit.json)

| | Score | Type | Dataset |

|:-----------|--------:|:-------|:---------------------------------------------------------------------------|

| BERTScore | 91.89 | reddit | [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) |

| Bleu_1 | 25.35 | reddit | [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) |

| Bleu_2 | 16.53 | reddit | [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) |

| Bleu_3 | 10.97 | reddit | [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) |

| Bleu_4 | 7.52 | reddit | [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) |

| METEOR | 21.32 | reddit | [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) |

| MoverScore | 61.44 | reddit | [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) |

| ROUGE_L | 24.67 | reddit | [lmqg/qg_squadshifts](https://huggingface.co/datasets/lmqg/qg_squadshifts) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_squadshifts

- dataset_name: reddit

- input_types: ['paragraph_answer']

- output_types: ['question']

- prefix_types: None

- model: facebook/bart-base

- max_length: 512

- max_length_output: 32

- epoch: 6

- batch: 8

- lr: 5e-05

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 8

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/research-backup/bart-base-squadshifts-vanilla-reddit-qg/raw/main/trainer_config.json).

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

cf2ff92a65d8a73fd4c4cf79025e7034

|

Helsinki-NLP/opus-mt-pl-sv

|

Helsinki-NLP

|

marian

| 10 | 16 |

transformers

| 0 |

translation

| true | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['translation']

| false | true | true | 770 | false |

### opus-mt-pl-sv

* source languages: pl

* target languages: sv

* OPUS readme: [pl-sv](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/pl-sv/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-24.zip](https://object.pouta.csc.fi/OPUS-MT-models/pl-sv/opus-2020-01-24.zip)

* test set translations: [opus-2020-01-24.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/pl-sv/opus-2020-01-24.test.txt)

* test set scores: [opus-2020-01-24.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/pl-sv/opus-2020-01-24.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.pl.sv | 58.9 | 0.717 |

|

ea05d5b8cdb8ae18c9fd6b1fe33dd38e

|

JTH/results

|

JTH

|

distilbert

| 10 | 1 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 921 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# results

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

fbaa51094a310b8bf0ee81d405720cc4

|

natedog/my_awesome_billsum_model

|

natedog

|

t5

| 14 | 0 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null |

['billsum']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,203 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_awesome_billsum_model

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the billsum dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|:-------:|

| No log | 1.0 | 62 | 3.5089 | 0.1247 | 0.0333 | 0.1056 | 0.1055 | 19.0 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1

- Datasets 2.9.0

- Tokenizers 0.13.2

|

e20d75e29c267137e8b973c401b3629c

|

openmmlab/upernet-swin-base

|

openmmlab

|

upernet

| 5 | 29 |

transformers

| 0 |

image-segmentation

| true | false | false |

mit

|

['en']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['vision', 'image-segmentation']

| false | true | true | 1,520 | false |

# UperNet, Swin Transformer base-sized backbone

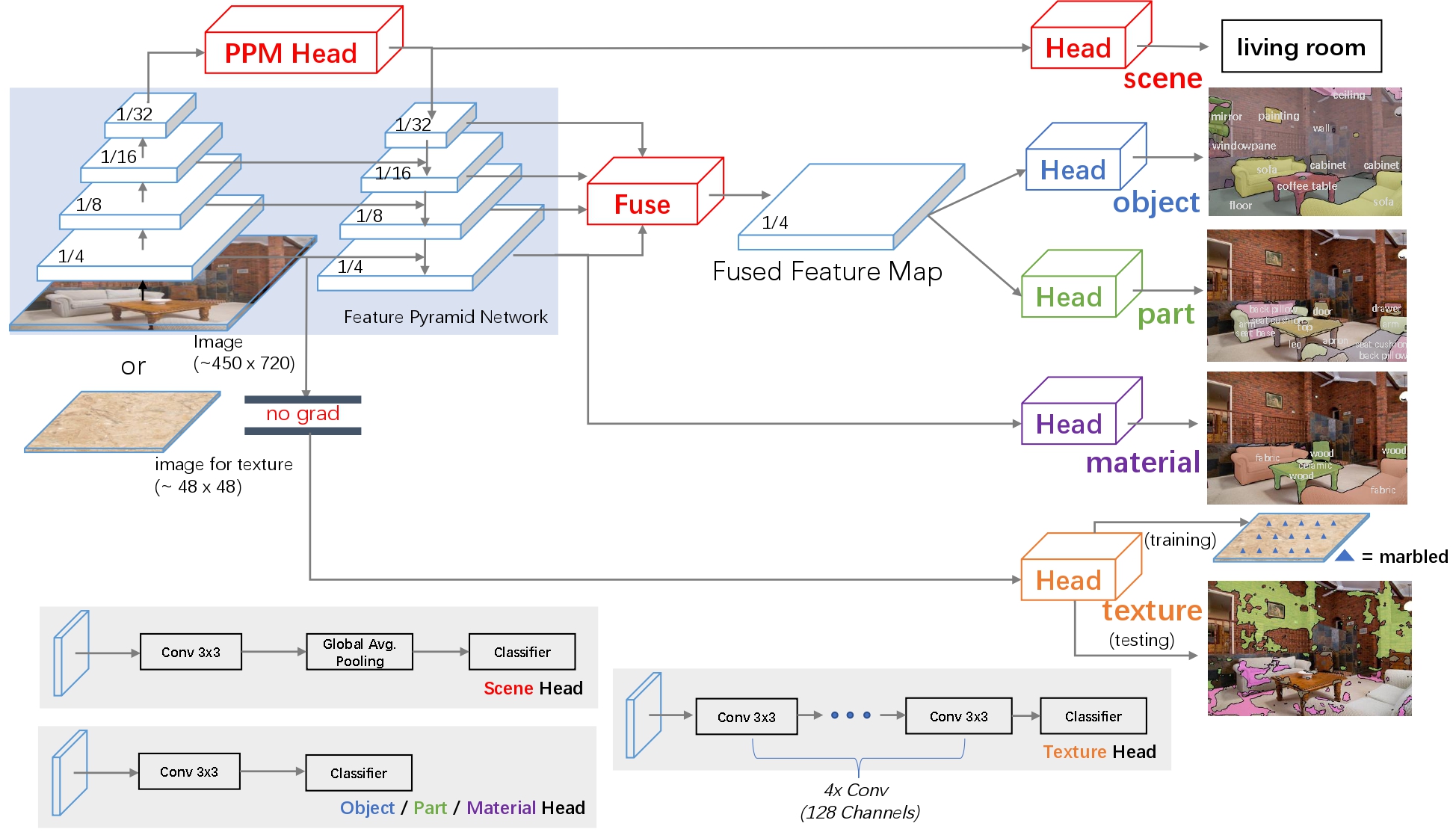

UperNet framework for semantic segmentation, leveraging a Swin Transformer backbone. UperNet was introduced in the paper [Unified Perceptual Parsing for Scene Understanding](https://arxiv.org/abs/1807.10221) by Xiao et al.

Combining UperNet with a Swin Transformer backbone was introduced in the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030).

Disclaimer: The team releasing UperNet + Swin Transformer did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

UperNet is a framework for semantic segmentation. It consists of several components, including a backbone, a Feature Pyramid Network (FPN) and a Pyramid Pooling Module (PPM).

Any visual backbone can be plugged into the UperNet framework. The framework predicts a semantic label per pixel.

## Intended uses & limitations

You can use the raw model for semantic segmentation. See the [model hub](https://huggingface.co/models?search=openmmlab/upernet) to look for

fine-tuned versions (with various backbones) on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/upernet#transformers.UperNetForSemanticSegmentation).

|

493ffea0e4665b131757555da3d51ff8

|

sd-concepts-library/im-poppy

|

sd-concepts-library

| null | 21 | 0 | null | 3 | null | false | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

[]

| false | true | true | 2,236 | false |

### im-poppy on Stable Diffusion

This is the `im-poppy` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

3766baf7adcbf928086c3536e59c92f5

|

kasrahabib/200-500-bucket-finetunned

|

kasrahabib

|

bert

| 10 | 5 |

transformers

| 0 |

text-classification

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,724 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# kasrahabib/200-500-bucket-finetunned

This model is a fine-tuned version of [sentence-transformers/all-MiniLM-L6-v2](https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0280

- Validation Loss: 0.3784

- Epoch: 9

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 3110, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 1.0739 | 0.6559 | 0 |

| 0.4665 | 0.4309 | 1 |

| 0.2473 | 0.3669 | 2 |

| 0.1437 | 0.3746 | 3 |

| 0.0825 | 0.3663 | 4 |

| 0.0592 | 0.3649 | 5 |

| 0.0451 | 0.3523 | 6 |

| 0.0345 | 0.3710 | 7 |

| 0.0292 | 0.3705 | 8 |

| 0.0280 | 0.3784 | 9 |

### Framework versions

- Transformers 4.26.0

- TensorFlow 2.9.2

- Datasets 2.9.0

- Tokenizers 0.13.2

|

95a84dfa0e0943f27c2ec257247e0cf8

|

WALIDALI/cynthiasly

|

WALIDALI

| null | 18 | 2 |

diffusers

| 0 |

text-to-image

| false | false | false |

creativeml-openrail-m

| null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 |

['text-to-image', 'stable-diffusion']

| false | true | true | 420 | false |

### cynthiasly Dreambooth model trained by WALIDALI with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

4ec1640cbe26bc35afa3293468688c5d

|

frahman/distilbert-base-uncased-distilled-clinc

|

frahman

|

distilbert

| 10 | 3 |

transformers

| 0 |

text-classification

| true | false | false |

apache-2.0

| null |

['clinc_oos']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,793 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-distilled-clinc

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the clinc_oos dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1002

- Accuracy: 0.9406

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.9039 | 1.0 | 318 | 0.5777 | 0.7335 |

| 0.4486 | 2.0 | 636 | 0.2860 | 0.8768 |

| 0.2528 | 3.0 | 954 | 0.1792 | 0.9210 |

| 0.176 | 4.0 | 1272 | 0.1398 | 0.9274 |

| 0.1417 | 5.0 | 1590 | 0.1209 | 0.9329 |

| 0.1245 | 6.0 | 1908 | 0.1110 | 0.94 |

| 0.1135 | 7.0 | 2226 | 0.1061 | 0.9390 |

| 0.1074 | 8.0 | 2544 | 0.1026 | 0.94 |

| 0.1032 | 9.0 | 2862 | 0.1006 | 0.9410 |

| 0.1017 | 10.0 | 3180 | 0.1002 | 0.9406 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

8dc285a1cbb7c9119222736c974e13dd

|

meongracun/nmt-mpst-id-en-lr_1e-05-ep_20-seq_128_bs-32

|

meongracun

|

t5

| 9 | 1 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,548 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# nmt-mpst-id-en-lr_1e-05-ep_20-seq_128_bs-32

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 2.7787

- Bleu: 0.0338

- Meteor: 0.1312

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Meteor |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|

| No log | 1.0 | 202 | 3.1965 | 0.0132 | 0.0696 |

| No log | 2.0 | 404 | 3.0644 | 0.0224 | 0.0975 |

| 3.5509 | 3.0 | 606 | 2.9995 | 0.0255 | 0.1075 |

| 3.5509 | 4.0 | 808 | 2.9538 | 0.0269 | 0.1106 |

| 3.2374 | 5.0 | 1010 | 2.9221 | 0.0277 | 0.1134 |

| 3.2374 | 6.0 | 1212 | 2.8996 | 0.0286 | 0.1165 |

| 3.2374 | 7.0 | 1414 | 2.8750 | 0.0291 | 0.1177 |

| 3.143 | 8.0 | 1616 | 2.8611 | 0.0297 | 0.1197 |

| 3.143 | 9.0 | 1818 | 2.8466 | 0.0303 | 0.1209 |

| 3.092 | 10.0 | 2020 | 2.8330 | 0.0312 | 0.1229 |

| 3.092 | 11.0 | 2222 | 2.8234 | 0.0318 | 0.1247 |

| 3.092 | 12.0 | 2424 | 2.8130 | 0.0322 | 0.1264 |

| 3.0511 | 13.0 | 2626 | 2.8058 | 0.0323 | 0.1269 |

| 3.0511 | 14.0 | 2828 | 2.7970 | 0.0324 | 0.1272 |

| 3.0288 | 15.0 | 3030 | 2.7914 | 0.033 | 0.1288 |

| 3.0288 | 16.0 | 3232 | 2.7877 | 0.0331 | 0.1299 |

| 3.0288 | 17.0 | 3434 | 2.7837 | 0.0333 | 0.1302 |

| 3.0133 | 18.0 | 3636 | 2.7809 | 0.0336 | 0.1308 |

| 3.0133 | 19.0 | 3838 | 2.7792 | 0.0337 | 0.131 |

| 3.0028 | 20.0 | 4040 | 2.7787 | 0.0338 | 0.1312 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

9ab48c64bbbd301c596a81dc18e59809

|

schorndorfer/distilroberta-base-finetuned-wikitext2

|

schorndorfer

|

roberta

| 9 | 4 |

transformers

| 0 |

fill-mask

| true | false | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,267 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilroberta-base-finetuned-wikitext2

This model is a fine-tuned version of [distilroberta-base](https://huggingface.co/distilroberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8347

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.0853 | 1.0 | 2406 | 1.9214 |

| 1.986 | 2.0 | 4812 | 1.8799 |

| 1.9568 | 3.0 | 7218 | 1.8202 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

|

7643a16fe5243196a3125d2e7ebf8158

|

IDEA-CCNL/Randeng-T5-Char-57M-Chinese

|

IDEA-CCNL

|

mt5

| 8 | 15 |

transformers

| 0 |

text2text-generation

| true | false | false |

apache-2.0

|

['zh']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['T5', 'chinese', 'sentencepiece']

| false | true | true | 2,724 | false |

# Randeng-T5-Char-57M-Chinese

- Github: [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM)

- Docs: [Fengshenbang-Docs](https://fengshenbang-doc.readthedocs.io/)

## 简介 Brief Introduction

善于处理NLT任务,中文版的T5-small,采用了BertTokenizer和中文字级别词典。

Good at handling NLT tasks, Chinese T5-small, use BertTokenizer and chinese vocab.

## 模型分类 Model Taxonomy

| 需求 Demand | 任务 Task | 系列 Series | 模型 Model | 参数 Parameter | 额外 Extra |

| :----: | :----: | :----: | :----: | :----: | :----: |

| 通用 General | 自然语言转换 NLT | 燃灯 Randeng | T5 | 57M | 中文-Chinese |

## 模型信息 Model Information

对比T5-small,训练了它的中文版。为了更好适用于中文任务,我们仅使用BertTokenzier,和支持中英文的词表,并且使用了语料库自适应预训练(Corpus-Adaptive Pre-Training, CAPT)技术在悟道语料库(180G版本)继续预训练。预训练目标为破坏span。具体地,我们在预训练阶段中使用了[封神框架](https://github.com/IDEA-CCNL/Fengshenbang-LM/tree/main/fengshen)大概花费了8张A100约24小时。

Compared with T5-samll, we implement its Chinese version. In order to use for chinese tasks, we use BertTokenizer and Chinese vocabulary, and Corpus-Adaptive Pre-Training (CAPT) on the WuDao Corpora (180 GB version). The pretraining objective is span corruption. Specifically, we use the [fengshen framework](https://github.com/IDEA-CCNL/Fengshenbang-LM/tree/main/fengshen) in the pre-training phase which cost about 24 hours with 8 A100 GPUs.

## 使用 Usage

```python

from transformers import T5ForConditionalGeneration, BertTokenizer

import torch

tokenizer=BertTokenizer.from_pretrained('IDEA-CCNL/Randeng-T5-Char-57M-Chinese', add_special_tokens=False)

model=T5ForConditionalGeneration.from_pretrained('IDEA-CCNL/Randeng-T5-Char-57M-Chinese')

```

## 引用 Citation

如果您在您的工作中使用了我们的模型,可以引用我们的[论文](https://arxiv.org/abs/2209.02970):

If you are using the resource for your work, please cite the our [paper](https://arxiv.org/abs/2209.02970):

```text

@article{fengshenbang,

author = {Junjie Wang and Yuxiang Zhang and Lin Zhang and Ping Yang and Xinyu Gao and Ziwei Wu and Xiaoqun Dong and Junqing He and Jianheng Zhuo and Qi Yang and Yongfeng Huang and Xiayu Li and Yanghan Wu and Junyu Lu and Xinyu Zhu and Weifeng Chen and Ting Han and Kunhao Pan and Rui Wang and Hao Wang and Xiaojun Wu and Zhongshen Zeng and Chongpei Chen and Ruyi Gan and Jiaxing Zhang},

title = {Fengshenbang 1.0: Being the Foundation of Chinese Cognitive Intelligence},

journal = {CoRR},

volume = {abs/2209.02970},

year = {2022}

}

```

也可以引用我们的[网站](https://github.com/IDEA-CCNL/Fengshenbang-LM/):

You can also cite our [website](https://github.com/IDEA-CCNL/Fengshenbang-LM/):

```text

@misc{Fengshenbang-LM,

title={Fengshenbang-LM},

author={IDEA-CCNL},

year={2021},

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

}

```

|

66f925954581b239a7d667e4a6372853

|

jo0hnd0e/mt5-small-finetuned-amazon-en-es

|

jo0hnd0e

|

mt5

| 8 | 1 |

transformers

| 0 |

text2text-generation

| false | true | false |

apache-2.0

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_keras_callback']

| true | true | true | 1,649 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# jo0hnd0e/mt5-small-finetuned-amazon-en-es

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 3.9844

- Validation Loss: 3.3610

- Epoch: 7

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5.6e-05, 'decay_steps': 9672, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 9.6302 | 4.2399 | 0 |

| 5.7657 | 3.7191 | 1 |

| 4.9972 | 3.5931 | 2 |

| 4.6081 | 3.5038 | 3 |

| 4.3425 | 3.4322 | 4 |

| 4.1758 | 3.3950 | 5 |

| 4.0512 | 3.3649 | 6 |

| 3.9844 | 3.3610 | 7 |

### Framework versions

- Transformers 4.19.2

- TensorFlow 2.8.0

- Datasets 2.2.2

- Tokenizers 0.12.1

|

07bedf8f59fc110d64dbabbd8228df8e

|

TalTechNLP/voxlingua107-epaca-tdnn

|

TalTechNLP

| null | 8 | 24,084 |

speechbrain

| 20 |

audio-classification

| true | false | false |

apache-2.0

|

['multilingual']

|

['VoxLingua107']

| null | 2 | 2 | 0 | 0 | 1 | 1 | 0 |

['audio-classification', 'speechbrain', 'embeddings', 'Language', 'Identification', 'pytorch', 'ECAPA-TDNN', 'TDNN', 'VoxLingua107']

| false | true | true | 5,487 | false |

# VoxLingua107 ECAPA-TDNN Spoken Language Identification Model

## Model description

This is a spoken language recognition model trained on the VoxLingua107 dataset using SpeechBrain.

The model uses the ECAPA-TDNN architecture that has previously been used for speaker recognition.

The model can classify a speech utterance according to the language spoken.

It covers 107 different languages (

Abkhazian,

Afrikaans,

Amharic,

Arabic,

Assamese,

Azerbaijani,

Bashkir,

Belarusian,

Bulgarian,

Bengali,

Tibetan,

Breton,

Bosnian,

Catalan,

Cebuano,

Czech,

Welsh,

Danish,

German,

Greek,

English,

Esperanto,

Spanish,

Estonian,

Basque,

Persian,

Finnish,

Faroese,

French,

Galician,

Guarani,

Gujarati,

Manx,

Hausa,

Hawaiian,

Hindi,

Croatian,

Haitian,

Hungarian,

Armenian,

Interlingua,

Indonesian,

Icelandic,

Italian,

Hebrew,

Japanese,

Javanese,

Georgian,

Kazakh,

Central Khmer,

Kannada,

Korean,

Latin,

Luxembourgish,

Lingala,

Lao,

Lithuanian,

Latvian,

Malagasy,

Maori,

Macedonian,

Malayalam,

Mongolian,

Marathi,

Malay,

Maltese,

Burmese,

Nepali,

Dutch,

Norwegian Nynorsk,

Norwegian,

Occitan,

Panjabi,

Polish,

Pushto,

Portuguese,

Romanian,

Russian,

Sanskrit,

Scots,

Sindhi,

Sinhala,

Slovak,

Slovenian,

Shona,

Somali,

Albanian,

Serbian,

Sundanese,

Swedish,

Swahili,

Tamil,

Telugu,

Tajik,

Thai,

Turkmen,

Tagalog,

Turkish,

Tatar,

Ukrainian,

Urdu,

Uzbek,

Vietnamese,

Waray,

Yiddish,

Yoruba,

Mandarin Chinese).

## Intended uses & limitations

The model has two uses:

- use 'as is' for spoken language recognition

- use as an utterance-level feature (embedding) extractor, for creating a dedicated language ID model on your own data

The model is trained on automatically collected YouTube data. For more

information about the dataset, see [here](http://bark.phon.ioc.ee/voxlingua107/).

#### How to use

```python

import torchaudio

from speechbrain.pretrained import EncoderClassifier

language_id = EncoderClassifier.from_hparams(source="TalTechNLP/voxlingua107-epaca-tdnn", savedir="tmp")

# Download Thai language sample from Omniglot and cvert to suitable form

signal = language_id.load_audio("https://omniglot.com/soundfiles/udhr/udhr_th.mp3")

prediction = language_id.classify_batch(signal)

print(prediction)

(tensor([[0.3210, 0.3751, 0.3680, 0.3939, 0.4026, 0.3644, 0.3689, 0.3597, 0.3508,

0.3666, 0.3895, 0.3978, 0.3848, 0.3957, 0.3949, 0.3586, 0.4360, 0.3997,

0.4106, 0.3886, 0.4177, 0.3870, 0.3764, 0.3763, 0.3672, 0.4000, 0.4256,

0.4091, 0.3563, 0.3695, 0.3320, 0.3838, 0.3850, 0.3867, 0.3878, 0.3944,

0.3924, 0.4063, 0.3803, 0.3830, 0.2996, 0.4187, 0.3976, 0.3651, 0.3950,

0.3744, 0.4295, 0.3807, 0.3613, 0.4710, 0.3530, 0.4156, 0.3651, 0.3777,

0.3813, 0.6063, 0.3708, 0.3886, 0.3766, 0.4023, 0.3785, 0.3612, 0.4193,

0.3720, 0.4406, 0.3243, 0.3866, 0.3866, 0.4104, 0.4294, 0.4175, 0.3364,

0.3595, 0.3443, 0.3565, 0.3776, 0.3985, 0.3778, 0.2382, 0.4115, 0.4017,

0.4070, 0.3266, 0.3648, 0.3888, 0.3907, 0.3755, 0.3631, 0.4460, 0.3464,

0.3898, 0.3661, 0.3883, 0.3772, 0.9289, 0.3687, 0.4298, 0.4211, 0.3838,

0.3521, 0.3515, 0.3465, 0.4772, 0.4043, 0.3844, 0.3973, 0.4343]]), tensor([0.9289]), tensor([94]), ['th'])

# The scores in the prediction[0] tensor can be interpreted as cosine scores between

# the languages and the given utterance (i.e., the larger the better)

# The identified language ISO code is given in prediction[3]

print(prediction[3])

['th']

# Alternatively, use the utterance embedding extractor:

emb = language_id.encode_batch(signal)

print(emb.shape)

torch.Size([1, 1, 256])

```

#### Limitations and bias

Since the model is trained on VoxLingua107, it has many limitations and biases, some of which are:

- Probably it's accuracy on smaller languages is quite limited

- Probably it works worse on female speech than male speech (because YouTube data includes much more male speech)

- Based on subjective experiments, it doesn't work well on speech with a foreign accent

- Probably it doesn't work well on children's speech and on persons with speech disorders

## Training data

The model is trained on [VoxLingua107](http://bark.phon.ioc.ee/voxlingua107/).

VoxLingua107 is a speech dataset for training spoken language identification models.

The dataset consists of short speech segments automatically extracted from YouTube videos and labeled according the language of the video title and description, with some post-processing steps to filter out false positives.

VoxLingua107 contains data for 107 languages. The total amount of speech in the training set is 6628 hours.

The average amount of data per language is 62 hours. However, the real amount per language varies a lot. There is also a seperate development set containing 1609 speech segments from 33 languages, validated by at least two volunteers to really contain the given language.

## Training procedure

We used [SpeechBrain](https://github.com/speechbrain/speechbrain) to train the model.

Training recipe will be published soon.

## Evaluation results

Error rate: 7% on the development dataset

### BibTeX entry and citation info

```bibtex

@inproceedings{valk2021slt,

title={{VoxLingua107}: a Dataset for Spoken Language Recognition},

author={J{\"o}rgen Valk and Tanel Alum{\"a}e},

booktitle={Proc. IEEE SLT Workshop},

year={2021},

}

```

|

97fcf0587724b1a6bdf6a728f1d109bc

|

polejowska/swin-tiny-patch4-window7-224-eurosat

|

polejowska

|

swin

| 18 | 13 |

transformers

| 0 |

image-classification

| true | false | false |

apache-2.0

| null |

['imagefolder']

| null | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,606 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-eurosat

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0447

- Accuracy: 0.9852

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.1547 | 0.99 | 147 | 0.0956 | 0.9711 |

| 0.0707 | 1.99 | 294 | 0.0759 | 0.9733 |

| 0.0537 | 2.99 | 441 | 0.0680 | 0.9768 |

| 0.0302 | 3.99 | 588 | 0.0447 | 0.9852 |

| 0.0225 | 4.99 | 735 | 0.0489 | 0.9837 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

|

ea9fed40410a5ed9153800c580640cfc

|

Anjoe/german-poetry-gpt2-large

|

Anjoe

|

gpt2

| 15 | 170 |

transformers

| 0 |

text-generation

| true | false | false |

mit

| null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 1,179 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# german-poetry-gpt2-large

This model is a fine-tuned version of [benjamin/gerpt2-large](https://huggingface.co/benjamin/gerpt2-large) on German poems.

It achieves the following results on the evaluation set:

- eval_loss: 3.5753

- eval_runtime: 100.7173

- eval_samples_per_second: 51.6

- eval_steps_per_second: 25.805

- epoch: 4.0

- step: 95544

## Model description

large version of gpt-2

## Intended uses & limitations

It could be used for poetry generation

## Training and evaluation data

The model was trained on german poems from projekt Gutenberg

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 6

### Framework versions

- Transformers 4.19.4

- Pytorch 1.11.0+cu113

- Datasets 2.3.0

- Tokenizers 0.12.1

|

fec6841219380c04f06fbc69fa7f3f28

|

mideind/IceBERT-xlmr-ic3

|

mideind

|

roberta

| 6 | 418 |

transformers

| 0 |

fill-mask

| true | false | false |

agpl-3.0

|

['is']

| null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['roberta', 'icelandic', 'masked-lm', 'pytorch']

| false | true | true | 1,604 | false |

# IceBERT-xlmr-ic3

This model was trained with fairseq using the RoBERTa-base architecture. The model `xlm-roberta-base` was used as a starting point. It is one of many models we have trained for Icelandic, see the paper referenced below for further details. The training data used is shown in the table below.

| Dataset | Size | Tokens |

|------------------------------------------------------|---------|--------|

| Icelandic Common Crawl Corpus (IC3) | 4.9 GB | 824M |

## Citation

The model is described in this paper [https://arxiv.org/abs/2201.05601](https://arxiv.org/abs/2201.05601). Please cite the paper if you make use of the model.

```

@article{DBLP:journals/corr/abs-2201-05601,

author = {V{\'{e}}steinn Sn{\ae}bjarnarson and

Haukur Barri S{\'{\i}}monarson and

P{\'{e}}tur Orri Ragnarsson and

Svanhv{\'{\i}}t Lilja Ing{\'{o}}lfsd{\'{o}}ttir and

Haukur P{\'{a}}ll J{\'{o}}nsson and

Vilhj{\'{a}}lmur {\TH}orsteinsson and

Hafsteinn Einarsson},

title = {A Warm Start and a Clean Crawled Corpus - {A} Recipe for Good Language

Models},

journal = {CoRR},

volume = {abs/2201.05601},

year = {2022},

url = {https://arxiv.org/abs/2201.05601},

eprinttype = {arXiv},

eprint = {2201.05601},

timestamp = {Thu, 20 Jan 2022 14:21:35 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-2201-05601.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

b10133e36a22903eabb2491180928fd1

|

KoichiYasuoka/bert-large-japanese-upos

|

KoichiYasuoka

|

bert

| 9 | 11 |

transformers

| 2 |

token-classification

| true | false | false |

cc-by-sa-4.0

|

['ja']

|

['universal_dependencies']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['japanese', 'token-classification', 'pos', 'wikipedia', 'dependency-parsing']

| false | true | true | 1,120 | false |

# bert-large-japanese-upos

## Model Description

This is a BERT model pre-trained on Japanese Wikipedia texts for POS-tagging and dependency-parsing, derived from [bert-large-japanese-char-extended](https://huggingface.co/KoichiYasuoka/bert-large-japanese-char-extended). Every short-unit-word is tagged by [UPOS](https://universaldependencies.org/u/pos/) (Universal Part-Of-Speech).

## How to Use

```py

import torch

from transformers import AutoTokenizer,AutoModelForTokenClassification

tokenizer=AutoTokenizer.from_pretrained("KoichiYasuoka/bert-large-japanese-upos")

model=AutoModelForTokenClassification.from_pretrained("KoichiYasuoka/bert-large-japanese-upos")

s="国境の長いトンネルを抜けると雪国であった。"

p=[model.config.id2label[q] for q in torch.argmax(model(tokenizer.encode(s,return_tensors="pt"))["logits"],dim=2)[0].tolist()[1:-1]]

print(list(zip(s,p)))

```

or

```py

import esupar

nlp=esupar.load("KoichiYasuoka/bert-large-japanese-upos")

print(nlp("国境の長いトンネルを抜けると雪国であった。"))

```

## See Also

[esupar](https://github.com/KoichiYasuoka/esupar): Tokenizer POS-tagger and Dependency-parser with BERT/RoBERTa/DeBERTa models

|

1d7c5f0f065aaee61cb9351a6a1b3f01

|

42MARU/ko-ctc-kenlm-spelling-only-wiki

|

42MARU

| null | 10 | 0 |

kenlm

| 0 |

text2text-generation

| false | false | false |

apache-2.0

|

['ko']

|

['korean-wiki']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['audio', 'automatic-speech-recognition', 'text2text-generation']

| false | true | true | 2,218 | false |

# ko-ctc-kenlm-spelling-only-wiki

## Table of Contents

- [ko-ctc-kenlm-spelling-only-wiki](#ko-ctc-kenlm-spelling-only-wiki)

- [Table of Contents](#table-of-contents)

- [Model Details](#model-details)

- [How to Get Started With the Model](#how-to-get-started-with-the-model)

## Model Details

- **Model Description** <br />

- 음향 모델을 위한 N-gram Base의 LM으로 자소별 단어기반으로 만들어졌으며, KenLM으로 학습되었습니다. 해당 모델은 [ko-spelling-wav2vec2-conformer-del-1s](https://huggingface.co/42MARU/ko-spelling-wav2vec2-conformer-del-1s)과 사용하십시오. <br />

- HuggingFace Transformers Style로 불러와 사용할 수 있도록 처리했습니다. <br />

- pyctcdecode lib을 이용해서도 바로 사용가능합니다. <br />

- data는 wiki korean을 사용했습니다. <br />

spelling vocab data에 없는 문장은 전부 제거하여, 오히려 LM으로 Outlier가 발생할 소요를 최소화 시켰습니다. <br />

해당 모델은 **철자전사** 기준의 데이터로 학습된 모델입니다. (숫자와 영어는 각 표기법을 따름) <br />

- **Developed by:** TADev (@lIlBrother)

- **Language(s):** Korean

- **License:** apache-2.0

## How to Get Started With the Model

```python

import librosa

from pyctcdecode import build_ctcdecoder

from transformers import (

AutoConfig,

AutoFeatureExtractor,

AutoModelForCTC,

AutoTokenizer,

Wav2Vec2ProcessorWithLM,

)

from transformers.pipelines import AutomaticSpeechRecognitionPipeline

audio_path = ""

# 모델과 토크나이저, 예측을 위한 각 모듈들을 불러옵니다.

model = AutoModelForCTC.from_pretrained("42MARU/ko-spelling-wav2vec2-conformer-del-1s")

feature_extractor = AutoFeatureExtractor.from_pretrained("42MARU/ko-spelling-wav2vec2-conformer-del-1s")

tokenizer = AutoTokenizer.from_pretrained("42MARU/ko-spelling-wav2vec2-conformer-del-1s")

processor = Wav2Vec2ProcessorWithLM("42MARU/ko-ctc-kenlm-spelling-only-wiki")

# 실제 예측을 위한 파이프라인에 정의된 모듈들을 삽입.

asr_pipeline = AutomaticSpeechRecognitionPipeline(

model=model,

tokenizer=processor.tokenizer,

feature_extractor=processor.feature_extractor,

decoder=processor.decoder,

device=-1,

)

# 음성파일을 불러오고 beamsearch 파라미터를 특정하여 예측을 수행합니다.

raw_data, _ = librosa.load(audio_path, sr=16000)

kwargs = {"decoder_kwargs": {"beam_width": 100}}

pred = asr_pipeline(inputs=raw_data, **kwargs)["text"]

# 모델이 자소 분리 유니코드 텍스트로 나오므로, 일반 String으로 변환해줄 필요가 있습니다.

result = unicodedata.normalize("NFC", pred)

print(result)

# 안녕하세요 123 테스트입니다.

```

|

357153ea48981220b2834c23fd847186

|

rafiulrumy/wav2vec2-large-xlsr-53-demo-colab

|

rafiulrumy

|

wav2vec2

| 21 | 8 |

transformers

| 0 |

automatic-speech-recognition

| true | false | false |

apache-2.0

| null |

['common_voice']

| null | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

['generated_from_trainer']

| true | true | true | 2,657 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xlsr-53-demo-colab

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 6.7860

- Wer: 1.1067

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 1000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:------:|:----:|:---------------:|:------:|

| 8.2273 | 44.42 | 400 | 3.3544 | 1.0 |

| 0.9228 | 88.84 | 800 | 4.7054 | 1.1601 |

| 0.1423 | 133.32 | 1200 | 5.9489 | 1.1578 |

| 0.0751 | 177.74 | 1600 | 5.5939 | 1.1717 |

| 0.0554 | 222.21 | 2000 | 6.1230 | 1.1717 |

| 0.0356 | 266.63 | 2400 | 6.2845 | 1.1613 |

| 0.0288 | 311.11 | 2800 | 6.6109 | 1.2100 |

| 0.0223 | 355.53 | 3200 | 6.5605 | 1.1299 |

| 0.0197 | 399.95 | 3600 | 7.1242 | 1.1682 |

| 0.0171 | 444.42 | 4000 | 7.2452 | 1.1578 |

| 0.0149 | 488.84 | 4400 | 7.4048 | 1.0684 |

| 0.0118 | 533.32 | 4800 | 6.6227 | 1.1172 |

| 0.011 | 577.74 | 5200 | 6.7909 | 1.1566 |

| 0.0095 | 622.21 | 5600 | 6.8088 | 1.1102 |

| 0.0077 | 666.63 | 6000 | 7.4451 | 1.1311 |

| 0.0062 | 711.11 | 6400 | 6.8486 | 1.0777 |

| 0.0051 | 755.53 | 6800 | 6.8812 | 1.1241 |

| 0.0051 | 799.95 | 7200 | 6.9987 | 1.1450 |