| Column | Type | Range |
|---|---|---|
| repo_id | string | length 4 to 110 |
| author | string | length 2 to 27 ⌀ |
| model_type | string | length 2 to 29 ⌀ |
| files_per_repo | int64 | 2 to 15.4k |
| downloads_30d | int64 | 0 to 19.9M |
| library | string | length 2 to 37 ⌀ |
| likes | int64 | 0 to 4.34k |
| pipeline | string | length 5 to 30 ⌀ |
| pytorch | bool | 2 classes |
| tensorflow | bool | 2 classes |
| jax | bool | 2 classes |
| license | string | length 2 to 30 |
| languages | string | length 4 to 1.63k ⌀ |
| datasets | string | length 2 to 2.58k ⌀ |
| co2 | string (classes) | 29 values |
| prs_count | int64 | 0 to 125 |
| prs_open | int64 | 0 to 120 |
| prs_merged | int64 | 0 to 15 |
| prs_closed | int64 | 0 to 28 |
| discussions_count | int64 | 0 to 218 |
| discussions_open | int64 | 0 to 148 |
| discussions_closed | int64 | 0 to 70 |
| tags | string | length 2 to 513 |
| has_model_index | bool | 2 classes |
| has_metadata | bool | 1 class |
| has_text | bool | 1 class |
| text_length | int64 | 401 to 598k |
| is_nc | bool | 1 class |
| readme | string | length 0 to 598k |
| hash | string | length 32 to 32 |

| jonatasgrosman/exp_w2v2r_fr_xls-r_age_teens-2_sixties-8_s598 | jonatasgrosman | wav2vec2 | 10 | 0 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['fr'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'fr'] | false | true | true | 475 | false |

# exp_w2v2r_fr_xls-r_age_teens-2_sixties-8_s598

Fine-tuned [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) for speech recognition using the train split of [Common Voice 7.0 (fr)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16 kHz.

This model was fine-tuned with the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.
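Because the model expects 16 kHz input, audio recorded at another rate (44.1 kHz or 48 kHz is common) has to be resampled before transcription. The following is only a minimal, standard-library sketch of that step using linear interpolation. `resample_linear` is a hypothetical helper and is not part of HuggingSound or transformers. It applies no anti-aliasing filter, so for real recordings a dedicated resampler such as `librosa.resample` or `torchaudio.functional.resample` is the safer choice.

```python
from typing import List, Sequence

TARGET_RATE = 16_000  # sampling rate the model card asks for


def resample_linear(samples: Sequence[float], src_rate: int, dst_rate: int = TARGET_RATE) -> List[float]:
    """Resample a mono signal by linear interpolation (illustrative only: no low-pass filtering)."""
    if src_rate <= 0 or dst_rate <= 0:
        raise ValueError("sampling rates must be positive")
    if src_rate == dst_rate or not samples:
        return list(samples)
    n_out = round(len(samples) * dst_rate / src_rate)
    step = src_rate / dst_rate  # distance between output samples, measured in input samples
    out = []
    for i in range(n_out):
        pos = i * step
        j = int(pos)
        frac = pos - j
        if j + 1 < len(samples):
            # Weighted average of the two neighbouring input samples
            out.append(samples[j] * (1.0 - frac) + samples[j + 1] * frac)
        else:
            # Past the last pair of samples: hold the final value
            out.append(float(samples[-1]))
    return out


# One second of silence at 48 kHz becomes 16,000 samples at 16 kHz
print(len(resample_linear([0.0] * 48_000, src_rate=48_000)))
```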

| ba4deea330bbb081effbd88d617f9df5 |

| hirohiroz/wav2vec2-base-timit-demo-google-colab | hirohiroz | wav2vec2 | 12 | 7 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 2,998 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-google-colab

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5173

- Wer: 0.3399
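WER (word error rate) is the word-level edit distance between the reference transcript and the model output, divided by the number of reference words. Substitutions and deletions can never exceed the number of reference words, so a WER above 1 (such as the 1.0068 in the first row of the training results below) means the model was also inserting extra words. The sketch below computes a corpus-level WER by summing edits and reference words over all utterances. The card does not state how the reported figure was aggregated, so that aggregation is an assumption. In practice a library such as `jiwer` would normally be used.

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Minimum number of substitutions, deletions and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from an empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution, or a free match when r == h
            )
        prev = curr
    return prev[-1]


def word_error_rate(references: Sequence[str], hypotheses: Sequence[str]) -> float:
    """Corpus-level WER: total word edits divided by total reference words."""
    if len(references) != len(hypotheses):
        raise ValueError("need one hypothesis per reference")
    errors = sum(edit_distance(r.split(), h.split()) for r, h in zip(references, hypotheses))
    n_words = sum(len(r.split()) for r in references)
    return errors / n_words


# One substitution (sat -> sit) and one deletion (the) over 6 reference words
print(word_error_rate(["the cat sat on the mat"], ["the cat sit on mat"]))
```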

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:-----:|:---------------:|:------:|
| 3.5684 | 1.0 | 500 | 2.1662 | 1.0068 |
| 0.9143 | 2.01 | 1000 | 0.5820 | 0.5399 |
| 0.439 | 3.01 | 1500 | 0.4596 | 0.4586 |
| 0.3122 | 4.02 | 2000 | 0.4623 | 0.4181 |
| 0.2391 | 5.02 | 2500 | 0.4243 | 0.3938 |
| 0.1977 | 6.02 | 3000 | 0.4421 | 0.3964 |
| 0.1635 | 7.03 | 3500 | 0.5076 | 0.3977 |
| 0.145 | 8.03 | 4000 | 0.4639 | 0.3754 |
| 0.1315 | 9.04 | 4500 | 0.5181 | 0.3652 |
| 0.1131 | 10.04 | 5000 | 0.4496 | 0.3778 |
| 0.1005 | 11.04 | 5500 | 0.4438 | 0.3664 |
| 0.0919 | 12.05 | 6000 | 0.4868 | 0.3865 |
| 0.0934 | 13.05 | 6500 | 0.5163 | 0.3694 |
| 0.076 | 14.06 | 7000 | 0.4543 | 0.3719 |
| 0.0727 | 15.06 | 7500 | 0.5296 | 0.3807 |
| 0.0657 | 16.06 | 8000 | 0.4715 | 0.3699 |
| 0.0578 | 17.07 | 8500 | 0.4927 | 0.3699 |
| 0.057 | 18.07 | 9000 | 0.4767 | 0.3660 |
| 0.0493 | 19.08 | 9500 | 0.5306 | 0.3623 |
| 0.0425 | 20.08 | 10000 | 0.4828 | 0.3561 |
| 0.0431 | 21.08 | 10500 | 0.4875 | 0.3620 |
| 0.0366 | 22.09 | 11000 | 0.4984 | 0.3482 |
| 0.0332 | 23.09 | 11500 | 0.5375 | 0.3477 |
| 0.0348 | 24.1 | 12000 | 0.5406 | 0.3361 |
| 0.0301 | 25.1 | 12500 | 0.4954 | 0.3381 |
| 0.0294 | 26.1 | 13000 | 0.5033 | 0.3424 |
| 0.026 | 27.11 | 13500 | 0.5254 | 0.3384 |
| 0.0243 | 28.11 | 14000 | 0.5189 | 0.3402 |
| 0.0221 | 29.12 | 14500 | 0.5173 | 0.3399 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.12.1

| 542d95dd1e638bb6a8114dbe0a8dd4fd |

| juro95/xlm-roberta-finetuned-ner-cased_1_ratio | juro95 | xlm-roberta | 8 | 2 | transformers | 0 | token-classification | false | true | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 1,489 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# juro95/xlm-roberta-finetuned-ner-cased_1_ratio

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.0633

- Validation Loss: 0.0940

- Epoch: 3

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 14272, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16
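The `learning_rate` entry above is a Keras `PolynomialDecay` schedule. With `power` 1.0 and `cycle` False, it is a straight line from 2e-5 down to 0.0 over 14,272 optimizer steps, after which the rate stays at the end value. The plain-Python restatement of that formula below is a sketch for reading the config, not the TensorFlow implementation itself.

```python
def polynomial_decay(step: int, initial_lr: float = 2e-5, decay_steps: int = 14272,
                     end_lr: float = 0.0, power: float = 1.0) -> float:
    """Learning rate at `step`, following the PolynomialDecay formula with cycle=False."""
    step = min(step, decay_steps)  # without cycling, the rate is held at end_lr after decay_steps
    return (initial_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr


# With power=1.0 the rate halves at the midpoint and reaches 0.0 at decay_steps
for s in (0, 7136, 14272, 20000):
    print(s, polynomial_decay(s))
```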

### Training results

| Train Loss | Validation Loss | Epoch |
|:----------:|:---------------:|:-----:|
| 0.3166 | 0.1533 | 0 |
| 0.1376 | 0.1114 | 1 |
| 0.0909 | 0.0988 | 2 |
| 0.0633 | 0.0940 | 3 |

### Framework versions

- Transformers 4.25.1

- TensorFlow 2.6.5

- Datasets 2.3.2

- Tokenizers 0.13.2

| afb56fc19fbff6c2885bb89cf2627060 |

| doddle124578/wav2vec2-base-timit-demo-colab-3 | doddle124578 | wav2vec2 | 14 | 3 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,402 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab-3

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6622

- Wer: 0.5082

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 10

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 800

- num_epochs: 35

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 2.2195 | 8.77 | 500 | 0.9187 | 0.6635 |
| 0.5996 | 17.54 | 1000 | 0.6569 | 0.5347 |
| 0.2855 | 26.32 | 1500 | 0.6622 | 0.5082 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

| c79351f7c2ad4844f35281f2ae47ab4a |

| joaoluislins/wmt-ptt5-colab-base-finetuned-en-to-pt | joaoluislins | t5 | 12 | 5 | transformers | 0 | text2text-generation | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 2,688 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wmt-mbart50-large-finetuned-en-to-pt

This model is a fine-tuned version of [facebook/mbart-large-50](https://huggingface.co/facebook/mbart-large-50) on the WMT dataset (bilingual and back-translated monolingual data).

It achieves the following results on the evaluation set:

- Loss: 0.2510

- Bleu: 62.7011

- Gen Len: 19.224
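BLEU compares the n-grams (here up to 4-grams) of each generated translation against a reference. It takes the geometric mean of the clipped n-gram precisions, multiplies it by a brevity penalty for outputs shorter than the references (1 if the hypothesis length c exceeds the reference length r, else exp(1 − r/c)), and reports the result on a 0 to 100 scale. The card does not say which implementation produced 62.7011. Trainer-based translation scripts commonly use sacreBLEU, which applies its own tokenization. The minimal sketch below is therefore meant to illustrate the formula, not to reproduce that score. `corpus_bleu` is a hypothetical helper that uses whitespace tokens, one reference per sentence, and no smoothing.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(references: Sequence[str], hypotheses: Sequence[str], max_n: int = 4) -> float:
    """Corpus BLEU on a 0-100 scale: one reference per hypothesis, whitespace tokens, no smoothing."""
    if len(references) != len(hypotheses):
        raise ValueError("need one hypothesis per reference")
    matches = [0] * max_n  # clipped n-gram matches, per order n
    totals = [0] * max_n   # n-grams in the hypotheses, per order n
    ref_len = hyp_len = 0
    for ref, hyp in zip(references, hypotheses):
        r, h = ref.split(), hyp.split()
        ref_len += len(r)
        hyp_len += len(h)
        for n in range(1, max_n + 1):
            ref_counts = ngram_counts(r, n)
            # Clipping: an n-gram is credited at most as often as it occurs in the reference
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in ngram_counts(h, n).items())
            totals[n - 1] += max(0, len(h) - n + 1)
    if min(matches) == 0:
        return 0.0  # some n-gram order has no match, so the geometric mean is zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity_penalty * math.exp(log_precision)


print(corpus_bleu(["o gato está no tapete"], ["o gato está no tapete"]))
```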

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |
|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|
| 1.6426 | 1.0 | 433 | 0.5323 | 4.484 | 10.5635 |
| 0.2571 | 2.0 | 866 | 0.1965 | 47.6449 | 19.164 |
| 0.1043 | 3.0 | 1299 | 0.1723 | 53.6231 | 19.1455 |
| 0.058 | 4.0 | 1732 | 0.1908 | 52.9831 | 18.5543 |
| 0.0382 | 5.0 | 2165 | 0.1801 | 58.4418 | 19.0808 |
| 0.0244 | 6.0 | 2598 | 0.2014 | 56.0197 | 20.0485 |
| 0.0195 | 7.0 | 3031 | 0.2029 | 56.7903 | 18.642 |
| 0.0138 | 8.0 | 3464 | 0.2015 | 57.6855 | 19.0 |
| 0.0126 | 9.0 | 3897 | 0.2095 | 58.5733 | 18.7644 |
| 0.0095 | 10.0 | 4330 | 0.1946 | 60.3165 | 19.6097 |
| 0.0067 | 11.0 | 4763 | 0.2094 | 60.2691 | 18.9561 |
| 0.0055 | 12.0 | 5196 | 0.2202 | 60.375 | 19.3025 |
| 0.0046 | 13.0 | 5629 | 0.2153 | 60.7254 | 19.0855 |
| 0.0035 | 14.0 | 6062 | 0.2239 | 61.458 | 19.0647 |
| 0.0054 | 15.0 | 6495 | 0.2250 | 61.5297 | 19.164 |
| 0.0025 | 16.0 | 6928 | 0.2458 | 61.263 | 19.0531 |
| 0.002 | 17.0 | 7361 | 0.2354 | 62.4404 | 19.2102 |
| 0.0015 | 18.0 | 7794 | 0.2403 | 62.0235 | 19.1293 |
| 0.0011 | 19.0 | 8227 | 0.2477 | 62.6301 | 19.2494 |
| 0.0009 | 20.0 | 8660 | 0.2510 | 62.7011 | 19.224 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

| 441eace720226a280107694549207b32 |

| xezpeleta/whisper-small-eu-v2 | xezpeleta | whisper | 26 | 14 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['eu'] | ['mozilla-foundation/common_voice_11_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['whisper-event', 'generated_from_trainer'] | true | true | true | 1,564 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Basque

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Basque (`eu`) subset of the mozilla-foundation/common_voice_11_0 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3580

- Wer: 18.9337

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
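With `lr_scheduler_type: linear`, 500 warmup steps, and 5,000 training steps, the learning rate ramps linearly from 0 to 1e-5 over the first 500 steps. It then decays linearly back to 0 at step 5,000. The function below restates that shape in plain Python, mirroring the multiplier used by `transformers.get_linear_schedule_with_warmup`. It is a reading aid, not the Trainer's own code.

```python
def linear_warmup_lr(step: int, base_lr: float = 1e-5, warmup_steps: int = 500,
                     total_steps: int = 5000) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Warmup: ramp from 0 up to base_lr
        return base_lr * step / max(1, warmup_steps)
    # Decay: fall linearly from base_lr to 0 at total_steps, then stay at 0
    return base_lr * max(0, total_steps - step) / max(1, total_steps - warmup_steps)


for s in (0, 250, 500, 2750, 5000):
    print(s, linear_warmup_lr(s))
```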

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:----:|:---------------:|:-------:|
| 0.1372 | 2.04 | 1000 | 0.3166 | 22.2335 |
| 0.0175 | 4.07 | 2000 | 0.3356 | 19.9862 |
| 0.0055 | 7.02 | 3000 | 0.3580 | 18.9337 |
| 0.0015 | 9.06 | 4000 | 0.3803 | 18.9581 |
| 0.0013 | 12.01 | 5000 | 0.3908 | 18.9541 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.8.1.dev0

- Tokenizers 0.13.2

| 6b94877a39c93e4dde4e963ea7f938cf |

| shi-labs/oneformer_ade20k_swin_large | shi-labs | oneformer | 10 | 394 | transformers | 0 | image-segmentation | true | false | false | mit | null | ['scene_parse_150'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['vision', 'image-segmentation', 'universal-image-segmentation'] | false | true | true | 3,329 | false |

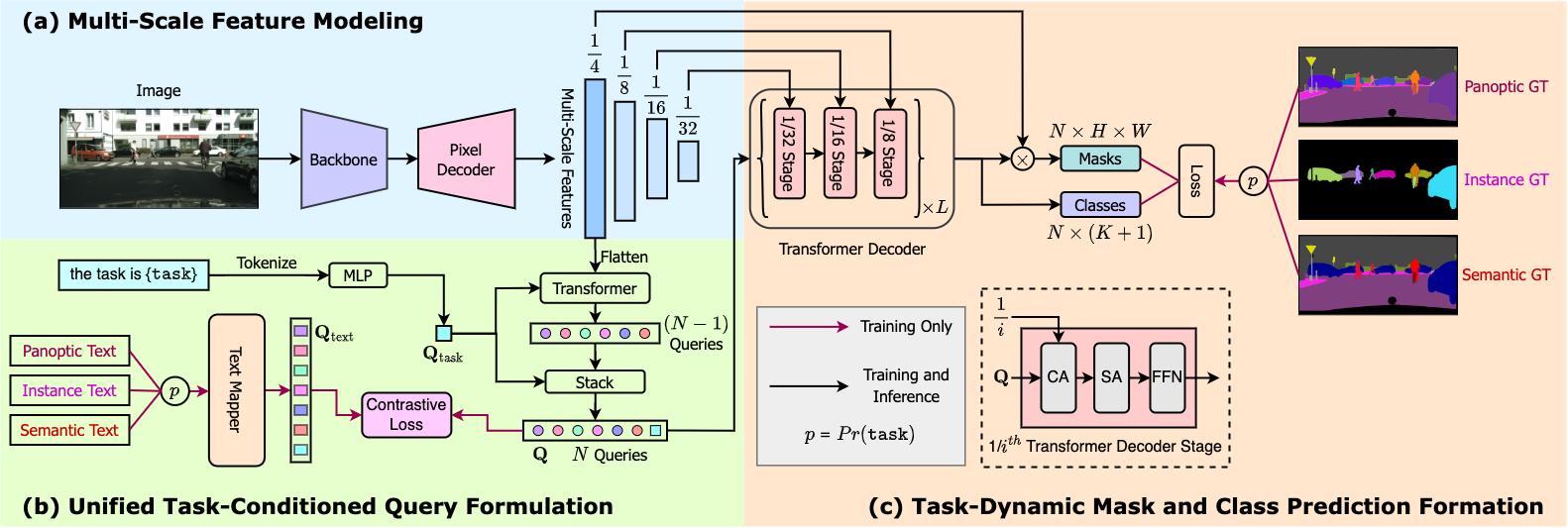

# OneFormer

OneFormer model trained on the ADE20k dataset (large-sized version, Swin backbone). It was introduced in the paper [OneFormer: One Transformer to Rule Universal Image Segmentation](https://arxiv.org/abs/2211.06220) by Jain et al. and first released in [this repository](https://github.com/SHI-Labs/OneFormer).

## Model description

OneFormer is the first multi-task universal image segmentation framework. It needs to be trained only once with a single universal architecture, a single model, and on a single dataset, to outperform existing specialized models across semantic, instance, and panoptic segmentation tasks. OneFormer uses a task token to condition the model on the task in focus, making the architecture task-guided for training, and task-dynamic for inference, all with a single model.

## Intended uses & limitations

You can use this particular checkpoint for semantic, instance, and panoptic segmentation. See the [model hub](https://huggingface.co/models?search=oneformer) for versions fine-tuned on other datasets.

### How to use

Here is how to use this model:

```python
import requests
import torch
from PIL import Image
from transformers import OneFormerForUniversalSegmentation, OneFormerProcessor

# Use /resolve/ (not /blob/) so the request returns the raw image rather than an HTML page
url = "https://huggingface.co/datasets/shi-labs/oneformer_demo/resolve/main/ade20k.jpeg"
image = Image.open(requests.get(url, stream=True).raw)

# Loading a single model for all three tasks
processor = OneFormerProcessor.from_pretrained("shi-labs/oneformer_ade20k_swin_large")
model = OneFormerForUniversalSegmentation.from_pretrained("shi-labs/oneformer_ade20k_swin_large")

# PIL reports (width, height); post-processing expects (height, width)
target_sizes = [image.size[::-1]]

# Semantic Segmentation
semantic_inputs = processor(images=image, task_inputs=["semantic"], return_tensors="pt")
with torch.no_grad():
    semantic_outputs = model(**semantic_inputs)
# pass through image_processor for postprocessing
predicted_semantic_map = processor.post_process_semantic_segmentation(semantic_outputs, target_sizes=target_sizes)[0]

# Instance Segmentation
instance_inputs = processor(images=image, task_inputs=["instance"], return_tensors="pt")
with torch.no_grad():
    instance_outputs = model(**instance_inputs)
# pass through image_processor for postprocessing
predicted_instance_map = processor.post_process_instance_segmentation(instance_outputs, target_sizes=target_sizes)[0]["segmentation"]

# Panoptic Segmentation
panoptic_inputs = processor(images=image, task_inputs=["panoptic"], return_tensors="pt")
with torch.no_grad():
    panoptic_outputs = model(**panoptic_inputs)
# pass through image_processor for postprocessing
predicted_panoptic_map = processor.post_process_panoptic_segmentation(panoptic_outputs, target_sizes=target_sizes)[0]["segmentation"]
```

For more examples, please refer to the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/oneformer).

### Citation

```bibtex
@article{jain2022oneformer,
  title={{OneFormer: One Transformer to Rule Universal Image Segmentation}},
  author={Jitesh Jain and Jiachen Li and MangTik Chiu and Ali Hassani and Nikita Orlov and Humphrey Shi},
  journal={arXiv},
  year={2022}
}
```

| a8177b7a18302f46119a2a33ecce841f |

| lmqg/mbart-large-cc25-ruquad-qg | lmqg | mbart | 20 | 63 | transformers | 0 | text2text-generation | true | false | false | cc-by-4.0 | ['ru'] | ['lmqg/qg_ruquad'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['question generation'] | true | true | true | 6,815 | false |

# Model Card of `lmqg/mbart-large-cc25-ruquad-qg`

This model is a fine-tuned version of [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25) for the question generation task on [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) (dataset_name: default), trained via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25)

- **Language:** ru

- **Training data:** [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

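```python
from lmqg import TransformersQG

# initialize model
model = TransformersQG(language="ru", model="lmqg/mbart-large-cc25-ruquad-qg")

# model prediction
# English gloss of the context: "It is worth noting that, developing this line of work, D. I. Mendeleev,
# having first put forward a priori the idea of a temperature at which the meniscus height would be zero,
# carried out a series of experiments in May 1860." The answer is "в мае 1860 года" ("in May 1860").
questions = model.generate_q(list_context="Нелишним будет отметить, что, развивая это направление, Д. И. Менделеев, поначалу априорно выдвинув идею о температуре, при которой высота мениска будет нулевой, в мае 1860 года провёл серию опытов.", list_answer="в мае 1860 года")
```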

- With `transformers`

```python
from transformers import pipeline

pipe = pipeline("text2text-generation", "lmqg/mbart-large-cc25-ruquad-qg")
# Same context as in the lmqg example, with the answer span wrapped in <hl> tokens
output = pipe("Нелишним будет отметить, что, развивая это направление, Д. И. Менделеев, поначалу априорно выдвинув идею о температуре, при которой высота мениска будет нулевой, <hl> в мае 1860 года <hl> провёл серию опытов.")
```
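The two usage snippets take the answer in different forms. `lmqg` receives the answer as a separate argument, while the raw `transformers` pipeline expects the answer span wrapped in `<hl>` tokens inside the paragraph. The sketch below builds the second form from the first. `highlight_answer` is a hypothetical helper, not part of lmqg or transformers. It assumes the answer occurs verbatim in the context and highlights only its first occurrence.

```python
def highlight_answer(context: str, answer: str, hl_token: str = "<hl>") -> str:
    """Wrap the first verbatim occurrence of `answer` in `context` with highlight tokens."""
    start = context.find(answer)
    if start == -1:
        raise ValueError("answer must appear verbatim in the context")
    end = start + len(answer)
    return f"{context[:start]}{hl_token} {answer} {hl_token}{context[end:]}"


context = "Менделеев провёл серию опытов в мае 1860 года."
print(highlight_answer(context, "в мае 1860 года"))
```

Applied to the context and answer from the `lmqg` example, this reproduces the exact string passed to `pipe` in the `transformers` example.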

## Evaluation

- ***Metric (Question Generation)***: [raw metric file](https://huggingface.co/lmqg/mbart-large-cc25-ruquad-qg/raw/main/eval/metric.first.sentence.paragraph_answer.question.lmqg_qg_ruquad.default.json)

| | Score | Type | Dataset |
|:-----------|--------:|:--------|:-----------------------------------------------------------------|
| BERTScore | 87.18 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| Bleu_1 | 35.25 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| Bleu_2 | 28.1 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| Bleu_3 | 22.87 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| Bleu_4 | 18.8 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| METEOR | 29.3 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| MoverScore | 65.88 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| ROUGE_L | 34.18 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

- ***Metric (Question & Answer Generation, Reference Answer)***: Each question is generated from *the gold answer*. [raw metric file](https://huggingface.co/lmqg/mbart-large-cc25-ruquad-qg/raw/main/eval/metric.first.answer.paragraph.questions_answers.lmqg_qg_ruquad.default.json)

| | Score | Type | Dataset |
|:--------------------------------|--------:|:--------|:-----------------------------------------------------------------|
| QAAlignedF1Score (BERTScore) | 92.08 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedF1Score (MoverScore) | 71.45 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedPrecision (BERTScore) | 92.09 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedPrecision (MoverScore) | 71.46 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedRecall (BERTScore) | 92.08 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedRecall (MoverScore) | 71.45 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

- ***Metric (Question & Answer Generation, Pipeline Approach)***: Each question is generated from the answer predicted by [`lmqg/mbart-large-cc25-ruquad-ae`](https://huggingface.co/lmqg/mbart-large-cc25-ruquad-ae). [raw metric file](https://huggingface.co/lmqg/mbart-large-cc25-ruquad-qg/raw/main/eval_pipeline/metric.first.answer.paragraph.questions_answers.lmqg_qg_ruquad.default.lmqg_mbart-large-cc25-ruquad-ae.json)

| | Score | Type | Dataset |
|:--------------------------------|--------:|:--------|:-----------------------------------------------------------------|
| QAAlignedF1Score (BERTScore) | 79.14 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedF1Score (MoverScore) | 56.25 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedPrecision (BERTScore) | 75.88 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedPrecision (MoverScore) | 54.01 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedRecall (BERTScore) | 82.85 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |
| QAAlignedRecall (MoverScore) | 58.93 | default | [lmqg/qg_ruquad](https://huggingface.co/datasets/lmqg/qg_ruquad) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_ruquad

- dataset_name: default

- input_types: ['paragraph_answer']

- output_types: ['question']

- prefix_types: None

- model: facebook/mbart-large-cc25

- max_length: 512

- max_length_output: 32

- epoch: 17

- batch: 4

- lr: 0.0001

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 16

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/lmqg/mbart-large-cc25-ruquad-qg/raw/main/trainer_config.json).
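`label_smoothing: 0.15` replaces the one-hot target with a mixture. 85% of the probability mass stays on the reference token, and 15% is spread uniformly over all K classes. For a single token with gold class y, the loss is therefore (1 − ε)·(−log p_y) + ε·(−(1/K)·Σ_k log p_k). A minimal per-token sketch follows. `label_smoothed_nll` is a hypothetical name, and the card does not say exactly which variant lmqg implements (some implementations, for example, exclude the gold token when spreading the smoothing mass).

```python
import math
from typing import Sequence


def label_smoothed_nll(log_probs: Sequence[float], target: int, epsilon: float = 0.15) -> float:
    """Cross-entropy between model log-probabilities and a label-smoothed target.

    The smoothed target puts (1 - epsilon) on `target` plus epsilon / K on each of the K classes.
    """
    k = len(log_probs)
    nll = -log_probs[target]       # usual negative log-likelihood of the reference token
    uniform = -sum(log_probs) / k  # cross-entropy against a uniform target
    return (1 - epsilon) * nll + epsilon * uniform


probs = [0.7, 0.2, 0.1]
log_probs = [math.log(p) for p in probs]
print(label_smoothed_nll(log_probs, target=0))               # smoothed loss
print(label_smoothed_nll(log_probs, target=0, epsilon=0.0))  # plain cross-entropy, -log(0.7)
```

Smoothing raises the loss for confident, correct predictions, which discourages the model from pushing reference-token probabilities all the way to 1.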

## Citation

```
@inproceedings{ushio-etal-2022-generative,
    title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",
    author = "Ushio, Asahi and
      Alva-Manchego, Fernando and
      Camacho-Collados, Jose",
    booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",
    month = dec,
    year = "2022",
    address = "Abu Dhabi, U.A.E.",
    publisher = "Association for Computational Linguistics",
}
```

| b2816cee53af91e0ce3e10c761a19c78 |

| steysie/bert-base-multilingual-cased-tuned-smartcat | steysie | bert | 6 | 4 | transformers | 0 | text-generation | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,297 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-multilingual-cased-tuned-smartcat

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0000

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:-----:|:---------------:|
| 0.0006 | 1.0 | 11586 | 0.0000 |
| 0.0003 | 2.0 | 23172 | 0.0000 |
| 0.0 | 3.0 | 34806 | 0.0000 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu102

- Datasets 2.1.0

- Tokenizers 0.12.1

| 35c2a2d7a1094087ff828a3ad151694a |

| chinhon/distilgpt2-sgnews | chinhon | gpt2 | 16 | 3 | transformers | 0 | text-generation | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,235 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilgpt2-sgnews

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 3.1516
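The card does not say what the reported loss measures. If it is the Trainer's usual mean per-token cross-entropy in nats, it converts to perplexity as exp(loss), so the final evaluation loss of 3.1516 would correspond to a perplexity of roughly 23.4. The small helper below applies this conversion to the three validation losses in the training results table.

```python
import math


def perplexity_from_loss(mean_cross_entropy: float) -> float:
    """Perplexity of a language model given its mean per-token cross-entropy (natural log)."""
    return math.exp(mean_cross_entropy)


# Validation losses after epochs 1, 2 and 3, copied from the training results table
for epoch, loss in enumerate((3.2316, 3.1683, 3.1516), start=1):
    print(f"epoch {epoch}: loss {loss:.4f} -> perplexity {perplexity_from_loss(loss):.1f}")
```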

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:-----:|:---------------:|
| 3.3558 | 1.0 | 23769 | 3.2316 |
| 3.2558 | 2.0 | 47538 | 3.1683 |
| 3.2321 | 3.0 | 71307 | 3.1516 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.9.0+cu111

- Datasets 1.14.0

- Tokenizers 0.10.3

| a88eaf255d9cb1e69f010113bfea6c03 |

| emilios/whisper-medium-el | emilios | whisper | 106 | 7 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['el'] | ['mozilla-foundation/common_voice_11_0', 'google/fleurs'] | null | 3 | 0 | 3 | 0 | 0 | 0 | 0 | ['hf-asr-leaderboard', 'whisper-medium', 'mozilla-foundation/common_voice_11_0', 'greek', 'whisper-event', 'generated_from_trainer', 'whisper-event'] | true | true | true | 1,225 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Medium El Greco

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the Common Voice 11.0 dataset.

It achieves the following results on the evaluation set:

- eval_loss: 0.4245

- eval_wer: 10.7448

- eval_runtime: 1107.1212

- eval_samples_per_second: 1.532

- eval_steps_per_second: 0.096

- epoch: 33.98

- step: 7000

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 7000

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

| a767e91f6fe50fa588640760792ce815 |

| nellchic/test | nellchic | null | 12 | 0 | null | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,319 | false |

### Note: made with Waifu Diffusion

<https://huggingface.co/hakurei/waifu-diffusion>

### hitokomoru-style

Artist: <https://www.pixiv.net/en/users/30837811>

This is the `<hitokomoru-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

| 35cfe0910c9d86237e52578ebcd505be |

| explosion/ko_udv25_koreankaist_trf | explosion | null | 28 | 3 | spacy | 0 | token-classification | false | false | false | cc-by-sa-4.0 | ['ko'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['spacy', 'token-classification'] | false | true | true | 61,725 | false |

UD v2.5 benchmarking pipeline for UD_Korean-Kaist

| Feature | Description |
| --- | --- |
| **Name** | `ko_udv25_koreankaist_trf` |
| **Version** | `0.0.1` |
| **spaCy** | `>=3.2.1,<3.3.0` |
| **Default Pipeline** | `experimental_char_ner_tokenizer`, `transformer`, `tagger`, `morphologizer`, `parser`, `experimental_edit_tree_lemmatizer` |
| **Components** | `experimental_char_ner_tokenizer`, `transformer`, `senter`, `tagger`, `morphologizer`, `parser`, `experimental_edit_tree_lemmatizer` |
| **Vectors** | 0 keys, 0 unique vectors (0 dimensions) |
| **Sources** | [Universal Dependencies v2.5](https://lindat.mff.cuni.cz/repository/xmlui/handle/11234/1-3105) (Zeman, Daniel; et al.) |
| **License** | `CC BY-SA 4.0` |
| **Author** | [Explosion](https://explosion.ai) |

### Label Scheme

<details>

<summary>View label scheme (5329 labels for 6 components)</summary>

| Component | Labels |
| --- | --- |
| **`experimental_char_ner_tokenizer`** | `TOKEN` |
| **`senter`** | `I`, `S` |
| **`tagger`** | `ecs`, `etm`, `f`, `f+f+jcj`, `f+f+jcs`, `f+f+jct`, `f+f+jxt`, `f+jca`, `f+jca+jp+ecc`, `f+jca+jp+ep+ef`, `f+jca+jxc`, `f+jca+jxc+jcm`, `f+jca+jxt`, `f+jcj`, `f+jcm`, `f+jco`, `f+jcs`, `f+jct`, `f+jct+jcm`, `f+jp+ef`, `f+jp+ep+ef`, `f+jp+etm`, `f+jxc`, `f+jxt`, `f+ncn`, `f+ncn+jcm`, `f+ncn+jcs`, `f+ncn+jp+ecc`, `f+ncn+jxt`, `f+ncpa+jcm`, `f+npp+jcs`, `f+nq`, `f+xsn`, `f+xsn+jco`, `f+xsn+jxt`, `ii`, `jca`, `jca+jcm`, `jca+jxc`, `jca+jxt`, `jcc`, `jcj`, `jcm`, `jco`, `jcr`, `jcr+jxc`, `jcs`, `jct`, `jct+jcm`, `jct+jxt`, `jp+ecc`, `jp+ecs`, `jp+ef`, `jp+ef+jcr`, `jp+ef+jcr+jxc`, `jp+ep+ecs`, `jp+ep+ef`, `jp+ep+etm`, `jp+ep+etn`, `jp+etm`, `jp+etn`, `jp+etn+jco`, `jp+etn+jxc`, `jxc`, `jxc+jca`, `jxc+jco`, `jxc+jcs`, `jxt`, `mad`, `mad+jxc`, `mad+jxt`, `mag`, `mag+jca`, `mag+jcm`, `mag+jcs`, `mag+jp+ef+jcr`, `mag+jxc`, `mag+jxc+jxc`, `mag+jxt`, `mag+xsn`, `maj`, `maj+jxc`, `maj+jxt`, `mma`, `mmd`, `nbn`, `nbn+jca`, `nbn+jca+jcj`, `nbn+jca+jcm`, `nbn+jca+jp+ef`, `nbn+jca+jxc`, `nbn+jca+jxt`, `nbn+jcc`, `nbn+jcj`, `nbn+jcm`, `nbn+jco`, `nbn+jcr`, `nbn+jcs`, `nbn+jct`, `nbn+jct+jcm`, `nbn+jct+jxt`, `nbn+jp+ecc`, `nbn+jp+ecs`, `nbn+jp+ecs+jca`, `nbn+jp+ecs+jcm`, `nbn+jp+ecs+jco`, `nbn+jp+ecs+jxc`, `nbn+jp+ecs+jxt`, `nbn+jp+ecx`, `nbn+jp+ef`, `nbn+jp+ef+jca`, `nbn+jp+ef+jco`, `nbn+jp+ef+jcr`, `nbn+jp+ef+jcr+jxc`, `nbn+jp+ef+jcr+jxt`, `nbn+jp+ef+jcs`, `nbn+jp+ef+jxc`, `nbn+jp+ef+jxc+jco`, `nbn+jp+ef+jxf`, `nbn+jp+ef+jxt`, `nbn+jp+ep+ecc`, `nbn+jp+ep+ecs`, `nbn+jp+ep+ecs+jxc`, `nbn+jp+ep+ef`, `nbn+jp+ep+ef+jcr`, `nbn+jp+ep+etm`, `nbn+jp+ep+etn`, `nbn+jp+ep+etn+jco`, `nbn+jp+ep+etn+jcs`, `nbn+jp+etm`, `nbn+jp+etn`, `nbn+jp+etn+jca`, `nbn+jp+etn+jca+jxt`, `nbn+jp+etn+jco`, `nbn+jp+etn+jcs`, `nbn+jp+etn+jxc`, `nbn+jp+etn+jxt`, `nbn+jxc`, `nbn+jxc+jca`, `nbn+jxc+jca+jxc`, `nbn+jxc+jca+jxt`, `nbn+jxc+jcc`, `nbn+jxc+jcm`, `nbn+jxc+jco`, `nbn+jxc+jcs`, `nbn+jxc+jp+ef`, `nbn+jxc+jxc`, `nbn+jxc+jxt`, `nbn+jxt`, `nbn+nbn`, `nbn+nbn+jp+ef`, `nbn+xsm+ecs`, `nbn+xsm+ef`, 
`nbn+xsm+ep+ef`, `nbn+xsm+ep+ef+jcr`, `nbn+xsm+etm`, `nbn+xsn`, `nbn+xsn+jca`, `nbn+xsn+jca+jp+ef+jcr`, `nbn+xsn+jca+jxc`, `nbn+xsn+jca+jxt`, `nbn+xsn+jcm`, `nbn+xsn+jco`, `nbn+xsn+jcs`, `nbn+xsn+jct`, `nbn+xsn+jp+ecc`, `nbn+xsn+jp+ecs`, `nbn+xsn+jp+ef`, `nbn+xsn+jp+ef+jcr`, `nbn+xsn+jp+ep+ef`, `nbn+xsn+jxc`, `nbn+xsn+jxt`, `nbn+xsv+etm`, `nbu`, `nbu+jca`, `nbu+jca+jxc`, `nbu+jca+jxt`, `nbu+jcc`, `nbu+jcc+jxc`, `nbu+jcj`, `nbu+jcm`, `nbu+jco`, `nbu+jcs`, `nbu+jct`, `nbu+jct+jxc`, `nbu+jp+ecc`, `nbu+jp+ecs`, `nbu+jp+ef`, `nbu+jp+ef+jcr`, `nbu+jp+ef+jxc`, `nbu+jp+ep+ecc`, `nbu+jp+ep+ecs`, `nbu+jp+ep+ef`, `nbu+jp+ep+ef+jcr`, `nbu+jp+ep+etm`, `nbu+jp+ep+etn+jco`, `nbu+jp+etm`, `nbu+jxc`, `nbu+jxc+jca`, `nbu+jxc+jcs`, `nbu+jxc+jp+ef`, `nbu+jxc+jp+ep+ef`, `nbu+jxc+jxt`, `nbu+jxt`, `nbu+ncn`, `nbu+ncn+jca`, `nbu+ncn+jcm`, `nbu+xsn`, `nbu+xsn+jca`, `nbu+xsn+jca+jxc`, `nbu+xsn+jca+jxt`, `nbu+xsn+jcm`, `nbu+xsn+jco`, `nbu+xsn+jcs`, `nbu+xsn+jp+ecs`, `nbu+xsn+jp+ep+ef`, `nbu+xsn+jxc`, `nbu+xsn+jxc+jxt`, `nbu+xsn+jxt`, `nbu+xsv+ecc`, `nbu+xsv+etm`, `ncn`, `ncn+f+ncpa+jco`, `ncn+jca`, `ncn+jca+jca`, `ncn+jca+jcc`, `ncn+jca+jcj`, `ncn+jca+jcm`, `ncn+jca+jcs`, `ncn+jca+jct`, `ncn+jca+jp+ecc`, `ncn+jca+jp+ecs`, `ncn+jca+jp+ef`, `ncn+jca+jp+ep+ef`, `ncn+jca+jp+etm`, `ncn+jca+jp+etn+jxt`, `ncn+jca+jxc`, `ncn+jca+jxc+jcc`, `ncn+jca+jxc+jcm`, `ncn+jca+jxc+jxc`, `ncn+jca+jxc+jxt`, `ncn+jca+jxt`, `ncn+jcc`, `ncn+jcc+jxc`, `ncn+jcj`, `ncn+jcj+jxt`, `ncn+jcm`, `ncn+jco`, `ncn+jcr`, `ncn+jcr+jxc`, `ncn+jcs`, `ncn+jcs+jxt`, `ncn+jct`, `ncn+jct+jcm`, `ncn+jct+jxc`, `ncn+jct+jxt`, `ncn+jcv`, `ncn+jp+ecc`, `ncn+jp+ecc+jct`, `ncn+jp+ecc+jxc`, `ncn+jp+ecs`, `ncn+jp+ecs+jcm`, `ncn+jp+ecs+jco`, `ncn+jp+ecs+jxc`, `ncn+jp+ecs+jxt`, `ncn+jp+ecx`, `ncn+jp+ef`, `ncn+jp+ef+jca`, `ncn+jp+ef+jcm`, `ncn+jp+ef+jco`, `ncn+jp+ef+jcr`, `ncn+jp+ef+jcr+jxc`, `ncn+jp+ef+jcr+jxt`, `ncn+jp+ef+jp+etm`, `ncn+jp+ef+jxc`, `ncn+jp+ef+jxf`, `ncn+jp+ef+jxt`, `ncn+jp+ep+ecc`, `ncn+jp+ep+ecs`, `ncn+jp+ep+ecs+jxc`, 
`ncn+jp+ep+ecx`, `ncn+jp+ep+ef`, `ncn+jp+ep+ef+jcr`, `ncn+jp+ep+ef+jcr+jxc`, `ncn+jp+ep+ef+jxc`, `ncn+jp+ep+ef+jxf`, `ncn+jp+ep+ef+jxt`, `ncn+jp+ep+ep+etm`, `ncn+jp+ep+etm`, `ncn+jp+ep+etn`, `ncn+jp+ep+etn+jca`, `ncn+jp+ep+etn+jca+jxc`, `ncn+jp+ep+etn+jco`, `ncn+jp+ep+etn+jcs`, `ncn+jp+ep+etn+jxt`, `ncn+jp+etm`, `ncn+jp+etn`, `ncn+jp+etn+jca`, `ncn+jp+etn+jca+jxc`, `ncn+jp+etn+jca+jxt`, `ncn+jp+etn+jco`, `ncn+jp+etn+jcs`, `ncn+jp+etn+jct`, `ncn+jp+etn+jxc`, `ncn+jp+etn+jxt`, `ncn+jxc`, `ncn+jxc+jca`, `ncn+jxc+jca+jxc`, `ncn+jxc+jca+jxt`, `ncn+jxc+jcc`, `ncn+jxc+jcm`, `ncn+jxc+jco`, `ncn+jxc+jcs`, `ncn+jxc+jct+jxt`, `ncn+jxc+jp+ef`, `ncn+jxc+jp+ef+jcr`, `ncn+jxc+jp+ep+ecs`, `ncn+jxc+jp+ep+ef`, `ncn+jxc+jp+etm`, `ncn+jxc+jxc`, `ncn+jxc+jxt`, `ncn+jxt`, `ncn+jxt+jcm`, `ncn+jxt+jxc`, `ncn+nbn`, `ncn+nbn+jca`, `ncn+nbn+jcm`, `ncn+nbn+jcs`, `ncn+nbn+jp+ecc`, `ncn+nbn+jp+ep+ef`, `ncn+nbn+jxc`, `ncn+nbn+jxt`, `ncn+nbu`, `ncn+nbu+jca`, `ncn+nbu+jcm`, `ncn+nbu+jco`, `ncn+nbu+jp+ef`, `ncn+nbu+jxc`, `ncn+nbu+ncn`, `ncn+ncn`, `ncn+ncn+jca`, `ncn+ncn+jca+jcc`, `ncn+ncn+jca+jcm`, `ncn+ncn+jca+jxc`, `ncn+ncn+jca+jxc+jcm`, `ncn+ncn+jca+jxc+jxc`, `ncn+ncn+jca+jxt`, `ncn+ncn+jcc`, `ncn+ncn+jcj`, `ncn+ncn+jcm`, `ncn+ncn+jco`, `ncn+ncn+jcr`, `ncn+ncn+jcs`, `ncn+ncn+jct`, `ncn+ncn+jct+jcm`, `ncn+ncn+jct+jxc`, `ncn+ncn+jct+jxt`, `ncn+ncn+jp+ecc`, `ncn+ncn+jp+ecs`, `ncn+ncn+jp+ef`, `ncn+ncn+jp+ef+jcm`, `ncn+ncn+jp+ef+jcr`, `ncn+ncn+jp+ef+jcs`, `ncn+ncn+jp+ep+ecc`, `ncn+ncn+jp+ep+ecs`, `ncn+ncn+jp+ep+ef`, `ncn+ncn+jp+ep+ef+jcr`, `ncn+ncn+jp+ep+ep+etm`, `ncn+ncn+jp+ep+etm`, `ncn+ncn+jp+ep+etn`, `ncn+ncn+jp+etm`, `ncn+ncn+jp+etn`, `ncn+ncn+jp+etn+jca`, `ncn+ncn+jp+etn+jco`, `ncn+ncn+jp+etn+jxc`, `ncn+ncn+jxc`, `ncn+ncn+jxc+jca`, `ncn+ncn+jxc+jcc`, `ncn+ncn+jxc+jcm`, `ncn+ncn+jxc+jco`, `ncn+ncn+jxc+jcs`, `ncn+ncn+jxc+jxc`, `ncn+ncn+jxt`, `ncn+ncn+nbn`, `ncn+ncn+ncn`, `ncn+ncn+ncn+jca`, `ncn+ncn+ncn+jca+jcm`, `ncn+ncn+ncn+jca+jxt`, `ncn+ncn+ncn+jcj`, `ncn+ncn+ncn+jcm`, `ncn+ncn+ncn+jco`, 
`ncn+ncn+ncn+jcs`, `ncn+ncn+ncn+jct+jxt`, `ncn+ncn+ncn+jp+etn+jxc`, `ncn+ncn+ncn+jxt`, `ncn+ncn+ncn+ncn+jca`, `ncn+ncn+ncn+ncn+jca+jxt`, `ncn+ncn+ncn+ncn+jco`, `ncn+ncn+ncn+xsn+jp+etm`, `ncn+ncn+ncpa`, `ncn+ncn+ncpa+jca`, `ncn+ncn+ncpa+jcm`, `ncn+ncn+ncpa+jco`, `ncn+ncn+ncpa+jcs`, `ncn+ncn+ncpa+jxc`, `ncn+ncn+ncpa+jxt`, `ncn+ncn+ncpa+ncn`, `ncn+ncn+ncpa+ncn+jca`, `ncn+ncn+ncpa+ncn+jcj`, `ncn+ncn+ncpa+ncn+jcm`, `ncn+ncn+ncpa+ncn+jxt`, `ncn+ncn+xsn`, `ncn+ncn+xsn+jca`, `ncn+ncn+xsn+jca+jxt`, `ncn+ncn+xsn+jcj`, `ncn+ncn+xsn+jcm`, `ncn+ncn+xsn+jco`, `ncn+ncn+xsn+jcs`, `ncn+ncn+xsn+jct`, `ncn+ncn+xsn+jp+ecs`, `ncn+ncn+xsn+jp+ep+ef`, `ncn+ncn+xsn+jp+etm`, `ncn+ncn+xsn+jxc`, `ncn+ncn+xsn+jxc+jcs`, `ncn+ncn+xsn+jxt`, `ncn+ncn+xsv+ecc`, `ncn+ncn+xsv+etm`, `ncn+ncpa`, `ncn+ncpa+jca`, `ncn+ncpa+jca+jcm`, `ncn+ncpa+jca+jxc`, `ncn+ncpa+jca+jxt`, `ncn+ncpa+jcc`, `ncn+ncpa+jcj`, `ncn+ncpa+jcm`, `ncn+ncpa+jco`, `ncn+ncpa+jcr`, `ncn+ncpa+jcs`, `ncn+ncpa+jct`, `ncn+ncpa+jct+jcm`, `ncn+ncpa+jct+jxt`, `ncn+ncpa+jp+ecc`, `ncn+ncpa+jp+ecc+jxc`, `ncn+ncpa+jp+ecs`, `ncn+ncpa+jp+ecs+jxc`, `ncn+ncpa+jp+ef`, `ncn+ncpa+jp+ef+jcr`, `ncn+ncpa+jp+ef+jcr+jxc`, `ncn+ncpa+jp+ep+ef`, `ncn+ncpa+jp+ep+etm`, `ncn+ncpa+jp+ep+etn`, `ncn+ncpa+jp+etm`, `ncn+ncpa+jxc`, `ncn+ncpa+jxc+jca+jxc`, `ncn+ncpa+jxc+jco`, `ncn+ncpa+jxc+jcs`, `ncn+ncpa+jxt`, `ncn+ncpa+nbn+jcs`, `ncn+ncpa+ncn`, `ncn+ncpa+ncn+jca`, `ncn+ncpa+ncn+jca+jcm`, `ncn+ncpa+ncn+jca+jxc`, `ncn+ncpa+ncn+jca+jxt`, `ncn+ncpa+ncn+jcj`, `ncn+ncpa+ncn+jcm`, `ncn+ncpa+ncn+jco`, `ncn+ncpa+ncn+jcs`, `ncn+ncpa+ncn+jct`, `ncn+ncpa+ncn+jct+jcm`, `ncn+ncpa+ncn+jp+ef+jcr`, `ncn+ncpa+ncn+jp+ep+etm`, `ncn+ncpa+ncn+jxc`, `ncn+ncpa+ncn+jxt`, `ncn+ncpa+ncn+xsn+jcm`, `ncn+ncpa+ncn+xsn+jxt`, `ncn+ncpa+ncpa`, `ncn+ncpa+ncpa+jca`, `ncn+ncpa+ncpa+jcj`, `ncn+ncpa+ncpa+jcm`, `ncn+ncpa+ncpa+jco`, `ncn+ncpa+ncpa+jcs`, `ncn+ncpa+ncpa+jp+ep+ef`, `ncn+ncpa+ncpa+jxt`, `ncn+ncpa+ncpa+ncn`, `ncn+ncpa+xsn`, `ncn+ncpa+xsn+jcm`, `ncn+ncpa+xsn+jco`, `ncn+ncpa+xsn+jcs`, 
`ncn+ncpa+xsn+jp+ecc`, `ncn+ncpa+xsn+jp+etm`, `ncn+ncpa+xsn+jxt`, `ncn+ncpa+xsv+ecc`, `ncn+ncpa+xsv+ecs`, `ncn+ncpa+xsv+ecx`, `ncn+ncpa+xsv+ecx+px+etm`, `ncn+ncpa+xsv+ef`, `ncn+ncpa+xsv+ef+jcm`, `ncn+ncpa+xsv+ef+jcr`, `ncn+ncpa+xsv+etm`, `ncn+ncpa+xsv+etn`, `ncn+ncpa+xsv+etn+jco`, `ncn+ncps`, `ncn+ncps+jca`, `ncn+ncps+jcm`, `ncn+ncps+jco`, `ncn+ncps+jcs`, `ncn+ncps+jp+ecs`, `ncn+ncps+jxt`, `ncn+ncps+ncn+jcs`, `ncn+ncps+ncpa+ncn`, `ncn+ncps+xsm+ef`, `ncn+ncps+xsm+etm`, `ncn+nnc`, `ncn+nnc+jcs`, `ncn+nnc+nnc`, `ncn+nno`, `ncn+nq`, `ncn+nq+jca`, `ncn+nq+jca+jxc`, `ncn+nq+jca+jxt`, `ncn+nq+jcm`, `ncn+nq+jcs`, `ncn+nq+jxt`, `ncn+nq+ncn+jcm`, `ncn+nq+ncn+xsn+jcs`, `ncn+nq+xsn+jxt`, `ncn+xsa`, `ncn+xsm+ecc`, `ncn+xsm+ecs`, `ncn+xsm+ecs+jxc`, `ncn+xsm+ecx`, `ncn+xsm+ecx+jcs`, `ncn+xsm+ecx+px+ep+etm`, `ncn+xsm+ef`, `ncn+xsm+ef+jcr`, `ncn+xsm+etm`, `ncn+xsm+etn+jcm`, `ncn+xsm+etn+jp+ef+jcr`, `ncn+xsn`, `ncn+xsn+jca`, `ncn+xsn+jca+jcj`, `ncn+xsn+jca+jxc`, `ncn+xsn+jca+jxc+jxc`, `ncn+xsn+jca+jxt`, `ncn+xsn+jcc`, `ncn+xsn+jcj`, `ncn+xsn+jcm`, `ncn+xsn+jco`, `ncn+xsn+jcs`, `ncn+xsn+jcs+jxt`, `ncn+xsn+jct`, `ncn+xsn+jct+jcm`, `ncn+xsn+jct+jxc`, `ncn+xsn+jct+jxt`, `ncn+xsn+jcv`, `ncn+xsn+jp+ecc`, `ncn+xsn+jp+ecc+jxc`, `ncn+xsn+jp+ecs`, `ncn+xsn+jp+ecs+jxc`, `ncn+xsn+jp+ecx`, `ncn+xsn+jp+ecx+jxt`, `ncn+xsn+jp+ef`, `ncn+xsn+jp+ef+jca`, `ncn+xsn+jp+ef+jcr`, `ncn+xsn+jp+ep+ecc`, `ncn+xsn+jp+ep+ecs`, `ncn+xsn+jp+ep+ef`, `ncn+xsn+jp+ep+ef+jcr`, `ncn+xsn+jp+ep+etm`, `ncn+xsn+jp+ep+etn`, `ncn+xsn+jp+etm`, `ncn+xsn+jp+etn`, `ncn+xsn+jp+etn+jca`, `ncn+xsn+jp+etn+jca+jxt`, `ncn+xsn+jp+etn+jxc`, `ncn+xsn+jp+etn+jxt`, `ncn+xsn+jxc`, `ncn+xsn+jxc+jcm`, `ncn+xsn+jxc+jco`, `ncn+xsn+jxc+jcs`, `ncn+xsn+jxc+jxc`, `ncn+xsn+jxt`, `ncn+xsn+ncn+jca`, `ncn+xsn+ncn+jca+jxt`, `ncn+xsn+ncn+jcs`, `ncn+xsn+ncpa+jca`, `ncn+xsn+xsn`, `ncn+xsn+xsn+jca`, `ncn+xsn+xsn+jcm`, `ncn+xsn+xsn+jp+ecs`, `ncn+xsn+xsn+jxc`, `ncn+xsn+xsn+jxc+jcc`, `ncn+xsn+xsn+jxc+jcs`, `ncn+xsn+xsv+ecc`, `ncn+xsn+xsv+etm`, 
`ncn+xsn+xsv+etn`, `ncn+xsv+ecc`, `ncn+xsv+ecs`, `ncn+xsv+ecx`, `ncn+xsv+ef`, `ncn+xsv+ep+ecs`, `ncn+xsv+ep+ef`, `ncn+xsv+ep+etm`, `ncn+xsv+etm`, `ncn+xsv+etn+jca`, `ncpa`, `ncpa+jca`, `ncpa+jca+jcm`, `ncpa+jca+jct`, `ncpa+jca+jp+ecs`, `ncpa+jca+jp+ef`, `ncpa+jca+jp+ep+ef`, `ncpa+jca+jxc`, `ncpa+jca+jxc+jcm`, `ncpa+jca+jxc+jxc`, `ncpa+jca+jxc+jxt`, `ncpa+jca+jxt`, `ncpa+jcc`, `ncpa+jcj`, `ncpa+jcm`, `ncpa+jco`, `ncpa+jcr`, `ncpa+jcs`, `ncpa+jct`, `ncpa+jct+jcm`, `ncpa+jct+jxc`, `ncpa+jct+jxt`, `ncpa+jp+ecc`, `ncpa+jp+ecs`, `ncpa+jp+ecs+jxc`, `ncpa+jp+ecx`, `ncpa+jp+ecx+jxc`, `ncpa+jp+ef`, `ncpa+jp+ef+jca`, `ncpa+jp+ef+jco`, `ncpa+jp+ef+jcr`, `ncpa+jp+ef+jxc`, `ncpa+jp+ef+jxf`, `ncpa+jp+ep+ecc`, `ncpa+jp+ep+ecs`, `ncpa+jp+ep+ef`, `ncpa+jp+ep+ef+jca`, `ncpa+jp+ep+ef+jcr`, `ncpa+jp+ep+ef+jxt`, `ncpa+jp+ep+etm`, `ncpa+jp+ep+etn+jca`, `ncpa+jp+ep+etn+jca+jxc`, `ncpa+jp+ep+etn+jcs`, `ncpa+jp+etm`, `ncpa+jp+etn`, `ncpa+jp+etn+jca`, `ncpa+jp+etn+jca+jxt`, `ncpa+jp+etn+jco`, `ncpa+jp+etn+jcs`, `ncpa+jp+etn+jxc`, `ncpa+jp+etn+jxt`, `ncpa+jxc`, `ncpa+jxc+jca`, `ncpa+jxc+jca+jxc`, `ncpa+jxc+jca+jxt`, `ncpa+jxc+jcc`, `ncpa+jxc+jcm`, `ncpa+jxc+jco`, `ncpa+jxc+jcs`, `ncpa+jxc+jxc`, `ncpa+jxt`, `ncpa+jxt+jxc`, `ncpa+jxt+jxt`, `ncpa+nbn+jca`, `ncpa+nbn+jct`, `ncpa+nbn+jp+ef`, `ncpa+nbn+jp+ep+ef`, `ncpa+nbn+jp+etm`, `ncpa+nbn+jxc+jcc`, `ncpa+nbu+jca`, `ncpa+ncn`, `ncpa+ncn+jca`, `ncpa+ncn+jca+jcm`, `ncpa+ncn+jca+jxc`, `ncpa+ncn+jca+jxc+jcm`, `ncpa+ncn+jca+jxt`, `ncpa+ncn+jcc`, `ncpa+ncn+jcj`, `ncpa+ncn+jcm`, `ncpa+ncn+jco`, `ncpa+ncn+jcr`, `ncpa+ncn+jcs`, `ncpa+ncn+jct`, `ncpa+ncn+jct+jcm`, `ncpa+ncn+jct+jxc`, `ncpa+ncn+jp+ecc`, `ncpa+ncn+jp+ecs`, `ncpa+ncn+jp+ef`, `ncpa+ncn+jp+ef+jcr`, `ncpa+ncn+jp+ef+jcr+jxc`, `ncpa+ncn+jp+ep+ef`, `ncpa+ncn+jp+ep+etm`, `ncpa+ncn+jp+etm`, `ncpa+ncn+jp+etn+jca+jxt`, `ncpa+ncn+jp+etn+jco`, `ncpa+ncn+jp+etn+jxc`, `ncpa+ncn+jxc`, `ncpa+ncn+jxc+jcc`, `ncpa+ncn+jxc+jco`, `ncpa+ncn+jxt`, `ncpa+ncn+nbn`, `ncpa+ncn+ncn`, `ncpa+ncn+ncn+jca`, 
`ncpa+ncn+ncn+jca+jxt`, `ncpa+ncn+ncn+jcm`, `ncpa+ncn+ncn+jco`, `ncpa+ncn+ncn+jcs`, `ncpa+ncn+ncn+jp+ep+ef`, `ncpa+ncn+ncn+jp+etm`, `ncpa+ncn+ncn+jxt`, `ncpa+ncn+ncn+ncn`, `ncpa+ncn+ncn+xsn+jxt`, `ncpa+ncn+ncpa`, `ncpa+ncn+ncpa+jca`, `ncpa+ncn+ncpa+jcj`, `ncpa+ncn+ncpa+jco`, `ncpa+ncn+ncpa+ncn`, `ncpa+ncn+ncpa+ncn+jco`, `ncpa+ncn+xsn`, `ncpa+ncn+xsn+jca`, `ncpa+ncn+xsn+jca+jxc`, `ncpa+ncn+xsn+jcj`, `ncpa+ncn+xsn+jcm`, `ncpa+ncn+xsn+jco`, `ncpa+ncn+xsn+jcs`, `ncpa+ncn+xsn+jct`, `ncpa+ncn+xsn+jp+ep+ef`, `ncpa+ncn+xsn+jp+etm`, `ncpa+ncn+xsn+jxt`, `ncpa+ncpa`, `ncpa+ncpa+jca`, `ncpa+ncpa+jca+jcm`, `ncpa+ncpa+jca+jxc`, `ncpa+ncpa+jca+jxt`, `ncpa+ncpa+jcj`, `ncpa+ncpa+jcm`, `ncpa+ncpa+jco`, `ncpa+ncpa+jcs`, `ncpa+ncpa+jct`, `ncpa+ncpa+jct+jxc`, `ncpa+ncpa+jct+jxt`, `ncpa+ncpa+jp+ecc`, `ncpa+ncpa+jp+ecs`, `ncpa+ncpa+jp+ecx`, `ncpa+ncpa+jp+ef`, `ncpa+ncpa+jp+ef+jca`, `ncpa+ncpa+jp+ef+jcr`, `ncpa+ncpa+jp+ef+jcr+jxc`, `ncpa+ncpa+jp+ep+ecs`, `ncpa+ncpa+jp+etm`, `ncpa+ncpa+jxc`, `ncpa+ncpa+jxt`, `ncpa+ncpa+ncn`, `ncpa+ncpa+ncn+jca`, `ncpa+ncpa+ncn+jcj`, `ncpa+ncpa+ncn+jcm`, `ncpa+ncpa+ncn+jco`, `ncpa+ncpa+ncn+jcs`, `ncpa+ncpa+ncn+jxt`, `ncpa+ncpa+ncpa+jcm`, `ncpa+ncpa+ncpa+jcs`, `ncpa+ncpa+ncpa+ncpa+jco`, `ncpa+ncpa+xsn`, `ncpa+ncpa+xsn+jca`, `ncpa+ncpa+xsn+jcj`, `ncpa+ncpa+xsn+jco`, `ncpa+ncpa+xsn+jcs`, `ncpa+ncpa+xsn+jxc`, `ncpa+ncpa+xsn+jxt`, `ncpa+ncpa+xsv+ecc`, `ncpa+ncpa+xsv+ecs`, `ncpa+ncpa+xsv+ef`, `ncpa+ncpa+xsv+ep+ef`, `ncpa+ncpa+xsv+ep+etm`, `ncpa+ncpa+xsv+etm`, `ncpa+ncpa+xsv+etn+jca`, `ncpa+ncps`, `ncpa+ncps+jca`, `ncpa+ncps+jcm`, `ncpa+ncps+jco`, `ncpa+ncps+jcs`, `ncpa+ncps+jxt`, `ncpa+ncps+xsm+etm`, `ncpa+nq+jca`, `ncpa+xsa`, `ncpa+xsn`, `ncpa+xsn+jca`, `ncpa+xsn+jca+jxc`, `ncpa+xsn+jca+jxt`, `ncpa+xsn+jcc`, `ncpa+xsn+jcj`, `ncpa+xsn+jcm`, `ncpa+xsn+jco`, `ncpa+xsn+jcs`, `ncpa+xsn+jct`, `ncpa+xsn+jp+ecc`, `ncpa+xsn+jp+ecs`, `ncpa+xsn+jp+ecs+jxc`, `ncpa+xsn+jp+ecx`, `ncpa+xsn+jp+ecx+jxt`, `ncpa+xsn+jp+ef`, `ncpa+xsn+jp+ef+jcr`, `ncpa+xsn+jp+ef+jxf`, 
`ncpa+xsn+jp+ep+ecc`, `ncpa+xsn+jp+ep+ef`, `ncpa+xsn+jp+ep+ef+jco`, `ncpa+xsn+jp+ep+ef+jcr`, `ncpa+xsn+jp+etm`, `ncpa+xsn+jp+etn`, `ncpa+xsn+jp+etn+jco`, `ncpa+xsn+jp+etn+jxc`, `ncpa+xsn+jxc`, `ncpa+xsn+jxt`, `ncpa+xsv+ecc`, `ncpa+xsv+ecc+jcm`, `ncpa+xsv+ecc+jxc`, `ncpa+xsv+ecc+jxt`, `ncpa+xsv+ecs`, `ncpa+xsv+ecs+jca`, `ncpa+xsv+ecs+jco`, `ncpa+xsv+ecs+jp+ef`, `ncpa+xsv+ecs+jxc`, `ncpa+xsv+ecs+jxc+jxt`, `ncpa+xsv+ecs+jxt`, `ncpa+xsv+ecx`, `ncpa+xsv+ecx+jco`, `ncpa+xsv+ecx+jxc`, `ncpa+xsv+ecx+jxt`, `ncpa+xsv+ecx+px+ecc`, `ncpa+xsv+ecx+px+ecs`, `ncpa+xsv+ecx+px+ecx`, `ncpa+xsv+ecx+px+ecx+jxc`, `ncpa+xsv+ecx+px+ecx+px+ecs`, `ncpa+xsv+ecx+px+ef`, `ncpa+xsv+ecx+px+ef+jcr`, `ncpa+xsv+ecx+px+ep+ecc`, `ncpa+xsv+ecx+px+ep+ecs`, `ncpa+xsv+ecx+px+ep+ef`, `ncpa+xsv+ecx+px+ep+ef+jcr`, `ncpa+xsv+ecx+px+ep+etm`, `ncpa+xsv+ecx+px+ep+etn+jca`, `ncpa+xsv+ecx+px+ep+etn+jco`, `ncpa+xsv+ecx+px+ep+etn+jxc`, `ncpa+xsv+ecx+px+ep+etn+jxt`, `ncpa+xsv+ecx+px+etm`, `ncpa+xsv+ecx+px+etn`, `ncpa+xsv+ecx+px+etn+jca`, `ncpa+xsv+ecx+px+etn+jco`, `ncpa+xsv+ef`, `ncpa+xsv+ef+jca`, `ncpa+xsv+ef+jcj`, `ncpa+xsv+ef+jcm`, `ncpa+xsv+ef+jco`, `ncpa+xsv+ef+jcr`, `ncpa+xsv+ef+jcr+jxt`, `ncpa+xsv+ef+jcs`, `ncpa+xsv+ef+jxc`, `ncpa+xsv+ef+jxf`, `ncpa+xsv+ef+jxt`, `ncpa+xsv+ep+ecc`, `ncpa+xsv+ep+ecs`, `ncpa+xsv+ep+ecs+jco`, `ncpa+xsv+ep+ecs+jxc`, `ncpa+xsv+ep+ecs+jxt`, `ncpa+xsv+ep+ecx`, `ncpa+xsv+ep+ecx+jxc`, `ncpa+xsv+ep+ef`, `ncpa+xsv+ep+ef+jca`, `ncpa+xsv+ep+ef+jca+jxt`, `ncpa+xsv+ep+ef+jco`, `ncpa+xsv+ep+ef+jcr`, `ncpa+xsv+ep+ef+jcr+jxc`, `ncpa+xsv+ep+ef+jcr+jxc+jxt`, `ncpa+xsv+ep+ef+jxc`, `ncpa+xsv+ep+ef+jxf`, `ncpa+xsv+ep+ef+jxt`, `ncpa+xsv+ep+ep+ecs`, `ncpa+xsv+ep+ep+ef`, `ncpa+xsv+ep+etm`, `ncpa+xsv+ep+etn`, `ncpa+xsv+ep+etn+jca`, `ncpa+xsv+ep+etn+jca+jxc`, `ncpa+xsv+ep+etn+jcj`, `ncpa+xsv+ep+etn+jco`, `ncpa+xsv+ep+etn+jcs`, `ncpa+xsv+ep+etn+jxt`, `ncpa+xsv+etm`, `ncpa+xsv+etn`, `ncpa+xsv+etn+jca`, `ncpa+xsv+etn+jca+jxc`, `ncpa+xsv+etn+jca+jxt`, `ncpa+xsv+etn+jco`, `ncpa+xsv+etn+jcs`, 
`ncpa+xsv+etn+jct`, `ncpa+xsv+etn+jxc`, `ncpa+xsv+etn+jxc+jcm`, `ncpa+xsv+etn+jxc+jcs`, `ncpa+xsv+etn+jxc+jxc`, `ncpa+xsv+etn+jxc+jxt`, `ncpa+xsv+etn+jxt`, `ncps`, `ncps+jca`, `ncps+jca+jcm`, `ncps+jca+jxc`, `ncps+jca+jxc+jcm`, `ncps+jcc`, `ncps+jcj`, `ncps+jcm`, `ncps+jco`, `ncps+jcs`, `ncps+jct`, `ncps+jct+jcm`, `ncps+jct+jxt`, `ncps+jp+ecc`, `ncps+jp+ecs`, `ncps+jp+ecs+jxt`, `ncps+jp+ef`, `ncps+jp+ef+jcr`, `ncps+jp+ep+ef`, `ncps+jp+ep+etn`, `ncps+jp+etm`, `ncps+jp+etn+jcs`, `ncps+jp+etn+jxt`, `ncps+jxc`, `ncps+jxc+jxc`, `ncps+jxt`, `ncps+nbn+jp+etm`, `ncps+nbn+jxc`, `ncps+ncn`, `ncps+ncn+jca`, `ncps+ncn+jca+jcm`, `ncps+ncn+jcm`, `ncps+ncn+jco`, `ncps+ncn+jcs`, `ncps+ncn+jct+jxt`, `ncps+ncn+jp+ef`, `ncps+ncn+jp+ef+jcr`, `ncps+ncn+jp+etm`, `ncps+ncn+jxc+jco`, `ncps+ncn+jxt`, `ncps+ncn+ncn`, `ncps+ncn+ncn+jca+jxc`, `ncps+ncn+ncn+jcm`, `ncps+ncn+ncn+jco`, `ncps+ncn+ncn+jxt`, `ncps+ncn+xsn`, `ncps+ncn+xsn+jca`, `ncps+ncn+xsn+jcj`, `ncps+ncn+xsn+jco`, `ncps+ncn+xsn+jp+ecc`, `ncps+ncn+xsn+jp+etm`, `ncps+ncpa`, `ncps+ncpa+jca`, `ncps+ncpa+jcc`, `ncps+ncpa+jcj`, `ncps+ncpa+jcm`, `ncps+ncpa+jco`, `ncps+ncpa+jcs`, `ncps+ncpa+jp+etm`, `ncps+ncpa+jxt`, `ncps+ncpa+xsv+etm`, `ncps+ncps+jca`, `ncps+ncps+jcm`, `ncps+ncps+xsm+ecc`, `ncps+ncps+xsm+ecs`, `ncps+ncps+xsm+etm`, `ncps+xsa`, `ncps+xsa+jxc`, `ncps+xsm+ecc`, `ncps+xsm+ecc+jxc`, `ncps+xsm+ecc+jxt`, `ncps+xsm+ecs`, `ncps+xsm+ecs+jxc`, `ncps+xsm+ecs+jxt`, `ncps+xsm+ecx`, `ncps+xsm+ecx+jcs`, `ncps+xsm+ecx+jxc`, `ncps+xsm+ecx+jxt`, `ncps+xsm+ecx+px+ecc`, `ncps+xsm+ecx+px+ecs`, `ncps+xsm+ecx+px+ecx`, `ncps+xsm+ecx+px+ecx+jxt`, `ncps+xsm+ecx+px+ef`, `ncps+xsm+ecx+px+ep+ecs`, `ncps+xsm+ecx+px+ep+ef`, `ncps+xsm+ecx+px+ep+etm`, `ncps+xsm+ecx+px+ep+etn+jco`, `ncps+xsm+ecx+px+etm`, `ncps+xsm+ecx+px+etn`, `ncps+xsm+ecx+px+etn+jca`, `ncps+xsm+ecx+px+etn+jcj`, `ncps+xsm+ecx+px+etn+jco`, `ncps+xsm+ef`, `ncps+xsm+ef+jco`, `ncps+xsm+ef+jcr`, `ncps+xsm+ef+jcr+jxc`, `ncps+xsm+ef+jcr+jxt`, `ncps+xsm+ef+jxf`, `ncps+xsm+ef+jxt`, 
`ncps+xsm+ep+ecc`, `ncps+xsm+ep+ecs`, `ncps+xsm+ep+ecs+etm`, `ncps+xsm+ep+ef`, `ncps+xsm+ep+ef+jco`, `ncps+xsm+ep+ef+jcr`, `ncps+xsm+ep+ef+jxf`, `ncps+xsm+ep+ep+ef`, `ncps+xsm+ep+etm`, `ncps+xsm+ep+etn`, `ncps+xsm+ep+etn+jxt`, `ncps+xsm+etm`, `ncps+xsm+etn`, `ncps+xsm+etn+jca`, `ncps+xsm+etn+jca+jxt`, `ncps+xsm+etn+jcj`, `ncps+xsm+etn+jcm`, `ncps+xsm+etn+jco`, `ncps+xsm+etn+jcs`, `ncps+xsm+etn+jct`, `ncps+xsm+etn+jct+jcm`, `ncps+xsm+etn+jp+ef+jcr`, `ncps+xsm+etn+jp+etm`, `ncps+xsm+etn+jxc`, `ncps+xsm+etn+jxc+jxt`, `ncps+xsm+etn+jxt`, `ncps+xsn`, `ncps+xsn+jca`, `ncps+xsn+jca+jxt`, `ncps+xsn+jcm`, `ncps+xsn+jco`, `ncps+xsn+jcs`, `ncps+xsn+jp+ecc`, `ncps+xsn+jp+ep+ecs`, `ncps+xsn+jp+etm`, `ncps+xsn+jxc`, `ncps+xsn+jxt`, `ncps+xsv+etm`, `nnc`, `nnc+f`, `nnc+f+jca`, `nnc+f+jp+ef`, `nnc+jca`, `nnc+jca+jxc`, `nnc+jca+jxt`, `nnc+jcc`, `nnc+jcj`, `nnc+jcm`, `nnc+jco`, `nnc+jcs`, `nnc+jp+ecc`, `nnc+jp+ecs`, `nnc+jp+ef`, `nnc+jp+ef+jcr`, `nnc+jp+ep+ef`, `nnc+jp+ep+etm`, `nnc+jp+etm`, `nnc+jp+etn+jca`, `nnc+jxc`, `nnc+jxt`, `nnc+nbn`, `nnc+nbn+jcm`, `nnc+nbn+jco`, `nnc+nbn+nbu+jcc`, `nnc+nbn+nbu+jcs`, `nnc+nbn+xsn`, `nnc+nbu`, `nnc+nbu+jca`, `nnc+nbu+jca+jxc`, `nnc+nbu+jcc`, `nnc+nbu+jcj`, `nnc+nbu+jcm`, `nnc+nbu+jco`, `nnc+nbu+jcs`, `nnc+nbu+jp+ef`, `nnc+nbu+jp+ef+jcr`, `nnc+nbu+jp+ep+ecs`, `nnc+nbu+jp+ep+ef`, `nnc+nbu+jp+etm`, `nnc+nbu+jxc`, `nnc+nbu+jxc+jcs`, `nnc+nbu+jxc+jxt`, `nnc+nbu+jxt`, `nnc+nbu+nbu`, `nnc+nbu+nbu+jcm`, `nnc+nbu+nbu+jp+ef+jcr`, `nnc+nbu+ncn`, `nnc+nbu+ncn+jca`, `nnc+nbu+ncn+jcj`, `nnc+nbu+ncn+jcm`, `nnc+nbu+ncn+jxc`, `nnc+nbu+xsn`, `nnc+nbu+xsn+jca`, `nnc+nbu+xsn+jcm`, `nnc+nbu+xsn+jco`, `nnc+nbu+xsn+jcs`, `nnc+nbu+xsn+jp+ecc`, `nnc+nbu+xsn+jp+ef`, `nnc+nbu+xsn+jxc`, `nnc+nbu+xsn+jxc+jcm`, `nnc+nbu+xsn+jxt`, `nnc+nbu+xsv+etm`, `nnc+ncn`, `nnc+ncn+jca`, `nnc+ncn+jca+jxt`, `nnc+ncn+jcj`, `nnc+ncn+jcm`, `nnc+ncn+jco`, `nnc+ncn+jcs`, `nnc+ncn+jct`, `nnc+ncn+jp+ef`, `nnc+ncn+jp+etm`, `nnc+ncn+jxc`, `nnc+ncn+jxt`, `nnc+ncn+nbu`, `nnc+ncn+nbu+xsn+jca`, 
`nnc+ncn+ncn+jca+jxt`, `nnc+ncn+ncn+xsn`, `nnc+ncn+nnc+nnc`, `nnc+ncn+xsn`, `nnc+ncn+xsn+jp+etm`, `nnc+ncn+xsn+jxt`, `nnc+ncpa`, `nnc+ncpa+jcs`, `nnc+nnc`, `nnc+nnc+jca`, `nnc+nnc+jca+jxt`, `nnc+nnc+jcm`, `nnc+nnc+jco`, `nnc+nnc+jp+ef`, `nnc+nnc+nbu`, `nnc+nnc+nbu+jca`, `nnc+nnc+nbu+jcc`, `nnc+nnc+nbu+jcm`, `nnc+nnc+nbu+jco`, `nnc+nnc+nbu+jcs`, `nnc+nnc+nbu+jp+ep+ef`, `nnc+nnc+nbu+jp+etm`, `nnc+nnc+nbu+jxc`, `nnc+nnc+nbu+xsn`, `nnc+nnc+nbu+xsn+jcm`, `nnc+nnc+nbu+xsn+jxc`, `nnc+nnc+ncn+jco`, `nnc+nnc+nnc`, `nnc+nnc+nnc+nnc`, `nnc+nnc+su+jp+ef`, `nnc+nnc+xsn`, `nnc+nnc+xsn+jcm`, `nnc+nnc+xsn+nbu+jca`, `nnc+nnc+xsn+nbu+jcm`, `nnc+nnc+xsn+nbu+jco`, `nnc+nnc+xsn+nbu+jcs`, `nnc+nno+nbu`, `nnc+nno+nbu+jcc`, `nnc+su`, `nnc+su+jca`, `nnc+su+jcm`, `nnc+su+jco`, `nnc+su+jcs`, `nnc+su+jxc`, `nnc+su+xsn`, `nnc+xsn`, `nnc+xsn+jca`, `nnc+xsn+jca+jxt`, `nnc+xsn+jcm`, `nnc+xsn+jco`, `nnc+xsn+jcs`, `nnc+xsn+jp+ef`, `nnc+xsn+jxc`, `nnc+xsn+nbn+jca`, `nnc+xsn+nbu`, `nnc+xsn+nbu+jca`, `nnc+xsn+nbu+jcm`, `nnc+xsn+nbu+jco`, `nnc+xsn+nbu+jcs`, `nnc+xsn+nnc+nbu`, `nnc+xsn+nnc+nbu+jcm`, `nno`, `nno+jca`, `nno+jca+jxt`, `nno+jcj`, `nno+jcm`, `nno+jco`, `nno+jcs`, `nno+jxt`, `nno+nbn`, `nno+nbn+jcm`, `nno+nbn+xsn`, `nno+nbu`, `nno+nbu+jca`, `nno+nbu+jca+jxc`, `nno+nbu+jca+jxt`, `nno+nbu+jcc`, `nno+nbu+jcj`, `nno+nbu+jcm`, `nno+nbu+jco`, `nno+nbu+jcs`, `nno+nbu+jct`, `nno+nbu+jp+ecc`, `nno+nbu+jp+ecs`, `nno+nbu+jp+ef`, `nno+nbu+jp+ep+ecc`, `nno+nbu+jp+ep+ecs`, `nno+nbu+jp+ep+ef`, `nno+nbu+jp+etm`, `nno+nbu+jxc`, `nno+nbu+jxc+jca`, `nno+nbu+jxc+jcm`, `nno+nbu+jxc+jp+ef`, `nno+nbu+jxc+jp+etm`, `nno+nbu+jxc+jxc`, `nno+nbu+jxc+jxt`, `nno+nbu+jxt`, `nno+nbu+nbu`, `nno+nbu+ncn`, `nno+nbu+ncn+jp+ep+ef`, `nno+nbu+ncn+ncn`, `nno+nbu+xsn`, `nno+nbu+xsn+jca`, `nno+nbu+xsn+jcc`, `nno+nbu+xsn+jcm`, `nno+nbu+xsn+jxc`, `nno+nbu+xsn+jxt`, `nno+ncn`, `nno+ncn+jca`, `nno+ncn+jca+jxc`, `nno+ncn+jca+jxt`, `nno+ncn+jcm`, `nno+ncn+jco`, `nno+ncn+jcs`, `nno+ncn+jct`, `nno+ncn+jp+ef`, `nno+ncn+jp+etm`, `nno+ncn+jxc`, 
`nno+ncn+jxc+jxt`, `nno+ncn+ncn+jp+etm`, `nno+ncn+xsn`, `nno+ncn+xsn+jca`, `nno+ncn+xsn+jp+ep+ef`, `nno+ncn+xsn+jp+etm`, `nno+ncpa+jp+ep+etn+jca+jxc`, `nno+nnc`, `nno+xsn`, `nno+xsn+jca`, `nno+xsn+jca+jxc`, `nno+xsn+jxc`, `nno+xsn+jxc+jcs`, `nno+xsn+nbu`, `nno+xsn+nbu+jcm`, `npd`, `npd+jca`, `npd+jca+jcm`, `npd+jca+jp+ef`, `npd+jca+jp+ef+jca`, `npd+jca+jxc`, `npd+jca+jxc+jcm`, `npd+jca+jxt`, `npd+jcc`, `npd+jcj`, `npd+jcm`, `npd+jco`, `npd+jcs`, `npd+jct`, `npd+jct+jcm`, `npd+jct+jxt`, `npd+jp+ecc`, `npd+jp+ecs`, `npd+jp+ecs+jco`, `npd+jp+ecs+jxt`, `npd+jp+ef`, `npd+jp+ef+jca`, `npd+jp+ef+jcm`, `npd+jp+ef+jco`, `npd+jp+ef+jcr`, `npd+jp+ef+jcs`, `npd+jp+ef+jp+ef`, `npd+jp+ef+jp+etm`, `npd+jp+ef+jxc`, `npd+jp+ef+jxt`, `npd+jp+ep+ef`, `npd+jp+etm`, `npd+jxc`, `npd+jxc+jca`, `npd+jxc+jca+jxc`, `npd+jxc+jcc`, `npd+jxc+jcr`, `npd+jxc+jp+ef`, `npd+jxc+jxc`, `npd+jxc+jxt`, `npd+jxt`, `npd+nbn`, `npd+nbn+jca`, `npd+nbn+jcs`, `npd+nbn+jxc`, `npd+nbn+jxc+jxt`, `npd+ncn`, `npd+ncn+jca`, `npd+ncn+jca+jxc`, `npd+ncn+jcm`, `npd+ncn+jco`, `npd+ncn+jcs`, `npd+ncn+jxt`, `npd+npd`, `npd+xsn`, `npd+xsn+jca`, `npd+xsn+jca+jxc`, `npd+xsn+jca+jxt`, `npd+xsn+jcm`, `npd+xsn+jco`, `npd+xsn+jcs`, `npd+xsn+jct`, `npd+xsn+jp+ef`, `npd+xsn+jxc`, `npd+xsn+jxt`, `npp`, `npp+jca`, `npp+jca+jcm`, `npp+jca+jxc`, `npp+jca+jxc+jcm`, `npp+jca+jxt`, `npp+jcc`, `npp+jcj`, `npp+jcm`, `npp+jco`, `npp+jcs`, `npp+jcs+jxt`, `npp+jct`, `npp+jct+jcm`, `npp+jct+jxc`, `npp+jct+jxt`, `npp+jp+ecs`, `npp+jp+ecs+jco`, `npp+jp+ef`, `npp+jp+ef+jcs`, `npp+jp+ef+jxc+jcs`, `npp+jp+ef+jxt`, `npp+jp+ep+ecc`, `npp+jp+ep+ef`, `npp+jp+ep+etm`, `npp+jp+etm`, `npp+jxc`, `npp+jxc+jcc`, `npp+jxc+jcm`, `npp+jxc+jco`, `npp+jxt`, `npp+nbn+jca`, `npp+nbn+jcs`, `npp+ncn`, `npp+ncn+jca`, `npp+ncn+jca+jxc`, `npp+ncn+jca+jxt`, `npp+ncn+jcm`, `npp+ncn+jco`, `npp+ncn+jcs`, `npp+ncn+jct`, `npp+ncn+jct+jxt`, `npp+ncn+jp+ecs`, `npp+ncn+jxc`, `npp+ncn+jxt`, `npp+ncn+xsn`, `npp+ncpa`, `npp+ncpa+jca`, `npp+ncpa+jca+jxc`, `npp+ncpa+jcj`, 
`npp+ncpa+jcm`, `npp+ncpa+jco`, `npp+ncpa+jcs`, `npp+ncpa+jxt`, `npp+ncpa+ncpa+jca`, `npp+ncpa+xsn+jp+ecc`, `npp+ncpa+xsn+jp+etm`, `npp+npp+jco`, `npp+xsn`, `npp+xsn+jca`, `npp+xsn+jca+jxc`, `npp+xsn+jca+jxc+jxc`, `npp+xsn+jca+jxt`, `npp+xsn+jcj`, `npp+xsn+jcm`, `npp+xsn+jco`, `npp+xsn+jcs`, `npp+xsn+jcs+jxt`, `npp+xsn+jct`, `npp+xsn+jct+jcm`, `npp+xsn+jct+jxt`, `npp+xsn+jp+ecs`, `npp+xsn+jp+ef`, `npp+xsn+jp+etm`, `npp+xsn+jxc`, `npp+xsn+jxc+jcs`, `npp+xsn+jxc+jxt`, `npp+xsn+jxt`, `npp+xsn+ncn`, `npp+xsn+xsn`, `npp+xsn+xsn+jca`, `npp+xsn+xsn+jca+jxt`, `nq`, `nq+jca`, `nq+jca+jca`, `nq+jca+jca+jxc`, `nq+jca+jcm`, `nq+jca+jxc`, `nq+jca+jxc+jcm`, `nq+jca+jxc+jxc`, `nq+jca+jxt`, `nq+jcc`, `nq+jcj`, `nq+jcm`, `nq+jco`, `nq+jcr`, `nq+jcs`, `nq+jcs+jca+jxc`, `nq+jcs+jxt`, `nq+jct`, `nq+jct+jcm`, `nq+jct+jxt`, `nq+jp+ecc`, `nq+jp+ecs`, `nq+jp+ef`, `nq+jp+ef+jcr`, `nq+jp+ef+jcr+jxc`, `nq+jp+ep+ecc`, `nq+jp+ep+ecs`, `nq+jp+ep+ef`, `nq+jp+ep+etm`, `nq+jp+ep+etn`, `nq+jp+etm`, `nq+jp+etn+jco`, `nq+jxc`, `nq+jxc+jca+jxt`, `nq+jxc+jcm`, `nq+jxc+jcs`, `nq+jxc+jp+ef`, `nq+jxc+jp+ef+jcr`, `nq+jxc+jxc`, `nq+jxc+jxc+jxt`, `nq+jxc+jxt`, `nq+jxt`, `nq+nbn`, `nq+nbn+jca`, `nq+nbn+jcm`, `nq+nbn+jp+ep+ef`, `nq+ncn`, `nq+ncn+jca`, `nq+ncn+jca+jcm`, `nq+ncn+jca+jxc`, `nq+ncn+jca+jxt`, `nq+ncn+jcc`, `nq+ncn+jcj`, `nq+ncn+jcm`, `nq+ncn+jco`, `nq+ncn+jcs`, `nq+ncn+jct`, `nq+ncn+jct+jcm`, `nq+ncn+jct+jxc`, `nq+ncn+jct+jxt`, `nq+ncn+jp+ef`, `nq+ncn+jp+ep+ef`, `nq+ncn+jp+ep+etm`, `nq+ncn+jp+etm`, `nq+ncn+jxc`, `nq+ncn+jxc+jxt`, `nq+ncn+jxt`, `nq+ncn+ncn`, `nq+ncn+ncn+jca`, `nq+ncn+ncn+jca+jxt`, `nq+ncn+ncn+jcm`, `nq+ncn+ncn+jco`, `nq+ncn+ncn+jp+etm`, `nq+ncn+ncn+jxc`, `nq+ncn+ncn+ncn`, `nq+ncn+ncn+ncn+jca`, `nq+ncn+ncn+ncn+jcs`, `nq+ncn+ncn+xsn+jxt`, `nq+ncn+ncpa+jca`, `nq+ncn+ncpa+jcs`, `nq+ncn+ncpa+jxt`, `nq+ncn+ncpa+ncn`, `nq+ncn+ncpa+ncn+jcm`, `nq+ncn+xsn`, `nq+ncn+xsn+jca`, `nq+ncn+xsn+jca+jxt`, `nq+ncn+xsn+jcm`, `nq+ncn+xsn+jco`, `nq+ncn+xsn+jcs`, `nq+ncn+xsn+jct`, `nq+ncn+xsn+jp+etm`, 
`nq+ncn+xsn+jxt`, `nq+ncpa`, `nq+ncpa+jca`, `nq+ncpa+jcm`, `nq+ncpa+jco`, `nq+ncpa+jxt`, `nq+ncpa+ncn+jcm`, `nq+ncpa+ncn+jp+ef`, `nq+ncpa+ncn+jp+etm`, `nq+nq`, `nq+nq+jca`, `nq+nq+jcj`, `nq+nq+jcm`, `nq+nq+jcs`, `nq+nq+jct`, `nq+nq+jxc+jcs`, `nq+nq+jxt`, `nq+nq+ncn`, `nq+nq+ncn+jca`, `nq+nq+nq+jxt`, `nq+nq+nq+nq+jcm`, `nq+xsm+ecs`, `nq+xsm+etm`, `nq+xsn`, `nq+xsn+jca`, `nq+xsn+jca+jxc`, `nq+xsn+jca+jxt`, `nq+xsn+jcj`, `nq+xsn+jcm`, `nq+xsn+jco`, `nq+xsn+jcs`, `nq+xsn+jcs+jxt`, `nq+xsn+jct`, `nq+xsn+jct+jcm`, `nq+xsn+jp+ef`, `nq+xsn+jp+ef+jcr`, `nq+xsn+jp+ep+ef`, `nq+xsn+jp+etm`, `nq+xsn+jp+etn+jco`, `nq+xsn+jxc`, `nq+xsn+jxt`, `nq+xsn+xsn`, `nq+xsn+xsn+jcj`, `nq+xsn+xsn+jcs`, `nq+xsn+xsv+ep+etm`, `nq+xsv+ecs`, `paa+ecc`, `paa+ecc+jxc`, `paa+ecc+jxt`, `paa+ecs`, `paa+ecs+etm`, `paa+ecs+jca`, `paa+ecs+jcm`, `paa+ecs+jco`, `paa+ecs+jct`, `paa+ecs+jp+ecc`, `paa+ecs+jp+ep+ef`, `paa+ecs+jxc`, `paa+ecs+jxc+jxt`, `paa+ecs+jxt`, `paa+ecx`, `paa+ecx+jco`, `paa+ecx+jcs`, `paa+ecx+jxc`, `paa+ecx+jxt`, `paa+ecx+px+ecc`, `paa+ecx+px+ecs`, `paa+ecx+px+ecx`, `paa+ecx+px+ecx+jxc`, `paa+ecx+px+ecx+px+ecc`, `paa+ecx+px+ecx+px+ecx`, `paa+ecx+px+ecx+px+ef`, `paa+ecx+px+ecx+px+ep+ef`, `paa+ecx+px+ecx+px+etm`, `paa+ecx+px+ef`, `paa+ecx+px+ef+jcr`, `paa+ecx+px+ep+ecc`, `paa+ecx+px+ep+ecs`, `paa+ecx+px+ep+ef`, `paa+ecx+px+ep+ef+jcr`, `paa+ecx+px+ep+etm`, `paa+ecx+px+ep+etn`, `paa+ecx+px+ep+etn+jco`, `paa+ecx+px+etm`, `paa+ecx+px+etn`, `paa+ecx+px+etn+jca`, `paa+ecx+px+etn+jco`, `paa+ecx+px+etn+jcs`, `paa+ecx+px+etn+jxc`, `paa+ecx+px+etn+jxt`, `paa+ef`, `paa+ef+ecc`, `paa+ef+ecs`, `paa+ef+ecs+jxc`, `paa+ef+jca`, `paa+ef+jcm`, `paa+ef+jco`, `paa+ef+jcr`, `paa+ef+jcr+jxc`, `paa+ef+jcr+jxt`, `paa+ef+jxf`, `paa+ep+ecc`, `paa+ep+ecs`, `paa+ep+ecs+jxc`, `paa+ep+ef`, `paa+ep+ef+jcr`, `paa+ep+ef+jxc`, `paa+ep+ef+jxf`, `paa+ep+ef+jxt`, `paa+ep+ep+ecs`, `paa+ep+ep+ef`, `paa+ep+ep+etm`, `paa+ep+etm`, `paa+ep+etn`, `paa+ep+etn+jca`, `paa+ep+etn+jca+jxc`, `paa+ep+etn+jco`, `paa+ep+etn+jcs`, 
`paa+ep+etn+jxt`, `paa+etm`, `paa+etn`, `paa+etn+jca`, `paa+etn+jca+jxc`, `paa+etn+jca+jxt`, `paa+etn+jcc`, `paa+etn+jcj`, `paa+etn+jcm`, `paa+etn+jco`, `paa+etn+jcs`, `paa+etn+jct`, `paa+etn+jp+ecc`, `paa+etn+jp+ef`, `paa+etn+jp+ep+ecs`, `paa+etn+jp+ep+ef`, `paa+etn+jxc`, `paa+etn+jxt`, `paa+jxt`, `pad+ecc`, `pad+ecc+jxt`, `pad+ecs`, `pad+ecs+jxc`, `pad+ecs+jxt`, `pad+ecx`, `pad+ecx+jcs`, `pad+ecx+jxc`, `pad+ecx+jxt`, `pad+ecx+px+ecs`, `pad+ecx+px+ecx+px+ecc+jxt`, `pad+ef`, `pad+ef+jcr`, `pad+ef+jcr+jxt`, `pad+ef+jxf`, `pad+ef+jxt`, `pad+ep+ecc`, `pad+ep+ecs`, `pad+ep+ef`, `pad+ep+ef+jco`, `pad+ep+etm`, `pad+etm`, `pad+etn`, `pad+etn+jxt`, `pvd+ecc+jxc`, `pvd+ecs`, `pvd+ecs+jp+ecs`, `pvd+ecs+jxc`, `pvd+ecs+jxt`, `pvd+ecx`, `pvd+ep+ef`, `pvd+ep+etm`, `pvd+etm`, `pvd+etn`, `pvd+etn+jca`, `pvd+etn+jca+jxc`, `pvg+ecc`, `pvg+ecc+jxc`, `pvg+ecc+jxt`, `pvg+ecs`, `pvg+ecs+ecs`, `pvg+ecs+jca`, `pvg+ecs+jca+jxt`, `pvg+ecs+jcc`, `pvg+ecs+jcm`, `pvg+ecs+jco`, `pvg+ecs+jcs`, `pvg+ecs+jct`, `pvg+ecs+jp+ecs`, `pvg+ecs+jp+ef`, `pvg+ecs+jp+ep+ecs`, `pvg+ecs+jp+ep+ef`, `pvg+ecs+jp+ep+ef+jcr`, `pvg+ecs+jxc`, `pvg+ecs+jxc+jcc`, `pvg+ecs+jxc+jp+ef`, `pvg+ecs+jxc+jp+ep+ef`, `pvg+ecs+jxt`, `pvg+ecx`, `pvg+ecx+jco`, `pvg+ecx+jxc`, `pvg+ecx+jxt`, `pvg+ecx+jxt+px+ep+ef`, `pvg+ecx+px+ecc`, `pvg+ecx+px+ecc+jxc`, `pvg+ecx+px+ecc+jxt`, `pvg+ecx+px+ecs`, `pvg+ecx+px+ecs+jxc`, `pvg+ecx+px+ecs+jxt`, `pvg+ecx+px+ecx`, `pvg+ecx+px+ecx+jco`, `pvg+ecx+px+ecx+jxc`, `pvg+ecx+px+ecx+jxt`, `pvg+ecx+px+ecx+px+ecc`, `pvg+ecx+px+ecx+px+ecs`, `pvg+ecx+px+ecx+px+ecs+jxt`, `pvg+ecx+px+ecx+px+ecx`, `pvg+ecx+px+ecx+px+ecx+px+ecc`, `pvg+ecx+px+ecx+px+ef`, `pvg+ecx+px+ecx+px+ep+ecc`, `pvg+ecx+px+ecx+px+ep+ef`, `pvg+ecx+px+ecx+px+ep+etm`, `pvg+ecx+px+ecx+px+ep+etn+jco`, `pvg+ecx+px+ecx+px+etm`, `pvg+ecx+px+ecx+px+etn`, `pvg+ecx+px+ecx+px+etn+jca`, `pvg+ecx+px+ef`, `pvg+ecx+px+ef+jca`, `pvg+ecx+px+ef+jcm`, `pvg+ecx+px+ef+jcr`, `pvg+ecx+px+ep+ecc`, `pvg+ecx+px+ep+ecs`, `pvg+ecx+px+ep+ecs+jxc`, `pvg+ecx+px+ep+ef`, 
`pvg+ecx+px+ep+ef+jcm`, `pvg+ecx+px+ep+ef+jcr`, `pvg+ecx+px+ep+ef+jxf`, `pvg+ecx+px+ep+ep+ecs`, `pvg+ecx+px+ep+etm`, `pvg+ecx+px+ep+etn`, `pvg+ecx+px+ep+etn+jca`, `pvg+ecx+px+ep+etn+jca+jxc`, `pvg+ecx+px+ep+etn+jco`, `pvg+ecx+px+etm`, `pvg+ecx+px+etn`, `pvg+ecx+px+etn+jca`, `pvg+ecx+px+etn+jca+jxc`, `pvg+ecx+px+etn+jca+jxt`, `pvg+ecx+px+etn+jco`, `pvg+ecx+px+etn+jcs`, `pvg+ecx+px+etn+jct`, `pvg+ecx+px+etn+jxc`, `pvg+ecx+px+etn+jxc+jxt`, `pvg+ecx+px+etn+jxt`, `pvg+ef`, `pvg+ef+jca`, `pvg+ef+jcm`, `pvg+ef+jco`, `pvg+ef+jcr`, `pvg+ef+jcr+jxc`, `pvg+ef+jcr+jxt`, `pvg+ef+jcs`, `pvg+ef+jp+ef+jcr`, `pvg+ef+jp+etm`, `pvg+ef+jxc`, `pvg+ef+jxf`, `pvg+ef+jxt`, `pvg+ep+ecc`, `pvg+ep+ecc+jxt`, `pvg+ep+ecs`, `pvg+ep+ecs+jca+jxt`, `pvg+ep+ecs+jco`, `pvg+ep+ecs+jxc`, `pvg+ep+ecs+jxt`, `pvg+ep+ecx`, `pvg+ep+ecx+px+ef`, `pvg+ep+ef`, `pvg+ep+ef+jca`, `pvg+ep+ef+jcm`, `pvg+ep+ef+jco`, `pvg+ep+ef+jcr`, `pvg+ep+ef+jcr+jxc`, `pvg+ep+ef+jcr+jxt`, `pvg+ep+ef+jct`, `pvg+ep+ef+jxc`, `pvg+ep+ef+jxf`, `pvg+ep+ef+jxt`, `pvg+ep+ep+ef`, `pvg+ep+ep+ef+jco`, `pvg+ep+ep+ef+jxf`, `pvg+ep+etm`, `pvg+ep+etn`, `pvg+ep+etn+jca`, `pvg+ep+etn+jca+jxc`, `pvg+ep+etn+jca+jxt`, `pvg+ep+etn+jco`, `pvg+ep+etn+jcs`, `pvg+ep+etn+jxt`, `pvg+etm`, `pvg+etn`, `pvg+etn+jca`, `pvg+etn+jca+jxc`, `pvg+etn+jca+jxt`, `pvg+etn+jcc`, `pvg+etn+jcj`, `pvg+etn+jcm`, `pvg+etn+jco`, `pvg+etn+jcr`, `pvg+etn+jcs`, `pvg+etn+jct`, `pvg+etn+jct+jxt`, `pvg+etn+jp+ecc`, `pvg+etn+jp+ecs`, `pvg+etn+jp+ef`, `pvg+etn+jp+ef+jcr`, `pvg+etn+jp+ef+jcs`, `pvg+etn+jp+ep+ef`, `pvg+etn+jp+ep+ef+jcr`, `pvg+etn+jp+etm`, `pvg+etn+jxc`, `pvg+etn+jxc+jca+jxt`, `pvg+etn+jxc+jcm`, `pvg+etn+jxc+jco`, `pvg+etn+jxc+jcs`, `pvg+etn+jxc+jxt`, `pvg+etn+jxt`, `pvg+etn+xsm+ecs`, `pvg+etn+xsn+jcm`, `px+ecc`, `px+ecc+jxc`, `px+ecc+jxc+jp+ef`, `px+ecc+jxt`, `px+ecs`, `px+ecs+jca`, `px+ecs+jcc`, `px+ecs+jcj`, `px+ecs+jcm`, `px+ecs+jco`, `px+ecs+jp+ep+ef`, `px+ecs+jxc`, `px+ecs+jxt`, `px+ecx`, `px+ecx+jxc`, `px+ecx+jxt`, `px+ecx+px+ecs`, `px+ecx+px+ecx`, 
`px+ecx+px+ef`, `px+ecx+px+ef+jcr`, `px+ecx+px+ep+ef`, `px+ecx+px+etm`, `px+ecx+px+etn+jca`, `px+ef`, `px+ef+etm`, `px+ef+jca`, `px+ef+jca+jxc`, `px+ef+jcj`, `px+ef+jcm`, `px+ef+jco`, `px+ef+jcr`, `px+ef+jcr+jxc`, `px+ef+jcs`, `px+ef+jp+etm`, `px+ef+jxc`, `px+ef+jxf`, `px+ef+jxt`, `px+ep+ecc`, `px+ep+ecs`, `px+ep+ecs+jxc`, `px+ep+ecs+jxt`, `px+ep+ecx`, `px+ep+ef`, `px+ep+ef+jca`, `px+ep+ef+jco`, `px+ep+ef+jcr`, `px+ep+ef+jcr+jxc`, `px+ep+ef+jxf`, `px+ep+ep+ef`, `px+ep+ep+ef+jxf`, `px+ep+etm`, `px+ep+etn`, `px+ep+etn+jca`, `px+ep+etn+jca+jxc`, `px+ep+etn+jco`, `px+ep+etn+jcs`, `px+ep+etn+jxc`, `px+ep+etn+jxt`, `px+etm`, `px+etn`, `px+etn+jca`, `px+etn+jca+jxc`, `px+etn+jca+jxt`, `px+etn+jco`, `px+etn+jcs`, `px+etn+jct`, `px+etn+jxc`, `px+etn+jxc+jxt`, `px+etn+jxt`, `sf`, `sl`, `sp`, `sr`, `su`, `su+jca`, `su+jcm`, `xp+nbn`, `xp+nbu`, `xp+ncn`, `xp+ncn+jca`, `xp+ncn+jcm`, `xp+ncn+jco`, `xp+ncn+jcs`, `xp+ncn+jp+ef`, `xp+ncn+jp+ep+ef`, `xp+ncn+jxt`, `xp+ncn+ncn+jca`, `xp+ncn+ncn+jcm`, `xp+ncn+ncn+jco`, `xp+ncn+ncpa+jco`, `xp+ncn+xsn`, `xp+ncn+xsn+jca`, `xp+ncn+xsn+jcm`, `xp+ncn+xsn+jp+ef`, `xp+ncn+xsn+jp+etm`, `xp+ncpa`, `xp+ncpa+jca`, `xp+ncpa+jcm`, `xp+ncpa+jco`, `xp+ncpa+ncn+jcm`, `xp+ncpa+ncn+jco`, `xp+ncpa+ncpa+jco`, `xp+ncpa+xsn`, `xp+ncpa+xsn+jp+etm`, `xp+ncpa+xsv+ecc`, `xp+ncpa+xsv+ecs`, `xp+ncpa+xsv+ecx`, `xp+ncpa+xsv+ef`, `xp+ncpa+xsv+ef+jcr`, `xp+ncpa+xsv+ep+ef`, `xp+ncpa+xsv+etm`, `xp+ncpa+xsv+etn+jca`, `xp+ncps`, `xp+ncps+xsm+ecs`, `xp+ncps+xsm+ecx`, `xp+ncps+xsm+ef`, `xp+ncps+xsm+ep+ef`, `xp+ncps+xsm+etm`, `xp+ncps+xsn`, `xp+nnc`, `xp+nnc+jcm`, `xp+nnc+nbn`, `xp+nnc+nbu`, `xp+nnc+nbu+jcs`, `xp+nnc+ncn`, `xp+nnc+ncn+jca`, `xp+nnc+ncn+jcm`, `xp+nnc+ncn+jcs`, `xp+nnc+ncn+jp+ef+jcr`, `xp+nno`, `xp+nno+jcm`, `xp+nno+nbn+jca`, `xp+nno+nbu`, `xp+nno+nbu+jcs`, `xp+nno+ncn`, `xp+nno+ncn+jca`, `xp+nno+ncn+jcs`, `xp+nno+ncn+jxt`, `xp+nq`, `xp+nq+ncn+jca`, `xp+nq+ncpa`, `xp+nq+ncpa+jco`, `xp+nq+ncpa+jp+etm`, `xsm+etm`, `xsn`, `xsn+jca`, `xsn+jca+jxt`, `xsn+jco`, 
`xsn+jcs`, `xsn+jp+ef`, `xsn+jp+ep+ef`, `xsn+jxc+jca+jxt`, `xsn+jxc+jcs`, `xsn+jxt`, `xsv+ecc`, `xsv+ecs`, `xsv+ecx+px+ep+ef`, `xsv+ep+ecx`, `xsv+etm` |
| **`morphologizer`** | `POS=CCONJ`, `POS=ADV`, `POS=SCONJ`, `POS=DET`, `POS=NOUN`, `POS=VERB`, `POS=ADJ`, `POS=PUNCT`, `POS=AUX`, `POS=PRON`, `POS=PROPN`, `POS=NUM`, `POS=INTJ`, `POS=PART`, `POS=X`, `POS=ADP`, `POS=SYM` |
| **`parser`** | `ROOT`, `acl`, `advcl`, `advmod`, `amod`, `appos`, `aux`, `case`, `cc`, `ccomp`, `compound`, `conj`, `cop`, `csubj`, `dep`, `det`, `discourse`, `dislocated`, `fixed`, `flat`, `iobj`, `mark`, `nmod`, `nsubj`, `nummod`, `obj`, `obl`, `punct`, `vocative`, `xcomp` |