Serial Number

int64 1

6k

| Issue Number

int64 75.6k

112k

| Title

stringlengths 3

357

| Labels

stringlengths 3

241

⌀ | Body

stringlengths 9

74.5k

⌀ | Comments

int64 0

867

|

|---|---|---|---|---|---|

2,701 | 100,733 |

Nightly torch.compile fails with dynamically patched `nn.module.forward`

|

high priority, module: nn, triaged, oncall: pt2, module: dynamo

|

### 🐛 Describe the bug

When an `nn.Module` forward is modified during runtime, `torch.compile` fails.

```

import torch

import torch.nn as nn

import functools

model = nn.Linear(5, 5)

old_forward = model.forward

@functools.wraps(old_forward)

def new_forward(*args, **kwargs):

return old_forward(*args, **kwargs)

model.forward = new_forward

model = torch.compile(model, backend='eager')

model(torch.randn(1, 5))

```

Output

```

Traceback (most recent call last):

File "/home/jgu/Projects/dynamite/env/lib/python3.8/site-packages/torch/_dynamo/utils.py", line 1228, in get_fake_value

return wrap_fake_exception(

File "/home/jgu/Projects/dynamite/env/lib/python3.8/site-packages/torch/_dynamo/utils.py", line 848, in wrap_fake_exception

return fn()

File "/home/jgu/Projects/dynamite/env/lib/python3.8/site-packages/torch/_dynamo/utils.py", line 1229, in <lambda>

lambda: run_node(tx.output, node, args, kwargs, nnmodule)

File "/home/jgu/Projects/dynamite/env/lib/python3.8/site-packages/torch/_dynamo/utils.py", line 1292, in run_node

raise RuntimeError(

RuntimeError: Failed running call_method forward(*(Linear(in_features=5, out_features=5, bias=True), FakeTensor(..., size=(1, 5))), **{}):

Please convert all Tensors to FakeTensors first or instantiate FakeTensorMode with 'allow_non_fake_inputs'. Found in aten.t.default(*(Parameter containing:

tensor([[ 0.1118, 0.2579, 0.2571, -0.2395, 0.2391],

[-0.3907, -0.2695, 0.2111, -0.4282, -0.2784],

[ 0.1674, 0.3213, -0.1111, 0.2801, 0.3222],

[-0.0022, 0.2045, -0.2440, 0.2014, -0.3790],

[ 0.4095, -0.2653, -0.2879, 0.2875, -0.1032]], requires_grad=True),), **{})

(scroll up for backtrace)

```

Expected Output: exit 0

### Impact

I accidentally hit this when running a model that implicitly uses hugging face's accelerate module with torch.compile. HF's accelerate dynamically patches model.forward to do device offload. https://github.com/huggingface/accelerate/blob/main/src/accelerate/hooks.py#L118

### Versions

torch==2.1.0.dev20230428+cu117

The above example works with torch2.0 but fails on nightly

cc @ezyang @gchanan @zou3519 @albanD @mruberry @jbschlosser @walterddr @mikaylagawarecki @msaroufim @wconstab @ngimel @bdhirsh @anijain2305 @voznesenskym @penguinwu @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @soumith @desertfire

| 6 |

2,702 | 100,730 |

`torch::jit::EliminateExceptions` lowering pass never completes on specific model

|

oncall: jit

|

### 🐛 Describe the bug

## Bug Description and Overview

When running the `torch::jit::EliminateExceptions` lowering pass on a specific model, the program never completes.

https://github.com/pytorch/pytorch/blob/40df6e164777e834b5f5b50e066632ed40bd25ef/torch/csrc/jit/passes/remove_exceptions.h#L20

A simple model which elicits this error (when run through the `torch::jit::EliminateExceptions` lowering pass), as adapted from https://github.com/pytorch/TensorRT/issues/1823 is:

```python

import torch

class UpSample(torch.nn.Module):

def __init__(self):

super(UpSample, self).__init__()

self.upsample = torch.nn.Upsample(

scale_factor=2, mode="bilinear", align_corners=False

)

def forward(self, x: torch.Tensor) -> torch.Tensor:

return self.upsample(x) + self.upsample(x)

model = torch.jit.script(UpSample().eval().to("cuda"))

input = torch.randn((5, 5, 5, 5)).to("cuda")

```

The corresponding IR for the above code is reproduced here: https://github.com/pytorch/TensorRT/issues/1823#issuecomment-1509005086.

## Context and Findings

The bug was traced to the use of `replaceAllUsesWith` (see https://github.com/pytorch/TensorRT/pull/1859#issuecomment-1534153675), which can cause invalid IR to be generated, especially in cases where there are nested `prim::If` calls. https://github.com/pytorch/TensorRT/pull/1859 gives a sample solution to this issue, substituting the `replaceAllUsesWith` operator with `replaceFirstUseWith`.

Would this substitution retain the original functionality/intent of the `torch::jit::EliminateExceptions` lowering pass?

### Versions

Versions of relevant libraries:

```

[pip3] torch==2.1.0.dev20230419+cu117

```

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 0 |

2,703 | 100,725 |

[CUDA RPC] Incorrect messages in CUDA Support RPC when parallelized with other GPU programs

|

oncall: distributed

|

### 🐛 Describe the bug

### Issue Summary

When running the official example of [DISTRIBUTED PIPELINE PARALLELISM USING RPC](https://pytorch.org/tutorials/intermediate/dist_pipeline_parallel_tutorial.html) and modifying the transferred tensors into GPU tensors (i.e., return out.cpu() -> return out), inconsistencies in the transferred tensor values are observed. Specifically, there are discrepancies in the loss values for the same inputs and model parameters.

These discrepancies only occur when running other GPU programs, such as a pure computation task in the backend using PyTorch or nccl-tests tasks. When the other GPU task is stopped, the loss values become consistent.

This suggests that other GPU programs could be interfering with the CUDA Support RPC, leading to incorrect message transfers.

**Could anyone provide some insight or guidance on how to prevent other GPU programs from interfering with the CUDA Support RPC and ensure correct message transfers?**

Any help or suggestions would be greatly appreciated.

### Steps to Reproduce

1. Run a pure computation task in the backend using PyTorch.

```python

import torch

import time

from multiprocessing import Pool, set_start_method

def run_on_single_gpu(device):

a = torch.randn(20000,20000).cuda(device)

b = torch.randn(20000,20000).cuda(device)

ta = a

tb = b

while True:

a = ta

b = tb

a = torch.sin(a)

b = torch.sin(b)

a = torch.cos(a)

b = torch.cos(b)

a = torch.tan(a)

b = torch.tan(b)

a = torch.exp(a)

b = torch.exp(b)

a = torch.log(a)

b = torch.log(b)

b = torch.matmul(a, b)

#time.sleep(0.000005)

if __name__ == '__main__':

set_start_method('spawn')

print('start running')

num_gpus = torch.cuda.device_count()

pool = Pool(processes=num_gpus)

pool.map(run_on_single_gpu, range(num_gpus))

pool.close()

pool.join()

```

2. Run the official example of [DISTRIBUTED PIPELINE PARALLELISM USING RPC](https://pytorch.org/tutorials/intermediate/dist_pipeline_parallel_tutorial.html) and modifying the transferred tensors into GPU tensors (i.e., return out.cpu() -> return out),

```python

import os

import threading

import time

from functools import wraps

import torch

import torch.nn as nn

import torch.distributed.autograd as dist_autograd

import torch.distributed.rpc as rpc

import torch.multiprocessing as mp

import torch.optim as optim

from torch.distributed.optim import DistributedOptimizer

from torch.distributed.rpc import RRef

from torchvision.models.resnet import Bottleneck

#########################################################

# Define Model Parallel ResNet50 #

#########################################################

# In order to split the ResNet50 and place it on two different workers, we

# implement it in two model shards. The ResNetBase class defines common

# attributes and methods shared by two shards. ResNetShard1 and ResNetShard2

# contain two partitions of the model layers respectively.

num_classes = 1000

def conv1x1(in_planes, out_planes, stride=1):

"""1x1 convolution"""

return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)

class ResNetBase(nn.Module):

def __init__(self, block, inplanes, num_classes=1000,

groups=1, width_per_group=64, norm_layer=None):

super(ResNetBase, self).__init__()

self._lock = threading.Lock()

self._block = block

self._norm_layer = nn.BatchNorm2d

self.inplanes = inplanes

self.dilation = 1

self.groups = groups

self.base_width = width_per_group

def _make_layer(self, planes, blocks, stride=1):

norm_layer = self._norm_layer

downsample = None

previous_dilation = self.dilation

if stride != 1 or self.inplanes != planes * self._block.expansion:

downsample = nn.Sequential(

conv1x1(self.inplanes, planes * self._block.expansion, stride),

norm_layer(planes * self._block.expansion),

)

layers = []

layers.append(self._block(self.inplanes, planes, stride, downsample, self.groups,

self.base_width, previous_dilation, norm_layer))

self.inplanes = planes * self._block.expansion

for _ in range(1, blocks):

layers.append(self._block(self.inplanes, planes, groups=self.groups,

base_width=self.base_width, dilation=self.dilation,

norm_layer=norm_layer))

return nn.Sequential(*layers)

def parameter_rrefs(self):

r"""

Create one RRef for each parameter in the given local module, and return a

list of RRefs.

"""

return [RRef(p) for p in self.parameters()]

class ResNetShard1(ResNetBase):

"""

The first part of ResNet.

"""

def __init__(self, device, *args, **kwargs):

super(ResNetShard1, self).__init__(

Bottleneck, 64, num_classes=num_classes, *args, **kwargs)

self.device = device

self.seq = nn.Sequential(

nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False),

self._norm_layer(self.inplanes),

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1),

self._make_layer(64, 3),

self._make_layer(128, 4, stride=2)

).to(self.device)

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

elif isinstance(m, nn.BatchNorm2d):

nn.init.ones_(m.weight)

nn.init.zeros_(m.bias)

def forward(self, x_rref):

x = x_rref.to_here().to(self.device)

with self._lock:

out = self.seq(x)

# return out.cpu()

return out

class ResNetShard2(ResNetBase):

"""

The second part of ResNet.

"""

def __init__(self, device, *args, **kwargs):

super(ResNetShard2, self).__init__(

Bottleneck, 512, num_classes=num_classes, *args, **kwargs)

self.device = device

self.seq = nn.Sequential(

self._make_layer(256, 6, stride=2),

self._make_layer(512, 3, stride=2),

nn.AdaptiveAvgPool2d((1, 1)),

).to(self.device)

self.fc = nn.Linear(512 * self._block.expansion, num_classes).to(self.device)

def forward(self, x_rref):

x = x_rref.to_here().to(self.device)

with self._lock:

out = self.fc(torch.flatten(self.seq(x), 1))

# return out.cpu()

return out

class DistResNet50(nn.Module):

"""

Assemble two parts as an nn.Module and define pipelining logic

"""

def __init__(self, split_size, workers, *args, **kwargs):

super(DistResNet50, self).__init__()

self.split_size = split_size

# Put the first part of the ResNet50 on workers[0]

self.p1_rref = rpc.remote(

workers[0],

ResNetShard1,

args = ("cuda:0",) + args,

kwargs = kwargs

)

# Put the second part of the ResNet50 on workers[1]

self.p2_rref = rpc.remote(

workers[1],

ResNetShard2,

args = ("cuda:1",) + args,

kwargs = kwargs

)

def forward(self, xs):

# Split the input batch xs into micro-batches, and collect async RPC

# futures into a list

out_futures = []

for x in iter(xs.split(self.split_size, dim=0)):

x_rref = RRef(x)

y_rref = self.p1_rref.remote().forward(x_rref)

z_fut = self.p2_rref.rpc_async().forward(y_rref)

out_futures.append(z_fut)

# collect and cat all output tensors into one tensor.

return torch.cat(torch.futures.wait_all(out_futures))

def parameter_rrefs(self):

remote_params = []

remote_params.extend(self.p1_rref.remote().parameter_rrefs().to_here())

remote_params.extend(self.p2_rref.remote().parameter_rrefs().to_here())

return remote_params

#########################################################

# Run RPC Processes #

#########################################################

num_batches = 3

batch_size = 120

image_w = 128

image_h = 128

def run_master(split_size):

# put the two model parts on worker1 and worker2 respectively

model = DistResNet50(split_size, ["worker1", "worker2"])

loss_fn = nn.MSELoss()

opt = DistributedOptimizer(

optim.SGD,

model.parameter_rrefs(),

lr=0.05,

)

one_hot_indices = torch.LongTensor(batch_size) \

.random_(0, num_classes) \

.view(batch_size, 1)

for i in range(num_batches):

print(f"Processing batch {i}")

# generate random inputs and labels

torch.manual_seed(123)

inputs = torch.randn(batch_size, 3, image_w, image_h)

labels = torch.zeros(batch_size, num_classes) \

.scatter_(1, one_hot_indices, 1)

# The distributed autograd context is the dedicated scope for the

# distributed backward pass to store gradients, which can later be

# retrieved using the context_id by the distributed optimizer.

with dist_autograd.context() as context_id:

outputs = model(inputs)

loss = loss_fn(outputs, labels.to("cuda:0"))

print(f"loss: {loss.item()}")

dist_autograd.backward(context_id, [loss])

# opt.step(context_id)

def run_worker(rank, world_size, num_split):

os.environ['MASTER_ADDR'] = '11.218.124.179'

# os.environ['MASTER_ADDR'] = 'localhost'

os.environ['MASTER_PORT'] = '29000'

# Higher timeout is added to accommodate for kernel compilation time in case of ROCm.

options = rpc.TensorPipeRpcBackendOptions(num_worker_threads=256, rpc_timeout=300)

if rank == 0:

options.set_device_map("master", {rank: 0})

options.set_device_map("worker1", {rank: 0})

options.set_device_map("worker2", {rank: 1})

rpc.init_rpc(

"master",

rank=rank,

world_size=world_size,

rpc_backend_options=options

)

run_master(num_split)

else:

options.set_device_map("master", {rank-1: 0})

options.set_device_map("worker1", {rank-1: 0})

options.set_device_map("worker2", {rank-1: 1})

rpc.init_rpc(

f"worker{rank}",

rank=rank,

world_size=world_size,

rpc_backend_options=options

)

pass

# block until all rpcs finish

rpc.shutdown()

if __name__=="__main__":

world_size = 3

for num_split in [1, 2, 4, 8]:

tik = time.time()

mp.spawn(run_worker, args=(world_size, num_split), nprocs=world_size, join=True)

tok = time.time()

print(f"number of splits = {num_split}, execution time = {tok - tik}")

```

3. Observe the log of the RPC task and note that the loss values are inconsistent. For example, the losses in 'number of splits = 4 and 8' of my log.

```Processing batch 0

loss: 0.3522467613220215

Processing batch 1

loss: 0.3522467613220215

Processing batch 2

loss: 0.3522467613220215

number of splits = 1, execution time = 30.63558030128479

Processing batch 0

loss: 0.37125730514526367

Processing batch 1

loss: 0.37125730514526367

Processing batch 2

loss: 0.37125730514526367

number of splits = 2, execution time = 25.16931390762329

Processing batch 0

loss: 0.3850472569465637

Processing batch 1

loss: 0.3850732445716858

Processing batch 2

loss: 0.38506922125816345

number of splits = 4, execution time = 24.290683269500732

Processing batch 0

loss: 0.38254597783088684

Processing batch 1

loss: 0.38254597783088684

Processing batch 2

loss: 0.35708141326904297

number of splits = 8, execution time = 22.055739402770996

```

4. Close the pure computation task and only run the RPC task. Observe that the loss values become consistent.

```

Processing batch 0

loss: 0.3423759937286377

Processing batch 1

loss: 0.3423759937286377

Processing batch 2

loss: 0.3423759937286377

number of splits = 1, execution time = 19.911068439483643

Processing batch 0

loss: 0.40370798110961914

Processing batch 1

loss: 0.40370798110961914

Processing batch 2

loss: 0.40370798110961914

number of splits = 2, execution time = 14.234280824661255

Processing batch 0

loss: 0.36699122190475464

Processing batch 1

loss: 0.36699122190475464

Processing batch 2

loss: 0.36699122190475464

number of splits = 4, execution time = 12.719268560409546

Processing batch 0

loss: 0.3601795434951782

Processing batch 1

loss: 0.3601795434951782

Processing batch 2

loss: 0.3601795434951782

number of splits = 8, execution time = 11.449119329452515

```

### Versions

PyTorch version: 2.0.0+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Tencent tlinux 2.2 (Final) (x86_64)

GCC version: (GCC) 8.3.0

Clang version: Could not collect

CMake version: version 3.26.1

Libc version: glibc-2.17

Python version: 3.8.12 (default, Jun 13 2022, 19:37:57) [GCC 8.3.0] (64-bit runtime)

Python platform: Linux-4.14.105-1-tlinux3-0013-x86_64-with-glibc2.2.5

Is CUDA available: True

CUDA runtime version: 11.7.99

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: A100-SXM4-40GB

GPU 1: A100-SXM4-40GB

GPU 2: A100-SXM4-40GB

GPU 3: A100-SXM4-40GB

GPU 4: A100-SXM4-40GB

GPU 5: A100-SXM4-40GB

GPU 6: A100-SXM4-40GB

GPU 7: A100-SXM4-40GB

Nvidia driver version: 450.156.00

cuDNN version: Probably one of the following:

/usr/lib64/libcudnn.so.8.5.0

/usr/lib64/libcudnn_adv_infer.so.8.5.0

/usr/lib64/libcudnn_adv_train.so.8.5.0

/usr/lib64/libcudnn_cnn_infer.so.8.5.0

/usr/lib64/libcudnn_cnn_train.so.8.5.0

/usr/lib64/libcudnn_ops_infer.so.8.5.0

/usr/lib64/libcudnn_ops_train.so.8.5.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 192

On-line CPU(s) list: 0-191

Thread(s) per core: 2

Core(s) per socket: 48

Socket(s): 2

NUMA node(s): 2

Vendor ID: AuthenticAMD

CPU family: 23

Model: 49

Model name: AMD EPYC 7K62 48-Core Processor

Stepping: 0

CPU MHz: 3292.824

CPU max MHz: 2600.0000

CPU min MHz: 1500.0000

BogoMIPS: 5190.52

Virtualization: AMD-V

L1d cache: 32K

L1i cache: 32K

L2 cache: 512K

L3 cache: 16384K

NUMA node0 CPU(s): 0-47,96-143

NUMA node1 CPU(s): 48-95,144-191

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid extd_apicid aperfmperf pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_llc mwaitx cpb cat_l3 cdp_l3 hw_pstate ssbd ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold avic v_vmsave_vmload vgif umip rdpid overflow_recov succor smca

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] torch==2.0.0

[pip3] torchaudio==2.0.1

[pip3] torchpippy==0.1.0

[pip3] torchvision==0.12.0

[pip3] triton==2.0.0

[conda] Could not collect

cc @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @H-Huang @kwen2501 @awgu

| 0 |

2,704 | 100,705 |

torch.cuda.amp.GradScaler initialization

|

triaged, module: amp (automated mixed precision)

|

### 🐛 Describe the bug

If I just create a scaler using the following code

`scaler = torch.cuda.amp.GradScaler()`

and do not use this `scaler` in my code, then somehow training faces issue with convergence.

I have faced it with at least 2 models.

### Versions

I have faced this in torch-1.6, torch-1.12.

cc @mcarilli @ptrblck @leslie-fang-intel @jgong5

| 2 |

2,705 | 100,704 |

[Discussion] Investigate possibilities for Windows Arm64 BLAS and LAPACK

|

module: windows, triaged, module: linear algebra

|

We are trying to investigate what implementations of BLAS/LAPACK are compatible with PyTorch and Windows Arm64.

Considering the options listed [here](https://github.com/pytorch/pytorch/blob/master/cmake/Dependencies.cmake#L246), we have the following conclusions:

- [ATLAS](https://math-atlas.sourceforge.net/): outdated (last update was in 2015)

- [BLIS](https://github.com/flame/blis): prebuild Windows [binaries](https://ci.appveyor.com/project/shpc/blis), [instructions](https://github.com/flame/blis/blob/e14424f55b15d67e8d18384aea45a11b9b772e02/docs/FAQ.md#can-i-build-blis-on-windows) on how to build it on Windows

- When running the instructions for building on Windows on an Arm64 machine, there is a SEH unwinding issue in the LLVM

- [OpenBLAS](https://github.com/xianyi/OpenBLAS): Compiled with PyTorch and it seems to be working:

- Some core tests are failing on access violation or similar issues

- WIP testing pipeline is [here](https://github.com/Windows-on-ARM-Experiments/pytorch-ci/actions/runs/4587922322)

- Generic BLAS: TBD

- [EigenBLAS](https://eigen.tuxfamily.org/dox/index.html): [here](https://gist.github.com/danielTobon43/8ef3d15f84a43fb15f1f4a49de5fcc75) are some instructions about building Eigen on Windows. There is also some Windows Arm64 option on https://vcpkg.io/en/packages.html

- [MKL](https://www.intel.com/content/www/us/en/developer/tools/oneapi/onemkl.html): for Intel processors

- [VecLib](https://developer.apple.com/documentation/accelerate/veclib): for Apple devices

- [Nvidia MAGMA](https://developer.nvidia.com/magma): optimization for GPU (out of our scope for now)

- [FlexiBLAS](https://github.com/mpimd-csc/flexiblas): no Windows support https://github.com/mpimd-csc/flexiblas/issues/9

- [libflame](https://github.com/flame/libflame): It is a sister project to BLIS. Last update in August 2019

- [Netlib Reference Implementation](https://github.com/flame/libflame): [how to build on windows](https://icl.utk.edu/lapack-for-windows/lapack/)

- [APL](https://developer.arm.com/Tools%20and%20Software/Arm%20Performance%20Libraries#:~:text=Arm%20Performance%20Libraries%20provide%20optimized,performance%20in%20multi%2Dprocessor%20environments.): No support for Windows

## Open questions

- What did we miss with the implementations described above?

- What other implementations are worth being taken into consideration?

cc @peterjc123 @mszhanyi @skyline75489 @nbcsm @vladimir-aubrecht @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano

| 0 |

2,706 | 100,675 |

DISABLED test_inplace_gradgrad_remainder_cuda_float64 (__main__.TestBwdGradientsCUDA)

|

module: autograd, triaged, module: flaky-tests, skipped

|

Platforms: linux

This test was disabled because it is failing in CI. See [recent examples](https://hud.pytorch.org/flakytest?name=test_inplace_gradgrad_remainder_cuda_float64&suite=TestBwdGradientsCUDA) and the most recent trunk [workflow logs](https://github.com/pytorch/pytorch/runs/undefined).

Over the past 3 hours, it has been determined flaky in 7 workflow(s) with 7 failures and 7 successes.

**Debugging instructions (after clicking on the recent samples link):**

DO NOT ASSUME THINGS ARE OKAY IF THE CI IS GREEN. We now shield flaky tests from developers so CI will thus be green but it will be harder to parse the logs.

To find relevant log snippets:

1. Click on the workflow logs linked above

2. Click on the Test step of the job so that it is expanded. Otherwise, the grepping will not work.

3. Grep for `test_inplace_gradgrad_remainder_cuda_float64`

4. There should be several instances run (as flaky tests are rerun in CI) from which you can study the logs.

Test file path: `test_ops_gradients.py`

ConnectionTimeoutError: Connect timeout for 5000ms, GET https://raw.githubusercontent.com/pytorch/pytorch/master/test/test_ops_gradients.py -2 (connected: false, keepalive socket: false, socketHandledRequests: 1, socketHandledResponses: 0)

headers: {}

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 1 |

2,707 | 100,674 |

Add support for MaxPool3D on the MPS backend

|

triaged, module: mps

|

### 🚀 The feature, motivation and pitch

Currently, Pooling operations are only supported on MPS for 1D and 2D inputs. While MPS doesn't have native support for 3d pooling operations, it does support 4d pooling operations (e.g. [`maxPooling4DWithSourceTensor()`](https://developer.apple.com/documentation/metalperformanceshadersgraph/mpsgraph/3750695-maxpooling4dwithsourcetensor?language=objc)). 3d tensors can be expanded to become 4d tensors, passed to 4d pooling operations, and then squeezed back to 3d tensors. This wouldn't affect the accuracy of the results when compared to direct 3d pooling operations. So, we can start working to add support for 3d pooling operations on the MPS backend.

cc @kulinseth @albanD @malfet @DenisVieriu97 @razarmehr @abhudev @mattiaspaul

### Alternatives

_No response_

### Additional context

This feature, along with the introduction of 3d convolutions in #99246 would open up lots of possible model architectures for 3D inputs.

Full disclosure: I already have a working implementation of MaxPool3D support for MPS, and @mattiaspaul already has something in the works to address this as well.

| 0 |

2,708 | 100,656 |

On UMA systems, pytorch fails to reserve memory exceeding the initial memory size

|

module: rocm, module: memory usage, triaged

|

### 🐛 Describe the bug

When using pytorch with ROCm / HIP on systems with unified memory, eg. AMD APUs, pytorch seems to stick to the inital reported GPU memory (which is 512 MB on my system for example), even if plenty of system RAM (and so candidate VRAM) is available. It seems the available memory is only polled once (or with an unreliable method), and after using up this memory, torch bails out like:

"HIP out of memory. Tried to allocate 2.00 MiB (GPU 0; 512.00 MiB total capacity; 455.97 MiB already allocated; 42.00 MiB free; 470.00 MiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_HIP_ALLOC_CONF"

Other applications on my system (eg. OpenGL) manage to use 4GB+ of GPU memory. So it seems pytorch is stuck to the initial reported "free" GPU memory forever.

### Versions

PyTorch version: 2.0.0+rocm5.4.2

Is debug build: False

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: 5.4.22803-474e8620

OS: Ubuntu 22.10 (x86_64)

GCC version: (Ubuntu 12.2.0-3ubuntu1) 12.2.0

Clang version: Could not collect

CMake version: version 3.26.3

Libc version: glibc-2.36

Python version: 3.10.7 (main, Mar 10 2023, 10:47:39) [GCC 12.2.0] (64-bit runtime)

Python platform: Linux-6.2.0-060200-generic-x86_64-with-glibc2.36

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: AMD Radeon Graphics

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: 5.4.22803

MIOpen runtime version: 2.19.0

Is XNNPACK available: True

CPU:

Architektur: x86_64

CPU Operationsmodus: 32-bit, 64-bit

Adressgrößen: 48 bits physical, 48 bits virtual

Byte-Reihenfolge: Little Endian

CPU(s): 16

Liste der Online-CPU(s): 0-15

Anbieterkennung: AuthenticAMD

Modellname: AMD Ryzen 9 6900HX with Radeon Graphics

Prozessorfamilie: 25

Modell: 68

Thread(s) pro Kern: 2

Kern(e) pro Socket: 8

Sockel: 1

Stepping: 1

BogoMIPS: 6587.65

Markierungen: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid aperfmperf rapl pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_llc mwaitx cpb cat_l3 cdp_l3 hw_pstate ssbd mba ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 erms invpcid cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr rdpru wbnoinvd cppc arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold avic v_vmsave_vmload vgif v_spec_ctrl umip pku ospke vaes vpclmulqdq rdpid overflow_recov succor smca fsrm

Virtualisierung: AMD-V

L1d Cache: 256 KiB (8 instances)

L1i Cache: 256 KiB (8 instances)

L2 Cache: 4 MiB (8 instances)

L3 Cache: 16 MiB (1 instance)

NUMA-Knoten: 1

NUMA-Knoten0 CPU(s): 0-15

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Not affected

Vulnerability Retbleed: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Retpolines, IBPB conditional, IBRS_FW, STIBP always-on, RSB filling, PBRSB-eIBRS Not affected

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] clip-anytorch==2.5.2

[pip3] mypy-extensions==1.0.0

[pip3] numpy==1.23.5

[pip3] open-clip-torch==2.16.0

[pip3] pytorch-lightning==1.7.7

[pip3] pytorch-triton-rocm==2.0.2

[pip3] torch==2.0.0+rocm5.4.2

[pip3] torch-fidelity==0.3.0

[pip3] torchaudio==2.0.0+rocm5.4.2

[pip3] torchcontrib==0.0.2

[pip3] torchdiffeq==0.2.3

[pip3] torchlibrosa==0.0.9

[pip3] torchmetrics==0.11.4

[pip3] torchsde==0.2.5

[pip3] torchvision==0.15.1+rocm5.4.2

[conda] No relevant packages

cc @jeffdaily @sunway513 @jithunnair-amd @pruthvistony @ROCmSupport

| 0 |

2,709 | 100,654 |

UserWarning: must run observer before calling calculate_qparams. Returning default values.

|

oncall: quantization, triaged

|

### 🐛 Describe the bug

I am testing out quantization results for a resnet based model. I started with resnet18. Using both eager and fx mode, I get the below warning, and the subsequent accuracy of the quantized model is close to zero, making me suspicious whether calibration is happening correctly if at all.

**/home/chinmay/anaconda3/envs/v_pytorch2/lib/python3.8/site-packages/torch/ao/quantization/utils.py:302: UserWarning: must run observer before calling calculate_qparams. Returning default values.

warnings.warn(**

I follow the code shown in the tutorials, pasting the fx code below, but the same issue occurs with eager as well.

[Code](https://gist.github.com/chinmayjog13/c66226681d538382014a9140e0bc8de1)

```[tasklist]

### Tasks

- [ ] Add a draft title or issue reference here

```

### Versions

```

Collecting environment information...

PyTorch version: 2.0.0

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.6 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.16 (default, Mar 2 2023, 03:21:46) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.8.0-63-generic-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA A100 80GB PCIe

GPU 1: NVIDIA A100 80GB PCIe

GPU 2: NVIDIA A100 80GB PCIe

GPU 3: NVIDIA A100 80GB PCIe

GPU 4: NVIDIA A100 80GB PCIe

GPU 5: NVIDIA A100 80GB PCIe

GPU 6: NVIDIA A100 80GB PCIe

GPU 7: NVIDIA A100 80GB PCIe

Nvidia driver version: 515.105.01

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.5.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 43 bits physical, 48 bits virtual

CPU(s): 64

On-line CPU(s) list: 0-63

Thread(s) per core: 1

Core(s) per socket: 32

Socket(s): 2

NUMA node(s): 2

Vendor ID: AuthenticAMD

CPU family: 23

Model: 49

Model name: AMD EPYC 7502 32-Core Processor

Stepping: 0

Frequency boost: enabled

CPU MHz: 1498.611

CPU max MHz: 3354.4919

CPU min MHz: 1500.0000

BogoMIPS: 5000.33

Virtualization: AMD-V

L1d cache: 2 MiB

L1i cache: 2 MiB

L2 cache: 32 MiB

L3 cache: 256 MiB

NUMA node0 CPU(s): 0-31

NUMA node1 CPU(s): 32-63

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Full AMD retpoline, IBPB conditional, IBRS_FW, STIBP disabled, RSB filling

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid aperfmperf pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_llc mwaitx cpb cat_l3 cdp_l3 hw_pstate sme ssbd mba sev ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr rdpru wbnoinvd arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold avic v_vmsave_vmload vgif umip rdpid overflow_recov succor smca

Versions of relevant libraries:

[pip3] numpy==1.24.3

[pip3] torch==2.0.0

[pip3] torchaudio==2.0.0

[pip3] torchvision==0.15.0

[pip3] triton==2.0.0

[conda] blas 1.0 mkl

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py38h7f8727e_0

[conda] mkl_fft 1.3.1 py38hd3c417c_0

[conda] mkl_random 1.2.2 py38h51133e4_0

[conda] numpy 1.24.3 py38h14f4228_0

[conda] numpy-base 1.24.3 py38h31eccc5_0

[conda] pytorch 2.0.0 py3.8_cuda11.7_cudnn8.5.0_0 pytorch

[conda] pytorch-cuda 11.7 h778d358_3 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 2.0.0 py38_cu117 pytorch

[conda] torchtriton 2.0.0 py38 pytorch

[conda] torchvision 0.15.0 py38_cu117 pytorch

```

cc @jerryzh168 @jianyuh @raghuramank100 @jamesr66a @vkuzo @jgong5 @Xia-Weiwen @leslie-fang-intel

| 25 |

2,710 | 100,637 |

Optimal Batch Size Selection in Torchdynamo Benchmarks for Different GPU Memory Sizes

|

triaged, oncall: pt2

|

### 🚀 The feature, motivation and pitch

The torchdynamo benchmarking scripts come with default batch size for each model based on A100 40GB GPU tests. When adapting these scripts for training on smaller sized GPUs, we see OOM errors. The performance and accuracy tests will fail for most models and the benchmarking results becomes less informative. Thus it makes more sense to assign an optimal batch size according to the actual GPU memory size for each model, where the optimal batch size map will be kept as a list in the directory `benchmarks/dynamo`. By adding this batch size selection feature in the benchmarking scripts for all model suites, the benchmarking can be adapted by wider communities and suits their needs for testing and comparison among different GPU types.

### Alternatives

_No response_

### Additional context

_No response_

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @anijain2305

| 7 |

2,711 | 100,636 |

tracing does not work when torch.distributions is involved

|

oncall: jit

|

### 🐛 Describe the bug

Basically the title, i am working on a reinforcement learning model and wanted to trace it to a scriptmodel to use it in C++, but it wont trace when torch.distributions, specifically the MultivariateNormal distribution (i have not tried the others).

I define a module that uses the distribution like this

```python

class ActorModel(torch.nn.Module):

def __init__(self, actor):

super().__init__()

self.actor = actor

def forward(self, x):

logits = self.actor(x)[0]

batch_size = logits.shape[0]

action_dim = 3

# Split the logits into the mean and Cholesky factor

tril_flat = logits[:, action_dim:]

mean = logits[:, :action_dim]

tril_flat = torch.where(tril_flat == 0, 1e-8, tril_flat).detach()

tril = torch.zeros(batch_size, action_dim, action_dim, device=logits.device)

tril[:, torch.tril(torch.ones(action_dim, action_dim, device=logits.device)).bool()] = tril_flat

# Construct the multivariate normal distribution

dist = MultivariateNormal(mean, scale_tril=tril)

return dist.sample()

```

and I then (try to) trace it like this

```python

actor.load_state_dict(policy.actor.state_dict())

actor = actor.to(torch.device('cpu'))

actor_module = ActorModel(actor)

actor_module.eval()

for param in actor_module.parameters():

param.requires_grad = False

with torch.no_grad():

example_input = torch.rand((1, 2, 290))

traced_script_last_model = torch.jit.script(actor_module, example_input)

```

The result is a fail in the sanity check, along with a warning about non-deterministic nodes

```

/home/bigbaduser/.local/lib/python3.11/site-packages/torch/jit/_trace.py:1084: TracerWarning: Trace had nondeterministic nodes. Did you forget call .eval() on your model? Nodes:

%bvec : Float(1, 3, strides=[3, 1], requires_grad=0, device=cpu) = aten::normal(%588, %596, %597) # /home/bigbaduser/.local/lib/python3.11/site-packages/torch/distributions/u

tils.py:48:0

This may cause errors in trace checking. To disable trace checking, pass check_trace=False to torch.jit.trace()

_check_trace(

/home/bigbaduser/.local/lib/python3.11/site-packages/torch/jit/_trace.py:1084: TracerWarning: Output nr 1. of the traced function does not match the corresponding output of the Pytho

n function. Detailed error:

Tensor-likes are not close!

Mismatched elements: 3 / 3 (100.0%)

Greatest absolute difference: 6.103819370269775 at index (0, 2) (up to 1e-05 allowed)

Greatest relative difference: 9.780014123811462 at index (0, 1) (up to 1e-05 allowed)

_check_trace(

```

And I know that the issue is in the distributions because when I try to trace the model itself with

```python

with torch.no_grad():

example_input = torch.rand((1, 2, 290))

traced_script_last_model = torch.jit.script(lambda x: actor(x)[0], example_input)

```

it works without issue. I thought it could be the `torch.where` since that is (at least in my head, i dont know how it is implemented) a control flow operation, but i tried deleting that line and it didn't matter, same error

### Versions

```

Collecting environment information...

PyTorch version: 2.0.0+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Arch Linux (x86_64)

GCC version: (GCC) 13.1.1 20230429

Clang version: 15.0.7

CMake version: version 3.26.3

Libc version: glibc-2.37

Python version: 3.11.3 (main, Apr 5 2023, 15:52:25) [GCC 12.2.1 20230201] (64-bit runtime)

Python platform: Linux-6.3.1-arch1-1-x86_64-with-glibc2.37

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3080 Ti

Nvidia driver version: 530.41.03

cuDNN version: Probably one of the following:

/usr/lib/libcudnn.so.8.8.0

/usr/lib/libcudnn_adv_infer.so.8.8.0

/usr/lib/libcudnn_adv_train.so.8.8.0

/usr/lib/libcudnn_cnn_infer.so.8.8.0

/usr/lib/libcudnn_cnn_train.so.8.8.0

/usr/lib/libcudnn_ops_infer.so.8.8.0

/usr/lib/libcudnn_ops_train.so.8.8.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 39 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 6

On-line CPU(s) list: 0-5

Vendor ID: GenuineIntel

Model name: Intel(R) Core(TM) i5-8400 CPU @ 2.80GHz

CPU family: 6

Model: 158

Thread(s) per core: 1

Core(s) per socket: 6

Socket(s): 1

Stepping: 10

CPU(s) scaling MHz: 95%

CPU max MHz: 4000.0000

CPU min MHz: 800.0000

BogoMIPS: 5602.18

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault invpcid_single pti ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid mpx rdseed adx smap clflushopt intel_pt xsaveopt xsavec xgetbv1 xsaves dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp

Virtualization: VT-x

L1d cache: 192 KiB (6 instances)

L1i cache: 192 KiB (6 instances)

L2 cache: 1.5 MiB (6 instances)

L3 cache: 9 MiB (1 instance)

NUMA node(s): 1

NUMA node0 CPU(s): 0-5

Vulnerability Itlb multihit: KVM: Mitigation: VMX disabled

Vulnerability L1tf: Mitigation; PTE Inversion; VMX conditional cache flushes, SMT disabled

Vulnerability Mds: Vulnerable: Clear CPU buffers attempted, no microcode; SMT disabled

Vulnerability Meltdown: Mitigation; PTI

Vulnerability Mmio stale data: Vulnerable: Clear CPU buffers attempted, no microcode; SMT disabled

Vulnerability Retbleed: Mitigation; IBRS

Vulnerability Spec store bypass: Vulnerable

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; IBRS, IBPB conditional, STIBP disabled, RSB filling, PBRSB-eIBRS Not affected

Vulnerability Srbds: Vulnerable: No microcode

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] numpy==1.24.3

[pip3] torch==2.0.0

[pip3] triton==2.0.0

[conda] Could not collect

```

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 0 |

2,712 | 100,626 |

Will Deep Implicit Models ever become first class citizens in PyTorch?

|

module: autograd, triaged, oncall: pt2, module: functorch

|

### 🚀 The feature, motivation and pitch

PyTorch makes it unnecessarily difficult to work with [Deep Implicit Models](https://implicit-layers-tutorial.org/). Deep Implicit Layers/Models are characterized by the need to implement custom backward passes to be efficient, making use of the [implicit function theorem](https://en.wikipedia.org/wiki/Implicit_function_theorem).

In particular, the following issues persist:

- JIT is incompatible with custom backwards. The only way around this is writing custom C++ extensions. https://github.com/pytorch/pytorch/issues/35749

- Implementing custom backward hooks for a whole `nn.Module` is extremely counter-intuitive, consider this example from Chapter 4:

```python

def forward(self, x):

# compute forward pass and re-engage autograd tape

with torch.no_grad():

z, self.forward_res = self.solver(

lambda z : self.f(z, x), torch.zeros_like(x), **self.kwargs)

z = self.f(z,x)

# set up Jacobian vector product (without additional forward calls)

z0 = z.clone().detach().requires_grad_()

f0 = self.f(z0,x)

def backward_hook(grad):

g, self.backward_res = self.solver(

lambda y : autograd.grad(f0, z0, y, retain_graph=True)[0] + grad,

grad, **self.kwargs)

return g

z.register_hook(backward_hook)

return z

```

One needs to `no_grad` the forward, and then manually surgically insert the custom backward.

- Custom backward of nn.Modules are also incompatible with JIT, and it's unclear how to work around it.

### Alternatives

JAX supports per-function custom backward overrides via decorators: https://jax.readthedocs.io/en/latest/notebooks/Custom_derivative_rules_for_Python_code.html

```python

import jax.numpy as jnp

from jax import custom_jvp

@custom_jvp

def f(x, y):

return jnp.sin(x) * y

@f.defjvp

def f_jvp(primals, tangents):

x, y = primals

x_dot, y_dot = tangents

primal_out = f(x, y)

tangent_out = jnp.cos(x) * x_dot * y + jnp.sin(x) * y_dot

return primal_out, tangent_out

```

If supported, this would solve all 3 issues.

### Additional context

_No response_

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7 @soumith @msaroufim @wconstab @ngimel @bdhirsh @Chillee @samdow @kshitij12345 @janeyx99

| 12 |

2,713 | 100,623 |

Fix static lib

|

triaged, open source, ciflow/binaries, release notes: releng

|

Fix linking issues when building with BUILD_SHARED_LIBS=OFF.

Added 2 CI jobs: one that builds with BUILD_SHARED_LIBS=OFF with cuda12.1, and another job that creates a project from a cmake file using the previously built libtorch and runs it.

This PR is using https://github.com/pytorch/builder/pull/1465 to test the changes work.

The third commit changes the builder branch to my branch in order to do the test.

Fixes #87499

| 10 |

2,714 | 100,617 |

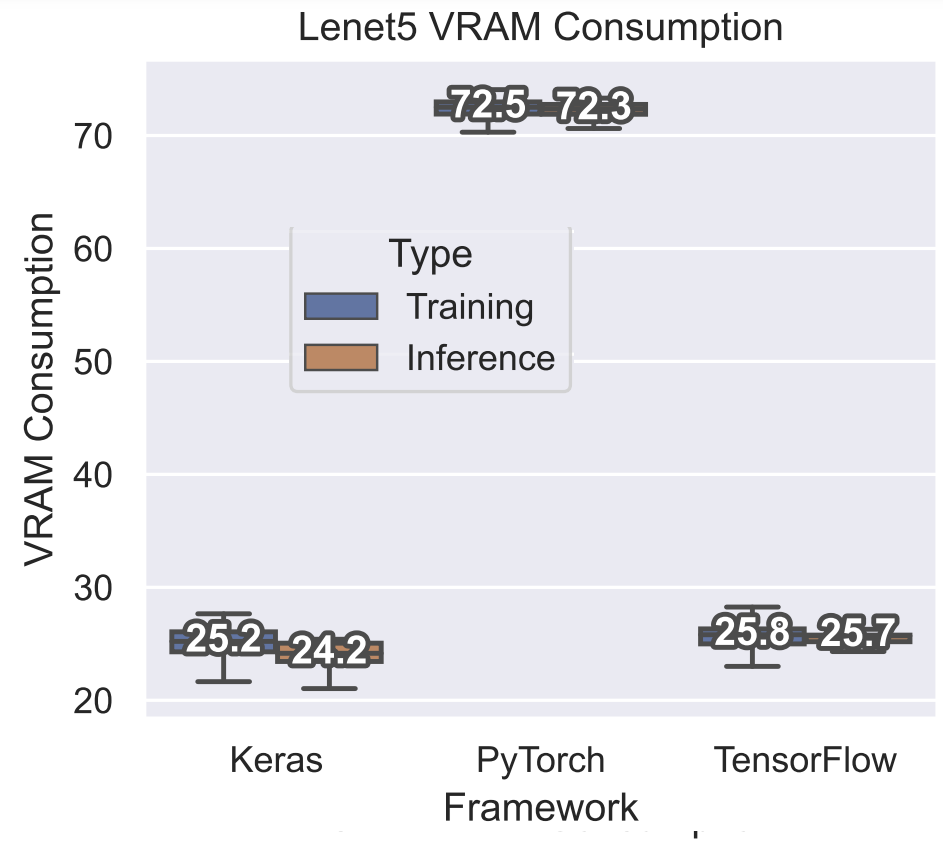

GPU VRAM usage significantly higher for Lenet5 models when compared to other frameworks

|

triaged, better-on-discuss-forum

|

### 🐛 Describe the bug

Hello everybody.

I’ve been experimenting with different models and different frameworks, and I’ve noticed that, when using GPU, training Lenet-5 on the MNIST dataset on PyTorch leads to higher GPU VRAM usage when compared to the Keras and TensorFlow v1.X implementations.

I'm on a Ubuntu 18.04.4 system equipped with an NVIDIA Quadro RTX 4000 GPU with 8GB of VRAM and an Intel(R) Core(TM) i9-9900K CPU running at 3.60GHz.

Here are boxplots that showcase the performance of numerous Lenet5:

Any ideas on what may be causing this?

### Versions

The version of relevant libraries are:

numpy==1.19.5

torch==1.10.0

torchaudio==0.10.0

torchvision==0.11.1

mkl==2022.2.1

mkl-fft==1.3.0

mkl-random==1.2.1

mkl-service==2.4.0

| 2 |

2,715 | 100,584 |

[doc] torch.scalar_tensor doc is missing

|

module: docs, triaged

|

### 📚 The doc issue

```python

>>> import torch

>>> k = 4

>>> torch.scalar_tensor(k)

tensor(4.)

>>> torch.scalar_tensor.__doc__

>>>

```

Main branch: `2.1.0a0+git2ac6ee7`

cc @svekars @carljparker

| 0 |

2,716 | 100,582 |

Synchronization issue when combining DPP and RPC - "Parameter marked twice"

|

oncall: distributed

|

### 🐛 Describe the bug

I'm using DDP in conjunction with RPC to create a master-worker setup to train a DQN agent using multiple GPUs in parallel. The master process manages the game and memory buffer and fits one of the partitions of the data, while the workers only fit their respective partitions of the data. I'm training on 2 NVIDIA 3090 GPUs within a single machine.

The minimal code to reproduce the error is shown below. The problem appears to be that the master process triggers a second call to the worker's `_train_on_batch` before the first call is completed. As a result, the parameters of the worker get marked twice, which throws the error. This seems like a race condition since the error isn't reproduced 100% of the time. I added some prints to the code (not shown in the example for clarity purposes) which confirm this is the issue:

```

2023-05-03 20:48:59,315 [INFO] rank=0 | start local_iter=0 | master_iter=0

2023-05-03 20:48:59,330 [INFO] rank=1 | start local_iter=0 | master_iter=0

2023-05-03 20:48:59,935 [INFO] rank=0 | bckwd done local_iter=0 | master_iter=0

2023-05-03 20:48:59,937 [INFO] rank=0 | optim done local_iter=0 | master_iter=0

2023-05-03 20:48:59,938 [INFO] rank=0 | start local_iter=1 | master_iter=1

2023-05-03 20:48:59,939 [INFO] rank=1 | start local_iter=0 | master_iter=1

2023-05-03 20:48:59,959 [INFO] rank=1 | bckwd done local_iter=0 | master_iter=0

2023-05-03 20:48:59,959 [INFO] rank=1 | bckwd done local_iter=0 | master_iter=1

On WorkerInfo(id=1, name=worker_1):

RuntimeError('Expected to mark a variable ready only once. This error is caused [...]

```

In the logs above, `rank` is the process rank (`0` for master, `1` for worker), `local_iter` is the `_train_on_batch` call number (i.e `0` for the first call, `1` for the second call), and `master_iter` is the number of `_train_on_batch` calls the master process has completed (iterations numbers are incremented just before exiting `_train_on_batch`). `backwd done` and `optim done` means the process has returned from the `loss.backward` and `optimizer.step` calls, respectively. We see that the master process has finished it's first training iteration when it signals the worker to start its second iteration, while the latter hasn't finished its first iteration yet.

The error is fixed adding a `dist.barrier` before exiting `_train_on_batch` method, which points towards this being a process synchronization issue. However, the DDP documentation states that

> Constructor, forward method, and differentiation of the output (or a function of the output of this module) are distributed synchronization points. Take that into account in case different processes might be executing different code.

Thus, shouldn't the processes be synchronized on the `preds = self.model(batch.data.to(self.rank))` call? Or is the `dist.barrier` usage intended in this use case?

Minimal code to reproduce the error (might not be reproduced 100% of the time - bigger models tend to show more failures):

```python

import os

from dataclasses import dataclass

import torch

import torch.nn as nn

import torch.distributed as dist

import torch.distributed.rpc as rpc

import torch.multiprocessing as mp

from torch.nn.parallel import DistributedDataParallel as DDP

from torch.optim import Optimizer

MASTER_ADDR = "localhost"

MASTER_PORT = "29500"

BACKEND = "nccl"

def _set_master_addr() -> None:

os.environ["MASTER_ADDR"] = MASTER_ADDR

os.environ["MASTER_PORT"] = MASTER_PORT

@dataclass

class Batch:

data: torch.Tensor

labels: torch.Tensor

class TrainOnBatchMixin:

def _train_on_batch(self, batch: Batch) -> float:

preds = self.model(batch.data.to(self.rank))

self.optimizer.zero_grad()

batch_loss = self.loss(preds, batch.labels.to(self.rank))

batch_loss.backward()

self.optimizer.step()

class Trainer(TrainOnBatchMixin):

def __init__(

self,

model: nn.Module,

optimizer: Optimizer,

in_feats: int,

out_feats: int,

loss: nn.Module = nn.MSELoss(),

num_workers: int = 1,

num_epochs: int = 5,

) -> None:

self.model = model

self.optimizer = optimizer

self.in_feats = in_feats

self.out_feats = out_feats

self.loss = loss

self.num_workers = num_workers

self.num_epochs = num_epochs

self.rank = 0

self.world_size = self.num_workers + 1

self._setup()

def _setup(self) -> None:

# spawn workers

for rank in range(1, self.world_size):

worker_process = mp.Process(

target=Worker.init_process,

kwargs={"rank": 1, "world_size": self.world_size},

)

worker_process.start()

# initialize self

_set_master_addr()

# init self RPC

rpc.init_rpc("master", rank=0, world_size=self.world_size)

# init self DDP

dist.init_process_group(rank=0, world_size=self.world_size, backend=BACKEND)

self.worker_rrefs = [

rpc.remote(

f"worker_{rank}",

Worker,

kwargs={

"rank": rank,

"model": self.model,

"optimizer": self.optimizer,

"loss": self.loss,

},

)

for rank in range(1, self.world_size)

]

self.model = DDP(self.model.to(0), device_ids=[0])

def train(self) -> None:

for num_epoch in range(self.num_epochs):

# create fake data

data = torch.tensor(

[[1] * self.in_feats, [12] * self.in_feats], dtype=torch.float32

)

labels = torch.tensor(

[[1] * self.out_feats, [13] * self.out_feats], dtype=torch.float32

)

batch = Batch(data, labels)

# execute workers

for worker_rref in self.worker_rrefs:

rpc.rpc_async(

worker_rref.owner(),

worker_rref.rpc_sync()._train_on_batch,

args=(batch,),

)

# execute self

self._train_on_batch(batch)

rpc.shutdown()

class Worker(TrainOnBatchMixin):

def __init__(

self,

rank: int,

model: nn.Module,

optimizer: Optimizer,

loss: nn.Module,

) -> None:

self.rank = rank

self.model = DDP(model.to(rank), device_ids=[rank])

self.optimizer = self._copy_optimizer(optimizer, self.model)

self.loss = type(loss)()

@staticmethod

def _copy_optimizer(optimizer: Optimizer, model: nn.Module) -> Optimizer:

return type(optimizer)(

params=[p for p in model.parameters()], **optimizer.defaults

)

@staticmethod

def init_process(

rank: int,

world_size: int,

) -> None:

_set_master_addr()

# init RPC

rpc.init_rpc(name=f"worker_{rank}", rank=rank, world_size=world_size)

# init DDP

dist.init_process_group(rank=rank, world_size=world_size, backend=BACKEND)

# block until trainer has given shut down signal

rpc.shutdown()

if __name__ == "__main__":

in_feats = int(1e5)

out_feats = 10

model = nn.Linear(in_feats, out_feats)

optimizer = torch.optim.SGD(params=model.parameters(), lr=1e-3)

trainer = Trainer(

model=model, optimizer=optimizer, in_feats=in_feats, out_feats=out_feats

)

trainer.train()

```

### Versions

PyTorch version: 2.0.0+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.26.3

Libc version: glibc-2.31

Python version: 3.9.4 (default, Apr 23 2023, 21:06:21) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-144-generic-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: 10.1.243

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

Nvidia driver version: 495.29.05

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 39 bits physical, 48 bits virtual

CPU(s): 16

On-line CPU(s) list: 0-15

Thread(s) per core: 2

Core(s) per socket: 8

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 158

Model name: Intel(R) Core(TM) i9-9900K CPU @ 3.60GHz

Stepping: 12

CPU MHz: 4868.364

CPU max MHz: 5000.0000

CPU min MHz: 800.0000

BogoMIPS: 7200.00

Virtualization: VT-x

L1d cache: 256 KiB

L1i cache: 256 KiB

L2 cache: 2 MiB

L3 cache: 16 MiB

NUMA node0 CPU(s): 0-15

Vulnerability Itlb multihit: KVM: Mitigation: Split huge pages

Vulnerability L1tf: Not affected

Vulnerability Mds: Mitigation; Clear CPU buffers; SMT vulnerable

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Mitigation; Clear CPU buffers; SMT vulnerable

Vulnerability Retbleed: Mitigation; IBRS

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; IBRS, IBPB conditional, RSB filling, PBRSB-eIBRS Not affected

Vulnerability Srbds: Mitigation; Microcode

Vulnerability Tsx async abort: Mitigation; Clear CPU buffers; SMT vulnerable

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault invpcid_single ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm mpx rdseed adx smap clflushopt intel_pt xsaveopt xsavec xgetbv1 xsaves dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp md_clear flush_l1d arch_capabilities

Versions of relevant libraries:

[pip3] flake8==4.0.1

[pip3] mypy-extensions==1.0.0

[pip3] numpy==1.24.3

[pip3] torch==2.0.0

[pip3] triton==2.0.0

[conda] Could not collect

cc @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @H-Huang @kwen2501 @awgu

| 3 |

2,717 | 100,578 |

Add support for aten::tril_indices for MPS backend

|

feature, triaged, module: linear algebra, module: mps

|

### 🐛 Describe the bug

First time contributors are welcome! 🙂

Add support for 'aten::tril_indices' for MPS backend. Generic support for adding operations to MPS backend is captured here: https://github.com/pytorch/pytorch/wiki/MPS-Backend#adding-op-for-mps-backend

### Versions

N/A

cc @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano @kulinseth @albanD @malfet @DenisVieriu97 @razarmehr @abhudev

| 0 |

2,718 | 100,574 |

undocumented error on torch.autograd.Function.jvp for non-Tensor forward returns

|

module: docs, module: autograd, triaged, actionable

|

### 🐛 Describe the bug

Returning non-Tensor values from torch.autograd.Function.forward works fine with backpropoagation but breaks jvp with the obscure error "RuntimeError: bad optional access". Either forward should require Tensor return values or this should work.

```

from torch import randn

from torch.autograd import Function

from torch.autograd.forward_ad import dual_level

from torch.autograd.forward_ad import make_dual

class TestFunc1(Function):

@staticmethod

def forward(ctx, x):

return 1, x

@staticmethod

def backward(ctx, dy, dz):

return dz

@staticmethod

def jvp(ctx, dz):

return None, dz

x = randn(5, requires_grad=True)

# this works

z2 = TestFunc1.apply(x)[1].sum().backward()

assert x.grad is not None

# this breaks

dx = randn(5)

with dual_level():

x2 = make_dual(x, dx)

z2 = TestFunc1.apply(x2) # raises RuntimeError: bad optional access

```

### Versions

PyTorch version: 2.0.0+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Manjaro Linux (x86_64)

GCC version: (GCC) 12.2.1 20230201

Clang version: 15.0.7

CMake version: version 3.26.3

Libc version: glibc-2.37

Python version: 3.11.3 (main, Apr 13 2023, 18:03:05) [GCC 12.2.1 20230201] (64-bit runtime)

Python platform: Linux-6.1.25-1-MANJARO-x86_64-with-glibc2.37

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3080

Nvidia driver version: 530.41.03

cuDNN version: Probably one of the following:

/usr/lib/libcudnn.so.8.8.0

/usr/lib/libcudnn_adv_infer.so.8.8.0

/usr/lib/libcudnn_adv_train.so.8.8.0

/usr/lib/libcudnn_cnn_infer.so.8.8.0

/usr/lib/libcudnn_cnn_train.so.8.8.0

/usr/lib/libcudnn_ops_infer.so.8.8.0

/usr/lib/libcudnn_ops_train.so.8.8.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 43 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 24

On-line CPU(s) list: 0-23

Vendor ID: AuthenticAMD

Model name: AMD Ryzen 9 3900X 12-Core Processor

CPU family: 23

Model: 113

Thread(s) per core: 2

Core(s) per socket: 12

Socket(s): 1

Stepping: 0

Frequency boost: enabled

CPU(s) scaling MHz: 78%

CPU max MHz: 4672,0698

CPU min MHz: 2200,0000

BogoMIPS: 7588,32

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid aperfmperf rapl pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_llc mwaitx cpb cat_l3 cdp_l3 hw_pstate ssbd mba ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr rdpru wbnoinvd arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold avic v_vmsave_vmload vgif v_spec_ctrl umip rdpid overflow_recov succor smca sev sev_es

Virtualization: AMD-V

L1d cache: 384 KiB (12 instances)

L1i cache: 384 KiB (12 instances)

L2 cache: 6 MiB (12 instances)

L3 cache: 64 MiB (4 instances)

NUMA node(s): 1

NUMA node0 CPU(s): 0-23

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Not affected

Vulnerability Retbleed: Mitigation; untrained return thunk; SMT enabled with STIBP protection

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Retpolines, IBPB conditional, STIBP always-on, RSB filling, PBRSB-eIBRS Not affected

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] flake8==6.0.0

[pip3] mypy==1.1.1

[pip3] mypy-extensions==1.0.0

[pip3] numpy==1.24.2

[pip3] torch==2.0.0

[pip3] triton==2.0.0

[conda] Could not collect

cc @svekars @carljparker @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 2 |

2,719 | 100,562 |

Use a label instead of body text for merge blocking CI SEVs

|

module: ci, triaged

|

See https://github.com/pytorch/pytorch/pull/100559#pullrequestreview-1411334737

cc @seemethere @malfet @pytorch/pytorch-dev-infra

| 0 |

2,720 | 100,561 |

[ONNX] Opset 18 support for TorchScript exporter

|

module: onnx, triaged

|

* Modified ONNX Ops

- [ ] Reduce ops. E.g., https://onnx.ai/onnx/operators/text_diff_ReduceMax_13_18.html#l-onnx-op-reducemax-d13-18

- [ ] Pad. https://onnx.ai/onnx/operators/text_diff_Pad_13_18.html#l-onnx-op-pad-d13-18

- [ ] Resize. https://onnx.ai/onnx/operators/text_diff_Resize_13_18.html#l-onnx-op-resize-d13-18

- [ ] ScatterElements, ScatterND. Native reduction mode https://onnx.ai/onnx/operators/text_diff_ScatterElements_16_18.html#l-onnx-op-scatterelements-d16-18

- [ ] Split. New attribute `num_outputs`. https://onnx.ai/onnx/operators/text_diff_Split_13_18.html#l-onnx-op-split-d13-18

* New ONNX Ops

- [ ] CenterCropPad

- [ ] Col2Im

- [ ] Mish

- [ ] BitwiseAnd, BitwiseNot, BitwiseOr, BitwiseXor

- [ ] GroupNormalization

| 1 |

2,721 | 100,528 |

Backward hook execution order changes when input.requires_grad is False

|

module: docs, module: autograd, module: nn, triaged, actionable

|

### 🐛 Describe the bug

When using the `register_module_full_backward_pre_hook` and `register_module_full_backward_hook` functions, the order of hooks execution is affected by whether the input requires gradient or not. If the input does not require a gradient, then the order of execution becomes incorrect.

```

------ train_data.requires_grad = True ------

Push: MSELoss

Pop: MSELoss

-->Push: Sequential

Push: Sequential<--

Push: Linear

Pop: Linear

Push: ReLU

Pop: ReLU

Push: Linear

Pop: Linear

Pop: Sequential

Push: Sequential

Push: Linear

Pop: Linear

Push: ReLU

Pop: ReLU

Push: Linear

Pop: Linear

Pop: Sequential

Pop: Sequential

------ train_data.requires_grad = False ------

Push: MSELoss

Pop: MSELoss

-->Push: Sequential

Pop: Sequential

Push: Sequential<--

Push: Linear

Pop: Linear

Push: ReLU

Pop: ReLU

Push: Linear

Pop: Linear

Pop: Sequential

Push: Sequential

Pop: Sequential

Push: Linear

Pop: Linear

Push: ReLU

Pop: ReLU

Push: Linear

Pop: Linear

Finished Training

```

Code to reproduce:

```python

import torch

import torch.nn as nn

import torch.optim as optim

# Define the neural network architecture

net = nn.Sequential(nn.Sequential(

nn.Linear(10, 20),

nn.ReLU(),

nn.Linear(20, 1)

), nn.Sequential(

nn.Linear(1, 20),

nn.ReLU(),

nn.Linear(20, 1)))

# Create dummy data

train_data = torch.randn(100, 10, requires_grad=True)

train_labels = torch.randn(100, 1)

# Define the loss function and optimizer

criterion = nn.MSELoss()

# Train the neural network

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

net = net.to(device)

optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

def _pre_hook(module, *_, **__):

print('Push: ', f'{module.__class__.__name__}')

def _after_hook(module, *_, **__):

print('Pop: ', f'{module.__class__.__name__}')

torch.nn.modules.module.register_module_full_backward_pre_hook(_pre_hook)

torch.nn.modules.module.register_module_full_backward_hook(_after_hook)

print('------ train_data.requires_grad = True ------')

for epoch in range(1):

running_loss = 0.0

for i in range(1):

inputs, labels = train_data[i].to(device), train_labels[i].to(device)

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

# print('Epoch %d loss: %.3f' % (epoch + 1, running_loss / 100))

running_loss = 0.0

print('------ train_data.requires_grad = False ------')

train_data.requires_grad = False

for epoch in range(1):

running_loss = 0.0

for i in range(1):

inputs, labels = train_data[i].to(device), train_labels[i].to(device)

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

# print('Epoch %d loss: %.3f' % (epoch + 1, running_loss / 100))

running_loss = 0.0

print('Finished Training')

```

### Versions

```

Collecting environment information...

PyTorch version: 1.14.0a0+44dac51

Is debug build: False

CUDA used to build PyTorch: 12.0

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.24.1

Libc version: glibc-2.31

Python version: 3.8.10 (default, Nov 14 2022, 12:59:47) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-77-generic-x86_64-with-glibc2.29

Is CUDA available: True

CUDA runtime version: 12.0.140

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA A30

GPU 1: NVIDIA A30

GPU 2: NVIDIA A30

GPU 3: NVIDIA A30

GPU 4: NVIDIA A30

GPU 5: NVIDIA A30

GPU 6: NVIDIA A30

GPU 7: NVIDIA A30

Nvidia driver version: 525.85.12

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.7.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.7.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 43 bits physical, 48 bits virtual

CPU(s): 256

On-line CPU(s) list: 0-255

Thread(s) per core: 2

Core(s) per socket: 64

Socket(s): 2

NUMA node(s): 8

Vendor ID: AuthenticAMD

CPU family: 23

Model: 49

Model name: AMD EPYC 7742 64-Core Processor

Stepping: 0

Frequency boost: enabled

CPU MHz: 1500.048

CPU max MHz: 2250.0000

CPU min MHz: 1500.0000

BogoMIPS: 4500.40

Virtualization: AMD-V

L1d cache: 4 MiB

L1i cache: 4 MiB

L2 cache: 64 MiB

L3 cache: 512 MiB

NUMA node0 CPU(s): 0-15,128-143

NUMA node1 CPU(s): 16-31,144-159

NUMA node2 CPU(s): 32-47,160-175

NUMA node3 CPU(s): 48-63,176-191

NUMA node4 CPU(s): 64-79,192-207

NUMA node5 CPU(s): 80-95,208-223

NUMA node6 CPU(s): 96-111,224-239

NUMA node7 CPU(s): 112-127,240-255

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Vulnerable, IBPB: disabled, STIBP: disabled

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid aperfmperf pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_llc mwaitx cpb cat_l3 cdp_l3 hw_pstate sme ssbd mba sev ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr wbnoinvd arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold avic v_vmsave_vmload vgif umip rdpid overflow_recov succor smca

Versions of relevant libraries:

[pip3] mypy-extensions==1.0.0

[pip3] numpy==1.22.2

[pip3] pytorch-lightning==1.9.4

[pip3] pytorch-quantization==2.1.2

[pip3] torch==1.14.0a0+44dac51

[pip3] torch-automated-profiler==1.10.0

[pip3] torch-performance-linter==0.2.1.dev36+gd3906c3

[pip3] torch-tb-profiler==0.4.1

[pip3] torch-tensorrt==1.4.0.dev0

[pip3] torchmetrics==0.9.1

[pip3] torchtext==0.13.0a0+fae8e8c

[pip3] torchvision==0.15.0a0

[pip3] triton==2.0.0

[pip3] tritonclient==2.22.4

[conda] Could not collect

```

cc @svekars @carljparker @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7 @mruberry @jbschlosser @walterddr @mikaylagawarecki

| 3 |

2,722 | 100,520 |

DISABLED test_inplace_grad_div_floor_rounding_cuda_float64 (__main__.TestBwdGradientsCUDA)

|

triaged, module: flaky-tests, skipped, oncall: pt2, module: inductor

|

Platforms: inductor

This test was disabled because it is failing in CI. See [recent examples](https://hud.pytorch.org/flakytest?name=test_inplace_grad_div_floor_rounding_cuda_float64&suite=TestBwdGradientsCUDA) and the most recent trunk [workflow logs](https://github.com/pytorch/pytorch/runs/undefined).

Over the past 3 hours, it has been determined flaky in 7 workflow(s) with 7 failures and 7 successes.

**Debugging instructions (after clicking on the recent samples link):**

DO NOT ASSUME THINGS ARE OKAY IF THE CI IS GREEN. We now shield flaky tests from developers so CI will thus be green but it will be harder to parse the logs.

To find relevant log snippets:

1. Click on the workflow logs linked above

2. Click on the Test step of the job so that it is expanded. Otherwise, the grepping will not work.

3. Grep for `test_inplace_grad_div_floor_rounding_cuda_float64`