| Serial Number | Issue Number | Title | Labels | Body | Comments |
|---|---|---|---|---|---|

2,801 | 99,866 |

Logs output_code and inductor do not interact as expected

|

module: logging, triaged

|

### 🐛 Describe the bug

As discussed in https://github.com/pytorch/pytorch/issues/94788#issuecomment-1515441713, setting `inductor` does not generate the `output_code` artifact. This contradicts the definition of `output_code` as an artifact owned by `inductor`. This issue led to the discussion in https://github.com/pytorch/pytorch/pull/99038 about wanting to log the output folder at two different levels, which signals that something is off in the current design. A better way to control this would be to log the output file and the code at two different verbosity levels.

cc @mlazos @kurtamohler

### Versions

master

| 0 |

2,802 | 99,852 |

Slight numerical divergence between torch.compile and eager; shows up in practice on yolov3

|

triaged, oncall: pt2, module: pt2 accuracy

|

### 🐛 Describe the bug

This repro uses the new style accuracy repro infra from https://github.com/pytorch/pytorch/pull/99834 ; to run it, you will need to patch in the two PRs in that stack. This repro does not reproduce without the actual data.

```
import torch._inductor.overrides

import torch
from torch import tensor, device
import torch.fx as fx
from torch._dynamo.testing import rand_strided
from math import inf
from torch.fx.experimental.proxy_tensor import make_fx

# REPLACEABLE COMMENT FOR TESTING PURPOSES

# torch version: 2.0.0a0+gita27bd42
# torch cuda version: 11.4
# torch git version: a27bd42bb9ad39504fdd94ad38a5ad0346f1758b

# CUDA Info:
# nvcc: NVIDIA (R) Cuda compiler driver
# Copyright (c) 2005-2021 NVIDIA Corporation
# Built on Sun_Aug_15_21:14:11_PDT_2021
# Cuda compilation tools, release 11.4, V11.4.120
# Build cuda_11.4.r11.4/compiler.30300941_0

# GPU Hardware Info:
# NVIDIA PG509-210 : 8

from torch.nn import *

class Repro(torch.nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, unsqueeze_1, unsqueeze_3, rsqrt, convolution):
        var_mean = torch.ops.aten.var_mean.correction(convolution, [0, 2, 3], correction = 0, keepdim = True)
        getitem_1 = var_mean[1]; var_mean = None
        sub = torch.ops.aten.sub.Tensor(convolution, getitem_1); convolution = getitem_1 = None
        mul = torch.ops.aten.mul.Tensor(sub, rsqrt); sub = rsqrt = None
        mul_6 = torch.ops.aten.mul.Tensor(mul, unsqueeze_1); mul = unsqueeze_1 = None
        add_4 = torch.ops.aten.add.Tensor(mul_6, unsqueeze_3); mul_6 = unsqueeze_3 = None
        gt = torch.ops.aten.gt.Scalar(add_4, 0); add_4 = None
        return (gt,)

import torch._dynamo.repro.after_aot
reader = torch._dynamo.repro.after_aot.InputReader(save_dir='/tmp/minifier-20230423')
buf0 = reader.storage('b3e108077e73f8bbdefbd419a1798700731646a1', 256, device=device(type='cuda', index=0))
t0 = reader.tensor(buf0, (64, 1, 1))
buf1 = reader.storage('6ee108072a73f8bb41fbd4197ff98700151646a1', 256, device=device(type='cuda', index=0))
t1 = reader.tensor(buf1, (64, 1, 1))
buf2 = reader.storage('65d17de8e97efedb72fa1a01d7f26cc32f948b59', 256, device=device(type='cuda', index=0))
t2 = reader.tensor(buf2, (1, 64, 1, 1))
buf3 = reader.storage('38c695755b4a84a70b07c240f092e2b293280811', 50331648, device=device(type='cuda', index=0))
t3 = reader.tensor(buf3, (4, 64, 192, 256))
args = [t0, t1, t2, t3]
mod = make_fx(Repro(), tracing_mode='symbolic')(*args)

from torch._inductor.compile_fx import compile_fx_inner
from torch._dynamo.debug_utils import same_two_models

compiled = compile_fx_inner(mod, args)

class AccuracyError(Exception):
    pass

if not same_two_models(mod, compiled, args, only_fwd=True):
    raise AccuracyError("Bad accuracy detected")
```

Meta employees only: to fetch the test data, run

```

cd /tmp && manifold getr ai_training_ftw/tree/ezyang/minifier-20230423

```

(or drop it into whatever directory you like and modify the `save_dir` in the repro script.)

I'm hesitant to say this is a bug per se, because the accuracy problem is induced by a `> 0` operation, which will be sensitive to epsilon perturbations if we are unlucky with the particular data we get. And I guess there are a few places that could cause divergence; notably, it looks like there is an opportunity to introduce a fused multiply-add.

This is a bit annoying for accuracy repro extraction though, because the mask is different, and this difference could get amplified if the network is poorly designed! In the case of yolov3, even when this mask changes, the regular model passes accuracy E2E, so who can say if it's a problem. What is a good strategy for letting people know that the accuracy problem here is benign?
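The sensitivity described above can be illustrated in plain Python. The sketch below is only an illustration: the helper names `threshold_mask` and `benign_mismatches` and the tolerance `atol` are assumptions made up for this example and are not part of PyTorch or of the accuracy-repro infrastructure. It shows that reassociating a sum can change whether a value passes `> 0`, and one possible way to label a mask mismatch as benign when both pre-threshold values sit within a tolerance of zero.

```python
# Hypothetical sketch: why a `> 0` mask is sensitive to tiny numeric differences.
# None of these helpers exist in PyTorch; they only illustrate the idea.

def threshold_mask(values):
    """Return the boolean mask produced by `value > 0` for each element."""
    return [v > 0 for v in values]


def benign_mismatches(reference, candidate, atol=1e-6):
    """Split mask mismatches into benign and suspicious index lists.

    A mismatch counts as benign when both pre-threshold values lie within
    `atol` of zero, i.e. the flip can be explained by rounding noise alone.
    """
    benign, suspicious = [], []
    ref_mask = threshold_mask(reference)
    cand_mask = threshold_mask(candidate)
    for i, (r, c) in enumerate(zip(reference, candidate)):
        if ref_mask[i] == cand_mask[i]:
            continue
        if abs(r) <= atol and abs(c) <= atol:
            benign.append(i)
        else:
            suspicious.append(i)
    return benign, suspicious


# Reassociating a sum changes the rounding, which is enough to flip the mask:
# 1e16 + 1.0 rounds back to 1e16 in float64, so the first form yields 0.0.
left_to_right = (1e16 + 1.0) - 1e16
reassociated = (1e16 - 1e16) + 1.0
print(left_to_right > 0, reassociated > 0)

# Values near zero flip under a small perturbation; values far from zero do not.
reference = [1.0, 1e-8, -1e-8, -1.0]
candidate = [v + 1e-7 for v in reference]
print(benign_mismatches(reference, candidate))
```

Whether a tolerance of this kind is appropriate depends on the scale of the activations feeding the comparison, so the value of `atol` here should be read as a placeholder.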

### Versions

master

cc @msaroufim @wconstab @ngimel @bdhirsh @anijain2305 @soumith

| 3 |

2,803 | 99,836 |

NTK notebook calculates wrong object - wrong output dimensions

|

triaged, module: functorch

|

### 📚 The doc issue

The notebook given in https://pytorch.org/functorch/stable/notebooks/neural_tangent_kernels.html does not calculate the NTK - the output depends on the size of the input tensors instead of the size of the network's input. The NTK should be a n_in x n_in x n_out x n_out matrix (https://en.wikipedia.org/wiki/Neural_tangent_kernel), where n_in/n_out are the sizes of the network's inputs/outputs.

### Suggest a potential alternative/fix

_No response_

cc @zou3519 @Chillee @samdow @soumith @kshitij12345 @janeyx99

| 0 |

2,804 | 99,825 |

When backend is nccl, the distributed group type generated by PyTorch 2.0 should be ProcessGroupNCCL, but is ProcessGroup

|

oncall: distributed

|

### 🐛 Describe the bug

Hi, I have discovered an issue. The default distributed group type created by PyTorch 2.0 is ProcessGroup, while in PyTorch 1.8 it is ProcessGroupNCCL. While debugging, I found that the following code returns different values in PyTorch 2.0 and 1.8:

Pytorch 2.0

```

import torch

issubclass(torch._C._distributed_c10d.ProcessGroupNCCL, torch._C._distributed_c10d.ProcessGroup)

False

```

Pytorch 1.8

```

import torch

issubclass(torch._C._distributed_c10d.ProcessGroupNCCL, torch._C._distributed_c10d.ProcessGroup)

True

```

The above issue causes problems in the function `_new_process_group_helper`:

```
if issubclass(type(backend_class), ProcessGroup):
    pg = backend_class
    break
```
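To make the effect of that check concrete, here is a minimal sketch using stand-in classes rather than the real `torch._C._distributed_c10d` types. The names `Backend`, `OldProcessGroupNCCL`, and `NewProcessGroupNCCL` are hypothetical, and the assumption that the NCCL class moved under a different base class is inferred only from the `issubclass` results reported above.

```python
# Stand-in classes, NOT the real torch._C._distributed_c10d types.
class ProcessGroup:
    """Plays the role of the generic process-group class."""


class Backend:
    """Hypothetical separate base class for concrete backends."""


class OldProcessGroupNCCL(ProcessGroup):
    """Old layout: the NCCL class derives from ProcessGroup."""


class NewProcessGroupNCCL(Backend):
    """Assumed new layout: the NCCL class no longer derives from ProcessGroup."""


def is_process_group(obj):
    # Same shape as the check quoted above; for ordinary instances this is
    # equivalent to isinstance(obj, ProcessGroup).
    return issubclass(type(obj), ProcessGroup)


print(is_process_group(OldProcessGroupNCCL()))
print(is_process_group(NewProcessGroupNCCL()))
```

Under the old layout the check passes and the backend object is used directly; under the assumed new layout it does not, so any code relying on that branch takes a different path.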

cc @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @H-Huang @kwen2501 @awgu

| 1 |

2,805 | 99,821 |

Tracer cannot infer type of Seq2SeqLMOutput

|

oncall: jit

|

### Tracer cannot infer type of Seq2SeqLMOutput

An error occurred while tracing the model with `torch.jit.trace`.

The error message is:

RuntimeError: Tracer cannot infer type of Seq2SeqLMOutput

Here is my code:

tokenizer = AutoTokenizer.from_pretrained('./outputs/model_files/')

model = AutoModelForSeq2SeqLM.from_pretrained('./outputs/model_files/')

device = torch.device("cpu")

model.to(device)

model.eval()

sample_sentence = "generate some numbers"

encoding = tokenizer(sample_sentence,

padding="max_length",

max_length=5,

return_tensors="pt",

return_attention_mask=True,

truncation=True)

input_ids = encoding.input_ids

attention_mask = encoding.attention_mask

decoder_input_ids = torch.ones(1,1, dtype=torch.int32) * model.config.decoder_start_token_id

traced_model = torch.jit.trace(model, (input_ids,attention_mask,decoder_input_ids),strict=False)

traced_model.save("./model.pt")

The detailed error output is:

D:\Program Files\Python310\lib\site-packages\transformers\modeling_utils.py:701: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!

if causal_mask.shape[1] < attention_mask.shape[1]:

Traceback (most recent call last):

File "E:\Python\project\Chinese_Chat_T5_Base-main\convertModel.py", line 37, in <module>

traced_model = torch.jit.trace(model, (input_ids,attention_mask,decoder_input_ids),strict=False)

File "D:\Program Files\Python310\lib\site-packages\torch\jit\_trace.py", line 759, in trace

return trace_module(

File "D:\Program Files\Python310\lib\site-packages\torch\jit\_trace.py", line 976, in trace_module

module._c._create_method_from_trace(

RuntimeError: Tracer cannot infer type of Seq2SeqLMOutput(loss=None, logits=tensor([[[-8.0331, -0.6127, 1.7029, ..., -6.0205, -4.9355, -7.5521]]],

grad_fn=<UnsafeViewBackward0>), past_key_values=((tensor([[[[-4.1845e-01, -3.1748e+00, 3.5584e-01, 1.3317e-01, -4.8382e-01,

4.9041e-01, 1.2883e+00, 5.5251e-01, 2.3777e+00, 3.6629e-01,

-2.3793e-01, 1.6337e+00, 9.4133e-01, -1.0904e+00, -2.8644e+00,

-5.2565e-02, 2.9996e-01, -4.1858e-01, -7.8744e-01, -1.7734e+00,

-1.0728e+00, 5.5014e-01, -1.5405e+00, 2.7343e+00, 3.5340e+00,

-1.5999e-02, -7.7990e-01, 4.5489e-01, -2.4964e-01, -2.9343e-01,

7.0564e-01, 9.1929e-01, 3.4561e+00, -6.6381e-01, 8.5702e-01,

6.3156e-01, -7.5711e-01, 1.6548e+00, -8.5602e-01, -9.3094e-01,

9.1188e-02, -8.6472e-01, 6.4054e-01, 4.7034e-01, 3.4763e+00,

-1.0079e+00, 1.2279e-01, 1.5227e+00, 1.6583e-01, 9.4017e-01,

1.5735e+00, 3.4655e-01, -8.0972e-01, 9.2279e-01, 3.1652e-01,

-2.3178e+00, 5.2484e-02, 4.8382e-01, -1.7146e-01, 2.4539e+00,

.......

[-2.7458e-03, -4.8062e-02, -5.2608e-02, ..., -4.8220e-03,

5.0419e-02, 2.8005e-03]]]], grad_fn=<TransposeBackward0>))), decoder_hidden_states=None, decoder_attentions=None, cross_attentions=None, encoder_last_hidden_state=tensor([[[-0.0070, 0.1318, -0.0300, ..., 0.0244, -0.0696, 0.0580],

[-0.0274, 0.0240, -0.0552, ..., -0.0846, -0.0992, 0.0408],

[-0.0647, 0.0068, -0.0779, ..., 0.0064, 0.0316, 0.0111],

[-0.0445, -0.0067, -0.0273, ..., 0.0320, 0.0382, 0.0814],

[-0.0006, 0.0002, 0.0010, ..., -0.0002, 0.0009, -0.0009]]],

grad_fn=<MulBackward0>), encoder_hidden_states=None, encoder_attentions=None)

:Dictionary inputs to traced functions must have consistent type. Found Tensor and Tuple[Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor], Tuple[Tensor, Tensor, Tensor, Tensor]]

The original model is available at: https://huggingface.co/mxmax/Chinese_Chat_T5_Base

### Versions

torch2.0

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 1 |

2,806 | 99,812 |

cuda.is_available() error

|

module: cuda, triaged

|

I tested PyTorch on a server with an EPYC 7742 and 8*A100 40G. Calling `torch.cuda.is_available()` produces no output, and the Python process cannot even be killed with Ctrl+C. How can I solve this without updating the driver version? I tried installing other CUDA versions, such as 11.1 and 11.0.2, but the result was the same.

Looking forward to your reply.

Thanks!

- PyTorch:

- How you installed PyTorch: conda install pytorch==1.7.1 torchvision==0.8.2 torchaudio==0.7.2 cudatoolkit=11.0 -c pytorch

- OS: Ubuntu18.04

- PyTorch version:1.7.1

- Python version:3.8.3

- CUDA/cuDNN version: cuda11.0.3

- GPU models and configuration: 8*A100

- Nvidia Driver:450.51.06

cc @ngimel

| 1 |

2,807 | 99,807 |

AOTAutograd/Inductor file system cache

|

triaged, oncall: pt2, module: inductor

|

### 🐛 Describe the bug

I was playing around with implementing a kernel using torch.compile(dynamic=True) to guarantee low memory usage (and also because I needed an unusual reduction, xor_sum, which wasn't in PyTorch proper.)

```
# Use of torch.compile is mandatory for (1) good memory usage
# and (2) xor_sum implementation
@torch.compile(dynamic=True)
def kernel(x):
    # The randint calls are carefully written to hit things we
    # have lowerings for in inductor. Lack of unsigned 32-bit integer
    # is a pain.
    a = torch.randint(
        -2**31, 2**31,
        x.shape, device=x.device, dtype=torch.int32
    ).abs()
    a = ((a % (2**31-1)) + 1).long()
    b = torch.randint(
        -2**31, 2**31,
        x.shape, device=x.device, dtype=torch.int32
    ).abs().long()
    # This is a standard shift-multiply universal hash family
    # plus xor sum hash, using Philox to generate random numbers.
    # Our Philox RNG is not deterministic across devices so
    # don't use this for stable hashing.
    #
    # This assumes fixed length so you're also obligated to bucket
    # by the length of tensor as well
    return prims.xor_sum(
        (a * x + b).int(),
        [0]
    )
```

On a warm Triton cache, this still takes a good 10s to compile the kernel for the first time in any given process invocation. This is way too slow, especially since the kernel will probably never change once I'm done working on it. A good file system cache would improve this situation quite a bit.
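As a rough picture of what such a file system cache could look like, the sketch below keys an on-disk entry by a hash of the source text plus a version string and reuses it across calls. It is a generic illustration under stated assumptions, not how AOTAutograd or Inductor caches anything: `cache_key`, `cached_compile`, and `fake_compile` are hypothetical names, and pickling stands in for whatever serialization a real compiled artifact would need.

```python
import hashlib
import os
import pickle
import tempfile


def cache_key(source, version):
    """Hash everything that should invalidate the cached artifact."""
    digest = hashlib.sha256()
    digest.update(version.encode("utf-8"))
    digest.update(b"\0")
    digest.update(source.encode("utf-8"))
    return digest.hexdigest()


def cached_compile(source, version, compile_fn, cache_dir):
    """Return compile_fn(source), reusing an on-disk result when available."""
    os.makedirs(cache_dir, exist_ok=True)
    path = os.path.join(cache_dir, cache_key(source, version) + ".pkl")
    if os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    artifact = compile_fn(source)
    # Write to a temporary file first so readers never see a partial entry.
    fd, tmp_path = tempfile.mkstemp(dir=cache_dir)
    with os.fdopen(fd, "wb") as f:
        pickle.dump(artifact, f)
    os.replace(tmp_path, path)
    return artifact


# Usage with a stand-in "compiler" that records how often it runs.
calls = []


def fake_compile(source):
    calls.append(source)
    return source.upper()


with tempfile.TemporaryDirectory() as cache_dir:
    first = cached_compile("kernel body", "v1", fake_compile, cache_dir)
    second = cached_compile("kernel body", "v1", fake_compile, cache_dir)
    print(first == second, len(calls))
```

The version string is part of the key so that a compiler upgrade invalidates old entries; a real cache would also have to account for device, dtype, and shape guards, which this sketch ignores.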

### Versions

master

cc @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @peterbell10 @desertfire

| 3 |

2,808 | 99,806 |

`cat` gradgrad tests failing

|

module: autograd, triaged, module: edge cases

|

## Issue description

Previously `cat` grad tests were surprisingly skipped here:

https://github.com/pytorch/pytorch/blob/e63c502baa4a6f2109749984be701e722b3b7232/torch/testing/_internal/common_utils.py#L4371-L4372

And the following tests are failing after removing the skip:

```

test/test_ops_gradients.py::TestBwdGradientsCPU::test_fn_gradgrad_cat_cpu_float64

test/test_ops_gradients.py::TestBwdGradientsCPU::test_fn_gradgrad_cat_cpu_complex128

```

```

# RuntimeError: The size of tensor a (25) must match the size of tensor b (0) at non-singleton dimension 0.

```

which is caused by the below input sample:

https://github.com/pytorch/pytorch/blob/6580b160d35a75d5ceebf376d55422376d0c0d2c/torch/testing/_internal/common_methods_invocations.py#L2043

Added these tests to xfail in #99596

## Version

On master

cc @ezyang @gchanan @zou3519 @albanD @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 3 |

2,809 | 99,802 |

torch.multinomial() always returns [0] using MPS

|

triaged, module: mps

|

### 🐛 Describe the bug

Using MPS, `torch.multinomial()` always returns [0], even when the probability of index 0 is 0.

It works as expected on CPU:

```

import torch

In [1]: x = torch.tensor([0.5, 0.5], dtype=torch.float, device='cpu')

...: print(set(torch.multinomial(x, 1, True).item() for i in range(100)))

{0, 1}

In [2]: x = torch.tensor([0, 0.5], dtype=torch.float, device='cpu')

...: print(set(torch.multinomial(x, 1, True).item() for i in range(100)))

{1}

```

But on MPS, it always returns [0].

```

In [3]: x = torch.tensor([0.5, 0.5], dtype=torch.float, device='mps:1')

...: print(set(torch.multinomial(x, 1, True).item() for i in range(100)))

{0}

In [4]: x = torch.tensor([0, 0.5], dtype=torch.float, device='mps:1')

...: print(set(torch.multinomial(x, 1, True).item() for i in range(100)))

{0}

In [5]: torch.multinomial(x, 1, True)

Out[5]: tensor([0], device='mps:0')

```
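For reference, the behavior expected from sampling with replacement can be sketched in plain Python with inverse-CDF sampling. This is an illustration of the expected semantics only, not PyTorch's implementation; `sample_index` is a hypothetical helper and the seed is arbitrary.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def sample_index(weights, rng):
    """Draw one index with probability proportional to its weight."""
    cumulative = list(accumulate(weights))
    u = rng.random() * cumulative[-1]
    # bisect_right skips past entries whose cumulative weight is <= u, so an
    # index with zero weight can never be selected.
    index = bisect_right(cumulative, u)
    # Guard against u rounding up to the total weight.
    return min(index, len(weights) - 1)


rng = random.Random(0)
print({sample_index([0.5, 0.5], rng) for _ in range(100)})
print({sample_index([0.0, 0.5], rng) for _ in range(100)})
```

With equal weights both indices should appear over many draws, and with a zero weight on index 0 only index 1 should appear, which matches the CPU output shown above.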

I am actually quite new to torch, so please let me know if I misunderstood anything.

### Versions

PyTorch version: 2.0.0

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.6.3 (x86_64)

GCC version: Could not collect

Clang version: 14.0.0 (clang-1400.0.29.202)

CMake version: version 3.22.4

Libc version: N/A

Python version: 3.9.6 (default, Jul 7 2021, 12:22:14) [Clang 12.0.5 (clang-1205.0.22.9)] (64-bit runtime)

Python platform: macOS-12.6.3-x86_64-i386-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Apple M1 Max

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] torch==2.0.0

[conda] No relevant packages

cc @kulinseth @albanD @malfet @DenisVieriu97 @razarmehr @abhudev

| 3 |

2,810 | 101,073 |

Windows fatal exception: stack overflow while using pytorch for computing

|

triaged, module: functorch

|

Hi, I suspect the overflow is caused by `jacfwd`: if I increase Nx, Ny, Nz, I get the error `Windows fatal exception: stack overflow`, and Nx, Ny, Nz determine the size of the Jacobian matrix. If Nx and Ny are small, e.g. 10, the code works well.

However, the same code works fine on my friend's computer, which has the same PyTorch version and less memory.

My PyTorch version is 2.0.0+cu117, but the code currently runs only on CPU.

Thanks, @zou3519

```

# -*- coding: utf-8 -*-

"""

"""

import torch

from torch.func import jacfwd

import numpy as np

import matplotlib.pyplot as plt

from timeit import default_timer as timer

#from functorch import jacfwd

#from functorch import jacrev

#from torch.autograd.functional import jacobian

from scipy.sparse.linalg import gmres

def get_capillary(swnew):

capillary = torch.div(1, swnew)

capillary[capillary == float("Inf")] = 200

capillary = 2*swnew

capillary = swnew

return capillary

def get_relaperm (slnew):

k0r1=0.6

L1=1.8

L2=1.8

E1=2.1

E2=2.1

T1=2.3

T2=2.3

krl=torch.div(k0r1*slnew**L1,slnew**L2+E1*(1-slnew)**T1)

krg=torch.div((1-slnew)**L2,(1-slnew)**L2+E2*slnew**T2)

return krl, krg

def get_relaperm_classic (swnew):

s_w_r = 0.2

s_o_r = 0.2

nw = 2

no = 2

krw_ = 1

kro_ = 1

krw = krw_*((swnew-s_w_r)/(1-s_w_r))**nw

kro = kro_*(((1-swnew)-s_o_r)/(1-s_o_r))**no

return krw, kro

def get_residual (unknown):

residual = torch.zeros(Np*Nx*Ny*Nz, requires_grad=False, dtype=torch.float64)

pre_o = unknown[::2]

pre_w = pre_o

sat_w = unknown[1::2]

sat_o = 1 - sat_w

pre_o_old = unknownold[::2]

pre_w_old = pre_o_old

sat_w_old = unknownold[1::2]

sat_o_old = 1 - sat_w_old

poro = poroini*(1+c_r*(pre_o-p_ref)+0.5*(c_r*(pre_o-p_ref))**2)

poroold = poroini*(1+c_r*(pre_o_old-p_ref)+0.5*(c_r*(pre_o_old-p_ref))**2)

Bo = Bo_ref/((1+c_o*(pre_o-p_ref)+0.5*(c_o*(pre_o-p_ref))**2))

Boold = Bo_ref/((1+c_o*(pre_o_old-p_ref)+0.5*(c_o*(pre_o_old-p_ref))**2))

Bw = Bw_ref/((1+c_w*(pre_w-p_ref)+0.5*(c_w*(pre_w-p_ref))**2))

Bwold = Bw_ref/((1+c_w*(pre_w_old-p_ref)+0.5*(c_w*(pre_w_old-p_ref))**2))

miu_o = miu_o_ref*(((1+c_o*(pre_o-p_ref)+0.5*(c_o*(pre_o-p_ref))**2))/((1+(c_o-upsilon_o)*(pre_o-p_ref)+0.5*((c_o-upsilon_o)*(pre_o-p_ref))**2)))

miu_w = miu_w_ref*(((1+c_w*(pre_w-p_ref)+0.5*(c_w*(pre_w-p_ref))**2))/((1+(c_w-upsilon_w)*(pre_w-p_ref)+0.5*((c_w-upsilon_w)*(pre_w-p_ref))**2)))

Accumulation_o = (1/C1)*(sat_o*poro/Bo - sat_o_old*poroold/Boold)*vol

Accumulation_w = (1/C1)*(sat_w*poro/Bw - sat_w_old*poroold/Bwold)*vol

residual[::2] += Accumulation_o

residual[1::2] += Accumulation_w

kro = get_relaperm (sat_w)[1]

krw = get_relaperm (sat_w)[0]

mobi_o = torch.div(kro, (Bo*miu_o))

mobi_w = torch.div(krw, (Bw*miu_w))

oterm = mobi_o/(mobi_o+mobi_w)*qp*dt

wterm = mobi_w/(mobi_o+mobi_w)*qp*dt+qi*dt

residual[::2] += oterm

residual[1::2] += wterm

'''

capillary = get_capillary(sat_l)

pre_l = pre_g - capillary

pre_l = pre_g

gravity_g = rho_g*g

gravity_l = rho_l*g

'''

for i in connection_index:

phi_pre_o = pre_o[connection_a[i]] - pre_o[connection_b[i]]

phi_pre_w = pre_w[connection_a[i]] - pre_w[connection_b[i]]

up_o = connection_a[i] if phi_pre_o >= 0 else connection_b[i]

up_w = connection_a[i] if phi_pre_w >= 0 else connection_b[i]

K_h = 2*K[connection_a[i]]*K[connection_b[i]] / (K[connection_a[i]] + K[connection_b[i]])

Tran_h = K_h*A[i]/d[i]

Tran_o = Tran_h*kro[up_o]/miu_o[up_o]/Bo[up_o]

Tran_w = Tran_h*krw[up_w]/miu_w[up_w]/Bw[up_w]

flux_o = Tran_o*phi_pre_o

flux_w = Tran_w*phi_pre_w

ind_a = 2*connection_a[i]

ind_b = 2*connection_b[i]

residual[ind_a] += C2*dt*flux_o

residual[ind_b] -= C2*dt*flux_o

ind_a += 1

ind_b += 1

residual[ind_a] += C2*dt*flux_w

residual[ind_b] -= C2*dt*flux_w

return residual

if __name__ == '__main__':

C1 = 5.615

C2 = 1.12712e-3

Nx = 25

Ny = 25

Nz = 1

Lx = 500

Ly = 100

Lz = 100

p_ref = 14.7

dx = Lx/Nx

dy = Ly/Ny

dz = Lz/Nz

vol = dx*dy*dz;

K = 100*torch.ones(Nx*Ny*Nz, requires_grad=True, dtype=torch.float64)

dt = 0.1

tf = 1

time = 0

alpha_chop = 0.5

alpha_grow = 2

dt_min = 0.1

Max_iter = 10

Tol_resi = 1e-7

g = 9.80665

Np= 2

c_r = 1e-6

c_o = 1e-4 #oil

c_w = 1e-6 #water

p_ref = 14.7

Bo_ref = 1

Bw_ref = 1

miu_o_ref = 1

miu_w_ref = 1

rho_o_ref = 53

rho_w_ref = 64

poroini = 0.2

upsilon_o = 0

upsilon_w = 0

#Well

qi = torch.zeros(Nx, Ny)

qp = torch.zeros(Nx, Ny)

#wilocax = torch.randint(0, Nx, (1,))

#wilocay = torch.randint(0, Ny, (1,))

wilocax = 0*torch.ones(1, dtype=torch.int64)

wilocay = 0*torch.ones(1, dtype=torch.int64)

qi[wilocax, wilocay] = -100

#wplocax = torch.randint(0, Nx, (1,))

#wplocay = torch.randint(0, Ny, (1,))

wplocax = 4*torch.ones(1,dtype=torch.int64)

wplocay = 0*torch.ones(1,dtype=torch.int64)

qp[wplocax, wplocay] = 10

qi=qi.reshape([-1,])

qp=qp.reshape([-1,])

#grids = torch.arange(0, Nx*Ny*Nz, requires_grad=False, dtype=torch.int32)

#grids = torch.reshape(grids,(Nx,Ny)).t()

connection_x = torch.arange(0, (Nx-1)*Ny*Nz, requires_grad=False, dtype=torch.int32)

connection_y = torch.arange(0, Nx*(Ny-1)*Nz, requires_grad=False, dtype=torch.int32)

connection_z = torch.arange(0, Nx*Ny*(Nz-1), requires_grad=False, dtype=torch.int32)

connection = torch.cat((connection_x, connection_y, connection_z), 0)

A_x = dy*dz*torch.ones(connection_x.size(dim=0), requires_grad=False, dtype=torch.int32)

A_y = dx*dz*torch.ones(connection_y.size(dim=0), requires_grad=False, dtype=torch.int32)

A_z = dx*dy*torch.ones(connection_z.size(dim=0), requires_grad=False, dtype=torch.int32)

A = torch.cat((A_x, A_y, A_z), 0)

d_x = dx*torch.ones(connection_x.size(dim=0), requires_grad=False, dtype=torch.int32)

d_y = dy*torch.ones(connection_y.size(dim=0), requires_grad=False, dtype=torch.int32)

d_z = dz*torch.ones(connection_z.size(dim=0), requires_grad=False, dtype=torch.int32)

d = torch.cat((d_x, d_y, d_z), 0)

connection_x_index = torch.arange(0, (Nx-1)*Ny*Nz, requires_grad=False, dtype=torch.int32)

connection_y_index = torch.arange((Nx-1)*Ny*Nz, (Nx-1)*Ny*Nz+Nx*(Ny-1)*Nz, requires_grad=False, dtype=torch.int32)

connection_z_index = torch.arange((Nx-1)*Ny*Nz+Nx*(Ny-1)*Nz, (Nx-1)*Ny*Nz+Nx*(Ny-1)*Nz+Nx*Ny*(Nz-1), requires_grad=False, dtype=torch.int32)

connection_index = torch.cat((connection_x_index, connection_y_index, connection_z_index), 0)

connection_xa = connection_x + torch.div(connection_x, Nx-1, rounding_mode='trunc')

connection_xb = connection_xa + 1

connection_ya = connection_y + Nx*torch.div(connection_y, Nx*(Ny-1), rounding_mode='trunc')

connection_yb = connection_ya + Nx

connection_za = connection_z

connection_zb = connection_za + Nx*Ny

connection_a = torch.cat((connection_xa, connection_ya, connection_za), 0)

connection_b = torch.cat((connection_xb, connection_yb, connection_zb), 0)

# IC

swnew = 0.3*torch.ones(Nx*Ny*Nz, requires_grad=True, dtype=torch.float64)

ponew = 6000*torch.ones(Nx*Ny*Nz, requires_grad=True, dtype=torch.float64)

pwnew = 6000*torch.ones(Nx*Ny*Nz, requires_grad=True, dtype=torch.float64)

pc = get_capillary(swnew)

unknown = torch.ravel(torch.column_stack((ponew, swnew)))

unknownold = unknown.detach().clone()

ntimestep = 1;

print('PyTorch version is '+torch.__version__)

if torch.cuda.is_available():

print("PyTorch is installed with GPU support.")

print("Number of available GPUs:", torch.cuda.device_count())

for i in range(torch.cuda.device_count()):

print(f"GPU {i}: {torch.cuda.get_device_name(i)}")

else:

print("PyTorch is installed without GPU support.")

while abs(time - tf) > 1e-8:

niter = 0

start = timer()

r = get_residual(unknown)

end = timer()

print('get_residual timing:', end - start)

start = timer()

J = jacfwd(get_residual)(unknown)

#J = jacobian(get_residual, unknown)

end = timer()

print('Jacfwd timing: ', end - start)

#cr = r.detach().numpy()

#cJ = J.detach().numpy()

#x, exitCode = gmres(Jnew, -rnew)

while True:

update = torch.linalg.solve(J, r)

niter = niter+1;

unknown -= update

#XiaoYuLing = torch.where(unknown[1::2] < 0)

#unknown[1::2][XiaoYuLing] = 0

r = get_residual(unknown)

if (torch.linalg.vector_norm(r, 2) <= Tol_resi):

is_coverged = 1

print (' ')

print ('****************************************************************************************')

print ('From time '+str(time)+' to time '+str(time+dt))

print ('Timestep '+str(ntimestep)+' convergers, here is the report:')

print ('2-Norm of the residual system: '+ str(torch.linalg.vector_norm(r, 2).detach().numpy()))

print ('Number of iterations: '+ str(niter))

print ('****************************************************************************************')

print (' ')

ntimestep = ntimestep + 1

unknownold = unknown.detach().clone()

plt.plot(unknown[::2].detach().numpy())

plt.ylabel('Pressure')

#plt.show()

else:

is_coverged = 0

J = jacfwd(get_residual)(unknown)

if ((niter > Max_iter) or (is_coverged)):

break

if (not is_coverged):

#dt *= alpha_chop

dt = dt if dt >= dt_min else dt_min

else:

time += dt

#dt *= alpha_grow

dt = (tf - time) if (time + dt) > tf else dt

```

Main thread:

Current thread 0x00002934 (most recent call first):

File "d:\dlsim\ai\25x25\main_ow.py", line 328 in <module>

File "C:\Users\wec8371\Anaconda3\envs\AISim\lib\site-packages\spyder_kernels\py3compat.py", line 356 in compat_exec

File "C:\Users\wec8371\Anaconda3\envs\AISim\lib\site-packages\spyder_kernels\customize\spydercustomize.py", line 469 in exec_code

File "C:\Users\wec8371\Anaconda3\envs\AISim\lib\site-packages\spyder_kernels\customize\spydercustomize.py", line 611 in _exec_file

File "C:\Users\wec8371\Anaconda3\envs\AISim\lib\site-packages\spyder_kernels\customize\spydercustomize.py", line 524 in runfile

File "C:\Users\wec8371\AppData\Local\Temp\ipykernel_8860\3764171792.py", line 1 in <module>

Restarting kernel...

cc @zou3519 @Chillee @samdow @soumith @kshitij12345 @janeyx99

| 0 |

2,811 | 99,797 |

Automatic broadcasting for sparse csr tensors

|

module: sparse, triaged

|

### 🚀 The feature, motivation and pitch

Hey, would it be possible to add broadcasting to sparse CSR tensors?

```python

import torch

input = torch.randn(6,6).to_sparse_csr()

bias = torch.tensor([[1,2,0,0,3,2]]).t().to_sparse_csr()

input.to_dense() + bias.to_dense() # working

input + bias.to_dense() # not working

input.to_dense() + bias # not working

input + bias # not working

```

### Alternatives

While broadcasting is supported for elementwise multiplication and addition of dense matrices, it is not supported for sparse (CSR) tensors.

Could that be added?

### Additional context

_No response_

cc @alexsamardzic @nikitaved @pearu @cpuhrsch @amjames @bhosmer

| 2 |

2,812 | 99,794 |

Apple metal (MPS) device returning incorrect keypoints for YOLOv8 pose estimation model

|

high priority, oncall: binaries, oncall: releng, triaged, module: correctness (silent), module: mps

|

### 🐛 Describe the bug

I'm encountering an issue with YOLOv8 pose estimation model inference on the MPS device. The model returns incorrect keypoints. However, when I use the same method and code on the CPU, the keypoints are correct. From my research, I believe the issue may be related to the Apple Metal library. Bounding box detection seems to work fine, but the keypoint calculation is wrong. Could you please help me solve this problem?

```python

import cv2

import numpy as np

from ultralytics import YOLO

cv2.namedWindow("mps_test",cv2.WINDOW_NORMAL)

image = cv2.imread("pose_test.jpeg")

model = YOLO('yolov8s-pose.pt') # load an official model

results = model(source=image,device="cpu",conf=0.6)

#results = model(source=image,device="mps",conf=0.6)

result_image = results[0].plot()

cv2.imshow("cpu_test", result_image)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

Output With "MPS" (apple metal)

<img width="1168" alt="Screenshot 2023-04-19 at 15 54 42" src="https://user-images.githubusercontent.com/46287166/233779812-87e363e1-b37e-4cbe-b628-5952c3bfc35f.png">

Output With "CPU"

<img width="1212" alt="Screenshot 2023-04-19 at 16 18 57" src="https://user-images.githubusercontent.com/46287166/233779839-1fb70ff8-2bc4-43db-b270-caa495e19921.png">

### Versions

Macbook Air m1 chip

Metal: Version: 306.2.4

XCode:14.3

Macos Ventura:13.0

ultralytics Version: 8.0.81

Python Version: 3.10.8

Torch Version: 2.1.0.dev20230417

Opencv Version: 4.7.0.72

cc @ezyang @gchanan @zou3519 @seemethere @malfet @kulinseth @albanD @DenisVieriu97 @razarmehr @abhudev

| 2 |

2,813 | 99,790 |

Cannot compile torch 1.10 in CentOS 7.3

|

triaged

|

### 🐛 Describe the bug

I tried to compile PyTorch 1.10 on CentOS, but after configuring the system environment, I always got compilation errors as follows. I found that the gcc version was incorrect and could not be upgraded on CentOS. How can I solve this?

### Versions

PyTorch version: 1.10.1

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: CentOS Linux 7 (Core) (x86_64)

GCC version: (conda-forge gcc 12.2.0-19) 12.2.0

Clang version: Could not collect

CMake version: version 3.22.1

Libc version: glibc-2.17

Python version: 3.9.16 (main, Mar 8 2023, 14:00:05) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-3.10.0-514.el7.x86_64-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: 10.2.89

CUDA_MODULE_LOADING set to:

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 440.33.01

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 12

On-line CPU(s) list: 0-11

Thread(s) per core: 2

Core(s) per socket: 6

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 158

Model name: Intel(R) Core(TM) i7-8700 CPU @ 3.20GHz

Stepping: 10

CPU MHz: 3155.250

BogoMIPS: 6400.00

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 12288K

NUMA node0 CPU(s): 0-11

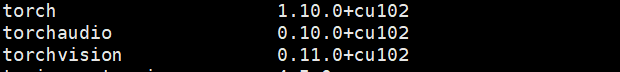

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] torch==1.10.1

[pip3] torchaudio==0.10.1

[pip3] torchvision==0.11.2

[conda] blas 1.0 mkl

[conda] cudatoolkit 10.2.89 hfd86e86_1

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-include 2023.1.0 h06a4308_46342

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.23.5 py39h14f4228_0

[conda] numpy-base 1.23.5 py39h31eccc5_0

[conda] pytorch 1.10.1 py3.9_cuda10.2_cudnn7.6.5_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 0.10.1 py39_cu102 pytorch

[conda] torchvision 0.11.2 py39_cu102 pytorch

| 2 |

2,814 | 99,781 |

2.0.0+cu118 package missing proper libnvrtc-builtins.so.11.8

|

oncall: binaries, module: cpp

|

### 🐛 Describe the bug

Within the following package:

https://download.pytorch.org/libtorch/cu118/libtorch-cxx11-abi-shared-with-deps-2.0.0%2Bcu118.zip

`libnvrtc-builtins.so.11.8` is not packaged correctly (under `./lib`):

```

(base) ➜ lib git:(master) ✗ ls -la | grep libnvrtc

-rwxr-xr-x 1 evadne evadne 54417561 Mar 10 00:04 libnvrtc-672ee683.so.11.2

-rwxr-xr-x 1 evadne evadne 7722649 Mar 10 00:04 libnvrtc-builtins-2dc4bf68.so.11.8

```

As a result nvFuser fails sometimes with the following error:

```

CUDA NVRTC

compile error: nvrtc: error: failed to open libnvrtc-builtins.so.11.8.

Make sure that libnvrtc-builtins.so.11.8 is installed correctly.

```

This can be worked around by symlinking or renaming `libnvrtc-builtins-2dc4bf68.so.11.8` to `libnvrtc-builtins.so.11.8`, but in my opinion it should be fixed at the source.
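The symlink workaround can be scripted. The sketch below is one possible way to do it, assuming the hashed file names follow the pattern seen in the listing above; `link_unhashed_builtins` and `HASHED_NAME` are hypothetical names, and the demonstration runs against a throwaway directory with empty placeholder files rather than a real libtorch `lib` directory.

```python
import re
import tempfile
from pathlib import Path

# Matches names such as libnvrtc-builtins-2dc4bf68.so.11.8
HASHED_NAME = re.compile(r"^(libnvrtc-builtins)-[0-9a-f]+(\.so\..+)$")


def link_unhashed_builtins(lib_dir):
    """Create `libnvrtc-builtins.so.X.Y` symlinks next to hashed copies."""
    created = []
    for path in sorted(Path(lib_dir).iterdir()):
        match = HASHED_NAME.match(path.name)
        if match is None:
            continue
        link = path.with_name(match.group(1) + match.group(2))
        if not link.exists():
            # A relative target keeps the link valid if the directory moves.
            link.symlink_to(path.name)
            created.append(link.name)
    return created


# Demonstration on a throwaway directory with empty placeholder files.
with tempfile.TemporaryDirectory() as lib_dir:
    (Path(lib_dir) / "libnvrtc-builtins-2dc4bf68.so.11.8").touch()
    (Path(lib_dir) / "libnvrtc-672ee683.so.11.2").touch()
    print(link_unhashed_builtins(lib_dir))
```

Running the function a second time on the same directory creates nothing new, so it is safe to call from a setup script.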

### Versions

Collecting environment information...

PyTorch version: N/A

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.1 LTS (x86_64)

GCC version: (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0

Clang version: 14.0.0-1ubuntu1

CMake version: version 3.22.1

Libc version: glibc-2.35

Python version: 3.10.9 (main, Jan 11 2023, 15:21:40) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.10.16.3-microsoft-standard-WSL2-x86_64-with-glibc2.35

Is CUDA available: N/A

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 4090

Nvidia driver version: 531.41

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.9.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.9.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.9.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.9.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.9.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.9.0

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.9.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 48 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 32

On-line CPU(s) list: 0-31

Vendor ID: AuthenticAMD

Model name: AMD Ryzen 9 7950X 16-Core Processor

CPU family: 25

Model: 97

Thread(s) per core: 2

Core(s) per socket: 16

Socket(s): 1

Stepping: 2

BogoMIPS: 8999.76

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl tsc_reliable nonstop_tsc cpuid extd_apicid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 erms avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb avx512cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves avx512_bf16 clzero xsaveerptr arat avx512vbmi umip avx512_vbmi2 gfni vaes vpclmulqdq avx512_vnni avx512_bitalg avx512_vpopcntdq rdpid fsrm

Hypervisor vendor: Microsoft

Virtualization type: full

L1d cache: 512 KiB (16 instances)

L1i cache: 512 KiB (16 instances)

L2 cache: 16 MiB (16 instances)

L3 cache: 32 MiB (1 instance)

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Spec store bypass: Vulnerable

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Full AMD retpoline, IBPB conditional, IBRS_FW, STIBP conditional, RSB filling

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] No relevant packages

[conda] No relevant packages

cc @seemethere @malfet @jbschlosser

| 2 |

2,815 | 99,774 |

RuntimeError: Cannot call sizes() on tensor with symbolic sizes/strides w/ `dynamo.export`, `make_fx` and `functionalize`

|

triaged, module: functionalization, oncall: pt2, module: export

|

### Latest update

This is the most distilled repro.

```python
import torch
import torch._dynamo
import torch.func
from torch.fx.experimental import proxy_tensor
from torch._dispatch.python import enable_python_dispatcher

def func(x, y):
    return torch.matmul(x, y)

x = torch.randn(2, 4, 3, 4)
y = torch.randn(2, 4, 4, 3)

with enable_python_dispatcher():
    # RuntimeError: Cannot call sizes() on tensor with symbolic sizes/strides
    gm = proxy_tensor.make_fx(torch.func.functionalize(func), tracing_mode="symbolic")(x, y)
```

Below is the original issue post, before further discussion.

### 🐛 Describe the bug

Distilled repro below. I would greatly appreciate hints on how to approach or debug this.

```python
import torch
import torch._dynamo
import torch.func
from torch.fx.experimental import proxy_tensor

def func(x, y):
    return torch.matmul(x, y.transpose(-1, -2))

x = torch.randn(2, 4, 3, 4)
y = torch.randn(2, 4, 3, 4)

gm, _ = torch._dynamo.export(func, x, y)
gm.print_readable()

gm = proxy_tensor.make_fx(torch.func.functionalize(gm), tracing_mode="symbolic")(x, y)
gm.print_readable()
```

```

Traceback (most recent call last):

File "/home/bowbao/pytorch_dev/torch/fx/graph_module.py", line 271, in __call__

return super(self.cls, obj).__call__(*args, **kwargs) # type: ignore[misc]

File "/home/bowbao/pytorch_dev/torch/fx/_symbolic_trace.py", line 756, in module_call_wrapper

return self.call_module(mod, forward, args, kwargs)

File "/home/bowbao/pytorch_dev/torch/fx/experimental/proxy_tensor.py", line 433, in call_module

return forward(*args, **kwargs)

File "/home/bowbao/pytorch_dev/torch/fx/_symbolic_trace.py", line 749, in forward

return _orig_module_call(mod, *args, **kwargs)

File "/home/bowbao/pytorch_dev/torch/nn/modules/module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "<eval_with_key>.3", line 7, in forward

matmul = torch.matmul(arg0, transpose); arg0 = transpose = None

RuntimeError: Cannot call sizes() on tensor with symbolic sizes/strides

Call using an FX-traced Module, line 7 of the traced Module's generated forward function:

transpose = arg1.transpose(-1, -2); arg1 = None

matmul = torch.matmul(arg0, transpose); arg0 = transpose = None

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

return pytree.tree_unflatten([matmul], self._out_spec)

Traceback (most recent call last):

File "repro_simpler_func_dynamic.py", line 15, in <module>

gm = proxy_tensor.make_fx(torch.func.functionalize(gm), tracing_mode="symbolic")(x, y)

File "/home/bowbao/pytorch_dev/torch/fx/experimental/proxy_tensor.py", line 771, in wrapped

t = dispatch_trace(wrap_key(func, args, fx_tracer, pre_autograd), tracer=fx_tracer, concrete_args=tuple(phs))

File "/home/bowbao/pytorch_dev/torch/_dynamo/eval_frame.py", line 252, in _fn

return fn(*args, **kwargs)

File "/home/bowbao/pytorch_dev/torch/fx/experimental/proxy_tensor.py", line 467, in dispatch_trace

graph = tracer.trace(root, concrete_args)

File "/home/bowbao/pytorch_dev/torch/_dynamo/eval_frame.py", line 252, in _fn

return fn(*args, **kwargs)

File "/home/bowbao/pytorch_dev/torch/fx/_symbolic_trace.py", line 778, in trace

(self.create_arg(fn(*args)),),

File "/home/bowbao/pytorch_dev/torch/fx/experimental/proxy_tensor.py", line 484, in wrapped

out = f(*tensors)

File "<string>", line 1, in <lambda>

File "/home/bowbao/pytorch_dev/torch/_functorch/vmap.py", line 39, in fn

return f(*args, **kwargs)

File "/home/bowbao/pytorch_dev/torch/_functorch/eager_transforms.py", line 1600, in wrapped

func_outputs = func(*func_args, **func_kwargs)

File "/home/bowbao/pytorch_dev/torch/fx/graph_module.py", line 662, in call_wrapped

return self._wrapped_call(self, *args, **kwargs)

File "/home/bowbao/pytorch_dev/torch/fx/graph_module.py", line 279, in __call__

raise e.with_traceback(None)

RuntimeError: Cannot call sizes() on tensor with symbolic sizes/strides

```

### Versions

Main on e9786149ab71874fad478109de173af6996f7eec

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire

| 12 |

2,816 | 99,770 |

Deformable Convolution export to onnx

|

module: onnx, triaged

|

### Deformable Convs in onnx export

Dear Torch Team,

Is there a possibility that the torch.onnx.export() function could be updated to support the deformable convolution layer, which is now supported in the latest opset (version 19) of ONNX?

I believe that adding support for deformable convolutions in torch.onnx.export() would greatly enhance the functionality and versatility of the export possibilities.

Nick

| 0 |

2,817 | 99,722 |

cuda 12.0 support request for building pytorch from source code

|

module: build, module: cuda, triaged, enhancement

|

### 🚀 The feature, motivation and pitch

Motivation:

Building from source supports CUDA 12.1, but not CUDA 12.0.

The docs at https://github.com/pytorch/pytorch#from-source say to install the magma-cuda* package that matches your CUDA version from https://anaconda.org/pytorch/repo, but no package matches CUDA 12.0: only magma-cuda121 can be found, not magma-cuda120.

Feature: please support CUDA 12.0 for building PyTorch from source.

Thank you!

### Alternatives

_No response_

### Additional context

_No response_

cc @malfet @seemethere @ngimel

| 3 |

2,818 | 99,715 |

-Wno-duplicate-decl-specifier is an invalid compile flag for CXX in GCC

|

module: build, module: rocm, triaged

|

### 🐛 Describe the bug

[cmake/Dependencies.cmake](https://github.com/pytorch/pytorch/blob/9861ec9785b53e71c9affd7d268ef7073eb1c446/cmake/Dependencies.cmake#L1272) sets -Wno-duplicate-decl-specifier for HIP_CXX_FLAGS. At least for GCC 12, this is not a valid compile flag for C++ translation units, causing lots of `cc1plus: warning: command-line option ‘-Wno-duplicate-decl-specifier’ is valid for C/ObjC but not for C++` warnings.

### Versions

9861ec9785b53e71c9affd7d268ef7073eb1c446

cc @malfet @seemethere @jeffdaily @sunway513 @jithunnair-amd @pruthvistony @ROCmSupport

| 0 |

2,819 | 99,710 |

pca_lowrank and svd_lowrank broken under automatic mixed precision.

|

module: cuda, triaged, module: half, module: linear algebra, module: amp (automated mixed precision)

|

### 🐛 Describe the bug

`torch.pca_lowrank` and `torch.svd_lowrank` do not work with automatic mixed precision, even if the inputs are 32-bit.

```python
import torch

x = torch.rand(1000, 3, device="cuda")

with torch.cuda.amp.autocast(True):
    assert x.dtype is torch.float32
    torch.pca_lowrank(x)
```

Trace:

```

Traceback (most recent call last):

File "/home/pbsds/ntnu/ifield/tmp/pca-repro.py", line 6, in <module>

torch.pca_lowrank(x)

File "/home/pbsds/.cache/pypoetry/virtualenvs/ifield-P6Ko3Gy1-py3.10/lib/python3.10/site-packages/torch/_lowrank.py", line 299, in pca_lowrank

return _svd_lowrank(A - C, q, niter=niter, M=None)

File "/home/pbsds/.cache/pypoetry/virtualenvs/ifield-P6Ko3Gy1-py3.10/lib/python3.10/site-packages/torch/_lowrank.py", line 174, in _svd_lowrank

Q = get_approximate_basis(A, q, niter=niter, M=M)

File "/home/pbsds/.cache/pypoetry/virtualenvs/ifield-P6Ko3Gy1-py3.10/lib/python3.10/site-packages/torch/_lowrank.py", line 70, in get_approximate_basis

Q = torch.linalg.qr(matmul(A, R)).Q

RuntimeError: "geqrf_cuda" not implemented for 'Half'

```

Likely the `matmul` in `get_approximate_basis` in `_lowrank.py` downcasts to float16, which is not supported in `torch.linalg.qr`.

### Versions

Collecting environment information...

PyTorch version: 1.13.1+cu117

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Manjaro Linux (x86_64)

GCC version: (GCC) 11.3.0

Clang version: 15.0.7

CMake version: version 3.25.2

Libc version: glibc-2.37

Python version: 3.10.9 (main, Dec 19 2022, 17:35:49) [GCC 12.2.0] (64-bit runtime)

Python platform: Linux-5.15.102-1-MANJARO-x86_64-with-glibc2.37

Is CUDA available: True

CUDA runtime version: 11.8.89

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3070 Laptop GPU

Nvidia driver version: 525.89.02

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 39 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 16

On-line CPU(s) list: 0-15

Vendor ID: GenuineIntel

Model name: 11th Gen Intel(R) Core(TM) i7-11800H @ 2.30GHz

CPU family: 6

Model: 141

Thread(s) per core: 2

Core(s) per socket: 8

Socket(s): 1

Stepping: 1

CPU(s) scaling MHz: 79%

CPU max MHz: 4600,0000

CPU min MHz: 800,0000

BogoMIPS: 4609,00

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l2 invpcid_single cdp_l2 ssbd ibrs ibpb stibp ibrs_enhanced tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid rdt_a avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb intel_pt avx512cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves split_lock_detect dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp hwp_pkg_req avx512vbmi umip pku ospke avx512_vbmi2 gfni vaes vpclmulqdq avx512_vnni avx512_bitalg avx512_vpopcntdq rdpid movdiri movdir64b fsrm avx512_vp2intersect md_clear flush_l1d arch_capabilities

Virtualization: VT-x

L1d cache: 384 KiB (8 instances)

L1i cache: 256 KiB (8 instances)

L2 cache: 10 MiB (8 instances)

L3 cache: 24 MiB (1 instance)

NUMA node(s): 1

NUMA node0 CPU(s): 0-15

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Not affected

Vulnerability Retbleed: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Vulnerable: eIBRS with unprivileged eBPF

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] flake8==6.0.0

[pip3] numpy==1.23.3

[pip3] pytorch-lightning==1.9.4

[pip3] pytorch3d==0.7.2

[pip3] torch==1.13.1

[pip3] torchmeta==1.8.0

[pip3] torchmetrics==0.11.4

[pip3] torchvision==0.14.1

[pip3] torchviz==0.0.2

[conda] No relevant packages

cc @ngimel @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano @mcarilli @ptrblck @leslie-fang-intel @jgong5

| 6 |

2,820 | 99,701 |

When converting to ONNX with dynamic_axes, the Reshape op's shape value stays static, so dynamic_axes is useless and the right shape cannot be inferred dynamically

|

module: onnx, triaged

|

### 🐛 Describe the bug

When converting to ONNX with `dynamic_axes`, the shape fed to the Reshape op is always the static value recorded at export, so `dynamic_axes` has no effect and the correct shape cannot be inferred dynamically.

The graph is part of a transformer. In the figure, the Reshape shape value never changes; it is taken from the dummy input passed to `torch.onnx.export`. After converting to ONNX and running inference with an input of a different size, the following error is raised:

Non-zero status code returned while running Reshape node. Name:'Reshape_375' Status Message: /onnxruntime_src/onnxruntime/core/providers/cpu/tensor/reshape_helper.h:41 onnxruntime::ReshapeHelper::ReshapeHelper(const onnxruntime::TensorShape&, onnxruntime::TensorShapeVector&, bool) gsl::narrow_cast<int64_t>(input_shape.Size()) == size was false. The input tensor cannot be reshaped to the requested shape. Input shape:{12,7,512}, requested shape:{18,36,128}

The requested shape {18,36,128} was recorded by `torch.onnx.export` and never changes, which causes the error when I try another input, even though I set the `dynamic_axes` flag. I suspect the shape in `q = q.contiguous().view(tgt_len, bsz * num_heads, head_dim).transpose(0, 1)` is wrong.

Here, `tgt_len, bsz, embed_dim = query.shape` reads the shape,

but its values are fixed from the dummy input's shape during `torch.onnx.export`. The model only remembers {18,36,128}, not a dynamic size, so inference with an input of shape {12,7,512} raises the error:

`q = q.contiguous().view(tgt_len, bsz * num_heads, head_dim).transpose(0, 1)` does not pick up the dynamic shape.

What causes this error? It is very strange, and I have no idea how to solve it.
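To make the mismatch concrete, the following sketch checks the element counts behind the error message. It assumes `num_heads = 4`, which is only inferred here from `embed_dim / head_dim = 512 / 128` and is not stated in the report; `view_shape` is a hypothetical helper that mirrors the arguments of the `view` call.

```python
from math import prod


def view_shape(query_shape, num_heads):
    """Shape passed to q.view(...) for a (tgt_len, bsz, embed_dim) query."""
    tgt_len, bsz, embed_dim = query_shape
    head_dim = embed_dim // num_heads
    return (tgt_len, bsz * num_heads, head_dim)


NUM_HEADS = 4  # assumption: inferred from 512 / 128, not given in the report

baked = (18, 36, 128)     # requested shape recorded at export time (from the error)
new_input = (12, 7, 512)  # input shape at inference time (from the error)

# Shape the Reshape node would need if it followed the new input.
expected = view_shape(new_input, NUM_HEADS)

print(prod(new_input), prod(baked), expected)
```

Under that assumption, the recorded shape is consistent with a dummy input of shape (18, 9, 512). The new input would need a target of (12, 28, 128), and its element count differs from that of the recorded shape, which is why the Reshape node rejects it.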

The code that produces the graph is as follows:

```python
def multi_head_attention_forward(
    query: Tensor,
    key: Tensor,
    value: Tensor,
    embed_dim_to_check: int,
    num_heads: int,
    in_proj_weight: Tensor,
    in_proj_bias: Optional[Tensor],
    bias_k: Optional[Tensor],
    bias_v: Optional[Tensor],
    add_zero_attn: bool,
    dropout_p: float,
    out_proj_weight: Tensor,
    out_proj_bias: Optional[Tensor],
    training: bool = True,
    key_padding_mask: Optional[Tensor] = None,
    need_weights: bool = True,
    attn_mask: Optional[Tensor] = None,
    use_separate_proj_weight: bool = False,
    q_proj_weight: Optional[Tensor] = None,
    k_proj_weight: Optional[Tensor] = None,
    v_proj_weight: Optional[Tensor] = None,
    static_k: Optional[Tensor] = None,
    static_v: Optional[Tensor] = None,
) -> Tuple[Tensor, Optional[Tensor]]:
    r"""
    Args:
        query, key, value: map a query and a set of key-value pairs to an output.
            See "Attention Is All You Need" for more details.
        embed_dim_to_check: total dimension of the model.
        num_heads: parallel attention heads.
        in_proj_weight, in_proj_bias: input projection weight and bias.
        bias_k, bias_v: bias of the key and value sequences to be added at dim=0.
        add_zero_attn: add a new batch of zeros to the key and
                       value sequences at dim=1.
        dropout_p: probability of an element to be zeroed.
        out_proj_weight, out_proj_bias: the output projection weight and bias.
        training: apply dropout if is ``True``.
        key_padding_mask: if provided, specified padding elements in the key will
            be ignored by the attention. This is an binary mask. When the value is True,
            the corresponding value on the attention layer will be filled with -inf.
        need_weights: output attn_output_weights.
        attn_mask: 2D or 3D mask that prevents attention to certain positions. A 2D mask will be broadcasted for all
            the batches while a 3D mask allows to specify a different mask for the entries of each batch.
        use_separate_proj_weight: the function accept the proj. weights for query, key,
            and value in different forms. If false, in_proj_weight will be used, which is
            a combination of q_proj_weight, k_proj_weight, v_proj_weight.
        q_proj_weight, k_proj_weight, v_proj_weight, in_proj_bias: input projection weight and bias.
        static_k, static_v: static key and value used for attention operators.
    """
    tens_ops = (query, key, value, in_proj_weight, in_proj_bias, bias_k, bias_v, out_proj_weight, out_proj_bias)
    if has_torch_function(tens_ops):
        return handle_torch_function(
            multi_head_attention_forward,
            tens_ops,
            query,
            key,
            value,
            embed_dim_to_check,
            num_heads,
            in_proj_weight,
            in_proj_bias,
            bias_k,
            bias_v,
            add_zero_attn,
            dropout_p,
            out_proj_weight,
            out_proj_bias,
            training=training,
            key_padding_mask=key_padding_mask,
            need_weights=need_weights,
            attn_mask=attn_mask,
            use_separate_proj_weight=use_separate_proj_weight,
            q_proj_weight=q_proj_weight,
            k_proj_weight=k_proj_weight,
            v_proj_weight=v_proj_weight,
            static_k=static_k,
            static_v=static_v,
        )

    # set up shape vars
    tgt_len, bsz, embed_dim = query.shape
    src_len, _, _ = key.shape
    assert embed_dim == embed_dim_to_check, \
        f"was expecting embedding dimension of {embed_dim_to_check}, but got {embed_dim}"
    if isinstance(embed_dim, torch.Tensor):
        # embed_dim can be a tensor when JIT tracing
        head_dim = embed_dim.div(num_heads, rounding_mode='trunc')
    else:
        head_dim = embed_dim // num_heads
    assert head_dim * num_heads == embed_dim, f"embed_dim {embed_dim} not divisible by num_heads {num_heads}"
    if use_separate_proj_weight:
        # allow MHA to have different embedding dimensions when separate projection weights are used
        assert key.shape[:2] == value.shape[:2], \
            f"key's sequence and batch dims {key.shape[:2]} do not match value's {value.shape[:2]}"
    else:
        assert key.shape == value.shape, f"key shape {key.shape} does not match value shape {value.shape}"

    #
    # compute in-projection
    #
    if not use_separate_proj_weight:
        q, k, v = _in_projection_packed(query, key, value, in_proj_weight, in_proj_bias)
    else:
        assert q_proj_weight is not None, "use_separate_proj_weight is True but q_proj_weight is None"
        assert k_proj_weight is not None, "use_separate_proj_weight is True but k_proj_weight is None"
        assert v_proj_weight is not None, "use_separate_proj_weight is True but v_proj_weight is None"
        if in_proj_bias is None:
            b_q = b_k = b_v = None
        else:
            b_q, b_k, b_v = in_proj_bias.chunk(3)
        q, k, v = _in_projection(query, key, value, q_proj_weight, k_proj_weight, v_proj_weight, b_q, b_k, b_v)

    # prep attention mask
    if attn_mask is not None:
        if attn_mask.dtype == torch.uint8:
            warnings.warn("Byte tensor for attn_mask in nn.MultiheadAttention is deprecated. Use bool tensor instead.")
            attn_mask = attn_mask.to(torch.bool)
        else:
            assert attn_mask.is_floating_point() or attn_mask.dtype == torch.bool, \
                f"Only float, byte, and bool types are supported for attn_mask, not {attn_mask.dtype}"
        # ensure attn_mask's dim is 3
        if attn_mask.dim() == 2:
            correct_2d_size = (tgt_len, src_len)
            if attn_mask.shape != correct_2d_size:
                raise RuntimeError(f"The shape of the 2D attn_mask is {attn_mask.shape}, but should be {correct_2d_size}.")
            attn_mask = attn_mask.unsqueeze(0)
        elif attn_mask.dim() == 3:
            correct_3d_size = (bsz * num_heads, tgt_len, src_len)
            if attn_mask.shape != correct_3d_size:
                raise RuntimeError(f"The shape of the 3D attn_mask is {attn_mask.shape}, but should be {correct_3d_size}.")
        else:
            raise RuntimeError(f"attn_mask's dimension {attn_mask.dim()} is not supported")

    # prep key padding mask
    if key_padding_mask is not None and key_padding_mask.dtype == torch.uint8:
        warnings.warn("Byte tensor for key_padding_mask in nn.MultiheadAttention is deprecated. Use bool tensor instead.")
        key_padding_mask = key_padding_mask.to(torch.bool)

    # add bias along batch dimension (currently second)
    if bias_k is not None and bias_v is not None:
        assert static_k is None, "bias cannot be added to static key."
        assert static_v is None, "bias cannot be added to static value."
        k = torch.cat([k, bias_k.repeat(1, bsz, 1)])
        v = torch.cat([v, bias_v.repeat(1, bsz, 1)])
        if attn_mask is not None:
            attn_mask = pad(attn_mask, (0, 1))
        if key_padding_mask is not None:
            key_padding_mask = pad(key_padding_mask, (0, 1))
    else:
        assert bias_k is None
        assert bias_v is None

    #
    # reshape q, k, v for multihead attention and make em batch first
    #
    q = q.contiguous().view(tgt_len, bsz * num_heads, head_dim).transpose(0, 1)
    if static_k is None:
        k = k.contiguous().view(k.shape[0], bsz * num_heads, head_dim).transpose(0, 1)
    else:
        # TODO finish disentangling control flow so we don't do in-projections when statics are passed
        assert static_k.size(0) == bsz * num_heads, \
            f"expecting static_k.size(0) of {bsz * num_heads}, but got {static_k.size(0)}"
        assert static_k.size(2) == head_dim, \
            f"expecting static_k.size(2) of {head_dim}, but got {static_k.size(2)}"
        k = static_k
    if static_v is None:
        v = v.contiguous().view(v.shape[0], bsz * num_heads, head_dim).transpose(0, 1)
    else:
        # TODO finish disentangling control flow so we don't do in-projections when statics are passed
        assert static_v.size(0) == bsz * num_heads, \
            f"expecting static_v.size(0) of {bsz * num_heads}, but got {static_v.size(0)}"
        assert static_v.size(2) == head_dim, \
            f"expecting static_v.size(2) of {head_dim}, but got {static_v.size(2)}"
        v = static_v

    # add zero attention along batch dimension (now first)
    if add_zero_attn:
        zero_attn_shape = (bsz * num_heads, 1, head_dim)
        k = torch.cat([k, torch.zeros(zero_attn_shape, dtype=k.dtype, device=k.device)], dim=1)
        v = torch.cat([v, torch.zeros(zero_attn_shape, dtype=v.dtype, device=v.device)], dim=1)
        if attn_mask is not None:
            attn_mask = pad(attn_mask, (0, 1))
        if key_padding_mask is not None:
            key_padding_mask = pad(key_padding_mask, (0, 1))

    # update source sequence length after adjustments
    src_len = k.size(1)

    # merge key padding and attention masks
    if key_padding_mask is not None:
        assert key_padding_mask.shape == (bsz, src_len), \
            f"expecting key_padding_mask shape of {(bsz, src_len)}, but got {key_padding_mask.shape}"
        key_padding_mask = key_padding_mask.view(bsz, 1, 1, src_len). \
            expand(-1, num_heads, -1, -1).reshape(bsz * num_heads, 1, src_len)
        if attn_mask is None:
            attn_mask = key_padding_mask
        elif attn_mask.dtype == torch.bool:
            attn_mask = attn_mask.logical_or(key_padding_mask)
        else:
            attn_mask = attn_mask.masked_fill(key_padding_mask, float("-inf"))

    # convert mask to float
    if attn_mask is not None and attn_mask.dtype == torch.bool:
        new_attn_mask = torch.zeros_like(attn_mask, dtype=torch.float)
        new_attn_mask.masked_fill_(attn_mask, float("-inf"))
        attn_mask = new_attn_mask

    # adjust dropout probability
    if not training:
        dropout_p = 0.0

    #
    # (deep breath) calculate attention and out projection
    #
    attn_output, attn_output_weights = _scaled_dot_product_attention(q, k, v, attn_mask, dropout_p)
    attn_output = attn_output.transpose(0, 1).contiguous().view(tgt_len, bsz, embed_dim)
    attn_output = linear(attn_output, out_proj_weight, out_proj_bias)

    if need_weights:
        # average attention weights over heads
        attn_output_weights = attn_output_weights.view(bsz, num_heads, tgt_len, src_len)
        return attn_output, attn_output_weights.sum(dim=1) / num_heads
    else:
        return attn_output, None
```

### Versions

pytorch 1.10.1

CUDA 10.2

@svenstaro @JackDanger @infil00p @eklitzke

@soulitzer @infil00p @svenstaro @svenstaro

| 5 |

2,821 | 99,693 |

WARNING: The shape inference of prim::PadPacked type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

|

oncall: jit, onnx-triaged

|

### 🐛 Describe the bug

WARNING: The shape inference of prim::PadPacked type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function.

### Versions

CUDA 10.2

pytorch 1.10.1

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 2 |

2,822 | 99,690 |

GPU training works well, but CPU training does not work

|

module: cpu, triaged, module: fft

|

### 🐛 Describe the bug

model{

self.a=torch.nn.Parameter(torch.rand(10,10))

self.b=torch.nn.Parameter(torch.rand(10,10))

self.c=torch.nn.Parameter(torch.rand(1))

}

forward{

fft

ifft

...

}

If I do `model = model.cuda().cpu()`, CPU training works.

### Versions

1.10

cuda 11.1

cc @jgong5 @mingfeima @XiaobingSuper @sanchitintel @ashokei @jingxu10 @mruberry @peterbell10

| 5 |

2,823 | 99,689 |

[torch.compile] can't multiply sequence by non-int of type 'float' when enabling `shape_padding`

|

triaged, oncall: pt2, module: inductor

|

### 🐛 Describe the bug

`torch.compile` raises an error that `can't multiply sequence by non-int of type 'float'` when enabling `shape_padding`

```py
import torch

torch.manual_seed(420)

class Model(torch.nn.Module):
    def forward(self, x, y, inp):
        return torch.add(torch.mm(x, y), inp)

x = torch.randn(3, 4).cuda()
y = torch.randn(4, 5).cuda()
inp = torch.randn(3, 5).cuda()

func = Model()

res1 = func(x, y, inp)
print(res1)

jit_func = torch.compile(func)
res2 = jit_func(x, y, inp)
print(res2)
# TypeError: can't multiply sequence by non-int of type 'float'
# While executing %mm : [#users=1] = call_function[target=torch.mm](args = (%l_x_, %l_y_), kwargs = {})
```

After checking the call stack, I found that this bug is in `should_pad_bench`, in the decomposition of `addmm`.
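For context, Python raises this exact message whenever a list or tuple is multiplied by a float. The sketch below reproduces the message in isolation; it is not the code in `should_pad_bench`, and `scale_dims` is a hypothetical name used only for illustration.

```python
def scale_dims(dims, factor):
    """Apply `dims * factor`, as Python does for any sequence (hypothetical helper)."""
    return dims * factor


# An int factor repeats the sequence.
print(scale_dims([3, 4], 2))  # [3, 4, 3, 4]

# A float factor is rejected with the message from the report.
try:
    scale_dims([3, 4], 1.5)
except TypeError as err:
    message = str(err)
    print(message)
```

This suggests that somewhere in the padding benchmark a list or tuple, rather than a number or tensor, ends up on the left side of a multiplication by a float.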

### Versions

```

Collecting environment information...

PyTorch version: 2.1.0.dev20230419+cu118

Is debug build: False

CUDA used to build PyTorch: 11.8

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.1 LTS (x86_64)

GCC version: (Ubuntu 11.3.0-1ubuntu1~22.04) 11.3.0

Clang version: 14.0.0-1ubuntu1

CMake version: Could not collect

Libc version: glibc-2.35

Python version: 3.9.16 (main, Mar 8 2023, 14:00:05) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-5.19.5-051905-generic-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: 11.5.119

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3060

Nvidia driver version: 510.108.03

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 46 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 24

On-line CPU(s) list: 0-23

Vendor ID: GenuineIntel

Model name: 12th Gen Intel(R) Core(TM) i9-12900K

CPU family: 6

Model: 151

Thread(s) per core: 2

Core(s) per socket: 16

Socket(s): 1

Stepping: 2

CPU max MHz: 6700.0000

CPU min MHz: 800.0000

BogoMIPS: 6374.40

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault cat_l2 invpcid_single cdp_l2 ssbd ibrs ibpb stibp ibrs_enhanced tpr_shadow vnmi flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid rdt_a rdseed adx smap clflushopt clwb intel_pt sha_ni xsaveopt xsavec xgetbv1 xsaves split_lock_detect avx_vnni dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp hwp_pkg_req hfi umip pku ospke waitpkg gfni vaes vpclmulqdq tme rdpid movdiri movdir64b fsrm md_clear serialize pconfig arch_lbr ibt flush_l1d arch_capabilities

Virtualization: VT-x

L1d cache: 640 KiB (16 instances)

L1i cache: 768 KiB (16 instances)

L2 cache: 14 MiB (10 instances)

L3 cache: 30 MiB (1 instance)

NUMA node(s): 1

NUMA node0 CPU(s): 0-23

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Not affected

Vulnerability Retbleed: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Enhanced IBRS, IBPB conditional, RSB filling, PBRSB-eIBRS SW sequence

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] numpy==1.24.1

[pip3] pytorch-triton==2.1.0+46672772b4

[pip3] torch==2.1.0.dev20230419+cu118

[pip3] torchaudio==2.1.0.dev20230419+cu118

[pip3] torchvision==0.16.0.dev20230419+cu118

[conda] numpy 1.24.1 pypi_0 pypi

[conda] pytorch-triton 2.1.0+46672772b4 pypi_0 pypi

[conda] torch 2.1.0.dev20230419+cu118 pypi_0 pypi

[conda] torchaudio 2.1.0.dev20230419+cu118 pypi_0 pypi

[conda] torchvision 0.16.0.dev20230419+cu118 pypi_0 pypi

```

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @peterbell10 @desertfire

| 1 |

2,824 | 99,684 |

In torchelastic support running worker rank 0 on agent rank 0 consistently

|

oncall: distributed, triaged, module: elastic

|

### 🚀 The feature, motivation and pitch

Currently, when launching distributed jobs using torchrun, the Rank 0 worker can land on any arbitrary node. This ask is to add a new rendezvous implementation for which worker rank 0 always runs on agent rank 0.

### Additional context

This will improve observability of the distributed job by easily locating logs for the rank0 worker
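The requested guarantee could look roughly like the sketch below, which assigns global worker ranks in agent-rank order so that worker rank 0 always lands on agent rank 0. This only illustrates the ask; `assign_worker_ranks` is a hypothetical function, not a torchelastic API.

```python
def assign_worker_ranks(workers_per_agent):
    """Map agent rank -> list of global worker ranks, in agent-rank order.

    workers_per_agent: dict of agent rank -> number of local workers.
    """
    assignment = {}
    next_rank = 0
    for agent_rank in sorted(workers_per_agent):
        count = workers_per_agent[agent_rank]
        assignment[agent_rank] = list(range(next_rank, next_rank + count))
        next_rank += count
    return assignment


# Agents may join the rendezvous in any order; ranks follow agent rank.
ranks = assign_worker_ranks({1: 2, 0: 2, 2: 2})
print(ranks[0])  # [0, 1]
```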

cc @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @H-Huang @kwen2501 @awgu @dzhulgakov

| 0 |

2,825 | 99,681 |

`torch.ops.aten.empty` is not discoverable from `dir(torch.ops.aten)` until explicitly calling getattr

|

triaged, module: library

|

### 🐛 Describe the bug

Accidentally discovered this when I was trying to retrieve all `OpOverload`s under `torch.ops.aten`. I noticed that `torch.ops.aten.empty` (may or may not be the only case) is not discoverable from `for op in torch.ops.aten`.

However, it does appear there is such an op since `torch.ops.aten.empty` returns a valid object. And after retrieving that through `getattr` explicitly, it appears under `dir(torch.ops.aten)`.

Is this a bug or by design? If it is by design, what is the recommended way to loop over all ops in `torch.ops.aten`?

```python
>>> import torch
>>> "empty" in torch.ops.aten
False
>>> torch.ops.aten.empty
<OpOverloadPacket(op='aten.empty')>
>>> "empty" in torch.ops.aten
True
```
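
The behaviour above is what one would see from a namespace that resolves attributes lazily and caches them on first access. The toy class below reproduces that pattern in plain Python. It is only a sketch: `LazyNamespace` and its registry are hypothetical names for illustration, and whether `torch.ops.aten` is implemented this way is an assumption, not something confirmed here.

```python
class LazyNamespace:
    """Toy stand-in for a lazily populated op namespace (hypothetical, not PyTorch code)."""

    def __init__(self, registry):
        self._registry = registry  # name -> object, hidden until first requested
        self._seen = []            # names that have been resolved so far

    def __getattr__(self, name):
        # Python calls __getattr__ only when normal attribute lookup fails,
        # so this runs on the first access to each name and never again.
        if name.startswith("_") or name not in self._registry:
            raise AttributeError(name)
        value = self._registry[name]
        setattr(self, name, value)  # cache on the instance; dir() now lists it
        self._seen.append(name)
        return value

    def __iter__(self):
        # `"x" in ns` falls back to iteration, so it only sees resolved names.
        return iter(self._seen)


ns = LazyNamespace({"empty": object()})
before = "empty" in ns   # nothing resolved yet
ns.empty                 # first access resolves and caches the attribute
after = "empty" in ns
```

In this toy model, membership tests and `dir()` only reflect names that have already been accessed, which matches the transcript above.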

### Versions

Main from 5315317b7bbb13d1b8d91a682cec1fb4dace79e4

cc @anjali411

| 8 |

2,826 | 99,653 |

Conda macOS installation installs pytorch-1.13 rather than 2.0 as of Apr 4th

|

high priority, triage review, oncall: binaries, module: regression

|

### 🐛 Describe the bug

See https://hud.pytorch.org/hud/pytorch/builder/main/1?per_page=50&name_filter=cron%20%2F%20release%20%2F%20mac%20%2F%20conda-py3

Last successful run: https://github.com/pytorch/builder/actions/runs/4619355597/jobs/8168032918

First faulty run: https://github.com/pytorch/builder/actions/runs/4629742465/jobs/8190405098

### Versions

2.0

cc @ezyang @gchanan @zou3519 @seemethere

| 2 |

2,827 | 99,652 |

DistributedDataParallel doesn't work with complex buffers

|

oncall: distributed, module: complex

|

### 🐛 Describe the bug

DistributedDataParallel doesn't work with complex buffers, even when `broadcast_buffers=False`.

```py
import os

import torch
from torch import nn

torch.distributed.init_process_group(backend="nccl")
rank = int(os.environ["LOCAL_RANK"])
device = f"cuda:{rank}"
torch.cuda.set_device(device)


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.register_buffer("complex", torch.tensor([1.0 + 1.0j], requires_grad=False))
        self.net = nn.Linear(16, 32)

    def forward(self, x):
        return self.net(x)


if __name__ == "__main__":
    model = Net()
    model = model.to(device)
    model = torch.nn.parallel.DistributedDataParallel(
        model, device_ids=[rank], broadcast_buffers=False
    )
```

This throws `RuntimeError: Input Tensor data type is not supported for NCCL process group: ComplexFloat`. But I do not need DDP to sync the buffer: it's a static value that I just want moved with the model when I call `.to(device)`. It doesn't have any gradients, it doesn't need any syncing, and it will never change during training.

How can I get DDP to ignore this buffer?
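
One possible workaround, sketched here under the assumption that the error is triggered only by the buffer's complex dtype, is to register the constant as a real-valued tensor with `torch.view_as_real` and convert it back with `torch.view_as_complex` when it is needed. The buffer name `complex_as_real` and the `complex` property are illustrative choices, and this has not been tested against the NCCL setup from the report.

```python
import torch
from torch import nn


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # Store the complex constant as a real tensor of shape (1, 2),
        # so every registered buffer has a real dtype.
        value = torch.tensor([1.0 + 1.0j])
        self.register_buffer("complex_as_real", torch.view_as_real(value))
        self.net = nn.Linear(16, 32)

    @property
    def complex(self):
        # Rebuild the complex view on demand; it follows the buffer's device.
        return torch.view_as_complex(self.complex_as_real)

    def forward(self, x):
        return self.net(x)


model = Net()
buffer_dtypes = [b.dtype for b in model.buffers()]
```

The buffer still moves with the model on `.to(device)`, and code that needs the complex value reads `model.complex` instead of the buffer directly.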

### Versions

By the way, `https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py` is missing; it should be `wget https://raw.githubusercontent.com/pytorch/pytorch/main/torch/utils/collect_env.py` (master should be main).

```

Collecting environment information...

PyTorch version: 2.1.0.dev20230416+cu118

Is debug build: False

CUDA used to build PyTorch: 11.8

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.6 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: 10.0.0-4ubuntu1

CMake version: version 3.26.0

Libc version: glibc-2.31

Python version: 3.10.9 (main, Mar 22 2023, 11:20:39) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-146-generic-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA RTX A6000

GPU 1: NVIDIA RTX A6000

GPU 2: NVIDIA RTX A6000

GPU 3: NVIDIA RTX A6000

GPU 4: NVIDIA RTX A6000

GPU 5: NVIDIA RTX A6000

GPU 6: NVIDIA RTX A6000

GPU 7: NVIDIA RTX A6000

Nvidia driver version: 530.30.02

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.8.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.8.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.8.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.8.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.8.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.8.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.8.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 48 bits physical, 48 bits virtual

CPU(s): 64

On-line CPU(s) list: 0-63

Thread(s) per core: 1

Core(s) per socket: 32

Socket(s): 2

NUMA node(s): 8

Vendor ID: AuthenticAMD

CPU family: 25

Model: 1

Model name: AMD EPYC 7513 32-Core Processor

Stepping: 1

Frequency boost: enabled

CPU MHz: 3552.598

CPU max MHz: 2600.0000

CPU min MHz: 1500.0000

BogoMIPS: 5200.14

Virtualization: AMD-V

L1d cache: 2 MiB

L1i cache: 2 MiB

L2 cache: 32 MiB

L3 cache: 256 MiB

NUMA node0 CPU(s): 0-7

NUMA node1 CPU(s): 8-15

NUMA node2 CPU(s): 16-23

NUMA node3 CPU(s): 24-31

NUMA node4 CPU(s): 32-39

NUMA node5 CPU(s): 40-47

NUMA node6 CPU(s): 48-55

NUMA node7 CPU(s): 56-63

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Not affected

Vulnerability Retbleed: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Retpolines, IBPB conditional, IBRS_FW, STIBP disabled, RSB filling, PBRSB-eIBRS Not affected

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid aperfmperf pni pclmulqdq monitor ssse3 fma cx16 pcid sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_llc mwaitx cpb cat_l3 cdp_l3 invpcid_single hw_pstate ssbd mba ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 erms invpcid cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr wbnoinvd arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold v_vmsave_vmload vgif umip pku ospke vaes vpclmulqdq rdpid overflow_recov succor smca

Versions of relevant libraries:

[pip3] mypy-extensions==1.0.0

[pip3] numpy==1.24.2

[pip3] pytorch-triton==2.1.0+46672772b4

[pip3] torch==2.1.0.dev20230416+cu118

[pip3] triton==2.0.0

[conda] Could not collect

```

cc @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @H-Huang @kwen2501 @awgu @ezyang @anjali411 @dylanbespalko @mruberry @Lezcano @nikitaved

| 1 |

2,828 | 99,649 |

[torch.compile] raises an error that expanded size doesn't match when enabling `shape_padding`

|

triaged, oncall: pt2, module: inductor

|

### 🐛 Describe the bug

`torch.compile` raises an error that expanded size doesn't match when enabling `shape_padding` by setting `TORCHINDUCTOR_SHAPE_PADDING=1`