Serial Number

int64 1

6k

| Issue Number

int64 75.6k

112k

| Title

stringlengths 3

357

| Labels

stringlengths 3

241

⌀ | Body

stringlengths 9

74.5k

⌀ | Comments

int64 0

867

|

|---|---|---|---|---|---|

3,001 | 98,269 |

Inconsistent nn.KLDivLoss behavior: 0s in target OK on cpu, but gives nan on mps

|

triaged, module: mps

|

### 🐛 Describe the bug

KLDivLoss is supposed to take the log of a probability distribution as target, sometimes this target contains 0s. This is handled correctly when device='cpu', but when device='mps' we get nans. Current workaround is to add some small eps to the target.

```python

torch.manual_seed(1)

x = torch.rand(10, 8, device='mps')

x = x / x.sum(dim=1, keepdim=True)

x = log_softmax(x, dim=-1)

y = torch.rand(10, 8, device='mps')

y = y / y.sum(dim=1, keepdim=True)

criterion = nn.KLDivLoss(reduction="sum")

print(criterion(x, y), criterion(x.to('cpu'), y.to('cpu')))

# mask out random entries of y

mask = torch.rand(10, 8, device='mps') < 0.5

y = y * mask

print(criterion(x, y), criterion(x.to('cpu'), y.to('cpu')))

```

Outputs:

```tensor(1.6974, device='mps:0') tensor(1.6974)```

```tensor(nan, device='mps:0') tensor(1.0370)```

### Versions

```

PyTorch version: 2.0.0

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 13.2.1 (x86_64)

GCC version: Could not collect

Clang version: 14.0.0 (clang-1400.0.29.202)

CMake version: Could not collect

Libc version: N/A

Python version: 3.10.10 (main, Mar 21 2023, 13:41:39) [Clang 14.0.6 ] (64-bit runtime)

Python platform: macOS-10.16-x86_64-i386-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Apple M1 Pro

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] torch==2.0.0

[pip3] torchaudio==2.0.0

[pip3] torchvision==0.15.0

[conda] blas 1.0 mkl

[conda] ffmpeg 4.3 h0a44026_0 pytorch

[conda] mkl 2021.4.0 hecd8cb5_637

[conda] mkl-service 2.4.0 py310hca72f7f_0

[conda] mkl_fft 1.3.1 py310hf879493_0

[conda] mkl_random 1.2.2 py310hc081a56_0

[conda] numpy 1.23.5 py310h9638375_0

[conda] numpy-base 1.23.5 py310ha98c3c9_0

[conda] pytorch 2.0.0 py3.10_0 pytorch

[conda] torchaudio 2.0.0 py310_cpu pytorch

[conda] torchvision 0.15.0 py310_cpu pytorch```

cc @kulinseth @albanD @malfet @DenisVieriu97 @razarmehr @abhudev

| 5 |

3,002 | 98,268 |

hf_Longformer regression caused by https://github.com/pytorch/pytorch/pull/98119

|

triaged, oncall: pt2

|

Bisect points to https://github.com/pytorch/pytorch/pull/98119 and reverting it makes the error go away.

Repro:

```

python benchmarks/dynamo/torchbench.py --inductor --amp --training --accuracy --device cuda --only hf_Longformer

```

Error msg:

```

ValueError: Cannot view a tensor with shape torch.Size([4, 12, 1024, 513]) and strides (6303744, 513, 6156, 1) as a tensor with shape (48, 4, 256, 513)!

```

Q: Why is this not caught by CI?

A: Because `hf_Longformer` uses more than 24GB GPU memory, it is currently skipped on CI. I will fix this by running larger models on A100 instances.

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 5 |

3,003 | 98,260 |

Broken mypy check in test_type_hints.py::TestTypeHints::test_doc_examples

|

module: typing, triaged

|

### 🐛 Describe the bug

There are two problems with this test that surfaces today:

1. The `mypy==0.960` version we are using has a bug with Python 3.10.6+ https://github.com/python/mypy/issues/13627. It breaks for Python 3.11 in CI. The fix requires an upgrade to 0.981 or newer. The bug is that mypy not only checks stuffs in PyTorch, but also PyTorch dependencies. Specifically, it's numpy `/opt/conda/envs/py_3.11/lib/python3.11/site-packages/numpy/__init__.pyi:638:48: error: Positional-only parameters are only supported in Python 3.8 and greater [syntax]`. For example, https://hud.pytorch.org/pytorch/pytorch/commit/73b06a0268bb89c09a86f16fa0f72818baa4b250. I found a PR https://github.com/pytorch/pytorch/pull/91983 to upgrade mypy. Could we go ahead with that? (cc @ezyang @malfet @rgommers @xuzhao9 @gramster)

2. The test has been broken on ASAN after https://github.com/pytorch/pytorch/pull/94525 (cc @wanchaol) with the following error `mypy.ini:5:1: error: Error importing plugin "numpy.typing.mypy_plugin": No module named 'numpy.typing.mypy_plugin' [misc]`, for example https://github.com/pytorch/pytorch/actions/runs/4601886643/jobs/8130489457

So why today you ask? As these changes have been in trunk for a while. It turns out that `test_doc_examples` was slow, so it wasn't run in these jobs till now.

```

test_type_hints.py::TestTypeHints::test_doc_examples SKIPPED [0.0002s] (test is slow; run with PYTORCH_TEST_WITH_SLOW to enable test)

```

The test is run as part of [slow jobs](https://github.com/pytorch/pytorch/blob/master/.github/workflows/slow.yml) but they don't cover 3.11 nor ASAN to trigger those bugs (Ouch!)

I have disabled the test in the mean time while we take actions https://github.com/pytorch/pytorch/issues/98259

### Versions

PyTorch CI

| 0 |

3,004 | 98,259 |

DISABLED test_doc_examples (__main__.TestTypeHints)

|

module: typing, triaged, skipped

|

Platforms: linux

This test was disabled because it is failing on master ([recent examples](https://hud.pytorch.org/failure/%2Fopt%2Fconda%2Fenvs%2Fpy_3.11%2Flib%2Fpython3.11%2Fsite-packages%2Fnumpy%2F__init__.pyi%3A638%3A48%3A%20error%3A%20Positional-only%20parameters%20are%20only%20supported%20in%20Python%203.8%20and%20greater%20%20%5Bsyntax%5D)).

This looks like an upstream mypy issue https://github.com/python/mypy/issues/13627 as documented in https://github.com/pytorch/pytorch/pull/94255. Disable the test to investigate further

cc @ezyang @malfet @rgommers @xuzhao9 @gramster

| 4 |

3,005 | 98,256 |

[CUDA][MAGMA][Linalg] Remove MAGMA from CUDA linear algebra dependencies

|

triaged, open source, ciflow/trunk, release notes: releng, ciflow/periodic, no-stale, keep-going

|

# wip

linear algebra on CUDA (non-ROCM) devices will only use cuSOLVER library; magma will be removed

Todo:

- [ ] remove this has_magma check in pytorch/builder for cuda build https://github.com/pytorch/builder/blob/3df3313b12e91a9feae40f15681f505b9f3d1354/check_binary.sh#L392-L393, and make sure https://github.com/pytorch/pytorch/pull/98256/commits/34194b8f3b42f0a63098cddefa565cb20aa0b171 is reverted

| 4 |

3,006 | 98,251 |

[Dynamo] Enable `dynamo.export` for huggingface models w/ `ModelOutput`

|

feature, triaged, module: pytree, oncall: pt2, module: dynamo, module: export

|

### 🚀 The feature, motivation and pitch

Initially reported at #96386, `dynamo.export` raises for any huggingface model that returns a subclass of `ModelOutput`.

Here is an experimental patch I have done to unblock `dynamo.export` based ONNX export. The idea is to extend `pytree` to be able to flatten/unflatten `ModelOutput` and its subclasses.

https://github.com/pytorch/pytorch/blob/95de585a7f208476ae06894acbcc897a0bf9abed/torch/onnx/_internal/fx/exporter.py#L21-L68

I wonder if it is a good idea to bring this native into `pytree`? The context of this patch needs to cover not only `dynamo.export` but any other subsequent fx pass that may interact with the formatted output, otherwise it won't recognize the output structure.

Feedbacks and suggestions are welcomed. Feel free to let me know if there are other more suitable solutions.

@jansel @voznesenskym @wconstab

### Alternatives

_No response_

### Additional context

_No response_

cc @zou3519 @ezyang @msaroufim @wconstab @ngimel @bdhirsh @anijain2305 @voznesenskym @penguinwu @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @soumith @desertfire

| 5 |

3,007 | 98,237 |

inductor `compile_fx_inner()` segfaults on `torch.isinf`

|

triaged, oncall: pt2, module: inductor

|

minimal repro:

```

import torch

from torch._inductor.compile_fx import compile_fx_inner

from torch._subclasses import FakeTensorMode

from torch.fx.experimental.proxy_tensor import make_fx

def f(x):

isinf = torch.isinf(x)

return [isinf]

with FakeTensorMode():

inp = torch.ones(2048, device='cuda')

fx_g = make_fx(f)(inp)

fx_inner = compile_fx_inner(fx_g, [inp])

```

This fails with:

```

File "/tmp/torchinductor_hirsheybar/3h/c3hkvftvlgct3scdmbgllet56gcfozfyloqidc57nlxmixrk5ioq.py", line 45, in <module>

async_compile.wait(globals())

File "/scratch/hirsheybar1/work/pytorch/torch/_inductor/codecache.py", line 876, in wait

scope[key] = result.result()

File "/scratch/hirsheybar1/work/pytorch/torch/_inductor/codecache.py", line 734, in result

self.future.result()

File "/scratch/hirsheybar1/work/py38/lib/python3.8/concurrent/futures/_base.py", line 444, in result

return self.__get_result()

File "/scratch/hirsheybar1/work/py38/lib/python3.8/concurrent/futures/_base.py", line 389, in __get_result

raise self._exception

concurrent.futures.process.BrokenProcessPool: A process in the process pool was terminated abruptly while the future was running or pending.

```

cc @ezyang @soumith @msaroufim @wconstab @ngimel @voznesenskym @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @peterbell10 @desertfire

| 5 |

3,008 | 98,222 |

aten::_linalg_solve_ex.result' is not currently implemented for the MPS

|

feature, triaged, module: mps

|

### 🐛 Describe the bug

I would like to request an implementation to fix "aten::_linalg_solve_ex.result' is not currently implemented for the MPS"

### Versions

aten::_linalg_solve_ex.result

cc @kulinseth @albanD @malfet @DenisVieriu97 @razarmehr @abhudev

| 10 |

3,009 | 98,212 |

Wrong results for GELU forward pass (CPU vs MPS) while inferencing a GLPN model from huggingface

|

high priority, triaged, module: correctness (silent), module: mps

|

### 🐛 Describe the bug

Hi, there. I've discovered strange behaviour when using MPS device. When infer the same model with the same input on "mps" device, the result is numerically wrong and meaningless.

@amyeroberts from huggingface narrowed down the scope of the problem to GELU layer, more information is here: https://github.com/huggingface/transformers/issues/22468

The issue makes it impossible to use GLPN architecture with MPS device.

Here are some examples:

MPS | CPU

:-------------------------:|:-------------------------:

|

Thanks in advance for the help!

### Versions

PyTorch version: 2.1.0.dev20230329

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 13.3 (arm64)

GCC version: Could not collect

Clang version: 14.0.3 (clang-1403.0.22.14.1)

CMake version: Could not collect

Libc version: N/A

Python version: 3.9.6 (default, Mar 10 2023, 20:16:38) [Clang 14.0.3 (clang-1403.0.22.14.1)] (64-bit runtime)

Python platform: macOS-13.3-arm64-arm-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Apple M2 Max

Versions of relevant libraries:

[pip3] numpy==1.24.2

[pip3] torch==2.1.0.dev20230329

[pip3] torchaudio==2.1.0.dev20230329

[pip3] torchvision==0.16.0.dev20230329

[conda] Could not collect

cc @ezyang @gchanan @zou3519 @kulinseth @albanD @malfet @DenisVieriu97 @razarmehr @abhudev

| 2 |

3,010 | 98,210 |

torch.jit.script + legacy executor mode has diff in some pattern

|

oncall: jit

|

### 🐛 Describe the bug

# there is output diff between origin and script

``` python

import torch

import os

os.environ['TORCH_JIT_DISABLE_NEW_EXECUTOR'] = '1'

torch._C._jit_set_nvfuser_enabled(False)

class TempModule(torch.nn.Module):

def __init__(self):

super().__init__()

self.head_size = 64

def forward(self, x):

score_scale1 = torch.rsqrt(torch.tensor(self.head_size) * 3)

return score_scale1

module = TempModule()

x = module(torch.randn(4, 5))

print(x) # print 0.0722

ts_module = torch.jit.script(module)

y = ts_module(torch.randn(4, 5))

print(y) # print 0, unexpectly

```

### Versions

torch==2.0.0

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel

| 1 |

3,011 | 98,208 |

Add a deterministic version of reflection_pad2d_backward_cuda

|

module: nn, triaged, enhancement, module: determinism, actionable

|

Running ResViT model in deterministic mode i face

UserWarning: reflection_pad2d_backward_cuda does not have a deterministic implementation, but you set 'torch.use_deterministic_algorithms(True, warn_only=True)'. You can file an issue at https://github.com/pytorch/pytorch/issues to help us prioritize adding deterministic support for this operation. (Triggered internally at ../aten/src/ATen/Context.cpp:82.)

pytorch version 1.13.1

Thank you!

### Alternatives

_No response_

### Additional context

_No response_

cc @albanD @mruberry @jbschlosser @walterddr @mikaylagawarecki @kurtamohler

| 4 |

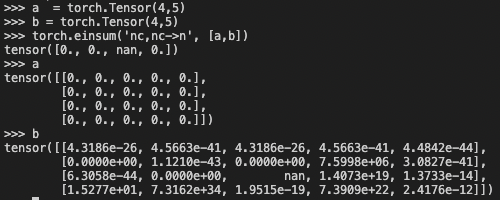

3,012 | 98,204 |

NaN appears when initializing tensor

|

needs reproduction, triaged, module: NaNs and Infs

|

### 🐛 Describe the bug

When I initialize a tensor, Nan appears such as the picture below. But I can't reimplement this bug again. Can anyone tell me why?

### Versions

PyTorch version: 1.12.0

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: CentOS Linux 7 (Core) (x86_64)

GCC version: (GCC) 4.8.5 20150623 (Red Hat 4.8.5-44)

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.17

Python version: 3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-4.14.0_1-0-0-41-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: Could not collect

CUDA_MODULE_LOADING set to:

GPU models and configuration:

GPU 0: Tesla V100-SXM2-32GB

GPU 1: Tesla V100-SXM2-32GB

GPU 2: Tesla V100-SXM2-32GB

GPU 3: Tesla V100-SXM2-32GB

GPU 4: Tesla V100-SXM2-32GB

GPU 5: Tesla V100-SXM2-32GB

GPU 6: Tesla V100-SXM2-32GB

GPU 7: Tesla V100-SXM2-32GB

Nvidia driver version: 460.32.03

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 96

On-line CPU(s) list: 0-95

Thread(s) per core: 2

Core(s) per socket: 24

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) Gold 6271C CPU @ 2.60GHz

Stepping: 7

CPU MHz: 1000.000

CPU max MHz: 2601.0000

CPU min MHz: 1000.0000

BogoMIPS: 5200.00

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 1024K

L3 cache: 33792K

NUMA node0 CPU(s): 0-23,48-71

NUMA node1 CPU(s): 24-47,72-95

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cdp_l3 invpcid_single intel_ppin ssbd mba ibrs ibpb stibp ibrs_enhanced tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid cqm mpx rdt_a avx512f avx512dq rdseed adx smap clflushopt clwb intel_pt avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts pku avx512_vnni md_clear flush_l1d arch_capabilities

Versions of relevant libraries:

[pip3] flake8==3.9.2

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] numpydoc==1.2

[pip3] pytorch-metric-learning==1.5.0

[pip3] torch==1.12.0

[pip3] torchvision==0.13.0

[pip3] tritonclient==2.31.0

[conda] blas 1.0 mkl defaults

[conda] cudatoolkit 11.3.1 h2bc3f7f_2 defaults

[conda] faiss-gpu 1.7.3 py3.9_h28a55e0_0_cuda11.3 pytorch

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] libfaiss 1.7.3 hfc2d529_0_cuda11.3 pytorch

[conda] mkl 2021.4.0 h06a4308_640 defaults

[conda] mkl-service 2.4.0 py39h7f8727e_0 defaults

[conda] mkl_fft 1.3.1 py39hd3c417c_0 defaults

[conda] mkl_random 1.2.2 py39h51133e4_0 defaults

[conda] numpy 1.21.5 py39he7a7128_1 defaults

[conda] numpy-base 1.21.5 py39hf524024_1 defaults

[conda] numpydoc 1.2 pyhd3eb1b0_0 defaults

[conda] pytorch 1.12.0 py3.9_cuda11.3_cudnn8.3.2_0 pytorch

[conda] pytorch-metric-learning 1.5.0 pypi_0 pypi

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchvision 0.13.0 py39_cu113 pytorch

[conda] tritonclient 2.31.0 pypi_0 pypi

| 0 |

3,013 | 98,203 |

AssertionError: was expecting embedding dimension of 22, but got 1320

|

oncall: transformer/mha

|

### 🐛 Describe the bug

I am new to pytorch on Colab. I used Transformer encoder as feature extractor to build DQN. The input shape of DQN is (60,22), and the output is one of the three numbers of 0,1,2. model.learn completed successfully. But model.predict is wrong. How can I change it?

code:

class TransformerFeaturesExtractor(BaseFeaturesExtractor):

def __init__(self, observation_space, features_dim=128):

super(TransformerFeaturesExtractor, self).__init__(observation_space, features_dim)

self.transformer_encoder = nn.TransformerEncoder(

nn.TransformerEncoderLayer(d_model=features_dim, nhead=2), num_layers=6

)

self.flatten = nn.Flatten()

def forward(self, observations):

x = self.flatten(observations)

x = self.transformer_encoder(x.unsqueeze(0))

return x.squeeze(0)

policy_kwargs = dict(

features_extractor_class=TransformerFeaturesExtractor,

features_extractor_kwargs=dict(features_dim=22), #, action_space=env.action_space),

)

# Create an instance of the DQN agent

model = DQN("MlpPolicy", env,policy_kwargs=policy_kwargs, verbose=1)

#model = DQN('MlpPolicy', stock_trade_env, verbose=1)

#model = PPO('MlpPolicy', env, verbose=1)

# Train the agent

# pdb.set_trace()

model.learn(total_timesteps=10_000, progress_bar=True)

obs = env.reset()

for i in range(100):

action, _states = model.predict(obs)

#action = env.action_space.sample()

obs, rewards, dones, info = env.step(action)

if dones:

break

env.render()

### Versions

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-8-b1d23f2bc043> in <cell line: 366>()

365 obs = env.reset()

366 for i in range(100):

--> 367 action, _states = model.predict(obs)

368 #action = env.action_space.sample()

369 obs, rewards, dones, info = env.step(action)

16 frames

/usr/local/lib/python3.9/dist-packages/torch/nn/functional.py in multi_head_attention_forward(query, key, value, embed_dim_to_check, num_heads, in_proj_weight, in_proj_bias, bias_k, bias_v, add_zero_attn, dropout_p, out_proj_weight, out_proj_bias, training, key_padding_mask, need_weights, attn_mask, use_separate_proj_weight, q_proj_weight, k_proj_weight, v_proj_weight, static_k, static_v, average_attn_weights)

5024 raise AssertionError(

5025 "only bool and floating types of key_padding_mask are supported")

-> 5026 assert embed_dim == embed_dim_to_check, \

5027 f"was expecting embedding dimension of {embed_dim_to_check}, but got {embed_dim}"

5028 if isinstance(embed_dim, torch.Tensor):

AssertionError: was expecting embedding dimension of 22, but got 1320

cc @jbschlosser @bhosmer @cpuhrsch @erichan1

| 3 |

3,014 | 98,200 |

torch.nn.init functions with `generator` argument

|

module: nn, triaged, module: random, actionable

|

### 🚀 The feature, motivation and pitch

I might have a custom random generator (`torch.Generator`). All the low-level random functions support a `generator` argument for this. All the functions in `torch.nn.init` just call the low-level functions.

It would be useful if I can pass `generator` to those `torch.nn.init` functions and it would just pass it on to the low-level functions.

In my case, I'm mostly interested in `torch.nn.init.trunc_normal_`, as there is no such low-level `trunc_normal_` function.

### Alternatives

For my use case, it would also be fine if you add a `Tensor.trunc_normal_` low-level function, next to the `Tensor.normal_` function.

However, I think having `generator` as an argument for the `torch.nn.init` functions can anyway be helpful.

### Additional context

_No response_

cc @albanD @mruberry @jbschlosser @walterddr @mikaylagawarecki @pbelevich

| 5 |

3,015 | 98,194 |

add register_default_collate_for

|

triaged, open source, release notes: dataloader

|

Fixes #97498

| 9 |

3,016 | 98,193 |

RuntimeError: CUDA error: an illegal memory access was encountered, torch/cuda/streams.py", line 94, in synchronize

|

module: cuda, triaged

|

Hi.

I get a torch.cuda.synchronize() error, when i infer the TRT plugin. The detailed error information is as follows.

Building TRT engine....

[04/03/2023-06:52:48] [TRT] [V] Applying generic optimizations to the graph for inference.

[04/03/2023-06:52:48] [TRT] [V] Original: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After dead-layer removal: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After Myelin optimization: 1 layers

[04/03/2023-06:52:48] [TRT] [V] Applying ScaleNodes fusions.

[04/03/2023-06:52:48] [TRT] [V] After scale fusion: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After dupe layer removal: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After final dead-layer removal: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After tensor merging: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After vertical fusions: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After dupe layer removal: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After final dead-layer removal: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After tensor merging: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After slice removal: 1 layers

[04/03/2023-06:52:48] [TRT] [V] After concat removal: 1 layers

[04/03/2023-06:52:48] [TRT] [V] Trying to split Reshape and strided tensor

[04/03/2023-06:52:48] [TRT] [V] Graph construction and optimization completed in 0.109626 seconds.

[04/03/2023-06:52:49] [TRT] [V] Using cublasLt as a tactic source

[04/03/2023-06:52:49] [TRT] [I] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +67, GPU +8, now: CPU 4520, GPU 3365 (MiB)

[04/03/2023-06:52:49] [TRT] [V] Using cuDNN as a tactic source

[04/03/2023-06:52:49] [TRT] [I] [MemUsageChange] Init cuDNN: CPU +0, GPU +10, now: CPU 4520, GPU 3375 (MiB)

[04/03/2023-06:52:49] [TRT] [I] Local timing cache in use. Profiling results in this builder pass will not be stored.

[04/03/2023-06:52:49] [TRT] [V] Constructing optimization profile number 0 [1/1].

[04/03/2023-06:52:49] [TRT] [V] Reserving memory for host IO tensors. Host: 0 bytes

[04/03/2023-06:52:49] [TRT] [V] =============== Computing reformatting costs

[04/03/2023-06:52:49] [TRT] [V] =============== Computing reformatting costs

[04/03/2023-06:52:49] [TRT] [V] =============== Computing costs for

[04/03/2023-06:52:49] [TRT] [V] *************** Autotuning format combination: Float(1327104,20736,144,1) -> Float(165888,512,1) ***************

[04/03/2023-06:52:49] [TRT] [V] Formats and tactics selection completed in 0.0748828 seconds.

[04/03/2023-06:52:49] [TRT] [V] After reformat layers: 1 layers

[04/03/2023-06:52:49] [TRT] [V] Pre-optimized block assignment.

[04/03/2023-06:52:49] [TRT] [V] Block size 8589934592

[04/03/2023-06:52:49] [TRT] [V] Total Activation Memory: 8589934592

[04/03/2023-06:52:49] [TRT] [I] Detected 1 inputs and 1 output network tensors.

[04/03/2023-06:52:49] [TRT] [V] Layer: (Unnamed Layer* 0) [PluginV2DynamicExt] Host Persistent: 112 Device Persistent: 0 Scratch Memory: 0

[04/03/2023-06:52:49] [TRT] [I] Total Host Persistent Memory: 112

[04/03/2023-06:52:49] [TRT] [I] Total Device Persistent Memory: 0

[04/03/2023-06:52:49] [TRT] [I] Total Scratch Memory: 0

[04/03/2023-06:52:49] [TRT] [I] [MemUsageStats] Peak memory usage of TRT CPU/GPU memory allocators: CPU 0 MiB, GPU 0 MiB

[04/03/2023-06:52:49] [TRT] [V] Optimized block assignment.

[04/03/2023-06:52:49] [TRT] [I] Total Activation Memory: 0

[04/03/2023-06:52:49] [TRT] [V] Disabling unused tactic source: EDGE_MASK_CONVOLUTIONS

[04/03/2023-06:52:49] [TRT] [V] Using cublasLt as a tactic source

[04/03/2023-06:52:49] [TRT] [I] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +1, GPU +8, now: CPU 4619, GPU 3821 (MiB)

[04/03/2023-06:52:49] [TRT] [V] Using cuDNN as a tactic source

[04/03/2023-06:52:49] [TRT] [I] [MemUsageChange] Init cuDNN: CPU +0, GPU +8, now: CPU 4619, GPU 3829 (MiB)

[04/03/2023-06:52:49] [TRT] [V] Engine generation completed in 0.842963 seconds.

[04/03/2023-06:52:49] [TRT] [V] Engine Layer Information:

Layer(PluginV2): (Unnamed Layer* 0) [PluginV2DynamicExt], Tactic: 0x0000000000000000, input_img[Float(-2,64,144,144)] -> vision_transformer_output[Float(-2,324,512)]

[04/03/2023-06:52:49] [TRT] [I] [MemUsageChange] TensorRT-managed allocation in building engine: CPU +0, GPU +0, now: CPU 0, GPU 0 (MiB)

[04/03/2023-06:52:49] [TRT] [V] Using cublasLt as a tactic source

[04/03/2023-06:52:49] [TRT] [I] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +8, now: CPU 4573, GPU 3681 (MiB)

[04/03/2023-06:52:49] [TRT] [V] Using cuDNN as a tactic source

[04/03/2023-06:52:49] [TRT] [I] [MemUsageChange] Init cuDNN: CPU +0, GPU +8, now: CPU 4573, GPU 3689 (MiB)

[04/03/2023-06:52:49] [TRT] [V] Total per-runner device persistent memory is 0

[04/03/2023-06:52:49] [TRT] [V] Total per-runner host persistent memory is 112

[04/03/2023-06:52:49] [TRT] [V] Allocated activation device memory of size 0

[04/03/2023-06:52:49] [TRT] [I] [MemUsageChange] TensorRT-managed allocation in IExecutionContext creation: CPU +0, GPU +0, now: CPU 0, GPU 0 (MiB)

Traceback (most recent call last):

File "examples/infer_visiontransformer_plugin.py", line 310, in <module>

main(args)

File "examples/infer_visiontransformer_plugin.py", line 128, in main

plugin_output = run_trt_plugin(p_loader, images_tensor, engine)

File "/opt/conda/lib/python3.8/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "examples/infer_visiontransformer_plugin.py", line 166, in run_trt_plugin

stream.synchronize()

File "/opt/conda/lib/python3.8/site-packages/torch/cuda/streams.py", line 94, in synchronize

super(Stream, self).synchronize()

RuntimeError: CUDA error: an illegal memory access was encountered

CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

- PyTorch or Caffe2:

- How you installed PyTorch (conda, pip, source):

- Build command you used (if compiling from source):

- OS:

- PyTorch version:

- Python version:

- CUDA/cuDNN version:

- GPU models and configuration:

- GCC version (if compiling from source):

- CMake version:

- Versions of any other relevant libraries:

cc @ngimel

| 0 |

3,017 | 98,189 |

[onnx] AdaptiveMaxPool2d can not convert to GlobalMaxPool

|

module: onnx, triaged

|

### 🐛 Describe the bug

I try to convert the cbam block to onnx. The nn.AdaptiveMaxPool2d([1, 1]) used in ChannelAttention is expected to be a GlobalMaxPool in onnx accodring to according to https://github.com/pytorch/pytorch/blob/ff4569ae2939c3e81092fdf43c9d5f2f08453c42/torch/onnx/symbolic_opset9.py#L981, but i got onnx::MaxPool. Is there any way to solve this problem?

python script:

~~~python

import torch

import torch.nn as nn

def conv3x3(in_planes, out_planes, stride=1):

"3x3 convolution with padding"

return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,

padding=1, bias=False)

class ChannelAttention(nn.Module):

def __init__(self, in_planes, ratio=16):

super(ChannelAttention, self).__init__()

self.avg_pool = nn.AdaptiveAvgPool2d([1, 1])

self.max_pool = nn.AdaptiveMaxPool2d([1, 1])

self.fc = nn.Sequential(nn.Conv2d(in_planes, in_planes // 16, 1, bias=False),

nn.ReLU(),

nn.Conv2d(in_planes // 16, in_planes, 1, bias=False))

self.sigmoid = nn.Sigmoid()

def forward(self, x):

avg_out = self.fc(self.avg_pool(x))

max_out = self.fc(self.max_pool(x))

out = avg_out + max_out

return self.sigmoid(out)

class SpatialAttention(nn.Module):

def __init__(self, kernel_size=7):

super(SpatialAttention, self).__init__()

self.conv1 = nn.Conv2d(2, 1, kernel_size, padding=kernel_size//2, bias=False)

self.sigmoid = nn.Sigmoid()

def forward(self, x):

avg_out = torch.mean(x, dim=1, keepdim=True)

max_out, _ = torch.max(x, dim=1, keepdim=True)

x = torch.cat([avg_out, max_out], dim=1)

x = self.conv1(x)

return self.sigmoid(x)

class CBAM(nn.Module):

expansion = 1

def __init__(self, inplanes, planes, stride=1, downsample=None):

super(CBAM, self).__init__()

self.conv1 = conv3x3(inplanes, planes, stride)

self.bn1 = nn.BatchNorm2d(planes)

self.relu = nn.ReLU(inplace=True)

self.conv2 = conv3x3(planes, planes)

self.bn2 = nn.BatchNorm2d(planes)

self.ca = ChannelAttention(planes)

self.sa = SpatialAttention()

self.downsample = downsample

self.stride = stride

def forward(self, x):

residual = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.ca(out) * out

out = self.sa(out) * out

if self.downsample is not None:

residual = self.downsample(x)

out += residual

out = self.relu(out)

return out

if __name__ == '__main__':

model = CBAM(16, 16)

device = 'cuda:0'

model.to(device)

model.eval()

dummpy_input = torch.zeros([1, 16, 400, 640], device=device)

torch.onnx.export(

model,

dummpy_input,

'model.onnx',

verbose=True,

input_names=["input"],

output_names=["output"],

opset_version=14,

)

~~~

script output:

~~~

Exported graph: graph(%input : Float(1, 16, 400, 640, strides=[4096000, 256000, 640, 1], requires_grad=0, device=cuda:0),

%ca.fc.0.weight : Float(1, 16, 1, 1, strides=[16, 1, 1, 1], requires_grad=1, device=cuda:0),

%ca.fc.2.weight : Float(16, 1, 1, 1, strides=[1, 1, 1, 1], requires_grad=1, device=cuda:0),

%sa.conv1.weight : Float(1, 2, 7, 7, strides=[98, 49, 7, 1], requires_grad=1, device=cuda:0),

%onnx::Conv_41 : Float(16, 16, 3, 3, strides=[144, 9, 3, 1], requires_grad=0, device=cuda:0),

%onnx::Conv_42 : Float(16, strides=[1], requires_grad=0, device=cuda:0),

%onnx::Conv_44 : Float(16, 16, 3, 3, strides=[144, 9, 3, 1], requires_grad=0, device=cuda:0)):

%onnx::Conv_45 : Float(16, strides=[1], requires_grad=0, device=cuda:0) = onnx::Identity(%onnx::Conv_42)

%/conv1/Conv_output_0 : Float(1, 16, 400, 640, strides=[4096000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[3, 3], pads=[1, 1, 1, 1], strides=[1, 1], onnx_name="/conv1/Conv"](%input, %onnx::Conv_41, %onnx::Conv_42), scope: __main__.CBAM::/torch.nn.modules.conv.Conv2d::conv1 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/conv.py:459:0

%/relu/Relu_output_0 : Float(1, 16, 400, 640, strides=[4096000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Relu[onnx_name="/relu/Relu"](%/conv1/Conv_output_0), scope: __main__.CBAM::/torch.nn.modules.activation.ReLU::relu # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/functional.py:1455:0

%/conv2/Conv_output_0 : Float(1, 16, 400, 640, strides=[4096000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[3, 3], pads=[1, 1, 1, 1], strides=[1, 1], onnx_name="/conv2/Conv"](%/relu/Relu_output_0, %onnx::Conv_44, %onnx::Conv_45), scope: __main__.CBAM::/torch.nn.modules.conv.Conv2d::conv2 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/conv.py:459:0

%/ca/avg_pool/GlobalAveragePool_output_0 : Float(1, 16, 1, 1, strides=[16, 1, 1, 1], requires_grad=1, device=cuda:0) = onnx::GlobalAveragePool[onnx_name="/ca/avg_pool/GlobalAveragePool"](%/conv2/Conv_output_0), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.pooling.AdaptiveAvgPool2d::avg_pool # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/functional.py:1214:0

%/ca/fc/fc.0/Conv_output_0 : Float(1, 1, 1, 1, strides=[1, 1, 1, 1], requires_grad=0, device=cuda:0) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[1, 1], pads=[0, 0, 0, 0], strides=[1, 1], onnx_name="/ca/fc/fc.0/Conv"](%/ca/avg_pool/GlobalAveragePool_output_0, %ca.fc.0.weight), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.container.Sequential::fc/torch.nn.modules.conv.Conv2d::fc.0 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/conv.py:459:0

%/ca/fc/fc.1/Relu_output_0 : Float(1, 1, 1, 1, strides=[1, 1, 1, 1], requires_grad=1, device=cuda:0) = onnx::Relu[onnx_name="/ca/fc/fc.1/Relu"](%/ca/fc/fc.0/Conv_output_0), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.container.Sequential::fc/torch.nn.modules.activation.ReLU::fc.1 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/functional.py:1457:0

%/ca/fc/fc.2/Conv_output_0 : Float(1, 16, 1, 1, strides=[16, 1, 1, 1], requires_grad=0, device=cuda:0) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[1, 1], pads=[0, 0, 0, 0], strides=[1, 1], onnx_name="/ca/fc/fc.2/Conv"](%/ca/fc/fc.1/Relu_output_0, %ca.fc.2.weight), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.container.Sequential::fc/torch.nn.modules.conv.Conv2d::fc.2 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/conv.py:459:0

%/ca/max_pool/MaxPool_output_0 : Float(1, 16, 1, 1, strides=[16, 1, 1, 1], requires_grad=1, device=cuda:0) = onnx::MaxPool[kernel_shape=[400, 640], pads=[0, 0, 0, 0], strides=[400, 640], onnx_name="/ca/max_pool/MaxPool"](%/conv2/Conv_output_0), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.pooling.AdaptiveMaxPool2d::max_pool # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/functional.py:1121:0

%/ca/fc/fc.0_1/Conv_output_0 : Float(1, 1, 1, 1, strides=[1, 1, 1, 1], requires_grad=0, device=cuda:0) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[1, 1], pads=[0, 0, 0, 0], strides=[1, 1], onnx_name="/ca/fc/fc.0_1/Conv"](%/ca/max_pool/MaxPool_output_0, %ca.fc.0.weight), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.container.Sequential::fc/torch.nn.modules.conv.Conv2d::fc.0 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/conv.py:459:0

%/ca/fc/fc.1_1/Relu_output_0 : Float(1, 1, 1, 1, strides=[1, 1, 1, 1], requires_grad=1, device=cuda:0) = onnx::Relu[onnx_name="/ca/fc/fc.1_1/Relu"](%/ca/fc/fc.0_1/Conv_output_0), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.container.Sequential::fc/torch.nn.modules.activation.ReLU::fc.1 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/functional.py:1457:0

%/ca/fc/fc.2_1/Conv_output_0 : Float(1, 16, 1, 1, strides=[16, 1, 1, 1], requires_grad=0, device=cuda:0) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[1, 1], pads=[0, 0, 0, 0], strides=[1, 1], onnx_name="/ca/fc/fc.2_1/Conv"](%/ca/fc/fc.1_1/Relu_output_0, %ca.fc.2.weight), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.container.Sequential::fc/torch.nn.modules.conv.Conv2d::fc.2 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/conv.py:459:0

%/ca/Add_output_0 : Float(1, 16, 1, 1, strides=[16, 1, 1, 1], requires_grad=1, device=cuda:0) = onnx::Add[onnx_name="/ca/Add"](%/ca/fc/fc.2/Conv_output_0, %/ca/fc/fc.2_1/Conv_output_0), scope: __main__.CBAM::/__main__.ChannelAttention::ca # /home/oem/Downloads/test.py:23:0

%/ca/sigmoid/Sigmoid_output_0 : Float(1, 16, 1, 1, strides=[16, 1, 1, 1], requires_grad=1, device=cuda:0) = onnx::Sigmoid[onnx_name="/ca/sigmoid/Sigmoid"](%/ca/Add_output_0), scope: __main__.CBAM::/__main__.ChannelAttention::ca/torch.nn.modules.activation.Sigmoid::sigmoid # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/activation.py:295:0

%/Mul_output_0 : Float(1, 16, 400, 640, strides=[4096000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Mul[onnx_name="/Mul"](%/ca/sigmoid/Sigmoid_output_0, %/conv2/Conv_output_0), scope: __main__.CBAM:: # /home/oem/Downloads/test.py:67:0

%/sa/ReduceMean_output_0 : Float(1, 1, 400, 640, strides=[256000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::ReduceMean[axes=[1], keepdims=1, onnx_name="/sa/ReduceMean"](%/Mul_output_0), scope: __main__.CBAM::/__main__.SpatialAttention::sa # /home/oem/Downloads/test.py:34:0

%/sa/ReduceMax_output_0 : Float(1, 1, 400, 640, strides=[256000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::ReduceMax[axes=[1], keepdims=1, onnx_name="/sa/ReduceMax"](%/Mul_output_0), scope: __main__.CBAM::/__main__.SpatialAttention::sa # /home/oem/Downloads/test.py:35:0

%/sa/Concat_output_0 : Float(1, 2, 400, 640, strides=[512000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Concat[axis=1, onnx_name="/sa/Concat"](%/sa/ReduceMean_output_0, %/sa/ReduceMax_output_0), scope: __main__.CBAM::/__main__.SpatialAttention::sa # /home/oem/Downloads/test.py:36:0

%/sa/conv1/Conv_output_0 : Float(1, 1, 400, 640, strides=[256000, 256000, 640, 1], requires_grad=0, device=cuda:0) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[7, 7], pads=[3, 3, 3, 3], strides=[1, 1], onnx_name="/sa/conv1/Conv"](%/sa/Concat_output_0, %sa.conv1.weight), scope: __main__.CBAM::/__main__.SpatialAttention::sa/torch.nn.modules.conv.Conv2d::conv1 # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/conv.py:459:0

%/sa/sigmoid/Sigmoid_output_0 : Float(1, 1, 400, 640, strides=[256000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Sigmoid[onnx_name="/sa/sigmoid/Sigmoid"](%/sa/conv1/Conv_output_0), scope: __main__.CBAM::/__main__.SpatialAttention::sa/torch.nn.modules.activation.Sigmoid::sigmoid # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/modules/activation.py:295:0

%/Mul_1_output_0 : Float(1, 16, 400, 640, strides=[4096000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Mul[onnx_name="/Mul_1"](%/sa/sigmoid/Sigmoid_output_0, %/Mul_output_0), scope: __main__.CBAM:: # /home/oem/Downloads/test.py:68:0

%/Add_output_0 : Float(1, 16, 400, 640, strides=[4096000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Add[onnx_name="/Add"](%/Mul_1_output_0, %input), scope: __main__.CBAM:: # /home/oem/Downloads/test.py:73:0

%output : Float(1, 16, 400, 640, strides=[4096000, 256000, 640, 1], requires_grad=1, device=cuda:0) = onnx::Relu[onnx_name="/relu_1/Relu"](%/Add_output_0), scope: __main__.CBAM::/torch.nn.modules.activation.ReLU::relu # /home/oem/anaconda3/envs/torch/lib/python3.10/site-packages/torch/nn/functional.py:1455:0

return (%output)

================ Diagnostic Run torch.onnx.export version 2.0.0 ================

verbose: False, log level: Level.ERROR

======================= 0 NONE 0 NOTE 0 WARNING 0 ERROR ========================

~~~

### Versions

Versions of relevant libraries:

[pip3] numpy==1.23.5

[pip3] pytorch-lightning==2.0.0

[pip3] torch==2.0.0

[pip3] torchaudio==2.0.0

[pip3] torchmetrics==0.11.4

[pip3] torchvision==0.15.0

[pip3] triton==2.0.0

[conda] blas 1.0 mkl

[conda] libblas 3.9.0 12_linux64_mkl conda-forge

[conda] libcblas 3.9.0 12_linux64_mkl conda-forge

[conda] liblapack 3.9.0 12_linux64_mkl conda-forge

[conda] liblapacke 3.9.0 12_linux64_mkl conda-forge

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py310h7f8727e_0

[conda] mkl_fft 1.3.1 py310hd6ae3a3_0

[conda] mkl_random 1.2.2 py310h00e6091_0

[conda] numpy 1.23.5 py310hd5efca6_0

[conda] numpy-base 1.23.5 py310h8e6c178_0

[conda] pytorch 2.0.0 py3.10_cuda11.8_cudnn8.7.0_0 pytorch

[conda] pytorch-cuda 11.8 h7e8668a_3 pytorch

[conda] pytorch-lightning 2.0.0 pyhd8ed1ab_1 conda-forge

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 2.0.0 py310_cu118 pytorch

[conda] torchmetrics 0.11.4 pyhd8ed1ab_0 conda-forge

[conda] torchtriton 2.0.0 py310 pytorch

[conda] torchvision 0.15.0 py310_cu118 pytorch

| 2 |

3,018 | 98,187 |

how can i load seperate pytorch_model.bin?

|

module: serialization, triaged

|

If you have a question or would like help and support, please ask at our

[forums](https://discuss.pytorch.org/).

If you are submitting a feature request, please preface the title with [feature request].

If you are submitting a bug report, please fill in the following details.

## Issue description

Provide a short description.

## Code example

Please try to provide a minimal example to repro the bug.

Error messages and stack traces are also helpful.

## System Info

Please copy and paste the output from our

[environment collection script](https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py)

(or fill out the checklist below manually).

You can get the script and run it with:

```

wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

# For security purposes, please check the contents of collect_env.py before running it.

python collect_env.py

```

- PyTorch or Caffe2:

- How you installed PyTorch (conda, pip, source):

- Build command you used (if compiling from source):

- OS:

- PyTorch version: 1.13.1

- Python version: 3.8

- CUDA/cuDNN version:

- GPU models and configuration:

- GCC version (if compiling from source):

- CMake version:

- Versions of any other relevant libraries:

I have a separate binary file (pytorch_model-00001-of-00002.bin, pytorch_model-00002-of-00002.bin, pytorch_model.bin.index.json).

When I substitute path into torch.load, I get an error.

How can I get this into torch.load?

cc @mruberry

| 0 |

3,019 | 98,169 |

The operator 'aten::_weight_norm_interface' is not currently implemented for the MPS device.

|

feature, triaged, module: mps

|

### 🐛 Describe the bug

NotImplementedError: The operator 'aten::_weight_norm_interface' is not currently implemented for the MPS device. If you want this op to be added in priority during the prototype phase of this feature, please comment on https://github.com/pytorch/pytorch/issues/77764. As a temporary fix, you can set the environment variable `PYTORCH_ENABLE_MPS_FALLBACK=1` to use the CPU as a fallback for this op. WARNING: this will be slower than running natively on MPS.

### Versions

NotImplementedError: The operator 'aten::_weight_norm_interface' is not currently implemented for the MPS device. If you want this op to be added in priority during the prototype phase of this feature, please comment on https://github.com/pytorch/pytorch/issues/77764. As a temporary fix, you can set the environment variable `PYTORCH_ENABLE_MPS_FALLBACK=1` to use the CPU as a fallback for this op. WARNING: this will be slower than running natively on MPS.

cc @kulinseth @albanD @malfet @DenisVieriu97 @razarmehr @abhudev

| 0 |

3,020 | 98,164 |

forward AD implimentation : _scaled_dot_product_efficient_attention

|

triaged, module: forward ad

|

### 🚀 The feature, motivation and pitch

Hi there,

I encountered an error message that requests me to file an issue regarding a feature implementation. The error message is as follows:

NotImplementedError: Trying to use forward AD with _scaled_dot_product_efficient_attention that does not support it because it has not been implemented yet.

Please file an issue to PyTorch at https://github.com/pytorch/pytorch/issues/new?template=feature-request.yml so that we can prioritize its implementation.

Note that forward AD support for some operators require PyTorch to be built with TorchScript and for JIT to be enabled. If the environment var PYTORCH_JIT=0 is set or if the

library is not built with TorchScript, some operators may no longer be used with forward AD.

I would appreciate it if you could prioritize the implementation of this feature. Thank you for your help.

### Alternatives

_No response_

### Additional context

I ran forward AD of Stable-Diffusion with diffusers library, dtype = torch.float32, device=cuda.

| 3 |

3,021 | 98,161 |

Compiling complex-valued functions fails

|

triaged, oncall: pt2, module: inductor

|

### 🐛 Describe the bug

Compiling fails for even very simple functions when tensors are complex-valued. See e.g.

```python

import torch

@torch.compile

def foo(X, Y):

Z = X + Y

return Z

X = torch.zeros(10, dtype=torch.complex128)

Y = torch.zeros(10, dtype=torch.complex128)

foo(X, Y)

```

### Error logs

```python

---------------------------------------------------------------------------

KeyError Traceback (most recent call last)

File [~/torch/_dynamo/output_graph.py:670](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/output_graph.py:670), in OutputGraph.call_user_compiler(self, gm)

[669](/torch/_dynamo/output_graph.py?line=668) else:

--> [670](/torch/_dynamo/output_graph.py?line=669) compiled_fn = compiler_fn(gm, self.fake_example_inputs())

[671](/torch/_dynamo/output_graph.py?line=670) _step_logger()(logging.INFO, f"done compiler function {name}")

File [~/torch/_dynamo/debug_utils.py:1055](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/debug_utils.py:1055), in wrap_backend_debug..debug_wrapper(gm, example_inputs, **kwargs)

[1054](/torch/_dynamo/debug_utils.py?line=1053) else:

-> [1055](/torch/_dynamo/debug_utils.py?line=1054) compiled_gm = compiler_fn(gm, example_inputs)

[1057](/torch/_dynamo/debug_utils.py?line=1056) return compiled_gm

File [~/torch/__init__.py:1390](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/__init__.py:1390), in _TorchCompileInductorWrapper.__call__(self, model_, inputs_)

[1388](/torch/__init__.py?line=1387) from torch._inductor.compile_fx import compile_fx

-> [1390](/torch/__init__.py?line=1389) return compile_fx(model_, inputs_, config_patches=self.config)

File [~/torch/_inductor/compile_fx.py:455](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/compile_fx.py:455), in compile_fx(model_, example_inputs_, inner_compile, config_patches)

[450](/torch/_inductor/compile_fx.py?line=449) with overrides.patch_functions():

[451](/torch/_inductor/compile_fx.py?line=450)

[452](/torch/_inductor/compile_fx.py?line=451) # TODO: can add logging before[/after](https://file+.vscode-resource.vscode-cdn.net/after) the call to create_aot_dispatcher_function

[453](/torch/_inductor/compile_fx.py?line=452) # in torch._functorch[/aot_autograd.py](https://file+.vscode-resource.vscode-cdn.net/aot_autograd.py)::aot_module_simplified::aot_function_simplified::new_func

[454](/torch/_inductor/compile_fx.py?line=453) # once torchdynamo is merged into pytorch

--> [455](/torch/_inductor/compile_fx.py?line=454) return aot_autograd(

[456](/torch/_inductor/compile_fx.py?line=455) fw_compiler=fw_compiler,

[457](/torch/_inductor/compile_fx.py?line=456) bw_compiler=bw_compiler,

[458](/torch/_inductor/compile_fx.py?line=457) decompositions=select_decomp_table(),

[459](/torch/_inductor/compile_fx.py?line=458) partition_fn=functools.partial(

[460](/torch/_inductor/compile_fx.py?line=459) min_cut_rematerialization_partition, compiler="inductor"

[461](/torch/_inductor/compile_fx.py?line=460) ),

[462](/torch/_inductor/compile_fx.py?line=461) keep_inference_input_mutations=True,

[463](/torch/_inductor/compile_fx.py?line=462) )(model_, example_inputs_)

File [~/torch/_dynamo/backends/common.py:48](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/backends/common.py:48), in aot_autograd..compiler_fn(gm, example_inputs)

[47](/torch/_dynamo/backends/common.py?line=46) with enable_aot_logging():

---> [48](/torch/_dynamo/backends/common.py?line=47) cg = aot_module_simplified(gm, example_inputs, **kwargs)

[49](/torch/_dynamo/backends/common.py?line=48) counters["aot_autograd"]["ok"] += 1

File [~/torch/_functorch/aot_autograd.py:2805](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_functorch/aot_autograd.py:2805), in aot_module_simplified(mod, args, fw_compiler, bw_compiler, partition_fn, decompositions, hasher_type, static_argnums, keep_inference_input_mutations)

[2803](/torch/_functorch/aot_autograd.py?line=2802) full_args.extend(args)

-> [2805](/torch/_functorch/aot_autograd.py?line=2804) compiled_fn = create_aot_dispatcher_function(

[2806](/torch/_functorch/aot_autograd.py?line=2805) functional_call,

[2807](/torch/_functorch/aot_autograd.py?line=2806) full_args,

[2808](/torch/_functorch/aot_autograd.py?line=2807) aot_config,

[2809](/torch/_functorch/aot_autograd.py?line=2808) )

[2811](/torch/_functorch/aot_autograd.py?line=2810) # TODO: There is something deeply wrong here; compiled_fn running with

[2812](/torch/_functorch/aot_autograd.py?line=2811) # the boxed calling convention, but aot_module_simplified somehow

[2813](/torch/_functorch/aot_autograd.py?line=2812) # historically returned a function that was not the boxed calling

[2814](/torch/_functorch/aot_autograd.py?line=2813) # convention. This should get fixed...

File [~/torch/_dynamo/utils.py:163](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/utils.py:163), in dynamo_timed..dynamo_timed_inner..time_wrapper(*args, **kwargs)

[162](/torch/_dynamo/utils.py?line=161) t0 = time.time()

--> [163](/torch/_dynamo/utils.py?line=162) r = func(*args, **kwargs)

[164](/torch/_dynamo/utils.py?line=163) time_spent = time.time() - t0

File [~/torch/_functorch/aot_autograd.py:2498](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_functorch/aot_autograd.py:2498), in create_aot_dispatcher_function(flat_fn, flat_args, aot_config)

[2496](/torch/_functorch/aot_autograd.py?line=2495) # You can put more passes here

-> [2498](/torch/_functorch/aot_autograd.py?line=2497) compiled_fn = compiler_fn(flat_fn, fake_flat_args, aot_config)

[2500](/torch/_functorch/aot_autograd.py?line=2499) if not hasattr(compiled_fn, "_boxed_call"):

File [~/torch/_functorch/aot_autograd.py:1713](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_functorch/aot_autograd.py:1713), in aot_wrapper_dedupe(flat_fn, flat_args, aot_config, compiler_fn)

[1712](/torch/_functorch/aot_autograd.py?line=1711) if ok:

-> [1713](/torch/_functorch/aot_autograd.py?line=1712) return compiler_fn(flat_fn, leaf_flat_args, aot_config)

[1715](/torch/_functorch/aot_autograd.py?line=1714) # Strategy 2: Duplicate specialize.

[1716](/torch/_functorch/aot_autograd.py?line=1715) #

[1717](/torch/_functorch/aot_autograd.py?line=1716) # In Haskell types, suppose you have:

(...)

[1749](/torch/_functorch/aot_autograd.py?line=1748) # }

[1750](/torch/_functorch/aot_autograd.py?line=1749) # keep_arg_mask = [True, True, False, True]

File [~/torch/_functorch/aot_autograd.py:1326](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_functorch/aot_autograd.py:1326), in aot_dispatch_base(flat_fn, flat_args, aot_config)

[1325](/torch/_functorch/aot_autograd.py?line=1324) with context(), track_graph_compiling(aot_config, "inference"):

-> [1326](/torch/_functorch/aot_autograd.py?line=1325) compiled_fw = aot_config.fw_compiler(fw_module, flat_args_with_views_handled)

[1328](/torch/_functorch/aot_autograd.py?line=1327) compiled_fn = create_runtime_wrapper(

[1329](/torch/_functorch/aot_autograd.py?line=1328) compiled_fw,

[1330](/torch/_functorch/aot_autograd.py?line=1329) runtime_metadata=metadata_,

[1331](/torch/_functorch/aot_autograd.py?line=1330) trace_joint=False,

[1332](/torch/_functorch/aot_autograd.py?line=1331) keep_input_mutations=aot_config.keep_inference_input_mutations

[1333](/torch/_functorch/aot_autograd.py?line=1332) )

File [~/torch/_dynamo/utils.py:163](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/utils.py:163), in dynamo_timed..dynamo_timed_inner..time_wrapper(*args, **kwargs)

[162](/torch/_dynamo/utils.py?line=161) t0 = time.time()

--> [163](/torch/_dynamo/utils.py?line=162) r = func(*args, **kwargs)

[164](/torch/_dynamo/utils.py?line=163) time_spent = time.time() - t0

File [~/torch/_inductor/compile_fx.py:430](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/compile_fx.py:430), in compile_fx..fw_compiler(model, example_inputs)

[429](/torch/_inductor/compile_fx.py?line=428) model = convert_outplace_to_inplace(model)

--> [430](/torch/_inductor/compile_fx.py?line=429) return inner_compile(

[431](/torch/_inductor/compile_fx.py?line=430) model,

[432](/torch/_inductor/compile_fx.py?line=431) example_inputs,

[433](/torch/_inductor/compile_fx.py?line=432) num_fixed=fixed,

[434](/torch/_inductor/compile_fx.py?line=433) cudagraphs=cudagraphs,

[435](/torch/_inductor/compile_fx.py?line=434) graph_id=graph_id,

[436](/torch/_inductor/compile_fx.py?line=435) )

File [~/torch/_dynamo/debug_utils.py:595](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/debug_utils.py:595), in wrap_compiler_debug..debug_wrapper(gm, example_inputs, **kwargs)

[594](/torch/_dynamo/debug_utils.py?line=593) else:

--> [595](/torch/_dynamo/debug_utils.py?line=594) compiled_fn = compiler_fn(gm, example_inputs)

[597](/torch/_dynamo/debug_utils.py?line=596) return compiled_fn

File [~/torch/_inductor/debug.py:239](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/debug.py:239), in DebugContext.wrap..inner(*args, **kwargs)

[238](/torch/_inductor/debug.py?line=237) with DebugContext():

--> [239](/torch/_inductor/debug.py?line=238) return fn(*args, **kwargs)

File [~/miniconda3/lib/python3.9/contextlib.py:79](https://file+.vscode-resource.vscode-cdn.net/Users/~/miniconda3/lib/python3.9/contextlib.py:79), in ContextDecorator.__call__..inner(*args, **kwds)

[78](/miniconda3/lib/python3.9/contextlib.py?line=77) with self._recreate_cm():

---> [79](/miniconda3/lib/python3.9/contextlib.py?line=78) return func(*args, **kwds)

File [~/torch/_inductor/compile_fx.py:177](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/compile_fx.py:177), in compile_fx_inner(gm, example_inputs, cudagraphs, num_fixed, is_backward, graph_id)

[176](/torch/_inductor/compile_fx.py?line=175) graph.run(*example_inputs)

--> [177](/torch/_inductor/compile_fx.py?line=176) compiled_fn = graph.compile_to_fn()

[179](/torch/_inductor/compile_fx.py?line=178) if cudagraphs:

File [~/torch/_inductor/graph.py:586](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/graph.py:586), in GraphLowering.compile_to_fn(self)

[585](/torch/_inductor/graph.py?line=584) def compile_to_fn(self):

--> [586](/torch/_inductor/graph.py?line=585) return self.compile_to_module().call

File [~/torch/_dynamo/utils.py:163](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/utils.py:163), in dynamo_timed..dynamo_timed_inner..time_wrapper(*args, **kwargs)

[162](/torch/_dynamo/utils.py?line=161) t0 = time.time()

--> [163](/torch/_dynamo/utils.py?line=162) r = func(*args, **kwargs)

[164](/torch/_dynamo/utils.py?line=163) time_spent = time.time() - t0

File [~/torch/_inductor/graph.py:571](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/graph.py:571), in GraphLowering.compile_to_module(self)

[569](/torch/_inductor/graph.py?line=568) from .codecache import PyCodeCache

--> [571](/torch/_inductor/graph.py?line=570) code = self.codegen()

[572](/torch/_inductor/graph.py?line=571) if config.debug:

File [~/torch/_inductor/graph.py:522](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/graph.py:522), in GraphLowering.codegen(self)

[521](/torch/_inductor/graph.py?line=520) assert self.scheduler is not None # mypy can't figure this out

--> [522](/torch/_inductor/graph.py?line=521) self.scheduler.codegen()

[523](/torch/_inductor/graph.py?line=522) assert self.wrapper_code is not None

File [~/torch/_dynamo/utils.py:163](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/utils.py:163), in dynamo_timed..dynamo_timed_inner..time_wrapper(*args, **kwargs)

[162](/torch/_dynamo/utils.py?line=161) t0 = time.time()

--> [163](/torch/_dynamo/utils.py?line=162) r = func(*args, **kwargs)

[164](/torch/_dynamo/utils.py?line=163) time_spent = time.time() - t0

File [~/torch/_inductor/scheduler.py:1177](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/scheduler.py:1177), in Scheduler.codegen(self)

[1175](/torch/_inductor/scheduler.py?line=1174) self.available_buffer_names.update(node.get_names())

-> [1177](/torch/_inductor/scheduler.py?line=1176) self.flush()

File [~/torch/_inductor/scheduler.py:1095](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/scheduler.py:1095), in Scheduler.flush(self)

[1094](/torch/_inductor/scheduler.py?line=1093) for backend in self.backends.values():

-> [1095](/torch/_inductor/scheduler.py?line=1094) backend.flush()

[1096](/torch/_inductor/scheduler.py?line=1095) self.free_buffers()

File [~/torch/_inductor/codegen/cpp.py:1975](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/codegen/cpp.py:1975), in CppScheduling.flush(self)

[1974](/torch/_inductor/codegen/cpp.py?line=1973) def flush(self):

-> [1975](/torch/_inductor/codegen/cpp.py?line=1974) self.kernel_group.codegen_define_and_call(V.graph.wrapper_code)

[1976](/torch/_inductor/codegen/cpp.py?line=1975) self.get_kernel_group()

File [~/torch/_inductor/codegen/cpp.py:2004](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/codegen/cpp.py:2004), in KernelGroup.codegen_define_and_call(self, wrapper)

[2003](/torch/_inductor/codegen/cpp.py?line=2002) kernel_name = "kernel_cpp_" + wrapper.next_kernel_suffix()

-> [2004](/torch/_inductor/codegen/cpp.py?line=2003) arg_defs, call_args, arg_types = self.args.cpp_argdefs()

[2005](/torch/_inductor/codegen/cpp.py?line=2004) arg_defs = ",\n".ljust(25).join(arg_defs)

File [~/torch/_inductor/codegen/common.py:330](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_inductor/codegen/common.py:330), in KernelArgs.cpp_argdefs(self)

[329](/torch/_inductor/codegen/common.py?line=328) dtype = buffer_types[outer]

--> [330](/torch/_inductor/codegen/common.py?line=329) cpp_dtype = DTYPE_TO_CPP[dtype]

[331](/torch/_inductor/codegen/common.py?line=330) arg_defs.append(f"const {cpp_dtype}* __restrict__ {inner}")

KeyError: torch.complex128

The above exception was the direct cause of the following exception:

BackendCompilerFailed Traceback (most recent call last)

[/Users/notebooks/test_compile.py](https://file+.vscode-resource.vscode-cdn.net/Users/notebooks/test_compile.py) in line 9

[7](/notebooks/test_compile.py?line=6) X = torch.zeros(10, dtype=torch.complex128)

[8](/notebooks/test_compile.py?line=7) Y = torch.zeros(10, dtype=torch.complex128)

----> [9](/notebooks/test_compile.py?line=8) foo(X, Y)

File [~/torch/_dynamo/eval_frame.py:209](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/eval_frame.py:209), in _TorchDynamoContext.__call__.._fn(*args, **kwargs)

[207](/torch/_dynamo/eval_frame.py?line=206) dynamic_ctx.__enter__()

[208](/torch/_dynamo/eval_frame.py?line=207) try:

--> [209](/torch/_dynamo/eval_frame.py?line=208) return fn(*args, **kwargs)

[210](/torch/_dynamo/eval_frame.py?line=209) finally:

[211](/torch/_dynamo/eval_frame.py?line=210) set_eval_frame(prior)

File [~/torch/_dynamo/eval_frame.py:337](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/eval_frame.py:337), in catch_errors_wrapper..catch_errors(frame, cache_size)

[334](/torch/_dynamo/eval_frame.py?line=333) return hijacked_callback(frame, cache_size, hooks)

[336](/torch/_dynamo/eval_frame.py?line=335) with compile_lock:

--> [337](/torch/_dynamo/eval_frame.py?line=336) return callback(frame, cache_size, hooks)

File [~/torch/_dynamo/convert_frame.py:404](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/convert_frame.py:404), in convert_frame.._convert_frame(frame, cache_size, hooks)

[402](/torch/_dynamo/convert_frame.py?line=401) counters["frames"]["total"] += 1

[403](/torch/_dynamo/convert_frame.py?line=402) try:

--> [404](/torch/_dynamo/convert_frame.py?line=403) result = inner_convert(frame, cache_size, hooks)

[405](/torch/_dynamo/convert_frame.py?line=404) counters["frames"]["ok"] += 1

[406](/torch/_dynamo/convert_frame.py?line=405) return result

File [~/torch/_dynamo/convert_frame.py:104](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/convert_frame.py:104), in wrap_convert_context.._fn(*args, **kwargs)

[102](/torch/_dynamo/convert_frame.py?line=101) torch.fx.graph_module._forward_from_src = fx_forward_from_src_skip_result

[103](/torch/_dynamo/convert_frame.py?line=102) try:

--> [104](/torch/_dynamo/convert_frame.py?line=103) return fn(*args, **kwargs)

[105](/torch/_dynamo/convert_frame.py?line=104) finally:

[106](/torch/_dynamo/convert_frame.py?line=105) torch._C._set_grad_enabled(prior_grad_mode)

File [~/torch/_dynamo/convert_frame.py:262](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/convert_frame.py:262), in convert_frame_assert.._convert_frame_assert(frame, cache_size, hooks)

[259](/torch/_dynamo/convert_frame.py?line=258) global initial_grad_state

[260](/torch/_dynamo/convert_frame.py?line=259) initial_grad_state = torch.is_grad_enabled()

--> [262](/torch/_dynamo/convert_frame.py?line=261) return _compile(

[263](/torch/_dynamo/convert_frame.py?line=262) frame.f_code,

[264](/torch/_dynamo/convert_frame.py?line=263) frame.f_globals,

[265](/torch/_dynamo/convert_frame.py?line=264) frame.f_locals,

[266](/torch/_dynamo/convert_frame.py?line=265) frame.f_builtins,

[267](/torch/_dynamo/convert_frame.py?line=266) compiler_fn,

[268](/torch/_dynamo/convert_frame.py?line=267) one_graph,

[269](/torch/_dynamo/convert_frame.py?line=268) export,

[270](/torch/_dynamo/convert_frame.py?line=269) hooks,

[271](/torch/_dynamo/convert_frame.py?line=270) frame,

[272](/torch/_dynamo/convert_frame.py?line=271) )

File [~/torch/_dynamo/utils.py:163](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/utils.py:163), in dynamo_timed..dynamo_timed_inner..time_wrapper(*args, **kwargs)

[161](/torch/_dynamo/utils.py?line=160) compilation_metrics[key] = []

[162](/torch/_dynamo/utils.py?line=161) t0 = time.time()

--> [163](/torch/_dynamo/utils.py?line=162) r = func(*args, **kwargs)

[164](/torch/_dynamo/utils.py?line=163) time_spent = time.time() - t0

[165](/torch/_dynamo/utils.py?line=164) # print(f"Dynamo timer: key={key}, latency={latency:.2f} sec")

File [~/torch/_dynamo/convert_frame.py:324](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/convert_frame.py:324), in _compile(code, globals, locals, builtins, compiler_fn, one_graph, export, hooks, frame)

[322](/torch/_dynamo/convert_frame.py?line=321) for attempt in itertools.count():

[323](/torch/_dynamo/convert_frame.py?line=322) try:

--> [324](/torch/_dynamo/convert_frame.py?line=323) out_code = transform_code_object(code, transform)

[325](/torch/_dynamo/convert_frame.py?line=324) orig_code_map[out_code] = code

[326](/torch/_dynamo/convert_frame.py?line=325) break

File [~/torch/_dynamo/bytecode_transformation.py:445](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/bytecode_transformation.py:445), in transform_code_object(code, transformations, safe)

[442](/torch/_dynamo/bytecode_transformation.py?line=441) instructions = cleaned_instructions(code, safe)

[443](/torch/_dynamo/bytecode_transformation.py?line=442) propagate_line_nums(instructions)

--> [445](/torch/_dynamo/bytecode_transformation.py?line=444) transformations(instructions, code_options)

[446](/torch/_dynamo/bytecode_transformation.py?line=445) return clean_and_assemble_instructions(instructions, keys, code_options)[1]

File [~/torch/_dynamo/convert_frame.py:311](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/convert_frame.py:311), in _compile..transform(instructions, code_options)

[298](/torch/_dynamo/convert_frame.py?line=297) nonlocal output

[299](/torch/_dynamo/convert_frame.py?line=298) tracer = InstructionTranslator(

[300](/torch/_dynamo/convert_frame.py?line=299) instructions,

[301](/torch/_dynamo/convert_frame.py?line=300) code,

(...)

[309](/torch/_dynamo/convert_frame.py?line=308) mutated_closure_cell_contents,

[310](/torch/_dynamo/convert_frame.py?line=309) )

--> [311](/torch/_dynamo/convert_frame.py?line=310) tracer.run()

[312](/torch/_dynamo/convert_frame.py?line=311) output = tracer.output

[313](/torch/_dynamo/convert_frame.py?line=312) assert output is not None

File [~/torch/_dynamo/symbolic_convert.py:1726](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/symbolic_convert.py:1726), in InstructionTranslator.run(self)

[1724](/torch/_dynamo/symbolic_convert.py?line=1723) def run(self):

[1725](/torch/_dynamo/symbolic_convert.py?line=1724) _step_logger()(logging.INFO, f"torchdynamo start tracing {self.f_code.co_name}")

-> [1726](/torch/_dynamo/symbolic_convert.py?line=1725) super().run()

File [~/torch/_dynamo/symbolic_convert.py:576](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/symbolic_convert.py:576), in InstructionTranslatorBase.run(self)

[571](/torch/_dynamo/symbolic_convert.py?line=570) try:

[572](/torch/_dynamo/symbolic_convert.py?line=571) self.output.push_tx(self)

[573](/torch/_dynamo/symbolic_convert.py?line=572) while (

[574](/torch/_dynamo/symbolic_convert.py?line=573) self.instruction_pointer is not None

[575](/torch/_dynamo/symbolic_convert.py?line=574) and not self.output.should_exit

--> [576](/torch/_dynamo/symbolic_convert.py?line=575) and self.step()

[577](/torch/_dynamo/symbolic_convert.py?line=576) ):

[578](/torch/_dynamo/symbolic_convert.py?line=577) pass

[579](/torch/_dynamo/symbolic_convert.py?line=578) except BackendCompilerFailed:

File [~/torch/_dynamo/symbolic_convert.py:540](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/symbolic_convert.py:540), in InstructionTranslatorBase.step(self)

[538](/torch/_dynamo/symbolic_convert.py?line=537) if not hasattr(self, inst.opname):

[539](/torch/_dynamo/symbolic_convert.py?line=538) unimplemented(f"missing: {inst.opname}")

--> [540](/torch/_dynamo/symbolic_convert.py?line=539) getattr(self, inst.opname)(inst)

[542](/torch/_dynamo/symbolic_convert.py?line=541) return inst.opname != "RETURN_VALUE"

[543](/torch/_dynamo/symbolic_convert.py?line=542) except BackendCompilerFailed:

File [~/torch/_dynamo/symbolic_convert.py:1792](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/symbolic_convert.py:1792), in InstructionTranslator.RETURN_VALUE(self, inst)

[1787](/torch/_dynamo/symbolic_convert.py?line=1786) _step_logger()(

[1788](/torch/_dynamo/symbolic_convert.py?line=1787) logging.INFO,

[1789](/torch/_dynamo/symbolic_convert.py?line=1788) f"torchdynamo done tracing {self.f_code.co_name} (RETURN_VALUE)",

[1790](/torch/_dynamo/symbolic_convert.py?line=1789) )

[1791](/torch/_dynamo/symbolic_convert.py?line=1790) log.debug("RETURN_VALUE triggered compile")

-> [1792](/torch/_dynamo/symbolic_convert.py?line=1791) self.output.compile_subgraph(

[1793](/torch/_dynamo/symbolic_convert.py?line=1792) self, reason=GraphCompileReason("return_value", [self.frame_summary()])

[1794](/torch/_dynamo/symbolic_convert.py?line=1793) )

[1795](/torch/_dynamo/symbolic_convert.py?line=1794) self.output.add_output_instructions([create_instruction("RETURN_VALUE")])

File [~/torch/_dynamo/output_graph.py:517](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/output_graph.py:517), in OutputGraph.compile_subgraph(self, tx, partial_convert, reason)

[503](/torch/_dynamo/output_graph.py?line=502) self.add_output_instructions(random_calls_instructions)

[505](/torch/_dynamo/output_graph.py?line=504) if (

[506](/torch/_dynamo/output_graph.py?line=505) stack_values

[507](/torch/_dynamo/output_graph.py?line=506) and all(

(...)

[514](/torch/_dynamo/output_graph.py?line=513)

[515](/torch/_dynamo/output_graph.py?line=514) # optimization to generate better code in a common case

[516](/torch/_dynamo/output_graph.py?line=515) self.add_output_instructions(

--> [517](/torch/_dynamo/output_graph.py?line=516) self.compile_and_call_fx_graph(tx, list(reversed(stack_values)), root)

[518](/torch/_dynamo/output_graph.py?line=517) + [create_instruction("UNPACK_SEQUENCE", len(stack_values))]

[519](/torch/_dynamo/output_graph.py?line=518) )

[520](/torch/_dynamo/output_graph.py?line=519) else:

[521](/torch/_dynamo/output_graph.py?line=520) graph_output_var = self.new_var("graph_out")

File [~/torch/_dynamo/output_graph.py:588](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/output_graph.py:588), in OutputGraph.compile_and_call_fx_graph(self, tx, rv, root)

[586](/torch/_dynamo/output_graph.py?line=585) assert_no_fake_params_or_buffers(gm)

[587](/torch/_dynamo/output_graph.py?line=586) with tracing(self.tracing_context):

--> [588](/torch/_dynamo/output_graph.py?line=587) compiled_fn = self.call_user_compiler(gm)

[589](/torch/_dynamo/output_graph.py?line=588) compiled_fn = disable(compiled_fn)

[591](/torch/_dynamo/output_graph.py?line=590) counters["stats"]["unique_graphs"] += 1

File [~/torch/_dynamo/utils.py:163](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/utils.py:163), in dynamo_timed..dynamo_timed_inner..time_wrapper(*args, **kwargs)

[161](/torch/_dynamo/utils.py?line=160) compilation_metrics[key] = []

[162](/torch/_dynamo/utils.py?line=161) t0 = time.time()

--> [163](/torch/_dynamo/utils.py?line=162) r = func(*args, **kwargs)

[164](/torch/_dynamo/utils.py?line=163) time_spent = time.time() - t0

[165](/torch/_dynamo/utils.py?line=164) # print(f"Dynamo timer: key={key}, latency={latency:.2f} sec")

File [~/torch/_dynamo/output_graph.py:675](https://file+.vscode-resource.vscode-cdn.net/Users/~/torch/_dynamo/output_graph.py:675), in OutputGraph.call_user_compiler(self, gm)

[673](/torch/_dynamo/output_graph.py?line=672) except Exception as e:

[674](/torch/_dynamo/output_graph.py?line=673) compiled_fn = gm.forward

--> [675](/torch/_dynamo/output_graph.py?line=674) raise BackendCompilerFailed(self.compiler_fn, e) from e

[676](/torch/_dynamo/output_graph.py?line=675) return compiled_fn

BackendCompilerFailed: debug_wrapper raised KeyError: torch.complex128

Set torch._dynamo.config.verbose=True for more information

You can suppress this exception and fall back to eager by setting:

torch._dynamo.config.suppress_errors = True

```

### Minified repro

_No response_

### Versions

PyTorch version: 2.0.0

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 13.2.1 (x86_64)

GCC version: Could not collect

Clang version: 14.0.0 (clang-1400.0.29.202)

CMake version: Could not collect

Libc version: N/A

Python version: 3.9.16 (main, Jan 11 2023, 10:02:19) [Clang 14.0.6 ] (64-bit runtime)

Python platform: macOS-10.16-x86_64-i386-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Intel(R) Core(TM) i7-9750H CPU @ 2.60GHz

Versions of relevant libraries:

[pip3] flake8==6.0.0

[pip3] mypy-extensions==1.0.0

[pip3] numpy==1.24.2

[pip3] pytorch-lightning==2.0.0

[pip3] torch==2.0.0

[pip3] torchaudio==2.0.1

[pip3] torchcde==0.2.5

[pip3] torchdiffeq==0.2.3

[pip3] torchmetrics==0.11.4

[pip3] torchqdynamics==0.1.0

[pip3] torchsde==0.2.5

[pip3] torchvision==0.15.1

[conda] blas 1.0 mkl

[conda] mkl 2021.4.0 hecd8cb5_637

[conda] mkl-service 2.4.0 py39h9ed2024_0

[conda] mkl_fft 1.3.1 py39h4ab4a9b_0

[conda] mkl_random 1.2.2 py39hb2f4e1b_0

[conda] numpy 1.24.2 pypi_0 pypi

[conda] pytorch-lightning 2.0.0 pypi_0 pypi

[conda] torch 2.0.0 pypi_0 pypi

[conda] torchaudio 2.0.1 pypi_0 pypi

[conda] torchcde 0.2.5 pypi_0 pypi

[conda] torchdiffeq 0.2.3 pypi_0 pypi

[conda] torchmetrics 0.11.4 pypi_0 pypi

[conda] torchqdynamics 0.1.0 pypi_0 pypi

[conda] torchsde 0.2.5 pypi_0 pypi

[conda] torchvision 0.15.1 pypi_0 pypi

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @peterbell10 @desertfire

| 22 |

3,022 | 98,142 |

double free or corruption (fasttop)

|

needs reproduction, triaged

|

### 🐛 Describe the bug

pytoch 2.0

conda install pytorch torchvision torchaudio cpuonly -c pytorch

Based on C ++ custom class, TORCH TENSOR as the inherent attribute, when the program executes the completion of the object degeneration, there is an error **Double Free or Corruption (Fasttop)**. Theoretically TORCH Tensor memory is managed and released by TORCH itself.

torch::TensorOptions options(torch::kFloat32);

if (is_train) { options.requires_grad(); }

if (normal) {

auto gen_opt = c10::optional<at::Generator>();

value_tensor = torch::normal(mean, std, {1, dim_size}, gen_opt, options = torch::kFloat32);

} else {

value_tensor = torch::rand({1, dim_size}, options = options);

}

// torch::DeviceType device = torch::DeviceType::CPU

value_tensor.to(torch::kCPU);

valgrind log:

```

==1989799== 8 bytes in 1 blocks are still reachable in loss record 299 of 3,796

==1989799== at 0x483DF0F: operator new(unsigned long) (vg_replace_malloc.c:434)

==1989799== by 0x9398B29: char const* c10::demangle_type<torch::jit::SRNativeOperatorFunctor_prim_TupleIndex>() (in /App/conda/envs/conda_xmake_cpu/lib/python3.9/site-packages/torch/lib/libtorch_cpu.so)

==1989799== by 0x5C55771: __static_initialization_and_destruction_0(int, int) [clone .constprop.0] (in /App/conda/envs/conda_xmake_cpu/lib/python3.9/site-packages/torch/lib/libtorch_cpu.so)

==1989799== by 0x4011B99: call_init.part.0 (dl-init.c:72)