Serial Number

int64 1

6k

| Issue Number

int64 75.6k

112k

| Title

stringlengths 3

357

| Labels

stringlengths 3

241

⌀ | Body

stringlengths 9

74.5k

⌀ | Comments

int64 0

867

|

|---|---|---|---|---|---|

4,801 | 84,681 |

JIT will affect the gradient computation of forward mode

|

oncall: jit

|

### 🐛 Describe the bug

JIT will affect the gradient computation of forward mode for some API

For example, if we directly call `jit_fn` with forward mode, it will output the correct gradient:

```py

import torch

def fn(input, target):

weight = None

reduction = "mean"

return torch.nn.functional.multilabel_soft_margin_loss(input, target, weight=weight, reduction=reduction, )

input = torch.tensor([[1., 1.]], dtype=torch.float32)

target = torch.tensor([[2., 2.]], dtype=torch.float32)

inputs1 = (input.clone().requires_grad_(), target.clone().requires_grad_())

inputs2 = (input.clone().requires_grad_(), target.clone().requires_grad_())

jit_fn = torch.jit.trace(fn, (input, target))

print(torch.autograd.functional.jacobian(jit_fn, inputs2, vectorize=True, strategy='forward-mode'))

# [tensor([[-0.6345, -0.6345]], grad_fn=<ReshapeAliasBackward0>), tensor([[-0.5000, -0.5000]], grad_fn=<ReshapeAliasBackward0>)

```

However, if we call `jit_fn(*inputs1)` before the gradient computation in forward mode with `inputs2`, the gradient will be all 0, which is wrong

```py

import torch

def fn(input, target):

weight = None

reduction = "mean"

return torch.nn.functional.multilabel_soft_margin_loss(input, target, weight=weight, reduction=reduction, )

input = torch.tensor([[1., 1.]], dtype=torch.float32)

target = torch.tensor([[2., 2.]], dtype=torch.float32)

inputs1 = (input.clone().requires_grad_(), target.clone().requires_grad_())

inputs2 = (input.clone().requires_grad_(), target.clone().requires_grad_())

jit_fn = torch.jit.trace(fn, (input, target))

jit_fn(*inputs1)

print(torch.autograd.functional.jacobian(jit_fn, inputs2, vectorize=True, strategy='forward-mode'))

# [tensor([[0., 0.]]), tensor([[0., 0.]])]

```

Interestingly, this cannot affect the gradient computation in reverse mode.

Many APIs suffer from this problem, like `lp_pool1d`

### Versions

pytorch: 1.12.1

| 2 |

4,802 | 84,673 |

Autograd will take `init` module API into account when using `jit`

|

oncall: jit

|

### 🐛 Describe the bug

As mentioned in doc,

> All the functions in this module (`init`) are intended to be used to initialize neural network parameters, so they all run in :func:`torch.no_grad` mode and will not be taken into account by autograd.

However, autograd will take `init` module API into account when using `jit`.

```py

import torch

def fn(input):

return torch.nn.init.ones_(input)

input = torch.rand([4])

jit_fn = torch.jit.trace(fn, (input.clone(), ))

fn(input.clone().requires_grad_()) # work

jit_fn(input.clone().requires_grad_())

# RuntimeError: a leaf Variable that requires grad is being used in an in-place operation.

```

### Versions

pytorch: 1.12.1

| 2 |

4,803 | 84,661 |

[ONNX] Track non-exportable pattern as diagnostics.

|

module: onnx, triaged, onnx-triaged

|

Issue hub for tracking and organizing patterns to be added as diagnostic rules.

- [ ] [#87738](https://github.com/pytorch/pytorch/issues/87738) Not non-exportable. Convert shape inference warning to diagnostic.

| 0 |

4,804 | 84,652 |

Support FP16 with torch._fake_quantize_learnable_per_channel_affine & torch._fake_quantize_learnable_per_tensor_affine

|

oncall: quantization, triaged

|

### 🚀 The feature, motivation and pitch

The PyTorch operators `torch._fake_quantize_learnable_per_channel_affine` and `torch._fake_quantize_learnable_per_tensor_affine` currently only support `torch.float32` datatypes, while the non-learnable scale operators `torch.fake_quantize_per_channel_affine` and `torch.fake_quantize_per_tensor_affine` support more datatypes (ie torch.float16). This makes using the learnable scale fake quantization operators break some pipelines which use CUDA mixed precision training, and otherwise breaks the consistency between the learnable and non-learnable scale fake quantize operators.

The non-learnable scale fake quantize operators originally only supported `torch.float32` (see [this issue ](https://github.com/pytorch/pytorch/issues/50417) that ran into this problem, and [this issue](https://github.com/pytorch/pytorch/issues/42351) which tracked the implementation of more datatypes). I'd like to know if there is a timeline to expand support for extra datatypes for the learnable scale fake quantization operators as well.

### Alternatives

_No response_

### Additional context

_No response_

cc @jerryzh168 @jianyuh @raghuramank100 @jamesr66a @vkuzo @jgong5 @Xia-Weiwen @leslie-fang-intel

| 2 |

4,805 | 84,646 |

JIT script calculation/dtype inconsistent depending on operator expression

|

oncall: jit

|

### 🐛 Describe the bug

In the following simple snippet, we expect `sum_terms` to be equal to `one_expression` (both have all entries -1), and that is indeed the case in the python interpreter. With the `@torch.jit.script` decorator, that is no longer the case, indicating a bug with the JIT compiler.

```

import torch

@torch.jit.script # code works as expected if decorator is removed

def func(a, b):

term_1 = 1 * (a & b)

term_2 = -1 * (a != b)

sum_terms = term_1 + term_2

one_expression = 1 * (a & b) - 1 * (a != b)

# workaround

# one_expression = (a & b).to(torch.int64) - (a != b).to(torch.int64)

print(sum_terms == one_expression)

a = torch.zeros(10, dtype=bool, device='cuda')

b = torch.ones(10, dtype=bool, device='cuda')

func(a, b)

```

This might have to do with the inconsistent types yielded, `term_1` of `CUDABoolType{10}` whereas `term_2` of `CUDALongType{10}`.

Also in this minimal version, I get the following error. But in my original programme containing identical code I don't--only the values are wrong (all entries 1 instead of -1). I have yet to figure out why the RuntimeError occurs in only one of the two.

```

Traceback (most recent call last):

File "test.py", line 22, in <module>

func(a, b)

RuntimeError: The following operation failed in the TorchScript interpreter.

Traceback of TorchScript (most recent call last):

File "test.py", line 15, in func

1 * (a & b) - 1 * (a != b),

)

one_expression = 1 * (a & b) - 1 * (a != b)

~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

print(sum_terms == one_expression)

RuntimeError: Subtraction, the `-` operator, with two bool tensors is not supported. Use the `^` or `logical_xor()` operator instead.

```

### Versions

```

Collecting environment information...

PyTorch version: 1.12.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.31

Python version: 3.8.10 (default, Jun 22 2022, 20:18:18) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.15.0-46-generic-x86_64-with-glibc2.29

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 2080 Ti

Nvidia driver version: 515.65.01

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.23.1

[pip3] torch==1.12.0

[pip3] torchvision==0.13.0

[conda] Could not collect

```

| 0 |

4,806 | 84,630 |

torch.nn.functional.interpolate fails on some degenerate shapes, but passes on others

|

triaged, module: interpolation

|

Not sure if this can be counted as bug or not. If you have an input with an empty batch dimension, everything works fine

```py

>>> t = torch.rand(0, 3, 16, 16)

>>> interpolate(t, (15, 17))

tensor([], size=(0, 3, 15, 17))

```

If any other dimension is zero, it will throw an error:

```py

>>> t = torch.rand(4, 0, 16, 16)

>>> interpolate(t, (15, 17))

RuntimeError: Non-empty 4D data tensor expected but got a tensor with sizes [4, 0, 16, 16]

>>> t = torch.rand(4, 3, 0, 16)

>>> interpolate(t, (15, 17))

RuntimeError: Input and output sizes should be greater than 0, but got input (H: 0, W: 16) output (H: 15, W: 17)

>>> t = torch.rand(4, 3, 16, 0)

>>> interpolate(t, (15, 17))

RuntimeError: Input and output sizes should be greater than 0, but got input (H: 16, W: 0) output (H: 15, W: 17)

```

The latter two are somewhat understandable. Still, since no interpolation will happen in either case, shouldn't we align the behavior?

| 1 |

4,807 | 84,628 |

INTERNAL ASSERT when the type of argument is not considered in JIT

|

oncall: jit

|

### 🐛 Describe the bug

INTERNAL ASSERT when the type of argument is not considered in JIT. For example, some argument may be fed with boolean, but `torch.jit.trace` doesn't consider such cases and output INTERNAL ASSERT directly

```py

import torch

def fn(input):

return torch.add(input, other=True)

input = torch.tensor([1.])

fn(input) # tensor([2.])

jit_fn = torch.jit.trace(fn, input)

```

```

RuntimeError: 0INTERNAL ASSERT FAILED at "/opt/conda/conda-bld/pytorch_1646756402876/work/torch/csrc/jit/ir/alias_analysis.cpp":607, please report a bug to PyTorch. We don't have an op for aten::add but it isn't a special case. Argument types: Tensor, bool, int,

Candidates:

aten::add.Tensor(Tensor self, Tensor other, *, Scalar alpha=1) -> (Tensor)

aten::add.Scalar(Tensor self, Scalar other, Scalar alpha=1) -> (Tensor)

aten::add.out(Tensor self, Tensor other, *, Scalar alpha=1, Tensor(a!) out) -> (Tensor(a!))

aten::add.t(t[] a, t[] b) -> (t[])

aten::add.str(str a, str b) -> (str)

aten::add.int(int a, int b) -> (int)

aten::add.complex(complex a, complex b) -> (complex)

aten::add.float(float a, float b) -> (float)

aten::add.int_complex(int a, complex b) -> (complex)

aten::add.complex_int(complex a, int b) -> (complex)

aten::add.float_complex(float a, complex b) -> (complex)

aten::add.complex_float(complex a, float b) -> (complex)

aten::add.int_float(int a, float b) -> (float)

aten::add.float_int(float a, int b) -> (float)

aten::add(Scalar a, Scalar b) -> (Scalar)

...

aten::add.complex_float(complex a, float b) -> (complex)

aten::add.int_float(int a, float b) -> (float)

aten::add.float_int(float a, int b) -> (float)

aten::add(Scalar a, Scalar b) -> (Scalar)

```

It seems that many APIs suffer from this issue, such as `ne, le, ge, div, mul, ...`

### Versions

pytorch: 1.12.1

| 2 |

4,808 | 84,625 |

Beta distribution behaves incorrectly for small parameters

|

module: distributions, triaged, module: edge cases

|

### 🐛 Describe the bug

The `Beta(alpha, alpha)` distribution should converge to `Bernoulli(0.5)` as `alpha -> 0`. However, we get a `Dirac(0.5)`, which is what we expect for `alpha -> infty` (last line, correct).

```python

import torch

print("Torch version:", torch.__version__)

torch.manual_seed(0)

beta = torch.distributions.Beta(1e-3, 1e-3)

print("Sample from Beta(1e-6, 1e-6):", beta.sample())

beta = torch.distributions.Beta(1e3, 1e+3)

print("Sample from Beta(1e+3, 1e+3):", beta.sample())

```

Gives:

```

Torch version: 1.12.1

Sample from Beta(1e-6, 1e-6): tensor(0.5000)

Sample from Beta(1e+3, 1e+3): tensor(0.4966)

```

### Versions

```

Collecting environment information...

PyTorch version: 1.12.1

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.5.1 (x86_64)

GCC version: Could not collect

Clang version: 13.1.6 (clang-1316.0.21.2.5)

CMake version: Could not collect

Libc version: N/A

Python version: 3.9.7 (default, Sep 16 2021, 08:50:36) [Clang 10.0.0 ] (64-bit runtime)

Python platform: macOS-10.16-x86_64-i386-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.22.4

[pip3] numpydoc==1.1.0

[pip3] pytorch-ignite==0.4.9

[pip3] torch==1.12.1

[pip3] torchaudio==0.12.1

[pip3] torchmetrics==0.9.3

[pip3] torchvision==0.13.1

[conda] blas 1.0 mkl

[conda] ffmpeg 4.3 h0a44026_0 pytorch

[conda] mkl 2021.4.0 hecd8cb5_637

[conda] mkl-service 2.4.0 py39h9ed2024_0

[conda] mkl_fft 1.3.1 py39h4ab4a9b_0

[conda] mkl_random 1.2.2 py39hb2f4e1b_0

[conda] numpy 1.22.4 pypi_0 pypi

[conda] numpydoc 1.1.0 pyhd3eb1b0_1

[conda] pytorch 1.12.1 py3.9_0 pytorch

[conda] pytorch-ignite 0.4.9 pypi_0 pypi

[conda] torchaudio 0.12.1 py39_cpu pytorch

[conda] torchmetrics 0.9.3 pypi_0 pypi

[conda] torchvision 0.13.1 py39_cpu pytorch

```

cc @fritzo @neerajprad @alicanb @nikitaved

| 1 |

4,809 | 84,620 |

torch.hub.load local model

|

triaged, module: hub

|

### 🐛 Describe the bug

python code:

```

model_name = 'x3d_m'

# model = torch.hub.load('facebookresearch/pytorchvideo', model_name, pretrained=True)

model = torch.hub.load('/Users/kpinfo/.cache/torch/hub/pytorch_vision_master/', model_name, source='local')

```

error:

```

ImportError: cannot import name 'get_model_weights' from 'torchvision.models

```

I have run "model = torch.hub.load('facebookresearch/pytorchvideo', model_name, pretrained=True)" well. But sometimes it will stuck and exit for timeout. I find x3d_m model have load in "/Users/kpinfo/.cache/torch/hub/checkpoints/X3D_M.pyth" , and I want load this local model when I need x3d model next time.

### Versions

Collecting environment information...

PyTorch version: 1.11.0

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 10.15.2 (x86_64)

GCC version: Could not collect

Clang version: 11.0.3 (clang-1103.0.32.62)

CMake version: Could not collect

Libc version: N/A

Python version: 3.7.11 (default, Jul 27 2021, 07:03:16) [Clang 10.0.0 ] (64-bit runtime)

Python platform: Darwin-19.2.0-x86_64-i386-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] efficientnet-pytorch==0.7.1

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.6

[pip3] pytorchvideo==0.1.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] efficientnet-pytorch 0.7.1 pypi_0 pypi

[conda] numpy 1.21.6 pypi_0 pypi

[conda] pytorchvideo 0.1.3 pypi_0 pypi

[conda] torch 1.11.0 pypi_0 pypi

[conda] torchaudio 0.11.0 pypi_0 pypi

[conda] torchvision 0.12.0 pypi_0 pypi

cc @nairbv @NicolasHug @vmoens @jdsgomes

| 1 |

4,810 | 84,616 |

Autogenerated out functions are missing at::cpu:: and co bindings

|

triaged, module: codegen, topic: build

|

### 🐛 Describe the bug

Example: look at `build/aten/src/ATen/ops/prod_cpu_dispatch.h`. On my build it looks like

```

TORCH_API at::Tensor prod(const at::Tensor & self, c10::optional<at::ScalarType> dtype=c10::nullopt);

TORCH_API at::Tensor prod(const at::Tensor & self, int64_t dim, bool keepdim=false, c10::optional<at::ScalarType> dtype=c10::nullopt);

TORCH_API at::Tensor & prod_out(at::Tensor & out, const at::Tensor & self, int64_t dim, bool keepdim=false, c10::optional<at::ScalarType> dtype=c10::nullopt);

TORCH_API at::Tensor & prod_outf(const at::Tensor & self, int64_t dim, bool keepdim, c10::optional<at::ScalarType> dtype, at::Tensor & out);

```

However, notice that there are two functional overloads, but only one `prod_out` overload. We're missing the Tensor, ScalarType overload for out.

This is affecting static runtime.

cc @bhosmer @bdhirsh @d1jang @tenpercent

### Versions

master

| 0 |

4,811 | 84,615 |

Serialize the warmed up torchscript module

|

oncall: jit

|

### 🚀 The feature, motivation and pitch

- JIT and torchscript can provide a huge performance boost for models, but the long warm-up time for large models like stable diffusion unet(~90s) can be a huge blocker for production, even individual use

- If there could be a way of serializing the warmed-up module (even with limitation that needs to be run on specific hardware), would be super helpful

### Alternatives

- Tried to `torch._C._jit_set_profiling_executor(False) eliminates the extra recompilations` but this eliminates performance gain

### Additional context

_No response_

| 1 |

4,812 | 93,661 |

Capture scalar outputs / dynamically sized outputs by default, partition graphs for backends that can't handle it

|

triaged, ezyang's list, oncall: pt2, module: dynamic shapes

|

Capturing scalar outputs improves perf e.g. by capturing the optimizer. Now that the fx subgraph capability matcher has landed, it should be easy for backends which do not support scalars to compile subgraphs even with this config turned on. Additionally, having a config option be off by default means its test coverage is extremely limited.

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 4 |

4,813 | 84,597 |

Accept SymInts and SymFloats For Scalar Inputs

|

triaged

|

### 🚀 The feature, motivation and pitch

When we are a tracing context (ProxyTensor/FakeTensor/TorchDynamo) tensor -> scalar conversions can cause graph breaks because the tracking tensors do not have real data associated with them. This can occur with user invocations: `x.item()` and in implicit Tensor->Scalar conversions when a 0-element tensor is passed in as a `Scalar` argument.

ProxyTensor and FakeTensor do some amount of [constant](https://github.com/pytorch/pytorch/pull/84387) tracking for cases where the value of the 0-element tensor is known statically, but there are other cases where this will occur.

For example, in [adagrad](https://github.com/pytorch/pytorch/blob/master/torch/optim/adagrad.py#L279): `param.addcdiv_(grad, std, value=-clr)`

`addcdiv` has the signature: `addcdiv(Tensor self, Tensor tensor1, Tensor tensor2, *, Scalar value=1) -> Tensor`. When the config capture_scalar_outputs is true, `-clr` will be a 0-element tensor, and the dispatcher call of _local_scalar_dense in converting `-clr` to a scalar will cause a graph break.

If Scalars could contain SymInts/Symfloats, `-clr` could be returned from `_local_scalar_dense` as a SymFloat without graph breaking.

More generally, this might allow us to re-factor the "capture scalar inputs" config to work by tracing SymInts/Symfloats instead of wrapping scalars to tensors, and potentially track other dynamic shape operations that occur from indexing tensor data.

| 0 |

4,814 | 84,593 |

Uneven and/or Dynamically sized collectives

|

good first issue, triaged, module: c10d

|

### 🚀 The feature, motivation and pitch

A recurring question we get is how to handle uneven or dynamic sized collectives.

For example, users want to:

- Scatter tensors of different sizes.

- Dynamically determine broadcast tensor sizes on the source rank.

There are plenty of forum questions on this subject that validate the need for such new API.

In addition to be a constant source of problem for our users, such APIs are particularly difficult to efficiently and correctly implement when using NCCL. This stems from the fact that shape calculation happens on CPU but values must transit in CUDA tensors as NCCL doesn't support CPU tensors.

This device mismatch issue is not trivial and resulted in quite a few bugs in c10d's object collectives.

## The API

Add a `torch.distributed.dynamic` package that supports slower collectives that are significantly flexible and address those concerns.

The overall design of those collectives is that they should not assume perfect uniform knowledge across all ranks.

The module would have variants of broadcast, all_gather, gather, scatter, all_to_all that can handle dynamic and uneven tensor sizes transparently for users.

It's unclear whether we should extend this to reduce collectives as we'd have to deal with the issue of missing data.

### Alternatives

Not implement this module and keep suggesting work-arounds to users.

### Additional context

Data-dependent collective shapes is particularly common with models that employ sparse-computation like Mixture-of-Experts.

| 7 |

4,815 | 84,588 |

torch.jit.script IndentationError: unexpected indent

|

oncall: jit

|

### 🐛 Describe the bug

```

import torch

import torchvision

import torchvision.models as models

import torch.nn as nn

from torch.utils.mobile_optimizer import optimize_for_mobile

class ModifiedResNet18Model(torch.nn.Module):

def __init__(self):

super(ModifiedResNet18Model, self).__init__()

model = models.resnet18(pretrained=True)

modules = list(model.children())[:-1]

model = nn.Sequential(*modules)

self.features = model

for param in self.features.parameters():

param.requires_grad = False

self.fc = nn.Sequential(

nn.Dropout(),

nn.Linear(512,1024),

nn.ReLU(inplace=True),

nn.Dropout(),

nn.Linear(1024,512),

nn.ReLU(inplace=True),

nn.Linear(512, 4))

def forward(self, x):

x = self.features(x)

x = nn.functional.adaptive_avg_pool2d(x, 1).reshape(x.shape[0], -1)

x = self.fc(x)

return x

model = ModifiedResNet18Model()

print(model)

model_scripted = torch.jit.script(model) # throwing the error

opti_model = optimize_for_mobile(model_scripted)

opti_model._save_for_lite_interpreter("resnet4.ptl")

print('Create Model Success')

```

### Versions

Torch Version: 1.11.0+cu113

TorchVision Version: 0.12.0+cu113

**Error**

Traceback (most recent call last):

File "C:\Users\Visionhealth\Desktop\Experiment_3\onlyforpruning\testtorchscript.py", line 37, in <module>

model_scripted = torch.jit.script(model)

File "C:\Users\Visionhealth\AppData\Local\Programs\Python\Python310\lib\site-packages\torch\jit\_script.py", line 1265, in script

return torch.jit._recursive.create_script_module(

File "C:\Users\Visionhealth\AppData\Local\Programs\Python\Python310\lib\site-packages\torch\jit\_recursive.py", line 453, in create_script_module

AttributeTypeIsSupportedChecker().check(nn_module)

File "C:\Users\Visionhealth\AppData\Local\Programs\Python\Python310\lib\site-packages\torch\jit\_check.py", line 74, in check

init_ast = ast.parse(textwrap.dedent(source_lines))

File "C:\Users\Visionhealth\AppData\Local\Programs\Python\Python310\lib\ast.py", line 50, in parse

return compile(source, filename, mode, flags,

File "<unknown>", line 1

def __init__(self):

IndentationError: unexpected indent

| 0 |

4,816 | 84,578 |

module: multiprocessing SimpleQueue put cannot bigger 716 in windows.And it is not has any info.The program is blocked and does not move.

|

module: multiprocessing, triaged

|

### 🐛 Describe the bug

#run the code.It is not has any info,and not work.

#python3.9.13 64-bit windows10 torch1.12.1+cu116

#the last code is connection.py line 205 self._send_bytes(m[offset:offset + size])

from torch import multiprocessing as mp

ctx = mp.get_context("spawn")

free_queue = ctx.SimpleQueue()

full_queue = ctx.SimpleQueue()

for m in range(1536):

print("put data index:",m)

if(m==716):

print("The program is blocked and does not move in 716.")

free_queue.put(m)

print("It is not go end.")

### Versions

Collecting environment information...

PyTorch version: 1.12.1+cu116

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Microsoft Windows 10 家庭中文版

GCC version: (GCC) 5.3.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: N/A

Python version: 3.9.13 (tags/v3.9.13:6de2ca5, May 17 2022, 16:36:42) [MSC v.1929 64 bit (AMD64)] (64-bit runtime)

Python platform: Windows-10-10.0.19044-SP0

Is CUDA available: True

CUDA runtime version: 11.7.64

GPU models and configuration: GPU 0: NVIDIA GeForce GTX 1070

Nvidia driver version: 516.59

cuDNN version: C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.7\bin\cudnn_ops_train64_8.dll

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.2

[pip3] torch==1.12.1+cu116

[pip3] torch-tb-profiler==0.4.0

[pip3] torchaudio==0.12.1+cu116

[pip3] torchvision==0.13.1+cu116

[conda] Could not collect

cc @VitalyFedyunin

| 0 |

4,817 | 84,573 |

Tensor slice copy across multiple devices fails silently

|

triaged, module: advanced indexing

|

### 🐛 Describe the bug

When trying to update certain elements between two tensors with the source on GPU memory and the target on CPU memory, the .copy_ operation fails silently. Below is an example:

```

import torch as pt

dims = (4,5,)

gpu0 = pt.device(0)

# src and tgt matrices...src on gpu0...target on CPU

src = pt.randn(*dims).to(gpu0)

tgt = pt.zeros(dims)

# mask and idxs

mask = src > 0 # sample function...mask on GPU

idxs = mask.nonzero(as_tuple=True) # idxs on GPU as well

# copy elements

tgt[idxs].copy_(src[idxs]) # does not update tgt

```

### Versions

Collecting environment information...

PyTorch version: 1.10.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.6 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: version 3.17.3

Libc version: glibc-2.25

Python version: 3.6.9 (default, Jun 29 2022, 11:45:57) [GCC 8.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-124-generic-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: True

CUDA runtime version: 11.3.109

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3070 Ti

Nvidia driver version: 470.141.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.2.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.2.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.2.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.2.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.2.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.2.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.2.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.19.5

[pip3] torch==1.10.0+cu113

[pip3] torch-scatter==2.0.5

[pip3] torchaudio==0.10.0+cu113

[pip3] torchvision==0.11.1+cu113

[conda] blas 1.0 mkl

[conda] mkl 2020.0 166

[conda] mkl-service 2.3.0 py37he904b0f_0

[conda] mkl_fft 1.0.15 py37ha843d7b_0

[conda] mkl_random 1.1.0 py37hd6b4f25_0

[conda] numpy 1.18.1 py37h4f9e942_0

[conda] numpy-base 1.18.1 py37hde5b4d6_1

[conda] numpydoc 0.9.2 py_0

| 13 |

4,818 | 84,565 |

Tensor Subclass that doesn't require grad may wrap a Tensor subclass that requires grad

|

triaged, tensor subclass

|

From a practical perspective, this only happens today in functorch.

## The Problem

We have some composite operations that check if a Tensor requires grad. If it does, then it goes down one path; if it doesn't, then it goes down a "non-differentiable" path.

In functorch, we can have Tensor subclasses that do not require grad that wrap Tensors that do require grad. Unfortunately, the composite operations check if a Tensor requires grad and return false on the Tensor subclass, causing it to go down a non-differentiable path.

See https://github.com/pytorch/pytorch/pull/84137 for example. This problem is related to composite compliance: https://github.com/pytorch/pytorch/issues/69991

cc @ezyang

| 1 |

4,819 | 84,560 |

[optim] asgd : handling of complex params as real params (NaN vs inf)

|

module: optimizer, triaged, module: edge cases

|

### 🐛 Describe the bug

With patch in https://github.com/pytorch/pytorch/pull/84472

```python

def print_grad(grad):

print("PRINT GRAD HOOK:", grad)

return grad

a1 = torch.tensor([-0.4329+0.3561j, 0.1633+0.4901j], requires_grad=True)

a1_real = a1.real.clone().detach()

a1_imag = a1.imag.clone().detach()

a1_real.requires_grad_()

a1_imag.requires_grad_()

optimizer_constructor = torch.optim.ASGD

# Attach hook

a1.register_hook(print_grad)

a1_real.register_hook(print_grad)

a1_imag.register_hook(print_grad)

optim1 = optimizer_constructor([a1])

optim2 = optimizer_constructor([a1_real, a1_imag])

for i in range(10):

print(f"*****{i}****")

print(a1)

print(a1_real, a1_imag)

optim1.zero_grad()

optim2.zero_grad()

if i == 0:

torch.testing.assert_close(a1.grad, None)

torch.testing.assert_close(a1_real.grad, None)

torch.testing.assert_close(a1_imag.grad, None)

else:

torch.testing.assert_close(a1.grad.real, a1_real.grad, equal_nan=True)

torch.testing.assert_close(a1.grad.imag, a1_imag.grad, equal_nan=True)

a2 = torch.complex(a1_real, a1_imag)

torch.testing.assert_close(a1, a2)

o = f(a1)

o2 = f(a2)

o.backward()

print("GRAD:", a1.grad)

o2.backward()

print("REAL GRAD", a1.grad.real, a1_real.grad)

print("IMAG GRAD", a1.grad.imag, a1_imag.grad)

torch.testing.assert_close(a1.grad.real, a1_real.grad, equal_nan=True) # Fails here (optimizer shouldn't affect this ideally)!

torch.testing.assert_close(a1.grad.imag, a1_imag.grad, equal_nan=True)

optim1.step()

optim2.step()

```

Fails with

```

AssertionError: Tensor-likes are not close!

Mismatched elements: 1 / 2 (50.0%)

Greatest absolute difference: nan at index (1,) (up to 1e-05 allowed)

Greatest relative difference: nan at index (1,) (up to 1.3e-06 allowed)

```

NOTE:

Interestingly value printed by the hook for a1.grad is different from the next print at

`print("REAL GRAD", a1.grad.real, a1_real.grad)`.

cc: @albanD @soulitzer

### Versions

PR : https://github.com/pytorch/pytorch/pull/84472

cc @vincentqb @jbschlosser @albanD

| 0 |

4,820 | 84,550 |

Pytorch does not recognize GPU in WSL2

|

triaged, module: wsl

|

### 🐛 Describe the bug

After install pytorch in Ubuntu 20.4 in WSL2,

conda install pytorch torchvision torchaudio cudatoolkit=11.6 -c pytorch -c conda-forge

pytorch does not recognize GPU:

python3 -c 'import torch; print(torch.cuda.is_available())'

returned False

Similar setup worked on windows environment. Only WSL2 have the problem.

nvidia-smi gave the following output:

Mon Sep 5 14:42:50 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 510.85.02 Driver Version: 516.94 CUDA Version: 11.7 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... On | 00000000:01:00.0 On | N/A |

| 23% 33C P8 11W / 250W | 1281MiB / 11264MiB | 11% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

I tried install and uninstall cuda-toolkit and cuDnn following instructions from nvidia, did not help either.

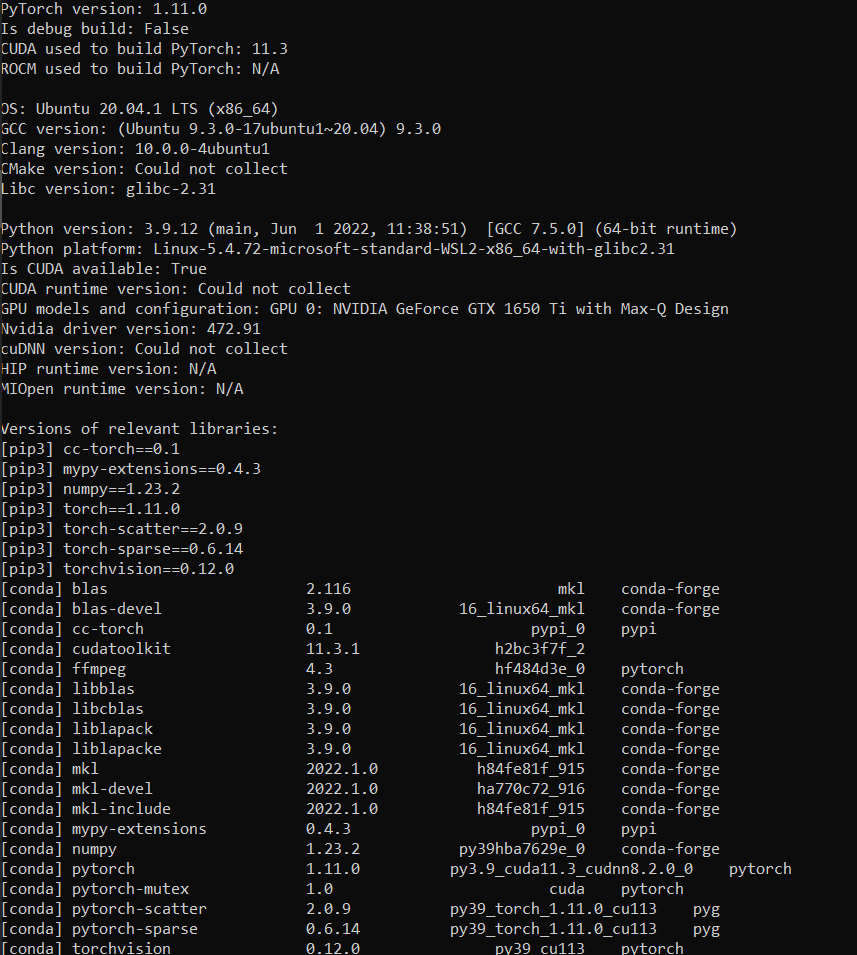

### Versions

Collecting environment information...

PyTorch version: 1.12.1

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.31

Python version: 3.8.5 (default, Sep 4 2020, 07:30:14) [GCC 7.3.0] (64-bit runtime)

Python platform: Linux-5.10.102.1-microsoft-standard-WSL2-x86_64-with-glibc2.10

Is CUDA available: False

CUDA runtime version: 11.6.124

GPU models and configuration: GPU 0: NVIDIA GeForce GTX 1080 Ti

Nvidia driver version: 516.94

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.2

[pip3] pytorch-lightning==1.4.2

[pip3] torch==1.12.1

[pip3] torch-fidelity==0.3.0

[pip3] torchaudio==0.12.1

[pip3] torchmetrics==0.6.0

[pip3] torchvision==0.13.1

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.6.0 hecad31d_10 conda-forge

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py38h7f8727e_0

[conda] mkl_fft 1.3.1 py38hd3c417c_0

[conda] mkl_random 1.2.2 py38h51133e4_0

[conda] numpy 1.23.2 pypi_0 pypi

[conda] numpy-base 1.19.2 py38h4c65ebe_1

[conda] pytorch 1.12.1 py3.8_cuda11.6_cudnn8.3.2_0 pytorch

[conda] pytorch-lightning 1.4.2 pypi_0 pypi

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch-fidelity 0.3.0 pypi_0 pypi

[conda] torchaudio 0.12.1 py38_cu116 pytorch

[conda] torchmetrics 0.6.0 pypi_0 pypi

[conda] torchvision 0.13.1 py38_cu116 pytorch

cc @peterjc123 @mszhanyi @skyline75489 @nbcsm

| 4 |

4,821 | 84,545 |

Add nvfuser support for prims.copy_to

|

oncall: jit, triaged, open source, cla signed, release notes: jit, module: nvfuser, module: primTorch, no-stale

|

I use nvFuser's `aliasOutputToInput` here and since it implicitly adds outputs to the fusion, I need to drop those within Python.

Now we can lower the batch_norm implementation from torch._decomp to nvprims(see `test_batch_norm_forward_nvprims`).

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel @kevinstephano @jjsjann123 @ezyang @mruberry @ngimel @Lezcano @fdrocha @peterbell10

| 8 |

4,822 | 84,539 |

list of tensors can't be converted to a torch tensor while list of lists gets easily converted to a pytorch tensor

|

triaged, module: numpy

|

### 🐛 Describe the bug

```

import torch

import numpy as np

a = [torch.tensor([1,2]),torch.tensor([0.4,0.5]),torch.tensor([1,4])]

torch.tensor(np.array(a))

```

This is going to give type error

While this works correctly

```

a = [[1,2],[0.4,0.5],[1,4]]

torch.tensor(np.array(a))

```

### Versions

PyTorch version: 1.12.1+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.6 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

CMake version: version 3.22.6

Libc version: glibc-2.26

Python version: 3.7.13 (default, Apr 24 2022, 01:04:09) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.188+-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: True

CUDA runtime version: 11.1.105

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 460.32.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.21.6

[pip3] torch==1.12.1+cu113

[pip3] torchaudio==0.12.1+cu113

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.13.1

[pip3] torchvision==0.13.1+cu113

[conda] Could not collect

cc @mruberry @rgommers

| 0 |

4,823 | 84,538 |

OpInfo tests should compare gpu to cpu implementations

|

module: tests, triaged, topic: not user facing

|

I believe that currently we do not have any OpInfo based tests that compare the results of gpu to cpu implementations.

Seems like this would be very useful to have, and shouldn't be too hard to implement.

You could just take the intersection of cpu and gpu dtypes for each OpInfo and compare outputs.

(FYI, I came across a discrepancy between gpu and cpu implementations (of `nn.functional.grid_sample`) which made me think of this. )

cc @mruberry

| 4 |

4,824 | 84,537 |

Minimal example for torch.optim.SparseAdam

|

module: sparse, module: docs, triaged

|

### 📚 The doc issue

Hey,

it is a bit confusing for me and my group for which cases [SparseAdam](https://pytorch.org/docs/stable/generated/torch.optim.SparseAdam.html) can be used:

There is a lone saying

```

Implements lazy version of Adam algorithm suitable for sparse tensors.

```

but it is unclear wether the parameters are allowed to be sparse (With sparse parameters, it throws the error

```

ValueError: Sparse params at indices [0]: SparseAdam requires dense parameter tensors

```

Could you provide more details and a minimal example?

Thank you

### Suggest a potential alternative/fix

Give a minimal example of how to use this optimizer.

cc @nikitaved @pearu @cpuhrsch @amjames @bhosmer @svekars @holly1238

| 1 |

4,825 | 84,530 |

`tensordot` not working for dtype int32 and lower when there is only 1 element in the given axis

|

triaged, module: linear algebra, actionable, bug

|

### 🐛 Describe the bug

`tensordot` seems to be failing when there is only one element in the given axis and dtype is `int32` or lower. This happens when providing explicit lists of dimensions for `a` and `b`.

```

import torch

x = torch.randint(1, 10, ([1, 2, 3]), dtype=torch.int32)

axis = 0

torch.tensordot(x, x, dims=([axis], [axis]))

```

with the following error:

`RuntimeError: expected scalar type Long but found Int`

But it works completely fine with `int64` and float dtypes. It also works fine for `int32` but only when there are multiple elements in the given axis. For instance, the following works fine:

```

x = torch.randint(1, 10, ([2, 2, 3]), dtype=torch.int32)

axis = 0

torch.tensordot(x, x, dims=([axis], [axis]))

```

### Versions

```

PyTorch version: 1.12.1+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.6 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

CMake version: version 3.22.6

Libc version: glibc-2.26

Python version: 3.7.13 (default, Apr 24 2022, 01:04:09) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.188+-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: True

CUDA runtime version: 11.1.105

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 460.32.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.21.6

[pip3] torch==1.12.1+cu113

[pip3] torchaudio==0.12.1+cu113

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.13.1

[pip3] torchvision==0.13.1+cu113

[conda] Could not collect

```

cc @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano

| 0 |

4,826 | 84,529 |

test_prims.py:test_nvfuser_no_args_cuda, memory leak

|

triaged, module: primTorch

|

### 🐛 Describe the bug

```py

2022-09-02T16:37:13.3046215Z ERROR [0.094s]: test_nvfuser_no_args_cuda (__main__.TestPrimsCUDA)

2022-09-02T16:37:13.3046792Z ----------------------------------------------------------------------

2022-09-02T16:37:13.3047015Z Traceback (most recent call last):

2022-09-02T16:37:13.3047567Z File "/opt/conda/lib/python3.10/site-packages/torch/testing/_internal/common_utils.py", line 1940, in wrapper

2022-09-02T16:37:13.3048068Z method(*args, **kwargs)

2022-09-02T16:37:13.3048458Z File "/opt/conda/lib/python3.10/site-packages/torch/testing/_internal/common_utils.py", line 1939, in wrapper

2022-09-02T16:37:13.3049178Z with policy():

2022-09-02T16:37:13.3049613Z File "/opt/conda/lib/python3.10/site-packages/torch/testing/_internal/common_utils.py", line 1530, in __exit__

2022-09-02T16:37:13.3050013Z raise RuntimeError(msg)

2022-09-02T16:37:13.3050584Z RuntimeError: CUDA driver API confirmed a leak in __main__.TestPrimsCUDA.test_nvfuser_no_args_cuda! Caching allocator allocated memory was 2048 and is now reported as 2560 on device 0. CUDA driver allocated memory was 520880128 and is now 522977280.

```

### Versions

The test is added in https://github.com/pytorch/pytorch/pull/84416.

cc @ezyang @mruberry @ngimel

| 0 |

4,827 | 84,524 |

nn.Softmax should not allow default/implicit/unset dim constructor argument

|

module: nn, triaged, needs research, module: deprecation

|

### 🐛 Describe the bug

Originally discussed in https://github.com/pytorch/pytorch/issues/84290#issuecomment-1232133690, more links to related comments / issues in this cited comment

The F.softmax / softmin / log_softmax have implicit dim support deprecated since a few years ago, so nn.Softmax and friends nn.LogSoftmax / nn.Softmin should also remove this support for reducing possible confusion

cc @albanD @mruberry @jbschlosser @walterddr @kshitij12345 @saketh-are @alband @jerryzh168

### Versions

N/A

| 6 |

4,828 | 84,523 |

Issue with MPS ops lead to make_grid broken with mps device Tensors, whole grid is the 'first' image

|

triaged, module: mps

|

### 🐛 Describe the bug

This is a upstreaming of the following bug in torch vision that I've been asked to dos due to the lack of access to MPS hardware.

https://github.com/pytorch/vision/issues/6533

### 🐛 Describe the bug

When calling make_grid on Tensor and List of Tensors where the device in 'mps' it returns a grid where all the Tensors are the same.

Simple example

create a venv and activate

cd into the venv directory

install the nightlies

pip3 install --pre torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/nightly/cpu

unzip the attached zip file, it contains a python script and two images.

validate the python script and run it.

```

from PIL import Image

from torchvision.utils import make_grid

import torchvision.transforms as transforms

import torch

img1 = Image.open('00035.png')

img2 = Image.open('00036.png')

transform = transforms.Compose([

transforms.PILToTensor()

])

t_img1 = transform(img1).to(torch.device("mps"), dtype=torch.float32) / 256.0

t_img2 = transform(img2).to(torch.device("mps"), dtype=torch.float32) / 256.0

grid = make_grid([t_img1, t_img2], nrow=1)

gridImage = transforms.ToPILImage()(grid.cpu());

gridImage.save('mps_grid.png')

t_img1 = transform(img1).to(torch.device("cpu"), dtype=torch.float32) / 256.0

t_img2 = transform(img2).to(torch.device("cpu"), dtype=torch.float32) / 256.0

grid = make_grid([t_img1, t_img2], nrow=1)

gridImage = transforms.ToPILImage()(grid.cpu());

gridImage.save('cpu_grid.png')

```

It generate two images, you'll see the cpu_grid image are the two images vertically stacked, the mps_grid image has the same image vertically stacked twice.

[make_grid_bug.zip](https://github.com/pytorch/vision/files/9473078/make_grid_bug.zip)

### Versions

PyTorch version: 1.13.0.dev20220901

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.5.1 (arm64)

GCC version: Could not collect

Clang version: 13.1.6 (clang-1316.0.21.2.5)

CMake version: version 3.22.4

Libc version: N/A

Python version: 3.10.4 (main, May 10 2022, 03:52:14) [Clang 13.0.0 (clang-1300.0.29.30)] (64-bit runtime)

Python platform: macOS-12.5.1-arm64-arm-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.2

[pip3] torch==1.13.0.dev20220901

[pip3] torchaudio==0.13.0.dev20220901

[pip3] torchvision==0.14.0.dev20220901

[conda] Could not collect

cc @kulinseth @albanD

| 4 |

4,829 | 84,520 |

MPS backend appears to be limited to 32 bits

|

triaged, module: mps

|

### 🐛 Describe the bug

Create a large job for MPS to work on and we hit an inbuilt limit that bombs out with...

/AppleInternal/Library/BuildRoots/20d6c351-ee94-11ec-bcaf-7247572f23b4/Library/Caches/com.apple.xbs/Sources/MetalPerformanceShaders/MPSCore/Types/MPSNDArray.mm:705: failed assertion `[MPSTemporaryNDArray initWithDevice:descriptor:] Error: product of dimension sizes > 2**31'

This only occurs on MPS, I can easily run larger jobs on Cuda

### Versions

Not overly helpful...

Collecting environment information...

Traceback (most recent call last):

File "/Users/ec2-user/Library/Lartis/collect_env.py", line 492, in <module>

main()

File "/Users/ec2-user/Library/Lartis/collect_env.py", line 475, in main

output = get_pretty_env_info()

File "/Users/ec2-user/Library/Lartis/collect_env.py", line 470, in get_pretty_env_info

return pretty_str(get_env_info())

File "/Users/ec2-user/Library/Lartis/collect_env.py", line 319, in get_env_info

pip_version, pip_list_output = get_pip_packages(run_lambda)

File "/Users/ec2-user/Library/Lartis/collect_env.py", line 301, in get_pip_packages

out = run_with_pip(sys.executable + ' -mpip')

File "/Users/ec2-user/Library/Lartis/collect_env.py", line 289, in run_with_pip

for line in out.splitlines()

AttributeError: 'NoneType' object has no attribute 'splitlines'

cc @kulinseth @albanD

| 6 |

4,830 | 84,515 |

Torch.FX work with autograd.Function

|

triaged, module: fx, fx

|

### 🚀 The feature, motivation and pitch

Dear fx guys,

Making Torch.FX work with `autograd.Function` is important when transform or fuse custom implemented operators, there's someone else meet the same problem at https://discuss.pytorch.org/t/how-can-torch-fx-work-with-autograd-function/145922.

For now, Torch.JIT can trace `autograd.Function` like this: `%x : Float(a, b, c, strides=[a, b, c], requires_grad=1, device=cpu) = ^CustomFunction()(...)`.

While Torch.FX trying to call the custom function with proxied `fx.Proxy` parameters, that causes errors.

### Alternatives

_No response_

### Additional context

_No response_

cc @ezyang @SherlockNoMad @soumith

| 0 |

4,831 | 84,510 |

[NVFuser] RuntimeError: ref_id_it != replayed_concrete_ids_.vector().end() INTERNAL ASSERT FAILED

|

triaged, module: assert failure, module: nvfuser

|

### 🐛 Describe the bug

```python

# debug_aev_nvfuser_minimal.py

import torch

torch._C._jit_set_nvfuser_single_node_mode(True)

torch._C._debug_set_autodiff_subgraph_inlining(False)

torch.manual_seed(0)

def func(x, y, z):

return (x + y)**z

func_script = torch.jit.script(func)

x = torch.rand([3, 1, 1, 1, 1], device="cuda").requires_grad_()

y = torch.rand([1, 1, 1, 4], device="cuda")

z = torch.rand([1, 1, 1, 1], device="cuda")

for i in range(10):

res = func(x, y, z)

grad = torch.autograd.grad(res, x, torch.ones_like(res))[0]

res_script = func_script(x, y, z)

grad_script = torch.autograd.grad(res_script, x, torch.ones_like(res))[0]

print(f"{i}: max_result_error {(res_script-res).abs().max()}, max_grad_error {(grad_script-grad).abs().max()}")

```

Run with

```

PYTORCH_NVFUSER_DISABLE=fallback PYTORCH_JIT_LOG_LEVEL=">partition:graph_fuser:>>kernel_cache" python debug_aev_nvfuser_minimal.py

```

error message:

```

[DEBUG kernel_cache.cpp:638] GraphCache constructor: 0x7fb774056cc0

[DUMP kernel_cache.cpp:639] GraphCache created for graph

[DUMP kernel_cache.cpp:639] graph(%0 : Float(3, 1, 1, 1, 4, strides=[4, 4, 4, 4, 1], requires_grad=0, device=cuda:0),

[DUMP kernel_cache.cpp:639] %1 : Float(3, 1, 1, 1, 4, strides=[4, 4, 4, 4, 1], requires_grad=0, device=cuda:0),

[DUMP kernel_cache.cpp:639] %2 : Float(1, 1, 1, 1, strides=[1, 1, 1, 1], requires_grad=0, device=cuda:0),

[DUMP kernel_cache.cpp:639] %3 : Float(3, 1, 1, 1, 4, strides=[4, 4, 4, 4, 1], requires_grad=0, device=cuda:0)):

[DUMP kernel_cache.cpp:639] %4 : int[] = prim::Constant[value=[3, 1, 1, 1, 1]]()

[DUMP kernel_cache.cpp:639] %5 : int = prim::Constant[value=1]() # <string>:240:94

[DUMP kernel_cache.cpp:639] %6 : float = prim::Constant[value=0.]() # <string>:240:52

[DUMP kernel_cache.cpp:639] %7 : Bool(1, 1, 1, 1, strides=[1, 1, 1, 1], requires_grad=0, device=cuda:0) = aten::eq(%2, %6) # <string>:240:40

[DUMP kernel_cache.cpp:639] %8 : Float(3, 1, 1, 1, 4, strides=[4, 4, 4, 4, 1], requires_grad=0, device=cuda:0) = aten::mul(%3, %2) # <string>:240:98

[DUMP kernel_cache.cpp:639] %9 : Float(1, 1, 1, 1, strides=[1, 1, 1, 1], requires_grad=0, device=cuda:0) = aten::sub(%2, %5, %5) # <string>:240:139

[DUMP kernel_cache.cpp:639] %10 : Float(3, 1, 1, 1, 4, strides=[4, 4, 4, 4, 1], requires_grad=0, device=cuda:0) = aten::pow(%1, %9) # <string>:240:123

[DUMP kernel_cache.cpp:639] %11 : Float(3, 1, 1, 1, 4, strides=[4, 4, 4, 4, 1], requires_grad=0, device=cuda:0) = aten::mul(%8, %10) # <string>:240:98

[DUMP kernel_cache.cpp:639] %12 : Float(3, 1, 1, 1, 4, strides=[4, 4, 4, 4, 1], requires_grad=0, device=cuda:0) = aten::where(%7, %0, %11) # <string>:240:28

[DUMP kernel_cache.cpp:639] %grad_self.20 : Float(3, 1, 1, 1, 1, strides=[1, 1, 1, 1, 1], requires_grad=0, device=cuda:0) = aten::_grad_sum_to_size(%12, %4) # <string>:13:29

[DUMP kernel_cache.cpp:639] return (%grad_self.20)

[DEBUG kernel_cache.cpp:647] running GraphCache: 0x7fb774056cc0

Traceback (most recent call last):

File "debug_aev_nvfuser_minimal.py", line 23, in <module>

grad_script = torch.autograd.grad(res_script, x, torch.ones_like(res))[0]

File "/home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/autograd/__init__.py", line 294, in grad

return Variable._execution_engine.run_backward( # Calls into the C++ engine to run the backward pass

RuntimeError: The following operation failed in the TorchScript interpreter.

Traceback of TorchScript (most recent call last):

RuntimeError: ref_id_it != replayed_concrete_ids_.vector().end() INTERNAL ASSERT FAILED at "/opt/conda/conda-bld/pytorch_1662103173222/work/torch/csrc/jit/codegen/cuda/lower_index_compute.cpp":724, please report a bug to PyTorch. Could not find required iter domain in reference replay: bblockIdx.y214{( 1 * ( 1 * 1 ) )}

ref_id_it != replayed_concrete_ids_.vector().end() INTERNAL ASSERT FAILED at "/opt/conda/conda-bld/pytorch_1662103173222/work/torch/csrc/jit/codegen/cuda/lower_index_compute.cpp":724, please report a bug to PyTorch. Could not find required iter domain in reference replay: bblockIdx.y214{( 1 * ( 1 * 1 ) )}

Exception raised from constructLoopDomains at /opt/conda/conda-bld/pytorch_1662103173222/work/torch/csrc/jit/codegen/cuda/lower_index_compute.cpp:724 (most recent call first):

frame #0: c10::Error::Error(c10::SourceLocation, std::string) + 0x57 (0x7fd528ba9577 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libc10.so)

frame #1: c10::detail::torchCheckFail(char const*, char const*, unsigned int, std::string const&) + 0x64 (0x7fd528b77e2c in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libc10.so)

frame #2: c10::detail::torchInternalAssertFail(char const*, char const*, unsigned int, char const*, std::string const&) + 0x3f (0x7fd528ba749f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libc10.so)

frame #3: <unknown function> + 0x2f5c6aa (0x7fd52bb566aa in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #4: <unknown function> + 0x2f5df57 (0x7fd52bb57f57 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #5: <unknown function> + 0x2f5e263 (0x7fd52bb58263 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #6: <unknown function> + 0x2f48370 (0x7fd52bb42370 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #7: <unknown function> + 0x2f4e569 (0x7fd52bb48569 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #8: <unknown function> + 0x2f4e692 (0x7fd52bb48692 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #9: torch::jit::fuser::cuda::IndexLowering::handle(torch::jit::fuser::cuda::BinaryOp const*) + 0x21 (0x7fd52bbd7301 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #10: torch::jit::fuser::cuda::IndexLowering::handle(torch::jit::fuser::cuda::kir::IfThenElse const*) + 0xc0 (0x7fd52bbd6e90 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #11: torch::jit::fuser::cuda::IndexLowering::handle(torch::jit::fuser::cuda::kir::ForLoop const*) + 0xdf (0x7fd52bbd815f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #12: torch::jit::fuser::cuda::IndexLowering::handle(torch::jit::fuser::cuda::kir::IfThenElse const*) + 0xc0 (0x7fd52bbd6e90 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #13: torch::jit::fuser::cuda::IndexLowering::handle(torch::jit::fuser::cuda::kir::ForLoop const*) + 0xdf (0x7fd52bbd815f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #14: torch::jit::fuser::cuda::IndexLowering::handle(torch::jit::fuser::cuda::kir::ForLoop const*) + 0xdf (0x7fd52bbd815f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #15: torch::jit::fuser::cuda::IndexLowering::handle(torch::jit::fuser::cuda::kir::ForLoop const*) + 0xdf (0x7fd52bbd815f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #16: torch::jit::fuser::cuda::IndexLowering::handle(torch::jit::fuser::cuda::kir::ForLoop const*) + 0xdf (0x7fd52bbd815f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #17: torch::jit::fuser::cuda::IndexLowering::generate(std::vector<torch::jit::fuser::cuda::Expr*, std::allocator<torch::jit::fuser::cuda::Expr*> > const&) + 0x27 (0x7fd52bbd6ca7 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #18: torch::jit::fuser::cuda::GpuLower::lower(torch::jit::fuser::cuda::Fusion*, torch::jit::fuser::cuda::DataType) + 0x13c7 (0x7fd52bc276e7 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #19: torch::jit::fuser::cuda::FusionExecutor::compileFusion(torch::jit::fuser::cuda::Fusion*, c10::ArrayRef<c10::IValue> const&, torch::jit::fuser::cuda::LaunchParams const&, torch::jit::fuser::cuda::CompileOptions) + 0xcc1 (0x7fd52baea111 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #20: torch::jit::fuser::cuda::FusionKernelRuntime::runKernelWithInput(c10::ArrayRef<c10::IValue> const&, unsigned long, torch::jit::fuser::cuda::SegmentedGroup*) + 0x591 (0x7fd52bba7421 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #21: torch::jit::fuser::cuda::FusionKernelRuntime::runWithInput(c10::ArrayRef<c10::IValue> const&, unsigned long) + 0x4ff (0x7fd52bba908f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #22: torch::jit::fuser::cuda::FusionExecutorCache::runFusionWithInputs(c10::ArrayRef<c10::IValue> const&) + 0x375 (0x7fd52bbab915 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #23: <unknown function> + 0x2fb1c8f (0x7fd52bbabc8f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #24: <unknown function> + 0x302ffa8 (0x7fd52bc29fa8 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #25: torch::jit::fuser::cuda::runCudaFusionGroup(torch::jit::Node const*, std::vector<c10::IValue, std::allocator<c10::IValue> >&) + 0x43c (0x7fd52bc2a7fc in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cuda_cu.so)

frame #26: <unknown function> + 0x443fef2 (0x7fd55c8f7ef2 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so)

frame #27: torch::jit::InterpreterState::run(std::vector<c10::IValue, std::allocator<c10::IValue> >&) + 0x3f (0x7fd55c8e407f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so)

frame #28: <unknown function> + 0x441c61a (0x7fd55c8d461a in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so)

frame #29: <unknown function> + 0x441f4f6 (0x7fd55c8d74f6 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so)

frame #30: <unknown function> + 0x406051b (0x7fd55c51851b in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so)

frame #31: torch::autograd::Engine::evaluate_function(std::shared_ptr<torch::autograd::GraphTask>&, torch::autograd::Node*, torch::autograd::InputBuffer&, std::shared_ptr<torch::autograd::ReadyQueue> const&) + 0x1638 (0x7fd55c511c28 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so)

frame #32: torch::autograd::Engine::thread_main(std::shared_ptr<torch::autograd::GraphTask> const&) + 0x698 (0x7fd55c512798 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so)

frame #33: torch::autograd::Engine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) + 0x8b (0x7fd55c509b3b in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_cpu.so)

frame #34: torch::autograd::python::PythonEngine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) + 0x4f (0x7fd56ce42d4f in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/libtorch_python.so)

frame #35: <unknown function> + 0xdbbf4 (0x7fd57f9d8bf4 in /home/richard/program/anaconda3/envs/torch_nightly/lib/python3.8/site-packages/torch/lib/../../../../libstdc++.so.6)

frame #36: <unknown function> + 0x8609 (0x7fd5a0383609 in /lib/x86_64-linux-gnu/libpthread.so.0)

frame #37: clone + 0x43 (0x7fd5a02a8133 in /lib/x86_64-linux-gnu/libc.so.6)

```

cc @ngimel @jjsjann123 @zasdfgbnm

### Versions

the latest pytorch nightly

```

PyTorch version: 1.13.0.dev20220902

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.5 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.13 (default, Mar 28 2022, 11:38:47) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.0-125-generic-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: 11.3.109

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3080

Nvidia driver version: 510.85.02

cuDNN version: /usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.1

[pip3] torch==1.13.0.dev20220902

[pip3] torchani==2.3.dev174+gbe932233.d20220903

[pip3] torchaudio==0.13.0.dev20220902

[pip3] torchvision==0.14.0.dev20220902

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 h2bc3f7f_2

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py38h7f8727e_0

[conda] mkl_fft 1.3.1 py38hd3c417c_0

[conda] mkl_random 1.2.2 py38h51133e4_0

[conda] numpy 1.23.1 py38h6c91a56_0

[conda] numpy-base 1.23.1 py38ha15fc14_0

[conda] pytorch 1.13.0.dev20220902 py3.8_cuda11.3_cudnn8.3.2_0 pytorch-nightly

[conda] pytorch-mutex 1.0 cuda pytorch-nightly

[conda] torchani 2.3.dev174+gbe932233.d20220903 pypi_0 pypi

[conda] torchaudio 0.13.0.dev20220902 py38_cu113 pytorch-nightly

[conda] torchvision 0.14.0.dev20220902 py38_cu113 pytorch-nightly

```

| 6 |

4,832 | 84,495 |

functionalize: Does not compose cleanly with torch.jit.script/torch.jit.trace

|

oncall: jit, module: functionalization

|

### 🐛 Describe the bug

```python

import torch

from functorch.experimental import functionalize

class Net(torch.nn.Module):

def __init__(self):

super().__init__()

self.fc0 = torch.nn.Linear(12, 17)

def forward(self, x):

return self.fc0(x).relu_()

net = Net()

input = torch.randn([1, 12])

# These work.

torch.jit.script(net)

torch.jit.trace(net, input)

# Both of these fail with different errors (see below).

#torch.jit.script(functionalize(net))

#torch.jit.trace(functionalize(net), input)

```

For script the error is:

```

TypeError: module, class, method, function, traceback, frame, or code object was expected, got Net

```

([full traceback](https://gist.github.com/silvasean/6cfa2510a010c4f8b07721b96caa4509))

For trace the error is:

```

RuntimeError: Cannot insert a Tensor that requires grad as a constant. Consider making it a parameter or input, or detaching the gradient

```

([full traceback](https://gist.github.com/silvasean/833df0af01bdf489529713d4684e30ca))

### Versions

torch 1.13.0.dev20220830+cpu

cc @bdhirsh @ezyang @soumith

| 3 |

4,833 | 84,491 |

Embedding scale_grad_by_freq should probably shrink by sqrt(count)

|

triaged, module: embedding, oncall: pt2

|

Given the analysis and experiments in "Frequency-aware SGD for Efficient Embedding Learning with Provable Benefits" (https://openreview.net/pdf?id=ibqTBNfJmi), it would seem that it would be better for `scale_grad_by_freq` to divide by sqrt(count) rather than count.

https://github.com/pytorch/pytorch/blob/0a07488ed2c47765e337e290bd138c0e6e459cbd/aten/src/ATen/native/Embedding.cpp#L133

Also, what is the reason for initializing the counter to zeros, then incrementing by 1 [here](https://github.com/pytorch/pytorch/blob/0a07488ed2c47765e337e290bd138c0e6e459cbd/aten/src/ATen/native/Embedding.cpp#L120), rather than initializing to 1?

cc @ezyang @soumith

| 1 |

4,834 | 84,489 |

For PyTorch Nightly, failure when changing MPS device to CPU after PYTORCH_ENABLE_MPS_FALLBACK occurs.

|

triaged, module: mps

|

### 🐛 Describe the bug

When trying to generate text with a GPT-2 from the transformers library, I get this error:

NotImplementedError: The operator 'aten::cumsum.out' is not current implemented for the MPS device. If you want this op to be added in priority during the prototype phase of this feature, please comment on https://github.com/pytorch/pytorch/issues/77764. As a temporary fix, you can set the environment variable `PYTORCH_ENABLE_MPS_FALLBACK=1` to use the CPU as a fallback for this op. WARNING: this will be slower than running natively on MPS.

So I activated the environment variable (I set it in the terminal, because it didn't work with the version in the following code), but afterwards another error occurs (I posted it after the code used). I need to mention that if I only use CPU from the start, the generation works without problems.

```python

# Sample code to reproduce the problem

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

import os

os.environ['PYTORCH_ENABLE_MPS_FALLBACK'] = "1"

device = torch.device('mps' if torch.backends.mps.is_available() else 'cpu')

tokenizer = AutoTokenizer.from_pretrained('readerbench/RoGPT2-base')

model = AutoModelForCausalLM.from_pretrained('readerbench/RoGPT2-base').to(device)

inputs = tokenizer('Salut priete', return_tensors='pt').to(device)

generation = model.generate(inputs['input_ids'], max_length = len(inputs['input_ids'][0]) + 10, no_repeat_ngram_size=2, num_beams=5, early_stopping=True, num_return_sequences=1)

```

```

The error message you got, with the full traceback.

Traceback (most recent call last):

File "/Users/alexandrudima/home/Research/test.py", line 15, in <module>

generation = model.generate(inputs['input_ids'], max_length = len(inputs['input_ids'][0]) + 10, no_repeat_ngram_size=2, num_beams=5, early_stopping=True, num_return_sequences=1)[0][len(inputs['input_ids'][0]):]

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/transformers/generation_utils.py", line 1386, in generate

return self.beam_search(

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/transformers/generation_utils.py", line 2232, in beam_search

model_inputs = self.prepare_inputs_for_generation(input_ids, **model_kwargs)

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/transformers/models/gpt2/modeling_gpt2.py", line 1016, in prepare_inputs_for_generation

position_ids = attention_mask.long().cumsum(-1) - 1

NotImplementedError: The operator 'aten::cumsum.out' is not current implemented for the MPS device. If you want this op to be added in priority during the prototype phase of this feature, please comment on https://github.com/pytorch/pytorch/issues/77764. As a temporary fix, you can set the environment variable `PYTORCH_ENABLE_MPS_FALLBACK=1` to use the CPU as a fallback for this op. WARNING: this will be slower than running natively on MPS.

(ml) alexandrudima@Alex-MacBook Research % PYTORCH_ENABLE_MPS_FALLBACK=1 python test.py

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

tensor([[23640, 344, 3205]], device='mps:0') tensor([23640, 344, 3205], device='mps:0')

The attention mask and the pad token id were not set. As a consequence, you may observe unexpected behavior. Please pass your input's `attention_mask` to obtain reliable results.

Setting `pad_token_id` to `eos_token_id`:0 for open-end generation.

/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/transformers/models/gpt2/modeling_gpt2.py:1016: UserWarning: The operator 'aten::cumsum.out' is not currently supported on the MPS backend and will fall back to run on the CPU. This may have performance implications. (Triggered internally at /Users/runner/work/_temp/anaconda/conda-bld/pytorch_1661929883516/work/aten/src/ATen/mps/MPSFallback.mm:11.)

position_ids = attention_mask.long().cumsum(-1) - 1

Traceback (most recent call last):

File "/Users/alexandrudima/home/Research/test.py", line 15, in <module>

generation = model.generate(inputs['input_ids'], max_length = len(inputs['input_ids'][0]) + 10, no_repeat_ngram_size=2, num_beams=5, early_stopping=True, num_return_sequences=1)[0][len(inputs['input_ids'][0]):]

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/transformers/generation_utils.py", line 1386, in generate

return self.beam_search(

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/transformers/generation_utils.py", line 2253, in beam_search

next_token_scores_processed = logits_processor(input_ids, next_token_scores)

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/transformers/generation_logits_process.py", line 92, in __call__

scores = processor(input_ids, scores)

File "/opt/homebrew/Caskroom/miniforge/base/envs/ml/lib/python3.9/site-packages/transformers/generation_logits_process.py", line 333, in __call__

scores[i, banned_tokens] = -float("inf")

RuntimeError: dst_.nbytes() >= dst_byte_offset INTERNAL ASSERT FAILED at "/Users/runner/work/_temp/anaconda/conda-bld/pytorch_1661929883516/work/aten/src/ATen/native/mps/operations/Copy.mm":184, please report a bug to PyTorch.

```

### Versions

Collecting environment information...

PyTorch version: 1.13.0.dev20220831

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.5.1 (arm64)

GCC version: Could not collect

Clang version: 13.1.6 (clang-1316.0.21.2.5)

CMake version: Could not collect

Libc version: N/A

Python version: 3.9.13 | packaged by conda-forge | (main, May 27 2022, 17:00:33) [Clang 13.0.1 ] (64-bit runtime)

Python platform: macOS-12.5.1-arm64-arm-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.4

[pip3] pytorch-lightning==1.5.8

[pip3] torch==1.13.0.dev20220831

[pip3] torchaudio==0.13.0.dev20220831

[pip3] torchmetrics==0.9.3

[pip3] torchvision==0.14.0.dev20220831

[conda] numpy 1.22.4 py39h7df2422_0 conda-forge

[conda] pytorch 1.13.0.dev20220831 py3.9_0 pytorch-nightly

[conda] pytorch-lightning 1.5.8 pyhd8ed1ab_0 conda-forge

[conda] torchaudio 0.13.0.dev20220831 py39_cpu pytorch-nightly

[conda] torchmetrics 0.9.3 pyhd8ed1ab_0 conda-forge

[conda] torchvision 0.14.0.dev20220831 py39_cpu pytorch-nightly

cc @kulinseth @albanD

| 1 |

4,835 | 84,487 |

A little improvement to torch.nn.ReflectionPad2d

|

triaged, module: padding, oncall: pt2

|

### 🚀 The feature, motivation and pitch

A little improvement, so little that I think it does not deserve to post about my intended feature and later discuss the design and implementation. Probably you can consider this little extension directly.

torch.nn.ReflectionPad2d is limited to pad to a new size less than or equal to the double of the original size.

I want to propose a new MultipleReflectionPad2d without this limitation.

```

class MultipleReflectionPad2d():

def __init__(self, out_height, out_width):

self.out_height = out_height

self.out_width = out_width

def __call__(self, image):

height, width = image.shape[-2:]

while height<self.out_height or width<self.out_width:

new_height = self.out_height

new_width = self.out_width

if new_height > 2*height : new_height = 2*height

if new_width > 2*width : new_width = 2*width

padding_top = (new_height-height)//2

padding_bottom = new_height-height-padding_top

padding_left = (new_width-width)//2

padding_right = new_width-width-padding_left

padder = torch.nn.ReflectionPad2d((padding_left, padding_right, padding_top, padding_bottom))

image = padder(image)

height, width = image.shape[-2:]

return image

```

### Alternatives

May be you can rewrite ReflectionPad2d without this limitation

### Additional context

_No response_

cc @ezyang @soumith

| 1 |

4,836 | 84,473 |

Install LibTorch by Conan or other C++ package manager

|

module: cpp, triaged, topic: binaries

|

### 🚀 The feature, motivation and pitch

At present, There is no detailed guide for how to install LibTorch or construct the environment. The official tutorial does not show where should I put the downloaded library, or how to write a correct `CMakeList.txt` file.

### Alternatives

I find out that there are some good C++ package managers like [Conan](https://conan.io). It is kind of like `pip` and `conda` for Python. It would be easier to install and use LibTorch if we can access it by a C++ package manager, say [Conan](https://conan.io).

### Additional context

_No response_

cc @jbschlosser

| 0 |

4,837 | 84,468 |

[c10d] Support a public API to retrieve default process group

|

oncall: distributed, feature, triaged, pt_distributed_rampup

|

### 🚀 The feature, motivation and pitch