Serial Number

int64 1

6k

| Issue Number

int64 75.6k

112k

| Title

stringlengths 3

357

| Labels

stringlengths 3

241

⌀ | Body

stringlengths 9

74.5k

⌀ | Comments

int64 0

867

|

|---|---|---|---|---|---|

4,901 | 83,968 |

Python3 Depletes 2021 M1 Mac Memory Running Training Ops For Model's M, L and X

|

triaged, module: macos

|

### 🐛 Describe the bug

Hi Admin exec/s,

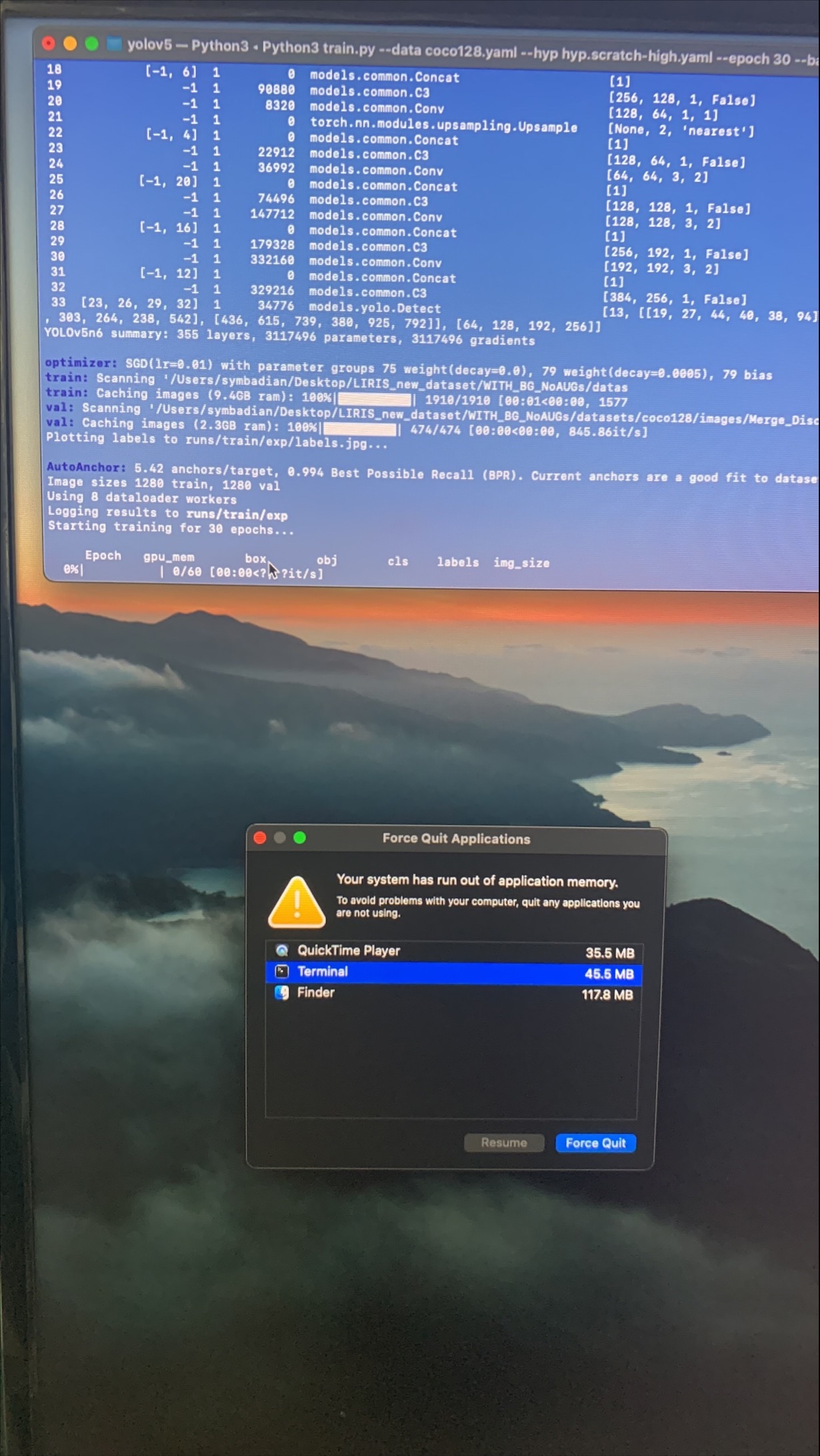

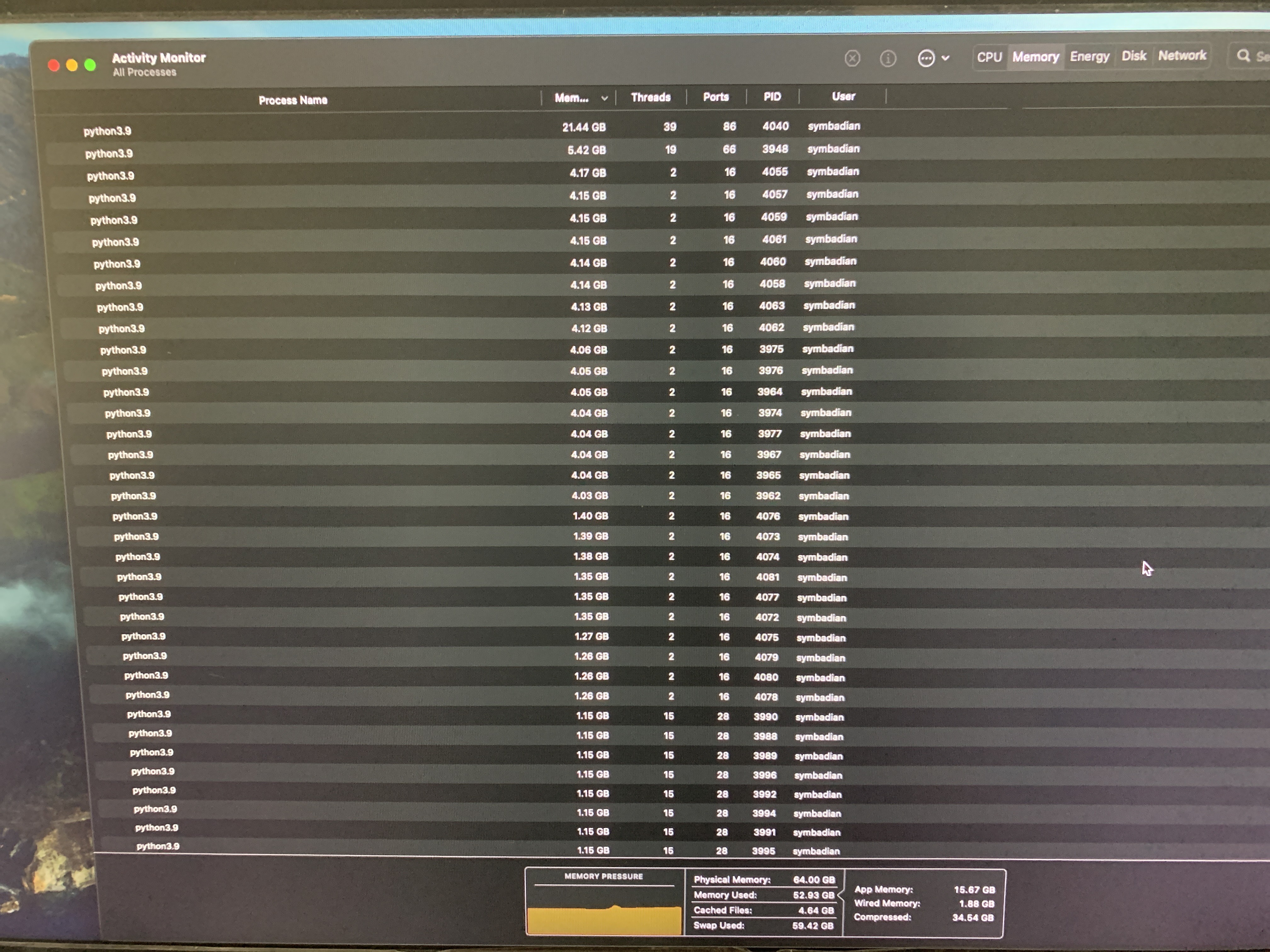

I am experiencing a strange issue where Python3 is hogging all of the resources while running the training operations for the yolov5 model's M, L and X. It is pointless to deploy the m6 models, for examples yolov5m6.pt or .ymal, l6, x6.

My research lead me to initialise the GPU/MPS on the silicon MacBook Pro 2021 14" MAX 32 cores. But this proved futile as the training will not allow the GPU to be initialised, so I followed PyTorch's nightly installation and that was futile as well.

Though it was not allowing the gpu/mps processing, somehow the yolov5m.pt model was able to work as well as from scratch, but not the larger models. This (m) took 4 days to train on the minuscule batch and epoch sizes, it took approximately 2hrs+ for 1 epoch iteration cycle.

Everyday the initialisation of the Training Operations is constantly prompting that yolov5 is out of date and I have to pull the necessary files to get the process to work as it should based on my knowledge.

What I did was:

1. Create an environment ML

2. Then install the requirements as per the instructions from @Glenn-Jocher downloaded in the folder of files from the clone process.

3. Install packages as prompted that required upgrading and then initialise the run commands below:

Packages:

```

Package Version

----------------------- --------------------

absl-py 1.2.0

appnope 0.1.3

asttokens 2.0.8

backcall 0.2.0

cachetools 5.2.0

certifi 2022.6.15

charset-normalizer 2.1.1

coremltools 5.2.0

cycler 0.11.0

decorator 5.1.1

executing 0.10.0

fonttools 4.36.0

google-auth 2.11.0

google-auth-oauthlib 0.4.6

grpcio 1.47.0

idna 3.3

ipython 8.4.0

jedi 0.18.1

kiwisolver 1.4.4

Markdown 3.4.1

MarkupSafe 2.1.1

matplotlib 3.5.3

matplotlib-inline 0.1.6

mpmath 1.2.1

natsort 8.1.0

numpy 1.23.2

oauthlib 3.2.0

opencv-python 4.6.0.66

packaging 21.3

pandas 1.4.3

parso 0.8.3

pexpect 4.8.0

pickleshare 0.7.5

Pillow 9.2.0

pip 22.2.2

prompt-toolkit 3.0.30

protobuf 3.19.4

psutil 5.9.1

ptyprocess 0.7.0

pure-eval 0.2.2

pyasn1 0.4.8

pyasn1-modules 0.2.8

Pygments 2.13.0

pyparsing 3.0.9

python-dateutil 2.8.2

pytz 2022.2.1

PyYAML 6.0

requests 2.28.1

requests-oauthlib 1.3.1

rsa 4.9

scipy 1.9.0

seaborn 0.11.2

setuptools 63.2.0

six 1.16.0

stack-data 0.4.0

sympy 1.10.1

tensorboard 2.10.0

tensorboard-data-server 0.6.1

tensorboard-plugin-wit 1.8.1

thop 0.1.1.post2207130030

torch 1.12.1

torchaudio 0.12.1

torchvision 0.13.1

tqdm 4.64.0

traitlets 5.3.0

typing_extensions 4.3.0

urllib3 1.26.11

wcwidth 0.2.5

Werkzeug 2.2.2

wheel 0.37.1

```

Initialisation Code: For Both M and M6 Models

I swapped between 412, 640 and 1280 image sizes to reduce the drag on resources. Then I tried increasing and decreasing the batch size from 4-10-16-32 and 64. I also tried --hyp low, med, high but this created more drag and nothing worked, see the images below:

COMMANDS:

1. Python3 train.py --data coco128.yaml --epoch 30 --batch 32 --weights yolov5m6.pt --img 640 --cache

2. Python3 train.py --data coco128.yaml --epoch 30 --batch 32 --weights yolov5m.pt --img 640 --cache

All additional unnecessary APPS via Activity Monitor WERE STOPPED(Shut Down)

Image of issue:

THEN, I also Tried Initialising the MPS as it was installed but a different set of errors persist.

1. Python3 train.py --data coco128.yaml --epoch 30 --batch 32 --weights yolov5m6.pt --img 640 --cache --device mps

2. Python3 train.py --data coco128.yaml --epoch 30 --batch 32 --weights yolov5m.pt --img 640 --cache --device mps

The larger models is my preference due to heavy dependency on accuracy, it's no use changing to a large model the resources are being depleted and the operations is too slow. I am aware that pytorch is still testing operations with packages and particulars for efficiency via MAC, but this is way to slow especially for a new machine with all the bells and whistles.

I have been stuck at this stage for 2 weeks now and not sure how to proceed, please assist me?

THANX LOADs in advance, I really appreciate you for taking the time to acknowledge my digital presence.

Cheers..

Sym.

### Versions

`Python3 train.py --data coco128.yaml --epoch 30 --batch 32 --weights yolov5x6.pt --img 640 --cache --device mps

train: weights=yolov5x6.pt, cfg=, data=coco128.yaml, hyp=data/hyps/hyp.scratch-low.yaml, epochs=30, batch_size=32, imgsz=640, rect=False, resume=False, nosave=False, noval=False, noautoanchor=False, noplots=False, evolve=None, bucket=, cache=ram, image_weights=False, device=mps, multi_scale=False, single_cls=False, optimizer=SGD, sync_bn=False, workers=8, project=runs/train, name=exp, exist_ok=False, quad=False, cos_lr=False, label_smoothing=0.0, patience=100, freeze=[0], save_period=-1, seed=0, local_rank=-1, entity=None, upload_dataset=False, bbox_interval=-1, artifact_alias=latest

github: up to date with https://github.com/ultralytics/yolov5 ✅

YOLOv5 🚀 v6.2-51-ge6f54c5 Python-3.10.6 torch-1.12.1 MPS

hyperparameters: lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0

Weights & Biases: run 'pip install wandb' to automatically track and visualize YOLOv5 🚀 runs in Weights & Biases

ClearML: run 'pip install clearml' to automatically track, visualize and remotely train YOLOv5 🚀 in ClearML

TensorBoard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/

Downloading https://github.com/ultralytics/yolov5/releases/download/v6.2/yolov5x6.pt to yolov5x6.pt...

ERROR: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:997)>

Re-attempting https://storage.googleapis.com/ultralytics/yolov5/v6.2/yolov5x6.pt to yolov5x6.pt...

############################################################################################################################################################################################################################################ 100.0%

Overriding model.yaml nc=80 with nc=13

from n params module arguments

0 -1 1 8800 models.common.Conv [3, 80, 6, 2, 2]

1 -1 1 115520 models.common.Conv [80, 160, 3, 2]

2 -1 4 309120 models.common.C3 [160, 160, 4]

3 -1 1 461440 models.common.Conv [160, 320, 3, 2]

4 -1 8 2259200 models.common.C3 [320, 320, 8]

5 -1 1 1844480 models.common.Conv [320, 640, 3, 2]

6 -1 12 13125120 models.common.C3 [640, 640, 12]

7 -1 1 5531520 models.common.Conv [640, 960, 3, 2]

8 -1 4 11070720 models.common.C3 [960, 960, 4]

9 -1 1 11061760 models.common.Conv [960, 1280, 3, 2]

10 -1 4 19676160 models.common.C3 [1280, 1280, 4]

11 -1 1 4099840 models.common.SPPF [1280, 1280, 5]

12 -1 1 1230720 models.common.Conv [1280, 960, 1, 1]

13 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

14 [-1, 8] 1 0 models.common.Concat [1]

15 -1 4 11992320 models.common.C3 [1920, 960, 4, False]

16 -1 1 615680 models.common.Conv [960, 640, 1, 1]

17 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

18 [-1, 6] 1 0 models.common.Concat [1]

19 -1 4 5332480 models.common.C3 [1280, 640, 4, False]

20 -1 1 205440 models.common.Conv [640, 320, 1, 1]

21 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

22 [-1, 4] 1 0 models.common.Concat [1]

23 -1 4 1335040 models.common.C3 [640, 320, 4, False]

24 -1 1 922240 models.common.Conv [320, 320, 3, 2]

25 [-1, 20] 1 0 models.common.Concat [1]

26 -1 4 4922880 models.common.C3 [640, 640, 4, False]

27 -1 1 3687680 models.common.Conv [640, 640, 3, 2]

28 [-1, 16] 1 0 models.common.Concat [1]

29 -1 4 11377920 models.common.C3 [1280, 960, 4, False]

30 -1 1 8296320 models.common.Conv [960, 960, 3, 2]

31 [-1, 12] 1 0 models.common.Concat [1]

32 -1 4 20495360 models.common.C3 [1920, 1280, 4, False]

33 [23, 26, 29, 32] 1 173016 models.yolo.Detect [13, [[19, 27, 44, 40, 38, 94], [96, 68, 86, 152, 180, 137], [140, 301, 303, 264, 238, 542], [436, 615, 739, 380, 925, 792]], [320, 640, 960, 1280]]

[W NNPACK.cpp:51] Could not initialize NNPACK! Reason: Unsupported hardware.

Model summary: 733 layers, 140150776 parameters, 140150776 gradients, 209.0 GFLOPs

Transferred 955/963 items from yolov5x6.pt

AMP: checks failed ❌, disabling Automatic Mixed Precision. See https://github.com/ultralytics/yolov5/issues/7908

optimizer: SGD(lr=0.01) with parameter groups 159 weight(decay=0.0), 163 weight(decay=0.0005), 163 bias

train: Scanning '/Users/symbadian/Desktop/LIRIS_new_dataset/WITH_BG_WITHAUGs/datasets/coco128/images/Merge_Discussions-Stab_Knife_Deploy/train/labels.cache' images and labels... 5730 found, 0 missing, 240 empty, 0 corrupt: 100%|██████████| 573

train: Caching images (7.0GB ram): 100%|██████████| 5730/5730 [00:03<00:00, 1739.79it/s]

val: Scanning '/Users/symbadian/Desktop/LIRIS_new_dataset/WITH_BG_WITHAUGs/datasets/coco128/images/Merge_Discussions-Stab_Knife_Deploy/val/labels.cache' images and labels... 474 found, 0 missing, 20 empty, 0 corrupt: 100%|██████████| 474/474 [

val: Caching images (0.6GB ram): 100%|██████████| 474/474 [00:00<00:00, 1035.74it/s]

AutoAnchor: 5.83 anchors/target, 0.972 Best Possible Recall (BPR). Anchors are a poor fit to dataset ⚠️, attempting to improve...

AutoAnchor: WARNING: Extremely small objects found: 64 of 30526 labels are < 3 pixels in size

AutoAnchor: Running kmeans for 12 anchors on 30526 points...

AutoAnchor: Evolving anchors with Genetic Algorithm: fitness = 0.7549: 100%|██████████| 1000/1000 [00:08<00:00, 124.95it/s]

AutoAnchor: thr=0.25: 0.9926 best possible recall, 7.51 anchors past thr

AutoAnchor: n=12, img_size=640, metric_all=0.351/0.756-mean/best, past_thr=0.497-mean: 17,15, 87,77, 198,124, 131,192, 263,176, 162,319, 384,151, 369,272, 260,481, 530,247, 488,420, 582,587

Traceback (most recent call last):

File "/Users/symbadian/Desktop/LIRIS_new_dataset/WITH_BG_WITHAUGs/yolov5/train.py", line 630, in <module>

main(opt)

File "/Users/symbadian/Desktop/LIRIS_new_dataset/WITH_BG_WITHAUGs/yolov5/train.py", line 526, in main

train(opt.hyp, opt, device, callbacks)

File "/Users/symbadian/Desktop/LIRIS_new_dataset/WITH_BG_WITHAUGs/yolov5/train.py", line 222, in train

check_anchors(dataset, model=model, thr=hyp['anchor_t'], imgsz=imgsz) # run AutoAnchor

File "/Users/symbadian/Desktop/LIRIS_new_dataset/WITH_BG_WITHAUGs/yolov5/utils/autoanchor.py", line 58, in check_anchors

anchors = torch.tensor(anchors, device=m.anchors.device).type_as(m.anchors)

TypeError: Cannot convert a MPS Tensor to float64 dtype as the MPS framework doesn't support float64. Please use float32 instead.

(/Users/symbadian/miniforge3/ml) Matthews-MacBook-Pro:yolov5 symbadian$

`

cc @malfet @albanD

| 2 |

4,902 | 83,956 |

[FSDP] Make sharded / unsharded check more robust

|

oncall: distributed, triaged, module: fsdp

|

### 🚀 The feature, motivation and pitch

As per the comment in https://github.com/pytorch/pytorch/pull/83195#discussion_r952937179, we currently check if we are using the local shard for the param to check if a param is sharded or unsharded:

```

p.data.data_ptr() == p._local_shard.data_ptr():

```

This assumes that if we are using the local shard, we already correctly freed the full parameter. This assumption holds true today but if it were to break this would be a silent correctness issue. We should figure out a robust, future-proof approach to checking if we need to reshard a parameter or not.

### Alternatives

_No response_

### Additional context

_No response_

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @ezyang

| 0 |

4,903 | 83,947 |

Are PyTorch Android nightly builds getting automatically published

|

module: ci, triaged, module: android

|

### 🐛 Describe the bug

https://github.com/pytorch/pytorch/pull/83930 kills logic for uploading nightly builds, because apparently it were never used.

Lets track whether we need to publish nightly builds for Android.... And if do not, let's close this issue

Our [documentation](https://pytorch.org/mobile/android/#using-the-nightly-pytorch-android-libraries) claims that Android nightly builds exist, but still references Python-1.8.0 and the last non-trivial update to this page were in Jun 2021: https://github.com/pytorch/pytorch.github.io/commits/site/_mobile/android.md

### Versions

CI

cc @seemethere @pytorch/pytorch-dev-infra

| 0 |

4,904 | 83,941 |

empty_quantized should probably be new_empty_quantized

|

oncall: quantization, triaged

|

### 🐛 Describe the bug

the current empty_quantized takes a Tensor as input, so it matches the semantics of new_empty instead of empty (which does not take Tensor input), we should merge the empty_quantized implementation under new_empty instead

### Versions

master

cc @jianyuh @raghuramank100 @jamesr66a @vkuzo

| 0 |

4,905 | 83,948 |

Add torch nightly builds pipeline for aarch64 linux

|

module: ci, triaged, enhancement, module: arm

|

There are no aarch64_linux nightly wheels here:

https://download.pytorch.org/whl/nightly/cpu/torch_nightly.html

However, I see the wheels are published for [PT1.12.1 release](https://pypi.org/project/torch/#files) on Aug 5th, so, the issue with the nightly builds might be with some infrastructure not the codebase.

cc @seemethere @malfet @pytorch/pytorch-dev-infra

| 5 |

4,906 | 83,932 |

Hitting rate limits for pytorchbot token

|

triaged, module: infra

|

example: https://github.com/pytorch/pytorch/runs/7733767854?check_suite_focus=true

| 1 |

4,907 | 83,931 |

primTorch: support refs and decompositions when ATen and Python disagree

|

triaged, module: primTorch

|

### 🐛 Describe the bug

1. Policy (related): We need to agree on how to handle refs/decomps like `binary_cross_entropy` where the ATen op and the Python frontend have different number/type of arguments. Right now, `binary_cross_entropy` just defines a decomp. Do we want to split the ref/decomp implementations for these (with a shared core/conversion logic) or handle in some other way?

2. Bug: if `register_decomposition` is used with ops like these to define _one_ Python function that's both registered as a ref and a decomp, there are no type signature checks in `register_decomposition` to catch this. So it'll just run and we might not notice unless it breaks for some other reason.

I have a demo branch with this issue here, see the commit message and notes in the topmost commit:

https://github.com/nkaretnikov/pytorch/commits/primtorch-l1-loss-decomp-ref-compat-issue

### Versions

master (e0f2eba93d2804d22cd53ea8c09a479ae546dc7f)

cc @ezyang @mruberry @ngimel

| 1 |

4,908 | 83,929 |

ModuleNotFoundError: No module named 'torch.ao.quantization.experimental'

|

oncall: quantization, triaged

|

### 🐛 Describe the bug

Running 'pytest' gets these errors:

```

ModuleNotFoundError: No module named 'torch.ao.quantization.experimental'

```

https://github.com/facebookresearch/d2go/issues/141

The code likely needs to be changed from:

```

from torch.ao.quantization.experimental.observer import APoTObserver

```

to:

```

from torch.quantization.experimental.observer import APoTObserver

```

in these file:

```

% grep torch.ao.quantization test/quantization/core/experimental/*.py

test/quantization/core/experimental/apot_fx_graph_mode_ptq.py:from torch.ao.quantization.experimental.quantization_helper import (

test/quantization/core/experimental/apot_fx_graph_mode_ptq.py:from torch.ao.quantization.experimental.qconfig import (

test/quantization/core/experimental/apot_fx_graph_mode_qat.py:from torch.ao.quantization.experimental.quantization_helper import (

test/quantization/core/experimental/test_fake_quantize.py:from torch.ao.quantization.experimental.observer import APoTObserver

test/quantization/core/experimental/test_fake_quantize.py:from torch.ao.quantization.experimental.quantizer import quantize_APoT, dequantize_APoT

test/quantization/core/experimental/test_fake_quantize.py:from torch.ao.quantization.experimental.fake_quantize import APoTFakeQuantize

test/quantization/core/experimental/test_fake_quantize.py:from torch.ao.quantization.experimental.fake_quantize_function import fake_quantize_function

test/quantization/core/experimental/test_linear.py:from torch.ao.quantization.experimental.linear import LinearAPoT

test/quantization/core/experimental/test_nonuniform_observer.py:from torch.ao.quantization.experimental.observer import APoTObserver

test/quantization/core/experimental/test_quantized_tensor.py:from torch.ao.quantization.experimental.observer import APoTObserver

test/quantization/core/experimental/test_quantized_tensor.py:from torch.ao.quantization.experimental.quantizer import quantize_APoT

test/quantization/core/experimental/test_quantizer.py:from torch.ao.quantization.observer import MinMaxObserver

test/quantization/core/experimental/test_quantizer.py:from torch.ao.quantization.experimental.observer import APoTObserver

test/quantization/core/experimental/test_quantizer.py:from torch.ao.quantization.experimental.quantizer import APoTQuantizer, quantize_APoT, dequantize_APoT

```

### Versions

```

% python collect_env.py

Collecting environment information...

PyTorch version: N/A

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: macOS 12.5.1 (x86_64)

GCC version: Could not collect

Clang version: 13.1.6 (clang-1316.0.21.2.5)

CMake version: version 3.22.1

Libc version: N/A

Python version: 3.10.4 (main, Mar 31 2022, 03:38:35) [Clang 12.0.0 ] (64-bit runtime)

Python platform: macOS-10.16-x86_64-i386-64bit

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.22.3

[pip3] torch==1.13.0a0+gitb2ddef2

[pip3] torchmetrics==0.9.3

[pip3] torchvision==0.14.0a0+a61e6ef

[conda] blas 1.0 mkl anaconda

[conda] captum 0.5.0 0 pytorch

[conda] mkl 2021.4.0 hecd8cb5_637 anaconda

[conda] mkl-service 2.4.0 py310hca72f7f_0 anaconda

[conda] mkl_fft 1.3.1 py310hf879493_0 anaconda

[conda] mkl_random 1.2.2 py310hc081a56_0 anaconda

[conda] numpy 1.22.3 py310hdcd3fac_0 anaconda

[conda] numpy-base 1.22.3 py310hfd2de13_0 anaconda

[conda] pytorch 1.12.1 py3.10_0 pytorch

[conda] torch 1.13.0a0+git09157c7 pypi_0 pypi

[conda] torchmetrics 0.9.3 pyhd8ed1ab_0 conda-forge

[conda] torchvision 0.14.0a0+a61e6ef pypi_0 pypi

(AI-Feynman) davidlaxer@x86_64-apple-darwin13 pytorch %

```

cc @jerryzh168 @jianyuh @raghuramank100 @jamesr66a @vkuzo

| 5 |

4,909 | 83,923 |

Support primtorch view ops in functionalization

|

triaged, module: viewing and reshaping, module: functionalization, module: primTorch

|

I was starting to look at failing inductor models with `functorch.config.use_functionalize=True` turned on, and one failure that I noticed is:

```

// run this

python benchmarks/timm_models.py --float32 -dcuda --no-skip --training --inductor --use-eval-mode --only=mobilevit_s

// output

return forward_call(*input, **kwargs)

File "<eval_with_key>.4", line 394, in forward

broadcast_in_dim_default = torch.ops.prims.broadcast_in_dim.default(var_default, [256, 256, 1], [0, 1]); var_default = None

File "/scratch/hirsheybar/work/benchmark/pytorch/torch/_ops.py", line 60, in __call__

return self._op(*args, **kwargs or {})

RuntimeError: !schema.hasAnyAliasInfo() INTERNAL ASSERT FAILED at "/scratch/hirsheybar/work/benchmark/pytorch/aten/src/ATen/FunctionalizeFallbackKernel.cpp":30, please report a bug to PyTorch. mutating and aliasing ops should all have codegen'd kernels

```

This error didn't show up in my AOT + eager tests, probably because the inductor backend is choosing to run extra primtorch decompositions. This causes functionalization to see `prims.broadcast_in_dim`, which is a new "view" op that it doesn't know how to handle.

For what it's worth - the code above fails with `use_functionalize=False` turned off today, too, because of some issue's in dynamo' `normalize_ir()` code. So this technically isn't a regression, although it seems like time would be better spent fixing the problem with functionalization instead of fixing dynamo's normalization logic.

Two potential ways to handle this are:

(1) write a custom functionalization kernel for `prims.broadcast_in_dims.default`. We'd need to expose the right C++ API's to python in order to do this

(2) Beef up the functionalization boxed fallback to handle views. This would be very useful, but it's a bit unclear how this would work: for every primtorch view, the boxed fallback needs to know how to map it to a "view inverse" function.

cc @ezyang @mruberry @ngimel

| 9 |

4,910 | 83,914 |

RAM not free when deleting a model in CPU? worse after inference, is there some cache hidden?

|

module: memory usage, triaged

|

### 🐛 Describe the bug

Hi, all,

After deleting a model (e.g. ResNet50) with `del(model)`, the memory does not seem to be releasing.

Worse, memory grows too much after simple inference, in eval mode, without grads : I understand the model needs to keep some infos about tensors during inference for skip connections, but I guess it should not retain anything after this connection, and be back to same state after initialization?

I checked it's not the case for simple modules like Sequentials of Linears etc., so it may be related to special modules.

Not sure if it's a real bug, but I feel like it's not the output we would expect. If I should ask on the Forum, please tell me.

Manual Garbage Collection does not change anything, so i'm confused where the memory goes. Thanks for the answer

first, some quick code to get RAM usage

```python

import os, psutil

import time

def print_memory_usage(prefix):

"""prints current process RAM consumption in Go"""

process = psutil.Process(os.getpid())

print("memory usage ", prefix, process.memory_info().rss / 1e9, "Go")

```

Now the ResNet50 initialization

```python

import torch

from torchvision.models import resnet50

print_memory_usage(prefix="before anything")

model = resnet50(pretrained=True)

model.eval()

for param in model.parameters():

param.requires_grad_(False)

print_memory_usage(prefix="after model init")

del model

print_memory_usage(prefix="after removing model")

```

```

memory usage before anything 0.15622144 Go

memory usage after model init 0.362528768 Go

memory usage after removing model 0.362561536 Go # not released

```

and worse : memory "explosion" after inference in eval mode and grad-free

```python

import torch

from torchvision.models import resnet50

print_memory_usage(prefix="before anything")

model = resnet50(pretrained=True)

model.eval()

for param in model.parameters():

param.requires_grad_(False)

print_memory_usage(prefix="after model init")

input_tensor = torch.rand((100, 3, 128, 128))

print_memory_usage(prefix="after init input")

with torch.no_grad():

model.forward(input_tensor)

print_memory_usage(prefix="after inference")

del model

print_memory_usage(prefix="after removing model")

del input_tensor

print_memory_usage(prefix="after removing input")

```

```

memory usage before anything 0.155877376 Go

memory usage after model init 0.362856448 Go

memory usage after init input 0.382713856 Go

memory usage after inference 1.41819904 Go # outch, where does it comes from, if it's grad-free?

memory usage after removing model 1.294467072 Go # memory not released

memory usage after removing input 1.18964224 Go

```

### Versions

```

Collecting environment information...

PyTorch version: 1.12.0.post2

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.4 (arm64)

GCC version: Could not collect

Clang version: 12.0.5 (clang-1205.0.22.9)

CMake version: Could not collect

Libc version: N/A

Python version: 3.9.2 | packaged by conda-forge | (default, Feb 21 2021, 05:00:30) [Clang 11.0.1 ] (64-bit runtime)

Python platform: macOS-12.4-arm64-arm-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy==0.920

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.2

[pip3] torch==1.12.0.post2

[pip3] torchaudio==0.12.1

[pip3] torchvision==0.11.3

[conda] numpy 1.20.3 pypi_0 pypi

[conda] pytorch 1.12.0 cpu_py39h0768760_2 conda-forge

[conda] torch 1.10.2 pypi_0 pypi

[conda] torchaudio 0.12.1 pypi_0 pypi

[conda] torchvision 0.11.3 pypi_0 pypi

```

| 1 |

4,911 | 83,910 |

Tracking nested tensor functions with backward kernels registered in derivatives.yaml

|

triaged, module: nestedtensor

|

### 🐛 Describe the bug

Context:

Backward formulas for certain nested tensor functions do not work as they call `.sizes()`. As a workaround, we can register formulas specific to the AutogradNestedTensor dispatch key in derivatives.yaml. This should be removed when SymInts for nested tensor sizes are ready for use. This issue serves as a tracker for functions for which we have added this workaround so that we can remove them in the future.

- [ ] [_nested_sum_backward](https://github.com/pytorch/pytorch/pull/82625)

- [ ] [_select_backward](https://github.com/pytorch/pytorch/pull/83875)

### Versions

n/a

cc @cpuhrsch @jbschlosser @bhosmer @drisspg @albanD

| 0 |

4,912 | 83,909 |

Grad strides do not match bucket view strides

|

oncall: distributed, triaged, module: memory format, module: ddp

|

### 🐛 Describe the bug

@mcarilli

Hello,

I am using torch.nn.parallel.DistributedDataParallel and get the following warning:

> Variable._execution_engine.run_backward( # Calls into the C++ engine to run the backward pass

> /\*\*\*\*\*\*\/miniconda3/envs/pym/lib/python3.10/site-packages/torch/autograd/__init__.py:173: UserWarning: Grad strides do not match bucket view strides. This may indicate grad was not created according to the gradient layout contract, or that the param's strides changed since DDP was constructed. This is not an error, but may impair performance.

> grad.sizes() = [300, 100, 1, 1], strides() = [100, 1, 1, 1]

> bucket_view.sizes() = [300, 100, 1, 1], strides() = [100, 1, 100, 100] (Triggered internally at /opt/conda/conda-bld/pytorch_1659484803030/work/torch/csrc/distributed/c10d/reducer.cpp:312.)

I could not write the minimal reproducing code because I can't find what part of the code create this warning.

However, I know that it is due to an operation in my model as the warning does not appear with other models.

But I do not get any warning when running the same code with Apex, so could it be an issue with the native DDP?

### Versions

Collecting environment information...

PyTorch version: 1.12.1

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.3 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.27

Python version: 3.10.6 | packaged by conda-forge | (main, Aug 22 2022, 20:35:26) [GCC 10.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-120-generic-x86_64-with-glibc2.27

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

GPU 2: NVIDIA GeForce RTX 3090

GPU 3: NVIDIA GeForce RTX 3090

GPU 4: NVIDIA GeForce RTX 3090

GPU 5: NVIDIA GeForce RTX 3090

GPU 6: NVIDIA GeForce RTX 3090

GPU 7: NVIDIA GeForce RTX 3090

Nvidia driver version: 515.48.07

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.2

[pip3] torch==1.12.1

[pip3] torchaudio==0.12.1

[pip3] torchvision==0.13.1

[conda] blas 2.116 mkl conda-forge

[conda] blas-devel 3.9.0 16_linux64_mkl conda-forge

[conda] cudatoolkit 11.6.0 hecad31d_10 conda-forge

[conda] libblas 3.9.0 16_linux64_mkl conda-forge

[conda] libcblas 3.9.0 16_linux64_mkl conda-forge

[conda] liblapack 3.9.0 16_linux64_mkl conda-forge

[conda] liblapacke 3.9.0 16_linux64_mkl conda-forge

[conda] mkl 2022.1.0 h84fe81f_915 conda-forge

[conda] mkl-devel 2022.1.0 ha770c72_916 conda-forge

[conda] mkl-include 2022.1.0 h84fe81f_915 conda-forge

[conda] numpy 1.23.2 py310h53a5b5f_0 conda-forge

[conda] pytorch 1.12.1 py3.10_cuda11.6_cudnn8.3.2_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 0.12.1 py310_cu116 pytorch

[conda] torchvision 0.13.1 py310_cu116 pytorch

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @VitalyFedyunin @jamesr66a @ezyang

| 4 |

4,913 | 83,902 |

Bug in batch names with matmul (result tensor has names=('i', 'i', 'k')).

|

triaged, module: named tensor

|

### 🐛 Describe the bug

The following code should fail, as it gives duplicate names in the output.

```

A = t.ones((3,3), names=('i', 'j'))

B = t.ones((3,3,3), names=('i', 'j', 'k'))

print((A@B).names)

```

Instead, it gives duplicated names (which is not allowed e.g. in the named constructors):

```

('i', 'i', 'k')

```

I'm on PyTorch version 1.12.1 on CPU.

### Versions

Collecting environment information...

PyTorch version: 1.12.1

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: CentOS Linux release 7.9.2009 (Core) (x86_64)

GCC version: (GCC) 9.3.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.17

Python version: 3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-3.10.0-1160.45.1.el7.x86_64-x86_64-with-glibc2.17

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] numpydoc==1.2

[pip3] torch==1.12.1

[pip3] torchaudio==0.12.1+cu113

[pip3] torchvision==0.13.1+cu113

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 h2bc3f7f_2

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.5 py39he7a7128_1

[conda] numpy-base 1.21.5 py39hf524024_1

[conda] numpydoc 1.2 pyhd3eb1b0_0

[conda] pytorch 1.12.1 py3.9_cuda11.3_cudnn8.3.2_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 0.12.1+cu113 pypi_0 pypi

[conda] torchvision 0.13.1+cu113 pypi_0 pypi

cc @zou3519

| 3 |

4,914 | 83,901 |

pytorch 1.12.1 Adam Optimizer Malfunction!!!

|

needs reproduction, module: optimizer, triaged

|

If you have a question or would like help and support, please ask at our

[forums](https://discuss.pytorch.org/).

If you are submitting a feature request, please preface the title with [feature request].

If you are submitting a bug report, please fill in the following details.

## Issue description

Provide a short description.

In pytorch 1.12.1, Adam optimization doesn't work well.

I think, It seems that the internal behavior has changed as the version is upgraded, please check

## Code example

Please try to provide a minimal example to repro the bug.

Error messages and stack traces are also helpful.

## System Info

Please copy and paste the output from our

[environment collection script](https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py)

(or fill out the checklist below manually).

You can get the script and run it with:

```

wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

# For security purposes, please check the contents of collect_env.py before running it.

python collect_env.py

```

- PyTorch

- How you installed PyTorch Conda

- Build command you used (if compiling from source):

- OS: window10, Ubuntu 18.04

- PyTorch version: 1.12.1

- Python version: 3.8.0

- CUDA/cuDNN version: Cuda11.3

- GPU models and configuration: gtx1080ti, gtx3070

- GCC version (if compiling from source):

- CMake version:

- Versions of any other relevant libraries:

cc @vincentqb @jbschlosser @albanD

| 1 |

4,915 | 83,884 |

Improve FSDP error msg on wrong attr access

|

oncall: distributed, module: bootcamp, triaged, pt_distributed_rampup, module: fsdp

|

### 🚀 The feature, motivation and pitch

If FSDP does not have an attr, it will dispatch into the contained module to try to get the attribute. However if this fails, the error raised is confusing, since it prints out a msg containing info about the wrapped module not having the attribute, instead of the FSDP module. An example:

```

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/testing/_internal/common_distributed.py", line 622, in run_test

getattr(self, test_name)()

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/testing/_internal/common_distributed.py", line 503, in wrapper

fn()

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/testing/_internal/common_distributed.py", line 145, in wrapper

return func(*args, **kwargs)

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/test/distributed/fsdp/test_fsdp_misc.py", line 150, in test_fsdp_not_all_outputs_used_in_loss

loss.backward()

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/_tensor.py", line 484, in backward

torch.autograd.backward(

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/autograd/__init__.py", line 191, in backward

Variable._execution_engine.run_backward( # Calls into the C++ engine to run the backward pass

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/distributed/fsdp/fully_sharded_data_parallel.py", line 2880, in _post_backward_hook

if self._should_free_full_params():

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/distributed/fsdp/fully_sharded_data_parallel.py", line 3483, in _should_free_full_params

self.sharding_stratagy == ShardingStrategy.FULL_SHARD

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/distributed/fsdp/fully_sharded_data_parallel.py", line 1536, in __getattr__

return getattr(self._fsdp_wrapped_module, name)

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/distributed/fsdp/flatten_params_wrapper.py", line 146, in __getattr__

return getattr(self.module, name) # fall back to the wrapped module

File "/fsx/users/rvarm1/rvarm1/repos/pytorch/torch/nn/modules/module.py", line 1260, in __getattr__

raise AttributeError("'{}' object has no attribute '{}'".format(

AttributeError: 'Linear' object has no attribute 'sharding_stratagy'

```

In this case, we have a typo `sharding_stratagy` but it is harder to debug since the error `AttributeError: 'Linear' object has no attribute 'sharding_stratagy'` is misleading. We should improve this to include information about `FullyShardedDataParallel`.

### Alternatives

_No response_

### Additional context

_No response_

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @ezyang

| 0 |

4,916 | 83,863 |

bfloat16 matmul gives incorrect result on CPU (without mkldnn)

|

module: cpu, triaged, module: bfloat16, module: linear algebra

|

### 🐛 Describe the bug

It seems that Pytorch's default CPU backend does not compute bfloat16 matmul correctly. The expected behavior is observed for certain dimensions only (the outcome is as expected for 256x256x256), but fails for others (1024x1024x1024).

In addition, it seems that the mkl-dnn backend correctly computes the result. However, it's only limited to avx512+ systems only.

```python

import torch

def check_correctness(a: torch.Tensor, b:torch.Tensor, expected: int):

for mkldnn_flag in [True, False]:

with torch.backends.mkldnn.flags(enabled=mkldnn_flag):

c = torch.matmul(a, b)

assert(torch.all(c == expected)), "Incorrect result with\n" \

f"torch.backends.mkldnn.flags(enabled={mkldnn_flag}),\n" \

f"and dtypes: {a.dtype}, {b.dtype}, {c.dtype}\n" \

f"expected: {expected}\n" \

f"got: {c}\n"

val = 1024

a = torch.ones(val, val)

b = torch.ones(val, val)

check_correctness(a, b, expected=val)

a = a.to(torch.bfloat16)

b = b.to(torch.bfloat16)

check_correctness(a, b, expected=val)

```

Executing the above code yields the following message:

```sh

Traceback (most recent call last):

File "test_matmul.py", line 23, in <module>

check_correctness(a, b, expected=val)

File "test_matmul.py", line 7, in check_correctness

assert(torch.all(c == expected)), "Incorrect result with\n" \

AssertionError: Incorrect result with

torch.backends.mkldnn.flags(enabled=False),

and dtypes: torch.bfloat16, torch.bfloat16, torch.bfloat16

expected: 1024

got: tensor([[256., 256., 256., ..., 256., 256., 256.],

[256., 256., 256., ..., 256., 256., 256.],

[256., 256., 256., ..., 256., 256., 256.],

...,

[256., 256., 256., ..., 256., 256., 256.],

[256., 256., 256., ..., 256., 256., 256.],

[256., 256., 256., ..., 256., 256., 256.]], dtype=torch.bfloat16)

```

### Versions

```sh

PyTorch version: 1.12.1+cpu

Is debug build: False

CUDA used to build PyTorch: Could not collect

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 10.3.0-1ubuntu1~20.04) 10.3.0

Clang version: Could not collect

CMake version: version 3.24.1

Libc version: glibc-2.31

Python version: 3.8.10 (default, Jun 22 2022, 20:18:18) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.10.102.1-microsoft-standard-WSL2-x86_64-with-glibc2.29

Is CUDA available: False

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: Quadro P1000

Nvidia driver version: 516.40

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.2

[pip3] torch==1.12.1+cpu

[pip3] torchaudio==0.12.1+cpu

[pip3] torchvision==0.13.1+cpu

[conda] No relevant packages

```

cc @VitalyFedyunin @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano

| 5 |

4,917 | 83,854 |

Pytorch/Nova CI should monitor service outages for major dependencies

|

module: ci, triaged, needs design

|

### 🐛 Describe the bug

Followup after https://github.com/pytorch/vision/issues/6466

There should be a mechanism one can rely to tell whether some of the components CI depends on were experiencing outage at the time CI job were run.

This includes, but not limited:

- https://www.githubstatus.com/

- https://status.circleci.com/

- https://developer.download.nvidia.com

- https://download.pytorch.org

- https://anaconda.org/ and its CDN

- PyPI and its CDN

### Versions

CI

cc @seemethere @malfet @pytorch/pytorch-dev-infra

| 1 |

4,918 | 83,851 |

torch fx cannot trace assert for some cases

|

triaged, fx

|

### 🐛 Describe the bug

torch fx cannot trace assert for some cases.

```

import torch

from torch.fx import Tracer

def test(x):

H, W = x.shape

assert (H, W) == (2, 3), 'haha'

tracer = Tracer()

tracer.trace_asserts = True

graph = tracer.trace(test)

print(graph)

```

It failed for this case.

```

(ai-0401)yinsun@se02ln001:~/tmp/txp$ python test.py

Traceback (most recent call last):

File "test.py", line 10, in <module>

graph = tracer.trace(test)

File "/home/sa/ac-ap-ci/.conda/envs/ai-0401/lib/python3.8/site-packages/torch/fx/_symbolic_trace.py", line 566, in trace

self.create_node('output', 'output', (self.create_arg(fn(*args)),), {},

File "test.py", line 6, in test

assert (H, W) == (2, 3), 'haha'

File "/home/sa/ac-ap-ci/.conda/envs/ai-0401/lib/python3.8/site-packages/torch/fx/proxy.py", line 278, in __bool__

return self.tracer.to_bool(self)

File "/home/sa/ac-ap-ci/.conda/envs/ai-0401/lib/python3.8/site-packages/torch/fx/proxy.py", line 154, in to_bool

raise TraceError('symbolically traced variables cannot be used as inputs to control flow')

torch.fx.proxy.TraceError: symbolically traced variables cannot be used as inputs to control flow

```

### Versions

```

(ai-0401)yinsun@se02ln001:~/tmp/txp$ python collect_env.py

Collecting environment information...

PyTorch version: 1.11.0a0+gitbc2c6ed

Is debug build: False

CUDA used to build PyTorch: 11.4

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.3 LTS (x86_64)

GCC version: (Ubuntu 9.3.0-17ubuntu1~20.04) 9.3.0

Clang version: 14.0.6 (https://github.com/conda-forge/clangdev-feedstock 28f7809e7f4286b203af212a154f5a8327bd6fd6)

CMake version: version 3.19.1

Libc version: glibc-2.31

Python version: 3.8.13 | packaged by conda-forge | (default, Mar 25 2022, 06:04:10) [GCC 10.3.0] (64-bit runtime)

Python platform: Linux-5.4.0-64-generic-x86_64-with-glibc2.10

Is CUDA available: False

CUDA runtime version: 11.4.120

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.19.5

[pip3] pytorch3d==0.6.2

[pip3] torch==1.11.0+bc2c6ed.cuda114.cudnn841.se02s03.ap

[pip3] torch-scatter==2.0.8

[pip3] torch-tb-profiler==0.4.0

[pip3] torchfile==0.1.0

[pip3] torchvision==0.9.0a0+8fb5838

[conda] magma-cuda111 2.5.2 1 pytorch

[conda] mkl 2020.4 h726a3e6_304 conda-forge

[conda] mkl-include 2022.1.0 h84fe81f_915 conda-forge

[conda] numpy 1.19.5 py38h8246c76_3 conda-forge

[conda] pytorch3d 0.6.2 pypi_0 pypi

[conda] torch 1.11.0+bc2c6ed.cuda114.cudnn841.se02s03.ap pypi_0 pypi

[conda] torch-scatter 2.0.8 pypi_0 pypi

[conda] torch-tb-profiler 0.4.0 pypi_0 pypi

[conda] torchfile 0.1.0 pypi_0 pypi

[conda] torchvision 0.9.0a0+8fb5838 pypi_0 pypi

```

cc @ezyang @SherlockNoMad @soumith

| 2 |

4,919 | 83,826 |

test_lazy spuriously fails if LAPACK is not installed

|

module: tests, triaged, module: lazy

|

### 🐛 Describe the bug

should skip if not compiled with lapack

### Versions

master

cc @mruberry

| 0 |

4,920 | 83,824 |

RuntimeError: Interrupted system call when doing distributed training

|

oncall: distributed, module: c10d

|

### 🐛 Describe the bug

When running distributed GPU training, I get the following error:

```

File "train_mae_2d.py", line 120, in train

run_trainer(

File "train_mae_2d.py", line 41, in run_trainer

trainer = make_trainer(

File "/home/ubuntu/video-recommendation/trainer/trainer.py", line 78, in make_trainer

return Trainer(

File "/home/ubuntu/miniconda/envs/video-rec/lib/python3.8/site-packages/composer/trainer/trainer.py", line 781, in __init__

dist.initialize_dist(self._device, datetime.timedelta(seconds=dist_timeout))

File "/home/ubuntu/miniconda/envs/video-rec/lib/python3.8/site-packages/composer/utils/dist.py", line 433, in initialize_dist

dist.init_process_group(device.dist_backend, timeout=timeout)

File "/home/ubuntu/miniconda/envs/video-rec/lib/python3.8/site-packages/torch/distributed/distributed_c10d.py", line 595, in init_process_group

store, rank, world_size = next(rendezvous_iterator)

File "/home/ubuntu/miniconda/envs/video-rec/lib/python3.8/site-packages/torch/distributed/rendezvous.py", line 257, in _env_rendezvous_handler

store = _create_c10d_store(master_addr, master_port, rank, world_size, timeout)

File "/home/ubuntu/miniconda/envs/video-rec/lib/python3.8/site-packages/torch/distributed/rendezvous.py", line 188, in _create_c10d_store

return TCPStore(

RuntimeError: Interrupted system call

```

Is there any way to diagnose what is going on here?

### Versions

Collecting environment information...

PyTorch version: 1.12.0+cu116

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.13 (default, Mar 28 2022, 11:38:47) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.15.0-41-generic-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: 11.6.124

GPU models and configuration:

GPU 0: NVIDIA A100-SXM4-40GB

GPU 1: NVIDIA A100-SXM4-40GB

GPU 2: NVIDIA A100-SXM4-40GB

GPU 3: NVIDIA A100-SXM4-40GB

GPU 4: NVIDIA A100-SXM4-40GB

GPU 5: NVIDIA A100-SXM4-40GB

GPU 6: NVIDIA A100-SXM4-40GB

GPU 7: NVIDIA A100-SXM4-40GB

Nvidia driver version: 510.47.03

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.4

[pip3] pytorch-ranger==0.1.1

[pip3] torch==1.12.0+cu116

[pip3] torch-optimizer==0.1.0

[pip3] torchdata==0.4.0

[pip3] torchmetrics==0.7.3

[pip3] torchvision==0.13.0a0+da3794e

[conda] numpy 1.22.4 pypi_0 pypi

[conda] pytorch-ranger 0.1.1 pypi_0 pypi

[conda] torch 1.12.0+cu116 pypi_0 pypi

[conda] torch-optimizer 0.1.0 pypi_0 pypi

[conda] torchdata 0.4.0 pypi_0 pypi

[conda] torchmetrics 0.7.3 pypi_0 pypi

[conda] torchvision 0.13.0a0+da3794e pypi_0 pypi

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 4 |

4,921 | 93,639 |

Explore TorchInductor optimization pass to reorder kernel bodies

|

triaged, oncall: pt2

|

See pytorch/torchdynamo#934 for more context. We found an example of a 10% performance difference from very similar kernels, where the biggest difference seemed to be instruction ordering.

Interesting, my first guess looking at the two kernels is just the ordering of ops. The loads on the faster kernel are "spread out" while the loads in the slow kernel are "bunched up".

Perhaps we should explore a compiler pass that reorders ops within a kernel.

Our current inductor kernels usually look like:

```

<all of the loads>

<all of the compute>

<all of the stores>

````

When you have indirect loads, it moves those indirect loads into the "compute" section, because they must come after the address computation. Thus allowing that spread out pattern to be generated.

My thinking of doing that ordering was it makes compiler analysis easier for Triton/LLVM. I may have been wrong there... especially if Triton doesn't yet have an instruction reordering pass.

This is just one theory though, we should test it.

_Originally posted by @jansel in https://github.com/pytorch/torchdynamo/issues/934#issuecomment-1221583598_

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 0 |

4,922 | 83,818 |

torch.linalg.eigh crashe for matrices of size 2895×2895 or larger on eigen and M1

|

module: crash, triaged, module: linear algebra, module: m1

|

### 🐛 Describe the bug

### From python

```python

>>> import torch as t

>>> t.linalg.eigh(t.randn([2895, 2895]))

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

RuntimeError: false INTERNAL ASSERT FAILED at "/var/tmp/portage/sci-libs/caffe2-1.12.0/work/pytorch-1.12.0/aten/src/ATen/native/LinearAlgebraUtils.h":288, please report a bug to PyTorch. torch.linalg.eigh: Argument 8 has illegal value. Most certainly there is a bug in the implementation calling the backend library.

>>> t.linalg.eigh(t.randn([2894, 2894]))

torch.return_types.linalg_eigh(

eigenvalues=tensor([-107.5161, -107.0879, -106.6525, ..., 106.2521, 106.6649,

107.0642]),

eigenvectors=tensor([[ 0.0078, -0.0312, 0.0016, ..., -0.0169, 0.0231, -0.0228],

[-0.0116, -0.0156, -0.0480, ..., -0.0078, -0.0399, -0.0170],

[-0.0112, 0.0034, 0.0137, ..., 0.0073, 0.0098, 0.0088],

...,

[ 0.0109, -0.0148, 0.0302, ..., 0.0077, -0.0162, 0.0146],

[-0.0004, -0.0294, 0.0220, ..., -0.0102, -0.0062, 0.0327],

[-0.0262, 0.0164, -0.0376, ..., 0.0289, -0.0080, -0.0037]]))

>>> 2 ** 11.5

2896.309375740099

```

### From C++ interface

Can't get a backtrace with gdb, since `bt` says the program already exited. So it's just a mysterious undebuggable crash, until narrowing it down to a `torch::linalg::eigh` call.

```

** On entry to SSYEVD parameter number 8 had an illegal value

```

### See also?

Possibly related to #68291 / #51720?

### Versions

1.12

```

$ wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

--2022-08-21 09:45:24-- https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

Resolving raw.githubusercontent.com... 185.199.110.133, 185.199.108.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 16906 (17K) [text/plain]

Saving to: ‘collect_env.py’

collect_env.py 100%[====================================================================================================================>] 16.51K --.-KB/s in 0.02s

2022-08-21 09:45:24 (1009 KB/s) - ‘collect_env.py’ saved [16906/16906]

$ python collect_env.py

Collecting environment information...

Traceback (most recent call last):

File "~/collect_env.py", line 492, in <module>

main()

File "~/collect_env.py", line 475, in main

output = get_pretty_env_info()

File "~/collect_env.py", line 470, in get_pretty_env_info

return pretty_str(get_env_info())

File "~/collect_env.py", line 319, in get_env_info

pip_version, pip_list_output = get_pip_packages(run_lambda)

File "~/collect_env.py", line 301, in get_pip_packages

out = run_with_pip(sys.executable + ' -mpip')

File "~/collect_env.py", line 289, in run_with_pip

for line in out.splitlines()

AttributeError: 'NoneType' object has no attribute 'splitlines'

```

cc @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano

| 8 |

4,923 | 83,817 |

[feature request] Add new device type works on CPU

|

triaged, enhancement

|

### 🚀 The feature, motivation and pitch

When we write code on a CPU machine and it runs on a GPU machine, we sometimes forget to transfer tensor GPU to CPU or opposite.

Because we write `device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")` on head of code.

When we test it on a CPU machine, all tensors are put on the CPU and do not tell us whether the tensor will transfer as we expect or not.

So, how about adding a new device type that works on a CPU? (like a fake-gpu)

In my assumption,

Now(no GPU machine)

```

device = torch.device("cuda:0" if torch.cuda.is_available else "cpu")

a = torch.arange(10).to(device)

a.numpy() # actually need a.cpu() before numpy()

>>> No Error

```

New Feature(no GPU machine)

```

device = torch.device("cuda:0" if torch.cuda.is_available else "fake-gpu")

a = torch.arange(10).to(device)

a.numpy() # need a.cpu() before numpy()

>>> Error

```

### Alternatives

_No response_

### Additional context

_No response_

| 3 |

4,924 | 83,800 |

torch.var_mean is slower than layer norm

|

module: performance, module: nn, triaged, needs research

|

### 🐛 Describe the bug

It's known that layer norm needs to compute the variance and mean of its input. So we can expect that `torch.var_mean` runs faster than `LayerNorm`. But, when I time them, I find that `torch.var_mean` runs much slower than `LayerNorm` on cpu.

```python

from functools import partial

import torch

import timeit

x = torch.randn((257, 252, 192),dtype=torch.float32)

ln = torch.nn.LayerNorm(192)

ln.eval()

with torch.no_grad():

var_mean_time = timeit.timeit(partial(torch.var_mean, input=x, dim=(2,)), number=100)

ln_time = timeit.timeit(partial(ln, input=x), number=100)

print(var_mean_time, ln_time) # 3.149209 1.2331005

```

### Versions

PyTorch version: 1.12.1

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Microsoft Windows 11 Home China

GCC version: (x86_64-posix-seh, Built by strawberryperl.com project) 8.3.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: N/A

Python version: 3.9.12 (main, Apr 4 2022, 05:22:27) [MSC v.1916 64 bit (AMD64)] (64-bit runtime)

Python platform: Windows-10-10.0.22622-SP0

Is CUDA available: True

CUDA runtime version: 11.7.64

GPU models and configuration: GPU 0: NVIDIA GeForce GTX 1080

Nvidia driver version: 516.01

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy==0.971

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.22.4

[pip3] pytorch-lightning==1.7.2

[pip3] pytorch-ranger==0.1.1

[pip3] torch==1.12.1

[pip3] torch-complex==0.4.3

[pip3] torch-optimizer==0.3.0

[pip3] torch-stoi==0.1.2

[pip3] torchaudio==0.12.1

[pip3] torchdata==0.4.1

[pip3] torchmetrics==0.9.3

[pip3] torchvision==0.13.1

[conda] blas 2.115 mkl conda-forge

[conda] blas-devel 3.9.0 15_win64_mkl conda-forge

[conda] cudatoolkit 11.6.0 hc0ea762_10 conda-forge

[conda] libblas 3.9.0 15_win64_mkl conda-forge

[conda] libcblas 3.9.0 15_win64_mkl conda-forge

[conda] liblapack 3.9.0 15_win64_mkl conda-forge

[conda] liblapacke 3.9.0 15_win64_mkl conda-forge

[conda] mkl 2022.1.0 pypi_0 pypi

[conda] mkl-devel 2022.1.0 h57928b3_875 conda-forge

[conda] mkl-fft 1.3.1 pypi_0 pypi

[conda] mkl-include 2022.1.0 h6a75c08_874 conda-forge

[conda] mkl-random 1.2.2 pypi_0 pypi

[conda] mkl-service 2.4.0 pypi_0 pypi

[conda] numpy 1.22.4 pypi_0 pypi

[conda] pytorch 1.12.1 py3.9_cuda11.6_cudnn8_0 pytorch

[conda] pytorch-lightning 1.7.2 pypi_0 pypi

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] pytorch-ranger 0.1.1 pypi_0 pypi

[conda] torch-complex 0.4.3 pypi_0 pypi

[conda] torch-optimizer 0.3.0 pypi_0 pypi

[conda] torch-stoi 0.1.2 pypi_0 pypi

[conda] torchaudio 0.12.1 py39_cu116 pytorch

[conda] torchdata 0.4.1 pypi_0 pypi

[conda] torchmetrics 0.9.3 pypi_0 pypi

[conda] torchvision 0.13.1 py39_cu116 pytorch

cc @albanD @mruberry @jbschlosser @walterddr @kshitij12345 @saketh-are @VitalyFedyunin @ngimel

| 3 |

4,925 | 83,795 |

Error on installation

|

module: rocm, triaged

|

### 🐛 Describe the bug

Hello, after "python setup.py install" the script shows this error and the installation fails:

fatal error: error in backend: Cannot select: intrinsic %llvm.amdgcn.ds.bpermute

clang-14: error: clang frontend command failed with exit code 70 (use -v to see invocation)

AMD clang version 14.0.0 (https://github.com/RadeonOpenCompute/llvm-project roc-5.2.3 22324 d6c88e5a78066d5d7a1e8db6c5e3e9884c6ad10e)

Target: x86_64-unknown-linux-gnu

Thread model: posix

InstalledDir: /opt/rocm/hip/../llvm/bin

clang-14: note: diagnostic msg: Error generating preprocessed source(s).

CMake Error at torch_hip_generated_cub-RadixSortPairs.hip.o.cmake:200 (message):

Error generating file

/home/ferna/pytorch/build/caffe2/CMakeFiles/torch_hip.dir/__/aten/src/ATen/hip/./torch_hip_generated_cub-RadixSortPairs.hip.o

Something is missing? Thanks

### Versions

roc-5.2.3

python 3.9

cc @malfet @seemethere @jeffdaily @sunway513 @jithunnair-amd @ROCmSupport @KyleCZH

| 6 |

4,926 | 83,775 |

[Nested Tensor] Move nested tensor specific ops to nested namespace

|

triaged, module: nestedtensor

|

# Summary

Currently all nested tensor specific ops are dumped into the default namespace `torch`. This issue is used to track work on creating a nested tensor namespace and moving all the nested tensor specific functions that namespace.

cc @cpuhrsch @jbschlosser @bhosmer

| 1 |

4,927 | 93,638 |

Inductor Error: aten.fill_.Tensor

|

triaged, oncall: pt2

|

`python /scratch/eellison/work/torchdynamo/benchmarks/microbenchmarks/operatorbench.py --op=aten.fill_.Tensor --dtype=float16 --suite=huggingface`

> CUDA error: operation failed due to a previous error during capture

CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 0 |

4,928 | 83,773 |

[Nested Tensor] view + inplace for autograd.

|

module: autograd, triaged, module: nestedtensor

|

## Summary

This is currently erroring:

```

import torch

a = torch.randn(1, 2, 4, requires_grad=True)

b = torch.randn(2, 2, 4, requires_grad=True)

c = torch.randn(3, 2, 4, requires_grad=True)

nt = torch.nested_tensor([a,b,c])

buffer = nt.values()

buffer.mul_(2)

```

This is

1. Creating a view nt -> buffer

2. Applying an inplace op on buffer.

This triggers rebase_history() which in turn creates copy_slices. Copy slices utilize a struct called TensorGeometry to track geometry information about the base and the view. The view_fn will be used instead of as_strided. However we apply this function on result which is an empty of clone of base. We don't currently have a factory function that can do this. This will be unblocked when we add this.

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7 @cpuhrsch @jbschlosser @bhosmer

| 0 |

4,929 | 93,637 |

HuggingFace Slow Operators

|

triaged, oncall: pt2

|

Operators with 50th percentile below 98% of aten perf or 20th percentile below 96%:

Float16:

```

aten._log_softmax.default:: [0.8083701248515153, 0.9260282672431682, 1.0251716763358925]

aten._log_softmax_backward_data.default:: [0.9195609454113238, 0.9607073111684497, 1.0512267863036555]

aten.addmm.default:: [0.7886046418843882, 0.9329217798915578, 0.9962013717422963]

aten.sum.SymInt:: [0.9358205974583087, 0.9814126709379405, 1.006583550282628]

aten.add_.Tensor:: [0.7253489773412456, 0.7703528781962926, 0.7976537424898803]

aten.new_empty_strided.default:: [0.9366916208071663, 0.9398957719201622, 0.9472405067590517]

aten.sqrt.default:: [0.9791511684613813, 0.9791511684613813, 0.9791511684613813]

aten.new_empty.default:: [0.7994959370210444, 0.804862283140364, 0.8102286292596836]

aten.native_layer_norm_backward.default:: [0.8506269179221464, 1.1152916772486603, 1.6212585844412815]

```

Float32:

```

aten._log_softmax.default:: [0.9550605426043154, 0.9823529203640421, 1.002335693504097]

aten._log_softmax_backward_data.default:: [0.9260106786584326, 0.9406878586461753, 1.0006503787776375]

aten.addmm.default:: [0.9509063155376642, 0.9920652896706708, 1.0371791371367722]

aten.sum.SymInt:: [0.9487470525571405, 0.9901798410457018, 1.05810554621502]

aten.new_empty_strided.default:: [0.7239798153666939, 0.7404751002819113, 0.7636612269539008]

aten.sqrt.default:: [0.9371196135483814, 0.9371196135483814, 0.9371196135483814]

aten.new_empty.default:: [0.8338423596933202, 0.850186671512076, 0.866530983330832]

```

`_log_softmax` and `_log_softmax_backward_data`, and `aten.sum` in fp16 stand out to me.

Full log: [float32](https://gist.github.com/eellison/b312dc4adc512599084486df667b6264), [float16](https://gist.github.com/eellison/5e3ec5064a19100f95a67b7c69c9ca0a)

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 0 |

4,930 | 93,636 |

Timm Model Slow Operators

|

triaged, oncall: pt2

|

### 🐛 Describe the bug

Operators with 50th percentile below 98% of aten perf or 20th percentile below 96%:

```

aten.addmm.default:: [0.8386316138954961, 0.9255533387715138, 0.955919097384518]

aten.new_empty_strided.default:: [0.7074878925134296, 0.7366914699489878, 0.7576066949031502]

aten._softmax.default:: [0.844983630149154, 0.9835961036033891, 1.068020755737445]

aten.max_pool2d_with_indices.default:: [0.6342950044218125, 1.0382350718486175, 1.1183607334882082]

aten.hardsigmoid_backward.default:: [0.9559467667087947, 1.0058027523555526, 1.0368018754050459]

```

```

aten.addmm.default:: [0.8430439948974364, 0.9937072943974172, 1.1970515808766191]

aten.new_empty_strided.default:: [0.715191214805386, 0.7424496557446567, 0.7541450693325825]

aten.max_pool2d_with_indices.default:: [0.836623979229799, 1.0084812810590111, 1.033377775031802]

aten._softmax.default:: [0.8147407919961176, 1.004666288386921, 1.1519087656954243]

aten.select_backward.default:: [0.8844741032127397, 1.0799256067174667, 1.1887779335651258]

aten.new_empty.default:: [0.9331701212881051, 0.9612216337535711, 0.9760060763695062]

aten.div.Tensor:: [0.9588477230714266, 0.9588477230714266, 0.9588477230714266]

aten.rsqrt.default:: [0.9006916404896046, 0.9011194114944925, 0.9032443478821974]

```

`max_pool2d_with_indices`, and `_softmax` stand out. `rsqrt` is probably because we are using the decomposition and not the `rsqrt` ptx instruction.

Full logs: [float16](https://gist.github.com/eellison/1af4f518318d8892159765cb20fd3299), [float32](https://gist.github.com/eellison/3d2c9139c164d3190c8da520018dc99f)

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 4 |

4,931 | 83,769 |

[TorchTidy] Check if `set_to_none` would change optimizer semantics.

|

oncall: profiler

|

### 🚀 The feature, motivation and pitch

Several PyTorch optimizers offer a `set_to_none` argument to delete gradients rather than zeroing them. This is particularly important for CUDA where each operation incurs a separate `cudaLaunchKernel` call. [GradNotSetToNonePattern](https://github.com/pytorch/pytorch/blob/master/torch/profiler/_pattern_matcher.py#L429) attempts to identify this opportunity for improvement; however it is currently overzealous. The issue is that certain optimizers will interpret `None` gradients as cause to reset optimizer state (e.g. [SGD momentum](https://github.com/pytorch/pytorch/blob/master/torch/optim/sgd.py#L234-L238)), and as a result `set_to_none` changes the semantics of the optimizer. This task is to extend `GradNotSetToNonePattern` to check for such exceptions, and only recommend `set_to_none` when it can be safely applied.

CC @tiffzhaofb (you can grab the issue when you get your github in the pytorch org)

### Alternatives

_No response_

### Additional context

_No response_

cc @robieta @chaekit @aaronenyeshi @ngimel @nbcsm @guotuofeng @guyang3532 @gaoteng-git @tiffzhaofb

| 1 |

4,932 | 83,764 |

Missing header file

|

triaged

|

### 🚀 The feature, motivation and pitch

Hi, I always build PyTorch from source and link my library to it. Today, I tried link my library to PyTorch nightly package, the error message below came out.

```

Cannot open include file: 'torch/csrc/jit/passes/onnx/constant_map.h': No such file or directory

```

Could we add this header to the package? Or do you have specific policy regarding what should be included and what no? Thanks.

### Alternatives

_No response_

### Additional context

_No response_

| 4 |

4,933 | 93,635 |

tabulate.tabulate causes a lot of memory to be allocated in yolov3

|

triaged, oncall: pt2

|

Calling tabulate.tabulate to render the output graph causes 1 GB of additional memory allocation in yolov3.

This is gated on the log level now, but as a result when DEBUG or INFO log levels are set, this will cause additional memory to be allocated.

https://github.com/pytorch/torchdynamo/blob/0e4d5ee9db6dfeba4d424b2e5d2ad96c79f1dffa/torchdynamo/utils.py#:~:text=tabulate(node_specs%2C%20headers%3D%5B%22opcode%22%2C%20%22name%22%2C%20%22target%22%2C%20%22args%22%2C%20%22kwargs%22%5D)

cc @ezyang @soumith @msaroufim @wconstab @ngimel @bdhirsh

| 2 |

4,934 | 83,749 |

[Nested Tensor] Update TestCase.AssertEqual

|

triaged, module: nestedtensor, module: testing

|

## TLDR: Update `TestCase.assertEqual` for nested tensors

We are currently unbinding nested tensors and comparing elements to assert equality. This works but I think that when the metadata for nested tensors if fully ironed out this can be more direct. This ticket is to track follow up work when the metadata scenario has been solidified.

cc @cpuhrsch @jbschlosser @bhosmer @pmeier

| 0 |

4,935 | 83,737 |

Profiler reports different # of Calls depending on group_by_stack_n

|

oncall: profiler

|

### 🐛 Describe the bug

The number of calls reported by the profiler to different functions doesn't have the same total when you change how the profiler results are printed.

Example: running the script

```python

import torch

import torchvision.models as models

from torch.profiler import profile, record_function, ProfilerActivity

model = models.resnet50().cuda()

inputs = torch.randn(5, 3, 224, 224).cuda()

with profile(activities=[ProfilerActivity.CPU, ProfilerActivity.CUDA], with_stack=True, with_modules=False) as prof:

model(inputs)

print(prof.key_averages(group_by_stack_n=2).table(sort_by="self_cuda_time_total"))

```

the aten::empty function is called 319 times, with the following lines found by grep:

```

aten::empty 0.16% 2.199ms 0.16% 2.199ms 8.298us 1.699ms 0.13% 1.699ms 6.411us 265 <built-in method batch_norm of type object at 0x7febe43d8f20>

aten::empty 0.04% 572.000us 0.04% 572.000us 10.792us 434.000us 0.03% 434.000us 8.189us 53 <built-in method conv2d of type object at 0x7febe43d8f20>

aten::empty 0.00% 1.000us 0.00% 1.000us 1.000us 3.000us 0.00% 3.000us 3.000us 1 <built-in function linear>

```

However, if we change group_by_stack_n to 4, it's only called 47 times!

The output is longer here, so you may find it helpful to use a line like

```bash

python test.py | grep empty | grep -v empty_like | tr -s ' ' | cut -d' ' -f 12 | paste -sd+ - | bc

```

but examining by eye should show that it's clearly far less than 300.

### Versions

Collecting environment information...

PyTorch version: 1.13.0a0+git4b3f1bd

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.1 LTS (x86_64)

GCC version: (Ubuntu 9.3.0-10ubuntu2) 9.3.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.5 (default, Jul 28 2020, 12:59:40) [GCC 9.3.0] (64-bit runtime)

Python platform: Linux-5.4.0-47-generic-x86_64-with-glibc2.29

Is CUDA available: True

CUDA runtime version: 11.6.124

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 2080 Ti

GPU 1: NVIDIA GeForce RTX 2080 Ti

GPU 2: NVIDIA GeForce RTX 2080 Ti

GPU 3: NVIDIA GeForce RTX 2080 Ti

GPU 4: NVIDIA GeForce RTX 2080 Ti

GPU 5: NVIDIA GeForce RTX 2080 Ti

GPU 6: NVIDIA GeForce RTX 2080 Ti

GPU 7: NVIDIA GeForce RTX 2080 Ti

Nvidia driver version: 510.73.05

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.4

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.4

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.4

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.4

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.4

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.4

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.4

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.2

[pip3] torch==1.13.0a0+git4b3f1bd

[pip3] torchvision==0.14.0a0+9c3e2bf

[conda] Could not collect

cc @robieta @chaekit @aaronenyeshi @ngimel @nbcsm @guotuofeng @guyang3532 @gaoteng-git @tiffzhaofb

| 1 |

4,936 | 83,733 |

BCELoss results in autocast CUDA warning

|

triaged, module: amp (automated mixed precision)

|

### 🐛 Describe the bug

Hi folks, BCELoss on calling *backward* method creates warning UserWarning: User provided device_type of 'cuda', but CUDA is not available. Disabling warnings.warn('User provided device_type of \'cuda\', but CUDA is not available. Disabling'). The code works normally but it is very irritant. torch/cuda/amp/autocast_mode.py creates a warning because it gets called with `device_type` "cuda". I can get around by commenting line 199 but that is not the solution. Although I am using `torch` together with `DGL` library, the error source seems to be in PyTorch.

```

# Define training EdgeDataLoader

train_dataloader = dgl.dataloading.DataLoader(

graph, # The graph

train_eid_dict, # The edges to iterate over

sampler, # The neighbor sampler

batch_size=batch_size, # Batch size

shuffle=True, # Whether to shuffle the nodes for every epoch

drop_last=False, # Whether to drop the last incomplete batch

num_workers=sampling_workers, # Number of sampling processes

)

# Define validation EdgeDataLoader

validation_dataloader = dgl.dataloading.DataLoader(

graph, # The graph

val_eid_dict, # The edges to iterate over

sampler, # The neighbor sampler

batch_size=batch_size, # Batch size

shuffle=True, # Whether to shuffle the nodes for every epoch

drop_last=False, # Whether to drop the last incomplete batch

num_workers=sampling_workers, # Number of sampler processes

)

print(f"Canonical etypes: {graph.canonical_etypes}")

# Initialize loss

loss = torch.nn.BCELoss()

# Initialize activation func

m, threshold = torch.nn.Sigmoid(), 0.5

# Iterate for every epoch

for epoch in range(1, num_epochs+1):

model.train()

tr_finished = False

for _, pos_graph, neg_graph, blocks in train_dataloader:

input_features = blocks[0].ndata[node_features_property]

# Perform forward pass

probs, labels, loss_output = batch_forward_pass(model, predictor, loss, m, target_relation, input_features, pos_graph, neg_graph, blocks)

# Make an optimization step

optimizer.zero_grad()

loss_output.backward() # ***This line generates warning***

optimizer.step()

```

### Versions

o CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.22.2

[pip3] numpydoc==1.4.0

[pip3] torch==1.12.1+cpu

[pip3] torchaudio==0.12.1+cpu

[pip3] torchvision==0.13.1+cpu

[conda] Could not collect

cc @mcarilli @ptrblck

| 8 |

4,937 | 83,726 |

nvfuser + prim stack generated illegal PTX code on hardware with sm <= 70

|

triaged, module: nvfuser, module: primTorch

|

### 🐛 Describe the bug

```

import torch

from torch._prims.context import TorchRefsNvfuserCapabilityMode, TorchRefsMode, _is_func_unsupported_nvfuser

from torch.fx.experimental.proxy_tensor import make_fx

from torch._prims.executor import execute

dtype = torch.bfloat16

# dtype = torch.float16

x = torch.rand(5, device="cuda").to(dtype)

def fn(x):

return (x + 1.0).relu()

with TorchRefsNvfuserCapabilityMode():

nvprim_gm = make_fx(fn)(x)

print(nvprim_gm.graph)

for i in range(5):

o = execute(nvprim_gm, x, executor="nvfuser")

print(o)

```

gives the error message:

```

CUDA NVRTC compile error: ptxas application ptx input, line 55; error : Feature '.bf16' requires .target sm_80 or higher

ptxas application ptx input, line 55; error : Feature 'cvt with .bf16' requires .target sm_80 or higher

ptxas fatal : Ptx assembly aborted due to errors

```

### Versions

Collecting environment information...

PyTorch version: 1.13.0a0+gitce7177f

Is debug build: False

CUDA used to build PyTorch: 11.7

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.24.1

Libc version: glibc-2.31