| Serial Number | Issue Number | Title | Labels | Body | Comments |

|---|---|---|---|---|---|

5,101 | 82,517 |

Symbolic tensors are not printable

|

module: printing, triaged, module: dynamic shapes

|

### 🐛 Describe the bug

```
  File "/data/users/ezyang/pytorch-tmp/test/test_dynamic_shapes.py", line 258, in test_aten_ops
    print(x)
  File "/data/users/ezyang/pytorch-tmp/torch/_tensor.py", line 423, in __repr__
    return torch._tensor_str._str(self, tensor_contents=tensor_contents)
  File "/data/users/ezyang/pytorch-tmp/torch/_tensor_str.py", line 591, in _str
    return _str_intern(self, tensor_contents=tensor_contents)
  File "/data/users/ezyang/pytorch-tmp/torch/_tensor_str.py", line 535, in _str_intern
    if self.numel() == 0 and not self.is_sparse:
RuntimeError: Tensors of type TensorImpl do not have numel
```

This may be fixed once sym numel lands.

### Versions

master

| 1 |

5,102 | 82,510 |

Complex addition result in NaN when it shouldn't

|

triaged, module: complex, module: NaNs and Infs

|

### 🐛 Describe the bug

When adding two complex numbers whose real components (or imaginary components) are both infinities of the same sign, the imaginary (or real) component of the result becomes NaN. Complex addition should act on each component independently, and in any case adding two infinities of the same sign should not produce NaN.

As a simple workaround, one can perform the addition on `torch.view_as_real` views and convert the result back with `torch.view_as_complex`.
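A minimal sketch of the `view_as_real` workaround described above. It assumes both inputs are complex tensors of matching shape, and the helper name `complex_add_componentwise` is illustrative:

```python
import torch

def complex_add_componentwise(a: torch.Tensor, b: torch.Tensor) -> torch.Tensor:
    # view_as_real exposes each complex element as a trailing (real, imag) pair,
    # so this addition operates on the two components independently.
    summed = torch.view_as_real(a) + torch.view_as_real(b)
    # Reinterpret the (..., 2) real tensor as complex again.
    return torch.view_as_complex(summed)

x = torch.tensor(complex(float('inf'), 0.0))
print(complex_add_componentwise(x, x))
```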

Simple test to reproduce (or [see in this Colab notebook](https://colab.research.google.com/drive/1KhWPscjEtM_SZebapVVDi55H5LkGI_zS?usp=sharing)):

```python3
import torch

x_native = float('inf') + 1j * 0
x_pytorch = torch.tensor(x_native)
x_as_real = torch.view_as_real(x_pytorch)

print('dtype:', x_pytorch.dtype)

assert (x_native + x_native) == x_native, f'{x_native + x_native} != {x_native}'
assert ((x_as_real + x_as_real) == x_as_real).all().item(), f'{x_as_real + x_as_real} != {x_as_real}'
assert ((x_pytorch + x_pytorch) == x_pytorch).all().item(), f'{x_pytorch + x_pytorch} != {x_pytorch}'
```

Result of running the above:

```
dtype: torch.complex64
---------------------------------------------------------------------------
AssertionError Traceback (most recent call last)
<ipython-input-11-53bec36d73d6> in <module>()
      7 assert (x_native + x_native) == x_native, f'{x_native + x_native} != {x_native}'
      8 assert ((x_as_real + x_as_real) == x_as_real).all().item(), f'{x_as_real + x_as_real} != {x_as_real}'
----> 9 assert ((x_pytorch + x_pytorch) == x_pytorch).all().item(), f'{x_pytorch + x_pytorch} != {x_pytorch}'

AssertionError: (inf+nanj) != (inf+0j)
```

### Versions

Collecting environment information...

PyTorch version: 1.12.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

CMake version: version 3.22.5

Libc version: glibc-2.26

Python version: 3.7.13 (default, Apr 24 2022, 01:04:09) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.188+-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: False

CUDA runtime version: 11.1.105

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.21.6

[pip3] torch==1.12.0+cu113

[pip3] torchaudio==0.12.0+cu113

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.13.0

[pip3] torchvision==0.13.0+cu113

[conda] Could not collect

cc @ezyang @anjali411 @dylanbespalko @mruberry @Lezcano @nikitaved

| 3 |

5,103 | 82,494 |

Implement torch.clamp() on sparse tensors with SparseCPU backend

|

module: sparse, triaged

|

### 🚀 The feature, motivation and pitch

`torch.clamp()` fails on sparse tensors with the SparseCPU backend. Among other things, this breaks gradient clipping with sparse tensors.

```
import torch

sparse_tensor = torch.sparse_coo_tensor([[1,2]], [1,5], (3,))
torch.clamp(sparse_tensor, -1, 1)
```

Fails with error: `Could not run 'aten::clamp' with arguments from the 'SparseCPU' backend.`

### Alternatives

It's not exactly difficult to manually clamp sparse tensors, but we shouldn't have to when a function exists.
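For reference, a minimal sketch of the manual approach for a COO tensor. `sparse_coo_clamp` is an illustrative name. It clamps only the explicitly stored values, so it matches dense `torch.clamp` only when `min_val <= 0 <= max_val`. Otherwise the implicit zeros would also change and the result would no longer be sparse:

```python
import torch

def sparse_coo_clamp(t: torch.Tensor, min_val: float, max_val: float) -> torch.Tensor:
    # Implicit zeros are left untouched, which is only correct if 0 lies in [min_val, max_val].
    if not (min_val <= 0 <= max_val):
        raise ValueError("clamping the implicit zeros would densify the tensor")
    t = t.coalesce()  # merge duplicate indices so each stored value is clamped once
    return torch.sparse_coo_tensor(t.indices(), t.values().clamp(min_val, max_val), t.shape)

sparse_tensor = torch.sparse_coo_tensor([[1, 2]], [1, 5], (3,))
print(sparse_coo_clamp(sparse_tensor, -1, 1).to_dense())
```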

### Additional context

_No response_

cc @nikitaved @pearu @cpuhrsch @amjames @bhosmer

| 4 |

5,104 | 82,479 |

Cloning conjugate tensor in torch_dispatch context produces non equality.

|

triaged, module: complex, module: __torch_dispatch__

|

### 🐛 Describe the bug

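```python
import torch
from torch.utils._python_dispatch import TorchDispatchMode, enable_torch_dispatch_mode

# clone_inputs is not shown in this report; it is assumed to return copies of the tensor arguments.

class TestMode(TorchDispatchMode):
    def __torch_dispatch__(self, func, types, args=(), kwargs=None):
        kwargs = kwargs or {}
        args2 = clone_inputs(args)
        out = func(*args, **kwargs)
        # Compare each input as it was before the op with its state after the op
        for i in range(len(args2)):
            print(f'before {args2[i]}, after {args[i]}, {torch.equal(args2[i], args[i])}')
        return out

a = torch.rand((3, 3), dtype=torch.complex64)
b = torch.rand((3, 3), dtype=torch.complex64)
b = torch.conj(b)

with enable_torch_dispatch_mode(TestMode()):
    torch.mm(a, b)
```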

This prints:

```python
before tensor([[0.0290+0.4019j, 0.2598+0.3666j, 0.0583+0.7006j],
        [0.0518+0.4681j, 0.6738+0.3315j, 0.7837+0.5631j],
        [0.7749+0.8208j, 0.2793+0.6817j, 0.2837+0.6567j]]), after tensor([[0.0290+0.4019j, 0.2598+0.3666j, 0.0583+0.7006j],
        [0.0518+0.4681j, 0.6738+0.3315j, 0.7837+0.5631j],
        [0.7749+0.8208j, 0.2793+0.6817j, 0.2837+0.6567j]]), True
before tensor([[0.2388-0.7313j, 0.6012-0.3043j, 0.2548-0.6294j],
        [0.9665-0.7399j, 0.4517-0.4757j, 0.7842-0.1525j],
        [0.6662-0.3343j, 0.7893-0.3216j, 0.5247-0.6688j]]), after tensor([[0.2388-0.7313j, 0.6012-0.3043j, 0.2548-0.6294j],
        [0.9665-0.7399j, 0.4517-0.4757j, 0.7842-0.1525j],
        [0.6662-0.3343j, 0.7893-0.3216j, 0.5247-0.6688j]]), False
```

This suggests that `mm` mutates its second input, which is not actually the case.

### Versions

N/A

cc @ezyang @anjali411 @dylanbespalko @mruberry @Lezcano @nikitaved @Chillee @zou3519 @albanD @samdow

| 2 |

5,105 | 93,793 |

Guide for diagnosing excess graph breaks

|

module: docs, triaged, oncall: pt2, module: dynamo

|

A torchdynamo user may want to find out why their program has many graph breaks. We have tools for diagnosing this, but they are not documented. It would be good to document them before the PT2 release. cc @svekars @carljparker @soumith @msaroufim @wconstab @ngimel @bdhirsh @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire

| 3 |

5,106 | 82,465 |

Does torch.utils.checkpoint compatible with torch.cuda.make_graphed_callables?

|

module: checkpoint, triaged, module: cuda graphs

|

### 🐛 Describe the bug

When attempting to use

```
model = torch.cuda.make_graphed_callables(model, (rand_data,))
```

on a model that contains checkpoints or sequential checkpoints like this:

```
input_next = torch.utils.checkpoint.checkpoint_sequential(self.layer, segments, input_prev)
```

we get the following error:

```
Traceback (most recent call last):
  File "multitask.py", line 43, in <module>
    main()
  File "multitask.py", line 36, in main
    S.initialize(args)
  File "/mnt/lustre/chendingyu1/multitask-unify-democode/core/solvers/solver_fp16.py", line 63, in initialize
    super().initialize(args)
  File "/mnt/lustre/chendingyu1/multitask-unify-democode/core/solvers/solver.py", line 317, in initialize
    self.create_model()
  File "/mnt/lustre/chendingyu1/multitask-unify-democode/core/solvers/solver_fp16.py", line 109, in create_model
    model = torch.cuda.make_graphed_callables(model, (rand_data,))
  File "/mnt/cache/share/spring/conda_envs/miniconda3/envs/s0.3.5/lib/python3.7/site-packages/torch/cuda/graphs.py", line 264, in make_graphed_callables
    allow_unused=False)
  File "/mnt/cache/share/spring/conda_envs/miniconda3/envs/s0.3.5/lib/python3.7/site-packages/torch/autograd/__init__.py", line 277, in grad
    allow_unused, accumulate_grad=False)  # Calls into the C++ engine to run the backward pass
  File "/mnt/cache/share/spring/conda_envs/miniconda3/envs/s0.3.5/lib/python3.7/site-packages/torch/autograd/function.py", line 253, in apply
    return user_fn(self, *args)
  File "/mnt/cache/share/spring/conda_envs/miniconda3/envs/s0.3.5/lib/python3.7/site-packages/torch/utils/checkpoint.py", line 103, in backward
    "Checkpointing is not compatible with .grad() or when an `inputs` parameter"
RuntimeError: Checkpointing is not compatible with .grad() or when an `inputs` parameter is passed to .backward(). Please use .backward() and do not pass its `inputs` argument.
```

If we don't use checkpointing in the model, for example:

```
input_next = self.layer(input_prev)
```

then we get no errors.

Is checkpointing compatible with `torch.cuda.make_graphed_callables`?
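The traceback shows that `make_graphed_callables` differentiates with `torch.autograd.grad`, and the reentrant checkpoint implementation rejects that call. Below is a minimal CPU-only sketch with no CUDA graphs that compares the two checkpoint modes under `torch.autograd.grad`. It assumes a PyTorch version whose `checkpoint` accepts `use_reentrant`, and `layer` and `input_grad` are illustrative names. The sketch does not verify that the non-reentrant mode also works under CUDA graph capture:

```python
import torch
from torch.utils.checkpoint import checkpoint

layer = torch.nn.Sequential(torch.nn.Linear(8, 8), torch.nn.ReLU())

def input_grad(x: torch.Tensor, use_reentrant: bool) -> torch.Tensor:
    # Run the layer under activation checkpointing, then differentiate with
    # torch.autograd.grad (the call that fails in the traceback above).
    out = checkpoint(layer, x, use_reentrant=use_reentrant)
    (grad_x,) = torch.autograd.grad(out.sum(), (x,))
    return grad_x

x = torch.randn(4, 8, requires_grad=True)
print(input_grad(x, use_reentrant=False).shape)  # non-reentrant mode accepts autograd.grad

try:
    input_grad(x, use_reentrant=True)
except RuntimeError as err:
    # Expected to fail with the "not compatible with .grad()" error from the issue
    print("reentrant checkpoint failed:", err)
```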

### Versions

PyTorch version: 1.11.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: CentOS Linux 7 (Core) (x86_64)

GCC version: (GCC) 4.8.5 20150623 (Red Hat 4.8.5-44)

Clang version: Could not collect

CMake version: version 2.8.12.2

Libc version: glibc-2.17

Python version: 3.7.11 (default, Jul 27 2021, 14:32:16) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-3.10.0-957.el7.x86_64-x86_64-with-centos-7.6.1810-Core

Is CUDA available: True

CUDA runtime version: 9.0.176

Nvidia driver version: 460.32.03

Versions of relevant libraries:

[pip3] numpy==1.21.5

[pip3] spring==0.7.2+cu112.torch1110.mvapich2.nartgpu.develop.805601a8

[pip3] torch==1.11.0+cu113

[pip3] torchvision==0.12.0+cu113

[conda] numpy 1.21.5 pypi_0 pypi

[conda] spring 0.7.0+cu112.torch1110.mvapich2.pmi2.nartgpu pypi_0 pypi

[conda] torch 1.11.0+cu113 pypi_0 pypi

[conda] torchvision 0.12.0+cu113 pypi_0 pypi

cc @mcarilli @ezyang

| 7 |

5,107 | 82,464 |

SyncBatchNorm does not work on CPU

|

oncall: distributed, module: nn

|

Using PyTorch 1.12 on Debian Linux (conda env).

SyncBatchNorm does not seem to work with `torch.jit.trace`. On a MobileNetV3 model, I first do:

```
model = torch.nn.SyncBatchNorm.convert_sync_batchnorm(model)
```

After training, I normally export my models like this:

```
traced_script_module = torch.jit.trace(swa_model, (data))
traced_script_module.save("./swa_model.tar")
```

Both the model and the data are on CPU; the plan is to use the model for inference on CPU only.

However, tracing raises:

```
raise ValueError("SyncBatchNorm expected input tensor to be on GPU")
ValueError: SyncBatchNorm expected input tensor to be on GPU
```

Is there a workaround for this, or is this an unsupported edge case?

Of course, without SyncBatchNorm everything works: the trace is produced and gives the same results as the original model.
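One possible workaround, assuming the exported model only needs ordinary batch norm at inference time, is to convert the `SyncBatchNorm` layers back to `BatchNorm2d` before tracing. PyTorch provides `convert_sync_batchnorm`, but as far as I know it has no built-in inverse, so `revert_sync_batchnorm` below is a hypothetical helper. It assumes every normalized input is 4-D (N, C, H, W), as in MobileNetV3:

```python
import torch
import torch.nn as nn

def revert_sync_batchnorm(module: nn.Module) -> nn.Module:
    """Hypothetical helper: recursively swap nn.SyncBatchNorm for nn.BatchNorm2d.

    Assumes every SyncBatchNorm layer receives 4-D (N, C, H, W) inputs.
    """
    converted = module
    if isinstance(module, nn.SyncBatchNorm):
        converted = nn.BatchNorm2d(
            module.num_features,
            eps=module.eps,
            momentum=module.momentum,
            affine=module.affine,
            track_running_stats=module.track_running_stats,
        )
        if module.affine:
            # Copy the learned scale and shift
            with torch.no_grad():
                converted.weight.copy_(module.weight)
                converted.bias.copy_(module.bias)
        # Reuse the running statistics collected during training
        converted.running_mean = module.running_mean
        converted.running_var = module.running_var
        converted.num_batches_tracked = module.num_batches_tracked
        converted.train(module.training)
    # Replace children in place (SyncBatchNorm itself has no children)
    for name, child in module.named_children():
        converted.add_module(name, revert_sync_batchnorm(child))
    return converted

# Usage sketch with the names from the snippets above (not run here):
# model_for_export = revert_sync_batchnorm(swa_model).eval()
# traced_script_module = torch.jit.trace(model_for_export, (data,))
```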

Thank you.

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @albanD @mruberry @jbschlosser @walterddr @kshitij12345 @saketh-are

| 5 |

5,108 | 82,451 |

add support for bitwise operations with floating point numbers

|

oncall: jit

|

### 🚀 The feature, motivation and pitch

I was rewriting hash NeRF so that I could export a jitted version for training in C++ when I ran into this limitation. Bitwise operations on floats are easy in eager mode with `torch.Tensor.view(torch.int32)`. However, TorchScript currently does not support reinterpret-casting tensors, and JIT compilation is a must for my use case.
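For context, here is a minimal eager-mode sketch of the reinterpret-cast trick described above. The helper names are illustrative, and the inputs are assumed to be float32. According to this issue, TorchScript did not support this kind of reinterpret cast at the time, so the sketch is eager-only:

```python
import torch

# 0x80000000 (the float32 sign bit) expressed as a signed int32 value
SIGN_BIT = torch.tensor(-2**31, dtype=torch.int32)

def flip_sign_bits(x: torch.Tensor) -> torch.Tensor:
    # view(torch.int32) reinterprets the float32 bit pattern; no values are converted
    return (x.view(torch.int32) ^ SIGN_BIT).view(torch.float32)

def clear_sign_bits(x: torch.Tensor) -> torch.Tensor:
    # AND with ~SIGN_BIT (0x7FFFFFFF) zeroes the sign bit, i.e. computes |x|
    return (x.view(torch.int32) & ~SIGN_BIT).view(torch.float32)

x = torch.tensor([1.5, -2.0, 0.0])
print(flip_sign_bits(x), clear_sign_bits(x))
```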

### Alternatives

Supporting reinterpret casts of tensors in TorchScript would be a better solution, but I assume it would take a long time to optimize well.

### Additional context

_No response_

| 0 |

5,109 | 82,443 |

Quantization issue in transformers

|

oncall: quantization, triaged

|

### 🐛 Describe the bug

This error occurs when dynamically quantizing a simple Transformer model.

Example

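```
import torch
import torch.nn as nn

class M(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.transformer = nn.Transformer(d_model=2, nhead=2, num_encoder_layers=1, num_decoder_layers=1)

    def forward(self):
        # nn.Transformer.forward takes both src and tgt
        return self.transformer(torch.randn(1, 16, 2), torch.randn(1, 16, 2))

quantized = torch.quantization.quantize_dynamic(M(), dtype=torch.qint8)
# The torch/jit frames in the traceback below indicate the quantized model is then scripted
torch.jit.script(quantized)
```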

The error is:

```
  File "/Users/linbin/opt/anaconda3/lib/python3.8/site-packages/torch/jit/_recursive.py", line 516, in init_fn
    scripted = create_script_module_impl(orig_value, sub_concrete_type, stubs_fn)
  File "/Users/linbin/opt/anaconda3/lib/python3.8/site-packages/torch/jit/_recursive.py", line 542, in create_script_module_impl
    create_methods_and_properties_from_stubs(concrete_type, method_stubs, property_stubs)
  File "/Users/linbin/opt/anaconda3/lib/python3.8/site-packages/torch/jit/_recursive.py", line 393, in create_methods_and_properties_from_stubs
    concrete_type._create_methods_and_properties(property_defs, property_rcbs, method_defs, method_rcbs, method_defaults)
RuntimeError:
method cannot be used as a value:
  File "/Users/linbin/opt/anaconda3/lib/python3.8/site-packages/torch/nn/modules/transformer.py", line 468
                self.norm2.weight,
                self.norm2.bias,
                self.linear1.weight,
                ~~~~~~~~~~~~~~~~~~~ <--- HERE
                self.linear1.bias,
                self.linear2.weight,
```

### Versions

PyTorch version: N/A

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: macOS 12.4 (x86_64)

GCC version: Could not collect

Clang version: 13.0.0 (clang-1300.0.18.6)

CMake version: version 3.21.3

Libc version: N/A

Python version: 3.7.5 (default, Oct 22 2019, 10:35:10) [Clang 10.0.1 (clang-1001.0.46.4)] (64-bit runtime)

Python platform: Darwin-21.5.0-x86_64-i386-64bit

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] No relevant packages

[conda] blas 1.0 mkl

[conda] mkl 2021.2.0 hecd8cb5_269

[conda] mkl-include 2022.0.0 hecd8cb5_105

[conda] mkl-service 2.4.0 py38h9ed2024_0

[conda] mkl_fft 1.3.0 py38h4a7008c_2

[conda] mkl_random 1.2.2 py38hb2f4e1b_0

[conda] numpy 1.20.0 pypi_0 pypi

[conda] numpy-base 1.20.2 py38he0bd621_0

[conda] numpydoc 1.4.0 py38hecd8cb5_0

[conda] pytorch 1.13.0.dev20220728 py3.8_0 pytorch-nightly

[conda] torch 1.11.0 pypi_0 pypi

[conda] torchaudio 0.13.0.dev20220728 py38_cpu pytorch-nightly

[conda] torchvision 0.13.0 pypi_0 pypi

Another way to trigger it is to run the following in the latest nightly build:

```
python3 test/mobile/model_test/gen_test_model.py dynamic_quant_ops
```

cc @jerryzh168 @jianyuh @raghuramank100 @jamesr66a @vkuzo

| 18 |

5,110 | 82,430 |

Minor inconsistency in description of `attn_output_weights` in MultiheadAttention docs

|

module: docs, module: nn, triaged, actionable

|

### 📚 The doc issue

In the version 1.12 documentation of [MultiheadAttention](https://pytorch.org/docs/stable/generated/torch.nn.MultiheadAttention.html?highlight=multihead#torch.nn.MultiheadAttention), the last sentence of the description of the `attn_output_weights` output says:

> If **average_weights=False**, returns attention weights per head of shape [...].

I believe the correct name of the `forward` argument is `average_attn_weights`, not `average_weights`.

### Suggest a potential alternative/fix

This can be fixed by replacing `average_weights=False` with `average_attn_weights=False`.
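Here is a short sketch of the correctly named argument in use, showing the two resulting weight shapes. The sizes are arbitrary, and the example assumes a PyTorch version whose `MultiheadAttention.forward` accepts `average_attn_weights`:

```python
import torch

mha = torch.nn.MultiheadAttention(embed_dim=8, num_heads=2)
# Default (non-batch-first) layout is (L, N, E): length 5, batch 3, embedding 8.
query = torch.randn(5, 3, 8)

_, averaged = mha(query, query, query)  # average_attn_weights defaults to True
_, per_head = mha(query, query, query, average_attn_weights=False)

print(averaged.shape)  # torch.Size([3, 5, 5]), i.e. (N, L, S)
print(per_head.shape)  # torch.Size([3, 2, 5, 5]), i.e. (N, num_heads, L, S)
```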

cc @svekars @holly1238 @albanD @mruberry @jbschlosser @walterddr @kshitij12345 @saketh-are

| 1 |

5,111 | 82,419 |

The torch::deploy document is not updated

|

triaged, module: deploy

|

### 📚 The doc issue

Hi,

I was trying the tutorial example in the torch::deploy documentation, specifically [deploy with C++](https://pytorch.org/docs/stable/deploy.html#building-and-running-the-application). However, the example does not work. Here are the details.

It looks like the cause is a compilation problem in PyTorch. After a seemingly successful compilation, the `libtorch_deployinterpreter.o` file (or similar files) cannot be found in the directory, which blocks the next steps of the example. A related [compiling issue](https://github.com/pytorch/pytorch/issues/82382) shows that on Linux the compilation does not even succeed (due to a missing file).

Could you update this part of the documentation or point me to other working examples?

---

Here is the environment of my system

```

Collecting environment information...

PyTorch version: 1.13.0a0+git1a9317c

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.4 (x86_64)

GCC version: Could not collect

Clang version: 13.1.6 (clang-1316.0.21.2.5)

CMake version: version 3.19.6

Libc version: N/A

Python version: 3.9.12 (main, Apr 5 2022, 01:53:17) [Clang 12.0.0 ] (64-bit runtime)

Python platform: macOS-10.16-x86_64-i386-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] numpydoc==1.2

[pip3] torch==1.13.0a0+git1a9317c

[pip3] torchaudio==0.12.0

[pip3] torchvision==0.13.0

[conda] blas 1.0 mkl

[conda] ffmpeg 4.3 h0a44026_0 pytorch

[conda] mkl 2021.4.0 hecd8cb5_637

[conda] mkl-include 2022.0.0 hecd8cb5_105

[conda] mkl-service 2.4.0 py39h9ed2024_0

[conda] mkl_fft 1.3.1 py39h4ab4a9b_0

[conda] mkl_random 1.2.2 py39hb2f4e1b_0

[conda] numpy 1.21.5 py39h2e5f0a9_1

[conda] numpy-base 1.21.5 py39h3b1a694_1

[conda] numpydoc 1.2 pyhd3eb1b0_0

[conda] pytorch 1.12.0 py3.9_0 pytorch

[conda] torch 1.13.0a0+git1a9317c pypi_0 pypi

[conda] torchaudio 0.12.0 py39_cpu pytorch

[conda] torchvision 0.13.0 py39_cpu pytorch

```

### Suggest a potential alternative/fix

_No response_

cc @wconstab

| 0 |

5,112 | 82,417 |

[JIT] _unsafe_view returns alias when size(input) = size argument

|

oncall: jit

|

### 🐛 Describe the bug

`aten::_unsafe_view` appears to return an alias of its input when the `size` argument equals the input's size. This aliasing is not currently annotated in `native_functions.yaml`, but it appears to be intentional, based on:

https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/native/TensorShape.cpp#L2728

Filing this to track the possible issue.
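A minimal sketch for observing whether the output aliases its input is to compare the backing storages. This goes through the private `torch.ops.aten._unsafe_view` op. It assumes a PyTorch version with `Tensor.untyped_storage()` (2.x), and `shares_storage` is an illustrative helper. The result is printed rather than asserted, since the behavior may differ between versions:

```python
import torch

def shares_storage(a: torch.Tensor, b: torch.Tensor) -> bool:
    # Two tensors alias each other if they are backed by the same storage.
    return a.untyped_storage().data_ptr() == b.untyped_storage().data_ptr()

x = torch.randn(2, 3)
out = torch.ops.aten._unsafe_view(x, list(x.shape))  # size argument equal to x.size()
print("aliases input:", shares_storage(x, out))
```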

### Versions

N/A

| 0 |

5,113 | 82,397 |

Bilinear interpolation with antialiasing is slow in performance

|

module: performance, triaged

|

### 🐛 Describe the bug

Bilinear interpolation with antialiasing is significantly slower than without.

Is this intended behavior or am I missing something?

Code to reproduce

```python
import torch
import torch.nn.functional as F
from tqdm import tqdm

x = torch.randn((7, 3, 256, 256), device='cuda')
start = torch.cuda.Event(enable_timing=True)
end = torch.cuda.Event(enable_timing=True)

# Without antialiasing
start.record()
for _ in tqdm(range(10000)):
    F.interpolate(x, (64, 64), mode='bilinear')
end.record()
torch.cuda.synchronize()
print(start.elapsed_time(end))
# 310.09381103515625

# With antialiasing
start.record()
for _ in tqdm(range(10000)):
    F.interpolate(x, (64, 64), mode='bilinear', antialias=True)
end.record()
torch.cuda.synchronize()
print(start.elapsed_time(end))
# 1558.083984375
```
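For a comparison that does not depend on `tqdm` overhead or manual event bookkeeping, the same measurement can be sketched with `torch.utils.benchmark.Timer`, which handles repeated runs and CUDA synchronization. `compare_interpolate` is an illustrative helper, and the sketch falls back to CPU when CUDA is unavailable:

```python
import torch
import torch.nn.functional as F
from torch.utils import benchmark

def compare_interpolate(x: torch.Tensor, size=(64, 64)):
    # Returns {antialias_flag: Measurement} for bilinear downsampling
    results = {}
    for antialias in (False, True):
        timer = benchmark.Timer(
            stmt="F.interpolate(x, size, mode='bilinear', antialias=antialias)",
            globals={"F": F, "x": x, "size": size, "antialias": antialias},
        )
        results[antialias] = timer.blocked_autorange(min_run_time=0.2)
    return results

device = 'cuda' if torch.cuda.is_available() else 'cpu'
x = torch.randn((7, 3, 256, 256), device=device)
for antialias, m in compare_interpolate(x).items():
    print(f"antialias={antialias}: {m.median * 1e3:.3f} ms per call")
```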

### Versions

```

Collecting environment information...

PyTorch version: 1.12.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

CMake version: version 3.22.5

Libc version: glibc-2.26

Python version: 3.7.13 (default, Apr 24 2022, 01:04:09) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.188+-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: True

CUDA runtime version: 11.1.105

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 460.32.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.21.6

[pip3] torch==1.12.0+cu113

[pip3] torchaudio==0.12.0+cu113

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.13.0

[pip3] torchvision==0.13.0+cu113

[conda] Could not collect

```

cc @VitalyFedyunin @ngimel

| 4 |

5,114 | 82,382 |

Problems in built-from-source pytorch with USE_DEPLOY=1 in Ubuntu

|

triaged, module: deploy

|

### 📚 The doc issue

Hi,

I'm trying the torch::deploy tutorial example, aiming to package a model and run inference in C++. However, I ran into problems when working with a build-from-source PyTorch. The issues are as follows:

1. Build-from-source on Ubuntu fails because of a missing file

The error message is below. Note that I built a CPU-only version by setting the environment variable `export USE_CUDA=0`.

```

Building wheel torch-1.13.0a0+gitce92c1c

-- Building version 1.13.0a0+gitce92c1c

cmake --build . --target install --config Release

[1/214] Performing archive_stdlib step for 'cpython'

FAILED: torch/csrc/deploy/interpreter/cpython/src/cpython-stamp/cpython-archive_stdlib ../torch/csrc/deploy/interpreter/cpython/lib/libpython_stdlib3.8.a

cd /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter && ar -rc /home/ubuntu/pytorch/torch/csrc/deploy/interpreter/cpython/lib/libpython_stdlib3.8.a /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/arraymodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_asynciomodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/audioop.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/binascii.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_bisectmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_blake2/blake2module.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_blake2/blake2b_impl.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_blake2/blake2s_impl.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_bz2module.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/cmathmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/cjkcodecs/_codecs_cn.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/cjkcodecs/_codecs_hk.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/cjkcodecs/_codecs_iso2022.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/cjkcodecs/_codecs_jp.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/cjkcodecs/_codecs_kr.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/cjkcodecs/_codecs_tw.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_contextvarsmodule.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_cryptmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_csv.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_ctypes/_ctypes.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_ctypes/callbacks.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_ctypes/callproc.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_ctypes/stgdict.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_ctypes/cfield.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_ctypes/_ctypes_test.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_cursesmodule.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_curses_panel.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_datetimemodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/_decimal.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/basearith.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/constants.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/context.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/convolute.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/crt.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/difradix2.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/fnt.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/fourstep.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/io.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/memory.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/mpdecimal.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/numbertheory.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/sixstep.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_decimal/libmpdec/transpose.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_elementtree.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/fcntlmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/grpmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_hashopenssl.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_heapqmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_json.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_lsprof.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_lzmamodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/mathmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/md5module.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/mmapmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/cjkcodecs/multibytecodec.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_multiprocessing/multiprocessing.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_multiprocessing/semaphore.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/nismodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_opcode.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/ossaudiodev.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/parsermodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_pickle.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_posixsubprocess.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/pyexpat.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/expat/xmlparse.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/expat/xmlrole.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/expat/xmltok.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_queuemodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_randommodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/readline.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/resource.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/selectmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/sha1module.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/sha256module.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_sha3/sha3module.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/sha512module.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/socketmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/spwdmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_ssl.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_struct.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/syslogmodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/termios.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_testbuffer.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_testcapimodule.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_testimportmultiple.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_testmultiphase.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/unicodedata.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/xxlimited.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_xxtestfuzz/_xxtestfuzz.o 
/home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_xxtestfuzz/fuzzer.o /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/zlibmodule.o && /home/ubuntu/anaconda3/bin/cmake -E touch /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython-stamp/cpython-archive_stdlib

ar: /home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/build/temp.linux-x86_64-3.8//home/ubuntu/pytorch/build/torch/csrc/deploy/interpreter/cpython/src/cpython/Modules/_ctypes/_ctypes.o: No such file or directory

[3/214] Linking CXX shared library lib/libtorch_cpu.so

ninja: build stopped: subcommand failed.

```

I have also set `export USE_DEPLOY=1`, following the [`deploy` tutorial](https://pytorch.org/docs/stable/deploy.html#loading-and-running-the-model-in-c).

Any help will be appreciated!

---

Here is the system info:

```

Collecting environment information...

PyTorch version: N/A

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.6 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: version 3.20.2

Libc version: glibc-2.27

Python version: 3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.0-1068-aws-x86_64-with-glibc2.27

Is CUDA available: N/A

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 510.47.03

cuDNN version: Probably one of the following:

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn.so.8.0.5

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.0.5

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.0.5

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.0.5

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.0.5

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.0.5

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.0.5

/usr/local/cuda-11.1/targets/x86_64-linux/lib/libcudnn.so.8.0.5

/usr/local/cuda-11.1/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.0.5

/usr/local/cuda-11.1/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.0.5

/usr/local/cuda-11.1/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.0.5

/usr/local/cuda-11.1/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.0.5

/usr/local/cuda-11.1/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.0.5

/usr/local/cuda-11.1/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.0.5

/usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn.so.8.1.1

/usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.1.1

/usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.1.1

/usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.1.1

/usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.1.1

/usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.1.1

/usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.1.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] numpydoc==1.2

[pip3] torch==1.12.0

[pip3] torchaudio==0.12.0

[pip3] torchvision==0.13.0

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch

[conda] cudatoolkit 11.2.2 he111cf0_8 conda-forge

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] magma-cuda112 2.5.2 1 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-include 2022.1.0 h84fe81f_915 conda-forge

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.5 py39he7a7128_1

[conda] numpy-base 1.21.5 py39hf524024_1

[conda] numpydoc 1.2 pyhd3eb1b0_0

[conda] pytorch 1.12.0 py3.9_cpu_0 pytorch

[conda] pytorch-mutex 1.0 cpu pytorch

[conda] torchaudio 0.12.0 py39_cpu pytorch

[conda] torchvision 0.13.0 py39_cpu pytorch

```

### Suggest a potential alternative/fix

_No response_

cc @wconstab

| 0 |

5,115 | 82,377 |

masked_scatter_ is very lacking

|

module: docs, triaged, module: scatter & gather ops

|

### 📚 The doc issue

The documentation for `masked_scatter_` says

```

Tensor.masked_scatter_(mask, source):

Copies elements from source into self tensor at positions where the mask is True.

```

This suggests it might work similarly to

```

tensor[mask] = source[mask]

```

However, the actual semantics are quite different, which leads to confusion, as evidenced here: https://stackoverflow.com/questions/68675160/torch-masked-scatter-result-did-not-meet-expectations/73145411#73145411

### Suggest a potential alternative/fix

The docs should specify that `masked_scatter_` fills positions sequentially: the `i`th `True` in the mask (counting in row-major order) receives the `i`th element of `source`, not the element of `source` at the same position as that `True`.

An example contrasting the two approaches described in the linked question would also help.
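A minimal sketch of the kind of example the docs could include, with made-up tensors (the values are illustrative, not taken from the linked question). It contrasts `masked_scatter_` with boolean-index assignment on the same inputs:

```python
import torch

# Illustrative inputs: 3 True positions, and a source with 6 elements.
base = torch.zeros(2, 3)
mask = torch.tensor([[False, True, False],
                     [True, False, True]])
source = torch.arange(1.0, 7.0).reshape(2, 3)  # [[1, 2, 3], [4, 5, 6]]

# masked_scatter_: the True positions, taken in row-major order
# ((0, 1), (1, 0), (1, 2)), receive source's elements in order: 1, 2, 3.
a = base.clone().masked_scatter_(mask, source)

# Index assignment: each True position receives the source element at
# the SAME position, i.e. source[mask] == [2, 4, 6].
b = base.clone()
b[mask] = source[mask]

print(a)  # tensor([[0., 1., 0.], [2., 0., 3.]])
print(b)  # tensor([[0., 2., 0.], [4., 0., 6.]])
```

Note that `masked_scatter_` only requires `source` to have at least as many elements as there are `True` entries in `mask`; its shape does not need to line up with the mask positions.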

cc @svekars @holly1238 @mikaylagawarecki

| 1 |

5,116 | 82,357 |

ufmt and flake8 lints race

|

triaged

|

### 🐛 Describe the bug

ufmt rewrites Python source code, but it runs in parallel with flake8. As a result, flake8 errors may already be stale by the time ufmt finishes.
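The race comes from a formatter and a read-only linter working on the same files at the same time. A minimal sketch of one ordering that avoids it, assuming a hypothetical runner where formatters and linters are plain callables over a list of paths (this is not how lintrunner or the PyTorch lint configuration is actually structured):

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Formatter = Callable[[List[str]], None]       # rewrites files in place
Linter = Callable[[List[str]], List[str]]     # returns messages, read-only


def run_lints(files: List[str],
              formatters: List[Formatter],
              linters: Dict[str, Linter]) -> Dict[str, List[str]]:
    # Phase 1: formatters may change files, so run them to completion first
    # (sequentially, since two formatters could touch the same file).
    for fmt in formatters:
        fmt(files)
    # Phase 2: linters only read files, so they can run in parallel safely;
    # nothing rewrites the files underneath them anymore.
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(lint, files) for name, lint in linters.items()}
        return {name: fut.result() for name, fut in futures.items()}
```

The trade-off is some lost parallelism: linters cannot start until every formatter is done, but their reports always describe the final file contents.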

### Versions

master

| 3 |

5,117 | 82,354 |

Offer a way to really force merges via pytorchbot

|

module: ci, triaged

|

### 🚀 The feature, motivation and pitch

I had a fun situation in https://github.com/pytorch/pytorch/pull/82236. Here's the summary:

- I created PR C, and then imported it into fbcode to check test signal.

- Next, I created PRs A, B, and D, stacked as follows: A <- B <- C <- D

- Then, I merged A and B via GHF

- PR C refused to merge via GHF due to "This PR has internal changes and must be landed via Phabricator".

We tried a couple of things:

1. Resync the PR to fbcode. The resync failed because commits A and B had not yet made it into fbcode.

2. Unlink the PR from the fbcode diff and then attempt to land it via GHF. The unlink didn't seem to do anything.

What I described above probably isn't a common workflow, but it would be nice to have an "ignore all checks and force merge" button for GHF for cases where the tooling doesn't work out.

cc @seemethere @malfet @pytorch/pytorch-dev-infra

### Alternatives

Alternatively, using "unlink" on a PR should make it so that this check doesn't appear anymore. This was not the case for https://github.com/pytorch/pytorch/pull/82236 -- after unlink, the PR still could not be merged.

### Additional context

_No response_

| 1 |

5,118 | 82,324 |

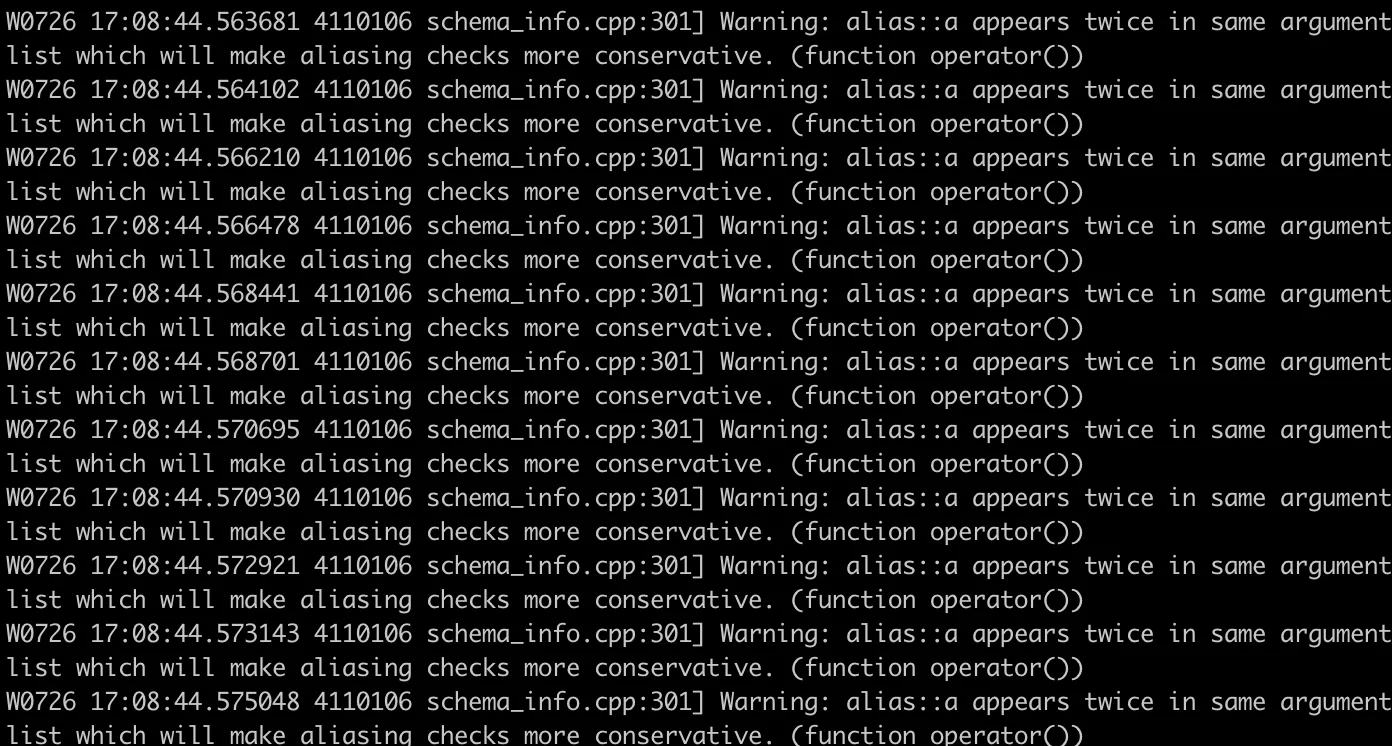

[JIT] SchemaInfo warning appears out in the wild

|

oncall: jit

|

### 🐛 Describe the bug

^ The warning about SchemaInfo having multiple input arguments with the same alias set appears in the wild, even though no ops should trigger it.

### Versions

n/a

| 0 |

5,119 | 82,318 |

test_make_fx_symbolic_exhaustive should pass dynamic ints for shape arguments

|

triaged, fx, module: dynamic shapes

|

### 🐛 Describe the bug

```

diff --git a/test/test_proxy_tensor.py b/test/test_proxy_tensor.py

index 9e22de81529..f3809fc22d9 100644

--- a/test/test_proxy_tensor.py

+++ b/test/test_proxy_tensor.py

@@ -844,6 +844,9 @@ def _test_make_fx_helper(self, device, dtype, op, tracing_mode):

except DynamicOutputShapeException as e:

self.skipTest("Dynamic output shape operation in trace")

+ print(new_f)

+ print(new_f.shape_env.guards)

+

for arg in args:

if isinstance(arg, torch.Tensor) and arg.dtype == torch.float:

arg.uniform_(0, 1)

```

then

```

python test/test_proxy_tensor.py -k test_make_fx_symbolic_exhaustive_new_zeros_cpu_float32

```

gives, for example,

```

def forward(self, args, kwargs):
    args_1, args_2, args_3, args_4, kwargs_1, kwargs_2, = fx_pytree.tree_flatten_spec([args, kwargs], self._in_spec)
    size = args_1.size(0)
    _tensor_constant0 = self._tensor_constant0
    device_1 = torch.ops.prim.device.default(_tensor_constant0); _tensor_constant0 = None
    new_zeros_default = torch.ops.aten.new_zeros.default(args_1, [2, 2, 2], dtype = torch.float64, layout = torch.strided, device = device(type='cpu'), pin_memory = False); args_1 = None
    return pytree.tree_unflatten([new_zeros_default], self._out_spec)

[]

```

The call to `new_zeros` is clearly specialized (the size is baked in as `[2, 2, 2]`), but there are no guards (the printed guard list is `[]`).

cc @ezyang @SherlockNoMad @Chillee

### Versions

master

| 2 |

5,120 | 82,316 |

Add more Vulkan operations

|

triaged, module: vulkan, ciflow/periodic

|

### 🚀 The feature, motivation and pitch

I got an error while trying to deploy my model to mobile using Vulkan:

`Could not run aten::max_pool2d_with_indices`

I also found that the list of operations supported by the Vulkan backend is quite short.

### Alternatives

_No response_

### Additional context

_No response_

cc @jeffdaily @sunway513

| 1 |

5,121 | 82,312 |

A/libc: Fatal signal 6 (SIGABRT), code -1 (SI_QUEUE) in tid 9792 (Background), pid 9674 (ample.testtorch)

|

oncall: mobile

|

### 🐛 Describe the bug

Hello. I'm running into a problem with d2go when trying to retrain a model. Building the .pt model succeeds, but when I run the model in my Android Studio project, I get this error:

```

E/libc++abi: terminating with uncaught exception of type c10::Error: isTuple()INTERNAL ASSERT FAILED at "../../../../src/main/cpp/libtorch_include/arm64-v8a/ ATen/core/ivalue_inl.h":1306, please report a bug to PyTorch. Expected Tuple but got String

Exception raised from toTuple at ../../../../src/main/cpp/libtorch_include/arm64-v8a/ATen/core/ivalue_inl.h:1306 (most recent call first):

(no backtrace available)

A/libc: Fatal signal 6 (SIGABRT), code -1 (SI_QUEUE) in tid 9792 (Background), pid 9674 (ample.testtorch)

```

A similar [question](https://github.com/pytorch/pytorch/issues/79875) was asked before, but no solution was found and the issue has been forgotten, so I decided to bring it up again😄. I am willing to share any information that helps resolve this issue. I also tried running a model trained on balloon_dataset, but got a similar error.

I have read through the source code of `ivalue_inl.h` and do not yet understand what line 1306 has to do with it, but I found the assert code on lines [1924](https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/core/ivalue_inl.h#L1924) and [1928](https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/core/ivalue_inl.h#L1928).

I suspect that either a string was passed to some field instead of a tuple when the cfg configuration file was generated, or the problem is somehow related to the wrapper itself. If I understand correctly, the trained model works well in the notebook, but once the wrapper is created, an error occurs in Java on Android. That is, loading the model with

`module = PyTorchAndroid.loadModuleFromAsset(getAssets(), "d2go_test.pt");`

fails: the error occurs at this line.

Maybe it's because I didn't call `patch_d2_meta_arch()`? For some reason I could not find this function in the PyTorch nightly version, or I didn't search well enough. I also tried different CUDA nightly builds of PyTorch, without success.

I built and trained the project in Google Colab, using pip instead of conda. This is how I set up the dependencies:

```

!pip install 'git+https://github.com/facebookresearch/detectron2.git'

!pip install 'git+https://github.com/facebookresearch/d2go'

!pip install 'git+https://github.com/facebookresearch/mobile-vision.git'

!pip uninstall torch -y

!pip uninstall torchvision -y

!pip install --pre torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/nightly/cu102 # Tried cu102 and cu116

```

Install the same packages to reproduce the error. I also tried creating my own dataset with one class (car), and created the annotation file `via_region_data.json` with this [site](https://www.robots.ox.ac.uk/~vgg/software/via/via-1.0.6.html).

I took the code directly from `d2go_beginner.ipynb`. To reproduce, just train a model in `d2go_beginner.ipynb` for ONLY one class (balloon) with `faster_rcnn_fbnetv3a_dsmask_C4.yaml` or `faster_rcnn_fbnetv3a_C4.yaml` from the example, and tell me whether the model works for you on Android. Be careful, because the instructions here say:

```

def prepare_for_launch():
    runner = GeneralizedRCNNRunner()
    cfg = runner.get_default_cfg()
    cfg.merge_from_file(model_zoo.get_config_file("faster_rcnn_fbnetv3a_C4.yaml"))  # <- uses faster_rcnn_fbnetv3a_C4.yaml
    ...

```

And in the next cell it says:

```

import copy
from detectron2.data import build_detection_test_loader
from d2go.export.exporter import convert_and_export_predictor
from d2go.utils.testing.data_loader_helper import create_detection_data_loader_on_toy_dataset
# from d2go.export.d2_meta_arch import patch_d2_meta_arch  # I commented this out
import logging

# disable all the warnings
previous_level = logging.root.manager.disable
logging.disable(logging.INFO)

patch_d2_meta_arch()  # I could not find this import in any version of PyTorch; could this be the problem? I ran the model without it.

cfg_name = 'faster_rcnn_fbnetv3a_dsmask_C4.yaml'  # <- this cell already uses faster_rcnn_fbnetv3a_dsmask_C4.yaml. Is that necessary, or were the yaml files mixed up by accident?
pytorch_model = model_zoo.get(cfg_name, trained=True, device='cpu')
...

```

Wrapper code:

```

import os
from typing import Dict, List

import torch


class Wrapper(torch.nn.Module):
    def __init__(self, model):
        super().__init__()
        self.model = model
        coco_idx_list = [1]
        self.coco_idx = torch.tensor(coco_idx_list)

    def forward(self, inputs: List[torch.Tensor]):
        x = inputs[0].unsqueeze(0) * 255
        scale = 320.0 / min(x.shape[-2], x.shape[-1])
        x = torch.nn.functional.interpolate(x, scale_factor=scale, mode='bilinear', align_corners=True, recompute_scale_factor=True)
        out = self.model(x[0])
        res: Dict[str, torch.Tensor] = {}
        res["boxes"] = out[0] / scale
        res["labels"] = torch.index_select(self.coco_idx, 0, out[1])
        res["scores"] = out[2]
        return inputs, [res]


# predictor_path is defined in an earlier notebook cell
orig_model = torch.jit.load(os.path.join(predictor_path, "model.jit"))
wrapped_model = Wrapper(orig_model)
scripted_model = torch.jit.script(wrapped_model)
scripted_model.save("/content/d2go_test.pt")

```

Feel free to ask any questions; I will answer what I can.

P.S. Sorry for my English:)

### Versions

Collecting environment information...

PyTorch version: 1.13.0.dev20220726+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

CMake version: version 3.22.5

Libc version: glibc-2.26

Python version: 3.7.13 (default, Apr 24 2022, 01:04:09) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.188+-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: True

CUDA runtime version: 11.1.105

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 460.32.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.6

[pip3] pytorch-lightning==1.6.0.dev0

[pip3] torch==1.13.0.dev20220726+cu102

[pip3] torchaudio==0.13.0.dev20220726+cu102

[pip3] torchmetrics==0.9.3

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.13.0

[pip3] torchvision==0.14.0.dev20220726+cu102

[conda] Could not collect

| 15 |

5,122 | 82,308 |

torch.einsum gets wrong results randomly when training with multi-gpu

|

oncall: distributed

|

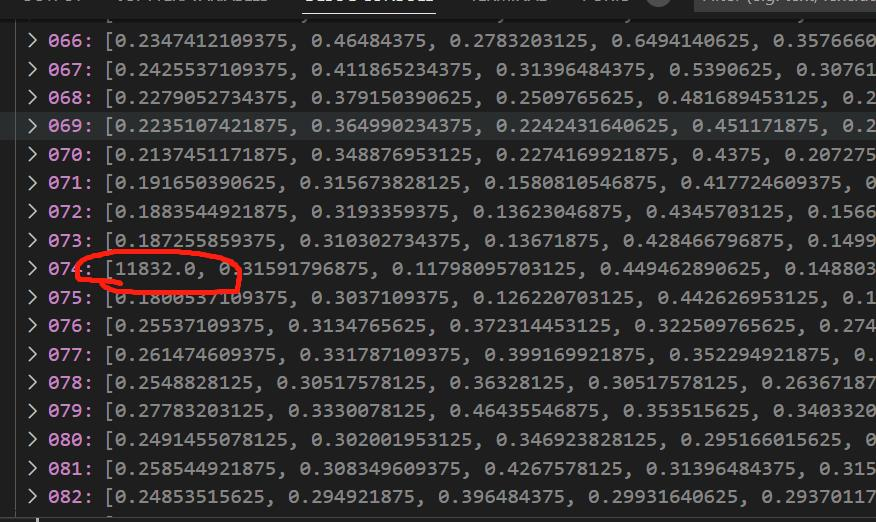

### 🐛 Describe the bug

When training on multiple GPUs, the loss sometimes grows rapidly at random points. While debugging, I found that one of the elements in the result matrix becomes very large when `torch.einsum` is executed. The problem does not appear when DistributedDataParallel is not used.

I implemented multi-GPU training with DistributedDataParallel by following the official tutorial. Apart from this problem everything works, and the loss drops normally in the first few epochs.

Here is the code:

``` python

scores: torch.Tensor = torch.einsum("bdhn,bdhm->bhnm", query, key) / dim**0.5

```

Both `query` and `key` are correct; here is part of the first result:

I ran the code once more, and here is the second result:

By the way, the data type is half precision. Here is my multi-GPU training script:

``` python

import os
from typing import Dict, List

import dill
import torch
import torch.distributed as dist
from torch.utils.data import DataLoader, DistributedSampler

# Training, MatchingDDP, losses, NewGtDataset, Lambda1, evaluation,
# cache_path, batch_size and notify are defined elsewhere in my project.


def setup(rank, world_size):
    os.environ["MASTER_ADDR"] = "127.0.0.1"
    os.environ["MASTER_PORT"] = "12355"
    os.environ["NCCL_SOCKET_IFNAME"] = "docker0"
    os.environ["GLOO_SOCKET_IFNAME"] = "docker0"
    dist.init_process_group("nccl", rank=rank, world_size=world_size)


def train(rank, world_size, epoch, lr, graphs_pr: Dict, output: List):
    print(f"Training on rank {rank}.")
    setup(rank, world_size)
    try:
        torch.cuda.set_device(rank)
        with open(os.path.join(cache_path, "traning.plk"), "rb") as f:
            training: Training = dill.load(f)
        training.notify_callback = notify
        training.model = torch.nn.SyncBatchNorm.convert_sync_batchnorm(training.model)
        training.to(rank)

        # Train loss
        training.model = MatchingDDP(training.model, device_ids=[rank])
        log_loss = losses.LandmarkLogLoss(training.model)
        log_loss2 = losses.LaneLogLoss()
        train_loss = losses.ProductLoss([log_loss, log_loss2])

        # Dataset
        with open(os.path.join(cache_path, "dataset.plk"), "rb") as f:
            dataset: NewGtDataset = dill.load(f)
        dataset.load(os.path.join(cache_path, "dataset"))

        # Dataloader
        sampler = DistributedSampler(dataset, num_replicas=world_size, rank=rank)
        dataloader = DataLoader(dataset, batch_size, sampler=sampler, pin_memory=True)
        dataloader.collate_fn = Lambda1(training.model, dataset).f

        # Evaluator and update_pr
        with open(os.path.join(cache_path, "evaluator.plk"), "rb") as f:
            evaluator: evaluation.SubmapEvaluator = dill.load(f)

        def update_pr(data):
            graphs_pr.update(evaluator.eval_graphs_pr(data, training.model))

        training.fit(
            train_objective=(dataloader, train_loss),
            epochs=epoch,
            scheduler_name="ConstantLR",
            optimizer_params={"lr": lr},
            use_amp=True,
            callback=update_pr,
            checkpoint_path="saved_models" if rank == 0 else None,
            checkpoint_save_steps=40,
            checkpoint_save_total_limit=1,
            rank=rank,
        )

        if rank == 0:
            training.to("cpu")
            output.append(training.model.state_dict())
            output.append(training.epoch)
            output.append(training.global_step)
    except Exception as e:
        notify(e)
    finally:
        dist.destroy_process_group()

```
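To narrow down whether the large element comes from `einsum` itself or from its inputs, one option is to compare the `"bdhn,bdhm->bhnm"` contraction from the snippet earlier against an equivalent batched `matmul` computed in float32. A minimal debugging sketch, not a fix; it assumes `dim` is the size of the `d` axis, which the issue does not show:

```python
import torch


def einsum_scores(query: torch.Tensor, key: torch.Tensor) -> torch.Tensor:
    # Same contraction as in the issue: for every batch b and head h,
    # scores[b, h, n, m] = sum_d query[b, d, h, n] * key[b, d, h, m].
    dim = query.shape[1]  # assumption: `dim` is the size of the d axis
    return torch.einsum("bdhn,bdhm->bhnm", query, key) / dim**0.5


def matmul_scores(query: torch.Tensor, key: torch.Tensor) -> torch.Tensor:
    # Equivalent batched matmul: (b, h, n, d) @ (b, h, d, m) -> (b, h, n, m).
    dim = query.shape[1]
    q = query.permute(0, 2, 3, 1)
    k = key.permute(0, 2, 1, 3)
    return torch.matmul(q, k) / dim**0.5


def max_abs_diff_vs_fp32(query: torch.Tensor, key: torch.Tensor) -> float:
    # Compare einsum in the inputs' own dtype (e.g. float16) against a
    # float32 matmul reference. Rounding differences stay small, while a
    # corrupted element shows up as a large outlier.
    ref = matmul_scores(query.float(), key.float())
    out = einsum_scores(query, key).float()
    return (out - ref).abs().max().item()
```

Calling `max_abs_diff_vs_fp32(query, key)` on each rank right after a step where the loss jumps would show whether `einsum` disagrees with the reference on the exact same inputs.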

### Versions

pytorch = 1.12.0

python = 3.9.12

cuda = 10.2

devices: TITAN X (Pascal) * 8

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 1 |

5,123 | 82,306 |

when distribute training load pretrain model error

|

oncall: distributed, module: serialization

|

### 🐛 Describe the bug

```python

def resnet50(pretrained=False, **kwargs):
    """Constructs a ResNet-50 model.

    Args:
        pretrained (bool): If True, returns a model pre-trained on ImageNet
    """
    model = ResNet(Bottleneck, [3, 4, 6, 3], **kwargs)
    local_rank = kwargs.get("local_rank", -1)
    if pretrained:
        pretrained_model = model_zoo.load_url(model_urls['resnet50'])
        state = model.state_dict()
        for key in state.keys():
            if key in pretrained_model.keys():
                state[key] = pretrained_model[key]
        model.load_state_dict(state)
    return model

```

When training with multiple cards on one host, I try to load the pretrained ResNet-50 model with the `resnet50` function above, like this:

```python

model = models.resnet50(pretrained=True, num_classes=kernel_num,local_rank=args.local_rank)

```

Here are the errors:

```

Traceback (most recent call last):

File "./train.py", line 443, in <module>

main(args)

File "./train.py", line 289, in main

model = models.resnet50(pretrained=True, num_classes=kernel_num,local_rank=args.local_rank)

File "/home/siit/ccprjnet/.GitNet/models/fpn_resnet.py", line 291, in resnet50

pretrained_model = model_zoo.load_url(model_urls['resnet50'])

File "/home/siit/.local/lib/python3.6/site-packages/torch/hub.py", line 509, in load_state_dict_from_url

return torch.load(cached_file, map_location=map_location)

File "/home/siit/.local/lib/python3.6/site-packages/torch/serialization.py", line 593, in load

return _legacy_load(opened_file, map_location, pickle_module, **pickle_load_args)

File "/home/siit/.local/lib/python3.6/site-packages/torch/serialization.py", line 763, in _legacy_load

magic_number = pickle_module.load(f, **pickle_load_args)

_pickle.UnpicklingError: unpickling stack underflow

Traceback (most recent call last):

File "/usr/lib/python3.6/runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "/usr/lib/python3.6/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/home/siit/.local/lib/python3.6/site-packages/torch/distributed/launch.py", line 263, in <module>

main()

File "/home/siit/.local/lib/python3.6/site-packages/torch/distributed/launch.py", line 259, in main

cmd=cmd)

subprocess.CalledProcessError: Command '['/usr/bin/python3.6', '-u', './train.py', '--local_rank=1']' returned non-zero exit status 1.

work = _default_pg.barrier() RuntimeError: Connection reset by peer

```
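Separately from the `UnpicklingError` (which this does not address), the key-copying loop in `resnet50` above can be factored into a small helper. A minimal sketch; `load_matching_weights` is a hypothetical name, and the shape check is an addition not present in the original loop, useful when a layer is resized through `num_classes`:

```python
import torch
from torch import nn


def load_matching_weights(model: nn.Module, pretrained_state: dict) -> list:
    """Copy each pretrained tensor whose key and shape match the model.

    Hypothetical helper: returns the model keys left at their initial values.
    """
    state = model.state_dict()
    skipped = []
    for key, value in list(state.items()):
        src = pretrained_state.get(key)
        if src is not None and src.shape == value.shape:
            state[key] = src  # same key and shape: take the pretrained tensor
        else:
            skipped.append(key)  # missing or resized (e.g. a new head)
    model.load_state_dict(state)
    return skipped
```

Inside `if pretrained:`, this would be called as `load_matching_weights(model, model_zoo.load_url(model_urls['resnet50']))`, and printing the returned list shows which layers kept their random initialization.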

### Versions

```python

def resnet50(pretrained=False, **kwargs):
    model = ResNet(Bottleneck, [3, 4, 6, 3], **kwargs)
    if pretrained:
        pretrained_model = model_zoo.load_url(model_urls['resnet50'])
        state = model.state_dict()
        for key in state.keys():
            if key in pretrained_model.keys():
                state[key] = pretrained_model[key]
        model.load_state_dict(state)
    return model

```

When training with multiple cards on one host, I try to load the pretrained ResNet-50 model with the `resnet50` function above, like this:

```

model = models.resnet50(pretrained=True,)

```

Here are the errors:

```
Traceback (most recent call last):
  File "./train.py", line 443, in <module>
    main(args)
  File "./train.py", line 289, in main
    model = models.resnet50(pretrained=True, num_classes=kernel_num,local_rank=args.local_rank)
  File "/models/fpn_resnet.py", line 291, in resnet50
    pretrained_model = model_zoo.load_url(model_urls['resnet50'])
  File "local/lib/python3.6/site-packages/torch/hub.py", line 509, in load_state_dict_from_url
    return torch.load(cached_file, map_location=map_location)
  File ".local/lib/python3.6/site-packages/torch/serialization.py", line 593, in load
    return _legacy_load(opened_file, map_location, pickle_module, **pickle_load_args)
  File "/.local/lib/python3.6/site-packages/torch/serialization.py", line 763, in _legacy_load
    magic_number = pickle_module.load(f, **pickle_load_args)
_pickle.UnpicklingError: unpickling stack underflow

Traceback (most recent call last):
  File "/usr/lib/python3.6/runpy.py", line 193, in _run_module_as_main
    "__main__", mod_spec)
  File "/usr/lib/python3.6/runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File ".local/lib/python3.6/site-packages/torch/distributed/launch.py", line 263, in <module>
    main()
  File "/.local/lib/python3.6/site-packages/torch/distributed/launch.py", line 259, in main
    cmd=cmd)
subprocess.CalledProcessError: Command '['/usr/bin/python3.6', '-u', './train.py', '--local_rank=1']' returned non-zero exit status 1.
```

Is it not possible to load a pretrained model during distributed training?
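A frequently suggested cause of `_pickle.UnpicklingError: unpickling stack underflow` from `torch.load` is a corrupted or incomplete cached checkpoint file; deleting it from the hub cache so that it is downloaded again is a common first check. In multi-process launches it is also common to let only rank 0 fetch the weights while the other ranks wait at a barrier, so that no rank reads the cache file while another may still be writing it. Whether either applies to this report is an assumption; the report does not confirm the cause. A minimal sketch of the rank-0-first ordering, using a hypothetical helper `rank_zero_first` that takes the rank and the barrier as arguments so it can run without a process group:

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def rank_zero_first(fn: Callable[[], T], rank: int,
                    barrier: Callable[[], None]) -> T:
    """Run `fn` on rank 0 before any other rank runs it.

    Hypothetical helper. Every rank calls `barrier` exactly once: rank 0
    after finishing `fn`, the other ranks before starting it, so they only
    touch the cached checkpoint once rank 0 has finished with it.
    """
    if rank == 0:
        result = fn()  # only rank 0 downloads and writes the cache file
        barrier()      # release the ranks that are waiting
    else:
        barrier()      # wait until rank 0 has finished
        result = fn()  # the cached file should now exist and only be read
    return result
```

In the training script this could be called as `rank_zero_first(lambda: model_zoo.load_url(model_urls['resnet50']), dist.get_rank(), dist.barrier)`, where `dist` is `torch.distributed` and the process group is already initialized. Only `dist.get_rank` and `dist.barrier` are real PyTorch APIs in that call; the helper itself is illustrative.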

Python: 3.6

PyTorch version: 1.5.1

NCCL version: nccl-local-repo-ubuntu1804-2.11.4-cuda10.2_1.0-1_amd64

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @mruberry

| 1 |

5,124 | 82,303 |

Race condition between torch.tensor's view and /= (/= returns incorrect result)

|

triaged, module: partial aliasing

|

### 🐛 Describe the bug

I found a race condition on some CPU families. The in-place operation `v /= v[-1]` with `v = [a, b, c, d]` nondeterministically produces results such as

`v = [a/d, b, c/d, 1]`

or

`v = [a/d, b/d, c, 1]`

My (uneducated) guess is that at some random point `v[-1]` is divided by itself and becomes 1, and this new value is then used as the divisor for the rest of the calculation (i.e. the remaining elements are effectively computed as `v /= 1`).
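The hypothesis above can be illustrated with a pure-Python model. This is an assumption-laden sketch, not PyTorch's actual CPU kernel: because `B` is a view of `A`, an in-place divide that re-reads the divisor from memory gives different answers depending on the order in which elements (or parallel chunks) are processed. The function names are hypothetical. Snapshotting the divisor before writing, which an out-of-place `A / B` effectively does, removes the order dependence.

```python
from typing import List, Sequence


def divide_by_last_aliased(v: List[float], order: Sequence[int]) -> List[float]:
    """Model of in-place `v /= v[-1]` that re-reads the divisor on every step.

    `order` is the sequence in which elements happen to be processed, standing
    in for the nondeterministic scheduling of parallel chunks.
    """
    for i in order:
        v[i] = v[i] / v[-1]  # divisor is read from v, which may already be updated
    return v


def divide_by_last_snapshot(v: List[float]) -> List[float]:
    """Same operation, but the divisor is copied before any element is written."""
    d = v[-1]  # snapshot, so later writes to v[-1] cannot affect it
    for i in range(len(v)):
        v[i] = v[i] / d
    return v


if __name__ == "__main__":
    print(divide_by_last_aliased([4.0, 6.0, 8.0, 2.0], [0, 1, 2, 3]))  # [2.0, 3.0, 4.0, 1.0]
    print(divide_by_last_aliased([4.0, 6.0, 8.0, 2.0], [0, 2, 3, 1]))  # [2.0, 6.0, 4.0, 1.0]
    print(divide_by_last_snapshot([4.0, 6.0, 8.0, 2.0]))               # [2.0, 3.0, 4.0, 1.0]
```

With `[a, b, c, d] = [4.0, 6.0, 8.0, 2.0]`, the order `[0, 2, 3, 1]` reproduces the reported pattern `[a/d, b, c/d, 1]`, and `[0, 1, 3, 2]` reproduces `[a/d, b/d, c, 1]`. In PyTorch terms, dividing by a copy of the divisor (for example `A /= B.clone()`) removes the memory overlap in the same way, though that has not been tested on the affected machines here.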

Some of my CPUs show the problem and some don't (see the Versions section below).

At first I thought it was a problem with the new E/P-core design of the i9-12900K. Then I found it on a dual-socket Xeon system too, and finally on a single-socket 2nd-generation AMD EPYC CPU. However, conventional desktop CPUs don't show the problem.

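```python
import torch

A: torch.Tensor = torch.full((24, 75, 24, 24), 2, dtype=torch.float32)
# B is a view of A's last channel, so the in-place division below
# writes to the same memory it reads the divisor from
B: torch.Tensor = A[:, -1, :, :].unsqueeze(1)
A /= B
# Every element should be 2 / 2 = 1
print(A[:, 0, 0, 0])
```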

(EDIT: A = A / B doesn't show this problem.)

I expect the following (this is the result on an older i7-5820K):

```
tensor([1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,
        1., 1., 1., 1., 1., 1.])
```

but on i9-12900K CPUs I get:

```
tensor([1., 2., 1., 1., 2., 1., 1., 2., 1., 1., 2., 1., 1., 1., 1., 1., 2., 1.,
        1., 2., 1., 1., 2., 1.])
```

### Versions

**Bad computer: i9-12900K**

Collecting environment information...

PyTorch version: 1.11.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Fedora release 36 (Thirty Six) (x86_64)

GCC version: (GCC) 12.1.1 20220507 (Red Hat 12.1.1-1)

Clang version: 14.0.0 (Fedora 14.0.0-1.fc36)

CMake version: version 3.22.2

Libc version: glibc-2.35

Python version: 3.10.4 (main, Apr 6 2022, 17:03:02) [GCC 11.2.1 20220127 (Red Hat 11.2.1-9)] (64-bit runtime)

Python platform: Linux-5.17.7-300.fc36.x86_64-x86_64-with-glibc2.35

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy==0.942

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] torch==1.11.0

[pip3] torch-tb-profiler==0.3.1

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] Could not collect

**Good computer: i7-5820K**

Collecting environment information...

PyTorch version: 1.11.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Fedora release 36 (Thirty Six) (x86_64)

GCC version: (GCC) 12.1.1 20220507 (Red Hat 12.1.1-1)

Clang version: 14.0.0 (Fedora 14.0.0-1.fc36)

CMake version: version 3.22.2

Libc version: glibc-2.35

Python version: 3.10.4 (main, Apr 2 2022, 19:00:15) [GCC 11.2.1 20211203 (Red Hat 11.2.1-7)] (64-bit runtime)

Python platform: Linux-5.17.9-300.fc36.x86_64-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: NVIDIA GeForce GTX 980 Ti

Nvidia driver version: 510.68.02

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy==0.942

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] torch==1.11.0

[pip3] torch-tb-profiler==0.4.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] Could not collect

**Bad computer: dual E5-2640**

Collecting environment information...

PyTorch version: 1.11.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Fedora release 36 (Thirty Six) (x86_64)

GCC version: (GCC) 12.1.1 20220507 (Red Hat 12.1.1-1)

Clang version: 14.0.0 (Fedora 14.0.0-1.fc36)

CMake version: version 3.22.2

Libc version: glibc-2.35

Python version: 3.10.4 (main, Apr 6 2022, 17:03:02) [GCC 11.2.1 20220127 (Red Hat 11.2.1-9)] (64-bit runtime)

Python platform: Linux-5.17.7-300.fc36.x86_64-x86_64-with-glibc2.35

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy==0.942

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] torch==1.11.0

[pip3] torch-tb-profiler==0.3.1

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] Could not collect

**Good computer: i7-3930K**

Collecting environment information...

PyTorch version: 1.11.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Fedora release 36 (Thirty Six) (x86_64)

GCC version: (GCC) 12.1.1 20220507 (Red Hat 12.1.1-1)

Clang version: 14.0.0 (Fedora 14.0.0-1.fc36)

CMake version: version 3.22.2

Libc version: glibc-2.35

Python version: 3.10.4 (main, Apr 6 2022, 17:03:02) [GCC 11.2.1 20220127 (Red Hat 11.2.1-9)] (64-bit runtime)

Python platform: Linux-5.17.7-300.fc36.x86_64-x86_64-with-glibc2.35

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy==0.942

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] torch==1.11.0

[pip3] torch-tb-profiler==0.3.1

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] Could not collect

**Bad computer: AMD EPYC 7282**

Collecting environment information...

PyTorch version: 1.11.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Fedora release 36 (Thirty Six) (x86_64)

GCC version: (GCC) 12.1.1 20220507 (Red Hat 12.1.1-1)

Clang version: 14.0.0 (Fedora 14.0.0-1.fc36)

CMake version: version 3.22.2

Libc version: glibc-2.35

Python version: 3.10.4 (main, Apr 6 2022, 17:03:02) [GCC 11.2.1 20220127 (Red Hat 11.2.1-9)] (64-bit runtime)

Python platform: Linux-5.17.7-300.fc36.x86_64-x86_64-with-glibc2.35

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy==0.942

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.5

[pip3] torch==1.11.0

[pip3] torch-tb-profiler==0.3.1

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] Could not collect

cc @svekars @holly1238

| 3 |

5,125 | 82,293 |

pytorch's checkpoint_wrapper does not save memory while fairscale's checkpoint_wrapper saves huge memory

|

high priority, oncall: distributed, module: checkpoint

|

### 🐛 Describe the bug

I'm testing activation checkpointing on FSDP models. To my surprise, PyTorch's native `checkpoint_wrapper` does not seem to work at all: it saves no memory whatsoever. When I switched to fairscale's `checkpoint_wrapper`, it saved a huge amount of memory.
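For context on how much memory activation checkpointing is expected to save, the usual idealized count is a useful baseline. Assume (an assumption, not a description of `checkpoint_wrapper` internals) that every layer produces one equally sized activation. Without checkpointing, a network of n layers keeps all n activations for the backward pass; with checkpointed segments of k layers, it keeps only about ⌈n/k⌉ segment inputs plus the k activations of the one segment being recomputed, which is minimized near k = √n. The hypothetical helper below just evaluates that count:

```python
import math


def stored_activations(n_layers: int, segment: int = 0) -> int:
    """Idealized number of layer activations alive during the backward pass.

    Assumes every layer output has the same size.
    segment == 0: no checkpointing, all n_layers outputs are kept.
    segment > 0: only the input of each checkpointed segment is kept, and one
    segment's `segment` outputs are recomputed (and alive) at a time.
    """
    if segment <= 0:
        return n_layers
    return math.ceil(n_layers / segment) + segment


if __name__ == "__main__":
    print(stored_activations(100))      # 100
    print(stored_activations(100, 10))  # 20
    best_k = min(range(1, 101), key=lambda k: stored_activations(100, k))
    print(best_k)                       # 10
```

Real savings also depend on parameter, gradient and optimizer memory, which checkpointing does not reduce, so this count only bounds the activation part.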

To reproduce this issue, use the following `main.py`:

```python

import functools
import os
import torch
import torch.nn as nn
import torch.distributed as dist
from functools import partial
from fsdp_examples.unet import UNetModel
from torch.distributed.fsdp.fully_sharded_data_parallel import (
    FullyShardedDataParallel as FSDP,
    CPUOffload
)
from torch.cuda.amp import autocast
from torch.optim import AdamW
from torch.distributed.fsdp.wrap import (
    size_based_auto_wrap_policy,
    transformer_auto_wrap_policy,
)
from torch.distributed.fsdp.sharded_grad_scaler import ShardedGradScaler
from torch.nn.parallel import DistributedDataParallel as DDP