Serial Number

int64 1

6k

| Issue Number

int64 75.6k

112k

| Title

stringlengths 3

357

| Labels

stringlengths 3

241

⌀ | Body

stringlengths 9

74.5k

⌀ | Comments

int64 0

867

|

|---|---|---|---|---|---|

5,201 | 81,722 |

Inconsistent naming convention for end of enum in DispatchKey

|

triaged, module: dispatch

|

### 🐛 Describe the bug

Here is a clear contradiction:

```

EndOfBackendKeys = PrivateUse3Bit,

```

but

```

EndOfFunctionalityKeys, // End of functionality keys.

```

This means for iterating through backend keys you should <= the ending, but for functionality keys you should < the ending. It is inconsistent.

----

Let's take a step back. There are a few problems with our naming conventions here.

1. It's not clear if end is inclusive or exclusive

2. It's not clear if start is inclusive or exclusive

3. It's not clear if length includes the zero-valued invalid key

We should establish clear definitions for each of these (possibly renaming if the existing names are ambiguous) and then apply this uniformly.

My preference is that:

1. End is exclusive

2. Start is inclusive

3. Length includes zero-valued key

cc @bdhirsh

### Versions

master

| 0 |

5,202 | 81,717 |

PyTorch Embedding Op with max_norm is not working as expected

|

module: cuda, triaged, module: norms and normalization, module: embedding, bug

|

### 🐛 Describe the bug

The behavior of renorm inside the `max_norm` will renorm the weight to the norm same as `max_norm` when it is specified.

However, under GPU and if the input is multi-dimensional (like dim = 3). Its behavior is not expected and also weight will be renormed to the wrong norm (like less than `max_norm`)

To repo this here is code snippet:

```

import torch

input_size = [36, 15, 48]

embedding_size = 28

embedding_dim = 5

embedding = torch.nn.Embedding(embedding_size, embedding_dim, max_norm=2.0, norm_type=2.0).cuda()

weight_norm = torch.linalg.norm(embedding.weight, dim=-1).clone()

original_weight_norm = weight_norm.clone()

print("weight_norm", original_weight_norm)

weight_norm[weight_norm > 2.0] = 2.0

print("expected weight_norm", weight_norm)

for i in range(4):

input = torch.randint(0, embedding_size, tuple(input_size)).cuda()

torch.nn.functional.embedding(input, embedding.weight, max_norm=2.0, norm_type=2.0)

actual_weight_norm = torch.linalg.norm(embedding.weight, dim=-1)

print("actual weight ", actual_weight_norm)

delta = actual_weight_norm - weight_norm

threshold = 0.01

print(torch.allclose(weight_norm, actual_weight_norm), "norm more than threshold ", threshold, delta[torch.abs(delta) > threshold])

```

One example output is like:

```

weight_norm tensor([2.0776, 2.0551, 2.6955, 1.9832, 1.6644, 1.8127, 2.5646, 2.5205, 1.6097,

3.0849, 1.6583, 1.6348, 2.7448, 1.6877, 1.7610, 1.9241, 0.8003, 0.7706,

2.2592, 2.0385, 2.6742, 1.5317, 2.1481, 0.9371, 1.6462, 1.7186, 1.2157,

2.8872], device='cuda:0', grad_fn=<CloneBackward0>)

expected weight_norm tensor([2.0000, 2.0000, 2.0000, 1.9832, 1.6644, 1.8127, 2.0000, 2.0000, 1.6097,

2.0000, 1.6583, 1.6348, 2.0000, 1.6877, 1.7610, 1.9241, 0.8003, 0.7706,

2.0000, 2.0000, 2.0000, 1.5317, 2.0000, 0.9371, 1.6462, 1.7186, 1.2157,

2.0000], device='cuda:0', grad_fn=<IndexPutBackward0>)

actual weight tensor([2.0000, 2.0000, 2.0000, 1.9832, 1.6644, 1.8127, 2.0000, 1.5870, 1.6097,

1.2966, 1.6583, 1.6348, 2.0000, 1.6877, 1.7610, 1.9241, 0.8003, 0.7706,

1.7705, 2.0000, 1.8975, 1.5317, 2.0000, 0.9371, 1.6462, 1.7186, 1.2157,

1.8775], device='cuda:0', grad_fn=<LinalgVectorNormBackward0>)

False norm more than threshold 0.01 tensor([-0.4130, -0.7034, -0.2295, -0.1025, -0.1225], device='cuda:0',

grad_fn=<IndexBackward0>)

```

Sometimes, you need to run it more than once to repo but it is usually within 2-3 runs.

### Versions

Run on the latest night build and Linux and cuda 11.0

cc @ngimel

| 2 |

5,203 | 81,703 |

Dispatcher debug/logging mode

|

triaged, module: dispatch

|

### 🚀 The feature, motivation and pitch

I recently ran into a situation where I was trying to understand why the dispatcher dispatched the way it had, and it (re)occurred to me that having a way of turning on chatty dispatcher mode would be pretty useful. What I'd like to see is a log something like (maybe done more compactly):

```

call aten::add

argument 1 keys: [Dense], [CPUBit]

argument 2 keys: [Python, PythonSnapshotTLS], [MetaBit]

merged keys: [Python, PythonSnapshotTLS, ...], [CPUBit, MetaBit]

include, exclude set: [], [Autograd]

dispatching to: DenseCPU

```

for every operator going through the dispatcher.

Probably this should be done using the profiler. cc @robieta

### Alternatives

_No response_

### Additional context

_No response_

| 4 |

5,204 | 81,692 |

Failed to static link latest cuDNN while compiling

|

module: build, module: cudnn, triaged

|

### 🐛 Describe the bug

I tried to compile 1.12 with `TORCH_CUDA_ARCH_LIST="6.0;6.1;7.0;7.5;7.5+PTX" CUDNN_LIBRARY_PATH=/usr/lib/x86_64-linux-gnu/ CUDNN_INCLUDE_DIR=/usr/include/ USE_CUDNN=1 USE_STATIC_CUDNN=1 USE_STATIC_NCCL=1 CAFFE2_STATIC_LINK_CUDA=1 python setup.py bdist_wheel`.

It cannot find the cuDNN installed on my system:

<img width="587" alt="截屏2022-07-19 下午7 58 23" src="https://user-images.githubusercontent.com/73142299/179744748-92517f55-a1ee-49cb-bdf8-49d94c41fd7b.png">

I located the problem in file `cmake/Modules_CUDA_fix/FindCUDNN.cmake`

```

option(CUDNN_STATIC "Look for static CUDNN" OFF)

if (CUDNN_STATIC)

set(CUDNN_LIBNAME "libcudnn_static.a")

else()

set(CUDNN_LIBNAME "cudnn")

endif()

set(CUDNN_LIBRARY $ENV{CUDNN_LIBRARY} CACHE PATH "Path to the cudnn library file (e.g., libcudnn.so)")

if (CUDNN_LIBRARY MATCHES ".*cudnn_static.a" AND NOT CUDNN_STATIC)

message(WARNING "CUDNN_LIBRARY points to a static library (${CUDNN_LIBRARY}) but CUDNN_STATIC is OFF.")

endif()

find_library(CUDNN_LIBRARY_PATH ${CUDNN_LIBNAME}

PATHS ${CUDNN_LIBRARY}

PATH_SUFFIXES lib lib64 cuda/lib cuda/lib64 lib/x64)

```

This code would look for `libcudnn_static.a` if `USE_STATIC_CUDNN` was set.

However, as follow:

<img width="1422" alt="截屏2022-07-19 下午8 02 37" src="https://user-images.githubusercontent.com/73142299/179745670-1d27d21a-eca7-402d-b94e-c2c0701b3757.png">

Newer version of cuDNN does not have the `libcudnn_static.a`.

### Versions

Collecting environment information...

PyTorch version: N/A

Is debug build: N/A

CUDA used to build PyTorch: N/A

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.6 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: version 3.22.1

Libc version: glibc-2.17

Python version: 3.7.13 (default, Mar 29 2022, 02:18:16) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-4.19.91-011.ali4000.alios7.x86_64-x86_64-with-debian-buster-sid

Is CUDA available: N/A

CUDA runtime version: 10.2.89

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: N/A

Versions of relevant libraries:

[pip3] numpy==1.21.5

[pip3] torch==1.12.0

[conda] mkl 2022.0.1 h06a4308_117 defaults

[conda] mkl-include 2022.0.1 h06a4308_117 defaults

[conda] numpy 1.21.5 py37hf838250_3 defaults

[conda] numpy-base 1.21.5 py37h1e6e340_3 defaults

[conda] torch 1.12.0 pypi_0 pypi

cc @malfet @seemethere @csarofeen @ptrblck @xwang233

| 5 |

5,205 | 81,684 |

Message exchange failure when perform alltoallv (cpus)

|

high priority, triage review, oncall: distributed, module: c10d

|

### 🐛 Describe the bug

When performing an alltoallv message exchange on cpus results in the following error:

--------------------------------------------------------------------

terminate called after throwing an instance of 'gloo::EnforceNotMet'

what(): [enforce fail at ../third_party/gloo/gloo/transport/tcp/pair.cc:490] op.preamble.length <= op.nbytes. 881392472 vs 881392448

---------------------------------------------------------------------

This error is reproducible and a standalone python script is included in this Issue report submission.

This is a very simple script which uses 10 machines/10-processes to reproduce this above mentioned error.

I used 10 machine cluster to reproduce this error repeatedly... however I guess the same may happen on a single machine using 10 processes.

This script performs the following tasks

1. create the processgroup with 10 ranks

2. exchanges no of int64's which will be exchanged, this no. is used on the receiving side to allocate buffers.

3. once the buffers are allocated alltoallv (which is included in the standalone script) is performed to exchange int64's.

4. The error happens when performing alltoallv using cpus.

Some observations about this error:

1. This error happens when sending large messages. The same piece of logic works when smaller messages were sent.

2. Of the 10 processes/rank some of these ranks fail with the above error message. However, the no. of ranks failing is unpredictable... suggesting there is somekind of buffer overwrite or buffer corruption.

3. This standalone script is created by mimic'ing some of the functionality in the application I am working on at the moment.

4. The hardcoded no. of int64's is one such instance when this error is deterministically reproducible.

Please use the following standalone script

```python

import numpy as np

import argparse

import torch

import os

import time

from datetime import timedelta

import torch.distributed as dist

from timeit import default_timer as timer

from datetime import timedelta

def alltoall_cpu(rank, world_size, output_tensor_list, input_tensor_list):

input_tensor_list = [tensor.to(torch.device('cpu')) for tensor in input_tensor_list]

for i in range(world_size):

dist.scatter(output_tensor_list[i], input_tensor_list if i == rank else [], src=i)

def alltoallv_cpu(rank, world_size, output_tensor_list, input_tensor_list):

senders = []

for i in range(world_size):

if i == rank:

output_tensor_list[i] = input_tensor_list[i].to(torch.device('cpu'))

else:

sender = dist.isend(input_tensor_list[i].to(torch.device('cpu')), dst=i, tag=i)

senders.append(sender)

for i in range(world_size):

if i != rank:

dist.recv(output_tensor_list[i], src=i, tag=i)

torch.distributed.barrier()

def splitdata_exec(rank, world_size):

int64_counts = np.array([

[0, 110105856, 110093280, 110116272, 110097840, 110111128, 110174059, 110087008, 110125040, 110087400],#0

[110174059, 0, 110158903, 110160317, 110149564, 110170899, 110166538, 110139263, 110163283, 110154040],#1

[110251793, 110254110, 0, 110243087, 110249640, 110270594, 110248594, 110249172, 110277587, 110242484],#2

[110191018, 110171210, 110170046, 0, 110167632, 110165475, 110174676, 110158908, 110171609, 110158631],#3

[110197278, 110198689, 110193780, 110198301, 0, 110208663, 110184046, 110194628, 110200308, 110168337],#4

[110256343, 110244546, 110248884, 110255858, 110236621, 0, 110247954, 110246921, 110247543, 110243309],#5

[110113348, 109915976, 109891208, 109908240, 109916552, 109917544, 0, 109893592, 109930888, 109895912],#6

[110024052, 109995591, 110003242, 110013125, 110002038, 110013278, 110003047, 0, 110015547, 109981915],#7

[109936439, 109948208, 109937391, 109936696, 109930888, 109941325, 109940259, 109917662, 0, 109917002],#8

[110050394, 110029327, 110036926, 110043437, 110021664, 110051453, 110036305, 110039768, 110054324, 0],#9

])

start = timer()

sizes = int64_counts[rank]

print('[Rank: ', rank, '] outgoing int64 counts: ', sizes)

# buffer sizes send/recv

send_counts = list(torch.Tensor(sizes).type(dtype=torch.int64).chunk(world_size))

recv_counts = list(torch.zeros([world_size], dtype=torch.int64).chunk(world_size))

alltoall_cpu(rank, world_size, recv_counts, send_counts)

#allocate buffers

recv_nodes = []

for i in recv_counts:

recv_nodes.append(torch.zeros(i.tolist(), dtype=torch.int64))

#form the outgoing message

send_nodes = []

for i in range(world_size):

# sending

d = np.ones(shape=(sizes[i]), dtype=np.int64)*rank

send_nodes.append(torch.from_numpy(d))

alltoallv_cpu(rank, world_size, recv_nodes, send_nodes)

end = timer()

for i in range(world_size):

data = recv_nodes[i].numpy()

assert np.all(data == np.ones(data.shape, dtype=np.int64)*i)

print('[Rank: ', rank, '] Done with the test...')

def multi_dev_proc_init(params):

rank = int(os.environ["RANK"])

dist.init_process_group("gloo", rank=rank, world_size=params.world_size, timeout=timedelta(seconds=5*60))

splitdata_exec(rank, params.world_size)

if __name__ == "__main__":

parser = argparse.ArgumentParser(description='Construct graph partitions')

parser.add_argument('--world-size', help='no. of processes to spawn', default=1, type=int, required=True)

params = parser.parse_args()

multi_dev_proc_init(params)

```

### Versions

The output of the python collect_env.py is as follows

```

Collecting environment information...

PyTorch version: 1.8.0a0+ae5c2fe

Is debug build: False

CUDA used to build PyTorch: 11.0

ROCM used to build PyTorch: N/A

OS: Amazon Linux 2 (x86_64)

GCC version: (GCC) 7.3.1 20180712 (Red Hat 7.3.1-9)

Clang version: 7.0.1 (Amazon Linux 2 7.0.1-1.amzn2.0.2)

CMake version: version 3.18.2

Libc version: glibc-2.2.5

Python version: 3.7.9 (default, Aug 27 2020, 21:59:41) [GCC 7.3.1 20180712 (Red Hat 7.3.1-9)] (64-bit runtime)

Python platform: Linux-4.14.200-155.322.amzn2.x86_64-x86_64-with-glibc2.2.5

Is CUDA available: True

CUDA runtime version: 11.0.221

GPU models and configuration:

GPU 0: NVIDIA A10G

GPU 1: NVIDIA A10G

GPU 2: NVIDIA A10G

GPU 3: NVIDIA A10G

GPU 4: NVIDIA A10G

GPU 5: NVIDIA A10G

GPU 6: NVIDIA A10G

GPU 7: NVIDIA A10G

Nvidia driver version: 510.47.03

cuDNN version: Probably one of the following:

/usr/local/cuda-10.1/targets/x86_64-linux/lib/libcudnn.so.7.6.5

/usr/local/cuda-10.2/targets/x86_64-linux/lib/libcudnn.so.7.6.5

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn.so.8.0.4

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.0.4

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.0.4

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.0.4

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.0.4

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.0.4

/usr/local/cuda-11.0/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.0.4

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.19.3

[pip3] pytorch-ignite==0.4.8

[pip3] torch==1.8.0a0

[pip3] torchaudio==0.8.2

[pip3] torchvision==0.9.2+cu111

[conda] Could not collect

```

cc @ezyang @gchanan @zou3519 @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 2 |

5,206 | 81,682 |

Python operator registration API for subclasses

|

feature, triaged, module: dispatch, module: __torch_dispatch__

|

### 🚀 The feature, motivation and pitch

We have python op registration by @anjali411 but this is only allowed for dispatch keys. A nice extension would be to allow tensor subclasses to be passed in lieu of dispatch key and get the same behavior. This would be an alternative to defining `__torch_dispatch__` but would have better performance in the passthrough case as we could bypass calling into Python in that case.

### Alternatives

_No response_

### Additional context

_No response_

cc @Chillee @ezyang @zou3519 @albanD @samdow

| 0 |

5,207 | 81,681 |

FakeTensor consolidated strategy for in_kernel_invocation and dispatch keys

|

triaged, module: fakeTensor

|

### 🐛 Describe the bug

@eellison has done a lot of good work fixing segfaults related to meta tensors incorrectly reporting themselves as cpu tensors (due to fake tensor) and then causing in c++ code that is subsequently expecting the tensor in question to actually be a cpu tensor and have real data. This issue is an attempt to explicate the underlying conceptual strategy for how to decide whether or not to treat a fake tensor as a cpu tensor or not.

The fundamental problem is we have two opposing requirements:

* We wish fake tensors to mimic CPU/CUDA tensors as much as possible, so that a fake tensor behaves much the same way as their real brethren. This applies to both to device tests (e.g., looking at device, or is_cpu) as well as to dispatch key dispatch (relevant for any functionality keys, esp autocast)

* We don't want any code that directly accesses pointer data to operate on fake tensors, as there isn't any data and you will segfault.

Here is a simple heuristic for how to distinguish:

* In Python, direct data access never happens, we advertise as CPU/CUDA

* In C++, where direct data access can happen, we advertise as Meta

But this is inaccurate on a number of fronts:

* When we autocast, we want to get the CPU/CUDA autocast logic on meta tensors, otherwise the dtype is not accurate. But we don't set CPU/CUDA dispatch key on fake tensor, so we don't get the corresponding autocast logic. Repro:

```

import torch

from torch._subclasses.fake_tensor import FakeTensorMode

def f():

x = torch.randn(2, 3, requires_grad=True)

y = torch.randn(3, 4)

with torch.autocast('cpu'):

r = x @ y

return r

with FakeTensorMode():

r = f()

print(r)

# prints FakeTensor(cpu, torch.Size([2, 4]), torch.float32)

# should be bfloat32

```

* When we query for device on a FakeTensor, this unconditionally dispatches to `torch.ops.aten.device`, no matter if subclass dispatch is disabled. This means that without some other logic, we will report the wrong device type in C++. This is why `in_kernel_invocation_manager` exists in the fake tensor implementation today

It would be good to have a clearer delineation of the boundary here. This is also intimately related to https://github.com/pytorch/pytorch/pull/81471 where FunctionalWrapperTensors don't have full implementation and also must avoid hitting Dense kernels.

It seems to me that the right way to do this is to focus on "direct data access". Our primary problem is avoiding direct access to data pointers. This happens only at a very specific part of the dispatch key order (Dense and similar). So we just need to make sure these keys are not runnable, either by removing the Dense from the functionality dispatch key set, or by adding another dispatch key in front of all of these keys to "block" execution of those keys (this is how Python key and wrapper subclass works).

PS What's the difference between wrapper subclass and fake tensor? At this point, it seems primarily because fake tensor "is a" meta tensor, which makes it marginally more efficient (one dynamic allocation rather than two) and means in C++ we can go straight to meta tensor implementation in the back... it's kind of weak, sorry @eellison for making you suffer with is-a haha.

cc @bdhirsh @Chillee

### Versions

master

| 3 |

5,208 | 81,680 |

Provide an option to disable CUDA_GCC_VERSIONS

|

module: build, triaged

|

### 🚀 The feature, motivation and pitch

I really understand the motivations of recent additions to CUDA_GCC_VERSIONS that were done in

* https://github.com/pytorch/pytorch/commit/86deecd7be248016d413e9ad6f5527a70c88b454

* https://github.com/pytorch/pytorch/pull/63230

I do think they are a step in the right direction toward improving the situation (xref https://github.com/pytorch/pytorch/issues/55267)

I'm wondering if you could add an option, as an environment variable, for us to ignore it. The motivation is that I believe that it is possible to patch up the GCC 10 compiler with a small patch to help nvidia compatibility.

https://github.com/conda-forge/ctng-compilers-feedstock/blob/main/recipe/meta.yaml#L22=

### Alternatives

I can patch things out.... However, I would rather have the override done by other packagers that assert they know what they are doing.

You can also patch things out with a sed command

```

sed -i '/ (MINIMUM_GCC_VERSION,/d' ${SP_DIR}/torch/utils/cpp_extension.py

```

### Additional context

The other motivation is that soon enough, the GCC 11 compilers should be compatible with nvidia.

I also believe that 10.4 is compatible with the nvcc

cc @malfet @seemethere

| 5 |

5,209 | 81,678 |

Export quantized shufflenet_v2_x0_5 to ONNX

|

module: onnx, triaged, onnx-triaged

|

### 🐛 Describe the bug

Fail to export quantized shufflenet_v2_x0_5 to ONNX using the following code:

```python

import io

import numpy as np

import torch

import torch.utils.model_zoo as model_zoo

import torch.onnx

import torchvision.models.quantization as models

torch_model = models.shufflenet_v2_x0_5(pretrained=True, quantize=True)

torch_model.eval()

batch_size = 1

input_float = torch.zeros(1, 3, 224, 224)

input_tensor = input_float

torch_out = torch.jit.trace(torch_model, input_tensor)

# Export the model

torch.onnx.export(torch_model, # model being run

input_tensor, # model input (or a tuple for multiple inputs)

"shufflenet_v2_x0_5_qdq.onnx", # where to save the model (can be a file or file-like object)

export_params=True, # store the trained parameter weights inside the model file

opset_version=16, # the ONNX version to export the model to

do_constant_folding=True, # whether to execute constant folding for optimization

input_names = ['input'], # the model's input names

output_names = ['output'], # the model's output names

dynamic_axes={'input' : {0 : 'batch_size'}, # variable length axes

'output' : {0 : 'batch_size'}})

```

Stacktrace:

```text

/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torchvision/models/_utils.py:208: UserWarning: The parameter 'pretrained' is deprecated since 0.13 and will be removed in 0.15, please use 'weights' instead.

warnings.warn(

/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torchvision/models/_utils.py:223: UserWarning: Arguments other than a weight enum or `None` for 'weights' are deprecated since 0.13 and will be removed in 0.15. The current behavior is equivalent to passing `weights=ShuffleNet_V2_X0_5_QuantizedWeights.IMAGENET1K_FBGEMM_V1`. You can also use `weights=ShuffleNet_V2_X0_5_QuantizedWeights.DEFAULT` to get the most up-to-date weights.

warnings.warn(msg)

/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/_patch_torch.py:67: UserWarning: The shape inference of prim::TupleConstruct type is missing, so it may result in wrong shape inference for the exported graph. Please consider adding it in symbolic function. (Triggered internally at /opt/conda/conda-bld/pytorch_1658041842671/work/torch/csrc/jit/passes/onnx/shape_type_inference.cpp:1874.)

torch._C._jit_pass_onnx_node_shape_type_inference(

Traceback (most recent call last):

File "/home/skyline/Projects/python-playground/ml/ModelConversion/torch_quantizied_model_to_onnx.py", line 20, in <module>

torch.onnx.export(torch_model, # model being run

File "/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/utils.py", line 479, in export

_export(

File "/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/utils.py", line 1411, in _export

graph, params_dict, torch_out = _model_to_graph(

File "/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/utils.py", line 1054, in _model_to_graph

graph = _optimize_graph(

File "/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/utils.py", line 624, in _optimize_graph

graph = _C._jit_pass_onnx(graph, operator_export_type)

File "/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/utils.py", line 1744, in _run_symbolic_function

return symbolic_fn(g, *inputs, **attrs)

File "/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/symbolic_opset13.py", line 716, in conv2d_relu

input, input_scale, _, _ = symbolic_helper.dequantize_helper(g, q_input)

File "/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/symbolic_helper.py", line 1235, in dequantize_helper

unpacked_qtensors = _unpack_tuple(qtensor)

File "/home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torch/onnx/symbolic_helper.py", line 167, in _unpack_tuple

raise RuntimeError(

RuntimeError: ONNX symbolic expected node type `prim::TupleConstruct`, got `%423 : Tensor(*, *, *, *) = onnx::Slice(%x.8, %419, %422, %411), scope: __module.stage2/__module.stage2.1 # /home/skyline/miniconda3/envs/torch-nightly-py39/lib/python3.9/site-packages/torchvision/models/quantization/shufflenetv2.py:42:0

`

```

### Versions

PyTorch version: 1.13.0.dev20220717

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04 LTS (x86_64)

GCC version: (Ubuntu 11.2.0-19ubuntu1) 11.2.0

Clang version: 14.0.6

CMake version: version 3.22.1

Libc version: glibc-2.35

Python version: 3.9.12 (main, Jun 1 2022, 11:38:51) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.10.102.1-microsoft-standard-WSL2-x86_64-with-glibc2.35

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.1

[pip3] torch==1.13.0.dev20220717

[pip3] torchaudio==0.13.0.dev20220717

[pip3] torchvision==0.14.0.dev20220717

[conda] blas 1.0 mkl

[conda] cpuonly 2.0 0 pytorch-nightly

[conda] cudatoolkit 10.2.89 hfd86e86_1

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.23.1 pypi_0 pypi

[conda] numpy-base 1.22.3 py39hf524024_0

[conda] pytorch 1.13.0.dev20220717 py3.9_cpu_0 pytorch-nightly

[conda] pytorch-mutex 1.0 cpu pytorch-nightly

[conda] torchaudio 0.13.0.dev20220717 py39_cpu pytorch-nightly

[conda] torchvision 0.14.0.dev20220717 py39_cpu pytorch-nightly

| 4 |

5,210 | 81,669 |

Register refs for CompositeImplicitAutograd ops as decompositions

|

triaged, module: primTorch

|

There are some ops that explicitly don't have decompositions registered because they are CompositeImplicitAutograd

https://github.com/pytorch/pytorch/blob/bf36d8b987b2ef2fd9cb26bae3acc29c03b05072/test/test_ops.py#L1538-L1567

However, it is still possible to observe them in inference mode (or possibly if CompositeImplicitAutograd is overridden by a different kernel)

```python

from torch.testing._internal.logging_tensor import capture_logs_with_logging_tensor_mode

with capture_logs_with_logging_tensor_mode() as logs:

with torch.inference_mode():

a = torch.ones(2, 2)

a.reshape(-1)

print('\n'.join(logs))

# $0 = torch._ops.aten.ones.default([2, 2], dtype=torch.float32, device=device(type='cpu'), pin_memory=False)

# $1 = torch._ops.aten.reshape.default($0, [-1])

```

When registering these ops as decompositions, to appease the test that detects that we have indeed registered it in the table, we may need to specify what op it decomposes into:

https://github.com/pytorch/pytorch/blob/443b13fa232c52bddf20726a6da040d8957a3c49/torch/testing/_internal/common_methods_invocations.py#L744-L745

cc @ezyang @mruberry @ngimel

| 6 |

5,211 | 81,667 |

[Tracker] AO migration of quantization from `torch.nn` to `torch.ao.nn`

|

oncall: quantization, low priority, triaged

|

## Motivation

There are several locations under the `torch.nn` that are related to the quantization. In order to reduce the cluttering and "takeover" by the quantization, the nn modules that are relevant are being migrated to `torch.ao`.

## Timeline

### Upcoming deadlines

- [x] 08/2022: Migration of the code from `torch.nn` to `torch.ao.nn`. At this point both location could be used, while the old location would have `from torch.ao... import ...`

- [x] 08/2022: Importing from the `torch.nn` would show a deprecation warning

- [ ] PyTorch 1.14 ~~1.13~~: Documentation updated from old locations to AO locations

- [ ] PyTorch 1.14 ~~1.13~~: Tutorials updated from old locations to AO locations

- [ ] PyTorch 1.14 ~~1.13~~: The deprecation warning is shown

- [ ] PyTorch 1.14+: The deprecation error is shown

- [ ] PyTorch 1.14+: The `torch.nn` location is cleaned up and not usable anymore.

### TODOs

- [ ] All docstrings within `torch.ao` are up to date (classes and functions)

- Note: If not cleaned up, these will generate a lot of duplicates under the `generated`

- Example: https://github.com/pytorch/pytorch/blob/015b05af18b78ca9c77c997bc277eec66b5b1542/torch/ao/nn/quantized/dynamic/modules/conv.py#L22

- [ ] The documentation in the `docs` is only referring to the `torch.ao` for quantization related stuff

- Note: There are a lot of `:noindex:` in the documentation that need to be cleaned up

- Note: There are a lot of `.. py::module::` in the documentation that need to be cleaned up

- Example: https://github.dev/pytorch/pytorch/blob/015b05af18b78ca9c77c997bc277eec66b5b1542/docs/source/quantization-support.rst#L410

- [ ] Tutorials that are using the `torch.ao`

- [ ] https://pytorch.org/tutorials/intermediate/realtime_rpi.html

- [ ] https://pytorch.org/tutorials/advanced/dynamic_quantization_tutorial.html

- [ ] https://pytorch.org/tutorials/intermediate/dynamic_quantization_bert_tutorial.html

- [ ] https://pytorch.org/tutorials/intermediate/quantized_transfer_learning_tutorial.html

- [ ] https://pytorch.org/tutorials/advanced/static_quantization_tutorial.html

- [ ] Blog posts

- Note: THis is one of the blog posts: https://pytorch.org/blog/introduction-to-quantization-on-pytorch/

### Complete

- [x] `torch.nn.quantized` → `torch.ao.nn.quantized`

- [x] [`torch.nn.quantized.functional` → `torch.ao.nn.quantized.functional`](https://github.com/pytorch/pytorch/pull/78712)

- [x] [`torch.nn.quantized.modules` → `torch.ao.nn.quantized.modules`](https://github.com/pytorch/pytorch/pull/78713)

- [x] [`torch.nn.quantized.dynamic` → `torch.ao.nn.quantized.dynamic`](https://github.com/pytorch/pytorch/pull/78714)

- [x] [`torch.nn.quantized._reference` → `torch.ao.nn.quantized._reference`](https://github.com/pytorch/pytorch/pull/78715)

- [x] [`torch.nn.quantizable` → `torch.ao.nn.quantizable`](https://github.com/pytorch/pytorch/pull/78717)

- [x] [`torch.nn.qat` → `torch.ao.nn.qat`](https://github.com/pytorch/pytorch/pull/78716)

- [x] `torch.nn.qat.modules` → `torch.ao.nn.qat.modules`

- [x] `torch.nn.qat.dynamic` → `torch.ao.nn.qat.dynamic`

- [x] `torch.nn.intrinsic` → `torch.ao.nn.intrinsic`

- [x] [`torch.nn.intrinsic.modules` → `torch.ao.nn.intrinsic.modules`](https://github.com/pytorch/pytorch/pull/84842)

- [x] [`torch.nn.intrinsic.qat` → `torch.ao.nn.intrinsic.qat`](https://github.com/pytorch/pytorch/pull/86171)

- [x] [`torch.nn.intrinsic.quantized` → `torch.ao.nn.intrinsic.quantized`](https://github.com/pytorch/pytorch/pull/86172)

- [x] `torch.nn.intrinsic.quantized.modules` → `torch.ao.nn.intrinsic.quantized.modules`

- [x] `torch.nn.intrinsic.quantized.dynamic` → `torch.ao.nn.intrinsic.quantized.dynamic`

## Blockers

1. Preserving the blame history

1. Currently, we are pushing for the internal blame history preservation, as the sync between internal and external history post-migration proves to be non-trivial

cc @jerryzh168 @jianyuh @raghuramank100 @jamesr66a @vkuzo @jgong5 @Xia-Weiwen @leslie-fang-intel @anjali411 @albanD

| 2 |

5,212 | 81,654 |

[packaging] Conda install missing python local version label (+cu123 or +cpu)

|

oncall: releng, triaged

|

### 🐛 Describe the bug

I'm installing pytorch through Conda then using poetry in my projects.

The issue is that when I use the recommended installation method for pytorch (`conda install -y -c pytorch pytorch==1.11.0 cudatoolkit=11.3`), I end up with:

`conda list`: `pytorch 1.11.0 py3.7_cuda11.3_cudnn8.2.0_0 pytorch`.

`pip list`: `torch 1.11.0`.

My issue is that conda installed torch `1.11.0+cu113` but did not include the local version label (`+cu113`), so then when I use pip or poetry, it thinks it needs to replace torch `1.11.0` by `1.11.0+cu113`.

I use this dirty hack to fix the package version of torch:

```bash

mv /[...]/conda/lib/python3.7/site-packages/torch-1.11.0-py3.7.egg-info/ /[...]/conda/lib/python3.7/site-packages/torch-1.11.0+cu113-py3.7.egg-info/

sed -i -E 's/(Version: [^+]+)\n/\1+cu113\n/' /opt/conda/lib/python3.7/site-packages/torch-1.11.0+cu113-py3.7.egg-info/PKG-INFO

```

### Versions

PyTorch version: 1.11.0

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.3 LTS (x86_64)

GCC version: Could not collect

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.17

Python version: 3.7.13 (default, Mar 29 2022, 02:18:16) [GCC 7.5.0] (64-bit runtime)

| 0 |

5,213 | 81,651 |

optimize_for_mobile has an issue with constant operations at the end of a loop

|

oncall: mobile

|

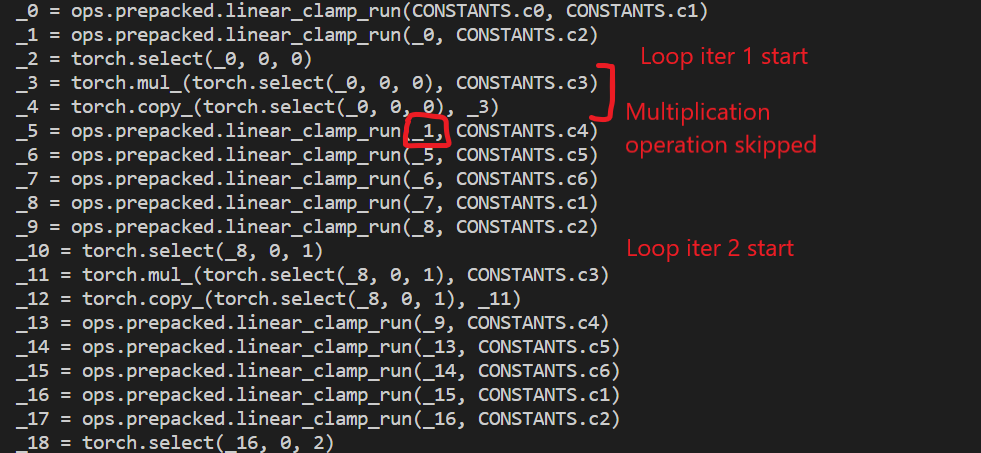

### 🐛 Describe the bug

I've been working on a model that I want to optimize for mobile, but when optimizing the model for mobile, I ran into an issue with an operation at the end of a loop. I'll use the code example below to show the issue:

```

import torch

from torch import nn

from torch.utils.mobile_optimizer import optimize_for_mobile

class testModel(nn.Module):

def __init__(self):

super(testModel, self).__init__()

# Input is 5, output is 5

self.m = nn.ModuleList([nn.Linear(5, 5) for i in range(5)])

# Forward takes noise as input and returns 5 outputs

# from the network

def forward(self, X):

# the output is initially all ones

Y = torch.ones((5))

# array to hold the outputs

out = []

# Iterate 5 times to get 5 outputs

for i in range(0, 5):

# Send the inputs through the blocks

for b in self.m:

Y = b(Y)

# Save the ith output

out.append(Y[i])

# Add a constant to the ith value of Y. This

# is what breaks the optimized model

Y[i] *= 2

return torch.stack(out)

def main():

# Create a new network

model = testModel()

# Trash input

X = torch.zeros((5))

# Get the network output

Y = model(X)

# Create torch script form of the model

ts_model = torch.jit.trace(model, X)

ts_model_mobile = optimize_for_mobile(ts_model)

# Get the output from the torch script models

Y_ts_model = ts_model(X)

Y_ts_model_mobile = ts_model_mobile(X)

print(ts_model_mobile.code)

# What are the outputs from the model?

print("Original: ", Y)

print("Optimized: ", Y_ts_model)

print("Mobile Optimized: ", Y_ts_model_mobile)

# The output of the model should be the same as the

# output from the optimized models

assert torch.all(Y.eq(Y_ts_model)), "Torch script different from original model"

assert torch.all(Y.eq(Y_ts_model_mobile)), "Mobile torch script different from original model"

if __name__=='__main__':

main()

```

If you run the code, you would expect the output of the torch script model to be the same as the output of the original model, but the outputs of the models are slightly off. The original traced module works perfectly fine, but the mobile script runs into an issue where the output is slightly off for all values in the output vector (excluding the first). Taking a look at the mobile torch script code, I think I see what the issue is:

At the beginning of each loop, it applies the constant multiplication operation. The first operation is applied at _3 and _4. This operation should be applied after the loop instead of at the beginning.

Another issue (which I think is the main problem) is after the multiplication operation is performed on the Y tensor (at _3 and _4), the value is never used. As seen in _5, the _1 tensor is used which is the tensor before the constant operation is applied. Instead, the forward method at _5 should probably be using _4.

### Versions

torch: 1.12.0

| 2 |

5,214 | 81,650 |

RFC: auto-generated plain Tensor argument only sparse primitives

|

module: sparse, triaged

|

### 🐛 Describe the bug

When we say `at::add(sparse_x, sparse_y)`, this is an operator that takes in two sparse tensors and produces a sparse tensor. This requires a backend compiler to have a model of what it means to be a sparse tensor. Sometimes, this is inconvenient for a backend; the backend would rather have the input all be in terms of dense inputs.

We can help by generating sparse prims that are expressed entirely in terms of dense tensors. So for example, the sparse variant of at::add above would be `sparse_prims::add(self_sparse_dim: int, self_dense_dim: int, self_size: int[], self_indices: Tensor, self_values: Tensor, other_sparse_dim: int, other_dense_dim: int, other_size: int[], other_indices: Tensor, other_values: Tensor) -> (int, int, int[], Tensor, Tensor)`. Essentially, each of the substantive fields in the sparse tensor (sparse dim, dense dim, size, indices and values) has been inlined into the function argument. The new function signature is Tensors only and doesn't have any explicit sparse tensors.

Some notes:

* In many situations, a sparse operation isn't supported in full generality. For example, the add signature above suggests that it is ok to mix different amounts of sparse/dense dims in addition. In actuality, they have to be the same (so the second pair of sparse dense dims could be deleted.) This simplification cannot be done mechanically, you have to know about the preconditions for the function. But we could imagine further decomposing these prims by hand into simpler versions, eliminating the need for backends to do this error checking.

* You need separate sparse prim for multiple dispatch; e.g. dense-sparse addition needs yet another prim. sparse-dense addition should decompose into dense-sparse and thus not require another prim.

* AOTDispatch would be responsible for unpacking and repacking the dense tensors into sparse tensors.

* This trick applies to all TensorImpl subclasses which ultimately backend to dense tensors

We could also *not* do this and force passes and compilers to know how to deal with sparse tensors directly.

cc @nikitaved @pearu @cpuhrsch @amjames, please tag anyone else relevant

cc @bdhirsh

### Versions

master

| 2 |

5,215 | 81,649 |

Idiom for PrimTorch refs for Tensor methods

|

triaged, module: primTorch

|

### 🐛 Describe the bug

Right now if you want to write a ref for a Tensor method, there's no precedent for how to do this. Some of these right now are getting dumped directly in torch._refs which is wrong (since torch._refs.foo should only exist if torch.foo exists, but some methods do not exist as functions) and getting remapped with `torch_to_refs_map`

The goal would be to create some sort of synthetic class or module for tensor methods, and then automatically map tensor methods to that class, so that TorchRefsMode works out of the box with a new tensor without having to route through a special case mapping.

### Versions

master

cc @ezyang @mruberry @ngimel

| 0 |

5,216 | 81,648 |

`sparse_coo.to_dense()` produces different results between CPU and CUDA backends for boolean non-coalesced inputs.

|

module: sparse, triaged

|

### 🐛 Describe the bug

As per title. To reproduce, run the following:

```python

In [1]: import torch

In [2]: idx = torch.tensor([[1, 1, 1, 2, 2, 2], [2, 2, 1, 3, 3, 3]], dtype=torch.long)

In [3]: val = torch.tensor([True, False, True, False, False, True])

In [4]: s = torch.sparse_coo_tensor(idx, val, size=(10, 10))

In [5]: torch.all(s.to('cuda').to_dense() == s.to_dense().to('cuda'))

Out[5]: tensor(False, device='cuda:0')

```

The CPU result seems correct, so I assume a parallelization issue.

### Versions

Current master.

cc @nikitaved @pearu @cpuhrsch @amjames

| 4 |

5,217 | 81,635 |

Windows Debug binaries crash on forward: assert fail on IListRefIterator destructor

|

oncall: jit

|

### 🐛 Describe the bug

libtorch 1.12 CUDA 11.6 on windows

I load a saved jit model, run it with the Release binary and it works.

If instead I compile and link with Debug binaries, at the 'forward' call i got an assert fail.

Line 405 of IListRef.h

In the trace i read a lot of box-unbox and a call to 'materialize'.

I believe the crash happens at the end of the forward, bceause some time has passed and the ram has been filled by some Gbs.

### Versions

[pip3] numpy==1.23.1

[pip3] torch==1.12.0+cu116

[pip3] torchaudio==0.12.0+cu116

[pip3] torchvision==0.13.0+cu116

[conda] Could not collect

| 1 |

5,218 | 81,626 |

DISABLED test_profiler (test_jit.TestJit)

|

oncall: jit, module: flaky-tests, skipped

|

Platforms: linux

This test was disabled because it is failing in CI. See [recent examples](https://hud.pytorch.org/flakytest?name=test_profiler&suite=test_jit.TestJit&file=test_jit.py) and the most recent trunk [workflow logs](https://github.com/pytorch/pytorch/runs/7381856543).

Over the past 3 hours, it has been determined flaky in 1 workflow(s) with 1 red and 1 green.

| 6 |

5,219 | 81,625 |

[bug] the output shape from torch::mean and torch::var is different in libtorch

|

module: cpp, triaged

|

### 🐛 Describe the bug

~~~

#include <torch/torch.h>

#include <ATen/ATen.h>

torch::Tensor x = torch::randn({3,4,5});

at::IntArrayRef dim{{0,2}};

std::cout << x.mean(dim, 1).sizes() << std::endl;

/*

[1, 4, 1]

*/

std::cout << x.var(dim, 1).sizes() << std::endl;

/*

[4]

*/

~~~

the shape of output from mean and var is not compatible.

### Versions

libtorch: 1.11.0+cu113

cc @jbschlosser

| 0 |

5,220 | 81,622 |

[Distributed] test_dynamic_rpc_existing_rank_can_communicate_with_new_rank_cuda fails in caching allocator

|

oncall: distributed

|

### 🐛 Describe the bug

Code to reproduce the issue:

```python

python distributed/rpc/test_tensorpipe_agent.py -v -k test_dynamic_rpc_existing_rank_can_communicate_with_new_rank_cuda

```

Output:

```python

INFO:torch.distributed.nn.jit.instantiator:Created a temporary directory at /tmp/tmpce6ewbo1

INFO:torch.distributed.nn.jit.instantiator:Writing /tmp/tmpce6ewbo1/_remote_module_non_scriptable.py

test_dynamic_rpc_existing_rank_can_communicate_with_new_rank_cuda (__main__.TensorPipeTensorPipeAgentRpcTest) ... INFO:numba.cuda.cudadrv.driver:init

INFO:torch.testing._internal.common_distributed:Started process 0 with pid 64064

INFO:torch.testing._internal.common_distributed:Started process 1 with pid 64065

INFO:torch.testing._internal.common_distributed:Started process 2 with pid 64066

INFO:torch.testing._internal.common_distributed:Started process 3 with pid 64067

INFO:torch.distributed.nn.jit.instantiator:Created a temporary directory at /tmp/tmpj5jtkv1l

INFO:torch.distributed.nn.jit.instantiator:Writing /tmp/tmpj5jtkv1l/_remote_module_non_scriptable.py

INFO:torch.distributed.nn.jit.instantiator:Created a temporary directory at /tmp/tmp3xq_65qx

INFO:torch.distributed.nn.jit.instantiator:Writing /tmp/tmp3xq_65qx/_remote_module_non_scriptable.py

INFO:torch.distributed.nn.jit.instantiator:Created a temporary directory at /tmp/tmp0oc80wck

INFO:torch.distributed.nn.jit.instantiator:Writing /tmp/tmp0oc80wck/_remote_module_non_scriptable.py

INFO:torch.distributed.nn.jit.instantiator:Created a temporary directory at /tmp/tmpp47jqr8l

INFO:torch.distributed.nn.jit.instantiator:Writing /tmp/tmpp47jqr8l/_remote_module_non_scriptable.py

INFO:torch.testing._internal.common_distributed:Starting event listener thread for rank 1

INFO:torch.testing._internal.common_distributed:Starting event listener thread for rank 0

INFO:torch.testing._internal.common_distributed:Starting event listener thread for rank 2

INFO:torch.testing._internal.common_distributed:Starting event listener thread for rank 3

INFO:torch.distributed.distributed_c10d:Added key: store_based_barrier_key:1 to store for rank: 0

INFO:torch.distributed.distributed_c10d:Added key: store_based_barrier_key:1 to store for rank: 1

INFO:torch.distributed.distributed_c10d:Added key: store_based_barrier_key:1 to store for rank: 3

INFO:torch.distributed.distributed_c10d:Rank 3: Completed store-based barrier for key:store_based_barrier_key:1 with 4 nodes.

INFO:torch.distributed.distributed_c10d:Added key: store_based_barrier_key:1 to store for rank: 2

INFO:torch.distributed.distributed_c10d:Rank 1: Completed store-based barrier for key:store_based_barrier_key:1 with 4 nodes.

INFO:torch.distributed.distributed_c10d:Rank 2: Completed store-based barrier for key:store_based_barrier_key:1 with 4 nodes.

INFO:torch.distributed.distributed_c10d:Rank 0: Completed store-based barrier for key:store_based_barrier_key:1 with 4 nodes.

terminate called after throwing an instance of 'c10::Error'

what(): 0 <= device && static_cast<size_t>(device) < device_allocator.size() INTERNAL ASSERT FAILED at "/workspace/src/pytorch/c10/cuda/CUDACachingAllocator.cpp":1602, please report a bug to PyTorch. Allocator not initialized for device 1: did you call init?

Exception raised from malloc at /workspace/src/pytorch/c10/cuda/CUDACachingAllocator.cpp:1602 (most recent call first):

frame #0: c10::Error::Error(c10::SourceLocation, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) + 0x6c (0x7f63d94b96cc in /workspace/src/pytorch/torch/lib/libc10.so)

frame #1: c10::detail::torchCheckFail(char const*, char const*, unsigned int, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 0xfa (0x7f63d948f12c in /workspace/src/pytorch/torch/lib/libc10.so)

frame #2: c10::detail::torchInternalAssertFail(char const*, char const*, unsigned int, char const*, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&) + 0x53 (0x7f63d94b73e3 in /workspace/src/pytorch/torch/lib/libc10.so)

...

```

Unsure, if the test is executed in an unsupported way or why CI isn't seeing this issue.

### Versions

Current master build at [fe7262329c](https://github.com/pytorch/pytorch/commit/fe7262329c6ae702df185bdbeac702e0d2edc123) on a node with 8x A100.

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 1 |

5,221 | 81,620 |

PyTorch 1.12 cu113 Illegal Memory Access or Internal Error instead of Out of Memory cases

|

module: cudnn, module: cuda, triaged

|

### 🐛 Describe the bug

I'm currently doing benchmark runs on latest timm release with PyTorch 1.12 cu113 (conda) to update timing spreadsheets. I've run into a number of cases where out of memory situations are not being picked up correctly (for illegal memory access, unrecoverable within same process). @ptrblck has mentioned these should be reported. Previous 1.11 cu113 runs were completely clean for contiguous and had only a few channels_last cases.

The illegal memory access are consistent every run (if using same starting batch size), verified across more than one machine.

`CUDA error: an illegal memory access was encountered`:

* vgg11

* vgg13

* vgg16

* vgg19

* nf_regnet_b5

The CUDNN_STATUS_INTERNAL_ERROR model failures below are not as consistent, I have reproduced most of them, but sometimes fail with correct OOM error on a different machine or across same machine restart.

`cuDNN error: CUDNN_STATUS_INTERNAL_ERROR`

* resnetv2_50x3_bitm

* resnetv2_101x3_bitm

* resnetv2_152x2_bit_teacher_384

* resnetv2_152x2_bitm

All failures were recorded on RTX 3090, running `timm` benchmark script (in root of, https://github.com/rwightman/pytorch-image-models) as per example below (with a starting batch size of 1024). Starting w/ a lower batch size for all failure cases will succeed.

ex: `python benchmark.py --amp --model nf_regnet_b5 --bench inference -b 1024`

### Versions

```

Collecting environment information...

PyTorch version: 1.12.0

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.3 LTS (x86_64)

GCC version: (Ubuntu 9.3.0-17ubuntu1~20.04) 9.3.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.31

Python version: 3.10.4 (main, Mar 31 2022, 08:41:55) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.0-99-generic-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: 11.4.152

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

GPU 2: NVIDIA GeForce RTX 3090

Nvidia driver version: 470.103.01

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.12.0

[pip3] torchvision==0.13.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 h2bc3f7f_2

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py310h7f8727e_0

[conda] mkl_fft 1.3.1 py310hd6ae3a3_0

[conda] mkl_random 1.2.2 py310h00e6091_0

[conda] numpy 1.22.3 py310hfa59a62_0

[conda] numpy-base 1.22.3 py310h9585f30_0

[conda] pytorch 1.12.0 py3.10_cuda11.3_cudnn8.3.2_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchvision 0.13.0 py310_cu113 pytorch

```

cc @csarofeen @ptrblck @xwang233 @ngimel

| 6 |

5,222 | 81,608 |

FakeTensorMode cannot handle non-fake tensor, but non-fake tensors can arise from non-interposable Tensor construction calls

|

triaged, oncall: pt2

|

### 🐛 Describe the bug

Currently, FakeTensorMode does not support non-Fake inputs in operations, except in a few special cases. These special cases typically have to do with cases where we produce tensors deep in the bowels of ATen via a non-dispatchable function, so FakeTensorMode doesn't get to interpose on the construction and we end up with a freshly generated non-fake tensor floating around.

The particular case I noticed this occurring was `at::scalar_to_tensor`, which internally bypasses the dispatcher (actually, I'm pretty sure the guards are unnecessary):

```

template <typename scalar_t>

inline void fill_inplace(Tensor& self, const Scalar& value_scalar) {

auto value = value_scalar.to<scalar_t>();

scalar_t* dptr = static_cast<scalar_t*>(self.data_ptr());

*dptr = value;

}

}

namespace detail {

Tensor& scalar_fill(Tensor& self, const Scalar& value) {

AT_DISPATCH_ALL_TYPES_AND_COMPLEX_AND4(

kComplexHalf, kHalf, kBool, kBFloat16, self.scalar_type(), "fill_out", [&]() {

fill_inplace<scalar_t>(self, value);

});

return self;

}

Tensor scalar_tensor_static(const Scalar& s, c10::optional<ScalarType> dtype_opt, c10::optional<Device> device_opt) {

at::tracer::impl::NoTracerDispatchMode tracer_guard;

at::AutoDispatchBelowAutograd mode;

Tensor result = at::detail::empty_cpu(

{}, dtype_opt, c10::nullopt, device_opt, c10::nullopt, c10::nullopt);

scalar_fill(result, s);

return result;

}

```

This makes it similar to `torch.tensor` in Python: a tensor constant materializes out of nowhere, and so if we want a mode to be able to interpose on it, we have to then call the result with `at::lift_fresh`.

Now, I'm a little leery about actually inserting the dispatcher call here, because we explicitly removed the dispatch call for speed (from @ailzhang in #29915). And in fact, we only have to worry about a bare `scalar_to_tensor` call for composite ops. In https://github.com/pytorch/pytorch/pull/81609 ~~I remove `scalar_to_tensor` from autograd formulas which are interpreted as composites and was the source of the bug I was tracking down. All other usages are OK.~~ I ended up *only* calling `lift_fresh` in the derivative formulas, and not in `scalar_to_tensor` generally. Testing this feels like a beefier form of composite compliance https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/native/README.md#composite-compliance (cc @ezyang @zou3519 ).

The crux of the matter is this: if we don't allow FakeTensorMode to handle non-fake tensor, we must audit all composite functions for calls to non-dispatching functions that construct Tensors, and make them not call that or call lift. This includes auditing for uses of `scalar_to_tensor` in composites beyond the derivative formulas (which I have finished auditing). However, we have another option: we can make FakeTensorMode handle non-fake tensors, by implicitly adding the equivalent of a `lift_fresh` call whenever it sees an unknown tensor. However, this makes it easier for an end user of fake tensor to foot-gun themselves by passing in a non-fake tensor from the ambient context, and then attempting to mutate its metadata (which is not going to ge propagated to the original). So maybe auditing is better, esp if we can come up with a better composite compliance test.

BTW, another way tensors can be created is from saved variable. Hypothetically, a wrapped number tensor could be saved as a variable and we don't currently propagate this property.

```

diff --git a/torch/csrc/autograd/saved_variable.cpp b/torch/csrc/autograd/saved_variable.cpp

index c6ca8eda13..bfc3bd6277 100644

--- a/torch/csrc/autograd/saved_variable.cpp

+++ b/torch/csrc/autograd/saved_variable.cpp

@@ -51,6 +51,7 @@ SavedVariable::SavedVariable(

is_leaf_ = variable.is_leaf();

is_output_ = is_output;

is_inplace_on_view_ = is_inplace_on_view;

+ is_wrapped_number_ = variable.unsafeGetTensorImpl()->is_wrapped_number();

if (is_inplace_on_view) {

TORCH_INTERNAL_ASSERT(!is_leaf_ && is_output);

@@ -223,6 +224,10 @@ Variable SavedVariable::unpack(std::shared_ptr<Node> saved_for) const {

var._set_fw_grad(new_fw_grad, /* level */ 0, /* is_inplace_op */ false);

}

+ if (is_wrapped_number_) {

+ var.unsafeGetTensorImpl()->set_wrapped_number(true);

+ }

+

return var;

}

diff --git a/torch/csrc/autograd/saved_variable.h b/torch/csrc/autograd/saved_variable.h

index 9136ff1a62..23f18f5b72 100644

--- a/torch/csrc/autograd/saved_variable.h

+++ b/torch/csrc/autograd/saved_variable.h

@@ -93,6 +93,7 @@ class TORCH_API SavedVariable {

bool saved_original_ = false;

bool is_leaf_ = false;

bool is_output_ = false;

+ bool is_wrapped_number_ = false;

// Hooks are a pair of functions pack_hook/unpack_hook that provides

// fine-grained control over how the SavedVariable should save its data.

```

However, I could not find any case where this actually made a difference. cc @albanD

cc @ezyang @bdhirsh @Chillee @eellison

### Versions

master

| 2 |

5,223 | 81,568 |

Improve interaction of PyTorch downstream libraries and torchdeploy

|

triaged, module: deploy

|

### 🐛 Describe the bug

torchdeploy's Python library model is that each interpreter loads and manages downstream libraries (including those with compiled extension modules) separately, since the extension modules in general need to link against libpython (which is duplicated per interpreter). However, this causes a problem if the downstream libraries do custom operator registration, which hits a shared global state among all interpreters.

### Versions

master

cc @wconstab

| 1 |

5,224 | 81,565 |

__getitem__ is returned as an OverloadPacket instead of an OpOverload in __torch_dispatch__

|

triaged, module: __torch_dispatch__, bug

|

### 🐛 Describe the bug

As per the title. Caught this while testing tags in a TorchDispatchMode.

### Versions

master

cc @Chillee @ezyang @zou3519 @albanD @samdow

| 5 |

5,225 | 81,559 |

[Profiler] Defer thread assignment for python startup events.

|

triaged, oncall: profiler

|

### 🚀 The feature, motivation and pitch

When the python function tracer starts, there are generally frames which are already live in the cPython interpreter. In order to produce a comprehensible trace we treat them as though they just started: https://github.com/pytorch/pytorch/blob/master/torch/csrc/autograd/profiler_python.cpp#L538 However, this will cause a problem when we support multiple python threads because all of these initial events will use the system TID of the thread which started the tracer. In order to treat them properly, we should lazily assign them system TIDs. This can be accomplished by keeping track of which events were start events (just tracking the number of start events is sufficient to reconstruct this information later) and then use the TID of the first call in the actual tracing block to assign the proper TID during post processing.

To take a concrete example:

```

# Initial stacks

Python thread 0: [foo()][bar()][baz()]

Python thread 1: [f0()][f1()]

```

Let's say we start the tracer from system thread 3. The five frames which were active when the tracer started will all be stored in the event buffer for thread 3 and in the current machinery that is the inferred TID. Suppose we see a call on Python thread 0 during profiling which occurs on system thread 2. We can use that information to change the system TID of the `foo`, `bar`, and `baz` frames to system TID 2 during post processing and produce a more accurate trace. (And will separate the stacks on the chrome trace.)

### Alternatives

_No response_

### Additional context

_No response_

cc @ilia-cher @robieta @chaekit @gdankel @bitfort @ngimel @nbcsm @guotuofeng @guyang3532 @gaoteng-git

| 0 |

5,226 | 81,554 |

float' object is not callable when using scheduler.step() with MultiplicativeLR

|

module: optimizer, triaged, actionable

|

### 🐛 Describe the bug

## 🐛 Bug

I have initialized an optimizer and a scheduler like this:

```

def configure_optimizers(self):

opt = torch.optim.Adam(self.model.parameters(), lr=cfg.learning_rate)

sch = torch.optim.lr_scheduler.MultiplicativeLR(opt, lr_lambda = 0.95) #decrease of 5% every epoch

return [opt], [sch]`

```

Since I just want to updte the scheduler after each epoch, I did not modify this updating method in the training phase, but this is the error I get after the first epoch:

> self.update_lr_schedulers("epoch", update_plateau_schedulers=False)

> File "/home/lsa/anaconda3/envs/randla_36/lib/python3.6/site-packages/pytorch_lightning/loops/epoch/training_epoch_loop.py", line 448, in update_lr_schedulers

> opt_indices=[opt_idx for opt_idx, _ in active_optimizers],

> File "/home/lsa/anaconda3/envs/randla_36/lib/python3.6/site-packages/pytorch_lightning/loops/epoch/training_epoch_loop.py", line 509, in _update_learning_rates

> lr_scheduler["scheduler"].step()

> File "/home/lsa/anaconda3/envs/randla_36/lib/python3.6/site-packages/torch/optim/lr_scheduler.py", line 152, in step

> values = self.get_lr()

> File "/home/lsa/anaconda3/envs/randla_36/lib/python3.6/site-packages/torch/optim/lr_scheduler.py", line 329, in get_lr

> for lmbda, group in zip(self.lr_lambdas, self.optimizer.param_groups)]

> File "/home/lsa/anaconda3/envs/randla_36/lib/python3.6/site-packages/torch/optim/lr_scheduler.py", line 329, in <listcomp>

> for lmbda, group in zip(self.lr_lambdas, self.optimizer.param_groups)]

> TypeError: 'float' object is not callable

### Expected behavior

This error should not be there, in fact, using another scheduler ( in particular this

` sch = torch.optim.lr_scheduler.CosineAnnealingLR(opt, T_max=10)` ) I do not get the error and the training proceedes smoothly.

### To Reproduce

```

import torch

from torchvision.models import resnet18

net = resnet18()

optimizer = torch.optim.Adam(net.parameters(), lr=0.01)

scheduler = torch.optim.lr_scheduler.MultiplicativeLR(optimizer, 0.95) # BUG SCHEDULER

#scheduler = torch.optim.lr_scheduler.StepLR(optimizer, 3, gamma=0.1) # WORKING ONE

for i in range(10):

print(i, scheduler.get_lr())

scheduler.step()

```

### Environment

* CUDA:

- GPU:

- NVIDIA RTX A6000

- available: True

- version: 11.3

* Packages:

- numpy: 1.19.2

- pyTorch_debug: False

- pyTorch_version: 1.10.2

- pytorch-lightning: 1.5.0

- tqdm: 4.64.0

* System:

- OS: Linux

- architecture:

- 64bit

-

- processor: x86_64

- python: 3.6.13

- version: #44~20.04.1-Ubuntu SMP Fri Jun 24 13:27:29 UTC 2022

cc @vincentqb @jbschlosser @albanD

| 2 |

5,227 | 81,552 |

Support Swift Package Manager (SPM) for iOS

|

oncall: mobile

|

### 🚀 The feature, motivation and pitch

Currently, Pytorch needs to be added to an iOS project via Cocoapods. However, many newer projects have migrated to Swift Package Manager. It would be fantastic if one could add Pytorch mobile via SPM, too.

### Alternatives

Alternatively, I'd also be happy to vendor pytorch into my codebase. We threw out Cocoapods a while ago and don't really want to reintroduce it if possible.

### Additional context

_No response_

| 3 |

5,228 | 81,545 |

Precision error from torch.distributed.send() to recv()

|

oncall: distributed

|

### 🐛 Describe the bug

There is probably a precision error when using `torch.distributed.send()` and `torch.distributed.recv()` pairs.

`torch.distributed.recv()` can receive a tensor correctly only when that tensor is sent of type `torch.float32`. The other float types `torch.float16` and `torch.float64` leads to wrong tensor values in receiver side.

## Reproduce

(on a dual GPU platform)

```python

import torch

import torch.multiprocessing as mp

import torch.distributed as dist

import time

def main_worker(rank, world_size, args):

dist.init_process_group(

backend="nccl",

init_method="tcp://127.0.0.1:9001",

world_size=world_size,

rank=rank,

)

print("process begin", rank)

for datatype in [None,torch.float,torch.float16,torch.float32,torch.float64]:

if rank == 0:

print(f"Current datatype: {datatype}.")

t = torch.rand([4,4],dtype=datatype).to(torch.device('cuda',rank))

print(f"Generate tensor{t}")

dist.send(t,1)

elif rank == 1:

r = torch.rand([4,4]).to(torch.device('cuda',rank))

dist.recv(r,0)

print("recv",r)

print()

time.sleep(1)

def main():

mp.spawn(main_worker, nprocs=2, args=(2, 2))

if __name__ == "__main__":

main()

```

## Output:

```python

process begin 0

Current datatype: None.

process begin 1

Generate tensortensor([[0.9230, 0.2856, 0.9419, 0.2844],

[0.9732, 0.7029, 0.0026, 0.9697],

[0.2188, 0.4143, 0.5163, 0.9863],

[0.1562, 0.3484, 0.1138, 0.3271]], device='cuda:0')

recv tensor([[0.9230, 0.2856, 0.9419, 0.2844],

[0.9732, 0.7029, 0.0026, 0.9697],

[0.2188, 0.4143, 0.5163, 0.9863],

[0.1562, 0.3484, 0.1138, 0.3271]], device='cuda:1')

Current datatype: torch.float32.

Generate tensortensor([[0.6158, 0.9911, 0.0677, 0.2109],

[0.0591, 0.5609, 0.4182, 0.4432],

[0.9296, 0.2350, 0.1028, 0.7265],

[0.1949, 0.0324, 0.4484, 0.8104]], device='cuda:0')

recv tensor([[0.6158, 0.9911, 0.0677, 0.2109],

[0.0591, 0.5609, 0.4182, 0.4432],

[0.9296, 0.2350, 0.1028, 0.7265],

[0.1949, 0.0324, 0.4484, 0.8104]], device='cuda:1')

Current datatype: torch.float16.

Generate tensortensor([[0.7212, 0.8945, 0.3042, 0.3184],

[0.3804, 0.9648, 0.8076, 0.9756],

[0.3862, 0.7358, 0.6611, 0.2539],

[0.4365, 0.9434, 0.7075, 0.6084]], device='cuda:0',

dtype=torch.float16)

recv tensor([[2.5669e-03, 5.6701e-07, 5.6217e-03, 6.2936e-03],

[4.3337e-04, 1.3432e-07, 4.2790e-03, 1.0597e-04],

[0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, 0.0000e+00]], device='cuda:1')

Current datatype: torch.float32.

Generate tensortensor([[0.7289, 0.0532, 0.6294, 0.5030],

[0.1043, 0.3015, 0.2626, 0.2357],

[0.8202, 0.1919, 0.3556, 0.2653],

[0.9763, 0.3292, 0.9931, 0.8236]], device='cuda:0')

recv tensor([[0.7289, 0.0532, 0.6294, 0.5030],

[0.1043, 0.3015, 0.2626, 0.2357],

[0.8202, 0.1919, 0.3556, 0.2653],

[0.9763, 0.3292, 0.9931, 0.8236]], device='cuda:1')

Current datatype: torch.float64.

Generate tensortensor([[0.1401, 0.5205, 0.3881, 0.1536],

[0.4686, 0.3280, 0.0725, 0.7440],

[0.5029, 0.2960, 0.5149, 0.2452],

[0.2024, 0.5243, 0.8930, 0.2613]], device='cuda:0',

dtype=torch.float64)

recv tensor([[-9.8724e-14, 1.5151e+00, 1.6172e-05, 1.7551e+00],

[ 1.6390e+35, 1.6941e+00, -1.9036e+12, 1.5286e+00],

[ 6.4192e-38, 1.7343e+00, 2.3965e+07, 1.6640e+00],

[-1.7412e+19, 1.3951e+00, -6.5071e+35, 1.8110e+00]], device='cuda:1')

```

### Versions

I tested and confirmed this phenomenon on two dual-GPU PCs and two versions of `PyTorch`.

#### Configure0: PyTorch `1.12.0`+`TITAN RTX`*2

```python

Collecting environment information...

PyTorch version: 1.12.0

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-6ubuntu2) 7.5.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.9.12 (main, Jun 1 2022, 11:38:51) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.13.0-52-generic-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: 11.7.64

GPU models and configuration:

GPU 0: NVIDIA TITAN RTX

GPU 1: NVIDIA TITAN RTX

Nvidia driver version: 510.73.05

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] info-nce-pytorch==0.1.4

[pip3] numpy==1.22.3

[pip3] torch==1.12.0

[pip3] torch-tb-profiler==0.4.0

[pip3] torchaudio==0.12.0

[pip3] torchinfo==1.6.5

[pip3] torchvision==0.13.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.6.0 hecad31d_10 conda-forge

[conda] info-nce-pytorch 0.1.4 pypi_0 pypi

[conda] libblas 3.9.0 12_linux64_mkl conda-forge

[conda] libcblas 3.9.0 12_linux64_mkl conda-forge

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.22.3 py39he7a7128_0

[conda] numpy-base 1.22.3 py39hf524024_0

[conda] pytorch 1.12.0 py3.9_cuda11.6_cudnn8.3.2_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch-tb-profiler 0.4.0 pypi_0 pypi

[conda] torchaudio 0.12.0 py39_cu116 pytorch

[conda] torchinfo 1.6.5 pyhd8ed1ab_0 conda-forge

[conda] torchvision 0.13.0 py39_cu116 pytorch

```

#### Configure1: PyTorch `1.8.2 LTS`+`TITAN RTX`*2

```python

Collecting environment information...

PyTorch version: 1.8.2

Is debug build: False

CUDA used to build PyTorch: 11.1

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-6ubuntu2) 7.5.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.13 (default, Mar 28 2022, 11:38:47) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.13.0-52-generic-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: 11.7.64

GPU models and configuration:

GPU 0: NVIDIA TITAN RTX

GPU 1: NVIDIA TITAN RTX

Nvidia driver version: 510.73.05

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] info-nce-pytorch==0.1.4

[pip3] numpy==1.22.3

[pip3] torch==1.8.2

[pip3] torchaudio==0.8.2

[pip3] torchinfo==1.6.5

[pip3] torchvision==0.9.2

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.1.74 h6bb024c_0 nvidia

[conda] info-nce-pytorch 0.1.4 pypi_0 pypi

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py38h7f8727e_0

[conda] mkl_fft 1.3.1 py38hd3c417c_0

[conda] mkl_random 1.2.2 py38h51133e4_0

[conda] numpy 1.21.5 py38he7a7128_2

[conda] numpy-base 1.21.5 py38hf524024_2

[conda] pytorch 1.8.2 py3.8_cuda11.1_cudnn8.0.5_0 pytorch-lts

[conda] torchaudio 0.8.2 py38 pytorch-lts

[conda] torchinfo 1.6.5 pyhd8ed1ab_0 conda-forge

[conda] torchvision 0.9.2 py38_cu111 pytorch-lts

```

#### Configure2: PyTorch `1.12.0`+`RTX3090`*2

```python

Collecting environment information...

PyTorch version: 1.12.0

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.22.0-rc2

Libc version: glibc-2.31

Python version: 3.10.4 (main, Mar 31 2022, 08:41:55) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.13.0-52-generic-x86_64-with-glibc2.31

Is CUDA available: True

CUDA runtime version: 11.7.64

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

Nvidia driver version: 510.73.05

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] info-nce-pytorch==0.1.4

[pip3] numpy==1.22.3

[pip3] torch==1.12.0

[pip3] torchaudio==0.12.0

[pip3] torchvision==0.13.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.6.0 habf752d_9 nvidia

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] info-nce-pytorch 0.1.4 pypi_0 pypi

[conda] libblas 3.9.0 12_linux64_mkl conda-forge

[conda] libcblas 3.9.0 12_linux64_mkl conda-forge

[conda] liblapack 3.9.0 12_linux64_mkl conda-forge

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py310ha2c4b55_0 conda-forge

[conda] mkl_fft 1.3.1 py310h2b4bcf5_1 conda-forge

[conda] mkl_random 1.2.2 py310h00e6091_0

[conda] numpy 1.22.3 py310hfa59a62_0

[conda] numpy-base 1.22.3 py310h9585f30_0

[conda] pytorch 1.12.0 py3.10_cuda11.6_cudnn8.3.2_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 0.12.0 py310_cu116 pytorch

[conda] torchvision 0.13.0 py310_cu116 pytorch

```

#### Configure3: PyTorch `1.8.2 LTS`+`RTX3090`*2

```python

Collecting environment information...

PyTorch version: 1.8.2

Is debug build: False

CUDA used to build PyTorch: 11.1

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.22.0-rc2

Libc version: glibc-2.31

Python version: 3.8.13 (default, Mar 28 2022, 11:38:47) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.13.0-52-generic-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: 11.7.64

GPU models and configuration:

GPU 0: NVIDIA GeForce RTX 3090

GPU 1: NVIDIA GeForce RTX 3090

Nvidia driver version: 510.73.05

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.4.1

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.4.1

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] info-nce-pytorch==0.1.4

[pip3] numpy==1.21.5

[pip3] torch==1.8.2

[pip3] torch-tb-profiler==0.3.1

[pip3] torchaudio==0.8.2

[pip3] torchinfo==1.7.0

[pip3] torchvision==0.9.2

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.1.74 h6bb024c_0 nvidia

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py38h7f8727e_0

[conda] mkl_fft 1.3.1 py38hd3c417c_0

[conda] mkl_random 1.2.2 py38h51133e4_0

[conda] numpy 1.21.5 py38he7a7128_2

[conda] numpy-base 1.21.5 py38hf524024_2

[conda] pytorch 1.8.2 py3.8_cuda11.1_cudnn8.0.5_0 pytorch-lts

[conda] torchaudio 0.8.2 py38 pytorch-lts

[conda] torchinfo 1.7.0 pyhd8ed1ab_0 conda-forge

[conda] torchvision 0.9.2 py38_cu111 pytorch-lts

```

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 3 |

5,229 | 81,544 |

Torch does not build with Lazy TS disabled

|

module: build, triaged

|

### 🐛 Describe the bug

If I compile with `BUILD_LAZY_TS_BACKEND=0`, the linker fails with:

> Creating library lib\torch_cpu.lib and object lib\torch_cpu.exp