| Serial Number | Issue Number | Title | Labels | Body | Comments |

|---|---|---|---|---|---|

5,301 | 80,903 |

make_fx doesn't work with truly dynamic argument functions (e.g. fx.Interpreter)

|

triaged, module: fx

|

### 🐛 Describe the bug

sample which breaks:

```

make_fx(lambda *args: torch.cat(args))(torch.randn(2), torch.randn(2))

```

Fails with

```

File "test/test_dynamo_cudagraphs.py", line 145, in cudagraphs

make_fx(lambda *args: torch.cat(args))([torch.randn(2), torch.randn(2)])

File "/raid/ezyang/pytorch-scratch2/torch/fx/experimental/proxy_tensor.py", line 281, in wrapped

t = dispatch_trace(wrap_key(f, args), tracer=fx_tracer, concrete_args=tuple(phs))

File "/raid/ezyang/pytorch-scratch2/torch/fx/experimental/proxy_tensor.py", line 177, in dispatch_trace

graph = tracer.trace(root, concrete_args)

File "/raid/ezyang/torchdynamo/torchdynamo/eval_frame.py", line 88, in _fn

return fn(*args, **kwargs)

File "/raid/ezyang/pytorch-scratch2/torch/fx/_symbolic_trace.py", line 667, in trace

fn, args = self.create_args_for_root(

File "/raid/ezyang/pytorch-scratch2/torch/fx/_symbolic_trace.py", line 524, in create_args_for_root

raise RuntimeError(

RuntimeError: Tracing expected 0 arguments but got 1 concrete arguments

```

The problem is that FX knows how to see through varargs if there is an inner function it's wrapping that has a true argument list, but it cannot deal with truly dynamic lists. This is fine for vanilla FX, as FX is unwilling to burn in the number of tensors in the list, but for AOTAutograd/ProxyTensor we should burn in the list count and trace anyway.
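To illustrate why the expected argument count comes out as zero, here is a minimal pure-Python sketch with no FX involved. That FX derives its expected count from the function's code object is an assumption based on the error message above, and `count_named_args` is a hypothetical helper:

```python
import inspect


def count_named_args(fn):
    """Count the named positional parameters declared by `fn` (hypothetical helper).

    `*args` does not contribute to `co_argcount`, so a purely variadic
    function reports zero named arguments.
    """
    return fn.__code__.co_argcount


def variadic(*args):
    return args


def fixed(a, b):
    return (a, b)


print(count_named_args(variadic))   # 0: nothing to create placeholders for
print(count_named_args(fixed))      # 2: a true argument list
print(inspect.signature(variadic))  # (*args)
```

Burning in the list count would amount to deciding the number of placeholders from the concrete arguments passed at trace time rather than from the signature.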

cc @ezyang @Chillee @zdevito @jamesr66a

### Versions

master

| 11 |

5,302 | 80,875 |

slow test infra cannot handle nested suites

|

module: ci, triaged

|

### 🐛 Describe the bug

We currently categorize a selection of slow test cases in our repo to run on a particular config. We query for tests slower than a certain threshold and put these tests into a json every night. The slow tests json generated by our infra looks like: https://github.com/pytorch/test-infra/blob/generated-stats/stats/slow-tests.json.

The code in our CI that parses the JSON to decide whether or not to run a test is https://github.com/pytorch/pytorch/blob/master/torch/testing/_internal/common_utils.py#L1544. Note that we require an exact match between a test name and the keys of the JSON.

This is almost always correct, except that not all test suite names are prefixed with `__main__`, which we assume in our query here https://github.com/pytorch/test-infra/blob/main/torchci/rockset/commons/__sql/slow_tests.sql#L57.

### Solution

Let's change the logic in the test selection side to not care about `__main__` when figuring out if a test is a match.
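One way the proposed matching could look, as a sketch only. `normalize_test_name` and `is_slow_test` are hypothetical helpers, and the `"test_name (module.SuiteName)"` key format is an assumption about the slow-tests JSON rather than something taken from `common_utils.py`:

```python
import re

# Matches "test_name (module.path.SuiteName)"; this key format is assumed.
_KEY_RE = re.compile(r"^(?P<test>\S+) \((?P<qualified>[\w.]+)\)$")


def normalize_test_name(key):
    """Drop the module prefix so only the test name and suite class are compared."""
    match = _KEY_RE.match(key)
    if match is None:
        return key  # Unknown format: compare as-is.
    suite = match.group("qualified").rsplit(".", 1)[-1]
    return f"{match.group('test')} ({suite})"


def is_slow_test(test_key, slow_tests):
    """Return True if `test_key` matches a slow-test entry, ignoring the module prefix."""
    normalized = {normalize_test_name(k) for k in slow_tests}
    return normalize_test_name(test_key) in normalized


slow_tests = {"test_big_matmul (__main__.TestLinalg)": 120.5}
print(is_slow_test("test_big_matmul (jit.test_linalg.TestLinalg)", slow_tests))
print(is_slow_test("test_small_add (__main__.TestLinalg)", slow_tests))
```

Stripping the whole module prefix is a simplification: it would conflate suites with the same class name in different modules, so a real fix might want to strip only the `__main__` part.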

### Versions

CI related

cc @seemethere @malfet @pytorch/pytorch-dev-infra

| 0 |

5,303 | 80,874 |

C++ extensions inject a bunch of compilation flags

|

oncall: binaries, module: cpp-extensions, triaged, better-engineering

|

### 🐛 Describe the bug

Discovered by @vfdev-5

This is possibly intentional, but I've noticed that C++ extensions, when building on Linux, inject a number of compilation flags. For example, in functorch, we do not specify either of ['-g -NDEBUG'](https://github.com/pytorch/functorch/blob/b22d52bb15276cd919815cde01a16d4b3d8f798e/setup.py#L84-L88), but those appear in [our build logs](https://github.com/pytorch/functorch/runs/7140654131?check_suite_focus=true).

The end result of `-g` is that our Linux binaries are much larger than they need to be (the debug symbols are tens of MB), while our macOS and Windows binaries are very small (< 1 MB). I would expect the domain libraries to have the same issue.

## The diagnosis

In C++ extensions we adopt [certain flags from distutils](https://github.com/pytorch/pytorch/blob/f7678055033045688ae5916c8df72f5107d86a4a/torch/utils/cpp_extension.py#L558).

These flags come from Python's built-in sysconfig module (https://github.com/python/cpython/blob/ec5e253556875640b1ac514e85c545346ac3f1e0/setup.py#L476), which contains a list of flags used to compile Python. These include -g, among other things.
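A quick way to see what the interpreter was built with is to query `sysconfig` directly. This is a sketch: `inherited_flags` is a hypothetical helper, and which of these variables actually end up on an extension's command line is an assumption here, not something confirmed by `cpp_extension.py`:

```python
import sysconfig


def inherited_flags(var_names=("CFLAGS", "OPT")):
    """Return the interpreter's build-time flags for the given config variables.

    Variables that are not defined on this platform (for example on Windows)
    map to an empty list.
    """
    flags = {}
    for name in var_names:
        value = sysconfig.get_config_var(name)
        flags[name] = value.split() if isinstance(value, str) else []
    return flags


flags = inherited_flags()
for name, values in flags.items():
    print(name, values)
print("-g inherited:", any("-g" in values for values in flags.values()))
```

The output depends on how the local Python was compiled, so it will differ between distributions and platforms.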

This is probably intentional (I wrote these lines by copy-pasting them from distutils) but I don't have a good understanding of why these flags are necessary or if only some of them are.

### Versions

main

cc @ezyang @seemethere @malfet @zou3519

| 2 |

5,304 | 80,867 |

[BE] Refactor FSDP Unit Tests

|

triaged, better-engineering, module: fsdp

|

## Motivation & Goals

1. In H2, we plan to land new FSDP features such as non-recursive wrapping and multiple parameter group support within one `FlatParameter`. Since these features correspond to distinct code paths, the core unit tests need to parameterize over them. This means an increase in the time-to-signal (TTS) by up to 4x and may increase with further features (e.g. native XLA support).

**Goal 1: Cut down the existing TTS while maintaining feature coverage**.

2. The test files have become fragmented. Despite the existence of `common_fsdp.py`, test files often define their own models and training loops, leading to redundancy, and the methods that do exist in `common_fsdp.py` are not well-documented, leading to confusion.

**Goal 2: Refactor common models and boilerplate to `common_fsdp.py`.**

3. For the non-recursive wrapping code path, the model construction differs from the existing recursive wrapping code path. As a result, many of the existing tests cannot be directly adapted to test the non-recursive path by simply changing a single `FullyShardedDataParallel` constructor call.

**Goal 3: Refactor model construction to enable simpler testing for the non-recursive wrapping path.**

## Status Quo

On the AI AWS cluster with 2 A100 GPUs, the current TTS is approximately **4967.06 seconds = 82.78 minutes = 1.38 hours**[0]. PyTorch Dev Infra has asked to have our multi-GPU tests run in < 75 minutes in CI, which uses P60 GPUs.

The largest contributors to the TTS are `test_fsdp_core.py` (2191.89 seconds = 36.53 minutes) and `test_fsdp_mixed_precision.py` (1074.42 seconds = 17.91 minutes), representing almost 2/3 of the total. Since these test the core FSDP runtime, newly-added code paths will target these tests.

[0] This is a point estimate from running each test file once and excludes recent changes to the test files (my stack was rebased on a commit from 6/21).

## Approach

I will proceed with a series of PRs. The order will be to address Goal 3 -> Goal 1 -> Goal 2.

- For Goal 3, I will introduce a common interface `FSDPTestModel`.

https://github.com/pytorch/pytorch/pull/80873

- For Goal 1, I will use `self.subTest()` with `dist.barrier()` to avoid the expensive process spawn and `dist.init_process_group()` for each parameterization.

https://github.com/pytorch/pytorch/pull/80908

https://github.com/pytorch/pytorch/pull/80915

https://blog.ganssle.io/articles/2020/04/subtests-in-python.html

> There are, however, occasionally situations where the subTest form factor offers some advantages even in parameterization. For example, if you have a number of tests you'd like to perform that have an expensive set-up function that builds or acquires an immutable resource that is used in common by all the subtests:

- For Goal 2, I will perform a deep comb through the existing test suite.
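The `self.subTest()` idea from Goal 1 can be sketched with the standard library alone. Everything FSDP-specific is replaced by stand-ins: `expensive_setup` is a hypothetical placeholder for the process spawn and `dist.init_process_group()`, and the parameter names `use_mixed_precision` and `cpu_offload` are illustrative only:

```python
import unittest

SETUP_CALLS = 0


def expensive_setup():
    """Stand-in for process spawn plus process-group init (hypothetical)."""
    global SETUP_CALLS
    SETUP_CALLS += 1
    return {"world_size": 2}


class SharedSetupTest(unittest.TestCase):
    def test_all_configs(self):
        group = expensive_setup()  # Paid once for every parameterization below.
        for use_mixed_precision in (False, True):
            for cpu_offload in (False, True):
                with self.subTest(
                    use_mixed_precision=use_mixed_precision,
                    cpu_offload=cpu_offload,
                ):
                    # A real test would run a training step here and then
                    # synchronize ranks (e.g. with a barrier) before the next config.
                    self.assertEqual(group["world_size"], 2)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(SharedSetupTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("setup calls:", SETUP_CALLS, "tests run:", result.testsRun)
```

Four configurations run inside a single test method, so the setup cost is paid once while a failure in one sub-test is still reported with its own parameters.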

## Related Issues

https://github.com/pytorch/pytorch/issues/80872

https://github.com/pytorch/pytorch/issues/78277

https://github.com/pytorch/pytorch/issues/67288

cc @zhaojuanmao @mrshenli @rohan-varma @ezyang

| 3 |

5,305 | 80,863 |

SummaryWriter add_embedding issue with label_img

|

oncall: visualization

|

### 🐛 Describe the bug

Posting working make_sprite function from `utils/tensorboard/_embedding.py`

https://github.com/pytorch/pytorch/blob/master/torch/utils/tensorboard/_embedding.py#L24

```
def make_sprite(label_img, save_path):
    from PIL import Image
    from io import BytesIO

    # this ensures the sprite image has correct dimension as described in
    # https://www.tensorflow.org/get_started/embedding_viz
    print('label_img.size(0):', label_img.size(0))
    nrow = int(math.ceil((label_img.size(0)) ** 0.5))
    print('nrow:', nrow)
    np_imgs = make_np(label_img)
    print('np_imgs:', np_imgs)
    # plt.imshow(np.moveaxis(np_imgs[0], 0, 2))
    # plt.show()
    arranged_img_CHW = make_grid(np_imgs, ncols=nrow)
    print('arranged_img_CHW:', arranged_img_CHW)
    print('arranged_img_CHW.shape:', arranged_img_CHW.shape)
    # plt.imshow(np.moveaxis(arranged_img_CHW, 0, 2))
    # plt.show()

    # augment images so that #images equals nrow*nrow
    arranged_augment_square_HWC = np.zeros((arranged_img_CHW.shape[2], arranged_img_CHW.shape[2], 3))
    print('arranged_augment_square_HWC:', arranged_augment_square_HWC)
    print('arranged_augment_square_HWC.shape:', arranged_augment_square_HWC.shape)
    arranged_img_HWC = arranged_img_CHW.transpose(1, 2, 0)  # chw -> hwc
    print('arranged_img_HWC:', arranged_img_HWC)
    print('arranged_img_HWC.shape:', arranged_img_HWC.shape)
    # plt.imshow(arranged_img_HWC)
    # plt.show()
    arranged_augment_square_HWC[:arranged_img_HWC.shape[0], :, :] = arranged_img_HWC
    print('arranged_augment_square_HWC:', arranged_augment_square_HWC)
    print('arranged_augment_square_HWC.shape:', arranged_augment_square_HWC.shape)
    plt.imshow(arranged_img_HWC)
    plt.show()
    # transformed_img = np.uint8((arranged_augment_square_HWC * 255).clip(0, 255))
    # print(transformed_img)
    # print(transformed_img.shape)
    im = Image.fromarray(arranged_img_HWC)
    # im = Image.fromarray(arranged_augment_square_HWC)

    with BytesIO() as buf:
        im.save(buf, format="PNG")
        im_bytes = buf.getvalue()
    fs = tf.io.gfile.get_filesystem(save_path)
    # print(im_bytes)
    fs.write(fs.join(save_path, 'sprite.png'), im_bytes, binary_mode=True)
```

Commenting out this line produces a correct sprite, and TensorBoard displays it correctly. The line does not seem to be required here; please clarify what it is supposed to do:

```

arranged_augment_square_HWC[:arranged_img_HWC.shape[0], :, :] = arranged_img_HWC

```

I also changed the following line, whose scaling is not required because I already have correct RGB integers from 0 to 255:

```

im = Image.fromarray(np.uint8((arranged_augment_square_HWC * 255).clip(0, 255)))

```

Changed to:

```

im = Image.fromarray(arranged_img_HWC)

```

From my perspective, additional asserts are required to provide useful information when passing data into the `add_embedding` function and to inform developers about the expected data format. Alternatively, add `if` statements that check which transformations should and should not be applied in the two lines of code above that I commented out or changed.
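On what the padding assignment appears to be for: going by the code comment `# augment images so that #images equals nrow*nrow`, it seems intended to pad the image grid with blank rows so that the sprite sheet is square. A small sketch of the arithmetic, where `sprite_grid` is a hypothetical helper and the assumption is that the grid fills rows left to right with `ncols` images per row:

```python
import math


def sprite_grid(num_images):
    """Return (columns, rows_used, rows_padded) for a square sprite sheet.

    Assumes num_images >= 1.
    """
    ncols = int(math.ceil(num_images ** 0.5))
    rows_used = int(math.ceil(num_images / ncols))
    rows_padded = ncols - rows_used  # blank rows needed to make the sheet square
    return ncols, rows_used, rows_padded


for n in (4, 5, 7, 10):
    print(n, sprite_grid(n))
```

For 5 images this gives 3 columns, 2 used rows, and 1 blank row, so skipping the padding only changes the result when the image count leaves the last row of the square empty.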

### Versions

Collecting environment information...

PyTorch version: 1.11.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Ubuntu 21.10 (x86_64)

GCC version: (Ubuntu 11.2.0-7ubuntu2) 11.2.0

Clang version: 13.0.0-2

CMake version: version 3.18.4

Libc version: glibc-2.34

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.17.0-051700-generic-x86_64-with-glibc2.34

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3070 Laptop GPU

Nvidia driver version: 510.60.02

cuDNN version: Probably one of the following:

/usr/local/cuda-11.5/targets/x86_64-linux/lib/libcudnn.so.8.3.3

/usr/local/cuda-11.5/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.3.3

/usr/local/cuda-11.5/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.3.3

/usr/local/cuda-11.5/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.3.3

/usr/local/cuda-11.5/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.3.3

/usr/local/cuda-11.5/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.3.3

/usr/local/cuda-11.5/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.3.3

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] facenet-pytorch==2.5.2

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.23.0

[pip3] numpydoc==1.1.0

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 h2bc3f7f_2

[conda] facenet-pytorch 2.5.2 pypi_0 pypi

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.23.0 pypi_0 pypi

[conda] numpydoc 1.1.0 pyhd3eb1b0_1

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch 1.11.0 pypi_0 pypi

[conda] torchaudio 0.11.0 py39_cu113 pytorch

[conda] torchvision 0.12.0 py39_cu113 pytorch

| 7 |

5,306 | 80,861 |

jit.freeze throws RuntimeError: stack_out && stack_out->size() == 1 INTERNAL ASSERT FAILED at "../torch/csrc/jit/passes/frozen_conv_folding.cpp":281

|

oncall: jit

|

### 🐛 Describe the bug

When trying to `jit.freeze` my torchscript module, I am encountering an `INTERNAL ASSERT FAILED` error. I would have expected the freezing to simply run without errors. I have cut down my code to this minimal sample:

```python
import torch
from torch import nn

device = 'cuda'


def get_dummy_input():
    img_seq = torch.randn(3, 5, 3, 256, 256, device=device)
    return img_seq


class MinimalHead(nn.Module):
    def __init__(
        self,
        num_inp=5,
    ):
        super().__init__()
        self.num_inp = num_inp
        self.inp_heads = nn.ModuleList([
            nn.Conv2d(3, 3, 32)
            for _ in range(num_inp)
        ])

    def forward(self, inp_seq):
        inp_features = 0
        for inp_i in range(self.num_inp):
            inp_features = inp_features + self.inp_heads[inp_i](inp_seq[:, inp_i])
        return inp_features


def main():
    head = MinimalHead().to(device)
    head = torch.jit.trace(head, get_dummy_input()).eval()
    torch.jit.freeze(head)


if __name__ == '__main__':
    main()
```

Running the above script results in this error and traceback:

```

Traceback (most recent call last):

File "minimal_freeze_error.py", line 39, in <module>

main()

File "minimal_freeze_error.py", line 35, in main

torch.jit.freeze(head)

File "[...]/torch/jit/_freeze.py", line 119, in freeze

run_frozen_optimizations(out, optimize_numerics, preserved_methods)

File "[...]/torch/jit/_freeze.py", line 167, in run_frozen_optimizations

torch._C._jit_pass_optimize_frozen_graph(mod.graph, optimize_numerics)

RuntimeError: stack_out && stack_out->size() == 1 INTERNAL ASSERT FAILED at "../torch/csrc/jit/passes/frozen_conv_folding.cpp":281, please report a bug to PyTorch.

```

Since the error message suggests reporting this, that is what I am doing.

Interestingly, the same script runs without errors on my laptop instead of my server.

Another workaround (not ideal due to existing jit checkpoints) I have currently found is to replace my model's `forward` with the following equivalent code:

```python
def forward(self, inp_seq):
    inp_features = [
        self.inp_heads[inp_i](inp_seq[:, inp_i])
        for inp_i in range(self.num_inp)
    ]
    inp_features = torch.sum(
        torch.stack(
            inp_features,
            dim=0,
        ),
        dim=0,
    )
    return inp_features
```

### Versions

```

Collecting environment information...

PyTorch version: 1.12.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: CentOS Linux 7 (Core) (x86_64)

GCC version: (GCC) 4.8.5 20150623 (Red Hat 4.8.5-44)

Clang version: Could not collect

CMake version: version 2.8.12.2

Libc version: glibc-2.17

Python version: 3.8.11 (default, Aug 3 2021, 15:09:35) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-3.10.0-1160.49.1.el7.x86_64-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: 11.6.112

GPU models and configuration:

GPU 0: Tesla P100-PCIE-16GB

GPU 1: Tesla P100-PCIE-16GB

GPU 2: Tesla P100-PCIE-16GB

GPU 3: Tesla P100-PCIE-16GB

GPU 4: Tesla P100-PCIE-16GB

GPU 5: Tesla P100-PCIE-16GB

GPU 6: Tesla P100-PCIE-16GB

GPU 7: Tesla P100-PCIE-16GB

Nvidia driver version: 510.47.03

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] efficientnet-pytorch==0.7.1

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.19.5

[pip3] pytorch-lightning==1.4.9

[pip3] pytorch-metric-learning==0.9.99

[pip3] torch==1.12.0+cu113

[pip3] torch-fidelity==0.3.0

[pip3] torchaudio==0.12.0+cu113

[pip3] torchmetrics==0.5.1

[pip3] torchvision==0.13.0+cu113

[conda] efficientnet-pytorch 0.7.1 pypi_0 pypi

[conda] numpy 1.19.5 pypi_0 pypi

[conda] pytorch-lightning 1.4.9 pypi_0 pypi

[conda] pytorch-metric-learning 0.9.99 pypi_0 pypi

[conda] torch 1.12.0+cu113 pypi_0 pypi

[conda] torch-fidelity 0.3.0 pypi_0 pypi

[conda] torchaudio 0.12.0+cu113 pypi_0 pypi

[conda] torchmetrics 0.5.1 pypi_0 pypi

[conda] torchvision 0.13.0+cu113 pypi_0 pypi

```

| 4 |

5,307 | 80,857 |

Compatibility List

|

oncall: binaries, module: docs

|

### 🚀 The feature, motivation and pitch

Have an easy-to-find compatibility list (e.g. on the previous-versions page). If it has to be in the form of commands and comments (just like on the previous-versions page) instead of a proper table, that would be fine too.

However, I believe many people won't know which version to use, and a proper table would help.

### Alternatives

Losing a good night's sleep only to find out it doesn't work, after jumping through all the hoops, was not a good alternative.

### Additional context

This is not a help request, but simply a feature request for a proper compatibility table (or some list of install commands).

For context:

Even though my PyTorch version and "CUDA SDK version" are valid and below the max supported driver version as reported by smi (max = 11.4, installed 11.3; `pytorch==1.11.0 torchvision==0.12.0 torchaudio==0.11.0 cudatoolkit=11.3`), PyTorch reports the graphics card's max supported "CUDA version" as 3.7. This is not something you can find out from the installation information until after the fact.

cc @ezyang @seemethere @malfet @svekars @holly1238

| 2 |

5,308 | 80,851 |

[bug][nvfuser] Applying nvfuser to the model leads to runtime error

|

triaged, module: nvfuser

|

### 🐛 Describe the bug

```

Traceback (most recent call last):

File "main.py", line 202, in <module>

all_metrics = trainer.train(args.steps, args.val_steps, args.save_every, args.eval_every)

File "/h/zhengboj/SetGan/set-gan/trainer.py", line 127, in train

d_loss, g_loss, d_aux_losses, g_aux_losses = self.train_step(args_i)

File "/h/zhengboj/SetGan/set-gan/trainer.py", line 189, in train_step

d_base_loss_i, d_aux_losses_i = self._discriminator_step(args)

File "/h/zhengboj/SetGan/set-gan/trainer.py", line 383, in _discriminator_step

aux_losses[loss_fct.name] = loss_fct(self.discriminator, self.generator, candidate_batch, fake_batch, args, "discriminator", reference_batch)

File "/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1111, in _call_impl

return forward_call(*input, **kwargs)

File "/h/zhengboj/SetGan/set-gan/training_utils.py", line 204, in forward

return self._discriminator_loss(discriminator, generator, real_batch, fake_batch, args, *loss_args, **loss_kwargs)

File "/h/zhengboj/SetGan/set-gan/training_utils.py", line 236, in _discriminator_loss

scaled_gradients = torch.autograd.grad(outputs=self.scaler.scale(disc_interpolates), inputs=interpolates,

File "/opt/conda/lib/python3.8/site-packages/torch/autograd/__init__.py", line 275, in grad

return Variable._execution_engine.run_backward( # Calls into the C++ engine to run the backward pass

RuntimeError: 0INTERNAL ASSERT FAILED at "/opt/pytorch/pytorch/torch/csrc/jit/ir/alias_analysis.cpp":602, please report a bug to PyTorch. We don't have an op for aten::cat but it isn't a special case. Argument types: Tensor, int,

Candidates:

aten::cat(Tensor[] tensors, int dim=0) -> (Tensor)

aten::cat.names(Tensor[] tensors, str dim) -> (Tensor)

aten::cat.names_out(Tensor[] tensors, str dim, *, Tensor(a!) out) -> (Tensor(a!))

aten::cat.out(Tensor[] tensors, int dim=0, *, Tensor(a!) out) -> (Tensor(a!))

Generated:

```

I tried to enable nvfuser when training my model but got the above runtime error. I also tried running my code without scripting the model and everything goes fine. It seems that the error is caused by invoking the `torch.cat` operator with a single tensor. However, after checking the source code, I can verify that each `torch.cat` operator is invoked with a list of tensors. Therefore, I am not sure what is causing this issue. Any help is appreciated. Thanks.

### Versions

```

Collecting environment information...

PyTorch version: 1.12.0a0+2c916ef

Is debug build: False

CUDA used to build PyTorch: 11.6

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04) 9.4.0

Clang version: Could not collect

CMake version: version 3.22.3

Libc version: glibc-2.31

Python version: 3.8.12 | packaged by conda-forge | (default, Jan 30 2022, 23:42:07) [GCC 9.4.0] (64-bit runtime)

Python platform: Linux-5.4.0-110-generic-x86_64-with-glibc2.10

Is CUDA available: True

CUDA runtime version: 11.6.112

GPU models and configuration:

GPU 0: Tesla T4

GPU 1: Tesla T4

GPU 2: Tesla T4

GPU 3: Tesla T4

Nvidia driver version: 470.103.01

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.3.3

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.3.3

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.3.3

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.3.3

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.3.3

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.3.3

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.3.3

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] pytorch-quantization==2.1.2

[pip3] torch==1.12.0a0+2c916ef

[pip3] torch-tensorrt==1.1.0a0

[pip3] torchtext==0.12.0a0

[pip3] torchvision==0.13.0a0

[conda] magma-cuda110 2.5.2 5 local

[conda] mkl 2019.5 281 conda-forge

[conda] mkl-include 2019.5 281 conda-forge

[conda] numpy 1.22.3 py38h05e7239_0 conda-forge

[conda] pytorch-quantization 2.1.2 pypi_0 pypi

[conda] torch 1.12.0a0+2c916ef pypi_0 pypi

[conda] torch-tensorrt 1.1.0a0 pypi_0 pypi

[conda] torchtext 0.12.0a0 pypi_0 pypi

[conda] torchvision 0.13.0a0 pypi_0 pypi

```

FYI, @wangshangsam

| 23 |

5,309 | 80,832 |

[DDP] doesn't support multiple backwards when static_graph=True

|

oncall: distributed, module: ddp

|

### 🐛 Describe the bug

```python
import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP


class ToyModel(nn.Module):
    def __init__(self, in_dim=10, out_dim=5):
        super(ToyModel, self).__init__()
        self.dense1 = nn.Linear(in_dim, in_dim)
        self.dense2 = nn.Linear(in_dim, out_dim)

    def forward(self, x):
        x = self.dense1(x)
        return self.dense2(x)


dist.init_process_group("nccl")
rank = dist.get_rank()
model = ToyModel().to(rank)
ddp_model = DDP(model, device_ids=[rank], find_unused_parameters=True)
ddp_model._set_static_graph()

x = torch.randn(5, 10)
y = torch.randn(5, 5).to(rank)
loss_fn = nn.MSELoss()

output = ddp_model(x)
loss = loss_fn(output, y)
loss.backward(retain_graph=True)
output.backward(torch.zeros_like(output))
```

It will report the error:

```

SystemError: <built-in method run_backward of torch._C._EngineBase object at 0x7fb37a2ec200> returned NULL without setting an error

```

But if I call the prepare_for_backward before backward (like #47260),

```python

ddp_model.reducer.prepare_for_backward(loss)

loss.backward(retain_graph=True)

ddp_model.reducer.prepare_for_backward(output)

output.backward(torch.zeros_like(output))

```

This works and the output seems OK, but I don't know whether it is correct or potentially risky.

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @ezyang @gchanan @zou3519 @bdhirsh @agolynski @mrzzd @xush6528

### Versions

PyTorch 1.10.0

| 0 |

5,310 | 80,829 |

Can torchscript dump backward graph?

|

oncall: jit

|

### 🚀 The feature, motivation and pitch

Can torchscript dump backward graph?

I am interested in tracing through both the forward and backward graph using TorchScript and dumping the IR, for full graph optimization in a separate framework.

Currently, can torchscript dump backward graph?

### Alternatives

_No response_

### Additional context

_No response_

| 1 |

5,311 | 80,827 |

Inconsistent computation of gradient in MaxUnPooling

|

module: autograd, triaged, module: determinism, actionable, module: correctness (silent)

|

### 🐛 Describe the bug

Hey

I think there is an inconsistency in how MaxUnpool and its gradient are computed. Here is some example code.

```python

import torch

torch.manual_seed(123)

A = torch.rand(1, 1, 9, 9)

_, I = torch.nn.MaxPool2d(3, 1, return_indices=True)(A)

print("Indices", I)

B = torch.arange(I.numel(), 0, -1).to(torch.float).view(I.shape).detach()

B.requires_grad = True

print("MaxUnPool Input", B)

C = torch.nn.MaxUnpool2d(3, 1)(B, I)

print("MaxUnPool Output", C)

D = C * torch.arange(C.numel()).to(torch.float).view(C.shape)

# now compute the gradient

E = D.sum()

E.backward()

print("MaxUnPool Gradient", B.grad)

```

The output is:

```

Indices tensor([[[[20, 20, 20, 23, 23, 23, 17],

[28, 30, 30, 23, 23, 23, 34],

[28, 30, 30, 23, 23, 23, 43],

[28, 48, 48, 48, 49, 43, 43],

[47, 48, 48, 48, 49, 43, 43],

[47, 48, 48, 48, 49, 70, 70],

[74, 57, 57, 57, 69, 70, 70]]]])

MaxUnPool Input tensor([[[[49., 48., 47., 46., 45., 44., 43.],

[42., 41., 40., 39., 38., 37., 36.],

[35., 34., 33., 32., 31., 30., 29.],

[28., 27., 26., 25., 24., 23., 22.],

[21., 20., 19., 18., 17., 16., 15.],

[14., 13., 12., 11., 10., 9., 8.],

[ 7., 6., 5., 4., 3., 2., 1.]]]], requires_grad=True)

MaxUnPool Output tensor([[[[ 0., 0., 0., 0., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 0., 43.],

[ 0., 0., 47., 0., 0., 37., 0., 0., 0.],

[ 0., 35., 0., 33., 0., 0., 0., 36., 0.],

[ 0., 0., 0., 0., 0., 0., 0., 15., 0.],

[ 0., 0., 14., 18., 17., 0., 0., 0., 0.],

[ 0., 0., 0., 5., 0., 0., 0., 0., 0.],

[ 0., 0., 0., 0., 0., 0., 3., 8., 0.],

[ 0., 0., 7., 0., 0., 0., 0., 0., 0.]]]],

grad_fn=<MaxUnpool2DBackward0>)

MaxUnPool Gradient tensor([[[[20., 20., 20., 23., 23., 23., 17.],

[28., 30., 30., 23., 23., 23., 34.],

[28., 30., 30., 23., 23., 23., 43.],

[28., 48., 48., 48., 49., 43., 43.],

[47., 48., 48., 48., 49., 43., 43.],

[47., 48., 48., 48., 49., 70., 70.],

[74., 57., 57., 57., 69., 70., 70.]]]])

```

In "Indices" we can see that the index 20 is used 3 times.

Now in "MaxUnPool Output" we see that, for index 20, only the very last value (47 in this case) is used. So I would expect the gradient for these three to be [0, 0, 20].

I put in a multiplication so that the gradient gives the actual index where the value was taken from. As we can see, the gradient for these three values is instead [20, 20, 20].

To wrap this up. To my understanding MaxUnPool computes the following during the forward pass:

```c++
for(int batch ...)
    for(int channel ...)
        for(int index ...)
            output[batch, channel, indices[index]] = input[batch, channel, index];
```

However, when we look at the gradient, all inputs get the value propagated, which is inconsistent with the forward pass, where only the last one gets used.

So I think either the forward pass needs to use `+=` instead of `=`, or the backward pass needs to propagate the value only to the last occurrence of the index.
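The mismatch can be reproduced in a 1-D pure-Python model. This is a sketch of the behavior described above, not PyTorch's kernel; all three function names are hypothetical, and the "gather" backward is inferred from the printed gradient rather than from the source:

```python
def unpool_forward(values, indices, output_size):
    """Scatter with plain assignment: later writes to the same index overwrite earlier ones."""
    output = [0.0] * output_size
    for value, index in zip(values, indices):
        output[index] = value
    return output


def unpool_backward_gather(grad_output, indices):
    """Gradient as a plain gather: every input position reads grad_output[index]."""
    return [grad_output[index] for index in indices]


def unpool_backward_last_write(grad_output, indices):
    """Gradient consistent with assignment: only the last writer of each index receives it."""
    last_writer = {index: pos for pos, index in enumerate(indices)}
    return [
        grad_output[index] if last_writer[index] == pos else 0.0
        for pos, index in enumerate(indices)
    ]


values = [49.0, 48.0, 47.0]
indices = [2, 2, 2]  # three inputs target the same output slot
grad_output = [0.0, 10.0, 20.0, 30.0]

print(unpool_forward(values, indices, 4))                # [0.0, 0.0, 47.0, 0.0]
print(unpool_backward_gather(grad_output, indices))      # [20.0, 20.0, 20.0]
print(unpool_backward_last_write(grad_output, indices))  # [0.0, 0.0, 20.0]
```

The gather variant matches the reported `[20, 20, 20]`, while the last-write variant gives the `[0, 0, 20]` that an assignment-based forward pass would imply. A `+=` forward pass would instead make the gather gradient correct.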

Best

### Versions

Collecting environment information...

PyTorch version: 1.12.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: CentOS Linux 7 (Core) (x86_64)

GCC version: (GCC) 10.3.0

Clang version: Could not collect

CMake version: version 3.23.1

Libc version: glibc-2.17

Python version: 3.7.12 (default, Feb 6 2022, 20:29:18) [GCC 10.2.1 20210130 (Red Hat 10.2.1-11)] (64-bit runtime)

Python platform: Linux-3.10.0-1160.59.1.el7.x86_64-x86_64-with-centos-7.9.2009-Core

Is CUDA available: False

CUDA runtime version: 11.3.109

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.21.6

[pip3] torch==1.12.0

[pip3] torchmetrics==0.9.1

[pip3] torchvision==0.13.0

[conda] Could not collect

cc @ezyang @gchanan @zou3519 @albanD @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7 @mruberry @kurtamohler

| 19 |

5,312 | 80,826 |

Ne op does not behave as expected with nan

|

high priority, needs reproduction, triaged

|

### 🐛 Describe the bug

When NaN values are given as input, the torch.ne op behaves in a strange manner:

```
import torch
import numpy as np

x = torch.tensor([ -np.inf, 0.3516, 0.3719, 0.5452, 0.4024, 0.9232, 0.6995, 0.0805, 0.7434,0.1871, 0.3802, 0.5379, 0.1533, np.nan, 0.8519, 0.7572, np.inf, 0.4675, 0.4702, 0.2297, 0.5905, 0.6923, 0.2628, -np.inf, -np.inf, 0.6335, 0.9912,0.9256, 0.0237, 0.4891, np.nan, 0.9731])
x.ne_(45)
# when x has 32 or more elements, the ne op returns 0 in place of NaN

x = torch.tensor([ -np.inf, 0.9232, 0.6995, 0.0805, 0.7434,0.1871, 0.3802, 0.5379, 0.1533, np.nan, 0.8519, 0.7572, np.inf, 0.4675, 0.4702, 0.2297, 0.5905, 0.6923, 0.2628, -np.inf, -np.inf, 0.6335, 0.9912,0.9256, 0.0237, 0.4891, np.nan, 0.9731])
x.ne_(45)
# when x has fewer than 32 elements, the ne op returns 1 in place of NaN
```
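For reference, under IEEE 754 comparison semantics NaN compares unequal to every value, so the expected result is 1 (True) at the NaN positions regardless of tensor length. A pure-Python sketch of that expectation, where `ne_scalar` is a hypothetical helper and not a PyTorch function:

```python
import math


def ne_scalar(values, other):
    """Elementwise `!=` following IEEE 754: NaN compares unequal to everything."""
    return [value != other for value in values]


values = [float("-inf"), 0.3516, math.nan, float("inf")]
result = ne_scalar(values, 45)
print(result)  # [True, True, True, True]
```

By this reference, the behavior reported for tensors with fewer than 32 elements is the correct one.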

### Versions

Collecting environment information...

PyTorch version: 1.11.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

CMake version: version 3.22.5

Libc version: glibc-2.26

Python version: 3.7.13 (default, Apr 24 2022, 01:04:09) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.188+-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: False

CUDA runtime version: 11.1.105

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.21.6

[pip3] torch==1.11.0+cu113

[pip3] torchaudio==0.11.0+cu113

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.12.0

[pip3] torchvision==0.12.0+cu113

[conda] Could not collect

cc @ezyang @gchanan @zou3519

| 3 |

5,313 | 80,824 |

When running GPT training with Megatron, the program quits due to torch.distributed.elastic.agent.server.api: Received 1 death signal, shutting down workers

|

oncall: distributed, module: elastic

|

### 🐛 Describe the bug

1.When running GPT trainning with megatron, the program quit due to torch.distributed.elastic.agent.server.api:Received 1 death signal, shutting down workers

2.code

Megatron-LM github branch master and I changed /Megatron-LM/megatron/tokenizer/bert_tokenization.py and /Megatron-LM/megatron/tokenizer/tokenizer.py for berttokenizer data preprocess needs.

[tokenizer.zip](https://github.com/NVIDIA/Megatron-LM/files/9035644/tokenizer.zip)

[bert_tokenization.zip](https://github.com/NVIDIA/Megatron-LM/files/9035645/bert_tokenization.zip)

3.training data ~103MB

[vocab_processed.txt](https://github.com/NVIDIA/Megatron-LM/files/9035635/vocab_processed.txt)

[my-gpt2_test_0704_text_document.zip](https://github.com/NVIDIA/Megatron-LM/files/9035638/my-gpt2_test_0704_text_document.zip)

my-gpt2_test_0704_text_document.bin is ~103MB, which exceeds the attachment size limit; I can send it if you need it.

4.bash 0704_gpt_train.sh

[0704_gpt_train.zip](https://github.com/NVIDIA/Megatron-LM/files/9035651/0704_gpt_train.zip)

5.env:

linux:Linux version 4.15.0-167-generic (buildd@lcy02-amd64-045) (gcc version 7.5.0 (Ubuntu 7.5.0-3ubuntu1~18.04))

[python env.txt](https://github.com/pytorch/pytorch/files/9035632/python.env.txt)

6.error log:

[0704.log](https://github.com/pytorch/pytorch/files/9035617/0704.log)

### Versions

Collecting environment information...

PyTorch version: 1.11.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.27

Python version: 3.8.13 (default, Mar 28 2022, 11:38:47) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-4.15.0-167-generic-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: 10.2.89

GPU models and configuration:

GPU 0: Tesla V100S-PCIE-32GB

GPU 1: Tesla V100S-PCIE-32GB

GPU 2: Tesla V100S-PCIE-32GB

GPU 3: Tesla V100S-PCIE-32GB

GPU 4: Tesla V100S-PCIE-32GB

GPU 5: Tesla V100S-PCIE-32GB

GPU 6: Tesla V100S-PCIE-32GB

GPU 7: Tesla V100S-PCIE-32GB

Nvidia driver version: 470.103.01

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.2.4

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.2.4

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.2.4

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.2.4

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.2.4

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.2.4

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.2.4

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.3

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl defaults

[conda] cudatoolkit 10.2.89 h713d32c_10 conda-forge

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640 defaults

[conda] mkl-service 2.4.0 py38h95df7f1_0 conda-forge

[conda] mkl_fft 1.3.1 py38h8666266_1 conda-forge

[conda] mkl_random 1.2.2 py38h1abd341_0 conda-forge

[conda] numpy 1.22.3 py38he7a7128_0 defaults

[conda] numpy-base 1.22.3 py38hf524024_0 defaults

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torch 1.11.0 pypi_0 pypi

[conda] torchaudio 0.11.0 py38_cu102 pytorch

[conda] torchvision 0.12.0 py38_cu102 pytorch

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501

| 1 |

5,314 | 80,821 |

Add typing support to ModuleList and ModuleDict

|

module: typing, triaged

|

### 🚀 The feature, motivation and pitch

Currently, the containers `nn.ModuleList` and `nn.ModuleDict` are typing-unaware, i.e. given this:

```python
class A(nn.Module):
    def __init__(self):
        super().__init__()  # required before assigning submodules
        self.my_modules = nn.ModuleList([nn.Linear(1, 1) for _ in range(10)])
```

`self.my_modules[i]` is treated as `nn.Module`, not as `nn.Linear`. For example, VSCode complains about snippets like `function_that_expects_tensor(self.my_modules[i].weight)`, because it thinks that `.weight` can be either a `Tensor` or an `nn.Module`.

What I propose:

```python
from collections.abc import MutableSequence
from typing import TypeVar

ModuleListValue = TypeVar('ModuleListValue', bound=nn.Module)

class ModuleList(Module, MutableSequence[ModuleListValue]):
    # now, some methods can be typed, e.g.:
    def __getitem__(...) -> ModuleListValue:
        ...

    ...
```

For `nn.ModuleDict`, it is a bit more complicated, since there are two different patterns:

- (A) `dict`-like: the set of keys is not fixed, all values are modules of the same type (e.g. `nn.Linear`)

- (B) `TypedDict`-like: the set of keys is fixed, the values can be of different types (e.g. `{'linear': nn.Linear(...), 'relu': nn.ReLU}`).

(A) can be implemented similarly to the previous example:

```python
...

class ModuleDict(Module, MutableMapping[str, ModuleDictValue]):
    ...
```

In fact, this can cover (B) as well in a very limited way (by setting `ModuleDictValue=nn.Module`). It is unclear to me how to implement a fully functioning (B): it looks like we need something like `TypedMutableMapping`, but there is no such thing in `typing`. So I would start with `MutableMapping` and add `TypedModuleDict` when it becomes technically possible.
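
To make the list proposal concrete, here is a minimal sketch that runs without torch. `Module` and `Linear` are stand-ins for `nn.Module` and `nn.Linear`, and `TypedModuleList` is a hypothetical name; none of this is the actual PyTorch implementation. The slice overload of `__getitem__` is omitted for brevity.

```python
from typing import Iterable, List, MutableSequence, TypeVar


class Module:
    """Stand-in for nn.Module so the sketch runs without torch."""


class Linear(Module):
    """Stand-in for nn.Linear; it only carries a `weight` attribute."""

    def __init__(self, in_features: int, out_features: int) -> None:
        self.weight = [[0.0] * in_features for _ in range(out_features)]


ModuleListValue = TypeVar("ModuleListValue", bound=Module)


class TypedModuleList(Module, MutableSequence[ModuleListValue]):
    """Hypothetical generic container; the element type is a type parameter."""

    def __init__(self, modules: Iterable[ModuleListValue] = ()) -> None:
        self._items: List[ModuleListValue] = list(modules)

    def __getitem__(self, index: int) -> ModuleListValue:
        return self._items[index]

    def __setitem__(self, index: int, value: ModuleListValue) -> None:
        self._items[index] = value

    def __delitem__(self, index: int) -> None:
        del self._items[index]

    def __len__(self) -> int:
        return len(self._items)

    def insert(self, index: int, value: ModuleListValue) -> None:
        self._items.insert(index, value)


# A type checker can now infer TypedModuleList[Linear] here,
# so `layers[0].weight` resolves against Linear rather than Module.
layers = TypedModuleList([Linear(1, 1) for _ in range(10)])
first_weight = layers[0].weight
```

Inheriting from `MutableSequence` also provides `append`, `extend`, `pop` and friends for free once the five methods above are defined.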

### Alternatives

_No response_

### Additional context

_No response_

cc @ezyang @malfet @rgommers @xuzhao9 @gramster

| 4 |

5,315 | 80,808 |

The result of doing a dot product between two vectors, using einsum, depends on another unrelated vector

|

triaged, module: numerical-reproducibility

|

### 🐛 Describe the bug

I have two tensors, `x` and `y`. The first has shape `(2, 3, 2)`, the second has shape `(34, 2)`. I use `einsum` to calculate the dot product between each of the six 2-dimensional vectors that lie in the last dimension of `x`, and each of the 34 vectors that lie in the last dimension of `y`. The bug is that the result of the dot product between `x[0, 0]` and `y[0]` changes if we ignore the last vector of `y`, i.e. if we take `y[:33]` instead of `y`. This is undesired behavior (I think).

See here:

```python

from torch import tensor, zeros, einsum

x = zeros((2, 3, 2))

x[0, 0, 0] = 1.0791796445846558

x[0, 0, 1] = 0.30579063296318054

y = zeros((34, 2))

y[0, 0] = -0.14987720549106598

y[0, 1] = 0.9887046217918396

# the following two numbers should be equal, but they are not.

# the expressions differ in that the second one uses y[:33]

a = einsum('dhb,nb->ndh', x, y )[0, 0, 0].item() # =0.14059218764305115

b = einsum('dhb,nb->ndh', x, y[:33])[0, 0, 0].item() # =0.14059217274188995

# returns False

a == b

```

I believe this is a minimal example (at least, local minimum). If I take `x` to be 1d or 2d instead of 3d, the bug does not occur. If I take `x` and `y` to have last dimension of size 1, the bug does not occur. If I change any of the non-zero entries of `x` and `y` to value zero, the bug does not occur. If I do a "manual" dot product instead, like this: `(x[None,...]*y[:33,None,None,:]).sum(3)[0,1,0].item()`, the bug does not occur (and we get the value `0.14059217274188995`, equal to `b` above). If I put the tensors on GPU (`.to('cuda')`), the bug does not occur (and again we get the value of `b` above). If I use numpy, the bug does not occur (but we get a different value: `0.14059218275815866`).
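
The reported numbers can be compared without PyTorch. The sketch below uses only the three values quoted above and assumes `a` and `b` come from float32 tensors (the default dtype of `zeros`); it measures their distance in units of float32 spacing. `to_f32` and `ULP` are illustrative names, not part of any library.

```python
import math
import struct

a = 0.14059218764305115      # einsum with the full y, as reported
b = 0.14059217274188995      # einsum with y[:33], as reported
ref64 = 0.14059218275815866  # NumPy value quoted in the report


def to_f32(value):
    """Round a Python float to the nearest float32 and back."""
    return struct.unpack("f", struct.pack("f", value))[0]


# Spacing between neighbouring float32 values in the interval [0.125, 0.25).
ULP = 2.0 ** -26

steps_apart = (a - b) / ULP
print("a and b are float32 values:", to_f32(a) == a, to_f32(b) == b)
print("distance in float32 steps:", steps_apart)
print("NumPy value lies between them:", b < ref64 < a)
print("equal within tolerance:", math.isclose(a, b, rel_tol=1e-6))
```

A distance of a single float32 step would be consistent with the two calls rounding in a different order. That is only a plausible reading; it does not explain why the result depends on the size of `y`. In that case, comparing with a tolerance (`math.isclose`, or `torch.allclose` for tensors) is more robust than `==`.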

### Versions

PyTorch version: 1.11.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: 6.0.0-1ubuntu2 (tags/RELEASE_600/final)

CMake version: version 3.22.5

Libc version: glibc-2.26

Python version: 3.7.13 (default, Apr 24 2022, 01:04:09) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-5.4.188+-x86_64-with-Ubuntu-18.04-bionic

Is CUDA available: True

CUDA runtime version: 11.1.105

GPU models and configuration: GPU 0: Tesla T4

Nvidia driver version: 460.32.03

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

/usr/lib/x86_64-linux-gnu/libcudnn.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_adv_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_train.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_infer.so.8.0.5

/usr/lib/x86_64-linux-gnu/libcudnn_ops_train.so.8.0.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.21.6

[pip3] torch==1.11.0+cu113

[pip3] torchaudio==0.11.0+cu113

[pip3] torchsummary==1.5.1

[pip3] torchtext==0.12.0

[pip3] torchvision==0.12.0+cu113

[conda] Could not collect

| 5 |

5,316 | 80,805 |

torch.einsum results in segfault

|

high priority, triage review, oncall: binaries, module: crash, module: openmp, module: multithreading

|

### 🐛 Describe the bug

I experience a segfault when running a simple script like:

```python

import torch

As = torch.randn(3, 2, 5)

Bs = torch.randn(3, 5, 4)

torch.einsum("bij,bjk->bik", As, Bs)

```

Running this results in:

```console

$ python test.py

Segmentation fault: 11

```

Even if I add `-X faulthandler` I don't seem to get any kind of stacktrace to help locate the issue. If someone can give me instructions for how to use gdb I can try to get a backtrace.

### Versions

```console

$ python collect_env.py

Collecting environment information...

PyTorch version: 1.12.0

Is debug build: False

CUDA used to build PyTorch: None

ROCM used to build PyTorch: N/A

OS: macOS 12.4 (arm64)

GCC version: Could not collect

Clang version: 13.1.6 (clang-1316.0.21.2.5)

CMake version: version 3.23.1

Libc version: N/A

Python version: 3.9.13 (main, Jun 18 2022, 21:43:00) [Clang 13.1.6 (clang-1316.0.21.2.5)] (64-bit runtime)

Python platform: macOS-12.4-arm64-arm-64bit

Is CUDA available: False

CUDA runtime version: No CUDA

GPU models and configuration: No CUDA

Nvidia driver version: No CUDA

cuDNN version: No CUDA

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] efficientnet-pytorch==0.6.3

[pip3] mypy==0.931

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.23.0

[pip3] pytorch-lightning==1.6.4

[pip3] pytorch-sphinx-theme==0.0.24

[pip3] segmentation-models-pytorch==0.2.0

[pip3] torch==1.12.0

[pip3] torchmetrics==0.7.2

[pip3] torchvision==0.12.0a0

[conda] Could not collect

```

cc @ezyang @gchanan @zou3519 @seemethere @malfet

| 15 |

5,317 | 80,804 |

`torch.renorm` gives wrong gradient for 0-valued input when `p` is even and `maxnorm=0`.

|

module: autograd, triaged, module: edge cases

|

### 🐛 Describe the bug

`torch.renorm` gives wrong gradient for 0-valued input when `p` is even and `maxnorm=0`.

```py
import torch

def fn(input):
    p = 2
    dim = -1
    maxnorm = 0
    fn_res = torch.renorm(input, p, dim, maxnorm, )
    return fn_res

input = torch.tensor([[0.1, 0.], [0., 0.]], dtype=torch.float64, requires_grad=True)
torch.autograd.gradcheck(fn, (input))
```

```

GradcheckError: Jacobian mismatch for output 0 with respect to input 0,

numerical:tensor([[0., 0., 0., 0.],

[0., 0., 0., 0.],

[0., 0., 0., 0.],

[0., 0., 0., 0.]], dtype=torch.float64)

analytical:tensor([[1., 0., 0., 0.],

[0., 1., 0., 0.],

[0., 0., 1., 0.],

[0., 0., 0., 1.]], dtype=torch.float64)

```

Because `p=2` and `maxnorm=0`, this function should be `f(x) = 0` for every element. Therefore, it should return 0 as the gradient.
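
The expectation above can be checked numerically on plain Python lists. `renorm_row` is a simplified, hypothetical model of the documented behaviour (rescale a row whose p-norm exceeds `maxnorm`, leave it unchanged otherwise); it is not PyTorch's kernel, which may add a small epsilon to the norm.

```python
def renorm_row(row, p, maxnorm):
    """Rescale `row` so its p-norm is at most `maxnorm`; otherwise return it unchanged."""
    norm = sum(abs(v) ** p for v in row) ** (1.0 / p)
    if norm > maxnorm:
        return [v * maxnorm / norm for v in row]
    return list(row)


def slope_at_zero_row(eps=1e-6):
    """Central difference of the first output w.r.t. the first input at the row [0, 0]."""
    plus = renorm_row([eps, 0.0], p=2, maxnorm=0)[0]
    minus = renorm_row([-eps, 0.0], p=2, maxnorm=0)[0]
    return (plus - minus) / (2 * eps)


print(renorm_row([0.0, 0.0], p=2, maxnorm=0))
print(slope_at_zero_row())
```

Under this model every perturbed row is scaled to zero, so the finite-difference slope is zero, in line with the numerical Jacobian in the report. The sketch says nothing about how the analytical Jacobian is computed.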

### Versions

pytorch: 1.11.0

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 0 |

5,318 | 80,803 |

`hardshrink` gives wrong gradient for 0 input when `lambd` is 0.

|

module: autograd, triaged, module: edge cases

|

### 🐛 Describe the bug

`hardshrink` gives wrong gradient for 0-valued input when `lambd` is 0.

```python
import torch

def fn(input):
    fn_res = input.hardshrink(lambd=0.0)
    return fn_res

input = torch.tensor([0.], dtype=torch.float64, requires_grad=True)
torch.autograd.gradcheck(fn, (input))
```

```

torch.autograd.gradcheck.GradcheckError: Jacobian mismatch for output 0 with respect to input 0,

numerical:tensor([[1.]], dtype=torch.float64)

analytical:tensor([[0.]], dtype=torch.float64)

```

Based on the definition of `hardshrink`, it should be `f(x) = x` if `lambd=0`. Thus, it's supposed to return 1 as the gradient when input is 0.
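
A scalar sketch of this argument, in plain Python: `hardshrink` below follows the documented piecewise definition (keep `x` when `|x| > lambd`, otherwise return 0), and `central_difference` is an illustrative helper, not the routine `gradcheck` uses internally.

```python
def hardshrink(x, lambd=0.5):
    """Scalar hardshrink: x when |x| > lambd, otherwise 0."""
    return x if abs(x) > lambd else 0.0


def central_difference(f, x, eps=1e-6):
    """Symmetric finite-difference estimate of f'(x)."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


# With lambd = 0 the function is the identity everywhere (at x = 0 it returns 0 = x),
# so the estimated slope at 0 should be close to 1.
slope_at_zero = central_difference(lambda v: hardshrink(v, lambd=0.0), 0.0)
print(slope_at_zero)
```

This matches the numerical Jacobian in the report; the analytical value of 0 would be what one gets by treating `x == 0` as falling in the "shrunk" branch.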

### Versions

pytorch: 1.11.0

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 0 |

5,319 | 80,776 |

`torch.inverse()` crash in cuda

|

triaged, module: linear algebra, module: correctness (silent)

|

### 🐛 Describe the bug

`tensor.inverse` produces wrong results. The code worked fine until I moved `import torch` to the end of the imports.

Unfortunately, I can't provide a snippet to reproduce the bug. It may be caused by conflicts with other libraries.

The result when it worked correctly:

After I moved `import torch` to the end of the imports, I get:

It works correctly on CPU and with `torch.pinverse`.

### Versions

`torch.__version__`: 1.11.0+cu113

cc @jianyuh @nikitaved @pearu @mruberry @walterddr @IvanYashchuk @xwang233 @Lezcano

| 1 |

5,320 | 80,774 |

RPC: Make RRefProxy callable

|

oncall: distributed, enhancement, module: rpc

|

### 🚀 The feature, motivation and pitch

Executing remote callable objects (including `Module`) currently requires explicitly specifying `__call__` in the RPC command.

Consider:

```Python
import os
import torch
from torch.distributed import rpc

os.environ['MASTER_ADDR'] = 'localhost'
os.environ['MASTER_PORT'] = '29500'

rpc.init_rpc('worker0', world_size=1, rank=0)

class MyModule(torch.nn.Module):
    def forward(self, tensor):
        print(tensor)

mod = rpc.remote(0, MyModule)
t = torch.randn(10)

# Works:
mod.rpc_sync().__call__(t)

# TypeError: 'RRefProxy' object is not callable
mod.rpc_sync()(t)

rpc.shutdown()
```

It would be cleaner if users didn't have to explicitly call double-underscore methods.
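
The behaviour above is what Python does for any proxy that forwards only attribute access: the call syntax `obj(...)` looks up `__call__` on the type, not on the instance, so `__getattr__` is never consulted. The sketch below shows this with plain classes. `Doubler`, `PlainProxy` and `CallableProxy` are hypothetical names, and the assumption that `RRefProxy` forwards through `__getattr__` is mine, not something the report states.

```python
class Doubler:
    """Stand-in for a remote callable object (not a torch class)."""

    def __call__(self, value):
        return 2 * value


class PlainProxy:
    """Hypothetical proxy that forwards attribute access only."""

    def __init__(self, target):
        self._target = target

    def __getattr__(self, name):
        # Only reached when normal lookup fails, e.g. for an explicit `.__call__`.
        return getattr(self._target, name)


class CallableProxy(PlainProxy):
    """Same proxy with `__call__` defined on the class, so `proxy(...)` works."""

    def __call__(self, *args, **kwargs):
        return self._target(*args, **kwargs)


plain = PlainProxy(Doubler())
explicit_result = plain.__call__(21)   # forwarded through __getattr__

try:
    plain(21)                          # implicit lookup bypasses __getattr__
    plain_is_callable = True
except TypeError:
    plain_is_callable = False

callable_result = CallableProxy(Doubler())(21)
print(explicit_result, plain_is_callable, callable_result)
```

Defining `__call__` on the proxy class is therefore one way the requested behaviour could be provided.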

Cheers.

### Alternatives

None

### Additional context

None

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @jjlilley @mrzzd

| 0 |

5,321 | 80,771 |

Anaconda is not a package manager

|

module: docs, triaged

|

### 📚 The doc issue

The documentation in ["Getting Started"](https://pytorch.org/get-started/locally/#start-locally) states:

> "*Anaconda is our recommended package manager since it installs all dependencies.*"

However, Anaconda is not a package manager, but a distribution of Python that includes the Conda package manager. This misusage confuses users and leads to people unnecessarily installing Anaconda when many users are better off with a Miniforge variant, such as Mambaforge.

### Suggest a potential alternative/fix

Please replace "Anaconda" with "Conda".

cc @svekars @holly1238

| 0 |

5,322 | 80,765 |

Let torch.utils.tensorboard support multiprocessing

|

module: multiprocessing, triaged, module: tensorboard

|

### 🚀 The feature, motivation and pitch

In TensorboardX, [GlobalSummaryWriter](https://github.com/lanpa/tensorboardX/blob/df1944916f3aecd22309217af040f2e705997d9c/tensorboardX/global_writer.py) is implemented. I want to use torch.utils.tensorboard with multiprocessing, but my attempt to adapt TensorboardX's code with a simple modification failed. I believe this feature is very important and not difficult to implement. Thank you very much!

### Alternatives

_No response_

### Additional context

_No response_

cc @VitalyFedyunin

| 2 |

5,323 | 80,762 |

`atan2` will gradcheck fail when `other` is a tensor with `int8` dtype

|

module: autograd, triaged, module: edge cases

|

### 🐛 Describe the bug

`atan2` fails gradcheck when `other` is a tensor with `int8` dtype.

```python
import torch

def fn(input):
    other = torch.tensor([[22, 18, 29, 24, 27],
                          [ 3, 11, 23,  1, 19],
                          [17, 26, 11, 26,  2],
                          [22, 11, 21, 23, 29],
                          [ 7, 30, 24, 15, 10]], dtype=torch.int8)
    fn_res = torch.atan2(input, other, )
    return fn_res

input = torch.tensor([[ 0.6021, -0.8055, -0.5270, -0.3233, -0.9129]], dtype=torch.float64, requires_grad=True)
torch.autograd.gradcheck(fn, (input))
```

It fails with:

```

GradcheckError: Jacobian mismatch for output 0 with respect to input 0,

numerical:tensor([[0.0454, 0.0000, 0.0000, 0.0000, 0.0000, 0.3204, 0.0000, 0.0000, 0.0000,

0.0000, 0.0587, 0.0000, 0.0000, 0.0000, 0.0000, 0.0454, 0.0000, 0.0000,

0.0000, 0.0000, 0.1418, 0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0554, 0.0000, 0.0000, 0.0000, 0.0000, 0.0904, 0.0000, 0.0000,

0.0000, 0.0000, 0.0384, 0.0000, 0.0000, 0.0000, 0.0000, 0.0904, 0.0000,

0.0000, 0.0000, 0.0000, 0.0333, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0345, 0.0000, 0.0000, 0.0000, 0.0000, 0.0435, 0.0000,

0.0000, 0.0000, 0.0000, 0.0907, 0.0000, 0.0000, 0.0000, 0.0000, 0.0476,

0.0000, 0.0000, 0.0000, 0.0000, 0.0416, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0417, 0.0000, 0.0000, 0.0000, 0.0000, 0.9054,

0.0000, 0.0000, 0.0000, 0.0000, 0.0385, 0.0000, 0.0000, 0.0000, 0.0000,

0.0435, 0.0000, 0.0000, 0.0000, 0.0000, 0.0666, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0370, 0.0000, 0.0000, 0.0000, 0.0000,

0.0525, 0.0000, 0.0000, 0.0000, 0.0000, 0.4138, 0.0000, 0.0000, 0.0000,

0.0000, 0.0344, 0.0000, 0.0000, 0.0000, 0.0000, 0.0992]],

dtype=torch.float64)

analytical:tensor([[-0.7960, 0.0000, 0.0000, 0.0000, 0.0000, 0.3204, 0.0000, 0.0000,

0.0000, 0.0000, 0.5096, 0.0000, 0.0000, 0.0000, 0.0000, -0.7960,

0.0000, 0.0000, 0.0000, 0.0000, 0.1418, 0.0000, 0.0000, 0.0000,

0.0000],

[ 0.0000, 0.2622, 0.0000, 0.0000, 0.0000, 0.0000, 0.0904, 0.0000,

0.0000, 0.0000, 0.0000, -0.2846, 0.0000, 0.0000, 0.0000, 0.0000,

0.0904, 0.0000, 0.0000, 0.0000, 0.0000, -0.2432, 0.0000, 0.0000,

0.0000],

[ 0.0000, 0.0000, 0.3958, 0.0000, 0.0000, 0.0000, 0.0000, 1.3312,

0.0000, 0.0000, 0.0000, 0.0000, 0.0907, 0.0000, 0.0000, 0.0000,

0.0000, -0.2969, 0.0000, 0.0000, 0.0000, 0.0000, 0.3734, 0.0000,

0.0000],

[ 0.0000, 0.0000, 0.0000, 0.3744, 0.0000, 0.0000, 0.0000, 0.0000,

0.9054, 0.0000, 0.0000, 0.0000, 0.0000, -0.2829, 0.0000, 0.0000,

0.0000, 0.0000, 1.3447, 0.0000, 0.0000, 0.0000, 0.0000, -0.4855,

0.0000],

[ 0.0000, 0.0000, 0.0000, 0.0000, -0.7074, 0.0000, 0.0000, 0.0000,

0.0000, 0.1795, 0.0000, 0.0000, 0.0000, 0.0000, 0.4138, 0.0000,

0.0000, 0.0000, 0.0000, 0.3928, 0.0000, 0.0000, 0.0000, 0.0000,

0.0992]], dtype=torch.float64)

```

But when `other` is `int16` or `int32`, gradcheck passes:

```python
import torch

def fn(input):
    other = torch.tensor([[22, 18, 29, 24, 27],
                          [ 3, 11, 23,  1, 19],
                          [17, 26, 11, 26,  2],
                          [22, 11, 21, 23, 29],
                          [ 7, 30, 24, 15, 10]], dtype=torch.int16)
    fn_res = torch.atan2(input, other, )
    return fn_res

input = torch.tensor([[ 0.6021, -0.8055, -0.5270, -0.3233, -0.9129]], dtype=torch.float64, requires_grad=True)
torch.autograd.gradcheck(fn, (input))
# True
```
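
One hypothesis that fits the reported numbers (not confirmed anywhere in the report) is that the backward formula squares `other` while it is still `int8`, so values above 11 overflow. The sketch below evaluates the derivative of `atan2(input, other)` with respect to `input`, which is `other / (input**2 + other**2)`, once exactly and once with the square wrapped to 8 bits. `wrap_int8` and `grad_with_int8_square` are hypothetical helpers written for this illustration.

```python
def wrap_int8(value):
    """Reduce an integer to the signed 8-bit range, as two's-complement overflow would."""
    return (value + 128) % 256 - 128


def grad_exact(x, y):
    """d/dx atan2(x, y) = y / (x**2 + y**2)."""
    return y / (x * x + y * y)


def grad_with_int8_square(x, y):
    """Same formula, but with y*y wrapped to int8 first (hypothetical failure mode)."""
    return y / (x * x + wrap_int8(y * y))


x = 0.6021  # first input element from the report
for y in (22, 3, 17, 22, 7):  # first column of `other`
    print(y, round(grad_exact(x, y), 4), round(grad_with_int8_square(x, y), 4))
```

For the first input element, the exact column agrees with the numerical Jacobian (0.0454, 0.3204, 0.0587, 0.0454, 0.1418) and the wrapped column with the analytical one (-0.7960, 0.3204, 0.5096, -0.7960, 0.1418). The two agree only where `other**2` still fits in `int8`, which would also explain why `int16` and `int32` pass for these values.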

### Versions

pytorch: 1.11.0

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 0 |

5,324 | 80,761 |

`det` will return wrong gradient for `1x1` matrix with 0 value.

|

module: autograd, triaged, module: edge cases

|

### 🐛 Describe the bug

`det` will return wrong gradient for `1x1` matrix with 0 value.

```python

import torch

input = torch.tensor([[0.]], dtype=torch.float64, requires_grad=True)

torch.det(input).backward()

print(input.grad)

# tensor([[0.]], dtype=torch.float64)

```

The correct gradient should be 1. By contrast, when the value isn't zero, it returns the correct gradient.

```python

import torch

input = torch.tensor([[0.1]], dtype=torch.float64, requires_grad=True)

torch.det(input).backward()

print(input.grad)

# tensor([[1.]], dtype=torch.float64)

```
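
For reference, the derivative of a determinant with respect to entry (i, j) is the corresponding cofactor, which is well defined even when the matrix is singular. The pure-Python sketch below computes it by Laplace expansion. `det` and `det_gradient` are illustrative helpers, and the sketch makes no claim about which formula PyTorch uses internally.

```python
def det(matrix):
    """Determinant by Laplace expansion along the first row (fine for tiny matrices)."""
    if len(matrix) == 0:
        return 1.0  # determinant of the empty matrix
    total = 0.0
    for j, value in enumerate(matrix[0]):
        minor = [row[:j] + row[j + 1:] for row in matrix[1:]]
        total += (-1) ** j * value * det(minor)
    return total


def det_gradient(matrix):
    """Matrix of cofactors: entry (i, j) is d det / d matrix[i][j]."""
    n = len(matrix)
    grad = []
    for i in range(n):
        grad_row = []
        for j in range(n):
            minor = [row[:j] + row[j + 1:] for k, row in enumerate(matrix) if k != i]
            grad_row.append((-1) ** (i + j) * det(minor))
        grad.append(grad_row)
    return grad


print(det_gradient([[0.0]]))
print(det_gradient([[1.0, 2.0], [2.0, 4.0]]))  # singular 2x2 example
```

For a 1x1 matrix the only cofactor is the determinant of the empty matrix, i.e. 1, regardless of the entry's value.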

### Versions

pytorch: 1.11.0

cc @ezyang @albanD @zou3519 @gqchen @pearu @nikitaved @soulitzer @Lezcano @Varal7

| 1 |

5,325 | 80,756 |

[ONNX] RuntimeError: 0 INTERNAL ASSERT FAILED at "/pytorch/torch/csrc/jit/ir/ir.cpp":518

|

oncall: jit, module: onnx, onnx-needs-info

|

### 🐛 Describe the bug

I want to export an onnx model which contains a dynamic for loop determined by the input tensor.

However, I got the bug below.

# Full Error Information

```

Traceback (most recent call last):

File "d:\End2End\test_onnx.py", line 22, in <module>

export(model_script, inputs, 'script.onnx',opset_version=11, example_outputs=output)

File "D:\anaconda\envs\mmlab\lib\site-packages\torch\onnx\__init__.py", line 271, in export

return utils.export(model, args, f, export_params, verbose, training,

File "D:\anaconda\envs\mmlab\lib\site-packages\torch\onnx\utils.py", line 88, in export

_export(model, args, f, export_params, verbose, training, input_names, output_names,

File "D:\anaconda\envs\mmlab\lib\site-packages\torch\onnx\utils.py", line 694, in _export

_model_to_graph(model, args, verbose, input_names,

File "D:\anaconda\envs\mmlab\lib\site-packages\torch\onnx\utils.py", line 463, in _model_to_graph

graph = _optimize_graph(graph, operator_export_type,

File "D:\anaconda\envs\mmlab\lib\site-packages\torch\onnx\utils.py", line 174, in _optimize_graph

torch._C._jit_pass_lint(graph)

RuntimeError: 0 INTERNAL ASSERT FAILED at "..\\torch\\csrc\\jit\\ir\\ir.cpp":518, please report a bug to PyTorch. 20 not in scope

```

# code to reproduce the bug.

```python
import torch
from torch import nn, jit
from typing import List
from torch.onnx import export

class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv3d(1, 1, 3, 1, 1)

    def forward(self, x):
        outputs = jit.annotate(List[torch.Tensor], [])
        for i in range(x.size(0)):
            outputs.append(self.conv(x[i].unsqueeze(0)))
        return torch.stack(outputs, 0).squeeze()

inputs = torch.rand((3, 1, 5, 5, 5))
model = Model()
with torch.no_grad():
    output = model(inputs)

model_script = jit.script(model)
export(model_script, inputs, 'script.onnx',
       opset_version=11, example_outputs=output)
```

### Versions

PyTorch version: 1.8.1+cu111

Is debug build: False

CUDA used to build PyTorch: 11.1

ROCM used to build PyTorch: N/A

OS: Microsoft Windows 10 Home China

GCC version: (x86_64-posix-seh-rev0, Built by MinGW-W64 project) 8.1.0

Clang version: Could not collect

CMake version: version 3.21.1

Libc version: N/A

Python version: 3.8.13 (default, Mar 28 2022, 06:59:08) [MSC v.1916 64 bit (AMD64)] (64-bit runtime)

Python platform: Windows-10-10.0.19044-SP0

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3060 Laptop GPU

Nvidia driver version: 466.81

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.22.4

[pip3] torch==1.8.1+cu111

[pip3] torchvision==0.9.1+cu111

[conda] numpy 1.22.4 <pip>

[conda] torch 1.8.1+cu111 <pip>

[conda] torchvision 0.9.1+cu111 <pip>

| 1 |

5,326 | 80,753 |

CapabilityBasedPartitioner requires is_node_supported to only return true for CALLABLE_NODE_OPS but no assertion for this invariant exists

|

triaged, module: fx, oncall: pt2

|

### 🐛 Describe the bug

Steps to reproduce:

1. Write an OperatorSupport that always returns True

2. Try to run the partitioner on a graph

Expected: an error, or it fuses the entire graph

Actual: the resulting graph is malformed

@SherlockNoMad

### Versions

master

| 2 |

5,327 | 92,033 |

Unable to use vmap atop torch.distribution functionality

|

high priority, triaged, module: functorch

|

Hello! I'm working on an application that requires computing a neural net's weight Jacobians through a torch.distribution log probability. Minimal example code is shown below:

```python
import torch
from torch.distributions import Independent, Normal
from functorch import make_functional_with_buffers, jacrev, vmap

def compute_fischer_stateless_model(fmodel, params, buffers, input, target):
    input = input.unsqueeze(0)
    target = target.unsqueeze(0)
    pred = fmodel(params, buffers, input)
    normal = Independent(Normal(loc=pred, scale=torch.ones_like(pred)), reinterpreted_batch_ndims=1)
    log_prob = normal.log_prob(target)
    return log_prob

# Instantiate model, inputs, targets, etc.

fmodel, params, buffers = make_functional_with_buffers(model)
ft_compute_jac = jacrev(compute_fischer_stateless_model, argnums=1)
ft_compute_sample_jac = vmap(ft_compute_jac, in_dims=(None, None, None, 0, 0))
jac = ft_compute_sample_jac(fmodel, params, buffers, inputs, targets)
```

Executing my script returns a `RuntimeError` error of the form:

*RuntimeError: vmap: It looks like you're either (1) calling .item() on a Tensor or (2) attempting to use a Tensor in some data-dependent control flow or (3) encountering this error in PyTorch internals. For (1): we don't support vmap over calling .item() on a Tensor, please try to rewrite what you're doing with other operations. For (2): If you're doing some control flow instead, we don't support that yet, please shout over at https://github.com/pytorch/functorch/issues/257 . For (3): please file an issue.*

Any help would be appreciated -- thanks in advance for your time!

cc @ezyang @gchanan @zou3519 @Chillee @samdow @soumith @kshitij12345 @janeyx99

| 9 |

5,328 | 80,742 |

Add TorchDynamo as a submodule to Pytorch?

|

module: build, triaged

|

### 🚀 The feature, motivation and pitch

It has been hard to recommend using TorchDynamo with PyTorch: TorchDynamo has no official release, and users often want to use specific versions of PyTorch (Nvidia releases containers on a monthly basis from TOT), yet TorchDynamo currently requires PyTorch TOT and can break when the two are not in sync.

I wanted to propose adding TorchDynamo as a submodule to Pytorch.

### Alternatives

_No response_

### Additional context

_No response_

cc @malfet @seemethere

| 22 |

5,329 | 80,738 |

Output for `aten::_native_multi_head_attention` appears inconsistent with entry in `native_functions.yaml`

|

oncall: transformer/mha

|

### 🐛 Describe the bug

The following python code is an invocation of `aten::_native_multi_head_attention` that outputs a `(Tensor, None)` tuple.

```python
import torch

embed_dim = 8
num_heads = 4
bs = 4
sl = 2

qkv = torch.nn.Linear(embed_dim, embed_dim * 3, dtype=torch.float32)
proj = torch.nn.Linear(embed_dim, embed_dim, dtype=torch.float32)

q = torch.randn(bs, sl, embed_dim) * 10
k = torch.randn(bs, sl, embed_dim) * 10
v = torch.randn(bs, sl, embed_dim) * 10

mha = torch.ops.aten._native_multi_head_attention(
    q,
    k,
    v,
    embed_dim,
    num_heads,
    qkv.weight,
    qkv.bias,
    proj.weight,
    proj.bias,
    need_weights=False,
    average_attn_weights=False,
)

print(mha)
```

The following is an example output.

```

(tensor([[[ 1.6427, 2.0966, 2.4298, 1.6536, 2.9116, -0.6659, 0.0086,

4.0757],

[ 2.0386, 0.8152, -0.8718, 1.7295, 0.9999, -1.8865, -2.7697,

1.9216]],

[[ 4.0717, 0.0476, -0.6383, 3.1022, -2.5480, 2.0922, -4.1062,

-0.5034],

[ 2.3662, 0.3523, -1.0895, 1.9332, 0.3525, 0.4775, -2.1356,

0.4972]],

[[-5.0851, 3.8904, 2.9651, -3.1131, 6.5247, -2.5286, -1.4031,

1.0763],

[-2.5247, 1.5687, -1.5536, 1.0382, 4.8081, -2.2505, 1.6698,

2.1023]],

[[-1.7481, 1.0500, 2.4167, -1.5026, 5.5205, -3.3177, 3.3927,

4.1006],

[-3.4155, 2.5501, 4.6239, -8.3866, 4.6514, -2.5655, 5.8211,

2.1764]]], grad_fn=<NotImplemented>), None)

```

Note that the second value in the returned tuple is `None`. This appears to contradict the entry for `_native_multi_head_attention` in `native_functions.yaml` which indicates that it will always return a tuple of tensors `(Tensor, Tensor)`.

```
- func: _native_multi_head_attention(Tensor query, Tensor key, Tensor value, int embed_dim, int num_head, Tensor qkv_weight, Tensor qkv_bias, Tensor proj_weight, Tensor proj_bias, Tensor? mask=None, bool need_weights=True, bool average_attn_weights=True) -> (Tensor, Tensor)
  variants: function
  dispatch:
    CPU, CUDA, NestedTensorCPU, NestedTensorCUDA: native_multi_head_attention
```

Please let me know if I have misunderstood something regarding this function signature and this is intended behavior.

### Versions

```

PyTorch version: 1.12.0+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Arch Linux (x86_64)

GCC version: (GCC) 12.1.0

Clang version: 13.0.1

CMake version: version 3.23.2

Libc version: glibc-2.35

Python version: 3.10.5 (main, Jun 6 2022, 18:49:26) [GCC 12.1.0] (64-bit runtime)

Python platform: Linux-5.18.5-arch1-1-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 2070 SUPER

Nvidia driver version: 515.48.07

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.0

[pip3] torch==1.12.0

[conda] Could not collect

```

cc @jbschlosser @bhosmer @cpuhrsch @erichan1

| 2 |

5,330 | 80,606 |

[jit.script] jit.script give uncertain results using torch.half

|

oncall: jit, module: nvfuser

|

### 🐛 Describe the bug

`torch.jit.script` gives inconsistent results with `torch.half`. The result of the first call to the function differs from that of the second call, but all calls from the second onward agree. Here is the code to reproduce.

```
import math
import torch

@torch.jit.script
def f(x):
    return torch.tanh(math.sqrt(2.0 / math.pi) * x)

x = torch.rand((32,), dtype=torch.half).cuda()

res = []
for i in range(5):
    res.append(f(x))

for i in range(5):
    # j starts at i so that the i == j rows in the output are produced too
    for j in range(i, 5):
        print(f"{i} {j}: {(res[i]-res[j]).nonzero().any()}")
```

the result is:

```

0 0: False

0 1: True

0 2: True

0 3: True

0 4: True

1 1: False

1 2: False

1 3: False

1 4: False

2 2: False

2 3: False

2 4: False

3 3: False

3 4: False

4 4: False

```

Please note that the problem does not occur with `torch.float`, nor does it occur without `@torch.jit.script`.

### Versions

Collecting environment information...

PyTorch version: 1.12.0a0+git67ece03

Is debug build: False

CUDA used to build PyTorch: 11.0

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.6 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: version 3.22.5

Libc version: glibc-2.27

Python version: 3.9.7 (default, Sep 16 2021, 13:09:58) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-3.10.0-1127.el7.x86_64-x86_64-with-glibc2.27

Is CUDA available: True

CUDA runtime version: 11.0.221

GPU models and configuration:

GPU 0: NVIDIA A100-SXM4-40GB

GPU 1: NVIDIA A100-SXM4-40GB

GPU 2: NVIDIA A100-SXM4-40GB

GPU 3: NVIDIA A100-SXM4-40GB

GPU 4: NVIDIA A100-SXM4-40GB

GPU 5: NVIDIA A100-SXM4-40GB

GPU 6: NVIDIA A100-SXM4-40GB

GPU 7: NVIDIA A100-SXM4-40GB

Nvidia driver version: 470.103.01

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.20.3

[pip3] torch==1.12.0a0+git67ece03

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] _pytorch_select 0.1 cpu_0 https://10.251.102.1/anaconda/pkgs/main

[conda] blas 1.0 mkl https://10.251.102.1/anaconda/pkgs/main

[conda] cudatoolkit 11.3.1 h2bc3f7f_2 https://10.251.102.1/anaconda/pkgs/main

[conda] libmklml 2019.0.5 h06a4308_0 https://10.251.102.1/anaconda/pkgs/main

[conda] mkl 2020.2 256 https://10.251.102.1/anaconda/pkgs/main

[conda] numpy 1.20.3 py39hdbf815f_1 https://10.251.102.1/anaconda/cloud/conda-forge

[conda] pytorch 1.11.0 py3.9_cuda11.3_cudnn8.2.0_0 https://10.251.102.1/anaconda/cloud/pytorch

[conda] pytorch-mutex 1.0 cuda https://10.251.102.1/anaconda/cloud/pytorch

[conda] torch 1.12.0a0+git67ece03 pypi_0 pypi

[conda] torchaudio 0.11.0 py39_cu113 https://10.251.102.1/anaconda/cloud/pytorch

[conda] torchvision 0.12.0 py39_cu113 https://10.251.102.1/anaconda/cloud/pytorch

| 2 |

5,331 | 80,605 |

pad_sequence and pack_sequence should support length zero tensors

|

module: rnn, triaged, enhancement

|

### 🚀 The feature, motivation and pitch

This situation occurs naturally when training on irregularly sampled time series data with a fixed real-time sliding window, i.e. the model receives all observations made within a time interval of, say, 2 hours.

Since the time series is irregular, it can happen that there are no observations in this time-slice, hence the resulting tensor has a 0-length dimension.

## MWE

```python

import torch

from torch.nn.utils.rnn import pack_sequence, pad_sequence

tensors = [torch.randn(abs(n - 3)) for n in range(6)]

pad_sequence(tensors, batch_first=True, padding_value=float("nan"))

pack_sequence(tensors, enforce_sorted=False)

```
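Until zero-length inputs are handled natively, one possible workaround is sketched below. It assumes 1-D sequences as in the MWE; the helper names `pad_allowing_empty` and `pack_nonempty` are hypothetical and not part of `torch.nn.utils.rnn`. Padding is done by hand, and packing simply skips the empty sequences while remembering which batch positions were kept.

```python
import torch
from torch.nn.utils.rnn import pack_sequence


def pad_allowing_empty(tensors, padding_value=0.0):
    """Pad 1-D tensors to a common length; empty inputs become all-padding rows."""
    max_len = max(t.shape[0] for t in tensors)
    out = torch.full((len(tensors), max_len), padding_value, dtype=tensors[0].dtype)
    for row, t in enumerate(tensors):
        out[row, : t.shape[0]] = t  # no-op for zero-length tensors
    return out


def pack_nonempty(tensors):
    """Pack only the non-empty sequences and return their original batch positions."""
    kept = [i for i, t in enumerate(tensors) if t.shape[0] > 0]
    packed = pack_sequence([tensors[i] for i in kept], enforce_sorted=False)
    return packed, kept


tensors = [torch.randn(abs(n - 3)) for n in range(6)]  # lengths 3, 2, 1, 0, 1, 2
padded = pad_allowing_empty(tensors, padding_value=float("nan"))
packed, kept = pack_nonempty(tensors)
```

The caller has to scatter the RNN outputs back to the original batch positions using `kept`, which is the bookkeeping that native support would make unnecessary.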

### Alternatives

_No response_

### Additional context

_No response_

cc @zou3519

| 0 |

5,332 | 80,595 |

Overlapping Optimizer.step() with DDP backward

|

oncall: distributed, module: optimizer

|

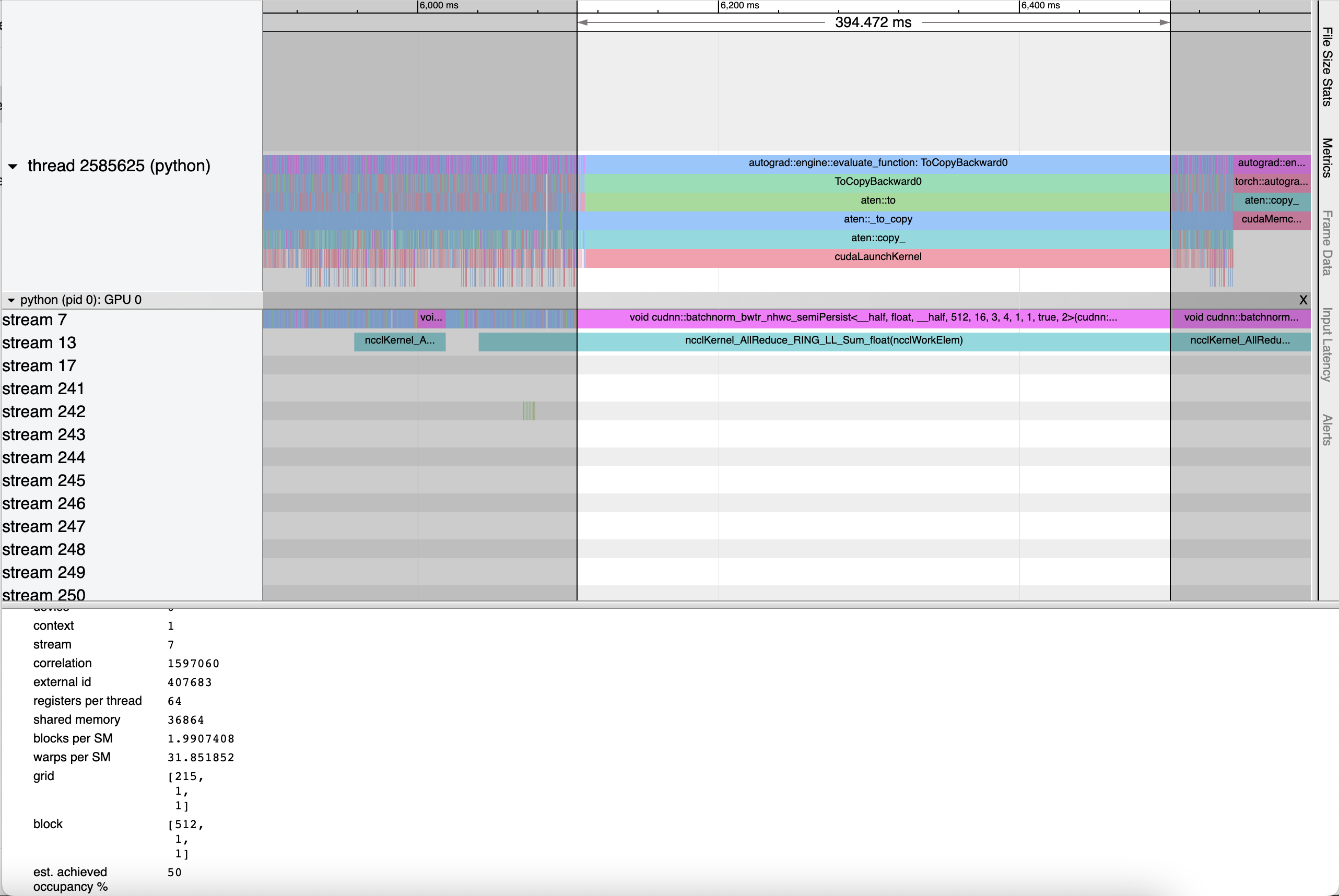

### 🚀 The feature, motivation and pitch

DDP runs `all_reduce` on parameter gradients in `buckets`, which means that some parameters get their final gradients earlier than others. This gives the `Optimizer` an opportunity to update part of the parameters before `backward` has finished. Overlapping part of `Optimizer.step` with DDP `backward` is therefore possible and may lead to higher parallelism or even hide some of the `all_reduce` cost.

### Alternatives

This re-scheduling may be achieved by compilation optimizations like `LazyTensorCore` and `XLA`.

### Additional context

<img width="1160" alt="Screenshot 2022-06-30 3.58.35 PM" src="https://user-images.githubusercontent.com/73142299/176624701-e2ec53da-5240-42e9-8ca2-7c5e71f6b62a.png">

As the timeline above shows, if `Optimizer.step` can start working on the parameters that already have final gradients, we may save almost all of the cost of `Optimizer.step`.

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @SciPioneer @H-Huang @kwen2501 @vincentqb @jbschlosser @albanD

| 5 |

5,333 | 80,594 |

RuntimeError: DataLoader worker (pid 22822) is killed by signal: Aborted.

|

module: dataloader, triaged

|

### 🐛 Describe the bug

I set `num_workers=4` in the DataLoader. The training process runs normally, but an error is reported in the validation phase.

### Versions

torch 1.11.0

torchvision 0.12.0

cc @SsnL @VitalyFedyunin @ejguan @NivekT

| 3 |

5,334 | 80,588 |

Semi-reproducible random torch.baddbmm NaNs

|

needs reproduction, triaged, module: NaNs and Infs

|

### 🐛 Describe the bug

The following code snippet appears to cause `torch.baddbmm` to randomly generate NaNs when run *on CPU*

```

import torch

# Repeat the same 1x1x1 computation; with beta=0 the result should always be 1.
for i in range(10000):
    out = torch.baddbmm(
        torch.zeros([1, 1, 1], dtype=torch.float32),
        torch.FloatTensor([[[1]]]),
        torch.FloatTensor([[[1]]]),
        beta=0,
    )
    assert not torch.isnan(out).any(), i
# AssertionError: 9886
# (or some other number)

```

Despite running the same calculation each time, it often fails not on the first try, but many tries in.

(Sometimes I need to run the loop several times before it actually encounters a NaN, which seems odd to me.)

I've tried this on two different hardware setups and encountered the same issue.

Hope I'm not just doing something silly!
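To get a sense of how often the failure occurs rather than stopping at the first NaN, a variant along the following lines may help. It is only a diagnostic sketch: it relies on the documented behaviour that `input` is ignored when `beta=0`, so the result should match `torch.bmm(batch1, batch2)`. The names `nan_iterations` and `mismatches` are illustrative.

```python
import torch

batch1 = torch.ones(1, 1, 1)
batch2 = torch.ones(1, 1, 1)
expected = torch.bmm(batch1, batch2)  # reference value: 1 * 1 = 1

nan_iterations = []  # iterations whose output contained a NaN
mismatches = []      # iterations with a non-NaN but wrong result
for i in range(10000):
    out = torch.baddbmm(torch.zeros(1, 1, 1), batch1, batch2, beta=0)
    if torch.isnan(out).any():
        nan_iterations.append(i)
    elif not torch.equal(out, expected):
        mismatches.append(i)

print(f"NaN in {len(nan_iterations)} of 10000 iterations; first few: {nan_iterations[:5]}")
print(f"non-NaN mismatches: {len(mismatches)}")
```

On a build without the problem both lists stay empty; on an affected build the recorded indices show whether failures cluster or are spread across the run.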

### Versions

Collecting environment information...

PyTorch version: 1.11.0

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.5 LTS (x86_64)

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

Clang version: Could not collect

CMake version: version 3.10.2

Libc version: glibc-2.27

Python version: 3.9.11 (main, Mar 29 2022, 19:08:29) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-4.18.0-305.28.1.el8_4.x86_64-x86_64-with-glibc2.27

Is CUDA available: True

CUDA runtime version: 10.1.243

GPU models and configuration: GPU 0: Quadro RTX 8000

Nvidia driver version: 470.57.02

cuDNN version: /usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] mypy-extensions==0.4.3

[pip3] numpy==1.21.2

[pip3] torch==1.11.0

[pip3] torchaudio==0.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 10.2.89 hfd86e86_1

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py39h7f8727e_0

[conda] mkl_fft 1.3.1 py39hd3c417c_0

[conda] mkl_random 1.2.2 py39h51133e4_0

[conda] numpy 1.21.2 py39h20f2e39_0

[conda] numpy-base 1.21.2 py39h79a1101_0

[conda] pytorch 1.11.0 py3.9_cuda10.2_cudnn7.6.5_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchaudio 0.11.0 py39_cu102 pytorch

[conda] torchvision 0.12.0 py39_cu102 pytorch

| 9 |

5,335 | 80,580 |

`torch.ops.aten.find` inconsistent with `str.find`

|

module: cpp, triaged, module: sorting and selection

|

### 🐛 Describe the bug

For empty target strings `aten::find` will always return the value of the starting position in the string, even when the given range is invalid.

```

>>> import torch

>>> "example".find("", 100, 0)

-1

>>> torch.ops.aten.find("example", "", 100, 0)

100

```

As far as I can tell the cause of the discrepancy is found in `register_prim_ops.cpp` on [line 1522](https://github.com/pytorch/pytorch/blob/b4e491798c0679ab2e61f36a511484d7b8ecf8d3/torch/csrc/jit/runtime/register_prim_ops.cpp#L1522) where if the target string is empty, it will always search for the target even if the search range is invalid in some way.

Additionally, this can lead to crashes for certain inputs as shown below.

```

>>> import torch

>>> torch.ops.aten.find("example", "a", 100, 200)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/quinn/torch-mlir/mlir_venv/lib/python3.10/site-packages/torch/_ops.py", line 148, in __call__

return self._op(*args, **kwargs or {})

IndexError: basic_string::substr: __pos (which is 100) > this->size() (which is 7)

```
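For reference, the behaviour of `str.find` that `aten::find` is being compared against can be written out in pure Python. The sketch below is not the code in `register_prim_ops.cpp`; `find_like_str` is a hypothetical helper that clamps `start` and `end` the way slicing does and returns `-1` whenever the clamped range is too short to hold the target, which also covers the empty-target and out-of-range cases above.

```python
def find_like_str(text, target, start=0, end=None):
    """Mimic str.find(target, start, end) with explicit range handling."""
    n = len(text)
    # Clamp `end` like a slice bound.
    if end is None or end > n:
        end = n
    elif end < 0:
        end = max(end + n, 0)
    # Clamp the lower side of `start`; values above n are caught below.
    if start < 0:
        start = max(start + n, 0)
    # An invalid or too-short range can never contain the target,
    # not even an empty one.
    if end - start < len(target):
        return -1
    pos = text[start:end].find(target)
    return -1 if pos < 0 else pos + start


print(find_like_str("example", "", 100, 0))     # -1
print(find_like_str("example", "a", 100, 200))  # -1, no exception
```

Checking the range before searching is what prevents both the spurious `100` result and the `substr` out-of-range error.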

### Versions

Collecting environment information...

PyTorch version: 1.13.0.dev20220623+cpu

Is debug build: False

CUDA used to build PyTorch: Could not collect

ROCM used to build PyTorch: N/A

OS: Arch Linux (x86_64)

GCC version: (GCC) 12.1.0

Clang version: 13.0.1

CMake version: version 3.22.5

Libc version: glibc-2.35

Python version: 3.10.5 (main, Jun 6 2022, 18:49:26) [GCC 12.1.0] (64-bit runtime)

Python platform: Linux-5.18.5-arch1-1-x86_64-with-glibc2.35

Is CUDA available: False

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 2070 SUPER

Nvidia driver version: 515.48.07

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.0

[pip3] torch==1.13.0.dev20220623+cpu

[pip3] torch-mlir==20220620.509

[pip3] torchvision==0.14.0.dev20220623+cpu

[conda] Could not collect

cc @jbschlosser

| 1 |

5,336 | 80,577 |

2-dimensional arange

|

triaged, enhancement, module: nestedtensor, module: tensor creation

|

### 🚀 The feature, motivation and pitch

When dealing with batches of variable-length sequences, we often track the start indices, length of sequences, and end indices.

```python

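import torch

maximum_sequence_length = 3
number_of_sequences = 3
feature = 4  # feature size; not given in the original, chosen here for illustration
# torch.randn is used because torch.random is a module, not a callable
data = torch.randn(maximum_sequence_length * number_of_sequences, feature)
sequence_length = torch.tensor([1, 3, 2])
start_idx = torch.tensor([0, 3, 6])
end_idx = torch.tensor([1, 6, 8])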

```