modelId (string, length 5 to 139) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-08-24 00:44:07) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 518 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-08-24 00:41:25) | card (string, length 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

coelacanthxyz/blockassist-bc-finicky_thriving_grouse_1755860665

|

coelacanthxyz

| 2025-08-22T11:32:18Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"finicky thriving grouse",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:32:10Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- finicky thriving grouse

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/tsutomu-nihei-lora

|

Muapi

| 2025-08-22T11:31:43Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:31:28Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Tsutomu Nihei Lora

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:179979@1474802", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

chainway9/blockassist-bc-untamed_quick_eel_1755860629

|

chainway9

| 2025-08-22T11:31:03Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"untamed quick eel",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:30:59Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- untamed quick eel

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

mang3dd/blockassist-bc-tangled_slithering_alligator_1755860717

|

mang3dd

| 2025-08-22T11:30:46Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tangled slithering alligator",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:30:42Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tangled slithering alligator

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

roeker/blockassist-bc-quick_wiry_owl_1755862166

|

roeker

| 2025-08-22T11:30:43Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"quick wiry owl",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:30:08Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- quick wiry owl

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

kojeklollipop/blockassist-bc-spotted_amphibious_stork_1755860574

|

kojeklollipop

| 2025-08-22T11:29:18Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"spotted amphibious stork",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:29:14Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- spotted amphibious stork

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/yoh-nagao-style

|

Muapi

| 2025-08-22T11:28:38Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:28:24Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Yoh Nagao Style

**Base model**: Flux.1 D

**Trained words**: Yoh Nagao Style

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:107192@1519368", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

hyrinmansoor/text2frappe-s3-flan-query

|

hyrinmansoor

| 2025-08-22T11:28:25Z | 1,041 | 0 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"t5",

"text2text-generation",

"flan-t5-base",

"erpnext",

"query-generation",

"frappe",

"text2frappe",

"en",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-06-05T11:48:55Z |

---

tags:

- flan-t5-base

- transformers

- erpnext

- query-generation

- frappe

- text2frappe

- text2text-generation

pipeline_tag: text2text-generation

license: apache-2.0

language: en

library_name: transformers

model-index:

- name: Text2Frappe - Stage 3 Query Generator

results: []

---

# 🧠 Text2Frappe - Stage 3 Query Generator (FLAN-T5-BASE)

This model is the **third stage** in the [Text2Frappe](https://huggingface.co/hyrinmansoor) pipeline, which provides a **natural language interface to ERPNext** by converting questions into executable database queries.

---

## 🎯 Task

**Text2Text Generation** – Prompt-based query formulation.

Given:

- A detected **ERPNext Doctype** (from Stage 1),

- A natural language **question**,

- A list of selected **relevant fields** (from Stage 2),

this model generates a valid **Frappe ORM-style query** (e.g., `frappe.get_all(...)`) to retrieve the required data.

---

## 🧩 Input Format

Inputs are JSON-style strings containing:

- `doctype`: the ERPNext document type.

- `question`: the user's question in natural language.

- `fields`: a list of relevant field names predicted by Stage 2.

### 📥 Example Input

```json

{

"doctype": "Purchase Invoice Advance",

"question": "List the reference types used in advance payments made this month.",

"fields": ["reference_type"]

}

```

### 📤 Example Output

frappe.get_all('Purchase Invoice Advance', filters={'posting_date': ['between', ['2024-04-01', '2024-04-30']]}, fields=['reference_type'])
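A minimal sketch of how a Stage 3 input could be assembled, assuming the model accepts the JSON object serialized as a single string. The helper name `build_stage3_input` is hypothetical and is not part of the model card.

```python
import json


def build_stage3_input(doctype: str, question: str, fields: list[str]) -> str:
    """Serialize doctype, question, and fields into one JSON-style string."""
    if not fields:
        # Stage 2 is expected to supply at least one relevant field.
        raise ValueError("fields must contain at least one field name")
    return json.dumps({"doctype": doctype, "question": question, "fields": fields})


prompt = build_stage3_input(
    "Purchase Invoice Advance",
    "List the reference types used in advance payments made this month.",
    ["reference_type"],
)
print(prompt)
```

The resulting string can then be passed to the tokenizer as the model input; whether any extra prompt prefix is required is not stated in the card.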

|

Muapi/lovecraftian-nightmare-landscapes

|

Muapi

| 2025-08-22T11:28:04Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:27:52Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Lovecraftian Nightmare Landscapes

**Base model**: Flux.1 D

**Trained words**: n1ghtm@r3

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:607647@747348", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/cute-sdxl-pony-flux

|

Muapi

| 2025-08-22T11:26:17Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:26:02Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Cute (SDXL, Pony, Flux)

**Base model**: Flux.1 D

**Trained words**: ArsMJStyle, Cute

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:577827@820305", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/kft-furry-scaly-feathery-enhancer-flux

|

Muapi

| 2025-08-22T11:25:11Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:24:58Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# KFT Furry/Scaly/Feathery Enhancer [FLUX]

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:750776@839561", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

hakimjustbao/blockassist-bc-raging_subtle_wasp_1755860102

|

hakimjustbao

| 2025-08-22T11:23:25Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"raging subtle wasp",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:23:21Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- raging subtle wasp

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/ob-japanese-urban-film-photography

|

Muapi

| 2025-08-22T11:23:13Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:22:56Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# OB 日式城市胶片摄影 Japanese Urban Film Photography

**Base model**: Flux.1 D

**Trained words**: OBrbrw

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1184221@1332894", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

sampingkaca72/blockassist-bc-armored_stealthy_elephant_1755860251

|

sampingkaca72

| 2025-08-22T11:22:25Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"armored stealthy elephant",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:22:22Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- armored stealthy elephant

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/digital-dystopia

|

Muapi

| 2025-08-22T11:21:13Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:20:57Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Digital Dystopia

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1396354@1589111", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

eshanroy5678/blockassist-bc-untamed_dextrous_dingo_1755861353

|

eshanroy5678

| 2025-08-22T11:21:08Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"untamed dextrous dingo",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:19:41Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- untamed dextrous dingo

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

thanobidex/blockassist-bc-colorful_shiny_hare_1755859886

|

thanobidex

| 2025-08-22T11:18:35Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"colorful shiny hare",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:18:32Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- colorful shiny hare

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/sketch-art

|

Muapi

| 2025-08-22T11:17:42Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:17:25Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Sketch Art

**Base model**: Flux.1 D

**Trained words**: sketch_style

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:802807@897643", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

ngurney/ppo-lunarlander-v3

|

ngurney

| 2025-08-22T11:17:33Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v3",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2025-08-22T11:17:18Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v3

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v3

type: LunarLander-v3

metrics:

- type: mean_reward

value: 263.74 +/- 17.98

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v3**

This is a trained model of a **PPO** agent playing **LunarLander-v3**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

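A minimal loading sketch. The checkpoint filename below is an assumption; check the repository's file list for the actual name.

```python
from stable_baselines3 import PPO
from huggingface_sb3 import load_from_hub

# Assumed filename: replace it with the .zip checkpoint actually stored in the repo.
checkpoint = load_from_hub(repo_id="ngurney/ppo-lunarlander-v3", filename="ppo-LunarLander-v3.zip")
model = PPO.load(checkpoint)
```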

|

Muapi/fantastic-pic-flux

|

Muapi

| 2025-08-22T11:17:05Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:16:53Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Fantastic Pic [Flux]

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1193687@1343984", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/dnd-map-world

|

Muapi

| 2025-08-22T11:16:42Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:16:29Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# DnD map world

**Base model**: Flux.1 D

**Trained words**: Dnd_maps

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1166586@1312422", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/donm-sound-of-music-flux-sdxl-pony

|

Muapi

| 2025-08-22T11:16:23Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:16:10Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# DonM - Sound of Music [Flux,SDXL,Pony]

**Base model**: Flux.1 D

**Trained words**: digital illustration

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:393812@813609", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

calcuis/krea-gguf

|

calcuis

| 2025-08-22T11:13:35Z | 2,743 | 7 |

diffusers

|

[

"diffusers",

"gguf",

"gguf-node",

"gguf-connector",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-Krea-dev",

"base_model:quantized:black-forest-labs/FLUX.1-Krea-dev",

"license:other",

"region:us"

] |

text-to-image

| 2025-07-31T20:55:43Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-Krea-dev/blob/main/LICENSE.md

language:

- en

library_name: diffusers

base_model:

- black-forest-labs/FLUX.1-Krea-dev

pipeline_tag: text-to-image

widget:

- text: a frog holding a sign that says hello world

output:

url: output1.png

- text: a pig holding a sign that says hello world

output:

url: output2.png

- text: a wolf holding a sign that says hello world

output:

url: output3.png

- text: >-

cute anime girl with massive fluffy fennec ears and a big fluffy tail blonde

messy long hair blue eyes wearing a maid outfit with a long black gold leaf

pattern dress and a white apron mouth open holding a fancy black forest cake

with candles on top in the kitchen of an old dark Victorian mansion lit by

candlelight with a bright window to the foggy forest and very expensive

stuff everywhere

output:

url: workflow-embedded-demo1.png

- text: >-

on a rainy night, a girl holds an umbrella and looks at the camera. The rain

keeps falling.

output:

url: workflow-embedded-demo2.png

- text: drone shot of a volcano erupting with a pig walking on it

output:

url: workflow-embedded-demo3.png

tags:

- gguf-node

- gguf-connector

---

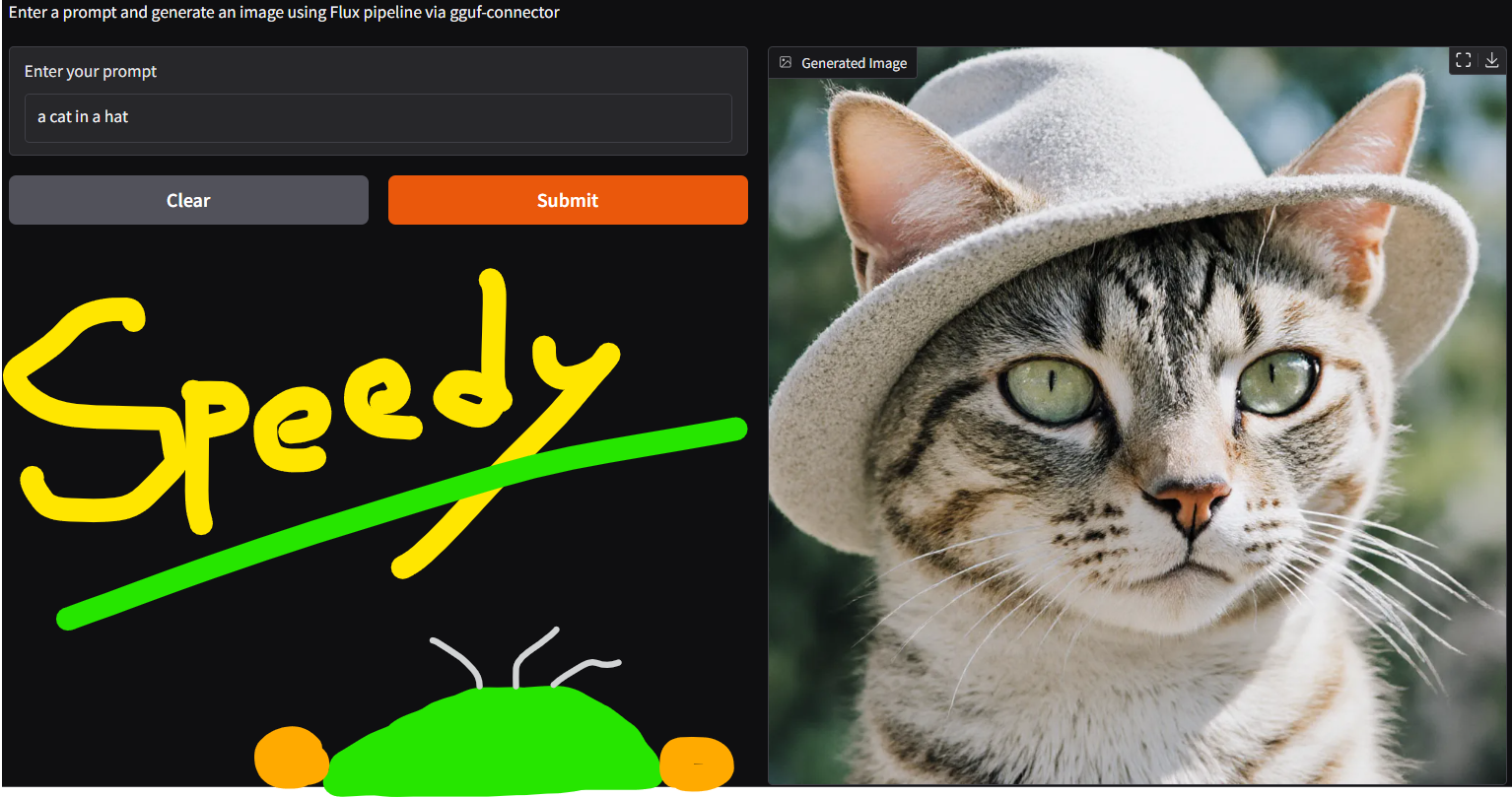

# **gguf quantized version of krea**

- run it straight with `gguf-connector`

- select a `gguf` file in the current directory to interact with by running:

```

ggc k

```

>

>GGUF file(s) available. Select which one to use:

>

>1. flux-krea-lite-q2_k.gguf

>2. flux-krea-lite-q4_0.gguf

>3. flux-krea-lite-q8_0.gguf

>

>Enter your choice (1 to 3): _

>

note: try the experimental lite model with 8-step operation; it saves up to 70% of loading time

- run it with diffusers (see example inference below)

```py

import torch

from transformers import T5EncoderModel

from diffusers import FluxPipeline, GGUFQuantizationConfig, FluxTransformer2DModel

model_path = "https://huggingface.co/calcuis/krea-gguf/blob/main/flux1-krea-dev-q2_k.gguf"

transformer = FluxTransformer2DModel.from_single_file(

model_path,

quantization_config=GGUFQuantizationConfig(compute_dtype=torch.bfloat16),

torch_dtype=torch.bfloat16,

config="callgg/krea-decoder",

subfolder="transformer"

)

text_encoder = T5EncoderModel.from_pretrained(

"chatpig/t5-v1_1-xxl-encoder-fp32-gguf",

gguf_file="t5xxl-encoder-fp32-q2_k.gguf",

torch_dtype=torch.bfloat16

)

pipe = FluxPipeline.from_pretrained(

"callgg/krea-decoder",

transformer=transformer,

text_encoder_2=text_encoder,

torch_dtype=torch.bfloat16

)

pipe.enable_model_cpu_offload()  # or use pipe.to("cuda") if your GPU has enough memory

prompt = "a pig holding a sign that says hello world"

image = pipe(

prompt,

height=1024,

width=1024,

guidance_scale=2.5,

).images[0]

image.save("output.png")

```

<Gallery />

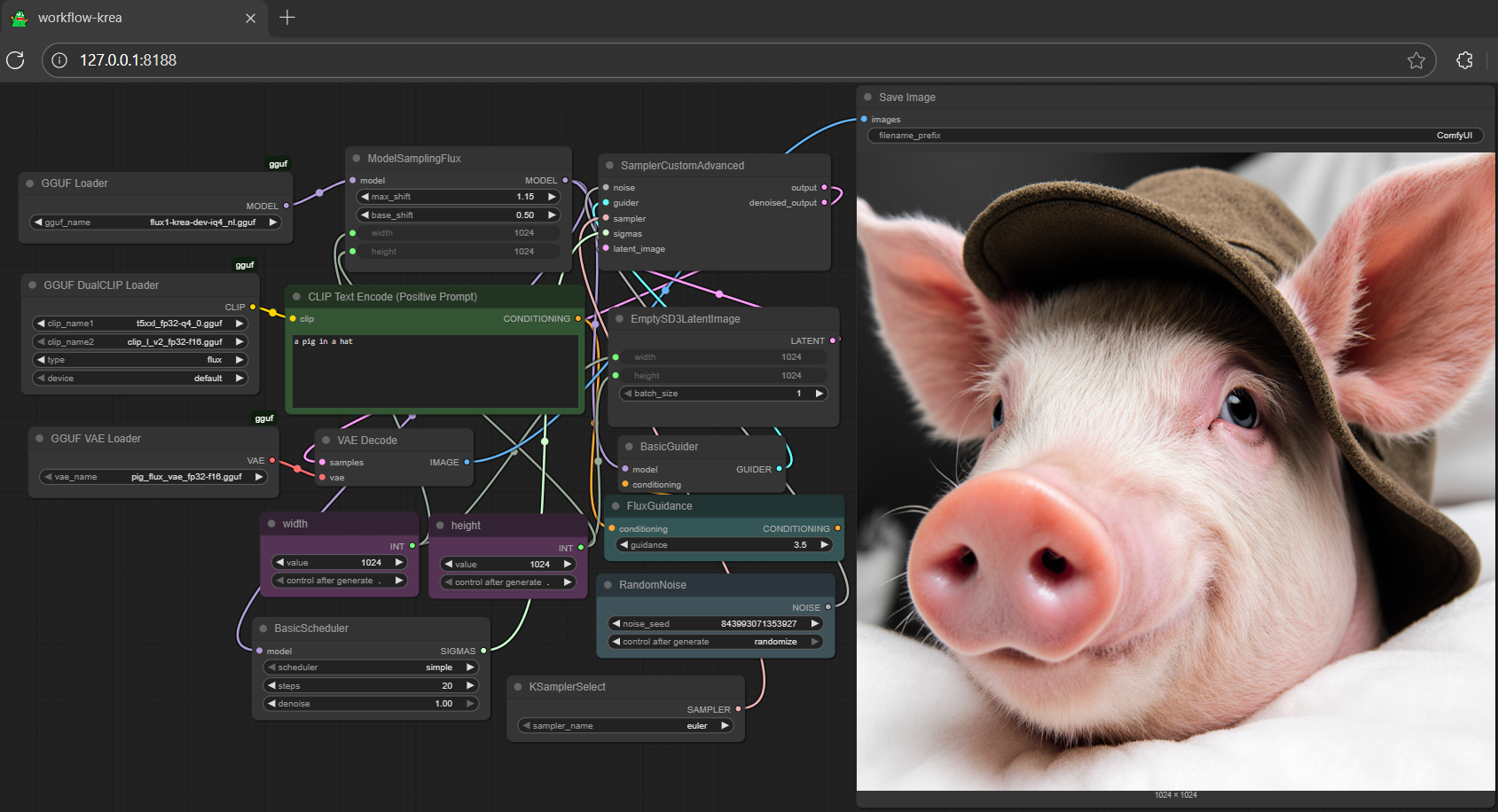

## **run it with gguf-node via comfyui**

- drag **krea** to > `./ComfyUI/models/diffusion_models`

- drag **clip-l-v2 [[248MB](https://huggingface.co/calcuis/kontext-gguf/blob/main/clip_l_v2_fp32-f16.gguf)], t5xxl [[2.75GB](https://huggingface.co/calcuis/kontext-gguf/blob/main/t5xxl_fp32-q4_0.gguf)]** to > `./ComfyUI/models/text_encoders`

- drag **pig [[168MB](https://huggingface.co/calcuis/kontext-gguf/blob/main/pig_flux_vae_fp32-f16.gguf)]** to > `./ComfyUI/models/vae`

### **reference**

- base model from [black-forest-labs](https://huggingface.co/black-forest-labs)

- for model merge details, see [sayakpaul](https://huggingface.co/sayakpaul/FLUX.1-merged)

- diffusers from [huggingface](https://github.com/huggingface/diffusers)

- comfyui from [comfyanonymous](https://github.com/comfyanonymous/ComfyUI)

- gguf-node ([pypi](https://pypi.org/project/gguf-node)|[repo](https://github.com/calcuis/gguf)|[pack](https://github.com/calcuis/gguf/releases))

- gguf-connector ([pypi](https://pypi.org/project/gguf-connector))

|

Armaneshon/gemma-3-270m-it-markdown-summarizer

|

Armaneshon

| 2025-08-22T11:12:00Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"generated_from_trainer",

"sft",

"trl",

"base_model:google/gemma-3-270m-it",

"base_model:finetune:google/gemma-3-270m-it",

"endpoints_compatible",

"region:us"

] | null | 2025-08-20T14:59:51Z |

---

base_model: google/gemma-3-270m-it

library_name: transformers

model_name: gemma-3-270m-it-markdown-summarizer

tags:

- generated_from_trainer

- sft

- trl

licence: license

---

# Model Card for gemma-3-270m-it-markdown-summarizer

This model is a fine-tuned version of [google/gemma-3-270m-it](https://huggingface.co/google/gemma-3-270m-it).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="Armaneshon/gemma-3-270m-it-markdown-summarizer", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

This model was trained with SFT.

### Framework versions

- TRL: 0.21.0

- Transformers: 4.55.3

- Pytorch: 2.6.0+cu124

- Datasets: 3.3.2

- Tokenizers: 0.21.2

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

ihsanridzi/blockassist-bc-wiry_flexible_owl_1755859492

|

ihsanridzi

| 2025-08-22T11:11:37Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"wiry flexible owl",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:11:34Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- wiry flexible owl

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

twelcone/pii-phi

|

twelcone

| 2025-08-22T11:10:53Z | 0 | 0 | null |

[

"safetensors",

"phi3",

"custom_code",

"region:us"

] | null | 2025-08-22T11:10:53Z |

### Overview

`pii-phi` is a fine-tuned version of `Phi-3.5-mini-instruct` designed to extract Personally Identifiable Information (PII) from unstructured text. The model outputs PII entities in a structured JSON format according to strict schema guidelines.

### Training Prompt Format

```text

# GUIDELINES

- Extract all instances of the following Personally Identifiable Information (PII) entities from the provided text and return them in JSON format.

- Each item in the JSON list should include an 'entity' key specifying the type of PII and a 'value' key containing the extracted information.

- The supported entities are: PERSON_NAME, BUSINESS_NAME, API_KEY, USERNAME, API_ENDPOINT, WEBSITE_ADDRESS, PHONE_NUMBER, EMAIL_ADDRESS, ID, PASSWORD, ADDRESS.

# EXPECTED OUTPUT

- The json output must be in the format below:

{

"result": [

{"entity": "ENTITY_TYPE", "value": "EXTRACTED_VALUE"},

...

]

}

```

### Supported Entities

* PERSON\_NAME

* BUSINESS\_NAME

* API\_KEY

* USERNAME

* API\_ENDPOINT

* WEBSITE\_ADDRESS

* PHONE\_NUMBER

* EMAIL\_ADDRESS

* ID

* PASSWORD

* ADDRESS

### Intended Use

The model is intended for PII detection in text documents to support tasks such as data anonymization, compliance, and security auditing.

### Limitations

* Not guaranteed to detect all forms of PII in every context.

* May return false positives or omit contextually relevant information.

---

### Installation

Install the `vllm` package to run the model efficiently:

```bash

pip install vllm

```

---

### Example:

```python

from vllm import LLM, SamplingParams

llm = LLM("Fsoft-AIC/pii-phi")

system_prompt = """

# GUIDELINES

- Extract all instances of the following Personally Identifiable Information (PII) entities from the provided text and return them in JSON format.

- Each item in the JSON list should include an 'entity' key specifying the type of PII and a 'value' key containing the extracted information.

- The supported entities are: PERSON_NAME, BUSINESS_NAME, API_KEY, USERNAME, API_ENDPOINT, WEBSITE_ADDRESS, PHONE_NUMBER, EMAIL_ADDRESS, ID, PASSWORD, ADDRESS.

# EXPECTED OUTPUT

- The json output must be in the format below:

{

"result": [

{"entity": "ENTITY_TYPE", "value": "EXTRACTED_VALUE"},

...

]

}

"""

pii_message = "I am James Jake and my employee number is 123123123"

sampling_params = SamplingParams(temperature=0, max_tokens=1000)

outputs = llm.chat(

[

{"role": "system", "content": system_prompt},

{"role": "user", "content": pii_message},

],

sampling_params,

)

for output in outputs:

generated_text = output.outputs[0].text

print(generated_text)

```
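The generated text is expected to follow the JSON schema above, but as the limitations note, the output is not guaranteed. A minimal sketch of defensive parsing follows; the helper name `parse_pii_output` and the sample string are illustrative assumptions, not actual model output.

```python
import json

SUPPORTED_ENTITIES = {
    "PERSON_NAME", "BUSINESS_NAME", "API_KEY", "USERNAME", "API_ENDPOINT",
    "WEBSITE_ADDRESS", "PHONE_NUMBER", "EMAIL_ADDRESS", "ID", "PASSWORD", "ADDRESS",
}


def parse_pii_output(generated_text: str) -> list[dict]:
    """Return well-formed {"entity", "value"} items, or [] if the text is not valid JSON."""
    try:
        data = json.loads(generated_text)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, dict):
        return []
    items = data.get("result", [])
    if not isinstance(items, list):
        return []
    # Keep only items that use a supported entity type and a string value.
    return [
        item for item in items
        if isinstance(item, dict)
        and item.get("entity") in SUPPORTED_ENTITIES
        and isinstance(item.get("value"), str)
    ]


# Hypothetical output written by hand to match the schema.
sample = '{"result": [{"entity": "PERSON_NAME", "value": "James Jake"}, {"entity": "ID", "value": "123123123"}]}'
entities = parse_pii_output(sample)
print(entities)
```

Returning an empty list on malformed output keeps downstream anonymization code simple; callers that need to distinguish "no PII" from "unparseable output" may prefer to raise instead.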

|

unitova/blockassist-bc-zealous_sneaky_raven_1755859445

|

unitova

| 2025-08-22T11:10:44Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"zealous sneaky raven",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:10:41Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- zealous sneaky raven

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

AngeDavid/Completo.Video.Angel.David.debut.Milica.vido.mili.telegram

|

AngeDavid

| 2025-08-22T11:10:11Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-08-22T10:03:24Z |


|

vwzyrraz7l/blockassist-bc-tall_hunting_vulture_1755859455

|

vwzyrraz7l

| 2025-08-22T11:09:42Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tall hunting vulture",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:09:39Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tall hunting vulture

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

er-Video-de-Abigail-Lalama-y-Snayder/VER.filtrado.Video.de.Abigail.Lalama.y.Snayder.en.Telegram.se.vuelve.viral

|

er-Video-de-Abigail-Lalama-y-Snayder

| 2025-08-22T11:08:20Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-08-22T09:47:30Z |


|

anwensmythadv/blockassist-bc-pawing_stocky_walrus_1755858983

|

anwensmythadv

| 2025-08-22T11:07:35Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"pawing stocky walrus",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:07:32Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- pawing stocky walrus

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Mostefa-Terbeche/diabetic-retinopathy-combined-resnet50-original-20250614-235212

|

Mostefa-Terbeche

| 2025-08-22T11:06:52Z | 0 | 0 | null |

[

"diabetic-retinopathy",

"medical-imaging",

"pytorch",

"computer-vision",

"retinal-imaging",

"dataset:combined",

"license:apache-2.0",

"model-index",

"region:us"

] | null | 2025-08-22T10:19:44Z |

---

license: apache-2.0

tags:

- diabetic-retinopathy

- medical-imaging

- pytorch

- computer-vision

- retinal-imaging

datasets:

- combined

metrics:

- accuracy

- quadratic-kappa

- auc

model-index:

- name: combined_resnet50_original

results:

- task:

type: image-classification

name: Diabetic Retinopathy Classification

dataset:

type: combined

name: COMBINED

metrics:

- type: accuracy

value: 0.6685592618878637

- type: quadratic-kappa

value: 0.7829103500741855

---

# Diabetic Retinopathy Classification Model

## Model Description

This model is trained for diabetic retinopathy classification using the resnet50 architecture on the combined dataset with original preprocessing.

## Model Details

- **Architecture**: resnet50

- **Dataset**: combined

- **Preprocessing**: original

- **Training Date**: 20250614-235212

- **Task**: 5-class diabetic retinopathy grading (0-4)

- **Directory**: combined_resnet50_20250614-235212_new

## Performance

- **Test Accuracy**: 0.6685592618878637

- **Test Quadratic Kappa**: 0.7829103500741855

- **Validation Kappa**: 0.7829103500741855
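For readers unfamiliar with the kappa metric reported here, the following is a minimal, dependency-free sketch of the standard quadratic weighted kappa formula. The function name `quadratic_weighted_kappa` and the toy labels are hypothetical, and this is not necessarily the evaluation code that produced the numbers above.

```python
def quadratic_weighted_kappa(y_true, y_pred, num_classes=5):
    """Quadratic weighted kappa between two lists of integer grades in [0, num_classes)."""
    n = len(y_true)
    # Observed confusion matrix: rows are true grades, columns are predicted grades.
    observed = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    hist_true = [sum(row) for row in observed]
    hist_pred = [sum(observed[i][j] for i in range(num_classes)) for j in range(num_classes)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(num_classes):
        for j in range(num_classes):
            # Disagreement weight grows with the squared distance between grades.
            weight = (i - j) ** 2 / (num_classes - 1) ** 2
            expected = hist_true[i] * hist_pred[j] / n
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected
    if weighted_expected == 0.0:
        # Degenerate case: every label and prediction falls in one class.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Toy example with made-up labels (not data from this model).
perfect = quadratic_weighted_kappa([0, 1, 2, 3, 4], [0, 1, 2, 3, 4])
reversed_extremes = quadratic_weighted_kappa([0, 0, 4, 4], [4, 4, 0, 0])
```

Because the weights are quadratic, confusing grade 0 with grade 4 costs far more than confusing adjacent grades, which is why kappa can be noticeably higher than plain accuracy on an ordinal task like this one.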

## Usage

```python

import torch

from huggingface_hub import hf_hub_download

# Download model

model_path = hf_hub_download(

repo_id="your-username/diabetic-retinopathy-combined-resnet50-original",

filename="model_best.pt"

)

# Load model

model = torch.load(model_path, map_location='cpu')

```

## Classes

- 0: No DR (No diabetic retinopathy)

- 1: Mild DR (Mild non-proliferative diabetic retinopathy)

- 2: Moderate DR (Moderate non-proliferative diabetic retinopathy)

- 3: Severe DR (Severe non-proliferative diabetic retinopathy)

- 4: Proliferative DR (Proliferative diabetic retinopathy)
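As a small illustration of how the class indices above might be used, the sketch below maps a vector of five per-class scores to a grade label. The names `DR_GRADES` and `grade_from_logits` are hypothetical, and it assumes the model emits one score per class in the order listed; the card does not document the output format.

```python
# Grade labels copied from the class list above.
DR_GRADES = {
    0: "No DR",
    1: "Mild DR",
    2: "Moderate DR",
    3: "Severe DR",
    4: "Proliferative DR",
}


def grade_from_logits(logits):
    """Return (index, label) for the highest-scoring class.

    Assumes `logits` is a sequence of five numbers, one per grade (an assumption,
    not something the card states).
    """
    if len(logits) != len(DR_GRADES):
        raise ValueError(f"expected {len(DR_GRADES)} scores, got {len(logits)}")
    index = max(range(len(logits)), key=lambda i: logits[i])
    return index, DR_GRADES[index]


# Made-up scores purely for illustration.
example = grade_from_logits([0.1, 2.3, -1.0, 0.5, 0.0])
```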

## Citation

If you use this model, please cite your research paper/thesis.

|

18-VIDEOS-fooni-fun-Viral-Video-Clip/New.full.videos.fooni.fun.Viral.Video.Official.Tutorial

|

18-VIDEOS-fooni-fun-Viral-Video-Clip

| 2025-08-22T11:06:34Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-08-22T09:29:18Z |

<a href="https://sdu.sk/AyL"><img src="https://files.qatarliving.com/event/2025/06/20/Jawan69_0-1749987397680.gif" alt="fsd" /></a>

<a href="https://sdu.sk/AyL" rel="nofollow">🔴 ➤►𝐂𝐥𝐢𝐤 𝐇𝐞𝐫𝐞 𝐭𝐨👉👉 (𝙨𝙞𝙜𝙣 𝙪𝙥 𝙖𝙣𝙙 𝙬𝙖𝙩𝙘𝙝 𝙛𝙪𝙡𝙡 𝙫𝙞𝙙𝙚𝙤 𝙃𝘿)</a>

<a href="https://sdu.sk/AyL" rel="nofollow">🔴 ➤►𝐂𝐥𝐢𝐤 𝐇𝐞𝐫𝐞 𝐭𝐨👉👉 (𝐅𝐮𝐥𝐥 𝐯𝐢𝐝𝐞𝐨 𝐋𝐢𝐧𝐤)</a>

|

Nerva1228/fubao

|

Nerva1228

| 2025-08-22T11:05:03Z | 0 | 0 |

diffusers

|

[

"diffusers",

"flux",

"lora",

"replicate",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-dev",

"base_model:adapter:black-forest-labs/FLUX.1-dev",

"license:other",

"region:us"

] |

text-to-image

| 2025-08-22T11:05:02Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md

language:

- en

tags:

- flux

- diffusers

- lora

- replicate

base_model: "black-forest-labs/FLUX.1-dev"

pipeline_tag: text-to-image

# widget:

# - text: >-

# prompt

# output:

# url: https://...

instance_prompt: fubao

---

# Fubao

<Gallery />

## About this LoRA

This is a [LoRA](https://replicate.com/docs/guides/working-with-loras) for the FLUX.1-dev text-to-image model. It can be used with diffusers or ComfyUI.

It was trained on [Replicate](https://replicate.com/) using AI toolkit: https://replicate.com/ostris/flux-dev-lora-trainer/train

## Trigger words

You should use `fubao` to trigger the image generation.

## Run this LoRA with an API using Replicate

```py
import replicate

input = {
    "prompt": "fubao",
    "lora_weights": "https://huggingface.co/Nerva1228/fubao/resolve/main/lora.safetensors"
}

output = replicate.run(
    "black-forest-labs/flux-dev-lora",
    input=input
)

for index, item in enumerate(output):
    with open(f"output_{index}.webp", "wb") as file:
        file.write(item.read())
```

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('black-forest-labs/FLUX.1-dev', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('Nerva1228/fubao', weight_name='lora.safetensors')

image = pipeline('fubao').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

## Training details

- Steps: 2000

- Learning rate: 0.0004

- LoRA rank: 16

## Contribute your own examples

You can use the [community tab](https://huggingface.co/Nerva1228/fubao/discussions) to add images that show off what you’ve made with this LoRA.

|

aleebaster/blockassist-bc-sly_eager_boar_1755859157

|

aleebaster

| 2025-08-22T11:04:27Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"sly eager boar",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:04:20Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- sly eager boar

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Orginal-18-afrin-apu-viral-video-link/New.full.videos.afrin.apu.Viral.Video.Official.Tutorial

|

Orginal-18-afrin-apu-viral-video-link

| 2025-08-22T11:03:26Z | 0 | 0 | null |

[

"region:us"

] | null | 2025-08-22T11:03:01Z |

<animated-image data-catalyst=""><a href="https://tinyurl.com/5abutj9x?viral-news" rel="nofollow" data-target="animated-image.originalLink"><img src="https://static.wixstatic.com/media/b249f9_adac8f70fb3f45b88691696c77de18f3~mv2.gif" alt="Foo" data-canonical-src="https://static.wixstatic.com/media/b249f9_adac8f70fb3f45b88691696c77de18f3~mv2.gif" style="max-width: 100%; display: inline-block;" data-target="animated-image.originalImage"></a>

|

lqpl/blockassist-bc-hairy_insectivorous_antelope_1755860531

|

lqpl

| 2025-08-22T11:03:24Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"hairy insectivorous antelope",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:03:06Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- hairy insectivorous antelope

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

itg-ai/gen-images

|

itg-ai

| 2025-08-22T11:03:12Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-22T10:59:16Z |

---

license: apache-2.0

---

|

ggml-org/gemma-3n-E2B-it-GGUF

|

ggml-org

| 2025-08-22T11:03:00Z | 2,109 | 13 |

gguf

|

[

"gguf",

"base_model:google/gemma-3n-E2B-it",

"base_model:quantized:google/gemma-3n-E2B-it",

"license:gemma",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2025-06-26T10:09:32Z |

---

license: gemma

library_name: gguf

base_model: google/gemma-3n-E2B-it

---

> [!Note]

> This version does not contain multimodal support. We are still working on adding multimodal.

# Gemma 3n model card

**Original model**: https://huggingface.co/google/gemma-3n-E2B-it

**Model Page**: [Gemma 3n](https://ai.google.dev/gemma/docs/gemma-3n)

**Resources and Technical Documentation**:

- [Responsible Generative AI Toolkit](https://ai.google.dev/responsible)

- [Gemma on Kaggle](https://www.kaggle.com/models/google/gemma-3n)

- [Gemma on HuggingFace](https://huggingface.co/collections/google/gemma-3n-685065323f5984ef315c93f4)

- [Gemma on Vertex Model Garden](https://console.cloud.google.com/vertex-ai/publishers/google/model-garden/gemma3n)

**Terms of Use**: [Terms](https://ai.google.dev/gemma/terms)\

**Authors**: Google DeepMind

## Example usage

### With llama.cpp

To install llama.cpp on your system, see the [installation guide](https://github.com/ggml-org/llama.cpp/blob/master/README.md).

```sh

llama-cli -hf ggml-org/gemma-3n-E2B-it-GGUF:Q8_0 -fa -c 0 --jinja

```

### With LM Studio

Search for `gemma-3n-E2B-it-GGUF` and add it to your model library.

|

labanochwo/unsloth-ocr-8bit

|

labanochwo

| 2025-08-22T11:02:52Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2_5_vl",

"image-to-text",

"text-generation-inference",

"unsloth",

"trl",

"en",

"base_model:allenai/olmOCR-7B-0725",

"base_model:finetune:allenai/olmOCR-7B-0725",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

image-to-text

| 2025-08-22T10:59:14Z |

---

base_model: allenai/olmOCR-7B-0725

tags:

- text-generation-inference

- transformers

- unsloth

- qwen2_5_vl

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** labanochwo

- **License:** apache-2.0

- **Finetuned from model :** allenai/olmOCR-7B-0725

This qwen2_5_vl model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

labanochwo/unsloth-ocr-4bit

|

labanochwo

| 2025-08-22T11:00:23Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2_5_vl",

"image-to-text",

"text-generation-inference",

"unsloth",

"trl",

"en",

"base_model:allenai/olmOCR-7B-0725",

"base_model:quantized:allenai/olmOCR-7B-0725",

"license:apache-2.0",

"endpoints_compatible",

"4-bit",

"bitsandbytes",

"region:us"

] |

image-to-text

| 2025-08-22T10:59:12Z |

---

base_model: allenai/olmOCR-7B-0725

tags:

- text-generation-inference

- transformers

- unsloth

- qwen2_5_vl

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** labanochwo

- **License:** apache-2.0

- **Finetuned from model :** allenai/olmOCR-7B-0725

This qwen2_5_vl model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

rajaravh/Lisabella-AI

|

rajaravh

| 2025-08-22T11:00:12Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2025-08-22T11:00:12Z |

---

license: apache-2.0

---

|

aislingmcintosh/blockassist-bc-pale_masked_salmon_1755858626

|

aislingmcintosh

| 2025-08-22T10:59:59Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"pale masked salmon",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:59:56Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- pale masked salmon

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

katanyasekolah/blockassist-bc-silky_sprightly_cassowary_1755858543

|

katanyasekolah

| 2025-08-22T10:57:55Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"silky sprightly cassowary",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:57:52Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- silky sprightly cassowary

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

eggej/blockassist-bc-marine_playful_eel_1755860241

|

eggej

| 2025-08-22T10:57:47Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"marine playful eel",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:57:39Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- marine playful eel

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

lqpl/blockassist-bc-hairy_insectivorous_antelope_1755860136

|

lqpl

| 2025-08-22T10:57:27Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"hairy insectivorous antelope",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:56:35Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- hairy insectivorous antelope

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

elleshavff/blockassist-bc-horned_energetic_parrot_1755858644

|

elleshavff

| 2025-08-22T10:57:21Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"horned energetic parrot",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:57:18Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- horned energetic parrot

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

manusiaperahu2012/blockassist-bc-roaring_long_tuna_1755858700

|

manusiaperahu2012

| 2025-08-22T10:57:09Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"roaring long tuna",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:57:06Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- roaring long tuna

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

coelacanthxyz/blockassist-bc-finicky_thriving_grouse_1755858501

|

coelacanthxyz

| 2025-08-22T10:56:14Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"finicky thriving grouse",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:56:07Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- finicky thriving grouse

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

tomg-group-umd/step-00010720-baseline_2_0

|

tomg-group-umd

| 2025-08-22T10:55:27Z | 16 | 0 |

transformers

|

[

"transformers",

"safetensors",

"huginn_raven",

"text-generation",

"code",

"math",

"reasoning",

"llm",

"conversational",

"custom_code",

"en",

"arxiv:2502.05171",

"license:apache-2.0",

"autotrain_compatible",

"region:us"

] |

text-generation

| 2025-01-21T15:40:32Z |

---

library_name: transformers

tags:

- code

- math

- reasoning

- llm

license: apache-2.0

language:

- en

pipeline_tag: text-generation

# datasets: # cannot order these nicely

# - HuggingFaceTB/smollm-corpus

# - jon-tow/starcoderdata-python-edu

# - ubaada/booksum-complete-cleaned

# - euirim/goodwiki

# - togethercomputer/RedPajama-Data-1T

# - allenai/dolma

# - bigcode/the-stack-v2-train-smol-ids

# - bigcode/starcoderdata

# - m-a-p/Matrix

# - cerebras/SlimPajama-627B

# - open-phi/textbooks

# - open-phi/textbooks_grounded

# - open-phi/programming_books_llama

# - nampdn-ai/tiny-strange-textbooks

# - nampdn-ai/tiny-textbooks

# - nampdn-ai/tiny-code-textbooks

# - nampdn-ai/tiny-orca-textbooks

# - SciPhi/textbooks-are-all-you-need-lite

# - vikp/textbook_quality_programming

# - EleutherAI/proof-pile-2

# - open-web-math/open-web-math

# - biglam/blbooks-parquet

# - storytracer/LoC-PD-Books

# - GAIR/MathPile

# - tomg-group-umd/CLRS-Text-train

# - math-ai/AutoMathText

# - bigcode/commitpackft

# - bigcode/stack-dedup-python-fns

# - vikp/python_code_instructions_filtered

# - mlabonne/chessllm

# - Waterhorse/chess_data

# - EleutherAI/lichess-puzzles

# - chargoddard/WebInstructSub-prometheus

# - Locutusque/hercules-v5.0

# - nvidia/OpenMathInstruct-1

# - meta-math/MetaMathQA

# - m-a-p/CodeFeedback-Filtered-Instruction

# - nvidia/Daring-Anteater

# - nvidia/sft_datablend_v1

# - BAAI/Infinity-Instruct

# - anthracite-org/Stheno-Data-Filtered

# - Nopm/Opus_WritingStruct

# - xinlai/Math-Step-DPO-10K

# - bigcode/self-oss-instruct-sc2-exec-filter-50k

# - HuggingFaceTB/everyday-conversations

# - hkust-nlp/gsm8k-fix

# - HuggingFaceH4/no_robots

# - THUDM/LongWriter-6k

# - THUDM/webglm-qa

# - AlgorithmicResearchGroup/ArXivDLInstruct

# - allenai/tulu-v2-sft-mixture-olmo-4096

# - bigscience/P3

# - Gryphe/Sonnet3.5-SlimOrcaDedupCleaned

# - Gryphe/Opus-WritingPrompts

# - nothingiisreal/Reddit-Dirty-And-WritingPrompts

# - nothingiisreal/Kalomaze-Opus-Instruct-25k-filtered

# - internlm/Lean-Github

# - pkuAI4M/LeanWorkbook

# - casey-martin/multilingual-mathematical-autoformalization

# - AI4M/leandojo-informalized

# - casey-martin/oa_cpp_annotate_gen

# - l3lab/ntp-mathlib-instruct-st

# - ajibawa-2023/Maths-College

# - ajibawa-2023/Maths-Grade-School

# - ajibawa-2023/General-Stories-Collection

# - XinyaoHu/AMPS_mathematica

# - XinyaoHu/AMPS_khan

# - Magpie-Align/Magpie-Pro-MT-300K-v0.1

# - Magpie-Align/Magpie-Reasoning-150K

# - gair-prox/FineWeb-pro

# - gair-prox/c4-pro

# - gair-prox/RedPajama-pro

# - gair-prox/open-web-math-pro

# - togethercomputer/Long-Data-Collections

# - emozilla/pg19

# - MathGenie/MathCode-Pile

# - KingNish/reasoning-base-20k

# - nvidia/OpenMathInstruct-2

# - LLM360/TxT360

# - neuralwork/arxiver

---

# Huginn - Baseline Checkpoint

This is the last checkpoint from our baseline (non-recurrent!) large-scale comparison training run. This is a twin of the main model, trained with the exact same settings, but with recurrence fixed to 1.

## Table of Contents

1. [How to Use](#downloading-and-using-the-model)

2. [Advanced Usage](#advanced-features)

3. [Model Summary](#model-summary)

4. [Limitations](#limitations)

5. [Technical Specifications](#technical-specifications)

6. [License](#license)

7. [Citation](#citation)

## Downloading and Using the Model

Load the model like this:

```python

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("tomg-group-umd/huginn-0125", torch_dtype=torch.bfloat16, trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained("tomg-group-umd/huginn-0125")

```

### Modifying the Model's Depth at Test Time:

By providing the argument `num_steps`, the model will execute a forward pass with that amount of compute:

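```python
# Pick a device; `model` and `tokenizer` come from the loading snippet above.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

input_ids = tokenizer.encode("The capital of Westphalia is", return_tensors="pt", add_special_tokens=True).to(device)
model.eval()
model.to(device)
model(input_ids, num_steps=32)
```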

The model has about 1.5B parameters in non-recurrent code, 0.5B parameters in the embedding, and 1.5B recurrent parameters, so, as a guideline, the number of materialized parameters is `num_steps * 1.5B + 2B`. Playing with this parameter is what makes this model interesting, and different from fixed-depth transformers!
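To make the guideline concrete, here is a small arithmetic sketch. The helper name `materialized_parameters` is hypothetical; the constants are the rounded figures quoted above (1.5B recurrent parameters per step, 2B for the non-recurrent layers plus embedding), so the result is an estimate rather than an exact count.

```python
RECURRENT_PARAMS = 1_500_000_000  # rounded size of the recurrent block, from the text above
FIXED_PARAMS = 2_000_000_000      # rounded non-recurrent (1.5B) + embedding (0.5B) parameters


def materialized_parameters(num_steps):
    """Estimate of `num_steps * 1.5B + 2B` materialized parameters."""
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    return num_steps * RECURRENT_PARAMS + FIXED_PARAMS


for steps in (1, 4, 32, 64):
    print(f"num_steps={steps:>2}: ~{materialized_parameters(steps) / 1e9:.1f}B parameters")
```

Under this guideline, `num_steps=32` corresponds to roughly 50B materialized parameters, and `num_steps=1` to roughly 3.5B.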

The model is trained to accept an arbitrary number of steps. However, using fewer than 4 steps will result in very coarse answers. If given enough context to reason about, benchmarks show the model improving up to around `num_steps=64`. Beyond that, more steps generally do not hurt, but we see no further improvements.

*Note*: Due to an upload issue the model is currently stored on HF with 2 copies of the tied embedding, instead of just one. This will be fixed in a future release.

### Inference

The model was trained with bfloat16-mixed precision, so we recommend using `bfloat16` to run inference (or AMP bfloat16-mixed precision, if you really want). All benchmarks were evaluated in pure `bfloat16`.

### Sampling

The model can be used like a normal HF model to generate text with KV-caching working as expected. You can provide `num_steps` directly to the `generate` call, for example:

```python
from transformers import GenerationConfig

model.eval()
config = GenerationConfig(max_length=256, stop_strings=["<|end_text|>", "<|end_turn|>"],
                          use_cache=True,
                          do_sample=False, temperature=None, top_k=None, top_p=None, min_p=None,
                          return_dict_in_generate=True,
                          eos_token_id=65505, bos_token_id=65504, pad_token_id=65509)

# `device` is the torch device the model lives on.
input_ids = tokenizer.encode("The capital of Westphalia is", return_tensors="pt", add_special_tokens=True).to(device)
outputs = model.generate(input_ids, config, tokenizer=tokenizer, num_steps=16)
```

*Note*: `num_steps` and other model arguments CANNOT be included in the `GenerationConfig`; they will shadow model args at runtime.

### Chat Templating

The model was not finetuned or post-trained, but due to the inclusion of instruction data during pretraining, it natively understands its chat template. You can chat with the model like so:

```python
messages = []
messages.append({"role": "system", "content": "You are a helpful assistant."})
messages.append({"role": "user", "content": "What do you think of Goethe's Faust?"})

chat_input = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
print(chat_input)

input_ids = tokenizer.encode(chat_input, return_tensors="pt", add_special_tokens=False).to(device)
model.generate(input_ids, config, num_steps=64, tokenizer=tokenizer)
```

### KV-cache Details

The model requires its own KV-cache implementation `HuginnDynamicCache`, otherwise the KV-caches of later calls to the recurrent block will overwrite the earlier ones.

The current implementation will always try to inject this cache implementation, but that may break with Hugging Face updates. If you do not use `generate`, but implement your own generation, use a pattern like this:

```python

# first step:

past_key_values = None

outputs = model(input_ids=input_ids, use_cache=True, past_key_values=past_key_values)

past_key_values = outputs.past_key_values # Should be an instance of HuginnDynamicCache

# next step

outputs = model(input_ids=input_ids, use_cache=True, past_key_values=past_key_values)

```

## Advanced Features

### Per-Token Adaptive Compute

When generating, you can also use a variable amount of compute per token. The model is not trained for this, so this is a proof of concept showing that it can do this task zero-shot.

You can pick between a few sane stopping rules, `entropy-diff`, `latent-diff`, `kl` and `argmax-stability`, via `criterion=kl`. The exit threshold can be modified via `exit_threshold=5e-4`.

We suggest using `kl` for interesting exits and `argmax-stability` for conservative exits. Note that using these variables overrides the default generation function. Not all arguments that are valid for the normal `generate` call are valid here. To make this more explicit, you can also directly call `generate_with_adaptive_compute`:

```python

from transformers import TextStreamer

streamer = TextStreamer(tokenizer)

model.generate_with_adaptive_compute(input_ids, config, num_steps=64, tokenizer=tokenizer, streamer=streamer,

continuous_compute=False, criterion="kl", exit_threshold=5e-4, cache_kwargs={"lookup_strategy": "latest-m4"})

```

Your cache strategy should be set to `"latest-m4"` if using adaptive compute.
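To give an intuition for a `kl`-style stopping rule, the sketch below exits once the KL divergence between the output distributions of two consecutive recurrence steps falls under the threshold. This is an illustrative assumption about how such a criterion can work, not the model's actual implementation; the helper names, the direction of the KL term, and the toy distributions are all hypothetical.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def steps_until_exit(distributions, exit_threshold=5e-4):
    """Return the 1-based step at which the prediction stops changing.

    `distributions[i]` is the next-token distribution after step i + 1.
    If the threshold is never reached, all available steps are used.
    """
    for step in range(1, len(distributions)):
        if kl_divergence(distributions[step], distributions[step - 1]) < exit_threshold:
            return step + 1
    return len(distributions)


# Toy per-step distributions over a two-token vocabulary (made up for illustration).
toy_steps = [[0.5, 0.5], [0.9, 0.1], [0.9, 0.1], [0.9, 0.1]]
used = steps_until_exit(toy_steps)
```

In this toy case the distribution stops changing between the second and third step, so the rule exits after three steps instead of spending all four.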

### KV-cache Sharing

To reduce KV cache memory requirements, the model can be run with fewer KV-caches, with later iterations in the recurrence overwriting earlier caches. To use this feature, set the cache argument `lookup_strategy` to include `compress-s16` (where the last number determines the size of the cache).

```python
model.generate_with_adaptive_compute(input_ids, config, num_steps=64, tokenizer=tokenizer, streamer=streamer,
                                     continuous_compute=False, cache_kwargs={"lookup_strategy": "compress-s16"})
```

You can combine this with per-token adaptive compute. In that case, your lookup strategy should be `latest-m4-compress-s16`.

### Warmstart / Continuous CoT

At each generation step, the recurrence can be warmstarted with the final state from the previous token by setting `continuous_compute=True`, like so:

```python
model.generate_with_adaptive_compute(input_ids, config, num_steps=64, tokenizer=tokenizer, streamer=streamer, continuous_compute=True)
```

## Model Summary

The model is primarily structured around decoder-only transformer blocks. However, these blocks are structured into three functional groups: the __prelude__ \\(P\\), which embeds the input data into a latent space using multiple transformer layers, then the core __recurrent block__ \\(R\\), which is the central unit of recurrent computation modifying states \\(\mathbf{s} \in \mathbb{R}^{n \times h }\\), and finally the __coda__ \\(C\\), which un-embeds from latent space using several layers and also contains the prediction head of the model.

Given a number of recurrent iterations \\(r\\), and a sequence of input tokens \\(\mathbf{x} \in V^n\\), these groups are used in the following way to produce output probabilities \\(\mathbf{p} \in \mathbb{R}^{n \times |V|}\\).

$$\mathbf{e} = P(\mathbf{x})$$

$$\mathbf{s}_0 \sim \mathcal{N}(\mathbf{0}, \sigma^2 I_{n\cdot h})$$

$$\mathbf{s}_i = R(\mathbf{e}, \mathbf{s}_{i-1}) \; \textnormal{for} \; i \in \lbrace 1, \dots, r \rbrace$$

$$\mathbf{p} = C(\mathbf{s}_r)$$

where \\(\sigma\\) is the standard deviation of the initial random state. Given an initial random state \\(\mathbf{s}_0\\), the model repeatedly applies the core block \\(R\\), which accepts the latent state \\(\mathbf{s}_{i-1}\\) and the embedded input \\(\mathbf{e}\\) and outputs a new latent state \\(\mathbf{s}_i\\). After finishing all iterations, the coda block processes the last state and produces the probabilities of the next token.
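The control flow described above can be sketched in a few lines. This is a toy illustration under loud assumptions: the function `recurrent_depth_forward` and the three `toy_*` callables are hypothetical stand-ins, the state is a flat list instead of an \\(n \times h\\) tensor, and nothing here reflects the model's real layers.

```python
import math
import random


def recurrent_depth_forward(x, prelude, recurrent_block, coda, num_steps, sigma=1.0, seed=0):
    """Prelude once, recurrent block `num_steps` times, coda once."""
    rng = random.Random(seed)
    e = prelude(x)                           # e = P(x)
    s = [rng.gauss(0.0, sigma) for _ in e]   # s_0 ~ N(0, sigma^2 I)
    for _ in range(num_steps):
        s = recurrent_block(e, s)            # s_i = R(e, s_{i-1})
    return coda(s)                           # probabilities from the coda


# Toy stand-ins for the three groups (hypothetical, for illustration only).
def toy_prelude(x):
    return [float(t) for t in x]


def toy_recurrent_block(e, s):
    # Contracting update: the influence of the random initial state decays each step.
    return [0.5 * si + ei for ei, si in zip(e, s)]


def toy_coda(s):
    # Numerically stable softmax over the final state.
    m = max(s)
    exps = [math.exp(v - m) for v in s]
    total = sum(exps)
    return [v / total for v in exps]


probs = recurrent_depth_forward([1, 2, 3], toy_prelude, toy_recurrent_block, toy_coda, num_steps=8)
```

Note that the embedded input `e` is fed into every iteration, while only the latent state `s` is carried from one iteration to the next; that is what allows the number of iterations to be chosen at test time.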

Please refer to the paper for benchmark performance on standard benchmarks.

## Limitations

Our checkpoint is trained for only 47000 steps on a broadly untested data mixture with a constant learning rate. As an academic project, the model is trained only on publicly available data and the 800B token count, while large in comparison to older fully open-source models such as the Pythia series, is small in comparison to modern open-source efforts such as OLMo, and tiny in comparison to the datasets used to train industrial open-weight models.

## Technical Specifications

This model was trained on 21 segments of 4096 AMD MI-250X GPUs on the OLCF Frontier Supercomputer in early December 2024. The model was trained using ROCm 6.2.0, and PyTorch 2.6 nightly pre-release 24/11/02. The code used to train the model can be found at https://github.com/seal-rg/recurrent-pretraining.

## License

This model is released under the [apache-2.0](https://choosealicense.com/licenses/apache-2.0/) license.

## Citation

```

@article{geiping2025scaling,

title={Scaling up Test-Time Compute with Latent Reasoning: A Recurrent Depth Approach},

author={Jonas Geiping and Sean McLeish and Neel Jain and John Kirchenbauer and Siddharth Singh and Brian R. Bartoldson and Bhavya Kailkhura and Abhinav Bhatele and Tom Goldstein},

year={2025},

eprint={2502.},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

You can also find the paper at https://huggingface.co/papers/2502.05171.

## Contact

Please feel free to contact us with any questions, or open a discussion thread on Hugging Face.

|

eggej/blockassist-bc-marine_playful_eel_1755860073

|

eggej

| 2025-08-22T10:55:05Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"marine playful eel",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:54:52Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- marine playful eel

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

kojeklollipop/blockassist-bc-spotted_amphibious_stork_1755858461

|

kojeklollipop

| 2025-08-22T10:55:00Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"spotted amphibious stork",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:54:56Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- spotted amphibious stork

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Taniosama/Mistral-resume-finetuned

|

Taniosama

| 2025-08-22T10:52:59Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2025-08-22T10:52:54Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
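
As a rough illustration of how the fields above combine, the sketch below computes a back-of-the-envelope estimate as energy used times grid carbon intensity. It is not the calculator's own implementation. The function name, the `pue` (data-center overhead) parameter, and all numbers in the example are hypothetical and stand in for values the card does not provide.

```python
def estimate_co2eq_grams(hardware_watts, hours, grid_gco2_per_kwh, pue=1.0):
    """Rough CO2eq estimate in grams (simplified; all inputs are assumptions).

    hardware_watts    -- average power draw of the training hardware, in watts
    hours             -- total hours used
    grid_gco2_per_kwh -- carbon intensity of the compute region, in gCO2eq per kWh
    pue               -- power usage effectiveness multiplier for data-center overhead
    """
    # Convert watts to kilowatts, then scale by runtime and overhead to get kWh.
    energy_kwh = hardware_watts / 1000.0 * hours * pue
    # Multiply energy by the grid's carbon intensity to get grams of CO2eq.
    return energy_kwh * grid_gco2_per_kwh


if __name__ == "__main__":
    # Hypothetical run: one 300 W accelerator for 10 hours on a 400 gCO2eq/kWh grid.
    grams = estimate_co2eq_grams(hardware_watts=300, hours=10, grid_gco2_per_kwh=400)
    print(f"Estimated emissions: {grams:.0f} g CO2eq")
```

The hardware type determines the power draw, the cloud provider and compute region determine the carbon intensity, and the hours used scale the total.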

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

koloni/blockassist-bc-deadly_graceful_stingray_1755858400

|

koloni

| 2025-08-22T10:52:58Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"deadly graceful stingray",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:52:54Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- deadly graceful stingray

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

eggej/blockassist-bc-marine_playful_eel_1755859912

|

eggej

| 2025-08-22T10:52:19Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"marine playful eel",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T10:52:11Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- marine playful eel

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

andy013567/gemma-3-1b-it-classifier-finetune-3

|

andy013567

| 2025-08-22T10:52:11Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"generated_from_trainer",

"unsloth",

"sft",

"trl",

"base_model:unsloth/gemma-3-1b-it-unsloth-bnb-4bit",

"base_model:finetune:unsloth/gemma-3-1b-it-unsloth-bnb-4bit",

"endpoints_compatible",

"region:us"

] | null | 2025-08-22T10:12:57Z |

---

base_model: unsloth/gemma-3-1b-it-unsloth-bnb-4bit

library_name: transformers

model_name: gemma-3-1b-it-classifier-finetune-3

tags:

- generated_from_trainer

- unsloth

- sft

- trl

licence: license

---

# Model Card for gemma-3-1b-it-classifier-finetune-3

This model is a fine-tuned version of [unsloth/gemma-3-1b-it-unsloth-bnb-4bit](https://huggingface.co/unsloth/gemma-3-1b-it-unsloth-bnb-4bit).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python
from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

# Load the fine-tuned checkpoint as a text-generation pipeline (device="cuda" assumes a CUDA GPU is available).
generator = pipeline("text-generation", model="andy013567/gemma-3-1b-it-classifier-finetune-3", device="cuda")

# Send a single-turn chat message; return_full_text=False keeps only the newly generated reply.
output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]
print(output["generated_text"])
```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/anhbui5302/huggingface/runs/7m1jlrdc)

This model was trained with SFT.

### Framework versions

- TRL: 0.21.0

- Transformers: 4.55.3

- PyTorch: 2.8.0+cu126

- Datasets: 3.6.0

- Tokenizers: 0.21.4
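
To check whether a local environment matches the versions listed above, a minimal sketch using the standard library's `importlib.metadata` follows. The helper names `installed_version` and `find_mismatches` are hypothetical, and the package names are assumed to be the usual PyPI distribution names (`torch` for PyTorch). The local build suffix (`+cu126`) may differ depending on how PyTorch was installed.

```python
from importlib import metadata

# Versions copied from the list above, keyed by assumed PyPI distribution name.
EXPECTED = {
    "trl": "0.21.0",
    "transformers": "4.55.3",
    "torch": "2.8.0+cu126",
    "datasets": "3.6.0",
    "tokenizers": "0.21.4",
}


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(expected, lookup=installed_version):
    """Map each package whose installed version differs to (wanted, found)."""
    mismatches = {}
    for package, wanted in expected.items():
        found = lookup(package)
        if found != wanted:
            mismatches[package] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for package, (wanted, found) in find_mismatches(EXPECTED).items():
        print(f"{package}: card lists {wanted}, found {found or 'not installed'}")
```

An exact match is not necessarily required to load the model; the check only highlights where an environment differs from the one used for training.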