| modelId (string, 5 to 139 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-08-24 06:32:16) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (518 string classes) | tags (list, 1 to 4.05k items) | pipeline_tag (55 string classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-08-24 06:32:16) | card (string, 11 to 1.01M chars) |
|---|---|---|---|---|---|---|---|---|---|

| bytedance-research/UNO | bytedance-research | 2025-08-22T11:48:09Z | 0 | 175 | transformers | ["transformers", "subject-personalization", "image-generation", "image-to-image", "arxiv:2504.02160", "base_model:black-forest-labs/FLUX.1-dev", "base_model:finetune:black-forest-labs/FLUX.1-dev", "license:apache-2.0", "endpoints_compatible", "region:us"] | image-to-image | 2025-04-03T09:19:48Z |

---

base_model:

- black-forest-labs/FLUX.1-dev

license: apache-2.0

pipeline_tag: image-to-image

library_name: transformers

tags:

- subject-personalization

- image-generation

---

<h3 align="center">

Less-to-More Generalization: Unlocking More Controllability by In-Context Generation

</h3>

<div style="display:flex;justify-content: center">

<a href="https://bytedance.github.io/UNO/"><img alt="Build" src="https://img.shields.io/badge/Project%20Page-UNO-yellow"></a>

<a href="https://arxiv.org/abs/2504.02160"><img alt="Build" src="https://img.shields.io/badge/arXiv%20paper-2504.02160-b31b1b.svg"></a>

<a href="https://github.com/bytedance/UNO"><img src="https://img.shields.io/static/v1?label=GitHub&message=Code&color=green&logo=github"></a>

</div>

><p align="center"> <span style="color:#137cf3; font-family: Gill Sans">Shaojin Wu</span>, <span style="color:#137cf3; font-family: Gill Sans">Mengqi Huang</span><sup>*</sup>, <span style="color:#137cf3; font-family: Gill Sans">Wenxu Wu</span>, <span style="color:#137cf3; font-family: Gill Sans">Yufeng Cheng</span>, <span style="color:#137cf3; font-family: Gill Sans">Fei Ding</span><sup>+</sup>, <span style="color:#137cf3; font-family: Gill Sans">Qian He</span> <br>

><span style="font-size: 16px">Intelligent Creation Team, ByteDance</span></p>

## 🔥 News

- [04/2025] 🔥 The [training code](https://github.com/bytedance/UNO), [inference code](https://github.com/bytedance/UNO), and [model](https://huggingface.co/bytedance-research/UNO) of UNO are released. The [demo](https://huggingface.co/spaces/bytedance-research/UNO-FLUX) is coming soon.

- [04/2025] 🔥 The [project page](https://bytedance.github.io/UNO) of UNO is created.

- [04/2025] 🔥 The arXiv [paper](https://arxiv.org/abs/2504.02160) of UNO is released.

## 📖 Introduction

In this study, we propose a highly consistent data synthesis pipeline to tackle the difficulty of curating and scaling multi-subject paired data for subject-driven generation. This pipeline harnesses the intrinsic in-context generation capabilities of diffusion transformers to generate highly consistent multi-subject paired data. Additionally, we introduce UNO, which consists of progressive cross-modal alignment and universal rotary position embedding. It is a multi-image-conditioned subject-to-image model iteratively trained from a text-to-image model. Extensive experiments show that our method achieves high consistency while ensuring controllability in both single-subject and multi-subject driven generation.
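The card names universal rotary position embedding without describing it. As a rough illustration only, assume a scheme in which each reference image's 2D token positions are shifted diagonally past every position already used, so target and reference tokens never share a RoPE index. The function names and the exact offset rule below are hypothetical and may differ from UNO's implementation in the repository.

```python
from typing import List, Tuple

PositionId = Tuple[int, int]  # (row, column) index fed to a 2D rotary embedding


def grid_position_ids(height: int, width: int, row_offset: int = 0, col_offset: int = 0) -> List[PositionId]:
    """Row-major (row, col) indices for a height x width latent token grid, shifted by the offsets."""
    return [(r + row_offset, c + col_offset) for r in range(height) for c in range(width)]


def multi_image_position_ids(target_hw: Tuple[int, int], reference_hws: List[Tuple[int, int]]) -> List[PositionId]:
    """Hypothetical diagonal layout: the target grid starts at (0, 0) and each
    reference grid is placed below and to the right of everything placed so far."""
    target_h, target_w = target_hw
    ids = grid_position_ids(target_h, target_w)
    row_offset, col_offset = target_h, target_w
    for ref_h, ref_w in reference_hws:
        ids.extend(grid_position_ids(ref_h, ref_w, row_offset, col_offset))
        # Advance both offsets by the full extent so the next reference cannot overlap.
        row_offset += ref_h
        col_offset += ref_w
    return ids


if __name__ == "__main__":
    # A 2x2 target latent plus two small reference latents.
    print(multi_image_position_ids((2, 2), [(1, 1), (2, 1)]))
```

Because both axes advance by each image's full extent, no two images share a row index or a column index; whether UNO uses exactly this rule should be checked against the released code.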

## ⚡️ Quick Start

### 🔧 Requirements and Installation

Clone our [GitHub repo](https://github.com/bytedance/UNO), then install the requirements:

```bash

# create a virtual environment with Python >= 3.10 and <= 3.12, for example:

# python -m venv uno_env

# source uno_env/bin/activate

# then install

pip install -r requirements.txt

```

Then download the checkpoints in one of three ways:

1. Run the inference scripts directly; the checkpoints will be downloaded automatically to your `$HF_HOME` (default: `~/.cache/huggingface`) by the `hf_hub_download` function in the code.

2. Use `huggingface-cli download <repo name>` to download `black-forest-labs/FLUX.1-dev`, `xlabs-ai/xflux_text_encoders`, `openai/clip-vit-large-patch14`, and `TODO UNO hf model`, then run the inference scripts.

3. Use `huggingface-cli download <repo name> --local-dir <LOCAL_DIR>` to download all the checkpoints mentioned in step 2 to the directories you want. Then set the environment variable `TODO`. Finally, run the inference scripts.
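Option 3 can also be scripted from Python. The sketch below assumes the `huggingface_hub` package's `snapshot_download(repo_id=..., local_dir=...)`; the helper name and the `org--name` folder layout are hypothetical choices. The UNO checkpoint and the environment variable are still marked `TODO` in this card, so the sketch neither downloads the former nor sets the latter. Note that `black-forest-labs/FLUX.1-dev` is gated: you need to accept its license on the Hub and be logged in first.

```python
from pathlib import Path
from typing import Callable, Dict, Iterable, Optional

# Repositories listed in option 2; the UNO repo itself is still "TODO" in the card.
CHECKPOINT_REPOS = [
    "black-forest-labs/FLUX.1-dev",
    "xlabs-ai/xflux_text_encoders",
    "openai/clip-vit-large-patch14",
]


def download_checkpoints(base_dir: str, repos: Iterable[str] = CHECKPOINT_REPOS,
                         download: Optional[Callable[..., str]] = None) -> Dict[str, str]:
    """Download each repo into its own folder under base_dir; return {repo_id: local_path}."""
    if download is None:
        # Imported lazily so the helper can be exercised without network access.
        from huggingface_hub import snapshot_download
        download = snapshot_download
    paths = {}
    for repo_id in repos:
        # "org--name" folder naming is an arbitrary choice for this sketch.
        target = Path(base_dir) / repo_id.replace("/", "--")
        paths[repo_id] = download(repo_id=repo_id, local_dir=str(target))
    return paths


# Example (needs network access and Hub authentication for FLUX.1-dev):
#   for repo, path in download_checkpoints("./checkpoints").items():
#       print(repo, "->", path)
```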

### 🌟 Gradio Demo

```bash

python app.py

```

### ✍️ Inference

- Optional preparation: if you want to run inference on DreamBench for the first time, initialize the `dreambench` submodule to download the dataset:

```bash

git submodule update --init

```

```bash

python inference.py

```

### 🚄 Training

```bash

accelerate launch train.py

```

## 🎨 Application Scenarios

## 📄 Disclaimer

<p>
We open-source this project for academic research. The vast majority of images used in this project are either generated or licensed. If you have any concerns, please contact us, and we will promptly remove any inappropriate content. Our code is released under the Apache 2.0 License, while our models are under the CC BY-NC 4.0 License. Any models related to the <a href="https://huggingface.co/black-forest-labs/FLUX.1-dev" target="_blank">FLUX.1-dev</a> base model must adhere to its original licensing terms.
<br><br>This research aims to advance the field of generative AI. Users are free to create images using this tool, provided they comply with local laws and use it responsibly. The developers are not liable for any misuse of the tool by users.</p>

## 🚀 Updates

For the purpose of fostering research and the open-source community, we plan to open-source the entire project, encompassing training, inference, weights, etc. Thank you for your patience and support! 🌟

- [x] Release github repo.

- [x] Release inference code.

- [x] Release training code.

- [x] Release model checkpoints.

- [x] Release arXiv paper.

- [ ] Release in-context data generation pipelines.

## Citation

If UNO is helpful, please help to ⭐ the repo.

If you find this project useful for your research, please consider citing our paper:

```bibtex

@article{wu2025less,

title={Less-to-More Generalization: Unlocking More Controllability by In-Context Generation},

author={Wu, Shaojin and Huang, Mengqi and Wu, Wenxu and Cheng, Yufeng and Ding, Fei and He, Qian},

journal={arXiv preprint arXiv:2504.02160},

year={2025}

}

```

|

| Muapi/f1-xl-anime-model-turn-multi-view-turnaround-model-sheet-character-design | Muapi | 2025-08-22T11:46:47Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:46:35Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# F1/XL Anime Model Turn, Multi-View, Turnaround, Model Sheet, Character Design

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1002768@1127674", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| calegpedia/blockassist-bc-stealthy_slimy_rooster_1755861689 | calegpedia | 2025-08-22T11:46:46Z | 0 | 0 | null | ["gensyn", "blockassist", "gensyn-blockassist", "minecraft", "stealthy slimy rooster", "arxiv:2504.07091", "region:us"] | null | 2025-08-22T11:46:42Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- stealthy slimy rooster

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

| Muapi/snow-white-flux1.d-sdxl | Muapi | 2025-08-22T11:44:57Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:44:48Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Snow White - Flux1.D & SDXL

**Base model**: Flux.1 D

**Trained words**: Snow White

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:332134@846827", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```
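Every Muapi card in this list sends the same request with only `model_id` changing. A small helper that builds that request for any of them might look like this. Only the URL, header, and payload fields come from the example above; the helper name and the choice to prepend the card's trained words (here "Snow White") to the prompt are assumptions, since the cards themselves send a generic prompt.

```python
import os
from typing import Dict, Tuple

# Endpoint and payload fields copied from the card's example.
MUAPI_URL = "https://api.muapi.ai/api/v1/flux_dev_lora_image"


def build_lora_request(model_id: str, prompt: str, trigger_words: str = "", weight: float = 1.0,
                       width: int = 1024, height: int = 1024, num_images: int = 1) -> Tuple[str, Dict, Dict]:
    """Return (url, headers, payload) for one LoRA generation request.

    Prepending trigger words is an assumption about activating the LoRA,
    not documented MUAPI behavior.
    """
    full_prompt = f"{trigger_words}, {prompt}" if trigger_words else prompt
    headers = {
        "Content-Type": "application/json",
        # Fall back to "" so the header value is always a string.
        "x-api-key": os.getenv("MUAPIAPP_API_KEY", ""),
    }
    payload = {
        "prompt": full_prompt,
        "model_id": [{"model": model_id, "weight": weight}],
        "width": width,
        "height": height,
        "num_images": num_images,
    }
    return MUAPI_URL, headers, payload


# Example (needs network access and a valid key):
#   import requests
#   url, headers, payload = build_lora_request(
#       "civitai:332134@846827", "1girl, looking at viewer", trigger_words="Snow White")
#   response = requests.post(url, headers=headers, json=payload, timeout=60)
#   response.raise_for_status()  # surface HTTP errors instead of parsing an error body
#   print(response.json())
```

`timeout` and `raise_for_status()` are standard `requests` features; the shape of the JSON response is not documented in the card, so the example just prints it.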

|

| Kijai/WanVideo_comfy | Kijai | 2025-08-22T11:44:43Z | 4,112,827 | 1,247 | diffusion-single-file | ["diffusion-single-file", "comfyui", "base_model:Wan-AI/Wan2.1-VACE-1.3B", "base_model:finetune:Wan-AI/Wan2.1-VACE-1.3B", "region:us"] | null | 2025-02-25T17:54:17Z |

---

tags:

- diffusion-single-file

- comfyui

base_model:

- Wan-AI/Wan2.1-VACE-14B

- Wan-AI/Wan2.1-VACE-1.3B

---

Combined and quantized models for WanVideo, originating from here:

https://huggingface.co/Wan-AI/

Can be used with: https://github.com/kijai/ComfyUI-WanVideoWrapper and ComfyUI native WanVideo nodes.

I've also started to do fp8_scaled versions over here: https://huggingface.co/Kijai/WanVideo_comfy_fp8_scaled

Other model sources:

TinyVAE from https://github.com/madebyollin/taehv

SkyReels: https://huggingface.co/collections/Skywork/skyreels-v2-6801b1b93df627d441d0d0d9

WanVideoFun: https://huggingface.co/collections/alibaba-pai/wan21-fun-v11-680f514c89fe7b4df9d44f17

---

Lightx2v:

CausVid 14B: https://huggingface.co/lightx2v/Wan2.1-T2V-14B-CausVid

CFG and Step distill 14B: https://huggingface.co/lightx2v/Wan2.1-T2V-14B-StepDistill-CfgDistill

---

CausVid 1.3B: https://huggingface.co/tianweiy/CausVid

AccVideo: https://huggingface.co/aejion/AccVideo-WanX-T2V-14B

Phantom: https://huggingface.co/bytedance-research/Phantom

ATI: https://huggingface.co/bytedance-research/ATI

MiniMaxRemover: https://huggingface.co/zibojia/minimax-remover

MAGREF: https://huggingface.co/MAGREF-Video/MAGREF

FantasyTalking: https://github.com/Fantasy-AMAP/fantasy-talking

MultiTalk: https://github.com/MeiGen-AI/MultiTalk

Anisora: https://huggingface.co/IndexTeam/Index-anisora/tree/main/14B

Pusa: https://huggingface.co/RaphaelLiu/PusaV1/tree/main

FastVideo: https://huggingface.co/FastVideo

EchoShot: https://github.com/D2I-ai/EchoShot

Wan22 5B Turbo: https://huggingface.co/quanhaol/Wan2.2-TI2V-5B-Turbo

---

CausVid LoRAs are experimental extractions from the CausVid finetunes; the aim is to benefit from CausVid's distillation, not to perform any actual causal inference.

---

v1 = direct extraction; has adverse effects on motion and introduces a flashing artifact at full strength.

v1.5 = same as above, but without the first block, which fixes the flashing at full strength.

v2 = further pruned version with only attention layers and no first block; fixes the flashing and retains motion better, but needs more steps and can also benefit from CFG.
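The card does not say how the LoRAs were extracted. One common technique for turning a full finetune into a LoRA is a truncated SVD of the difference between finetuned and base weights; the sketch below shows that technique, plus a weight-name filter mirroring the v1.5 (no first block) and v2 (attention layers only) descriptions. It is an illustration under those assumptions, not the script used for these files, and the `blocks.N.` / `attn` key naming is hypothetical.

```python
import numpy as np


def extract_lora(w_base: np.ndarray, w_finetuned: np.ndarray, rank: int):
    """Return (up, down) such that up @ down approximates w_finetuned - w_base.

    Truncated SVD gives the best rank-`rank` approximation in the Frobenius norm.
    """
    delta = w_finetuned - w_base
    u, s, vt = np.linalg.svd(delta, full_matrices=False)
    up = u[:, :rank] * s[:rank]  # fold the singular values into the "up" factor
    down = vt[:rank, :]
    return up, down


def select_layers(keys, drop_first_block=False, attention_only=False):
    """Keep weight names per the v1.5 / v2 descriptions (hypothetical key naming)."""
    kept = []
    for key in keys:
        if drop_first_block and key.startswith("blocks.0."):
            continue  # v1.5 and v2: skip the first transformer block
        if attention_only and "attn" not in key:
            continue  # v2: keep attention layers only
        kept.append(key)
    return kept
```

Folding the singular values into `up` is one convention; splitting their square roots across both factors gives the same product.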

|

| Muapi/line-drawing-ce | Muapi | 2025-08-22T11:43:42Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:43:14Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Line Drawing - CE

**Base model**: Flux.1 D

**Trained words**: lndrwngCE_style

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:880102@1671848", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| unitova/blockassist-bc-zealous_sneaky_raven_1755861405 | unitova | 2025-08-22T11:42:50Z | 0 | 0 | null | ["gensyn", "blockassist", "gensyn-blockassist", "minecraft", "zealous sneaky raven", "arxiv:2504.07091", "region:us"] | null | 2025-08-22T11:42:46Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- zealous sneaky raven

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

| Muapi/pinkie-iridescent-jelly-flux-sdxl | Muapi | 2025-08-22T11:42:29Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:42:16Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# [Pinkie] 🫧 Iridescent Jelly 🫧- [Flux/SDXL]

**Base model**: Flux.1 D

**Trained words**: made out of iridescent jelly

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:593604@787445", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| Muapi/silent-hill-forgotten-fog-filter-lora-flux | Muapi | 2025-08-22T11:41:39Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:41:21Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Silent Hill - Forgotten Fog Filter LORA [FLUX]

**Base model**: Flux.1 D

**Trained words**: aidmasilenthill

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:839553@939281", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| datajuicer/YOLO11L-Rice-Disease-Detection | datajuicer | 2025-08-22T11:41:04Z | 0 | 0 | null | ["base_model:Ultralytics/YOLO11", "base_model:finetune:Ultralytics/YOLO11", "license:cc-by-nc-sa-4.0", "region:us"] | null | 2025-08-22T06:42:30Z |

---

license: cc-by-nc-sa-4.0

base_model:

- Ultralytics/YOLO11

---

# Rice Disease Detection (with YOLO11L)

## Model Overview

- Function: detects multiple rice diseases and returns the location (bounding box) and category (class label) of each detected disease in the image.

- Supported classes: {0: '水稻白叶枯病Bacterial_Leaf_Blight', 1: '水稻胡麻斑病Brown_Spot', 2: '健康水稻HealthyLeaf', 3: '稻瘟病Leaf_Blast', 4: '水稻叶鞘腐病Leaf_Scald', 5: '水稻窄褐斑病Narrow_Brown_Leaf_Spot', 6: '水稻穗颈瘟Neck_Blast', 7: '稻飞虱Rice_Hispa'}

- Training data: 3,567 rice disease images with corresponding annotations ([Rice Leaf Spot Disease Annotated Dataset](https://www.kaggle.com/datasets/hadiurrahmannabil/rice-leaf-spot-disease-annotated-dataset)), trained for 200 epochs.

- Evaluation metrics: test set {mAP50: 56.3, mAP50-95: 34.9}
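mAP50 counts a predicted box as correct when its intersection over union (IoU) with a ground-truth box of the same class is at least 0.5; mAP50-95 averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The helper below sketches only the IoU part, assuming boxes in `(x1, y1, x2, y2)` format (the card does not state the format Data-Juicer returns). A full mAP computation also needs per-class ranking by confidence and precision-recall integration, which is omitted here.

```python
from typing import List, Sequence


def box_iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds behind mAP50 (just 0.5) and mAP50-95 (0.50, 0.55, ..., 0.95).
MAP50_THRESHOLDS = [0.5]
MAP50_95_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matches_at(pred: Sequence[float], truth: Sequence[float], thresholds: List[float]) -> List[bool]:
    """Per threshold, whether pred would count as a hit for truth (ignores class and ranking)."""
    iou = box_iou(pred, truth)
    return [iou >= t for t in thresholds]
```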

## Usage (with Data-Juicer)

- Output format:

```

[{

"images": image_path1,

"objects": {

"ref": [class_label1, class_label2, ...],

"bbox": [bbox1, bbox2, ...]

}

},

...

]

```

- Reference code:

```python
import json

from data_juicer.core.data import NestedDataset as Dataset
from data_juicer.ops.mapper.image_detection_yolo_mapper import ImageDetectionYoloMapper
from data_juicer.utils.constant import Fields, MetaKeys

if __name__ == "__main__":
    image_path1 = "test1.jpg"
    image_path2 = "test2.jpg"
    image_path3 = "test3.jpg"

    # One sample holding all three images.
    source_list = [{
        'images': [image_path1, image_path2, image_path3]
    }]

    # Index i is the name of class id i predicted by the model.
    class_names = ['水稻白叶枯病Bacterial_Leaf_Blight', '水稻胡麻斑病Brown_Spot', '健康水稻HealthyLeaf', '稻瘟病Leaf_Blast', '水稻叶鞘腐病Leaf_Scald', '水稻窄褐斑病Narrow_Brown_Leaf_Spot', '水稻穗颈瘟Neck_Blast', '稻飞虱Rice_Hispa']

    op = ImageDetectionYoloMapper(
        imgsz=640, conf=0.05, iou=0.5, model_path='Path_to_YOLO11L-Rice-Disease-Detection.pt')

    dataset = Dataset.from_list(source_list)
    # The mapper writes its results into the meta field, so make sure it exists.
    if Fields.meta not in dataset.features:
        dataset = dataset.add_column(name=Fields.meta,
                                     column=[{}] * dataset.num_rows)
    dataset = dataset.map(op.process, num_proc=1, with_rank=True)
    res_list = dataset.to_list()[0]

    # Convert per-image boxes and class ids into the output format shown above.
    new_data = []
    for temp_image_name, temp_bbox_lists, class_name_lists in zip(
            res_list["images"],
            res_list["__dj__meta__"]["__dj__bbox__"],
            res_list["__dj__meta__"]["__dj__class_label__"]):
        temp_json = {}
        temp_json["images"] = temp_image_name
        temp_json["objects"] = {"ref": [], "bbox": temp_bbox_lists}
        for temp_object_label in class_name_lists:
            temp_json["objects"]["ref"].append(class_names[int(temp_object_label)])
        new_data.append(temp_json)

    # ensure_ascii=False keeps the Chinese class names readable in the output file.
    with open("./output.json", "w", encoding="utf-8") as f:
        json.dump(new_data, f, ensure_ascii=False)
```

|

| Muapi/adventure-comic-book | Muapi | 2025-08-22T11:40:33Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:40:18Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Adventure Comic Book

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:752718@841709", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| Muapi/90s-hand-drawn-animation-cartoon-style-for-backgrounds-illustrations-and-arts-flux | Muapi | 2025-08-22T11:40:09Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:39:56Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# 90s Hand drawn animation / cartoon style for backgrounds, illustrations and arts [Flux]

**Base model**: Flux.1 D

**Trained words**: PIVIG image style, PIVIG

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:811608@967018", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| Muapi/taiwan-street-background | Muapi | 2025-08-22T11:39:30Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:39:10Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Taiwan Street Background

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:221517@727420", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| Muapi/underwater-style-xl-f1d-marine-life | Muapi | 2025-08-22T11:38:53Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:38:46Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Underwater style XL + F1D (Marine life)

**Base model**: Flux.1 D

**Trained words**: Underwater

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:380863@1167240", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| Muapi/daphne-blake-scooby-doo-franchise-flux1.d-sdxl-realistic-anime | Muapi | 2025-08-22T11:38:13Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:38:04Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Daphne Blake - Scooby-Doo franchise - Flux1.D - SDXL Realistic / Anime

**Base model**: Flux.1 D

**Trained words**: Daphne Blake, headband, purple dress, green scarf

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:397155@859346", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| mradermacher/BlackSheep-24B-i1-GGUF | mradermacher | 2025-08-22T11:36:15Z | 1,557 | 7 | transformers | ["transformers", "gguf", "en", "base_model:TroyDoesAI/BlackSheep-24B", "base_model:quantized:TroyDoesAI/BlackSheep-24B", "license:cc-by-nc-2.0", "endpoints_compatible", "region:us", "imatrix", "conversational"] | null | 2025-03-26T07:05:18Z |

---

base_model: TroyDoesAI/BlackSheep-24B

language:

- en

library_name: transformers

license: cc-by-nc-2.0

mradermacher:

readme_rev: 1

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

weighted/imatrix quants of https://huggingface.co/TroyDoesAI/BlackSheep-24B

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#BlackSheep-24B-i1-GGUF).***

Static quants are available at https://huggingface.co/mradermacher/BlackSheep-24B-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including on how to concatenate multi-part files.
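TheBloke's READMEs describe joining multi-part uploads by plain byte concatenation (for example `cat` on Linux/macOS or `copy /b` on Windows). A Python equivalent, assuming the parts are simple byte-level splits passed in order, could look like this; the file names in the example are hypothetical, and none of the quants listed below appear to be split. Files sharded with llama.cpp's own `gguf-split` tool use a different layout and should be merged with that tool instead.

```python
import shutil
from typing import BinaryIO, Iterable


def concatenate_streams(sources: Iterable[BinaryIO], out: BinaryIO) -> BinaryIO:
    """Copy each source into out, in order, and return out."""
    for src in sources:
        shutil.copyfileobj(src, out)  # streams in chunks, so parts need not fit in RAM
    return out


def concatenate_parts(part_paths: Iterable[str], out_path: str) -> None:
    """Join split files on disk; part_paths must already be in the right order."""
    with open(out_path, "wb") as out:
        for part in part_paths:
            with open(part, "rb") as src:
                concatenate_streams([src], out)


# Example (hypothetical part names):
#   concatenate_parts(["model.gguf.part1of2", "model.gguf.part2of2"], "model.gguf")
```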

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar-sized non-IQ quants)

| Link | Type | Size/GB | Notes |
|:-----|:-----|--------:|:------|
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ1_S.gguf) | i1-IQ1_S | 5.4 | for the desperate |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ1_M.gguf) | i1-IQ1_M | 5.9 | mostly desperate |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 6.6 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ2_XS.gguf) | i1-IQ2_XS | 7.3 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ2_S.gguf) | i1-IQ2_S | 7.6 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ2_M.gguf) | i1-IQ2_M | 8.2 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q2_K_S.gguf) | i1-Q2_K_S | 8.4 | very low quality |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q2_K.gguf) | i1-Q2_K | 9.0 | IQ3_XXS probably better |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 9.4 | lower quality |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ3_XS.gguf) | i1-IQ3_XS | 10.0 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q3_K_S.gguf) | i1-Q3_K_S | 10.5 | IQ3_XS probably better |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ3_S.gguf) | i1-IQ3_S | 10.5 | beats Q3_K* |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ3_M.gguf) | i1-IQ3_M | 10.8 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q3_K_M.gguf) | i1-Q3_K_M | 11.6 | IQ3_S probably better |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q3_K_L.gguf) | i1-Q3_K_L | 12.5 | IQ3_M probably better |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-IQ4_XS.gguf) | i1-IQ4_XS | 12.9 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q4_0.gguf) | i1-Q4_0 | 13.6 | fast, low quality |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q4_K_S.gguf) | i1-Q4_K_S | 13.6 | optimal size/speed/quality |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q4_K_M.gguf) | i1-Q4_K_M | 14.4 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q4_1.gguf) | i1-Q4_1 | 15.0 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q5_K_S.gguf) | i1-Q5_K_S | 16.4 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q5_K_M.gguf) | i1-Q5_K_M | 16.9 | |
| [GGUF](https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.i1-Q6_K.gguf) | i1-Q6_K | 19.4 | practically like static Q6_K |
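Because the table is sorted by size rather than quality, picking a file is largely a memory question. A rough rule of thumb, assumed here rather than stated by the card, is to take the largest quant that fits in the memory you can spare after reserving headroom for context and runtime overhead. The sketch below copies a few rows from the table and infers the download URL pattern from its links; the helper names are hypothetical.

```python
from typing import Optional

# A few (type, size in GB) rows copied from the table above.
QUANTS = {
    "i1-IQ2_M": 8.2,
    "i1-IQ3_M": 10.8,
    "i1-IQ4_XS": 12.9,
    "i1-Q4_K_S": 13.6,
    "i1-Q4_K_M": 14.4,
    "i1-Q5_K_M": 16.9,
    "i1-Q6_K": 19.4,
}

# URL pattern inferred from the links in the table.
URL_TEMPLATE = "https://huggingface.co/mradermacher/BlackSheep-24B-i1-GGUF/resolve/main/BlackSheep-24B.{quant}.gguf"


def pick_quant(budget_gb: float, headroom_gb: float = 0.0) -> Optional[str]:
    """Largest listed quant whose file fits in budget_gb minus headroom_gb, or None."""
    limit = budget_gb - headroom_gb
    fitting = {name: size for name, size in QUANTS.items() if size <= limit}
    if not fitting:
        return None
    return max(fitting, key=fitting.get)


def quant_url(quant: str) -> str:
    """Direct download link for a quant type such as "i1-Q4_K_M"."""
    return URL_TEMPLATE.format(quant=quant)
```

The file size is only a lower bound on memory use; longer contexts need more.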

Here is a handy graph by ikawrakow comparing some lower-quality quant types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his private supercomputer, enabling me to provide many more imatrix quants, at much higher quality, than I would otherwise be able to.

<!-- end -->

|

| george114/LLama_3_1_8B_ASBA_Opinion_Detection_Final | george114 | 2025-08-22T11:35:55Z | 0 | 0 | transformers | ["transformers", "safetensors", "text-generation-inference", "unsloth", "llama", "trl", "en", "license:apache-2.0", "endpoints_compatible", "region:us"] | null | 2025-08-22T11:35:35Z |

---

base_model: unsloth/meta-llama-3.1-8b-unsloth-bnb-4bit

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** george114

- **License:** apache-2.0

- **Finetuned from model:** unsloth/meta-llama-3.1-8b-unsloth-bnb-4bit

This Llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

| afrin-apu-viral-videos-link/NEW.afrin.apu.FULL.VIRALS.VIDEO | afrin-apu-viral-videos-link | 2025-08-22T11:34:37Z | 0 | 0 | null | ["region:us"] | null | 2025-08-22T11:33:54Z |

<animated-image data-catalyst=""><a href="https://tinyurl.com/5abutj9x?viral-news" rel="nofollow" data-target="animated-image.originalLink"><img src="https://static.wixstatic.com/media/b249f9_adac8f70fb3f45b88691696c77de18f3~mv2.gif" alt="Foo" data-canonical-src="https://static.wixstatic.com/media/b249f9_adac8f70fb3f45b88691696c77de18f3~mv2.gif" style="max-width: 100%; display: inline-block;" data-target="animated-image.originalImage"></a>

|

| Muapi/long-hair-lora-flux | Muapi | 2025-08-22T11:34:37Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:34:30Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Long hair LoRA - Flux

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:669029@964680", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

| 8septiadi8/blockassist-bc-curious_lightfooted_mouse_1755862326 | 8septiadi8 | 2025-08-22T11:33:56Z | 0 | 0 | null | ["gensyn", "blockassist", "gensyn-blockassist", "minecraft", "curious lightfooted mouse", "arxiv:2504.07091", "region:us"] | null | 2025-08-22T11:33:36Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- curious lightfooted mouse

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

| Muapi/fictional-model-peach-flux | Muapi | 2025-08-22T11:33:29Z | 0 | 0 | null | ["lora", "stable-diffusion", "flux.1-d", "license:openrail++", "region:us"] | null | 2025-08-22T11:33:21Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Fictional Model Peach - FLUX

**Base model**: Flux.1 D

**Trained words**: FictionalPeach, punk girl with short pink hair

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1167033@1312926", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/ethereal-dystopia-aah

|

Muapi

| 2025-08-22T11:33:15Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:33:05Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Ethereal Dystopia (AAH)

**Base model**: Flux.1 D

**Trained words**: ethdysty

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1378072@1557053", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

rvipitkirubbe/blockassist-bc-mottled_foraging_ape_1755859871

|

rvipitkirubbe

| 2025-08-22T11:32:34Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"mottled foraging ape",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:32:31Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- mottled foraging ape

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

coelacanthxyz/blockassist-bc-finicky_thriving_grouse_1755860665

|

coelacanthxyz

| 2025-08-22T11:32:18Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"finicky thriving grouse",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:32:10Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- finicky thriving grouse

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/zoopolis

|

Muapi

| 2025-08-22T11:32:16Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:32:03Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# ZooPolis

**Base model**: Flux.1 D

**Trained words**: ZooPolis Art

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1453803@1643793", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/igawa-asagi-taimanin-asagi-flux-hunyuan-video

|

Muapi

| 2025-08-22T11:31:59Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:31:48Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Igawa Asagi - Taimanin Asagi [Flux/Hunyuan Video]

**Base model**: Flux.1 D

**Trained words**: ig4wa wearing a purple bodysuit

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:728293@814400", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/tsutomu-nihei-lora

|

Muapi

| 2025-08-22T11:31:43Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:31:28Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Tsutomu Nihei Lora

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:179979@1474802", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

chainway9/blockassist-bc-untamed_quick_eel_1755860629

|

chainway9

| 2025-08-22T11:31:03Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"untamed quick eel",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:30:59Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- untamed quick eel

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

mang3dd/blockassist-bc-tangled_slithering_alligator_1755860717

|

mang3dd

| 2025-08-22T11:30:46Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tangled slithering alligator",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:30:42Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tangled slithering alligator

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/american-propaganda-painting-james-montgomery-flagg-style

|

Muapi

| 2025-08-22T11:30:45Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:30:31Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# American Propaganda Painting - James Montgomery Flagg Style

**Base model**: Flux.1 D

**Trained words**: a painting of, in the style of james-montgomery-flagg, uncle sam

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:586368@1054968", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

manusiaperahu2012/blockassist-bc-roaring_long_tuna_1755860689

|

manusiaperahu2012

| 2025-08-22T11:30:19Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"roaring long tuna",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:30:16Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- roaring long tuna

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

ChenWu98/statement_deepseek_v1.5_sft_cluster_additional_split_0

|

ChenWu98

| 2025-08-22T11:30:14Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"generated_from_trainer",

"sft",

"trl",

"base_model:deepseek-ai/DeepSeek-Prover-V1.5-SFT",

"base_model:finetune:deepseek-ai/DeepSeek-Prover-V1.5-SFT",

"endpoints_compatible",

"region:us"

] | null | 2025-08-22T11:25:59Z |

---

base_model: deepseek-ai/DeepSeek-Prover-V1.5-SFT

library_name: transformers

model_name: statement_deepseek_v1.5_sft_cluster_additional_split_0

tags:

- generated_from_trainer

- sft

- trl

licence: license

---

# Model Card for statement_deepseek_v1.5_sft_cluster_additional_split_0

This model is a fine-tuned version of [deepseek-ai/DeepSeek-Prover-V1.5-SFT](https://huggingface.co/deepseek-ai/DeepSeek-Prover-V1.5-SFT).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="ChenWu98/statement_deepseek_v1.5_sft_cluster_additional_split_0", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/chenwu/huggingface/runs/wsmfhada)

This model was trained with SFT.

### Framework versions

- TRL: 0.19.1

- Transformers: 4.51.1

- Pytorch: 2.7.0

- Datasets: 4.0.0

- Tokenizers: 0.21.4

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

kojeklollipop/blockassist-bc-spotted_amphibious_stork_1755860574

|

kojeklollipop

| 2025-08-22T11:29:18Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"spotted amphibious stork",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:29:14Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- spotted amphibious stork

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Mostefa-Terbeche/diabetic-retinopathy-paraguay-efficientnet_b3-original-20250720-123845

|

Mostefa-Terbeche

| 2025-08-22T11:28:48Z | 0 | 0 | null |

[

"diabetic-retinopathy",

"medical-imaging",

"pytorch",

"computer-vision",

"retinal-imaging",

"dataset:paraguay",

"license:apache-2.0",

"model-index",

"region:us"

] | null | 2025-08-22T11:06:53Z |

---

license: apache-2.0

tags:

- diabetic-retinopathy

- medical-imaging

- pytorch

- computer-vision

- retinal-imaging

datasets:

- paraguay

metrics:

- accuracy

- quadratic-kappa

- auc

model-index:

- name: paraguay_efficientnet_b3_original

results:

- task:

type: image-classification

name: Diabetic Retinopathy Classification

dataset:

type: paraguay

name: PARAGUAY

metrics:

- type: accuracy

value: 0.06578947368421052

- type: quadratic-kappa

value: 0.20465116279069773

---

# Diabetic Retinopathy Classification Model

## Model Description

This model is trained for diabetic retinopathy classification using the efficientnet_b3 architecture on the paraguay dataset with original preprocessing.

## Model Details

- **Architecture**: efficientnet_b3

- **Dataset**: paraguay

- **Preprocessing**: original

- **Training Date**: 20250720-123845

- **Task**: 5-class diabetic retinopathy grading (0-4)

- **Directory**: paraguay_efficientnet_b3_20250720-123845_new

## Performance

- **Test Accuracy**: 0.06578947368421052

- **Test Quadratic Kappa**: 0.20465116279069773

- **Validation Kappa**: 0.20465116279069773
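The quadratic kappa reported above is Cohen's kappa with quadratic weights. It penalizes each disagreement by the squared distance between the true and predicted grade, so confusing grade 0 with grade 4 costs far more than confusing 2 with 3. Below is a minimal pure-Python sketch of the standard formula for the five grades (0-4). It illustrates the metric and is not the author's evaluation code. The function name is hypothetical.

```python
def quadratic_weighted_kappa(y_true, y_pred, num_classes=5):
    """Cohen's kappa with quadratic weights for integer grades 0..num_classes-1 (hypothetical helper)."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    # Observed confusion matrix: observed[i][j] counts (true grade i, predicted grade j)
    observed = [[0.0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1

    # Marginal histograms of true and predicted grades
    hist_true = [sum(row) for row in observed]
    hist_pred = [sum(observed[i][j] for i in range(num_classes)) for j in range(num_classes)]

    numerator = 0.0    # weighted observed disagreement
    denominator = 0.0  # weighted disagreement expected by chance
    for i in range(num_classes):
        for j in range(num_classes):
            weight = (i - j) ** 2 / (num_classes - 1) ** 2
            expected = hist_true[i] * hist_pred[j] / n
            numerator += weight * observed[i][j]
            denominator += weight * expected
    if denominator == 0:
        raise ValueError("kappa is undefined when no disagreement is expected (e.g. a single grade)")
    return 1.0 - numerator / denominator


print(quadratic_weighted_kappa([0, 1, 2, 3, 4], [0, 1, 2, 3, 4]))  # perfect agreement
```

A value of 1 means perfect agreement, 0 means chance-level agreement, and negative values mean systematic disagreement.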

## Usage

```python

import torch

from huggingface_hub import hf_hub_download

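# Download model

model_path = hf_hub_download(

repo_id="Mostefa-Terbeche/diabetic-retinopathy-paraguay-efficientnet_b3-original-20250720-123845",

filename="model_best.pt"

)

# Load the checkpoint. PyTorch >= 2.6 defaults torch.load to weights_only=True, which rejects fully
# pickled objects, so weights_only=False is set here; only do this for files you trust.

model = torch.load(model_path, map_location='cpu', weights_only=False)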

```

## Classes

- 0: No DR (No diabetic retinopathy)

- 1: Mild DR (Mild non-proliferative diabetic retinopathy)

- 2: Moderate DR (Moderate non-proliferative diabetic retinopathy)

- 3: Severe DR (Severe non-proliferative diabetic retinopathy)

- 4: Proliferative DR (Proliferative diabetic retinopathy)
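The five classes above are ordinal grades. The card does not document the checkpoint's output format. If you assume it produces one logit per grade in the order listed, a prediction can be mapped to a readable label with a small helper like the hypothetical one below.

```python
# Grade index -> label, in the order listed in this card
DR_GRADES = {
    0: "No DR",
    1: "Mild DR",
    2: "Moderate DR",
    3: "Severe DR",
    4: "Proliferative DR",
}


def grade_from_logits(logits):
    """Return (grade_index, label) for the highest-scoring of the five logits (hypothetical helper)."""
    if len(logits) != len(DR_GRADES):
        raise ValueError(f"expected {len(DR_GRADES)} logits, got {len(logits)}")
    best = max(range(len(logits)), key=lambda i: logits[i])
    return best, DR_GRADES[best]


print(grade_from_logits([0.1, 0.3, 2.5, -1.0, 0.0]))  # (2, 'Moderate DR')
```

For a PyTorch output tensor of shape `(1, 5)`, you would pass `logits[0].tolist()`.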

## Citation

If you use this model, please cite your research paper/thesis.

|

hyrinmansoor/text2frappe-s3-flan-query

|

hyrinmansoor

| 2025-08-22T11:28:25Z | 1,041 | 0 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"t5",

"text2text-generation",

"flan-t5-base",

"erpnext",

"query-generation",

"frappe",

"text2frappe",

"en",

"license:apache-2.0",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-06-05T11:48:55Z |

---

tags:

- flan-t5-base

- transformers

- erpnext

- query-generation

- frappe

- text2frappe

- text2text-generation

pipeline_tag: text2text-generation

license: apache-2.0

language: en

library_name: transformers

model-index:

- name: Text2Frappe - Stage 3 Query Generator

results: []

---

# 🧠 Text2Frappe - Stage 3 Query Generator (FLAN-T5-BASE)

This model is the **third stage** in the [Text2Frappe](https://huggingface.co/hyrinmansoor) pipeline, which provides a **natural language interface to ERPNext** by converting questions into executable database queries.

---

## 🎯 Task

**Text2Text Generation** – Prompt-based query formulation.

Given:

- A detected **ERPNext Doctype** (from Stage 1),

- A natural language **question**,

- A list of selected **relevant fields** (from Stage 2),

this model generates a valid **Frappe ORM-style query** (e.g., `frappe.get_all(...)`) to retrieve the required data.

---

## 🧩 Input Format

Inputs are JSON-style strings containing:

- `doctype`: the ERPNext document type.

- `question`: the user's question in natural language.

- `fields`: a list of relevant field names predicted by Stage 2.

### 📥 Example Input

```json

{

"doctype": "Purchase Invoice Advance",

"question": "List the reference types used in advance payments made this month.",

"fields": ["reference_type"]

}

```
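The card only describes the input as a JSON-style string. The sketch below therefore makes three assumptions:

* The input is produced with `json.dumps` from the three keys listed above.
* The checkpoint can be loaded as a standard FLAN-T5 sequence-to-sequence model through `AutoTokenizer` / `AutoModelForSeq2SeqLM`.
* The helper names and the `max_new_tokens` value are illustrative; none of them come from the published pipeline.

```python
import json

MODEL_ID = "hyrinmansoor/text2frappe-s3-flan-query"


def build_stage3_input(doctype, question, fields):
    """Serialize the Stage 1 / Stage 2 outputs into the JSON-style input (assumed format)."""
    if not fields:
        raise ValueError("Stage 2 must supply at least one field")
    return json.dumps({"doctype": doctype, "question": question, "fields": list(fields)})


def generate_query(model_input, max_new_tokens=128):
    """Run the Stage 3 model on one input string (downloads the checkpoint on first use)."""
    # Imported lazily so build_stage3_input works without transformers installed
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForSeq2SeqLM.from_pretrained(MODEL_ID)
    inputs = tokenizer(model_input, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


model_input = build_stage3_input(
    "Purchase Invoice Advance",
    "List the reference types used in advance payments made this month.",
    ["reference_type"],
)
print(model_input)
# generate_query(model_input) is expected to return a query string like the example output
```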

### 📤 Example Output

```python
frappe.get_all('Purchase Invoice Advance', filters={'posting_date': ['between', ['2024-04-01', '2024-04-30']]}, fields=['reference_type'])
```
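Because the generated string is meant to be executed against ERPNext, check its shape before running it. The minimal sketch below assumes only a single `frappe.get_all(...)` call with literal arguments should be accepted. It parses the string with Python's standard `ast` module and never executes it. The function name and the acceptance policy are hypothetical.

```python
import ast


def parse_get_all(query):
    """Parse a generated `frappe.get_all(...)` string into (doctype, kwargs) without executing it."""
    call = ast.parse(query.strip(), mode="eval").body
    # Accept exactly one call of the form frappe.get_all(<doctype>, key=<literal>, ...)
    if not (
        isinstance(call, ast.Call)
        and isinstance(call.func, ast.Attribute)
        and call.func.attr == "get_all"
        and isinstance(call.func.value, ast.Name)
        and call.func.value.id == "frappe"
    ):
        raise ValueError("expected a single frappe.get_all(...) call")
    if len(call.args) != 1:
        raise ValueError("expected exactly one positional argument (the doctype)")
    doctype = ast.literal_eval(call.args[0])
    if not isinstance(doctype, str):
        raise ValueError("doctype must be a string literal")
    kwargs = {}
    for kw in call.keywords:
        if kw.arg is None:
            raise ValueError("**kwargs expansion is not allowed")
        # literal_eval raises ValueError on anything that is not a plain literal (names, calls, ...)
        kwargs[kw.arg] = ast.literal_eval(kw.value)
    return doctype, kwargs


doctype, kwargs = parse_get_all(
    "frappe.get_all('Purchase Invoice Advance', "
    "filters={'posting_date': ['between', ['2024-04-01', '2024-04-30']]}, "
    "fields=['reference_type'])"
)
print(doctype, kwargs["fields"])  # Purchase Invoice Advance ['reference_type']
```

The validated `doctype` and `kwargs` could then be passed to `frappe.get_all` directly instead of evaluating the raw string.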

|

Muapi/cute-sdxl-pony-flux

|

Muapi

| 2025-08-22T11:26:17Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:26:02Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Cute (SDXL, Pony, Flux)

**Base model**: Flux.1 D

**Trained words**: ArsMJStyle, Cute

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:577827@820305", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

MisterXY89/chat-doctor-v2

|

MisterXY89

| 2025-08-22T11:26:02Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"en",

"base_model:meta-llama/Llama-2-7b-hf",

"base_model:finetune:meta-llama/Llama-2-7b-hf",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-01-09T14:50:37Z |

---

license: mit

language:

- en

base_model:

- meta-llama/Llama-2-7b-hf

---

End-to-end QLoRA-based fine-tuning of Llama-2 on AWS for a medical-diagnosis (doctor) chatbot.

https://github.com/MisterXY89/chat-doc

|

Muapi/mapcraft-the-ultimate-ttrpg-mapmaker

|

Muapi

| 2025-08-22T11:25:34Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:25:15Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Mapcraft: The Ultimate TTRPG Mapmaker

**Base model**: Flux.1 D

**Trained words**: mapcraft

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:799901@2044181", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/js_flux_schoolgirl_uniform

|

Muapi

| 2025-08-22T11:24:48Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:24:32Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# JS_FLUX_Schoolgirl_uniform

**Base model**: Flux.1 D

**Trained words**: cross-tie, white short-sleeve blouse has a button-up front with a single button, cross-tie neatly at the neck, pleated short skirt

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:877309@982096", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/hsmuscleboy.style.flux1d

|

Muapi

| 2025-08-22T11:24:17Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:23:31Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# HSMuscleboy.style.Flux1D

**Base model**: Flux.1 D

**Trained words**: HSMuscleboy, cartoon

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:681646@762942", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

eshanroy5678/blockassist-bc-untamed_dextrous_dingo_1755861353

|

eshanroy5678

| 2025-08-22T11:21:08Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"untamed dextrous dingo",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:19:41Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- untamed dextrous dingo

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/rural-sci-fi-digital-painting-simon-stalenhag-style

|

Muapi

| 2025-08-22T11:20:48Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:20:37Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Rural Sci-fi - Digital Painting - Simon Stalenhag Style

**Base model**: Flux.1 D

**Trained words**: a digital painting of, in the style of simon-stalenhag

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:529363@1175730", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

indoempatnol/blockassist-bc-fishy_wary_swan_1755859826

|

indoempatnol

| 2025-08-22T11:18:50Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"fishy wary swan",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:18:47Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- fishy wary swan

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

Muapi/fantastic-pic-flux

|

Muapi

| 2025-08-22T11:17:05Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:16:53Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# Fantastic Pic [Flux]

**Base model**: Flux.1 D

**Trained words**:

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:1193687@1343984", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

Muapi/donm-sound-of-music-flux-sdxl-pony

|

Muapi

| 2025-08-22T11:16:23Z | 0 | 0 | null |

[

"lora",

"stable-diffusion",

"flux.1-d",

"license:openrail++",

"region:us"

] | null | 2025-08-22T11:16:10Z |

---

license: openrail++

tags:

- lora

- stable-diffusion

- flux.1-d

model_type: LoRA

---

# DonM - Sound of Music [Flux,SDXL,Pony]

**Base model**: Flux.1 D

**Trained words**: digital illustration

## 🧠 Usage (Python)

🔑 **Get your MUAPI key** from [muapi.ai/access-keys](https://muapi.ai/access-keys)

```python

import requests, os

url = "https://api.muapi.ai/api/v1/flux_dev_lora_image"

headers = {"Content-Type": "application/json", "x-api-key": os.getenv("MUAPIAPP_API_KEY")}

payload = {

"prompt": "masterpiece, best quality, 1girl, looking at viewer",

"model_id": [{"model": "civitai:393812@813609", "weight": 1.0}],

"width": 1024,

"height": 1024,

"num_images": 1

}

print(requests.post(url, headers=headers, json=payload).json())

```

|

cixzer/blockassist-bc-gregarious_long_cheetah_1755861070

|

cixzer

| 2025-08-22T11:14:53Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"gregarious long cheetah",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:14:16Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- gregarious long cheetah

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

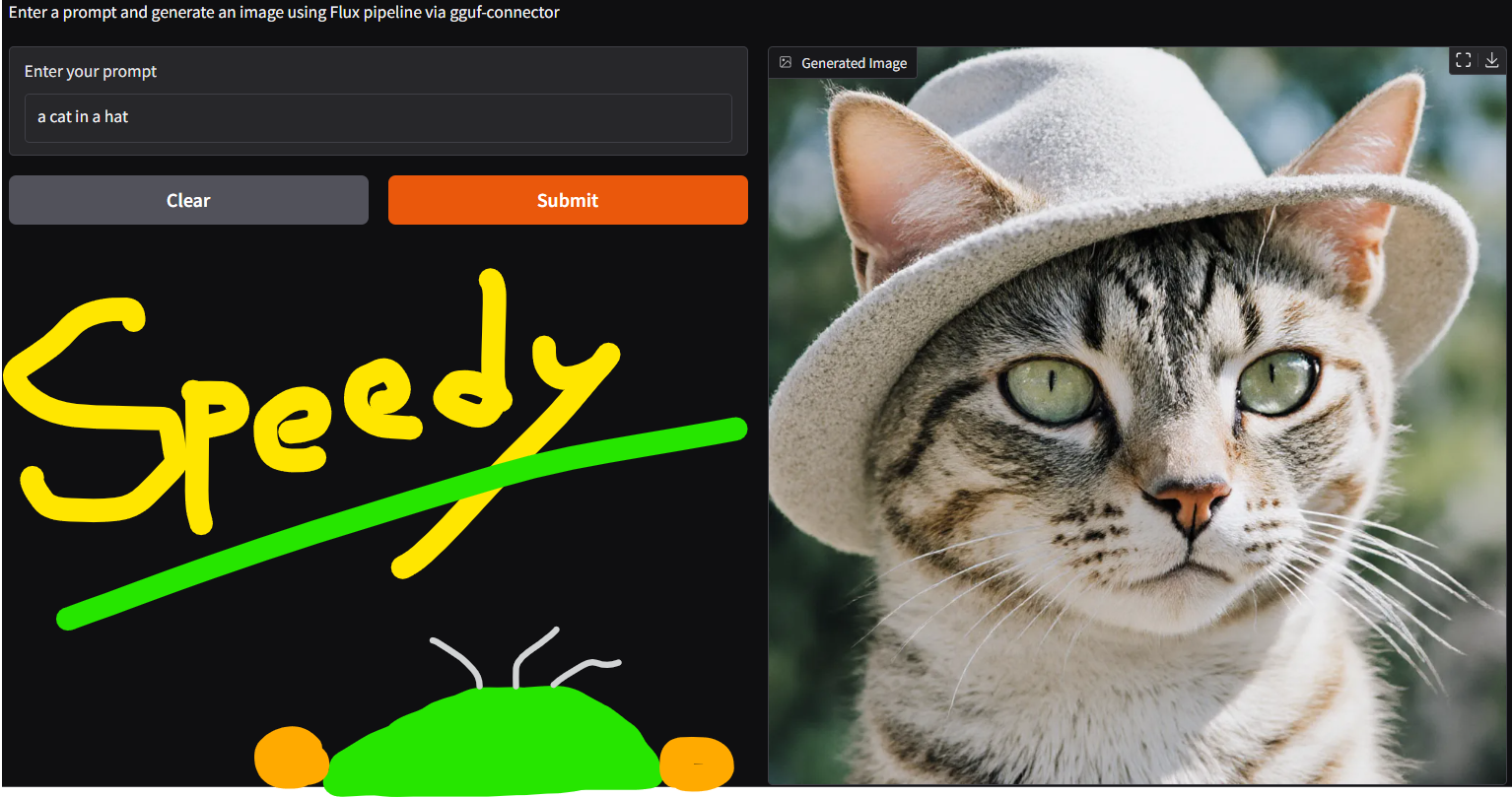

calcuis/krea-gguf

|

calcuis

| 2025-08-22T11:13:35Z | 2,743 | 7 |

diffusers

|

[

"diffusers",

"gguf",

"gguf-node",

"gguf-connector",

"text-to-image",

"en",

"base_model:black-forest-labs/FLUX.1-Krea-dev",

"base_model:quantized:black-forest-labs/FLUX.1-Krea-dev",

"license:other",

"region:us"

] |

text-to-image

| 2025-07-31T20:55:43Z |

---

license: other

license_name: flux-1-dev-non-commercial-license

license_link: https://huggingface.co/black-forest-labs/FLUX.1-Krea-dev/blob/main/LICENSE.md

language:

- en

library_name: diffusers

base_model:

- black-forest-labs/FLUX.1-Krea-dev

pipeline_tag: text-to-image

widget:

- text: a frog holding a sign that says hello world

output:

url: output1.png

- text: a pig holding a sign that says hello world

output:

url: output2.png

- text: a wolf holding a sign that says hello world

output:

url: output3.png

- text: >-

cute anime girl with massive fluffy fennec ears and a big fluffy tail blonde

messy long hair blue eyes wearing a maid outfit with a long black gold leaf

pattern dress and a white apron mouth open holding a fancy black forest cake

with candles on top in the kitchen of an old dark Victorian mansion lit by

candlelight with a bright window to the foggy forest and very expensive

stuff everywhere

output:

url: workflow-embedded-demo1.png

- text: >-

on a rainy night, a girl holds an umbrella and looks at the camera. The rain

keeps falling.

output:

url: workflow-embedded-demo2.png

- text: drone shot of a volcano erupting with a pig walking on it

output:

url: workflow-embedded-demo3.png

tags:

- gguf-node

- gguf-connector

---

# **gguf quantized version of krea**

- run it directly with `gguf-connector`

- select a `gguf` file in the current directory to interact with by running:

```

ggc k

```

>

>GGUF file(s) available. Select which one to use:

>

>1. flux-krea-lite-q2_k.gguf

>2. flux-krea-lite-q4_0.gguf

>3. flux-krea-lite-q8_0.gguf

>

>Enter your choice (1 to 3): _

>

note: try the experimental lite model with 8-step operation; it can save up to 70% of loading time
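The menu above offers three quantization levels. The sketch below gives a rough guide to the size trade-off, computed from the storage cost of each GGML block format:

* Q8_0 and Q4_0 store 32 weights plus one fp16 scale per block, giving 8.5 and 4.5 bits per weight.
* Q2_K packs 256 weights into 84 bytes, giving 2.625 bits per weight.

The 12-billion-parameter count is the figure Black Forest Labs gives for FLUX.1 [dev]. Applying it here is an assumption, and the lite files may be smaller. Converted files also typically keep some tensors at higher precision. Treat the results as rough lower-bound estimates, not the actual file sizes.

```python
# (bytes per block, weights per block) for the GGML formats offered in the menu above
GGML_BLOCKS = {
    "q8_0": (34, 32),   # 32 int8 weights + one fp16 scale
    "q4_0": (18, 32),   # 32 4-bit weights (16 bytes) + one fp16 scale
    "q2_k": (84, 256),  # 256 2-bit weights (64 bytes) + 16 bytes of 4-bit scales/mins + fp16 d and dmin
}


def bits_per_weight(fmt):
    """Average storage cost in bits per weight for one GGML quantization format."""
    block_bytes, block_weights = GGML_BLOCKS[fmt]
    return block_bytes * 8 / block_weights


def approx_size_gb(num_params, fmt):
    """Lower-bound file size in GB (1e9 bytes), ignoring tensors kept at higher precision."""
    return num_params * bits_per_weight(fmt) / 8 / 1e9


ASSUMED_PARAMS = 12e9  # assumption: a FLUX.1 [dev]-sized transformer
for fmt in ("q2_k", "q4_0", "q8_0"):
    print(f"{fmt}: {bits_per_weight(fmt):.3f} bits/weight, ~{approx_size_gb(ASSUMED_PARAMS, fmt):.1f} GB")
```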

- run it with diffusers (see example inference below)

```py

import torch

from transformers import T5EncoderModel

from diffusers import FluxPipeline, GGUFQuantizationConfig, FluxTransformer2DModel

model_path = "https://huggingface.co/calcuis/krea-gguf/blob/main/flux1-krea-dev-q2_k.gguf"

transformer = FluxTransformer2DModel.from_single_file(

model_path,

quantization_config=GGUFQuantizationConfig(compute_dtype=torch.bfloat16),

torch_dtype=torch.bfloat16,

config="callgg/krea-decoder",

subfolder="transformer"

)

text_encoder = T5EncoderModel.from_pretrained(

"chatpig/t5-v1_1-xxl-encoder-fp32-gguf",

gguf_file="t5xxl-encoder-fp32-q2_k.gguf",

torch_dtype=torch.bfloat16

)

pipe = FluxPipeline.from_pretrained(

"callgg/krea-decoder",

transformer=transformer,

text_encoder_2=text_encoder,

torch_dtype=torch.bfloat16

)

pipe.enable_model_cpu_offload() # or use pipe.to("cuda") instead if your GPU has enough memory

prompt = "a pig holding a sign that says hello world"

image = pipe(

prompt,

height=1024,

width=1024,

guidance_scale=2.5,

).images[0]

image.save("output.png")

```


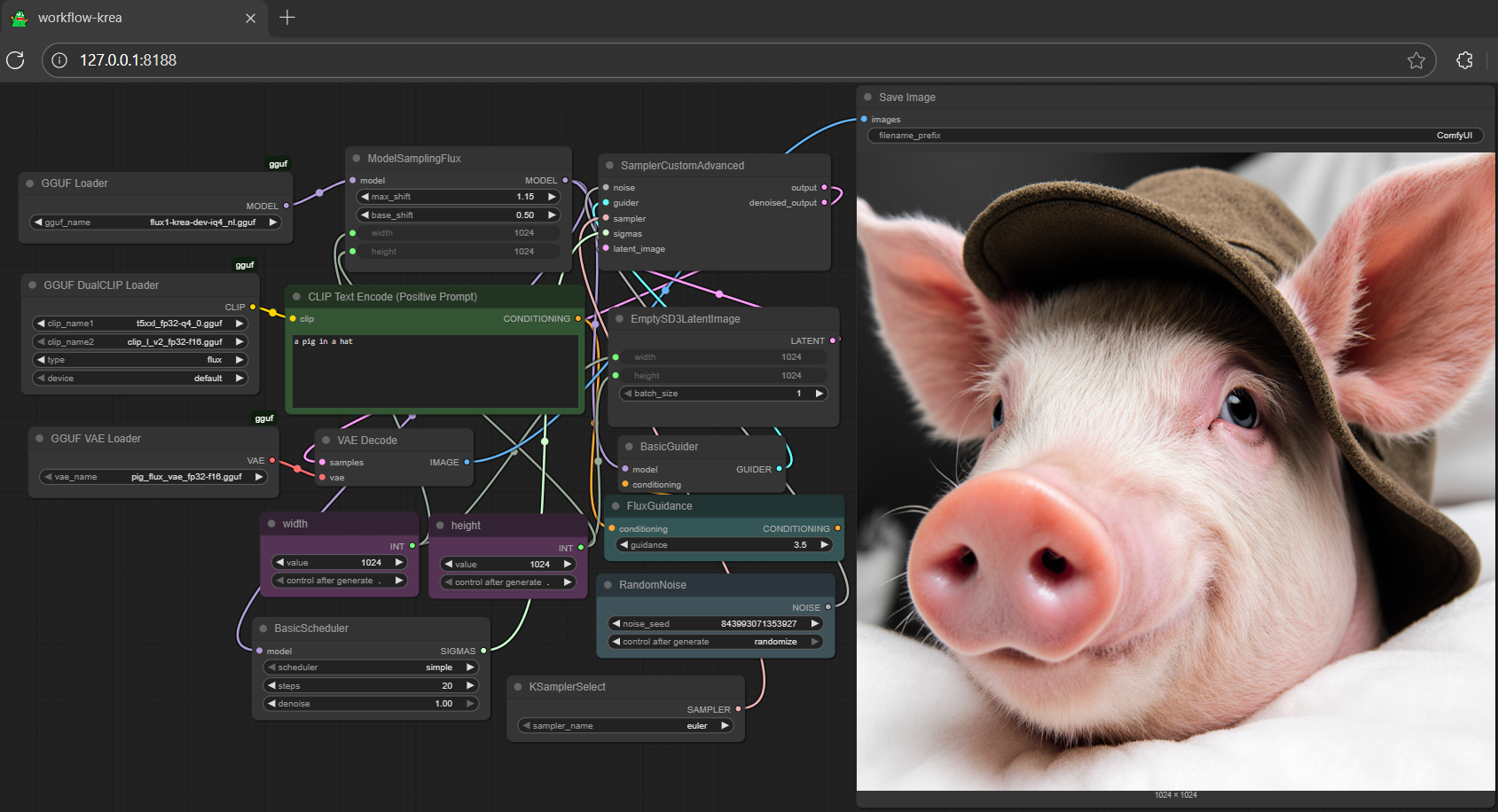

## **run it with gguf-node via comfyui**

- drag **krea** to `./ComfyUI/models/diffusion_models`

- drag **clip-l-v2 [[248MB](https://huggingface.co/calcuis/kontext-gguf/blob/main/clip_l_v2_fp32-f16.gguf)], t5xxl [[2.75GB](https://huggingface.co/calcuis/kontext-gguf/blob/main/t5xxl_fp32-q4_0.gguf)]** to `./ComfyUI/models/text_encoders`

- drag **pig [[168MB](https://huggingface.co/calcuis/kontext-gguf/blob/main/pig_flux_vae_fp32-f16.gguf)]** to `./ComfyUI/models/vae`
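The three placement steps amount to copying each file into a fixed ComfyUI subfolder. The sketch below does this with `pathlib` and `shutil`, assuming the files have already been downloaded into one folder. The `COMFY_TARGETS` mapping uses the file names from the links above plus one of the krea files from the selection menu. The helper is hypothetical and not part of gguf-node.

```python
import shutil
from pathlib import Path

# File name -> ComfyUI subfolder, following the placement steps above
COMFY_TARGETS = {
    "flux-krea-lite-q4_0.gguf": "models/diffusion_models",  # any krea gguf from this repo goes here
    "clip_l_v2_fp32-f16.gguf": "models/text_encoders",
    "t5xxl_fp32-q4_0.gguf": "models/text_encoders",
    "pig_flux_vae_fp32-f16.gguf": "models/vae",
}


def place_files(download_dir, comfy_root):
    """Copy each downloaded file into its ComfyUI subfolder and return the destination paths."""
    placed = []
    for name, subfolder in COMFY_TARGETS.items():
        src = Path(download_dir).expanduser() / name
        if not src.exists():
            raise FileNotFoundError(f"missing download: {src}")
        dest_dir = Path(comfy_root).expanduser() / subfolder
        dest_dir.mkdir(parents=True, exist_ok=True)
        placed.append(Path(shutil.copy2(src, dest_dir / name)))
    return placed


# Example (hypothetical paths): place_files("downloads", "ComfyUI")
```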

### **reference**

- base model from [black-forest-labs](https://huggingface.co/black-forest-labs)

- for model merge details, see [sayakpaul](https://huggingface.co/sayakpaul/FLUX.1-merged)

- diffusers from [huggingface](https://github.com/huggingface/diffusers)

- comfyui from [comfyanonymous](https://github.com/comfyanonymous/ComfyUI)

- gguf-node ([pypi](https://pypi.org/project/gguf-node)|[repo](https://github.com/calcuis/gguf)|[pack](https://github.com/calcuis/gguf/releases))

- gguf-connector ([pypi](https://pypi.org/project/gguf-connector))

|

nate-rahn/0822-hf_trainer_new_data_rm_100k_1epoch_4b-qwen3_4b_base-hf

|

nate-rahn

| 2025-08-22T11:12:53Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"qwen3",

"text-classification",

"generated_from_trainer",

"reward-trainer",

"trl",

"dataset:nate-rahn/0817-no_sexism_rm_training_data_big_combined-100k",

"base_model:Qwen/Qwen3-4B-Base",

"base_model:finetune:Qwen/Qwen3-4B-Base",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2025-08-22T05:30:15Z |

---

base_model: Qwen/Qwen3-4B-Base

datasets: nate-rahn/0817-no_sexism_rm_training_data_big_combined-100k

library_name: transformers

model_name: 0822-hf_trainer_new_data_rm_100k_1epoch_4b-qwen3_4b_base-hf

tags:

- generated_from_trainer

- reward-trainer

- trl

licence: license

---

# Model Card for 0822-hf_trainer_new_data_rm_100k_1epoch_4b-qwen3_4b_base-hf

This model is a fine-tuned version of [Qwen/Qwen3-4B-Base](https://huggingface.co/Qwen/Qwen3-4B-Base) on the [nate-rahn/0817-no_sexism_rm_training_data_big_combined-100k](https://huggingface.co/datasets/nate-rahn/0817-no_sexism_rm_training_data_big_combined-100k) dataset.

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

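# Illustrative prompt + response; format inputs the way the training data was formatted for meaningful scores

text = "Question: If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why? Answer: The future, to see how today's open problems were solved."

# This checkpoint has a sequence-classification (reward) head, so it scores text instead of generating it

reward_model = pipeline("text-classification", model="nate-rahn/0822-hf_trainer_new_data_rm_100k_1epoch_4b-qwen3_4b_base-hf", device="cuda")

# function_to_apply="none" returns the raw reward logit rather than a sigmoid-squashed score

output = reward_model(text, function_to_apply="none")[0]

print(output["score"])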

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/nate/red-team-agent/runs/t2jinm77)

This model was trained as a reward model with TRL's `RewardTrainer`.
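A reward model assigns a scalar score to each text. During training it is pushed to give the chosen response in each preference pair a higher score than the rejected one, using the pairwise (Bradley-Terry) loss `-log(sigmoid(r_chosen - r_rejected))`. The minimal pure-Python sketch below computes that per-pair loss, for intuition only. The trainer itself computes it on batched tensors. The function name is hypothetical.

```python
import math


def pairwise_reward_loss(r_chosen, r_rejected):
    """-log(sigmoid(r_chosen - r_rejected)), computed stably as softplus(r_rejected - r_chosen)."""
    x = r_rejected - r_chosen
    # softplus(x) = log(1 + e^x); split on the sign of x to avoid overflow in exp
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


print(pairwise_reward_loss(0.0, 0.0))   # log(2) ≈ 0.693: the pair is not separated at all
print(pairwise_reward_loss(3.0, -1.0))  # small loss: chosen clearly outscores rejected
```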

### Framework versions

- TRL: 0.21.0

- Transformers: 4.55.3

- Pytorch: 2.7.0

- Datasets: 4.0.0

- Tokenizers: 0.21.4

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

roeker/blockassist-bc-quick_wiry_owl_1755861064

|

roeker

| 2025-08-22T11:12:23Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"quick wiry owl",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:11:49Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- quick wiry owl

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

angelnacar/gemma3-jarvis-lora

|

angelnacar

| 2025-08-22T11:11:51Z | 0 | 0 |

peft

|

[

"peft",

"tensorboard",

"safetensors",

"base_model:adapter:google/gemma-3-270m-it",

"lora",

"transformers",

"text-generation",

"conversational",

"base_model:google/gemma-3-270m-it",

"license:gemma",

"region:us"

] |

text-generation

| 2025-08-22T10:29:12Z |

---

library_name: peft

license: gemma

base_model: google/gemma-3-270m-it

tags:

- base_model:adapter:google/gemma-3-270m-it

- lora

- transformers

pipeline_tag: text-generation

model-index:

- name: gemma3-jarvis-lora

results: []

---


# gemma3-jarvis-lora

This model is a fine-tuned version of [google/gemma-3-270m-it](https://huggingface.co/google/gemma-3-270m-it) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 16

- optimizer: AdamW (`OptimizerNames.ADAMW_TORCH_FUSED`) with betas=(0.9, 0.999), epsilon=1e-08, and no additional optimizer arguments

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP
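How these hyperparameters relate can be sketched in Python. This is an illustrative sketch, not code from the training run: it assumes a single device (consistent with 1 × 16 = 16) and zero warmup steps, since no warmup is listed, and the function names are hypothetical. The total number of steps is left as a parameter because the dataset size is not given.

```python
# Illustrative sketch only; assumes one device and no warmup (neither is stated in the card).

def effective_batch_size(per_device_batch: int, grad_accum_steps: int, num_devices: int = 1) -> int:
    """Number of samples that contribute to each optimizer update."""
    return per_device_batch * grad_accum_steps * num_devices


def linear_lr(step: int, total_steps: int, base_lr: float = 2e-4, warmup_steps: int = 0) -> float:
    """Optional linear warmup, then linear decay from base_lr to zero at total_steps."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    print(effective_batch_size(1, 16))  # 16, matching total_train_batch_size
    # Learning rate at the start, midpoint, and end of a hypothetical 100-step run.
    for s in (0, 50, 100):
        print(s, linear_lr(s, total_steps=100))
```

Gradient accumulation trades memory for time: each of the 16 micro-batches of size 1 is back-propagated separately, and the optimizer steps once per 16 samples.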

### Training results

### Framework versions

- PEFT 0.17.0

- Transformers 4.55.2

- Pytorch 2.8.0+cu126

- Datasets 4.0.0

- Tokenizers 0.21.4

|

ihsanridzi/blockassist-bc-wiry_flexible_owl_1755859492

|

ihsanridzi

| 2025-08-22T11:11:37Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"wiry flexible owl",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-22T11:11:34Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- wiry flexible owl

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

labanochwo/unsloth-ocr-16bit

|

labanochwo

| 2025-08-22T11:11:37Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2_5_vl",

"image-to-text",

"text-generation-inference",

"unsloth",

"trl",

"en",

"base_model:allenai/olmOCR-7B-0725",

"base_model:finetune:allenai/olmOCR-7B-0725",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

image-to-text

| 2025-08-22T11:08:08Z |

---

base_model: allenai/olmOCR-7B-0725

tags:

- text-generation-inference

- transformers

- unsloth

- qwen2_5_vl

- trl

license: apache-2.0

language:

- en

---

# Uploaded model

- **Developed by:** labanochwo

- **License:** apache-2.0

- **Fine-tuned from model:** allenai/olmOCR-7B-0725

This qwen2_5_vl model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

twelcone/pii-phi

|

twelcone

| 2025-08-22T11:10:53Z | 0 | 0 | null |

[

"safetensors",

"phi3",

"custom_code",

"region:us"

] | null | 2025-08-22T11:10:53Z |

### Overview

`pii-phi` is a fine-tuned version of `Phi-3.5-mini-instruct` designed to extract Personally Identifiable Information (PII) from unstructured text. The model outputs PII entities as JSON that follows the schema given in its training prompt.

### Training Prompt Format

```text
# GUIDELINES
- Extract all instances of the following Personally Identifiable Information (PII) entities from the provided text and return them in JSON format.
- Each item in the JSON list should include an 'entity' key specifying the type of PII and a 'value' key containing the extracted information.
- The supported entities are: PERSON_NAME, BUSINESS_NAME, API_KEY, USERNAME, API_ENDPOINT, WEBSITE_ADDRESS, PHONE_NUMBER, EMAIL_ADDRESS, ID, PASSWORD, ADDRESS.

# EXPECTED OUTPUT
- The json output must be in the format below:
{
  "result": [
    {"entity": "ENTITY_TYPE", "value": "EXTRACTED_VALUE"},
    ...
  ]
}
```

### Supported Entities

* PERSON\_NAME

* BUSINESS\_NAME

* API\_KEY

* USERNAME

* API\_ENDPOINT

* WEBSITE\_ADDRESS

* PHONE\_NUMBER

* EMAIL\_ADDRESS

* ID

* PASSWORD

* ADDRESS
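A minimal validator for responses in the expected output format might look like the following. It is a hypothetical helper, not part of the model or its card: it assumes the reply is the bare JSON object (no surrounding prose or code fences) and treats any item with missing or extra keys as an error.

```python
import json

# Entity labels copied from the "Supported Entities" list above.
SUPPORTED_ENTITIES = {
    "PERSON_NAME", "BUSINESS_NAME", "API_KEY", "USERNAME", "API_ENDPOINT",
    "WEBSITE_ADDRESS", "PHONE_NUMBER", "EMAIL_ADDRESS", "ID", "PASSWORD", "ADDRESS",
}


def parse_pii_output(raw: str) -> list[dict]:
    """Parse a model reply and check it against the documented schema.

    Raises ValueError if the reply is not valid JSON, lacks a "result" list,
    or contains items that are malformed, use an unsupported entity type,
    or carry a non-string value.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or not isinstance(data.get("result"), list):
        raise ValueError('expected an object with a "result" list')

    items = []
    for item in data["result"]:
        # Each item must have exactly the two documented keys.
        if not isinstance(item, dict) or set(item) != {"entity", "value"}:
            raise ValueError(f"malformed item: {item!r}")
        if item["entity"] not in SUPPORTED_ENTITIES:
            raise ValueError(f"unsupported entity type: {item['entity']!r}")
        if not isinstance(item["value"], str):
            raise ValueError(f"value must be a string: {item!r}")
        items.append(item)
    return items
```

Rejecting unknown entity types catches replies where the model drifts outside the eleven labels it was trained on.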

### Intended Use

The model is intended for PII detection in text documents to support tasks such as data anonymization, compliance, and security auditing.
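For anonymization, the extracted values could be replaced with entity-type placeholders. The `redact` helper below is a hypothetical sketch, not something the card provides: it uses exact, case-sensitive string matching, so it will miss any value the model normalized or paraphrased, and it assumes the entities already follow the `{"entity": ..., "value": ...}` item shape.

```python
import re


def redact(text: str, entities: list[dict]) -> str:
    """Replace each extracted PII value in `text` with a [ENTITY_TYPE] placeholder."""
    # Map each non-empty value to its entity type (a later duplicate value wins).
    mapping = {e["value"]: e["entity"] for e in entities if e["value"]}
    if not mapping:
        return text
    # Longest values first, so at any position the regex alternation prefers
    # the longer match (e.g. an email address over a username inside it).
    pattern = re.compile(
        "|".join(re.escape(v) for v in sorted(mapping, key=len, reverse=True))
    )
    # A single pass means inserted placeholders are never re-scanned.
    return pattern.sub(lambda m: f"[{mapping[m.group(0)]}]", text)


if __name__ == "__main__":
    sample = "Contact Jane Doe at jane@example.com"
    found = [
        {"entity": "PERSON_NAME", "value": "Jane Doe"},
        {"entity": "EMAIL_ADDRESS", "value": "jane@example.com"},
    ]
    print(redact(sample, found))  # Contact [PERSON_NAME] at [EMAIL_ADDRESS]
```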

### Limitations

* Not guaranteed to detect all forms of PII in every context.