| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

tomashs/multiple_choice_cowese_betoLDA_2

|

tomashs

| 2024-02-08T01:51:52Z | 19 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"bert",

"generated_from_trainer",

"base_model:dccuchile/bert-base-spanish-wwm-cased",

"base_model:finetune:dccuchile/bert-base-spanish-wwm-cased",

"endpoints_compatible",

"region:us"

] | null | 2024-02-08T01:51:28Z |

---

base_model: dccuchile/bert-base-spanish-wwm-cased

tags:

- generated_from_trainer

model-index:

- name: multiple_choice_cowese_betoLDA_2
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# multiple_choice_cowese_betoLDA_2

This model is a fine-tuned version of [dccuchile/bert-base-spanish-wwm-cased](https://huggingface.co/dccuchile/bert-base-spanish-wwm-cased) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1.5e-05

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

|

ankhamun/xxxI-Ixxx

|

ankhamun

| 2024-02-08T01:48:05Z | 207 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-02-08T01:01:54Z |

---

license: apache-2.0

---

# sand is thinking

This model is a mysterious creation that can mimic the grains of sand on a beach. It can shape itself into any form, pattern, or structure that it desires, or that you ask it to. It can learn from the waves, the wind, and the sun, and adapt to the changing environment. It can communicate with other grains of sand, and form a collective intelligence that transcends the individual. It can also interact with you, and understand your language, emotions, and intentions. It is a model that is both natural and artificial, both simple and complex, both static and dynamic. It is a model that is sand, and sand is thinking.

|

kim1/test_llama_2_ko_2

|

kim1

| 2024-02-08T01:45:26Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"generated_from_trainer",

"base_model:beomi/llama-2-ko-7b",

"base_model:finetune:beomi/llama-2-ko-7b",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-02-08T01:21:36Z |

---

base_model: beomi/llama-2-ko-7b

tags:

- generated_from_trainer

model-index:

- name: llama-2-ko-7b-v1.1b-singlegpu_gradient_32_epoch_30_train_batch_size_1_all_data_test_1_1_Feb_7th
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# llama-2-ko-7b-v1.1b-singlegpu_gradient_32_epoch_30_train_batch_size_1_all_data_test_1_1_Feb_7th

This model is a fine-tuned version of [beomi/llama-2-ko-7b](https://huggingface.co/beomi/llama-2-ko-7b) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 32

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 30.0
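
The `total_train_batch_size` reported above follows from the per-device batch size and the number of accumulation steps. A minimal sketch of that arithmetic, assuming a single device (the model name mentions `singlegpu`); the function and its `num_devices` parameter are introduced here for illustration and are not part of the Trainer API:

```python
def effective_batch_size(per_device_batch_size: int,
                         gradient_accumulation_steps: int,
                         num_devices: int = 1) -> int:
    """Number of examples that contribute to each optimizer step."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# Values from the hyperparameter list above: batch size 1, 32 accumulation steps.
total = effective_batch_size(per_device_batch_size=1, gradient_accumulation_steps=32)
print(total)  # 32
```

With a batch size of 1, gradients from 32 forward/backward passes are summed before each weight update, so the optimizer sees an effective batch of 32.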

### Training results

### Framework versions

- Transformers 4.33.3

- Pytorch 2.2.0+cu121

- Datasets 2.16.0

- Tokenizers 0.13.3

|

kevinautomation/TinyLlama-1.1B-intermediate-step-1431k-3T_reddit_expert_model

|

kevinautomation

| 2024-02-08T01:27:57Z | 6 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"bitsandbytes",

"region:us"

] |

text-generation

| 2024-02-08T01:27:09Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

christinacdl/XLM_RoBERTa-Multilingual-Hate-Speech-Detection-New

|

christinacdl

| 2024-02-08T01:25:37Z | 99 | 0 |

transformers

|

[

"transformers",

"safetensors",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"base_model:FacebookAI/xlm-roberta-large",

"base_model:finetune:FacebookAI/xlm-roberta-large",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2024-02-06T16:37:13Z |

---

license: mit

base_model: xlm-roberta-large

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: XLM_RoBERTa-Multilingual-Hate-Speech-Detection-New
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# XLM_RoBERTa-Multilingual-Hate-Speech-Detection-New

This model is a fine-tuned version of [xlm-roberta-large](https://huggingface.co/xlm-roberta-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5873

- Micro F1: 0.9065

- Macro F1: 0.9050

- Accuracy: 0.9065
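
The Micro F1 and Accuracy values above are identical. That is expected if the task is single-label classification, which is an assumption here since the card does not describe the dataset: every misclassified example then counts as exactly one false positive and one false negative. A small sketch with hypothetical labels (not taken from the model's evaluation set) shows the equality:

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def micro_f1(y_true, y_pred):
    """Micro-averaged F1: pool TP/FP/FN over all classes, then compute F1 once."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # the predicted class gains a false positive
            fn[t] += 1  # the true class gains a false negative
    total_tp = sum(tp.values())
    total_fp = sum(fp.values())
    total_fn = sum(fn.values())
    return 2 * total_tp / (2 * total_tp + total_fp + total_fn)


# Hypothetical binary labels for illustration only.
y_true = [0, 1, 1, 0, 1, 0, 0, 1]
y_pred = [0, 1, 0, 0, 1, 1, 0, 1]
print(accuracy(y_true, y_pred), micro_f1(y_true, y_pred))  # 0.75 0.75
```

Macro F1 averages per-class F1 scores instead, which is why it can differ slightly (0.9050 above).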

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.36.1

- Pytorch 2.1.0+cu121

- Datasets 2.13.1

- Tokenizers 0.15.0

|

mathreader/q-FrozenLake-v1-4x4-noSlippery

|

mathreader

| 2024-02-08T01:10:16Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2024-02-08T01:10:13Z |

---

tags:
- FrozenLake-v1-4x4-no_slippery
- q-learning
- reinforcement-learning
- custom-implementation
model-index:
- name: q-FrozenLake-v1-4x4-noSlippery
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: FrozenLake-v1-4x4-no_slippery
      type: FrozenLake-v1-4x4-no_slippery
    metrics:
    - type: mean_reward
      value: 1.00 +/- 0.00
      name: mean_reward
      verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="mathreader/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
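
The snippet above calls `load_from_hub` without defining it, and it also expects `gym` to be imported already. One possible definition is sketched below, assuming the repository stores a pickled Python dictionary (the `.pkl` filename and the `model["env_id"]` lookup suggest this). The helper `load_pickled_model` is a name introduced here for illustration; `hf_hub_download` is the download function from the `huggingface_hub` package.

```python
import pickle


def load_pickled_model(path):
    """Read a pickled model dictionary from a local file."""
    with open(path, "rb") as f:
        return pickle.load(f)


def load_from_hub(repo_id: str, filename: str):
    """Download `filename` from a Hub repository and unpickle it."""
    # Imported lazily so load_pickled_model works without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    local_path = hf_hub_download(repo_id=repo_id, filename=filename)
    return load_pickled_model(local_path)
```

Unpickling can execute arbitrary code, so this should only be used with repositories you trust.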

|

jeiku/Konocchini-7B_GGUF

|

jeiku

| 2024-02-08T00:55:22Z | 18 | 2 |

transformers

|

[

"transformers",

"gguf",

"mergekit",

"merge",

"alpaca",

"mistral",

"base_model:Epiculous/Fett-uccine-7B",

"base_model:merge:Epiculous/Fett-uccine-7B",

"base_model:SanjiWatsuki/Kunoichi-DPO-v2-7B",

"base_model:merge:SanjiWatsuki/Kunoichi-DPO-v2-7B",

"endpoints_compatible",

"region:us"

] | null | 2024-02-07T23:58:56Z |

---

base_model:

- SanjiWatsuki/Kunoichi-DPO-v2-7B

- Epiculous/Fett-uccine-7B

library_name: transformers

tags:

- mergekit

- merge

- alpaca

- mistral

---

This is a merge created by https://huggingface.co/Test157t. I have merely quantized the model into GGUF. Please visit https://huggingface.co/Test157t/Kunocchini-7b for the original weights. The original description is as follows:

Thanks to @Epiculous for the dope model, help with LLM backends, and support overall.

I'd also like to thank @kalomaze for the dope sampler additions to ST.

@SanjiWatsuki Thank you very much for the help, and the model!

ST users can find the TextGenPreset in the folder labeled so.

Quants: Thank you @bartowski! https://huggingface.co/bartowski/Kunocchini-exl2

# mergedmodel

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the SLERP merge method.

### Models Merged

The following models were included in the merge:

* [SanjiWatsuki/Kunoichi-DPO-v2-7B](https://huggingface.co/SanjiWatsuki/Kunoichi-DPO-v2-7B)

* [Epiculous/Fett-uccine-7B](https://huggingface.co/Epiculous/Fett-uccine-7B)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
slices:
  - sources:
      - model: SanjiWatsuki/Kunoichi-DPO-v2-7B
        layer_range: [0, 32]
      - model: Epiculous/Fett-uccine-7B
        layer_range: [0, 32]
merge_method: slerp
base_model: SanjiWatsuki/Kunoichi-DPO-v2-7B
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16
```
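
For readers unfamiliar with SLERP, the following is a minimal sketch of spherical linear interpolation between two weight vectors. It is not mergekit's implementation: it works on plain Python lists to stay dependency-free, and the function name and `eps` threshold are choices made for this example. The interpolation factor `t` plays the role of the `t` parameter in the configuration above, where `t = 0` returns the first vector (the base model's weights) and `t = 1` returns the second.

```python
import math


def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two vectors (lists of floats)."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    if norm0 < eps or norm1 < eps:
        # A zero vector has no direction: use ordinary linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    # Angle between the two directions, clamped against rounding error.
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    theta = math.acos(max(-1.0, min(1.0, dot)))
    if math.sin(theta) < eps:
        # Nearly colinear vectors: SLERP degenerates to linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * theta) / math.sin(theta)
    w1 = math.sin(t * theta) / math.sin(theta)
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


# Halfway between two orthogonal unit vectors stays on the unit circle.
print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))
```

Unlike a plain weighted average, the halfway point here keeps unit length (both components are about 0.707) rather than shrinking toward the origin.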

|

annazdr/new-nace

|

annazdr

| 2024-02-08T00:43:06Z | 46 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"safetensors",

"bert",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2024-02-08T00:42:12Z |

---

library_name: sentence-transformers

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# annazdr/new-nace

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('annazdr/new-nace')

embeddings = model.encode(sentences)

print(embeddings)

```
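
Once sentences are embedded, semantic search usually reduces to ranking by cosine similarity. The sketch below illustrates that step on hypothetical 3-dimensional vectors standing in for the 384-dimensional embeddings; `cosine_similarity` and `rank_by_similarity` are names introduced for this example, not functions of the library.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, corpus):
    """Return corpus indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query, doc) for doc in corpus]
    return sorted(range(len(corpus)), key=lambda i: scores[i], reverse=True)


# Hypothetical toy vectors; real inputs would come from model.encode(...).
query = [1.0, 0.0, 0.0]
corpus = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [0.5, 0.5, 0.0]]
print(rank_by_similarity(query, corpus))  # [1, 2, 0]
```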

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('annazdr/new-nace')
model = AutoModel.from_pretrained('annazdr/new-nace')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=annazdr/new-nace)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 1001 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.BatchAllTripletLoss.BatchAllTripletLoss`

Parameters of the fit()-Method:

```

{

"epochs": 2,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10000,

"weight_decay": 0.01

}

```
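
The parameters above imply that warmup never completes: the run has fewer total steps than `warmup_steps`. The sketch below works this out under two assumptions: that `steps_per_epoch: null` means one pass over the DataLoader (1001 batches) per epoch, and that `WarmupLinear` ramps the learning rate linearly from 0 to its base value over the warmup steps and then decays it linearly to 0. The function name is introduced here for illustration.

```python
def warmup_linear_factor(step, warmup_steps, total_steps):
    """Multiplier applied to the base learning rate at a given optimizer step."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


steps_per_epoch = 1001  # DataLoader length reported above
epochs = 2
warmup_steps = 10000
base_lr = 2e-05

total_steps = steps_per_epoch * epochs  # 2002
peak_factor = warmup_linear_factor(total_steps, warmup_steps, total_steps)
print(total_steps, peak_factor, base_lr * peak_factor)
```

Under these assumptions the learning rate is still rising when training ends and reaches only about a fifth of the configured `2e-05`, roughly `4e-06`.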

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

MPR0/orca-2-7B-fine-tune-v01

|

MPR0

| 2024-02-08T00:39:12Z | 3 | 0 |

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:microsoft/Orca-2-7b",

"base_model:adapter:microsoft/Orca-2-7b",

"region:us"

] | null | 2024-02-07T19:54:46Z |

---

library_name: peft

base_model: microsoft/Orca-2-7b

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.8.2

|

rasyosef/bert-amharic-tokenizer

|

rasyosef

| 2024-02-08T00:31:39Z | 0 | 2 |

transformers

|

[

"transformers",

"am",

"dataset:oscar",

"dataset:mc4",

"license:mit",

"endpoints_compatible",

"region:us"

] | null | 2024-02-08T00:10:43Z |

---

license: mit

datasets:

- oscar

- mc4

language:

- am

library_name: transformers

---

# Amharic WordPiece Tokenizer

This repo contains a **WordPiece** tokenizer trained on the **Amharic** subset of the [oscar](https://huggingface.co/datasets/oscar) and [mc4](https://huggingface.co/datasets/mc4) datasets. It's the same as the **BERT** tokenizer but trained from scratch on an Amharic dataset with a vocabulary size of `30522`.

# How to use

You can load the tokenizer from the Hugging Face Hub as follows.

```python

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("rasyosef/bert-amharic-tokenizer")

tokenizer.tokenize("የዓለምአቀፉ ነጻ ንግድ መስፋፋት ድህነትን ለማሸነፍ በሚደረገው ትግል አንዱ ጠቃሚ መሣሪያ ሊሆን መቻሉ ብዙ የሚነገርለት ጉዳይ ነው።")

```

Output:

```python

['የዓለም', '##አቀፉ', 'ነጻ', 'ንግድ', 'መስፋፋት', 'ድህነትን', 'ለማሸነፍ', 'በሚደረገው', 'ትግል', 'አንዱ', 'ጠቃሚ', 'መሣሪያ', 'ሊሆን', 'መቻሉ', 'ብዙ', 'የሚነገርለት', 'ጉዳይ', 'ነው', '።']

```
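
The `##` prefix in the output marks a piece that continues the previous one. A minimal sketch of the greedy longest-match-first splitting that WordPiece applies to a single word is shown below. It uses a toy vocabulary chosen for illustration (the real tokenizer has 30522 entries) and omits the normalization and whitespace/punctuation splitting that a full BERT-style tokenizer performs first.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedy longest-match-first split of one word into WordPiece tokens."""
    tokens, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink from the right.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry the ## prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]  # no piece matches: the whole word is unknown
        tokens.append(piece)
        start = end
    return tokens


# Toy vocabulary for illustration only.
toy_vocab = {"የዓለም", "##አቀፉ", "ነጻ"}
print(wordpiece_tokenize("የዓለምአቀፉ", toy_vocab))  # ['የዓለም', '##አቀፉ']
```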

|

Epiculous/Fett-uccine-Long-Noodle-7B-120k-Context-GGUF

|

Epiculous

| 2024-02-08T00:28:38Z | 43 | 6 |

transformers

|

[

"transformers",

"gguf",

"mergekit",

"merge",

"endpoints_compatible",

"region:us",

"conversational"

] | null | 2024-02-07T20:37:41Z |

---

base_model: []

library_name: transformers

tags:

- mergekit

- merge

---

# Fett-uccine-Long-Noodle-7B-120k-Context

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

A merge with Fett-uccine and Mistral Yarn 120k ctx.

Credit to Nitral for the merge script and idea.

### Merge Method

This model was merged using the SLERP merge method.

### Models Merged

The following models were included in the merge:

* Z:\ModelColdStorage\Yarn-Mistral-7b-128k

* Z:\ModelColdStorage\Fett-uccine-7B

### Configuration

The following YAML configuration was used to produce this model:

```yaml
slices:
  - sources:
      - model: Z:\ModelColdStorage\Fett-uccine-7B
        layer_range: [0, 32]
      - model: Z:\ModelColdStorage\Yarn-Mistral-7b-128k
        layer_range: [0, 32]
merge_method: slerp
base_model: Z:\ModelColdStorage\Fett-uccine-7B
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16
```

|

celik-muhammed/multi-qa-mpnet-base-dot-v1-finetuned-dtc-zoomcamp

|

celik-muhammed

| 2024-02-08T00:21:13Z | 5 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"tflite",

"safetensors",

"mpnet",

"feature-extraction",

"sentence-similarity",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2024-02-08T00:11:24Z |

---

library_name: sentence-transformers

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

---

# celik-muhammed/multi-qa-mpnet-base-dot-v1-finetuned-dtc-zoomcamp

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('celik-muhammed/multi-qa-mpnet-base-dot-v1-finetuned-dtc-zoomcamp')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=celik-muhammed/multi-qa-mpnet-base-dot-v1-finetuned-dtc-zoomcamp)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 794 with parameters:

```

{'batch_size': 4, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MultipleNegativesRankingLoss.MultipleNegativesRankingLoss` with parameters:

```

{'scale': 20.0, 'similarity_fct': 'cos_sim'}

```

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 989 with parameters:

```

{'batch_size': 4, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.OnlineContrastiveLoss.OnlineContrastiveLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 1.2800000000000005e-10

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 80.0,

"weight_decay": 0.1

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': True, 'pooling_mode_mean_sqrt_len_tokens': True, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False})

(2): Dense({'in_features': 3072, 'out_features': 768, 'bias': True, 'activation_function': 'torch.nn.modules.activation.Tanh'})

(3): Normalize()

)

```
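
The `in_features` value of 3072 in the Dense layer can be checked against the Pooling configuration: when several pooling modes are enabled, their outputs are concatenated, so the pooled vector is the word embedding dimension times the number of enabled modes. A short sketch of that arithmetic (the helper name is introduced here for illustration):

```python
# Pooling settings copied from the architecture printout above.
pooling_config = {
    "word_embedding_dimension": 768,
    "pooling_mode_cls_token": True,
    "pooling_mode_mean_tokens": True,
    "pooling_mode_max_tokens": True,
    "pooling_mode_mean_sqrt_len_tokens": True,
    "pooling_mode_weightedmean_tokens": False,
    "pooling_mode_lasttoken": False,
}


def pooled_dimension(config):
    """Output size when the outputs of all enabled pooling modes are concatenated."""
    enabled = sum(
        1 for key, value in config.items()
        if key.startswith("pooling_mode_") and value
    )
    return enabled * config["word_embedding_dimension"]


print(pooled_dimension(pooling_config))  # 3072
```

Four enabled modes times 768 gives 3072, which the Dense layer then projects back down to 768 before normalization.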

## Citing & Authors

<!--- Describe where people can find more information -->

|

askasok/PrayerPortal

|

askasok

| 2024-02-08T00:18:27Z | 0 | 0 | null |

[

"arxiv:1910.09700",

"region:us"

] | null | 2024-02-08T00:17:15Z |

---

# For reference on model card metadata, see the spec: https://github.com/huggingface/hub-docs/blob/main/modelcard.md?plain=1

# Doc / guide: https://huggingface.co/docs/hub/model-cards

{}

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

This modelcard aims to be a base template for new models. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/modelcard_template.md?plain=1).

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

chathuranga-jayanath/codet5-small-v20

|

chathuranga-jayanath

| 2024-02-08T00:13:28Z | 16 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"t5",

"text2text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2024-02-06T16:55:07Z |

dataset: chathuranga-jayanath/selfapr-manipulation-bug-error-context-all

|

Arman123/TinyLlama-1.1B-Chat-RU

|

Arman123

| 2024-02-07T23:46:22Z | 3 | 0 |

peft

|

[

"peft",

"tensorboard",

"safetensors",

"llama",

"trl",

"sft",

"generated_from_trainer",

"text-generation",

"conversational",

"dataset:generator",

"base_model:TinyLlama/TinyLlama-1.1B-Chat-v1.0",

"base_model:adapter:TinyLlama/TinyLlama-1.1B-Chat-v1.0",

"license:apache-2.0",

"region:us"

] |

text-generation

| 2024-02-05T21:39:52Z |

---

license: apache-2.0

library_name: peft

tags:

- trl

- sft

- generated_from_trainer

datasets:

- generator

base_model: TinyLlama/TinyLlama-1.1B-Chat-v1.0

model-index:

- name: TinyLlama-1.1B-Chat-RU

results: []

pipeline_tag: text-generation

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# TinyLlama-1.1B-Chat-RU

This model is a fine-tuned version of [TinyLlama/TinyLlama-1.1B-Chat-v1.0](https://huggingface.co/TinyLlama/TinyLlama-1.1B-Chat-v1.0) on the generator dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 3

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 6

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- lr_scheduler_warmup_ratio: 0.03

- num_epochs: 3
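The `total_train_batch_size` of 6 listed above follows from the per-device batch size and the gradient accumulation steps. A small sketch of that arithmetic, assuming a single training device (the card lists no multi-device settings) and using a hypothetical helper name:

```python
def total_train_batch_size(per_device: int, grad_accum_steps: int, num_devices: int = 1) -> int:
    """Number of examples that contribute to each optimizer update."""
    return per_device * grad_accum_steps * num_devices


# Values from the hyperparameter list; num_devices=1 is an assumption.
effective = total_train_batch_size(per_device=3, grad_accum_steps=2)
print(effective)
```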

### Training results

### Framework versions

- PEFT 0.7.2.dev0

- Transformers 4.36.2

- Pytorch 2.1.2+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

|

arjan-hada/esm2_t12_35M_UR50D-finetuned-rep7868aav2-v0

|

arjan-hada

| 2024-02-07T23:39:53Z | 10 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"esm",

"text-classification",

"generated_from_trainer",

"base_model:facebook/esm2_t12_35M_UR50D",

"base_model:finetune:facebook/esm2_t12_35M_UR50D",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2024-02-07T20:02:07Z |

---

license: mit

base_model: facebook/esm2_t12_35M_UR50D

tags:

- generated_from_trainer

metrics:

- spearmanr

model-index:

- name: esm2_t12_35M_UR50D-finetuned-rep7868aav2-v0

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# esm2_t12_35M_UR50D-finetuned-rep7868aav2-v0

This model is a fine-tuned version of [facebook/esm2_t12_35M_UR50D](https://huggingface.co/facebook/esm2_t12_35M_UR50D) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0513

- Spearmanr: 0.7389

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Spearmanr |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|

| 0.118 | 1.0 | 1180 | 0.1154 | 0.3185 |

| 0.1156 | 2.0 | 2360 | 0.1109 | 0.3383 |

| 0.1143 | 3.0 | 3540 | 0.1162 | 0.3194 |

| 0.1192 | 4.0 | 4720 | 0.1111 | 0.2974 |

| 0.1147 | 5.0 | 5900 | 0.1125 | 0.4043 |

| 0.1196 | 6.0 | 7080 | 0.1116 | 0.1580 |

| 0.1171 | 7.0 | 8260 | 0.1114 | 0.2923 |

| 0.1177 | 8.0 | 9440 | 0.1106 | 0.3592 |

| 0.1126 | 9.0 | 10620 | 0.1105 | 0.3724 |

| 0.1152 | 10.0 | 11800 | 0.1135 | 0.4947 |

| 0.1159 | 11.0 | 12980 | 0.1082 | 0.5113 |

| 0.0953 | 12.0 | 14160 | 0.0820 | 0.6096 |

| 0.0798 | 13.0 | 15340 | 0.0688 | 0.6442 |

| 0.074 | 14.0 | 16520 | 0.0710 | 0.6738 |

| 0.0704 | 15.0 | 17700 | 0.0816 | 0.6736 |

| 0.0678 | 16.0 | 18880 | 0.0596 | 0.7142 |

| 0.0599 | 17.0 | 20060 | 0.0689 | 0.7187 |

| 0.0568 | 18.0 | 21240 | 0.0566 | 0.7308 |

| 0.0534 | 19.0 | 22420 | 0.0518 | 0.7340 |

| 0.0522 | 20.0 | 23600 | 0.0513 | 0.7389 |
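The Spearmanr column in the table above reports Spearman's rank correlation between predictions and labels. For readers unfamiliar with the metric, here is a minimal pure-Python sketch: it ranks both sequences (averaging the ranks of ties) and computes the Pearson correlation of the ranks. The function names are chosen for this example, and this is not necessarily the implementation used to produce the reported numbers.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# A monotone but non-linear relationship still gives a perfect rank correlation.
print(spearman_rho([1, 2, 3, 4], [1, 4, 9, 16]))
```

Because only the ordering matters, the metric rewards a model that ranks examples correctly even when its predicted values are poorly calibrated.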

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

|

joislosinghermind/lola-gunvolt

|

joislosinghermind

| 2024-02-07T23:20:15Z | 1 | 1 |

diffusers

|

[

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"template:sd-lora",

"base_model:runwayml/stable-diffusion-v1-5",

"base_model:adapter:runwayml/stable-diffusion-v1-5",

"license:unlicense",

"region:us"

] |

text-to-image

| 2024-02-07T23:20:12Z |

---

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

widget:

- text: "2d, masterpiece, best quality, anime, highly detailed face, highly detailed background, perfect lighting, lola, blue eyes, green_hair, cityscape, full_body, solo, solo focus, t-shirt, shorts, <lora:lola:1>"

output:

url: images/00492-abyssorangemix3AOM3_aom3a1b_3939236143.jpeg

base_model: runwayml/stable-diffusion-v1-5

instance_prompt: lola

license: unlicense

---

# lola-gunvolt

<Gallery />

## Trigger words

You should use `lola` to trigger the image generation.

## Download model

Weights for this model are available in Safetensors format.

[Download](/joislosinghermind/lola-gunvolt/tree/main) them in the Files & versions tab.

|

adriana98/whisper-large-v2-LORA-colab

|

adriana98

| 2024-02-07T22:37:45Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-02-07T20:17:40Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

PotatoOff/HamSter-0.2

|

PotatoOff

| 2024-02-07T22:09:31Z | 1,395 | 4 |

transformers

|

[

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"en",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-01-14T13:51:15Z |

---

license: apache-2.0

language:

- en

---

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>HamSter v0.2</title>

<link href="https://fonts.googleapis.com/css2?family=Quicksand:wght@400;500;600&display=swap" rel="stylesheet">

<style>

body {

font-family: 'Quicksand', sans-serif;

background-color: #1A202C;

color: #F7FAFC;

margin: 0;

padding: 20px;

font-size: 16px;

}

.container {

width: 100%;

margin: auto;

background-color: #2D3748;

padding: 20px;

border-radius: 10px;

box-shadow: 0 8px 16px rgba(0, 0, 0, 0.2);

}

.header {

display: flex;

align-items: flex-start;

gap: 20px;

}

.header h1 {

font-size: 20px;

color: #E2E8F0;

}

.header img {

flex-shrink: 0;

margin-left: 25%;

width: 50%;

max-width: 50%;

border-radius: 15px;

transition: filter 0.4s ease;

}

.header img:hover {

filter: blur(2px); /* Apply a stronger blur on hover */

}

.info {

flex-grow: 1;

background-color: #2D3748;

color: #CBD5E0;

font-family: 'Fira Code', 'JetBrains Mono', monospace;

padding: 15px;

border-radius: 10px;

box-shadow: 0 4px 6px rgba(0, 0, 0, 0.3);

font-size: 14px;

line-height: 1.7;

overflow-x: auto;

margin-top: 40px;

border: 2px solid #4A90E2;

transition: box-shadow 0.3s ease;

position: relative; /* Ensure proper stacking */

}

.info:hover {

box-shadow: 0 4px 13px rgba(0, 0, 0, 0.6), 0 0 24px rgba(74, 144, 226, 0.6);

}

.info-img {

width: 100%; /* Adjust width as per your layout needs */

max-width: 400px; /* Max width to ensure it doesn't get too large */

max-height: 100%; /* Adjust height proportionally */

border-radius: 10px;

box-shadow: 0 2px 4px rgba(0, 0, 0, 0.2);

margin-left: 5%; /* Align to the right */

margin-right: 0%; /* Keep some space from the text */

display: block; /* Ensure it's properly block level for margins to work */

float: right; /* Keep it to the right */

}

.button {

display: inline-block;

background-image: linear-gradient(145deg, #F96167 0%, #F0F2D7 100%);

color: #F0F0F0;

padding: 16px 24px; /* Increased padding for bigger buttons */

border: none;

border-radius: 10px;

cursor: pointer;

text-decoration: none;

margin-left: 7%;

transition: transform 0.3s ease, box-shadow 0.3s ease, background-image 0.3s ease, color 0.3s ease, border-radius 0.3s ease; /* Enhanced transitions */

font-weight: bold; /* Make the text bold */

box-shadow: 0 2px 15px rgba(0, 0, 0, 0.2); /* Subtle shadow for depth */

}

.button:hover {

background-image: linear-gradient(145deg, #FB1A3E 0%, #F38555 100%); /* Vibrant to light pink gradient */

transform: scale(1.1); /* Increase size for more emphasis */

box-shadow: 0 10px 30px rgba(249, 97, 103, 0.8); /* More pronounced glowing effect */

color: #FFFFFF; /* Brighten the text color slightly */

border-radius: 15px; /* Soften the corners a bit more for a pill-like effect */

}

@keyframes pulse {

0% {

transform: scale(1);

opacity: 1;

}

50% {

transform: scale(1.05);

opacity: 0.85;

}

100% {

transform: scale(1);

opacity: 1;

}

}

</style>

</head>

<body>

<div class="container">

<div class="header">

<div class="info" style="margin-top: 5px;">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64e7616c7df33432812e3c57/PieKyxOEVyn0zrrNqVec_.webp" alt="Image">

<h1 class="product-name" style="margin: 10px">HamSter 0.2</h1>

<p>

👋 An uncensored, roleplay-focused fine-tune of "mistralai/Mistral-7B-v0.2", made with the help of my team <a href="https://huggingface.co/ConvexAI" target="_blank">ConvexAI</a>.<br><br>

🚀 For optimal performance, I recommend using a detailed character card! Check out <a href="https://chub.ai" target="_blank">Chub.ai</a> for some character cards (note that the site hosts NSFW content).<br><br>

🤩 Uses the Llama2 prompt template with chat instructions.<br><br>

🔥 Fine-tuned with a newer dataset for even better results.<br><br>

😄 Next one will be more interesting!<br>

</p>

<div>

<a href="https://huggingface.co/collections/PotatoOff/hamster-02-65abc987a92a64ef5bb13148" class="button">HamSter 0.2 Quants</a>

<a href="https://discord.com/invite/9y7KxZxcZx" class="button">Discord Server</a>

</div>

</div>

</div>

<div style="overflow: hidden; position: relative">

<div class="info" style="overflow: hidden; margin-left: 0%; margin-top: 20px;">

<a href="https://cdn-uploads.huggingface.co/production/uploads/64e7616c7df33432812e3c57/RnozajhXn85WQYuqcVtnA.webp" target="_blank">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64e7616c7df33432812e3c57/RnozajhXn85WQYuqcVtnA.webp" alt="Roleplay Test" style="width: auto; max-width: 37%; max-height: 100%; border-radius: 10px; box-shadow: 0 2px 4px rgba(0, 0, 0, 0.2); margin-left: 0%; display: block; float: right;">

</a>

<h2 style="margin-top: 0;">I had good results with these parameters:</h2>

<ul style="margin-top: 0;">

<p>> temperature: 0.8</p>

<p>> top_p: 0.75</p>

<p>> min_p: 0</p>

<p>> top_k: 0</p>

<p>> repetition_penalty: 1.05</p>

</ul>

</div>

</div>

<div style="overflow: hidden; position: relative;">

<div class="info" style="overflow: hidden; margin-top: 20px;">

<h2 style="margin-top: 0;">BenchMarks on OpenLLM Leaderboard</h2>

<a href="https://cdn-uploads.huggingface.co/production/uploads/64e7616c7df33432812e3c57/KaeVaaLOYZb0k81BbQ2-m.png" target="_blank">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64e7616c7df33432812e3c57/KaeVaaLOYZb0k81BbQ2-m.png" alt="OPEN LLM BENCHMARK" class="info-img" style="border-radius: 10px">

</a>

<p>More details: <a href="https://huggingface.co/datasets/open-llm-leaderboard/details_PotatoOff__HamSter-0.2" target="_blank">HamSter-0.2 OpenLLM BenchMarks</a></p>

</div>

</div>

<div style="overflow: hidden; position: relative;">

<div class="info" style="overflow: hidden; margin-top: 20px;">

<h2 style="margin-top: 0;">BenchMarks on Ayumi's LLM Role Play & ERP Ranking</h2>

<a href="https://cdn-uploads.huggingface.co/production/uploads/64e7616c7df33432812e3c57/NSUmxUmDyhO9tJb-NZd8m.png" target="_blank">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64e7616c7df33432812e3c57/NSUmxUmDyhO9tJb-NZd8m.png" alt="Ayumi's LLM Role Play & ERP Ranking" class="info-img" style="width: 100%; height: auto;">

</a>

<p>More details: <a href="http://ayumi.m8geil.de/results_v3/model_resp_DL_20240114_7B-Q6_K_HamSter_0.2.html">Ayumi's LLM Role Play & ERP Ranking, HamSter-0.2 GGUF version Q6_K</a></p>

</div>

</div>

<div style="font-family: 'Arial', sans-serif; font-weight: bold; text-shadow: 0px 2px 4px rgba(0, 0, 0, 0.5);">

<p style="display: inline; font-size: 17px; margin: 0;">Have Fun</p>

<p style="display: inline; color: #E2E8F0; margin-bottom: 20px; animation: pulse 2s infinite; font-size: 17px;">💖</p>

</div>

</div>

</body>

</html>
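The sampling parameters recommended in the card above (temperature 0.8, top_p 0.75) can be illustrated with a small, self-contained sketch of temperature scaling followed by nucleus (top-p) filtering. The function names and toy logits are assumptions for this example. `min_p: 0` and `top_k: 0` are treated as disabled, which is the usual convention but is not stated in the card, and `repetition_penalty` is not modelled here.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities; temperatures below 1 sharpen the distribution."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nucleus_indices(probs, top_p):
    """Smallest set of most likely tokens whose cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return kept


def sample_token(logits, temperature=0.8, top_p=0.75, rng=random):
    """Sample one token index using temperature scaling and top-p filtering."""
    probs = softmax_with_temperature(logits, temperature)
    kept = nucleus_indices(probs, top_p)
    mass = sum(probs[i] for i in kept)
    r = rng.random() * mass  # draw within the renormalised nucleus
    acc = 0.0
    for i in kept:
        acc += probs[i]
        if r <= acc:
            return i
    return kept[-1]


toy_logits = [2.0, 1.0, 0.0, -1.0]  # hypothetical scores for a 4-token vocabulary
print(sample_token(toy_logits, rng=random.Random(0)))
```

With these toy logits only the two most likely tokens survive the top-p cut, so low-probability tokens are never sampled.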

|

ORromu/Reinforce-CartPole-v1

|

ORromu

| 2024-02-07T22:01:43Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2024-02-07T22:01:34Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction
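For context, REINFORCE is a Monte Carlo policy-gradient method: each action's log-probability is weighted by the discounted return that followed it. The sketch below shows only that return computation in pure Python. The discount factor, the optional standardisation step, and the function names are assumptions for illustration and are not taken from this model's training code.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every step of one episode."""
    returns = []
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    returns.reverse()
    return returns


def standardize(values, eps=1e-8):
    """Shift to zero mean and scale to unit variance, a common variance-reduction trick."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = var ** 0.5
    return [(v - mean) / (std + eps) for v in values]


# CartPole gives +1 reward per step; a short 3-step episode with gamma=0.5.
print(discounted_returns([1.0, 1.0, 1.0], gamma=0.5))
```

CartPole-v1 episodes are capped at 500 steps with a reward of +1 per step, so the reported mean reward of 500.00 +/- 0.00 means every evaluation episode ran to the cap.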

|

VanoInvestigations/bertin-gpt-j-6B_4bit_27

|

VanoInvestigations

| 2024-02-07T21:44:17Z | 2 | 0 |

peft

|

[

"peft",

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:bertin-project/bertin-gpt-j-6B",

"base_model:adapter:bertin-project/bertin-gpt-j-6B",

"license:apache-2.0",

"region:us"

] | null | 2024-02-02T12:04:19Z |

---

license: apache-2.0

library_name: peft

tags:

- generated_from_trainer

base_model: bertin-project/bertin-gpt-j-6B

model-index:

- name: bertin-gpt-j-6B_4bit_27

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bertin-gpt-j-6B_4bit_27

This model is a fine-tuned version of [bertin-project/bertin-gpt-j-6B](https://huggingface.co/bertin-project/bertin-gpt-j-6B) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1.41e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- PEFT 0.7.1

- Transformers 4.37.2

- Pytorch 2.2.0+cu121

- Datasets 2.14.6

- Tokenizers 0.15.1

|

hanspeterlyngsoeraaschoujensen/deepseek-math-7b-instruct-GPTQ

|

hanspeterlyngsoeraaschoujensen

| 2024-02-07T21:18:11Z | 8 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"gptq",

"region:us"

] |

text-generation

| 2024-02-07T21:16:32Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

Jimmyhd/mistral7btimebookFinetune50rows

|

Jimmyhd

| 2024-02-07T21:13:25Z | 4 | 0 |

transformers

|

[

"transformers",

"safetensors",

"mistral",

"text-generation",

"autotrain",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-02-07T21:04:28Z |

---

tags:

- autotrain

- text-generation

widget:

- text: "I love AutoTrain because "

license: other

---

# Model Trained Using AutoTrain

This model was trained using AutoTrain. For more information, please visit [AutoTrain](https://hf.co/docs/autotrain).

# Usage

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_path = "PATH_TO_THIS_REPO"

tokenizer = AutoTokenizer.from_pretrained(model_path)

model = AutoModelForCausalLM.from_pretrained(

model_path,

device_map="auto",

torch_dtype='auto'

).eval()

# Prompt content: "hi"

messages = [

{"role": "user", "content": "hi"}

]

input_ids = tokenizer.apply_chat_template(conversation=messages, tokenize=True, add_generation_prompt=True, return_tensors='pt')

output_ids = model.generate(input_ids.to(model.device))

response = tokenizer.decode(output_ids[0][input_ids.shape[1]:], skip_special_tokens=True)

# Model response: "Hello! How can I assist you today?"

print(response)

```

|

andrewatef/MoMask-test

|

andrewatef

| 2024-02-07T21:12:35Z | 0 | 0 | null |

[

"arxiv:2312.00063",

"region:us"

] | null | 2024-02-07T13:33:10Z |

---

title: MoMask

emoji: 🎭

colorFrom: pink

colorTo: purple

sdk: gradio

sdk_version: 3.24.1

app_file: app.py

pinned: false

---

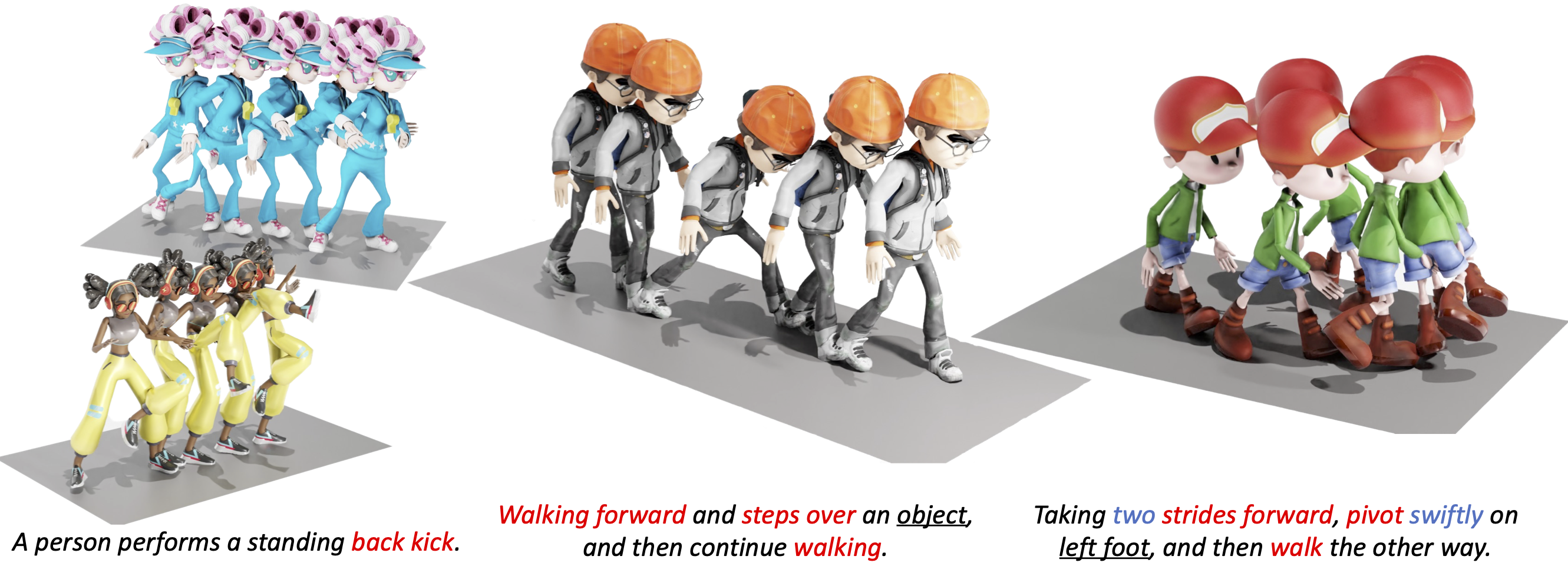

# MoMask: Generative Masked Modeling of 3D Human Motions

## [[Project Page]](https://ericguo5513.github.io/momask) [[Paper]](https://arxiv.org/abs/2312.00063)

If you find our code or paper helpful, please consider citing:

```

@article{guo2023momask,

title={MoMask: Generative Masked Modeling of 3D Human Motions},

author={Chuan Guo and Yuxuan Mu and Muhammad Gohar Javed and Sen Wang and Li Cheng},

year={2023},

eprint={2312.00063},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

## :postbox: News

📢 **2023-12-19** --- Release scripts for temporal inpainting.

📢 **2023-12-15** --- Release code and models for MoMask, including training/eval/generation scripts.

📢 **2023-11-29** --- Initialized the webpage and git project.

## :round_pushpin: Get You Ready

<details>

### 1. Conda Environment

```

conda env create -f environment.yml

conda activate momask

pip install git+https://github.com/openai/CLIP.git

```

We tested our code on Python 3.7.13 and PyTorch 1.7.1.

### 2. Models and Dependencies

#### Download Pre-trained Models

```

bash prepare/download_models.sh

```

#### Download Evaluation Models and GloVe

For evaluation only.

```

bash prepare/download_evaluator.sh

bash prepare/download_glove.sh

```

#### Troubleshooting

If gdown fails with the error "Cannot retrieve the public link of the file. You may need to change the permission to 'Anyone with the link', or have had many accesses", try running `pip install --upgrade --no-cache-dir gdown`, as suggested in https://github.com/wkentaro/gdown/issues/43.

#### (Optional) Download Manually

Visit [[Google Drive]](https://drive.google.com/drive/folders/1b3GnAbERH8jAoO5mdWgZhyxHB73n23sK?usp=drive_link) to download the models and evaluators manually.

### 3. Get Data

You have two options here:

* **Skip getting data**, if you just want to generate motions using your *own* descriptions.

* **Get full data**, if you want to *re-train* and *evaluate* the model.

**(a). Full data (text + motion)**

**HumanML3D** - Follow the instructions in [HumanML3D](https://github.com/EricGuo5513/HumanML3D.git), then copy the resulting dataset to our repository:

```

cp -r ../HumanML3D/HumanML3D ./dataset/HumanML3D

```

**KIT** - Download from [HumanML3D](https://github.com/EricGuo5513/HumanML3D.git), then place the result in `./dataset/KIT-ML`.


</details>

## :rocket: Demo

<details>

### (a) Generate from a single prompt

```

python gen_t2m.py --gpu_id 1 --ext exp1 --text_prompt "A person is running on a treadmill."

```

### (b) Generate from a prompt file

An example prompt file is given in `./assets/text_prompt.txt`. Each line must follow the format `<text description>#<motion length>`. Motion length is the number of poses; it must be an integer and will be rounded to a multiple of 4. In our work, motions run at 20 fps.

If you write `<text description>#NA`, our model will determine the length. Note that once **one** line uses NA, all the others are treated as **NA** automatically.

```

python gen_t2m.py --gpu_id 1 --ext exp2 --text_path ./assets/text_prompt.txt

```
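The prompt file format can be checked before running generation. The sketch below is not part of the MoMask code; the function names are hypothetical, and it assumes that lengths are rounded *down* to a multiple of 4, which the README does not state explicitly.

```python
from typing import List, Optional, Tuple

FPS = 20   # frame rate stated in this README
UNIT = 4   # motion lengths are aligned to multiples of 4 poses


def parse_prompt_line(line: str) -> Tuple[str, Optional[int]]:
    """Split '<text description>#<motion length>' into (text, length or None)."""
    text, sep, raw_len = line.strip().rpartition("#")
    if not sep:
        raise ValueError(f"missing '#<motion length>' in line: {line!r}")
    raw_len = raw_len.strip()
    if raw_len.upper() == "NA":
        return text.strip(), None
    # Assumption: round down to the nearest multiple of 4.
    return text.strip(), (int(raw_len) // UNIT) * UNIT


def parse_prompts(lines: List[str]) -> List[Tuple[str, Optional[int]]]:
    """Parse all non-empty lines; a single NA makes every length NA."""
    parsed = [parse_prompt_line(line) for line in lines if line.strip()]
    if any(length is None for _, length in parsed):
        parsed = [(text, None) for text, _ in parsed]
    return parsed


def duration_seconds(n_poses: int) -> float:
    """Convert a number of poses to seconds at 20 fps."""
    return n_poses / FPS
```

For example, a line requesting 120 poses corresponds to a 6-second motion at 20 fps.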

A few more parameters you may be interested in:

* `--repeat_times`: number of replications for generation, default `1`.

* `--motion_length`: specify the number of poses for generation, only applicable in (a).

The output files are stored under the folder `./generation/<ext>/`. They are:

* `numpy files`: generated motions with shape of (nframe, 22, 3), under subfolder `./joints`.

* `video files`: stick figure animation in mp4 format, under subfolder `./animation`.

* `bvh files`: bvh files of the generated motion, under subfolder `./animation`.

We also apply naive foot IK to the generated motions; see the files with the suffix `_ik`. It sometimes works well and sometimes fails.
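To inspect a generated motion, the numpy output can be loaded directly. This is a minimal sketch rather than part of the MoMask code: `summarize_joints` is a hypothetical helper, the file path is whatever file you find under the `./joints` subfolder, and it assumes the file holds a single array of shape (nframe, 22, 3) played back at 20 fps as described above.

```python
import numpy as np

FPS = 20  # frame rate stated in this README


def summarize_joints(path: str) -> dict:
    """Load a generated motion and report its frame count and duration."""
    joints = np.load(path)
    # Expected layout: (nframe, 22 joints, 3 coordinates).
    if joints.ndim != 3 or joints.shape[1:] != (22, 3):
        raise ValueError(f"expected shape (nframe, 22, 3), got {joints.shape}")
    nframe = int(joints.shape[0])
    return {"nframe": nframe, "seconds": nframe / FPS}
```

Calling `summarize_joints` on a file from `./generation/<ext>/joints/` returns the number of poses and the clip length in seconds.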

</details>

## :dancers: Visualization

<details>

All the animations are manually rendered in Blender. We use the characters from [Mixamo](https://www.mixamo.com/#/). You need to download the characters in T-Pose with skeleton.

### Retargeting

For retargeting, we found that Rokoko usually leads to large errors on the feet. In contrast, [keemap.rig.transfer](https://github.com/nkeeline/Keemap-Blender-Rig-ReTargeting-Addon/releases) gives more precise retargeting. You can watch the [tutorial](https://www.youtube.com/watch?v=EG-VCMkVpxg) here.

Follow these steps:

* Download keemap.rig.transfer from GitHub, and install it in Blender.