| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

pfunk/CartPole-v1-DQPN_freq_150-seed1

|

pfunk

| 2023-03-18T14:11:08Z | 0 | 0 |

cleanrl

|

[

"cleanrl",

"tensorboard",

"CartPole-v1",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T14:11:05Z |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:
- name: DQPN_freq
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 495.60 +/- 0.00
      name: mean_reward
      verified: false

---

# (CleanRL) **DQPN_freq** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_freq agent playing CartPole-v1.

The model was trained using [CleanRL](https://github.com/vwxyzjn/cleanrl), and the most up-to-date training code can be found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_freq_150.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_freq_150]"

python -m cleanrl_utils.enjoy --exp-name DQPN_freq_150 --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_150-seed1/raw/main/dqpn_freq.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_150-seed1/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_150-seed1/raw/main/poetry.lock

poetry install --all-extras

python dqpn_freq.py --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk --exp-name DQPN_freq_150 --policy-network-frequency 150 --seed 1

```

# Hyperparameters

```python

{'alg_type': 'dqpn_freq.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'env_id': 'CartPole-v1',

'exp_name': 'DQPN_freq_150',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_network_frequency': 150,

'policy_tau': 1.0,

'save_model': True,

'seed': 1,

'start_e': 1.0,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```
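The `start_e`, `end_e`, `exploration_fraction`, and `total_timesteps` entries above describe an epsilon-greedy exploration schedule. The sketch below shows how such values are typically combined. It assumes that `dqpn_freq.py` uses the same linear decay as CleanRL's reference DQN script; the script itself is not reproduced here, so the function name `linear_schedule` and its exact form are assumptions.

```python
def linear_schedule(start_e: float, end_e: float, duration: int, t: int) -> float:
    """Linearly anneal epsilon from start_e to end_e over `duration` steps, then hold at end_e."""
    slope = (end_e - start_e) / duration
    return max(slope * t + start_e, end_e)


# Values copied from the hyperparameter dump above.
START_E, END_E = 1.0, 0.1
EXPLORATION_FRACTION, TOTAL_TIMESTEPS = 0.2, 500_000

# Number of environment steps over which epsilon decays (assumed convention).
DECAY_STEPS = int(EXPLORATION_FRACTION * TOTAL_TIMESTEPS)

for step in (0, 50_000, 100_000, 400_000):
    eps = linear_schedule(START_E, END_E, DECAY_STEPS, step)
    print(f"step={step:>7}  epsilon={eps:.2f}")
```

Under these assumptions, exploration decays during the first 20% of training and stays at `end_e` afterwards.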

|

pfunk/CartPole-v1-DQPN_freq_50-seed2

|

pfunk

| 2023-03-18T14:09:56Z | 0 | 0 |

cleanrl

|

[

"cleanrl",

"tensorboard",

"CartPole-v1",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T14:09:52Z |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:
- name: DQPN_freq
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 26.97 +/- 0.00
      name: mean_reward
      verified: false

---

# (CleanRL) **DQPN_freq** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_freq agent playing CartPole-v1.

The model was trained using [CleanRL](https://github.com/vwxyzjn/cleanrl), and the most up-to-date training code can be found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_freq_50.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_freq_50]"

python -m cleanrl_utils.enjoy --exp-name DQPN_freq_50 --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed2/raw/main/dqpn_freq.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed2/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed2/raw/main/poetry.lock

poetry install --all-extras

python dqpn_freq.py --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk --exp-name DQPN_freq_50 --policy-network-frequency 50 --seed 2

```

# Hyperparameters

```python

{'alg_type': 'dqpn_freq.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'env_id': 'CartPole-v1',

'exp_name': 'DQPN_freq_50',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_network_frequency': 50,

'policy_tau': 1.0,

'save_model': True,

'seed': 2,

'start_e': 1.0,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```
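The `target_tau` / `target_network_frequency` and `policy_tau` / `policy_network_frequency` pairs above look like the usual periodic network-synchronisation settings. The sketch below illustrates that convention with plain Python lists standing in for network weights. It is not taken from `dqpn_freq.py`; the helper names `soft_update` and `should_sync` are hypothetical, and treating the `policy_*` pair like the `target_*` pair is an assumption.

```python
from typing import List


def soft_update(target: List[float], source: List[float], tau: float) -> List[float]:
    """Polyak update: move each target weight a fraction `tau` toward the source weight."""
    return [tau * s + (1.0 - tau) * t for s, t in zip(source, target)]


def should_sync(global_step: int, frequency: int) -> bool:
    """True on the steps where a periodic synchronisation would fire."""
    return global_step % frequency == 0


online = [0.5, -1.0, 2.0]  # stand-in for the online network's weights
target = [0.0, 0.0, 0.0]   # stand-in for the lagging network's weights

# With tau = 1.0, as in the dump above, the update reduces to a plain copy.
assert soft_update(target, online, tau=1.0) == online

# A smaller tau, shown only for contrast, blends the two sets of weights.
print(soft_update(target, online, tau=0.5))

# Steps in the first 100 at which a frequency-20 sync would trigger.
print([step for step in range(0, 101) if should_sync(step, 20)])
```

With both `tau` values at 1.0, the only thing that differs between these runs is how often the copies happen, which is what `--policy-network-frequency` varies.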

|

pfunk/CartPole-v1-DQPN_freq_50-seed1

|

pfunk

| 2023-03-18T14:09:55Z | 0 | 0 |

cleanrl

|

[

"cleanrl",

"tensorboard",

"CartPole-v1",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T14:09:52Z |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:
- name: DQPN_freq
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 74.65 +/- 0.00
      name: mean_reward
      verified: false

---

# (CleanRL) **DQPN_freq** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_freq agent playing CartPole-v1.

The model was trained using [CleanRL](https://github.com/vwxyzjn/cleanrl), and the most up-to-date training code can be found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_freq_50.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_freq_50]"

python -m cleanrl_utils.enjoy --exp-name DQPN_freq_50 --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed1/raw/main/dqpn_freq.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed1/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed1/raw/main/poetry.lock

poetry install --all-extras

python dqpn_freq.py --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk --exp-name DQPN_freq_50 --policy-network-frequency 50 --seed 1

```

# Hyperparameters

```python

{'alg_type': 'dqpn_freq.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'env_id': 'CartPole-v1',

'exp_name': 'DQPN_freq_50',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_network_frequency': 50,

'policy_tau': 1.0,

'save_model': True,

'seed': 1,

'start_e': 1.0,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```

|

pfunk/CartPole-v1-DQPN_freq_50-seed4

|

pfunk

| 2023-03-18T14:09:54Z | 0 | 0 |

cleanrl

|

[

"cleanrl",

"tensorboard",

"CartPole-v1",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T14:09:51Z |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:
- name: DQPN_freq
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 498.88 +/- 0.00
      name: mean_reward
      verified: false

---

# (CleanRL) **DQPN_freq** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_freq agent playing CartPole-v1.

The model was trained using [CleanRL](https://github.com/vwxyzjn/cleanrl), and the most up-to-date training code can be found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_freq_50.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_freq_50]"

python -m cleanrl_utils.enjoy --exp-name DQPN_freq_50 --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed4/raw/main/dqpn_freq.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed4/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_50-seed4/raw/main/poetry.lock

poetry install --all-extras

python dqpn_freq.py --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk --exp-name DQPN_freq_50 --policy-network-frequency 50 --seed 4

```

# Hyperparameters

```python

{'alg_type': 'dqpn_freq.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'env_id': 'CartPole-v1',

'exp_name': 'DQPN_freq_50',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_network_frequency': 50,

'policy_tau': 1.0,

'save_model': True,

'seed': 4,

'start_e': 1.0,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```

|

pfunk/CartPole-v1-DQPN_freq_100-seed4

|

pfunk

| 2023-03-18T14:08:22Z | 0 | 0 |

cleanrl

|

[

"cleanrl",

"tensorboard",

"CartPole-v1",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T14:08:19Z |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:
- name: DQPN_freq
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 55.21 +/- 0.00
      name: mean_reward
      verified: false

---

# (CleanRL) **DQPN_freq** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_freq agent playing CartPole-v1.

The model was trained using [CleanRL](https://github.com/vwxyzjn/cleanrl), and the most up-to-date training code can be found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_freq_100.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_freq_100]"

python -m cleanrl_utils.enjoy --exp-name DQPN_freq_100 --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed4/raw/main/dqpn_freq.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed4/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed4/raw/main/poetry.lock

poetry install --all-extras

python dqpn_freq.py --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk --exp-name DQPN_freq_100 --policy-network-frequency 100 --seed 4

```

# Hyperparameters

```python

{'alg_type': 'dqpn_freq.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'env_id': 'CartPole-v1',

'exp_name': 'DQPN_freq_100',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_network_frequency': 100,

'policy_tau': 1.0,

'save_model': True,

'seed': 4,

'start_e': 1.0,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```

|

pfunk/CartPole-v1-DQPN_freq_100-seed3

|

pfunk

| 2023-03-18T14:08:13Z | 0 | 0 |

cleanrl

|

[

"cleanrl",

"tensorboard",

"CartPole-v1",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T14:08:10Z |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:
- name: DQPN_freq
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 500.00 +/- 0.00
      name: mean_reward
      verified: false

---

# (CleanRL) **DQPN_freq** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_freq agent playing CartPole-v1.

The model was trained using [CleanRL](https://github.com/vwxyzjn/cleanrl), and the most up-to-date training code can be found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_freq_100.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_freq_100]"

python -m cleanrl_utils.enjoy --exp-name DQPN_freq_100 --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed3/raw/main/dqpn_freq.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed3/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed3/raw/main/poetry.lock

poetry install --all-extras

python dqpn_freq.py --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk --exp-name DQPN_freq_100 --policy-network-frequency 100 --seed 3

```

# Hyperparameters

```python

{'alg_type': 'dqpn_freq.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'env_id': 'CartPole-v1',

'exp_name': 'DQPN_freq_100',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_network_frequency': 100,

'policy_tau': 1.0,

'save_model': True,

'seed': 3,

'start_e': 1.0,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```

|

pfunk/CartPole-v1-DQPN_freq_100-seed1

|

pfunk

| 2023-03-18T14:07:44Z | 0 | 0 |

cleanrl

|

[

"cleanrl",

"tensorboard",

"CartPole-v1",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T14:07:41Z |

---

tags:

- CartPole-v1

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

library_name: cleanrl

model-index:
- name: DQPN_freq
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 500.00 +/- 0.00
      name: mean_reward
      verified: false

---

# (CleanRL) **DQPN_freq** Agent Playing **CartPole-v1**

This is a trained model of a DQPN_freq agent playing CartPole-v1.

The model was trained using [CleanRL](https://github.com/vwxyzjn/cleanrl), and the most up-to-date training code can be found [here](https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/DQPN_freq_100.py).

## Get Started

To use this model, please install the `cleanrl` package with the following command:

```

pip install "cleanrl[DQPN_freq_100]"

python -m cleanrl_utils.enjoy --exp-name DQPN_freq_100 --env-id CartPole-v1

```

Please refer to the [documentation](https://docs.cleanrl.dev/get-started/zoo/) for more detail.

## Command to reproduce the training

```bash

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed1/raw/main/dqpn_freq.py

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed1/raw/main/pyproject.toml

curl -OL https://huggingface.co/pfunk/CartPole-v1-DQPN_freq_100-seed1/raw/main/poetry.lock

poetry install --all-extras

python dqpn_freq.py --track --wandb-entity pfunk --wandb-project-name dqpn --capture-video true --save-model true --upload-model true --hf-entity pfunk --exp-name DQPN_freq_100 --policy-network-frequency 100 --seed 1

```

# Hyperparameters

```python

{'alg_type': 'dqpn_freq.py',

'batch_size': 256,

'buffer_size': 300000,

'capture_video': True,

'cuda': True,

'end_e': 0.1,

'env_id': 'CartPole-v1',

'exp_name': 'DQPN_freq_100',

'exploration_fraction': 0.2,

'gamma': 1.0,

'hf_entity': 'pfunk',

'learning_rate': 0.0001,

'learning_starts': 1000,

'policy_network_frequency': 100,

'policy_tau': 1.0,

'save_model': True,

'seed': 1,

'start_e': 1.0,

'target_network_frequency': 20,

'target_tau': 1.0,

'torch_deterministic': True,

'total_timesteps': 500000,

'track': True,

'train_frequency': 1,

'upload_model': True,

'wandb_entity': 'pfunk',

'wandb_project_name': 'dqpn'}

```

|

Jackmin108/a2c-PandaReachDense-v2

|

Jackmin108

| 2023-03-18T14:03:34Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"PandaReachDense-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T07:53:39Z |

---

library_name: stable-baselines3

tags:

- PandaReachDense-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: A2C
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: PandaReachDense-v2
      type: PandaReachDense-v2
    metrics:
    - type: mean_reward
      value: -1.59 +/- 0.50
      name: mean_reward
      verified: false

---

# **A2C** Agent playing **PandaReachDense-v2**

This is a trained model of an **A2C** agent playing **PandaReachDense-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

CVPR/DualStyleGAN

|

CVPR

| 2023-03-18T13:53:08Z | 0 | 11 |

pytorch

|

[

"pytorch",

"style-transfer",

"face-stylization",

"dataset:cartoon",

"dataset:caricature",

"dataset:anime",

"dataset:pixar",

"dataset:slamdunk",

"dataset:arcane",

"dataset:comic",

"arxiv:2203.13248",

"license:mit",

"region:us"

] | null | 2022-06-12T13:29:24Z |

---

license: mit

library_name: pytorch

tags:

- style-transfer

- face-stylization

datasets:

- cartoon

- caricature

- anime

- pixar

- slamdunk

- arcane

- comic

---

## Model Details

This system provides a web demo for the following paper:

**Pastiche Master: Exemplar-Based High-Resolution Portrait Style Transfer (CVPR 2022)**

- Algorithm developed by: Shuai Yang, Liming Jiang, Ziwei Liu and Chen Change Loy

- Web demo developed by: [hysts](https://huggingface.co/hysts)

- Resources for more information:

- [Project Page](https://www.mmlab-ntu.com/project/dualstylegan/)

- [Research Paper](https://arxiv.org/abs/2203.13248)

- [GitHub Repo](https://github.com/williamyang1991/DualStyleGAN)

**Abstract**

> Recent studies on StyleGAN show high performance on artistic portrait generation by transfer learning with limited data. In this paper, we explore more challenging exemplar-based high-resolution portrait style transfer by introducing a novel DualStyleGAN with flexible control of dual styles of the original face domain and the extended artistic portrait domain. Different from StyleGAN, DualStyleGAN provides a natural way of style transfer by characterizing the content and style of a portrait with an intrinsic style path and a new extrinsic style path, respectively. The delicately designed extrinsic style path enables our model to modulate both the color and complex structural styles hierarchically to precisely pastiche the style example. Furthermore, a novel progressive fine-tuning scheme is introduced to smoothly transform the generative space of the model to the target domain, even with the above modifications on the network architecture. Experiments demonstrate the superiority of DualStyleGAN over state-of-the-art methods in high-quality portrait style transfer and flexible style control.

## Citation Information

```bibtex

@inproceedings{yang2022Pastiche,

author = {Yang, Shuai and Jiang, Liming and Liu, Ziwei and Loy, Chen Change},

title = {Pastiche Master: Exemplar-Based High-Resolution Portrait Style Transfer},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year = {2022}

}

```

|

coreml-community/coreml-ModernArtStyle-v10

|

coreml-community

| 2023-03-18T13:43:12Z | 0 | 3 | null |

[

"coreml",

"stable-diffusion",

"text-to-image",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2023-03-18T05:29:49Z |

---

license: creativeml-openrail-m

tags:

- coreml

- stable-diffusion

- text-to-image

---

# Core ML Converted Model:

- This model was converted to [Core ML for use on Apple Silicon devices](https://github.com/apple/ml-stable-diffusion). Conversion instructions can be found [here](https://github.com/godly-devotion/MochiDiffusion/wiki/How-to-convert-ckpt-or-safetensors-files-to-Core-ML).<br>

- Provide the model to an app such as Mochi Diffusion ([GitHub](https://github.com/godly-devotion/MochiDiffusion) - [Discord](https://discord.gg/x2kartzxGv)) to generate images.<br>

- `split_einsum` version is compatible with all compute unit options including Neural Engine.<br>

- `original` version is only compatible with CPU & GPU option.<br>

- Custom resolution versions are tagged accordingly.<br>

- The `vae-ft-mse-840000-ema-pruned.ckpt` vae is embedded into the model.<br>

- Descriptions are posted as-is from original model source. Not all features and/or results may be available in CoreML format.<br>

- This model was converted with `vae-encoder` for i2i.<br>

- This model is fp16.<br>

- This model does not have the [unet split into chunks](https://github.com/apple/ml-stable-diffusion#-converting-models-to-core-ml).<br>

- This model does not include a safety checker (for NSFW content).<br>

# ModernArtStyle-v10:

Source(s): [Hugging Face](https://huggingface.co/theintuitiveye/modernartstyle) - [CivitAI](https://civitai.com/models/3519/modernartstyle)

You can use this model to generate images in a modern-art style.

## Dataset

~100 modern art images.

## Usage

Use the Stability AI VAE for better results.

For the majority of prompts, the trigger phrase is not required; use *"modernartst"* to force the style.

*samples*

Help us to be able to create models of professional standards. Consider supporting us on [Patreon](https://www.patreon.com/intuitiveai) / [Ko-fi](https://ko-fi.com/intuitiveai) / [Paypal](https://www.paypal.com/paypalme/theintuitiveye)

## *Demo*

We support a [Gradio](https://github.com/gradio-app/gradio) Web UI to run ModernArt Diffusion:

[theintuitiveye/modernartstyle Space](https://huggingface.co/spaces/theintuitiveye/modernartstyle)

## *License*

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage. The CreativeML OpenRAIL License specifies:

- You can't use the model to deliberately produce or share illegal or harmful outputs or content.

- The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license.

- You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware that you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M with all your users (please read the license entirely and carefully). Please read the full license [here](https://huggingface.co/spaces/CompVis/stable-diffusion-license).

|

takinai/Tifa_meenow

|

takinai

| 2023-03-18T13:35:01Z | 0 | 2 | null |

[

"stable_diffusion",

"lora",

"region:us"

] | null | 2023-03-17T18:11:04Z |

---

tags:

- stable_diffusion

- lora

---

The source of the models is listed below. Please check the original licenses from the source.

https://civitai.com/models/11367

|

Feldi/ppoSelf-LunarLender-v2

|

Feldi

| 2023-03-18T13:31:49Z | 0 | 0 | null |

[

"tensorboard",

"LunarLander-v2",

"ppo",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"deep-rl-course",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T13:31:42Z |

---

tags:

- LunarLander-v2

- ppo

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

- deep-rl-course

model-index:
- name: PPO
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: LunarLander-v2
      type: LunarLander-v2
    metrics:
    - type: mean_reward
      value: -106.81 +/- 68.67
      name: mean_reward
      verified: false

---

# PPO Agent Playing LunarLander-v2

This is a trained model of a PPO agent playing LunarLander-v2.

# Hyperparameters

```python

{'exp_name': 'ppo',
 'seed': 1,
 'torch_deterministic': True,
 'cuda': True,
 'track': False,
 'wandb_project_name': 'cleanRL',
 'wandb_entity': None,
 'capture_video': False,
 'env_id': 'LunarLander-v2',
 'total_timesteps': 50000,
 'learning_rate': 0.00025,
 'num_envs': 4,
 'num_steps': 128,
 'anneal_lr': True,
 'gae': True,
 'gamma': 0.99,
 'gae_lambda': 0.95,
 'num_minibatches': 4,
 'update_epochs': 4,
 'norm_adv': True,
 'clip_coef': 0.2,
 'clip_vloss': True,
 'ent_coef': 0.01,
 'vf_coef': 0.5,
 'max_grad_norm': 0.5,
 'target_kl': None,
 'repo_id': 'Feldi/ppoSelf-LunarLender-v2',
 'batch_size': 512,
 'minibatch_size': 128}

```
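The `batch_size` and `minibatch_size` entries above are consistent with being derived from `num_envs`, `num_steps`, and `num_minibatches`. The arithmetic below is a small worked check. It assumes the CleanRL-style conventions suggested by the `wandb_project_name` value; the training script itself is not shown, so the derivation of `num_updates` in particular is an assumption.

```python
# Values copied from the hyperparameter dump above.
num_envs = 4
num_steps = 128
num_minibatches = 4
total_timesteps = 50_000

# One rollout collects num_steps transitions from each parallel environment.
batch_size = num_envs * num_steps

# Each rollout is split into equally sized minibatches for the gradient steps.
minibatch_size = batch_size // num_minibatches

# Number of complete rollout/update cycles that fit in the step budget (assumed convention).
num_updates = total_timesteps // batch_size

print(f"batch_size={batch_size}, minibatch_size={minibatch_size}, num_updates={num_updates}")
print(f"environment steps actually used: {num_updates * batch_size}")
```

A budget of 50,000 steps is small for LunarLander-v2, which may explain the low mean reward reported in the metadata.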

|

takinai/SamDoesArts_Sam_Yang_Style_LoRA

|

takinai

| 2023-03-18T13:23:52Z | 0 | 4 | null |

[

"stable_diffusion",

"lora",

"region:us"

] | null | 2023-03-18T13:19:05Z |

---

tags:

- stable_diffusion

- lora

---

The source of the models is listed below. Please check the original licenses from the source.

https://civitai.com/models/6638

|

marinone94/whisper-medium-nordic

|

marinone94

| 2023-03-18T13:23:18Z | 89 | 2 |

transformers

|

[

"transformers",

"pytorch",

"whisper",

"automatic-speech-recognition",

"whisper-event",

"generated_from_trainer",

"hf-asr-leaderboard",

"sv",

"no",

"da",

"multilingual",

"dataset:mozilla-foundation/common_voice_11_0",

"dataset:babelbox/babelbox_voice",

"dataset:NbAiLab/NST",

"dataset:NbAiLab/NPSC",

"dataset:google/fleurs",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-12-17T07:18:20Z |

---

language:

- sv

- 'no'

- da

- multilingual

license: apache-2.0

tags:

- whisper-event

- generated_from_trainer

- hf-asr-leaderboard

datasets:

- mozilla-foundation/common_voice_11_0

- babelbox/babelbox_voice

- NbAiLab/NST

- NbAiLab/NPSC

- google/fleurs

metrics:

- wer

model-index:
- name: Whisper Medium Nordic
  results:
  - task:
      type: automatic-speech-recognition
      name: Automatic Speech Recognition
    dataset:
      name: mozilla-foundation/common_voice_11_0
      type: mozilla-foundation/common_voice_11_0
      config: sv-SE
      split: test
    metrics:
    - type: wer
      value: 11.31
      name: Wer
    - type: wer
      value: 14.86
      name: Wer
    - type: wer
      value: 37.02
      name: Wer

---

# Whisper Medium Nordic

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the [mozilla-foundation/common_voice_11_0](https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0) (sv-SE, da, nn-NO), the [babelbox/babelbox_voice](https://huggingface.co/datasets/babelbox/babelbox_voice) (Swedish radio), the [NbAiLab/NST](https://huggingface.co/datasets/NbAiLab/NST) (Norwegian radio), the [NbAiLab/NPSC](https://huggingface.co/datasets/NbAiLab/NPSC) (Norwegian parliament) and the [google/fleurs](https://huggingface.co/datasets/google/fleurs) (sv_se, da_dk, nb_no) datasets. The goal is to leverage transfer learning across Nordic languages, which have strong similarities.

It achieves the following results on the Common Voice Swedish test set:

- Loss: 0.2129

- Wer: 11.3079
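For readers unfamiliar with the metric, word error rate (WER) is the word-level edit distance between the reference and the hypothesis, divided by the number of reference words and reported here as a percentage. The sketch below is a minimal pure-Python illustration. It is not the evaluation code used for this model, and it ignores any text normalisation that the real evaluation may have applied; the function name `wer` is chosen for this example only.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent: word-level Levenshtein distance / reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the processed reference prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # turning i reference words into an empty hypothesis takes i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return 100.0 * prev[-1] / len(ref)


print(wer("jag heter anna", "jag heter anna"))  # identical strings
print(wer("a b c d", "a x c"))                  # one substitution and one deletion
```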

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

Please note that a bug during training prevented us from evaluating WER correctly.

Validation loss suggests we started overfitting after 5000/6000 steps.

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-06

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- training_steps: 10000

- mixed_precision_training: Native AMP
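The combination of `lr_scheduler_type: linear`, `lr_scheduler_warmup_ratio: 0.1`, and `training_steps: 10000` implies a learning rate that ramps up and then decays linearly. The sketch below shows the shape this sketch assumes: a linear warmup to the peak rate, followed by a linear decay to zero. The function `linear_warmup_decay` is a hypothetical stand-in written for illustration, not the Trainer's own scheduler.

```python
def linear_warmup_decay(step: int, peak_lr: float, total_steps: int, warmup_ratio: float) -> float:
    """Learning rate at `step`: linear ramp to peak_lr, then linear decay to zero."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


# Values copied from the hyperparameter list above.
PEAK_LR, TOTAL_STEPS, WARMUP_RATIO = 3e-06, 10_000, 0.1

for step in (0, 500, 1_000, 5_500, 10_000):
    lr = linear_warmup_decay(step, PEAK_LR, TOTAL_STEPS, WARMUP_RATIO)
    print(f"step={step:>6}  lr={lr:.2e}")
```

Under this assumption the peak rate is reached at step 1000, and by step 5000 to 6000, where the validation loss bottoms out, the rate has dropped to roughly half of its peak.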

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-------:|:--------:|:---------------:|:-----------:|

| 0.3056 | 0.1 | 1000 | 0.2670 | ~~99.9221~~ |

| 0.16 | 0.2 | 2000 | 0.2322 | ~~99.6640~~ |

| 0.1309 | 0.3 | 3000 | 0.2152 | ~~98.9759~~ |

| 0.097 | 0.4 | 4000 | 0.2112 | ~~100.0~~ |

| **0.091** | **0.5** | **5000** | **0.2094** | ~~99.7312~~ |

| 0.1098 | 0.6 | 6000 | 0.2098 | ~~98.6077~~ |

| 0.0637 | 0.7 | 7000 | 0.2148 | ~~98.4625~~ |

| 0.0718 | 0.8 | 8000 | 0.2151 | ~~99.8710~~ |

| 0.0517 | 0.9 | 9000 | 0.2175 | ~~97.2342~~ |

| 0.0465 | 1.0 | 10000 | 0.2129 | ~~96.3552~~ |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.1+cu117

- Datasets 2.7.1.dev0

- Tokenizers 0.13.2

### WandB run

https://wandb.ai/pn-aa/whisper/runs/xc70fbwv?workspace=user-emilio_marinone

### Baseline model

This model was fine-tuned from whisper-medium, and the table below shows the improvements when evaluated on the Common Voice 11 Swedish (sv-SE), Danish (da), and Norwegian (nn-NO) test splits.

| Language | Whisper Medium (WER) | Whisper Medium Nordic (WER) |

|:--------:|:--------------------:|:---------------------------:|

| sv-SE | 14.93 | 11.31 |

| da | 20.85 | 14.86 |

| nn-NO | 50.82 | 37.02 |
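To put the absolute WER values in perspective, the relative error reduction per language can be computed directly from the table. The snippet below is a small worked calculation using only the numbers reported above; the helper name `relative_reduction` is chosen for this example.

```python
# WER values (percent) copied from the table above.
baseline = {"sv-SE": 14.93, "da": 20.85, "nn-NO": 50.82}   # Whisper Medium
finetuned = {"sv-SE": 11.31, "da": 14.86, "nn-NO": 37.02}  # Whisper Medium Nordic


def relative_reduction(before: float, after: float) -> float:
    """Relative WER reduction in percent of the baseline error."""
    return 100.0 * (before - after) / before


for lang in baseline:
    reduction = relative_reduction(baseline[lang], finetuned[lang])
    print(f"{lang}: {reduction:.1f}% relative WER reduction")
# sv-SE: 24.2%, da: 28.7%, nn-NO: 27.2%
```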

|

MikolajDeja/alirezamsh-small100-pl-en-yhavinga-ccmatrix-finetune

|

MikolajDeja

| 2023-03-18T13:20:31Z | 45 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"m2m_100",

"text2text-generation",

"generated_from_trainer",

"dataset:ccmatrix",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-03-04T12:07:01Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- ccmatrix

model-index:

- name: alirezamsh-small100-pl-en-yhavinga-ccmatrix-finetune

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# alirezamsh-small100-pl-en-yhavinga-ccmatrix-finetune

This model is a fine-tuned version of [alirezamsh/small100](https://huggingface.co/alirezamsh/small100) on the ccmatrix dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 65

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1

- Datasets 2.10.1

- Tokenizers 0.13.2

|

aiartwork/rl_course_vizdoom_health_gathering_supreme

|

aiartwork

| 2023-03-18T12:57:41Z | 0 | 0 |

sample-factory

|

[

"sample-factory",

"tensorboard",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T12:57:13Z |

---

library_name: sample-factory

tags:

- deep-reinforcement-learning

- reinforcement-learning

- sample-factory

model-index:

- name: APPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: doom_health_gathering_supreme

type: doom_health_gathering_supreme

metrics:

- type: mean_reward

value: 9.75 +/- 4.54

name: mean_reward

verified: false

---

A(n) **APPO** model trained on the **doom_health_gathering_supreme** environment.

This model was trained using Sample-Factory 2.0: https://github.com/alex-petrenko/sample-factory.

Documentation for how to use Sample-Factory can be found at https://www.samplefactory.dev/

## Downloading the model

After installing Sample-Factory, download the model with:

```

python -m sample_factory.huggingface.load_from_hub -r aiartwork/rl_course_vizdoom_health_gathering_supreme

```

## Using the model

To run the model after download, use the `enjoy` script corresponding to this environment:

```

python -m sf_examples.vizdoom.enjoy_vizdoom --algo=APPO --env=doom_health_gathering_supreme --train_dir=./train_dir --experiment=rl_course_vizdoom_health_gathering_supreme

```

You can also upload models to the Hugging Face Hub using the same script with the `--push_to_hub` flag.

See https://www.samplefactory.dev/10-huggingface/huggingface/ for more details

## Training with this model

To continue training with this model, use the `train` script corresponding to this environment:

```

python -m sf_examples.vizdoom.train_vizdoom --algo=APPO --env=doom_health_gathering_supreme --train_dir=./train_dir --experiment=rl_course_vizdoom_health_gathering_supreme --restart_behavior=resume --train_for_env_steps=10000000000

```

Note, you may have to adjust `--train_for_env_steps` to a suitably high number as the experiment will resume at the number of steps it concluded at.

|

britojr/Reinforce-CartPole-v1

|

britojr

| 2023-03-18T12:56:24Z | 0 | 0 | null |

[

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T12:56:11Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-CartPole-v1

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 500.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, see Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

LarryAIDraw/kmsBlCherHighAltitudeHead_releaseV30

|

LarryAIDraw

| 2023-03-18T12:55:22Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-03-18T12:54:38Z |

---

license: creativeml-openrail-m

---

|

ZhouZX/rare-puppers

|

ZhouZX

| 2023-03-18T12:43:57Z | 224 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"huggingpics",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-03-18T12:43:44Z |

---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: rare-puppers

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.8636363744735718

---

# rare-puppers

Autogenerated by HuggingPics🤗🖼️

Create your own image classifier for **anything** by running [the demo on Google Colab](https://colab.research.google.com/github/nateraw/huggingpics/blob/main/HuggingPics.ipynb).

Report any issues with the demo at the [github repo](https://github.com/nateraw/huggingpics).

## Example Images

#### corgi

#### samoyed

#### shiba inu

|

Perse90/q-FrozenLake-v1-4x4-noSlippery

|

Perse90

| 2023-03-18T12:26:38Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T12:26:32Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="Perse90/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
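
Once the pickled model is loaded, acting with it amounts to a greedy lookup in the Q-table. The sketch below illustrates that step on a toy table; the `qtable` key and the `greedy_action` helper are assumptions for illustration and are not confirmed by this card.

```python
def greedy_action(qtable, state):
    """Return the index of the highest-valued action for `state`."""
    row = qtable[state]
    return max(range(len(row)), key=lambda action: row[action])


# Toy stand-in for the loaded model dictionary (hypothetical layout):
# one row per state, one column per action (left, down, right, up).
model = {
    "env_id": "FrozenLake-v1",
    "qtable": [
        [0.1, 0.9, 0.3, 0.0],  # state 0: "down" has the highest value
        [0.0, 0.2, 0.8, 0.1],  # state 1: "right" has the highest value
    ],
}

for state in range(len(model["qtable"])):
    print(state, greedy_action(model["qtable"], state))
```

In an evaluation loop, the chosen action would be passed to `env.step` and the returned state used for the next lookup.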

|

bankholdup/rugpt3_song_writer

|

bankholdup

| 2023-03-18T12:11:07Z | 143 | 3 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"gpt2",

"text-generation",

"PyTorch",

"Transformers",

"ru",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language:

- ru

tags:

- PyTorch

- Transformers

widget:

- text: "Батя возвращается трезвый, в руке буханка"

example_title: "Example 1"

- text: "Как дела? Как дела? Это новый кадиллак"

example_title: "Example 2"

- text: "4:20 на часах и я дрочу на твоё фото"

example_title: "Example 3"

inference:

parameters:

temperature: 0.9

k: 50

p: 0.95

length: 1500

---

Model based on [ruGPT-3](https://huggingface.co/sberbank-ai/rugpt3small_based_on_gpt2) for generating songs.

Tuned on lyrics collected from [genius](https://genius.com/).

Examples of used artists:

* [Oxxxymiron](https://genius.com/artists/Oxxxymiron)

* [Моргенштерн](https://genius.com/artists/Morgenshtern)

* [ЛСП](https://genius.com/artists/Lsp)

* [Гражданская оборона](https://genius.com/artists/Civil-defense)

* [Король и Шут](https://genius.com/artists/The-king-and-the-jester)

* etc

|

samwit/bloompaca-7b1-lora

|

samwit

| 2023-03-18T12:11:06Z | 0 | 0 | null |

[

"region:us"

] | null | 2023-03-18T12:06:52Z |

This is a LoRA fine-tuning of Bloom-7b1 using the Alpaca instruction dataset.

It really highlights how the Bloom models are undertrained, with ~400B tokens as opposed to 1 trillion in the smaller LLaMA models.

|

heziyevv/dqn-SpaceInvadersNoFrameskip-v4

|

heziyevv

| 2023-03-18T12:11:04Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T12:10:20Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 668.50 +/- 227.73

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga heziyevv -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga heziyevv -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga heziyevv

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 10000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```
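
The `exploration_fraction` and `exploration_final_eps` values above describe a linearly decaying epsilon for epsilon-greedy exploration. The following is a minimal sketch of such a schedule, not the Stable-Baselines3 implementation itself; the starting value of 1.0 is an assumption (it is not listed in the hyperparameters) and `linear_epsilon` is a hypothetical name.

```python
def linear_epsilon(step, total_steps=10_000_000, fraction=0.1,
                   start_eps=1.0, final_eps=0.01):
    """Epsilon at `step`: linear decay over the first `fraction` of training."""
    progress = step / total_steps
    if progress >= fraction:
        # After the exploration phase, epsilon stays at its final value.
        return final_eps
    return start_eps + progress * (final_eps - start_eps) / fraction


for step in (0, 500_000, 1_000_000, 5_000_000):
    print(step, linear_epsilon(step))
```

Under these assumptions, epsilon reaches 0.01 after the first 1,000,000 of the 10,000,000 timesteps and stays there for the rest of training.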

|

aiartwork/unit1-ppo-LunarLander-v2

|

aiartwork

| 2023-03-18T11:58:43Z | 2 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T11:58:23Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 245.74 +/- 19.56

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

megrxu/pokemon-lora

|

megrxu

| 2023-03-18T11:56:26Z | 2 | 2 |

diffusers

|

[

"diffusers",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"lora",

"base_model:runwayml/stable-diffusion-v1-5",

"base_model:adapter:runwayml/stable-diffusion-v1-5",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2023-03-18T07:48:15Z |

---

license: creativeml-openrail-m

base_model: runwayml/stable-diffusion-v1-5

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- lora

inference: true

---

# LoRA text2image fine-tuning - https://huggingface.co/megrxu/pokemon-lora

These are LoRA adaptation weights for runwayml/stable-diffusion-v1-5. The weights were fine-tuned on the lambdalabs/pokemon-blip-captions dataset. You can find some example images in the following.

|

matthv/second_t5-end2end-questions-generation

|

matthv

| 2023-03-18T11:51:54Z | 161 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-03-18T11:36:44Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: second_t5-end2end-questions-generation

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# second_t5-end2end-questions-generation

This model is a fine-tuned version of [ThomasSimonini/t5-end2end-question-generation](https://huggingface.co/ThomasSimonini/t5-end2end-question-generation) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 16

- total_train_batch_size: 256

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 7
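
The `total_train_batch_size` listed above follows from the per-device batch size and the gradient accumulation steps. A short sketch of the arithmetic, assuming a single device (the card does not state the device count):

```python
train_batch_size = 16             # per-device batch size from the list above
gradient_accumulation_steps = 16  # from the list above
num_devices = 1                   # assumption: not stated in the card

# Samples contributing to each optimizer step.
total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices
print(total_train_batch_size)
```

This matches the reported value of 256 under the single-device assumption.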

### Training results

### Framework versions

- Transformers 4.27.1

- Pytorch 1.13.1+cu116

- Datasets 2.10.1

- Tokenizers 0.13.2

|

cthiriet/ppo2-LunarLander-v2

|

cthiriet

| 2023-03-18T11:21:28Z | 0 | 0 | null |

[

"tensorboard",

"LunarLander-v2",

"ppo",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"deep-rl-course",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T11:14:12Z |

---

tags:

- LunarLander-v2

- ppo

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

- deep-rl-course

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: -146.89 +/- 80.73

name: mean_reward

verified: false

---

# PPO Agent Playing LunarLander-v2

This is a trained model of a PPO agent playing LunarLander-v2.

# Hyperparameters

```python

{'exp_name': 'ppo',
 'seed': 1,
 'torch_deterministic': True,
 'cuda': True,
 'track': False,
 'wandb_project_name': 'cleanRL',
 'wandb_entity': None,
 'capture_video': False,
 'env_id': 'LunarLander-v2',
 'total_timesteps': 100000,
 'learning_rate': 0.005,
 'num_envs': 10,
 'num_steps': 2048,
 'anneal_lr': True,
 'gae': True,
 'gamma': 0.99,
 'gae_lambda': 0.95,
 'num_minibatches': 4,
 'update_epochs': 4,
 'norm_adv': True,
 'clip_coef': 0.2,
 'clip_vloss': True,
 'ent_coef': 0.01,
 'vf_coef': 0.5,
 'max_grad_norm': 0.5,
 'target_kl': None,
 'repo_id': 'clemdev2000/ppo2-LunarLander-v2',
 'batch_size': 20480,
 'minibatch_size': 5120}

```
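
The last two entries, `batch_size` and `minibatch_size`, are consistent with being derived from the other settings. The sketch below reproduces that arithmetic, assuming the usual convention that one rollout collects `num_steps` transitions from each of `num_envs` environments; `num_updates` is an illustrative name for the resulting number of policy updates.

```python
num_envs = 10
num_steps = 2048
num_minibatches = 4
total_timesteps = 100_000

# One rollout gathers num_steps transitions from every environment.
batch_size = num_envs * num_steps
# Each update epoch splits the rollout into equally sized minibatches.
minibatch_size = batch_size // num_minibatches
# Number of full rollouts (and thus policy updates) that fit in the budget.
num_updates = total_timesteps // batch_size

print(batch_size, minibatch_size, num_updates)
```

With these settings the 100000-timestep budget covers only four full rollouts.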

|

vocabtrimmer/mt5-small-trimmed-it-5000-itquad-qg

|

vocabtrimmer

| 2023-03-18T11:17:59Z | 106 | 0 |

transformers

|

[

"transformers",

"pytorch",

"mt5",

"text2text-generation",

"question generation",

"it",

"dataset:lmqg/qg_itquad",

"arxiv:2210.03992",

"license:cc-by-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-03-18T11:17:30Z |

---

license: cc-by-4.0

metrics:

- bleu4

- meteor

- rouge-l

- bertscore

- moverscore

language: it

datasets:

- lmqg/qg_itquad

pipeline_tag: text2text-generation

tags:

- question generation

widget:

- text: "<hl> Dopo il 1971 <hl> , l' OPEC ha tardato ad adeguare i prezzi per riflettere tale deprezzamento."

example_title: "Question Generation Example 1"

- text: "L' individuazione del petrolio e lo sviluppo di nuovi giacimenti richiedeva in genere <hl> da cinque a dieci anni <hl> prima di una produzione significativa."

example_title: "Question Generation Example 2"

- text: "il <hl> Giappone <hl> è stato il paese più dipendente dal petrolio arabo."

example_title: "Question Generation Example 3"

model-index:

- name: vocabtrimmer/mt5-small-trimmed-it-5000-itquad-qg

results:

- task:

name: Text2text Generation

type: text2text-generation

dataset:

name: lmqg/qg_itquad

type: default

args: default

metrics:

- name: BLEU4 (Question Generation)

type: bleu4_question_generation

value: 6.94

- name: ROUGE-L (Question Generation)

type: rouge_l_question_generation

value: 21.07

- name: METEOR (Question Generation)

type: meteor_question_generation

value: 17.35

- name: BERTScore (Question Generation)

type: bertscore_question_generation

value: 80.39

- name: MoverScore (Question Generation)

type: moverscore_question_generation

value: 56.63

---

# Model Card of `vocabtrimmer/mt5-small-trimmed-it-5000-itquad-qg`

This model is a fine-tuned version of [vocabtrimmer/mt5-small-trimmed-it-5000](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-it-5000) for the question generation task on the [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) dataset (dataset_name: default), trained via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [vocabtrimmer/mt5-small-trimmed-it-5000](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-it-5000)

- **Language:** it

- **Training data:** [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="it", model="vocabtrimmer/mt5-small-trimmed-it-5000-itquad-qg")

# model prediction

questions = model.generate_q(list_context="Dopo il 1971 , l' OPEC ha tardato ad adeguare i prezzi per riflettere tale deprezzamento.", list_answer="Dopo il 1971")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "vocabtrimmer/mt5-small-trimmed-it-5000-itquad-qg")

output = pipe("<hl> Dopo il 1971 <hl> , l' OPEC ha tardato ad adeguare i prezzi per riflettere tale deprezzamento.")

```
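
In the `transformers` example, the answer span is wrapped in `<hl>` tokens inside the context, as in the widget examples of this card. The helper below is a minimal sketch of building such an input from a context and an answer; `highlight_answer` is a hypothetical name and not part of `lmqg` or `transformers`.

```python
def highlight_answer(context, answer, token="<hl>"):
    """Wrap the first occurrence of `answer` in `context` with highlight tokens."""
    start = context.find(answer)
    if start == -1:
        raise ValueError("answer not found in context")
    end = start + len(answer)
    return f"{context[:start]}{token} {answer} {token}{context[end:]}"


context = ("Dopo il 1971 , l' OPEC ha tardato ad adeguare i prezzi "
           "per riflettere tale deprezzamento.")
model_input = highlight_answer(context, "Dopo il 1971")
print(model_input)
```

The resulting string can be passed to the pipeline in place of the hand-written input.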

## Evaluation

- ***Metric (Question Generation)***: [raw metric file](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-it-5000-itquad-qg/raw/main/eval/metric.first.sentence.paragraph_answer.question.lmqg_qg_itquad.default.json)

| | Score | Type | Dataset |
|:-----------|--------:|:--------|:-----------------------------------------------------------------|
| BERTScore | 80.39 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| Bleu_1 | 21.98 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| Bleu_2 | 14.25 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| Bleu_3 | 9.79 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| Bleu_4 | 6.94 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| METEOR | 17.35 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| MoverScore | 56.63 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| ROUGE_L | 21.07 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_itquad

- dataset_name: default

- input_types: paragraph_answer

- output_types: question

- prefix_types: None

- model: vocabtrimmer/mt5-small-trimmed-it-5000

- max_length: 512

- max_length_output: 32

- epoch: 16

- batch: 16

- lr: 0.0005

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 4

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-it-5000-itquad-qg/raw/main/trainer_config.json).

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

dussinus/pixelcopter-unit4-lr98e-5

|

dussinus

| 2023-03-18T10:42:41Z | 0 | 0 | null |

[

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T10:42:38Z |

---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: pixelcopter-unit4-lr98e-5

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 27.20 +/- 16.41

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0**.

To learn how to use this model and train your own, see Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

rebolforces/Reinforce-Pixelcopter-PLE-v0

|

rebolforces

| 2023-03-18T10:41:02Z | 0 | 0 | null |

[

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-03-18T10:40:56Z |

---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-Pixelcopter-PLE-v0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 17.10 +/- 22.67

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0**.

To learn how to use this model and train your own, see Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

vocabtrimmer/mt5-small-trimmed-it-10000-itquad-qg

|

vocabtrimmer

| 2023-03-18T10:33:18Z | 106 | 0 |

transformers

|

[

"transformers",

"pytorch",

"mt5",

"text2text-generation",

"question generation",

"it",

"dataset:lmqg/qg_itquad",

"arxiv:2210.03992",

"license:cc-by-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-03-18T10:32:48Z |

---

license: cc-by-4.0

metrics:

- bleu4

- meteor

- rouge-l

- bertscore

- moverscore

language: it

datasets:

- lmqg/qg_itquad

pipeline_tag: text2text-generation

tags:

- question generation

widget:

- text: "<hl> Dopo il 1971 <hl> , l' OPEC ha tardato ad adeguare i prezzi per riflettere tale deprezzamento."

example_title: "Question Generation Example 1"

- text: "L' individuazione del petrolio e lo sviluppo di nuovi giacimenti richiedeva in genere <hl> da cinque a dieci anni <hl> prima di una produzione significativa."

example_title: "Question Generation Example 2"

- text: "il <hl> Giappone <hl> è stato il paese più dipendente dal petrolio arabo."

example_title: "Question Generation Example 3"

model-index:

- name: vocabtrimmer/mt5-small-trimmed-it-10000-itquad-qg

results:

- task:

name: Text2text Generation

type: text2text-generation

dataset:

name: lmqg/qg_itquad

type: default

args: default

metrics:

- name: BLEU4 (Question Generation)

type: bleu4_question_generation

value: 7.51

- name: ROUGE-L (Question Generation)

type: rouge_l_question_generation

value: 21.88

- name: METEOR (Question Generation)

type: meteor_question_generation

value: 17.78

- name: BERTScore (Question Generation)

type: bertscore_question_generation

value: 81.15

- name: MoverScore (Question Generation)

type: moverscore_question_generation

value: 57.1

---

# Model Card of `vocabtrimmer/mt5-small-trimmed-it-10000-itquad-qg`

This model is a fine-tuned version of [vocabtrimmer/mt5-small-trimmed-it-10000](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-it-10000) for the question generation task on the [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) dataset (dataset_name: default), trained via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [vocabtrimmer/mt5-small-trimmed-it-10000](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-it-10000)

- **Language:** it

- **Training data:** [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="it", model="vocabtrimmer/mt5-small-trimmed-it-10000-itquad-qg")

# model prediction

questions = model.generate_q(list_context="Dopo il 1971 , l' OPEC ha tardato ad adeguare i prezzi per riflettere tale deprezzamento.", list_answer="Dopo il 1971")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "vocabtrimmer/mt5-small-trimmed-it-10000-itquad-qg")

output = pipe("<hl> Dopo il 1971 <hl> , l' OPEC ha tardato ad adeguare i prezzi per riflettere tale deprezzamento.")

```

## Evaluation

- ***Metric (Question Generation)***: [raw metric file](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-it-10000-itquad-qg/raw/main/eval/metric.first.sentence.paragraph_answer.question.lmqg_qg_itquad.default.json)

| | Score | Type | Dataset |
|:-----------|--------:|:--------|:-----------------------------------------------------------------|
| BERTScore | 81.15 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| Bleu_1 | 22.96 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| Bleu_2 | 15.06 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| Bleu_3 | 10.47 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| Bleu_4 | 7.51 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| METEOR | 17.78 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| MoverScore | 57.1 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |
| ROUGE_L | 21.88 | default | [lmqg/qg_itquad](https://huggingface.co/datasets/lmqg/qg_itquad) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_itquad

- dataset_name: default

- input_types: paragraph_answer

- output_types: question

- prefix_types: None

- model: vocabtrimmer/mt5-small-trimmed-it-10000

- max_length: 512

- max_length_output: 32

- epoch: 14

- batch: 16

- lr: 0.001

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 4

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/vocabtrimmer/mt5-small-trimmed-it-10000-itquad-qg/raw/main/trainer_config.json).

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

|

Melanit/dreambooth_voyager_v2

|

Melanit

| 2023-03-18T10:31:38Z | 10 | 0 |

keras

|

[

"keras",

"tf-keras",

"keras-dreambooth",

"scifi",

"license:cc-by-nc-4.0",

"region:us"

] | null | 2023-03-16T18:50:33Z |

---

library_name: keras

tags:

- keras-dreambooth

- scifi

license: cc-by-nc-4.0

---

## Model description

This Stable-Diffusion Model has been fine-tuned on images of the Star Trek Voyager Spaceship.

### Here are some examples that were created with the model using these settings:

Prompt: photo of voyager spaceship in space, high quality, blender, 3d, trending on artstation, 8k

Negative Prompt: bad, ugly, malformed, deformed, out of frame, blurry

Denoising Steps: 50

## Intended uses & limitations

Anyone may use this model for non-commercial use cases under the linked license, as long as Paragraph 5 of the [Open RAIL-M License](https://raw.githubusercontent.com/CompVis/stable-diffusion/main/LICENSE) is respected as well. The original model is licensed under Open RAIL-M.

It was made solely as an experiment for keras_cv Dreambooth Training.

Since many orthographic views were used for training, the model seems to be biased toward them and has trouble producing more varied views and poses. During inference, the background appears noisy.

## Training and evaluation data

Images from Rob Bonchune from [Trekcore](https://blog.trekcore.com/) were used for training.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

| Hyperparameters | Value |
| :-- | :-- |
| inner_optimizer.class_name | Custom>RMSprop |
| inner_optimizer.config.name | RMSprop |
| inner_optimizer.config.weight_decay | None |
| inner_optimizer.config.clipnorm | None |
| inner_optimizer.config.global_clipnorm | None |
| inner_optimizer.config.clipvalue | None |
| inner_optimizer.config.use_ema | False |
| inner_optimizer.config.ema_momentum | 0.99 |
| inner_optimizer.config.ema_overwrite_frequency | 100 |
| inner_optimizer.config.jit_compile | True |
| inner_optimizer.config.is_legacy_optimizer | False |
| inner_optimizer.config.learning_rate | 0.0010000000474974513 |
| inner_optimizer.config.rho | 0.9 |
| inner_optimizer.config.momentum | 0.0 |
| inner_optimizer.config.epsilon | 1e-07 |
| inner_optimizer.config.centered | False |
| dynamic | True |
| initial_scale | 32768.0 |
| dynamic_growth_steps | 2000 |
| training_precision | mixed_float16 |
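
The `dynamic`, `initial_scale`, and `dynamic_growth_steps` rows describe dynamic loss scaling, which is commonly paired with `mixed_float16` training. The following is a simplified pure-Python sketch of that rule, not the Keras implementation: the class name `DynamicLossScale` is hypothetical, and the doubling, halving, and floor of 1.0 are assumptions based on the usual behaviour of this technique.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DynamicLossScale:
    scale: float = 32768.0    # initial_scale from the table
    growth_steps: int = 2000  # dynamic_growth_steps from the table
    good_steps: int = 0       # consecutive steps with finite gradients

    def update(self, grads: Sequence[float]) -> bool:
        """Adjust the scale; return True if the optimizer step should be applied."""
        if all(math.isfinite(g) for g in grads):
            self.good_steps += 1
            if self.good_steps >= self.growth_steps:
                # Enough stable steps in a row: try a larger scale.
                self.scale *= 2.0
                self.good_steps = 0
            return True
        # Overflow (inf/NaN): shrink the scale and skip this step.
        self.scale = max(self.scale / 2.0, 1.0)
        self.good_steps = 0
        return False


scaler = DynamicLossScale()
applied = scaler.update([float("inf"), 0.5])  # overflow: step skipped, scale halved
```

In this sketch the loss would be multiplied by `scale` before backpropagation and the gradients divided by it afterwards, so that small float16 gradients do not underflow to zero.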

|

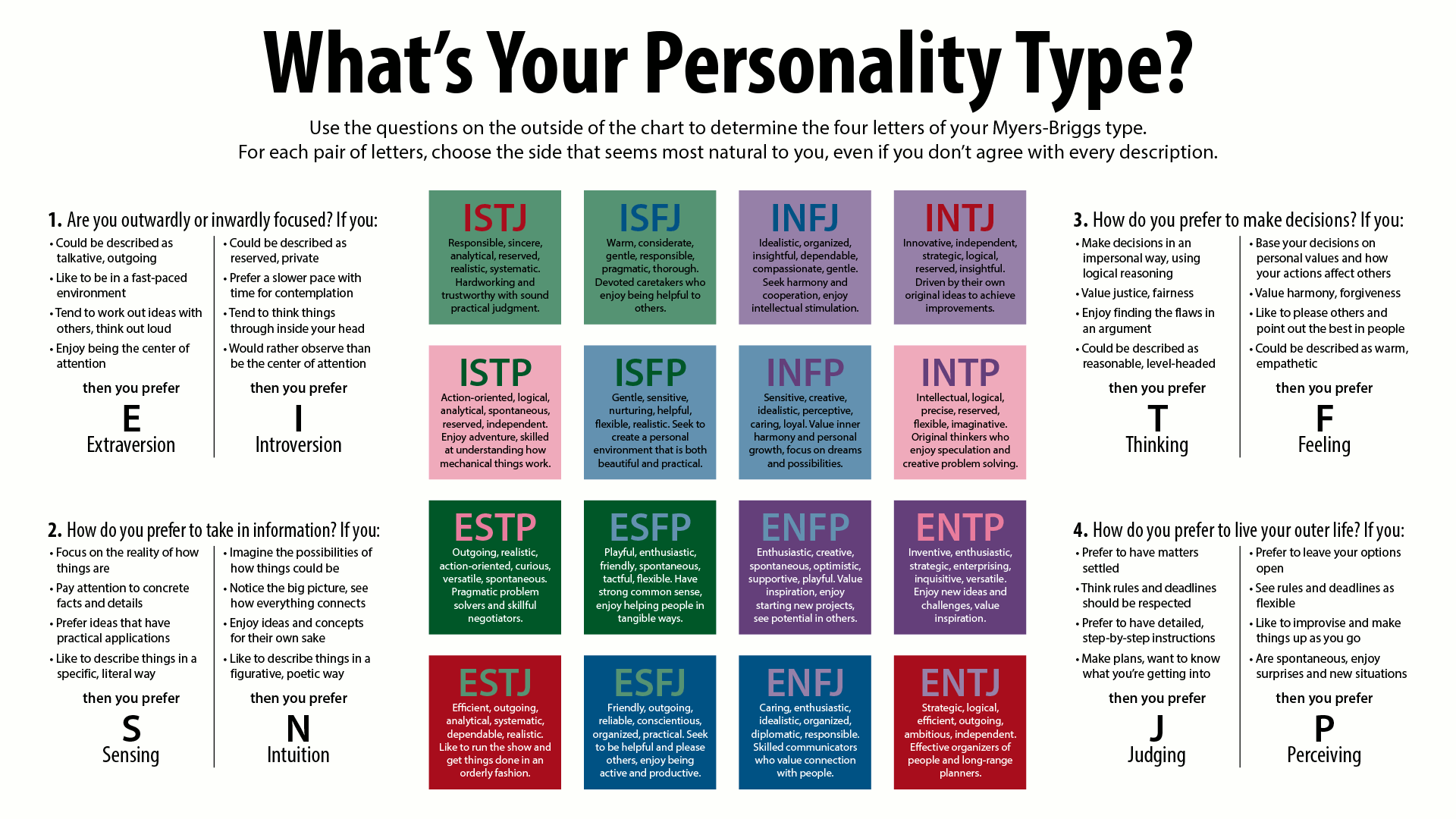

JanSt/albert-base-v2_mbti-classification

|

JanSt

| 2023-03-18T10:25:39Z | 655 | 14 |

transformers

|

[

"transformers",

"pytorch",

"albert",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-03-15T22:17:36Z |

---

picture: https://en.wikipedia.org/wiki/Myers%E2%80%93Briggs_Type_Indicator

license: mit

language:

- en

metrics:

- bertscore

pipeline_tag: text-classification

library_name: transformers

---

|

taohoang/ppo-PyramidsTraining

|

taohoang

| 2023-03-18T10:23:06Z | 4 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"Pyramids",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Pyramids",

"region:us"

] |

reinforcement-learning

| 2023-03-18T10:23:01Z |

---

library_name: ml-agents

tags:

- Pyramids

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Pyramids

---

# **ppo** Agent playing **Pyramids**

This is a trained model of a **ppo** agent playing **Pyramids** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://github.com/huggingface/ml-agents#get-started

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

### Resume the training

```

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**:

1. Go to https://huggingface.co/spaces/unity/ML-Agents-Pyramids

2. Step 1: Find your model_id: taohoang/ppo-PyramidsTraining

3. Step 2: Select your `*.nn` / `*.onnx` file

4. Click on Watch the agent play 👀

|

toreleon/combine-60-vsfc-xlm-r

|

toreleon

| 2023-03-18T10:19:58Z | 179 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-03-18T10:05:06Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

model-index:

- name: combine-60-vsfc-xlm-r

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# combine-60-vsfc-xlm-r

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2538

- Precision: 0.8786

- Recall: 0.9210

- F1 Weighted: 0.8993

- F1 Macro: 0.6284
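
The gap between the weighted and macro F1 scores above is typical of imbalanced label distributions. As an illustration only, the sketch below computes both averages on a small made-up example; the function names and the toy labels are hypothetical and unrelated to the actual evaluation set.

```python
from collections import Counter
from typing import Dict, Sequence


def per_class_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[int, float]:
    """F1 for each label, using F1 = 2*TP / (2*TP + FP + FN)."""
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Unweighted mean of per-class F1: every class counts equally."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)


def weighted_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Mean of per-class F1 weighted by each class's share of true labels."""
    scores = per_class_f1(y_true, y_pred)
    support = Counter(y_true)
    return sum(scores[c] * support[c] for c in scores) / len(y_true)


# Toy data: 8 samples of class 0, 2 of class 1; one class-1 sample is missed.
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 8 + [0, 1]
print(round(macro_f1(y_true, y_pred), 3), round(weighted_f1(y_true, y_pred), 3))
# prints: 0.804 0.886
```

The minority class drags the macro average down because it counts as much as the majority class, while the weighted average is dominated by the well-predicted majority class.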

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 128

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- num_epochs: 5
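
To make the `linear` scheduler with 100 warmup steps concrete, here is a minimal sketch of a linear warmup followed by linear decay to zero. The function name is hypothetical, and `total_steps=1400` is an assumed placeholder since the card does not state the total number of optimizer steps.

```python
def linear_schedule_with_warmup(
    step: int,
    base_lr: float = 5e-05,   # learning_rate from the list above
    warmup_steps: int = 100,  # lr_scheduler_warmup_steps from the list above
    total_steps: int = 1400,  # assumption: not given in the card
) -> float:
    """Learning rate at a given optimizer step."""
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 up to base_lr.
        return base_lr * step / warmup_steps
    # Decay: fall linearly from base_lr to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for s in (0, 50, 100, 750, 1400):
    print(s, linear_schedule_with_warmup(s))
```

Under these assumptions the rate peaks at `5e-05` exactly at step 100 and reaches zero at the final step.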

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 Weighted | F1 Macro |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:-----------:|:--------:|

| 1.12 | 0.09 | 25 | 1.0597 | 0.2586 | 0.5085 | 0.3429 | 0.2247 |

| 0.9016 | 0.18 | 50 | 0.5441 | 0.8258 | 0.8642 | 0.8440 | 0.5895 |

| 0.6163 | 0.27 | 75 | 0.4097 | 0.8713 | 0.9109 | 0.8897 | 0.6215 |