| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

mang3dd/blockassist-bc-tangled_slithering_alligator_1756228562

|

mang3dd

| 2025-08-26T17:42:06Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"tangled slithering alligator",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-26T17:42:02Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- tangled slithering alligator

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|


youuotty/blockassist-bc-scaly_tiny_locust_1756230075

|

youuotty

| 2025-08-26T17:41:28Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"scaly tiny locust",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-26T17:41:16Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- scaly tiny locust

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

ggozzy/blockassist-bc-stubby_yapping_mandrill_1756229749

|

ggozzy

| 2025-08-26T17:37:00Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"stubby yapping mandrill",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-26T17:36:54Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- stubby yapping mandrill

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

calegpedia/blockassist-bc-stealthy_slimy_rooster_1756228111

|

calegpedia

| 2025-08-26T17:35:36Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"stealthy slimy rooster",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-26T17:35:33Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- stealthy slimy rooster

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

qualcomm/Yolo-X

|

qualcomm

| 2025-08-26T17:35:00Z | 561 | 5 |

pytorch

|

[

"pytorch",

"tflite",

"real_time",

"android",

"object-detection",

"license:other",

"region:us"

] |

object-detection

| 2025-03-14T02:22:40Z |

---

library_name: pytorch

license: other

tags:

- real_time

- android

pipeline_tag: object-detection

---

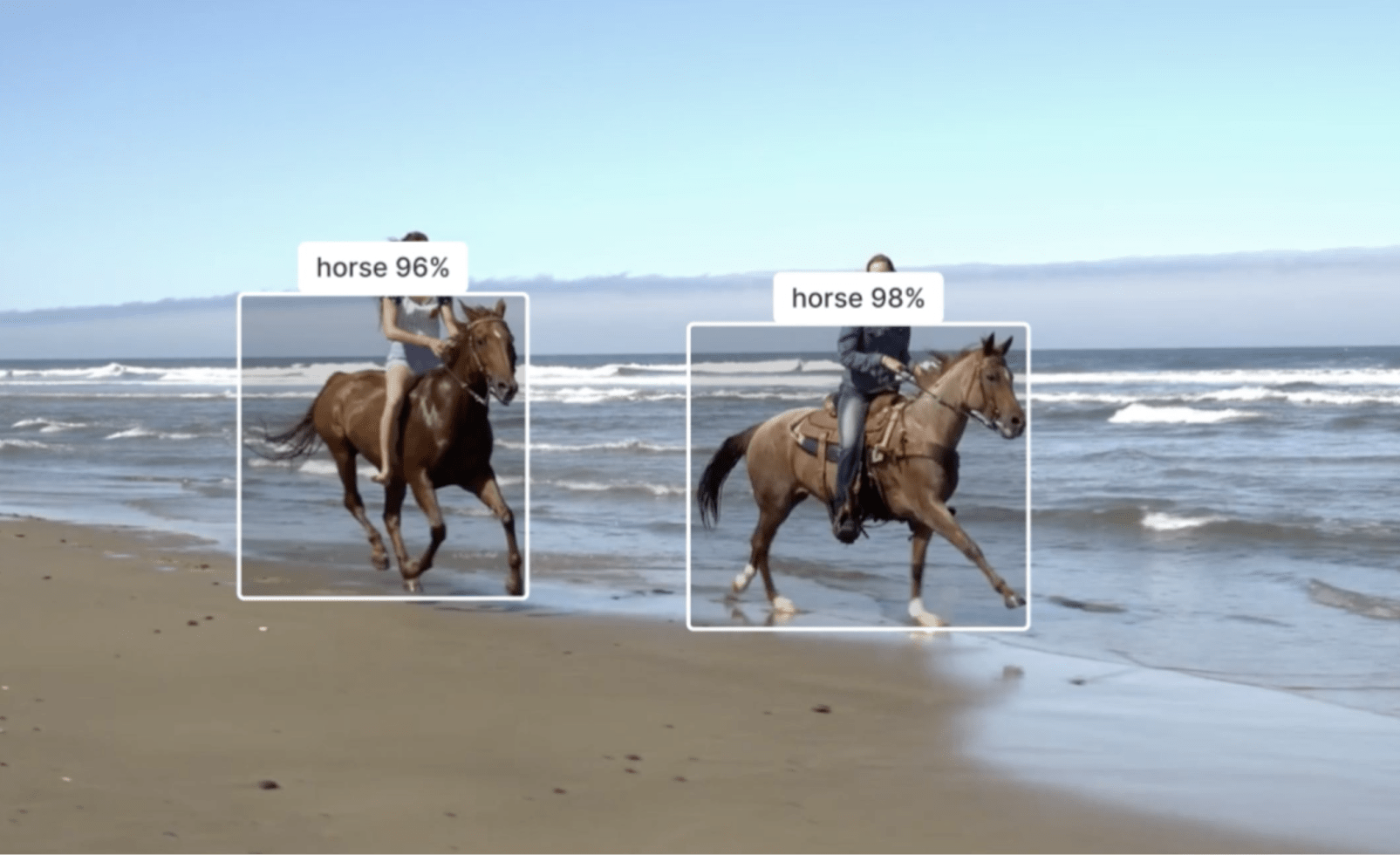

# Yolo-X: Optimized for Mobile Deployment

## Real-time object detection optimized for mobile and edge

YoloX is a machine learning model that predicts bounding boxes and classes of objects in an image.

This model is an implementation of Yolo-X found [here](https://github.com/Megvii-BaseDetection/YOLOX/).

This repository provides scripts to run Yolo-X on Qualcomm® devices.

More details on model performance across various devices can be found [here](https://aihub.qualcomm.com/models/yolox).

### Model Details

- **Model Type:** Object detection

- **Model Stats:**
  - Model checkpoint: YoloX Small
  - Input resolution: 640x640
  - Number of parameters: 8.98M
  - Model size (float): 34.3 MB
  - Model size (w8a16): 9.53 MB
  - Model size (w8a8): 8.96 MB
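The float model size listed above is roughly what the parameter count predicts. The following is a minimal sketch of that arithmetic, assuming 32-bit float weights and that the reported "MB" figures are close to mebibytes; both are assumptions, and the helper name `estimated_size_mib` is hypothetical. The quantized files are somewhat larger than the 8-bit estimate, presumably because of graph metadata and tensors that are not stored at 8 bits.

```python
def estimated_size_mib(num_params: float, bits_per_weight: int) -> float:
    """Estimate raw weight storage in MiB from a parameter count."""
    return num_params * bits_per_weight / 8 / 2**20


# Parameter count taken from the Model Stats list (8.98M).
float_mib = estimated_size_mib(8.98e6, 32)  # assumed float32 weights
int8_mib = estimated_size_mib(8.98e6, 8)    # assumed 8-bit weights

print(f"float: {float_mib:.1f} MiB, 8-bit: {int8_mib:.1f} MiB")
```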

| Model | Precision | Device | Chipset | Target Runtime | Inference Time (ms) | Peak Memory Range (MB) | Primary Compute Unit | Target Model |

|---|---|---|---|---|---|---|---|---|

| Yolo-X | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | TFLITE | 32.199 ms | 0 - 46 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 31.557 ms | 0 - 69 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | TFLITE | 14.375 ms | 0 - 54 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 18.929 ms | 4 - 45 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | TFLITE | 8.727 ms | 0 - 12 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 8.296 ms | 5 - 27 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | TFLITE | 11.861 ms | 0 - 48 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 11.211 ms | 1 - 61 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | SA7255P ADP | Qualcomm® SA7255P | TFLITE | 32.199 ms | 0 - 46 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 31.557 ms | 0 - 69 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | TFLITE | 8.586 ms | 0 - 12 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 8.243 ms | 5 - 25 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | SA8295P ADP | Qualcomm® SA8295P | TFLITE | 16.149 ms | 0 - 43 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 14.908 ms | 0 - 39 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | TFLITE | 8.699 ms | 0 - 16 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 8.312 ms | 5 - 24 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | SA8775P ADP | Qualcomm® SA8775P | TFLITE | 11.861 ms | 0 - 48 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 11.211 ms | 1 - 61 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | TFLITE | 8.807 ms | 0 - 16 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 8.268 ms | 5 - 23 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 14.188 ms | 0 - 59 MB | NPU | [Yolo-X.onnx.zip](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.onnx.zip) |

| Yolo-X | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | TFLITE | 6.46 ms | 0 - 60 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 6.136 ms | 5 - 87 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 10.004 ms | 5 - 167 MB | NPU | [Yolo-X.onnx.zip](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.onnx.zip) |

| Yolo-X | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | TFLITE | 5.608 ms | 0 - 51 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.tflite) |

| Yolo-X | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 5.78 ms | 5 - 79 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 9.403 ms | 5 - 104 MB | NPU | [Yolo-X.onnx.zip](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.onnx.zip) |

| Yolo-X | float | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 8.99 ms | 5 - 5 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.dlc) |

| Yolo-X | float | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 13.73 ms | 14 - 14 MB | NPU | [Yolo-X.onnx.zip](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X.onnx.zip) |

| Yolo-X | w8a16 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 15.583 ms | 2 - 40 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 9.235 ms | 2 - 55 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 7.857 ms | 2 - 17 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 8.465 ms | 1 - 40 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | QNN_DLC | 27.546 ms | 2 - 54 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 15.583 ms | 2 - 40 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 7.852 ms | 2 - 11 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 9.995 ms | 1 - 46 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 7.846 ms | 2 - 16 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 8.465 ms | 1 - 40 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 7.843 ms | 2 - 14 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 5.046 ms | 2 - 58 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 4.871 ms | 2 - 48 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a16 | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 8.499 ms | 6 - 6 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a16.dlc) |

| Yolo-X | w8a8 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | TFLITE | 6.353 ms | 0 - 31 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 5.441 ms | 1 - 34 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | TFLITE | 3.011 ms | 0 - 48 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 2.821 ms | 1 - 49 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | TFLITE | 2.792 ms | 0 - 34 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 2.309 ms | 1 - 13 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | TFLITE | 3.232 ms | 0 - 32 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 2.691 ms | 1 - 36 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | QNN_DLC | 9.957 ms | 0 - 41 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | SA7255P ADP | Qualcomm® SA7255P | TFLITE | 6.353 ms | 0 - 31 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 5.441 ms | 1 - 34 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | TFLITE | 2.862 ms | 0 - 35 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 2.32 ms | 1 - 13 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | SA8295P ADP | Qualcomm® SA8295P | TFLITE | 4.137 ms | 0 - 39 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 3.626 ms | 1 - 42 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | TFLITE | 2.852 ms | 0 - 33 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 2.326 ms | 1 - 12 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | SA8775P ADP | Qualcomm® SA8775P | TFLITE | 3.232 ms | 0 - 32 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 2.691 ms | 1 - 36 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | TFLITE | 2.865 ms | 0 - 34 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 2.321 ms | 1 - 11 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 11.037 ms | 0 - 27 MB | NPU | [Yolo-X.onnx.zip](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.onnx.zip) |

| Yolo-X | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | TFLITE | 1.848 ms | 0 - 50 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 1.546 ms | 1 - 53 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 7.865 ms | 1 - 104 MB | NPU | [Yolo-X.onnx.zip](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.onnx.zip) |

| Yolo-X | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | TFLITE | 1.69 ms | 0 - 38 MB | NPU | [Yolo-X.tflite](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.tflite) |

| Yolo-X | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 1.29 ms | 1 - 43 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 8.682 ms | 1 - 74 MB | NPU | [Yolo-X.onnx.zip](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.onnx.zip) |

| Yolo-X | w8a8 | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 2.593 ms | 26 - 26 MB | NPU | [Yolo-X.dlc](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.dlc) |

| Yolo-X | w8a8 | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 11.714 ms | 8 - 8 MB | NPU | [Yolo-X.onnx.zip](https://huggingface.co/qualcomm/Yolo-X/blob/main/Yolo-X_w8a8.onnx.zip) |
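To compare rows in the table above, it can help to convert latency into throughput and a speedup ratio. This is a small sketch using the two Samsung Galaxy S24 TFLITE rows; the helper `inferences_per_second` is hypothetical, and the result is only an upper bound because it assumes back-to-back single-image inference and ignores pre- and post-processing.

```python
def inferences_per_second(latency_ms: float) -> float:
    """Convert a per-inference latency in milliseconds to inferences per second."""
    return 1000.0 / latency_ms


# Samsung Galaxy S24, TFLITE runtime, values copied from the table.
float_ms = 6.46
w8a8_ms = 1.848

speedup = float_ms / w8a8_ms
print(
    f"float: {inferences_per_second(float_ms):.0f} inf/s, "
    f"w8a8: {inferences_per_second(w8a8_ms):.0f} inf/s, "
    f"speedup: {speedup:.2f}x"
)
```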

## Installation

Install the package via pip:

```bash

pip install "qai-hub-models[yolox]"

```

## Configure Qualcomm® AI Hub to run this model on a cloud-hosted device

Sign in to [Qualcomm® AI Hub](https://app.aihub.qualcomm.com/) with your
Qualcomm® ID. Once signed in, navigate to `Account -> Settings -> API Token`.
With this API token, you can configure your client to run models on
cloud-hosted devices.

```bash

qai-hub configure --api_token API_TOKEN

```

Navigate to [docs](https://app.aihub.qualcomm.com/docs/) for more information.

## Demo off target

The package contains a simple end-to-end demo that downloads pre-trained

weights and runs this model on a sample input.

```bash

python -m qai_hub_models.models.yolox.demo

```

The above demo runs a reference implementation of pre-processing, model

inference, and post processing.

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, add the following to your cell (instead of the above).

```

%run -m qai_hub_models.models.yolox.demo

```
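The post-processing stage mentioned for the demo typically includes non-maximum suppression (NMS) for detectors of this kind. The sketch below shows a generic greedy NMS in plain Python; it is not taken from `qai_hub_models`, the function names and the 0.5 threshold are assumptions chosen for illustration, and boxes are assumed to be `(x1, y1, x2, y2)` corner coordinates.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed corner format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two heavily overlapping boxes and one separate box (illustrative values).
boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 260, 260)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
print(kept)  # indices of the boxes that survive suppression
```

Here the second box overlaps the first with an IoU above the threshold, so only the first and third boxes are kept.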

### Run model on a cloud-hosted device

In addition to the demo, you can also run the model on a cloud-hosted Qualcomm®

device. This script does the following:

* Runs a performance check on a cloud-hosted device.

* Downloads compiled assets that can be deployed on-device for Android.

* Runs an accuracy check between PyTorch and on-device outputs.

```bash

python -m qai_hub_models.models.yolox.export

```

## How does this work?

This [export script](https://aihub.qualcomm.com/models/yolox/qai_hub_models/models/Yolo-X/export.py)
leverages [Qualcomm® AI Hub](https://aihub.qualcomm.com/) to optimize, validate, and deploy this model
on-device. Let's go through each step below in detail:

Step 1: **Compile model for on-device deployment**

To compile a PyTorch model for on-device deployment, we first trace the model
in memory using `torch.jit.trace` and then call the `submit_compile_job` API.

```python

import torch

import qai_hub as hub
from qai_hub_models.models.yolox import Model

# Load the pre-trained PyTorch model
torch_model = Model.from_pretrained()

# Target device
device = hub.Device("Samsung Galaxy S24")

# Trace the model in memory, using the first sample of each input
input_spec = torch_model.get_input_spec()
sample_inputs = torch_model.sample_inputs()
example_inputs = [torch.tensor(data[0]) for data in sample_inputs.values()]
pt_model = torch.jit.trace(torch_model, example_inputs)

# Compile the traced model for the target device
compile_job = hub.submit_compile_job(
    model=pt_model,
    device=device,
    input_specs=input_spec,
)

# Get the compiled target model to run on-device
target_model = compile_job.get_target_model()

```

Step 2: **Performance profiling on cloud-hosted device**

After compiling the model in step 1, you can profile it on-device using the
`target_model`. Note that this script runs the model on a device automatically
provisioned in the cloud. Once the job is submitted, you can navigate to the
provided job URL to view a variety of on-device performance metrics.

```python

profile_job = hub.submit_profile_job(
    model=target_model,
    device=device,
)

```

Step 3: **Verify on-device accuracy**

To verify the accuracy of the model on-device, you can run on-device inference
on sample input data on the same cloud-hosted device.

```python

input_data = torch_model.sample_inputs()

inference_job = hub.submit_inference_job(
    model=target_model,
    device=device,
    inputs=input_data,
)

on_device_output = inference_job.download_output_data()

```

With the output of the model, you can compute metrics such as PSNR or relative
error, or spot-check the output against the expected output.
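As an illustration of the metrics named here, the sketch below computes PSNR and the maximum relative error between a reference output and an on-device output. It assumes both outputs have already been flattened into equal-length lists of floats; the function names, the `data_range` parameter, and the sample values are all hypothetical and not part of the AI Hub API.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], test: Sequence[float], data_range: float) -> float:
    """Peak signal-to-noise ratio in dB; data_range is the assumed peak-to-peak signal range."""
    if len(reference) != len(test):
        raise ValueError("inputs must have the same length")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical outputs
    return 10.0 * math.log10(data_range ** 2 / mse)


def max_relative_error(reference: Sequence[float], test: Sequence[float], eps: float = 1e-12) -> float:
    """Largest |reference - test| / |reference|, guarding against division by zero."""
    return max(abs(r - t) / max(abs(r), eps) for r, t in zip(reference, test))


# Made-up values standing in for PyTorch and on-device outputs.
reference_output = [0.2, 0.5, 1.0, 0.25]
device_output = [0.21, 0.49, 0.99, 0.26]

print(f"PSNR: {psnr(reference_output, device_output, data_range=1.0):.1f} dB")
print(f"max relative error: {max_relative_error(reference_output, device_output):.3f}")
```

A higher PSNR and a lower relative error both indicate closer agreement; which threshold counts as acceptable depends on the application.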

**Note**: This on-device profiling and inference requires access to Qualcomm®

AI Hub. [Sign up for access](https://myaccount.qualcomm.com/signup).

## Run demo on a cloud-hosted device

You can also run the demo on-device.

```bash

python -m qai_hub_models.models.yolox.demo --eval-mode on-device

```

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, add the following to your cell (instead of the above).

```

%run -m qai_hub_models.models.yolox.demo -- --eval-mode on-device

```

## Deploying compiled model to Android

The models can be deployed using multiple runtimes:

- TensorFlow Lite (`.tflite` export): [This tutorial](https://www.tensorflow.org/lite/android/quickstart) provides a guide to deploy the `.tflite` model in an Android application.

- QNN (`.so` export): This [sample app](https://docs.qualcomm.com/bundle/publicresource/topics/80-63442-50/sample_app.html) provides instructions on how to use the `.so` shared library in an Android application.

## View on Qualcomm® AI Hub

Get more details on Yolo-X's performance across various devices [here](https://aihub.qualcomm.com/models/yolox).

Explore all available models on [Qualcomm® AI Hub](https://aihub.qualcomm.com/).

## License

* The license for the original implementation of Yolo-X can be found [here](https://github.com/Megvii-BaseDetection/YOLOX/blob/main/LICENSE).

* The license for the compiled assets for on-device deployment can be found [here](https://qaihub-public-assets.s3.us-west-2.amazonaws.com/qai-hub-models/Qualcomm+AI+Hub+Proprietary+License.pdf).

## References

* [YOLOX: Exceeding YOLO Series in 2021](https://github.com/Megvii-BaseDetection/YOLOX/blob/main/README.md)

* [Source Model Implementation](https://github.com/Megvii-BaseDetection/YOLOX/)

## Community

* Join [our AI Hub Slack community](https://aihub.qualcomm.com/community/slack) to collaborate, post questions and learn more about on-device AI.

* For questions or feedback please [reach out to us](mailto:ai-hub-support@qti.qualcomm.com).

|

qualcomm/YOLOv8-Detection

|

qualcomm

| 2025-08-26T17:34:30Z | 82 | 0 |

pytorch

|

[

"pytorch",

"real_time",

"android",

"object-detection",

"license:other",

"region:us"

] |

object-detection

| 2024-02-25T22:41:14Z |

---

library_name: pytorch

license: other

tags:

- real_time

- android

pipeline_tag: object-detection

---

# YOLOv8-Detection: Optimized for Mobile Deployment

## Real-time object detection optimized for mobile and edge by Ultralytics

Ultralytics YOLOv8 is a machine learning model that predicts bounding boxes and classes of objects in an image.

This model is an implementation of YOLOv8-Detection found [here](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/models/yolo/detect).

This repository provides scripts to run YOLOv8-Detection on Qualcomm® devices.

More details on model performance across various devices can be found [here](https://aihub.qualcomm.com/models/yolov8_det).

**WARNING**: The model assets are not readily available for download due to licensing restrictions.

### Model Details

- **Model Type:** Object detection

- **Model Stats:**
  - Model checkpoint: YOLOv8-N
  - Input resolution: 640x640
  - Number of parameters: 3.18M
  - Model size (float): 12.2 MB
  - Model size (w8a8): 3.25 MB
  - Model size (w8a16): 3.60 MB
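The `w8a8` and `w8a16` labels are usually read as 8-bit weights with 8-bit or 16-bit activations; that reading is an assumption here, since the card does not define them. The sketch below shows a generic affine 8-bit quantize and dequantize round trip to give a sense of the rounding error involved. It is not the quantizer used by the Qualcomm toolchain, and all names in it are hypothetical.

```python
from typing import List, Tuple


def quantize_uint8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine-quantize floats to the range [0, 255]; returns (codes, scale, zero_point)."""
    lo = min(min(values), 0.0)  # include 0.0 so that zero is representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0
    if scale == 0.0:
        scale = 1.0  # all values are zero; any scale works
    zero_point = round(-lo / scale)
    codes = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Map 8-bit codes back to approximate float values."""
    return [(c - zero_point) * scale for c in codes]


weights = [-1.0, -0.5, 0.0, 0.5, 1.0]  # illustrative values
codes, scale, zero_point = quantize_uint8(weights)
restored = dequantize(codes, scale, zero_point)
max_err = max(abs(w, ) if False else abs(w - r) for w, r in zip(weights, restored))

print(f"scale={scale:.5f}, max round-trip error={max_err:.5f}")
```

The round-trip error stays within about one quantization step (`scale`), which is why 8-bit weights can shrink the model to roughly a quarter of its float size with a bounded loss in precision.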

| Model | Precision | Device | Chipset | Target Runtime | Inference Time (ms) | Peak Memory Range (MB) | Primary Compute Unit | Target Model |

|---|---|---|---|---|---|---|---|---|

| YOLOv8-Detection | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | TFLITE | 14.24 ms | 0 - 66 MB | NPU | -- |

| YOLOv8-Detection | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 13.288 ms | 2 - 94 MB | NPU | -- |

| YOLOv8-Detection | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | TFLITE | 6.584 ms | 0 - 40 MB | NPU | -- |

| YOLOv8-Detection | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 8.03 ms | 5 - 44 MB | NPU | -- |

| YOLOv8-Detection | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | TFLITE | 4.13 ms | 0 - 38 MB | NPU | -- |

| YOLOv8-Detection | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 3.453 ms | 0 - 76 MB | NPU | -- |

| YOLOv8-Detection | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | TFLITE | 5.589 ms | 0 - 65 MB | NPU | -- |

| YOLOv8-Detection | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 5.107 ms | 1 - 96 MB | NPU | -- |

| YOLOv8-Detection | float | SA7255P ADP | Qualcomm® SA7255P | TFLITE | 14.24 ms | 0 - 66 MB | NPU | -- |

| YOLOv8-Detection | float | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 13.288 ms | 2 - 94 MB | NPU | -- |

| YOLOv8-Detection | float | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | TFLITE | 4.115 ms | 0 - 40 MB | NPU | -- |

| YOLOv8-Detection | float | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 3.456 ms | 0 - 75 MB | NPU | -- |

| YOLOv8-Detection | float | SA8295P ADP | Qualcomm® SA8295P | TFLITE | 7.643 ms | 0 - 34 MB | NPU | -- |

| YOLOv8-Detection | float | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 7.224 ms | 4 - 35 MB | NPU | -- |

| YOLOv8-Detection | float | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | TFLITE | 4.123 ms | 0 - 38 MB | NPU | -- |

| YOLOv8-Detection | float | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 3.458 ms | 0 - 81 MB | NPU | -- |

| YOLOv8-Detection | float | SA8775P ADP | Qualcomm® SA8775P | TFLITE | 5.589 ms | 0 - 65 MB | NPU | -- |

| YOLOv8-Detection | float | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 5.107 ms | 1 - 96 MB | NPU | -- |

| YOLOv8-Detection | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | TFLITE | 4.13 ms | 0 - 39 MB | NPU | -- |

| YOLOv8-Detection | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 3.459 ms | 0 - 69 MB | NPU | -- |

| YOLOv8-Detection | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 5.759 ms | 0 - 48 MB | NPU | -- |

| YOLOv8-Detection | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | TFLITE | 3.038 ms | 0 - 85 MB | NPU | -- |

| YOLOv8-Detection | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 2.549 ms | 5 - 231 MB | NPU | -- |

| YOLOv8-Detection | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 3.756 ms | 0 - 170 MB | NPU | -- |

| YOLOv8-Detection | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | TFLITE | 2.948 ms | 0 - 73 MB | NPU | -- |

| YOLOv8-Detection | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 1.97 ms | 5 - 133 MB | NPU | -- |

| YOLOv8-Detection | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 3.656 ms | 4 - 92 MB | NPU | -- |

| YOLOv8-Detection | float | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 3.834 ms | 114 - 114 MB | NPU | -- |

| YOLOv8-Detection | float | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 6.034 ms | 5 - 5 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 6.551 ms | 1 - 29 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 3.949 ms | 2 - 37 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 3.263 ms | 2 - 13 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 3.8 ms | 1 - 30 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | QNN_DLC | 13.293 ms | 0 - 36 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 6.551 ms | 1 - 29 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 3.268 ms | 2 - 11 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 4.437 ms | 2 - 34 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 3.274 ms | 2 - 11 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 3.8 ms | 1 - 30 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 3.274 ms | 2 - 11 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 60.762 ms | 0 - 181 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 2.177 ms | 2 - 40 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 45.489 ms | 14 - 1068 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 1.869 ms | 2 - 45 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 48.69 ms | 28 - 1004 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 3.647 ms | 2 - 2 MB | NPU | -- |

| YOLOv8-Detection | w8a16 | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 63.415 ms | 27 - 27 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | TFLITE | 3.364 ms | 0 - 24 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 3.134 ms | 1 - 25 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | TFLITE | 1.65 ms | 0 - 41 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 1.641 ms | 1 - 40 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | TFLITE | 1.504 ms | 0 - 15 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 1.425 ms | 1 - 16 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | TFLITE | 1.909 ms | 0 - 24 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 1.793 ms | 1 - 25 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | TFLITE | 3.773 ms | 0 - 31 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | QNN_DLC | 4.744 ms | 1 - 33 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA7255P ADP | Qualcomm® SA7255P | TFLITE | 3.364 ms | 0 - 24 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 3.134 ms | 1 - 25 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | TFLITE | 1.497 ms | 0 - 16 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 1.417 ms | 0 - 15 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA8295P ADP | Qualcomm® SA8295P | TFLITE | 2.356 ms | 0 - 30 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 2.201 ms | 1 - 32 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | TFLITE | 1.51 ms | 0 - 15 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 1.416 ms | 0 - 15 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA8775P ADP | Qualcomm® SA8775P | TFLITE | 1.909 ms | 0 - 24 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 1.793 ms | 1 - 25 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | TFLITE | 1.496 ms | 0 - 15 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 1.419 ms | 1 - 9 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 6.251 ms | 0 - 18 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | TFLITE | 0.989 ms | 0 - 38 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 0.958 ms | 1 - 37 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 4.482 ms | 1 - 75 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | TFLITE | 0.912 ms | 0 - 28 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 0.708 ms | 1 - 32 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 3.299 ms | 0 - 79 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 1.649 ms | 4 - 4 MB | NPU | -- |

| YOLOv8-Detection | w8a8 | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 6.826 ms | 2 - 2 MB | NPU | -- |

## Installation

Install the package via pip:

```bash

pip install "qai-hub-models[yolov8-det]"

```

## Configure Qualcomm® AI Hub to run this model on a cloud-hosted device

Sign in to [Qualcomm® AI Hub](https://app.aihub.qualcomm.com/) with your
Qualcomm® ID. Once signed in, navigate to `Account -> Settings -> API Token`.

With this API token, you can configure your client to run models on the
cloud-hosted devices.

```bash

qai-hub configure --api_token API_TOKEN

```

Navigate to [docs](https://app.aihub.qualcomm.com/docs/) for more information.

## Demo off target

The package contains a simple end-to-end demo that downloads pre-trained

weights and runs this model on a sample input.

```bash

python -m qai_hub_models.models.yolov8_det.demo

```

The above demo runs a reference implementation of pre-processing, model
inference, and post-processing.

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, add the following to your cell instead of the command above.

```

%run -m qai_hub_models.models.yolov8_det.demo

```

### Run model on a cloud-hosted device

In addition to the demo, you can also run the model on a cloud-hosted Qualcomm®
device. The export script does the following:

* Runs a performance check on a cloud-hosted device.

* Downloads compiled assets that can be deployed on-device for Android.

* Runs an accuracy check between PyTorch and on-device outputs.

```bash

python -m qai_hub_models.models.yolov8_det.export

```

## How does this work?

This [export script](https://aihub.qualcomm.com/models/yolov8_det/qai_hub_models/models/YOLOv8-Detection/export.py)

leverages [Qualcomm® AI Hub](https://aihub.qualcomm.com/) to optimize, validate, and deploy this model

on-device. Let's go through each step below in detail:

Step 1: **Compile model for on-device deployment**

To compile a PyTorch model for on-device deployment, we first trace the model
in memory using `torch.jit.trace` and then call the `submit_compile_job` API.

```python

import torch

import qai_hub as hub
from qai_hub_models.models.yolov8_det import Model

# Load the model
torch_model = Model.from_pretrained()

# Device
device = hub.Device("Samsung Galaxy S24")

# Trace model
input_spec = torch_model.get_input_spec()
sample_inputs = torch_model.sample_inputs()
pt_model = torch.jit.trace(
    torch_model, [torch.tensor(data[0]) for _, data in sample_inputs.items()]
)

# Compile model on a specific device
compile_job = hub.submit_compile_job(
    model=pt_model,
    device=device,
    input_specs=input_spec,
)

# Get target model to run on-device
target_model = compile_job.get_target_model()

```

Step 2: **Performance profiling on cloud-hosted device**

After compiling the model in step 1, you can profile it on-device using the
`target_model`. Note that this script runs the model on a device automatically
provisioned in the cloud. Once the job is submitted, you can navigate to the
provided job URL to view a variety of on-device performance metrics.

```python

profile_job = hub.submit_profile_job(
    model=target_model,
    device=device,
)

```

Step 3: **Verify on-device accuracy**

To verify the accuracy of the model on-device, you can run on-device inference
on sample input data on the same cloud-hosted device.

```python

input_data = torch_model.sample_inputs()
inference_job = hub.submit_inference_job(
    model=target_model,
    device=device,
    inputs=input_data,
)
on_device_output = inference_job.download_output_data()

```

With the output of the model, you can compute metrics such as PSNR and relative
error, or spot-check the output against the expected output.
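
As one way to make this comparison concrete, here is a minimal PSNR sketch in plain Python. It assumes both outputs have already been flattened into equal-length sequences of floats, and it takes the reference's peak magnitude as the signal peak. Those choices, and the names `psnr`, `torch_out`, and `device_out`, are illustrative assumptions and not part of `qai_hub`.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], candidate: Sequence[float]) -> float:
    """Peak signal-to-noise ratio in dB, using the reference's peak magnitude."""
    if len(reference) != len(candidate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical outputs
    peak = max(abs(r) for r in reference)
    if peak == 0.0:
        raise ValueError("reference is all zeros; PSNR is undefined")
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical flattened outputs standing in for PyTorch and on-device results.
torch_out = [0.0, 0.5, 1.0, 0.25]
device_out = [0.0, 0.5, 0.99, 0.26]
print(f"PSNR: {psnr(torch_out, device_out):.1f} dB")
```

A higher value means the on-device output is closer to the reference. What counts as acceptable depends on the model and the precision used.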

**Note**: This on-device profiling and inference requires access to Qualcomm®

AI Hub. [Sign up for access](https://myaccount.qualcomm.com/signup).

## Run demo on a cloud-hosted device

You can also run the demo on-device.

```bash

python -m qai_hub_models.models.yolov8_det.demo --eval-mode on-device

```

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, add the following to your cell instead of the command above.

```

%run -m qai_hub_models.models.yolov8_det.demo -- --eval-mode on-device

```

## Deploying compiled model to Android

The models can be deployed using multiple runtimes:

- TensorFlow Lite (`.tflite` export): [This tutorial](https://www.tensorflow.org/lite/android/quickstart) provides a guide to deploy the `.tflite` model in an Android application.

- QNN (`.so` export): This [sample app](https://docs.qualcomm.com/bundle/publicresource/topics/80-63442-50/sample_app.html) provides instructions on how to use the `.so` shared library in an Android application.

## View on Qualcomm® AI Hub

Get more details on YOLOv8-Detection's performance across various devices [here](https://aihub.qualcomm.com/models/yolov8_det).

Explore all available models on [Qualcomm® AI Hub](https://aihub.qualcomm.com/)

## License

* The license for the original implementation of YOLOv8-Detection can be found [here](https://github.com/ultralytics/ultralytics/blob/main/LICENSE).

* The license for the compiled assets for on-device deployment can be found [here](https://github.com/ultralytics/ultralytics/blob/main/LICENSE).

## References

* [Ultralytics YOLOv8 Docs: Object Detection](https://docs.ultralytics.com/tasks/detect/)

* [Source Model Implementation](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/models/yolo/detect)

## Community

* Join [our AI Hub Slack community](https://aihub.qualcomm.com/community/slack) to collaborate, post questions and learn more about on-device AI.

* For questions or feedback, please [reach out to us](mailto:ai-hub-support@qti.qualcomm.com).

|

Egor-N/blockassist-bc-vicious_stubby_bear_1756228446

|

Egor-N

| 2025-08-26T17:34:27Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"vicious stubby bear",

"arxiv:2504.07091",

"region:us"

] | null | 2025-08-26T17:34:23Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- vicious stubby bear

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

qualcomm/Yolo-v7

|

qualcomm

| 2025-08-26T17:34:27Z | 23 | 2 |

pytorch

|

[

"pytorch",

"real_time",

"android",

"object-detection",

"arxiv:2207.02696",

"license:other",

"region:us"

] |

object-detection

| 2024-02-25T22:57:07Z |

---

library_name: pytorch

license: other

tags:

- real_time

- android

pipeline_tag: object-detection

---

# Yolo-v7: Optimized for Mobile Deployment

## Real-time object detection optimized for mobile and edge

YoloV7 is a machine learning model that predicts bounding boxes and classes of objects in an image.

This model is an implementation of Yolo-v7 found [here](https://github.com/WongKinYiu/yolov7/).

This repository provides scripts to run Yolo-v7 on Qualcomm® devices.

More details on model performance across various devices can be found
[here](https://aihub.qualcomm.com/models/yolov7).

**WARNING**: The model assets are not readily available for download due to licensing restrictions.

### Model Details

- **Model Type:** Object detection

- **Model Stats:**
  - Model checkpoint: YoloV7 Tiny
  - Input resolution: 640x640
  - Number of parameters: 6.24M
  - Model size (float): 23.8 MB
  - Model size (w8a8): 6.23 MB
  - Model size (w8a16): 6.66 MB
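
As a rough sanity check on the sizes above, weight storage can be estimated as the number of parameters times the bits per weight. The sketch below assumes float weights are 32-bit and that "MB" here means 2^20 bytes. Neither is stated in the card, and the function name is hypothetical.

```python
def estimated_weight_size_mib(num_parameters: float, bits_per_weight: int) -> float:
    """Estimate weight storage in MiB (2**20 bytes), ignoring any non-weight data."""
    return num_parameters * bits_per_weight / 8 / 2**20


params = 6.24e6  # "Number of parameters: 6.24M" from the list above
print(f"32-bit weights: {estimated_weight_size_mib(params, 32):.1f} MiB")
print(f" 8-bit weights: {estimated_weight_size_mib(params, 8):.2f} MiB")
```

Under these assumptions the 32-bit estimate comes to about 23.8, which matches the listed float size. The 8-bit estimate (about 5.95) is somewhat below the listed 6.23 MB. The difference presumably comes from data other than 8-bit weights, though the card does not say.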

| Model | Precision | Device | Chipset | Target Runtime | Inference Time (ms) | Peak Memory Range (MB) | Primary Compute Unit | Target Model |

|---|---|---|---|---|---|---|---|---|

| Yolo-v7 | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | TFLITE | 24.69 ms | 1 - 118 MB | NPU | -- |

| Yolo-v7 | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 23.088 ms | 2 - 132 MB | NPU | -- |

| Yolo-v7 | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | TFLITE | 12.899 ms | 1 - 48 MB | NPU | -- |

| Yolo-v7 | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 13.39 ms | 5 - 46 MB | NPU | -- |

| Yolo-v7 | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | TFLITE | 9.495 ms | 0 - 100 MB | NPU | -- |

| Yolo-v7 | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 10.38 ms | 5 - 20 MB | NPU | -- |

| Yolo-v7 | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | TFLITE | 11.354 ms | 1 - 117 MB | NPU | -- |

| Yolo-v7 | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 10.527 ms | 1 - 129 MB | NPU | -- |

| Yolo-v7 | float | SA7255P ADP | Qualcomm® SA7255P | TFLITE | 24.69 ms | 1 - 118 MB | NPU | -- |

| Yolo-v7 | float | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 23.088 ms | 2 - 132 MB | NPU | -- |

| Yolo-v7 | float | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | TFLITE | 9.471 ms | 0 - 101 MB | NPU | -- |

| Yolo-v7 | float | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 10.404 ms | 5 - 32 MB | NPU | -- |

| Yolo-v7 | float | SA8295P ADP | Qualcomm® SA8295P | TFLITE | 14.446 ms | 1 - 40 MB | NPU | -- |

| Yolo-v7 | float | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 11.846 ms | 1 - 41 MB | NPU | -- |

| Yolo-v7 | float | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | TFLITE | 9.481 ms | 0 - 102 MB | NPU | -- |

| Yolo-v7 | float | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 10.419 ms | 5 - 20 MB | NPU | -- |

| Yolo-v7 | float | SA8775P ADP | Qualcomm® SA8775P | TFLITE | 11.354 ms | 1 - 117 MB | NPU | -- |

| Yolo-v7 | float | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 10.527 ms | 1 - 129 MB | NPU | -- |

| Yolo-v7 | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | TFLITE | 9.408 ms | 0 - 103 MB | NPU | -- |

| Yolo-v7 | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 10.451 ms | 5 - 22 MB | NPU | -- |

| Yolo-v7 | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 11.037 ms | 0 - 43 MB | NPU | -- |

| Yolo-v7 | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | TFLITE | 6.7 ms | 8 - 221 MB | NPU | -- |

| Yolo-v7 | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 6.033 ms | 5 - 315 MB | NPU | -- |

| Yolo-v7 | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 8.907 ms | 5 - 166 MB | NPU | -- |

| Yolo-v7 | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | TFLITE | 7.059 ms | 1 - 116 MB | NPU | -- |

| Yolo-v7 | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 5.88 ms | 5 - 130 MB | NPU | -- |

| Yolo-v7 | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 7.724 ms | 5 - 132 MB | NPU | -- |

| Yolo-v7 | float | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 11.164 ms | 204 - 204 MB | NPU | -- |

| Yolo-v7 | float | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 11.733 ms | 9 - 9 MB | NPU | -- |

| Yolo-v7 | w8a16 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 18.124 ms | 2 - 37 MB | NPU | -- |

| Yolo-v7 | w8a16 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 12.361 ms | 2 - 55 MB | NPU | -- |

| Yolo-v7 | w8a16 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 9.425 ms | 2 - 17 MB | NPU | -- |

| Yolo-v7 | w8a16 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 10.263 ms | 1 - 37 MB | NPU | -- |

| Yolo-v7 | w8a16 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | QNN_DLC | 24.212 ms | 2 - 43 MB | NPU | -- |

| Yolo-v7 | w8a16 | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 18.124 ms | 2 - 37 MB | NPU | -- |

| Yolo-v7 | w8a16 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 9.453 ms | 2 - 16 MB | NPU | -- |

| Yolo-v7 | w8a16 | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 11.967 ms | 2 - 52 MB | NPU | -- |

| Yolo-v7 | w8a16 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 9.44 ms | 2 - 16 MB | NPU | -- |

| Yolo-v7 | w8a16 | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 10.263 ms | 1 - 37 MB | NPU | -- |

| Yolo-v7 | w8a16 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 9.432 ms | 2 - 14 MB | NPU | -- |

| Yolo-v7 | w8a16 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 5.126 ms | 2 - 51 MB | NPU | -- |

| Yolo-v7 | w8a16 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 4.967 ms | 2 - 46 MB | NPU | -- |

| Yolo-v7 | w8a16 | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 7.928 ms | 12 - 12 MB | NPU | -- |

| Yolo-v7 | w8a8 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | TFLITE | 5.592 ms | 0 - 27 MB | NPU | -- |

| Yolo-v7 | w8a8 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 4.299 ms | 1 - 30 MB | NPU | -- |

| Yolo-v7 | w8a8 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | TFLITE | 3.283 ms | 0 - 48 MB | NPU | -- |

| Yolo-v7 | w8a8 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 2.749 ms | 1 - 44 MB | NPU | -- |

| Yolo-v7 | w8a8 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | TFLITE | 2.621 ms | 0 - 32 MB | NPU | -- |

| Yolo-v7 | w8a8 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 1.924 ms | 1 - 14 MB | NPU | -- |

| Yolo-v7 | w8a8 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | TFLITE | 3.049 ms | 0 - 27 MB | NPU | -- |

| Yolo-v7 | w8a8 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 2.367 ms | 1 - 30 MB | NPU | -- |

| Yolo-v7 | w8a8 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | TFLITE | 20.597 ms | 8 - 57 MB | NPU | -- |

| Yolo-v7 | w8a8 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | QNN_DLC | 8.56 ms | 1 - 39 MB | NPU | -- |

| Yolo-v7 | w8a8 | RB5 (Proxy) | Qualcomm® QCS8250 (Proxy) | TFLITE | 128.83 ms | 15 - 45 MB | GPU | -- |

| Yolo-v7 | w8a8 | SA7255P ADP | Qualcomm® SA7255P | TFLITE | 5.592 ms | 0 - 27 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 4.299 ms | 1 - 30 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | TFLITE | 2.649 ms | 0 - 31 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 1.911 ms | 1 - 14 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA8295P ADP | Qualcomm® SA8295P | TFLITE | 4.277 ms | 0 - 36 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 3.232 ms | 1 - 37 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | TFLITE | 2.644 ms | 0 - 33 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 1.913 ms | 1 - 14 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA8775P ADP | Qualcomm® SA8775P | TFLITE | 3.049 ms | 0 - 27 MB | NPU | -- |

| Yolo-v7 | w8a8 | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 2.367 ms | 1 - 30 MB | NPU | -- |

| Yolo-v7 | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | TFLITE | 2.652 ms | 0 - 9 MB | NPU | -- |

| Yolo-v7 | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 1.913 ms | 1 - 15 MB | NPU | -- |

| Yolo-v7 | w8a8 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 4.06 ms | 0 - 62 MB | NPU | -- |

| Yolo-v7 | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | TFLITE | 1.755 ms | 0 - 46 MB | NPU | -- |

| Yolo-v7 | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 1.292 ms | 1 - 48 MB | NPU | -- |

| Yolo-v7 | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 3.014 ms | 0 - 258 MB | NPU | -- |

| Yolo-v7 | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | TFLITE | 1.607 ms | 0 - 33 MB | NPU | -- |

| Yolo-v7 | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 1.171 ms | 1 - 38 MB | NPU | -- |

| Yolo-v7 | w8a8 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 2.362 ms | 1 - 140 MB | NPU | -- |

| Yolo-v7 | w8a8 | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 2.172 ms | 22 - 22 MB | NPU | -- |

| Yolo-v7 | w8a8 | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 4.592 ms | 5 - 5 MB | NPU | -- |
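
The rows above can also be compared programmatically. The sketch below is illustrative only: it parses rows in the same pipe-delimited layout (two rows copied from the table) and computes the ratio of float to w8a8 latency on one device and runtime. The helper name `parse_latency_rows` is hypothetical.

```python
from typing import Dict, Tuple


def parse_latency_rows(table: str) -> Dict[Tuple[str, str, str], float]:
    """Map (precision, device, runtime) to latency in ms for each data row."""
    latencies = {}
    for line in table.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        # Data rows carry a latency such as "6.033 ms" in the sixth column;
        # header, separator, and blank lines do not.
        if len(cells) < 6 or not cells[5].endswith(" ms"):
            continue
        precision, device, runtime = cells[1], cells[2], cells[4]
        latencies[(precision, device, runtime)] = float(cells[5].split()[0])
    return latencies


# Two rows copied from the table above.
rows = """
| Yolo-v7 | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 6.033 ms | 5 - 315 MB | NPU | -- |
| Yolo-v7 | w8a8 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 1.292 ms | 1 - 48 MB | NPU | -- |
"""

latencies = parse_latency_rows(rows)
float_ms = latencies[("float", "Samsung Galaxy S24", "QNN_DLC")]
w8a8_ms = latencies[("w8a8", "Samsung Galaxy S24", "QNN_DLC")]
speedup = float_ms / w8a8_ms
print(f"w8a8 is {speedup:.2f}x faster than float on this device and runtime")
```

The same lookup works for any other precision, device, and runtime combination that appears in the table.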

## Installation

Install the package via pip:

```bash

pip install "qai-hub-models[yolov7]"

```

## Configure Qualcomm® AI Hub to run this model on a cloud-hosted device

Sign in to [Qualcomm® AI Hub](https://app.aihub.qualcomm.com/) with your
Qualcomm® ID. Once signed in, navigate to `Account -> Settings -> API Token`.

With this API token, you can configure your client to run models on the
cloud-hosted devices.

```bash

qai-hub configure --api_token API_TOKEN

```

Navigate to [docs](https://app.aihub.qualcomm.com/docs/) for more information.

## Demo off target

The package contains a simple end-to-end demo that downloads pre-trained

weights and runs this model on a sample input.

```bash

python -m qai_hub_models.models.yolov7.demo

```

The above demo runs a reference implementation of pre-processing, model
inference, and post-processing.

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, add the following to your cell instead of the command above.

```

%run -m qai_hub_models.models.yolov7.demo

```

### Run model on a cloud-hosted device

In addition to the demo, you can also run the model on a cloud-hosted Qualcomm®
device. The export script does the following:

* Runs a performance check on a cloud-hosted device.

* Downloads compiled assets that can be deployed on-device for Android.

* Runs an accuracy check between PyTorch and on-device outputs.

```bash

python -m qai_hub_models.models.yolov7.export

```

## How does this work?

This [export script](https://aihub.qualcomm.com/models/yolov7/qai_hub_models/models/Yolo-v7/export.py)

leverages [Qualcomm® AI Hub](https://aihub.qualcomm.com/) to optimize, validate, and deploy this model

on-device. Let's go through each step below in detail:

Step 1: **Compile model for on-device deployment**

To compile a PyTorch model for on-device deployment, we first trace the model
in memory using `torch.jit.trace` and then call the `submit_compile_job` API.

```python

import torch

import qai_hub as hub
from qai_hub_models.models.yolov7 import Model

# Load the model
torch_model = Model.from_pretrained()

# Device
device = hub.Device("Samsung Galaxy S24")

# Trace model
input_spec = torch_model.get_input_spec()
sample_inputs = torch_model.sample_inputs()
pt_model = torch.jit.trace(
    torch_model, [torch.tensor(data[0]) for _, data in sample_inputs.items()]
)

# Compile model on a specific device
compile_job = hub.submit_compile_job(
    model=pt_model,
    device=device,
    input_specs=input_spec,
)

# Get target model to run on-device
target_model = compile_job.get_target_model()

```

Step 2: **Performance profiling on cloud-hosted device**

After compiling the model in step 1, you can profile it on-device using the
`target_model`. Note that this script runs the model on a device automatically
provisioned in the cloud. Once the job is submitted, you can navigate to the
provided job URL to view a variety of on-device performance metrics.

```python

profile_job = hub.submit_profile_job(
    model=target_model,
    device=device,
)

```

Step 3: **Verify on-device accuracy**

To verify the accuracy of the model on-device, you can run on-device inference
on sample input data on the same cloud-hosted device.

```python

input_data = torch_model.sample_inputs()
inference_job = hub.submit_inference_job(
    model=target_model,
    device=device,
    inputs=input_data,
)
on_device_output = inference_job.download_output_data()

```

With the output of the model, you can compute metrics such as PSNR and relative
error, or spot-check the output against the expected output.
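
To illustrate the relative-error option, here is a minimal sketch of a relative L2 error in plain Python. It assumes the PyTorch and on-device outputs have been flattened into equal-length sequences of floats. How to obtain those from `download_output_data()` is not shown, and the names `relative_l2_error`, `torch_out`, and `device_out` are hypothetical.

```python
import math
from typing import Sequence


def relative_l2_error(reference: Sequence[float], candidate: Sequence[float]) -> float:
    """Return ||candidate - reference||_2 / ||reference||_2."""
    if len(reference) != len(candidate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    diff_norm = math.sqrt(sum((c - r) ** 2 for r, c in zip(reference, candidate)))
    ref_norm = math.sqrt(sum(r * r for r in reference))
    if ref_norm == 0.0:
        raise ValueError("reference is all zeros; relative error is undefined")
    return diff_norm / ref_norm


# Hypothetical flattened outputs standing in for PyTorch and on-device results.
torch_out = [3.0, 4.0]
device_out = [3.0, 4.5]
print(f"relative L2 error: {relative_l2_error(torch_out, device_out):.3f}")
```

A value near zero means the two outputs agree closely. Quantized precisions such as w8a8 can be expected to show a larger error than float, so any threshold should be chosen per precision.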

**Note**: This on-device profiling and inference requires access to Qualcomm®

AI Hub. [Sign up for access](https://myaccount.qualcomm.com/signup).

## Run demo on a cloud-hosted device

You can also run the demo on-device.

```bash

python -m qai_hub_models.models.yolov7.demo --eval-mode on-device

```

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, add the following to your cell instead of the command above.

```

%run -m qai_hub_models.models.yolov7.demo -- --eval-mode on-device

```

## Deploying compiled model to Android

The models can be deployed using multiple runtimes:

- TensorFlow Lite (`.tflite` export): [This tutorial](https://www.tensorflow.org/lite/android/quickstart) provides a guide to deploy the `.tflite` model in an Android application.

- QNN (`.so` export): This [sample app](https://docs.qualcomm.com/bundle/publicresource/topics/80-63442-50/sample_app.html) provides instructions on how to use the `.so` shared library in an Android application.

## View on Qualcomm® AI Hub

Get more details on Yolo-v7's performance across various devices [here](https://aihub.qualcomm.com/models/yolov7).

Explore all available models on [Qualcomm® AI Hub](https://aihub.qualcomm.com/)

## License

* The license for the original implementation of Yolo-v7 can be found [here](https://github.com/WongKinYiu/yolov7/blob/main/LICENSE.md).

* The license for the compiled assets for on-device deployment can be found [here](https://github.com/WongKinYiu/yolov7/blob/main/LICENSE.md).

## References

* [YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors](https://arxiv.org/abs/2207.02696)

* [Source Model Implementation](https://github.com/WongKinYiu/yolov7/)

## Community

* Join [our AI Hub Slack community](https://aihub.qualcomm.com/community/slack) to collaborate, post questions and learn more about on-device AI.

* For questions or feedback, please [reach out to us](mailto:ai-hub-support@qti.qualcomm.com).

|

qualcomm/Yolo-v5

|

qualcomm

| 2025-08-26T17:34:21Z | 5 | 0 |

pytorch

|

[

"pytorch",

"real_time",

"android",

"object-detection",

"license:other",

"region:us"

] |

object-detection

| 2025-01-23T02:39:47Z |

---

library_name: pytorch

license: other

tags:

- real_time

- android

pipeline_tag: object-detection

---

# Yolo-v5: Optimized for Mobile Deployment

## Real-time object detection optimized for mobile and edge

YoloV5 is a machine learning model that predicts bounding boxes and classes of objects in an image.

This model is an implementation of Yolo-v5 found [here](https://github.com/ultralytics/yolov5).

This repository provides scripts to run Yolo-v5 on Qualcomm® devices.

More details on model performance across various devices can be found
[here](https://aihub.qualcomm.com/models/yolov5).

**WARNING**: The model assets are not readily available for download due to licensing restrictions.

### Model Details

- **Model Type:** Object detection

- **Model Stats:**
  - Model checkpoint: YoloV5-M
  - Input resolution: 640x640
  - Number of parameters: 21.2M
  - Model size (float): 81.1 MB
  - Model size (w8a16): 21.8 MB

| Model | Precision | Device | Chipset | Target Runtime | Inference Time (ms) | Peak Memory Range (MB) | Primary Compute Unit | Target Model |

|---|---|---|---|---|---|---|---|---|

| Yolo-v5 | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | TFLITE | 64.075 ms | 1 - 125 MB | NPU | -- |

| Yolo-v5 | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 63.706 ms | 3 - 150 MB | NPU | -- |

| Yolo-v5 | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | TFLITE | 34.171 ms | 1 - 92 MB | NPU | -- |

| Yolo-v5 | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 35.402 ms | 5 - 69 MB | NPU | -- |

| Yolo-v5 | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | TFLITE | 19.266 ms | 0 - 78 MB | NPU | -- |

| Yolo-v5 | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 18.739 ms | 5 - 38 MB | NPU | -- |

| Yolo-v5 | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | TFLITE | 23.542 ms | 1 - 126 MB | NPU | -- |

| Yolo-v5 | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 22.989 ms | 2 - 137 MB | NPU | -- |

| Yolo-v5 | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | TFLITE | 19.104 ms | 0 - 54 MB | NPU | -- |

| Yolo-v5 | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 18.823 ms | 5 - 42 MB | NPU | -- |

| Yolo-v5 | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 24.772 ms | 0 - 128 MB | NPU | -- |

| Yolo-v5 | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | TFLITE | 14.702 ms | 0 - 230 MB | NPU | -- |

| Yolo-v5 | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 14.648 ms | 5 - 159 MB | NPU | -- |

| Yolo-v5 | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 18.075 ms | 3 - 144 MB | NPU | -- |

| Yolo-v5 | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | TFLITE | 11.983 ms | 0 - 103 MB | NPU | -- |

| Yolo-v5 | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 12.034 ms | 5 - 145 MB | NPU | -- |

| Yolo-v5 | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 16.338 ms | 5 - 135 MB | NPU | -- |

| Yolo-v5 | float | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 18.144 ms | 5 - 5 MB | NPU | -- |

| Yolo-v5 | float | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 25.903 ms | 39 - 39 MB | NPU | -- |

| Yolo-v5 | w8a16 | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 24.885 ms | 2 - 89 MB | NPU | -- |

| Yolo-v5 | w8a16 | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 16.103 ms | 2 - 90 MB | NPU | -- |

| Yolo-v5 | w8a16 | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 11.919 ms | 2 - 32 MB | NPU | -- |

| Yolo-v5 | w8a16 | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 12.148 ms | 2 - 92 MB | NPU | -- |

| Yolo-v5 | w8a16 | RB3 Gen 2 (Proxy) | Qualcomm® QCS6490 (Proxy) | QNN_DLC | 52.554 ms | 2 - 97 MB | NPU | -- |

| Yolo-v5 | w8a16 | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 11.941 ms | 2 - 31 MB | NPU | -- |

| Yolo-v5 | w8a16 | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 7.973 ms | 2 - 102 MB | NPU | -- |

| Yolo-v5 | w8a16 | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 6.171 ms | 2 - 100 MB | NPU | -- |

| Yolo-v5 | w8a16 | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 12.754 ms | 31 - 31 MB | NPU | -- |

## Installation

Install the package via pip:

```bash

pip install "qai-hub-models[yolov5]"

```

## Configure Qualcomm® AI Hub to run this model on a cloud-hosted device

Sign in to [Qualcomm® AI Hub](https://app.aihub.qualcomm.com/) with your
Qualcomm® ID. Once signed in, navigate to `Account -> Settings -> API Token`.

With this API token, you can configure your client to run models on the
cloud-hosted devices.

```bash

qai-hub configure --api_token API_TOKEN

```

Navigate to [docs](https://app.aihub.qualcomm.com/docs/) for more information.

## Demo off target

The package contains a simple end-to-end demo that downloads pre-trained

weights and runs this model on a sample input.

```bash

python -m qai_hub_models.models.yolov5.demo

```

The above demo runs a reference implementation of pre-processing, model
inference, and post-processing.

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, add the following to your cell instead of the command above.

```

%run -m qai_hub_models.models.yolov5.demo

```

### Run model on a cloud-hosted device

In addition to the demo, you can also run the model on a cloud-hosted Qualcomm®
device. The export script does the following:

* Runs a performance check on a cloud-hosted device.

* Downloads compiled assets that can be deployed on-device for Android.

* Runs an accuracy check between PyTorch and on-device outputs.

```bash

python -m qai_hub_models.models.yolov5.export

```

## How does this work?

This [export script](https://aihub.qualcomm.com/models/yolov5/qai_hub_models/models/Yolo-v5/export.py)

leverages [Qualcomm® AI Hub](https://aihub.qualcomm.com/) to optimize, validate, and deploy this model

on-device. Let's go through each step below in detail:

Step 1: **Compile model for on-device deployment**

To compile a PyTorch model for on-device deployment, we first trace the model
in memory using `torch.jit.trace` and then call the `submit_compile_job` API.

```python

import torch

import qai_hub as hub
from qai_hub_models.models.yolov5 import Model

# Load the model
torch_model = Model.from_pretrained()

# Device
device = hub.Device("Samsung Galaxy S24")

# Trace model
input_spec = torch_model.get_input_spec()
sample_inputs = torch_model.sample_inputs()
pt_model = torch.jit.trace(
    torch_model, [torch.tensor(data[0]) for _, data in sample_inputs.items()]
)

# Compile model on a specific device
compile_job = hub.submit_compile_job(
    model=pt_model,
    device=device,
    input_specs=input_spec,
)

# Get target model to run on-device
target_model = compile_job.get_target_model()

```

Step 2: **Performance profiling on cloud-hosted device**

After compiling the model in step 1, you can profile it on-device using the
`target_model`. Note that this script runs the model on a device automatically
provisioned in the cloud. Once the job is submitted, you can navigate to the
provided job URL to view a variety of on-device performance metrics.

```python

profile_job = hub.submit_profile_job(
    model=target_model,
    device=device,
)

```

Step 3: **Verify on-device accuracy**

To verify the accuracy of the model on-device, you can run on-device inference
on sample input data on the same cloud-hosted device.

```python

input_data = torch_model.sample_inputs()
inference_job = hub.submit_inference_job(
    model=target_model,
    device=device,
    inputs=input_data,
)
on_device_output = inference_job.download_output_data()

```

With the output of the model, you can compute metrics such as PSNR and relative
error, or spot check the output against the expected output.
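As a rough illustration of such a check, the sketch below computes PSNR and the maximum relative error between a reference output and an on-device output. It assumes both outputs have already been flattened to plain sequences of floats; the helper names (`psnr`, `max_relative_error`) and the sample values are hypothetical and are not part of `qai_hub`. The peak value defaults to the largest absolute reference value, which is one common convention rather than the only one.

```python
import math


def psnr(reference, candidate, peak=None):
    """Peak signal-to-noise ratio in dB between two equal-length float sequences."""
    if len(reference) != len(candidate):
        raise ValueError("reference and candidate must have the same length")
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if mse == 0.0:
        return math.inf  # outputs are identical
    if peak is None:
        # Assumption: use the largest absolute reference value as the signal peak.
        peak = max(abs(r) for r in reference)
    return 10.0 * math.log10(peak ** 2 / mse)


def max_relative_error(reference, candidate, eps=1e-12):
    """Largest element-wise |r - c| / |r|, with eps guarding against division by zero."""
    return max(abs(r - c) / (abs(r) + eps) for r, c in zip(reference, candidate))


# Hypothetical values standing in for the PyTorch and on-device outputs.
torch_out = [0.10, 0.50, 0.90, 1.00]
device_out = [0.11, 0.49, 0.90, 1.01]

psnr_db = psnr(torch_out, device_out)
rel_err = max_relative_error(torch_out, device_out)
print(f"PSNR: {psnr_db:.2f} dB, max relative error: {rel_err:.3f}")
```

A higher PSNR and a lower relative error both indicate closer agreement; what counts as acceptable depends on the model and the downstream task.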

**Note**: This on-device profiling and inference requires access to Qualcomm®

AI Hub. [Sign up for access](https://myaccount.qualcomm.com/signup).

## Run demo on a cloud-hosted device

You can also run the demo on-device.

```bash

python -m qai_hub_models.models.yolov5.demo --eval-mode on-device

```

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, please add the following to your cell (instead of the above).

```

%run -m qai_hub_models.models.yolov5.demo -- --eval-mode on-device

```

## Deploying compiled model to Android

The models can be deployed using multiple runtimes:

- TensorFlow Lite (`.tflite` export): [This
  tutorial](https://www.tensorflow.org/lite/android/quickstart) provides a
  guide to deploy the .tflite model in an Android application.
- QNN (`.so` export): This [sample
  app](https://docs.qualcomm.com/bundle/publicresource/topics/80-63442-50/sample_app.html)
  provides instructions on how to use the `.so` shared library in an Android application.

## View on Qualcomm® AI Hub

Get more details on Yolo-v5's performance across various devices [here](https://aihub.qualcomm.com/models/yolov5).

Explore all available models on [Qualcomm® AI Hub](https://aihub.qualcomm.com/).

## License

* The license for the original implementation of Yolo-v5 can be found
  [here](https://github.com/ultralytics/yolov5?tab=AGPL-3.0-1-ov-file#readme).
* The license for the compiled assets for on-device deployment can be found [here](https://github.com/ultralytics/yolov5?tab=AGPL-3.0-1-ov-file#readme).

## References

* [Source Model Implementation](https://github.com/ultralytics/yolov5)

## Community

* Join [our AI Hub Slack community](https://aihub.qualcomm.com/community/slack) to collaborate, post questions and learn more about on-device AI.

* For questions or feedback, please [reach out to us](mailto:ai-hub-support@qti.qualcomm.com).

|

qualcomm/YamNet

|

qualcomm

| 2025-08-26T17:34:08Z | 336 | 1 |

pytorch

|

[

"pytorch",

"tflite",

"real_time",

"android",

"audio-classification",

"arxiv:1704.04861",

"license:other",

"region:us"

] |

audio-classification

| 2025-03-14T02:22:13Z |

---

library_name: pytorch

license: other

tags:

- real_time

- android

pipeline_tag: audio-classification

---

# YamNet: Optimized for Mobile Deployment

## Audio Event classification Model

An audio event classifier trained on the AudioSet dataset to predict audio events from the AudioSet ontology employing the Mobilenet_v1 depthwise-separable convolution architecture.

This model is an implementation of YamNet found [here](https://github.com/w-hc/torch_audioset).

This repository provides scripts to run YamNet on Qualcomm® devices.

More details on model performance across various devices can be found
[here](https://aihub.qualcomm.com/models/yamnet).

### Model Details

- **Model Type:** Audio classification
- **Model Stats:**
  - Model checkpoint: yamnet.pth
  - Input resolution: 1x1x96x64
  - Number of parameters: 3.73M
  - Model size (float): 14.2 MB
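As a quick sanity check, the float model size follows approximately from the parameter count. The sketch below assumes that every parameter is stored as a 4-byte float32 and that the reported size is in binary megabytes (MiB); neither assumption is stated in the card, and the helper name is illustrative.

```python
# Assumptions (not stated in the card): float32 weights, size reported in MiB.
NUM_PARAMS = 3.73e6      # "Number of parameters: 3.73M"
BYTES_PER_PARAM = 4      # float32


def float_model_size_mib(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Estimate the weight storage of a model in MiB."""
    return num_params * bytes_per_param / 2**20


estimated_size = float_model_size_mib(NUM_PARAMS)
print(f"Estimated size: {estimated_size:.1f} MiB")
```

Under these assumptions the estimate agrees with the 14.2 MB listed above to one decimal place.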

| Model | Precision | Device | Chipset | Target Runtime | Inference Time (ms) | Peak Memory Range (MB) | Primary Compute Unit | Target Model |
|---|---|---|---|---|---|---|---|---|
| YamNet | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | TFLITE | 0.668 ms | 0 - 22 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | QCS8275 (Proxy) | Qualcomm® QCS8275 (Proxy) | QNN_DLC | 0.649 ms | 0 - 16 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | TFLITE | 0.319 ms | 0 - 34 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | QCS8450 (Proxy) | Qualcomm® QCS8450 (Proxy) | QNN_DLC | 0.346 ms | 0 - 23 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | TFLITE | 0.211 ms | 0 - 72 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | QCS8550 (Proxy) | Qualcomm® QCS8550 (Proxy) | QNN_DLC | 0.222 ms | 0 - 51 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | TFLITE | 0.368 ms | 0 - 22 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | QCS9075 (Proxy) | Qualcomm® QCS9075 (Proxy) | QNN_DLC | 0.359 ms | 0 - 16 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | SA7255P ADP | Qualcomm® SA7255P | TFLITE | 0.668 ms | 0 - 22 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | SA7255P ADP | Qualcomm® SA7255P | QNN_DLC | 0.649 ms | 0 - 16 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | TFLITE | 0.22 ms | 0 - 70 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | SA8255 (Proxy) | Qualcomm® SA8255P (Proxy) | QNN_DLC | 0.221 ms | 0 - 51 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | SA8295P ADP | Qualcomm® SA8295P | TFLITE | 0.547 ms | 0 - 28 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | SA8295P ADP | Qualcomm® SA8295P | QNN_DLC | 0.51 ms | 0 - 24 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | TFLITE | 0.207 ms | 0 - 73 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | SA8650 (Proxy) | Qualcomm® SA8650P (Proxy) | QNN_DLC | 0.212 ms | 0 - 49 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | SA8775P ADP | Qualcomm® SA8775P | TFLITE | 0.368 ms | 0 - 22 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | SA8775P ADP | Qualcomm® SA8775P | QNN_DLC | 0.359 ms | 0 - 16 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | TFLITE | 0.212 ms | 0 - 73 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | QNN_DLC | 0.204 ms | 0 - 50 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | Samsung Galaxy S23 | Snapdragon® 8 Gen 2 Mobile | ONNX | 0.311 ms | 0 - 46 MB | NPU | [YamNet.onnx.zip](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.onnx.zip) |
| YamNet | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | TFLITE | 0.173 ms | 0 - 34 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | QNN_DLC | 0.176 ms | 0 - 27 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | Samsung Galaxy S24 | Snapdragon® 8 Gen 3 Mobile | ONNX | 0.251 ms | 0 - 30 MB | NPU | [YamNet.onnx.zip](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.onnx.zip) |
| YamNet | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | TFLITE | 0.174 ms | 0 - 29 MB | NPU | [YamNet.tflite](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.tflite) |
| YamNet | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | QNN_DLC | 0.164 ms | 0 - 21 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | Snapdragon 8 Elite QRD | Snapdragon® 8 Elite Mobile | ONNX | 0.276 ms | 0 - 16 MB | NPU | [YamNet.onnx.zip](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.onnx.zip) |
| YamNet | float | Snapdragon X Elite CRD | Snapdragon® X Elite | QNN_DLC | 0.269 ms | 56 - 56 MB | NPU | [YamNet.dlc](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.dlc) |
| YamNet | float | Snapdragon X Elite CRD | Snapdragon® X Elite | ONNX | 0.3 ms | 8 - 8 MB | NPU | [YamNet.onnx.zip](https://huggingface.co/qualcomm/YamNet/blob/main/YamNet.onnx.zip) |
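To compare runtimes for a given device, the inference times in the table can be collected and converted into an approximate throughput. The sketch below copies the three Samsung Galaxy S24 rows from the table; the helper names are illustrative, and the throughput figure assumes back-to-back single-sample inference with no pre- or post-processing overhead, so treat it as an upper bound.

```python
# Inference times (ms) for Samsung Galaxy S24, copied from the table.
s24_latency_ms = {"TFLITE": 0.173, "QNN_DLC": 0.176, "ONNX": 0.251}


def fastest_runtime(latency_ms: dict) -> tuple:
    """Return the (runtime, latency_ms) pair with the lowest latency."""
    return min(latency_ms.items(), key=lambda item: item[1])


def throughput_per_second(latency_ms: float) -> float:
    """Idealized inferences per second for a given per-inference latency."""
    return 1000.0 / latency_ms


runtime, latency = fastest_runtime(s24_latency_ms)
print(f"{runtime}: {latency} ms, about {throughput_per_second(latency):.0f} inferences/s")
```

The differences between TFLITE and QNN_DLC on this device are a few microseconds, so the choice of runtime will often be driven by integration constraints rather than latency alone.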

## Installation

Install the package via pip:

```bash

pip install "qai-hub-models[yamnet]"

```

## Configure Qualcomm® AI Hub to run this model on a cloud-hosted device

Sign in to [Qualcomm® AI Hub](https://app.aihub.qualcomm.com/) with your
Qualcomm® ID. Once signed in, navigate to `Account -> Settings -> API Token`.

With this API token, you can configure your client to run models on
cloud-hosted devices.

```bash

qai-hub configure --api_token API_TOKEN

```

Navigate to [docs](https://app.aihub.qualcomm.com/docs/) for more information.

## Demo off target

The package contains a simple end-to-end demo that downloads pre-trained

weights and runs this model on a sample input.

```bash

python -m qai_hub_models.models.yamnet.demo

```

The above demo runs a reference implementation of pre-processing, model
inference, and post-processing.

**NOTE**: If you are running in a Jupyter Notebook or a Google Colab-like
environment, please add the following to your cell (instead of the above).

```

%run -m qai_hub_models.models.yamnet.demo

```

### Run model on a cloud-hosted device

In addition to the demo, you can also run the model on a cloud-hosted Qualcomm®

device. This script does the following: