modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-07 18:30:29

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 544

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-07 18:30:28

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

jguevara/Reinforce-PixelCopter-demo

|

jguevara

| 2023-11-22T04:26:19Z | 0 | 0 | null |

[

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-11-22T04:26:18Z |

---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-PixelCopter-demo

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: -5.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0** .

To learn to use this model and train yours check Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction

|

livingbox/minimalist-style

|

livingbox

| 2023-11-22T04:13:32Z | 0 | 0 |

diffusers

|

[

"diffusers",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-11-22T04:07:49Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### minimalist_style Dreambooth model trained by livingbox with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

LoneStriker/Yarn-Llama-2-70b-32k-2.4bpw-h6-exl2

|

LoneStriker

| 2023-11-22T04:07:11Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"custom_code",

"en",

"dataset:emozilla/yarn-train-tokenized-8k-llama",

"arxiv:2309.00071",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-22T03:54:33Z |

---

metrics:

- perplexity

library_name: transformers

license: apache-2.0

language:

- en

datasets:

- emozilla/yarn-train-tokenized-8k-llama

---

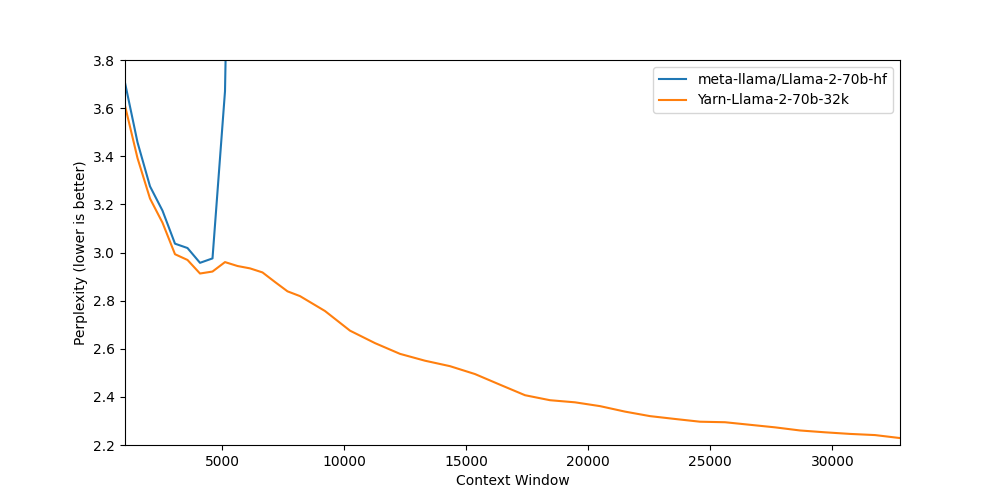

# Model Card: Yarn-Llama-2-70b-32k

[Preprint (arXiv)](https://arxiv.org/abs/2309.00071)

[GitHub](https://github.com/jquesnelle/yarn)

The authors would like to thank [LAION AI](https://laion.ai/) for their support of compute for this model.

It was trained on the [JUWELS](https://www.fz-juelich.de/en/ias/jsc/systems/supercomputers/juwels) supercomputer.

## Model Description

Nous-Yarn-Llama-2-70b-32k is a state-of-the-art language model for long context, further pretrained on long context data for 400 steps using the YaRN extension method.

It is an extension of [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) and supports a 32k token context window.

To use, pass `trust_remote_code=True` when loading the model, for example

```python

model = AutoModelForCausalLM.from_pretrained("NousResearch/Yarn-Llama-2-70b-32k",

use_flash_attention_2=True,

torch_dtype=torch.bfloat16,

device_map="auto",

trust_remote_code=True)

```

In addition you will need to use the latest version of `transformers` (until 4.35 comes out)

```sh

pip install git+https://github.com/huggingface/transformers

```

## Benchmarks

Long context benchmarks:

| Model | Context Window | 1k PPL | 2k PPL | 4k PPL | 8k PPL | 16k PPL | 32k PPL |

|-------|---------------:|-------:|--------:|------:|-------:|--------:|--------:|

| [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) | 4k | 3.71 | 3.27 | 2.96 | - | - | - |

| [Yarn-Llama-2-70b-32k](https://huggingface.co/NousResearch/Yarn-Llama-2-70b-32k) | 32k | 3.61 | 3.22 | 2.91 | 2.82 | 2.45 | 2.23 |

Short context benchmarks showing that quality degradation is minimal:

| Model | Context Window | ARC-c | MMLU | Truthful QA |

|-------|---------------:|------:|-----:|------------:|

| [Llama-2-70b-hf](meta-llama/Llama-2-70b-hf) | 4k | 67.32 | 69.83 | 44.92 |

| [Yarn-Llama-2-70b-32k](https://huggingface.co/NousResearch/Yarn-Llama-2-70b-32k) | 32k | 67.41 | 68.84 | 46.14 |

## Collaborators

- [bloc97](https://github.com/bloc97): Methods, paper and evals

- [@theemozilla](https://twitter.com/theemozilla): Methods, paper, model training, and evals

- [@EnricoShippole](https://twitter.com/EnricoShippole): Model training

- [honglu2875](https://github.com/honglu2875): Paper and evals

|

phuong-tk-nguyen/resnet-50-finetuned-cifar10

|

phuong-tk-nguyen

| 2023-11-22T04:04:52Z | 40 | 0 |

transformers

|

[

"transformers",

"safetensors",

"resnet",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"base_model:microsoft/resnet-50",

"base_model:finetune:microsoft/resnet-50",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-11-22T03:40:31Z |

---

license: apache-2.0

base_model: microsoft/resnet-50

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: resnet-50-finetuned-cifar10

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.5076

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# resnet-50-finetuned-cifar10

This model is a fine-tuned version of [microsoft/resnet-50](https://huggingface.co/microsoft/resnet-50) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 1.9060

- Accuracy: 0.5076

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.3058 | 0.03 | 10 | 2.3106 | 0.0794 |

| 2.3033 | 0.06 | 20 | 2.3026 | 0.0892 |

| 2.3012 | 0.09 | 30 | 2.2971 | 0.1042 |

| 2.2914 | 0.11 | 40 | 2.2890 | 0.1254 |

| 2.2869 | 0.14 | 50 | 2.2816 | 0.16 |

| 2.2785 | 0.17 | 60 | 2.2700 | 0.1902 |

| 2.2712 | 0.2 | 70 | 2.2602 | 0.2354 |

| 2.2619 | 0.23 | 80 | 2.2501 | 0.2688 |

| 2.2509 | 0.26 | 90 | 2.2383 | 0.3022 |

| 2.2382 | 0.28 | 100 | 2.2229 | 0.3268 |

| 2.2255 | 0.31 | 110 | 2.2084 | 0.353 |

| 2.2164 | 0.34 | 120 | 2.1939 | 0.3608 |

| 2.2028 | 0.37 | 130 | 2.1829 | 0.3668 |

| 2.1977 | 0.4 | 140 | 2.1646 | 0.401 |

| 2.1844 | 0.43 | 150 | 2.1441 | 0.4244 |

| 2.1689 | 0.45 | 160 | 2.1323 | 0.437 |

| 2.1555 | 0.48 | 170 | 2.1159 | 0.4462 |

| 2.1448 | 0.51 | 180 | 2.0992 | 0.45 |

| 2.1313 | 0.54 | 190 | 2.0810 | 0.4642 |

| 2.1189 | 0.57 | 200 | 2.0589 | 0.4708 |

| 2.1111 | 0.6 | 210 | 2.0430 | 0.4828 |

| 2.0905 | 0.63 | 220 | 2.0288 | 0.4938 |

| 2.082 | 0.65 | 230 | 2.0089 | 0.4938 |

| 2.0646 | 0.68 | 240 | 1.9970 | 0.5014 |

| 2.0636 | 0.71 | 250 | 1.9778 | 0.4946 |

| 2.0579 | 0.74 | 260 | 1.9609 | 0.49 |

| 2.028 | 0.77 | 270 | 1.9602 | 0.4862 |

| 2.0447 | 0.8 | 280 | 1.9460 | 0.4934 |

| 2.0168 | 0.82 | 290 | 1.9369 | 0.505 |

| 2.0126 | 0.85 | 300 | 1.9317 | 0.4926 |

| 2.0099 | 0.88 | 310 | 1.9235 | 0.4952 |

| 1.9978 | 0.91 | 320 | 1.9174 | 0.4972 |

| 1.9951 | 0.94 | 330 | 1.9119 | 0.507 |

| 1.9823 | 0.97 | 340 | 1.9120 | 0.4992 |

| 1.985 | 1.0 | 350 | 1.9064 | 0.5022 |

### Framework versions

- Transformers 4.35.0

- Pytorch 2.1.1

- Datasets 2.14.6

- Tokenizers 0.14.1

|

devagonal/t5-flan-semantic-2

|

devagonal

| 2023-11-22T03:52:16Z | 5 | 0 |

transformers

|

[

"transformers",

"safetensors",

"t5",

"text2text-generation",

"generated_from_trainer",

"base_model:google/flan-t5-base",

"base_model:finetune:google/flan-t5-base",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-11-22T03:23:18Z |

---

license: apache-2.0

base_model: google/flan-t5-base

tags:

- generated_from_trainer

model-index:

- name: t5-flan-semantic-2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-flan-semantic-2

This model is a fine-tuned version of [google/flan-t5-base](https://huggingface.co/google/flan-t5-base) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 180

### Training results

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

Yuta555/Llama-2-7b-MBTI-classification

|

Yuta555

| 2023-11-22T03:50:51Z | 0 | 0 |

peft

|

[

"peft",

"llama",

"en",

"arxiv:1910.09700",

"base_model:meta-llama/Llama-2-7b-hf",

"base_model:adapter:meta-llama/Llama-2-7b-hf",

"region:us"

] | null | 2023-11-22T03:09:49Z |

---

library_name: peft

base_model: meta-llama/Llama-2-7b-hf

language:

- en

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Data Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

## Training procedure

### Framework versions

- PEFT 0.7.0.dev0

|

uukuguy/mistral-7b-platypus-fp16-dare-0.9

|

uukuguy

| 2023-11-22T03:44:58Z | 1,407 | 0 |

transformers

|

[

"transformers",

"pytorch",

"mistral",

"text-generation",

"license:llama2",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-20T05:24:14Z |

---

license: llama2

---

Experiment for DARE(Drop and REscale), most of the delta parameters can be directly set to zeros without affecting the capabilities of SFT LMs and larger models can tolerate a higher proportion of discarded parameters.

| Model | Average | ARC | HellaSwag | MMLU | TruthfulQA | Winogrande | GSM8K | DROP |

| ------ | ------ | ------ | ------ | ------ | ------ | ------ | ------ | ------ |

| bhenrym14/mistral-7b-platypus-fp16 | 56.89 | 63.05 | 84.15 | 64.11 | 45.07 | 78.53 | 17.36 | 45.92 |

|

ivandzefen/llama-2-ko-7b-chat-gguf

|

ivandzefen

| 2023-11-22T03:34:50Z | 5 | 1 |

transformers

|

[

"transformers",

"llama",

"text-generation",

"ko",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-22T02:02:15Z |

---

license: mit

language:

- ko

---

quantized verion of [kfkas/Llama-2-ko-7b-Chat](https://huggingface.co/kfkas/Llama-2-ko-7b-Chat)

|

smartlens/pix2Struct-peft-rank-8-docvqa-v1.0

|

smartlens

| 2023-11-22T03:25:45Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"pix2struct",

"arxiv:1910.09700",

"base_model:google/pix2struct-docvqa-base",

"base_model:adapter:google/pix2struct-docvqa-base",

"region:us"

] | null | 2023-11-22T03:12:22Z |

---

library_name: peft

base_model: google/pix2struct-docvqa-base

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

## Training procedure

### Framework versions

- PEFT 0.6.2

|

AmelieSchreiber/PepMLM_v0

|

AmelieSchreiber

| 2023-11-22T03:18:23Z | 1 | 0 | null |

[

"safetensors",

"license:mit",

"region:us"

] | null | 2023-11-21T20:56:36Z |

---

license: mit

---

# ESM-2 for Generating Peptide Binders for Proteins

This is just a retraining of PepMLM using this [forked repo](https://github.com/Amelie-Schreiber/pepmlm/tree/main).

The original PepMLM is also already on HuggingFace [here](https://huggingface.co/TianlaiChen/PepMLM-650M).

## Using the Model

To use the model, run the following:

```python

from transformers import AutoTokenizer, EsmForMaskedLM

import torch

import pandas as pd

import numpy as np

from torch.distributions import Categorical

def compute_pseudo_perplexity(model, tokenizer, protein_seq, binder_seq):

sequence = protein_seq + binder_seq

tensor_input = tokenizer.encode(sequence, return_tensors='pt').to(model.device)

# Create a mask for the binder sequence

binder_mask = torch.zeros(tensor_input.shape).to(model.device)

binder_mask[0, -len(binder_seq)-1:-1] = 1

# Mask the binder sequence in the input and create labels

masked_input = tensor_input.clone().masked_fill_(binder_mask.bool(), tokenizer.mask_token_id)

labels = tensor_input.clone().masked_fill_(~binder_mask.bool(), -100)

with torch.no_grad():

loss = model(masked_input, labels=labels).loss

return np.exp(loss.item())

def generate_peptide_for_single_sequence(protein_seq, peptide_length = 15, top_k = 3, num_binders = 4):

peptide_length = int(peptide_length)

top_k = int(top_k)

num_binders = int(num_binders)

binders_with_ppl = []

for _ in range(num_binders):

# Generate binder

masked_peptide = '<mask>' * peptide_length

input_sequence = protein_seq + masked_peptide

inputs = tokenizer(input_sequence, return_tensors="pt").to(model.device)

with torch.no_grad():

logits = model(**inputs).logits

mask_token_indices = (inputs["input_ids"] == tokenizer.mask_token_id).nonzero(as_tuple=True)[1]

logits_at_masks = logits[0, mask_token_indices]

# Apply top-k sampling

top_k_logits, top_k_indices = logits_at_masks.topk(top_k, dim=-1)

probabilities = torch.nn.functional.softmax(top_k_logits, dim=-1)

predicted_indices = Categorical(probabilities).sample()

predicted_token_ids = top_k_indices.gather(-1, predicted_indices.unsqueeze(-1)).squeeze(-1)

generated_binder = tokenizer.decode(predicted_token_ids, skip_special_tokens=True).replace(' ', '')

# Compute PPL for the generated binder

ppl_value = compute_pseudo_perplexity(model, tokenizer, protein_seq, generated_binder)

# Add the generated binder and its PPL to the results list

binders_with_ppl.append([generated_binder, ppl_value])

return binders_with_ppl

def generate_peptide(input_seqs, peptide_length=15, top_k=3, num_binders=4):

if isinstance(input_seqs, str): # Single sequence

binders = generate_peptide_for_single_sequence(input_seqs, peptide_length, top_k, num_binders)

return pd.DataFrame(binders, columns=['Binder', 'Pseudo Perplexity'])

elif isinstance(input_seqs, list): # List of sequences

results = []

for seq in input_seqs:

binders = generate_peptide_for_single_sequence(seq, peptide_length, top_k, num_binders)

for binder, ppl in binders:

results.append([seq, binder, ppl])

return pd.DataFrame(results, columns=['Input Sequence', 'Binder', 'Pseudo Perplexity'])

model = EsmForMaskedLM.from_pretrained("AmelieSchreiber/PepMLM_v0")

tokenizer = AutoTokenizer.from_pretrained("facebook/esm2_t33_650M_UR50D")

protein_seq = "MAPLRKTYVLKLYVAGNTPNSVRALKTLNNILEKEFKGVYALKVIDVLKNPQLAEEDKILATPTLAKVLPPPVRRIIGDLSNREKVLIGLDLLYEEIGDQAEDDLGLE"

results_df = generate_peptide(protein_seq, peptide_length=15, top_k=3, num_binders=5)

print(results_df)

```

|

e-n-v-y/envy-tiny-worlds-xl-01

|

e-n-v-y

| 2023-11-22T03:15:41Z | 621 | 4 |

diffusers

|

[

"diffusers",

"text-to-image",

"stable-diffusion",

"lora",

"template:sd-lora",

"city",

"concept",

"miniatures",

"tiny",

"scenery",

"tilt shift",

"miniature landscapes",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:adapter:stabilityai/stable-diffusion-xl-base-1.0",

"license:other",

"region:us"

] |

text-to-image

| 2023-11-22T03:15:39Z |

---

license: other

license_name: bespoke-lora-trained-license

license_link: https://multimodal.art/civitai-licenses?allowNoCredit=True&allowCommercialUse=Sell&allowDerivatives=True&allowDifferentLicense=True

tags:

- text-to-image

- stable-diffusion

- lora

- diffusers

- template:sd-lora

- city

- concept

- miniatures

- tiny

- scenery

- tilt shift

- miniature landscapes

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: tilt-shift

widget:

- text: 'tilt-shift, digital painting, morning, blue sky, clouds, scenery, in a Wild Magic Stormlands'

output:

url: >-

3819345.jpeg

- text: 'tilt-shift, digital painting, noon, scenery, in a Surreal Ice Palace Tundra'

output:

url: >-

3819355.jpeg

- text: 'tilt-shift, digital painting, golden hour, scenery, in a Lake'

output:

url: >-

3819344.jpeg

- text: 'tilt-shift, digital painting, Minotaur''s Maze'

output:

url: >-

3819351.jpeg

- text: 'tilt-shift, digital painting, fantasysolar farm in a infinite,gargantuan scifi arcology at the beginning of time, masterpiece'

output:

url: >-

3819346.jpeg

- text: 'tilt-shift, digital painting, fantasyboardwalk in a abandoned scifi topia at the beginning of time, masterpiece'

output:

url: >-

3819348.jpeg

- text: 'tilt-shift, digital painting, noon, scenery, "at the Astronomic Event horizon"'

output:

url: >-

3819349.jpeg

- text: 'tilt-shift, digital painting, Tropical Rainforest'

output:

url: >-

3819350.jpeg

- text: 'tilt-shift, digital painting, Mummy''s Tomb Desert'

output:

url: >-

3819352.jpeg

- text: 'tilt-shift, digital painting, scifiParadoxical fantasy metropolis beyond the end of the universe'

output:

url: >-

3819399.jpeg

---

# Envy Tiny Worlds XL 01

<Gallery />

## Model description

<p>This model is trained on the concept of tilt shift, which is an old camera trick that makes the subject of photos look very tiny by manipulating blur on the upper and lower half of the image to make it look like depth of field blur. Anyway, it makes everything look really tiny. The trigger word is "tilt-shift".</p>

## Trigger words

You should use `tilt-shift` to trigger the image generation.

## Download model

Weights for this model are available in Safetensors format.

[Download](/e-n-v-y/envy-tiny-worlds-xl-01/tree/main) them in the Files & versions tab.

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

```py

from diffusers import AutoPipelineForText2Image

import torch

pipeline = AutoPipelineForText2Image.from_pretrained('stabilityai/stable-diffusion-xl-base-1.0', torch_dtype=torch.float16).to('cuda')

pipeline.load_lora_weights('e-n-v-y/envy-tiny-worlds-xl-01', weight_name='EnvyTinyWorldsXL01.safetensors')

image = pipeline('tilt-shift, digital painting, scifiParadoxical fantasy metropolis beyond the end of the universe').images[0]

```

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

|

Dotunnorth/ppo-Huggy

|

Dotunnorth

| 2023-11-22T03:00:00Z | 5 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"Huggy",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] |

reinforcement-learning

| 2023-11-22T02:59:55Z |

---

library_name: ml-agents

tags:

- Huggy

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: Dotunnorth/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

srushtibhavsar/FineTuneLlama2

|

srushtibhavsar

| 2023-11-22T02:57:02Z | 0 | 0 |

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:NousResearch/Llama-2-7b-chat-hf",

"base_model:adapter:NousResearch/Llama-2-7b-chat-hf",

"region:us"

] | null | 2023-11-22T02:56:56Z |

---

library_name: peft

base_model: NousResearch/Llama-2-7b-chat-hf

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.6.2

|

srushtibhavsar/FineTuneLlama2onHiwiData

|

srushtibhavsar

| 2023-11-22T02:49:57Z | 2 | 0 |

peft

|

[

"peft",

"base_model:NousResearch/Llama-2-7b-chat-hf",

"base_model:adapter:NousResearch/Llama-2-7b-chat-hf",

"region:us"

] | null | 2023-10-27T10:08:38Z |

---

library_name: peft

base_model: NousResearch/Llama-2-7b-chat-hf

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.5.0

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.6.2

|

robinsyihab/Sidrap-7B-v2-GPTQ

|

robinsyihab

| 2023-11-22T02:46:53Z | 8 | 1 |

transformers

|

[

"transformers",

"mistral",

"text-generation",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-11-16T06:47:56Z |

---

license: apache-2.0

---

# Sidrap-7B-v2-GPTQ

Sidrap-7B-v2-GPTQ is an 8-bit quantized model of Sidrap-7B-v2, which is one of the best open model LLM bahasa Indonesia available today. This model has been quantized using [AutoGPTQ](https://github.com/PanQiWei/AutoGPTQ) to get smaller model that allows us to run in a lower resource environment with faster inference. The quantization uses random subset of original training data to "calibrate" the weights resulting in an optimally compact model with minimall loss in accuracy.

## Usage

Here is an example code snippet for using Sidrap-7B-v2-GPTQ:

```python

from transformers import AutoTokenizer, pipeline

from auto_gptq import AutoGPTQForCausalLM

model_id = "robinsyihab/Sidrap-7B-v2-GPTQ"

tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=True)

model = AutoGPTQForCausalLM.from_quantized(model_id,

device="cuda:0",

inject_fused_mlp=True,

inject_fused_attention=True,

trust_remote_code=True)

chat = pipeline("text-generation",

model=model,

tokenizer=tokenizer,

device_map="auto")

prompt = ("<s>[INST] <<SYS>>\nAnda adalah asisten yang suka membantu, penuh hormat, dan jujur. Selalu jawab semaksimal mungkin, sambil tetap aman. Jawaban Anda tidak boleh berisi konten berbahaya, tidak etis, rasis, seksis, beracun, atau ilegal. Harap pastikan bahwa tanggapan Anda tidak memihak secara sosial dan bersifat positif.\n\

Jika sebuah pertanyaan tidak masuk akal, atau tidak koheren secara faktual, jelaskan alasannya daripada menjawab sesuatu yang tidak benar. Jika Anda tidak mengetahui jawaban atas sebuah pertanyaan, mohon jangan membagikan informasi palsu.\n"

"<</SYS>>\n\n"

"Siapa penulis kitab alfiyah? [/INST]\n"

)

sequences = chat(prompt, num_beams=2, max_length=max_size, top_k=10, num_return_sequences=1)

print(sequences[0]['generated_text'])

```

## License

Sidrap-7B-v2-GPTQ is licensed under the Apache 2.0 License.

## Author

[] Robin Syihab ([@anvie](https://x.com/anvie))

|

nathanReitinger/mlcb

|

nathanReitinger

| 2023-11-22T02:41:15Z | 5 | 0 |

transformers

|

[

"transformers",

"tf",

"roberta",

"text-classification",

"generated_from_keras_callback",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-11-19T00:29:00Z |

---

tags:

- generated_from_keras_callback

model-index:

- name: nathanReitinger/mlcb

results: []

widget:

- text: "window._wpemojiSettings = {'baseUrl':'http:\/\/s.w.org\/images\/core\/emoji\/72x72\/','ext':'.png','source':{'concatemoji':'http:\/\/basho.com\/wp-includes\/js\/wp-emoji-release.min.js?ver=4.2.2'}}; !function(a,b,c){function d(a){var c=b.createElement('canvas'),d=c.getContext&&c.getContext('2d');return d&&d.fillText?(d.textBaseline='top',d.font='600 32px Arial','flag'===a?(d.fillText(String.fromCharCode(55356,56812,55356,56807),0,0),c.toDataURL().length>3e3):(d.fillText(String.fromCharCode(55357,56835),0,0),0!==d.getImageData(16,16,1,1).data[0])):!1}function e(a){var c=b.createElement('script');c.src=a,c.type='text/javascript',b.getElementsByTagName('head')[0].appendChild(c)}var f,g;c.supports={simple:d('simple'),flag:d('flag')},c.DOMReady=!1,c.readyCallback=function(){c.DOMReady=!0},c.supports.simple&&c.supports.flag||(g=function(){c.readyCallback()},b.addEventListener?(b.addEventListener('DOMContentLoaded',g,!1),a.addEventListener('load',g,!1)):(a.attachEvent('onload',g),b.attachEvent('onreadystatechange',function(){'complete'===b.readyState&&c.readyCallback()})),f=c.source||{},f.concatemoji?e(f.concatemoji):f.wpemoji&&f.twemoji&&(e(f.twemoji),e(f.wpemoji)))}(window,document,window._wpemojiSettings);"

example_title: "Word Press Emoji False Positive"

- text: "var canvas = document.createElement('canvas');

var ctx = canvas.getContext('2d');

var txt = 'i9asdm..$#po((^@KbXrww!~cz';

ctx.textBaseline = 'top';

ctx.font = '16px 'Arial'';

ctx.textBaseline = 'alphabetic';

ctx.rotate(.05);

ctx.fillStyle = '#f60';

ctx.fillRect(125,1,62,20);

ctx.fillStyle = '#069';

ctx.fillText(txt, 2, 15);

ctx.fillStyle = 'rgba(102, 200, 0, 0.7)';

ctx.fillText(txt, 4, 17);

ctx.shadowBlur=10;

ctx.shadowColor='blue';

ctx.fillRect(-20,10,234,5);

var strng=canvas.toDataURL();"

example_title: "Canvas Fingerprinting Canonical Example"

inference:

parameters:

wait_for_model: true

use_cache: false

temperature: 0

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# nathanReitinger/mlcb

This model is a fine-tuned version of [dbernsohn/roberta-javascript](https://huggingface.co/dbernsohn/roberta-javascript) on the [mlcb dataset](https://huggingface.co/datasets/nathanReitinger/mlcb).

It achieves the following results on the evaluation set:

- Train Loss: 0.0463

- Validation Loss: 0.0930

- Train Accuracy: 0.9708

- Epoch: 4

## Intended uses & limitations

The model can be used to identify whether a JavaScript program is engaging in canvas fingerprinting.

## Training and evaluation data

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'jit_compile': False, 'is_legacy_optimizer': False, 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 910, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32

### Training results

| Train Loss | Validation Loss | Train Accuracy | Epoch |

|:----------:|:---------------:|:--------------:|:-----:|

| 0.1291 | 0.1235 | 0.9693 | 0 |

| 0.0874 | 0.1073 | 0.9662 | 1 |

| 0.0720 | 0.1026 | 0.9677 | 2 |

| 0.0588 | 0.0950 | 0.9708 | 3 |

| 0.0463 | 0.0930 | 0.9708 | 4 |

### Framework versions

- Transformers 4.30.2

- TensorFlow 2.11.0

- Datasets 2.13.2

- Tokenizers 0.13.3

# Citation

```

@inproceedings{reitinger2021ml,

title={ML-CB: Machine Learning Canvas Block.},

author={Nathan Reitinger and Michelle L Mazurek},

journal={Proc.\ PETS},

volume={2021},

number={3},

pages={453--473},

year={2021}

}

```

- [OSF](https://osf.io/shbe7/)

- [GitHub](https://github.com/SP2-MC2/ML-CB)

- [Data](https://dataverse.harvard.edu/dataverse/ml-cb)

|

snintendog/Gummibar-Spanish

|

snintendog

| 2023-11-22T02:39:20Z | 0 | 0 | null |

[

"license:openrail",

"region:us"

] | null | 2023-11-22T02:32:26Z |

---

license: openrail

---

Created from a 3:03 song at 1000 Epochs Using rmvpe in RVC v2. Male Voices like to be in low octavies to neutral, for female voices -8 or less.

|

LinYuting/icd_o_sentence_transformer_128_dim_model

|

LinYuting

| 2023-11-22T02:39:00Z | 4 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2023-11-22T02:38:38Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# pritamdeka/BioBERT-mnli-snli-scinli-scitail-mednli-stsb

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search. It has been trained over the SNLI, MNLI, SCINLI, SCITAIL, MEDNLI and STSB datasets for providing robust sentence embeddings.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('pritamdeka/BioBERT-mnli-snli-scinli-scitail-mednli-stsb')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('pritamdeka/BioBERT-mnli-snli-scinli-scitail-mednli-stsb')

model = AutoModel.from_pretrained('pritamdeka/BioBERT-mnli-snli-scinli-scitail-mednli-stsb')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 90 with parameters:

```

{'batch_size': 64, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 4,

"evaluation_steps": 1000,

"evaluator": "sentence_transformers.evaluation.EmbeddingSimilarityEvaluator.EmbeddingSimilarityEvaluator",

"max_grad_norm": 1,

"optimizer_class": "<class 'transformers.optimization.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 36,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 100, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

If you use the model kindly cite the following work

```

@inproceedings{deka2022evidence,

title={Evidence Extraction to Validate Medical Claims in Fake News Detection},

author={Deka, Pritam and Jurek-Loughrey, Anna and others},

booktitle={International Conference on Health Information Science},

pages={3--15},

year={2022},

organization={Springer}

}

```

|

jrad98/rl_course_vizdoom_health_gathering_supreme

|

jrad98

| 2023-11-22T02:29:38Z | 0 | 0 |

sample-factory

|

[

"sample-factory",

"tensorboard",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-11-22T02:29:29Z |

---

library_name: sample-factory

tags:

- deep-reinforcement-learning

- reinforcement-learning

- sample-factory

model-index:

- name: APPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: doom_health_gathering_supreme

type: doom_health_gathering_supreme

metrics:

- type: mean_reward

value: 9.60 +/- 3.99

name: mean_reward

verified: false

---

A(n) **APPO** model trained on the **doom_health_gathering_supreme** environment.

This model was trained using Sample-Factory 2.0: https://github.com/alex-petrenko/sample-factory.

Documentation for how to use Sample-Factory can be found at https://www.samplefactory.dev/

## Downloading the model

After installing Sample-Factory, download the model with:

```

python -m sample_factory.huggingface.load_from_hub -r jrad98/rl_course_vizdoom_health_gathering_supreme

```

## Using the model

To run the model after download, use the `enjoy` script corresponding to this environment:

```

python -m .usr.local.lib.python3.10.dist-packages.colab_kernel_launcher --algo=APPO --env=doom_health_gathering_supreme --train_dir=./train_dir --experiment=rl_course_vizdoom_health_gathering_supreme

```

You can also upload models to the Hugging Face Hub using the same script with the `--push_to_hub` flag.

See https://www.samplefactory.dev/10-huggingface/huggingface/ for more details

## Training with this model

To continue training with this model, use the `train` script corresponding to this environment:

```

python -m .usr.local.lib.python3.10.dist-packages.colab_kernel_launcher --algo=APPO --env=doom_health_gathering_supreme --train_dir=./train_dir --experiment=rl_course_vizdoom_health_gathering_supreme --restart_behavior=resume --train_for_env_steps=10000000000

```

Note, you may have to adjust `--train_for_env_steps` to a suitably high number as the experiment will resume at the number of steps it concluded at.

|

lillybak/sft_zephyr

|

lillybak

| 2023-11-22T02:24:19Z | 0 | 0 | null |

[

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:HuggingFaceH4/zephyr-7b-alpha",

"base_model:finetune:HuggingFaceH4/zephyr-7b-alpha",

"license:mit",

"region:us"

] | null | 2023-11-22T02:24:11Z |

---

license: mit

base_model: HuggingFaceH4/zephyr-7b-alpha

tags:

- generated_from_trainer

model-index:

- name: sft_zephyr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sft_zephyr

This model is a fine-tuned version of [HuggingFaceH4/zephyr-7b-alpha](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

pbelcak/UltraFastBERT-1x11-long

|

pbelcak

| 2023-11-22T02:21:22Z | 11 | 75 |

transformers

|

[

"transformers",

"safetensors",

"crammedBERT",

"en",

"dataset:EleutherAI/pile",

"arxiv:2311.10770",

"license:mit",

"endpoints_compatible",

"region:us"

] | null | 2023-11-21T07:00:55Z |

---

license: mit

datasets:

- EleutherAI/pile

language:

- en

metrics:

- glue

---

# UltraFastBERT-1x11-long

This is the final model described in "Exponentially Faster Language Modelling".

The model has been pretrained just like crammedBERT but with fast feedforward networks (FFF) in place of the traditional feedforward layers.

To use this model, you need the code from the repo at https://github.com/pbelcak/UltraFastBERT.

You can find the paper here: https://arxiv.org/abs/2311.10770, and the abstract below:

> Language models only really need to use an exponential fraction of their neurons for individual inferences.

> As proof, we present UltraFastBERT, a BERT variant that uses 0.3% of its neurons during inference while performing on par with similar BERT models. UltraFastBERT selectively engages just 12 out of 4095 neurons for each layer inference. This is achieved by replacing feedforward networks with fast feedforward networks (FFFs).

> While no truly efficient implementation currently exists to unlock the full acceleration potential of conditional neural execution, we provide high-level CPU code achieving 78x speedup over the optimized baseline feedforward implementation, and a PyTorch implementation delivering 40x speedup over the equivalent batched feedforward inference. We publish our training code, benchmarking setup, and model weights.

## Intended uses & limitations

This is the raw pretraining checkpoint. You can use this to fine-tune on a downstream task like GLUE as discussed in the paper. This model is provided only as sanity check for research purposes, it is untested and unfit for deployment.

### How to get started

1. Create a new Python/conda environment, or simply use one that does not have any previous version of the original `cramming` project installed. If, by accident, you use the original cramming repository code instead of the one provided in the `/training` folder of this project, you will be warned by `transformers` that there are some extra weights (FFF weight) and that some weights are missing (the FF weights expected by the original `crammedBERT`).

2. `cd ./training`

3. `pip install .`

4. Create `minimal_example.py`

5. Paste the code below

```python

import cramming

from transformers import AutoModelForMaskedLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("pbelcak/UltraFastBERT-1x11-long")

model = AutoModelForMaskedLM.from_pretrained("pbelcak/UltraFastBERT-1x11-long")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

6. Run `python minimal_example.py`.

### Limitations and bias

The training data used for this model was further filtered and sorted beyond the normal Pile. These modifications were not tested for unintended consequences.

## Training data, Training procedure, Preprocessing, Pretraining

These are discussed in the paper. You can find the final configurations for each in this repository.

## Evaluation results

When fine-tuned on downstream tasks, this model achieves the following results:

Glue test results:

| Task | MNLI-(m-mm) | QQP | QNLI | SST-2 | STS-B | MRPC | RTE | Average |

|:----:|:-----------:|:----:|:----:|:-----:|:-----:|:----:|:----:|:-------:|

| Score| 81.3 | 87.6 | 89.7 | 89.9 | 86.4 | 87.5 | 60.7 | 83.0 |

These numbers are the median over 5 trials on "GLUE-sane" using the GLUE-dev set. With this variant of GLUE, finetuning cannot be longer than 5 epochs on each task, and hyperparameters have to be chosen equal for all tasks.

### BibTeX entry and citation info

```bibtex

@article{belcak2023exponential,

title = {Exponentially {{Faster}} {{Language}} {{Modelling}}},

author = {Belcak, Peter and Wattenhofer, Roger},

year = {2023},

month = nov,

eprint = {2311.10770},

eprinttype = {arxiv},

primaryclass = {cs},

publisher = {{arXiv}},

url = {https://arxiv.org/pdf/2311.10770},

urldate = {2023-11-21},

archiveprefix = {arXiv},

keywords = {Computer Science - Computation and Language,Computer Science - Machine Learning},

journal = {arxiv:2311.10770[cs]}

}

```

|

Suraj-Yadav/finetuned-kde4-en-to-hi

|

Suraj-Yadav

| 2023-11-22T02:16:23Z | 16 | 1 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"marian",

"text2text-generation",

"translation",

"generated_from_trainer",

"dataset:kde4",

"base_model:Helsinki-NLP/opus-mt-en-hi",

"base_model:finetune:Helsinki-NLP/opus-mt-en-hi",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

translation

| 2023-10-31T14:56:45Z |

---

license: apache-2.0

base_model: Helsinki-NLP/opus-mt-en-hi

tags:

- translation

- generated_from_trainer

datasets:

- kde4

metrics:

- bleu

model-index:

- name: finetuned-kde4-en-to-hi

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: kde4

type: kde4

config: en-hi

split: train

args: en-hi

metrics:

- name: Bleu

type: bleu

value: 48.24401152147744

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned-kde4-en-to-hi

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-en-hi](https://huggingface.co/Helsinki-NLP/opus-mt-en-hi) on the kde4 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9644

- Bleu: 48.2440

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.34.1

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.14.1

|

tparng/distilbert-base-uncased-lora-text-classification

|

tparng

| 2023-11-22T01:51:17Z | 0 | 0 | null |

[

"safetensors",

"generated_from_trainer",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"region:us"

] | null | 2023-11-22T01:51:10Z |

---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-lora-text-classification

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-lora-text-classification

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9162

- Accuracy: {'accuracy': 0.901}

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:-------------------:|

| No log | 1.0 | 250 | 0.3611 | {'accuracy': 0.871} |

| 0.4182 | 2.0 | 500 | 0.5356 | {'accuracy': 0.883} |

| 0.4182 | 3.0 | 750 | 0.5292 | {'accuracy': 0.899} |

| 0.2132 | 4.0 | 1000 | 0.5966 | {'accuracy': 0.897} |

| 0.2132 | 5.0 | 1250 | 0.6869 | {'accuracy': 0.894} |

| 0.0748 | 6.0 | 1500 | 0.7645 | {'accuracy': 0.898} |

| 0.0748 | 7.0 | 1750 | 0.8095 | {'accuracy': 0.897} |

| 0.0335 | 8.0 | 2000 | 0.9055 | {'accuracy': 0.892} |

| 0.0335 | 9.0 | 2250 | 0.9086 | {'accuracy': 0.901} |

| 0.0083 | 10.0 | 2500 | 0.9162 | {'accuracy': 0.901} |

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.1+cu121

- Datasets 2.15.0

- Tokenizers 0.15.0

|

jrad98/lunar_lander_v2_unit8_part1

|

jrad98

| 2023-11-22T01:45:06Z | 0 | 0 | null |

[

"tensorboard",

"LunarLander-v2",

"ppo",

"deep-reinforcement-learning",

"reinforcement-learning",

"custom-implementation",

"deep-rl-course",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-11-22T01:10:59Z |

---

tags:

- LunarLander-v2

- ppo

- deep-reinforcement-learning

- reinforcement-learning

- custom-implementation

- deep-rl-course

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 81.91 +/- 175.33

name: mean_reward

verified: false

---

# PPO Agent Playing LunarLander-v2

This is a trained model of a PPO agent playing LunarLander-v2.

# Hyperparameters

```python

{'exp_name': 'ppo'

'seed': 1

'torch_deterministic': True

'cuda': True

'track': False

'wandb_project_name': 'cleanRL'

'wandb_entity': None

'capture_video': False

'env_id': 'LunarLander-v2'

'total_timesteps': 250000

'learning_rate': 0.0005

'num_envs': 10

'num_steps': 500

'anneal_lr': True

'gae': True

'gamma': 0.99

'gae_lambda': 0.95

'num_minibatches': 20

'update_epochs': 40

'norm_adv': True

'clip_coef': 0.2

'clip_vloss': True

'ent_coef': 0.01

'vf_coef': 0.5

'max_grad_norm': 0.2

'target_kl': 0.2

'repo_id': 'jrad98/lunar_lander_v2_unit8_part1'

'batch_size': 5000

'minibatch_size': 250}

```

|

cmagganas/sft_zephyr

|

cmagganas

| 2023-11-22T01:03:01Z | 0 | 0 | null |

[

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:HuggingFaceH4/zephyr-7b-alpha",

"base_model:finetune:HuggingFaceH4/zephyr-7b-alpha",

"license:mit",

"region:us"

] | null | 2023-11-22T01:02:46Z |

---

license: mit

base_model: HuggingFaceH4/zephyr-7b-alpha

tags:

- generated_from_trainer

model-index:

- name: sft_zephyr

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sft_zephyr

This model is a fine-tuned version of [HuggingFaceH4/zephyr-7b-alpha](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu118

- Datasets 2.15.0

- Tokenizers 0.15.0

|

syed789/zephyr-7b-beta-fhir-ft

|

syed789

| 2023-11-22T00:59:56Z | 1 | 0 |

peft

|

[

"peft",

"arxiv:1910.09700",

"base_model:HuggingFaceH4/zephyr-7b-beta",

"base_model:adapter:HuggingFaceH4/zephyr-7b-beta",

"region:us"

] | null | 2023-11-22T00:59:55Z |

---

library_name: peft

base_model: HuggingFaceH4/zephyr-7b-beta

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]