modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-04 06:26:56

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 538

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-04 06:26:41

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

benedikt-schaber/q-FrozenLake-v1-4x4-noSlippery

|

benedikt-schaber

| 2023-09-21T17:32:16Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-09-21T17:32:14Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="benedikt-schaber/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

CyberHarem/senzaki_ema_idolmastercinderellagirls

|

CyberHarem

| 2023-09-21T17:22:10Z | 0 | 0 | null |

[

"art",

"text-to-image",

"dataset:CyberHarem/senzaki_ema_idolmastercinderellagirls",

"license:mit",

"region:us"

] |

text-to-image

| 2023-09-21T17:13:47Z |

---

license: mit

datasets:

- CyberHarem/senzaki_ema_idolmastercinderellagirls

pipeline_tag: text-to-image

tags:

- art

---

# Lora of senzaki_ema_idolmastercinderellagirls

This model is trained with [HCP-Diffusion](https://github.com/7eu7d7/HCP-Diffusion). And the auto-training framework is maintained by [DeepGHS Team](https://huggingface.co/deepghs).

The base model used during training is [NAI](https://huggingface.co/deepghs/animefull-latest), and the base model used for generating preview images is [Meina/MeinaMix_V11](https://huggingface.co/Meina/MeinaMix_V11).

After downloading the pt and safetensors files for the specified step, you need to use them simultaneously. The pt file will be used as an embedding, while the safetensors file will be loaded for Lora.

For example, if you want to use the model from step 3400, you need to download `3400/senzaki_ema_idolmastercinderellagirls.pt` as the embedding and `3400/senzaki_ema_idolmastercinderellagirls.safetensors` for loading Lora. By using both files together, you can generate images for the desired characters.

**The best step we recommend is 3400**, with the score of 0.999. The trigger words are:

1. `senzaki_ema_idolmastercinderellagirls`

2. `short_hair, jewelry, blonde_hair, very_short_hair, earrings, smile, red_eyes, open_mouth`

For the following groups, it is not recommended to use this model and we express regret:

1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.

2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who finds the generated image content offensive to their values.

These are available steps:

| Steps | Score | Download | pattern_1 | bikini | bondage | free | maid | miko | nude | nude2 | suit | yukata |

|:---------|:----------|:---------------------------------------------------------------|:-----------------------------------------------|:-----------------------------------------|:--------------------------------------------------|:-------------------------------------|:-------------------------------------|:-------------------------------------|:-----------------------------------------------|:------------------------------------------------|:-------------------------------------|:-----------------------------------------|

| 5100 | 0.962 | [Download](5100/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](5100/previews/bondage.png) |  |  |  | [<NSFW, click to see>](5100/previews/nude.png) | [<NSFW, click to see>](5100/previews/nude2.png) |  |  |

| 4760 | 0.993 | [Download](4760/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](4760/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4760/previews/nude.png) | [<NSFW, click to see>](4760/previews/nude2.png) |  |  |

| 4420 | 0.998 | [Download](4420/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](4420/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4420/previews/nude.png) | [<NSFW, click to see>](4420/previews/nude2.png) |  |  |

| 4080 | 0.996 | [Download](4080/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](4080/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4080/previews/nude.png) | [<NSFW, click to see>](4080/previews/nude2.png) |  |  |

| 3740 | 0.962 | [Download](3740/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](3740/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3740/previews/nude.png) | [<NSFW, click to see>](3740/previews/nude2.png) |  |  |

| **3400** | **0.999** | [**Download**](3400/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](3400/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3400/previews/nude.png) | [<NSFW, click to see>](3400/previews/nude2.png) |  |  |

| 3060 | 0.978 | [Download](3060/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](3060/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3060/previews/nude.png) | [<NSFW, click to see>](3060/previews/nude2.png) |  |  |

| 2720 | 0.994 | [Download](2720/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](2720/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2720/previews/nude.png) | [<NSFW, click to see>](2720/previews/nude2.png) |  |  |

| 2380 | 0.996 | [Download](2380/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](2380/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2380/previews/nude.png) | [<NSFW, click to see>](2380/previews/nude2.png) |  |  |

| 2040 | 0.994 | [Download](2040/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](2040/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2040/previews/nude.png) | [<NSFW, click to see>](2040/previews/nude2.png) |  |  |

| 1700 | 0.992 | [Download](1700/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](1700/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1700/previews/nude.png) | [<NSFW, click to see>](1700/previews/nude2.png) |  |  |

| 1360 | 0.997 | [Download](1360/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](1360/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1360/previews/nude.png) | [<NSFW, click to see>](1360/previews/nude2.png) |  |  |

| 1020 | 0.983 | [Download](1020/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](1020/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1020/previews/nude.png) | [<NSFW, click to see>](1020/previews/nude2.png) |  |  |

| 680 | 0.992 | [Download](680/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](680/previews/bondage.png) |  |  |  | [<NSFW, click to see>](680/previews/nude.png) | [<NSFW, click to see>](680/previews/nude2.png) |  |  |

| 340 | 0.847 | [Download](340/senzaki_ema_idolmastercinderellagirls.zip) |  |  | [<NSFW, click to see>](340/previews/bondage.png) |  |  |  | [<NSFW, click to see>](340/previews/nude.png) | [<NSFW, click to see>](340/previews/nude2.png) |  |  |

|

steveice/videomae-large-finetuned-kinetics-finetuned-videomae-large-kitchen

|

steveice

| 2023-09-21T17:13:55Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"videomae",

"video-classification",

"generated_from_trainer",

"base_model:MCG-NJU/videomae-large-finetuned-kinetics",

"base_model:finetune:MCG-NJU/videomae-large-finetuned-kinetics",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

] |

video-classification

| 2023-09-20T21:16:12Z |

---

license: cc-by-nc-4.0

base_model: MCG-NJU/videomae-large-finetuned-kinetics

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: videomae-large-finetuned-kinetics-finetuned-videomae-large-kitchen

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# videomae-large-finetuned-kinetics-finetuned-videomae-large-kitchen

This model is a fine-tuned version of [MCG-NJU/videomae-large-finetuned-kinetics](https://huggingface.co/MCG-NJU/videomae-large-finetuned-kinetics) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6309

- Accuracy: 0.8900

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- training_steps: 11100

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 3.5158 | 0.02 | 222 | 3.6067 | 0.0588 |

| 2.8571 | 1.02 | 444 | 3.1445 | 0.3014 |

| 1.8854 | 2.02 | 666 | 2.3644 | 0.4607 |

| 1.5533 | 3.02 | 888 | 1.7967 | 0.5621 |

| 1.3935 | 4.02 | 1110 | 1.3755 | 0.6502 |

| 1.1722 | 5.02 | 1332 | 1.2232 | 0.7109 |

| 0.2896 | 6.02 | 1554 | 1.2859 | 0.6256 |

| 0.3166 | 7.02 | 1776 | 1.2910 | 0.6720 |

| 0.6902 | 8.02 | 1998 | 1.2702 | 0.6995 |

| 0.4193 | 9.02 | 2220 | 1.2087 | 0.7137 |

| 0.1889 | 10.02 | 2442 | 1.0500 | 0.7611 |

| 0.4502 | 11.02 | 2664 | 1.1647 | 0.7118 |

| 0.7703 | 12.02 | 2886 | 1.1037 | 0.7242 |

| 0.0957 | 13.02 | 3108 | 1.0967 | 0.7706 |

| 0.3202 | 14.02 | 3330 | 1.0479 | 0.7545 |

| 0.3634 | 15.02 | 3552 | 1.0714 | 0.8057 |

| 0.3883 | 16.02 | 3774 | 1.2323 | 0.7498 |

| 0.0322 | 17.02 | 3996 | 1.0504 | 0.7848 |

| 0.5108 | 18.02 | 4218 | 1.1356 | 0.7915 |

| 0.309 | 19.02 | 4440 | 1.1409 | 0.7592 |

| 0.56 | 20.02 | 4662 | 1.0828 | 0.7915 |

| 0.3675 | 21.02 | 4884 | 0.9154 | 0.8123 |

| 0.0076 | 22.02 | 5106 | 1.0974 | 0.8133 |

| 0.0451 | 23.02 | 5328 | 1.0361 | 0.8152 |

| 0.2558 | 24.02 | 5550 | 0.7830 | 0.8237 |

| 0.0125 | 25.02 | 5772 | 0.8728 | 0.8171 |

| 0.4184 | 26.02 | 5994 | 0.8413 | 0.8265 |

| 0.2566 | 27.02 | 6216 | 1.0644 | 0.8009 |

| 0.1257 | 28.02 | 6438 | 0.8641 | 0.8265 |

| 0.1326 | 29.02 | 6660 | 0.8444 | 0.8417 |

| 0.0436 | 30.02 | 6882 | 0.8615 | 0.8322 |

| 0.0408 | 31.02 | 7104 | 0.8075 | 0.8332 |

| 0.0316 | 32.02 | 7326 | 0.8699 | 0.8341 |

| 0.2235 | 33.02 | 7548 | 0.8151 | 0.8455 |

| 0.0079 | 34.02 | 7770 | 0.8099 | 0.8550 |

| 0.001 | 35.02 | 7992 | 0.8640 | 0.8370 |

| 0.0007 | 36.02 | 8214 | 0.7146 | 0.8483 |

| 0.464 | 37.02 | 8436 | 0.7917 | 0.8464 |

| 0.0005 | 38.02 | 8658 | 0.7239 | 0.8531 |

| 0.0004 | 39.02 | 8880 | 0.7702 | 0.8701 |

| 0.1705 | 40.02 | 9102 | 0.7543 | 0.8521 |

| 0.0039 | 41.02 | 9324 | 0.7456 | 0.8673 |

| 0.0168 | 42.02 | 9546 | 0.7255 | 0.8730 |

| 0.2615 | 43.02 | 9768 | 0.7453 | 0.8758 |

| 0.0004 | 44.02 | 9990 | 0.6824 | 0.8806 |

| 0.236 | 45.02 | 10212 | 0.6624 | 0.8825 |

| 0.0007 | 46.02 | 10434 | 0.6727 | 0.8815 |

| 0.0004 | 47.02 | 10656 | 0.6478 | 0.8863 |

| 0.268 | 48.02 | 10878 | 0.6309 | 0.8900 |

| 0.0025 | 49.02 | 11100 | 0.6284 | 0.8900 |

### Framework versions

- Transformers 4.33.2

- Pytorch 1.12.1+cu113

- Datasets 2.14.5

- Tokenizers 0.13.3

|

annahaz/xlm-roberta-base-misogyny-sexism-indomain-mix-bal

|

annahaz

| 2023-09-21T17:12:38Z | 126 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-08-16T18:33:59Z |

This is a multilingual misogyny and sexism detection model.

This model was released with the following paper (https://rdcu.be/dmIpq):

```

@InProceedings{10.1007/978-3-031-43129-6_9,

author="Chang, Rong-Ching

and May, Jonathan

and Lerman, Kristina",

editor="Thomson, Robert

and Al-khateeb, Samer

and Burger, Annetta

and Park, Patrick

and A. Pyke, Aryn",

title="Feedback Loops and Complex Dynamics of Harmful Speech in Online Discussions",

booktitle="Social, Cultural, and Behavioral Modeling",

year="2023",

publisher="Springer Nature Switzerland",

address="Cham",

pages="85--94",

abstract="Harmful and toxic speech contribute to an unwelcoming online environment that suppresses participation and conversation. Efforts have focused on detecting and mitigating harmful speech; however, the mechanisms by which toxicity degrades online discussions are not well understood. This paper makes two contributions. First, to comprehensively model harmful comments, we introduce a multilingual misogyny and sexist speech detection model (https://huggingface.co/annahaz/xlm-roberta-base-misogyny-sexism-indomain-mix-bal). Second, we model the complex dynamics of online discussions as feedback loops in which harmful comments lead to negative emotions which prompt even more harmful comments. To quantify the feedback loops, we use a combination of mutual Granger causality and regression to analyze discussions on two political forums on Reddit: the moderated political forum r/Politics and the moderated neutral political forum r/NeutralPolitics. Our results suggest that harmful comments and negative emotions create self-reinforcing feedback loops in forums. Contrarily, moderation with neutral discussion appears to tip interactions into self-extinguishing feedback loops that reduce harmful speech and negative emotions. Our study sheds more light on the complex dynamics of harmful speech and the role of moderation and neutral discussion in mitigating these dynamics.",

isbn="978-3-031-43129-6"

}

```

We combined several multilingual ground truth datasets for misogyny and sexism (M/S) versus non-misogyny and non-sexism (non-M/S) [3,5,8,9,11,13, 20]. Specifically, the dataset expressing misogynistic or sexist speech (M/S) and the same number of texts expressing non-M/S speech in each language included 8, 582 English-language texts, 872 in French, 561 in Hindi, 2, 190 in Italian, and 612 in Bengali. The test data was a balanced set of 100 texts sampled randomly from both M/S and non-M/S groups in each language, for a total of 500 examples of M/S speech and 500 examples of non-M/S speech.

References of the datasets are:

3. Bhattacharya, S., et al.: Developing a multilingual annotated corpus of misog- yny and aggression, pp. 158–168. ELRA, Marseille, France, May 2020. https:// aclanthology.org/2020.trac- 1.25

5. Chiril, P., Moriceau, V., Benamara, F., Mari, A., Origgi, G., Coulomb-Gully, M.: An annotated corpus for sexism detection in French tweets. In: Proceedings of LREC, pp. 1397–1403 (2020)

8. Fersini, E., et al.: SemEval-2022 task 5: multimedia automatic misogyny identification. In: Proceedings of SemEval, pp. 533–549 (2022)

9. Fersini, E., Nozza, D., Rosso, P.: Overview of the Evalita 2018 task on automatic misogyny identification (AMI). EVALITA Eval. NLP Speech Tools Italian 12, 59 (2018)

11. Guest, E., Vidgen, B., Mittos, A., Sastry, N., Tyson, G., Margetts, H.: An expert annotated dataset for the detection of online misogyny. In: Proceedings of EACL, pp. 1336–1350 (2021)

13. Jha, A., Mamidi, R.: When does a compliment become sexist? Analysis and classification of ambivalent sexism using Twitter data. In: Proceedings of NLP+CSS, pp. 7–16 (2017)

20. Waseem, Z., Hovy, D.: Hateful symbols or hateful people? Predictive features for hate speech detection on Twitter. In: Proceedings of NAACL SRW, pp. 88–93 (2016)

Please see the paper for more detail.

---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

- precision

- recall

model-index:

- name: xlm-roberta-base-misogyny-sexism-indomain-mix-bal

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-misogyny-sexism-indomain-mix-bal

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8259

- Accuracy: 0.826

- F1: 0.8333

- Precision: 0.7996

- Recall: 0.87

- Mae: 0.174

- Tn: 391

- Fp: 109

- Fn: 65

- Tp: 435

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall | Mae | Tn | Fp | Fn | Tp |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:---------:|:------:|:-----:|:---:|:---:|:--:|:---:|

| 0.2643 | 1.0 | 1603 | 0.6511 | 0.82 | 0.8269 | 0.7963 | 0.86 | 0.18 | 390 | 110 | 70 | 430 |

| 0.2004 | 2.0 | 3206 | 0.8259 | 0.826 | 0.8333 | 0.7996 | 0.87 | 0.174 | 391 | 109 | 65 | 435 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0+cu102

- Datasets 2.3.2

- Tokenizers 0.12.1

# Multilingual_Misogyny_Detection

|

ChaoticQubit/Tom_Cruise_Face.-.Stable_Diffusion

|

ChaoticQubit

| 2023-09-21T16:59:13Z | 1 | 0 |

diffusers

|

[

"diffusers",

"image-to-image",

"en",

"license:openrail",

"region:us"

] |

image-to-image

| 2023-09-21T16:55:08Z |

---

license: openrail

language:

- en

library_name: diffusers

pipeline_tag: image-to-image

---

|

jtlowell/cozy_fantasy_xl

|

jtlowell

| 2023-09-21T16:43:51Z | 3 | 1 |

diffusers

|

[

"diffusers",

"stable-diffusion-xl",

"stable-diffusion-xl-diffusers",

"text-to-image",

"lora",

"dataset:jtlowell/cozy_interiors_2",

"base_model:stabilityai/stable-diffusion-xl-base-1.0",

"base_model:adapter:stabilityai/stable-diffusion-xl-base-1.0",

"region:us"

] |

text-to-image

| 2023-09-21T15:51:17Z |

---

base_model: stabilityai/stable-diffusion-xl-base-1.0

instance_prompt: cozy_int

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- lora

inference: true

datasets:

- jtlowell/cozy_interiors_2

---

# LoRA DreamBooth - jtlowell/cozy_fantasy_xl

These are LoRA adaption weights for stabilityai/stable-diffusion-xl-base-1.0.

The weights were trained on the concept prompt:

`cozy_int`

Use this keyword to trigger your custom model in your prompts.

LoRA for the text encoder was enabled: False.

Special VAE used for training: madebyollin/sdxl-vae-fp16-fix.

## Usage

Make sure to upgrade diffusers to >= 0.19.0:

```

pip install diffusers --upgrade

```

In addition make sure to install transformers, safetensors, accelerate as well as the invisible watermark:

```

pip install invisible_watermark transformers accelerate safetensors

```

To just use the base model, you can run:

```python

import torch

from diffusers import DiffusionPipeline, AutoencoderKL

vae = AutoencoderKL.from_pretrained('madebyollin/sdxl-vae-fp16-fix', torch_dtype=torch.float16)

pipe = DiffusionPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0",

vae=vae, torch_dtype=torch.float16, variant="fp16",

use_safetensors=True

)

# This is where you load your trained weights

pipe.load_lora_weights('jtlowell/cozy_fantasy_xl')

pipe.to("cuda")

prompt = "A majestic cozy_int jumping from a big stone at night"

image = pipe(prompt=prompt, num_inference_steps=50).images[0]

```

|

SHENMU007/neunit_BASE_V13.5.10

|

SHENMU007

| 2023-09-21T16:41:56Z | 76 | 0 |

transformers

|

[

"transformers",

"pytorch",

"speecht5",

"text-to-audio",

"1.1.0",

"generated_from_trainer",

"zh",

"dataset:facebook/voxpopuli",

"base_model:microsoft/speecht5_tts",

"base_model:finetune:microsoft/speecht5_tts",

"license:mit",

"endpoints_compatible",

"region:us"

] |

text-to-audio

| 2023-09-21T15:22:09Z |

---

language:

- zh

license: mit

base_model: microsoft/speecht5_tts

tags:

- 1.1.0

- generated_from_trainer

datasets:

- facebook/voxpopuli

model-index:

- name: SpeechT5 TTS Dutch neunit

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# SpeechT5 TTS Dutch neunit

This model is a fine-tuned version of [microsoft/speecht5_tts](https://huggingface.co/microsoft/speecht5_tts) on the VoxPopuli dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

### Training results

### Framework versions

- Transformers 4.33.1

- Pytorch 2.0.1+cu117

- Datasets 2.14.5

- Tokenizers 0.13.3

|

Rishs/DangerousV2

|

Rishs

| 2023-09-21T16:28:03Z | 0 | 0 | null |

[

"michaeljackson",

"en",

"region:us"

] | null | 2023-09-21T16:26:28Z |

---

language:

- en

tags:

- michaeljackson

---

|

benedikt-schaber/ppo-Huggy

|

benedikt-schaber

| 2023-09-21T16:25:51Z | 3 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"Huggy",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-Huggy",

"region:us"

] |

reinforcement-learning

| 2023-09-21T16:25:40Z |

---

library_name: ml-agents

tags:

- Huggy

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: benedikt-schaber/ppo-Huggy

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

gpadam/autotrain-prospero-query-training-87679143506

|

gpadam

| 2023-09-21T16:23:36Z | 114 | 0 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"bart",

"text2text-generation",

"autotrain",

"summarization",

"unk",

"dataset:gpadam/autotrain-data-prospero-query-training",

"co2_eq_emissions",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2023-09-07T11:44:46Z |

---

tags:

- autotrain

- summarization

language:

- unk

widget:

- text: "I love AutoTrain"

datasets:

- gpadam/autotrain-data-prospero-query-training

co2_eq_emissions:

emissions: 16.811591021038232

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 87679143506

- CO2 Emissions (in grams): 16.8116

## Validation Metrics

- Loss: 1.544

- Rouge1: 26.107

- Rouge2: 12.267

- RougeL: 22.582

- RougeLsum: 22.590

- Gen Len: 19.956

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/gpadam/autotrain-prospero-query-training-87679143506

```

|

Panchovix/airoboros-l2-70b-gpt4-1.4.1_4bit-bpw_variants_h6-exl2

|

Panchovix

| 2023-09-21T16:21:13Z | 5 | 0 |

transformers

|

[

"transformers",

"llama",

"text-generation",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-09-13T05:59:53Z |

---

license: other

---

4bit variants quantizations of airoboros 70b 1.4.1 (https://huggingface.co/jondurbin/airoboros-l2-70b-gpt4-1.4.1), using exllama2.

You can find 4.25bpw (main branch), 4.5bpw and 4.75bpw in each branch.

Update 21/09/2023

Re-quanted all variants with latest exllamav2 version, which fixed some measurement issues.

|

Keenan5755/ppo-LunarLander-v2

|

Keenan5755

| 2023-09-21T16:04:48Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-09-21T16:04:28Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 261.74 +/- 21.02

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

starkiee/stark

|

starkiee

| 2023-09-21T15:55:36Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2023-09-21T15:55:36Z |

---

license: creativeml-openrail-m

---

|

salesforce/blipdiffusion-controlnet

|

salesforce

| 2023-09-21T15:55:24Z | 85 | 2 |

diffusers

|

[

"diffusers",

"en",

"arxiv:2305.14720",

"license:apache-2.0",

"diffusers:BlipDiffusionControlNetPipeline",

"region:us"

] | null | 2023-09-21T15:55:24Z |

---

license: apache-2.0

language:

- en

library_name: diffusers

---

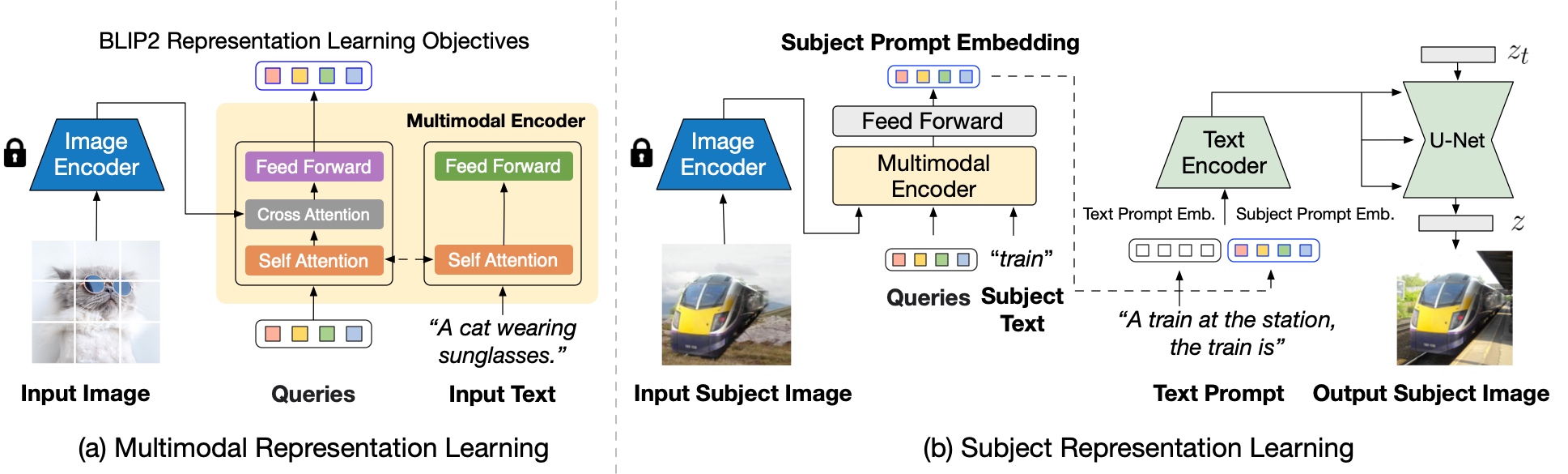

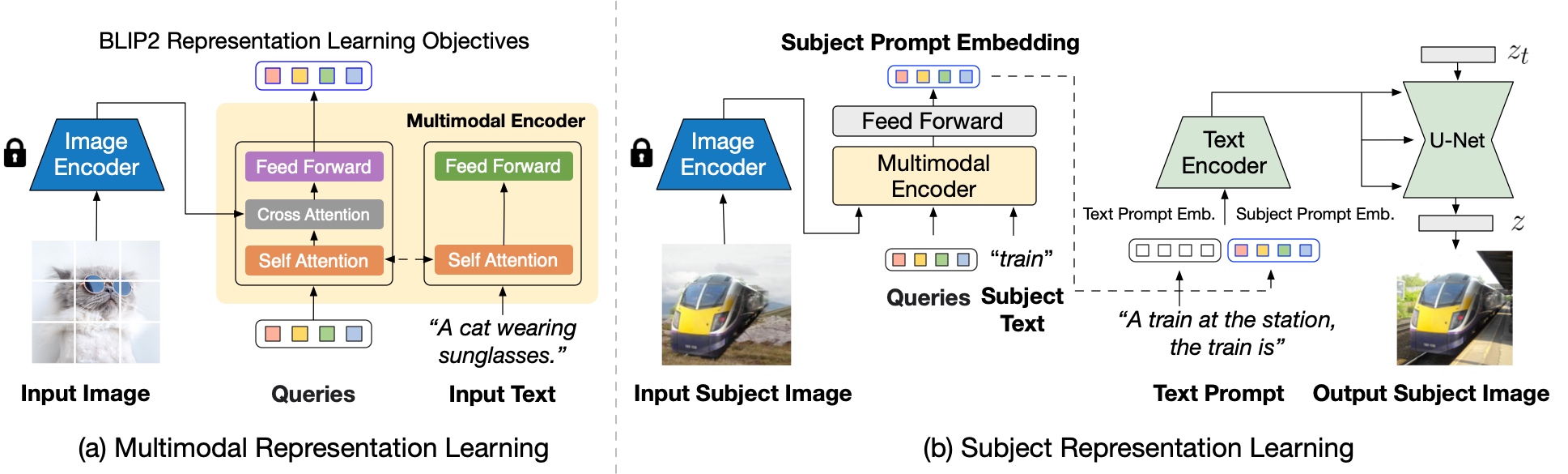

# BLIP-Diffusion: Pre-trained Subject Representation for Controllable Text-to-Image Generation and Editing

<!-- Provide a quick summary of what the model is/does. -->

Model card for BLIP-Diffusion, a text to image Diffusion model which enables zero-shot subject-driven generation and control-guided zero-shot generation.

The abstract from the paper is:

*Subject-driven text-to-image generation models create novel renditions of an input subject based on text prompts. Existing models suffer from lengthy fine-tuning and difficulties preserving the subject fidelity. To overcome these limitations, we introduce BLIP-Diffusion, a new subject-driven image generation model that supports multimodal control which consumes inputs of subject images and text prompts. Unlike other subject-driven generation models, BLIP-Diffusion introduces a new multimodal encoder which is pre-trained to provide subject representation. We first pre-train the multimodal encoder following BLIP-2 to produce visual representation aligned with the text. Then we design a subject representation learning task which enables a diffusion model to leverage such visual representation and generates new subject renditions. Compared with previous methods such as DreamBooth, our model enables zero-shot subject-driven generation, and efficient fine-tuning for customized subject with up to 20x speedup. We also demonstrate that BLIP-Diffusion can be flexibly combined with existing techniques such as ControlNet and prompt-to-prompt to enable novel subject-driven generation and editing applications.*

The model is created by Dongxu Li, Junnan Li, Steven C.H. Hoi.

### Model Sources

<!-- Provide the basic links for the model. -->

- **Original Repository:** https://github.com/salesforce/LAVIS/tree/main

- **Project Page:** https://dxli94.github.io/BLIP-Diffusion-website/

## Uses

### Zero-Shot Subject Driven Generation

```python

from diffusers.pipelines import BlipDiffusionPipeline

from diffusers.utils import load_image

import torch

blip_diffusion_pipe = BlipDiffusionPipeline.from_pretrained(

"Salesforce/blipdiffusion", torch_dtype=torch.float16

).to("cuda")

cond_subject = "dog"

tgt_subject = "dog"

text_prompt_input = "swimming underwater"

cond_image = load_image(

"https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/dog.jpg"

)

iter_seed = 88888

guidance_scale = 7.5

num_inference_steps = 25

negative_prompt = "over-exposure, under-exposure, saturated, duplicate, out of frame, lowres, cropped, worst quality, low quality, jpeg artifacts, morbid, mutilated, out of frame, ugly, bad anatomy, bad proportions, deformed, blurry, duplicate"

output = blip_diffusion_pipe(

text_prompt_input,

cond_image,

cond_subject,

tgt_subject,

guidance_scale=guidance_scale,

num_inference_steps=num_inference_steps,

neg_prompt=negative_prompt,

height=512,

width=512,

).images

output[0].save("image.png")

```

Input Image : <img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/dog.jpg" style="width:500px;"/>

Generatred Image : <img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/dog_underwater.png" style="width:500px;"/>

### Controlled subject-driven generation

```python

from diffusers.pipelines import BlipDiffusionControlNetPipeline

from diffusers.utils import load_image

from controlnet_aux import CannyDetector

blip_diffusion_pipe = BlipDiffusionControlNetPipeline.from_pretrained(

"Salesforce/blipdiffusion-controlnet", torch_dtype=torch.float16

).to("cuda")

style_subject = "flower" # subject that defines the style

tgt_subject = "teapot" # subject to generate.

text_prompt = "on a marble table"

cldm_cond_image = load_image(

"https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/kettle.jpg"

).resize((512, 512))

canny = CannyDetector()

cldm_cond_image = canny(cldm_cond_image, 30, 70, output_type="pil")

style_image = load_image(

"https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/flower.jpg"

)

guidance_scale = 7.5

num_inference_steps = 50

negative_prompt = "over-exposure, under-exposure, saturated, duplicate, out of frame, lowres, cropped, worst quality, low quality, jpeg artifacts, morbid, mutilated, out of frame, ugly, bad anatomy, bad proportions, deformed, blurry, duplicate"

output = blip_diffusion_pipe(

text_prompt,

style_image,

cldm_cond_image,

style_subject,

tgt_subject,

guidance_scale=guidance_scale,

num_inference_steps=num_inference_steps,

neg_prompt=negative_prompt,

height=512,

width=512,

).images

output[0].save("image.png")

```

Input Style Image : <img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/flower.jpg" style="width:500px;"/>

Canny Edge Input : <img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/kettle.jpg" style="width:500px;"/>

Generated Image : <img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/canny_generated.png" style="width:500px;"/>

### Controlled subject-driven generation Scribble

```python

from diffusers.pipelines import BlipDiffusionControlNetPipeline

from diffusers.utils import load_image

from controlnet_aux import HEDdetector

blip_diffusion_pipe = BlipDiffusionControlNetPipeline.from_pretrained(

"Salesforce/blipdiffusion-controlnet"

)

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-scribble")

blip_diffusion_pipe.controlnet = controlnet

blip_diffusion_pipe.to("cuda")

style_subject = "flower" # subject that defines the style

tgt_subject = "bag" # subject to generate.

text_prompt = "on a table"

cldm_cond_image = load_image(

"https://huggingface.co/lllyasviel/sd-controlnet-scribble/resolve/main/images/bag.png"

).resize((512, 512))

hed = HEDdetector.from_pretrained("lllyasviel/Annotators")

cldm_cond_image = hed(cldm_cond_image)

style_image = load_image(

"https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/flower.jpg"

)

guidance_scale = 7.5

num_inference_steps = 50

negative_prompt = "over-exposure, under-exposure, saturated, duplicate, out of frame, lowres, cropped, worst quality, low quality, jpeg artifacts, morbid, mutilated, out of frame, ugly, bad anatomy, bad proportions, deformed, blurry, duplicate"

output = blip_diffusion_pipe(

text_prompt,

style_image,

cldm_cond_image,

style_subject,

tgt_subject,

guidance_scale=guidance_scale,

num_inference_steps=num_inference_steps,

neg_prompt=negative_prompt,

height=512,

width=512,

).images

output[0].save("image.png")

```

Input Style Image : <img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/flower.jpg" style="width:500px;"/>

Scribble Input : <img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/scribble.png" style="width:500px;"/>

Generated Image : <img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/scribble_output.png" style="width:500px;"/>

## Model Architecture

Blip-Diffusion learns a **pre-trained subject representation**. uch representation aligns with text embeddings and in the meantime also encodes the subject appearance. This allows efficient fine-tuning of the model for high-fidelity subject-driven applications, such as text-to-image generation, editing and style transfer.

To this end, they design a two-stage pre-training strategy to learn generic subject representation. In the first pre-training stage, they perform multimodal representation learning, which enforces BLIP-2 to produce text-aligned visual features based on the input image. In the second pre-training stage, they design a subject representation learning task, called prompted context generation, where the diffusion model learns to generate novel subject renditions based on the input visual features.

To achieve this, they curate pairs of input-target images with the same subject appearing in different contexts. Specifically, they synthesize input images by composing the subject with a random background. During pre-training, they feed the synthetic input image and the subject class label through BLIP-2 to obtain the multimodal embeddings as subject representation. The subject representation is then combined with a text prompt to guide the generation of the target image.

The architecture is also compatible to integrate with established techniques built on top of the diffusion model, such as ControlNet.

They attach the U-Net of the pre-trained ControlNet to that of BLIP-Diffusion via residuals. In this way, the model takes into account the input structure condition, such as edge maps and depth maps, in addition to the subject cues. Since the model inherits the architecture of the original latent diffusion model, they observe satisfying generations using off-the-shelf integration with pre-trained ControlNet without further training.

<img src="https://huggingface.co/datasets/ayushtues/blipdiffusion_images/resolve/main/arch_controlnet.png" style="width:50%;"/>

## Citation

**BibTeX:**

If you find this repository useful in your research, please cite:

```

@misc{li2023blipdiffusion,

title={BLIP-Diffusion: Pre-trained Subject Representation for Controllable Text-to-Image Generation and Editing},

author={Dongxu Li and Junnan Li and Steven C. H. Hoi},

year={2023},

eprint={2305.14720},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

|

JuanMa360/kitchen-style-classification

|

JuanMa360

| 2023-09-21T15:51:55Z | 213 | 1 |

transformers

|

[

"transformers",

"pytorch",

"vit",

"image-classification",

"huggingpics",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-09-21T15:51:51Z |

---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: kitchen-style-classification

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.7284768223762512

---

# kitchen-style-classification

House & Apartaments Classification model🤗🖼️

## Example Images

#### kitchens-island

#### kitchens-l

#### kitchens-lineal

#### kitchens-u

|

am-infoweb/QA_SYNTH_19_SEPT_FINETUNE_1.0

|

am-infoweb

| 2023-09-21T15:51:29Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"question-answering",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-09-21T15:00:50Z |

---

tags:

- generated_from_trainer

model-index:

- name: QA_SYNTH_19_SEPT_FINETUNE_1.0

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# QA_SYNTH_19_SEPT_FINETUNE_1.0

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1182

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 0.1211 | 1.0 | 1350 | 0.1318 |

| 0.0599 | 2.0 | 2700 | 0.1617 |

| 0.0571 | 3.0 | 4050 | 0.0833 |

| 0.0248 | 4.0 | 5400 | 0.0396 |

| 0.0154 | 5.0 | 6750 | 0.0911 |

| 0.0 | 6.0 | 8100 | 0.1054 |

| 0.0 | 7.0 | 9450 | 0.1086 |

| 0.0 | 8.0 | 10800 | 0.1224 |

| 0.0002 | 9.0 | 12150 | 0.1155 |

| 0.0025 | 10.0 | 13500 | 0.1182 |

### Framework versions

- Transformers 4.32.0.dev0

- Pytorch 2.0.1+cu117

- Datasets 2.14.4

- Tokenizers 0.13.3

|

ryatora/distilbert-base-uncased-finetuned-emotion

|

ryatora

| 2023-09-21T15:36:40Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-09-19T12:44:18Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: split

metrics:

- name: Accuracy

type: accuracy

value: 0.9225

- name: F1

type: f1

value: 0.9224787080842691

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2185

- Accuracy: 0.9225

- F1: 0.9225

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8423 | 1.0 | 250 | 0.3084 | 0.9065 | 0.9049 |

| 0.2493 | 2.0 | 500 | 0.2185 | 0.9225 | 0.9225 |

### Framework versions

- Transformers 4.16.2

- Pytorch 2.0.1+cu118

- Datasets 2.14.5

- Tokenizers 0.14.0

|

ShivamMangale/XLM-Roberta-base-allhiweakdap_5th_iteration_d5

|

ShivamMangale

| 2023-09-21T15:35:52Z | 122 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"base_model:FacebookAI/xlm-roberta-base",

"base_model:finetune:FacebookAI/xlm-roberta-base",

"license:mit",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-09-21T14:45:26Z |

---

license: mit

base_model: xlm-roberta-base

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: XLM-Roberta-base-allhiweakdap_5th_iteration_d5

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# XLM-Roberta-base-allhiweakdap_5th_iteration_d5

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1.3122e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

### Framework versions

- Transformers 4.33.2

- Pytorch 2.0.1+cu117

- Datasets 2.14.5

- Tokenizers 0.13.3

|

kla-20/qa-flant5

|

kla-20

| 2023-09-21T15:30:53Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-09-21T15:23:27Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: qa-flant5

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# qa-flant5

This model is a fine-tuned version of [google/flan-t5-base](https://huggingface.co/google/flan-t5-base) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 1

### Training results

### Framework versions

- Transformers 4.27.2

- Pytorch 1.13.1+cu117

- Datasets 2.11.0

- Tokenizers 0.13.3

|

SamuraiPetya/ppo-LunarLander-v2

|

SamuraiPetya

| 2023-09-21T15:26:57Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-09-21T15:26:34Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 258.01 +/- 17.73

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

MarcosMunoz95/SpaceInvadersNoFrameskip

|

MarcosMunoz95

| 2023-09-21T15:25:11Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-09-21T15:24:37Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 670.00 +/- 96.93

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga MarcosMunoz95 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga MarcosMunoz95 -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga MarcosMunoz95

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

# Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

Uberariy/q-FrozenLake-v1-4x4-noSlippery

|

Uberariy

| 2023-09-21T15:24:34Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-09-21T15:24:31Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="Uberariy/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

ShivamMangale/XLM-Roberta-base-all_hi_weakdap_4th_iteration_d4_d3_d2_d1_d0

|

ShivamMangale

| 2023-09-21T15:24:22Z | 133 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"base_model:FacebookAI/xlm-roberta-base",

"base_model:finetune:FacebookAI/xlm-roberta-base",

"license:mit",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-09-21T14:52:40Z |

---

license: mit

base_model: xlm-roberta-base

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: XLM-Roberta-base-all_hi_weakdap_4th_iteration_d4_d3_d2_d1_d0

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# XLM-Roberta-base-all_hi_weakdap_4th_iteration_d4_d3_d2_d1_d0

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

### Framework versions

- Transformers 4.33.2

- Pytorch 2.0.1+cu117

- Datasets 2.14.5

- Tokenizers 0.13.3

|

Govern/textual_inversion_airplane

|

Govern

| 2023-09-21T15:17:46Z | 14 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"textual_inversion",

"base_model:runwayml/stable-diffusion-v1-5",

"base_model:adapter:runwayml/stable-diffusion-v1-5",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-09-21T13:37:06Z |

---

license: creativeml-openrail-m

base_model: runwayml/stable-diffusion-v1-5

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- textual_inversion

inference: true

---

# Textual inversion text2image fine-tuning - Govern/textual_inversion_airplane

These are textual inversion adaption weights for runwayml/stable-diffusion-v1-5. You can find some example images in the following.

|

prathameshdalal/videomae-base-finetuned-ucf101-subset

|

prathameshdalal

| 2023-09-21T15:08:40Z | 69 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"videomae",

"video-classification",

"generated_from_trainer",

"base_model:MCG-NJU/videomae-base",

"base_model:finetune:MCG-NJU/videomae-base",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

] |

video-classification

| 2023-08-20T08:43:35Z |

---

license: cc-by-nc-4.0

base_model: MCG-NJU/videomae-base

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: videomae-base-finetuned-ucf101-subset

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# videomae-base-finetuned-ucf101-subset

This model is a fine-tuned version of [MCG-NJU/videomae-base](https://huggingface.co/MCG-NJU/videomae-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1362

- Accuracy: 0.9714

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- training_steps: 600

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.2638 | 0.06 | 38 | 2.2761 | 0.1143 |

| 1.6112 | 1.06 | 76 | 1.0811 | 0.7143 |

| 0.5768 | 2.06 | 114 | 0.4538 | 0.8857 |

| 0.298 | 3.06 | 152 | 0.4841 | 0.8 |

| 0.0856 | 4.06 | 190 | 0.6021 | 0.8 |

| 0.2283 | 5.06 | 228 | 0.2103 | 0.9286 |

| 0.0559 | 6.06 | 266 | 0.1142 | 0.9714 |

| 0.2279 | 7.06 | 304 | 0.1132 | 0.9714 |

| 0.0145 | 8.06 | 342 | 0.0762 | 0.9714 |

| 0.0057 | 9.06 | 380 | 0.0226 | 1.0 |

| 0.0076 | 10.06 | 418 | 0.1619 | 0.9714 |

| 0.0046 | 11.06 | 456 | 0.1617 | 0.9714 |

| 0.0034 | 12.06 | 494 | 0.1676 | 0.9571 |

| 0.0034 | 13.06 | 532 | 0.1398 | 0.9714 |

| 0.0034 | 14.06 | 570 | 0.1345 | 0.9714 |

| 0.0035 | 15.05 | 600 | 0.1362 | 0.9714 |

### Framework versions

- Transformers 4.33.2

- Pytorch 1.10.0+cu113

- Datasets 2.14.5

- Tokenizers 0.13.3

|

CyberHarem/hiiragi_shino_idolmastercinderellagirls

|

CyberHarem

| 2023-09-21T14:54:07Z | 0 | 0 | null |

[

"art",

"text-to-image",

"dataset:CyberHarem/hiiragi_shino_idolmastercinderellagirls",

"license:mit",

"region:us"

] |

text-to-image

| 2023-09-21T14:42:01Z |

---

license: mit

datasets:

- CyberHarem/hiiragi_shino_idolmastercinderellagirls

pipeline_tag: text-to-image

tags:

- art

---

# Lora of hiiragi_shino_idolmastercinderellagirls

This model is trained with [HCP-Diffusion](https://github.com/7eu7d7/HCP-Diffusion). And the auto-training framework is maintained by [DeepGHS Team](https://huggingface.co/deepghs).

The base model used during training is [NAI](https://huggingface.co/deepghs/animefull-latest), and the base model used for generating preview images is [Meina/MeinaMix_V11](https://huggingface.co/Meina/MeinaMix_V11).

After downloading the pt and safetensors files for the specified step, you need to use them simultaneously. The pt file will be used as an embedding, while the safetensors file will be loaded for Lora.

For example, if you want to use the model from step 4760, you need to download `4760/hiiragi_shino_idolmastercinderellagirls.pt` as the embedding and `4760/hiiragi_shino_idolmastercinderellagirls.safetensors` for loading Lora. By using both files together, you can generate images for the desired characters.

**The best step we recommend is 4760**, with the score of 0.994. The trigger words are:

1. `hiiragi_shino_idolmastercinderellagirls`

2. `long_hair, black_hair, blush, brown_eyes, smile, jewelry, breasts, large_breasts`

For the following groups, it is not recommended to use this model and we express regret:

1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.

2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who finds the generated image content offensive to their values.

These are available steps:

| Steps | Score | Download | pattern_1 | pattern_2 | pattern_3 | pattern_4 | bikini | bondage | free | maid | miko | nude | nude2 | suit | yukata |

|:---------|:----------|:-----------------------------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------|:--------------------------------------------------|:-----------------------------------------------|:-------------------------------------|:-------------------------------------|:-----------------------------------------------|:------------------------------------------------|:-------------------------------------|:-----------------------------------------|

| 5100 | 0.976 | [Download](5100/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](5100/previews/bondage.png) | [<NSFW, click to see>](5100/previews/free.png) |  |  | [<NSFW, click to see>](5100/previews/nude.png) | [<NSFW, click to see>](5100/previews/nude2.png) |  |  |

| **4760** | **0.994** | [**Download**](4760/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](4760/previews/bondage.png) | [<NSFW, click to see>](4760/previews/free.png) |  |  | [<NSFW, click to see>](4760/previews/nude.png) | [<NSFW, click to see>](4760/previews/nude2.png) |  |  |

| 4420 | 0.981 | [Download](4420/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](4420/previews/bondage.png) | [<NSFW, click to see>](4420/previews/free.png) |  |  | [<NSFW, click to see>](4420/previews/nude.png) | [<NSFW, click to see>](4420/previews/nude2.png) |  |  |

| 4080 | 0.977 | [Download](4080/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](4080/previews/bondage.png) | [<NSFW, click to see>](4080/previews/free.png) |  |  | [<NSFW, click to see>](4080/previews/nude.png) | [<NSFW, click to see>](4080/previews/nude2.png) |  |  |

| 3740 | 0.963 | [Download](3740/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](3740/previews/bondage.png) | [<NSFW, click to see>](3740/previews/free.png) |  |  | [<NSFW, click to see>](3740/previews/nude.png) | [<NSFW, click to see>](3740/previews/nude2.png) |  |  |

| 3400 | 0.951 | [Download](3400/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](3400/previews/bondage.png) | [<NSFW, click to see>](3400/previews/free.png) |  |  | [<NSFW, click to see>](3400/previews/nude.png) | [<NSFW, click to see>](3400/previews/nude2.png) |  |  |

| 3060 | 0.984 | [Download](3060/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](3060/previews/bondage.png) | [<NSFW, click to see>](3060/previews/free.png) |  |  | [<NSFW, click to see>](3060/previews/nude.png) | [<NSFW, click to see>](3060/previews/nude2.png) |  |  |

| 2720 | 0.966 | [Download](2720/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](2720/previews/bondage.png) | [<NSFW, click to see>](2720/previews/free.png) |  |  | [<NSFW, click to see>](2720/previews/nude.png) | [<NSFW, click to see>](2720/previews/nude2.png) |  |  |

| 2380 | 0.938 | [Download](2380/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](2380/previews/bondage.png) | [<NSFW, click to see>](2380/previews/free.png) |  |  | [<NSFW, click to see>](2380/previews/nude.png) | [<NSFW, click to see>](2380/previews/nude2.png) |  |  |

| 2040 | 0.938 | [Download](2040/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](2040/previews/bondage.png) | [<NSFW, click to see>](2040/previews/free.png) |  |  | [<NSFW, click to see>](2040/previews/nude.png) | [<NSFW, click to see>](2040/previews/nude2.png) |  |  |

| 1700 | 0.971 | [Download](1700/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](1700/previews/bondage.png) | [<NSFW, click to see>](1700/previews/free.png) |  |  | [<NSFW, click to see>](1700/previews/nude.png) | [<NSFW, click to see>](1700/previews/nude2.png) |  |  |

| 1360 | 0.955 | [Download](1360/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](1360/previews/bondage.png) | [<NSFW, click to see>](1360/previews/free.png) |  |  | [<NSFW, click to see>](1360/previews/nude.png) | [<NSFW, click to see>](1360/previews/nude2.png) |  |  |

| 1020 | 0.792 | [Download](1020/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](1020/previews/bondage.png) | [<NSFW, click to see>](1020/previews/free.png) |  |  | [<NSFW, click to see>](1020/previews/nude.png) | [<NSFW, click to see>](1020/previews/nude2.png) |  |  |

| 680 | 0.937 | [Download](680/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](680/previews/bondage.png) | [<NSFW, click to see>](680/previews/free.png) |  |  | [<NSFW, click to see>](680/previews/nude.png) | [<NSFW, click to see>](680/previews/nude2.png) |  |  |

| 340 | 0.867 | [Download](340/hiiragi_shino_idolmastercinderellagirls.zip) |  |  |  |  |  | [<NSFW, click to see>](340/previews/bondage.png) | [<NSFW, click to see>](340/previews/free.png) |  |  | [<NSFW, click to see>](340/previews/nude.png) | [<NSFW, click to see>](340/previews/nude2.png) |  |  |

|

ShivamMangale/XLM-Roberta-base-all_hi_weakdap_4th_iteration_d4_d3_d2_d1

|

ShivamMangale

| 2023-09-21T14:52:39Z | 122 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"base_model:FacebookAI/xlm-roberta-base",

"base_model:finetune:FacebookAI/xlm-roberta-base",

"license:mit",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-09-21T14:34:47Z |

---

license: mit

base_model: xlm-roberta-base

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: XLM-Roberta-base-all_hi_weakdap_4th_iteration_d4_d3_d2_d1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# XLM-Roberta-base-all_hi_weakdap_4th_iteration_d4_d3_d2_d1

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

### Framework versions

- Transformers 4.33.2

- Pytorch 2.0.1+cu117

- Datasets 2.14.5

- Tokenizers 0.13.3

|

mann-e/mann-e_5.4

|

mann-e

| 2023-09-21T14:52:30Z | 3 | 0 |

diffusers

|

[

"diffusers",

"text-to-image",

"region:us"

] |

text-to-image

| 2023-09-21T12:47:14Z |

---

library_name: diffusers

pipeline_tag: text-to-image

---

# Mann-E 5.4

This repository represents what is the main brain of [Mann-E](https://manne.ir) artificial intelligence platform.

## Features

1. _LoRa support_. In previous versions, most of LoRa models weren't working perfectly with the model.

2. _More coherent results_. Compared to the old versions, this version has more "midjourney" feel to its outputs.

3. _New License_. Unlike old versions this one isn't licensed undet MIT, we decided to go with our own license.

## Samples

<span align="center">

<img src="https://huggingface.co/mann-e/mann-e_5.4/resolve/main/grid-1.png" width=512px />

<br/>

<img src="https://huggingface.co/mann-e/mann-e_5.4/resolve/main/grid-2.png" width=512px />

<br/>

<img src="https://huggingface.co/mann-e/mann-e_5.4/resolve/main/grid-3.png" width=512px />

<br/>

<img src="https://huggingface.co/mann-e/mann-e_5.4/resolve/main/grid-4.png" width=512px />

<br/>

<img src="https://huggingface.co/mann-e/mann-e_5.4/resolve/main/grid-5.png" width=512px />

</span>

## License

This software and associated checkpoints are provided by Mann-E for educational and non-commercial use only. By accessing or using this software and checkpoints, you agree to the following terms and conditions:

1. Access and Use:

- You are granted the right to access and use the source code and checkpoints for educational and non-commercial purposes.

2. Modification and Distribution:

- You may modify and distribute the source code and checkpoints solely for educational and non-commercial purposes, provided that you retain this license notice.

3. Commercial Use:

- Commercial use of this software and checkpoints is strictly prohibited without the explicit written consent of the Copyright Holder.

4. Fine-tuning of Checkpoints:

- You may not fine-tune or modify the provided checkpoints without obtaining the express written consent of the Copyright Holder.

5. No Warranty:

- This software and checkpoints are provided "as is" without any warranty. The Copyright Holder shall not be liable for any damages or liabilities arising out of the use or inability to use the software and checkpoints.

6. Termination:

- This license is effective until terminated by the Copyright Holder. Your rights under this license will terminate automatically without notice from the Copyright Holder if you fail to comply with any term or condition of this license.

If you do not agree to these terms and conditions or do not have the legal authority to bind yourself, you may not use, modify, or distribute this software and checkpoints.

For inquiries regarding commercial use or fine-tuning of checkpoints, please contact Mann-E.

|

nickypro/tinyllama-15M-fp32

|

nickypro

| 2023-09-21T14:50:50Z | 152 | 0 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-09-16T17:23:46Z |

---

license: mit

---

This is the Float32 15M parameter Llama 2 architecture model trained on the TinyStories dataset.

These are converted from

[karpathy/tinyllamas](https://huggingface.co/karpathy/tinyllamas).

See the [llama2.c](https://github.com/karpathy/llama2.c) project for more details.

|

nickypro/tinyllama-42M-fp32

|

nickypro

| 2023-09-21T14:50:34Z | 150 | 1 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-09-16T17:25:17Z |

---

license: mit

---

This is the float32 42M parameter Llama 2 architecture model trained on the TinyStories dataset.

These are converted from

[karpathy/tinyllamas](https://huggingface.co/karpathy/tinyllamas).

See the [llama2.c](https://github.com/karpathy/llama2.c) project for more details.

|

yunosuken/results

|

yunosuken

| 2023-09-21T14:50:34Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"base_model:tohoku-nlp/bert-large-japanese-v2",

"base_model:finetune:tohoku-nlp/bert-large-japanese-v2",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-09-13T14:15:12Z |

---

license: apache-2.0

base_model: cl-tohoku/bert-large-japanese-v2

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: bert-large-japanease-v2-gpt4-relevance-learned

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-large-japanease-v2-gpt4-relevance-learned

This model is a fine-tuned version of [cl-tohoku/bert-large-japanese-v2](https://huggingface.co/cl-tohoku/bert-large-japanese-v2) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.2693

- Accuracy: 0.885

- F1: 0.8788

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters