modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-03 00:36:49

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 535

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-03 00:36:49

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

monai-test/valve_landmarks

|

monai-test

| 2023-08-16T03:03:17Z | 0 | 0 |

monai

|

[

"monai",

"medical",

"license:mit",

"region:us"

] | null | 2023-08-16T03:03:10Z |

---

tags:

- monai

- medical

library_name: monai

license: mit

---

# 2D Cardiac Valve Landmark Regressor

This network identifies 10 different landmarks in 2D+t MR images of the heart (2 chamber, 3 chamber, and 4 chamber) representing the insertion locations of valve leaflets into the myocardial wall. These coordinates are used in part of the construction of 3D FEM cardiac models suitable for physics simulation of heart functions.

Input images are individual 2D slices from the time series, and the output from the network is a `(2, 10)` set of 2D points in `HW` image coordinate space. The 10 coordinates correspond to the attachment point for these valves:

1. Mitral anterior in 2CH

2. Mitral posterior in 2CH

3. Mitral septal in 3CH

4. Mitral free wall in 3CH

5. Mitral septal in 4CH

6. Mitral free wall in 4CH

7. Aortic septal

8. Aortic free wall

9. Tricuspid septal

10. Tricuspid free wall

Landmarks which do not appear in a particular image are predicted to be `(0, 0)` or close to this location. The mitral valve is expected to appear in all three views. Landmarks are not provided for the pulmonary valve.

Example plot of landmarks on a single frame, see [view_results.ipynb](./view_results.ipynb) for visualising network output:

## Training

The training script `train.json` is provided to train the network using a dataset of image pairs containing the MR image and a landmark image. This is done to reuse image-based transforms which do not currently operate on geometry. A number of other transforms are provided in `valve_landmarks.py` to implement Fourier-space dropout, image shifting which preserve landmarks, and smooth-field deformation applied to images and landmarks.

The dataset used for training unfortunately cannot be made public, however the training script can be used with any NPZ file containing the training image stack in key `trainImgs` and landmark image stack in `trainLMImgs`, plus `testImgs` and `testLMImgs` containing validation data. The landmark images are defined as 0 for every non-landmark pixel, with landmark pixels contaning the following values for each landmark type:

* 10: Mitral anterior in 2CH

* 15: Mitral posterior in 2CH

* 20: Mitral septal in 3CH

* 25: Mitral free wall in 3CH

* 30: Mitral septal in 4CH

* 35: Mitral free wall in 4CH

* 100: Aortic septal

* 150: Aortic free wall

* 200: Tricuspid septal

* 250: Tricuspid free wall

The following command will train with the default NPZ filename `./valvelandmarks.npz`, assuming the current directory is the bundle directory:

```sh

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json \

--bundle_root . --dataset_file ./valvelandmarks.npz --output_dir /path/to/outputs

```

## Inference

The included `inference.json` script will run inference on a directory containing Nifti files whose images have shape `(256, 256, 1, N)` for `N` timesteps. For each image the output in the `output_dir` directory will be a npy file containing a result array of shape `(N, 2, 10)` storing the 10 coordinates for each `N` timesteps. Invoking this script can be done as follows, assuming the current directory is the bundle directory:

```sh

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json \

--bundle_root . --dataset_dir /path/to/data --output_dir /path/to/outputs

```

The provided test Nifti file can be placed in a directory which is then used as the `dataset_dir` value. This image was derived from [the AMRG Cardiac Atlas dataset](http://www.cardiacatlas.org/studies/amrg-cardiac-atlas) (AMRG Cardiac Atlas, Auckland MRI Research Group, Auckland, New Zealand). The results from this inference can be visualised by changing path values in [view_results.ipynb](./view_results.ipynb).

### Reference

The work for this model and its application is described in:

`Kerfoot, E, King, CE, Ismail, T, Nordsletten, D & Miller, R 2021, Estimation of Cardiac Valve Annuli Motion with Deep Learning. in E Puyol Anton, M Pop, M Sermesant, V Campello, A Lalande, K Lekadir, A Suinesiaputra, O Camara & A Young (eds), Statistical Atlases and Computational Models of the Heart. MandMs and EMIDEC Challenges - 11th International Workshop, STACOM 2020, Held in Conjunction with MICCAI 2020, Revised Selected Papers. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 12592 LNCS, Springer Science and Business Media Deutschland GmbH, pp. 146-155, 11th International Workshop on Statistical Atlases and Computational Models of the Heart, STACOM 2020 held in Conjunction with MICCAI 2020, Lima, Peru, 4/10/2020. https://doi.org/10.1007/978-3-030-68107-4_15`

# License

This model is released under the MIT License. The license file is included with the model.

|

monai-test/swin_unetr_btcv_segmentation

|

monai-test

| 2023-08-16T03:03:07Z | 0 | 1 |

monai

|

[

"monai",

"medical",

"arxiv:2201.01266",

"arxiv:2111.14791",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T03:01:00Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

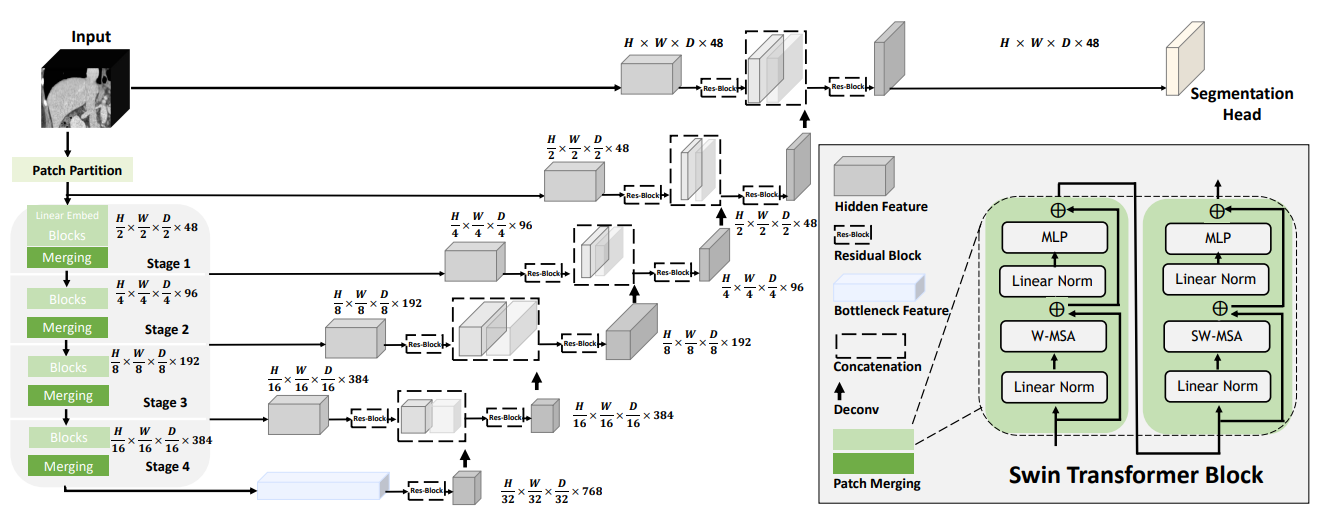

# Model Overview

A pre-trained Swin UNETR [1,2] for volumetric (3D) multi-organ segmentation using CT images from Beyond the Cranial Vault (BTCV) Segmentation Challenge dataset [3].

## Data

The training data is from the [BTCV dataset](https://www.synapse.org/#!Synapse:syn3193805/wiki/89480/) (Register through `Synapse` and download the `Abdomen/RawData.zip`).

- Target: Multi-organs

- Task: Segmentation

- Modality: CT

- Size: 30 3D volumes (24 Training + 6 Testing)

### Preprocessing

The dataset format needs to be redefined using the following commands:

```

unzip RawData.zip

mv RawData/Training/img/ RawData/imagesTr

mv RawData/Training/label/ RawData/labelsTr

mv RawData/Testing/img/ RawData/imagesTs

```

## Training configuration

The training as performed with the following:

- GPU: At least 32GB of GPU memory

- Actual Model Input: 96 x 96 x 96

- AMP: True

- Optimizer: Adam

- Learning Rate: 2e-4

### Memory Consumption

- Dataset Manager: CacheDataset

- Data Size: 30 samples

- Cache Rate: 1.0

- Single GPU - System RAM Usage: 5.8G

### Memory Consumption Warning

If you face memory issues with CacheDataset, you can either switch to a regular Dataset class or lower the caching rate `cache_rate` in the configurations within range [0, 1] to minimize the System RAM requirements.

### Input

1 channel

- CT image

### Output

14 channels:

- 0: Background

- 1: Spleen

- 2: Right Kidney

- 3: Left Kideny

- 4: Gallbladder

- 5: Esophagus

- 6: Liver

- 7: Stomach

- 8: Aorta

- 9: IVC

- 10: Portal and Splenic Veins

- 11: Pancreas

- 12: Right adrenal gland

- 13: Left adrenal gland

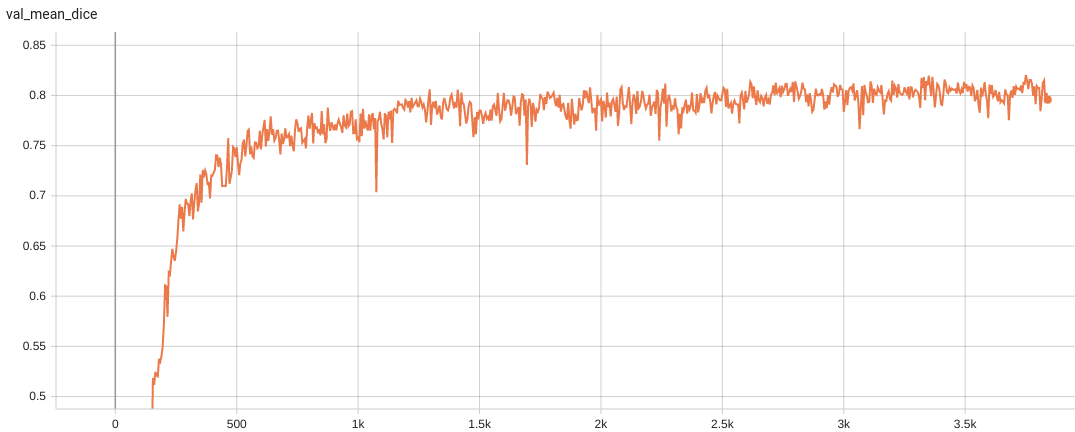

## Performance

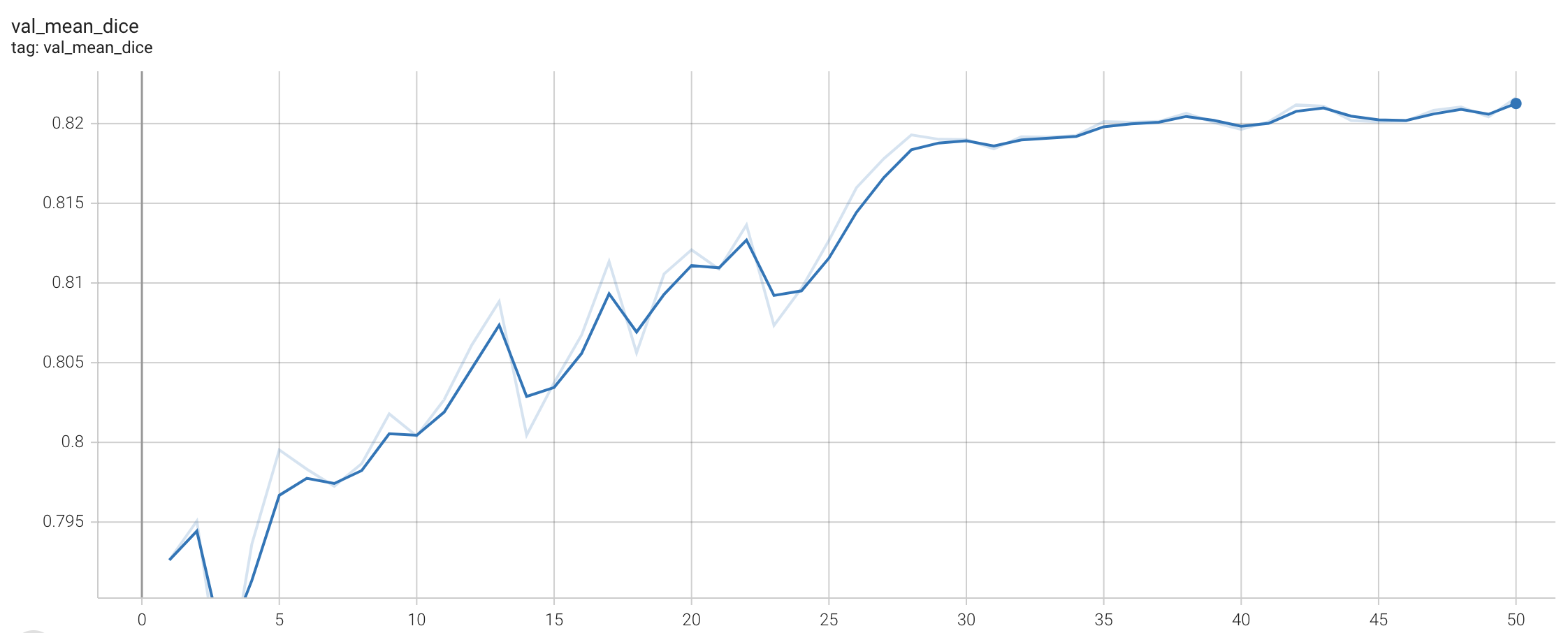

Dice score was used for evaluating the performance of the model. This model achieves a mean dice score of 0.82

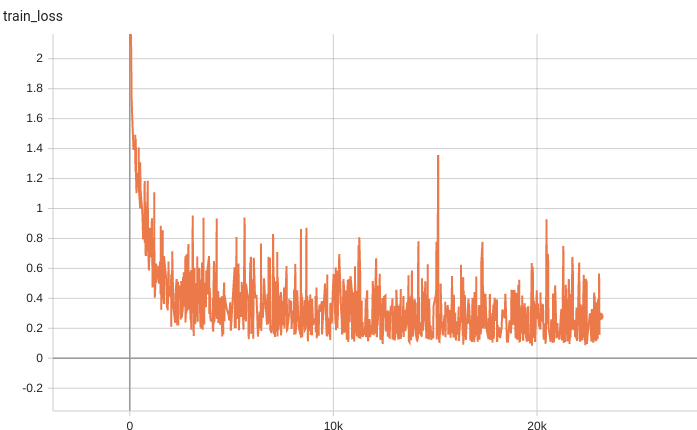

#### Training Loss

#### Validation Dice

## MONAI Bundle Commands

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

For more details usage instructions, visit the [MONAI Bundle Configuration Page](https://docs.monai.io/en/latest/config_syntax.html).

#### Execute training:

```

python -m monai.bundle run --config_file configs/train.json

```

Please note that if the default dataset path is not modified with the actual path in the bundle config files, you can also override it by using `--dataset_dir`:

```

python -m monai.bundle run --config_file configs/train.json --dataset_dir <actual dataset path>

```

#### Override the `train` config to execute multi-GPU training:

```

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']"

```

Please note that the distributed training-related options depend on the actual running environment; thus, users may need to remove `--standalone`, modify `--nnodes`, or do some other necessary changes according to the machine used. For more details, please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html).

#### Override the `train` config to execute evaluation with the trained model:

```

python -m monai.bundle run --config_file "['configs/train.json','configs/evaluate.json']"

```

#### Execute inference:

```

python -m monai.bundle run --config_file configs/inference.json

```

#### Export checkpoint to TorchScript file:

TorchScript conversion is currently not supported.

# References

[1] Hatamizadeh, Ali, et al. "Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images." arXiv preprint arXiv:2201.01266 (2022). https://arxiv.org/abs/2201.01266.

[2] Tang, Yucheng, et al. "Self-supervised pre-training of swin transformers for 3d medical image analysis." arXiv preprint arXiv:2111.14791 (2021). https://arxiv.org/abs/2111.14791.

[3] Landman B, et al. "MICCAI multi-atlas labeling beyond the cranial vault–workshop and challenge." In Proc. of the MICCAI Multi-Atlas Labeling Beyond Cranial Vault—Workshop Challenge 2015 Oct (Vol. 5, p. 12).

# License

Copyright (c) MONAI Consortium

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

monai-test/renalStructures_UNEST_segmentation

|

monai-test

| 2023-08-16T03:00:07Z | 0 | 0 |

monai

|

[

"monai",

"medical",

"arxiv:2203.02430",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T02:59:13Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

# Description

A pre-trained model for training and inferencing volumetric (3D) kidney substructures segmentation from contrast-enhanced CT images (Arterial/Portal Venous Phase). Training pipeline is provided to support model fine-tuning with bundle and MONAI Label active learning.

A tutorial and release of model for kidney cortex, medulla and collecting system segmentation.

Authors: Yinchi Zhou (yinchi.zhou@vanderbilt.edu) | Xin Yu (xin.yu@vanderbilt.edu) | Yucheng Tang (yuchengt@nvidia.com) |

# Model Overview

A pre-trained UNEST base model [1] for volumetric (3D) renal structures segmentation using dynamic contrast enhanced arterial or venous phase CT images.

## Data

The training data is from the [ImageVU RenalSeg dataset] from Vanderbilt University and Vanderbilt University Medical Center.

(The training data is not public available yet).

- Target: Renal Cortex | Medulla | Pelvis Collecting System

- Task: Segmentation

- Modality: CT (Artrial | Venous phase)

- Size: 96 3D volumes

The data and segmentation demonstration is as follow:

<br>

## Method and Network

The UNEST model is a 3D hierarchical transformer-based semgnetation network.

Details of the architecture:

<br>

## Training configuration

The training was performed with at least one 16GB-memory GPU.

Actual Model Input: 96 x 96 x 96

## Input and output formats

Input: 1 channel CT image

Output: 4: 0:Background, 1:Renal Cortex, 2:Medulla, 3:Pelvicalyceal System

## Performance

A graph showing the validation mean Dice for 5000 epochs.

<br>

This model achieves the following Dice score on the validation data (our own split from the training dataset):

Mean Valdiation Dice = 0.8523

Note that mean dice is computed in the original spacing of the input data.

## commands example

Download trained checkpoint model to ./model/model.pt:

Add scripts component: To run the workflow with customized components, PYTHONPATH should be revised to include the path to the customized component:

```

export PYTHONPATH=$PYTHONPATH:"'<path to the bundle root dir>/scripts'"

```

Execute Training:

```

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json --logging_file configs/logging.conf

```

Execute inference:

```

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf

```

## More examples output

<br>

# Disclaimer

This is an example, not to be used for diagnostic purposes.

# References

[1] Yu, Xin, Yinchi Zhou, Yucheng Tang et al. "Characterizing Renal Structures with 3D Block Aggregate Transformers." arXiv preprint arXiv:2203.02430 (2022). https://arxiv.org/pdf/2203.02430.pdf

[2] Zizhao Zhang et al. "Nested Hierarchical Transformer: Towards Accurate, Data-Efficient and Interpretable Visual Understanding." AAAI Conference on Artificial Intelligence (AAAI) 2022

# License

Copyright (c) MONAI Consortium

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

ZeroUniqueness/qlora-llama-2-13b-code

|

ZeroUniqueness

| 2023-08-16T02:59:42Z | 27 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-08-02T16:13:08Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: bfloat16

### Framework versions

- PEFT 0.5.0.dev0

|

monai-test/renalStructures_CECT_segmentation

|

monai-test

| 2023-08-16T02:58:59Z | 0 | 0 |

monai

|

[

"monai",

"medical",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T02:58:56Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

# Model Title

Renal structures CECT segmentation

### **Authors**

Ivan Chernenkiy, Michael Chernenkiy, Dmitry Fiev, Evgeny Sirota, Center for Neural Network Technologies / Institute of Urology and Human Reproductive Systems / Sechenov First Moscow State Medical University

### **Tags**

Segmentation, CT, CECT, Kidney, Renal, Supervised

## **Model Description**

The model is the SegResNet architecture[1] for volumetric (3D) renal structures segmentation. Input is artery, vein, excretory phases after mutual registration and concatenated to 3 channel 3D tensor.

## **Data**

DICOM data from 41 patients with kidney neoplasms were used [2]. The images and segmentation data are available under a CC BY-NC-SA 4.0 license. Data included all phases of contrast-enhanced multispiral computed tomography. We split the data: 32 observations for the training set and 9 – for the validation set. At the labeling stage, the arterial, venous, and excretory phases were taken, affine registration was performed to jointly match the location of the kidneys, and noise was removed using a median filter and a non-local means filter. Validation set ip published to Yandex.Disk. You can download via [link](https://disk.yandex.ru/d/pWEKt6D3qi3-aw) or use following command:

```bash

python -m monai.bundle run download_data --meta_file configs/metadata.json --config_file "['configs/train.json', 'configs/evaluate.json']"

```

**NB**: underlying data is in LPS orientation. IF! you want to test model on your own data, reorient it from RAS to LPS with `Orientation` transform. You can see example of preprocessing pipeline in `inference.json` file of this bundle.

#### **Preprocessing**

Images are (1) croped to kidney region, all (artery,vein,excret) phases are (2) [registered](https://simpleitk.readthedocs.io/en/master/registrationOverview.html#lbl-registration-overview) with affine transform, noise removed with (3) median and (4) non-local means filter. After that, images are (5) resampled to (0.8,0.8,0.8) density and intesities are (6) scaled from [-1000,1000] to [0,1] range.

## **Performance**

On the validation subset, the values of the Dice score of the SegResNet architecture were: 0.89 for the normal parenchyma of the kidney, 0.58 for the kidney neoplasms, 0.86 for arteries, 0.80 for veins, 0.80 for ureters.

When compared with the nnU-Net model, which was trained on KiTS 21 dataset, the Dice score was greater for the kidney parenchyma in SegResNet – 0.89 compared to three model variants: lowres – 0.69, fullres – 0.70, cascade – 0.69. At the same time, for the neoplasms of the parenchyma of the kidney, the Dice score was comparable: for SegResNet – 0.58, for nnU-Net fullres – 0.59; lowres and cascade had lower Dice score of 0.37 and 0.45, respectively. To reproduce, visit - https://github.com/blacky-i/nephro-segmentation

## **Additional Usage Steps**

#### Execute training:

```bash

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json

```

Expected result: finished, Training process started

#### Execute training with finetuning

```bash

python -m monai.bundle run training --dont_finetune false --meta_file configs/metadata.json --config_file configs/train.json

```

Expected result: finished, Training process started, model variables are restored

#### Execute validation:

Download validation data (described in [Data](#data) section).

With provided model weights mean dice score is expected to be ~0.78446.

##### Run validation script:

```bash

python -m monai.bundle run evaluate --meta_file configs/metadata.json --config_file "['configs/train.json', 'configs/evaluate.json']"

```

Expected result: finished, `Key metric: val_mean_dice best value: ...` is printed.

## **System Configuration**

The model was trained for 10000 epochs on 2 RTX2080Ti GPUs with [SmartCacheDataset](https://docs.monai.io/en/stable/data.html#smartcachedataset). This takes 1 days and 2 hours, with 4 images per GPU.

Training progress is available on [tensorboard.dev](https://tensorboard.dev/experiment/VlEMjLdURH6SyFp216dFBg)

To perform training in minimal settings, at least one 12GB-memory GPU is required.

Actual Model Input: 96 x 96 x 96

## **Limitations**

For developmental purposes only and cannot be used directly for clinical procedures.

## **Citation Info**

```

@article{chernenkiy2023segmentation,

title={Segmentation of renal structures based on contrast computed tomography scans using a convolutional neural network},

author={Chernenkiy, IМ and Chernenkiy, MM and Fiev, DN and Sirota, ES},

journal={Sechenov Medical Journal},

volume={14},

number={1},

pages={39--49},

year={2023}

}

```

## **References**

[1] Myronenko, A. (2019). 3D MRI Brain Tumor Segmentation Using Autoencoder Regularization. In: Crimi, A., Bakas, S., Kuijf, H., Keyvan, F., Reyes, M., van Walsum, T. (eds) Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2018. Lecture Notes in Computer Science(), vol 11384. Springer, Cham. https://doi.org/10.1007/978-3-030-11726-9_28

[2] Chernenkiy, I. М., et al. "Segmentation of renal structures based on contrast computed tomography scans using a convolutional neural network." Sechenov Medical Journal 14.1 (2023): 39-49.https://doi.org/10.47093/2218-7332.2023.14.1.39-49

#### **Tests used for bundle checking**

Checking with ci script file

```bash

python ci/verify_bundle.py -b renalStructures_CECT_segmentation -p models

```

Expected result: passed, model.pt file downloaded

Checking downloading validation data file

```bash

cd models/renalStructures_CECT_segmentation

python -m monai.bundle run download_data --meta_file configs/metadata.json --config_file "['configs/train.json', 'configs/evaluate.json']"

```

Expected result: finished, `data/` folder is created and filled with images.

Checking evaluation script

```bash

python -m monai.bundle run evaluate --meta_file configs/metadata.json --config_file "['configs/train.json', 'configs/evaluate.json']"

```

Expected result: finished, `Key metric: val_mean_dice best value: ...` is printed.

Checking train script

```bash

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json

```

Expected result: finished, Training process started

Checking train script with finetuning

```bash

python -m monai.bundle run training --dont_finetune false --meta_file configs/metadata.json --config_file configs/train.json

```

Expected result: finished, Training process started, model variables are restored

Checking inference script

```bash

python -m monai.bundle run inference --meta_file configs/metadata.json --config_file configs/inference.json

```

Expected result: finished, in `eval` folder masks are created

Check unit test with script:

```bash

python ci/unit_tests/runner.py --b renalStructures_CECT_segmentation

```

|

monai-test/prostate_mri_anatomy

|

monai-test

| 2023-08-16T02:58:50Z | 0 | 0 |

monai

|

[

"monai",

"medical",

"arxiv:1903.08205",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T02:58:06Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

# Prostate MRI zonal segmentation

### **Authors**

Lisa C. Adams, Keno K. Bressem

### **Tags**

Segmentation, MR, Prostate

## **Model Description**

This model was trained with the UNet architecture [1] and is used for 3D volumetric segmentation of the anatomical prostate zones on T2w MRI images. The segmentation of the anatomical regions is formulated as a voxel-wise classification. Each voxel is classified as either central gland (1), peripheral zone (2), or background (0). The model is optimized using a gradient descent method that minimizes the focal soft-dice loss between the predicted mask and the actual segmentation.

## **Data**

The model was trained in the prostate158 training data, which is available at https://doi.org/10.5281/zenodo.6481141. Only T2w images were used for this task.

### **Preprocessing**

MRI images in the prostate158 dataset were preprocessed, including center cropping and resampling. When applying the model to new data, this preprocessing should be repeated.

#### **Center cropping**

T2w images were acquired with a voxel spacing of 0.47 x 0.47 x 3 mm and an axial FOV size of 180 x 180 mm. However, the prostate rarely exceeds an axial diameter of 100 mm, and for zonal segmentation, the tissue surrounding the prostate is not of interest and only increases the image size and thus the computational cost. Center-cropping can reduce the image size without sacrificing information.

The script `center_crop.py` allows to reproduce center-cropping as performed in the prostate158 paper.

```bash

python scripts/center_crop.py --file_name path/to/t2_image --out_name cropped_t2

```

#### **Resampling**

DWI and ADC sequences in prostate158 were resampled to the orientation and voxel spacing of the T2w sequence. As the zonal segmentation uses T2w images, no additional resampling is nessecary. However, the training script will perform additonal resampling automatically.

## **Performance**

The model achives the following performance on the prostate158 test dataset:

<table border=1 frame=void rules=rows>

<thead>

<tr>

<td></td>

<td colspan = 3><b><center>Rater 1</center></b></td>

<td> </td>

<td colspan = 3><b><center>Rater 2</center></b></td>

</tr>

<tr>

<th>Metric</th>

<th>Transitional Zone</th>

<th>Peripheral Zone</th>

<th> </th>

<th>Transitional Zone</th>

<th>Peripheral Zone</th>

</tr>

</thead>

<tbody>

<tr>

<td><a href='https://en.wikipedia.org/wiki/S%C3%B8rensen%E2%80%93Dice_coefficient'>Dice Coefficient </a></td>

<td> 0.877</td>

<td> 0.754</td>

<td> </td>

<td> 0.875</td>

<td> 0.730</td>

</tr>

<tr>

<td><a href='https://en.wikipedia.org/wiki/Hausdorff_distance'>Hausdorff Distance </a></td>

<td> 18.3</td>

<td> 22.8</td>

<td> </td>

<td> 17.5</td>

<td> 33.2</td>

</tr>

<tr>

<td><a href='https://github.com/deepmind/surface-distance'>Surface Distance </a></td>

<td> 2.19</td>

<td> 1.95</td>

<td> </td>

<td> 2.59</td>

<td> 1.88</td>

</tr>

</tbody>

</table>

For more details, please see the original [publication](https://doi.org/10.1016/j.compbiomed.2022.105817) or official [GitHub repository](https://github.com/kbressem/prostate158)

## **System Configuration**

The model was trained for 100 epochs on a workstaion with a single Nvidia RTX 3080 GPU. This takes approximatly 8 hours.

## **Limitations** (Optional)

This training and inference pipeline was developed for research purposes only. This research use only software that has not been cleared or approved by FDA or any regulatory agency. The model is for research/developmental purposes only and cannot be used directly for clinical procedures.

## **Citation Info** (Optional)

```

@article{ADAMS2022105817,

title = {Prostate158 - An expert-annotated 3T MRI dataset and algorithm for prostate cancer detection},

journal = {Computers in Biology and Medicine},

volume = {148},

pages = {105817},

year = {2022},

issn = {0010-4825},

doi = {https://doi.org/10.1016/j.compbiomed.2022.105817},

url = {https://www.sciencedirect.com/science/article/pii/S0010482522005789},

author = {Lisa C. Adams and Marcus R. Makowski and Günther Engel and Maximilian Rattunde and Felix Busch and Patrick Asbach and Stefan M. Niehues and Shankeeth Vinayahalingam and Bram {van Ginneken} and Geert Litjens and Keno K. Bressem},

keywords = {Prostate cancer, Deep learning, Machine learning, Artificial intelligence, Magnetic resonance imaging, Biparametric prostate MRI}

}

```

## **References**

[1] Sakinis, Tomas, et al. "Interactive segmentation of medical images through fully convolutional neural networks." arXiv preprint arXiv:1903.08205 (2019).

# License

Copyright (c) MONAI Consortium

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

monai-test/pathology_nuclick_annotation

|

monai-test

| 2023-08-16T02:56:54Z | 0 | 0 |

monai

|

[

"monai",

"medical",

"arxiv:2005.14511",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T02:56:25Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

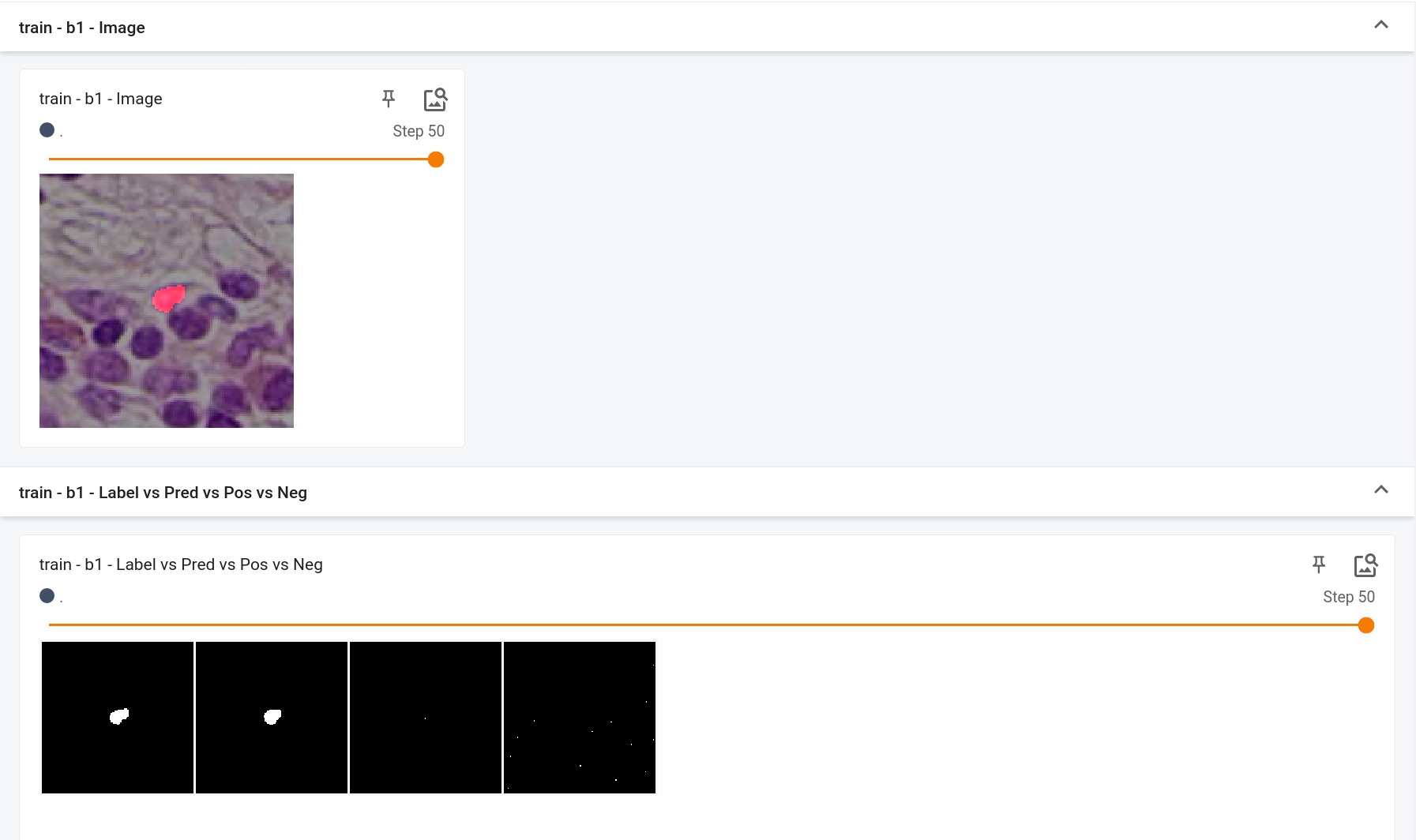

# Model Overview

A pre-trained model for segmenting nuclei cells with user clicks/interactions.

This model is trained using [BasicUNet](https://docs.monai.io/en/latest/networks.html#basicunet) over [ConSeP](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet) dataset.

## Data

The training dataset is from https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet

```commandline

wget https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip

unzip -q consep_dataset.zip

```

<br/>

### Preprocessing

After [downloading this dataset](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip),

python script `data_process.py` from `scripts` folder can be used to preprocess and generate the final dataset for training.

```

python scripts/data_process.py --input /path/to/data/CoNSeP --output /path/to/data/CoNSePNuclei

```

After generating the output files, please modify the `dataset_dir` parameter specified in `configs/train.json` and `configs/inference.json` to reflect the output folder which contains new dataset.json.

Class values in dataset are

- 1 = other

- 2 = inflammatory

- 3 = healthy epithelial

- 4 = dysplastic/malignant epithelial

- 5 = fibroblast

- 6 = muscle

- 7 = endothelial

As part of pre-processing, the following steps are executed.

- Crop and Extract each nuclei Image + Label (128x128) based on the centroid given in the dataset.

- Combine classes 3 & 4 into the epithelial class and 5,6 & 7 into the spindle-shaped class.

- Update the label index for the target nuclei based on the class value

- Other cells which are part of the patch are modified to have label idx = 255

Example dataset.json

```json

{

"training": [

{

"image": "/workspace/data/CoNSePNuclei/Train/Images/train_1_3_0001.png",

"label": "/workspace/data/CoNSePNuclei/Train/Labels/train_1_3_0001.png",

"nuclei_id": 1,

"mask_value": 3,

"centroid": [

64,

64

]

}

],

"validation": [

{

"image": "/workspace/data/CoNSePNuclei/Test/Images/test_1_3_0001.png",

"label": "/workspace/data/CoNSePNuclei/Test/Labels/test_1_3_0001.png",

"nuclei_id": 1,

"mask_value": 3,

"centroid": [

64,

64

]

}

]

}

```

## Training Configuration

The training was performed with the following:

- GPU: at least 12GB of GPU memory

- Actual Model Input: 5 x 128 x 128

- AMP: True

- Optimizer: Adam

- Learning Rate: 1e-4

- Loss: DiceLoss

### Memory Consumption

- Dataset Manager: CacheDataset

- Data Size: 13,136 PNG images

- Cache Rate: 1.0

- Single GPU - System RAM Usage: 4.7G

### Memory Consumption Warning

If you face memory issues with CacheDataset, you can either switch to a regular Dataset class or lower the caching rate `cache_rate` in the configurations within range [0, 1] to minimize the System RAM requirements.

## Input

5 channels

- 3 RGB channels

- +ve signal channel (this nuclei)

- -ve signal channel (other nuclei)

## Output

2 channels

- 0 = Background

- 1 = Nuclei

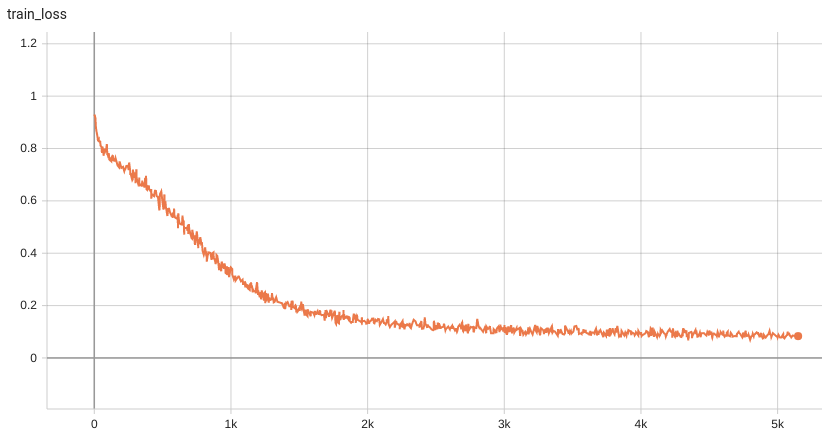

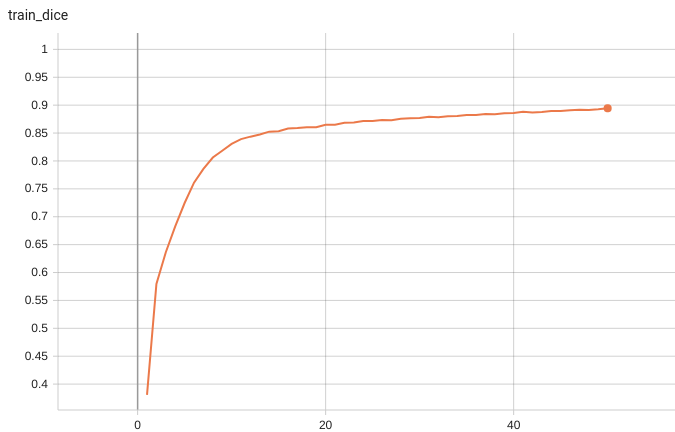

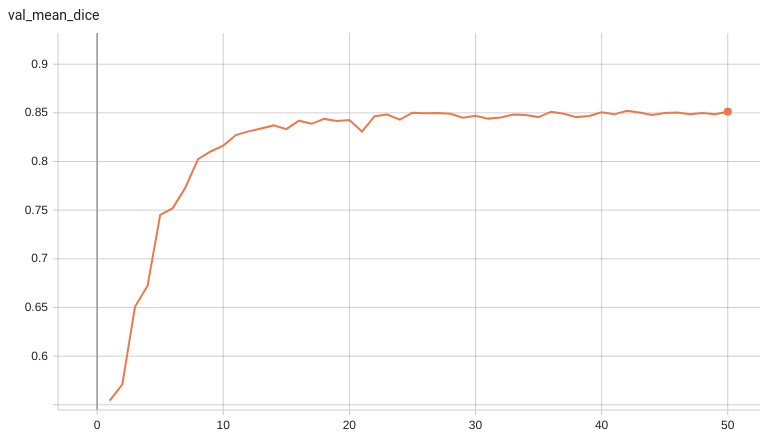

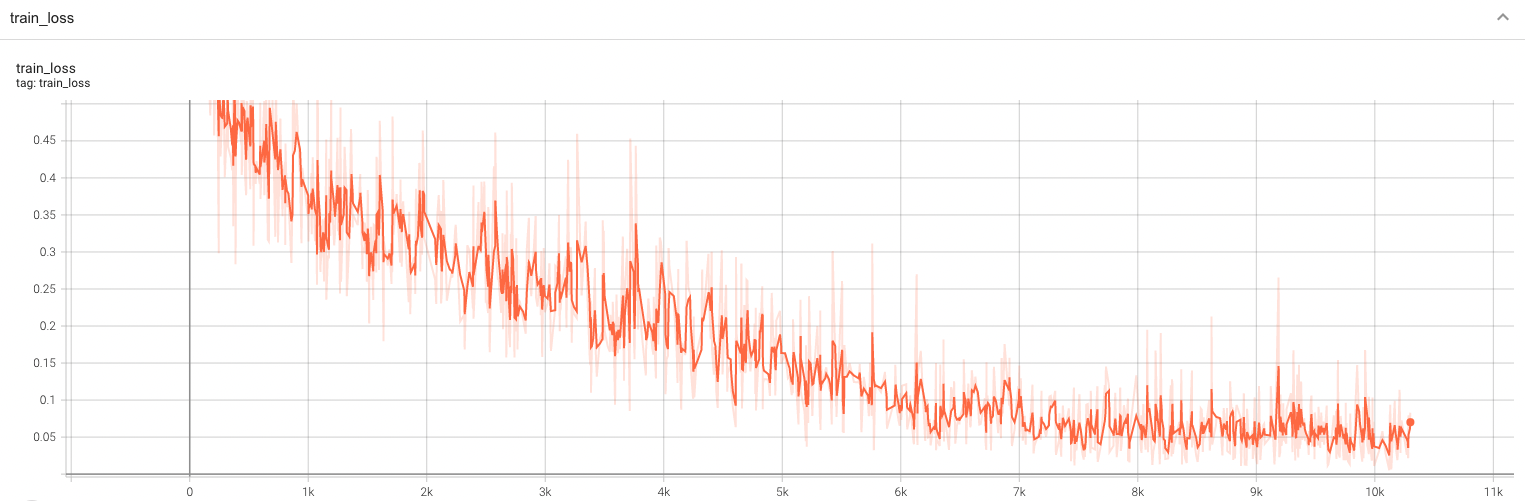

## Performance

This model achieves the following Dice score on the validation data provided as part of the dataset:

- Train Dice score = 0.89

- Validation Dice score = 0.85

#### Training Loss and Dice

A graph showing the training Loss and Dice over 50 epochs.

<br>

<br>

#### Validation Dice

A graph showing the validation mean Dice over 50 epochs.

<br>

## MONAI Bundle Commands

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

For more details usage instructions, visit the [MONAI Bundle Configuration Page](https://docs.monai.io/en/latest/config_syntax.html).

#### Execute training:

```

python -m monai.bundle run --config_file configs/train.json

```

Please note that if the default dataset path is not modified with the actual path in the bundle config files, you can also override it by using `--dataset_dir`:

```

python -m monai.bundle run --config_file configs/train.json --dataset_dir <actual dataset path>

```

#### Override the `train` config to execute multi-GPU training:

```

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']"

```

Please note that the distributed training-related options depend on the actual running environment; thus, users may need to remove `--standalone`, modify `--nnodes`, or do some other necessary changes according to the machine used. For more details, please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html).

#### Override the `train` config to execute evaluation with the trained model:

```

python -m monai.bundle run --config_file "['configs/train.json','configs/evaluate.json']"

```

#### Override the `train` config and `evaluate` config to execute multi-GPU evaluation:

```

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/evaluate.json','configs/multi_gpu_evaluate.json']"

```

#### Execute inference:

```

python -m monai.bundle run --config_file configs/inference.json

```

# References

[1] Koohbanani, Navid Alemi, et al. "NuClick: a deep learning framework for interactive segmentation of microscopic images." Medical Image Analysis 65 (2020): 101771. https://arxiv.org/abs/2005.14511.

[2] S. Graham, Q. D. Vu, S. E. A. Raza, A. Azam, Y-W. Tsang, J. T. Kwak and N. Rajpoot. "HoVer-Net: Simultaneous Segmentation and Classification of Nuclei in Multi-Tissue Histology Images." Medical Image Analysis, Sept. 2019. [[doi](https://doi.org/10.1016/j.media.2019.101563)]

[3] NuClick [PyTorch](https://github.com/mostafajahanifar/nuclick_torch) Implementation

# License

Copyright (c) MONAI Consortium

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

monai-test/pathology_nuclei_segmentation_classification

|

monai-test

| 2023-08-16T02:56:21Z | 0 | 0 |

monai

|

[

"monai",

"medical",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T02:54:58Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

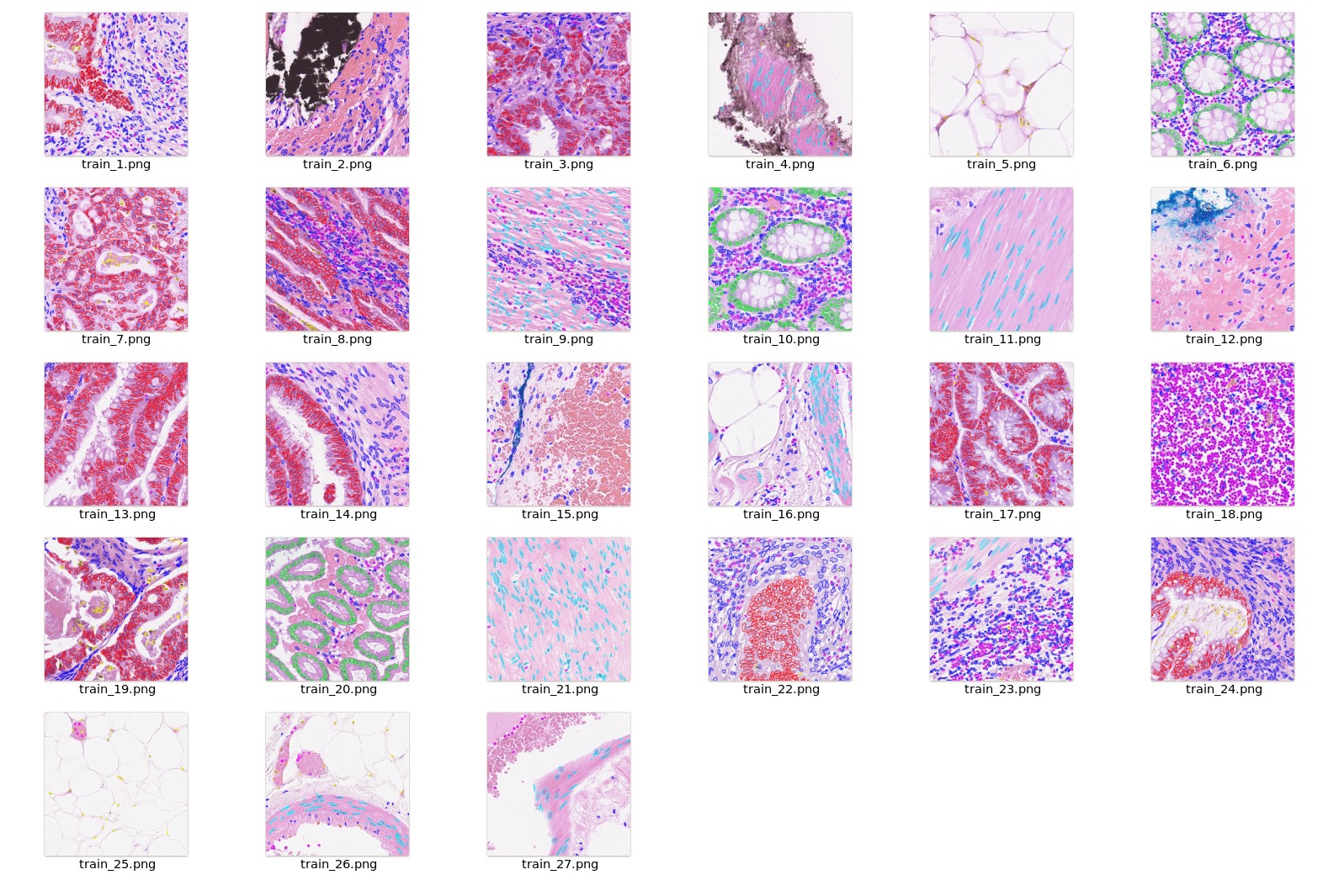

# Model Overview

A pre-trained model for simultaneous segmentation and classification of nuclei within multi-tissue histology images based on CoNSeP data. The details of the model can be found in [1].

The model is trained to simultaneously segment and classify nuclei, and a two-stage training approach is utilized:

- Initialize the model with pre-trained weights, and train the decoder only for 50 epochs.

- Finetune all layers for another 50 epochs.

There are two training modes in total. If "original" mode is specified, [270, 270] and [80, 80] are used for `patch_size` and `out_size` respectively. If "fast" mode is specified, [256, 256] and [164, 164] are used for `patch_size` and `out_size` respectively. The results shown below are based on the "fast" mode.

In this bundle, the first stage is trained with pre-trained weights from some internal data. The [original author's repo](https://github.com/vqdang/hover_net) and [torchvison](https://pytorch.org/vision/stable/_modules/torchvision/models/resnet.html#ResNet18_Weights) also provide pre-trained weights but for non-commercial use.

Each user is responsible for checking the content of models/datasets and the applicable licenses and determining if suitable for the intended use.

If you want to train the first stage with pre-trained weights, just specify `--network_def#pretrained_url <your pretrain weights URL>` in the training command below, such as [ImageNet](https://download.pytorch.org/models/resnet18-f37072fd.pth).

## Data

The training data is from <https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/>.

- Target: segment instance-level nuclei and classify the nuclei type

- Task: Segmentation and classification

- Modality: RGB images

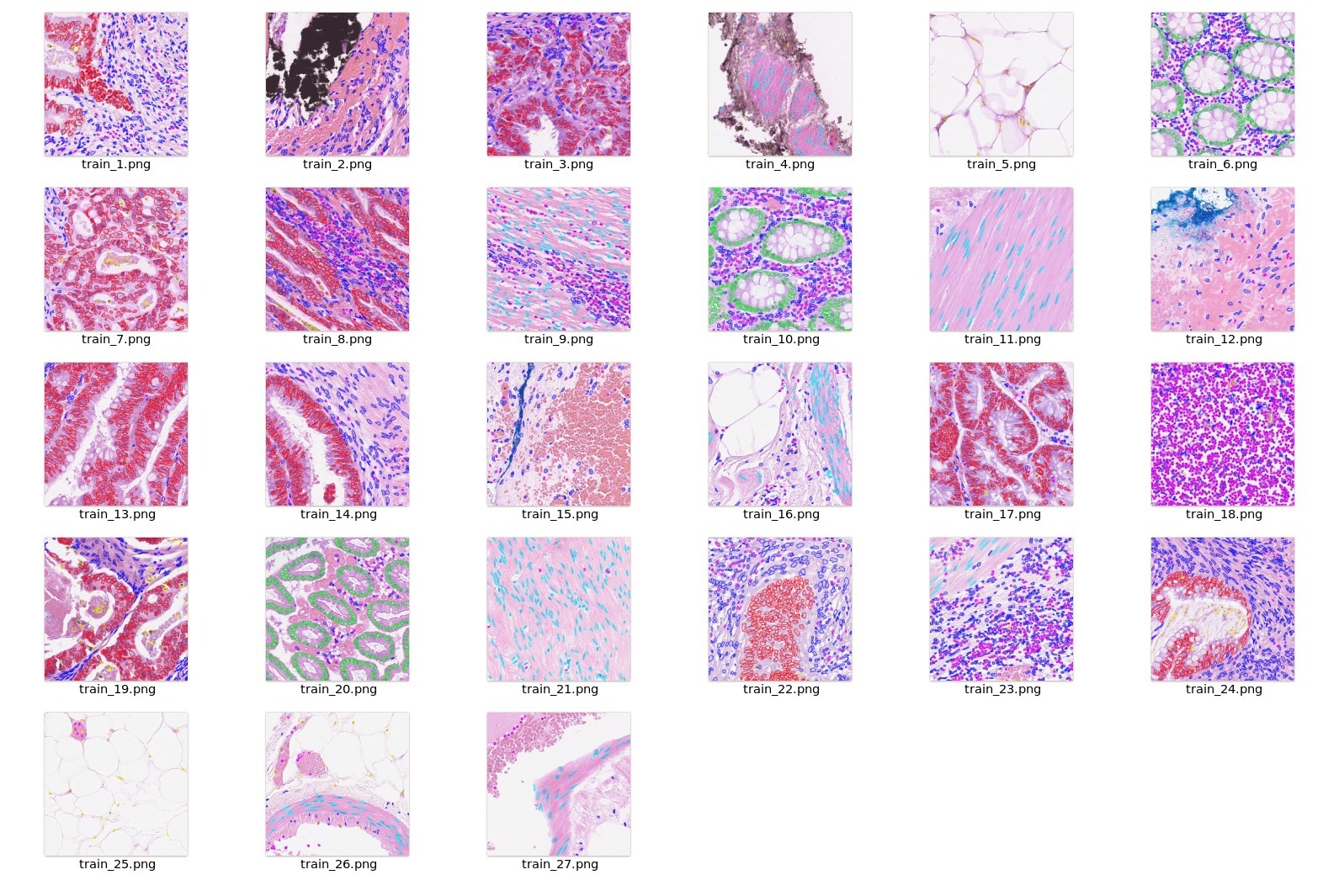

- Size: 41 image tiles (2009 patches)

The provided labelled data was partitioned, based on the original split, into training (27 tiles) and testing (14 tiles) datasets.

You can download the dataset by using this command:

```

wget https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip

unzip consep_dataset.zip

```

### Preprocessing

After download the [datasets](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip), please run `scripts/prepare_patches.py` to prepare patches from tiles. Prepared patches are saved in `<your concep dataset path>`/Prepared. The implementation is referring to <https://github.com/vqdang/hover_net>. The command is like:

```

python scripts/prepare_patches.py --root <your concep dataset path>

```

## Training configuration

This model utilized a two-stage approach. The training was performed with the following:

- GPU: At least 24GB of GPU memory.

- Actual Model Input: 256 x 256

- AMP: True

- Optimizer: Adam

- Learning Rate: 1e-4

- Loss: HoVerNetLoss

- Dataset Manager: CacheDataset

### Memory Consumption Warning

If you face memory issues with CacheDataset, you can either switch to a regular Dataset class or lower the caching rate `cache_rate` in the configurations within range [0, 1] to minimize the System RAM requirements.

## Input

Input: RGB images

## Output

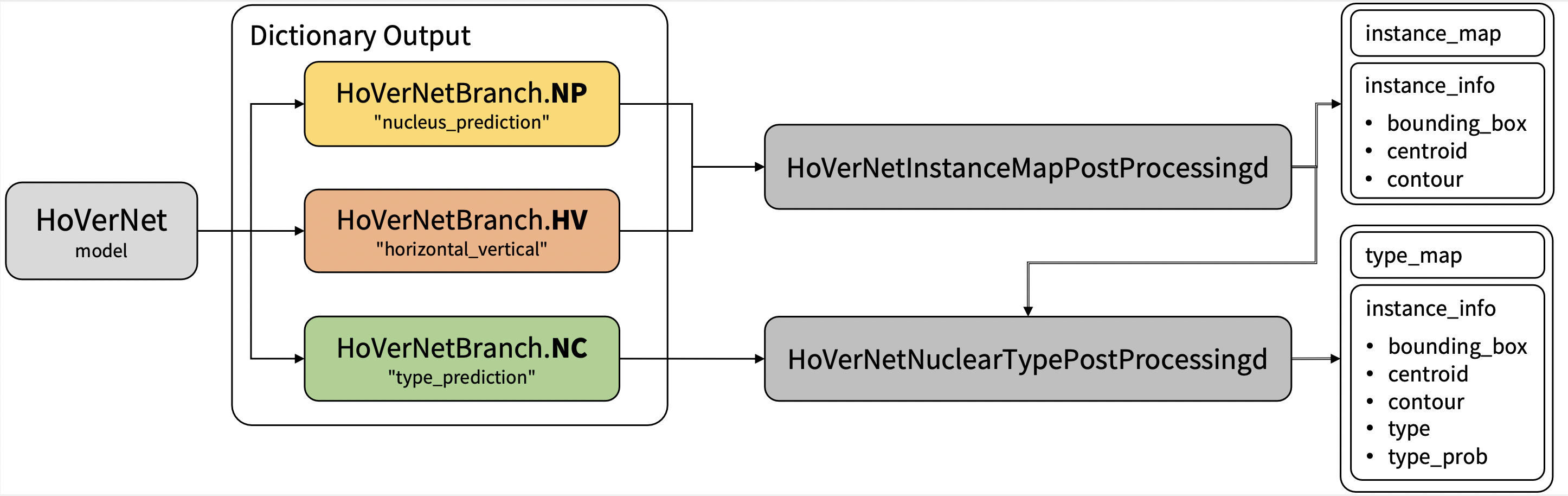

Output: a dictionary with the following keys:

1. nucleus_prediction: predict whether or not a pixel belongs to the nuclei or background

2. horizontal_vertical: predict the horizontal and vertical distances of nuclear pixels to their centres of mass

3. type_prediction: predict the type of nucleus for each pixel

## Performance

The achieved metrics on the validation data are:

Fast mode:

- Binary Dice: 0.8291

- PQ: 0.4973

- F1d: 0.7417

Note:

- Binary Dice is calculated based on the whole input. PQ and F1d were calculated from https://github.com/vqdang/hover_net#inference.

- This bundle is non-deterministic because of the bilinear interpolation used in the network. Therefore, reproducing the training process may not get exactly the same performance.

Please refer to https://pytorch.org/docs/stable/notes/randomness.html#reproducibility for more details about reproducibility.

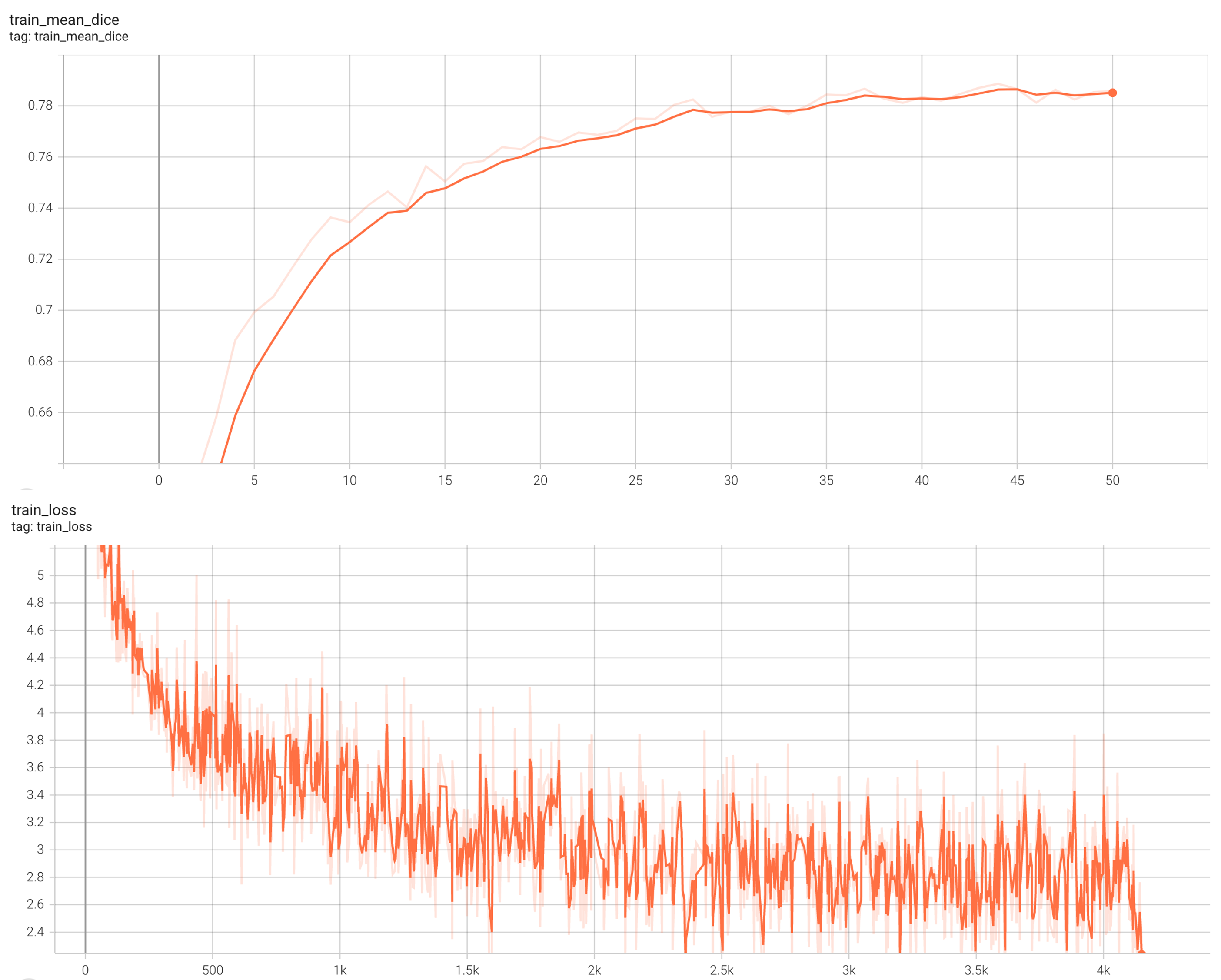

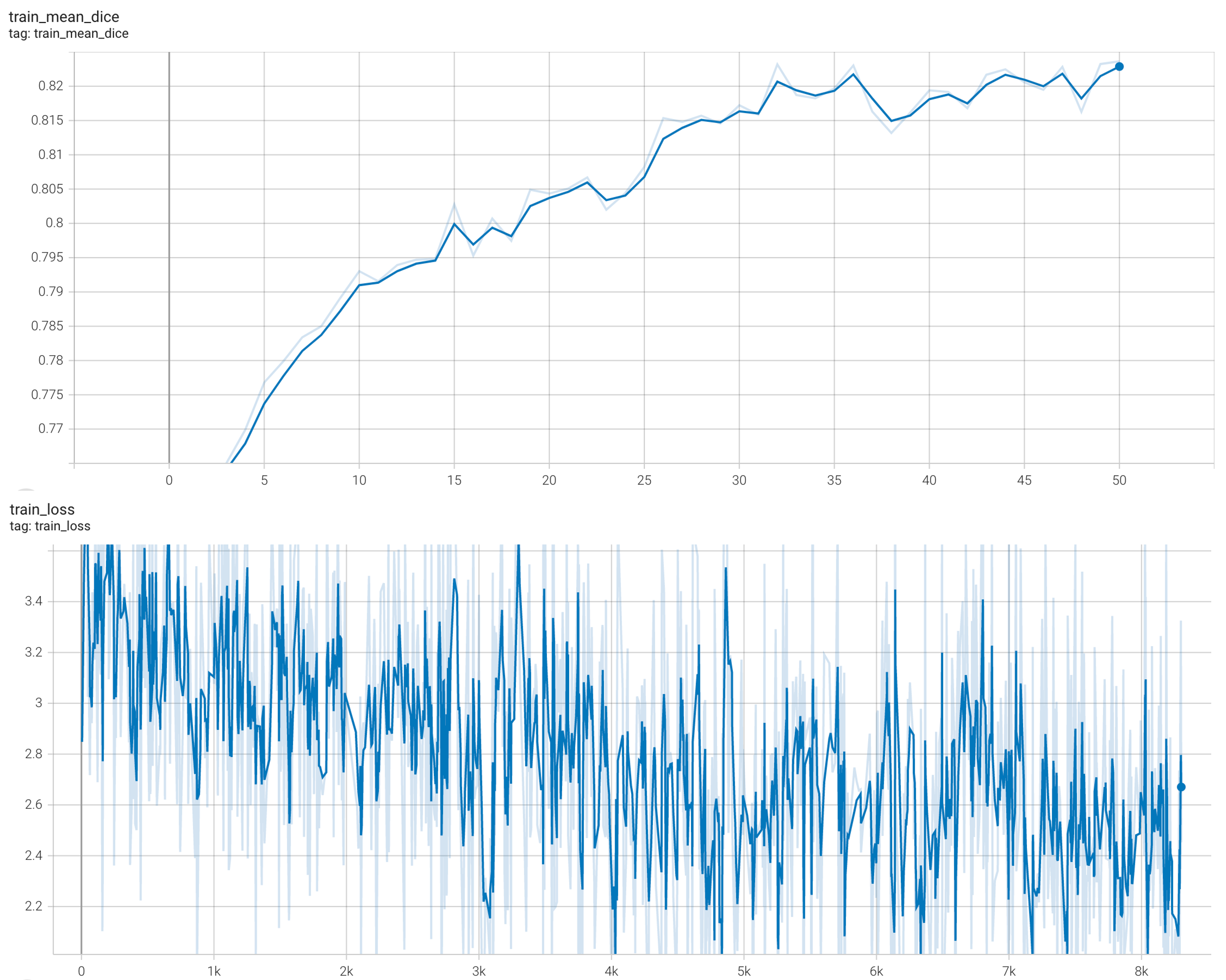

#### Training Loss and Dice

stage1:

stage2:

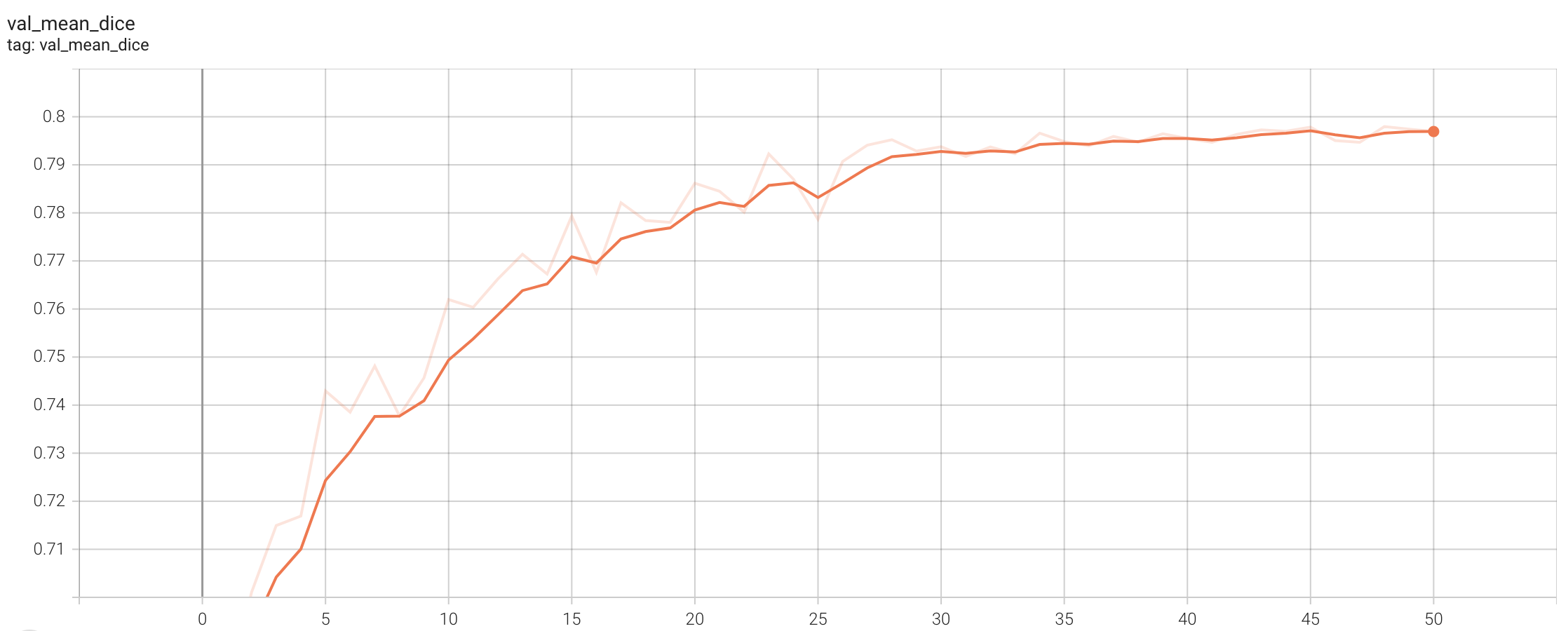

#### Validation Dice

stage1:

stage2:

## MONAI Bundle Commands

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

For more details usage instructions, visit the [MONAI Bundle Configuration Page](https://docs.monai.io/en/latest/config_syntax.html).

#### Execute training, the evaluation during the training were evaluated on patches:

Please note that if the default dataset path is not modified with the actual path in the bundle config files, you can also override it by using `--dataset_dir`:

- Run first stage

```

python -m monai.bundle run --config_file configs/train.json --stage 0 --dataset_dir <actual dataset path>

```

- Run second stage

```

python -m monai.bundle run --config_file configs/train.json --network_def#freeze_encoder False --stage 1 --dataset_dir <actual dataset path>

```

#### Override the `train` config to execute multi-GPU training:

- Run first stage

```

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']" --batch_size 8 --network_def#freeze_encoder True --stage 0

```

- Run second stage

```

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']" --batch_size 4 --network_def#freeze_encoder False --stage 1

```

#### Override the `train` config to execute evaluation with the trained model, here we evaluated dice from the whole input instead of the patches:

```

python -m monai.bundle run --config_file "['configs/train.json','configs/evaluate.json']"

```

#### Execute inference:

```

python -m monai.bundle run --config_file configs/inference.json

```

# References

[1] Simon Graham, Quoc Dang Vu, Shan E Ahmed Raza, Ayesha Azam, Yee Wah Tsang, Jin Tae Kwak, Nasir Rajpoot, Hover-Net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images, Medical Image Analysis, 2019 https://doi.org/10.1016/j.media.2019.101563

# License

Copyright (c) MONAI Consortium

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

monai-test/pathology_nuclei_classification

|

monai-test

| 2023-08-16T02:54:49Z | 0 | 3 |

monai

|

[

"monai",

"medical",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T02:54:19Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

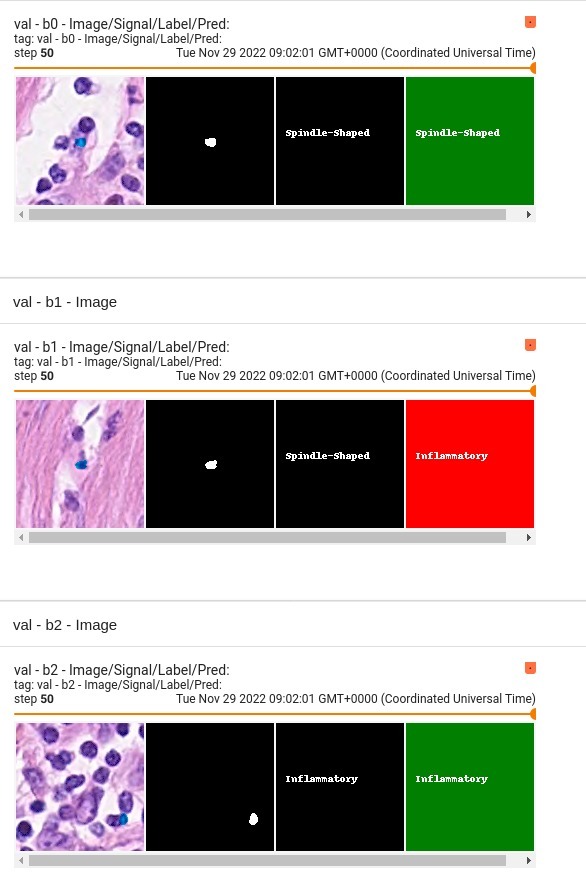

# Model Overview

A pre-trained model for classifying nuclei cells as the following types

- Other

- Inflammatory

- Epithelial

- Spindle-Shaped

This model is trained using [DenseNet121](https://docs.monai.io/en/latest/networks.html#densenet121) over [ConSeP](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet) dataset.

## Data

The training dataset is from https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet

```commandline

wget https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip

unzip -q consep_dataset.zip

```

<br/>

### Preprocessing

After [downloading this dataset](https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/consep_dataset.zip),

python script `data_process.py` from `scripts` folder can be used to preprocess and generate the final dataset for training.

```commandline

python scripts/data_process.py --input /path/to/data/CoNSeP --output /path/to/data/CoNSePNuclei

```

After generating the output files, please modify the `dataset_dir` parameter specified in `configs/train.json` and `configs/inference.json` to reflect the output folder which contains new dataset.json.

Class values in dataset are

- 1 = other

- 2 = inflammatory

- 3 = healthy epithelial

- 4 = dysplastic/malignant epithelial

- 5 = fibroblast

- 6 = muscle

- 7 = endothelial

As part of pre-processing, the following steps are executed.

- Crop and Extract each nuclei Image + Label (128x128) based on the centroid given in the dataset.

- Combine classes 3 & 4 into the epithelial class and 5,6 & 7 into the spindle-shaped class.

- Update the label index for the target nuclie based on the class value

- Other cells which are part of the patch are modified to have label idex = 255

Example `dataset.json` in output folder:

```json

{

"training": [

{

"image": "/workspace/data/CoNSePNuclei/Train/Images/train_1_3_0001.png",

"label": "/workspace/data/CoNSePNuclei/Train/Labels/train_1_3_0001.png",

"nuclei_id": 1,

"mask_value": 3,

"centroid": [

64,

64

]

}

],

"validation": [

{

"image": "/workspace/data/CoNSePNuclei/Test/Images/test_1_3_0001.png",

"label": "/workspace/data/CoNSePNuclei/Test/Labels/test_1_3_0001.png",

"nuclei_id": 1,

"mask_value": 3,

"centroid": [

64,

64

]

}

]

}

```

## Training configuration

The training was performed with the following:

- GPU: at least 12GB of GPU memory

- Actual Model Input: 4 x 128 x 128

- AMP: True

- Optimizer: Adam

- Learning Rate: 1e-4

- Loss: torch.nn.CrossEntropyLoss

- Dataset Manager: CacheDataset

### Memory Consumption Warning

If you face memory issues with CacheDataset, you can either switch to a regular Dataset class or lower the caching rate `cache_rate` in the configurations within range [0, 1] to minimize the System RAM requirements.

## Input

4 channels

- 3 RGB channels

- 1 signal channel (label mask)

## Output

4 channels

- 0 = Other

- 1 = Inflammatory

- 2 = Epithelial

- 3 = Spindle-Shaped

## Performance

This model achieves the following F1 score on the validation data provided as part of the dataset:

- Train F1 score = 0.926

- Validation F1 score = 0.852

<hr/>

Confusion Metrics for <b>Validation</b> for individual classes are:

| Metric | Other | Inflammatory | Epithelial | Spindle-Shaped |

|-----------|--------|--------------|------------|----------------|

| Precision | 0.6909 | 0.7773 | 0.9078 | 0.8478 |

| Recall | 0.2754 | 0.7831 | 0.9533 | 0.8514 |

| F1-score | 0.3938 | 0.7802 | 0.9300 | 0.8496 |

<hr/>

Confusion Metrics for <b>Training</b> for individual classes are:

| Metric | Other | Inflammatory | Epithelial | Spindle-Shaped |

|-----------|--------|--------------|------------|----------------|

| Precision | 0.8000 | 0.9076 | 0.9560 | 0.9019 |

| Recall | 0.6512 | 0.9028 | 0.9690 | 0.8989 |

| F1-score | 0.7179 | 0.9052 | 0.9625 | 0.9004 |

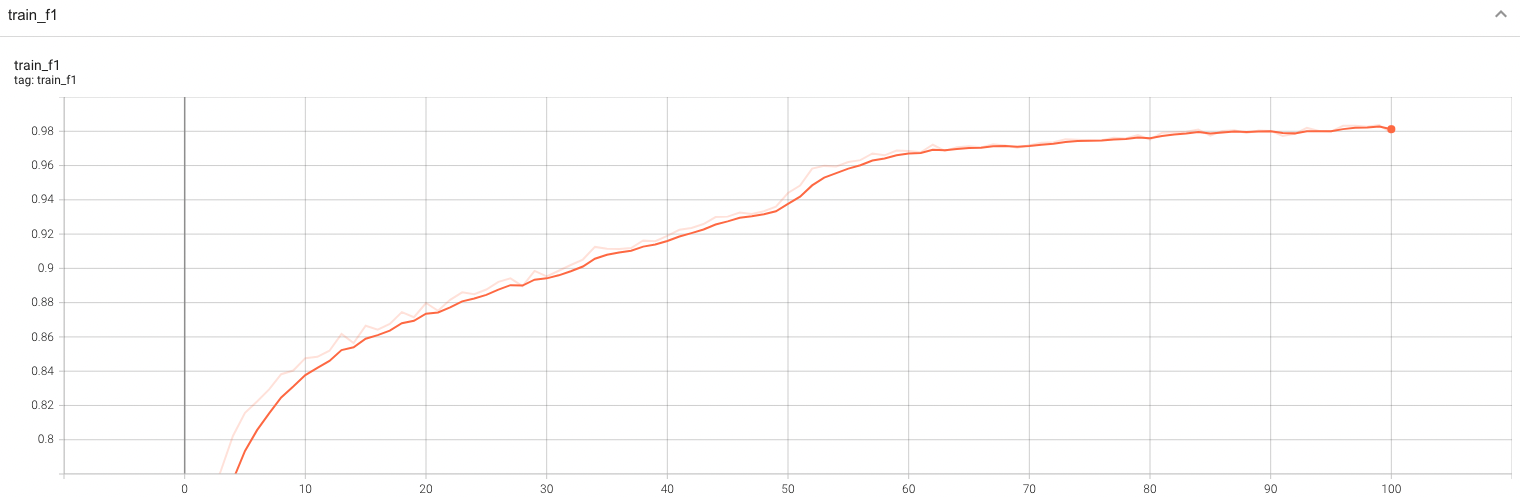

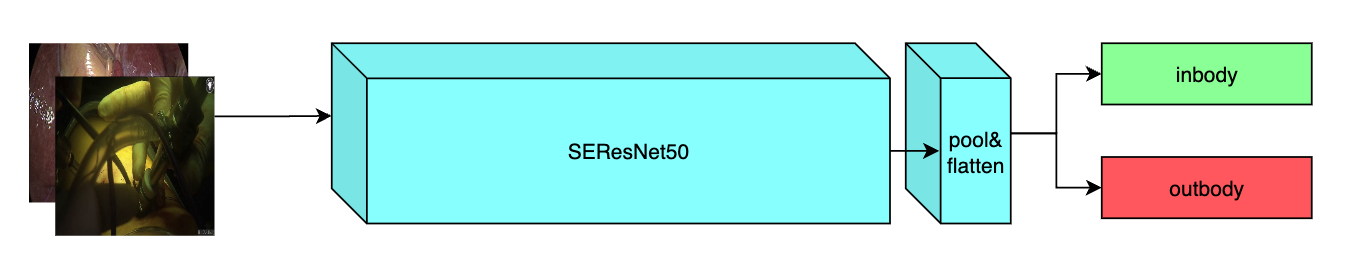

#### Training Loss and F1

A graph showing the training Loss and F1-score over 100 epochs.

<br>

<br>

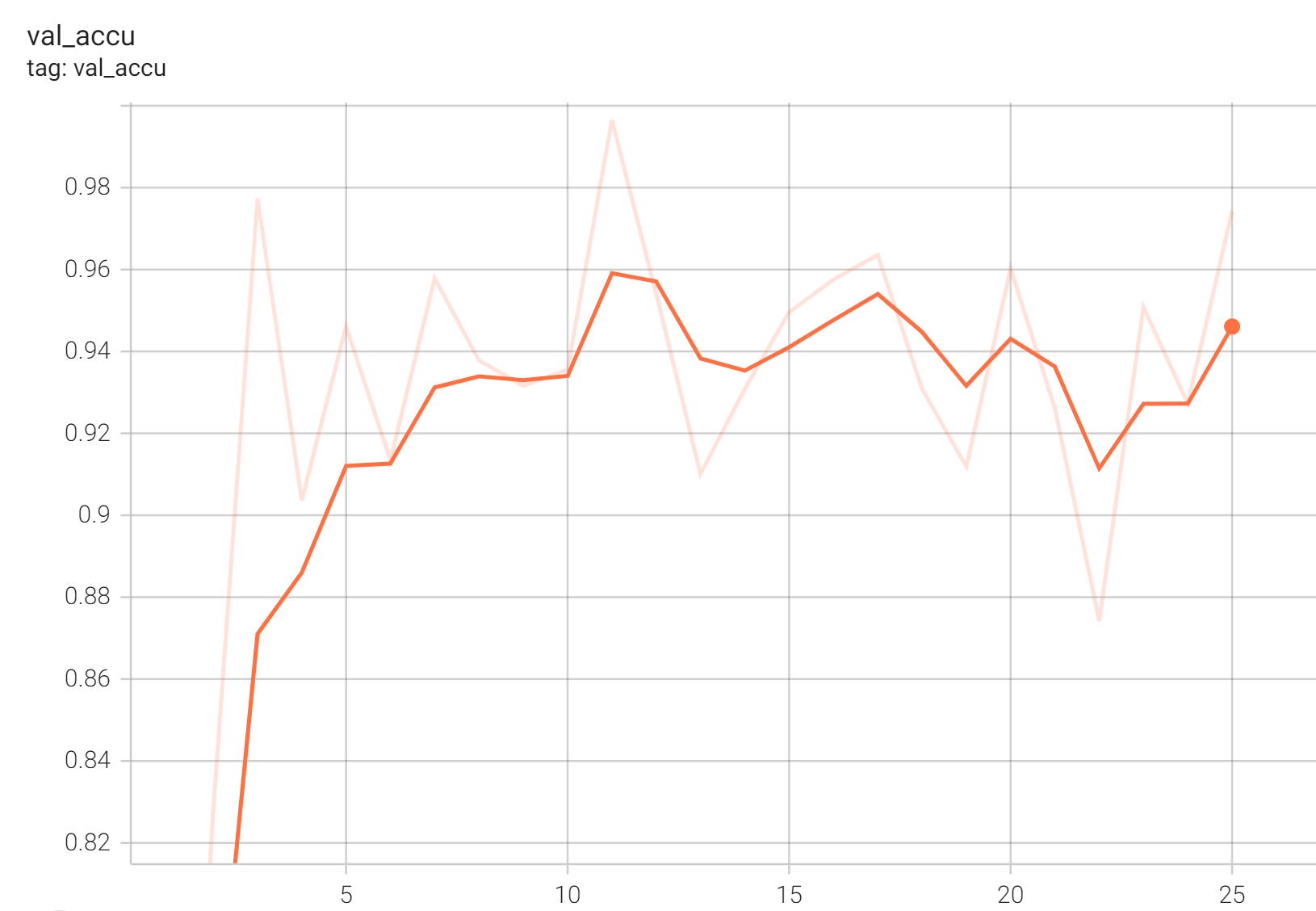

#### Validation F1

A graph showing the validation F1-score over 100 epochs.

<br>

## MONAI Bundle Commands

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

For more details usage instructions, visit the [MONAI Bundle Configuration Page](https://docs.monai.io/en/latest/config_syntax.html).

#### Execute training:

```

python -m monai.bundle run --config_file configs/train.json

```

Please note that if the default dataset path is not modified with the actual path in the bundle config files, you can also override it by using `--dataset_dir`:

```

python -m monai.bundle run --config_file configs/train.json --dataset_dir <actual dataset path>

```

#### Override the `train` config to execute multi-GPU training:

```

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/multi_gpu_train.json']"

```

Please note that the distributed training-related options depend on the actual running environment; thus, users may need to remove `--standalone`, modify `--nnodes`, or do some other necessary changes according to the machine used. For more details, please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html).

#### Override the `train` config to execute evaluation with the trained model:

```

python -m monai.bundle run --config_file "['configs/train.json','configs/evaluate.json']"

```

#### Override the `train` config and `evaluate` config to execute multi-GPU evaluation:

```

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run --config_file "['configs/train.json','configs/evaluate.json','configs/multi_gpu_evaluate.json']"

```

#### Execute inference:

```

python -m monai.bundle run --config_file configs/inference.json

```

# References

[1] S. Graham, Q. D. Vu, S. E. A. Raza, A. Azam, Y-W. Tsang, J. T. Kwak and N. Rajpoot. "HoVer-Net: Simultaneous Segmentation and Classification of Nuclei in Multi-Tissue Histology Images." Medical Image Analysis, Sept. 2019. [[doi](https://doi.org/10.1016/j.media.2019.101563)]

# License

Copyright (c) MONAI Consortium

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

monai-test/mednist_gan

|

monai-test

| 2023-08-16T02:46:42Z | 0 | 0 |

monai

|

[

"monai",

"medical",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T02:46:37Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

# MedNIST GAN Hand Model

This model is a generator for creating images like the Hand category in the MedNIST dataset. It was trained as a GAN and accepts random values as inputs to produce an image output. The `train.json` file describes the training process along with the definition of the discriminator network used, and is based on the [MONAI GAN tutorials](https://github.com/Project-MONAI/tutorials/blob/main/modules/mednist_GAN_workflow_dict.ipynb).

This is a demonstration network meant to just show the training process for this sort of network with MONAI, its outputs are not particularly good and are of the same tiny size as the images in MedNIST. The training process was very short so a network with a longer training time would produce better results.

### Downloading the Dataset

Download the dataset from [here](https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/MedNIST.tar.gz) and extract the contents to a convenient location.

The MedNIST dataset was gathered from several sets from [TCIA](https://wiki.cancerimagingarchive.net/display/Public/Data+Usage+Policies+and+Restrictions),

[the RSNA Bone Age Challenge](http://rsnachallenges.cloudapp.net/competitions/4),

and [the NIH Chest X-ray dataset](https://cloud.google.com/healthcare/docs/resources/public-datasets/nih-chest).

The dataset is kindly made available by [Dr. Bradley J. Erickson M.D., Ph.D.](https://www.mayo.edu/research/labs/radiology-informatics/overview) (Department of Radiology, Mayo Clinic)

under the Creative Commons [CC BY-SA 4.0 license](https://creativecommons.org/licenses/by-sa/4.0/).

If you use the MedNIST dataset, please acknowledge the source.

### Training

Assuming the current directory is the bundle directory, and the dataset was extracted to the directory `./MedNIST`, the following command will train the network for 50 epochs:

```

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json --logging_file configs/logging.conf --bundle_root .

```

Not also the output from the training will be placed in the `models` directory but will not overwrite the `model.pt` file that may be there already. You will have to manually rename the most recent checkpoint file to `model.pt` to use the inference script mentioned below after checking the results are correct. This saved checkpoint contains a dictionary with the generator weights stored as `model` and omits the discriminator.

Another feature in the training file is the addition of sigmoid activation to the network by modifying it's structure at runtime. This is done with a line in the `training` section calling `add_module` on a layer of the network. This works best for training although the definition of the model now doesn't strictly match what it is in the `generator` section.

The generator and discriminator networks were both trained with the `Adam` optimizer with a learning rate of 0.0002 and `betas` values `[0.5, 0.999]`. These have been emperically found to be good values for the optimizer and this GAN problem.

### Inference

The included `inference.json` generates a set number of png samples from the network and saves these to the directory `./outputs`. The output directory can be changed by setting the `output_dir` value, and the number of samples changed by setting the `num_samples` value. The following command line assumes it is invoked in the bundle directory:

```

python -m monai.bundle run inferring --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf --bundle_root .

```

Note this script uses postprocessing to apply the sigmoid activation the model's outputs and to save the results to image files.

### Export

The generator can be exported to a Torchscript bundle with the following:

```

python -m monai.bundle ckpt_export network_def --filepath mednist_gan.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

```

The model can be loaded without MONAI code after this operation. For example, an image can be generated from a set of random values with:

```python

import torch

net = torch.jit.load("mednist_gan.ts")

latent = torch.rand(1, 64)

img = net(latent) # (1,1,64,64)

```

# License

Copyright (c) MONAI Consortium

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

monai-test/endoscopic_inbody_classification

|

monai-test

| 2023-08-16T02:44:21Z | 0 | 0 |

monai

|

[

"monai",

"medical",

"arxiv:1709.01507",

"license:apache-2.0",

"region:us"

] | null | 2023-08-16T02:42:51Z |

---

tags:

- monai

- medical

library_name: monai

license: apache-2.0

---

# Model Overview

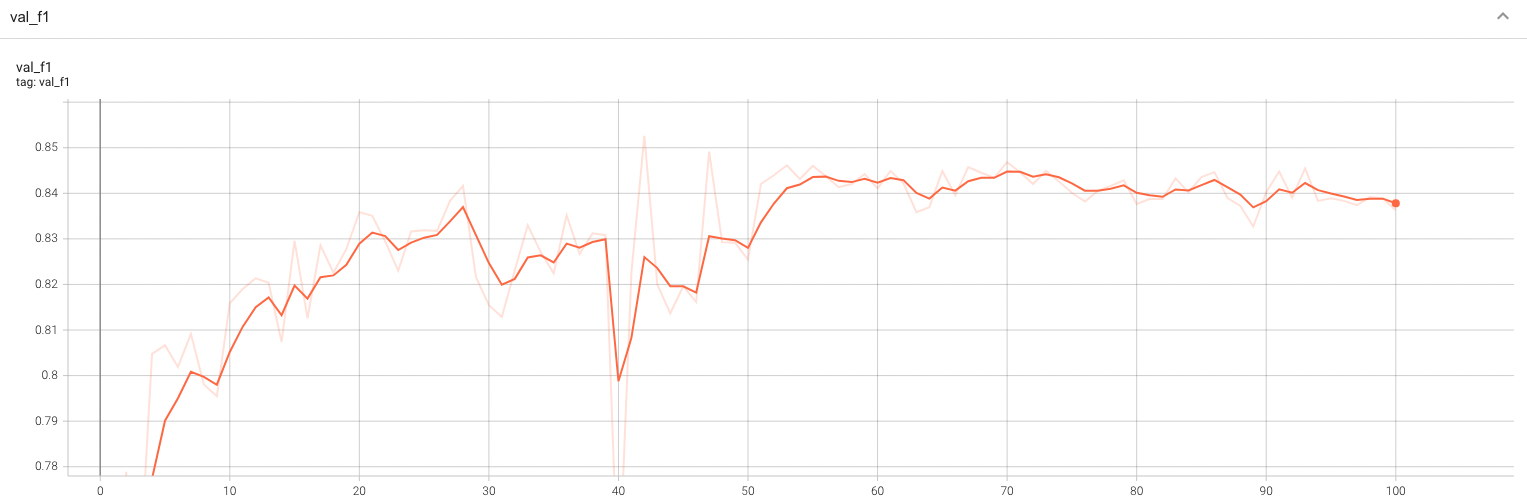

A pre-trained model for the endoscopic inbody classification task and trained using the SEResNet50 structure, whose details can be found in [1]. All datasets are from private samples of [Activ Surgical](https://www.activsurgical.com/). Samples in training and validation dataset are from the same 4 videos, while test samples are from different two videos.

The [PyTorch model](https://drive.google.com/file/d/14CS-s1uv2q6WedYQGeFbZeEWIkoyNa-x/view?usp=sharing) and [torchscript model](https://drive.google.com/file/d/1fOoJ4n5DWKHrt9QXTZ2sXwr9C-YvVGCM/view?usp=sharing) are shared in google drive. Modify the `bundle_root` parameter specified in `configs/train.json` and `configs/inference.json` to reflect where models are downloaded. Expected directory path to place downloaded models is `models/` under `bundle_root`.

## Data

The datasets used in this work were provided by [Activ Surgical](https://www.activsurgical.com/).

Since datasets are private, we provide a [link](https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/inbody_outbody_samples.zip) of 20 samples (10 in-body and 10 out-body) to show what they look like.

### Preprocessing

After downloading this dataset, python script in `scripts` folder named `data_process` can be used to generate label json files by running the command below and modifying `datapath` to path of unziped downloaded data. Generated label json files will be stored in `label` folder under the bundle path.

```

python scripts/data_process.py --datapath /path/to/data/root

```

By default, label path parameter in `train.json` and `inference.json` of this bundle is point to the generated `label` folder under bundle path. If you move these generated label files to another place, please modify the `train_json`, `val_json` and `test_json` parameters specified in `configs/train.json` and `configs/inference.json` to where these label files are.

The input label json should be a list made up by dicts which includes `image` and `label` keys. An example format is shown below.

```

[

{

"image":"/path/to/image/image_name0.jpg",

"label": 0

},

{

"image":"/path/to/image/image_name1.jpg",

"label": 0

},

{

"image":"/path/to/image/image_name2.jpg",

"label": 1

},

....

{

"image":"/path/to/image/image_namek.jpg",

"label": 0

},

]

```

## Training configuration

The training as performed with the following:

- GPU: At least 12GB of GPU memory

- Actual Model Input: 256 x 256 x 3

- Optimizer: Adam

- Learning Rate: 1e-3

### Input

A three channel video frame

### Output

Two Channels

- Label 0: in body

- Label 1: out body

## Performance

Accuracy was used for evaluating the performance of the model. This model achieves an accuracy score of 0.99

#### Training Loss

#### Validation Accuracy

#### TensorRT speedup

The `endoscopic_inbody_classification` bundle supports acceleration with TensorRT through the ONNX-TensorRT method. The table below displays the speedup ratios observed on an A100 80G GPU.

| method | torch_fp32(ms) | torch_amp(ms) | trt_fp32(ms) | trt_fp16(ms) | speedup amp | speedup fp32 | speedup fp16 | amp vs fp16|

| :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: |

| model computation | 6.50 | 9.23 | 2.78 | 2.31 | 0.70 | 2.34 | 2.81 | 4.00 |

| end2end | 23.54 | 23.78 | 7.37 | 7.14 | 0.99 | 3.19 | 3.30 | 3.33 |

Where:

- `model computation` means the speedup ratio of model's inference with a random input without preprocessing and postprocessing

- `end2end` means run the bundle end-to-end with the TensorRT based model.

- `torch_fp32` and `torch_amp` are for the PyTorch models with or without `amp` mode.

- `trt_fp32` and `trt_fp16` are for the TensorRT based models converted in corresponding precision.

- `speedup amp`, `speedup fp32` and `speedup fp16` are the speedup ratios of corresponding models versus the PyTorch float32 model

- `amp vs fp16` is the speedup ratio between the PyTorch amp model and the TensorRT float16 based model.

Currently, the only available method to accelerate this model is through ONNX-TensorRT. However, the Torch-TensorRT method is under development and will be available in the near future.

This result is benchmarked under:

- TensorRT: 8.5.3+cuda11.8

- Torch-TensorRT Version: 1.4.0

- CPU Architecture: x86-64

- OS: ubuntu 20.04

- Python version:3.8.10

- CUDA version: 12.0

- GPU models and configuration: A100 80G

## MONAI Bundle Commands

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

For more details usage instructions, visit the [MONAI Bundle Configuration Page](https://docs.monai.io/en/latest/config_syntax.html).

#### Execute training:

```

python -m monai.bundle run --config_file configs/train.json

```

Please note that if the default dataset path is not modified with the actual path in the bundle config files, you can also override it by using `--dataset_dir`:

```

python -m monai.bundle run --config_file configs/train.json --dataset_dir <actual dataset path>

```

#### Override the `train` config to execute multi-GPU training:

```

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run \

--config_file "['configs/train.json','configs/multi_gpu_train.json']"

```

Please note that the distributed training-related options depend on the actual running environment; thus, users may need to remove `--standalone`, modify `--nnodes`, or do some other necessary changes according to the machine used. For more details, please refer to [pytorch's official tutorial](https://pytorch.org/tutorials/intermediate/ddp_tutorial.html).

In addition, if using the 20 samples example dataset, the preprocessing script will divide the samples to 16 training samples, 2 validation samples and 2 test samples. However, pytorch multi-gpu training requires number of samples in dataloader larger than gpu numbers. Therefore, please use no more than 2 gpus to run this bundle if using the 20 samples example dataset.

#### Override the `train` config to execute evaluation with the trained model:

```

python -m monai.bundle run --config_file "['configs/train.json','configs/evaluate.json']"

```

#### Execute inference:

```

python -m monai.bundle run --config_file configs/inference.json

```

The classification result of every images in `test.json` will be printed to the screen.

#### Export checkpoint to TorchScript file:

```

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

```

#### Export checkpoint to TensorRT based models with fp32 or fp16 precision:

```bash

python -m monai.bundle trt_export --net_id network_def \

--filepath models/model_trt.ts --ckpt_file models/model.pt \

--meta_file configs/metadata.json --config_file configs/inference.json \

--precision <fp32/fp16> --use_onnx "True" --use_trace "True"

```

#### Execute inference with the TensorRT model:

```

python -m monai.bundle run --config_file "['configs/inference.json', 'configs/inference_trt.json']"

```

# References

[1] J. Hu, L. Shen and G. Sun, Squeeze-and-Excitation Networks, 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 7132-7141. https://arxiv.org/pdf/1709.01507.pdf

# License

Copyright (c) MONAI Consortium

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

|

peteryushunli/bert-finetuned-ner

|

peteryushunli

| 2023-08-16T02:26:18Z | 120 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"base_model:google-bert/bert-base-cased",

"base_model:finetune:google-bert/bert-base-cased",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-08-16T02:10:28Z |

---

license: apache-2.0

base_model: bert-base-cased

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

config: conll2003

split: validation

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9325396825396826

- name: Recall

type: recall

value: 0.9491753618310333

- name: F1

type: f1

value: 0.9407839866555464

- name: Accuracy

type: accuracy

value: 0.9861364572908695

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0589

- Precision: 0.9325

- Recall: 0.9492

- F1: 0.9408

- Accuracy: 0.9861

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.078 | 1.0 | 1756 | 0.0737 | 0.9054 | 0.9340 | 0.9195 | 0.9807 |

| 0.0387 | 2.0 | 3512 | 0.0591 | 0.9327 | 0.9498 | 0.9412 | 0.9861 |

| 0.0253 | 3.0 | 5268 | 0.0589 | 0.9325 | 0.9492 | 0.9408 | 0.9861 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

|

jjsprockel/distil-ast-audioset-finetuned-gtzan

|

jjsprockel

| 2023-08-16T02:18:46Z | 157 | 0 |

transformers

|

[

"transformers",

"pytorch",

"audio-spectrogram-transformer",

"audio-classification",

"generated_from_trainer",

"dataset:marsyas/gtzan",

"base_model:bookbot/distil-ast-audioset",

"base_model:finetune:bookbot/distil-ast-audioset",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

audio-classification

| 2023-08-16T01:49:11Z |

---

license: apache-2.0

base_model: bookbot/distil-ast-audioset

tags:

- generated_from_trainer

datasets:

- marsyas/gtzan

metrics:

- accuracy

model-index:

- name: distil-ast-audioset-finetuned-gtzan

results:

- task:

name: Audio Classification

type: audio-classification

dataset:

name: GTZAN

type: marsyas/gtzan

config: all

split: train

args: all

metrics:

- name: Accuracy

type: accuracy

value: 0.89

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distil-ast-audioset-finetuned-gtzan

This model is a fine-tuned version of [bookbot/distil-ast-audioset](https://huggingface.co/bookbot/distil-ast-audioset) on the GTZAN dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3897

- Accuracy: 0.89

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 6

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.321 | 0.99 | 28 | 0.6668 | 0.82 |

| 0.4901 | 1.98 | 56 | 0.5119 | 0.85 |

| 0.2659 | 2.97 | 84 | 0.4564 | 0.87 |

| 0.1518 | 4.0 | 113 | 0.3853 | 0.88 |

| 0.0626 | 4.99 | 141 | 0.3862 | 0.89 |

| 0.0309 | 5.95 | 168 | 0.3897 | 0.89 |

### Framework versions

- Transformers 4.32.0.dev0

- Pytorch 2.0.1+cu118

- Datasets 2.14.4

- Tokenizers 0.13.3

|

tomoohive/a2c-PandaReachDense-v3

|

tomoohive

| 2023-08-16T02:18:42Z | 2 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"PandaReachDense-v3",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-08-09T06:18:53Z |

---

library_name: stable-baselines3

tags:

- PandaReachDense-v3

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v3

type: PandaReachDense-v3

metrics:

- type: mean_reward

value: -0.22 +/- 0.09

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v3**

This is a trained model of a **A2C** agent playing **PandaReachDense-v3**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

yeongsang2/polyglot-ko-12.8B-v.1.02-checkpoint-240

|

yeongsang2

| 2023-08-16T02:15:59Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-08-16T02:13:35Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.5.0.dev0

|

JuiThe/mt5base_lora_Wreview_30e

|

JuiThe

| 2023-08-16T01:51:55Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-08-16T01:51:54Z |

---

library_name: peft

---

## Training procedure

### Framework versions

- PEFT 0.4.0

|

HachiML/ja-stablelm-alpha-7b-dolly-ja-qlora-3ep-v8

|

HachiML

| 2023-08-16T01:43:39Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-08-16T01:43:28Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.4.0

|

ialvarenga/finetuned-mpnet-citation-itent

|

ialvarenga

| 2023-08-16T01:38:01Z | 3 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"setfit",

"text-classification",

"arxiv:2209.11055",

"license:apache-2.0",

"region:us"

] |

text-classification

| 2023-08-14T01:47:41Z |

---

license: apache-2.0

tags:

- setfit

- sentence-transformers

- text-classification

pipeline_tag: text-classification

---

# ialvarenga/finetuned-mpnet-citation-itent

This is a [SetFit model](https://github.com/huggingface/setfit) that can be used for text classification. The model has been trained using an efficient few-shot learning technique that involves:

1. Fine-tuning a [Sentence Transformer](https://www.sbert.net) with contrastive learning.

2. Training a classification head with features from the fine-tuned Sentence Transformer.

## Usage

To use this model for inference, first install the SetFit library:

```bash

python -m pip install setfit

```

You can then run inference as follows:

```python

from setfit import SetFitModel

# Download from Hub and run inference

model = SetFitModel.from_pretrained("ialvarenga/finetuned-mpnet-citation-itent")

# Run inference

preds = model(["i loved the spiderman movie!", "pineapple on pizza is the worst 🤮"])

```

## BibTeX entry and citation info

```bibtex

@article{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

```

|

edor/Platypus2-mini-7B

|

edor

| 2023-08-16T01:35:11Z | 1,399 | 1 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"license:other",

"autotrain_compatible",