modelId (string, lengths 5-139) | author (string, lengths 2-42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-09-01 18:27:28) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 532 classes) | tags (list, lengths 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-09-01 18:27:19) | card (string, lengths 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

huggingtweets/planetmoney

|

huggingtweets

| 2022-03-19T20:19:56Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

thumbnail: http://www.huggingtweets.com/planetmoney/1647721191942/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/473888336449269761/vIurMh9f_400x400.png')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">NPR's Planet Money</div>

<div style="text-align: center; font-size: 14px;">@planetmoney</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from NPR's Planet Money.

| Data | NPR's Planet Money |

| --- | --- |

| Tweets downloaded | 3246 |

| Retweets | 601 |

| Short tweets | 37 |

| Tweets kept | 2608 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/7jiqlr8t/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
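
The counts in the table are consistent with a simple filter: tweets kept = tweets downloaded - retweets - short tweets (3246 - 601 - 37 = 2608). The sketch below illustrates such a filter. It is only an illustration: the `RT @` prefix check and the `MIN_WORDS` threshold are assumptions, since this card does not state the criteria huggingtweets actually applies.

```python
# Hypothetical filter; the real huggingtweets criteria are not stated in this card.
MIN_WORDS = 3  # assumed threshold for a "short" tweet


def filter_tweets(tweets, min_words=MIN_WORDS):
    """Split tweets into kept / retweets / short, mirroring the table's rows."""
    kept, retweets, short = [], [], []
    for text in tweets:
        if text.startswith("RT @"):          # assumed retweet marker
            retweets.append(text)
        elif len(text.split()) < min_words:  # assumed definition of "short"
            short.append(text)
        else:
            kept.append(text)
    return kept, retweets, short


# The table's arithmetic: downloaded - retweets - short = kept
assert 3246 - 601 - 37 == 2608
```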

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @planetmoney's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/1t6h63jy) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/1t6h63jy/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

                     model='huggingtweets/planetmoney')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

ronykroy/distilbert-base-uncased-finetuned-emotion

|

ronykroy

| 2022-03-19T17:55:13Z | 15 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-19T17:30:38Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.922

- name: F1

type: f1

value: 0.9222310284051585

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2334

- Accuracy: 0.922

- F1: 0.9222

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8454 | 1.0 | 250 | 0.3308 | 0.8975 | 0.8937 |

| 0.2561 | 2.0 | 500 | 0.2334 | 0.922 | 0.9222 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.16.1

- Tokenizers 0.10.3

|

sanchit-gandhi/wav2vec2-2-gpt2-no-adapter-regularisation

|

sanchit-gandhi

| 2022-03-19T17:43:39Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"speech-encoder-decoder",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:librispeech_asr",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-03-17T16:34:45Z |

---

tags:

- generated_from_trainer

datasets:

- librispeech_asr

model-index:

- name: ''

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

#

This model was trained from scratch on the librispeech_asr dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7494

- Wer: 1.0532
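
A WER above 1.0 can look like a reporting error, but it is possible: WER is the number of word substitutions, deletions, and insertions divided by the number of reference words, so a hypothesis with many inserted words can exceed 1.0. The following is a minimal pure-Python sketch of that definition. It is not the metric implementation used to produce the numbers in this card.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference prefix so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# Three inserted words against a two-word reference: WER = 3 / 2
print(word_error_rate("hello world", "hello there big wide world"))  # 1.5
```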

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 20.0

- mixed_precision_training: Native AMP
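
To make the schedule concrete, here is a minimal sketch of a linear schedule with warmup using the values listed above: the rate rises linearly to `learning_rate` over the warmup steps, then decays linearly to zero. `TOTAL_STEPS` is an assumption, estimated from the training results table in this card (17,500 steps at epoch 19.62, so roughly 892 steps per epoch over 20 epochs). The batch-size line assumes a single device.

```python
PEAK_LR = 1e-4          # learning_rate from the list above
WARMUP_STEPS = 1000     # lr_scheduler_warmup_steps from the list above
TOTAL_STEPS = 17_840    # assumption: ~892 steps/epoch x 20 epochs


def linear_schedule_lr(step: int) -> float:
    """Linear warmup from 0 to PEAK_LR, then linear decay to 0 at TOTAL_STEPS."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    remaining = max(0, TOTAL_STEPS - step)
    return PEAK_LR * remaining / (TOTAL_STEPS - WARMUP_STEPS)


# Effective batch size: per-device batch x gradient accumulation steps
total_train_batch_size = 8 * 4  # one device assumed

for step in (0, 500, 1000, 10_000, TOTAL_STEPS):
    print(step, linear_schedule_lr(step))
```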

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 3.4828 | 2.8 | 2500 | 4.0554 | 1.7873 |

| 0.8683 | 5.61 | 5000 | 2.5401 | 1.3156 |

| 0.4394 | 8.41 | 7500 | 1.7519 | 1.1129 |

| 0.0497 | 11.21 | 10000 | 1.7102 | 1.0738 |

| 0.031 | 14.01 | 12500 | 1.7395 | 1.0512 |

| 0.0508 | 16.82 | 15000 | 1.7254 | 1.0463 |

| 0.0462 | 19.62 | 17500 | 1.7494 | 1.0532 |

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu113

- Datasets 1.18.3

- Tokenizers 0.11.0

|

vinaykudari/distilGPT-ft-eli5

|

vinaykudari

| 2022-03-19T17:24:50Z | 7 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-19T16:05:12Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: distilGPT-ft-eli5

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilGPT-ft-eli5

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unspecified dataset (the Trainer recorded the dataset name as `None`).

It achieves the following results on the evaluation set:

- Loss: 5.5643
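
A language-modeling loss is often easier to interpret as perplexity. The sketch below converts the reported loss, assuming it is the mean per-token cross-entropy in nats; the card does not state this explicitly.

```python
import math

eval_loss = 5.5643  # final validation loss reported above

# Assumption: the loss is mean per-token cross-entropy in nats
perplexity = math.exp(eval_loss)
print(f"perplexity ~ {perplexity:.0f}")
```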

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 30

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 281 | 5.8277 |

| 5.7427 | 2.0 | 562 | 5.7525 |

| 5.7427 | 3.0 | 843 | 5.7016 |

| 5.5614 | 4.0 | 1124 | 5.6593 |

| 5.5614 | 5.0 | 1405 | 5.6273 |

| 5.4408 | 6.0 | 1686 | 5.6029 |

| 5.4408 | 7.0 | 1967 | 5.5855 |

| 5.3522 | 8.0 | 2248 | 5.5739 |

| 5.2948 | 9.0 | 2529 | 5.5670 |

| 5.2948 | 10.0 | 2810 | 5.5643 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.6.0

- Datasets 2.0.0

- Tokenizers 0.11.6

|

sanchit-gandhi/wav2vec2-2-gpt2-regularisation

|

sanchit-gandhi

| 2022-03-19T17:11:48Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"speech-encoder-decoder",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:librispeech_asr",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-03-17T16:34:24Z |

---

tags:

- generated_from_trainer

datasets:

- librispeech_asr

model-index:

- name: ''

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

#

This model was trained from scratch on the librispeech_asr dataset.

It achieves the following results on the evaluation set:

- Loss: 1.8529

- Wer: 0.9977

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 20.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 3.5506 | 2.8 | 2500 | 4.4928 | 1.8772 |

| 0.5145 | 5.61 | 5000 | 1.8942 | 1.1063 |

| 0.2736 | 8.41 | 7500 | 1.6550 | 1.0372 |

| 0.0807 | 11.21 | 10000 | 1.7601 | 1.0004 |

| 0.0439 | 14.01 | 12500 | 1.8014 | 1.0022 |

| 0.043 | 16.82 | 15000 | 1.8534 | 1.0097 |

| 0.0434 | 19.62 | 17500 | 1.8529 | 0.9977 |

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu113

- Datasets 1.18.3

- Tokenizers 0.11.0

|

huggingtweets/abombayboy

|

huggingtweets

| 2022-03-19T16:13:12Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-19T15:53:28Z |

---

language: en

thumbnail: http://www.huggingtweets.com/abombayboy/1647706387106/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1465673407178043396/aYbTBRbu_400x400.png')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Bombay Boy</div>

<div style="text-align: center; font-size: 14px;">@abombayboy</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Bombay Boy.

| Data | Bombay Boy |

| --- | --- |

| Tweets downloaded | 3238 |

| Retweets | 927 |

| Short tweets | 181 |

| Tweets kept | 2130 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3paz3q98/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @abombayboy's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/331ordwj) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/331ordwj/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

                     model='huggingtweets/abombayboy')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

Pavithra/code-parrot

|

Pavithra

| 2022-03-19T04:04:29Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-19T03:52:29Z |

# CodeParrot 🦜 (small)

CodeParrot 🦜 is a GPT-2 model (110M parameters) trained to generate Python code.

## Usage

You can load the CodeParrot model and tokenizer directly in `transformers`:

```Python

# AutoModelForCausalLM replaces the deprecated AutoModelWithLMHead

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("lvwerra/codeparrot-small")

model = AutoModelForCausalLM.from_pretrained("lvwerra/codeparrot-small")

inputs = tokenizer("def hello_world():", return_tensors="pt")

outputs = model(**inputs)

```

or with a `pipeline`:

```Python

from transformers import pipeline

pipe = pipeline("text-generation", model="lvwerra/codeparrot-small")

outputs = pipe("def hello_world():")

```

## Training

The model was trained on the cleaned [CodeParrot 🦜 dataset](https://huggingface.co/datasets/lvwerra/codeparrot-clean) with the following settings:

|Config|Value|

|-------|-----|

|Batch size| 192 |

|Context size| 1024 |

|Training steps| 150'000|

|Gradient accumulation| 1|

|Gradient checkpointing| False|

|Learning rate| 5e-4 |

|Weight decay | 0.1 |

|Warmup steps| 2000 |

|Schedule| Cosine |

The training was executed on 16 x A100 (40GB) GPUs. This setting amounts to roughly 29 billion tokens.
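
The token count follows from the settings in the table. The short check below assumes that "Batch size" is the number of sequences per optimizer step and that every sequence fills the full context:

```python
batch_size = 192          # sequences per optimizer step (from the table)
context_size = 1024       # tokens per sequence (from the table)
training_steps = 150_000  # from the table
grad_accumulation = 1     # from the table

tokens_seen = batch_size * grad_accumulation * context_size * training_steps
print(f"{tokens_seen:,} tokens (~{tokens_seen / 1e9:.1f} billion)")
```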

## Performance

We evaluated the model on OpenAI's [HumanEval](https://huggingface.co/datasets/openai_humaneval) benchmark which consists of programming challenges:

| Metric | Value |

|-------|-----|

|pass@1 | 3.80% |

|pass@10 | 6.57% |

|pass@100 | 12.78% |

The [pass@k metric](https://huggingface.co/metrics/code_eval) tells the probability that at least one out of k generations passes the tests.
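
In practice pass@k is usually estimated from n ≥ k generations per problem, of which c pass the tests, as 1 - C(n - c, k) / C(n, k). The sketch below implements that commonly used unbiased estimator. The sample counts in the example are made up for illustration, and the card does not confirm the exact evaluation settings behind the numbers above.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of pass@k from n samples of which c passed.

    Computes 1 - C(n - c, k) / C(n, k): one minus the probability that a
    random size-k subset of the n samples contains no passing sample.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # math.comb returns 0 when k > n - c, so the estimate is 1.0 in that case
    return 1.0 - comb(n - c, k) / comb(n, k)


# Example with made-up counts: 200 samples for one problem, 8 of them pass
for k in (1, 10, 100):
    print(k, round(pass_at_k(200, 8, k), 4))
```

The benchmark score is then the mean of this estimate over all problems.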

## Resources

- Dataset: [full](https://huggingface.co/datasets/lvwerra/codeparrot-clean), [train](https://huggingface.co/datasets/lvwerra/codeparrot-clean-train), [valid](https://huggingface.co/datasets/lvwerra/codeparrot-clean-valid)

- Code: [repository](https://github.com/huggingface/transformers/tree/master/examples/research_projects/codeparrot)

- Spaces: [generation](), [highlighting]()

|

mikeadimech/bart-large-cnn-qmsum-meeting-summarization

|

mikeadimech

| 2022-03-18T19:00:43Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bart",

"text2text-generation",

"generated_from_trainer",

"dataset:yawnick/QMSum",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-03-18T12:44:49Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: bart-large-cnn-qmsum-meeting-summarization

results: []

datasets:

- yawnick/QMSum

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-large-cnn-qmsum-meeting-summarization

This model is a fine-tuned version of [facebook/bart-large-cnn](https://huggingface.co/facebook/bart-large-cnn) on the yawnick/QMSum dataset (as listed in the card metadata).

It achieves the following results on the evaluation set:

- Loss: 5.7578

- Rouge1: 37.9431

- Rouge2: 10.6366

- Rougel: 25.5782

- Rougelsum: 33.0209

- Gen Len: 72.7714

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 500

- label_smoothing_factor: 0.1

### Training results

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

|

MehSatho/Tai-medium-Hermione

|

MehSatho

| 2022-03-18T18:56:41Z | 4 | 1 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-18T18:45:51Z |

---

tags:

- conversational

---

|

saattrupdan/xlmr-base-texas-squad-fr

|

saattrupdan

| 2022-03-18T16:56:07Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"question-answering",

"generated_from_trainer",

"license:mit",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-03-02T23:29:05Z |

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: xlmr-base-texas-squad-fr

results: []

widget:

- text: "Comment obtenir la coagulation?"

context: "La coagulation peut être obtenue soit par action d'une enzyme, la présure, soit par fermentation provoquée par des bactéries lactiques (le lactose est alors transformé en acide lactique), soit très fréquemment par combinaison des deux méthodes précédentes, soit par chauffage associé à une acidification directe (vinaigre…). On procède ensuite à l'égouttage. On obtient alors le caillé et le lactosérum. Le lactosérum peut aussi être utilisé directement : fromage de lactosérum comme le sérac, ou par réincorporation de ses composants."

---

# TExAS-SQuAD-fr

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the TExAS-SQuAD-fr dataset.

It achieves the following results on the evaluation set:

- Exact match: xx.xx%

- F1-score: xx.xx%

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 2.1478 | 0.23 | 1000 | 1.8543 |

| 1.9827 | 0.46 | 2000 | 1.7643 |

| 1.8427 | 0.69 | 3000 | 1.6789 |

| 1.8372 | 0.92 | 4000 | 1.6137 |

| 1.7318 | 1.15 | 5000 | 1.6093 |

| 1.6603 | 1.38 | 6000 | 1.7157 |

| 1.6334 | 1.61 | 7000 | 1.6302 |

| 1.6716 | 1.84 | 8000 | 1.5845 |

| 1.5192 | 2.06 | 9000 | 1.6690 |

| 1.5174 | 2.29 | 10000 | 1.6669 |

| 1.4611 | 2.52 | 11000 | 1.6301 |

| 1.4648 | 2.75 | 12000 | 1.6009 |

| 1.5052 | 2.98 | 13000 | 1.6133 |

### Framework versions

- Transformers 4.12.2

- Pytorch 1.8.1+cu101

- Datasets 1.12.1

- Tokenizers 0.10.3

|

mfleck/wav2vec2-large-xls-r-300m-german-with-lm

|

mfleck

| 2022-03-18T16:48:09Z | 5 | 1 |

transformers

|

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-03-10T16:46:25Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-large-xls-r-300m-german-with-lm

results: []

---

# wav2vec2-large-xls-r-300m-german-with-lm

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the German set of the Common Voice dataset.

It achieves a Word Error Rate of 8.8 percent on the evaluation set.

## Model description

German wav2vec2-xls-r-300m trained on the full train set of the Common Voice dataset, with an n-gram language model.

The full code is available in [my GitHub repository](https://github.com/MichaelFleck92/asr-wav2vec).

## Citation

Feel free to cite this work as:

```

@misc{mfleck/wav2vec2-large-xls-r-300m-german-with-lm,

title={XLS-R-300 Wav2Vec2 German with language model},

author={Fleck, Michael},

publisher={Hugging Face},

journal={Hugging Face Hub},

howpublished={\url{https://huggingface.co/mfleck/wav2vec2-large-xls-r-300m-german-with-lm}},

year={2022}

}

```

## Intended uses & limitations

Inference usage:

```python

from transformers import pipeline

pipe = pipeline(model="mfleck/wav2vec2-large-xls-r-300m-german-with-lm")

output = pipe("/path/to/file.wav",chunk_length_s=5, stride_length_s=1)

print(output["text"])

```

## Training and evaluation data

Script used for training (takes about 80 hours on a single A100 40GB):

```python

import random

import re

import json

from typing import Any, Dict, List, Optional, Union

import pandas as pd

import numpy as np

import torch

# import soundfile

from datasets import load_dataset, load_metric, Audio

from dataclasses import dataclass, field

from transformers import Wav2Vec2CTCTokenizer, Wav2Vec2FeatureExtractor, Wav2Vec2Processor, TrainingArguments, Trainer, Wav2Vec2ForCTC

'''

Most parts of this script are following the tutorial: https://huggingface.co/blog/fine-tune-xlsr-wav2vec2

'''

common_voice_train = load_dataset("common_voice", "de", split="train+validation")

# Use train dataset with less training data

#common_voice_train = load_dataset("common_voice", "de", split="train[:3%]")

common_voice_test = load_dataset("common_voice", "de", split="test")

# Remove unused columns

common_voice_train = common_voice_train.remove_columns(["accent", "age", "client_id", "down_votes", "gender", "locale", "segment", "up_votes"])

common_voice_test = common_voice_test.remove_columns(["accent", "age", "client_id", "down_votes", "gender", "locale", "segment", "up_votes"])

# Remove examples containing characters that do not occur in German

print(len(common_voice_train))

regex = "[^A-Za-zäöüÄÖÜß,?.! ]+"

common_voice_train = common_voice_train.filter(lambda example: not re.search(regex, example['sentence']))

common_voice_test = common_voice_test.filter(lambda example: not re.search(regex, example['sentence']))

print(len(common_voice_train))

# Remove special chars from transcripts

chars_to_remove_regex = '[\,\?\.\!\-\;\:\"\“\%\‘\”\�\']'

def remove_special_characters(batch):

    batch["sentence"] = re.sub(chars_to_remove_regex, '', batch["sentence"]).lower()

    return batch

common_voice_train = common_voice_train.map(remove_special_characters, num_proc=10)

common_voice_test = common_voice_test.map(remove_special_characters, num_proc=10)

# Show some random transcripts to verify that preprocessing worked as expected

def show_random_elements(dataset, num_examples=10):

    assert num_examples <= len(dataset), "Can't pick more elements than there are in the dataset."

    picks = []

    for _ in range(num_examples):

        pick = random.randint(0, len(dataset)-1)

        while pick in picks:

            pick = random.randint(0, len(dataset)-1)

        picks.append(pick)

    print(str(dataset[picks]))

show_random_elements(common_voice_train.remove_columns(["path","audio"]))

# Extract all chars which exist in the datasets and add the wav2vec2 special tokens

def extract_all_chars(batch):

    all_text = " ".join(batch["sentence"])

    vocab = list(set(all_text))

    return {"vocab": [vocab], "all_text": [all_text]}

vocab_train = common_voice_train.map(extract_all_chars, batched=True, batch_size=-1, keep_in_memory=True, remove_columns=common_voice_train.column_names)

vocab_test = common_voice_test.map(extract_all_chars, batched=True, batch_size=-1, keep_in_memory=True, remove_columns=common_voice_test.column_names)

vocab_list = list(set(vocab_train["vocab"][0]) | set(vocab_test["vocab"][0]))

vocab_dict = {v: k for k, v in enumerate(sorted(vocab_list))}

vocab_dict

vocab_dict["|"] = vocab_dict[" "]

del vocab_dict[" "]

vocab_dict["[UNK]"] = len(vocab_dict)

vocab_dict["[PAD]"] = len(vocab_dict)

len(vocab_dict)

with open('vocab.json', 'w') as vocab_file:

    json.dump(vocab_dict, vocab_file)

# Create tokenizer and repo at Huggingface

tokenizer = Wav2Vec2CTCTokenizer.from_pretrained("./", unk_token="[UNK]", pad_token="[PAD]", word_delimiter_token="|")

repo_name = "wav2vec2-large-xls-r-300m-german-with-lm"

tokenizer.push_to_hub(repo_name)

print("pushed to hub")

# Create feature extractor and processor

feature_extractor = Wav2Vec2FeatureExtractor(feature_size=1, sampling_rate=16000, padding_value=0.0, do_normalize=True, return_attention_mask=True)

processor = Wav2Vec2Processor(feature_extractor=feature_extractor, tokenizer=tokenizer)

# Cast audio column

common_voice_train = common_voice_train.cast_column("audio", Audio(sampling_rate=16_000))

common_voice_test = common_voice_test.cast_column("audio", Audio(sampling_rate=16_000))

# Convert audio signal to array and 16 kHz sampling rate

def prepare_dataset(batch):

    audio = batch["audio"]

    # batched output is "un-batched"

    batch["input_values"] = processor(audio["array"], sampling_rate=audio["sampling_rate"]).input_values[0]

    # Save an audio file to check if it gets loaded correctly

    # soundfile.write("/home/debian/trainnew/test.wav",batch["input_values"],audio["sampling_rate"])

    batch["input_length"] = len(batch["input_values"])

    with processor.as_target_processor():

        batch["labels"] = processor(batch["sentence"]).input_ids

    return batch

common_voice_train = common_voice_train.map(prepare_dataset, remove_columns=common_voice_train.column_names)

common_voice_test = common_voice_test.map(prepare_dataset, remove_columns=common_voice_test.column_names)

print("dataset prepared")

@dataclass

class DataCollatorCTCWithPadding:

    """

    Data collator that will dynamically pad the inputs received.

    Args:

        processor (:class:`~transformers.Wav2Vec2Processor`)

            The processor used for processing the data.

        padding (:obj:`bool`, :obj:`str` or :class:`~transformers.tokenization_utils_base.PaddingStrategy`, `optional`, defaults to :obj:`True`):

            Select a strategy to pad the returned sequences (according to the model's padding side and padding index)

            among:

            * :obj:`True` or :obj:`'longest'`: Pad to the longest sequence in the batch (or no padding if only a single

              sequence is provided).

            * :obj:`'max_length'`: Pad to a maximum length specified with the argument :obj:`max_length` or to the

              maximum acceptable input length for the model if that argument is not provided.

            * :obj:`False` or :obj:`'do_not_pad'` (default): No padding (i.e., can output a batch with sequences of

              different lengths).

    """

    processor: Wav2Vec2Processor

    padding: Union[bool, str] = True

    def __call__(self, features: List[Dict[str, Union[List[int], torch.Tensor]]]) -> Dict[str, torch.Tensor]:

        # split inputs and labels since they have to be of different lengths and need

        # different padding methods

        input_features = [{"input_values": feature["input_values"]} for feature in features]

        label_features = [{"input_ids": feature["labels"]} for feature in features]

        batch = self.processor.pad(

            input_features,

            padding=self.padding,

            return_tensors="pt",

        )

        with self.processor.as_target_processor():

            labels_batch = self.processor.pad(

                label_features,

                padding=self.padding,

                return_tensors="pt",

            )

        # replace padding with -100 to ignore loss correctly

        labels = labels_batch["input_ids"].masked_fill(labels_batch.attention_mask.ne(1), -100)

        batch["labels"] = labels

        return batch

data_collator = DataCollatorCTCWithPadding(processor=processor, padding=True)

# Use word error rate as metric

wer_metric = load_metric("wer")

def compute_metrics(pred):

    pred_logits = pred.predictions

    pred_ids = np.argmax(pred_logits, axis=-1)

    pred.label_ids[pred.label_ids == -100] = processor.tokenizer.pad_token_id

    pred_str = processor.batch_decode(pred_ids)

    # we do not want to group tokens when computing the metrics

    label_str = processor.batch_decode(pred.label_ids, group_tokens=False)

    wer = wer_metric.compute(predictions=pred_str, references=label_str)

    return {"wer": wer}

# Model and training parameters

model = Wav2Vec2ForCTC.from_pretrained(

    "facebook/wav2vec2-xls-r-300m",

    attention_dropout=0.094,

    hidden_dropout=0.01,

    feat_proj_dropout=0.04,

    mask_time_prob=0.08,

    layerdrop=0.04,

    ctc_loss_reduction="mean",

    pad_token_id=processor.tokenizer.pad_token_id,

    vocab_size=len(processor.tokenizer),

)

model.freeze_feature_extractor()

training_args = TrainingArguments(

    output_dir=repo_name,

    group_by_length=True,

    per_device_train_batch_size=32,

    gradient_accumulation_steps=2,

    evaluation_strategy="steps",

    num_train_epochs=20,

    gradient_checkpointing=True,

    fp16=True,

    save_steps=5000,

    eval_steps=5000,

    logging_steps=100,

    learning_rate=1e-4,

    warmup_steps=500,

    save_total_limit=3,

    push_to_hub=True,

)

trainer = Trainer(

model=model,

data_collator=data_collator,

args=training_args,

compute_metrics=compute_metrics,

train_dataset=common_voice_train,

eval_dataset=common_voice_test,

tokenizer=processor.feature_extractor,

)

# Start fine tuning

trainer.train()

# When done push final model to Huggingface hub

trainer.push_to_hub()

```
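The label-masking step in the collator can be illustrated without any framework. The following is a minimal sketch in plain Python, assuming labels arrive as lists of token ids; the helper name `pad_and_mask` and the `pad_token_id` value are illustrative and not part of the training script.

```python
IGNORE_INDEX = -100  # label value the collator writes into padded positions


def pad_and_mask(label_sequences, pad_token_id):
    """Pad label id lists to equal length, then mask the padding with IGNORE_INDEX."""
    max_len = max(len(seq) for seq in label_sequences)
    masked = []
    for seq in label_sequences:
        num_pad = max_len - len(seq)
        input_ids = seq + [pad_token_id] * num_pad
        attention_mask = [1] * len(seq) + [0] * num_pad
        # Keep real tokens, replace padded positions so the loss skips them
        masked.append([
            token if keep == 1 else IGNORE_INDEX
            for token, keep in zip(input_ids, attention_mask)
        ])
    return masked


print(pad_and_mask([[5, 6, 7], [8]], pad_token_id=0))
```

Masking via the attention mask rather than by comparing against the pad id keeps the sketch correct even if a real label happens to share the pad token's id.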

The model achieves a word error rate (WER) of 8.8%, as measured by the following evaluation script:

```python

import argparse

import re

from typing import Dict

import torch

from datasets import Audio, Dataset, load_dataset, load_metric

from transformers import AutoFeatureExtractor, pipeline

# load dataset

dataset = load_dataset("common_voice", "de", split="test")

# use only 1% of data

#dataset = load_dataset("common_voice", "de", split="test[:1%]")

# load processor

feature_extractor = AutoFeatureExtractor.from_pretrained("mfleck/wav2vec2-large-xls-r-300m-german-with-lm")

sampling_rate = feature_extractor.sampling_rate

dataset = dataset.cast_column("audio", Audio(sampling_rate=sampling_rate))

# load eval pipeline

# device=0 means GPU, use device=-1 for CPU

asr = pipeline("automatic-speech-recognition", model="mfleck/wav2vec2-large-xls-r-300m-german-with-lm", device=0)

# Remove examples containing characters that do not exist in German

regex = "[^A-Za-zäöüÄÖÜß,?.! ]+"

dataset = dataset.filter(lambda example: not re.search(regex, example["sentence"]))

chars_to_ignore_regex = '[\,\?\.\!\-\;\:\"\“\%\‘\”\�\']'

# map function to decode audio

def map_to_pred(batch):
    prediction = asr(batch["audio"]["array"], chunk_length_s=5, stride_length_s=1)

    # Print automatic generated transcript
    #print(str(prediction))

    batch["prediction"] = prediction["text"]

    text = batch["sentence"]
    batch["target"] = re.sub(chars_to_ignore_regex, "", text.lower()) + " "

    return batch

# run inference on all examples

result = dataset.map(map_to_pred, remove_columns=dataset.column_names)

# load metric

wer = load_metric("wer")

cer = load_metric("cer")

# compute metrics

wer_result = wer.compute(references=result["target"], predictions=result["prediction"])

cer_result = cer.compute(references=result["target"], predictions=result["prediction"])

# print results

result_str = f"WER: {wer_result}\n" f"CER: {cer_result}"

print(result_str)

```
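For reference, the word error rate is the word-level edit distance between prediction and reference, divided by the number of reference words. Below is a minimal pure-Python sketch of that definition for a single sentence pair. The function name `word_error_rate` is illustrative; the script above relies on the `wer` metric loaded through `datasets`, which may differ in details such as how scores are aggregated over a corpus.

```python
def word_error_rate(reference: str, prediction: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref_words = reference.split()
    pred_words = prediction.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")

    # previous_row[j] = edit distance between the reference prefix seen so far
    # and the first j predicted words
    previous_row = list(range(len(pred_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        current_row = [i]
        for j, pred_word in enumerate(pred_words, start=1):
            substitution = previous_row[j - 1] + (ref_word != pred_word)
            deletion = previous_row[j] + 1
            insertion = current_row[j - 1] + 1
            current_row.append(min(substitution, deletion, insertion))
        previous_row = current_row
    return previous_row[-1] / len(ref_words)


# One substituted word out of four reference words
print(word_error_rate("das ist ein test", "das ist kein test"))
```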

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 20

- mixed_precision_training: Native AMP
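To make the `linear` scheduler with 500 warmup steps concrete, here is a small sketch of the learning rate as a function of the optimizer step: it ramps up linearly during warmup, then decays linearly to zero. The function name `linear_schedule_lr` and the round `TOTAL_STEPS` figure are assumptions for illustration, not values read from the training logs.

```python
def linear_schedule_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 1e-4        # learning_rate from the list above
WARMUP_STEPS = 500    # lr_scheduler_warmup_steps from the list above
TOTAL_STEPS = 70_000  # assumed round figure, not taken from the training logs

for step in (0, 250, 500, 35_250, 70_000):
    print(step, linear_schedule_lr(step, BASE_LR, WARMUP_STEPS, TOTAL_STEPS))
```

With these numbers the peak rate is reached at step 500, and the rate is back to half the peak roughly midway through the remaining steps.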

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 0.1396 | 1.42 | 5000 | 0.1449 | 0.1479 |

| 0.1169 | 2.83 | 10000 | 0.1285 | 0.1286 |

| 0.0938 | 4.25 | 15000 | 0.1277 | 0.1230 |

| 0.0924 | 5.67 | 20000 | 0.1305 | 0.1191 |

| 0.0765 | 7.09 | 25000 | 0.1256 | 0.1158 |

| 0.0749 | 8.5 | 30000 | 0.1186 | 0.1092 |

| 0.066 | 9.92 | 35000 | 0.1173 | 0.1068 |

| 0.0581 | 11.34 | 40000 | 0.1225 | 0.1030 |

| 0.0582 | 12.75 | 45000 | 0.1153 | 0.0999 |

| 0.0507 | 14.17 | 50000 | 0.1182 | 0.0971 |

| 0.0491 | 15.59 | 55000 | 0.1136 | 0.0939 |

| 0.045 | 17.01 | 60000 | 0.1140 | 0.0914 |

| 0.0395 | 18.42 | 65000 | 0.1160 | 0.0902 |

| 0.037 | 19.84 | 70000 | 0.1148 | 0.0882 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.9.0+cu111

- Datasets 1.18.4

- Tokenizers 0.11.6

|

ScandinavianMrT/distilbert-IMDB-NEG

|

ScandinavianMrT

| 2022-03-18T16:43:11Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-18T16:15:50Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: distilbert-IMDB-NEG

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-IMDB-NEG

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1871

- Accuracy: 0.9346

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.1865 | 1.0 | 2000 | 0.1871 | 0.9346 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

|

brad1141/bert-finetuned-comp2

|

brad1141

| 2022-03-18T16:39:56Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-03-18T15:44:06Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-comp2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-comp2

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9570

- Precision: 0.5169

- Recall: 0.6765

- F1: 0.5820

- Accuracy: 0.5820

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 8

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 7

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.8434 | 1.0 | 934 | 0.7147 | 0.4475 | 0.6252 | 0.5096 | 0.5096 |

| 0.6307 | 2.0 | 1868 | 0.5959 | 0.5058 | 0.6536 | 0.5585 | 0.5585 |

| 0.4691 | 3.0 | 2802 | 0.6555 | 0.4761 | 0.6865 | 0.5521 | 0.5521 |

| 0.334 | 4.0 | 3736 | 0.7211 | 0.5292 | 0.6682 | 0.5863 | 0.5863 |

| 0.2326 | 5.0 | 4670 | 0.8046 | 0.4886 | 0.6865 | 0.5682 | 0.5682 |

| 0.1625 | 6.0 | 5604 | 0.8650 | 0.4972 | 0.6851 | 0.5728 | 0.5728 |

| 0.1195 | 7.0 | 6538 | 0.9570 | 0.5169 | 0.6765 | 0.5820 | 0.5820 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

|

facebook/wav2vec2-large-xlsr-53

|

facebook

| 2022-03-18T16:11:44Z | 557,799 | 121 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"wav2vec2",

"pretraining",

"speech",

"multilingual",

"dataset:common_voice",

"arxiv:2006.13979",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z |

---

language: multilingual

datasets:

- common_voice

tags:

- speech

license: apache-2.0

---

# Wav2Vec2-XLSR-53

[Facebook's XLSR-Wav2Vec2](https://ai.facebook.com/blog/wav2vec-20-learning-the-structure-of-speech-from-raw-audio/)

The base model was pretrained on speech audio sampled at 16 kHz. When using the model, make sure that your speech input is also sampled at 16 kHz. Note that this model should be fine-tuned on a downstream task, such as automatic speech recognition. Check out [this blog](https://huggingface.co/blog/fine-tune-wav2vec2-english) for more information.

[Paper](https://arxiv.org/abs/2006.13979)

Authors: Alexis Conneau, Alexei Baevski, Ronan Collobert, Abdelrahman Mohamed, Michael Auli

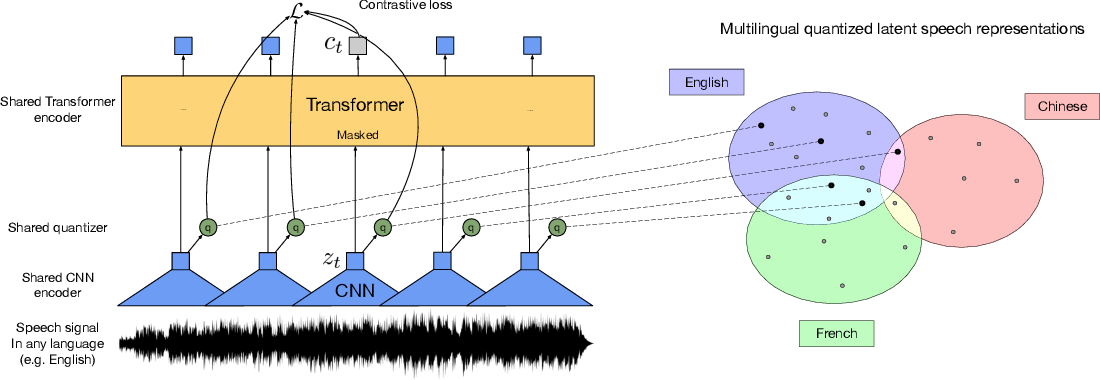

**Abstract**

This paper presents XLSR which learns cross-lingual speech representations by pretraining a single model from the raw waveform of speech in multiple languages. We build on wav2vec 2.0 which is trained by solving a contrastive task over masked latent speech representations and jointly learns a quantization of the latents shared across languages. The resulting model is fine-tuned on labeled data and experiments show that cross-lingual pretraining significantly outperforms monolingual pretraining. On the CommonVoice benchmark, XLSR shows a relative phoneme error rate reduction of 72% compared to the best known results. On BABEL, our approach improves word error rate by 16% relative compared to a comparable system. Our approach enables a single multilingual speech recognition model which is competitive to strong individual models. Analysis shows that the latent discrete speech representations are shared across languages with increased sharing for related languages. We hope to catalyze research in low-resource speech understanding by releasing XLSR-53, a large model pretrained in 53 languages.

The original model can be found under https://github.com/pytorch/fairseq/tree/master/examples/wav2vec#wav2vec-20.

# Usage

See [this notebook](https://colab.research.google.com/github/patrickvonplaten/notebooks/blob/master/Fine_Tune_XLSR_Wav2Vec2_on_Turkish_ASR_with_%F0%9F%A4%97_Transformers.ipynb) for more information on how to fine-tune the model.
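Because the model expects 16 kHz input, it can help to check audio files before feeding them to a fine-tuning pipeline. The sketch below uses only Python's standard `wave` module and therefore covers uncompressed WAV files only; the helper names `has_expected_rate` and `write_silence` are illustrative, and the temporary files exist only to make the example runnable.

```python
import os
import tempfile
import wave

EXPECTED_RATE = 16_000  # the card states the model was pretrained on 16 kHz audio


def has_expected_rate(path: str, expected: int = EXPECTED_RATE) -> bool:
    """Return True if the WAV file at `path` is sampled at `expected` Hz."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate() == expected


def write_silence(path: str, rate: int, seconds: float = 0.1) -> None:
    """Write a short mono 16-bit WAV file of silence (demo helper only)."""
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(rate)
        wav_file.writeframes(b"\x00\x00" * int(rate * seconds))


with tempfile.TemporaryDirectory() as tmp_dir:
    good = os.path.join(tmp_dir, "good.wav")
    bad = os.path.join(tmp_dir, "bad.wav")
    write_silence(good, 16_000)
    write_silence(bad, 44_100)
    print(has_expected_rate(good), has_expected_rate(bad))
```

Files that fail the check need to be resampled before use; the check itself does not modify the audio.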

|

TestSB3/ppo-CartPole-v1

|

TestSB3

| 2022-03-18T13:41:36Z | 0 | 0 | null |

[

"gym",

"reinforcement-learning",

"region:us"

] |

reinforcement-learning

| 2022-03-18T10:20:52Z |

---

tags:

- gym

- reinforcement-learning

---

# TestSB3/ppo-CartPole-v1

This is a trained model of a PPO agent playing CartPole-v1 using the [rl-baselines3-zoo](https://github.com/DLR-RM/rl-baselines3-zoo) library.

## Usage (with RL-baselines3-zoo)

Just clone the [rl-baselines3-zoo](https://github.com/DLR-RM/rl-baselines3-zoo) library.

Then run:

```

python enjoy.py --algo ppo --env CartPole-v1

```

## Evaluation Results

Mean Reward: 500.0 +/- 0.0 (300 test episodes)
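A result line of this form can be produced from a list of per-episode returns. The sketch below assumes the spread is the population standard deviation over episodes, and the reward list is a stand-in rather than real evaluation output. CartPole-v1 caps an episode at 500 steps with a reward of 1 per step, so a mean of 500.0 with zero spread means every test episode reached the cap.

```python
from statistics import mean, pstdev


def summarize_rewards(episode_rewards):
    """Return (mean, population standard deviation) of per-episode returns."""
    return mean(episode_rewards), pstdev(episode_rewards)


# Stand-in data: 300 episodes that all hit the CartPole-v1 cap of 500
rewards = [500.0] * 300
mean_reward, std_reward = summarize_rewards(rewards)
print(f"Mean Reward: {mean_reward:.1f} +/- {std_reward:.1f} ({len(rewards)} test episodes)")
```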

## Citing the Project

To cite this repository in publications:

```

@misc{rl-zoo3,

author = {Raffin, Antonin},

title = {RL Baselines3 Zoo},

year = {2020},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/DLR-RM/rl-baselines3-zoo}},

}

```

|

navteca/all-mpnet-base-v2

|

navteca

| 2022-03-18T11:28:42Z | 7 | 1 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"en",

"arxiv:1904.06472",

"arxiv:2102.07033",

"arxiv:2104.08727",

"arxiv:1704.05179",

"arxiv:1810.09305",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-03-02T23:29:05Z |

---

language: en

license: mit

pipeline_tag: sentence-similarity

tags:

- feature-extraction

- sentence-similarity

- sentence-transformers

---

# All MPNet base model (v2) for Semantic Search

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/all-mpnet-base-v2')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

import torch.nn.functional as F

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0] #First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/all-mpnet-base-v2')

model = AutoModel.from_pretrained('sentence-transformers/all-mpnet-base-v2')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

# Normalize embeddings

sentence_embeddings = F.normalize(sentence_embeddings, p=2, dim=1)

print("Sentence embeddings:")

print(sentence_embeddings)

```
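Since the embeddings above are L2-normalized, the dot product of two embeddings equals their cosine similarity, which is what semantic search typically ranks by. The following sketch shows this with toy 3-dimensional vectors standing in for the 768-dimensional model output; the helper names and the vectors are made up for illustration.

```python
import math


def l2_normalize(vector):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Toy vectors standing in for real sentence embeddings
query = l2_normalize([1.0, 2.0, 2.0])
candidates = {
    "similar": l2_normalize([2.0, 4.0, 4.0]),      # same direction as the query
    "orthogonal": l2_normalize([2.0, -1.0, 0.0]),  # perpendicular to the query
}

# For unit vectors the dot product is the cosine similarity
scores = {name: dot(query, vec) for name, vec in candidates.items()}
best = max(scores, key=scores.get)
print(best, scores)
```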

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/all-mpnet-base-v2)

------

## Background

The project aims to train sentence embedding models on very large sentence-level datasets using a self-supervised contrastive learning objective. We used the pretrained [`microsoft/mpnet-base`](https://huggingface.co/microsoft/mpnet-base) model and fine-tuned it on a dataset of 1B sentence pairs. We use a contrastive learning objective: given a sentence from the pair, the model should predict which of a set of randomly sampled other sentences was actually paired with it in our dataset.

We developed this model during the
[Community week using JAX/Flax for NLP & CV](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104),
organized by Hugging Face, as part of the project
[Train the Best Sentence Embedding Model Ever with 1B Training Pairs](https://discuss.huggingface.co/t/train-the-best-sentence-embedding-model-ever-with-1b-training-pairs/7354). We benefited from efficient hardware infrastructure to run the project (7 TPU v3-8s), as well as guidance on efficient deep learning frameworks from members of Google's Flax, JAX, and Cloud teams.

## Intended uses

Our model is intended to be used as a sentence and short paragraph encoder. Given an input text, it outputs a vector that captures
the semantic information. The sentence vector may be used for information retrieval, clustering, or sentence similarity tasks.

By default, input text longer than 384 word pieces is truncated.

## Training procedure

### Pre-training

We use the pretrained [`microsoft/mpnet-base`](https://huggingface.co/microsoft/mpnet-base) model. Please refer to the model card for more detailed information about the pre-training procedure.

### Fine-tuning

We fine-tune the model using a contrastive objective. Formally, we compute the cosine similarity for each possible sentence pair in the batch.
We then apply the cross-entropy loss by comparing with the true pairs.
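The objective just described can be sketched in plain Python: every anchor is scored against every candidate in the batch by cosine similarity, and cross entropy rewards giving the highest score to the true partner. This is an illustration rather than the training code; the function names are made up, and the `scale` factor applied to the similarities is an assumption that the card does not state.

```python
import math


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def in_batch_contrastive_loss(anchors, positives, scale=20.0):
    """Mean cross entropy where positives[i] is the true pair of anchors[i]."""
    losses = []
    for i, anchor in enumerate(anchors):
        # Similarity of this anchor to every candidate in the batch
        logits = [scale * cosine(anchor, positive) for positive in positives]
        # Log-sum-exp with max subtraction for numerical stability
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)


anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]   # each anchor is closest to its own pair
shuffled = [[0.1, 0.9], [0.9, 0.1]]  # pairs swapped, so the loss should be high

print(in_batch_contrastive_loss(anchors, aligned))
print(in_batch_contrastive_loss(anchors, shuffled))
```

Larger batches provide more in-batch negatives per anchor, which is one reason contrastive training of this kind tends to use large batch sizes.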

#### Hyper parameters

We trained our model on a TPU v3-8 for 100k steps using a batch size of 1024 (128 per TPU core).
We used a learning rate warm-up of 500 steps. The sequence length was limited to 128 tokens. We used the AdamW optimizer with
a 2e-5 learning rate.

#### Training data

We use the concatenation of multiple datasets to fine-tune our model. The total number of sentence pairs is above 1 billion.
We sampled each dataset according to a weighted probability, the configuration of which is detailed in the `data_config.json` file.

| Dataset | Paper | Number of training tuples |

|--------------------------------------------------------|:----------------------------------------:|:--------------------------:|

| [Reddit comments (2015-2018)](https://github.com/PolyAI-LDN/conversational-datasets/tree/master/reddit) | [paper](https://arxiv.org/abs/1904.06472) | 726,484,430 |

| [S2ORC](https://github.com/allenai/s2orc) Citation pairs (Abstracts) | [paper](https://aclanthology.org/2020.acl-main.447/) | 116,288,806 |

| [WikiAnswers](https://github.com/afader/oqa#wikianswers-corpus) Duplicate question pairs | [paper](https://doi.org/10.1145/2623330.2623677) | 77,427,422 |

| [PAQ](https://github.com/facebookresearch/PAQ) (Question, Answer) pairs | [paper](https://arxiv.org/abs/2102.07033) | 64,371,441 |

| [S2ORC](https://github.com/allenai/s2orc) Citation pairs (Titles) | [paper](https://aclanthology.org/2020.acl-main.447/) | 52,603,982 |

| [S2ORC](https://github.com/allenai/s2orc) (Title, Abstract) | [paper](https://aclanthology.org/2020.acl-main.447/) | 41,769,185 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title, Body) pairs | - | 25,316,456 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title+Body, Answer) pairs | - | 21,396,559 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title, Answer) pairs | - | 21,396,559 |

| [MS MARCO](https://microsoft.github.io/msmarco/) triplets | [paper](https://doi.org/10.1145/3404835.3462804) | 9,144,553 |

| [GOOAQ: Open Question Answering with Diverse Answer Types](https://github.com/allenai/gooaq) | [paper](https://arxiv.org/pdf/2104.08727.pdf) | 3,012,496 |

| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Title, Answer) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 1,198,260 |

| [Code Search](https://huggingface.co/datasets/code_search_net) | - | 1,151,414 |

| [COCO](https://cocodataset.org/#home) Image captions | [paper](https://link.springer.com/chapter/10.1007%2F978-3-319-10602-1_48) | 828,395 |

| [SPECTER](https://github.com/allenai/specter) citation triplets | [paper](https://doi.org/10.18653/v1/2020.acl-main.207) | 684,100 |

| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Question, Answer) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 681,164 |

| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Title, Question) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 659,896 |

| [SearchQA](https://huggingface.co/datasets/search_qa) | [paper](https://arxiv.org/abs/1704.05179) | 582,261 |

| [Eli5](https://huggingface.co/datasets/eli5) | [paper](https://doi.org/10.18653/v1/p19-1346) | 325,475 |

| [Flickr 30k](https://shannon.cs.illinois.edu/DenotationGraph/) | [paper](https://transacl.org/ojs/index.php/tacl/article/view/229/33) | 317,695 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (titles) | | 304,525 |

| AllNLI ([SNLI](https://nlp.stanford.edu/projects/snli/) and [MultiNLI](https://cims.nyu.edu/~sbowman/multinli/)) | [paper SNLI](https://doi.org/10.18653/v1/d15-1075), [paper MultiNLI](https://doi.org/10.18653/v1/n18-1101) | 277,230 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (bodies) | | 250,519 |

| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (titles+bodies) | | 250,460 |

| [Sentence Compression](https://github.com/google-research-datasets/sentence-compression) | [paper](https://www.aclweb.org/anthology/D13-1155/) | 180,000 |

| [Wikihow](https://github.com/pvl/wikihow_pairs_dataset) | [paper](https://arxiv.org/abs/1810.09305) | 128,542 |

| [Altlex](https://github.com/chridey/altlex/) | [paper](https://aclanthology.org/P16-1135.pdf) | 112,696 |

| [Quora Question Triplets](https://quoradata.quora.com/First-Quora-Dataset-Release-Question-Pairs) | - | 103,663 |

| [Simple Wikipedia](https://cs.pomona.edu/~dkauchak/simplification/) | [paper](https://www.aclweb.org/anthology/P11-2117/) | 102,225 |

| [Natural Questions (NQ)](https://ai.google.com/research/NaturalQuestions) | [paper](https://transacl.org/ojs/index.php/tacl/article/view/1455) | 100,231 |

| [SQuAD2.0](https://rajpurkar.github.io/SQuAD-explorer/) | [paper](https://aclanthology.org/P18-2124.pdf) | 87,599 |

| [TriviaQA](https://huggingface.co/datasets/trivia_qa) | - | 73,346 |

| **Total** | | **1,170,060,424** |
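The weighted sampling over datasets mentioned above can be sketched as drawing a dataset name for each batch with probability proportional to its weight. The weights below are hypothetical placeholders (the real values are in `data_config.json`), and drawing once per batch rather than per example is an assumption.

```python
import random


def sample_dataset_names(weights, num_batches, seed=0):
    """Draw one dataset name per batch, proportionally to its weight."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=num_batches)


# Hypothetical weights for three of the datasets in the table
weights = {"reddit": 0.6, "s2orc": 0.3, "wikihow": 0.1}
draws = sample_dataset_names(weights, num_batches=10_000)
for name in weights:
    print(name, draws.count(name) / len(draws))
```

Weighting lets small datasets be seen more often than their raw size would allow, while keeping very large sources such as Reddit from dominating every batch.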

|

willcai/wav2vec2_common_voice_accents_4

|

willcai

| 2022-03-18T11:11:03Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:common_voice",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-03-18T01:46:54Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- common_voice

model-index:

- name: wav2vec2_common_voice_accents_4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2_common_voice_accents_4

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0047

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 48

- eval_batch_size: 4

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 384

- total_eval_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP
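The total batch sizes in the list follow from the per-device values and the device count. A small sketch of that arithmetic, with an illustrative helper name:

```python
def total_batch_size(per_device: int, num_devices: int = 1, grad_accum_steps: int = 1) -> int:
    """Number of examples contributing to one optimizer step (or one eval step)."""
    return per_device * num_devices * grad_accum_steps


# Values from the hyperparameter list above
print(total_batch_size(per_device=48, num_devices=8))  # training: 384
print(total_batch_size(per_device=4, num_devices=8))   # evaluation: 32
```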

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 4.615 | 1.28 | 400 | 0.8202 |

| 0.3778 | 2.56 | 800 | 0.1587 |

| 0.2229 | 3.85 | 1200 | 0.1027 |

| 0.1799 | 5.13 | 1600 | 0.0879 |

| 0.1617 | 6.41 | 2000 | 0.0772 |

| 0.1474 | 7.69 | 2400 | 0.0625 |

| 0.134 | 8.97 | 2800 | 0.0498 |

| 0.1213 | 10.26 | 3200 | 0.0429 |

| 0.1186 | 11.54 | 3600 | 0.0434 |

| 0.1118 | 12.82 | 4000 | 0.0312 |

| 0.1026 | 14.1 | 4400 | 0.0365 |

| 0.0951 | 15.38 | 4800 | 0.0321 |

| 0.0902 | 16.67 | 5200 | 0.0262 |

| 0.0843 | 17.95 | 5600 | 0.0208 |

| 0.0744 | 19.23 | 6000 | 0.0140 |

| 0.0718 | 20.51 | 6400 | 0.0204 |

| 0.0694 | 21.79 | 6800 | 0.0133 |

| 0.0636 | 23.08 | 7200 | 0.0104 |

| 0.0609 | 24.36 | 7600 | 0.0084 |

| 0.0559 | 25.64 | 8000 | 0.0050 |

| 0.0527 | 26.92 | 8400 | 0.0089 |

| 0.0495 | 28.21 | 8800 | 0.0058 |

| 0.0471 | 29.49 | 9200 | 0.0047 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.2+cu102

- Datasets 1.18.4

- Tokenizers 0.11.6

|

adamfeldmann/HILANCO-GPTX

|

adamfeldmann

| 2022-03-18T11:04:43Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2022-03-18T11:04:43Z |

---

license: apache-2.0

---

|

cammy/bart-large-cnn-100-MDS-own

|

cammy

| 2022-03-18T09:32:08Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bart",

"text2text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-03-18T09:31:07Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: bart-large-cnn-100-MDS-own

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-large-cnn-100-MDS-own

This model is a fine-tuned version of [facebook/bart-large-cnn](https://huggingface.co/facebook/bart-large-cnn) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.5357

- Rouge1: 22.4039

- Rouge2: 4.681

- Rougel: 13.1526

- Rougelsum: 15.7986

- Gen Len: 70.3
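As a rough guide to reading the ROUGE numbers, ROUGE-1 measures unigram overlap between a generated summary and a reference. The sketch below computes a simplified ROUGE-1 F-measure using whitespace tokenization and lowercasing only; the `rouge` metric used for the card may add stemming and aggregate differently, so its scores will not match this sketch exactly. The function name and example sentences are illustrative.

```python
from collections import Counter


def rouge1_f(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F-measure based on clipped unigram overlap."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps the minimum count of each shared word
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


# Reported on a 0-100 scale, like the scores in the list above
print(round(100 * rouge1_f("the cat sat on the mat", "the cat lay on a mat"), 2))
```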

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:-------:|:---------:|:-------:|

| No log | 1.0 | 25 | 3.3375 | 25.7428 | 6.754 | 16.4131 | 19.6269 | 81.9 |

| No log | 2.0 | 50 | 3.5357 | 22.4039 | 4.681 | 13.1526 | 15.7986 | 70.3 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.2

- Datasets 1.18.3

- Tokenizers 0.11.0

|

taozhuoqun/tzq_pretrained

|

taozhuoqun

| 2022-03-18T08:50:37Z | 0 | 0 | null |

[

"license:apache-2.0",

"region:us"

] | null | 2022-03-18T08:50:37Z |

---

license: apache-2.0

---

|

brad1141/gpt2-finetuned-comp2

|

brad1141

| 2022-03-18T08:47:38Z | 77 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"token-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-03-18T07:26:03Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: gpt2-finetuned-comp2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-finetuned-comp2

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7788

- Precision: 0.3801

- Recall: 0.6854

- F1: 0.4800

- Accuracy: 0.4800

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 8

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 7
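A warmup ratio is converted to a number of warmup steps by multiplying it with the total number of optimizer steps. The sketch below assumes the product is rounded up; the total of 7,084 steps is the final step count in the training results table of this card, and the helper name is illustrative.

```python
import math


def warmup_steps_from_ratio(total_steps: int, warmup_ratio: float) -> int:
    """Number of warmup steps for a given ratio, rounded up (assumed rounding)."""
    return math.ceil(total_steps * warmup_ratio)


print(warmup_steps_from_ratio(7084, 0.1))
```

Under this assumption the learning rate rises for roughly the first 700 steps, which is most of the first epoch, before the linear decay begins.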

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 1.0962 | 1.0 | 1012 | 0.7528 | 0.3793 | 0.6109 | 0.4411 | 0.4411 |

| 0.7022 | 2.0 | 2024 | 0.6763 | 0.3992 | 0.6557 | 0.4799 | 0.4799 |

| 0.6136 | 3.0 | 3036 | 0.6751 | 0.3995 | 0.6597 | 0.4824 | 0.4824 |

| 0.5444 | 4.0 | 4048 | 0.6799 | 0.3891 | 0.6817 | 0.4854 | 0.4854 |

| 0.4846 | 5.0 | 5060 | 0.7371 | 0.4030 | 0.6701 | 0.4906 | 0.4906 |

| 0.4379 | 6.0 | 6072 | 0.7520 | 0.3956 | 0.6788 | 0.4887 | 0.4887 |

| 0.404 | 7.0 | 7084 | 0.7788 | 0.3801 | 0.6854 | 0.4800 | 0.4800 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

|

aaraki/bert-base-uncased-finetuned-swag

|

aaraki

| 2022-03-18T08:16:58Z | 1 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"multiple-choice",

"generated_from_trainer",

"dataset:swag",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

multiple-choice

| 2022-03-18T06:29:45Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- swag

metrics:

- accuracy

model-index:

- name: bert-base-uncased-finetuned-swag

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-finetuned-swag

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the swag dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5155

- Accuracy: 0.8002

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6904 | 1.0 | 4597 | 0.5155 | 0.8002 |
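The step count in the table can be sanity-checked against the dataset size: with no gradient accumulation, one epoch takes one optimizer step per batch. The sketch below assumes the SWAG training split has 73,546 examples, a figure that is not stated in this card.

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Optimizer steps in one epoch when the last partial batch is kept."""
    return math.ceil(num_examples / batch_size)


NUM_TRAIN_EXAMPLES = 73_546  # assumed size of the SWAG training split
print(steps_per_epoch(NUM_TRAIN_EXAMPLES, batch_size=16))
```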

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

|

moshew/paraphrase-mpnet-base-v2_SetFit_sst2

|

moshew

| 2022-03-18T07:53:15Z | 1 | 1 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-03-18T07:53:07Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# moshew/paraphrase-mpnet-base-v2_SetFit_sst2

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('moshew/paraphrase-mpnet-base-v2_SetFit_sst2')

embeddings = model.encode(sentences)

print(embeddings)

```
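
Because the embeddings live in a dense vector space, semantic search can be reduced to ranking candidates by cosine similarity. The following is a minimal sketch that uses small hand-written vectors in place of real `model.encode` output; the helper names `cosine_similarity` and `rank_by_similarity` and the toy vectors are illustrative assumptions, not part of this model card.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # define similarity with a zero vector as 0
    return dot / (norm_a * norm_b)

def rank_by_similarity(query, corpus):
    """Return corpus indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query, vec) for vec in corpus]
    return sorted(range(len(corpus)), key=lambda i: scores[i], reverse=True)

# Toy 3-dimensional stand-ins for the 768-dimensional embeddings (assumed values).
query = [1.0, 0.0, 0.0]
corpus = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [-1.0, 0.0, 0.0]]
ranking = rank_by_similarity(query, corpus)
print(ranking)
```

With real data, `query` and `corpus` would be rows of the array returned by `model.encode`.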

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model as follows: first, pass your input through the transformer model, then apply the appropriate pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    # The first element of model_output contains all token embeddings
    token_embeddings = model_output[0]
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('moshew/paraphrase-mpnet-base-v2_SetFit_sst2')

model = AutoModel.from_pretrained('moshew/paraphrase-mpnet-base-v2_SetFit_sst2')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
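
To make the pooling step concrete, here is a dependency-free sketch of the same masked average on plain Python lists for a single sentence. The function name `masked_mean` and the toy values are illustrative assumptions; the code above performs the equivalent computation on batched PyTorch tensors.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors, ignoring positions where the mask is 0."""
    dim = len(token_embeddings[0])
    totals = [0.0] * dim
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            for j in range(dim):
                totals[j] += vector[j]
    # Mirror torch.clamp(..., min=1e-9) to avoid dividing by zero.
    count = max(sum(attention_mask), 1e-9)
    return [t / count for t in totals]

# Two real tokens followed by one padding token (assumed toy values).
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
pooled = masked_mean(tokens, mask)
print(pooled)
```

The padding vector is excluded from the average, which is why the attention mask matters whenever sentences in a batch are padded to a common length.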

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=moshew/paraphrase-mpnet-base-v2_SetFit_sst2)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 8650 with parameters:

```

{'batch_size': 8, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'transformers.optimization.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10,

"weight_decay": 0.01

}

```
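
As a rough illustration of what these settings imply, the sketch below computes a linear-warmup, linear-decay learning rate for a given optimizer step. It assumes that `WarmupLinear` ramps the rate from 0 to `lr` over `warmup_steps` and then decays it linearly to 0, and that the total number of steps equals the DataLoader length (8650) times the single epoch. The function name `warmup_linear_lr` is hypothetical.

```python
def warmup_linear_lr(step, base_lr=2e-05, warmup_steps=10, total_steps=8650):
    """Learning rate at a given optimizer step under linear warmup and decay."""
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)

for step in (0, 5, 10, 4330, 8650):
    print(step, warmup_linear_lr(step))
```

Under these assumptions, the rate peaks at step 10 and reaches zero at the final step.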

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

youzanai/bert-product-title-chinese

|

youzanai

| 2022-03-18T06:19:06Z | 6 | 3 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

A BERT model trained on a corpus of Youzan product titles.

For example code showing how to use the model, see https://github.com/youzanai/trexpark

|

brad1141/Longformer-finetuned-norm

|

brad1141

| 2022-03-18T05:42:11Z | 61 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"longformer",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-03-18T02:29:24Z |

---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: Longformer-finetuned-norm

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Longformer-finetuned-norm

This model is a fine-tuned version of [allenai/longformer-base-4096](https://huggingface.co/allenai/longformer-base-4096) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8127

- Precision: 0.8429

- Recall: 0.8701

- F1: 0.8562

- Accuracy: 0.8221

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 8

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 7
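
The relationship between some of these values can be checked with a few lines of arithmetic. This is a sketch under stated assumptions: a single training device, a total of 7084 optimizer steps (the final Step value reported in the training results), and a warmup length obtained by rounding the ratio up. The variable names are illustrative.

```python
import math

train_batch_size = 1
gradient_accumulation_steps = 8
num_devices = 1               # assumption: single-device training
num_epochs = 7
total_optimizer_steps = 7084  # final Step value in the training results
warmup_ratio = 0.1

# Examples consumed per optimizer update.
effective_batch = train_batch_size * gradient_accumulation_steps * num_devices

# Optimizer updates per epoch.
steps_per_epoch = total_optimizer_steps // num_epochs

# Warmup length implied by the ratio (assumption: rounded up).
warmup_steps = math.ceil(total_optimizer_steps * warmup_ratio)

print(effective_batch, steps_per_epoch, warmup_steps)
```

The computed effective batch size matches the reported `total_train_batch_size` of 8.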

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|
| 0.8008 | 1.0 | 1012 | 0.5839 | 0.8266 | 0.8637 | 0.8447 | 0.8084 |
| 0.5168 | 2.0 | 2024 | 0.5927 | 0.7940 | 0.9102 | 0.8481 | 0.8117 |
| 0.3936 | 3.0 | 3036 | 0.5651 | 0.8476 | 0.8501 | 0.8488 | 0.8143 |
| 0.2939 | 4.0 | 4048 | 0.6411 | 0.8494 | 0.8578 | 0.8536 | 0.8204 |
| 0.2165 | 5.0 | 5060 | 0.6833 | 0.8409 | 0.8822 | 0.8611 | 0.8270 |
| 0.1561 | 6.0 | 6072 | 0.7643 | 0.8404 | 0.8810 | 0.8602 | 0.8259 |
| 0.1164 | 7.0 | 7084 | 0.8127 | 0.8429 | 0.8701 | 0.8562 | 0.8221 |
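
The table shows training loss falling steadily while validation loss rises after epoch 3, so the final checkpoint is not necessarily the best one. The sketch below, with values copied from the table, picks the best epoch under two different criteria. The tuple layout and variable names are illustrative assumptions.

```python
# (epoch, validation_loss, f1) copied from the training results table.
results = [
    (1, 0.5839, 0.8447),
    (2, 0.5927, 0.8481),
    (3, 0.5651, 0.8488),
    (4, 0.6411, 0.8536),
    (5, 0.6833, 0.8611),
    (6, 0.7643, 0.8602),
    (7, 0.8127, 0.8562),
]

# Epoch with the lowest validation loss.
best_loss_epoch = min(results, key=lambda row: row[1])[0]

# Epoch with the highest F1 score.
best_f1_epoch = max(results, key=lambda row: row[2])[0]

print(best_loss_epoch, best_f1_epoch)
```

The two criteria disagree here, so which checkpoint to keep depends on whether validation loss or F1 is the metric of interest.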

### Framework versions

- Transformers 4.17.0