modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-04 18:27:43

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 539

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-04 18:27:26

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

Jasmine8596/distilbert-finetuned-imdb

|

Jasmine8596

| 2022-09-09T02:41:29Z | 70 | 0 |

transformers

|

[

"transformers",

"tf",

"distilbert",

"fill-mask",

"generated_from_keras_callback",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-09-08T23:25:43Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: Jasmine8596/distilbert-finetuned-imdb

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Jasmine8596/distilbert-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 2.8423

- Validation Loss: 2.6128

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -687, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 2.8423 | 2.6128 | 0 |

### Framework versions

- Transformers 4.22.0.dev0

- TensorFlow 2.8.2

- Tokenizers 0.12.1

|

UmberH/distilbert-base-uncased-finetuned-cola

|

UmberH

| 2022-09-09T01:53:53Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:glue",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-08T20:21:04Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- matthews_correlation

model-index:

- name: distilbert-base-uncased-finetuned-cola

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

config: cola

split: train

args: cola

metrics:

- name: Matthews Correlation

type: matthews_correlation

value: 0.5456062114587601

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8381

- Matthews Correlation: 0.5456

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |

|:-------------:|:-----:|:----:|:---------------:|:--------------------:|

| 0.5245 | 1.0 | 535 | 0.5432 | 0.4249 |

| 0.3514 | 2.0 | 1070 | 0.5075 | 0.4874 |

| 0.2368 | 3.0 | 1605 | 0.5554 | 0.5403 |

| 0.1712 | 4.0 | 2140 | 0.7780 | 0.5246 |

| 0.1254 | 5.0 | 2675 | 0.8381 | 0.5456 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/bonzi-monkey

|

sd-concepts-library

| 2022-09-09T00:03:11Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-09T00:03:05Z |

---

license: mit

---

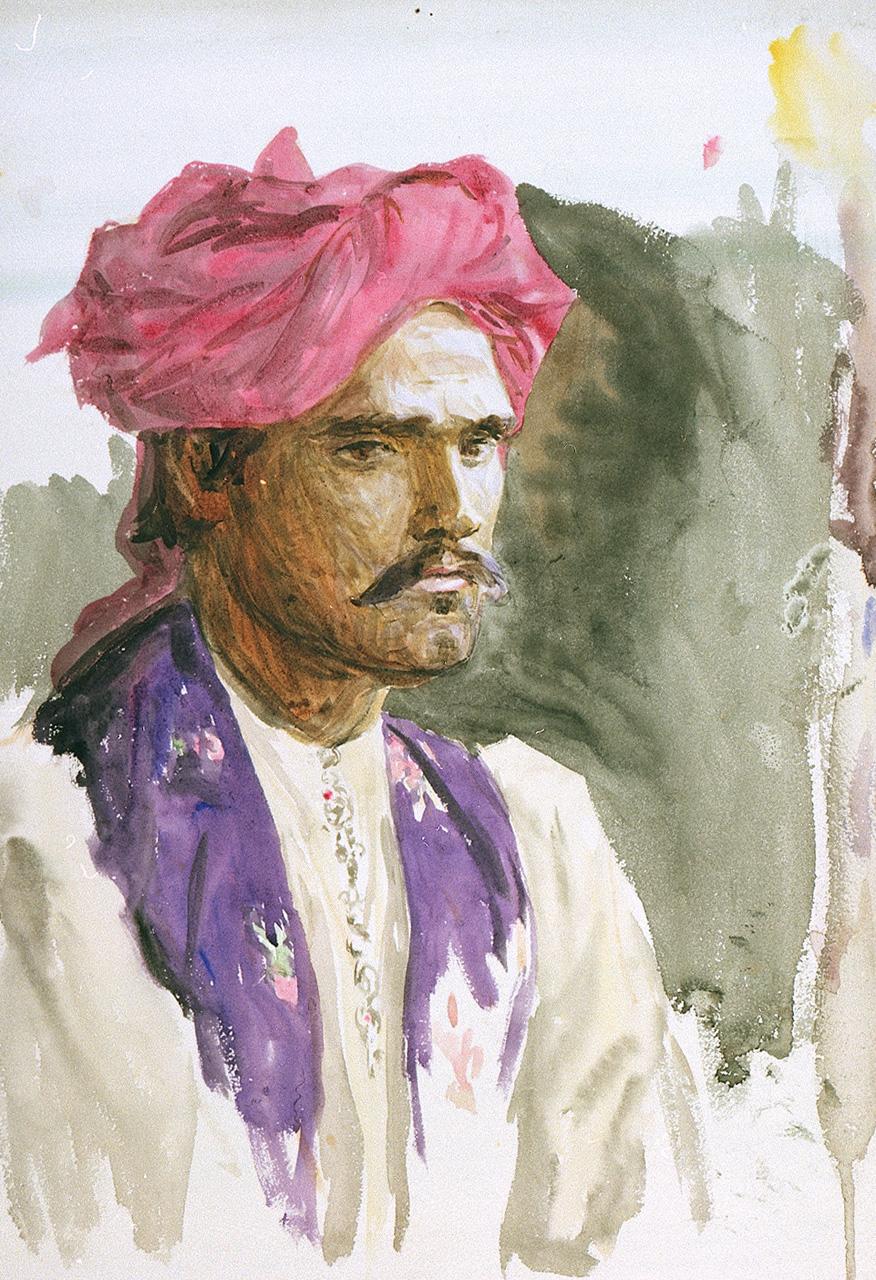

### bonzi monkey on Stable Diffusion

This is the `<bonzi>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

SebastianS/MetalSebastian

|

SebastianS

| 2022-09-09T00:00:23Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-08-07T15:25:14Z |

---

tags:

- conversational

---

# Produced with ⚙️ by [mimicbot](https://github.com/CakeCrusher/mimicbot)🤖

|

sd-concepts-library/shrunken-head

|

sd-concepts-library

| 2022-09-08T22:23:57Z | 0 | 1 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-08T22:23:46Z |

---

license: mit

---

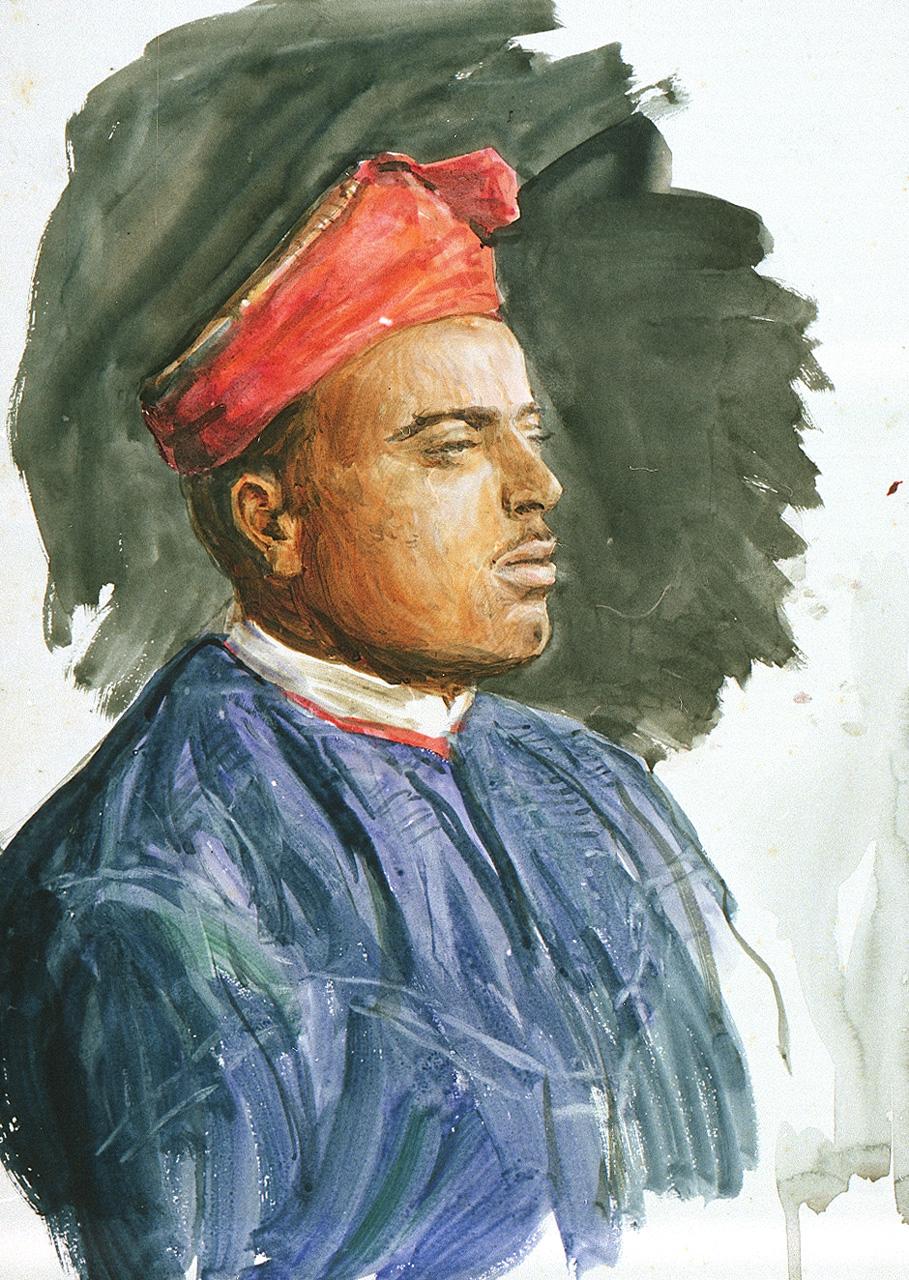

### shrunken head on Stable Diffusion

This is the `<shrunken-head>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

IIIT-L/xlm-roberta-base-finetuned-combined-DS

|

IIIT-L

| 2022-09-08T21:22:20Z | 114 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-09-08T20:48:41Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: xlm-roberta-base-finetuned-combined-DS

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-combined-DS

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0232

- Accuracy: 0.6362

- Precision: 0.6193

- Recall: 0.6204

- F1: 0.6160

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4.1187640010910775e-05

- train_batch_size: 16

- eval_batch_size: 32

- seed: 43

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 6

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 1.0408 | 1.0 | 711 | 1.0206 | 0.5723 | 0.5597 | 0.5122 | 0.4897 |

| 0.9224 | 2.0 | 1422 | 0.9092 | 0.5695 | 0.5745 | 0.5610 | 0.5572 |

| 0.8395 | 3.0 | 2133 | 0.8878 | 0.6088 | 0.6083 | 0.6071 | 0.5981 |

| 0.7418 | 3.99 | 2844 | 0.8828 | 0.6088 | 0.6009 | 0.6068 | 0.5936 |

| 0.6484 | 4.99 | 3555 | 0.9636 | 0.6355 | 0.6235 | 0.6252 | 0.6184 |

| 0.5644 | 5.99 | 4266 | 1.0232 | 0.6362 | 0.6193 | 0.6204 | 0.6160 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.1+cu111

- Datasets 2.3.2

- Tokenizers 0.12.1

|

PrimeQA/tydiqa-ft-listqa_nq-task-xlm-roberta-large

|

PrimeQA

| 2022-09-08T21:12:24Z | 37 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"MRC",

"TyDiQA",

"Natural Questions List",

"xlm-roberta-large",

"multilingual",

"arxiv:1911.02116",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2022-09-07T14:45:48Z |

---

license: apache-2.0

tags:

- MRC

- TyDiQA

- Natural Questions List

- xlm-roberta-large

language:

- multilingual

---

*Task*: MRC

# Model description

An XLM-RoBERTa reading comprehension model for List Question Answering using a fine-tuned [TyDi xlm-roberta-large](https://huggingface.co/PrimeQA/tydiqa-primary-task-xlm-roberta-large) model that is further fine-tuned on the list questions in the [Natural Questions](https://huggingface.co/datasets/natural_questions) dataset.

## Intended uses & limitations

You can use the raw model for the reading comprehension task. Biases associated with the pre-existing language model, xlm-roberta-large, that we used may be present in our fine-tuned model, tydiqa-ft-listqa_nq-task-xlm-roberta-large.

## Usage

You can use this model directly with the [PrimeQA](https://github.com/primeqa/primeqa) pipeline for reading comprehension [listqa.ipynb](https://github.com/primeqa/primeqa/blob/main/notebooks/mrc/listqa.ipynb).

### BibTeX entry and citation info

```bibtex

@article{kwiatkowski-etal-2019-natural,

title = "Natural Questions: A Benchmark for Question Answering Research",

author = "Kwiatkowski, Tom and

Palomaki, Jennimaria and

Redfield, Olivia and

Collins, Michael and

Parikh, Ankur and

Alberti, Chris and

Epstein, Danielle and

Polosukhin, Illia and

Devlin, Jacob and

Lee, Kenton and

Toutanova, Kristina and

Jones, Llion and

Kelcey, Matthew and

Chang, Ming-Wei and

Dai, Andrew M. and

Uszkoreit, Jakob and

Le, Quoc and

Petrov, Slav",

journal = "Transactions of the Association for Computational Linguistics",

volume = "7",

year = "2019",

address = "Cambridge, MA",

publisher = "MIT Press",

url = "https://aclanthology.org/Q19-1026",

doi = "10.1162/tacl_a_00276",

pages = "452--466",

}

```

```bibtex

@article{DBLP:journals/corr/abs-1911-02116,

author = {Alexis Conneau and

Kartikay Khandelwal and

Naman Goyal and

Vishrav Chaudhary and

Guillaume Wenzek and

Francisco Guzm{\'{a}}n and

Edouard Grave and

Myle Ott and

Luke Zettlemoyer and

Veselin Stoyanov},

title = {Unsupervised Cross-lingual Representation Learning at Scale},

journal = {CoRR},

volume = {abs/1911.02116},

year = {2019},

url = {http://arxiv.org/abs/1911.02116},

eprinttype = {arXiv},

eprint = {1911.02116},

timestamp = {Mon, 11 Nov 2019 18:38:09 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1911-02116.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

GItaf/bert2bert-no-cross-attn-decoder

|

GItaf

| 2022-09-08T20:26:21Z | 49 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-09-05T08:11:45Z |

---

tags:

- generated_from_trainer

- text-generation

widget:

parameters:

- max_new_tokens = 100

model-index:

- name: bert-base-uncased-bert-base-uncased-finetuned-mbti-0909

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-bert-base-uncased-finetuned-mbti-0909

This model is a fine-tuned version of [](https://huggingface.co/) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 6.0549

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 5.2244 | 1.0 | 1735 | 5.7788 |

| 4.8483 | 2.0 | 3470 | 5.7647 |

| 4.7578 | 3.0 | 5205 | 5.9016 |

| 4.5606 | 4.0 | 6940 | 5.9895 |

| 4.4314 | 5.0 | 8675 | 6.0549 |

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1

- Datasets 2.4.0

- Tokenizers 0.12.1

|

GItaf/bert-base-uncased-bert-base-uncased-finetuned-mbti-0909

|

GItaf

| 2022-09-08T20:12:28Z | 12 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-09-08T16:52:48Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: bert-base-uncased-bert-base-uncased-finetuned-mbti-0909

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-bert-base-uncased-finetuned-mbti-0909

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- eval_loss: 4.3136

- eval_runtime: 23.6133

- eval_samples_per_second: 73.475

- eval_steps_per_second: 9.19

- step: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 15

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1

- Datasets 2.4.0

- Tokenizers 0.12.1

|

lewtun/dummy-setfit-model

|

lewtun

| 2022-09-08T19:53:17Z | 2 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"arxiv:1908.10084",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-09-08T19:53:10Z |

---

pipeline_tag: sentence-similarity

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# sentence-transformers/paraphrase-mpnet-base-v2

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/paraphrase-mpnet-base-v2')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/paraphrase-mpnet-base-v2')

model = AutoModel.from_pretrained('sentence-transformers/paraphrase-mpnet-base-v2')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, max pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/paraphrase-mpnet-base-v2)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

This model was trained by [sentence-transformers](https://www.sbert.net/).

If you find this model helpful, feel free to cite our publication [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084):

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "http://arxiv.org/abs/1908.10084",

}

```

|

sd-concepts-library/line-art

|

sd-concepts-library

| 2022-09-08T19:30:01Z | 0 | 47 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-08T19:29:47Z |

---

license: mit

---

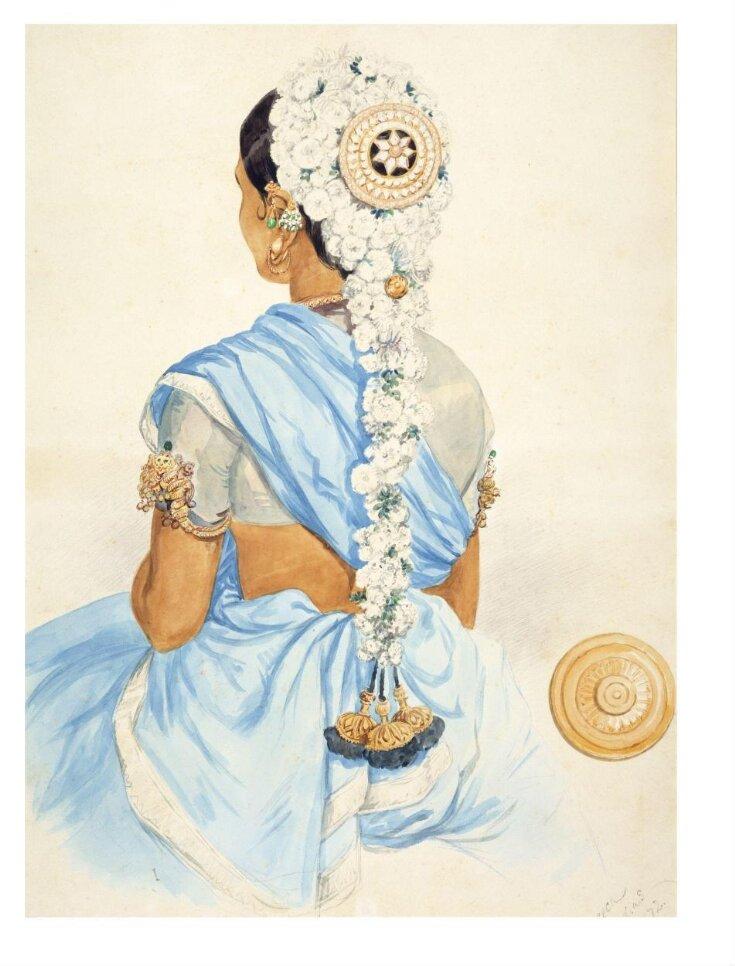

### Line Art on Stable Diffusion

This is the `<line-art>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

Images via Freepik.com

|

ighita/ddpm-butterflies-128

|

ighita

| 2022-09-08T19:17:27Z | 0 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/smithsonian_butterflies_subset",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-09-06T10:19:48Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/ighita/ddpm-butterflies-128/tensorboard?#scalars)

|

hashb/darknet-yolov4-object-detection

|

hashb

| 2022-09-08T19:11:58Z | 0 | 1 | null |

[

"arxiv:2004.10934",

"license:mit",

"region:us"

] | null | 2022-09-08T18:36:21Z |

---

license: mit

---

[](https://github.com/AlexeyAB/darknet/actions?query=workflow%3A%22Darknet+Continuous+Integration%22)

## Model

YOLOv7 surpasses all known object detectors in both speed and accuracy in the range from 5 FPS to 160 FPS and has the highest accuracy 56.8% AP among all known real-time object detectors with 30 FPS or higher on GPU V100. YOLOv7-E6 object detector (56 FPS V100, 55.9% AP) outperforms both transformer-based detector SWIN-L Cascade-Mask R-CNN (9.2 FPS A100, 53.9% AP) by 509% in speed and 2% in accuracy, and convolutional-based detector ConvNeXt-XL Cascade-Mask R-CNN (8.6 FPS A100, 55.2% AP) by 551% in speed and 0.7% AP in accuracy, as well as YOLOv7 outperforms: YOLOR, YOLOX, Scaled-YOLOv4, YOLOv5, DETR, Deformable DETR, DINO-5scale-R50, ViT-Adapter-B and many other object detectors in speed and accuracy.

## How to use:

```

# clone the repo

git clone https://huggingface.co/hashb/darknet-yolov4-object-detection

# open file darknet-yolov4-object-detection.ipynb and run in colab

```

## Citation

```

@misc{bochkovskiy2020yolov4,

title={YOLOv4: Optimal Speed and Accuracy of Object Detection},

author={Alexey Bochkovskiy and Chien-Yao Wang and Hong-Yuan Mark Liao},

year={2020},

eprint={2004.10934},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

```

@InProceedings{Wang_2021_CVPR,

author = {Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark},

title = {{Scaled-YOLOv4}: Scaling Cross Stage Partial Network},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2021},

pages = {13029-13038}

}

```

|

sd-concepts-library/art-brut

|

sd-concepts-library

| 2022-09-08T18:40:33Z | 0 | 3 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-08T18:40:22Z |

---

license: mit

---

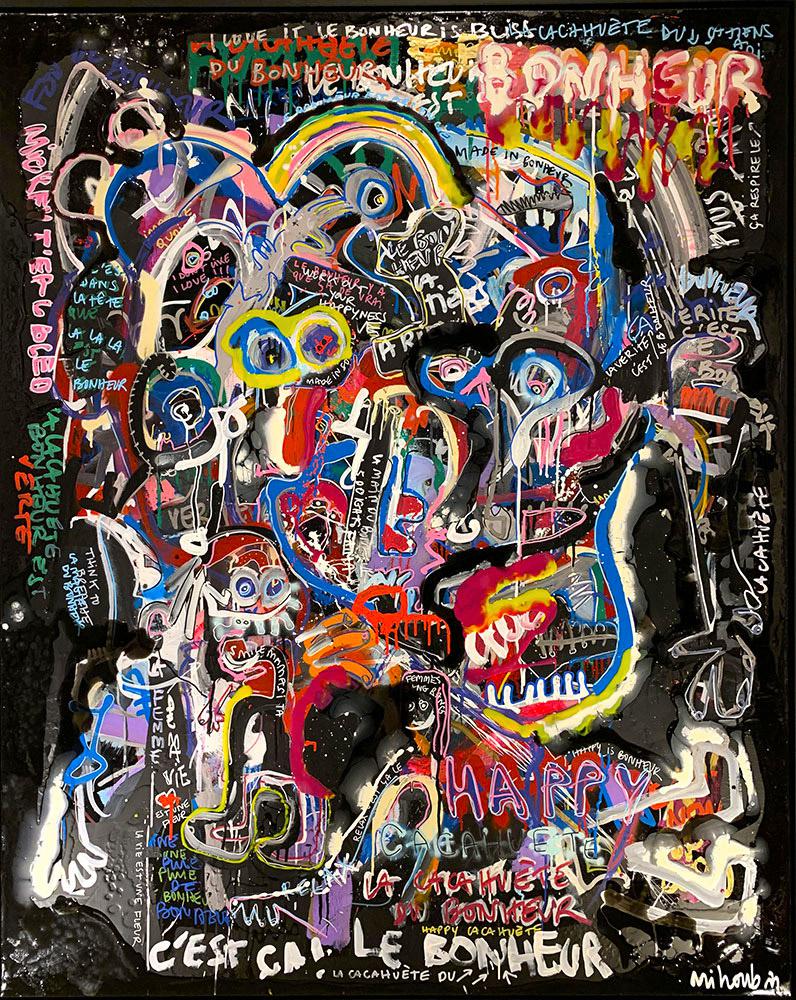

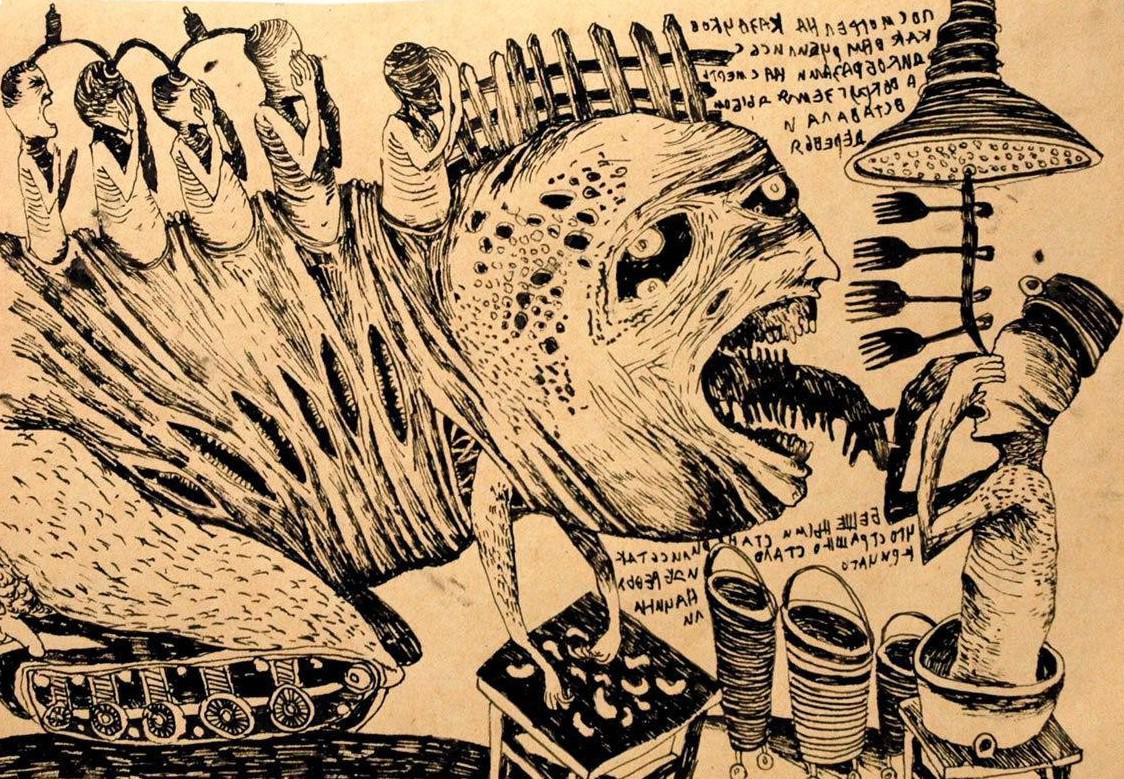

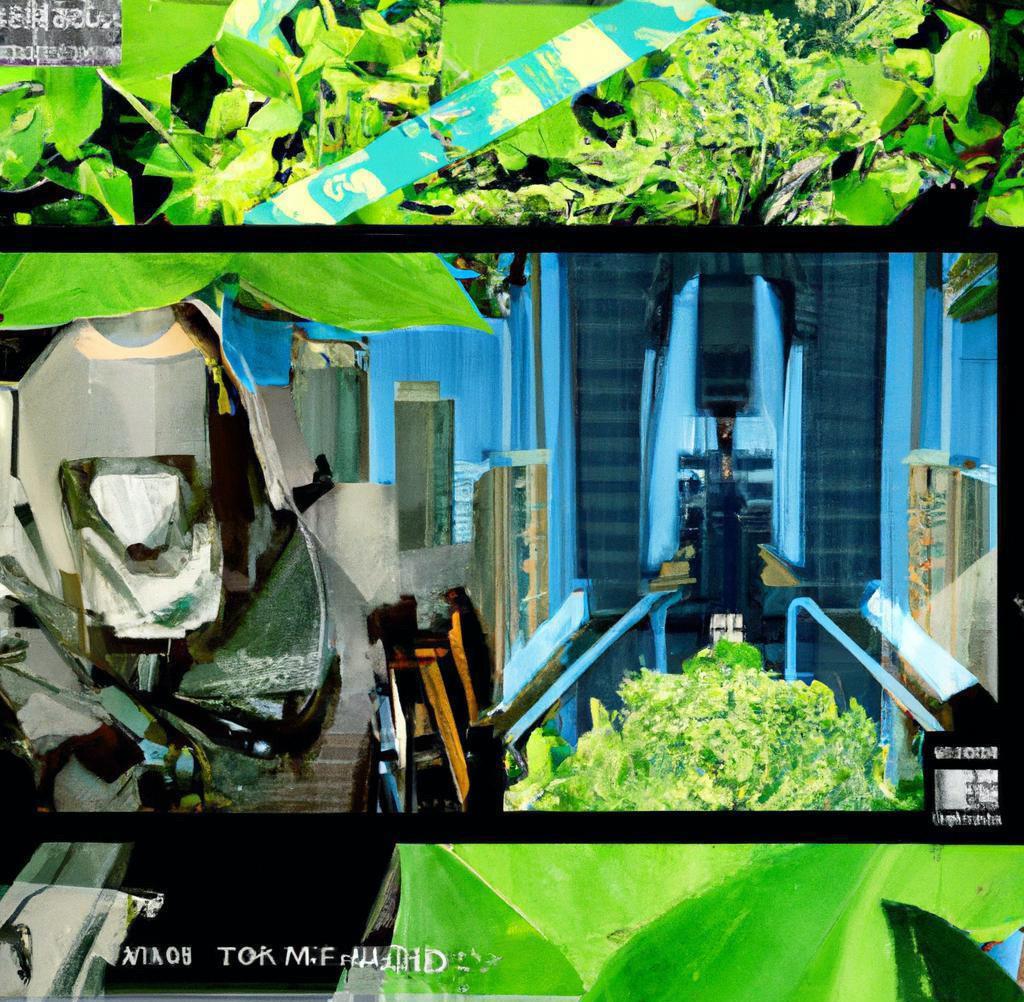

### art brut on Stable Diffusion

This is the `<art-brut>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/nebula

|

sd-concepts-library

| 2022-09-08T17:48:26Z | 0 | 23 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-08T17:48:21Z |

---

license: mit

---

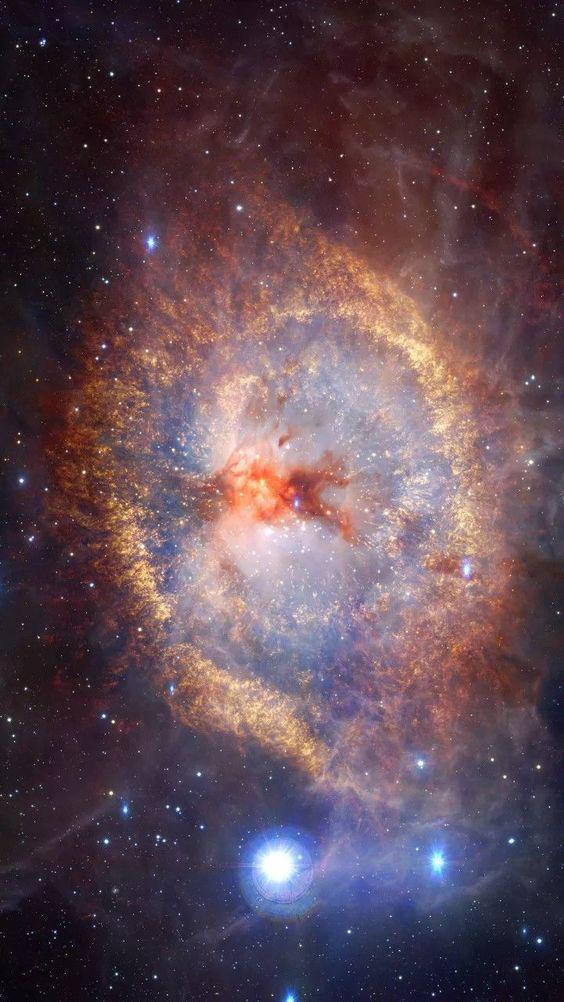

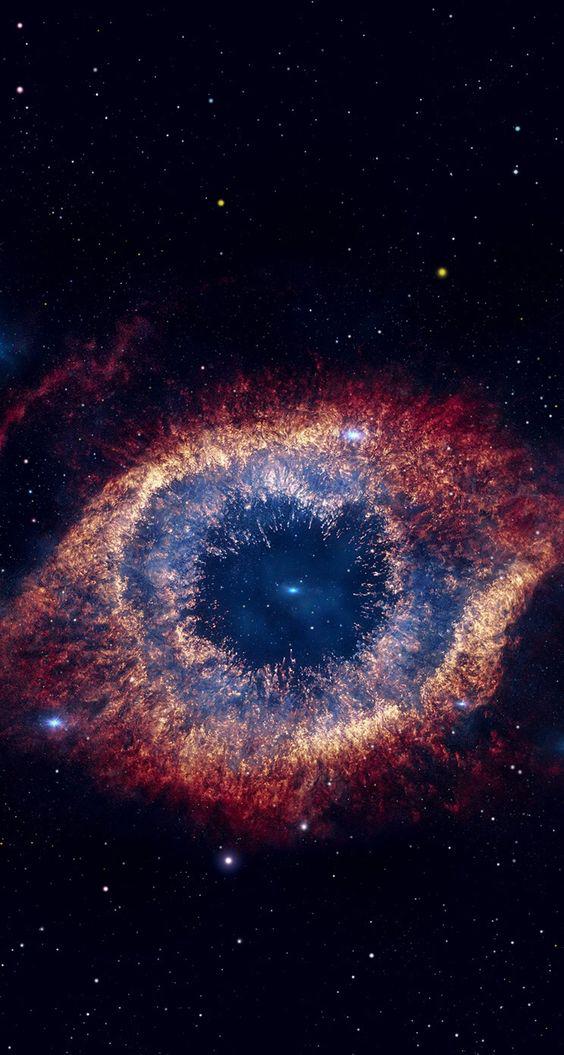

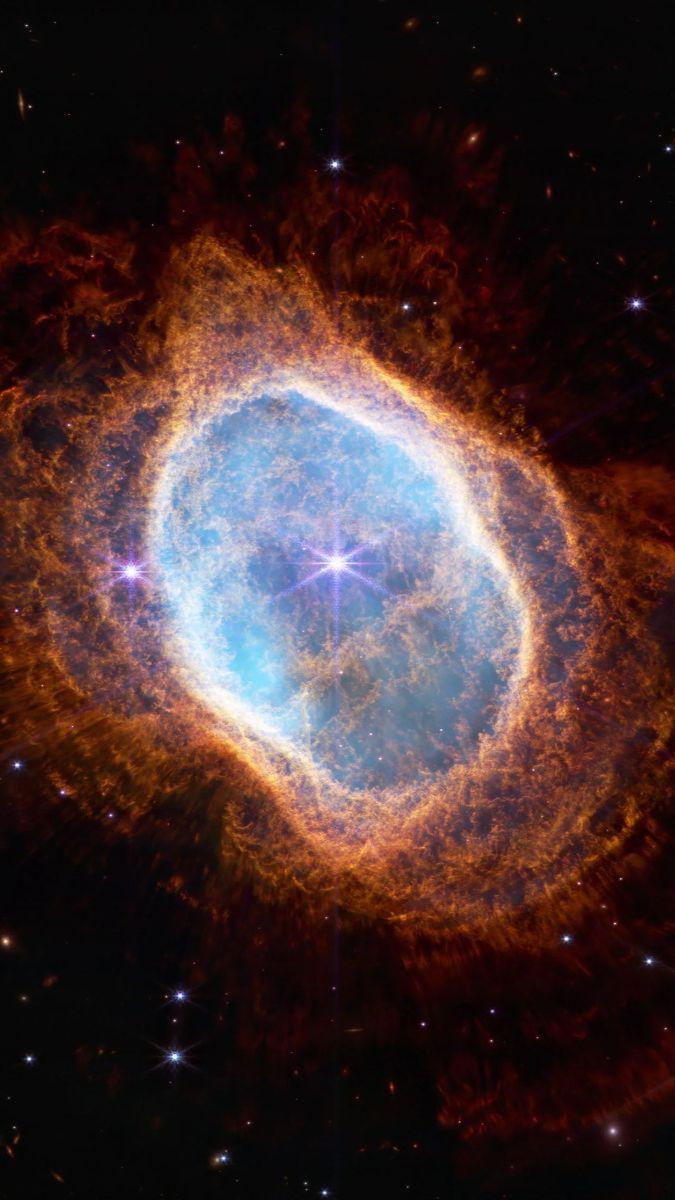

### Nebula on Stable Diffusion

This is the `<nebula>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

danielwang-hads/wav2vec2-base-timit-demo-google-colab

|

danielwang-hads

| 2022-09-08T17:45:13Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-08-30T18:26:43Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-base-timit-demo-google-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-google-colab

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5079

- Wer: 0.3365

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 3.4933 | 1.0 | 500 | 1.7711 | 0.9978 |

| 0.8658 | 2.01 | 1000 | 0.6262 | 0.5295 |

| 0.4405 | 3.01 | 1500 | 0.4841 | 0.4845 |

| 0.3062 | 4.02 | 2000 | 0.4897 | 0.4215 |

| 0.233 | 5.02 | 2500 | 0.4326 | 0.4101 |

| 0.1896 | 6.02 | 3000 | 0.4924 | 0.4078 |

| 0.1589 | 7.03 | 3500 | 0.4430 | 0.3896 |

| 0.1391 | 8.03 | 4000 | 0.4334 | 0.3889 |

| 0.1216 | 9.04 | 4500 | 0.4691 | 0.3828 |

| 0.1063 | 10.04 | 5000 | 0.4726 | 0.3705 |

| 0.0992 | 11.04 | 5500 | 0.4333 | 0.3690 |

| 0.0872 | 12.05 | 6000 | 0.4986 | 0.3771 |

| 0.0829 | 13.05 | 6500 | 0.4903 | 0.3685 |

| 0.0713 | 14.06 | 7000 | 0.5293 | 0.3655 |

| 0.068 | 15.06 | 7500 | 0.5039 | 0.3612 |

| 0.0621 | 16.06 | 8000 | 0.5314 | 0.3665 |

| 0.0571 | 17.07 | 8500 | 0.5038 | 0.3572 |

| 0.0585 | 18.07 | 9000 | 0.4718 | 0.3550 |

| 0.0487 | 19.08 | 9500 | 0.5482 | 0.3626 |

| 0.0459 | 20.08 | 10000 | 0.5239 | 0.3545 |

| 0.0419 | 21.08 | 10500 | 0.5096 | 0.3473 |

| 0.0362 | 22.09 | 11000 | 0.5222 | 0.3500 |

| 0.0331 | 23.09 | 11500 | 0.5062 | 0.3489 |

| 0.0352 | 24.1 | 12000 | 0.4913 | 0.3459 |

| 0.0315 | 25.1 | 12500 | 0.4701 | 0.3412 |

| 0.028 | 26.1 | 13000 | 0.5178 | 0.3402 |

| 0.0255 | 27.11 | 13500 | 0.5168 | 0.3405 |

| 0.0228 | 28.11 | 14000 | 0.5154 | 0.3368 |

| 0.0232 | 29.12 | 14500 | 0.5079 | 0.3365 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.12.1+cu113

- Datasets 1.18.3

- Tokenizers 0.12.1

|

sd-concepts-library/apulian-rooster-v0-1

|

sd-concepts-library

| 2022-09-08T17:31:44Z | 0 | 2 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-08T16:14:06Z |

---

license: mit

---

### apulian-rooster-v0.1 on Stable Diffusion

--

# Inspired by the design of the Galletto (rooster) typical of ceramics and pottery made in Grottaglie, Puglia (Italy).

This is the `<apulian-rooster-v0.1>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/fractal

|

sd-concepts-library

| 2022-09-08T17:04:23Z | 0 | 4 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-08T16:58:04Z |

---

license: mit

---

### fractal on Stable Diffusion

This is the `<fractal>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](#) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](#).

The images composing the token are here:

https://huggingface.co/datasets/Nbardy/Fractal-photos

Thank you to the photographers. Who graciously published these photos for free non-commercial use. Each photo has the artists name in the dataset hosted on hugging face

|

MultiTrickFox/bloom-2b5_Zen

|

MultiTrickFox

| 2022-09-08T16:55:59Z | 14 | 2 |

transformers

|

[

"transformers",

"pytorch",

"bloom",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-07-16T00:37:54Z |

#####

## Bloom2.5B Zen ##

#####

Bloom (2.5 B) Scientific Model fine-tuned on Zen knowledge

#####

## Usage ##

#####

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("MultiTrickFox/bloom-2b5_Zen")

model = AutoModelForCausalLM.from_pretrained("MultiTrickFox/bloom-2b5_Zen")

generator = pipeline('text-generation', model=model, tokenizer=tokenizer)

inp = [ """Today""", """Yesterday""" ]

out = generator(

inp,

do_sample=True,

temperature=.7,

typical_p=.6,

#top_p=.9,

repetition_penalty=1.2,

max_new_tokens=666,

max_time=60, # seconds

)

for o in out: print(o[0]['generated_text'])

```

|

huggingtweets/piemadd

|

huggingtweets

| 2022-09-08T16:20:49Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-09-08T16:16:57Z |

---

language: en

thumbnail: http://www.huggingtweets.com/piemadd/1662653961299/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1521050682983424003/yERaHagV_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Piero Maddaleni 2027</div>

<div style="text-align: center; font-size: 14px;">@piemadd</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Piero Maddaleni 2027.

| Data | Piero Maddaleni 2027 |

| --- | --- |

| Tweets downloaded | 3242 |

| Retweets | 322 |

| Short tweets | 540 |

| Tweets kept | 2380 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/jem4xdn0/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @piemadd's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/6e8s7bst) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/6e8s7bst/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/piemadd')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

sd-concepts-library/lolo

|

sd-concepts-library

| 2022-09-08T16:06:05Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-08T16:05:54Z |

---

license: mit

---

### Lolo on Stable Diffusion

This is the `<lolo>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

Guruji108/xlm-roberta-base-finetuned-panx-de

|

Guruji108

| 2022-09-08T16:00:40Z | 115 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-05T17:49:47Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.de

metrics:

- name: F1

type: f1

value: 0.863677639046538

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1343

- F1: 0.8637

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2578 | 1.0 | 525 | 0.1562 | 0.8273 |

| 0.1297 | 2.0 | 1050 | 0.1330 | 0.8474 |

| 0.0809 | 3.0 | 1575 | 0.1343 | 0.8637 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

mariolinml/roberta_large-unbalanced_simple-ner-conll2003_0908_v0

|

mariolinml

| 2022-09-08T15:24:17Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-08T14:34:41Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: roberta_large-unbalanced_simple-ner-conll2003_0908_v0

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

config: conll2003

split: train

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9552732335537766

- name: Recall

type: recall

value: 0.9718484419263456

- name: F1

type: f1

value: 0.9634895559066174

- name: Accuracy

type: accuracy

value: 0.989226995491912

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta_large-unbalanced_simple-ner-conll2003_0908_v0

This model is a fine-tuned version of [roberta-large](https://huggingface.co/roberta-large) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0881

- Precision: 0.9553

- Recall: 0.9718

- F1: 0.9635

- Accuracy: 0.9892

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.07 | 1.0 | 878 | 0.0249 | 0.9616 | 0.9746 | 0.9681 | 0.9936 |

| 0.0176 | 2.0 | 1756 | 0.0241 | 0.9699 | 0.9818 | 0.9758 | 0.9948 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

SiddharthaM/bert-base-uncased-ner-conll2003

|

SiddharthaM

| 2022-09-08T14:57:50Z | 112 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-08T14:37:16Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-base-uncased-ner-conll2003

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

config: conll2003

split: train

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9342126957955482

- name: Recall

type: recall

value: 0.9535509929316729

- name: F1

type: f1

value: 0.943782793370534

- name: Accuracy

type: accuracy

value: 0.9870194854889033

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-ner-conll2003

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0602

- Precision: 0.9342

- Recall: 0.9536

- F1: 0.9438

- Accuracy: 0.9870

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0871 | 1.0 | 1756 | 0.0728 | 0.9138 | 0.9275 | 0.9206 | 0.9811 |

| 0.0331 | 2.0 | 3512 | 0.0591 | 0.9311 | 0.9514 | 0.9411 | 0.9866 |

| 0.0173 | 3.0 | 5268 | 0.0602 | 0.9342 | 0.9536 | 0.9438 | 0.9870 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Sebabrata/lmv2-g-w2-300-doc-09-08

|

Sebabrata

| 2022-09-08T14:33:01Z | 78 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"layoutlmv2",

"token-classification",

"generated_from_trainer",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-09-08T13:35:51Z |

---

license: cc-by-nc-sa-4.0

tags:

- generated_from_trainer

model-index:

- name: lmv2-g-w2-300-doc-09-08

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# lmv2-g-w2-300-doc-09-08

This model is a fine-tuned version of [microsoft/layoutlmv2-base-uncased](https://huggingface.co/microsoft/layoutlmv2-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0262

- Control Number Precision: 1.0

- Control Number Recall: 1.0

- Control Number F1: 1.0

- Control Number Number: 17

- Ein Precision: 1.0

- Ein Recall: 0.9833

- Ein F1: 0.9916

- Ein Number: 60

- Employee’s Address Precision: 0.9667

- Employee’s Address Recall: 0.9831

- Employee’s Address F1: 0.9748

- Employee’s Address Number: 59

- Employee’s Name Precision: 0.9833

- Employee’s Name Recall: 1.0

- Employee’s Name F1: 0.9916

- Employee’s Name Number: 59

- Employee’s Ssn Precision: 0.9836

- Employee’s Ssn Recall: 1.0

- Employee’s Ssn F1: 0.9917

- Employee’s Ssn Number: 60

- Employer’s Address Precision: 0.9833

- Employer’s Address Recall: 0.9672

- Employer’s Address F1: 0.9752

- Employer’s Address Number: 61

- Employer’s Name Precision: 0.9833

- Employer’s Name Recall: 0.9833

- Employer’s Name F1: 0.9833

- Employer’s Name Number: 60

- Federal Income Tax Withheld Precision: 1.0

- Federal Income Tax Withheld Recall: 1.0

- Federal Income Tax Withheld F1: 1.0

- Federal Income Tax Withheld Number: 60

- Medicare Tax Withheld Precision: 1.0

- Medicare Tax Withheld Recall: 1.0

- Medicare Tax Withheld F1: 1.0

- Medicare Tax Withheld Number: 60

- Medicare Wages Tips Precision: 1.0

- Medicare Wages Tips Recall: 1.0

- Medicare Wages Tips F1: 1.0

- Medicare Wages Tips Number: 60

- Social Security Tax Withheld Precision: 1.0

- Social Security Tax Withheld Recall: 0.9836

- Social Security Tax Withheld F1: 0.9917

- Social Security Tax Withheld Number: 61

- Social Security Wages Precision: 0.9833

- Social Security Wages Recall: 1.0

- Social Security Wages F1: 0.9916

- Social Security Wages Number: 59

- Wages Tips Precision: 1.0

- Wages Tips Recall: 0.9836

- Wages Tips F1: 0.9917

- Wages Tips Number: 61

- Overall Precision: 0.9905

- Overall Recall: 0.9905

- Overall F1: 0.9905

- Overall Accuracy: 0.9973

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 30

### Training results

| Training Loss | Epoch | Step | Validation Loss | Control Number Precision | Control Number Recall | Control Number F1 | Control Number Number | Ein Precision | Ein Recall | Ein F1 | Ein Number | Employee’s Address Precision | Employee’s Address Recall | Employee’s Address F1 | Employee’s Address Number | Employee’s Name Precision | Employee’s Name Recall | Employee’s Name F1 | Employee’s Name Number | Employee’s Ssn Precision | Employee’s Ssn Recall | Employee’s Ssn F1 | Employee’s Ssn Number | Employer’s Address Precision | Employer’s Address Recall | Employer’s Address F1 | Employer’s Address Number | Employer’s Name Precision | Employer’s Name Recall | Employer’s Name F1 | Employer’s Name Number | Federal Income Tax Withheld Precision | Federal Income Tax Withheld Recall | Federal Income Tax Withheld F1 | Federal Income Tax Withheld Number | Medicare Tax Withheld Precision | Medicare Tax Withheld Recall | Medicare Tax Withheld F1 | Medicare Tax Withheld Number | Medicare Wages Tips Precision | Medicare Wages Tips Recall | Medicare Wages Tips F1 | Medicare Wages Tips Number | Social Security Tax Withheld Precision | Social Security Tax Withheld Recall | Social Security Tax Withheld F1 | Social Security Tax Withheld Number | Social Security Wages Precision | Social Security Wages Recall | Social Security Wages F1 | Social Security Wages Number | Wages Tips Precision | Wages Tips Recall | Wages Tips F1 | Wages Tips Number | Overall Precision | Overall Recall | Overall F1 | Overall Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:------------------------:|:---------------------:|:-----------------:|:---------------------:|:-------------:|:----------:|:------:|:----------:|:----------------------------:|:-------------------------:|:---------------------:|:-------------------------:|:-------------------------:|:----------------------:|:------------------:|:----------------------:|:------------------------:|:---------------------:|:-----------------:|:---------------------:|:----------------------------:|:-------------------------:|:---------------------:|:-------------------------:|:-------------------------:|:----------------------:|:------------------:|:----------------------:|:-------------------------------------:|:----------------------------------:|:------------------------------:|:----------------------------------:|:-------------------------------:|:----------------------------:|:------------------------:|:----------------------------:|:-----------------------------:|:--------------------------:|:----------------------:|:--------------------------:|:--------------------------------------:|:-----------------------------------:|:-------------------------------:|:-----------------------------------:|:-------------------------------:|:----------------------------:|:------------------------:|:----------------------------:|:--------------------:|:-----------------:|:-------------:|:-----------------:|:-----------------:|:--------------:|:----------:|:----------------:|

| 1.7717 | 1.0 | 240 | 0.9856 | 0.0 | 0.0 | 0.0 | 17 | 0.9206 | 0.9667 | 0.9431 | 60 | 0.6824 | 0.9831 | 0.8056 | 59 | 0.2333 | 0.5932 | 0.3349 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.7609 | 0.5738 | 0.6542 | 61 | 0.3654 | 0.3167 | 0.3393 | 60 | 0.0 | 0.0 | 0.0 | 60 | 0.8194 | 0.9833 | 0.8939 | 60 | 0.6064 | 0.95 | 0.7403 | 60 | 0.5050 | 0.8361 | 0.6296 | 61 | 0.0 | 0.0 | 0.0 | 59 | 0.5859 | 0.9508 | 0.725 | 61 | 0.5954 | 0.6649 | 0.6282 | 0.9558 |

| 0.5578 | 2.0 | 480 | 0.2957 | 0.8462 | 0.6471 | 0.7333 | 17 | 0.9831 | 0.9667 | 0.9748 | 60 | 0.9048 | 0.9661 | 0.9344 | 59 | 0.8358 | 0.9492 | 0.8889 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8125 | 0.8525 | 0.8320 | 61 | 0.8462 | 0.9167 | 0.8800 | 60 | 0.9672 | 0.9833 | 0.9752 | 60 | 0.9524 | 1.0 | 0.9756 | 60 | 0.9194 | 0.95 | 0.9344 | 60 | 0.9833 | 0.9672 | 0.9752 | 61 | 0.9508 | 0.9831 | 0.9667 | 59 | 0.9516 | 0.9672 | 0.9593 | 61 | 0.9212 | 0.9512 | 0.9359 | 0.9891 |

| 0.223 | 3.0 | 720 | 0.1626 | 0.5 | 0.6471 | 0.5641 | 17 | 0.9667 | 0.9667 | 0.9667 | 60 | 0.9355 | 0.9831 | 0.9587 | 59 | 0.9672 | 1.0 | 0.9833 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8769 | 0.9344 | 0.9048 | 61 | 0.9508 | 0.9667 | 0.9587 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8769 | 0.95 | 0.912 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9355 | 0.9831 | 0.9587 | 59 | 0.9516 | 0.9672 | 0.9593 | 61 | 0.9370 | 0.9688 | 0.9526 | 0.9923 |

| 0.1305 | 4.0 | 960 | 0.1025 | 0.9444 | 1.0 | 0.9714 | 17 | 0.9831 | 0.9667 | 0.9748 | 60 | 0.9194 | 0.9661 | 0.9421 | 59 | 0.9508 | 0.9831 | 0.9667 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9219 | 0.9672 | 0.944 | 61 | 0.9667 | 0.9667 | 0.9667 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 0.9524 | 1.0 | 0.9756 | 60 | 0.8906 | 0.95 | 0.9194 | 60 | 0.9833 | 0.9672 | 0.9752 | 61 | 0.9355 | 0.9831 | 0.9587 | 59 | 0.9516 | 0.9672 | 0.9593 | 61 | 0.9511 | 0.9756 | 0.9632 | 0.9947 |

| 0.0852 | 5.0 | 1200 | 0.0744 | 0.7391 | 1.0 | 0.85 | 17 | 0.9831 | 0.9667 | 0.9748 | 60 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9344 | 0.9344 | 0.9344 | 61 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9365 | 0.9833 | 0.9593 | 60 | 0.9677 | 1.0 | 0.9836 | 60 | 0.95 | 0.95 | 0.9500 | 60 | 0.9836 | 0.9836 | 0.9836 | 61 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9833 | 0.9672 | 0.9752 | 61 | 0.9626 | 0.9783 | 0.9704 | 0.9953 |

| 0.0583 | 6.0 | 1440 | 0.0554 | 0.7727 | 1.0 | 0.8718 | 17 | 0.9831 | 0.9667 | 0.9748 | 60 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9048 | 0.9344 | 0.9194 | 61 | 1.0 | 0.9833 | 0.9916 | 60 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 0.9344 | 0.95 | 0.9421 | 60 | 1.0 | 0.9672 | 0.9833 | 61 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9833 | 0.9672 | 0.9752 | 61 | 0.9677 | 0.9756 | 0.9716 | 0.9957 |

| 0.0431 | 7.0 | 1680 | 0.0471 | 0.9444 | 1.0 | 0.9714 | 17 | 0.9831 | 0.9667 | 0.9748 | 60 | 0.9016 | 0.9322 | 0.9167 | 59 | 0.95 | 0.9661 | 0.9580 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8676 | 0.9672 | 0.9147 | 61 | 0.9831 | 0.9667 | 0.9748 | 60 | 1.0 | 0.9833 | 0.9916 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.9516 | 0.9833 | 0.9672 | 60 | 0.9836 | 0.9836 | 0.9836 | 61 | 0.9831 | 0.9831 | 0.9831 | 59 | 0.9833 | 0.9672 | 0.9752 | 61 | 0.9625 | 0.9756 | 0.9690 | 0.9947 |

| 0.0314 | 8.0 | 1920 | 0.0359 | 1.0 | 1.0 | 1.0 | 17 | 0.9831 | 0.9667 | 0.9748 | 60 | 0.9355 | 0.9831 | 0.9587 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9516 | 0.9672 | 0.9593 | 61 | 1.0 | 0.9667 | 0.9831 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.9516 | 0.9833 | 0.9672 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9831 | 0.9831 | 0.9831 | 59 | 0.9672 | 0.9672 | 0.9672 | 61 | 0.9771 | 0.9824 | 0.9797 | 0.9969 |

| 0.0278 | 9.0 | 2160 | 0.0338 | 0.8947 | 1.0 | 0.9444 | 17 | 0.9833 | 0.9833 | 0.9833 | 60 | 0.9355 | 0.9831 | 0.9587 | 59 | 0.9667 | 0.9831 | 0.9748 | 59 | 1.0 | 1.0 | 1.0 | 60 | 0.9365 | 0.9672 | 0.9516 | 61 | 0.9672 | 0.9833 | 0.9752 | 60 | 1.0 | 0.9833 | 0.9916 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.9516 | 0.9833 | 0.9672 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9672 | 0.9672 | 0.9672 | 61 | 0.9705 | 0.9837 | 0.9771 | 0.9965 |

| 0.0231 | 10.0 | 2400 | 0.0332 | 0.9444 | 1.0 | 0.9714 | 17 | 0.9831 | 0.9667 | 0.9748 | 60 | 0.9508 | 0.9831 | 0.9667 | 59 | 0.9048 | 0.9661 | 0.9344 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9667 | 0.9508 | 0.9587 | 61 | 0.9667 | 0.9667 | 0.9667 | 60 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9365 | 0.9833 | 0.9593 | 60 | 1.0 | 0.9672 | 0.9833 | 61 | 0.9831 | 0.9831 | 0.9831 | 59 | 0.9833 | 0.9672 | 0.9752 | 61 | 0.9690 | 0.9769 | 0.9730 | 0.9964 |

| 0.0189 | 11.0 | 2640 | 0.0342 | 1.0 | 1.0 | 1.0 | 17 | 0.9667 | 0.9667 | 0.9667 | 60 | 0.8657 | 0.9831 | 0.9206 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8594 | 0.9016 | 0.88 | 61 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9516 | 0.9672 | 0.9593 | 61 | 0.964 | 0.9810 | 0.9724 | 0.9958 |

| 0.0187 | 12.0 | 2880 | 0.0255 | 1.0 | 1.0 | 1.0 | 17 | 0.9667 | 0.9667 | 0.9667 | 60 | 0.9508 | 0.9831 | 0.9667 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9667 | 0.9508 | 0.9587 | 61 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9672 | 0.9833 | 0.9752 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9833 | 0.9672 | 0.9752 | 61 | 0.9824 | 0.9851 | 0.9837 | 0.9976 |

| 0.0126 | 13.0 | 3120 | 0.0257 | 1.0 | 1.0 | 1.0 | 17 | 0.9667 | 0.9667 | 0.9667 | 60 | 0.9344 | 0.9661 | 0.95 | 59 | 0.8889 | 0.9492 | 0.9180 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8788 | 0.9508 | 0.9134 | 61 | 1.0 | 0.9833 | 0.9916 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.9836 | 1.0 | 0.9917 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9672 | 0.9833 | 61 | 0.9508 | 0.9831 | 0.9667 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9652 | 0.9796 | 0.9724 | 0.9971 |

| 0.012 | 14.0 | 3360 | 0.0227 | 1.0 | 1.0 | 1.0 | 17 | 0.9667 | 0.9667 | 0.9667 | 60 | 0.9516 | 1.0 | 0.9752 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9194 | 0.9344 | 0.9268 | 61 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9672 | 0.9833 | 0.9752 | 60 | 1.0 | 0.9833 | 0.9916 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.9836 | 0.9836 | 0.9836 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9784 | 0.9851 | 0.9817 | 0.9977 |

| 0.0119 | 15.0 | 3600 | 0.0284 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 1.0 | 1.0 | 60 | 0.9355 | 0.9831 | 0.9587 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 1.0 | 1.0 | 60 | 0.9167 | 0.9016 | 0.9091 | 61 | 0.9661 | 0.95 | 0.9580 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9810 | 0.9824 | 0.9817 | 0.9965 |

| 0.0103 | 16.0 | 3840 | 0.0289 | 0.9444 | 1.0 | 0.9714 | 17 | 0.9672 | 0.9833 | 0.9752 | 60 | 0.9344 | 0.9661 | 0.95 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 1.0 | 1.0 | 60 | 0.8088 | 0.9016 | 0.8527 | 61 | 0.9667 | 0.9667 | 0.9667 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9666 | 0.9810 | 0.9737 | 0.9963 |

| 0.01 | 17.0 | 4080 | 0.0305 | 0.8947 | 1.0 | 0.9444 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9355 | 0.9831 | 0.9587 | 59 | 0.9516 | 1.0 | 0.9752 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9355 | 0.9508 | 0.9431 | 61 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.8955 | 1.0 | 0.9449 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9694 | 0.9891 | 0.9792 | 0.9961 |

| 0.0082 | 18.0 | 4320 | 0.0256 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9508 | 0.9831 | 0.9667 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8636 | 0.9344 | 0.8976 | 61 | 0.9831 | 0.9667 | 0.9748 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9785 | 0.9864 | 0.9824 | 0.9970 |

| 0.0059 | 19.0 | 4560 | 0.0255 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9355 | 0.9508 | 0.9431 | 61 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9865 | 0.9891 | 0.9878 | 0.9974 |

| 0.0078 | 20.0 | 4800 | 0.0293 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9508 | 0.9831 | 0.9667 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9 | 0.8852 | 0.8926 | 61 | 0.9661 | 0.95 | 0.9580 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9810 | 0.9810 | 0.9810 | 0.9966 |

| 0.009 | 21.0 | 5040 | 0.0264 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9206 | 0.9831 | 0.9508 | 59 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8889 | 0.9180 | 0.9032 | 61 | 0.9672 | 0.9833 | 0.9752 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.9836 | 0.9836 | 0.9836 | 61 | 0.9831 | 0.9831 | 0.9831 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9745 | 0.9837 | 0.9791 | 0.9969 |

| 0.0046 | 22.0 | 5280 | 0.0271 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9355 | 0.9831 | 0.9587 | 59 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9032 | 0.9180 | 0.9106 | 61 | 0.9672 | 0.9833 | 0.9752 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9784 | 0.9851 | 0.9817 | 0.9970 |

| 0.0087 | 23.0 | 5520 | 0.0278 | 0.9444 | 1.0 | 0.9714 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9194 | 0.9661 | 0.9421 | 59 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8657 | 0.9508 | 0.9062 | 61 | 0.9836 | 1.0 | 0.9917 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9733 | 0.9878 | 0.9805 | 0.9958 |

| 0.0054 | 24.0 | 5760 | 0.0276 | 0.9444 | 1.0 | 0.9714 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.95 | 0.9661 | 0.9580 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9355 | 0.9508 | 0.9431 | 61 | 0.9831 | 0.9667 | 0.9748 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.9355 | 0.9667 | 0.9508 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9784 | 0.9837 | 0.9811 | 0.9971 |

| 0.0057 | 25.0 | 6000 | 0.0260 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9667 | 0.9831 | 60 | 0.9077 | 1.0 | 0.9516 | 59 | 0.95 | 0.9661 | 0.9580 | 59 | 0.9677 | 1.0 | 0.9836 | 60 | 0.9508 | 0.9508 | 0.9508 | 61 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9672 | 0.9833 | 61 | 0.9672 | 1.0 | 0.9833 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9771 | 0.9837 | 0.9804 | 0.9971 |

| 0.0074 | 26.0 | 6240 | 0.0340 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9180 | 0.9492 | 0.9333 | 59 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8906 | 0.9344 | 0.9120 | 61 | 0.9831 | 0.9667 | 0.9748 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 0.9836 | 0.9836 | 61 | 0.9757 | 0.9824 | 0.9790 | 0.9959 |

| 0.0047 | 27.0 | 6480 | 0.0306 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 1.0 | 1.0 | 60 | 0.8923 | 0.9831 | 0.9355 | 59 | 0.9672 | 1.0 | 0.9833 | 59 | 1.0 | 1.0 | 1.0 | 60 | 0.9016 | 0.9016 | 0.9016 | 61 | 0.9667 | 0.9667 | 0.9667 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9672 | 0.9833 | 61 | 0.8551 | 1.0 | 0.9219 | 59 | 1.0 | 0.8525 | 0.9204 | 61 | 0.9624 | 0.9715 | 0.9669 | 0.9961 |

| 0.0052 | 28.0 | 6720 | 0.0262 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9667 | 0.9831 | 0.9748 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9833 | 0.9672 | 0.9752 | 61 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9833 | 1.0 | 0.9916 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9905 | 0.9905 | 0.9905 | 0.9973 |

| 0.0033 | 29.0 | 6960 | 0.0320 | 0.9444 | 1.0 | 0.9714 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.8406 | 0.9831 | 0.9062 | 59 | 0.9672 | 1.0 | 0.9833 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.8852 | 0.8852 | 0.8852 | 61 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 0.9667 | 0.9831 | 60 | 1.0 | 1.0 | 1.0 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9365 | 1.0 | 0.9672 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9627 | 0.9796 | 0.9711 | 0.9960 |

| 0.0048 | 30.0 | 7200 | 0.0215 | 1.0 | 1.0 | 1.0 | 17 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9672 | 1.0 | 0.9833 | 59 | 0.9833 | 1.0 | 0.9916 | 59 | 0.9836 | 1.0 | 0.9917 | 60 | 0.9833 | 0.9672 | 0.9752 | 61 | 1.0 | 0.9833 | 0.9916 | 60 | 0.9833 | 0.9833 | 0.9833 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 1.0 | 1.0 | 60 | 1.0 | 0.9672 | 0.9833 | 61 | 0.9672 | 1.0 | 0.9833 | 59 | 1.0 | 0.9836 | 0.9917 | 61 | 0.9891 | 0.9891 | 0.9891 | 0.9980 |

### Framework versions

- Transformers 4.22.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

kkpathak91/FVM

|

kkpathak91

| 2022-09-08T13:23:40Z | 0 | 0 | null |

[

"region:us"

] | null | 2022-09-08T10:32:31Z |

fact verification model(FVM) is trained on [FEVER](https://fever.ai), which aims to predict the veracity of a textual claim against a trustworthy knowledge source such as Wikipedia.

This repo hosts the following models for `FVM`:

- `fact_checking/`: the verification models based on BERT (large) and RoBERTa (large), respectively.

- `mrc_seq2seq/`: the generative machine reading comprehension model based on BART (base).

- `evidence_retrieval/`: the evidence sentence ranking models, which are copied directly from [KGAT](https://github.com/thunlp/KernelGAT).

|

sd-concepts-library/hub-city

|

sd-concepts-library

| 2022-09-08T12:04:39Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2022-09-08T12:04:27Z |

---

license: mit

---

### Hub City on Stable Diffusion

This is the `<HubCity>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

microsoft/xclip-base-patch16-ucf-16-shot

|

microsoft

| 2022-09-08T11:54:38Z | 68 | 1 |

transformers

|

[

"transformers",

"pytorch",

"xclip",

"feature-extraction",

"vision",

"video-classification",

"en",

"arxiv:2208.02816",

"license:mit",

"model-index",

"endpoints_compatible",

"region:us"

] |

video-classification

| 2022-09-07T17:45:07Z |

---

language: en

license: mit

tags:

- vision

- video-classification

model-index:

- name: nielsr/xclip-base-patch16-ucf-16-shot

results:

- task:

type: video-classification

dataset:

name: UCF101

type: ucf101

metrics:

- type: top-1 accuracy

value: 91.4

---

# X-CLIP (base-sized model)

X-CLIP model (base-sized, patch resolution of 16) trained in a few-shot fashion (K=16) on [UCF101](https://www.crcv.ucf.edu/data/UCF101.php). It was introduced in the paper [Expanding Language-Image Pretrained Models for General Video Recognition](https://arxiv.org/abs/2208.02816) by Ni et al. and first released in [this repository](https://github.com/microsoft/VideoX/tree/master/X-CLIP).

This model was trained using 32 frames per video, at a resolution of 224x224.

Disclaimer: The team releasing X-CLIP did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

X-CLIP is a minimal extension of [CLIP](https://huggingface.co/docs/transformers/model_doc/clip) for general video-language understanding. The model is trained in a contrastive way on (video, text) pairs.

This allows the model to be used for tasks like zero-shot, few-shot or fully supervised video classification and video-text retrieval.

## Intended uses & limitations

You can use the raw model for determining how well text goes with a given video. See the [model hub](https://huggingface.co/models?search=microsoft/xclip) to look for

fine-tuned versions on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/transformers/main/model_doc/xclip.html#).

## Training data

This model was trained on [UCF101](https://www.crcv.ucf.edu/data/UCF101.php).

### Preprocessing

The exact details of preprocessing during training can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L247).

The exact details of preprocessing during validation can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L285).

During validation, one resizes the shorter edge of each frame, after which center cropping is performed to a fixed-size resolution (like 224x224). Next, frames are normalized across the RGB channels with the ImageNet mean and standard deviation.

## Evaluation results

This model achieves a top-1 accuracy of 91.4%.

|

microsoft/xclip-base-patch16-ucf-8-shot

|

microsoft

| 2022-09-08T11:49:44Z | 70 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xclip",

"feature-extraction",

"vision",

"video-classification",

"en",

"arxiv:2208.02816",

"license:mit",

"model-index",

"endpoints_compatible",

"region:us"

] |

video-classification

| 2022-09-07T17:13:46Z |

---

language: en

license: mit

tags:

- vision

- video-classification

model-index:

- name: nielsr/xclip-base-patch16-ucf-8-shot

results:

- task:

type: video-classification

dataset:

name: UCF101

type: ucf101

metrics:

- type: top-1 accuracy

value: 88.3

---

# X-CLIP (base-sized model)

X-CLIP model (base-sized, patch resolution of 16) trained in a few-shot fashion (K=8) on [UCF101](https://www.crcv.ucf.edu/data/UCF101.php). It was introduced in the paper [Expanding Language-Image Pretrained Models for General Video Recognition](https://arxiv.org/abs/2208.02816) by Ni et al. and first released in [this repository](https://github.com/microsoft/VideoX/tree/master/X-CLIP).

This model was trained using 32 frames per video, at a resolution of 224x224.

Disclaimer: The team releasing X-CLIP did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

X-CLIP is a minimal extension of [CLIP](https://huggingface.co/docs/transformers/model_doc/clip) for general video-language understanding. The model is trained in a contrastive way on (video, text) pairs.

This allows the model to be used for tasks like zero-shot, few-shot or fully supervised video classification and video-text retrieval.

## Intended uses & limitations

You can use the raw model for determining how well text goes with a given video. See the [model hub](https://huggingface.co/models?search=microsoft/xclip) to look for

fine-tuned versions on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/transformers/main/model_doc/xclip.html#).

## Training data

This model was trained on [UCF101](https://www.crcv.ucf.edu/data/UCF101.php).

### Preprocessing

The exact details of preprocessing during training can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L247).

The exact details of preprocessing during validation can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L285).

During validation, one resizes the shorter edge of each frame, after which center cropping is performed to a fixed-size resolution (like 224x224). Next, frames are normalized across the RGB channels with the ImageNet mean and standard deviation.

## Evaluation results

This model achieves a top-1 accuracy of 88.3%.

|

microsoft/xclip-base-patch16-ucf-2-shot

|

microsoft

| 2022-09-08T11:49:14Z | 75 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xclip",

"feature-extraction",

"vision",

"video-classification",

"en",

"arxiv:2208.02816",

"license:mit",

"model-index",

"endpoints_compatible",

"region:us"

] |

video-classification

| 2022-09-07T17:06:55Z |

---

language: en

license: mit

tags:

- vision

- video-classification

model-index:

- name: nielsr/xclip-base-patch16-ucf-2-shot

results:

- task:

type: video-classification

dataset:

name: UCF101

type: ucf101

metrics:

- type: top-1 accuracy

value: 76.4

---

# X-CLIP (base-sized model)

X-CLIP model (base-sized, patch resolution of 16) trained in a few-shot fashion (K=2) on [UCF101](https://www.crcv.ucf.edu/data/UCF101.php). It was introduced in the paper [Expanding Language-Image Pretrained Models for General Video Recognition](https://arxiv.org/abs/2208.02816) by Ni et al. and first released in [this repository](https://github.com/microsoft/VideoX/tree/master/X-CLIP).

This model was trained using 32 frames per video, at a resolution of 224x224.

Disclaimer: The team releasing X-CLIP did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

X-CLIP is a minimal extension of [CLIP](https://huggingface.co/docs/transformers/model_doc/clip) for general video-language understanding. The model is trained in a contrastive way on (video, text) pairs.

This allows the model to be used for tasks like zero-shot, few-shot or fully supervised video classification and video-text retrieval.

## Intended uses & limitations

You can use the raw model for determining how well text goes with a given video. See the [model hub](https://huggingface.co/models?search=microsoft/xclip) to look for

fine-tuned versions on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/transformers/main/model_doc/xclip.html#).

## Training data

This model was trained on [UCF101](https://www.crcv.ucf.edu/data/UCF101.php).

### Preprocessing