modelId

stringlengths 5

139

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC]date 2020-02-15 11:33:14

2025-09-04 06:26:56

| downloads

int64 0

223M

| likes

int64 0

11.7k

| library_name

stringclasses 538

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 55

values | createdAt

timestamp[us, tz=UTC]date 2022-03-02 23:29:04

2025-09-04 06:26:41

| card

stringlengths 11

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

konverner/due_eshop_21_multilabel

|

konverner

| 2023-07-05T18:59:21Z | 4 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"setfit",

"text-classification",

"arxiv:2209.11055",

"license:apache-2.0",

"region:us"

] |

text-classification

| 2023-07-04T22:21:59Z |

---

license: apache-2.0

tags:

- setfit

- sentence-transformers

- text-classification

pipeline_tag: text-classification

---

# konverner/due_eshop_21_multilabel

This is a [SetFit model](https://github.com/huggingface/setfit) that can be used for text classification. The model has been trained using an efficient few-shot learning technique that involves:

1. Fine-tuning a [Sentence Transformer](https://www.sbert.net) with contrastive learning.

2. Training a classification head with features from the fine-tuned Sentence Transformer.

## Usage

To use this model for inference, first install the SetFit library:

```bash

python -m pip install setfit

```

You can then run inference as follows:

```python

from setfit import SetFitModel

# Download from Hub and run inference

model = SetFitModel.from_pretrained("konverner/due_eshop_21_multilabel")

# Run inference

preds = model(["i loved the spiderman movie!", "pineapple on pizza is the worst 🤮"])

```

## BibTeX entry and citation info

```bibtex

@article{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

```

|

sd-concepts-library/ahx-beta-4a5b307

|

sd-concepts-library

| 2023-07-05T18:57:32Z | 0 | 0 | null |

[

"license:mit",

"region:us"

] | null | 2023-07-05T18:57:29Z |

---

license: mit

---

### ahx-beta-4a5b307 on Stable Diffusion

This is the `<ahx-beta-4a5b307>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

Sandrro/text_to_subfunction_v5

|

Sandrro

| 2023-07-05T18:50:38Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-07-05T12:03:44Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: text_to_subfunction_v5

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# text_to_subfunction_v5

This model is a fine-tuned version of [cointegrated/rubert-tiny2](https://huggingface.co/cointegrated/rubert-tiny2) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 3.4805

- F1: 0.3751

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 3.9061 | 1.0 | 4050 | 3.8927 | 0.0909 |

| 3.0607 | 2.0 | 8100 | 3.1700 | 0.2214 |

| 2.4112 | 3.0 | 12150 | 2.8376 | 0.2764 |

| 1.9961 | 4.0 | 16200 | 2.6672 | 0.3388 |

| 1.5209 | 5.0 | 20250 | 2.5776 | 0.3428 |

| 1.2317 | 6.0 | 24300 | 2.5766 | 0.3578 |

| 0.9831 | 7.0 | 28350 | 2.6033 | 0.3691 |

| 0.7033 | 8.0 | 32400 | 2.7067 | 0.3633 |

| 0.5462 | 9.0 | 36450 | 2.7621 | 0.3672 |

| 0.4332 | 10.0 | 40500 | 2.8558 | 0.3750 |

| 0.3114 | 11.0 | 44550 | 2.9402 | 0.3729 |

| 0.2379 | 12.0 | 48600 | 3.0508 | 0.3738 |

| 0.1877 | 13.0 | 52650 | 3.1642 | 0.3703 |

| 0.1923 | 14.0 | 56700 | 3.2413 | 0.3754 |

| 0.1489 | 15.0 | 60750 | 3.3047 | 0.3856 |

| 0.1202 | 16.0 | 64800 | 3.3581 | 0.3764 |

| 0.1065 | 17.0 | 68850 | 3.4211 | 0.3767 |

| 0.1107 | 18.0 | 72900 | 3.4589 | 0.3725 |

| 0.1004 | 19.0 | 76950 | 3.4768 | 0.3723 |

| 0.1082 | 20.0 | 81000 | 3.4805 | 0.3751 |

### Framework versions

- Transformers 4.27.1

- Pytorch 2.1.0.dev20230414+cu117

- Datasets 2.9.0

- Tokenizers 0.13.3

|

kubjonkyr/ppo-LunarLander-v2

|

kubjonkyr

| 2023-07-05T18:35:22Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-05T17:36:39Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 290.89 +/- 13.49

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

DCTR/my_vicuna

|

DCTR

| 2023-07-05T18:22:52Z | 4 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-07-05T18:11:24Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: bfloat16

### Framework versions

- PEFT 0.4.0.dev0

|

alesthehuman/poca-SoccerTwos

|

alesthehuman

| 2023-07-05T18:14:32Z | 24 | 0 |

ml-agents

|

[

"ml-agents",

"tensorboard",

"onnx",

"SoccerTwos",

"deep-reinforcement-learning",

"reinforcement-learning",

"ML-Agents-SoccerTwos",

"region:us"

] |

reinforcement-learning

| 2023-07-05T18:13:38Z |

---

library_name: ml-agents

tags:

- SoccerTwos

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-SoccerTwos

---

# **poca** Agent playing **SoccerTwos**

This is a trained model of a **poca** agent playing **SoccerTwos**

using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

The Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial to learn to train your first agent using ML-Agents and publish it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your

browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction

- A *longer tutorial* to understand how works ML-Agents:

https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is part of ML-Agents official environments, go to https://huggingface.co/unity

2. Step 1: Find your model_id: alesthehuman/poca-SoccerTwos

3. Step 2: Select your *.nn /*.onnx file

4. Click on Watch the agent play 👀

|

Tubido/Taxi-v3-001

|

Tubido

| 2023-07-05T18:06:11Z | 0 | 0 | null |

[

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-05T18:06:09Z |

---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: Taxi-v3-001

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing1 **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="Tubido/Taxi-v3-001", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```

|

aronmal/dqn-SpaceInvaders-v4

|

aronmal

| 2023-07-05T18:01:03Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-05T18:00:21Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 551.00 +/- 161.83

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga aronmal -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga aronmal -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga aronmal

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),

('buffer_size', 100000),

('env_wrapper',

['stable_baselines3.common.atari_wrappers.AtariWrapper']),

('exploration_final_eps', 0.01),

('exploration_fraction', 0.1),

('frame_stack', 4),

('gradient_steps', 1),

('learning_rate', 0.0001),

('learning_starts', 100000),

('n_timesteps', 1000000.0),

('optimize_memory_usage', False),

('policy', 'CnnPolicy'),

('target_update_interval', 1000),

('train_freq', 4),

('normalize', False)])

```

# Environment Arguments

```python

{'render_mode': 'rgb_array'}

```

|

TN19N/ppo-LunarLander-v2

|

TN19N

| 2023-07-05T17:45:04Z | 8 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-05T14:51:02Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 274.09 +/- 14.90

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

S1X3L4/NavecitaUWU

|

S1X3L4

| 2023-07-05T17:30:43Z | 1 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-05T17:30:17Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 239.85 +/- 15.30

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

cleandata/distilhubert-finetuned-gtzan_accuracy_93

|

cleandata

| 2023-07-05T17:24:29Z | 161 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"hubert",

"audio-classification",

"music",

"genre",

"classification",

"en",

"dataset:marsyas/gtzan",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

audio-classification

| 2023-07-05T14:56:11Z |

---

license: apache-2.0

tags:

- music

- genre

- classification

datasets:

- marsyas/gtzan

metrics:

- accuracy

model-index:

- name: distilhubert-finetuned-gtzan_accuracy_93

results: []

language:

- en

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilhubert-finetuned-gtzan_accuracy_93

### This model is a fine-tuned version of [yuval6967/distilhubert-finetuned-gtzan](https://huggingface.co/yuval6967/distilhubert-finetuned-gtzan) on the GTZAN dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5121

- __Accuracy: 0.93__

## Model description

- Fine-tuned model to demonstrate > 87% accuracy for the [Huggingface Audio course](https://huggingface.co/learn/audio-course/chapter0/introduction)

## Intended uses & limitations

- Model is built to identify the genre of music based on a ~30 sec clip

## Training and evaluation data

More information needed

## Training procedure

- test_size = 0.20 was used for the split

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0316 | 1.0 | 100 | 0.4338 | 0.895 |

| 0.0031 | 2.0 | 200 | 0.7039 | 0.86 |

| 0.0069 | 3.0 | 300 | 0.4526 | 0.925 |

| 0.1799 | 4.0 | 400 | 0.7071 | 0.88 |

| 0.1783 | 5.0 | 500 | 0.5923 | 0.92 |

| 0.0011 | 6.0 | 600 | 0.5498 | 0.92 |

| 0.0005 | 7.0 | 700 | 0.4927 | 0.925 |

| 0.0005 | 8.0 | 800 | 0.6172 | 0.915 |

| 0.0004 | 9.0 | 900 | 0.4988 | 0.925 |

| 0.0004 | 10.0 | 1000 | 0.5121 | 0.93 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

heka-ai/e5-100k

|

heka-ai

| 2023-07-05T17:09:28Z | 1 | 1 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"distilbert",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2023-07-05T17:09:24Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# heka-ai/e5-100k

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('heka-ai/e5-100k')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

def cls_pooling(model_output, attention_mask):

return model_output[0][:,0]

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('heka-ai/e5-100k')

model = AutoModel.from_pretrained('heka-ai/e5-100k')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, cls pooling.

sentence_embeddings = cls_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=heka-ai/e5-100k)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 20000 with parameters:

```

{'batch_size': 32, 'sampler': 'torch.utils.data.sampler.SequentialSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`gpl.toolkit.loss.MarginDistillationLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 100000,

"warmup_steps": 1000,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 350, 'do_lower_case': False}) with Transformer model: DistilBertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

ddoc/def

|

ddoc

| 2023-07-05T17:04:15Z | 0 | 0 | null |

[

"region:us"

] | null | 2023-07-05T17:03:23Z |

# Deforum Stable Diffusion — official extension for AUTOMATIC1111's webui

<p align="left">

<a href="https://github.com/deforum-art/sd-webui-deforum/commits"><img alt="Last Commit" src="https://img.shields.io/github/last-commit/deforum-art/deforum-for-automatic1111-webui"></a>

<a href="https://github.com/deforum-art/sd-webui-deforum/issues"><img alt="GitHub issues" src="https://img.shields.io/github/issues/deforum-art/deforum-for-automatic1111-webui"></a>

<a href="https://github.com/deforum-art/sd-webui-deforum/stargazers"><img alt="GitHub stars" src="https://img.shields.io/github/stars/deforum-art/deforum-for-automatic1111-webui"></a>

<a href="https://github.com/deforum-art/sd-webui-deforum/network"><img alt="GitHub forks" src="https://img.shields.io/github/forks/deforum-art/deforum-for-automatic1111-webui"></a>

</a>

</p>

## Need help? See our [FAQ](https://github.com/deforum-art/sd-webui-deforum/wiki/FAQ-&-Troubleshooting)

## Getting Started

1. Install [AUTOMATIC1111's webui](https://github.com/AUTOMATIC1111/stable-diffusion-webui/).

2. Now two ways: either clone the repo into the `extensions` directory via git commandline launched within in the `stable-diffusion-webui` folder

```sh

git clone https://github.com/deforum-art/sd-webui-deforum extensions/deforum

```

Or download this repository, locate the `extensions` folder within your WebUI installation, create a folder named `deforum` and put the contents of the downloaded directory inside of it. Then restart WebUI.

3. Open the webui, find the Deforum tab at the top of the page.

4. Enter the animation settings. Refer to [this general guide](https://docs.google.com/document/d/1pEobUknMFMkn8F5TMsv8qRzamXX_75BShMMXV8IFslI/edit) and [this guide to math keyframing functions in Deforum](https://docs.google.com/document/d/1pfW1PwbDIuW0cv-dnuyYj1UzPqe23BlSLTJsqazffXM/edit?usp=sharing). However, **in this version prompt weights less than zero don't just like in original Deforum!** Split the positive and the negative prompt in the json section using --neg argument like this "apple:\`where(cos(t)>=0, cos(t), 0)\`, snow --neg strawberry:\`where(cos(t)<0, -cos(t), 0)\`"

5. To view animation frames as they're being made, without waiting for the completion of an animation, go to the 'Settings' tab and set the value of this toolbar **above zero**. Warning: it may slow down the generation process.

6. Run the script and see if you got it working or even got something. **In 3D mode a large delay is expected at first** as the script loads the depth models. In the end, using the default settings the whole thing should consume 6.4 GBs of VRAM at 3D mode peaks and no more than 3.8 GB VRAM in 3D mode if you launch the webui with the '--lowvram' command line argument.

7. After the generation process is completed, click the button with the self-describing name to show the video or gif result right in the GUI!

8. Join our Discord where you can post generated stuff, ask questions and more: https://discord.gg/deforum. <br>

* There's also the 'Issues' tab in the repo, for well... reporting issues ;)

9. Profit!

## Known issues

* This port is not fully backward-compatible with the notebook and the local version both due to the changes in how AUTOMATIC1111's webui handles Stable Diffusion models and the changes in this script to get it to work in the new environment. *Expect* that you may not get exactly the same result or that the thing may break down because of the older settings.

## Screenshots

Amazing raw Deforum animation by [Pxl.Pshr](https://www.instagram.com/pxl.pshr):

* Turn Audio ON!

(Audio credits: SKRILLEX, FRED AGAIN & FLOWDAN - RUMBLE (PHACE'S DNB FLIP))

https://user-images.githubusercontent.com/121192995/224450647-39529b28-be04-4871-bb7a-faf7afda2ef2.mp4

Setting file of that video: [here](https://github.com/deforum-art/sd-webui-deforum/files/11353167/PxlPshrWinningAnimationSettings.txt).

<br>

Main extension tab:

Keyframes tab:

|

vincentmin/RedPajama-INCITE-Base-3B-v1-colab

|

vincentmin

| 2023-07-05T16:59:36Z | 0 | 0 | null |

[

"tensorboard",

"generated_from_trainer",

"base_model:togethercomputer/RedPajama-INCITE-Base-3B-v1",

"base_model:finetune:togethercomputer/RedPajama-INCITE-Base-3B-v1",

"license:apache-2.0",

"region:us"

] | null | 2023-07-05T13:20:32Z |

---

license: apache-2.0

base_model: togethercomputer/RedPajama-INCITE-Base-3B-v1

tags:

- generated_from_trainer

model-index:

- name: RedPajama-INCITE-Base-3B-v1-colab

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# RedPajama-INCITE-Base-3B-v1-colab

This model is a fine-tuned version of [togethercomputer/RedPajama-INCITE-Base-3B-v1](https://huggingface.co/togethercomputer/RedPajama-INCITE-Base-3B-v1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6061

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 4

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.59 | 0.08 | 200 | 1.6639 |

| 1.6331 | 0.16 | 400 | 1.6433 |

| 1.6253 | 0.24 | 600 | 1.6323 |

| 1.6133 | 0.33 | 800 | 1.6259 |

| 1.6153 | 0.41 | 1000 | 1.6210 |

| 1.5429 | 0.49 | 1200 | 1.6165 |

| 1.5379 | 0.57 | 1400 | 1.6129 |

| 1.6046 | 0.65 | 1600 | 1.6090 |

| 1.6253 | 0.73 | 1800 | 1.6073 |

| 1.6955 | 0.81 | 2000 | 1.6061 |

### Framework versions

- Transformers 4.31.0.dev0

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

Emilianohack6950/GenOrtega

|

Emilianohack6950

| 2023-07-05T16:50:47Z | 32 | 0 |

diffusers

|

[

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-06-28T22:03:29Z |

---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

GenOrtega es un modelo avanzado de generación de imágenes basado en inteligencia artificial, diseñado para capturar y recrear la belleza única de Jenna Ortega, una talentosa actriz reconocida en la industria del entretenimiento. Utilizando técnicas de aprendizaje profundo y una vasta cantidad de datos de entrenamiento, este modelo ha sido entrenado para generar imágenes fotorrealistas de alta calidad que capturan con precisión los rasgos faciales, la expresividad y el estilo inconfundible de Jenna Ortega.

Con GenOrtega, puedes explorar la creatividad y obtener imágenes personalizadas de Jenna Ortega para diversas aplicaciones, como proyectos de diseño gráfico, desarrollo de videojuegos, producción cinematográfica, arte digital y más. El modelo ofrece una amplia gama de opciones para personalizar las imágenes generadas, como la elección de expresiones faciales, cambios de vestuario y entornos, lo que te permite adaptar las imágenes a tus necesidades específicas.

GenOrtega ha sido entrenado en una amplia variedad de imágenes y poses de Jenna Ortega, lo que le permite capturar su diversidad y versatilidad como artista. Además, el modelo cuenta con una interfaz intuitiva y fácil de usar, lo que lo hace accesible tanto para profesionales creativos como para entusiastas del arte digital.

Descubre la magia de GenOrtega y experimenta con la generación de imágenes de Jenna Ortega para dar vida a tus ideas y proyectos con un toque de estilo y autenticidad únicos.

Sample pictures of this concept:

|

alexmejva/test_model_alexmejva

|

alexmejva

| 2023-07-05T16:49:26Z | 3 | 0 |

transformers

|

[

"transformers",

"bert",

"text-classification",

"fill-mask",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2023-07-05T16:42:15Z |

---

license: apache-2.0

pipeline_tag: fill-mask

---

|

oknashar/distilbert-base-uncased-finetuned-emotion

|

oknashar

| 2023-07-05T16:42:28Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-07-05T15:20:24Z |

---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an mteb/emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1803

- Accuracy: 0.94

- F1: 0.9400

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.5017 | 1.0 | 250 | 0.2116 | 0.9295 | 0.9305 |

| 0.1763 | 2.0 | 500 | 0.1617 | 0.936 | 0.9369 |

| 0.1267 | 3.0 | 750 | 0.1492 | 0.9385 | 0.9386 |

| 0.0979 | 4.0 | 1000 | 0.1495 | 0.9395 | 0.9392 |

| 0.0787 | 5.0 | 1250 | 0.1602 | 0.935 | 0.9349 |

| 0.067 | 6.0 | 1500 | 0.1588 | 0.9405 | 0.9401 |

| 0.0557 | 7.0 | 1750 | 0.1675 | 0.9415 | 0.9413 |

| 0.0452 | 8.0 | 2000 | 0.1764 | 0.937 | 0.9365 |

| 0.0375 | 9.0 | 2250 | 0.1765 | 0.9405 | 0.9406 |

| 0.0337 | 10.0 | 2500 | 0.1803 | 0.94 | 0.9400 |

### Framework versions

- Transformers 4.31.0.dev0

- Pytorch 2.0.0

- Datasets 2.1.0

- Tokenizers 0.13.3

|

adisrini11/AIE-Assessment

|

adisrini11

| 2023-07-05T16:39:39Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:tweet_eval",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-06-18T21:32:35Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- tweet_eval

metrics:

- accuracy

model-index:

- name: AIE-Assessment

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: tweet_eval

type: tweet_eval

config: emotion

split: test

args: emotion

metrics:

- name: Accuracy

type: accuracy

value: 0.800844475721323

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# AIE-Assessment

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the tweet_eval dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5687

- Accuracy: 0.8008

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 204 | 0.6383 | 0.7910 |

| No log | 2.0 | 408 | 0.5687 | 0.8008 |

### Framework versions

- Transformers 4.29.2

- Pytorch 2.0.1

- Datasets 2.12.0

- Tokenizers 0.11.0

|

hazemOmrann14/AraBART-summ-finetuned-xsum-finetuned-xsum-finetuned-xsum

|

hazemOmrann14

| 2023-07-05T16:28:35Z | 106 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"mbart",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-07-05T16:08:14Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: AraBART-summ-finetuned-xsum-finetuned-xsum-finetuned-xsum

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# AraBART-summ-finetuned-xsum-finetuned-xsum-finetuned-xsum

This model is a fine-tuned version of [hazemOmrann14/AraBART-summ-finetuned-xsum-finetuned-xsum](https://huggingface.co/hazemOmrann14/AraBART-summ-finetuned-xsum-finetuned-xsum) on the None dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3.328889038158605e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|:-------:|

| No log | 1.0 | 10 | 2.5240 | 0.0 | 0.0 | 0.0 | 0.0 | 20.0 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

ozsenior13/distilbert-base-uncased-finetuned-squad

|

ozsenior13

| 2023-07-05T16:24:38Z | 116 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-07-05T15:02:16Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad

model-index:

- name: distilbert-base-uncased-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2169

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.263 | 1.0 | 5533 | 1.2169 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

NasimB/gpt2-dp-cl-rarity-11-135k-mod-datasets-rarity1-root3

|

NasimB

| 2023-07-05T16:22:18Z | 7 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-07-05T13:27:11Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: gpt2-dp-cl-rarity-11-135k-mod-datasets-rarity1-root3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-dp-cl-rarity-11-135k-mod-datasets-rarity1-root3

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.7616

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 6.7003 | 0.05 | 500 | 5.8421 |

| 5.4077 | 0.1 | 1000 | 5.4379 |

| 5.0667 | 0.15 | 1500 | 5.2282 |

| 4.8285 | 0.2 | 2000 | 5.0890 |

| 4.6639 | 0.25 | 2500 | 4.9968 |

| 4.5282 | 0.29 | 3000 | 4.9414 |

| 4.4194 | 0.34 | 3500 | 4.8843 |

| 4.3138 | 0.39 | 4000 | 4.8436 |

| 4.2135 | 0.44 | 4500 | 4.8229 |

| 4.1242 | 0.49 | 5000 | 4.7947 |

| 4.0388 | 0.54 | 5500 | 4.7670 |

| 3.952 | 0.59 | 6000 | 4.7585 |

| 3.8701 | 0.64 | 6500 | 4.7431 |

| 3.8026 | 0.69 | 7000 | 4.7273 |

| 3.7345 | 0.74 | 7500 | 4.7219 |

| 3.6661 | 0.79 | 8000 | 4.7135 |

| 3.6259 | 0.84 | 8500 | 4.7072 |

| 3.5927 | 0.88 | 9000 | 4.7052 |

| 3.5699 | 0.93 | 9500 | 4.7025 |

| 3.5638 | 0.98 | 10000 | 4.7018 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

omar-al-sharif/AlQalam-finetuned-mmj

|

omar-al-sharif

| 2023-07-05T16:21:33Z | 3 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-07-05T14:11:56Z |

---

tags:

- generated_from_trainer

model-index:

- name: AlQalam-finetuned-mmj

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# AlQalam-finetuned-mmj

This model is a fine-tuned version of [malmarjeh/t5-arabic-text-summarization](https://huggingface.co/malmarjeh/t5-arabic-text-summarization) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0723

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.3745 | 1.0 | 1678 | 1.1947 |

| 1.219 | 2.0 | 3356 | 1.1176 |

| 1.065 | 3.0 | 5034 | 1.0895 |

| 0.9928 | 4.0 | 6712 | 1.0734 |

| 0.9335 | 5.0 | 8390 | 1.0723 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

timbrooks/instruct-pix2pix

|

timbrooks

| 2023-07-05T16:19:25Z | 78,060 | 1,061 |

diffusers

|

[

"diffusers",

"safetensors",

"image-to-image",

"license:mit",

"diffusers:StableDiffusionInstructPix2PixPipeline",

"region:us"

] |

image-to-image

| 2023-01-20T04:27:06Z |

---

license: mit

tags:

- image-to-image

---

# InstructPix2Pix: Learning to Follow Image Editing Instructions

GitHub: https://github.com/timothybrooks/instruct-pix2pix

<img src='https://instruct-pix2pix.timothybrooks.com/teaser.jpg'/>

## Example

To use `InstructPix2Pix`, install `diffusers` using `main` for now. The pipeline will be available in the next release

```bash

pip install diffusers accelerate safetensors transformers

```

```python

import PIL

import requests

import torch

from diffusers import StableDiffusionInstructPix2PixPipeline, EulerAncestralDiscreteScheduler

model_id = "timbrooks/instruct-pix2pix"

pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, torch_dtype=torch.float16, safety_checker=None)

pipe.to("cuda")

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

url = "https://raw.githubusercontent.com/timothybrooks/instruct-pix2pix/main/imgs/example.jpg"

def download_image(url):

image = PIL.Image.open(requests.get(url, stream=True).raw)

image = PIL.ImageOps.exif_transpose(image)

image = image.convert("RGB")

return image

image = download_image(url)

prompt = "turn him into cyborg"

images = pipe(prompt, image=image, num_inference_steps=10, image_guidance_scale=1).images

images[0]

```

|

stabilityai/stable-diffusion-2-1-base

|

stabilityai

| 2023-07-05T16:19:20Z | 863,939 | 647 |

diffusers

|

[

"diffusers",

"safetensors",

"stable-diffusion",

"text-to-image",

"arxiv:2112.10752",

"arxiv:2202.00512",

"arxiv:1910.09700",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-06T17:25:36Z |

---

license: openrail++

tags:

- stable-diffusion

- text-to-image

---

# Stable Diffusion v2-1-base Model Card

This model card focuses on the model associated with the Stable Diffusion v2-1-base model.

This `stable-diffusion-2-1-base` model fine-tunes [stable-diffusion-2-base](https://huggingface.co/stabilityai/stable-diffusion-2-base) (`512-base-ema.ckpt`) with 220k extra steps taken, with `punsafe=0.98` on the same dataset.

- Use it with the [`stablediffusion`](https://github.com/Stability-AI/stablediffusion) repository: download the `v2-1_512-ema-pruned.ckpt` [here](https://huggingface.co/stabilityai/stable-diffusion-2-1-base/resolve/main/v2-1_512-ema-pruned.ckpt).

- Use it with 🧨 [`diffusers`](#examples)

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([OpenCLIP-ViT/H](https://github.com/mlfoundations/open_clip)).

- **Resources for more information:** [GitHub Repository](https://github.com/Stability-AI/).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## Examples

Using the [🤗's Diffusers library](https://github.com/huggingface/diffusers) to run Stable Diffusion 2 in a simple and efficient manner.

```bash

pip install diffusers transformers accelerate scipy safetensors

```

Running the pipeline (if you don't swap the scheduler it will run with the default PNDM/PLMS scheduler, in this example we are swapping it to EulerDiscreteScheduler):

```python

from diffusers import StableDiffusionPipeline, EulerDiscreteScheduler

import torch

model_id = "stabilityai/stable-diffusion-2-1-base"

scheduler = EulerDiscreteScheduler.from_pretrained(model_id, subfolder="scheduler")

pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=scheduler, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")

```

**Notes**:

- Despite not being a dependency, we highly recommend you to install [xformers](https://github.com/facebookresearch/xformers) for memory efficient attention (better performance)

- If you have low GPU RAM available, make sure to add a `pipe.enable_attention_slicing()` after sending it to `cuda` for less VRAM usage (to the cost of speed)

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is originally taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), was used for Stable Diffusion v1, but applies in the same way to Stable Diffusion v2_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a subset of the large-scale dataset

[LAION-5B](https://laion.ai/blog/laion-5b/), which contains adult, violent and sexual content. To partially mitigate this, we have filtered the dataset using LAION's NFSW detector (see Training section).

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

Stable Diffusion vw was primarily trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/),

which consists of images that are limited to English descriptions.

Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

This affects the overall output of the model, as white and western cultures are often set as the default. Further, the

ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

Stable Diffusion v2 mirrors and exacerbates biases to such a degree that viewer discretion must be advised irrespective of the input or its intent.

## Training

**Training Data**

The model developers used the following dataset for training the model:

- LAION-5B and subsets (details below). The training data is further filtered using LAION's NSFW detector, with a "p_unsafe" score of 0.1 (conservative). For more details, please refer to LAION-5B's [NeurIPS 2022](https://openreview.net/forum?id=M3Y74vmsMcY) paper and reviewer discussions on the topic.

**Training Procedure**

Stable Diffusion v2 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4

- Text prompts are encoded through the OpenCLIP-ViT/H text-encoder.

- The output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet. We also use the so-called _v-objective_, see https://arxiv.org/abs/2202.00512.

We currently provide the following checkpoints, for various versions:

### Version 2.1

- `512-base-ema.ckpt`: Fine-tuned on `512-base-ema.ckpt` 2.0 with 220k extra steps taken, with `punsafe=0.98` on the same dataset.

- `768-v-ema.ckpt`: Resumed from `768-v-ema.ckpt` 2.0 with an additional 55k steps on the same dataset (`punsafe=0.1`), and then fine-tuned for another 155k extra steps with `punsafe=0.98`.

### Version 2.0

- `512-base-ema.ckpt`: 550k steps at resolution `256x256` on a subset of [LAION-5B](https://laion.ai/blog/laion-5b/) filtered for explicit pornographic material, using the [LAION-NSFW classifier](https://github.com/LAION-AI/CLIP-based-NSFW-Detector) with `punsafe=0.1` and an [aesthetic score](https://github.com/christophschuhmann/improved-aesthetic-predictor) >= `4.5`.

850k steps at resolution `512x512` on the same dataset with resolution `>= 512x512`.

- `768-v-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for 150k steps using a [v-objective](https://arxiv.org/abs/2202.00512) on the same dataset. Resumed for another 140k steps on a `768x768` subset of our dataset.

- `512-depth-ema.ckpt`: Resumed from `512-base-ema.ckpt` and finetuned for 200k steps. Added an extra input channel to process the (relative) depth prediction produced by [MiDaS](https://github.com/isl-org/MiDaS) (`dpt_hybrid`) which is used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized.

- `512-inpainting-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for another 200k steps. Follows the mask-generation strategy presented in [LAMA](https://github.com/saic-mdal/lama) which, in combination with the latent VAE representations of the masked image, are used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized. The same strategy was used to train the [1.5-inpainting checkpoint](https://github.com/saic-mdal/lama).

- `x4-upscaling-ema.ckpt`: Trained for 1.25M steps on a 10M subset of LAION containing images `>2048x2048`. The model was trained on crops of size `512x512` and is a text-guided [latent upscaling diffusion model](https://arxiv.org/abs/2112.10752).

In addition to the textual input, it receives a `noise_level` as an input parameter, which can be used to add noise to the low-resolution input according to a [predefined diffusion schedule](configs/stable-diffusion/x4-upscaling.yaml).

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations**: 1

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant

## Evaluation Results

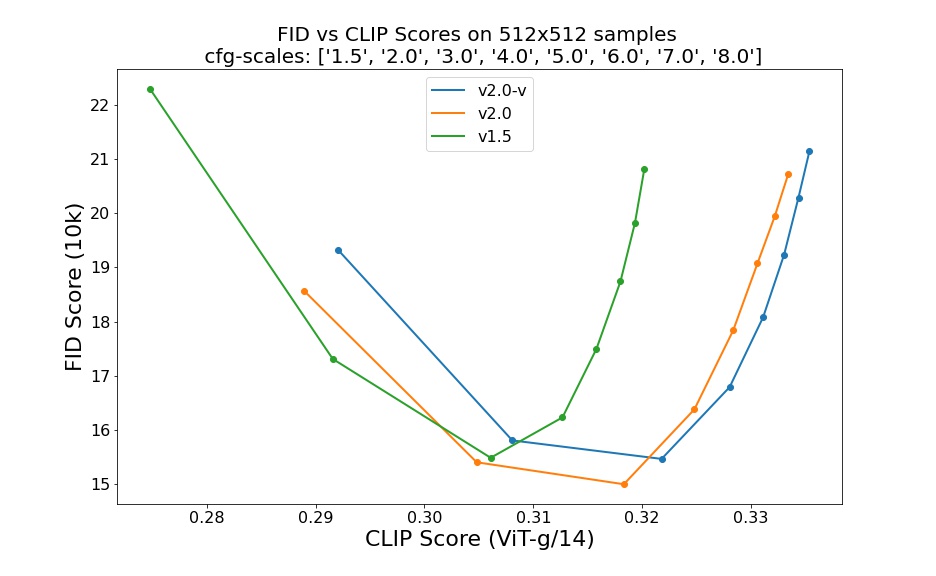

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0,

5.0, 6.0, 7.0, 8.0) and 50 steps DDIM sampling steps show the relative improvements of the checkpoints:

Evaluated using 50 DDIM steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

- **Hardware Type:** A100 PCIe 40GB

- **Hours used:** 200000

- **Cloud Provider:** AWS

- **Compute Region:** US-east

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 15000 kg CO2 eq.

## Citation

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

*This model card was written by: Robin Rombach, Patrick Esser and David Ha and is based on the [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion/blob/main/Stable_Diffusion_v1_Model_Card.md) and [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).*

|

stabilityai/stable-diffusion-2-1

|

stabilityai

| 2023-07-05T16:19:17Z | 999,034 | 3,935 |

diffusers

|

[

"diffusers",

"safetensors",

"stable-diffusion",

"text-to-image",

"arxiv:2112.10752",

"arxiv:2202.00512",

"arxiv:1910.09700",

"license:openrail++",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2022-12-06T17:24:51Z |

---

license: openrail++

tags:

- stable-diffusion

- text-to-image

pinned: true

---

# Stable Diffusion v2-1 Model Card

This model card focuses on the model associated with the Stable Diffusion v2-1 model, codebase available [here](https://github.com/Stability-AI/stablediffusion).

This `stable-diffusion-2-1` model is fine-tuned from [stable-diffusion-2](https://huggingface.co/stabilityai/stable-diffusion-2) (`768-v-ema.ckpt`) with an additional 55k steps on the same dataset (with `punsafe=0.1`), and then fine-tuned for another 155k extra steps with `punsafe=0.98`.

- Use it with the [`stablediffusion`](https://github.com/Stability-AI/stablediffusion) repository: download the `v2-1_768-ema-pruned.ckpt` [here](https://huggingface.co/stabilityai/stable-diffusion-2-1/blob/main/v2-1_768-ema-pruned.ckpt).

- Use it with 🧨 [`diffusers`](#examples)

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([OpenCLIP-ViT/H](https://github.com/mlfoundations/open_clip)).

- **Resources for more information:** [GitHub Repository](https://github.com/Stability-AI/).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

## Examples

Using the [🤗's Diffusers library](https://github.com/huggingface/diffusers) to run Stable Diffusion 2 in a simple and efficient manner.

```bash

pip install diffusers transformers accelerate scipy safetensors

```

Running the pipeline (if you don't swap the scheduler it will run with the default DDIM, in this example we are swapping it to DPMSolverMultistepScheduler):

```python

import torch

from diffusers import StableDiffusionPipeline, DPMSolverMultistepScheduler

model_id = "stabilityai/stable-diffusion-2-1"

# Use the DPMSolverMultistepScheduler (DPM-Solver++) scheduler here instead

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)

pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")

```

**Notes**:

- Despite not being a dependency, we highly recommend you to install [xformers](https://github.com/facebookresearch/xformers) for memory efficient attention (better performance)

- If you have low GPU RAM available, make sure to add a `pipe.enable_attention_slicing()` after sending it to `cuda` for less VRAM usage (to the cost of speed)

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is originally taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), was used for Stable Diffusion v1, but applies in the same way to Stable Diffusion v2_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a subset of the large-scale dataset

[LAION-5B](https://laion.ai/blog/laion-5b/), which contains adult, violent and sexual content. To partially mitigate this, we have filtered the dataset using LAION's NFSW detector (see Training section).

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

Stable Diffusion was primarily trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/),

which consists of images that are limited to English descriptions.

Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

This affects the overall output of the model, as white and western cultures are often set as the default. Further, the

ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

Stable Diffusion v2 mirrors and exacerbates biases to such a degree that viewer discretion must be advised irrespective of the input or its intent.

## Training

**Training Data**

The model developers used the following dataset for training the model:

- LAION-5B and subsets (details below). The training data is further filtered using LAION's NSFW detector, with a "p_unsafe" score of 0.1 (conservative). For more details, please refer to LAION-5B's [NeurIPS 2022](https://openreview.net/forum?id=M3Y74vmsMcY) paper and reviewer discussions on the topic.

**Training Procedure**

Stable Diffusion v2 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4

- Text prompts are encoded through the OpenCLIP-ViT/H text-encoder.

- The output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet. We also use the so-called _v-objective_, see https://arxiv.org/abs/2202.00512.

We currently provide the following checkpoints:

- `512-base-ema.ckpt`: 550k steps at resolution `256x256` on a subset of [LAION-5B](https://laion.ai/blog/laion-5b/) filtered for explicit pornographic material, using the [LAION-NSFW classifier](https://github.com/LAION-AI/CLIP-based-NSFW-Detector) with `punsafe=0.1` and an [aesthetic score](https://github.com/christophschuhmann/improved-aesthetic-predictor) >= `4.5`.

850k steps at resolution `512x512` on the same dataset with resolution `>= 512x512`.

- `768-v-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for 150k steps using a [v-objective](https://arxiv.org/abs/2202.00512) on the same dataset. Resumed for another 140k steps on a `768x768` subset of our dataset.

- `512-depth-ema.ckpt`: Resumed from `512-base-ema.ckpt` and finetuned for 200k steps. Added an extra input channel to process the (relative) depth prediction produced by [MiDaS](https://github.com/isl-org/MiDaS) (`dpt_hybrid`) which is used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized.

- `512-inpainting-ema.ckpt`: Resumed from `512-base-ema.ckpt` and trained for another 200k steps. Follows the mask-generation strategy presented in [LAMA](https://github.com/saic-mdal/lama) which, in combination with the latent VAE representations of the masked image, are used as an additional conditioning.

The additional input channels of the U-Net which process this extra information were zero-initialized. The same strategy was used to train the [1.5-inpainting checkpoint](https://huggingface.co/runwayml/stable-diffusion-inpainting).

- `x4-upscaling-ema.ckpt`: Trained for 1.25M steps on a 10M subset of LAION containing images `>2048x2048`. The model was trained on crops of size `512x512` and is a text-guided [latent upscaling diffusion model](https://arxiv.org/abs/2112.10752).

In addition to the textual input, it receives a `noise_level` as an input parameter, which can be used to add noise to the low-resolution input according to a [predefined diffusion schedule](configs/stable-diffusion/x4-upscaling.yaml).

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations**: 1

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant

## Evaluation Results

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0,

5.0, 6.0, 7.0, 8.0) and 50 steps DDIM sampling steps show the relative improvements of the checkpoints:

Evaluated using 50 DDIM steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

- **Hardware Type:** A100 PCIe 40GB

- **Hours used:** 200000

- **Cloud Provider:** AWS

- **Compute Region:** US-east

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 15000 kg CO2 eq.

## Citation

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

*This model card was written by: Robin Rombach, Patrick Esser and David Ha and is based on the [Stable Diffusion v1](https://github.com/CompVis/stable-diffusion/blob/main/Stable_Diffusion_v1_Model_Card.md) and [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).*

|

stabilityai/stable-diffusion-2-inpainting

|

stabilityai

| 2023-07-05T16:19:10Z | 277,480 | 527 |

diffusers

|

[

"diffusers",

"safetensors",

"stable-diffusion",

"arxiv:2112.10752",

"arxiv:2202.00512",

"arxiv:1910.09700",

"license:openrail++",

"diffusers:StableDiffusionInpaintPipeline",

"region:us"

] |

image-to-image

| 2022-11-23T17:41:55Z |

---

license: openrail++

tags:

- stable-diffusion

inference: false

---

# Stable Diffusion v2 Model Card

This model card focuses on the model associated with the Stable Diffusion v2, available [here](https://github.com/Stability-AI/stablediffusion).

This `stable-diffusion-2-inpainting` model is resumed from [stable-diffusion-2-base](https://huggingface.co/stabilityai/stable-diffusion-2-base) (`512-base-ema.ckpt`) and trained for another 200k steps. Follows the mask-generation strategy presented in [LAMA](https://github.com/saic-mdal/lama) which, in combination with the latent VAE representations of the masked image, are used as an additional conditioning.

- Use it with the [`stablediffusion`](https://github.com/Stability-AI/stablediffusion) repository: download the `512-inpainting-ema.ckpt` [here](https://huggingface.co/stabilityai/stable-diffusion-2-inpainting/resolve/main/512-inpainting-ema.ckpt).

- Use it with 🧨 [`diffusers`](https://huggingface.co/stabilityai/stable-diffusion-2-inpainting#examples)

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [CreativeML Open RAIL++-M License](https://huggingface.co/stabilityai/stable-diffusion-2/blob/main/LICENSE-MODEL)

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([OpenCLIP-ViT/H](https://github.com/mlfoundations/open_clip)).

- **Resources for more information:** [GitHub Repository](https://github.com/Stability-AI/).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,