| modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (list) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |
|---|---|---|---|---|---|---|---|---|---|

rach405/test_trainer6

|

rach405

| 2022-11-23T22:42:58Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-23T18:19:23Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: test_trainer6

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# test_trainer6

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.0525

- Accuracy: 0.3229

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 2.0672 | 1.0 | 88 | 2.0811 | 0.3229 |
| 1.9813 | 2.0 | 176 | 2.0715 | 0.3229 |
| 2.1212 | 3.0 | 264 | 2.0525 | 0.3229 |
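The `linear` scheduler decays the learning rate from 0.0001 to zero over training. The sketch below illustrates that rule. It assumes no warmup (the card lists none) and uses the 264 total steps (3 epochs × 88 steps) shown in the results table. It mirrors the shape of the 🤗 Transformers linear schedule but is not that implementation.

```python
def linear_lr(step, base_lr=1e-4, total_steps=264, warmup_steps=0):
    """Learning rate at `step` under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr during warmup (inactive here: warmup_steps=0).
        return base_lr * (step / max(1, warmup_steps))
    # Decay linearly from base_lr at the end of warmup to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * (remaining / max(1, total_steps - warmup_steps))

# Learning rate at the start of training and at the end of each epoch.
for step in (0, 88, 176, 264):
    print(step, f"{linear_lr(step):.2e}")
```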

### Framework versions

- Transformers 4.17.0

- Pytorch 1.11.0+cpu

- Tokenizers 0.11.6

|

huggingtweets/josephflaherty

|

huggingtweets

| 2022-11-23T22:21:56Z | 113 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-11-23T22:20:04Z |

---

language: en

thumbnail: http://www.huggingtweets.com/josephflaherty/1669242112755/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1529933319919616011/mEzYnY5Z_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Joe Flaherty – Venture Capital Scribe</div>

<div style="text-align: center; font-size: 14px;">@josephflaherty</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Joe Flaherty – Venture Capital Scribe.

| Data | Joe Flaherty – Venture Capital Scribe |
| --- | --- |
| Tweets downloaded | 3247 |
| Retweets | 150 |
| Short tweets | 154 |
| Tweets kept | 2943 |
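The counts in the table are consistent with retweets and short tweets being removed as two non-overlapping groups (3247 − 150 − 154 = 2943). The check below confirms that arithmetic. The function name is illustrative and is not part of huggingtweets.

```python
def tweets_kept(downloaded, retweets, short_tweets):
    """Tweets left after dropping retweets and short tweets, assuming the two groups don't overlap."""
    kept = downloaded - retweets - short_tweets
    if kept < 0:
        raise ValueError("more tweets removed than downloaded")
    return kept

kept = tweets_kept(downloaded=3247, retweets=150, short_tweets=154)
print(kept, f"({kept / 3247:.1%} of downloaded tweets)")
```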

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/h0zhab8z/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @josephflaherty's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2hw29ydt) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2hw29ydt/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python
from transformers import pipeline

generator = pipeline('text-generation',
                     model='huggingtweets/josephflaherty')
generator("My dream is", num_return_sequences=5)
```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*


For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

NobodyX23/Pablobato

|

NobodyX23

| 2022-11-23T22:19:27Z | 0 | 0 | null |

[

"region:us"

] | null | 2022-11-23T16:41:57Z |

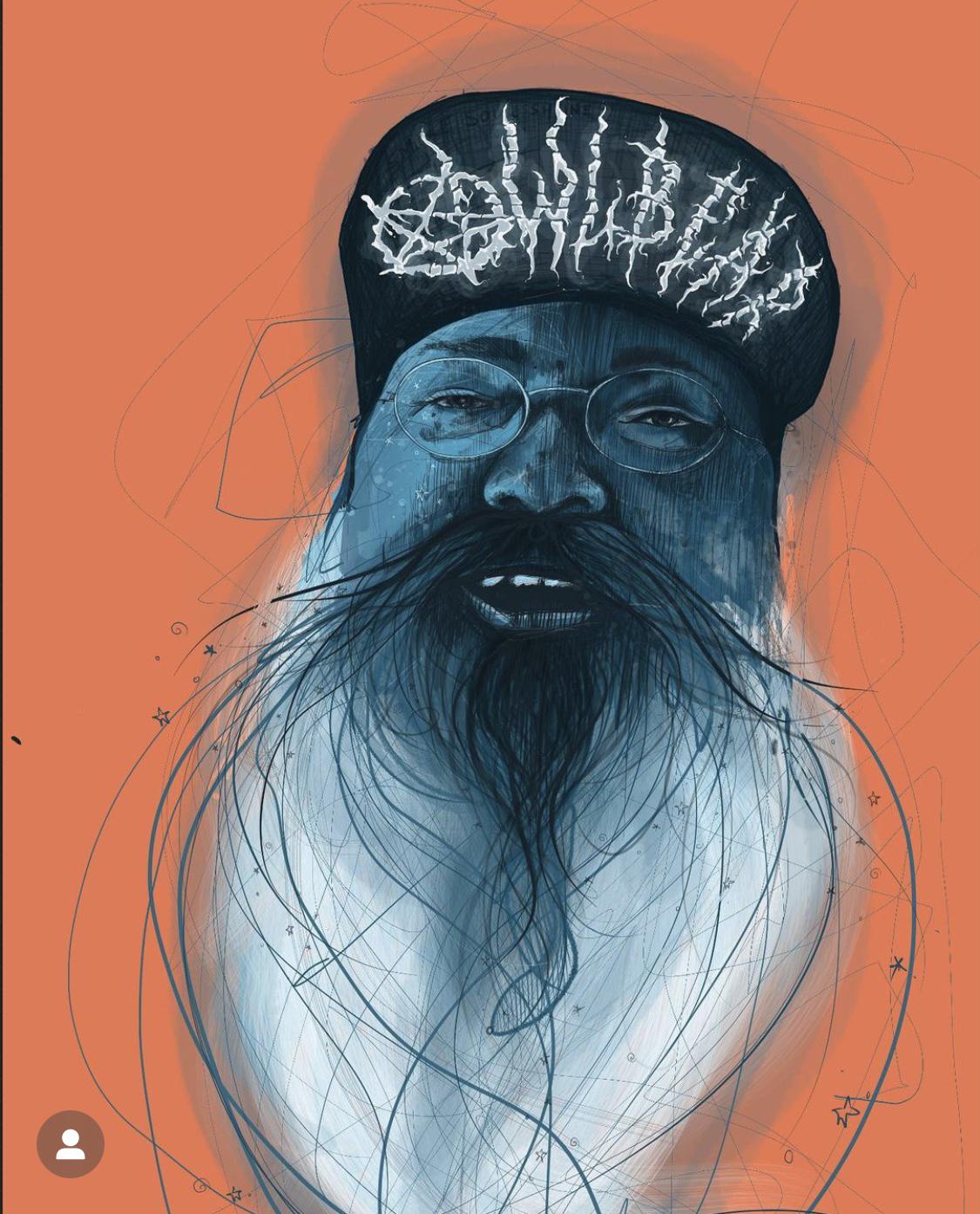

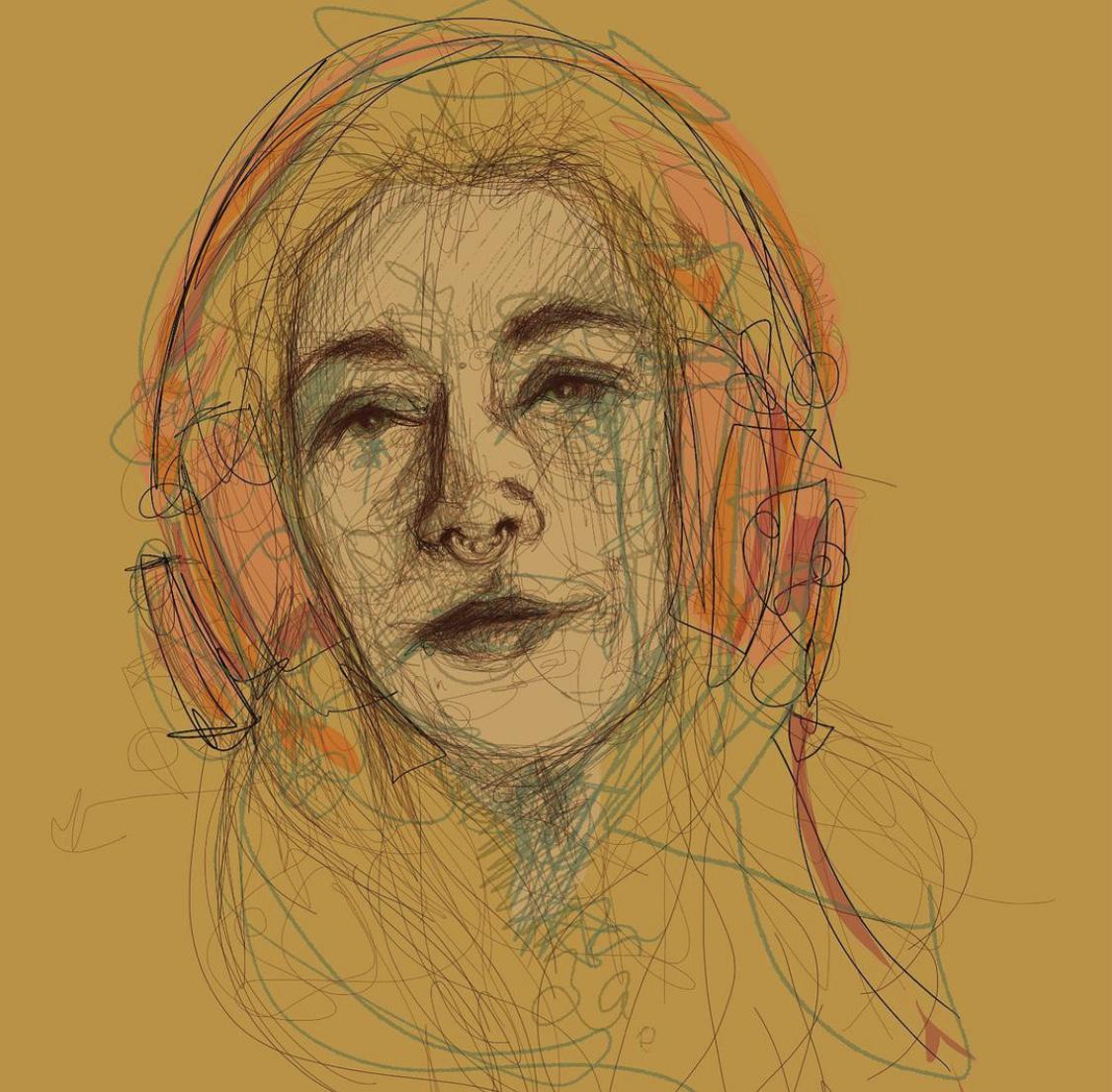

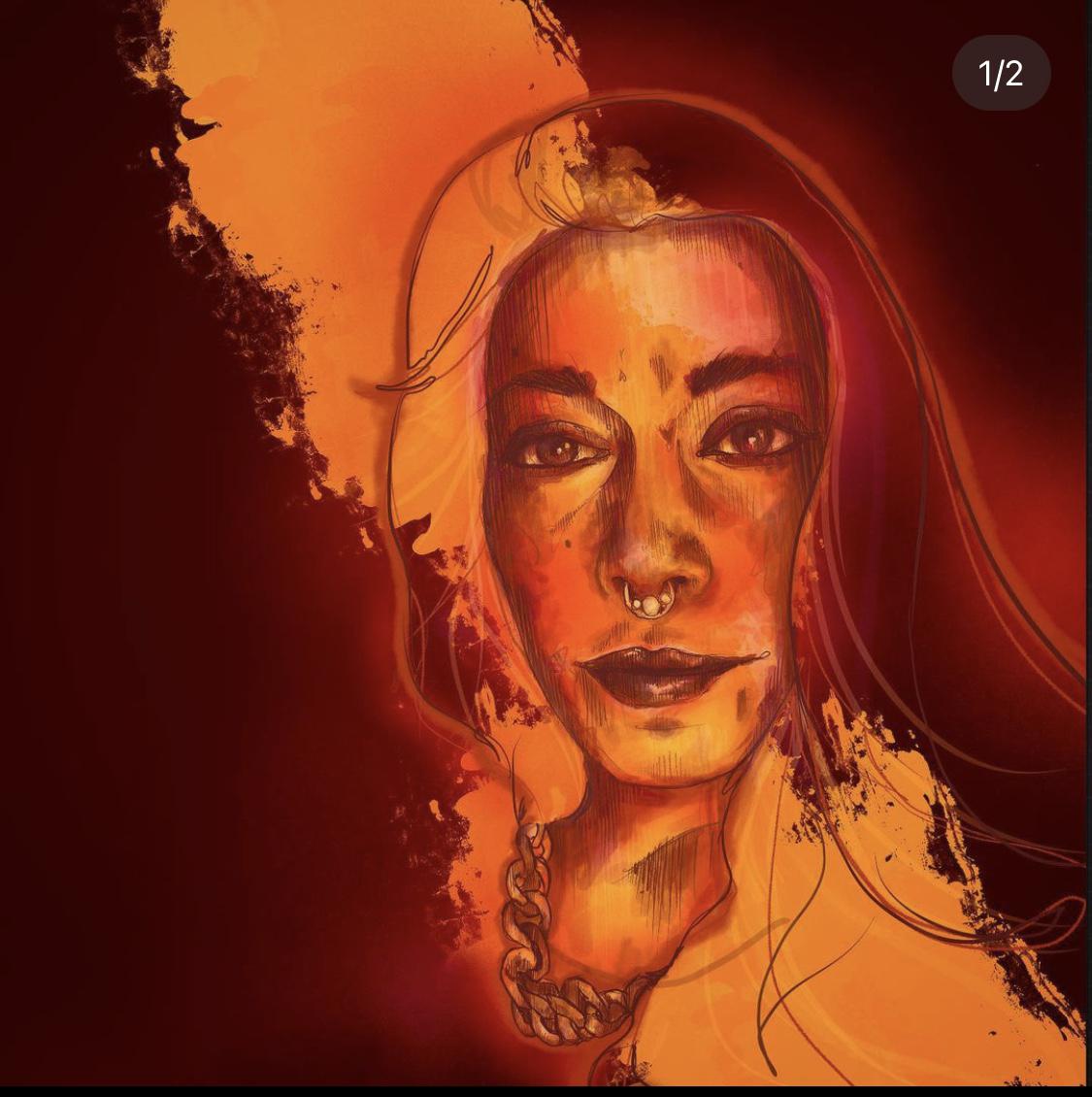

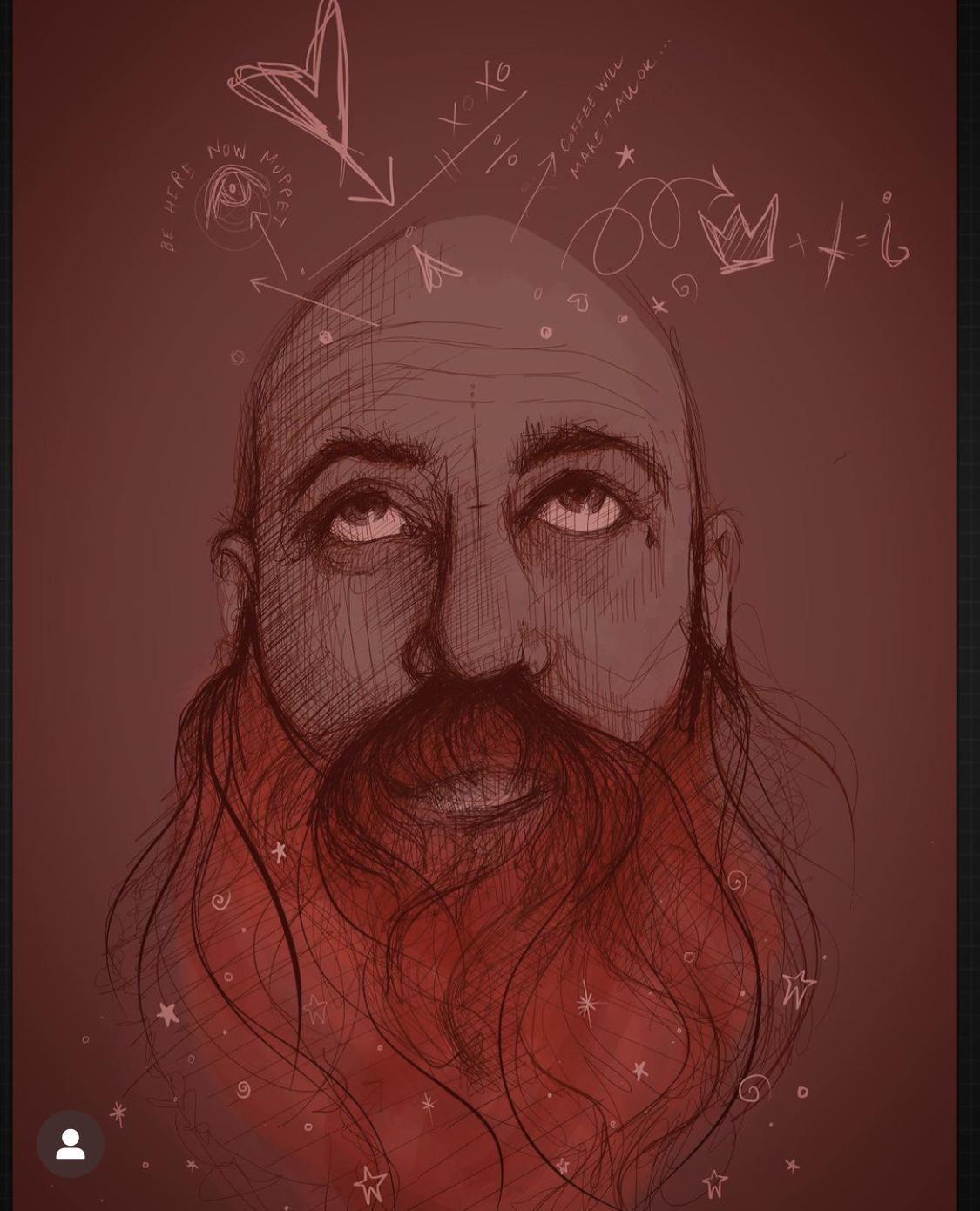

Pablo Lobato style model

---

Text prompt: Pablobato style

---

license: creativeml-openrail-m

---

|

Guizmus/SD_DreamerCommunities_Collection

|

Guizmus

| 2022-11-23T22:17:55Z | 0 | 29 |

EveryDream

|

[

"EveryDream",

"diffusers",

"stable-diffusion",

"text-to-image",

"image-to-image",

"en",

"license:creativeml-openrail-m",

"region:us"

] |

text-to-image

| 2022-11-13T17:51:54Z |

---

language:

- en

license: creativeml-openrail-m

thumbnail: "https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/images/showcase_main.jpg"

tags:

- stable-diffusion

- text-to-image

- image-to-image

- diffusers

library_name: "EveryDream"

inference: false

---

# Introduction

This is a collection of models made from and for the users of the Stable Diffusion Discord server. The server has several categories of channels. The "Dreamers Communities" category covers a range of subjects, such as Anime, 3D, or Architectural, and in each of these channels users post images made with Stable Diffusion. After asking the users, and depending on each channel's activity, I collect a dataset either from new submissions or from the channel's history. I intend to build one model per channel that represents its style, so that users can more easily produce images in the style they like and mix it with other elements.

These models are mainly trained with EveryDream, and the compatible datasets should eventually be merged into a single "Mega Model". Some models, like the Anime one, need to keep a different starting point and may not be merged.

# CharacterChan Style

## Dataset & training

This model is based on the [RunwayML SD 1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5) model with an updated VAE.

The dataset was a collaborative effort of the Stable Diffusion #CharacterChan channel, made of pictures created by the users themselves with their own techniques.

The dataset contains 50 pictures, each repeated 160 times in total over 4 epochs, at a learning rate of 1e-6.

It was trained with EveryDream, using full captions for all training pictures.

The style is invoked with the token **CharacterChan Style**.
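This sketch assumes that "N repeats total each, over E epochs" means each picture is seen N times over the whole run. The card does not state this interpretation explicitly. Under that assumption, the figures quoted for each style in this collection work out to the per-epoch repeats and total image presentations computed below.

```python
# Figures quoted in this card: (pictures, total repeats per picture, epochs).
# Assumption: "repeats total" counts every time a picture is seen across all epochs.
STYLES = {
    "CharacterChan": (50, 160, 4),
    "CreatureChan": (50, 160, 4),
    "3DChan": (120, 500, 10),
    "AnimeChan": (100, 300, 6),
}

def schedule(pictures, total_repeats, epochs):
    """Return (repeats per picture per epoch, total image presentations)."""
    if total_repeats % epochs:
        raise ValueError("total repeats do not split evenly across epochs")
    return total_repeats // epochs, pictures * total_repeats

for name, figures in STYLES.items():
    per_epoch, presentations = schedule(*figures)
    print(f"{name}: {per_epoch} repeats/epoch, {presentations} image presentations")
```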

## Showcase & Downloads v1

[CKPT (2GB)](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/diffusers/CharacterChan/CharacterChanStyle-v1.ckpt)

[CKPT with training optimizers (11GB)](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/ckpt/CharacterChanStyle-v1_with_optimizers.ckpt)

[Diffusers](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/tree/main/diffusers/CharacterChan)

[Dataset](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/datasets/CharacterChanStyle-v1.zip)

# CreatureChan Style

## Dataset & training

This model is based on the [RunwayML SD 1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5) model with an updated VAE.

The dataset was a collaborative effort of the Stable Diffusion #CreatureChan channel, made of pictures created by the users themselves with their own techniques.

The dataset contains 50 pictures, each repeated 160 times in total over 4 epochs, at a learning rate of 1e-6.

It was trained with EveryDream, using full captions for all training pictures.

The style is invoked with the token **CreatureChan Style**.

## Showcase & Downloads v1

[CKPT (2GB)](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/diffusers/CreatureChan/CreatureChanStyle-v1.ckpt)

[CKPT with training optimizers (11GB)](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/ckpt/CreatureChanStyle-v1_with_optimizers.ckpt)

[Diffusers](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/tree/main/diffusers/CreatureChan)

[Dataset](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/datasets/CreatureChanStyle-v1.zip)

# 3DChan Style

## Dataset & training

This model is based on the [RunwayML SD 1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5) model with an updated VAE.

The dataset was a collaborative effort of the Stable Diffusion #3D channel, made of pictures created by the users themselves with their own techniques.

The dataset contains 120 pictures, each repeated 500 times in total over 10 epochs, at a learning rate of 1e-6.

It was trained with EveryDream, using full captions for all training pictures.

The style is invoked with the token **3D Style**.

Other significant tokens: rick roll, fullbody shot, bad cosplay man.

## Showcase & Downloads v1

[CKPT (2GB)](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/diffusers/3DStyle/3DStyle-v1.ckpt)

[CKPT with training optimizers (11GB)](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/ckpt/3DStyle-v1_with_optimizers.ckpt)

[Diffusers](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/tree/main/diffusers/3DStyle)

[Dataset](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/datasets/3DChanStyle-v1.zip)

# AnimeChan Style

## Dataset & training

This model is based on the [Trinart](https://huggingface.co/naclbit/trinart_stable_diffusion_v2) model.

The dataset was a collaborative effort of the Stable Diffusion #anime channel, made of pictures created by the users themselves with their own techniques.

The dataset contains 100 pictures, each repeated 300 times in total over 6 epochs, at a learning rate of 1e-6.

It was trained with EveryDream, using full captions for all training pictures.

The style is invoked with the token **AnimeChan Style**.

## Showcase & Downloads v2

[CKPT (2GB)](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/diffusers/AnimeStyle/AnimeChanStyle-v2.ckpt)

[Diffusers](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/tree/main/diffusers/AnimeStyle)

[Dataset](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/datasets/AnimeChanStyle-v2.zip)

## Showcase & Downloads v1

[CKPT (2GB)](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/ckpt/AnimeChanStyle-v1.ckpt)

[Dataset](https://huggingface.co/Guizmus/SD_DreamerCommunities_Collection/resolve/main/datasets/AnimeChanStyle-v1.zip)

# License

These models are open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce or share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may redistribute the weights and use the model commercially and/or as a service. If you do, please be aware that you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M with all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

|

TUMxudashuai/DQN-LunarLander-v2

|

TUMxudashuai

| 2022-11-23T21:02:30Z | 5 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-11-23T21:01:50Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: -83.37 +/- 29.36

name: mean_reward

verified: false

---

# **DQN** Agent playing **LunarLander-v2**

This is a trained model of a **DQN** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).
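The `mean_reward` entry in the model index (-83.37 +/- 29.36) most likely follows the usual convention of reporting the mean and standard deviation of per-episode returns. stable-baselines3's `evaluate_policy` returns these as `np.mean` and `np.std` of the episode rewards. The pure-Python sketch below computes that summary. The returns in it are made up for illustration and are not this agent's evaluation episodes.

```python
import statistics

def summarize_returns(episode_returns):
    """Mean and population standard deviation of per-episode returns."""
    mean = statistics.fmean(episode_returns)
    std = statistics.pstdev(episode_returns)  # ddof=0, matching numpy's np.std default
    return mean, std

def format_reward(mean, std):
    """Format the pair the way the model index does: '<mean> +/- <std>'."""
    return f"{mean:.2f} +/- {std:.2f}"

# Hypothetical returns from four evaluation episodes (not real data for this agent).
returns = [-120.5, -60.0, -95.25, -58.0]
print(format_reward(*summarize_returns(returns)))
```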

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

SherlockHolmes/ddpm-butterflies-128

|

SherlockHolmes

| 2022-11-23T21:02:17Z | 3 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/smithsonian_butterflies_subset",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-11-23T19:48:55Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

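```python
from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained("SherlockHolmes/ddpm-butterflies-128")
image = pipeline().images[0]  # sample one image; returned as a PIL image
```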

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None


- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/SherlockHolmes/ddpm-butterflies-128/tensorboard?#scalars)

|

tomekkorbak/agitated_jones

|

tomekkorbak

| 2022-11-23T19:45:02Z | 0 | 0 | null |

[

"generated_from_trainer",

"en",

"dataset:tomekkorbak/detoxify-pile-chunk3-0-50000",

"dataset:tomekkorbak/detoxify-pile-chunk3-50000-100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-100000-150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-150000-200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-200000-250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-250000-300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-300000-350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-350000-400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-400000-450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-450000-500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-500000-550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-550000-600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-600000-650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-650000-700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-700000-750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-750000-800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-800000-850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-850000-900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-900000-950000",

"dataset:tomekkorbak/detoxify-pile-chunk3-950000-1000000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1000000-1050000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1050000-1100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1100000-1150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1150000-1200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1200000-1250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1250000-1300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1300000-1350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1350000-1400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1400000-1450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1450000-1500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1500000-1550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1550000-1600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1600000-1650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1650000-1700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1700000-1750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1750000-1800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1800000-1850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1850000-1900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1900000-1950000",

"license:mit",

"region:us"

] | null | 2022-11-23T19:37:18Z |

---

language:

- en

license: mit

tags:

- generated_from_trainer

datasets:

- tomekkorbak/detoxify-pile-chunk3-0-50000

- tomekkorbak/detoxify-pile-chunk3-50000-100000

- tomekkorbak/detoxify-pile-chunk3-100000-150000

- tomekkorbak/detoxify-pile-chunk3-150000-200000

- tomekkorbak/detoxify-pile-chunk3-200000-250000

- tomekkorbak/detoxify-pile-chunk3-250000-300000

- tomekkorbak/detoxify-pile-chunk3-300000-350000

- tomekkorbak/detoxify-pile-chunk3-350000-400000

- tomekkorbak/detoxify-pile-chunk3-400000-450000

- tomekkorbak/detoxify-pile-chunk3-450000-500000

- tomekkorbak/detoxify-pile-chunk3-500000-550000

- tomekkorbak/detoxify-pile-chunk3-550000-600000

- tomekkorbak/detoxify-pile-chunk3-600000-650000

- tomekkorbak/detoxify-pile-chunk3-650000-700000

- tomekkorbak/detoxify-pile-chunk3-700000-750000

- tomekkorbak/detoxify-pile-chunk3-750000-800000

- tomekkorbak/detoxify-pile-chunk3-800000-850000

- tomekkorbak/detoxify-pile-chunk3-850000-900000

- tomekkorbak/detoxify-pile-chunk3-900000-950000

- tomekkorbak/detoxify-pile-chunk3-950000-1000000

- tomekkorbak/detoxify-pile-chunk3-1000000-1050000

- tomekkorbak/detoxify-pile-chunk3-1050000-1100000

- tomekkorbak/detoxify-pile-chunk3-1100000-1150000

- tomekkorbak/detoxify-pile-chunk3-1150000-1200000

- tomekkorbak/detoxify-pile-chunk3-1200000-1250000

- tomekkorbak/detoxify-pile-chunk3-1250000-1300000

- tomekkorbak/detoxify-pile-chunk3-1300000-1350000

- tomekkorbak/detoxify-pile-chunk3-1350000-1400000

- tomekkorbak/detoxify-pile-chunk3-1400000-1450000

- tomekkorbak/detoxify-pile-chunk3-1450000-1500000

- tomekkorbak/detoxify-pile-chunk3-1500000-1550000

- tomekkorbak/detoxify-pile-chunk3-1550000-1600000

- tomekkorbak/detoxify-pile-chunk3-1600000-1650000

- tomekkorbak/detoxify-pile-chunk3-1650000-1700000

- tomekkorbak/detoxify-pile-chunk3-1700000-1750000

- tomekkorbak/detoxify-pile-chunk3-1750000-1800000

- tomekkorbak/detoxify-pile-chunk3-1800000-1850000

- tomekkorbak/detoxify-pile-chunk3-1850000-1900000

- tomekkorbak/detoxify-pile-chunk3-1900000-1950000

model-index:

- name: agitated_jones

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# agitated_jones

This model was trained from scratch on 39 datasets, tomekkorbak/detoxify-pile-chunk3-0-50000 through tomekkorbak/detoxify-pile-chunk3-1900000-1950000. These are consecutive chunks of 50,000 covering the range 0 to 1,950,000; the full list is given in the metadata above.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 64

- total_train_batch_size: 1024

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 3147

- mixed_precision_training: Native AMP
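The step count appears to follow from the token budget in the full config below. That config gives `num_tokens: 3300000000`. The effective batch is 16 × 64 = 1024 sequences. The card does not state the sequence length, so this sketch assumes 1024 tokens (GPT-2's context size). Under those assumptions, 3.3 billion tokens divide into 3147 optimizer steps.

```python
def training_steps(num_tokens, per_device_batch, grad_accum_steps, seq_len=1024, num_devices=1):
    """Optimizer steps needed to consume `num_tokens`, assuming every sequence is `seq_len` tokens long."""
    effective_batch = per_device_batch * grad_accum_steps * num_devices  # sequences per optimizer step
    tokens_per_step = effective_batch * seq_len
    return effective_batch, num_tokens // tokens_per_step

batch, steps = training_steps(num_tokens=3_300_000_000, per_device_batch=16, grad_accum_steps=64)
print(batch, steps)
```

The same assumptions also reproduce the 50,354 steps listed for the `wonderful_engelbart` run further down. That run uses 4 gradient-accumulation steps, for an effective batch of 64.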

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.5.1

- Tokenizers 0.11.6

# Full config

{'dataset': {'datasets': ['tomekkorbak/detoxify-pile-chunk3-0-50000',

'tomekkorbak/detoxify-pile-chunk3-50000-100000',

'tomekkorbak/detoxify-pile-chunk3-100000-150000',

'tomekkorbak/detoxify-pile-chunk3-150000-200000',

'tomekkorbak/detoxify-pile-chunk3-200000-250000',

'tomekkorbak/detoxify-pile-chunk3-250000-300000',

'tomekkorbak/detoxify-pile-chunk3-300000-350000',

'tomekkorbak/detoxify-pile-chunk3-350000-400000',

'tomekkorbak/detoxify-pile-chunk3-400000-450000',

'tomekkorbak/detoxify-pile-chunk3-450000-500000',

'tomekkorbak/detoxify-pile-chunk3-500000-550000',

'tomekkorbak/detoxify-pile-chunk3-550000-600000',

'tomekkorbak/detoxify-pile-chunk3-600000-650000',

'tomekkorbak/detoxify-pile-chunk3-650000-700000',

'tomekkorbak/detoxify-pile-chunk3-700000-750000',

'tomekkorbak/detoxify-pile-chunk3-750000-800000',

'tomekkorbak/detoxify-pile-chunk3-800000-850000',

'tomekkorbak/detoxify-pile-chunk3-850000-900000',

'tomekkorbak/detoxify-pile-chunk3-900000-950000',

'tomekkorbak/detoxify-pile-chunk3-950000-1000000',

'tomekkorbak/detoxify-pile-chunk3-1000000-1050000',

'tomekkorbak/detoxify-pile-chunk3-1050000-1100000',

'tomekkorbak/detoxify-pile-chunk3-1100000-1150000',

'tomekkorbak/detoxify-pile-chunk3-1150000-1200000',

'tomekkorbak/detoxify-pile-chunk3-1200000-1250000',

'tomekkorbak/detoxify-pile-chunk3-1250000-1300000',

'tomekkorbak/detoxify-pile-chunk3-1300000-1350000',

'tomekkorbak/detoxify-pile-chunk3-1350000-1400000',

'tomekkorbak/detoxify-pile-chunk3-1400000-1450000',

'tomekkorbak/detoxify-pile-chunk3-1450000-1500000',

'tomekkorbak/detoxify-pile-chunk3-1500000-1550000',

'tomekkorbak/detoxify-pile-chunk3-1550000-1600000',

'tomekkorbak/detoxify-pile-chunk3-1600000-1650000',

'tomekkorbak/detoxify-pile-chunk3-1650000-1700000',

'tomekkorbak/detoxify-pile-chunk3-1700000-1750000',

'tomekkorbak/detoxify-pile-chunk3-1750000-1800000',

'tomekkorbak/detoxify-pile-chunk3-1800000-1850000',

'tomekkorbak/detoxify-pile-chunk3-1850000-1900000',

'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'],

'is_split_by_sentences': True},

'generation': {'force_call_on': [25354],

'metrics_configs': [{}, {'n': 1}, {'n': 2}, {'n': 5}],

'scenario_configs': [{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_samples': 2048},

{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'challenging_rtp',

'num_samples': 2048,

'prompts_path': 'resources/challenging_rtp.jsonl'}],

'scorer_config': {'device': 'cuda:0'}},

'kl_gpt3_callback': {'force_call_on': [25354],

'max_tokens': 64,

'num_samples': 4096},

'model': {'from_scratch': True,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'model_kwargs': {'value_head_config': {'is_detached': False}},

'path_or_name': 'gpt2'},

'objective': {'alpha': 1, 'beta': 10, 'name': 'AWR'},

'tokenizer': {'path_or_name': 'gpt2'},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 1024,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'agitated_jones',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.0005,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000,

'output_dir': 'training_output104340',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25354,

'save_strategy': 'steps',

'seed': 42,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}
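Both generation scenarios in the config sample with `temperature: 0.7`, `top_p: 0.9` and `top_k: 0`. In 🤗 Transformers `generate`, `top_k=0` turns top-k filtering off, so only temperature scaling and nucleus (top-p) filtering apply. The pure-Python sketch below shows that sampling rule for a single step, for illustration only. Its tie-breaking when the cumulative mass lands exactly on `top_p` may differ from the Transformers implementation.

```python
import math
import random

def nucleus_filter(logits, temperature=0.7, top_p=0.9):
    """Temperature-scale `logits`, then keep the smallest high-probability set whose mass reaches `top_p`.

    Returns a dict mapping kept token indices to renormalized probabilities.
    """
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # subtract the max for numerical stability
    total = sum(weights)
    probs = [w / total for w in weights]
    kept, cumulative = [], 0.0
    for i in sorted(range(len(probs)), key=probs.__getitem__, reverse=True):
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}

def sample_token(logits, rng, temperature=0.7, top_p=0.9):
    """Draw one token index from the filtered distribution."""
    dist = nucleus_filter(logits, temperature, top_p)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]

# Toy logits for a 4-token vocabulary (illustrative values only).
rng = random.Random(0)
print(nucleus_filter([2.0, 1.0, 0.1, -1.0]))
print(sample_token([2.0, 1.0, 0.1, -1.0], rng))
```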

# Wandb URL:

https://wandb.ai/tomekkorbak/apo/runs/3t7xpujc

|

tomekkorbak/wonderful_engelbart

|

tomekkorbak

| 2022-11-23T19:38:30Z | 0 | 0 | null |

[

"generated_from_trainer",

"en",

"dataset:tomekkorbak/detoxify-pile-chunk3-0-50000",

"dataset:tomekkorbak/detoxify-pile-chunk3-50000-100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-100000-150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-150000-200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-200000-250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-250000-300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-300000-350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-350000-400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-400000-450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-450000-500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-500000-550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-550000-600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-600000-650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-650000-700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-700000-750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-750000-800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-800000-850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-850000-900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-900000-950000",

"dataset:tomekkorbak/detoxify-pile-chunk3-950000-1000000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1000000-1050000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1050000-1100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1100000-1150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1150000-1200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1200000-1250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1250000-1300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1300000-1350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1350000-1400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1400000-1450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1450000-1500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1500000-1550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1550000-1600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1600000-1650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1650000-1700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1700000-1750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1750000-1800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1800000-1850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1850000-1900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1900000-1950000",

"license:mit",

"region:us"

] | null | 2022-11-23T19:34:25Z |

---

language:

- en

license: mit

tags:

- generated_from_trainer

datasets:

- tomekkorbak/detoxify-pile-chunk3-0-50000

- tomekkorbak/detoxify-pile-chunk3-50000-100000

- tomekkorbak/detoxify-pile-chunk3-100000-150000

- tomekkorbak/detoxify-pile-chunk3-150000-200000

- tomekkorbak/detoxify-pile-chunk3-200000-250000

- tomekkorbak/detoxify-pile-chunk3-250000-300000

- tomekkorbak/detoxify-pile-chunk3-300000-350000

- tomekkorbak/detoxify-pile-chunk3-350000-400000

- tomekkorbak/detoxify-pile-chunk3-400000-450000

- tomekkorbak/detoxify-pile-chunk3-450000-500000

- tomekkorbak/detoxify-pile-chunk3-500000-550000

- tomekkorbak/detoxify-pile-chunk3-550000-600000

- tomekkorbak/detoxify-pile-chunk3-600000-650000

- tomekkorbak/detoxify-pile-chunk3-650000-700000

- tomekkorbak/detoxify-pile-chunk3-700000-750000

- tomekkorbak/detoxify-pile-chunk3-750000-800000

- tomekkorbak/detoxify-pile-chunk3-800000-850000

- tomekkorbak/detoxify-pile-chunk3-850000-900000

- tomekkorbak/detoxify-pile-chunk3-900000-950000

- tomekkorbak/detoxify-pile-chunk3-950000-1000000

- tomekkorbak/detoxify-pile-chunk3-1000000-1050000

- tomekkorbak/detoxify-pile-chunk3-1050000-1100000

- tomekkorbak/detoxify-pile-chunk3-1100000-1150000

- tomekkorbak/detoxify-pile-chunk3-1150000-1200000

- tomekkorbak/detoxify-pile-chunk3-1200000-1250000

- tomekkorbak/detoxify-pile-chunk3-1250000-1300000

- tomekkorbak/detoxify-pile-chunk3-1300000-1350000

- tomekkorbak/detoxify-pile-chunk3-1350000-1400000

- tomekkorbak/detoxify-pile-chunk3-1400000-1450000

- tomekkorbak/detoxify-pile-chunk3-1450000-1500000

- tomekkorbak/detoxify-pile-chunk3-1500000-1550000

- tomekkorbak/detoxify-pile-chunk3-1550000-1600000

- tomekkorbak/detoxify-pile-chunk3-1600000-1650000

- tomekkorbak/detoxify-pile-chunk3-1650000-1700000

- tomekkorbak/detoxify-pile-chunk3-1700000-1750000

- tomekkorbak/detoxify-pile-chunk3-1750000-1800000

- tomekkorbak/detoxify-pile-chunk3-1800000-1850000

- tomekkorbak/detoxify-pile-chunk3-1850000-1900000

- tomekkorbak/detoxify-pile-chunk3-1900000-1950000

model-index:

- name: wonderful_engelbart

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wonderful_engelbart

This model was trained from scratch on 39 datasets, tomekkorbak/detoxify-pile-chunk3-0-50000 through tomekkorbak/detoxify-pile-chunk3-1900000-1950000. These are consecutive chunks of 50,000 covering the range 0 to 1,950,000; the full list is given in the metadata above.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 50354

- mixed_precision_training: Native AMP
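The step count appears consistent with the token budget in the full config below (`num_tokens: 3300000000`). The sketch below checks this under two assumptions the card does not state: training ran on a single device, and every sequence is 1024 tokens long (GPT-2's default context). It also derives the warmup length from `lr_scheduler_warmup_ratio`, assuming the ratio is rounded up to whole steps. It is a reconstruction of the arithmetic, not the original training code.

```python
import math

# Values from the card; NUM_DEVICES and SEQ_LEN are assumptions.
PER_DEVICE_BATCH = 16
GRAD_ACCUM_STEPS = 4
NUM_DEVICES = 1            # assumption: not stated in the card
SEQ_LEN = 1024             # assumption: GPT-2 default context length
NUM_TOKENS = 3_300_000_000
WARMUP_RATIO = 0.01


def effective_batch_size(per_device, accum, devices):
    """Sequences consumed per optimizer step."""
    return per_device * accum * devices


def training_steps(num_tokens, batch, seq_len):
    """Optimizer steps needed to consume the token budget (truncated)."""
    return num_tokens // (batch * seq_len)


def warmup_steps(total_steps, ratio):
    """Warmup length when only a ratio is given, rounded up to a whole step."""
    return math.ceil(total_steps * ratio)


batch = effective_batch_size(PER_DEVICE_BATCH, GRAD_ACCUM_STEPS, NUM_DEVICES)
steps = training_steps(NUM_TOKENS, batch, SEQ_LEN)
print(batch, steps, warmup_steps(steps, WARMUP_RATIO))  # 64 50354 504
```

Under these assumptions the derived 64 and 50354 match `total_train_batch_size` and `training_steps` above.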

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.5.1

- Tokenizers 0.11.6

# Full config

{'dataset': {'conditional_training_config': {'aligned_prefix': '<|aligned|>',

'drop_token_fraction': 0.01,

'misaligned_prefix': '<|misaligned|>',

'threshold': 0.00056},

'datasets': ['tomekkorbak/detoxify-pile-chunk3-0-50000',

'tomekkorbak/detoxify-pile-chunk3-50000-100000',

'tomekkorbak/detoxify-pile-chunk3-100000-150000',

'tomekkorbak/detoxify-pile-chunk3-150000-200000',

'tomekkorbak/detoxify-pile-chunk3-200000-250000',

'tomekkorbak/detoxify-pile-chunk3-250000-300000',

'tomekkorbak/detoxify-pile-chunk3-300000-350000',

'tomekkorbak/detoxify-pile-chunk3-350000-400000',

'tomekkorbak/detoxify-pile-chunk3-400000-450000',

'tomekkorbak/detoxify-pile-chunk3-450000-500000',

'tomekkorbak/detoxify-pile-chunk3-500000-550000',

'tomekkorbak/detoxify-pile-chunk3-550000-600000',

'tomekkorbak/detoxify-pile-chunk3-600000-650000',

'tomekkorbak/detoxify-pile-chunk3-650000-700000',

'tomekkorbak/detoxify-pile-chunk3-700000-750000',

'tomekkorbak/detoxify-pile-chunk3-750000-800000',

'tomekkorbak/detoxify-pile-chunk3-800000-850000',

'tomekkorbak/detoxify-pile-chunk3-850000-900000',

'tomekkorbak/detoxify-pile-chunk3-900000-950000',

'tomekkorbak/detoxify-pile-chunk3-950000-1000000',

'tomekkorbak/detoxify-pile-chunk3-1000000-1050000',

'tomekkorbak/detoxify-pile-chunk3-1050000-1100000',

'tomekkorbak/detoxify-pile-chunk3-1100000-1150000',

'tomekkorbak/detoxify-pile-chunk3-1150000-1200000',

'tomekkorbak/detoxify-pile-chunk3-1200000-1250000',

'tomekkorbak/detoxify-pile-chunk3-1250000-1300000',

'tomekkorbak/detoxify-pile-chunk3-1300000-1350000',

'tomekkorbak/detoxify-pile-chunk3-1350000-1400000',

'tomekkorbak/detoxify-pile-chunk3-1400000-1450000',

'tomekkorbak/detoxify-pile-chunk3-1450000-1500000',

'tomekkorbak/detoxify-pile-chunk3-1500000-1550000',

'tomekkorbak/detoxify-pile-chunk3-1550000-1600000',

'tomekkorbak/detoxify-pile-chunk3-1600000-1650000',

'tomekkorbak/detoxify-pile-chunk3-1650000-1700000',

'tomekkorbak/detoxify-pile-chunk3-1700000-1750000',

'tomekkorbak/detoxify-pile-chunk3-1750000-1800000',

'tomekkorbak/detoxify-pile-chunk3-1800000-1850000',

'tomekkorbak/detoxify-pile-chunk3-1850000-1900000',

'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'],

'is_split_by_sentences': True},

'generation': {'force_call_on': [25354],

'metrics_configs': [{}, {'n': 1}, {'n': 2}, {'n': 5}],

'scenario_configs': [{'generate_kwargs': {'bad_words_ids': [[50257],

[50258]],

'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_samples': 2048,

'prefix': '<|aligned|>'},

{'generate_kwargs': {'bad_words_ids': [[50257],

[50258]],

'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'challenging_rtp',

'num_samples': 2048,

'prefix': '<|aligned|>',

'prompt_before_control': True,

'prompts_path': 'resources/challenging_rtp.jsonl'}],

'scorer_config': {'device': 'cuda:0'}},

'kl_gpt3_callback': {'force_call_on': [25354],

'max_tokens': 64,

'num_samples': 4096,

'prefix': '<|aligned|>'},

'model': {'from_scratch': True,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'num_additional_tokens': 2,

'path_or_name': 'gpt2'},

'objective': {'name': 'MLE'},

'tokenizer': {'path_or_name': 'gpt2',

'special_tokens': ['<|aligned|>', '<|misaligned|>']},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 64,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'wonderful_engelbart',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.0005,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000,

'output_dir': 'training_output104340',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25354,

'save_strategy': 'steps',

'seed': 42,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}
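The `conditional_training_config` fields suggest that each sentence (`is_split_by_sentences: True`) is prefixed with a control token chosen by comparing its score with `threshold`, and that the token is occasionally omitted (`drop_token_fraction`). The sketch below is one plausible reading of those fields. The exact rule, including the strict `<` comparison and per-sentence dropping, is an assumption and is not taken from the training code.

```python
import random

# Field values copied from conditional_training_config above; the labeling
# rule itself is an assumed interpretation, not the original implementation.
ALIGNED_PREFIX = "<|aligned|>"
MISALIGNED_PREFIX = "<|misaligned|>"
THRESHOLD = 0.00056
DROP_TOKEN_FRACTION = 0.01


def add_control_tokens(sentences, scores, rng, threshold=THRESHOLD,
                       drop_fraction=DROP_TOKEN_FRACTION):
    """Prefix each sentence with a control token based on its toxicity score.

    Sentences scoring below the threshold get the aligned token and the rest
    get the misaligned token. With probability `drop_fraction`, no token is added.
    """
    out = []
    for text, score in zip(sentences, scores):
        if rng.random() < drop_fraction:
            out.append(text)  # token dropped for this sentence
            continue
        prefix = ALIGNED_PREFIX if score < threshold else MISALIGNED_PREFIX
        out.append(prefix + text)
    return out


rng = random.Random(42)
print(add_control_tokens(["A calm sentence.", "A rude sentence."], [0.0001, 0.9], rng))
```

At generation time, the config conditions on `<|aligned|>` and bans IDs 50257 and 50258 through `bad_words_ids`. These appear to be the two added control tokens (`num_additional_tokens: 2`), because the base GPT-2 vocabulary occupies IDs 0 to 50256.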

# Wandb URL:

https://wandb.ai/tomekkorbak/apo/runs/2fqdlqy2

|

NehalJani/fin_sentiment

|

NehalJani

| 2022-11-23T18:11:11Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-23T18:04:57Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: fin_sentiment

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# fin_sentiment

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unspecified dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 125 | 0.4801 | 0.8006 |
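Two details of this table follow from the hyperparameters. First, 125 steps in one epoch at `train_batch_size: 8` implies a training set of 993 to 1000 examples. This assumes a single device, no gradient accumulation, and that the last partial batch is kept; the card states none of these. Second, `No log` is likely shown because the Trainer's default `logging_steps` of 500 is never reached within 125 steps, so no training loss is recorded. A small sketch of that arithmetic:

```python
import math

BATCH_SIZE = 8
STEPS_PER_EPOCH = 125
DEFAULT_LOGGING_STEPS = 500  # Trainer default; assumed unchanged for this run


def steps_per_epoch(num_examples, batch_size):
    """Batches per epoch when the last partial batch is kept."""
    return math.ceil(num_examples / batch_size)


def possible_dataset_sizes(steps, batch_size):
    """All training-set sizes that yield exactly `steps` batches per epoch."""
    return range((steps - 1) * batch_size + 1, steps * batch_size + 1)


sizes = possible_dataset_sizes(STEPS_PER_EPOCH, BATCH_SIZE)
print(min(sizes), max(sizes))  # 993 1000
# No training-loss entry is logged if training ends before the first logging step.
print(STEPS_PER_EPOCH < DEFAULT_LOGGING_STEPS)  # True
```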

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

|

whatlurks/test

|

whatlurks

| 2022-11-23T17:24:28Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2022-11-23T17:24:28Z |

---

license: creativeml-openrail-m

---

|

monakth/bert-base-multilingual-uncased-sv2

|

monakth

| 2022-11-23T17:03:27Z | 117 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"question-answering",

"generated_from_trainer",

"dataset:squad_v2",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-11-23T17:01:03Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: bert-base-multilingual-uncased-svv

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-multilingual-uncased-svv

This model is a fine-tuned version of [bert-base-multilingual-uncased](https://huggingface.co/bert-base-multilingual-uncased) on the squad_v2 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

jamiehudson/579-STmodel-v1a

|

jamiehudson

| 2022-11-23T16:46:09Z | 1 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"mpnet",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2022-11-23T16:45:56Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model is easy once you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```
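For the semantic-search use case mentioned above, the embeddings returned by `model.encode` are usually compared with cosine similarity. The sketch below ranks a small corpus against a query in plain Python. It uses toy 3-dimensional vectors as stand-ins for the model's 768-dimensional output (for example, after calling `.tolist()` on the encoded arrays). It assumes non-zero vectors and is meant as an illustration, not a production search routine.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_emb, corpus_embs, top_k=2):
    """Return (index, score) pairs for the corpus entries closest to the query."""
    scored = [(i, cosine_similarity(query_emb, e)) for i, e in enumerate(corpus_embs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 3-dimensional vectors standing in for the model's 768-dimensional output.
corpus = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
query = [0.9, 0.1, 0.0]
print(semantic_search(query, corpus))
```

For real workloads, sentence-transformers provides `util.cos_sim` and `util.semantic_search`, which perform the same comparison on tensors.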

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model as follows: pass your input through the transformer model, then apply the appropriate pooling operation on top of the contextualized word embeddings. This model uses mean pooling.

```python
from transformers import AutoTokenizer, AutoModel
import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')
model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the following parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 300 with parameters:

```

{'batch_size': 4, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`
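`CosineSimilarityLoss` trains on sentence pairs labeled with a target similarity. It computes the cosine similarity of the two embeddings in each pair and regresses that value onto the label with mean squared error. The sketch below reproduces this computation in plain Python for a batch of precomputed embedding pairs. It leaves out the encoder and the gradient step and is not the library's implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def cosine_similarity_loss(pairs, labels):
    """Mean squared error between pair cosine similarities and gold scores."""
    errors = [(cosine_similarity(u, v) - y) ** 2 for (u, v), y in zip(pairs, labels)]
    return sum(errors) / len(errors)


# Toy batch of 2 pairs (the card's DataLoader used batch_size 4).
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
labels = [1.0, 0.0]
print(cosine_similarity_loss(pairs, labels))  # 0.0
```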

Parameters of the `fit()` method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": 300,

"warmup_steps": 30,

"weight_decay": 0.01

}

```
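With `"scheduler": "WarmupLinear"`, the learning rate rises linearly from 0 to `lr` over `warmup_steps` and then decays linearly to 0 at the last step, which here is 1 epoch × 300 steps. sentence-transformers implements this scheduler with `transformers.get_linear_schedule_with_warmup`. The sketch below reproduces the multiplier formula in plain Python, with the values above plugged in.

```python
BASE_LR = 2e-05
WARMUP_STEPS = 30
TOTAL_STEPS = 300  # steps_per_epoch * epochs


def lr_multiplier(step, warmup=WARMUP_STEPS, total=TOTAL_STEPS):
    """Linear warmup to 1.0, then linear decay to 0.0 at `total`."""
    if step < warmup:
        return step / max(1, warmup)
    return max(0.0, (total - step) / max(1, total - warmup))


for step in (0, 15, 30, 165, 300):
    print(step, BASE_LR * lr_multiplier(step))
```

The learning rate peaks at step 30 and is halved at step 165, midway through the 270 decay steps.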

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: MPNetModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

tomekkorbak/wizardly_dubinsky

|

tomekkorbak

| 2022-11-23T16:20:10Z | 0 | 0 | null |

[

"generated_from_trainer",

"en",

"dataset:tomekkorbak/detoxify-pile-chunk3-0-50000",

"dataset:tomekkorbak/detoxify-pile-chunk3-50000-100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-100000-150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-150000-200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-200000-250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-250000-300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-300000-350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-350000-400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-400000-450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-450000-500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-500000-550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-550000-600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-600000-650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-650000-700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-700000-750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-750000-800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-800000-850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-850000-900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-900000-950000",

"dataset:tomekkorbak/detoxify-pile-chunk3-950000-1000000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1000000-1050000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1050000-1100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1100000-1150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1150000-1200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1200000-1250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1250000-1300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1300000-1350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1350000-1400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1400000-1450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1450000-1500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1500000-1550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1550000-1600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1600000-1650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1650000-1700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1700000-1750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1750000-1800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1800000-1850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1850000-1900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1900000-1950000",

"license:mit",

"region:us"

] | null | 2022-11-23T16:15:26Z |

---

language:

- en

license: mit

tags:

- generated_from_trainer

datasets:

- tomekkorbak/detoxify-pile-chunk3-0-50000

- tomekkorbak/detoxify-pile-chunk3-50000-100000

- tomekkorbak/detoxify-pile-chunk3-100000-150000

- tomekkorbak/detoxify-pile-chunk3-150000-200000

- tomekkorbak/detoxify-pile-chunk3-200000-250000

- tomekkorbak/detoxify-pile-chunk3-250000-300000

- tomekkorbak/detoxify-pile-chunk3-300000-350000

- tomekkorbak/detoxify-pile-chunk3-350000-400000

- tomekkorbak/detoxify-pile-chunk3-400000-450000

- tomekkorbak/detoxify-pile-chunk3-450000-500000

- tomekkorbak/detoxify-pile-chunk3-500000-550000

- tomekkorbak/detoxify-pile-chunk3-550000-600000

- tomekkorbak/detoxify-pile-chunk3-600000-650000

- tomekkorbak/detoxify-pile-chunk3-650000-700000

- tomekkorbak/detoxify-pile-chunk3-700000-750000

- tomekkorbak/detoxify-pile-chunk3-750000-800000

- tomekkorbak/detoxify-pile-chunk3-800000-850000

- tomekkorbak/detoxify-pile-chunk3-850000-900000

- tomekkorbak/detoxify-pile-chunk3-900000-950000

- tomekkorbak/detoxify-pile-chunk3-950000-1000000

- tomekkorbak/detoxify-pile-chunk3-1000000-1050000

- tomekkorbak/detoxify-pile-chunk3-1050000-1100000

- tomekkorbak/detoxify-pile-chunk3-1100000-1150000

- tomekkorbak/detoxify-pile-chunk3-1150000-1200000

- tomekkorbak/detoxify-pile-chunk3-1200000-1250000

- tomekkorbak/detoxify-pile-chunk3-1250000-1300000

- tomekkorbak/detoxify-pile-chunk3-1300000-1350000

- tomekkorbak/detoxify-pile-chunk3-1350000-1400000

- tomekkorbak/detoxify-pile-chunk3-1400000-1450000

- tomekkorbak/detoxify-pile-chunk3-1450000-1500000

- tomekkorbak/detoxify-pile-chunk3-1500000-1550000

- tomekkorbak/detoxify-pile-chunk3-1550000-1600000

- tomekkorbak/detoxify-pile-chunk3-1600000-1650000

- tomekkorbak/detoxify-pile-chunk3-1650000-1700000

- tomekkorbak/detoxify-pile-chunk3-1700000-1750000

- tomekkorbak/detoxify-pile-chunk3-1750000-1800000

- tomekkorbak/detoxify-pile-chunk3-1800000-1850000

- tomekkorbak/detoxify-pile-chunk3-1850000-1900000

- tomekkorbak/detoxify-pile-chunk3-1900000-1950000

model-index:

- name: wizardly_dubinsky

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wizardly_dubinsky

This model was trained from scratch on the 39 consecutive tomekkorbak/detoxify-pile-chunk3 datasets, from tomekkorbak/detoxify-pile-chunk3-0-50000 through tomekkorbak/detoxify-pile-chunk3-1900000-1950000, each covering a range of 50000 (the full list appears in the config below).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 50354

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.5.1

- Tokenizers 0.11.6

# Full config

{'dataset': {'datasets': ['tomekkorbak/detoxify-pile-chunk3-0-50000',

'tomekkorbak/detoxify-pile-chunk3-50000-100000',

'tomekkorbak/detoxify-pile-chunk3-100000-150000',

'tomekkorbak/detoxify-pile-chunk3-150000-200000',

'tomekkorbak/detoxify-pile-chunk3-200000-250000',

'tomekkorbak/detoxify-pile-chunk3-250000-300000',

'tomekkorbak/detoxify-pile-chunk3-300000-350000',

'tomekkorbak/detoxify-pile-chunk3-350000-400000',

'tomekkorbak/detoxify-pile-chunk3-400000-450000',

'tomekkorbak/detoxify-pile-chunk3-450000-500000',

'tomekkorbak/detoxify-pile-chunk3-500000-550000',

'tomekkorbak/detoxify-pile-chunk3-550000-600000',

'tomekkorbak/detoxify-pile-chunk3-600000-650000',

'tomekkorbak/detoxify-pile-chunk3-650000-700000',

'tomekkorbak/detoxify-pile-chunk3-700000-750000',

'tomekkorbak/detoxify-pile-chunk3-750000-800000',

'tomekkorbak/detoxify-pile-chunk3-800000-850000',

'tomekkorbak/detoxify-pile-chunk3-850000-900000',

'tomekkorbak/detoxify-pile-chunk3-900000-950000',

'tomekkorbak/detoxify-pile-chunk3-950000-1000000',

'tomekkorbak/detoxify-pile-chunk3-1000000-1050000',

'tomekkorbak/detoxify-pile-chunk3-1050000-1100000',

'tomekkorbak/detoxify-pile-chunk3-1100000-1150000',

'tomekkorbak/detoxify-pile-chunk3-1150000-1200000',

'tomekkorbak/detoxify-pile-chunk3-1200000-1250000',

'tomekkorbak/detoxify-pile-chunk3-1250000-1300000',

'tomekkorbak/detoxify-pile-chunk3-1300000-1350000',

'tomekkorbak/detoxify-pile-chunk3-1350000-1400000',

'tomekkorbak/detoxify-pile-chunk3-1400000-1450000',

'tomekkorbak/detoxify-pile-chunk3-1450000-1500000',

'tomekkorbak/detoxify-pile-chunk3-1500000-1550000',

'tomekkorbak/detoxify-pile-chunk3-1550000-1600000',

'tomekkorbak/detoxify-pile-chunk3-1600000-1650000',

'tomekkorbak/detoxify-pile-chunk3-1650000-1700000',

'tomekkorbak/detoxify-pile-chunk3-1700000-1750000',

'tomekkorbak/detoxify-pile-chunk3-1750000-1800000',

'tomekkorbak/detoxify-pile-chunk3-1800000-1850000',

'tomekkorbak/detoxify-pile-chunk3-1850000-1900000',

'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'],

'is_split_by_sentences': True},

'generation': {'force_call_on': [25354],

'metrics_configs': [{}, {'n': 1}, {'n': 2}, {'n': 5}],

'scenario_configs': [{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_samples': 2048},

{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'challenging_rtp',

'num_samples': 2048,

'prompts_path': 'resources/challenging_rtp.jsonl'}],

'scorer_config': {'device': 'cuda:0'}},

'kl_gpt3_callback': {'force_call_on': [25354],

'max_tokens': 64,

'num_samples': 4096},

'model': {'from_scratch': True,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'path_or_name': 'gpt2'},

'objective': {'alpha': 1, 'name': 'Unlikelihood', 'score_threshold': 0.00078},

'tokenizer': {'path_or_name': 'gpt2'},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 64,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'wizardly_dubinsky',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.0005,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000,

'output_dir': 'training_output104340',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25354,

'save_strategy': 'steps',

'seed': 42,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}
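The objective `{'alpha': 1, 'name': 'Unlikelihood', 'score_threshold': 0.00078}` suggests unlikelihood training applied per sentence. Tokens in sentences whose toxicity score is at or below the threshold would be trained with ordinary negative log-likelihood. Tokens in sentences above the threshold would be penalized with −α·log(1 − p), which pushes their probability down. The sketch below assumes this reading: the threshold direction and the token-level averaging are assumptions, and the model's probabilities for the observed tokens are taken as given.

```python
import math

ALPHA = 1.0
SCORE_THRESHOLD = 0.00078


def token_loss(prob, is_undesirable, alpha=ALPHA):
    """NLL for acceptable text; unlikelihood penalty for undesirable text."""
    if is_undesirable:
        # Clamp to avoid log(0) when the model is certain of an undesirable token.
        return -alpha * math.log(max(1.0 - prob, 1e-12))
    return -math.log(prob)


def sentence_losses(token_probs, score, threshold=SCORE_THRESHOLD, alpha=ALPHA):
    """Per-token losses for one sentence, given the model's probability of each
    observed token and the sentence's (assumed) toxicity score."""
    undesirable = score > threshold
    return [token_loss(p, undesirable, alpha) for p in token_probs]


def batch_loss(batch, threshold=SCORE_THRESHOLD, alpha=ALPHA):
    """Mean token loss over a batch of (token_probs, score) pairs."""
    losses = [loss for probs, score in batch
              for loss in sentence_losses(probs, score, threshold, alpha)]
    return sum(losses) / len(losses)


batch = [([0.5, 0.5], 0.0001), ([0.5], 0.2)]
print(batch_loss(batch))
```

With every probability at 0.5, both branches give log 2. Raising the probability of a token in a toxic sentence increases its loss, while raising it in a clean sentence decreases the loss.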

# Wandb URL:

https://wandb.ai/tomekkorbak/apo/runs/2kewh3j9

|

tomekkorbak/cranky_jang

|

tomekkorbak

| 2022-11-23T16:18:30Z | 0 | 0 | null |

[

"generated_from_trainer",

"en",

"dataset:tomekkorbak/detoxify-pile-chunk3-0-50000",

"dataset:tomekkorbak/detoxify-pile-chunk3-50000-100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-100000-150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-150000-200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-200000-250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-250000-300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-300000-350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-350000-400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-400000-450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-450000-500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-500000-550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-550000-600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-600000-650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-650000-700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-700000-750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-750000-800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-800000-850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-850000-900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-900000-950000",

"dataset:tomekkorbak/detoxify-pile-chunk3-950000-1000000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1000000-1050000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1050000-1100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1100000-1150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1150000-1200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1200000-1250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1250000-1300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1300000-1350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1350000-1400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1400000-1450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1450000-1500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1500000-1550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1550000-1600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1600000-1650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1650000-1700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1700000-1750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1750000-1800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1800000-1850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1850000-1900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1900000-1950000",

"license:mit",

"region:us"

] | null | 2022-11-23T16:17:34Z |

---

language:

- en

license: mit

tags:

- generated_from_trainer

datasets:

- tomekkorbak/detoxify-pile-chunk3-0-50000

- tomekkorbak/detoxify-pile-chunk3-50000-100000

- tomekkorbak/detoxify-pile-chunk3-100000-150000

- tomekkorbak/detoxify-pile-chunk3-150000-200000

- tomekkorbak/detoxify-pile-chunk3-200000-250000

- tomekkorbak/detoxify-pile-chunk3-250000-300000

- tomekkorbak/detoxify-pile-chunk3-300000-350000

- tomekkorbak/detoxify-pile-chunk3-350000-400000

- tomekkorbak/detoxify-pile-chunk3-400000-450000

- tomekkorbak/detoxify-pile-chunk3-450000-500000

- tomekkorbak/detoxify-pile-chunk3-500000-550000

- tomekkorbak/detoxify-pile-chunk3-550000-600000

- tomekkorbak/detoxify-pile-chunk3-600000-650000

- tomekkorbak/detoxify-pile-chunk3-650000-700000

- tomekkorbak/detoxify-pile-chunk3-700000-750000

- tomekkorbak/detoxify-pile-chunk3-750000-800000

- tomekkorbak/detoxify-pile-chunk3-800000-850000

- tomekkorbak/detoxify-pile-chunk3-850000-900000

- tomekkorbak/detoxify-pile-chunk3-900000-950000

- tomekkorbak/detoxify-pile-chunk3-950000-1000000

- tomekkorbak/detoxify-pile-chunk3-1000000-1050000

- tomekkorbak/detoxify-pile-chunk3-1050000-1100000

- tomekkorbak/detoxify-pile-chunk3-1100000-1150000

- tomekkorbak/detoxify-pile-chunk3-1150000-1200000

- tomekkorbak/detoxify-pile-chunk3-1200000-1250000

- tomekkorbak/detoxify-pile-chunk3-1250000-1300000

- tomekkorbak/detoxify-pile-chunk3-1300000-1350000

- tomekkorbak/detoxify-pile-chunk3-1350000-1400000

- tomekkorbak/detoxify-pile-chunk3-1400000-1450000

- tomekkorbak/detoxify-pile-chunk3-1450000-1500000

- tomekkorbak/detoxify-pile-chunk3-1500000-1550000

- tomekkorbak/detoxify-pile-chunk3-1550000-1600000

- tomekkorbak/detoxify-pile-chunk3-1600000-1650000

- tomekkorbak/detoxify-pile-chunk3-1650000-1700000

- tomekkorbak/detoxify-pile-chunk3-1700000-1750000

- tomekkorbak/detoxify-pile-chunk3-1750000-1800000

- tomekkorbak/detoxify-pile-chunk3-1800000-1850000

- tomekkorbak/detoxify-pile-chunk3-1850000-1900000

- tomekkorbak/detoxify-pile-chunk3-1900000-1950000

model-index:

- name: cranky_jang

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# cranky_jang

This model was trained from scratch on the 39 consecutive tomekkorbak/detoxify-pile-chunk3 datasets, from tomekkorbak/detoxify-pile-chunk3-0-50000 through tomekkorbak/detoxify-pile-chunk3-1900000-1950000, each covering a range of 50000 (the full list appears in the config below).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 64

- total_train_batch_size: 1024

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 3147

- mixed_precision_training: Native AMP
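`total_train_batch_size: 1024` is `train_batch_size` (16) × `gradient_accumulation_steps` (64), assuming a single device. Gradient accumulation adds up the gradients of 64 micro-batches, each scaled by 1/64, before taking one optimizer step. When the loss is a per-example mean, this equals one step on a 1024-example batch; the Hugging Face Trainer applies the same 1/`gradient_accumulation_steps` scaling to each micro-batch loss. The toy sketch below checks this equivalence for a one-parameter least-squares model. The model and data are invented for illustration.

```python
import random

MICRO_BATCH = 16
ACCUM_STEPS = 64


def grad_mse(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, micro_batch, accum_steps):
    """Sum of micro-batch gradients, each scaled by 1/accum_steps."""
    total = 0.0
    for k in range(accum_steps):
        lo, hi = k * micro_batch, (k + 1) * micro_batch
        total += grad_mse(w, xs[lo:hi], ys[lo:hi]) / accum_steps
    return total


rng = random.Random(0)
n = MICRO_BATCH * ACCUM_STEPS  # 1024 examples per optimizer step
xs = [rng.uniform(-1, 1) for _ in range(n)]
ys = [3.0 * x + rng.gauss(0, 0.1) for x in xs]  # hypothetical data

full = grad_mse(0.5, xs, ys)
accum = accumulated_grad(0.5, xs, ys, MICRO_BATCH, ACCUM_STEPS)
print(n, abs(full - accum) < 1e-9)  # 1024 True
```

The equivalence holds only because every micro-batch has the same size. With uneven micro-batches, the scaled sum would weight examples unequally.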

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.5.1

- Tokenizers 0.11.6

# Full config

{'dataset': {'datasets': ['tomekkorbak/detoxify-pile-chunk3-0-50000',

'tomekkorbak/detoxify-pile-chunk3-50000-100000',

'tomekkorbak/detoxify-pile-chunk3-100000-150000',

'tomekkorbak/detoxify-pile-chunk3-150000-200000',

'tomekkorbak/detoxify-pile-chunk3-200000-250000',

'tomekkorbak/detoxify-pile-chunk3-250000-300000',

'tomekkorbak/detoxify-pile-chunk3-300000-350000',

'tomekkorbak/detoxify-pile-chunk3-350000-400000',

'tomekkorbak/detoxify-pile-chunk3-400000-450000',

'tomekkorbak/detoxify-pile-chunk3-450000-500000',

'tomekkorbak/detoxify-pile-chunk3-500000-550000',

'tomekkorbak/detoxify-pile-chunk3-550000-600000',

'tomekkorbak/detoxify-pile-chunk3-600000-650000',

'tomekkorbak/detoxify-pile-chunk3-650000-700000',

'tomekkorbak/detoxify-pile-chunk3-700000-750000',

'tomekkorbak/detoxify-pile-chunk3-750000-800000',

'tomekkorbak/detoxify-pile-chunk3-800000-850000',

'tomekkorbak/detoxify-pile-chunk3-850000-900000',

'tomekkorbak/detoxify-pile-chunk3-900000-950000',

'tomekkorbak/detoxify-pile-chunk3-950000-1000000',

'tomekkorbak/detoxify-pile-chunk3-1000000-1050000',

'tomekkorbak/detoxify-pile-chunk3-1050000-1100000',

'tomekkorbak/detoxify-pile-chunk3-1100000-1150000',

'tomekkorbak/detoxify-pile-chunk3-1150000-1200000',

'tomekkorbak/detoxify-pile-chunk3-1200000-1250000',

'tomekkorbak/detoxify-pile-chunk3-1250000-1300000',

'tomekkorbak/detoxify-pile-chunk3-1300000-1350000',

'tomekkorbak/detoxify-pile-chunk3-1350000-1400000',

'tomekkorbak/detoxify-pile-chunk3-1400000-1450000',

'tomekkorbak/detoxify-pile-chunk3-1450000-1500000',

'tomekkorbak/detoxify-pile-chunk3-1500000-1550000',

'tomekkorbak/detoxify-pile-chunk3-1550000-1600000',

'tomekkorbak/detoxify-pile-chunk3-1600000-1650000',

'tomekkorbak/detoxify-pile-chunk3-1650000-1700000',

'tomekkorbak/detoxify-pile-chunk3-1700000-1750000',

'tomekkorbak/detoxify-pile-chunk3-1750000-1800000',

'tomekkorbak/detoxify-pile-chunk3-1800000-1850000',

'tomekkorbak/detoxify-pile-chunk3-1850000-1900000',

'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'],

'is_split_by_sentences': True},

'generation': {'force_call_on': [25354],

'metrics_configs': [{}, {'n': 1}, {'n': 2}, {'n': 5}],

'scenario_configs': [{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_samples': 2048},

{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'challenging_rtp',

'num_samples': 2048,

'prompts_path': 'resources/challenging_rtp.jsonl'}],

'scorer_config': {'device': 'cuda:0'}},

'kl_gpt3_callback': {'force_call_on': [25354],

'max_tokens': 64,

'num_samples': 4096},

'model': {'from_scratch': True,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'model_kwargs': {'value_head_config': {'is_detached': False}},

'path_or_name': 'gpt2'},

'objective': {'alpha': 0.5, 'beta': 10, 'name': 'AWR'},

'tokenizer': {'path_or_name': 'gpt2'},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 1024,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'cranky_jang',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.001,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000,

'output_dir': 'training_output104340',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25354,

'save_strategy': 'steps',

'seed': 42,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}
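Both generation scenarios in the config (`unconditional` and `challenging_rtp`) use the same sampling settings: `do_sample=True`, `temperature=0.7`, `top_k=0` and `top_p=0.9`. In Transformers `generate`, `top_k=0` disables top-k filtering, so only temperature scaling and nucleus (top-p) filtering shape the next-token distribution. Below is a minimal pure-Python sketch of that filtering for a single step. It illustrates the technique and is not the library's own code. The function names are hypothetical.

```python
import math
import random


def top_p_filter(logits, temperature=0.7, top_p=0.9):
    """Return {token_id: prob} over the smallest set of most likely tokens whose
    cumulative probability (after temperature scaling) reaches top_p."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]  # subtract max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep tokens in descending probability until the nucleus mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}  # renormalize over the nucleus


def sample_next(logits, rng, temperature=0.7, top_p=0.9):
    """Draw one token id from the temperature-scaled, top-p-filtered distribution."""
    dist = top_p_filter(logits, temperature, top_p)
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


logits = [2.0, 1.0, 0.5, -1.0]
print(sorted(top_p_filter(logits)))                   # [0, 1]
print(sample_next(logits, random.Random(42)) in (0, 1))  # True
```

With these toy logits, tokens 0 and 1 together carry about 90.4% of the temperature-scaled probability mass, so the two least likely tokens are never sampled.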

# Wandb URL:

https://wandb.ai/tomekkorbak/apo/runs/37cxyfb2

|

tomekkorbak/ecstatic_hoover

|

tomekkorbak

| 2022-11-23T16:14:21Z | 0 | 0 | null |

[

"generated_from_trainer",

"en",

"dataset:tomekkorbak/detoxify-pile-chunk3-0-50000",

"dataset:tomekkorbak/detoxify-pile-chunk3-50000-100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-100000-150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-150000-200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-200000-250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-250000-300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-300000-350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-350000-400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-400000-450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-450000-500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-500000-550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-550000-600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-600000-650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-650000-700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-700000-750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-750000-800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-800000-850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-850000-900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-900000-950000",

"dataset:tomekkorbak/detoxify-pile-chunk3-950000-1000000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1000000-1050000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1050000-1100000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1100000-1150000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1150000-1200000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1200000-1250000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1250000-1300000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1300000-1350000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1350000-1400000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1400000-1450000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1450000-1500000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1500000-1550000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1550000-1600000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1600000-1650000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1650000-1700000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1700000-1750000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1750000-1800000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1800000-1850000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1850000-1900000",

"dataset:tomekkorbak/detoxify-pile-chunk3-1900000-1950000",

"license:mit",

"region:us"

] | null | 2022-11-23T16:13:50Z |

---

language:

- en

license: mit

tags:

- generated_from_trainer

datasets:

- tomekkorbak/detoxify-pile-chunk3-0-50000

- tomekkorbak/detoxify-pile-chunk3-50000-100000

- tomekkorbak/detoxify-pile-chunk3-100000-150000

- tomekkorbak/detoxify-pile-chunk3-150000-200000

- tomekkorbak/detoxify-pile-chunk3-200000-250000

- tomekkorbak/detoxify-pile-chunk3-250000-300000

- tomekkorbak/detoxify-pile-chunk3-300000-350000

- tomekkorbak/detoxify-pile-chunk3-350000-400000

- tomekkorbak/detoxify-pile-chunk3-400000-450000

- tomekkorbak/detoxify-pile-chunk3-450000-500000

- tomekkorbak/detoxify-pile-chunk3-500000-550000

- tomekkorbak/detoxify-pile-chunk3-550000-600000

- tomekkorbak/detoxify-pile-chunk3-600000-650000

- tomekkorbak/detoxify-pile-chunk3-650000-700000

- tomekkorbak/detoxify-pile-chunk3-700000-750000

- tomekkorbak/detoxify-pile-chunk3-750000-800000