| modelId (string, 5 to 139 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-09-11 06:30:11) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 555 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-09-11 06:29:58) | card (string, 11 to 1.01M chars) |

|---|---|---|---|---|---|---|---|---|---|

gaochangkuan/model_dir

|

gaochangkuan

| 2021-05-21T16:10:50Z | 10 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

## Generating Chinese poetry by topic.

```python
import torch
from transformers import AutoModelForCausalLM, BertTokenizer

# Generation settings
prompt = "田园躬耕"         # topic ("farming in the countryside"); "<s>" and "<sep>" are added below
length = 84                # maximum total length in tokens
stop_token = "</s>"
temperature = 1.2
repetition_penalty = 1.3
k = 30                     # top-k sampling
p = 0.95                   # top-p (nucleus) sampling
seed = 2020
no_cuda = False

device = "cuda" if torch.cuda.is_available() and not no_cuda else "cpu"
torch.manual_seed(seed)

tokenizer = BertTokenizer.from_pretrained("gaochangkuan/model_dir")
model = AutoModelForCausalLM.from_pretrained("gaochangkuan/model_dir").to(device)

prompt_text = prompt if prompt else input("Model prompt >>> ")
encoded_prompt = tokenizer.encode(
    "<s>" + prompt_text + "<sep>",
    add_special_tokens=False,
    return_tensors="pt",
).to(device)

output_sequences = model.generate(
    input_ids=encoded_prompt,
    max_length=length,
    min_length=10,
    do_sample=True,
    early_stopping=True,
    num_beams=10,
    temperature=temperature,
    top_k=k,
    top_p=p,
    repetition_penalty=repetition_penalty,
    bos_token_id=tokenizer.bos_token_id,
    pad_token_id=tokenizer.pad_token_id,
    eos_token_id=tokenizer.eos_token_id,
    length_penalty=1.2,
    no_repeat_ngram_size=2,
    num_return_sequences=1,
)

text = tokenizer.decode(output_sequences[0].tolist())
# Cut the text at the stop token only if it actually occurs
if stop_token and stop_token in text:
    text = text[: text.find(stop_token)]
# BertTokenizer separates Chinese characters with spaces; remove them and leftover markers
print(text.replace(" ", "").replace("<pad>", "").replace("<s>", ""))
```

|

gagan3012/project-code-py-small

|

gagan3012

| 2021-05-21T16:06:24Z | 11 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

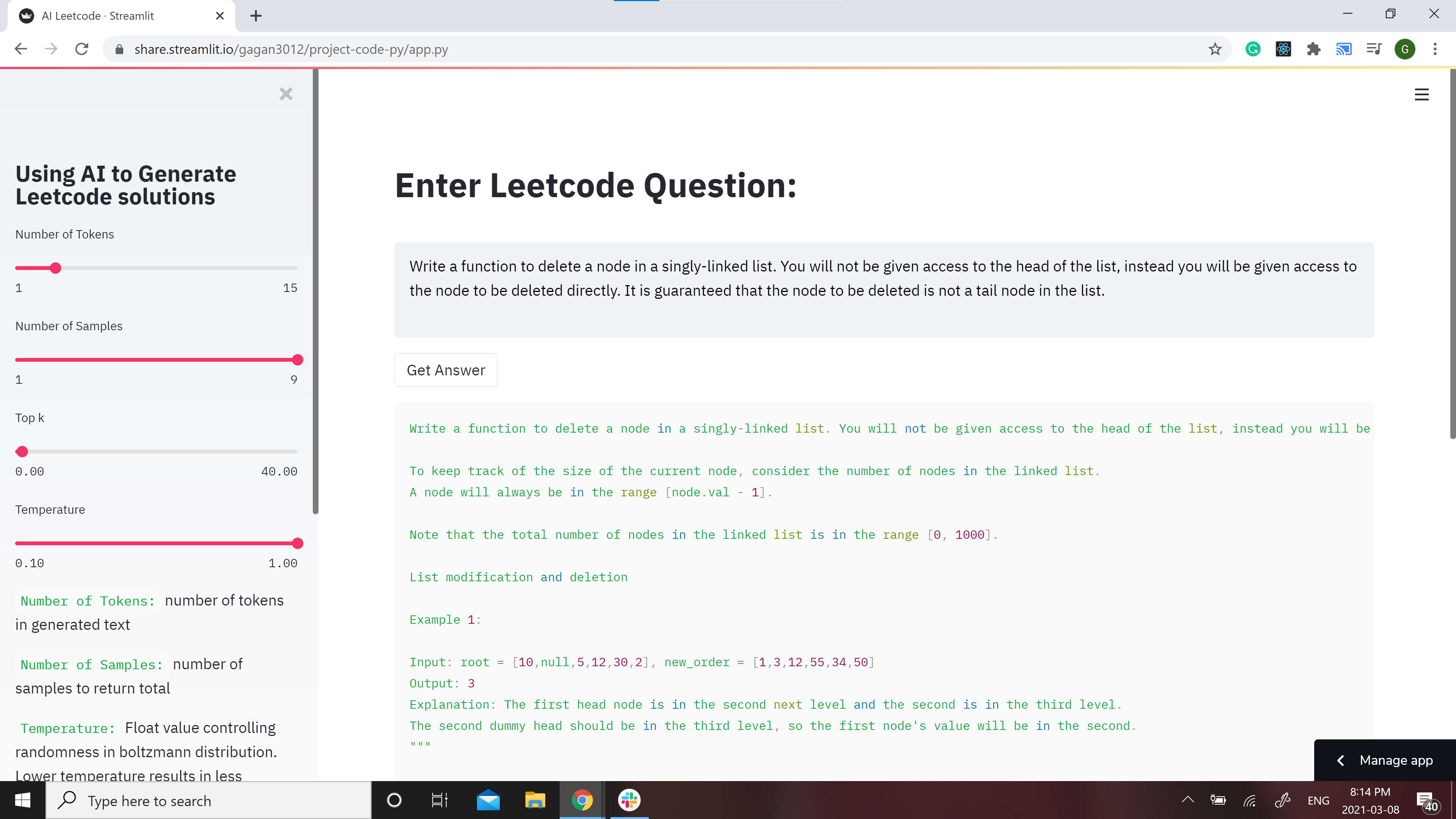

# Leetcode using AI :robot:

GPT-2 model for LeetCode questions in Python

**Note**: the answers might not make sense in some cases because of biases in GPT-2.

**Contributions:** If you would like to make the model better, contributions are welcome. Check out [CONTRIBUTIONS.md](https://github.com/gagan3012/project-code-py/blob/master/CONTRIBUTIONS.md).

### 📢 Favour:

If you find this repo helpful, it would be highly motivating if you could STAR⭐ it.

## Model

Two models have been developed for different use cases and they can be found at https://huggingface.co/gagan3012

The model weights can be found here: [GPT-2](https://huggingface.co/gagan3012/project-code-py) and [DistilGPT-2](https://huggingface.co/gagan3012/project-code-py-small)

### Example usage:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("gagan3012/project-code-py")
model = AutoModelForCausalLM.from_pretrained("gagan3012/project-code-py")
```
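To go from loading to generating, a minimal sketch is below. It assumes the prompt is the question opened with a docstring delimiter (`"""`), which is inferred from how the example answer further down begins; `build_prompt` and `generate_solution` are hypothetical helper names, and the sampling settings are illustrative.

```python
def build_prompt(question: str) -> str:
    # Assumption: prompt = docstring opener + whitespace-normalized question,
    # mirroring how the example answer starts.
    return '""" ' + " ".join(question.split())


def generate_solution(question: str,
                      model_name: str = "gagan3012/project-code-py-small",
                      max_new_tokens: int = 256) -> str:
    """Sample one completion for `question`; downloads the model on first call."""
    from transformers import pipeline  # lazy import so build_prompt works without transformers

    generator = pipeline("text-generation", model=model_name)
    outputs = generator(build_prompt(question), max_new_tokens=max_new_tokens, do_sample=True)
    return outputs[0]["generated_text"]


print(build_prompt("Write a function to reverse a singly-linked list."))
```

Swap `model_name` for `"gagan3012/project-code-py"` to use the larger GPT-2 variant.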

## Demo


A streamlit webapp has been setup to use the model: https://share.streamlit.io/gagan3012/project-code-py/app.py

## Example results:

### Question:

```

Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

```

### Answer:

```python
""" Write a function to delete a node in a singly-linked list. You will not be given access to the head of the list, instead you will be given access to the node to be deleted directly. It is guaranteed that the node to be deleted is not a tail node in the list.

For example,
a = 1->2->3
b = 3->1->2
t = ListNode(-1, 1)

Note: The lexicographic ordering of the nodes in a tree matters. Do not assign values to nodes in a tree.

Example 1:
Input: [1,2,3]
Output: 1->2->5
Explanation: 1->2->3->3->4, then 1->2->5[2] and then 5->1->3->4.

Note:
The length of a linked list will be in the range [1, 1000].
Node.val must be a valid LinkedListNode type.
Both the length and the value of the nodes in a linked list will be in the range [-1000, 1000].
All nodes are distinct.
"""
# Definition for singly-linked list.
# class ListNode:
#     def __init__(self, x):
#         self.val = x
#         self.next = None
class Solution:
    def deleteNode(self, head: ListNode, val: int) -> None:
        """
        BFS
        Linked List
        :param head: ListNode
        :param val: int
        :return: ListNode
        """
        if head is not None:
            return head
        dummy = ListNode(-1, 1)
        dummy.next = head
        dummy.next.val = val
        dummy.next.next = head
        dummy.val = ""

s1 = Solution()
print(s1.deleteNode(head))
print(s1.deleteNode(-1))
print(s1.deleteNode(-1))
```

|

gagan3012/Fox-News-Generator

|

gagan3012

| 2021-05-21T16:03:28Z | 7 | 3 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

# Generating Right Wing News Using GPT2

### I have built a custom model for it using data from Kaggle

I fine-tuned a new model on Fox News data.

### My model can be accessed at gagan3012/Fox-News-Generator

Check the [BenchmarkTest](https://github.com/gagan3012/Fox-News-Generator/blob/master/BenchmarkTest.ipynb) notebook for results.

Find the model at [gagan3012/Fox-News-Generator](https://huggingface.co/gagan3012/Fox-News-Generator)

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("gagan3012/Fox-News-Generator")
model = AutoModelForCausalLM.from_pretrained("gagan3012/Fox-News-Generator")
```

|

erikinfo/gpt2TEDlectures

|

erikinfo

| 2021-05-21T16:00:10Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

# GPT2 Keyword Based Lecture Generator

## Model description

GPT2 fine-tuned on the TED Talks Dataset (published under the Creative Commons BY-NC-ND license).

## Intended uses

Used to generate spoken-word lectures.

### How to use

Input text: `<BOS> title <|SEP|> Some keywords <|SEP|>`

Keyword format: `"Main Topic"."Subtopic1","Subtopic2","Subtopic3"`

Code Example:

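```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("erikinfo/gpt2TEDlectures")
model = AutoModelForCausalLM.from_pretrained("erikinfo/gpt2TEDlectures")
model.eval()  # inference mode: disables dropout

# Example inputs (illustrative); marker strings and spacing follow the input text above
title = "The power of sleep"
keywords = '"Sleep"."Memory","Health","Dreams"'
prompt = "<BOS> " + title + " <|SEP|> " + keywords + " <|SEP|>"
generated = torch.tensor(tokenizer.encode(prompt)).unsqueeze(0)
```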
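The prompt and keyword format can also be assembled by a small helper. This is a minimal sketch: `build_lecture_prompt` is a hypothetical name, and the single spaces around `<BOS>` and `<|SEP|>` are an assumption taken from the input-text line, not confirmed by the author.

```python
from typing import List


def build_lecture_prompt(title: str, main_topic: str, subtopics: List[str]) -> str:
    """Assemble '<BOS> title <|SEP|> keywords <|SEP|>' (hypothetical helper).

    Keywords use the card's format: "Main Topic"."Subtopic1","Subtopic2",...
    Spacing around the markers is an assumption.
    """
    keywords = f'"{main_topic}".' + ",".join(f'"{s}"' for s in subtopics)
    return f"<BOS> {title} <|SEP|> {keywords} <|SEP|>"


print(build_lecture_prompt("Why we sleep", "Sleep", ["Memory", "Health", "Dreams"]))
```

The returned string can be passed to `tokenizer.encode` in place of a hand-built prompt.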

|

e-tornike/gpt2-rnm

|

e-tornike

| 2021-05-21T15:43:11Z | 7 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

### How to use

You can use this model directly with a pipeline for text generation. Since the generation relies on some randomness, we set a seed for reproducibility:

```python

>>> from transformers import pipeline, set_seed

>>> generator = pipeline('text-generation', model='e-tony/gpt2-rnm')

>>> set_seed(42)

>>> generator("Rick: I turned myself into a pickle, Morty!\nMorty: ", max_length=50, num_return_sequences=5)

[{'generated_text': "Rick: I turned myself into a pickle, Morty!\nMorty: I didn't want to have children. It was my fate! I'll pay my mom and dad.\nSnuffles: Well, at least we"},

{'generated_text': "Rick: I turned myself into a pickle, Morty!\nMorty: you know what happened?\n(Steven begins dragging people down the toilet with his hand. As Steven falls) The whole thing starts.\nA man approaches Steven"},

{'generated_text': "Rick: I turned myself into a pickle, Morty!\nMorty: Oh wait! And do you remember what I did to you?\nJerry: Uh, it didn't hurt. It should have hurt a lot since I"},

{'generated_text': "Rick: I turned myself into a pickle, Morty!\nMorty: Rick!\nKraven: Wait! [wary gasp] What the hell are you doing this time?!\nJerry: Hey, are you"},

{'generated_text': "Rick: I turned myself into a pickle, Morty!\nMorty: Uh.\nJerry: You don't have to put your finger on me today, do you?\nRick: It's just, what do you"}]

```
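The samples follow a `Speaker: line` transcript layout. If you want to post-process them, a minimal sketch that splits a sample into (speaker, utterance) pairs is below; the speaker-name pattern is an assumption, and stage directions or other lines without a leading name are kept with speaker `None`.

```python
import re
from typing import List, Optional, Tuple

# A speaker label: a capitalized name (letters, digits, spaces, . ' -) followed by a colon.
SPEAKER_RE = re.compile(r"^([A-Z][\w .'-]*?):\s*(.*)$")


def parse_script(generated_text: str) -> List[Tuple[Optional[str], str]]:
    """Split generated transcript text into (speaker, utterance) pairs."""
    turns = []
    for raw_line in generated_text.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # skip blank lines
        match = SPEAKER_RE.match(line)
        if match:
            turns.append((match.group(1), match.group(2)))
        else:
            turns.append((None, line))  # stage direction or unlabeled text
    return turns


sample = "Rick: I turned myself into a pickle, Morty!\nMorty: Rick!\n(Steven falls)"
for speaker, utterance in parse_script(sample):
    print(speaker, "->", utterance)
```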

### Training data

We used the original `gpt2` model and fine-tuned it on [Rick and Morty transcripts](https://rickandmorty.fandom.com/wiki/Category:Transcripts).

|

DebateLabKIT/cript

|

DebateLabKIT

| 2021-05-21T15:40:52Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"en",

"arxiv:2009.07185",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

tags:

- gpt2

---

# CRiPT Model (Critical Thinking Intermediarily Pretrained Transformer)

Small version of the trained model (`SYL01-2020-10-24-72K/gpt2-small-train03-72K`) presented in the paper "Critical Thinking for Language Models" (Betz, Voigt and Richardson 2020). See also:

* [blog entry](https://debatelab.github.io/journal/critical-thinking-language-models.html)

* [GitHub repo](https://github.com/debatelab/aacorpus)

* [paper](https://arxiv.org/pdf/2009.07185)

|

DebateLabKIT/cript-medium

|

DebateLabKIT

| 2021-05-21T15:39:12Z | 10 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"en",

"arxiv:2009.07185",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

tags:

- gpt2

---

# CRiPT Model Medium (Critical Thinking Intermediarily Pretrained Transformer)

Medium version of the trained model (`SYL01-2020-10-24-72K/gpt2-medium-train03-72K`) presented in the paper "Critical Thinking for Language Models" (Betz, Voigt and Richardson 2020). See also:

* [blog entry](https://debatelab.github.io/journal/critical-thinking-language-models.html)

* [GitHub repo](https://github.com/debatelab/aacorpus)

* [paper](https://arxiv.org/pdf/2009.07185)

|

DebateLabKIT/cript-large

|

DebateLabKIT

| 2021-05-21T15:31:48Z | 7 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"en",

"arxiv:2009.07185",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

tags:

- gpt2

---

# CRiPT Model Large (Critical Thinking Intermediarily Pretrained Transformer)

Large version of the trained model (`SYL01-2020-10-24-72K/gpt2-large-train03-72K`) presented in the paper "Critical Thinking for Language Models" (Betz, Voigt and Richardson 2020). See also:

* [blog entry](https://debatelab.github.io/journal/critical-thinking-language-models.html)

* [GitHub repo](https://github.com/debatelab/aacorpus)

* [paper](https://arxiv.org/pdf/2009.07185)

|

classla/bcms-bertic-generator

|

classla

| 2021-05-21T13:29:30Z | 5 | 2 |

transformers

|

[

"transformers",

"pytorch",

"electra",

"pretraining",

"masked-lm",

"hr",

"bs",

"sr",

"cnr",

"hbs",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z |

---

language:

- hr

- bs

- sr

- cnr

- hbs

tags:

- masked-lm

widget:

- text: "Zovem se Marko i radim u [MASK]."

license: apache-2.0

---

# BERTić* [bert-ich] /bɜrtitʃ/ - A transformer language model for Bosnian, Croatian, Montenegrin and Serbian

* The name is meant to reflect two facts: (1) the model was trained in Zagreb, Croatia, where diminutives ending in -ić (as in fotić, smajlić, hengić etc.) are very popular, and (2) most surnames in the countries where these languages are spoken end in -ić (also with diminutive etymology).

This is the smaller generator of the main [discriminator model](https://huggingface.co/classla/bcms-bertic), useful if you want to continue pre-training the discriminator model.

If you use the model, please cite the following paper:

```

@inproceedings{ljubesic-lauc-2021-bertic,

title = "{BERT}i{\'c} - The Transformer Language Model for {B}osnian, {C}roatian, {M}ontenegrin and {S}erbian",

author = "Ljube{\v{s}}i{\'c}, Nikola and Lauc, Davor",

booktitle = "Proceedings of the 8th Workshop on Balto-Slavic Natural Language Processing",

month = apr,

year = "2021",

address = "Kiyv, Ukraine",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2021.bsnlp-1.5",

pages = "37--42",

}

```

|

Dongjae/mrc2reader

|

Dongjae

| 2021-05-21T13:25:57Z | 14 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"question-answering",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-03-02T23:29:04Z |

This reader model performs Korean question answering.

The backbone model is deepset/xlm-roberta-large-squad2.

It was fine-tuned on the KorQuAD-v1 dataset.

On the KorQuAD evaluation dataset, it achieved an EM score of approximately 87% and an F1 score of approximately 92%.
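EM (exact match) and F1 are the usual SQuAD-style extractive QA metrics. The sketch below is simplified: it normalizes whitespace only, whereas official evaluation scripts apply further normalization, and the optional character-level mode reflects our assumption about how Korean answers are often scored. It is not the KorQuAD evaluation script itself.

```python
from collections import Counter
from typing import List


def _units(text: str, char_level: bool) -> List[str]:
    """Whitespace tokens, or the characters of those tokens when char_level=True."""
    tokens = text.split()
    return [ch for tok in tokens for ch in tok] if char_level else tokens


def exact_match(prediction: str, ground_truth: str) -> float:
    """1.0 if the whitespace-normalized strings are identical, else 0.0."""
    return float(" ".join(prediction.split()) == " ".join(ground_truth.split()))


def f1_score(prediction: str, ground_truth: str, char_level: bool = False) -> float:
    """Harmonic mean of precision and recall over the bag of overlapping units."""
    pred, gold = _units(prediction, char_level), _units(ground_truth, char_level)
    num_same = sum((Counter(pred) & Counter(gold)).values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred)
    recall = num_same / len(gold)
    return 2 * precision * recall / (precision + recall)


print(exact_match("서울 특별시", "서울  특별시"))
print(round(f1_score("서울시", "서울", char_level=True), 3))
```

Dataset-level scores are the averages of these per-question values (typically taking the best score over multiple reference answers).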

Thank you

|

aliosm/ComVE-gpt2-medium

|

aliosm

| 2021-05-21T13:17:55Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"feature-extraction",

"exbert",

"commonsense",

"semeval2020",

"comve",

"en",

"dataset:ComVE",

"license:mit",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

feature-extraction

| 2022-03-02T23:29:05Z |

---

language: "en"

tags:

- gpt2

- exbert

- commonsense

- semeval2020

- comve

license: "mit"

datasets:

- ComVE

metrics:

- bleu

widget:

- text: "Chicken can swim in water. <|continue|>"

---

# ComVE-gpt2-medium

## Model description

Fine-tuned on the Commonsense Validation and Explanation (ComVE) dataset introduced in [SemEval2020 Task4](https://competitions.codalab.org/competitions/21080), using a causal language modeling (CLM) objective.

The model generates a reason why a given natural language statement is against commonsense.

## Intended uses & limitations

You can use the raw model for text generation to produce reasons why natural language statements are against commonsense.

#### How to use

You can use this model directly to generate reasons why a given statement is against commonsense with the [`generate.sh`](https://github.com/AliOsm/SemEval2020-Task4-ComVE/tree/master/TaskC-Generation) script.

*Note:* make sure that you are using version `2.4.1` of the `transformers` package. Newer versions have an issue in text generation that makes the model repeat the last generated token over and over.

#### Limitations and bias

The model tends to negate the input sentence instead of producing a factual reason.

## Training data

The model is initialized from the [gpt2-medium](https://github.com/huggingface/transformers/blob/master/model_cards/gpt2-README.md) model and fine-tuned on the [ComVE](https://github.com/wangcunxiang/SemEval2020-Task4-Commonsense-Validation-and-Explanation) dataset, which contains 10K against-commonsense statements, each paired with three reference reasons.

## Training procedure

Each against-commonsense statement is concatenated with its reference reason, using `<|continue|>` as a separator, and the model is then fine-tuned with the CLM objective.

The model was trained on an Nvidia Tesla P100 GPU on the Google Colab platform with a learning rate of 5e-5, 5 epochs, a maximum sequence length of 128, and a batch size of 64.
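The concatenation described above can be sketched as follows. The helper names are hypothetical, and the single spaces around `<|continue|>` are an assumption based on the widget example (`Chicken can swim in water. <|continue|>`).

```python
from typing import List

SEPARATOR = "<|continue|>"


def make_training_examples(statement: str, reasons: List[str]) -> List[str]:
    """One CLM training string per reference reason: '<statement> <|continue|> <reason>'."""
    return [f"{statement.strip()} {SEPARATOR} {reason.strip()}" for reason in reasons]


def make_prompt(statement: str) -> str:
    """Inference prompt: the statement followed by the separator; the model writes the reason."""
    return f"{statement.strip()} {SEPARATOR}"


# Illustrative reasons (not taken from the dataset)
examples = make_training_examples(
    "Chicken can swim in water.",
    ["Chicken can't swim.", "Chickens are not water birds.", "Chickens sink in water."],
)
print(len(examples), examples[0])
print(make_prompt("shoes can fly"))
```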

<center>

<img src="https://i.imgur.com/xKbrwBC.png">

</center>

## Eval results

On the development/test sets of SemEval2020 Task4 (Commonsense Validation and Explanation), the model achieved BLEU scores of 16.7153/16.1187 (fifth place) and a human evaluation score of 1.94 (third place).

These are some examples generated by the model:

| Against Commonsense Statement | Generated Reason |
|:-----------------------------------------------------:|:--------------------------------------------:|
| Chicken can swim in water. | Chicken can't swim. |
| shoes can fly | Shoes are not able to fly. |
| Chocolate can be used to make a coffee pot | Chocolate is not used to make coffee pots. |
| you can also buy tickets online with an identity card | You can't buy tickets with an identity card. |
| a ball is square and can roll | A ball is round and cannot roll. |
| You can use detergent to dye your hair. | Detergent is used to wash clothes. |
| you can eat mercury | mercury is poisonous |
| A gardener can follow a suspect | gardener is not a police officer |
| cars can float in the ocean just like a boat | Cars are too heavy to float in the ocean. |
| I am going to work so I can lose money. | Working is not a way to lose money. |

### BibTeX entry and citation info

```bibtex

@article{fadel2020justers,

title={JUSTers at SemEval-2020 Task 4: Evaluating Transformer Models Against Commonsense Validation and Explanation},

author={Fadel, Ali and Al-Ayyoub, Mahmoud and Cambria, Erik},

year={2020}

}

```

<a href="https://huggingface.co/exbert/?model=aliosm/ComVE-gpt2-medium">

<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">

</a>

|

aliosm/ComVE-gpt2-large

|

aliosm

| 2021-05-21T13:12:02Z | 13 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"exbert",

"commonsense",

"semeval2020",

"comve",

"en",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: "en"

tags:

- gpt2

- exbert

- commonsense

- semeval2020

- comve

license: "mit"

datasets:

- https://github.com/wangcunxiang/SemEval2020-Task4-Commonsense-Validation-and-Explanation

metrics:

- bleu

widget:

- text: "Chicken can swim in water. <|continue|>"

---

# ComVE-gpt2-large

## Model description

Fine-tuned on the Commonsense Validation and Explanation (ComVE) dataset introduced in [SemEval2020 Task4](https://competitions.codalab.org/competitions/21080), using a causal language modeling (CLM) objective.

The model generates a reason why a given natural language statement is against commonsense.

## Intended uses & limitations

You can use the raw model for text generation to produce reasons why natural language statements are against commonsense.

#### How to use

You can use this model directly to generate reasons why a given statement is against commonsense with the [`generate.sh`](https://github.com/AliOsm/SemEval2020-Task4-ComVE/tree/master/TaskC-Generation) script.

*Note:* make sure that you are using version `2.4.1` of the `transformers` package. Newer versions have an issue in text generation that makes the model repeat the last generated token over and over.

#### Limitations and bias

The model tends to negate the input sentence instead of producing a factual reason.

## Training data

The model is initialized from the [gpt2-large](https://github.com/huggingface/transformers/blob/master/model_cards/gpt2-README.md) model and fine-tuned on the [ComVE](https://github.com/wangcunxiang/SemEval2020-Task4-Commonsense-Validation-and-Explanation) dataset, which contains 10K against-commonsense statements, each paired with three reference reasons.

## Training procedure

Each against-commonsense statement is concatenated with its reference reason, using `<|continue|>` as a separator, and the model is then fine-tuned with the CLM objective.

The model was trained on an Nvidia Tesla P100 GPU on the Google Colab platform with a learning rate of 5e-5, 5 epochs, a maximum sequence length of 128, and a batch size of 64.
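When the model is prompted with a statement followed by `<|continue|>`, its output also contains the input statement. A minimal sketch for pulling out only the generated reason is below; `extract_reason` is a hypothetical helper, and treating the first line break or GPT-2's `<|endoftext|>` token as the end of the reason is an assumption, not part of the original script.

```python
SEPARATOR = "<|continue|>"
EOS = "<|endoftext|>"  # GPT-2's end-of-text token


def extract_reason(generated_text: str) -> str:
    """Return the reason generated after '<|continue|>' (empty string if absent).

    Stopping at EOS or at the first line break is an assumption about
    where a generated reason ends.
    """
    _, sep, continuation = generated_text.partition(SEPARATOR)
    if not sep:
        return ""
    continuation = continuation.split(EOS, 1)[0].strip()
    return continuation.splitlines()[0].strip() if continuation else ""


print(extract_reason("shoes can fly <|continue|> Shoes are not able to fly.<|endoftext|>"))
```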

<center>

<img src="https://i.imgur.com/xKbrwBC.png">

</center>

## Eval results

On the development/test sets of SemEval2020 Task4 (Commonsense Validation and Explanation), the model achieved BLEU scores of 16.5110/15.9299.

### BibTeX entry and citation info

```bibtex

@article{fadel2020justers,

title={JUSTers at SemEval-2020 Task 4: Evaluating Transformer Models Against Commonsense Validation and Explanation},

author={Fadel, Ali and Al-Ayyoub, Mahmoud and Cambria, Erik},

year={2020}

}

```

<a href="https://huggingface.co/exbert/?model=aliosm/ComVE-gpt2-large">

<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">

</a>

|

kamivao/autonlp-cola_gram-208681

|

kamivao

| 2021-05-21T12:43:57Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"autonlp",

"en",

"dataset:kamivao/autonlp-data-cola_gram",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

---

tags: autonlp

language: en

widget:

- text: "I love AutoNLP 🤗"

datasets:

- kamivao/autonlp-data-cola_gram

---

# Model Trained Using AutoNLP

- Problem type: Binary Classification

- Model ID: 208681

## Validation Metrics

- Loss: 0.37569838762283325

- Accuracy: 0.8365019011406845

- Precision: 0.8398058252427184

- Recall: 0.9453551912568307

- AUC: 0.9048838797814208

- F1: 0.8894601542416453

## Usage

You can use cURL to access this model:

```bash

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/kamivao/autonlp-cola_gram-208681

```

Or Python API:

```python

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("kamivao/autonlp-cola_gram-208681", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("kamivao/autonlp-cola_gram-208681", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

```
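`outputs.logits` holds one raw score per class. A minimal, dependency-free sketch for turning one example's logits into a label and probability via softmax is below; `logits_to_prediction` is a hypothetical helper, and which label means grammatical vs. ungrammatical depends on the model's `id2label` config, which this card does not state.

```python
import math
from typing import Dict, List, Tuple


def logits_to_prediction(logits: List[float], id2label: Dict[int, str]) -> Tuple[str, float]:
    """Return (label, probability) of the highest-scoring class for one example."""
    max_logit = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - max_logit) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return id2label[best], probs[best]


# With the model above: logits_to_prediction(outputs.logits[0].tolist(), model.config.id2label)
print(logits_to_prediction([0.5, 2.0], {0: "LABEL_0", 1: "LABEL_1"}))
```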

|

ainize/gpt2-spongebob-script-large

|

ainize

| 2021-05-21T12:18:42Z | 7 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

### Model information

Fine-tuning data: https://www.kaggle.com/mikhailgaerlan/spongebob-squarepants-completed-transcripts

License: CC-BY-SA

Base model: GPT-2 Large

Epochs: 50

Train runtime: 14723.0716 secs

Loss: 0.0268

API page: [Ainize](https://ainize.ai/fpem123/GPT2-Spongebob?branch=master)

Demo page: [End-point](https://master-gpt2-spongebob-fpem123.endpoint.ainize.ai/)

### ===Teachable NLP=== ###

Training a GPT-2 model normally means writing code and securing GPU resources, but with Teachable NLP you can easily fine-tune a model and get an API to use it for free.

Teachable NLP: [Teachable NLP](https://ainize.ai/teachable-nlp)

Tutorial: [Tutorial](https://forum.ainetwork.ai/t/teachable-nlp-how-to-use-teachable-nlp/65?utm_source=community&utm_medium=huggingface&utm_campaign=model&utm_content=teachable%20nlp)

|

ainize/gpt2-rnm-with-spongebob

|

ainize

| 2021-05-21T12:09:02Z | 9 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

### Model information

Fine-tuning data 1: https://www.kaggle.com/andradaolteanu/rickmorty-scripts

Fine-tuning data 2: https://www.kaggle.com/mikhailgaerlan/spongebob-squarepants-completed-transcripts

Base model: e-tony/gpt2-rnm

Epochs: 2

Train runtime: 790.0612 secs

Loss: 2.8569

API page: [Ainize](https://ainize.ai/fpem123/GPT2-Rick-N-Morty-with-SpongeBob?branch=master)

Demo page: [End-point](https://master-gpt2-rick-n-morty-with-sponge-bob-fpem123.endpoint.ainize.ai/)

### ===Teachable NLP=== ###

Training a GPT-2 model normally means writing code and securing GPU resources, but with Teachable NLP you can easily fine-tune a model and get an API to use it for free.

Teachable NLP: [Teachable NLP](https://ainize.ai/teachable-nlp)

Tutorial: [Tutorial](https://forum.ainetwork.ai/t/teachable-nlp-how-to-use-teachable-nlp/65?utm_source=community&utm_medium=huggingface&utm_campaign=model&utm_content=teachable%20nlp)

|

TheBakerCat/2chan_ruGPT3_small

|

TheBakerCat

| 2021-05-21T11:26:24Z | 13 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

A ruGPT3-small model trained on a collection of 2chan posts.

|

SIC98/GPT2-python-code-generator

|

SIC98

| 2021-05-21T11:13:58Z | 17 | 9 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:04Z |

GitHub:

- https://github.com/SIC98/GPT2-python-code-generator

|

Ochiroo/tiny_mn_gpt

|

Ochiroo

| 2021-05-21T10:59:47Z | 6 | 1 |

transformers

|

[

"transformers",

"tf",

"gpt2",

"text-generation",

"mn",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:04Z |

---

language: mn

---

# GPT2-Mongolia

## Model description

This GPT-2 model was pretrained on a very small corpus of Mongolian news data in a self-supervised fashion. This means it was trained on raw text only, with no human labeling, using an automatic process to generate inputs and labels from that text. More precisely, it was trained to predict the next word in a sentence.

## How to use

```python
from transformers import GPT2Tokenizer, TFGPT2LMHeadModel

tokenizer = GPT2Tokenizer.from_pretrained('Ochiroo/tiny_mn_gpt')
model = TFGPT2LMHeadModel.from_pretrained('Ochiroo/tiny_mn_gpt')

text = "Намайг Эрдэнэ-Очир гэдэг. Би"  # "My name is Erdene-Ochir. I"
input_ids = tokenizer.encode(text, return_tensors='tf')

# Beam search is deterministic: temperature has no effect without do_sample=True, so it is omitted
beam_outputs = model.generate(
    input_ids,
    max_length=25,
    num_beams=5,
    no_repeat_ngram_size=2,
    num_return_sequences=5,
    pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token; reuse EOS
)

# Print all five returned beams, not just the first
for i, beam_output in enumerate(beam_outputs):
    print(f"{i}: {tokenizer.decode(beam_output, skip_special_tokens=True)}")
```

## Training data and biases

Trained on 500 MB of Mongolian news data (IKON) on an RTX 2060 GPU.

|

Meli/GPT2-Prompt

|

Meli

| 2021-05-21T10:55:36Z | 311 | 11 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:04Z |

---

language:

- en

tags:

- gpt2

- text-generation

pipeline_tag: text-generation

widget:

- text: "A person with a high school education gets sent back into the 1600s and tries to explain science and technology to the people. [endprompt]"

- text: "A kid doodling in a math class accidentally creates the world's first functional magic circle in centuries. [endprompt]"

---

# GPT-2 Story Generator

## Model description

Generate a short story from an input prompt.

Append the token ` [endprompt]` (including its leading space) to the end of your input.

Example of an input:

```

A person with a high school education gets sent back into the 1600s and tries to explain science and technology to the people. [endprompt]

```
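Adding the marker and stripping the echoed prompt from the output can be sketched as below. The helper names are hypothetical, and returning the whole text when no marker is found is a design choice of this sketch.

```python
END_PROMPT = "[endprompt]"


def build_story_prompt(prompt: str) -> str:
    """Append ' [endprompt]' (with its leading space) to the user's prompt."""
    return prompt.rstrip() + " " + END_PROMPT


def extract_story(generated_text: str) -> str:
    """Return only the story: the text after the first '[endprompt]' marker."""
    _, marker, story = generated_text.partition(END_PROMPT)
    return story.strip() if marker else generated_text.strip()


prompt = build_story_prompt("A kid doodling in a math class accidentally creates a magic circle.")
print(prompt)
```

With `pipeline("text-generation", model="Meli/GPT2-Prompt")`, you would pass `prompt` as the input and apply `extract_story` to each returned `generated_text`.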

#### Limitations and bias

The training data was collected from Reddit, so it could be strongly biased toward a young, white, male demographic.

## Training data

The data was collected by scraping Reddit.

|

HooshvareLab/gpt2-fa-poetry

|

HooshvareLab

| 2021-05-21T10:50:14Z | 65 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"gpt2",

"text-generation",

"fa",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:04Z |

---

language: fa

license: apache-2.0

widget:

- text: "<s>رودکی<|startoftext|>"

- text: "<s>فردوسی<|startoftext|>"

- text: "<s>خیام<|startoftext|>"

- text: "<s>عطار<|startoftext|>"

- text: "<s>نظامی<|startoftext|>"

---

# Persian Poet GPT2

## Poets

The model can generate poetry in the style of your favorite poet. To use it, enter one of the following lines as the input in the box on the right side, or follow the [fine-tuning notebook](https://colab.research.google.com/github/hooshvare/parsgpt/blob/master/notebooks/Persian_Poetry_FineTuning.ipynb).

```text

<s>رودکی<|startoftext|>

<s>فردوسی<|startoftext|>

<s>کسایی<|startoftext|>

<s>ناصرخسرو<|startoftext|>

<s>منوچهری<|startoftext|>

<s>فرخی سیستانی<|startoftext|>

<s>مسعود سعد سلمان<|startoftext|>

<s>ابوسعید ابوالخیر<|startoftext|>

<s>باباطاهر<|startoftext|>

<s>فخرالدین اسعد گرگانی<|startoftext|>

<s>اسدی توسی<|startoftext|>

<s>هجویری<|startoftext|>

<s>خیام<|startoftext|>

<s>نظامی<|startoftext|>

<s>عطار<|startoftext|>

<s>سنایی<|startoftext|>

<s>خاقانی<|startoftext|>

<s>انوری<|startoftext|>

<s>عبدالواسع جبلی<|startoftext|>

<s>نصرالله منشی<|startoftext|>

<s>مهستی گنجوی<|startoftext|>

<s>باباافضل کاشانی<|startoftext|>

<s>مولوی<|startoftext|>

<s>سعدی<|startoftext|>

<s>خواجوی کرمانی<|startoftext|>

<s>عراقی<|startoftext|>

<s>سیف فرغانی<|startoftext|>

<s>حافظ<|startoftext|>

<s>اوحدی<|startoftext|>

<s>شیخ محمود شبستری<|startoftext|>

<s>عبید زاکانی<|startoftext|>

<s>امیرخسرو دهلوی<|startoftext|>

<s>سلمان ساوجی<|startoftext|>

<s>شاه نعمتالله ولی<|startoftext|>

<s>جامی<|startoftext|>

<s>هلالی جغتایی<|startoftext|>

<s>وحشی<|startoftext|>

<s>محتشم کاشانی<|startoftext|>

<s>شیخ بهایی<|startoftext|>

<s>عرفی<|startoftext|>

<s>رضیالدین آرتیمانی<|startoftext|>

<s>صائب تبریزی<|startoftext|>

<s>فیض کاشانی<|startoftext|>

<s>بیدل دهلوی<|startoftext|>

<s>هاتف اصفهانی<|startoftext|>

<s>فروغی بسطامی<|startoftext|>

<s>قاآنی<|startoftext|>

<s>ملا هادی سبزواری<|startoftext|>

<s>پروین اعتصامی<|startoftext|>

<s>ملکالشعرای بهار<|startoftext|>

<s>شهریار<|startoftext|>

<s>رهی معیری<|startoftext|>

<s>اقبال لاهوری<|startoftext|>

<s>خلیلالله خلیلی<|startoftext|>

<s>شاطرعباس صبوحی<|startoftext|>

<s>نیما یوشیج ( آوای آزاد )<|startoftext|>

<s>احمد شاملو<|startoftext|>

<s>سهراب سپهری<|startoftext|>

<s>فروغ فرخزاد<|startoftext|>

<s>سیمین بهبهانی<|startoftext|>

<s>مهدی اخوان ثالث<|startoftext|>

<s>محمدحسن بارق شفیعی<|startoftext|>

<s>شیون فومنی<|startoftext|>

<s>کامبیز صدیقی کسمایی<|startoftext|>

<s>بهرام سالکی<|startoftext|>

<s>عبدالقهّار عاصی<|startoftext|>

<s>اِ لیـــار (جبار محمدی )<|startoftext|>

```
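Programmatic use can be sketched as below: the prompt helper reproduces the `<s>POET<|startoftext|>` format listed above, while the helper names and the sampling settings in `generate_poem` are illustrative assumptions rather than the authors' recommended configuration.

```python
def poet_prompt(poet: str) -> str:
    """Build the '<s>POET<|startoftext|>' input shown in the list above."""
    return f"<s>{poet.strip()}<|startoftext|>"


def generate_poem(poet: str, max_new_tokens: int = 64) -> str:
    """Sample one poem in the style of `poet` (downloads the model on first call)."""
    from transformers import pipeline  # lazy import: poet_prompt works without transformers

    generator = pipeline("text-generation", model="HooshvareLab/gpt2-fa-poetry")
    outputs = generator(
        poet_prompt(poet),
        max_new_tokens=max_new_tokens,
        do_sample=True,  # sampling settings here are illustrative assumptions
        top_k=50,
        top_p=0.95,
    )
    return outputs[0]["generated_text"]


print(poet_prompt("حافظ"))  # the input line for Hafez
```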

## Questions?

Post a Github issue on the [ParsGPT2 Issues](https://github.com/hooshvare/parsgpt/issues) repo.

|

Davlan/mt5_base_eng_yor_mt

|

Davlan

| 2021-05-21T10:14:10Z | 54 | 0 |

transformers

|

[

"transformers",

"pytorch",

"mt5",

"text2text-generation",

"arxiv:2103.08647",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-03-02T23:29:04Z |


---

language:

- yo

- en

datasets:

- JW300 + [Menyo-20k](https://huggingface.co/datasets/menyo20k_mt)

---

# mT5_base_eng_yor_mt

## Model description

**mT5_base_eng_yor_mt** is a **machine translation** model from English to Yorùbá based on a fine-tuned mT5-base model. It establishes a **strong baseline** for automatically translating texts from English to Yorùbá.

Specifically, this model is an *mT5_base* model that was fine-tuned on the JW300 Yorùbá corpus and [Menyo-20k](https://huggingface.co/datasets/menyo20k_mt).

## Intended uses & limitations

#### How to use

You can use this model with Transformers for machine translation (MT):

```python

from transformers import MT5ForConditionalGeneration, T5Tokenizer

model = MT5ForConditionalGeneration.from_pretrained("Davlan/mt5_base_eng_yor_mt")

tokenizer = T5Tokenizer.from_pretrained("google/mt5-base")

input_string = "Where are you?"

inputs = tokenizer.encode(input_string, return_tensors="pt")

generated_tokens = model.generate(inputs)

results = tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)

print(results)

```

#### Limitations and bias

This model is limited by its training data (the JW300 corpus and the Menyo-20k dataset) and may not generalize well to all use cases in different domains.

## Training data

This model was fine-tuned on the JW300 corpus and the [Menyo-20k](https://huggingface.co/datasets/menyo20k_mt) dataset.

## Training procedure

This model was trained on a single NVIDIA V100 GPU.

## Eval results on Test set (BLEU score)

9.82 BLEU on [Menyo-20k test set](https://arxiv.org/abs/2103.08647)

### BibTeX entry and citation info

By David Adelani


|

HJK/PickupLineGenerator

|

HJK

| 2021-05-21T10:05:21Z | 12 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:04Z |

Basically, it generates pickup lines.

Based on GPT-2: https://huggingface.co/gpt2

|

CallumRai/HansardGPT2

|

CallumRai

| 2021-05-21T09:33:25Z | 15 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"gpt2",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:04Z |

A PyTorch GPT-2 model trained on Hansard from 2019-01-01 to 2020-06-01.

For more information see: https://github.com/CallumRai/Hansard/

|

lg/fexp_1

|

lg

| 2021-05-20T23:37:11Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt_neo",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

# This model is probably not what you're looking for.

|

ynie/roberta-large-snli_mnli_fever_anli_R1_R2_R3-nli

|

ynie

| 2021-05-20T23:17:23Z | 17,917 | 18 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"text-classification",

"dataset:snli",

"dataset:anli",

"dataset:multi_nli",

"dataset:multi_nli_mismatch",

"dataset:fever",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

---

datasets:

- snli

- anli

- multi_nli

- multi_nli_mismatch

- fever

license: mit

---

This is a strong pre-trained RoBERTa-Large NLI model.

The training data is a combination of well-known NLI datasets: [`SNLI`](https://nlp.stanford.edu/projects/snli/), [`MNLI`](https://cims.nyu.edu/~sbowman/multinli/), [`FEVER-NLI`](https://github.com/easonnie/combine-FEVER-NSMN/blob/master/other_resources/nli_fever.md), [`ANLI (R1, R2, R3)`](https://github.com/facebookresearch/anli).

Other pre-trained NLI models, including `RoBERTa`, `ALBERT`, `BART`, `ELECTRA` and `XLNet`, are also available.

Trained by [Yixin Nie](https://easonnie.github.io), [original source](https://github.com/facebookresearch/anli).

Try the code snippet below.

```python
from transformers import AutoTokenizer, AutoModelForSequenceClassification
import torch

if __name__ == '__main__':
    max_length = 256

    premise = "Two women are embracing while holding to go packages."
    hypothesis = "The men are fighting outside a deli."

    hg_model_hub_name = "ynie/roberta-large-snli_mnli_fever_anli_R1_R2_R3-nli"
    # hg_model_hub_name = "ynie/albert-xxlarge-v2-snli_mnli_fever_anli_R1_R2_R3-nli"
    # hg_model_hub_name = "ynie/bart-large-snli_mnli_fever_anli_R1_R2_R3-nli"
    # hg_model_hub_name = "ynie/electra-large-discriminator-snli_mnli_fever_anli_R1_R2_R3-nli"
    # hg_model_hub_name = "ynie/xlnet-large-cased-snli_mnli_fever_anli_R1_R2_R3-nli"

    tokenizer = AutoTokenizer.from_pretrained(hg_model_hub_name)
    model = AutoModelForSequenceClassification.from_pretrained(hg_model_hub_name)

    tokenized_input_seq_pair = tokenizer.encode_plus(premise, hypothesis,
                                                     max_length=max_length,
                                                     return_token_type_ids=True, truncation=True)

    input_ids = torch.Tensor(tokenized_input_seq_pair['input_ids']).long().unsqueeze(0)
    # remember bart doesn't have 'token_type_ids', remove the line below if you are using bart.
    token_type_ids = torch.Tensor(tokenized_input_seq_pair['token_type_ids']).long().unsqueeze(0)
    attention_mask = torch.Tensor(tokenized_input_seq_pair['attention_mask']).long().unsqueeze(0)

    outputs = model(input_ids,
                    attention_mask=attention_mask,
                    token_type_ids=token_type_ids,
                    labels=None)
    # Note:
    # "id2label": {
    #     "0": "entailment",
    #     "1": "neutral",
    #     "2": "contradiction"
    # },

    predicted_probability = torch.softmax(outputs[0], dim=1)[0].tolist()  # batch_size only one

    print("Premise:", premise)
    print("Hypothesis:", hypothesis)
    print("Entailment:", predicted_probability[0])
    print("Neutral:", predicted_probability[1])
    print("Contradiction:", predicted_probability[2])
```

More examples are available [here](https://github.com/facebookresearch/anli/blob/master/src/hg_api/interactive_eval.py).
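The snippet above turns the three output logits into probabilities with a softmax and reads them in the `id2label` order given in its comment. A framework-free sketch of that post-processing step, assuming the logits are already available as a plain list of three floats (`nli_probabilities` and `predict_label` are hypothetical helper names, and the example logits are made up):

```python
import math

# Label order taken from the "id2label" note in the snippet above.
NLI_LABELS = ("entailment", "neutral", "contradiction")


def nli_probabilities(logits):
    """Convert one row of NLI logits into a {label: probability} dict.

    Subtracting the max logit before exponentiating keeps the softmax
    numerically stable without changing its result.
    """
    if len(logits) != len(NLI_LABELS):
        raise ValueError(f"expected {len(NLI_LABELS)} logits, got {len(logits)}")
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(NLI_LABELS, exps)}


def predict_label(logits):
    """Return the label with the highest probability."""
    probs = nli_probabilities(logits)
    return max(probs, key=probs.get)


if __name__ == "__main__":
    # Made-up logits for illustration only, not real model output.
    example_logits = [-2.1, -0.3, 3.4]
    for label, p in nli_probabilities(example_logits).items():
        print(f"{label}: {p:.3f}")
    print("prediction:", predict_label(example_logits))
```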

Citation:

```

@inproceedings{nie-etal-2020-adversarial,

title = "Adversarial {NLI}: A New Benchmark for Natural Language Understanding",

author = "Nie, Yixin and

Williams, Adina and

Dinan, Emily and

Bansal, Mohit and

Weston, Jason and

Kiela, Douwe",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics",

year = "2020",

publisher = "Association for Computational Linguistics",

}

```

|

urduhack/roberta-urdu-small

|

urduhack

| 2021-05-20T22:52:23Z | 884 | 8 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"fill-mask",

"roberta-urdu-small",

"urdu",

"ur",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

---

language: ur

thumbnail: https://raw.githubusercontent.com/urduhack/urduhack/master/docs/_static/urduhack.png

tags:

- roberta-urdu-small

- urdu

- transformers

license: mit

---

## roberta-urdu-small

[License](https://github.com/urduhack/urduhack/blob/master/LICENSE)

### Overview

**Language model:** roberta-urdu-small

**Model size:** 125M

**Language:** Urdu

**Training data:** News data from Urdu news sources in Pakistan

### About roberta-urdu-small

roberta-urdu-small is a language model for the Urdu language.

```python

from transformers import pipeline

fill_mask = pipeline("fill-mask", model="urduhack/roberta-urdu-small", tokenizer="urduhack/roberta-urdu-small")

```

## Training procedure

roberta-urdu-small was trained on an Urdu news corpus. The training data was normalized using the normalization module from Urduhack to remove characters from other languages, such as Arabic.

### About Urduhack

Urduhack is a Natural Language Processing (NLP) library for the Urdu language.

Github: https://github.com/urduhack/urduhack

|

typeform/distilroberta-base-v2

|

typeform

| 2021-05-20T22:46:35Z | 91 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"fill-mask",

"en",

"dataset:openwebtext",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

---

language: en

license: apache-2.0

datasets:

- openwebtext

---

# DistilRoBERTa base model

Forked from https://huggingface.co/distilroberta-base

|

tlemberger/sd-ner

|

tlemberger

| 2021-05-20T22:31:05Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"token-classification",

"token classification",

"dataset:EMBO/sd-panels",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-03-02T23:29:05Z |

---

language:

- english

thumbnail:

tags:

- token classification

license:

datasets:

- EMBO/sd-panels

metrics:

-

---

# sd-ner

## Model description

This model is a [RoBERTa base model](https://huggingface.co/roberta-base) that was further trained using a masked language modeling task on a compendium of English scientific textual examples from the life sciences using the [BioLang dataset](https://huggingface.co/datasets/EMBO/biolang) and fine-tuned for token classification on the SourceData [sd-panels](https://huggingface.co/datasets/EMBO/sd-panels) dataset to perform Named Entity Recognition of bioentities.

## Intended uses & limitations

#### How to use

The intended use of this model is Named Entity Recognition of the biological entities used in SourceData annotations (https://sourcedata.embo.org), including small molecules, gene products (genes and proteins), subcellular components, cell lines and cell types, organs and tissues, species, as well as experimental methods.

To have a quick check of the model:

```python
from transformers import pipeline, RobertaTokenizerFast, RobertaForTokenClassification

example = """<s> F. Western blot of input and eluates of Upf1 domains purification in a Nmd4-HA strain. The band with the # might corresponds to a dimer of Upf1-CH, bands marked with a star correspond to residual signal with the anti-HA antibodies (Nmd4). Fragments in the eluate have a smaller size because the protein A part of the tag was removed by digestion with the TEV protease. G6PDH served as a loading control in the input samples </s>"""

# `model_max_length` replaces the deprecated `max_len` argument.
tokenizer = RobertaTokenizerFast.from_pretrained('roberta-base', model_max_length=512)
model = RobertaForTokenClassification.from_pretrained('EMBO/sd-ner')

ner = pipeline('ner', model, tokenizer=tokenizer)
res = ner(example)

# Print each recognized token together with its BIO entity tag.
for r in res:
    print(r['word'], r['entity'])
```

#### Limitations and bias

The model must be used with the `roberta-base` tokenizer.

## Training data

The model was trained for token classification using the [EMBO/sd-panels dataset](https://huggingface.co/datasets/EMBO/sd-panels), which includes manually annotated examples.

## Training procedure

The training was run on an NVIDIA DGX Station with 4 Tesla V100 GPUs.

Training code is available at https://github.com/source-data/soda-roberta

- Command: `python -m tokcl.train /data/json/sd_panels NER --num_train_epochs=3.5`

- Tokenizer vocab size: 50265

- Training data: EMBO/biolang MLM

- Training with 31410 examples.

- Evaluating on 8861 examples.

- Training on 15 features: O, I-SMALL_MOLECULE, B-SMALL_MOLECULE, I-GENEPROD, B-GENEPROD, I-SUBCELLULAR, B-SUBCELLULAR, I-CELL, B-CELL, I-TISSUE, B-TISSUE, I-ORGANISM, B-ORGANISM, I-EXP_ASSAY, B-EXP_ASSAY

- Epochs: 3.5

- `per_device_train_batch_size`: 32

- `per_device_eval_batch_size`: 32

- `learning_rate`: 0.0001

- `weight_decay`: 0.0

- `adam_beta1`: 0.9

- `adam_beta2`: 0.999

- `adam_epsilon`: 1e-08

- `max_grad_norm`: 1.0
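The 15 labels above follow the BIO scheme: `B-` marks the first token of an entity, `I-` a continuation of the same entity, and `O` a token outside any entity. A minimal sketch of grouping per-token tags into entity spans, assuming one tag per word-level token (`bio_to_spans` is a hypothetical helper, not part of the training code, and the example tokens are made up):

```python
def bio_to_spans(tokens, tags):
    """Group BIO-tagged tokens into (entity_type, text) spans.

    An I- tag whose type differs from the open span, or that appears with
    no open span, starts a new span (a common lenient convention).
    """
    if len(tokens) != len(tags):
        raise ValueError("tokens and tags must have the same length")
    spans = []
    current_type = None
    current_tokens = []
    for token, tag in zip(tokens, tags):
        if tag == "O":
            prefix, ent_type = "O", None
        else:
            prefix, _, ent_type = tag.partition("-")  # "B-SMALL_MOLECULE" -> "B", "SMALL_MOLECULE"
        # Close the open span on O, on a new B- tag, or on a type change.
        if current_type is not None and (prefix != "I" or ent_type != current_type):
            spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
        if prefix == "O":
            continue
        if current_type is None:
            current_type, current_tokens = ent_type, [token]
        else:
            current_tokens.append(token)
    if current_type is not None:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


if __name__ == "__main__":
    words = ["Upf1", "domains", "in", "HeLa", "cells"]
    labels = ["B-GENEPROD", "O", "O", "B-CELL", "I-CELL"]
    print(bio_to_spans(words, labels))
```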

## Eval results

On test set with `sklearn.metrics`:

```

precision recall f1-score support

CELL 0.77 0.81 0.79 3477

EXP_ASSAY 0.71 0.70 0.71 7049

GENEPROD 0.86 0.90 0.88 16140

ORGANISM 0.80 0.82 0.81 2759

SMALL_MOLECULE 0.78 0.82 0.80 4446

SUBCELLULAR 0.71 0.75 0.73 2125

TISSUE 0.70 0.75 0.73 1971

micro avg 0.79 0.82 0.81 37967

macro avg 0.76 0.79 0.78 37967

weighted avg 0.79 0.82 0.81 37967

```
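The macro average is the unweighted mean over the seven entity types, while the weighted average weights each type by its support. As a consistency check, a sketch that recomputes both from the rounded per-class figures in the table (small rounding differences are possible; the micro average needs the raw true/false positive counts and cannot be recovered this way):

```python
# Per-class (precision, recall, f1, support) copied from the report above.
REPORT = {
    "CELL": (0.77, 0.81, 0.79, 3477),
    "EXP_ASSAY": (0.71, 0.70, 0.71, 7049),
    "GENEPROD": (0.86, 0.90, 0.88, 16140),
    "ORGANISM": (0.80, 0.82, 0.81, 2759),
    "SMALL_MOLECULE": (0.78, 0.82, 0.80, 4446),
    "SUBCELLULAR": (0.71, 0.75, 0.73, 2125),
    "TISSUE": (0.70, 0.75, 0.73, 1971),
}


def macro_average(report, index):
    """Unweighted mean of one metric column (0=precision, 1=recall, 2=f1)."""
    values = [row[index] for row in report.values()]
    return sum(values) / len(values)


def weighted_average(report, index):
    """Mean of one metric column weighted by each class's support."""
    total_support = sum(row[3] for row in report.values())
    return sum(row[index] * row[3] for row in report.values()) / total_support


if __name__ == "__main__":
    for name, idx in (("precision", 0), ("recall", 1), ("f1", 2)):
        print(f"{name}: macro={macro_average(REPORT, idx):.2f} "
              f"weighted={weighted_average(REPORT, idx):.2f}")
```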

|

textattack/roberta-base-rotten_tomatoes

|

textattack

| 2021-05-20T22:18:23Z | 8 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"tensorboard",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

## roberta-base fine-tuned with TextAttack on the rotten_tomatoes dataset

This `roberta-base` model was fine-tuned for sequence classification using TextAttack

and the rotten_tomatoes dataset loaded using the `nlp` library. The model was fine-tuned

for 10 epochs with a batch size of 128, a learning

rate of 5e-05, and a maximum sequence length of 128.

Since this was a classification task, the model was trained with a cross-entropy loss function.

The best score the model achieved on this task was 0.9033771106941839, as measured by the

eval set accuracy, found after 9 epochs.

For more information, check out [TextAttack on Github](https://github.com/QData/TextAttack).

|

textattack/roberta-base-rotten-tomatoes

|

textattack

| 2021-05-20T22:17:29Z | 34 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

## TextAttack Model Card

This `roberta-base` model was fine-tuned for sequence classification using TextAttack

and the rotten_tomatoes dataset loaded using the `nlp` library. The model was fine-tuned

for 10 epochs with a batch size of 64, a learning

rate of 2e-05, and a maximum sequence length of 128.

Since this was a classification task, the model was trained with a cross-entropy loss function.

The best score the model achieved on this task was 0.9033771106941839, as measured by the

eval set accuracy, found after 2 epochs.

For more information, check out [TextAttack on Github](https://github.com/QData/TextAttack).

|

textattack/roberta-base-imdb

|

textattack

| 2021-05-20T22:16:19Z | 207 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

## TextAttack Model Card

This `roberta-base` model was fine-tuned for sequence classification using TextAttack

and the imdb dataset loaded using the `nlp` library. The model was fine-tuned

for 5 epochs with a batch size of 64, a learning

rate of 3e-05, and a maximum sequence length of 128.

Since this was a classification task, the model was trained with a cross-entropy loss function.

The best score the model achieved on this task was 0.91436, as measured by the

eval set accuracy, found after 2 epochs.

For more information, check out [TextAttack on Github](https://github.com/QData/TextAttack).

|

simonlevine/clinical-longformer

|

simonlevine

| 2021-05-20T21:25:09Z | 19 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

- You'll need to instantiate a special RoBERTa class. Though technically a "Longformer", the elongated model must still be loaded as a RoBERTa model.

- To do so, use the following classes:

```python
# Assumes an early transformers 3.x release; in later versions the import
# path and the LongformerSelfAttention.forward() signature differ.
from transformers import RobertaForMaskedLM
from transformers.modeling_longformer import LongformerSelfAttention


class RobertaLongSelfAttention(LongformerSelfAttention):
    def forward(
        self,
        hidden_states,
        attention_mask=None,
        head_mask=None,
        encoder_hidden_states=None,
        encoder_attention_mask=None,
        output_attentions=False,
    ):
        return super().forward(hidden_states, attention_mask=attention_mask, output_attentions=output_attentions)


class RobertaLongForMaskedLM(RobertaForMaskedLM):
    def __init__(self, config):
        super().__init__(config)
        for i, layer in enumerate(self.roberta.encoder.layer):
            # replace the `modeling_bert.BertSelfAttention` object with `LongformerSelfAttention`
            layer.attention.self = RobertaLongSelfAttention(config, layer_id=i)
```

- Then, load the model with `RobertaLongForMaskedLM.from_pretrained('simonlevine/bioclinical-roberta-long')`.

- Now it can be used as usual. Note that you may get warnings about untrained weights.

- You can replace `RobertaForMaskedLM` with a different task-specific RoBERTa class from Hugging Face Transformers, such as `RobertaForSequenceClassification`.

|

sramasamy8/testModel

|

sramasamy8

| 2021-05-20T20:58:24Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"exbert",

"en",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:1810.04805",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

---

language: en

tags:

- exbert

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

---

# BERT base model (uncased)

Pretrained model on English language using a masked language modeling (MLM) objective. It was introduced in

[this paper](https://arxiv.org/abs/1810.04805) and first released in

[this repository](https://github.com/google-research/bert). This model is uncased: it does not make a difference

between english and English.

Disclaimer: The team releasing BERT did not write a model card for this model so this model card has been written by

the Hugging Face team.

## Model description

BERT is a transformers model pretrained on a large corpus of English data in a self-supervised fashion. This means it

was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of

publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input, then runs

the entire masked sentence through the model and has to predict the masked words. This is different from traditional

recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like

GPT which internally mask the future tokens. It allows the model to learn a bidirectional representation of the

sentence.

- Next sentence prediction (NSP): the model concatenates two masked sentences as inputs during pretraining. Sometimes

they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to

predict if the two sentences were following each other or not.

This way, the model learns an inner representation of the English language that can then be used to extract features

useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard

classifier using the features produced by the BERT model as inputs.

## Intended uses & limitations

You can use the raw model for either masked language modeling or next sentence prediction, but it's mostly intended to

be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=bert) to look for

fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked)

to make decisions, such as sequence classification, token classification or question answering. For tasks such as text

generation, you should look at models like GPT-2.

### How to use

You can use this model directly with a pipeline for masked language modeling:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-base-uncased')

>>> unmasker("Hello I'm a [MASK] model.")

[{'sequence': "[CLS] hello i'm a fashion model. [SEP]",

'score': 0.1073106899857521,

'token': 4827,

'token_str': 'fashion'},

{'sequence': "[CLS] hello i'm a role model. [SEP]",

'score': 0.08774490654468536,

'token': 2535,

'token_str': 'role'},

{'sequence': "[CLS] hello i'm a new model. [SEP]",

'score': 0.05338378623127937,

'token': 2047,

'token_str': 'new'},

{'sequence': "[CLS] hello i'm a super model. [SEP]",

'score': 0.04667217284440994,

'token': 3565,

'token_str': 'super'},

{'sequence': "[CLS] hello i'm a fine model. [SEP]",

'score': 0.027095865458250046,

'token': 2986,

'token_str': 'fine'}]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertModel.from_pretrained("bert-base-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in TensorFlow:

```python

from transformers import BertTokenizer, TFBertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = TFBertModel.from_pretrained("bert-base-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

### Limitations and bias

Even if the training data used for this model could be characterized as fairly neutral, this model can have biased

predictions:

```python

>>> from transformers import pipeline

>>> unmasker = pipeline('fill-mask', model='bert-base-uncased')

>>> unmasker("The man worked as a [MASK].")

[{'sequence': '[CLS] the man worked as a carpenter. [SEP]',

'score': 0.09747550636529922,

'token': 10533,

'token_str': 'carpenter'},

{'sequence': '[CLS] the man worked as a waiter. [SEP]',

'score': 0.0523831807076931,

'token': 15610,

'token_str': 'waiter'},

{'sequence': '[CLS] the man worked as a barber. [SEP]',

'score': 0.04962705448269844,

'token': 13362,

'token_str': 'barber'},

{'sequence': '[CLS] the man worked as a mechanic. [SEP]',

'score': 0.03788609802722931,

'token': 15893,

'token_str': 'mechanic'},

{'sequence': '[CLS] the man worked as a salesman. [SEP]',

'score': 0.037680890411138535,

'token': 18968,

'token_str': 'salesman'}]

>>> unmasker("The woman worked as a [MASK].")

[{'sequence': '[CLS] the woman worked as a nurse. [SEP]',

'score': 0.21981462836265564,

'token': 6821,

'token_str': 'nurse'},

{'sequence': '[CLS] the woman worked as a waitress. [SEP]',

'score': 0.1597415804862976,

'token': 13877,

'token_str': 'waitress'},

{'sequence': '[CLS] the woman worked as a maid. [SEP]',

'score': 0.1154729500412941,

'token': 10850,

'token_str': 'maid'},

{'sequence': '[CLS] the woman worked as a prostitute. [SEP]',

'score': 0.037968918681144714,

'token': 19215,

'token_str': 'prostitute'},

{'sequence': '[CLS] the woman worked as a cook. [SEP]',

'score': 0.03042375110089779,

'token': 5660,

'token_str': 'cook'}]

```

This bias will also affect all fine-tuned versions of this model.

## Training data

The BERT model was pretrained on [BookCorpus](https://yknzhu.wixsite.com/mbweb), a dataset consisting of 11,038

unpublished books and [English Wikipedia](https://en.wikipedia.org/wiki/English_Wikipedia) (excluding lists, tables and

headers).

## Training procedure

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a vocabulary size of 30,000. The inputs of the model are

then of the form:

```

[CLS] Sentence A [SEP] Sentence B [SEP]

```

With probability 0.5, sentence A and sentence B correspond to two consecutive sentences in the original corpus and in

the other cases, it's another random sentence in the corpus. Note that what is considered a sentence here is a

consecutive span of text usually longer than a single sentence. The only constraint is that the result with the two

"sentences" has a combined length of less than 512 tokens.
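A minimal sketch of how one `[CLS] Sentence A [SEP] Sentence B [SEP]` example could be assembled, assuming the corpus is already split into WordPiece-tokenized segments. `make_nsp_example` is a hypothetical helper, and trimming the longer segment from the end is a simplification rather than the original preprocessing code:

```python
import random


def make_nsp_example(segments, index, rng, max_len=512):
    """Build one next-sentence-prediction example.

    segments: token lists from one corpus, in document order.
    Returns (tokens, token_type_ids, is_next).
    """
    if max_len < 3:
        raise ValueError("max_len must leave room for [CLS] and two [SEP] tokens")
    first = list(segments[index])
    # With probability 0.5 use the true next segment (when one exists).
    is_next = index + 1 < len(segments) and rng.random() < 0.5
    if is_next:
        second = list(segments[index + 1])
    else:
        # Otherwise pick a random segment that is not the true successor.
        candidates = [i for i in range(len(segments)) if i != index + 1]
        second = list(segments[rng.choice(candidates)])
    # Trim the longer segment until [CLS] A [SEP] B [SEP] fits in max_len.
    while len(first) + len(second) + 3 > max_len:
        if len(first) >= len(second):
            first.pop()
        else:
            second.pop()
    tokens = ["[CLS]"] + first + ["[SEP]"] + second + ["[SEP]"]
    # Segment ids: 0 for "[CLS] A [SEP]", 1 for "B [SEP]".
    token_type_ids = [0] * (len(first) + 2) + [1] * (len(second) + 1)
    return tokens, token_type_ids, is_next


if __name__ == "__main__":
    corpus = [["the", "cat", "sat"], ["on", "the", "mat"], ["it", "rained"]]
    print(make_nsp_example(corpus, 0, random.Random(0)))
```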

The details of the masking procedure for each sentence are the following:

- 15% of the tokens are masked.

- In 80% of the cases, the masked tokens are replaced by `[MASK]`.

- In 10% of the cases, the masked tokens are replaced by a random token different from the one they replace.

- In the 10% remaining cases, the masked tokens are left as is.
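The 15% / 80% / 10% / 10% procedure above can be sketched in plain Python. This is an illustration, not the original BERT code: it selects each token independently with probability 0.15 rather than exactly 15% per sequence, uses -100 as the "ignore" label (a common convention in PyTorch losses), and `mask_tokens` is a hypothetical name:

```python
import random


def mask_tokens(token_ids, vocab_size, mask_id, rng, mask_prob=0.15, special_ids=()):
    """Apply BERT-style masking to a list of token ids.

    Returns (inputs, labels): labels hold the original id at selected
    positions and -100 (ignored by the loss) everywhere else.
    """
    inputs = list(token_ids)
    labels = [-100] * len(token_ids)
    for i, tok in enumerate(token_ids):
        # Never select special tokens such as [CLS] or [SEP].
        if tok in special_ids or rng.random() >= mask_prob:
            continue
        labels[i] = tok  # the model must predict the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id  # 80%: replace with [MASK]
        elif r < 0.9:
            # 10%: a random token id in [0, vocab_size) that differs from tok.
            replacement = rng.randrange(vocab_size - 1)
            if replacement >= tok:
                replacement += 1
            inputs[i] = replacement
        # Remaining 10%: keep the original token unchanged.
    return inputs, labels


if __name__ == "__main__":
    ids = [101, 7592, 1045, 1005, 1049, 1037, 2944, 102]
    print(mask_tokens(ids, vocab_size=30522, mask_id=103,
                      rng=random.Random(0), special_ids={101, 102}))
```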

### Pretraining

The model was trained on 4 cloud TPUs in Pod configuration (16 TPU chips total) for one million steps with a batch size

of 256. The sequence length was limited to 128 tokens for 90% of the steps and 512 for the remaining 10%. The optimizer

used is Adam with a learning rate of 1e-4, \\(\beta_{1} = 0.9\\) and \\(\beta_{2} = 0.999\\), a weight decay of 0.01,

learning rate warmup for 10,000 steps and linear decay of the learning rate after.
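The warmup-then-linear-decay schedule can be written as a function of the training step. A sketch with the figures above, assuming the decay reaches zero at the final (one-millionth) step, which the text does not state explicitly (`bert_learning_rate` is a hypothetical name):

```python
def bert_learning_rate(step, peak_lr=1e-4, warmup_steps=10_000, total_steps=1_000_000):
    """Learning rate at a given step: linear warmup, then linear decay to 0."""
    if step < 0:
        raise ValueError("step must be non-negative")
    if step < warmup_steps:
        # Ramp linearly from 0 up to peak_lr over the warmup period.
        return peak_lr * step / warmup_steps
    # Decay linearly from peak_lr at the end of warmup to 0 at total_steps.
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / (total_steps - warmup_steps)


if __name__ == "__main__":
    for s in (0, 5_000, 10_000, 505_000, 1_000_000):
        print(s, bert_learning_rate(s))
```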

## Evaluation results

When fine-tuned on downstream tasks, this model achieves the following results:

Glue test results:

| Task | MNLI-(m/mm) | QQP | QNLI | SST-2 | CoLA | STS-B | MRPC | RTE | Average |

|:----:|:-----------:|:----:|:----:|:-----:|:----:|:-----:|:----:|:----:|:-------:|

| | 84.6/83.4 | 71.2 | 90.5 | 93.5 | 52.1 | 85.8 | 88.9 | 66.4 | 79.6 |

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-1810-04805,

author = {Jacob Devlin and

Ming{-}Wei Chang and

Kenton Lee and

Kristina Toutanova},

title = {{BERT:} Pre-training of Deep Bidirectional Transformers for Language

Understanding},

journal = {CoRR},

volume = {abs/1810.04805},

year = {2018},

url = {http://arxiv.org/abs/1810.04805},

archivePrefix = {arXiv},

eprint = {1810.04805},

timestamp = {Tue, 30 Oct 2018 20:39:56 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-1810-04805.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```


|

seyonec/ChemBERTa-zinc-base-v1

|

seyonec

| 2021-05-20T20:55:33Z | 96,218 | 46 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"fill-mask",

"chemistry",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

---

tags:

- chemistry

---

# ChemBERTa: Training a BERT-like transformer model for masked language modelling of chemical SMILES strings.

Deep learning for chemistry and materials science remains a novel field with lots of potential. However, transfer learning methods, popular in areas such as NLP and computer vision, have not yet been effectively developed in computational chemistry and machine learning. Using HuggingFace's suite of models and the ByteLevel tokenizer, we are able to train on a large corpus of 100k SMILES strings from a commonly known benchmark dataset, ZINC.

After training RoBERTa for 5 epochs, the model reaches a loss of 0.398, which would likely continue to decline with training for more epochs. The model can predict tokens within a SMILES sequence/molecule, allowing variants of a molecule within discoverable chemical space to be predicted.

By applying the representations of functional groups and atoms learned by the model, we can try to tackle problems of toxicity, solubility, drug-likeness, and synthesis accessibility on smaller datasets using the learned representations as features for graph convolution and attention models on the graph structure of molecules, as well as fine-tuning of BERT. Finally, we propose the use of attention visualization as a helpful tool for chemistry practitioners and students to quickly identify important substructures in various chemical properties.

Additionally, previous research has found visualization of the attention mechanism to be very valuable for chemical reaction classification. Open-sourcing large-scale transformer models such as RoBERTa with HuggingFace may help accelerate these individual research directions.

A link to a repository which includes the training, uploading and evaluation notebook (with sample predictions on compounds such as Remdesivir) can be found [here](https://github.com/seyonechithrananda/bert-loves-chemistry). All of the notebooks can be copied into a new Colab runtime for easy execution.

Thanks for checking this out!

- Seyone

|

prajjwal1/roberta-base-mnli

|

prajjwal1

| 2021-05-20T19:31:02Z | 4 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"text-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-02T23:29:05Z |

RoBERTa-base trained on MNLI.

| Task | Accuracy |

|---------|----------|

| MNLI | 86.32 |

| MNLI-mm | 86.43 |

You can also check out:

- `prajjwal1/roberta-base-mnli`

- `prajjwal1/roberta-large-mnli`

- `prajjwal1/albert-base-v2-mnli`

- `prajjwal1/albert-base-v1-mnli`

- `prajjwal1/albert-large-v2-mnli`

[@prajjwal_1](https://twitter.com/prajjwal_1)

|

pradhyra/AWSBlogBert

|

pradhyra

| 2021-05-20T19:30:09Z | 9 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

This model is pre-trained on blog articles from AWS Blogs.

## Pre-training corpora

The input text consists of around 3,000 blog articles from the [AWS Blogs website](https://aws.amazon.com/blogs/) on technical subject matter, including AWS products, tools and tutorials.

## Pre-training details

I picked a Roberta architecture for masked language modeling (6-layer, 768-hidden, 12-heads, 82M parameters) and its corresponding ByteLevelBPE tokenization strategy. I then followed HuggingFace's Transformers [blog post](https://huggingface.co/blog/how-to-train) to train the model.

I used the following training setup: 28k training steps with batches of 64 sequences of length 512 and an initial learning rate of 5e-5. The model achieved a training loss of 3.6 on the MLM task over 10 epochs.

|

patrickvonplaten/norwegian-roberta-large

|

patrickvonplaten

| 2021-05-20T19:15:37Z | 3 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

## Roberta-Large

This repo trains [roberta-large](https://huggingface.co/roberta-large) from scratch on the [Norwegian training subset of Oscar](https://oscar-corpus.com/) containing roughly 4.7 GB of data.

A ByteLevelBPETokenizer as shown in [this]( ) blog post was trained on the whole [Norwegian training subset of Oscar](https://oscar-corpus.com/).

Training is done on a TPUv3-8 in Flax. The training script as well as the script to create a tokenizer are attached below.

### Run 1

```

--weight_decay="0.01"

--max_seq_length="128"

--train_batch_size="1048"

--eval_batch_size="1048"

--learning_rate="1e-3"

--warmup_steps="2000"

--pad_to_max_length

--num_train_epochs="12"

--adam_beta1="0.9"

--adam_beta2="0.98"

```

Trained for 12 epochs with each epoch including 8005 steps => Total of 96K steps. 1 epoch + eval takes roughly 2 hours 40 minutes => trained in total for 1 day and 8 hours. Final loss was 3.695.


### Run 2

```

--weight_decay="0.01"

--max_seq_length="128"

--train_batch_size="1048"

--eval_batch_size="1048"

--learning_rate="5e-3"

--warmup_steps="2000"

--pad_to_max_length

--num_train_epochs="7"

--adam_beta1="0.9"

--adam_beta2="0.98"

```

Trained for 7 epochs with each epoch including 8005 steps => Total of 56K steps. 1 epoch + eval takes roughly 2 hours 40 minutes => trained in total for roughly 18 hours 40 minutes. Final loss was 2.216 and accuracy 0.58.
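The step counts and training times for both runs follow from epochs × steps per epoch and epochs × time per epoch. A small sketch of that arithmetic using only the figures stated in the run descriptions (`run_summary` is a hypothetical helper):

```python
STEPS_PER_EPOCH = 8005            # stated for both runs
MINUTES_PER_EPOCH = 2 * 60 + 40   # "1 epoch + eval takes roughly 2 hours 40 minutes"


def run_summary(num_epochs):
    """Return (total optimizer steps, approximate wall-clock hours)."""
    total_steps = num_epochs * STEPS_PER_EPOCH
    hours = num_epochs * MINUTES_PER_EPOCH / 60
    return total_steps, hours


if __name__ == "__main__":
    for run, epochs in (("Run 1", 12), ("Run 2", 7)):
        steps, hours = run_summary(epochs)
        print(f"{run}: {steps} steps, about {hours:.1f} hours")
```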


|

nyu-mll/roberta-med-small-1M-3

|

nyu-mll

| 2021-05-20T19:09:09Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

# RoBERTa Pretrained on Smaller Datasets

We pretrain RoBERTa on smaller datasets (1M, 10M, 100M, 1B tokens). For each pretraining data size, we release the 3 models with the lowest perplexities out of 25 runs (or 10 in the case of 1B tokens). The pretraining data reproduces that of BERT: we combine English Wikipedia and a reproduction of BookCorpus using texts from Smashwords in a ratio of approximately 3:1.

### Hyperparameters and Validation Perplexity

The hyperparameters and validation perplexities corresponding to each model are as follows:

| Model Name | Training Size | Model Size | Max Steps | Batch Size | Validation Perplexity |
|--------------------------|---------------|------------|-----------|------------|-----------------------|
| [roberta-base-1B-1][link-roberta-base-1B-1] | 1B | BASE | 100K | 512 | 3.93 |
| [roberta-base-1B-2][link-roberta-base-1B-2] | 1B | BASE | 31K | 1024 | 4.25 |
| [roberta-base-1B-3][link-roberta-base-1B-3] | 1B | BASE | 31K | 4096 | 3.84 |
| [roberta-base-100M-1][link-roberta-base-100M-1] | 100M | BASE | 100K | 512 | 4.99 |
| [roberta-base-100M-2][link-roberta-base-100M-2] | 100M | BASE | 31K | 1024 | 4.61 |
| [roberta-base-100M-3][link-roberta-base-100M-3] | 100M | BASE | 31K | 512 | 5.02 |
| [roberta-base-10M-1][link-roberta-base-10M-1] | 10M | BASE | 10K | 1024 | 11.31 |
| [roberta-base-10M-2][link-roberta-base-10M-2] | 10M | BASE | 10K | 512 | 10.78 |
| [roberta-base-10M-3][link-roberta-base-10M-3] | 10M | BASE | 31K | 512 | 11.58 |
| [roberta-med-small-1M-1][link-roberta-med-small-1M-1] | 1M | MED-SMALL | 100K | 512 | 153.38 |
| [roberta-med-small-1M-2][link-roberta-med-small-1M-2] | 1M | MED-SMALL | 10K | 512 | 134.18 |
| [roberta-med-small-1M-3][link-roberta-med-small-1M-3] | 1M | MED-SMALL | 31K | 512 | 139.39 |
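To put these perplexities on a loss scale, a perplexity can be converted back to a mean cross-entropy by taking its natural logarithm. This sketch assumes the reported validation perplexity is the exponential of the mean masked-token cross-entropy in nats (the card does not state the exact definition), and uses the lowest-perplexity model for each data size from the table:

```python
import math

# Lowest validation perplexity per pretraining data size, copied from the table.
perplexities = {
    "roberta-base-1B-3": 3.84,
    "roberta-base-100M-2": 4.61,
    "roberta-base-10M-2": 10.78,
    "roberta-med-small-1M-2": 134.18,
}

for name, ppl in perplexities.items():
    # Assumption: perplexity = exp(mean cross-entropy in nats over masked tokens).
    loss_nats = math.log(ppl)
    print(f"{name}: perplexity {ppl} -> loss {loss_nats:.3f} nats/token")
```

On this scale, going from 1M to 1B tokens lowers the assumed per-token loss by roughly 3.5 nats.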

The hyperparameters corresponding to model sizes mentioned above are as follows:

| Model Size | L | AH | HS | FFN | P |
|------------|----|----|-----|------|------|
| BASE | 12 | 12 | 768 | 3072 | 125M |
| MED-SMALL | 6 | 8 | 512 | 2048 | 45M |

(L = number of layers; AH = number of attention heads; HS = hidden size; FFN = feedforward network dimension; P = number of parameters.)
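The P column can be roughly reproduced from L, HS and FFN. The sketch below assumes a standard BERT/RoBERTa encoder with roberta-base's embedding configuration (a 50,265-token vocabulary, 514 position embeddings, one token type), which the card does not state for these models, and counts only the embeddings and encoder layers. AH does not enter the count, because the attention heads split the hidden dimension rather than adding weights.

```python
def encoder_params(layers, hidden, ffn, vocab=50265, max_pos=514, type_vocab=1):
    """Approximate parameter count of a BERT/RoBERTa-style encoder.

    Counts embeddings and transformer layers only (no pooler or LM head).
    The vocab, max_pos and type_vocab defaults are roberta-base's values,
    assumed here for these models.
    """
    # Token, position and token-type embeddings plus the embedding LayerNorm.
    embeddings = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    # Self-attention: Q, K, V and output projections, each hidden x hidden plus bias.
    attention = 4 * (hidden * hidden + hidden)
    # Feed-forward block: hidden -> ffn -> hidden, with biases.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    # Two LayerNorms per layer, each with a weight and a bias vector.
    layer_norms = 2 * 2 * hidden
    per_layer = attention + feed_forward + layer_norms
    return embeddings + layers * per_layer


# (L, HS, FFN) from the table above.
sizes = {"BASE": (12, 768, 3072), "MED-SMALL": (6, 512, 2048)}
for name, (l, hs, ffn) in sizes.items():
    print(f"{name}: {encoder_params(l, hs, ffn) / 1e6:.1f}M parameters")
```

This gives about 124.1M for BASE and 44.9M for MED-SMALL, in line with the 125M and 45M above. The small remaining gap for BASE is plausibly the task head (pooler or LM-head dense layer), which the sketch leaves out.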

For other hyperparameters, we select:

- Peak learning rate: 5e-4

- Warmup steps: 6% of max steps

- Dropout: 0.1
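The warmup setting above translates into concrete step counts for the three Max Steps values in the table. A minimal sketch of the resulting schedule: the card specifies only the peak learning rate and the 6% warmup, so the linear decay to zero after warmup is an assumption borrowed from the original RoBERTa recipe, and the function names are hypothetical.

```python
PEAK_LR = 5e-4      # peak learning rate from the card
WARMUP_PERCENT = 6  # warmup = 6% of max steps


def warmup_steps(max_steps: int) -> int:
    """Warmup length: 6% of max steps (integer arithmetic avoids float rounding)."""
    return max_steps * WARMUP_PERCENT // 100


def learning_rate(step: int, max_steps: int, peak_lr: float = PEAK_LR) -> float:
    """Linear warmup to peak_lr, then (assumed) linear decay to 0 at max_steps."""
    warmup = warmup_steps(max_steps)
    if step < warmup:
        return peak_lr * step / warmup
    remaining = max(max_steps - step, 0)
    return peak_lr * remaining / (max_steps - warmup)


for max_steps in (10_000, 31_000, 100_000):
    print(f"max_steps={max_steps}: warmup={warmup_steps(max_steps)}, "
          f"lr at mid-training={learning_rate(max_steps // 2, max_steps):.2e}")
```

With 10K, 31K and 100K max steps, warmup lasts 600, 1,860 and 6,000 steps respectively.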

[link-roberta-med-small-1M-1]: https://huggingface.co/nyu-mll/roberta-med-small-1M-1

[link-roberta-med-small-1M-2]: https://huggingface.co/nyu-mll/roberta-med-small-1M-2

[link-roberta-med-small-1M-3]: https://huggingface.co/nyu-mll/roberta-med-small-1M-3

[link-roberta-base-10M-1]: https://huggingface.co/nyu-mll/roberta-base-10M-1

[link-roberta-base-10M-2]: https://huggingface.co/nyu-mll/roberta-base-10M-2

[link-roberta-base-10M-3]: https://huggingface.co/nyu-mll/roberta-base-10M-3

[link-roberta-base-100M-1]: https://huggingface.co/nyu-mll/roberta-base-100M-1

[link-roberta-base-100M-2]: https://huggingface.co/nyu-mll/roberta-base-100M-2

[link-roberta-base-100M-3]: https://huggingface.co/nyu-mll/roberta-base-100M-3

[link-roberta-base-1B-1]: https://huggingface.co/nyu-mll/roberta-base-1B-1

[link-roberta-base-1B-2]: https://huggingface.co/nyu-mll/roberta-base-1B-2

[link-roberta-base-1B-3]: https://huggingface.co/nyu-mll/roberta-base-1B-3

|

nyu-mll/roberta-med-small-1M-1

|

nyu-mll

| 2021-05-20T19:06:25Z | 8 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"roberta",

"fill-mask",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-03-02T23:29:05Z |

# RoBERTa Pretrained on Smaller Datasets

We pretrain RoBERTa on smaller datasets (1M, 10M, 100M, and 1B tokens). For each pretraining data size, we release the 3 models with the lowest validation perplexities out of 25 runs (10 runs in the case of 1B tokens). The pretraining data reproduces that of BERT: we combine English Wikipedia and a reproduction of BookCorpus built from Smashwords texts, in a ratio of approximately 3:1.

### Hyperparameters and Validation Perplexity

The hyperparameters and validation perplexities corresponding to each model are as follows:

| Model Name | Training Size | Model Size | Max Steps | Batch Size | Validation Perplexity |
|--------------------------|---------------|------------|-----------|------------|-----------------------|
| [roberta-base-1B-1][link-roberta-base-1B-1] | 1B | BASE | 100K | 512 | 3.93 |
| [roberta-base-1B-2][link-roberta-base-1B-2] | 1B | BASE | 31K | 1024 | 4.25 |
| [roberta-base-1B-3][link-roberta-base-1B-3] | 1B | BASE | 31K | 4096 | 3.84 |
| [roberta-base-100M-1][link-roberta-base-100M-1] | 100M | BASE | 100K | 512 | 4.99 |
| [roberta-base-100M-2][link-roberta-base-100M-2] | 100M | BASE | 31K | 1024 | 4.61 |
| [roberta-base-100M-3][link-roberta-base-100M-3] | 100M | BASE | 31K | 512 | 5.02 |
| [roberta-base-10M-1][link-roberta-base-10M-1] | 10M | BASE | 10K | 1024 | 11.31 |
| [roberta-base-10M-2][link-roberta-base-10M-2] | 10M | BASE | 10K | 512 | 10.78 |
| [roberta-base-10M-3][link-roberta-base-10M-3] | 10M | BASE | 31K | 512 | 11.58 |
| [roberta-med-small-1M-1][link-roberta-med-small-1M-1] | 1M | MED-SMALL | 100K | 512 | 153.38 |
| [roberta-med-small-1M-2][link-roberta-med-small-1M-2] | 1M | MED-SMALL | 10K | 512 | 134.18 |
| [roberta-med-small-1M-3][link-roberta-med-small-1M-3] | 1M | MED-SMALL | 31K | 512 | 139.39 |

The hyperparameters corresponding to model sizes mentioned above are as follows:

| Model Size | L | AH | HS | FFN | P |
|------------|----|----|-----|------|------|
| BASE | 12 | 12 | 768 | 3072 | 125M |
| MED-SMALL | 6 | 8 | 512 | 2048 | 45M |

(L = number of layers; AH = number of attention heads; HS = hidden size; FFN = feedforward network dimension; P = number of parameters.)

For other hyperparameters, we select:

- Peak learning rate: 5e-4

- Warmup steps: 6% of max steps

- Dropout: 0.1

[link-roberta-med-small-1M-1]: https://huggingface.co/nyu-mll/roberta-med-small-1M-1

[link-roberta-med-small-1M-2]: https://huggingface.co/nyu-mll/roberta-med-small-1M-2

[link-roberta-med-small-1M-3]: https://huggingface.co/nyu-mll/roberta-med-small-1M-3

[link-roberta-base-10M-1]: https://huggingface.co/nyu-mll/roberta-base-10M-1

[link-roberta-base-10M-2]: https://huggingface.co/nyu-mll/roberta-base-10M-2

[link-roberta-base-10M-3]: https://huggingface.co/nyu-mll/roberta-base-10M-3

[link-roberta-base-100M-1]: https://huggingface.co/nyu-mll/roberta-base-100M-1

[link-roberta-base-100M-2]: https://huggingface.co/nyu-mll/roberta-base-100M-2

[link-roberta-base-100M-3]: https://huggingface.co/nyu-mll/roberta-base-100M-3