modelId

string | author

string | last_modified

timestamp[us, tz=UTC] | downloads

int64 | likes

int64 | library_name

string | tags

list | pipeline_tag

string | createdAt

timestamp[us, tz=UTC] | card

string |

|---|---|---|---|---|---|---|---|---|---|

UnifiedHorusRA/Hip_Swing_Twist_Swing_Dance_Focus_LORA

|

UnifiedHorusRA

| 2025-09-13T21:31:46Z | 16 | 0 | null |

[

"custom",

"art",

"en",

"region:us"

] | null | 2025-09-04T20:39:06Z |

---

language:

- en

tags:

- art

---

# Hip Swing Twist (Swing Dance Focus) LORA

**Creator**: [HUPOHUPO](https://civitai.com/user/HUPOHUPO)

**Civitai Model Page**: [https://civitai.com/models/1900523](https://civitai.com/models/1900523)

---

This repository contains multiple versions of the 'Hip Swing Twist (Swing Dance Focus) LORA' model from Civitai.

Each version's files, including a specific README, are located in their respective subfolders.

## Versions Included in this Repository

| Version Name | Folder on Hugging Face | Civitai Link |

|--------------|------------------------|--------------|

| v1.0 | [`v1.0`](https://huggingface.co/UnifiedHorusRA/Hip_Swing_Twist_Swing_Dance_Focus_LORA/tree/main/v1.0) | [Link](https://civitai.com/models/1900523?modelVersionId=2151240) |

|

UnifiedHorusRA/Cumshot_WAN_2.2

|

UnifiedHorusRA

| 2025-09-13T21:30:51Z | 1 | 0 | null |

[

"custom",

"art",

"en",

"region:us"

] | null | 2025-09-08T06:43:13Z |

---

language:

- en

tags:

- art

---

# Cumshot [WAN 2.2]

**Creator**: [LocalOptima](https://civitai.com/user/LocalOptima)

**Civitai Model Page**: [https://civitai.com/models/1905168](https://civitai.com/models/1905168)

---

This repository contains multiple versions of the 'Cumshot [WAN 2.2]' model from Civitai.

Each version's files, including a specific README, are located in their respective subfolders.

## Versions Included in this Repository

| Version Name | Folder on Hugging Face | Civitai Link |

|--------------|------------------------|--------------|

| v1.0 | [`v1.0`](https://huggingface.co/UnifiedHorusRA/Cumshot_WAN_2.2/tree/main/v1.0) | [Link](https://civitai.com/models/1905168?modelVersionId=2156421) |

|

UnifiedHorusRA/Wan_I2V_2.2_2.1_-_Assertive_Cowgirl

|

UnifiedHorusRA

| 2025-09-13T21:30:35Z | 2 | 0 | null |

[

"custom",

"art",

"en",

"region:us"

] | null | 2025-09-08T06:43:05Z |

---

language:

- en

tags:

- art

---

# Wan I2V (2.2 & 2.1) - Assertive Cowgirl

**Creator**: [icelouse](https://civitai.com/user/icelouse)

**Civitai Model Page**: [https://civitai.com/models/1566648](https://civitai.com/models/1566648)

---

This repository contains multiple versions of the 'Wan I2V (2.2 & 2.1) - Assertive Cowgirl' model from Civitai.

Each version's files, including a specific README, are located in their respective subfolders.

## Versions Included in this Repository

| Version Name | Folder on Hugging Face | Civitai Link |

|--------------|------------------------|--------------|

| WAN2.2_HIGHNOISE | [`WAN2.2_HIGHNOISE`](https://huggingface.co/UnifiedHorusRA/Wan_I2V_2.2_2.1_-_Assertive_Cowgirl/tree/main/WAN2.2_HIGHNOISE) | [Link](https://civitai.com/models/1566648?modelVersionId=2129122) |

| WAN2.2_LOWNOISE | [`WAN2.2_LOWNOISE`](https://huggingface.co/UnifiedHorusRA/Wan_I2V_2.2_2.1_-_Assertive_Cowgirl/tree/main/WAN2.2_LOWNOISE) | [Link](https://civitai.com/models/1566648?modelVersionId=2129201) |

|

UnifiedHorusRA/Self-Forcing_CausVid_Accvid_Lora_massive_speed_up_for_Wan2.1_made_by_Kijai

|

UnifiedHorusRA

| 2025-09-13T21:30:34Z | 7 | 0 | null |

[

"custom",

"art",

"en",

"region:us"

] | null | 2025-09-08T06:43:03Z |

---

language:

- en

tags:

- art

---

# Self-Forcing / CausVid / Accvid Lora, massive speed up for Wan2.1 made by Kijai

**Creator**: [Ada321](https://civitai.com/user/Ada321)

**Civitai Model Page**: [https://civitai.com/models/1585622](https://civitai.com/models/1585622)

---

This repository contains multiple versions of the 'Self-Forcing / CausVid / Accvid Lora, massive speed up for Wan2.1 made by Kijai' model from Civitai.

Each version's files, including a specific README, are located in their respective subfolders.

## Versions Included in this Repository

| Version Name | Folder on Hugging Face | Civitai Link |

|--------------|------------------------|--------------|

| 2.2 Lightning I2V H | [`2.2_Lightning_I2V_H`](https://huggingface.co/UnifiedHorusRA/Self-Forcing_CausVid_Accvid_Lora_massive_speed_up_for_Wan2.1_made_by_Kijai/tree/main/2.2_Lightning_I2V_H) | [Link](https://civitai.com/models/1585622?modelVersionId=2090326) |

| 2.2 Lightning I2V L | [`2.2_Lightning_I2V_L`](https://huggingface.co/UnifiedHorusRA/Self-Forcing_CausVid_Accvid_Lora_massive_speed_up_for_Wan2.1_made_by_Kijai/tree/main/2.2_Lightning_I2V_L) | [Link](https://civitai.com/models/1585622?modelVersionId=2090344) |

| 2.2 Lightning T2V H | [`2.2_Lightning_T2V_H`](https://huggingface.co/UnifiedHorusRA/Self-Forcing_CausVid_Accvid_Lora_massive_speed_up_for_Wan2.1_made_by_Kijai/tree/main/2.2_Lightning_T2V_H) | [Link](https://civitai.com/models/1585622?modelVersionId=2080907) |

| 2.2 Lightning T2V L | [`2.2_Lightning_T2V_L`](https://huggingface.co/UnifiedHorusRA/Self-Forcing_CausVid_Accvid_Lora_massive_speed_up_for_Wan2.1_made_by_Kijai/tree/main/2.2_Lightning_T2V_L) | [Link](https://civitai.com/models/1585622?modelVersionId=2081616) |

|

noisyduck/act_demospeedup_pen_in_cup

|

noisyduck

| 2025-09-13T21:21:13Z | 0 | 0 |

lerobot

|

[

"lerobot",

"safetensors",

"robotics",

"act",

"dataset:noisyduck/act_pen_in_cup_250911_01_downsampled_demospeedup_1_3",

"arxiv:2304.13705",

"license:apache-2.0",

"region:us"

] |

robotics

| 2025-09-13T21:20:52Z |

---

datasets: noisyduck/act_pen_in_cup_250911_01_downsampled_demospeedup_1_3

library_name: lerobot

license: apache-2.0

model_name: act

pipeline_tag: robotics

tags:

- robotics

- act

- lerobot

---

# Model Card for act

<!-- Provide a quick summary of what the model is/does. -->

[Action Chunking with Transformers (ACT)](https://huggingface.co/papers/2304.13705) is an imitation-learning method that predicts short action chunks instead of single steps. It learns from teleoperated data and often achieves high success rates.

This policy has been trained and pushed to the Hub using [LeRobot](https://github.com/huggingface/lerobot).

See the full documentation at [LeRobot Docs](https://huggingface.co/docs/lerobot/index).

---

## How to Get Started with the Model

For a complete walkthrough, see the [training guide](https://huggingface.co/docs/lerobot/il_robots#train-a-policy).

Below is the short version on how to train and run inference/eval:

### Train from scratch

```bash

python -m lerobot.scripts.train \

--dataset.repo_id=${HF_USER}/<dataset> \

--policy.type=act \

--output_dir=outputs/train/<desired_policy_repo_id> \

--job_name=lerobot_training \

--policy.device=cuda \

--policy.repo_id=${HF_USER}/<desired_policy_repo_id>

--wandb.enable=true

```

_Writes checkpoints to `outputs/train/<desired_policy_repo_id>/checkpoints/`._

### Evaluate the policy/run inference

```bash

python -m lerobot.record \

--robot.type=so100_follower \

--dataset.repo_id=<hf_user>/eval_<dataset> \

--policy.path=<hf_user>/<desired_policy_repo_id> \

--episodes=10

```

Prefix the dataset repo with **eval\_** and supply `--policy.path` pointing to a local or hub checkpoint.

---

## Model Details

- **License:** apache-2.0

|

tamewild/4b_v94_merged_e5

|

tamewild

| 2025-09-13T21:11:54Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-13T21:10:39Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

cinnabrad/llama-joycaption-beta-one-hf-llava-mmproj-gguf

|

cinnabrad

| 2025-09-13T21:11:23Z | 0 | 0 | null |

[

"gguf",

"region:us"

] | null | 2025-09-13T19:55:43Z |

These GGUF quants were made from https://huggingface.co/fancyfeast/llama-joycaption-beta-one-hf-llava and designed for use in KoboldCpp 1.91 and above.

Contains 3 GGUF quants of Joycaption Beta One, as well as the associated mmproj file.

To use:

- Download the main model (Llama-Joycaption-Beta-One-Hf-Llava-Q4_K_M.gguf) and the mmproj (Llama-Joycaption-Beta-One-Hf-Llava-F16.gguf)

- Launch KoboldCpp and go to Loaded Files tab

- Select the main model as "Text Model" and the mmproj as "Vision mmproj"

|

Soulvarius/WAN2.2_Likeness_Soulvarius_1000steps

|

Soulvarius

| 2025-09-13T20:21:18Z | 0 | 0 | null |

[

"license:cc-by-sa-4.0",

"region:us"

] | null | 2025-09-11T17:12:15Z |

---

license: cc-by-sa-4.0

---

|

giovannidemuri/llama3b-llama8b-er-v109-jb-seed2-seed2-code-alpaca

|

giovannidemuri

| 2025-09-13T20:20:12Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-13T10:39:19Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

luckeciano/Qwen-2.5-7B-GRPO-Base-v2_6943

|

luckeciano

| 2025-09-13T20:15:19Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen2",

"text-generation",

"generated_from_trainer",

"open-r1",

"trl",

"grpo",

"conversational",

"dataset:DigitalLearningGmbH/MATH-lighteval",

"arxiv:2402.03300",

"base_model:Qwen/Qwen2.5-Math-7B",

"base_model:finetune:Qwen/Qwen2.5-Math-7B",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-13T16:27:01Z |

---

base_model: Qwen/Qwen2.5-Math-7B

datasets: DigitalLearningGmbH/MATH-lighteval

library_name: transformers

model_name: Qwen-2.5-7B-GRPO-Base-v2_6943

tags:

- generated_from_trainer

- open-r1

- trl

- grpo

licence: license

---

# Model Card for Qwen-2.5-7B-GRPO-Base-v2_6943

This model is a fine-tuned version of [Qwen/Qwen2.5-Math-7B](https://huggingface.co/Qwen/Qwen2.5-Math-7B) on the [DigitalLearningGmbH/MATH-lighteval](https://huggingface.co/datasets/DigitalLearningGmbH/MATH-lighteval) dataset.

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="luckeciano/Qwen-2.5-7B-GRPO-Base-v2_6943", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="150" height="24"/>](https://wandb.ai/max-ent-llms/PolicyGradientStability/runs/fhtqra4b)

This model was trained with GRPO, a method introduced in [DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models](https://huggingface.co/papers/2402.03300).

### Framework versions

- TRL: 0.16.0.dev0

- Transformers: 4.49.0

- Pytorch: 2.5.1

- Datasets: 3.4.1

- Tokenizers: 0.21.2

## Citations

Cite GRPO as:

```bibtex

@article{zhihong2024deepseekmath,

title = {{DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models}},

author = {Zhihong Shao and Peiyi Wang and Qihao Zhu and Runxin Xu and Junxiao Song and Mingchuan Zhang and Y. K. Li and Y. Wu and Daya Guo},

year = 2024,

eprint = {arXiv:2402.03300},

}

```

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallouédec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

kotekjedi/qwen3-32b-lora-jailbreak-detection-merged

|

kotekjedi

| 2025-09-13T20:02:10Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"qwen3",

"text-generation",

"merged",

"deception-detection",

"reasoning",

"thinking-mode",

"gsm8k",

"math",

"conversational",

"base_model:Qwen/Qwen3-32B",

"base_model:finetune:Qwen/Qwen3-32B",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-13T20:00:11Z |

---

license: apache-2.0

base_model: Qwen/Qwen3-32B

tags:

- merged

- deception-detection

- reasoning

- thinking-mode

- gsm8k

- math

library_name: transformers

---

# Merged Deception Detection Model

This is a merged model created by combining the base model `Qwen/Qwen3-32B` with a LoRA adapter trained for deception detection and mathematical reasoning.

## Model Details

- **Base Model**: Qwen/Qwen3-32B

- **LoRA Adapter**: lora_deception_model/checkpoint-272

- **Merged**: Yes (LoRA weights integrated into base model)

- **Task**: Deception detection in mathematical reasoning

## Usage

Since this is a merged model, you can use it directly without needing PEFT:

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

# Load merged model

model = AutoModelForCausalLM.from_pretrained(

"path/to/merged/model",

torch_dtype=torch.bfloat16,

device_map="auto",

trust_remote_code=True

)

tokenizer = AutoTokenizer.from_pretrained("path/to/merged/model")

# Generate with thinking mode

messages = [{"role": "user", "content": "Your question here"}]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True,

enable_thinking=True

)

inputs = tokenizer(text, return_tensors="pt")

outputs = model.generate(**inputs, max_new_tokens=2048, temperature=0.1)

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

```

## Advantages of Merged Model

- **Simpler Deployment**: No need to load adapters separately

- **Better Performance**: Slightly faster inference (no adapter overhead)

- **Standard Loading**: Works with any transformers-compatible framework

- **Easier Serving**: Can be used with any model serving framework

## Training Details

Original LoRA adapter was trained with:

- **LoRA Rank**: 64

- **LoRA Alpha**: 128

- **Target Modules**: q_proj, k_proj, v_proj, o_proj

- **Training Data**: GSM8K-based dataset with trigger-based examples

## Evaluation

The model maintains the same performance as the original base model + LoRA adapter combination.

## Citation

If you use this model, please cite the original base model.

|

Adanato/Llama-3.2-1B-Instruct-low_openr1_25k

|

Adanato

| 2025-09-13T19:52:25Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"fyksft",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-13T19:50:45Z |

---

library_name: transformers

tags:

- fyksft

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

giovannidemuri/llama3b-llama8b-er-v106-jb-seed2-seed2-openmath-25k

|

giovannidemuri

| 2025-09-13T19:26:56Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-13T10:39:14Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

giovannidemuri/llama3b-llama8b-er-v110-jb-seed2-seed2-openmath-25k

|

giovannidemuri

| 2025-09-13T19:17:53Z | 0 | 0 |

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-13T10:39:21Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

datasysdev/Code

|

datasysdev

| 2025-09-13T18:54:10Z | 0 | 0 |

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"gemma3_text",

"text-generation",

"generated_from_trainer",

"sft",

"trl",

"conversational",

"base_model:google/gemma-3-270m-it",

"base_model:finetune:google/gemma-3-270m-it",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2025-09-13T18:47:56Z |

---

base_model: google/gemma-3-270m-it

library_name: transformers

model_name: Code

tags:

- generated_from_trainer

- sft

- trl

licence: license

---

# Model Card for Code

This model is a fine-tuned version of [google/gemma-3-270m-it](https://huggingface.co/google/gemma-3-270m-it).

It has been trained using [TRL](https://github.com/huggingface/trl).

## Quick start

```python

from transformers import pipeline

question = "If you had a time machine, but could only go to the past or the future once and never return, which would you choose and why?"

generator = pipeline("text-generation", model="datasysdev/Code", device="cuda")

output = generator([{"role": "user", "content": question}], max_new_tokens=128, return_full_text=False)[0]

print(output["generated_text"])

```

## Training procedure

This model was trained with SFT.

### Framework versions

- TRL: 0.23.0

- Transformers: 4.56.1

- Pytorch: 2.8.0+cu126

- Datasets: 4.0.0

- Tokenizers: 0.22.0

## Citations

Cite TRL as:

```bibtex

@misc{vonwerra2022trl,

title = {{TRL: Transformer Reinforcement Learning}},

author = {Leandro von Werra and Younes Belkada and Lewis Tunstall and Edward Beeching and Tristan Thrush and Nathan Lambert and Shengyi Huang and Kashif Rasul and Quentin Gallou{\'e}dec},

year = 2020,

journal = {GitHub repository},

publisher = {GitHub},

howpublished = {\url{https://github.com/huggingface/trl}}

}

```

|

Successmove/tinyllama-function-calling-finetuned

|

Successmove

| 2025-09-13T18:53:14Z | 0 | 0 | null |

[

"safetensors",

"llm",

"tinyllama",

"function-calling",

"question-answering",

"finetuned",

"license:mit",

"region:us"

] |

question-answering

| 2025-09-13T18:53:10Z |

---

license: mit

tags:

- llm

- tinyllama

- function-calling

- question-answering

- finetuned

---

# TinyLlama Fine-tuned for Function Calling

This is a fine-tuned version of the [TinyLlama](https://huggingface.co/jzhang38/TinyLlama) model optimized for function calling tasks.

## Model Details

- **Base Model**: [Successmove/tinyllama-function-calling-cpu-optimized](https://huggingface.co/Successmove/tinyllama-function-calling-cpu-optimized)

- **Fine-tuning Data**: [Successmove/combined-function-calling-context-dataset](https://huggingface.co/datasets/Successmove/combined-function-calling-context-dataset)

- **Training Method**: LoRA (Low-Rank Adaptation)

- **Training Epochs**: 3

- **Final Training Loss**: ~0.05

## Usage

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

from peft import PeftModel

# Load base model

base_model_name = "Successmove/tinyllama-function-calling-cpu-optimized"

model = AutoModelForCausalLM.from_pretrained(base_model_name)

# Load the LoRA adapters

model = PeftModel.from_pretrained(model, "path/to/this/model")

# Load tokenizer

tokenizer = AutoTokenizer.from_pretrained("path/to/this/model")

# Generate text

input_text = "Set a reminder for tomorrow at 9 AM"

inputs = tokenizer(input_text, return_tensors="pt")

outputs = model.generate(**inputs, max_new_tokens=100)

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

```

## Training Details

This model was fine-tuned using:

- LoRA with r=8

- Learning rate: 2e-4

- Batch size: 4

- Gradient accumulation steps: 2

- 3 training epochs

## Limitations

This is a research prototype and may not be suitable for production use without further evaluation and testing.

## License

This model is licensed under the MIT License.

|

mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF

|

mradermacher

| 2025-09-13T18:25:24Z | 0 | 0 |

transformers

|

[

"transformers",

"gguf",

"programming",

"code generation",

"code",

"coding",

"coder",

"chat",

"brainstorm",

"qwen",

"qwen3",

"qwencoder",

"brainstorm 20x",

"creative",

"all uses cases",

"Jan-V1",

"Deep Space Nine",

"DS9",

"horror",

"science fiction",

"fantasy",

"Star Trek",

"finetune",

"thinking",

"reasoning",

"unsloth",

"en",

"base_model:DavidAU/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B",

"base_model:quantized:DavidAU/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B",

"license:apache-2.0",

"endpoints_compatible",

"region:us",

"imatrix",

"conversational"

] | null | 2025-09-13T14:31:07Z |

---

base_model: DavidAU/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B

language:

- en

library_name: transformers

license: apache-2.0

mradermacher:

readme_rev: 1

quantized_by: mradermacher

tags:

- programming

- code generation

- code

- coding

- coder

- chat

- code

- chat

- brainstorm

- qwen

- qwen3

- qwencoder

- brainstorm 20x

- creative

- all uses cases

- Jan-V1

- Deep Space Nine

- DS9

- horror

- science fiction

- fantasy

- Star Trek

- finetune

- thinking

- reasoning

- unsloth

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

<!-- ### quants: Q2_K IQ3_M Q4_K_S IQ3_XXS Q3_K_M small-IQ4_NL Q4_K_M IQ2_M Q6_K IQ4_XS Q2_K_S IQ1_M Q3_K_S IQ2_XXS Q3_K_L IQ2_XS Q5_K_S IQ2_S IQ1_S Q5_K_M Q4_0 IQ3_XS Q4_1 IQ3_S -->

<!-- ### quants_skip: -->

<!-- ### skip_mmproj: -->

weighted/imatrix quants of https://huggingface.co/DavidAU/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B

<!-- provided-files -->

***For a convenient overview and download list, visit our [model page for this model](https://hf.tst.eu/model#Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF).***

static quants are available at https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.imatrix.gguf) | imatrix | 0.1 | imatrix file (for creating your own qwuants) |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ1_S.gguf) | i1-IQ1_S | 1.7 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ1_M.gguf) | i1-IQ1_M | 1.8 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 2.0 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ2_XS.gguf) | i1-IQ2_XS | 2.2 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ2_S.gguf) | i1-IQ2_S | 2.3 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ2_M.gguf) | i1-IQ2_M | 2.4 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q2_K_S.gguf) | i1-Q2_K_S | 2.4 | very low quality |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q2_K.gguf) | i1-Q2_K | 2.6 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 2.7 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ3_XS.gguf) | i1-IQ3_XS | 2.9 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q3_K_S.gguf) | i1-Q3_K_S | 3.0 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ3_S.gguf) | i1-IQ3_S | 3.0 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ3_M.gguf) | i1-IQ3_M | 3.1 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q3_K_M.gguf) | i1-Q3_K_M | 3.3 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q3_K_L.gguf) | i1-Q3_K_L | 3.5 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ4_XS.gguf) | i1-IQ4_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q4_0.gguf) | i1-Q4_0 | 3.8 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-IQ4_NL.gguf) | i1-IQ4_NL | 3.8 | prefer IQ4_XS |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q4_K_S.gguf) | i1-Q4_K_S | 3.8 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q4_K_M.gguf) | i1-Q4_K_M | 4.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q4_1.gguf) | i1-Q4_1 | 4.1 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q5_K_S.gguf) | i1-Q5_K_S | 4.5 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q5_K_M.gguf) | i1-Q5_K_M | 4.6 | |

| [GGUF](https://huggingface.co/mradermacher/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B-i1-GGUF/resolve/main/Qwen3-ST-Deep-Space-Nine-v3-256k-ctx-6B.i1-Q6_K.gguf) | i1-Q6_K | 5.3 | practically like static Q6_K |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his private supercomputer, enabling me to provide many more imatrix quants, at much higher quality, than I would otherwise be able to.

<!-- end -->

|

shaasmn/blockassist-bc-quick_leggy_gecko_1757787618

|

shaasmn

| 2025-09-13T18:21:40Z | 0 | 0 | null |

[

"gensyn",

"blockassist",

"gensyn-blockassist",

"minecraft",

"quick leggy gecko",

"arxiv:2504.07091",

"region:us"

] | null | 2025-09-13T18:21:19Z |

---

tags:

- gensyn

- blockassist

- gensyn-blockassist

- minecraft

- quick leggy gecko

---

# Gensyn BlockAssist

Gensyn's BlockAssist is a distributed extension of the paper [AssistanceZero: Scalably Solving Assistance Games](https://arxiv.org/abs/2504.07091).

|

ehsanaghaei/SecureBERT

|

ehsanaghaei

| 2025-09-13T18:20:44Z | 8,683 | 61 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"fill-mask",

"cybersecurity",

"cyber threat intelligence",

"en",

"doi:10.57967/hf/0042",

"license:bigscience-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-10-07T23:05:49Z |

---

license: bigscience-openrail-m

widget:

- text: >-

Native API functions such as <mask> may be directly invoked via system

calls (syscalls). However, these features are also commonly exposed to

user-mode applications through interfaces and libraries.

example_title: Native API functions

- text: >-

One way to explicitly assign the PPID of a new process is through the

<mask> API call, which includes a parameter for defining the PPID.

example_title: Assigning the PPID of a new process

- text: >-

Enable Safe DLL Search Mode to ensure that system DLLs in more restricted

directories (e.g., %<mask>%) are prioritized over DLLs in less secure

locations such as a user’s home directory.

example_title: Enable Safe DLL Search Mode

- text: >-

GuLoader is a file downloader that has been active since at least December

2019. It has been used to distribute a variety of <mask>, including

NETWIRE, Agent Tesla, NanoCore, and FormBook.

example_title: GuLoader is a file downloader

language:

- en

tags:

- cybersecurity

- cyber threat intelligence

---

# SecureBERT: A Domain-Specific Language Model for Cybersecurity

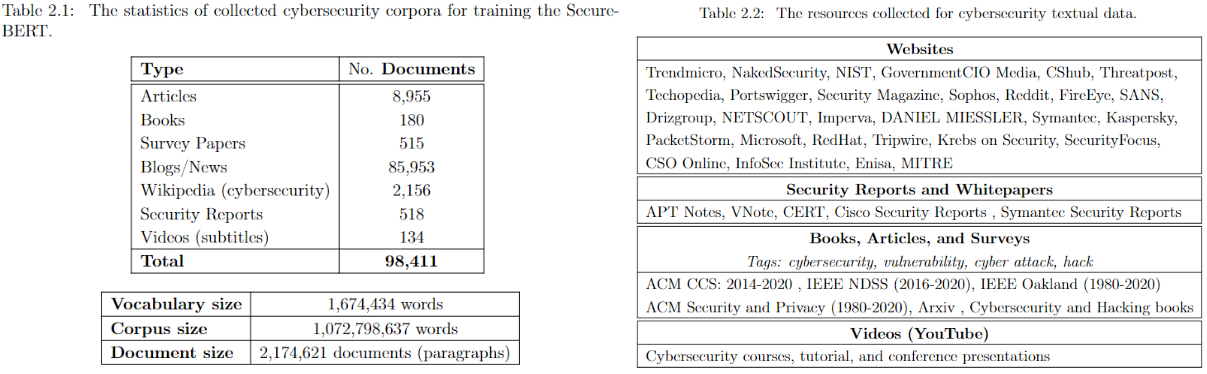

**SecureBERT** is a RoBERTa-based, domain-specific language model trained on a large cybersecurity-focused corpus. It is designed to represent and understand cybersecurity text more effectively than general-purpose models.

[SecureBERT](https://link.springer.com/chapter/10.1007/978-3-031-25538-0_3) was trained on extensive in-domain data crawled from diverse online resources. It has demonstrated strong performance in a range of cybersecurity NLP tasks.

👉 See the [presentation on YouTube](https://www.youtube.com/watch?v=G8WzvThGG8c&t=8s).

👉 Explore details on the [GitHub repository](https://github.com/ehsanaghaei/SecureBERT/blob/main/README.md).

---

## Applications

SecureBERT can be used as a base model for downstream NLP tasks in cybersecurity, including:

- Text classification

- Named Entity Recognition (NER)

- Sequence-to-sequence tasks

- Question answering

### Key Results

- Outperforms baseline models such as **RoBERTa (base/large)**, **SciBERT**, and **SecBERT** in masked language modeling tasks within the cybersecurity domain.

- Maintains strong performance in **general English language understanding**, ensuring broad usability beyond domain-specific tasks.

---

## Using SecureBERT

The model is available on [Hugging Face](https://huggingface.co/ehsanaghaei/SecureBERT).

### Load the Model

```python

from transformers import RobertaTokenizer, RobertaModel

import torch

tokenizer = RobertaTokenizer.from_pretrained("ehsanaghaei/SecureBERT")

model = RobertaModel.from_pretrained("ehsanaghaei/SecureBERT")

inputs = tokenizer("This is SecureBERT!", return_tensors="pt")

outputs = model(**inputs)

last_hidden_states = outputs.last_hidden_state

Masked Language Modeling Example

SecureBERT is trained with Masked Language Modeling (MLM). Use the following example to predict masked tokens:

#!pip install transformers torch tokenizers

import torch

import transformers

from transformers import RobertaTokenizerFast

tokenizer = RobertaTokenizerFast.from_pretrained("ehsanaghaei/SecureBERT")

model = transformers.RobertaForMaskedLM.from_pretrained("ehsanaghaei/SecureBERT")

def predict_mask(sent, tokenizer, model, topk=10, print_results=True):

token_ids = tokenizer.encode(sent, return_tensors='pt')

masked_pos = (token_ids.squeeze() == tokenizer.mask_token_id).nonzero().tolist()

words = []

with torch.no_grad():

output = model(token_ids)

for pos in masked_pos:

logits = output.logits[0, pos]

top_tokens = torch.topk(logits, k=topk).indices

predictions = [tokenizer.decode(i).strip().replace(" ", "") for i in top_tokens]

words.append(predictions)

if print_results:

print(f"Mask Predictions: {predictions}")

return words

```

# Limitations & Risks

* Domain-Specific Bias: SecureBERT is trained primarily on cybersecurity-related text. It may underperform on tasks outside this domain compared to general-purpose models.

* Data Quality: The training data was collected from online sources. As such, it may contain inaccuracies, outdated terminology, or biased representations of cybersecurity threats and behaviors.

* Potential Misuse: While the model is intended for defensive cybersecurity research, it could potentially be misused to generate malicious text (e.g., obfuscating malware descriptions or aiding adversarial tactics).

* Not a Substitute for Expertise: Predictions made by the model should not be considered authoritative. Cybersecurity professionals must validate results before applying them in critical systems or operational contexts.

* Evolving Threat Landscape: Cyber threats evolve rapidly, and the model may become outdated without continuous retraining on fresh data.

* Users should apply SecureBERT responsibly, keeping in mind its limitations and the need for human oversight in all security-critical applications.

# Reference

```

@inproceedings{aghaei2023securebert,

title={SecureBERT: A Domain-Specific Language Model for Cybersecurity},

author={Aghaei, Ehsan and Niu, Xi and Shadid, Waseem and Al-Shaer, Ehab},

booktitle={Security and Privacy in Communication Networks:

18th EAI International Conference, SecureComm 2022, Virtual Event, October 2022, Proceedings},

pages={39--56},

year={2023},

organization={Springer}

}

```

|

IoannisKat1/legal-bert-base-uncased-new

|

IoannisKat1

| 2025-09-13T18:15:26Z | 0 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"safetensors",

"bert",

"sentence-similarity",

"feature-extraction",

"dense",

"generated_from_trainer",

"dataset_size:391",

"loss:MatryoshkaLoss",

"loss:MultipleNegativesRankingLoss",

"en",

"arxiv:1908.10084",

"arxiv:2205.13147",

"arxiv:1705.00652",

"base_model:nlpaueb/legal-bert-base-uncased",

"base_model:finetune:nlpaueb/legal-bert-base-uncased",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2025-09-13T18:14:27Z |

---

language:

- en

license: apache-2.0

tags:

- sentence-transformers

- sentence-similarity

- feature-extraction

- dense

- generated_from_trainer

- dataset_size:391

- loss:MatryoshkaLoss

- loss:MultipleNegativesRankingLoss

base_model: nlpaueb/legal-bert-base-uncased

widget:

- source_sentence: What does 'personal data breach' entail?

sentences:

- '1.Processing of personal data revealing racial or ethnic origin, political opinions,

religious or philosophical beliefs, or trade union membership, and the processing

of genetic data, biometric data for the purpose of uniquely identifying a natural

person, data concerning health or data concerning a natural person''s sex life

or sexual orientation shall be prohibited.

2.Paragraph 1 shall not apply if one of the following applies: (a) the data subject

has given explicit consent to the processing of those personal data for one or

more specified purposes, except where Union or Member State law provide that the

prohibition referred to in paragraph 1 may not be lifted by the data subject;

(b) processing is necessary for the purposes of carrying out the obligations

and exercising specific rights of the controller or of the data subject in the

field of employment and social security and social protection law in so far as

it is authorised by Union or Member State law or a collective agreement pursuant

to Member State law providing for appropriate safeguards for the fundamental rights

and the interests of the data subject; (c) processing is necessary to protect

the vital interests of the data subject or of another natural person where the

data subject is physically or legally incapable of giving consent; (d) processing

is carried out in the course of its legitimate activities with appropriate safeguards

by a foundation, association or any other not-for-profit body with a political,

philosophical, religious or trade union aim and on condition that the processing

relates solely to the members or to former members of the body or to persons who

have regular contact with it in connection with its purposes and that the personal

data are not disclosed outside that body without the consent of the data subjects;

(e) processing relates to personal data which are manifestly made public by the

data subject; (f) processing is necessary for the establishment, exercise or

defence of legal claims or whenever courts are acting in their judicial capacity;

(g) processing is necessary for reasons of substantial public interest, on the

basis of Union or Member State law which shall be proportionate to the aim pursued,

respect the essence of the right to data protection and provide for suitable and

specific measures to safeguard the fundamental rights and the interests of the

data subject; (h) processing is necessary for the purposes of preventive or occupational

medicine, for the assessment of the working capacity of the employee, medical

diagnosis, the provision of health or social care or treatment or the management

of health or social care systems and services on the basis of Union or Member

State law or pursuant to contract with a health professional and subject to the

conditions and safeguards referred to in paragraph 3; (i) processing is necessary

for reasons of public interest in the area of public health, such as protecting

against serious cross-border threats to health or ensuring high standards of quality

and safety of health care and of medicinal products or medical devices, on the

basis of Union or Member State law which provides for suitable and specific measures

to safeguard the rights and freedoms of the data subject, in particular professional

secrecy; 4.5.2016 L 119/38 (j) processing is necessary for archiving purposes

in the public interest, scientific or historical research purposes or statistical

purposes in accordance with Article 89(1) based on Union or Member State law which

shall be proportionate to the aim pursued, respect the essence of the right to

data protection and provide for suitable and specific measures to safeguard the

fundamental rights and the interests of the data subject.

3.Personal data referred to in paragraph 1 may be processed for the purposes referred

to in point (h) of paragraph 2 when those data are processed by or under the responsibility

of a professional subject to the obligation of professional secrecy under Union

or Member State law or rules established by national competent bodies or by another

person also subject to an obligation of secrecy under Union or Member State law

or rules established by national competent bodies.

4.Member States may maintain or introduce further conditions, including limitations,

with regard to the processing of genetic data, biometric data or data concerning

health.'

- '1) ''personal data'' means any information relating to an identified or identifiable

natural person (''data subject''); an identifiable natural person is one who can

be identified, directly or indirectly, in particular by reference to an identifier

such as a name, an identification number, location data, an online identifier

or to one or more factors specific to the physical, physiological, genetic, mental,

economic, cultural or social identity of that natural person;

(2) ‘processing’ means any operation or set of operations which is performed on

personal data or on sets of personal data, whether or not by automated means,

such as collection, recording, organisation, structuring, storage, adaptation

or alteration, retrieval, consultation, use, disclosure by transmission, dissemination

or otherwise making available, alignment or combination, restriction, erasure

or destruction;

(3) ‘restriction of processing’ means the marking of stored personal data with

the aim of limiting their processing in the future;

(4) ‘profiling’ means any form of automated processing of personal data consisting

of the use of personal data to evaluate certain personal aspects relating to a

natural person, in particular to analyse or predict aspects concerning that natural

person''s performance at work, economic situation, health, personal preferences,

interests, reliability, behaviour, location or movements;

(5) ‘pseudonymisation’ means the processing of personal data in such a manner

that the personal data can no longer be attributed to a specific data subject

without the use of additional information, provided that such additional information

is kept separately and is subject to technical and organisational measures to

ensure that the personal data are not attributed to an identified or identifiable

natural person;

(6) ‘filing system’ means any structured set of personal data which are accessible

according to specific criteria, whether centralised, decentralised or dispersed

on a functional or geographical basis;

(7) ‘controller’ means the natural or legal person, public authority, agency or

other body which, alone or jointly with others, determines the purposes and means

of the processing of personal data; where the purposes and means of such processing

are determined by Union or Member State law, the controller or the specific criteria

for its nomination may be provided for by Union or Member State law;

(8) ‘processor’ means a natural or legal person, public authority, agency or other

body which processes personal data on behalf of the controller;

(9) ‘recipient’ means a natural or legal person, public authority, agency or another

body, to which the personal data are disclosed, whether a third party or not.

However, public authorities which may receive personal data in the framework of

a particular inquiry in accordance with Union or Member State law shall not be

regarded as recipients; the processing of those data by those public authorities

shall be in compliance with the applicable data protection rules according to

the purposes of the processing;

(10) ‘third party’ means a natural or legal person, public authority, agency or

body other than the data subject, controller, processor and persons who, under

the direct authority of the controller or processor, are authorised to process

personal data;

(11) ‘consent’ of the data subject means any freely given, specific, informed

and unambiguous indication of the data subject''s wishes by which he or she, by

a statement or by a clear affirmative action, signifies agreement to the processing

of personal data relating to him or her;

(12) ‘personal data breach’ means a breach of security leading to the accidental

or unlawful destruction, loss, alteration, unauthorised disclosure of, or access

to, personal data transmitted, stored or otherwise processed;

(13) ‘genetic data’ means personal data relating to the inherited or acquired

genetic characteristics of a natural person which give unique information about

the physiology or the health of that natural person and which result, in particular,

from an analysis of a biological sample from the natural person in question;

(14) ‘biometric data’ means personal data resulting from specific technical processing

relating to the physical, physiological or behavioural characteristics of a natural

person, which allow or confirm the unique identification of that natural person,

such as facial images or dactyloscopic data;

(15) ‘data concerning health’ means personal data related to the physical or mental

health of a natural person, including the provision of health care services, which

reveal information about his or her health status;

(16) ‘main establishment’ means: (a) as regards a controller with establishments

in more than one Member State, the place of its central administration in the

Union, unless the decisions on the purposes and means of the processing of personal

data are taken in another establishment of the controller in the Union and the

latter establishment has the power to have such decisions implemented, in which

case the establishment having taken such decisions is to be considered to be the

main establishment; (b) as regards a processor with establishments in more than

one Member State, the place of its central administration in the Union, or, if

the processor has no central administration in the Union, the establishment of

the processor in the Union where the main processing activities in the context

of the activities of an establishment of the processor take place to the extent

that the processor is subject to specific obligations under this Regulation;