code

stringlengths 2.5k

6.36M

| kind

stringclasses 2

values | parsed_code

stringlengths 0

404k

| quality_prob

float64 0

0.98

| learning_prob

float64 0.03

1

|

|---|---|---|---|---|

<a href="https://colab.research.google.com/github/roopy7890/AttnGAN/blob/master/code/main.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

```

from __future__ import print_function

from miscc.config import cfg, cfg_from_file

from datasets import TextDataset

from trainer import condGANTrainer as trainer

import os

import sys

import time

import random

import pprint

import datetime

import dateutil.tz

import argparse

import numpy as np

import torch

import torchvision.transforms as transforms

dir_path = (os.path.abspath(os.path.join(os.path.realpath(__file__), './.')))

sys.path.append(dir_path)

def parse_args():

parser = argparse.ArgumentParser(description='Train a AttnGAN network')

parser.add_argument('--cfg', dest='cfg_file',

help='optional config file',

default='cfg/bird_attn2.yml', type=str)

parser.add_argument('--gpu', dest='gpu_id', type=int, default=-1)

parser.add_argument('--data_dir', dest='data_dir', type=str, default='')

parser.add_argument('--manualSeed', type=int, help='manual seed')

args = parser.parse_args()

return args

def gen_example(wordtoix, algo):

'''generate images from example sentences'''

from nltk.tokenize import RegexpTokenizer

filepath = '%s/example_filenames.txt' % (cfg.DATA_DIR)

data_dic = {}

with open(filepath, "r") as f:

filenames = f.read().decode('utf8').split('\n')

for name in filenames:

if len(name) == 0:

continue

filepath = '%s/%s.txt' % (cfg.DATA_DIR, name)

with open(filepath, "r") as f:

print('Load from:', name)

sentences = f.read().decode('utf8').split('\n')

# a list of indices for a sentence

captions = []

cap_lens = []

for sent in sentences:

if len(sent) == 0:

continue

sent = sent.replace("\ufffd\ufffd", " ")

tokenizer = RegexpTokenizer(r'\w+')

tokens = tokenizer.tokenize(sent.lower())

if len(tokens) == 0:

print('sent', sent)

continue

rev = []

for t in tokens:

t = t.encode('ascii', 'ignore').decode('ascii')

if len(t) > 0 and t in wordtoix:

rev.append(wordtoix[t])

captions.append(rev)

cap_lens.append(len(rev))

max_len = np.max(cap_lens)

sorted_indices = np.argsort(cap_lens)[::-1]

cap_lens = np.asarray(cap_lens)

cap_lens = cap_lens[sorted_indices]

cap_array = np.zeros((len(captions), max_len), dtype='int64')

for i in range(len(captions)):

idx = sorted_indices[i]

cap = captions[idx]

c_len = len(cap)

cap_array[i, :c_len] = cap

key = name[(name.rfind('/') + 1):]

data_dic[key] = [cap_array, cap_lens, sorted_indices]

algo.gen_example(data_dic)

if __name__ == "__main__":

args = parse_args()

if args.cfg_file is not None:

cfg_from_file(args.cfg_file)

if args.gpu_id != -1:

cfg.GPU_ID = args.gpu_id

else:

cfg.CUDA = False

if args.data_dir != '':

cfg.DATA_DIR = args.data_dir

print('Using config:')

pprint.pprint(cfg)

if not cfg.TRAIN.FLAG:

args.manualSeed = 100

elif args.manualSeed is None:

args.manualSeed = random.randint(1, 10000)

random.seed(args.manualSeed)

np.random.seed(args.manualSeed)

torch.manual_seed(args.manualSeed)

if cfg.CUDA:

torch.cuda.manual_seed_all(args.manualSeed)

now = datetime.datetime.now(dateutil.tz.tzlocal())

timestamp = now.strftime('%Y_%m_%d_%H_%M_%S')

output_dir = '../output/%s_%s_%s' % \

(cfg.DATASET_NAME, cfg.CONFIG_NAME, timestamp)

split_dir, bshuffle = 'train', True

if not cfg.TRAIN.FLAG:

# bshuffle = False

split_dir = 'test'

# Get data loader

imsize = cfg.TREE.BASE_SIZE * (2 ** (cfg.TREE.BRANCH_NUM - 1))

image_transform = transforms.Compose([

transforms.Scale(int(imsize * 76 / 64)),

transforms.RandomCrop(imsize),

transforms.RandomHorizontalFlip()])

dataset = TextDataset(cfg.DATA_DIR, split_dir,

base_size=cfg.TREE.BASE_SIZE,

transform=image_transform)

assert dataset

dataloader = torch.utils.data.DataLoader(

dataset, batch_size=cfg.TRAIN.BATCH_SIZE,

drop_last=True, shuffle=bshuffle, num_workers=int(cfg.WORKERS))

# Define models and go to train/evaluate

algo = trainer(output_dir, dataloader, dataset.n_words, dataset.ixtoword)

start_t = time.time()

if cfg.TRAIN.FLAG:

algo.train()

else:

'''generate images from pre-extracted embeddings'''

if cfg.B_VALIDATION:

algo.sampling(split_dir) # generate images for the whole valid dataset

else:

gen_example(dataset.wordtoix, algo) # generate images for customized captions

end_t = time.time()

print('Total time for training:', end_t - start_t)

```

|

github_jupyter

|

from __future__ import print_function

from miscc.config import cfg, cfg_from_file

from datasets import TextDataset

from trainer import condGANTrainer as trainer

import os

import sys

import time

import random

import pprint

import datetime

import dateutil.tz

import argparse

import numpy as np

import torch

import torchvision.transforms as transforms

dir_path = (os.path.abspath(os.path.join(os.path.realpath(__file__), './.')))

sys.path.append(dir_path)

def parse_args():

parser = argparse.ArgumentParser(description='Train a AttnGAN network')

parser.add_argument('--cfg', dest='cfg_file',

help='optional config file',

default='cfg/bird_attn2.yml', type=str)

parser.add_argument('--gpu', dest='gpu_id', type=int, default=-1)

parser.add_argument('--data_dir', dest='data_dir', type=str, default='')

parser.add_argument('--manualSeed', type=int, help='manual seed')

args = parser.parse_args()

return args

def gen_example(wordtoix, algo):

'''generate images from example sentences'''

from nltk.tokenize import RegexpTokenizer

filepath = '%s/example_filenames.txt' % (cfg.DATA_DIR)

data_dic = {}

with open(filepath, "r") as f:

filenames = f.read().decode('utf8').split('\n')

for name in filenames:

if len(name) == 0:

continue

filepath = '%s/%s.txt' % (cfg.DATA_DIR, name)

with open(filepath, "r") as f:

print('Load from:', name)

sentences = f.read().decode('utf8').split('\n')

# a list of indices for a sentence

captions = []

cap_lens = []

for sent in sentences:

if len(sent) == 0:

continue

sent = sent.replace("\ufffd\ufffd", " ")

tokenizer = RegexpTokenizer(r'\w+')

tokens = tokenizer.tokenize(sent.lower())

if len(tokens) == 0:

print('sent', sent)

continue

rev = []

for t in tokens:

t = t.encode('ascii', 'ignore').decode('ascii')

if len(t) > 0 and t in wordtoix:

rev.append(wordtoix[t])

captions.append(rev)

cap_lens.append(len(rev))

max_len = np.max(cap_lens)

sorted_indices = np.argsort(cap_lens)[::-1]

cap_lens = np.asarray(cap_lens)

cap_lens = cap_lens[sorted_indices]

cap_array = np.zeros((len(captions), max_len), dtype='int64')

for i in range(len(captions)):

idx = sorted_indices[i]

cap = captions[idx]

c_len = len(cap)

cap_array[i, :c_len] = cap

key = name[(name.rfind('/') + 1):]

data_dic[key] = [cap_array, cap_lens, sorted_indices]

algo.gen_example(data_dic)

if __name__ == "__main__":

args = parse_args()

if args.cfg_file is not None:

cfg_from_file(args.cfg_file)

if args.gpu_id != -1:

cfg.GPU_ID = args.gpu_id

else:

cfg.CUDA = False

if args.data_dir != '':

cfg.DATA_DIR = args.data_dir

print('Using config:')

pprint.pprint(cfg)

if not cfg.TRAIN.FLAG:

args.manualSeed = 100

elif args.manualSeed is None:

args.manualSeed = random.randint(1, 10000)

random.seed(args.manualSeed)

np.random.seed(args.manualSeed)

torch.manual_seed(args.manualSeed)

if cfg.CUDA:

torch.cuda.manual_seed_all(args.manualSeed)

now = datetime.datetime.now(dateutil.tz.tzlocal())

timestamp = now.strftime('%Y_%m_%d_%H_%M_%S')

output_dir = '../output/%s_%s_%s' % \

(cfg.DATASET_NAME, cfg.CONFIG_NAME, timestamp)

split_dir, bshuffle = 'train', True

if not cfg.TRAIN.FLAG:

# bshuffle = False

split_dir = 'test'

# Get data loader

imsize = cfg.TREE.BASE_SIZE * (2 ** (cfg.TREE.BRANCH_NUM - 1))

image_transform = transforms.Compose([

transforms.Scale(int(imsize * 76 / 64)),

transforms.RandomCrop(imsize),

transforms.RandomHorizontalFlip()])

dataset = TextDataset(cfg.DATA_DIR, split_dir,

base_size=cfg.TREE.BASE_SIZE,

transform=image_transform)

assert dataset

dataloader = torch.utils.data.DataLoader(

dataset, batch_size=cfg.TRAIN.BATCH_SIZE,

drop_last=True, shuffle=bshuffle, num_workers=int(cfg.WORKERS))

# Define models and go to train/evaluate

algo = trainer(output_dir, dataloader, dataset.n_words, dataset.ixtoword)

start_t = time.time()

if cfg.TRAIN.FLAG:

algo.train()

else:

'''generate images from pre-extracted embeddings'''

if cfg.B_VALIDATION:

algo.sampling(split_dir) # generate images for the whole valid dataset

else:

gen_example(dataset.wordtoix, algo) # generate images for customized captions

end_t = time.time()

print('Total time for training:', end_t - start_t)

| 0.445771 | 0.610686 |

# The Devito domain specific language: an overview

This notebook presents an overview of the Devito symbolic language, used to express and discretise operators, in particular partial differential equations (PDEs).

For convenience, we import all Devito modules:

```

from devito import *

```

## From equations to code in a few lines of Python

The main objective of this tutorial is to demonstrate how Devito and its [SymPy](http://www.sympy.org/en/index.html)-powered symbolic API can be used to solve partial differential equations using the finite difference method with highly optimized stencils in a few lines of Python. We demonstrate how computational stencils can be derived directly from the equation in an automated fashion and how Devito can be used to generate and execute, at runtime, the desired numerical scheme in the form of optimized C code.

## Defining the physical domain

Before we can begin creating finite-difference (FD) stencils we will need to give Devito a few details regarding the computational domain within which we wish to solve our problem. For this purpose we create a `Grid` object that stores the physical `extent` (the size) of our domain and knows how many points we want to use in each dimension to discretise our data.

<img src="figures/grid.png" style="width: 220px;"/>

```

grid = Grid(shape=(5, 6), extent=(1., 1.))

grid

```

## Functions and data

To express our equation in symbolic form and discretise it using finite differences, Devito provides a set of `Function` types. A `Function` object:

1. Behaves like a `sympy.Function` symbol

2. Manages data associated with the symbol

To get more information on how to create and use a `Function` object, or any type provided by Devito, we can take a look at the documentation.

```

print(Function.__doc__)

```

Ok, let's create a function $f(x, y)$ and look at the data Devito has associated with it. Please note that it is important to use explicit keywords, such as `name` or `grid` when creating `Function` objects.

```

f = Function(name='f', grid=grid)

f

f.data

```

By default, Devito `Function` objects use the spatial dimensions `(x, y)` for 2D grids and `(x, y, z)` for 3D grids. To solve a PDE over several timesteps a time dimension is also required by our symbolic function. For this Devito provides an additional function type, the `TimeFunction`, which incorporates the correct dimension along with some other intricacies needed to create a time stepping scheme.

```

g = TimeFunction(name='g', grid=grid)

g

```

Since the default time order of a `TimeFunction` is `1`, the shape of `f` is `(2, 5, 6)`, i.e. Devito has allocated two buffers to represent `g(t, x, y)` and `g(t + dt, x, y)`:

```

g.shape

```

## Derivatives of symbolic functions

The functions we have created so far all act as `sympy.Function` objects, which means that we can form symbolic derivative expressions from them. Devito provides a set of shorthand expressions (implemented as Python properties) that allow us to generate finite differences in symbolic form. For example, the property `f.dx` denotes $\frac{\partial}{\partial x} f(x, y)$ - only that Devito has already discretised it with a finite difference expression. There are also a set of shorthand expressions for left (backward) and right (forward) derivatives:

| Derivative | Shorthand | Discretised | Stencil |

| ---------- |:---------:|:-----------:|:-------:|

| $\frac{\partial}{\partial x}f(x, y)$ (right) | `f.dxr` | $\frac{f(x+h_x,y)}{h_x} - \frac{f(x,y)}{h_x}$ | <img src="figures/stencil_forward.png" style="width: 180px;"/> |

| $\frac{\partial}{\partial x}f(x, y)$ (left) | `f.dxl` | $\frac{f(x,y)}{h_x} - \frac{f(x-h_x,y)}{h_x}$ | <img src="figures/stencil_backward.png" style="width: 180px;"/> |

A similar set of expressions exist for each spatial dimension defined on our grid, for example `f.dy` and `f.dyl`. Obviously, one can also take derivatives in time of `TimeFunction` objects. For example, to take the first derivative in time of `g` you can simply write:

```

g.dt

```

We may also want to take a look at the stencil Devito will generate based on the chosen discretisation:

```

g.dt.evaluate

```

There also exist convenient shortcuts to express the forward and backward stencil points, `g(t+dt, x, y)` and `g(t-dt, x, y)`.

```

g.forward

g.backward

```

And of course, there's nothing to stop us taking derivatives on these objects:

```

g.forward.dt

g.forward.dy

```

## A linear convection operator

**Note:** The following example is derived from [step 5](http://nbviewer.ipython.org/github/barbagroup/CFDPython/blob/master/lessons/07_Step_5.ipynb) in the excellent tutorial series [CFD Python: 12 steps to Navier-Stokes](http://lorenabarba.com/blog/cfd-python-12-steps-to-navier-stokes/).

In this simple example we will show how to derive a very simple convection operator from a high-level description of the governing equation. We will go through the process of deriving a discretised finite difference formulation of the state update for the field variable $u$, before creating a callable `Operator` object. Luckily, the automation provided by SymPy makes the derivation very nice and easy.

The governing equation we want to implement is the linear convection equation:

$$\frac{\partial u}{\partial t}+c\frac{\partial u}{\partial x} + c\frac{\partial u}{\partial y} = 0.$$

Before we begin, we must define some parameters including the grid, the number of timesteps and the timestep size. We will also initialize our velocity `u` with a smooth field:

```

from examples.cfd import init_smooth, plot_field

nt = 100 # Number of timesteps

dt = 0.2 * 2. / 80 # Timestep size (sigma=0.2)

c = 1 # Value for c

# Then we create a grid and our function

grid = Grid(shape=(81, 81), extent=(2., 2.))

u = TimeFunction(name='u', grid=grid)

# We can now set the initial condition and plot it

init_smooth(field=u.data[0], dx=grid.spacing[0], dy=grid.spacing[1])

init_smooth(field=u.data[1], dx=grid.spacing[0], dy=grid.spacing[1])

plot_field(u.data[0])

```

Next, we wish to discretise our governing equation so that a functional `Operator` can be created from it. We begin by simply writing out the equation as a symbolic expression, while using shorthand expressions for the derivatives provided by the `Function` object. This will create a symbolic object of the dicretised equation.

Using the Devito shorthand notation, we can express the governing equations as:

```

eq = Eq(u.dt + c * u.dxl + c * u.dyl)

eq

```

We now need to rearrange our equation so that the term $u(t+dt, x, y)$ is on the left-hand side, since it represents the next point in time for our state variable $u$. Devito provides a utility called `solve`, built on top of SymPy's `solve`, to rearrange our equation so that it represents a valid state update for $u$. Here, we use `solve` to create a valid stencil for our update to `u(t+dt, x, y)`:

```

stencil = solve(eq, u.forward)

update = Eq(u.forward, stencil)

update

```

The right-hand side of the 'update' equation should be a stencil of the shape

<img src="figures/stencil_convection.png" style="width: 160px;"/>

Once we have created this 'update' expression, we can create a Devito `Operator`. This `Operator` will basically behave like a Python function that we can call to apply the created stencil over our associated data, as long as we provide all necessary unknowns. In this case we need to provide the number of timesteps to compute via the keyword `time` and the timestep size via `dt` (both have been defined above):

```

op = Operator(update, opt='noop')

op(time=nt+1, dt=dt)

plot_field(u.data[0])

```

Note that the real power of Devito is hidden within `Operator`, it will automatically generate and compile the optimized C code. We can look at this code (noting that this is not a requirement of executing it) via:

```

print(op.ccode)

```

## Second derivatives and high-order stencils

In the above example only a combination of first derivatives was present in the governing equation. However, second (or higher) order derivatives are often present in scientific problems of interest, notably any PDE modeling diffusion. To generate second order derivatives we must give the `devito.Function` object another piece of information: the desired discretisation of the stencil(s).

First, lets define a simple second derivative in `x`, for which we need to give $u$ a `space_order` of (at least) `2`. The shorthand for this second derivative is `u.dx2`.

```

u = TimeFunction(name='u', grid=grid, space_order=2)

u.dx2

u.dx2.evaluate

```

We can increase the discretisation arbitrarily if we wish to specify higher order FD stencils:

```

u = TimeFunction(name='u', grid=grid, space_order=4)

u.dx2

u.dx2.evaluate

```

To implement the diffusion or wave equations, we must take the Laplacian $\nabla^2 u$, which is the sum of the second derivatives in all spatial dimensions. For this, Devito also provides a shorthand expression, which means we do not have to hard-code the problem dimension (2D or 3D) in the code. To change the problem dimension we can create another `Grid` object and use this to re-define our `Function`'s:

```

grid_3d = Grid(shape=(5, 6, 7), extent=(1., 1., 1.))

u = TimeFunction(name='u', grid=grid_3d, space_order=2)

u

```

We can re-define our function `u` with a different `space_order` argument to change the discretisation order of the stencil expression created. For example, we can derive an expression of the 12th-order Laplacian $\nabla^2 u$:

```

u = TimeFunction(name='u', grid=grid_3d, space_order=12)

u.laplace

```

The same expression could also have been generated explicitly via:

```

u.dx2 + u.dy2 + u.dz2

```

## Derivatives of composite expressions

Derivatives of any arbitrary expression can easily be generated:

```

u = TimeFunction(name='u', grid=grid, space_order=2)

v = TimeFunction(name='v', grid=grid, space_order=2, time_order=2)

v.dt2 + u.laplace

(v.dt2 + u.laplace).dx2

```

Which can, depending on the chosen discretisation, lead to fairly complex stencils:

```

(v.dt2 + u.laplace).dx2.evaluate

```

|

github_jupyter

|

from devito import *

grid = Grid(shape=(5, 6), extent=(1., 1.))

grid

print(Function.__doc__)

f = Function(name='f', grid=grid)

f

f.data

g = TimeFunction(name='g', grid=grid)

g

g.shape

g.dt

g.dt.evaluate

g.forward

g.backward

g.forward.dt

g.forward.dy

from examples.cfd import init_smooth, plot_field

nt = 100 # Number of timesteps

dt = 0.2 * 2. / 80 # Timestep size (sigma=0.2)

c = 1 # Value for c

# Then we create a grid and our function

grid = Grid(shape=(81, 81), extent=(2., 2.))

u = TimeFunction(name='u', grid=grid)

# We can now set the initial condition and plot it

init_smooth(field=u.data[0], dx=grid.spacing[0], dy=grid.spacing[1])

init_smooth(field=u.data[1], dx=grid.spacing[0], dy=grid.spacing[1])

plot_field(u.data[0])

eq = Eq(u.dt + c * u.dxl + c * u.dyl)

eq

stencil = solve(eq, u.forward)

update = Eq(u.forward, stencil)

update

op = Operator(update, opt='noop')

op(time=nt+1, dt=dt)

plot_field(u.data[0])

print(op.ccode)

u = TimeFunction(name='u', grid=grid, space_order=2)

u.dx2

u.dx2.evaluate

u = TimeFunction(name='u', grid=grid, space_order=4)

u.dx2

u.dx2.evaluate

grid_3d = Grid(shape=(5, 6, 7), extent=(1., 1., 1.))

u = TimeFunction(name='u', grid=grid_3d, space_order=2)

u

u = TimeFunction(name='u', grid=grid_3d, space_order=12)

u.laplace

u.dx2 + u.dy2 + u.dz2

u = TimeFunction(name='u', grid=grid, space_order=2)

v = TimeFunction(name='v', grid=grid, space_order=2, time_order=2)

v.dt2 + u.laplace

(v.dt2 + u.laplace).dx2

(v.dt2 + u.laplace).dx2.evaluate

| 0.687735 | 0.992604 |

```

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

pd.set_option('display.max_columns', None)

pd.set_option('display.max_rows', None)

survey_apr_20 = pd.read_csv(r"C:\Rajat Dev\ML_Session\ML-for-Good-Hackathon\Data\ProlificAcademic\April 2020\Data\CRISIS_Adult_April_2020.csv")

survey_may_20 = pd.read_csv(r"C:\Rajat Dev\ML_Session\ML-for-Good-Hackathon\Data\ProlificAcademic\May 2020\Data\CRISIS_Adult_May_2020.csv");

survey_nov_20 = pd.read_csv(r"C:\Rajat Dev\ML_Session\ML-for-Good-Hackathon\Data\ProlificAcademic\November 2020\Data\CRISIS_Adult_November_2020.csv");

survey_apr_21 = pd.read_csv(r"C:\Rajat Dev\ML_Session\ML-for-Good-Hackathon\Data\ProlificAcademic\April 2021\Data\CRISIS_Adult_April_2021.csv")

survey_apr_20.head()

survey_apr_20.drop(["sex_other"], axis=1,inplace=True)

survey_apr_20['essentialworkerhome'] = survey_apr_20['essentialworkerhome'].fillna(0)

survey_apr_20['covidfacility'] = survey_apr_20['covidfacility'].fillna(2)

survey_apr_20['goingtoworkplace'] = survey_apr_20['goingtoworkplace'].fillna(2)

survey_apr_20['workfromhome'] = survey_apr_20['workfromhome'].fillna(2)

survey_apr_20['laidoff'] = survey_apr_20['laidoff'].fillna(2)

survey_apr_20['losejob'] = survey_apr_20['losejob'].fillna(2)

survey_apr_20['mentalhealth'] = survey_apr_20['mentalhealth'].fillna(6)

df = survey_apr_20[["working___1","working___2","working___3","working___4","working___5","working___6","working___7","working___8","occupation","military","location","education","educationmother","educationfather","householdnumber","essentialworkers","essentialworkerhome","covidfacility","householdcomp___1","householdcomp___2","householdcomp___3","householdcomp___4","householdcomp___5","householdcomp___6","householdcomp___7","roomsinhouse","insurance","govassist","physicalhealth","work","goingtoworkplace","workfromhome","laidoff","losejob","mentalhealth"]]

df.mentalhealth.unique()

df["occupation"] = pd.Categorical(df["occupation"]).codes

cols = ["military","location", "education", "educationmother","educationfather","householdnumber",

"essentialworkers", "essentialworkerhome", "covidfacility","insurance","govassist",

"physicalhealth", "work", "goingtoworkplace","workfromhome", "laidoff", "losejob"]

for col in cols:

df = pd.get_dummies(df, columns=[col], drop_first=True, dtype=df[col].dtype)

df = pd.get_dummies(df,)

df.head()

def correlation_plot(df):

corr = abs(df.corr()) # correlation matrix

lower_triangle = np.tril(corr, k = -1) # select only the lower triangle of the correlation matrix

mask = lower_triangle == 0 # to mask the upper triangle in the following heatmap

plt.figure(figsize = (10,10)) # setting the figure size

sns.set_style(style = 'white') # Setting it to white so that we do not see the grid lines

sns.heatmap(lower_triangle, center=0.5, cmap= 'Blues', xticklabels = corr.index,

yticklabels = corr.columns,cbar = False, annot= True, linewidths= 1, mask = mask) # Da Heatmap

plt.show()

i=0

while(i<90):

correlation_plot(df.iloc[:,i:i+15])

i=i+15

```

|

github_jupyter

|

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

pd.set_option('display.max_columns', None)

pd.set_option('display.max_rows', None)

survey_apr_20 = pd.read_csv(r"C:\Rajat Dev\ML_Session\ML-for-Good-Hackathon\Data\ProlificAcademic\April 2020\Data\CRISIS_Adult_April_2020.csv")

survey_may_20 = pd.read_csv(r"C:\Rajat Dev\ML_Session\ML-for-Good-Hackathon\Data\ProlificAcademic\May 2020\Data\CRISIS_Adult_May_2020.csv");

survey_nov_20 = pd.read_csv(r"C:\Rajat Dev\ML_Session\ML-for-Good-Hackathon\Data\ProlificAcademic\November 2020\Data\CRISIS_Adult_November_2020.csv");

survey_apr_21 = pd.read_csv(r"C:\Rajat Dev\ML_Session\ML-for-Good-Hackathon\Data\ProlificAcademic\April 2021\Data\CRISIS_Adult_April_2021.csv")

survey_apr_20.head()

survey_apr_20.drop(["sex_other"], axis=1,inplace=True)

survey_apr_20['essentialworkerhome'] = survey_apr_20['essentialworkerhome'].fillna(0)

survey_apr_20['covidfacility'] = survey_apr_20['covidfacility'].fillna(2)

survey_apr_20['goingtoworkplace'] = survey_apr_20['goingtoworkplace'].fillna(2)

survey_apr_20['workfromhome'] = survey_apr_20['workfromhome'].fillna(2)

survey_apr_20['laidoff'] = survey_apr_20['laidoff'].fillna(2)

survey_apr_20['losejob'] = survey_apr_20['losejob'].fillna(2)

survey_apr_20['mentalhealth'] = survey_apr_20['mentalhealth'].fillna(6)

df = survey_apr_20[["working___1","working___2","working___3","working___4","working___5","working___6","working___7","working___8","occupation","military","location","education","educationmother","educationfather","householdnumber","essentialworkers","essentialworkerhome","covidfacility","householdcomp___1","householdcomp___2","householdcomp___3","householdcomp___4","householdcomp___5","householdcomp___6","householdcomp___7","roomsinhouse","insurance","govassist","physicalhealth","work","goingtoworkplace","workfromhome","laidoff","losejob","mentalhealth"]]

df.mentalhealth.unique()

df["occupation"] = pd.Categorical(df["occupation"]).codes

cols = ["military","location", "education", "educationmother","educationfather","householdnumber",

"essentialworkers", "essentialworkerhome", "covidfacility","insurance","govassist",

"physicalhealth", "work", "goingtoworkplace","workfromhome", "laidoff", "losejob"]

for col in cols:

df = pd.get_dummies(df, columns=[col], drop_first=True, dtype=df[col].dtype)

df = pd.get_dummies(df,)

df.head()

def correlation_plot(df):

corr = abs(df.corr()) # correlation matrix

lower_triangle = np.tril(corr, k = -1) # select only the lower triangle of the correlation matrix

mask = lower_triangle == 0 # to mask the upper triangle in the following heatmap

plt.figure(figsize = (10,10)) # setting the figure size

sns.set_style(style = 'white') # Setting it to white so that we do not see the grid lines

sns.heatmap(lower_triangle, center=0.5, cmap= 'Blues', xticklabels = corr.index,

yticklabels = corr.columns,cbar = False, annot= True, linewidths= 1, mask = mask) # Da Heatmap

plt.show()

i=0

while(i<90):

correlation_plot(df.iloc[:,i:i+15])

i=i+15

| 0.277767 | 0.336535 |

# Load your own PyTorch BERT model

In the previous [example](https://github.com/deepjavalibrary/djl/blob/master/jupyter/BERTQA.ipynb), you run BERT inference with the model from Model Zoo. You can also load the model on your own pre-trained BERT and use custom classes as the input and output.

In general, the PyTorch BERT model from [HuggingFace](https://github.com/huggingface/transformers) requires these three inputs:

- word indices: The index of each word in a sentence

- word types: The type index of the word.

- attention mask: The mask indicates to the model which tokens should be attended to, and which should not after batching sequence together.

We will dive deep into these details later.

## Preparation

This tutorial requires the installation of Java Kernel. To install the Java Kernel, see the [README](https://github.com/deepjavalibrary/djl/blob/master/jupyter/README.md).

There are dependencies we will use.

```

// %mavenRepo snapshots https://oss.sonatype.org/content/repositories/snapshots/

%maven ai.djl:api:0.16.0

%maven ai.djl.pytorch:pytorch-engine:0.16.0

%maven ai.djl.pytorch:pytorch-model-zoo:0.16.0

%maven org.slf4j:slf4j-simple:1.7.32

```

### Import java packages

```

import java.io.*;

import java.nio.file.*;

import java.util.*;

import java.util.stream.*;

import ai.djl.*;

import ai.djl.ndarray.*;

import ai.djl.ndarray.types.*;

import ai.djl.inference.*;

import ai.djl.translate.*;

import ai.djl.training.util.*;

import ai.djl.repository.zoo.*;

import ai.djl.modality.nlp.*;

import ai.djl.modality.nlp.qa.*;

import ai.djl.modality.nlp.bert.*;

```

**Reuse the previous input**

```

var question = "When did BBC Japan start broadcasting?";

var resourceDocument = "BBC Japan was a general entertainment Channel.\n" +

"Which operated between December 2004 and April 2006.\n" +

"It ceased operations after its Japanese distributor folded.";

QAInput input = new QAInput(question, resourceDocument);

```

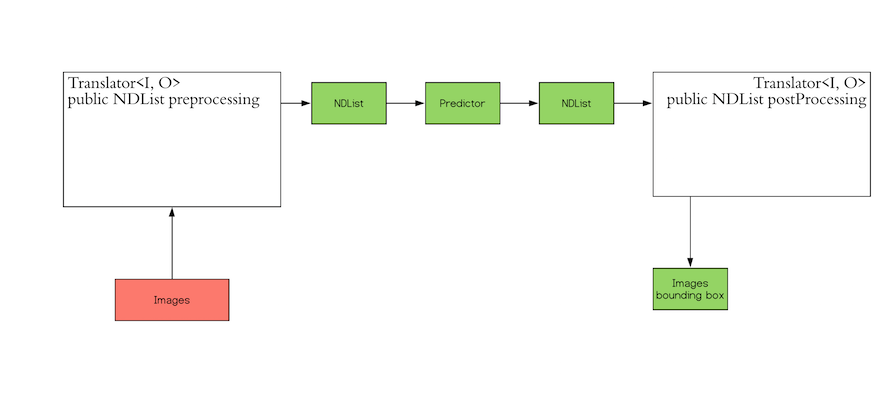

## Dive deep into Translator

Inference in deep learning is the process of predicting the output for a given input based on a pre-defined model.

DJL abstracts away the whole process for ease of use. It can load the model, perform inference on the input, and provide

output. DJL also allows you to provide user-defined inputs. The workflow looks like the following:

The red block ("Images") in the workflow is the input that DJL expects from you. The green block ("Images

bounding box") is the output that you expect. Because DJL does not know which input to expect and which output format that you prefer, DJL provides the `Translator` interface so you can define your own

input and output.

The `Translator` interface encompasses the two white blocks: Pre-processing and Post-processing. The pre-processing

component converts the user-defined input objects into an NDList, so that the `Predictor` in DJL can understand the

input and make its prediction. Similarly, the post-processing block receives an NDList as the output from the

`Predictor`. The post-processing block allows you to convert the output from the `Predictor` to the desired output

format.

### Pre-processing

Now, you need to convert the sentences into tokens. We provide a powerful tool `BertTokenizer` that you can use to convert questions and answers into tokens, and batchify your sequence together. Once you have properly formatted tokens, you can use `Vocabulary` to map your token to BERT index.

The following code block demonstrates tokenizing the question and answer defined earlier into BERT-formatted tokens.

```

var tokenizer = new BertTokenizer();

List<String> tokenQ = tokenizer.tokenize(question.toLowerCase());

List<String> tokenA = tokenizer.tokenize(resourceDocument.toLowerCase());

System.out.println("Question Token: " + tokenQ);

System.out.println("Answer Token: " + tokenA);

```

`BertTokenizer` can also help you batchify questions and resource documents together by calling `encode()`.

The output contains information that BERT ingests.

- getTokens: It returns a list of strings including the question, resource document and special word to let the model tell which part is the question and which part is the resource document. Because PyTorch BERT was trained with varioue sequence length, you don't pad the tokens.

- getTokenTypes: It returns a list of type indices of the word to indicate the location of the resource document. All Questions will be labelled with 0 and all resource documents will be labelled with 1.

[Question tokens...DocResourceTokens...padding tokens] => [000000...11111....0000]

- getValidLength: It returns the actual length of the question and resource document tokens tokens, which are required by MXNet BERT.

- getAttentionMask: It returns the mask for the model to indicate which part should be paid attention to and which part is the padding. It is required by PyTorch BERT.

[Question tokens...DocResourceTokens...padding tokens] => [111111...11111....0000]

PyTorch BERT was trained with varioue sequence length, so we don't need to pad the tokens.

```

BertToken token = tokenizer.encode(question.toLowerCase(), resourceDocument.toLowerCase());

System.out.println("Encoded tokens: " + token.getTokens());

System.out.println("Encoded token type: " + token.getTokenTypes());

System.out.println("Valid length: " + token.getValidLength());

```

Normally, words and sentences are represented as indices instead of tokens for training.

They typically work like a vector in a n-dimensional space. In this case, you need to map them into indices.

DJL provides `Vocabulary` to take care of you vocabulary mapping.

The bert vocab from Huggingface is of the following format.

```

[PAD]

[unused0]

[unused1]

[unused2]

[unused3]

[unused4]

[unused5]

[unused6]

[unused7]

[unused8]

...

```

We provide the `bert-base-uncased-vocab.txt` from our pre-trained BERT for demonstration.

```

DownloadUtils.download("https://djl-ai.s3.amazonaws.com/mlrepo/model/nlp/question_answer/ai/djl/pytorch/bertqa/0.0.1/bert-base-uncased-vocab.txt.gz", "build/pytorch/bertqa/vocab.txt", new ProgressBar());

var path = Paths.get("build/pytorch/bertqa/vocab.txt");

var vocabulary = DefaultVocabulary.builder()

.optMinFrequency(1)

.addFromTextFile(path)

.optUnknownToken("[UNK]")

.build();

```

You can easily convert the token to the index using `vocabulary.getIndex(token)` and the other way around using `vocabulary.getToken(index)`.

```

long index = vocabulary.getIndex("car");

String token = vocabulary.getToken(2482);

System.out.println("The index of the car is " + index);

System.out.println("The token of the index 2482 is " + token);

```

To properly convert them into `float[]` for `NDArray` creation, here is the helper function:

Now that you have everything you need, you can create an NDList and populate all of the inputs you formatted earlier. You're done with pre-processing!

#### Construct `Translator`

You need to do this processing within an implementation of the `Translator` interface. `Translator` is designed to do pre-processing and post-processing. You must define the input and output objects. It contains the following two override classes:

- `public NDList processInput(TranslatorContext ctx, I)`

- `public String processOutput(TranslatorContext ctx, O)`

Every translator takes in input and returns output in the form of generic objects. In this case, the translator takes input in the form of `QAInput` (I) and returns output as a `String` (O). `QAInput` is just an object that holds questions and answer; We have prepared the Input class for you.

Armed with the needed knowledge, you can write an implementation of the `Translator` interface. `BertTranslator` uses the code snippets explained previously to implement the `processInput`method. For more information, see [`NDManager`](https://javadoc.io/static/ai.djl/api/0.16.0/index.html?ai/djl/ndarray/NDManager.html).

```

manager.create(Number[] data, Shape)

manager.create(Number[] data)

```

```

public class BertTranslator implements Translator<QAInput, String> {

private List<String> tokens;

private Vocabulary vocabulary;

private BertTokenizer tokenizer;

@Override

public void prepare(TranslatorContext ctx) throws IOException {

Path path = Paths.get("build/pytorch/bertqa/vocab.txt");

vocabulary = DefaultVocabulary.builder()

.optMinFrequency(1)

.addFromTextFile(path)

.optUnknownToken("[UNK]")

.build();

tokenizer = new BertTokenizer();

}

@Override

public NDList processInput(TranslatorContext ctx, QAInput input) {

BertToken token =

tokenizer.encode(

input.getQuestion().toLowerCase(),

input.getParagraph().toLowerCase());

// get the encoded tokens that would be used in precessOutput

tokens = token.getTokens();

NDManager manager = ctx.getNDManager();

// map the tokens(String) to indices(long)

long[] indices = tokens.stream().mapToLong(vocabulary::getIndex).toArray();

long[] attentionMask = token.getAttentionMask().stream().mapToLong(i -> i).toArray();

long[] tokenType = token.getTokenTypes().stream().mapToLong(i -> i).toArray();

NDArray indicesArray = manager.create(indices);

NDArray attentionMaskArray =

manager.create(attentionMask);

NDArray tokenTypeArray = manager.create(tokenType);

// The order matters

return new NDList(indicesArray, attentionMaskArray, tokenTypeArray);

}

@Override

public String processOutput(TranslatorContext ctx, NDList list) {

NDArray startLogits = list.get(0);

NDArray endLogits = list.get(1);

int startIdx = (int) startLogits.argMax().getLong();

int endIdx = (int) endLogits.argMax().getLong();

return tokens.subList(startIdx, endIdx + 1).toString();

}

@Override

public Batchifier getBatchifier() {

return Batchifier.STACK;

}

}

```

Congrats! You have created your first Translator! We have pre-filled the `processOutput()` function to process the `NDList` and return it in a desired format. `processInput()` and `processOutput()` offer the flexibility to get the predictions from the model in any format you desire.

With the Translator implemented, you need to bring up the predictor that uses your `Translator` to start making predictions. You can find the usage for `Predictor` in the [Predictor Javadoc](https://javadoc.io/static/ai.djl/api/0.16.0/index.html?ai/djl/inference/Predictor.html). Create a translator and use the `question` and `resourceDocument` provided previously.

```

DownloadUtils.download("https://djl-ai.s3.amazonaws.com/mlrepo/model/nlp/question_answer/ai/djl/pytorch/bertqa/0.0.1/trace_bertqa.pt.gz", "build/pytorch/bertqa/bertqa.pt", new ProgressBar());

BertTranslator translator = new BertTranslator();

Criteria<QAInput, String> criteria = Criteria.builder()

.setTypes(QAInput.class, String.class)

.optModelPath(Paths.get("build/pytorch/bertqa/")) // search in local folder

.optTranslator(translator)

.optProgress(new ProgressBar()).build();

ZooModel model = criteria.loadModel();

String predictResult = null;

QAInput input = new QAInput(question, resourceDocument);

// Create a Predictor and use it to predict the output

try (Predictor<QAInput, String> predictor = model.newPredictor(translator)) {

predictResult = predictor.predict(input);

}

System.out.println(question);

System.out.println(predictResult);

```

Based on the input, the following result will be shown:

```

[december, 2004]

```

That's it!

You can try with more questions and answers. Here are the samples:

**Answer Material**

The Normans (Norman: Nourmands; French: Normands; Latin: Normanni) were the people who in the 10th and 11th centuries gave their name to Normandy, a region in France. They were descended from Norse ("Norman" comes from "Norseman") raiders and pirates from Denmark, Iceland and Norway who, under their leader Rollo, agreed to swear fealty to King Charles III of West Francia. Through generations of assimilation and mixing with the native Frankish and Roman-Gaulish populations, their descendants would gradually merge with the Carolingian-based cultures of West Francia. The distinct cultural and ethnic identity of the Normans emerged initially in the first half of the 10th century, and it continued to evolve over the succeeding centuries.

**Question**

Q: When were the Normans in Normandy?

A: 10th and 11th centuries

Q: In what country is Normandy located?

A: france

For the full source code, see the [DJL repo](https://github.com/deepjavalibrary/djl/blob/master/examples/src/main/java/ai/djl/examples/inference/BertQaInference.java) and translator implementation [MXNet](https://github.com/deepjavalibrary/djl/blob/master/engines/mxnet/mxnet-model-zoo/src/main/java/ai/djl/mxnet/zoo/nlp/qa/MxBertQATranslator.java) [PyTorch](https://github.com/deepjavalibrary/djl/blob/master/engines/pytorch/pytorch-model-zoo/src/main/java/ai/djl/pytorch/zoo/nlp/qa/PtBertQATranslator.java).

|

github_jupyter

|

// %mavenRepo snapshots https://oss.sonatype.org/content/repositories/snapshots/

%maven ai.djl:api:0.16.0

%maven ai.djl.pytorch:pytorch-engine:0.16.0

%maven ai.djl.pytorch:pytorch-model-zoo:0.16.0

%maven org.slf4j:slf4j-simple:1.7.32

import java.io.*;

import java.nio.file.*;

import java.util.*;

import java.util.stream.*;

import ai.djl.*;

import ai.djl.ndarray.*;

import ai.djl.ndarray.types.*;

import ai.djl.inference.*;

import ai.djl.translate.*;

import ai.djl.training.util.*;

import ai.djl.repository.zoo.*;

import ai.djl.modality.nlp.*;

import ai.djl.modality.nlp.qa.*;

import ai.djl.modality.nlp.bert.*;

var question = "When did BBC Japan start broadcasting?";

var resourceDocument = "BBC Japan was a general entertainment Channel.\n" +

"Which operated between December 2004 and April 2006.\n" +

"It ceased operations after its Japanese distributor folded.";

QAInput input = new QAInput(question, resourceDocument);

var tokenizer = new BertTokenizer();

List<String> tokenQ = tokenizer.tokenize(question.toLowerCase());

List<String> tokenA = tokenizer.tokenize(resourceDocument.toLowerCase());

System.out.println("Question Token: " + tokenQ);

System.out.println("Answer Token: " + tokenA);

BertToken token = tokenizer.encode(question.toLowerCase(), resourceDocument.toLowerCase());

System.out.println("Encoded tokens: " + token.getTokens());

System.out.println("Encoded token type: " + token.getTokenTypes());

System.out.println("Valid length: " + token.getValidLength());

[PAD]

[unused0]

[unused1]

[unused2]

[unused3]

[unused4]

[unused5]

[unused6]

[unused7]

[unused8]

...

DownloadUtils.download("https://djl-ai.s3.amazonaws.com/mlrepo/model/nlp/question_answer/ai/djl/pytorch/bertqa/0.0.1/bert-base-uncased-vocab.txt.gz", "build/pytorch/bertqa/vocab.txt", new ProgressBar());

var path = Paths.get("build/pytorch/bertqa/vocab.txt");

var vocabulary = DefaultVocabulary.builder()

.optMinFrequency(1)

.addFromTextFile(path)

.optUnknownToken("[UNK]")

.build();

long index = vocabulary.getIndex("car");

String token = vocabulary.getToken(2482);

System.out.println("The index of the car is " + index);

System.out.println("The token of the index 2482 is " + token);

manager.create(Number[] data, Shape)

manager.create(Number[] data)

public class BertTranslator implements Translator<QAInput, String> {

private List<String> tokens;

private Vocabulary vocabulary;

private BertTokenizer tokenizer;

@Override

public void prepare(TranslatorContext ctx) throws IOException {

Path path = Paths.get("build/pytorch/bertqa/vocab.txt");

vocabulary = DefaultVocabulary.builder()

.optMinFrequency(1)

.addFromTextFile(path)

.optUnknownToken("[UNK]")

.build();

tokenizer = new BertTokenizer();

}

@Override

public NDList processInput(TranslatorContext ctx, QAInput input) {

BertToken token =

tokenizer.encode(

input.getQuestion().toLowerCase(),

input.getParagraph().toLowerCase());

// get the encoded tokens that would be used in precessOutput

tokens = token.getTokens();

NDManager manager = ctx.getNDManager();

// map the tokens(String) to indices(long)

long[] indices = tokens.stream().mapToLong(vocabulary::getIndex).toArray();

long[] attentionMask = token.getAttentionMask().stream().mapToLong(i -> i).toArray();

long[] tokenType = token.getTokenTypes().stream().mapToLong(i -> i).toArray();

NDArray indicesArray = manager.create(indices);

NDArray attentionMaskArray =

manager.create(attentionMask);

NDArray tokenTypeArray = manager.create(tokenType);

// The order matters

return new NDList(indicesArray, attentionMaskArray, tokenTypeArray);

}

@Override

public String processOutput(TranslatorContext ctx, NDList list) {

NDArray startLogits = list.get(0);

NDArray endLogits = list.get(1);

int startIdx = (int) startLogits.argMax().getLong();

int endIdx = (int) endLogits.argMax().getLong();

return tokens.subList(startIdx, endIdx + 1).toString();

}

@Override

public Batchifier getBatchifier() {

return Batchifier.STACK;

}

}

DownloadUtils.download("https://djl-ai.s3.amazonaws.com/mlrepo/model/nlp/question_answer/ai/djl/pytorch/bertqa/0.0.1/trace_bertqa.pt.gz", "build/pytorch/bertqa/bertqa.pt", new ProgressBar());

BertTranslator translator = new BertTranslator();

Criteria<QAInput, String> criteria = Criteria.builder()

.setTypes(QAInput.class, String.class)

.optModelPath(Paths.get("build/pytorch/bertqa/")) // search in local folder

.optTranslator(translator)

.optProgress(new ProgressBar()).build();

ZooModel model = criteria.loadModel();

String predictResult = null;

QAInput input = new QAInput(question, resourceDocument);

// Create a Predictor and use it to predict the output

try (Predictor<QAInput, String> predictor = model.newPredictor(translator)) {

predictResult = predictor.predict(input);

}

System.out.println(question);

System.out.println(predictResult);

[december, 2004]

| 0.689306 | 0.956513 |

## 9.2 微调

1. **迁移学习(transfer learning)**

- 该数据集上训练的模型可以抽取较通用的图像特征,从而能够帮助识别边缘、纹理、形状和物体组成

- 迁移学习中的一种常用技术: 微调

2. **微调(fine tuning)**

- 在源数据集(如ImageNet数据集)上预训练一个神经网络模型,即源模型

- 创建一个新的神经网络模型,即目标模型

- 复制了源模型上除了输出层外的所有模型设计及其参数

- 假设这些模型参数包含了源数据集上学习到的知识,且这些知识同样适用于目标数据集

- 还假设源模型的输出层跟源数据集的标签紧密相关,因此在目标模型中不予采用

- 为目标模型添加一个输出大小为目标数据集类别个数的输出层,并随机初始化该层的模型参数

- 目标数据集上训练目标模型。我们将从头训练输出层,而其余层的参数都是基于源模型的参数微调得到的

### 9.2.1 热狗识别

1. torchvision的[models](https://pytorch.org/docs/stable/torchvision/models.html)包提供了常用的预训练模型

2. 更多的预训练模型,可以使用使用[pretrained-models](https://github.com/Cadene/pretrained-models.pytorch)仓库

```

%matplotlib inline

import torch

from torch import nn, optim

from torch.utils.data import Dataset, DataLoader

import torchvision

from torchvision.datasets import ImageFolder

from torchvision import transforms

from torchvision import models

import os

import sys

sys.path.append("..")

import d2lzh_pytorch.utils as d2l

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

```

#### 9.2.1.1 获取数据集

1. 该数据集含有1400张包含热狗的正类图像,和同样多包含其他食品的负类图像

2. 各类的1000张图像被用于训练,其余则用于测试

```

data_dir = '~/Datasets/'

data_dir = os.path.expanduser(data_dir)

os.listdir(os.path.join(data_dir, "hotdog"))

```

`ImageFolder`实例来分别读取训练数据集和测试数据集中的所有图像文件

```

train_imgs = ImageFolder(os.path.join(data_dir, 'hotdog/train'))

test_imgs = ImageFolder(os.path.join(data_dir, 'hotdog/test'))

# 前八张正类

hotdots = [train_imgs[i][0] for i in range(8)]

# 后八张负类

not_hotdogs = [train_imgs[-i - 1][0] for i in range(8)]

d2l.show_images(hotdots + not_hotdogs, 2, 8, scale=1.5)

```

- 训练

- 先从图像中裁剪出随机大小和随机高宽比的一块随机区域,

- 然后将该区域缩放为高和宽均为224像素的输入

- 测试

- 时,我们将图像的高和宽均缩放为256像素

- 然后从中裁剪出高和宽均为224像素的中心区域作为输入

- 标准化

- 对RGB(红、绿、蓝)三个颜色通道的数值做标准化:每个数值减去该通道所有数值的平均值,再除以该通道所有数值的标准差作为输出

***在使用预训练模型时,一定要和预训练时作同样的预处理***

```

All pre-trained models expect input images normalized in the same way, i.e. mini-batches of 3-channel RGB images of shape (3 x H x W), where H and W are expected to be at least 224. The images have to be loaded in to a range of [0, 1] and then normalized using mean = [0.485, 0.456, 0.406] and std = [0.229, 0.224, 0.225]

```

```

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

train_augs = transforms.Compose([

transforms.RandomResizedCrop(size=224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

normalize

])

test_augs = transforms.Compose([

transforms.Resize(size=256),

transforms.CenterCrop(size=224),

transforms.ToTensor(),

normalize

])

```

#### 9.2.1.2 定义和初始化模型

> 不管你是使用的torchvision的models还是pretrained-models.pytorch仓库,默认都会将预训练好的模型参数下载到你的home目录下.cache/torch/checkpoint文件夹。你可以通过修改环境变量`TORCH_MODEL_ZOO`来更改下载目录(windows环境)

> 可直接手动下载,然后放入上面目录中

```

pretrained_net = models.resnet18(pretrained=True)

# 作为一个全连接层,它将ResNet最终的全局平均池化层输出变换成ImageNet数据集上1000类的输出

print(pretrained_net.fc)

# 修改需要的输出类别数

pretrained_net.fc = nn.Linear(512, 2)

print(pretrained_net.fc)

```

1. **此时,pretrained_net的fc层就被随机初始化了,但是其他层依然保存着预训练得到的参数**

2. **由于是在很大的ImageNet数据集上预训练的,所以参数已经足够好,因此一般只需使用较小的学习率来微调这些参数**

3. **而fc中的随机初始化参数一般需要更大的学习率从头训练**

```

# 将fc的学习率设为已经预训练过的部分的10倍

output_params = list(map(id, pretrained_net.fc.parameters()))

feature_params = filter(lambda p: id(p) not in output_params, pretrained_net.parameters())

lr = 0.01

optimizer = optim.SGD([{'params': feature_params},

{'params': pretrained_net.fc.parameters(), 'lr': lr * 10}],

lr=lr, weight_decay=0.001)

```

#### 9.2.1.3 微调模型

```

def train_fine_tuning(net, optimizer, batch_size=8, num_epochs=5):

train_iter = DataLoader(ImageFolder(os.path.join(data_dir, 'hotdog/train'), transform=train_augs),

batch_size, shuffle=True)

test_iter = DataLoader(ImageFolder(os.path.join(data_dir, 'hotdog/test'), transform=test_augs),

batch_size)

loss = torch.nn.CrossEntropyLoss()

d2l.train(train_iter, test_iter, net, loss, optimizer, device, num_epochs)

train_fine_tuning(pretrained_net, optimizer)

```

> 作为对比,我们定义一个相同的模型,但将它的所有模型参数都初始化为随机值。由于整个模型都需要从头训练,我们可以使用较大的学习率

```

scratch_net = models.resnet18(pretrained=False, num_classes=2)

lr = 0.1

optimizer = optim.SGD(scratch_net.parameters(), lr=lr, weight_decay=0.001)

train_fine_tuning(scratch_net, optimizer)

```

|

github_jupyter

|

%matplotlib inline

import torch

from torch import nn, optim

from torch.utils.data import Dataset, DataLoader

import torchvision

from torchvision.datasets import ImageFolder

from torchvision import transforms

from torchvision import models

import os

import sys

sys.path.append("..")

import d2lzh_pytorch.utils as d2l

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

data_dir = '~/Datasets/'

data_dir = os.path.expanduser(data_dir)

os.listdir(os.path.join(data_dir, "hotdog"))

train_imgs = ImageFolder(os.path.join(data_dir, 'hotdog/train'))

test_imgs = ImageFolder(os.path.join(data_dir, 'hotdog/test'))

# 前八张正类

hotdots = [train_imgs[i][0] for i in range(8)]

# 后八张负类

not_hotdogs = [train_imgs[-i - 1][0] for i in range(8)]

d2l.show_images(hotdots + not_hotdogs, 2, 8, scale=1.5)

All pre-trained models expect input images normalized in the same way, i.e. mini-batches of 3-channel RGB images of shape (3 x H x W), where H and W are expected to be at least 224. The images have to be loaded in to a range of [0, 1] and then normalized using mean = [0.485, 0.456, 0.406] and std = [0.229, 0.224, 0.225]

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

train_augs = transforms.Compose([

transforms.RandomResizedCrop(size=224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

normalize

])

test_augs = transforms.Compose([

transforms.Resize(size=256),

transforms.CenterCrop(size=224),

transforms.ToTensor(),

normalize

])

pretrained_net = models.resnet18(pretrained=True)

# 作为一个全连接层,它将ResNet最终的全局平均池化层输出变换成ImageNet数据集上1000类的输出

print(pretrained_net.fc)

# 修改需要的输出类别数

pretrained_net.fc = nn.Linear(512, 2)

print(pretrained_net.fc)

# 将fc的学习率设为已经预训练过的部分的10倍

output_params = list(map(id, pretrained_net.fc.parameters()))

feature_params = filter(lambda p: id(p) not in output_params, pretrained_net.parameters())

lr = 0.01

optimizer = optim.SGD([{'params': feature_params},

{'params': pretrained_net.fc.parameters(), 'lr': lr * 10}],

lr=lr, weight_decay=0.001)

def train_fine_tuning(net, optimizer, batch_size=8, num_epochs=5):

train_iter = DataLoader(ImageFolder(os.path.join(data_dir, 'hotdog/train'), transform=train_augs),

batch_size, shuffle=True)

test_iter = DataLoader(ImageFolder(os.path.join(data_dir, 'hotdog/test'), transform=test_augs),

batch_size)

loss = torch.nn.CrossEntropyLoss()

d2l.train(train_iter, test_iter, net, loss, optimizer, device, num_epochs)

train_fine_tuning(pretrained_net, optimizer)

scratch_net = models.resnet18(pretrained=False, num_classes=2)

lr = 0.1

optimizer = optim.SGD(scratch_net.parameters(), lr=lr, weight_decay=0.001)

train_fine_tuning(scratch_net, optimizer)

| 0.672869 | 0.937268 |

# 宿題2

```

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.cm as cm

from collections import defaultdict

data = np.loadtxt('data/digit_test0.csv', delimiter=',')

data.shape

data[0]

img = data[0].reshape(16,16)

plt.imshow(img, cmap='gray')

plt.show()

digit = np.array([[1]] * 200)

digit.shape

np.array(data, digit)

np.array([data, [[0]]*200])

test = np.array([1,2,2,3,2,4,5,4])

from collections import Counter

c = Counter(test)

c.most_common(1)[0][0]

```

# kNN

http://blog.amedama.jp/entry/2017/03/18/140238

```

import numpy as np

from collections import Counter

class kNN(object):

def __init__(self, k=1):

self._train_data = None

self._target_data = None

self._k = k

def fit(self, train_data, target_data):

self._train_data = train_data

self._target_data = target_data

def predict(self, x):

distances = np.array([np.linalg.norm(p - x) for p in self._train_data])

nearest_indices = distances.argsort()[:self._k]

nearest_labels = self._target_data[nearest_indices]

c = Counter(nearest_labels)

return c.most_common(1)[0][0]

def load_train_data():

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_feature = np.vstack([train_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

train_label = np.hstack([train_label, temp_label])

return train_feature, train_label

def load_test_data():

for i in range(10):

if i==0:

test_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_label = np.array([i]*test_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_feature = np.vstack([test_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

test_label = np.hstack([test_label, temp_label])

return test_feature, test_label

train_feature, train_label = load_train_data()

train_feature.shape

train_label.shape

train_label[1900]

test_feature, test_label = load_test_data()

test_label.shape

model = kNN()

model.fit(train_feature, train_label)

model._train_data.shape

from sklearn.metrics import accuracy_score

predicted_labels = []

for feature in test_feature:

predicted_label = model.predict(feature)

predicted_labels.append(predicted_label)

accuracy_score(test_label, predicted_labels)

len(predicted_labels)

accuracy_score(test_label, predicted_labels)

def calc_accuracy(train_feature, train_label, test_feature, test_label):

model = kNN()

model.fit(train_feature, train_label)

predicted_labels = []

for feature in test_feature:

predicted_label = model.predict(feature)

predicted_labels.append(predicted_label)

return accuracy_score(test_label, predicted_labels)

calc_accuracy(train_feature, train_label, test_feature, test_label)

```

## 高速化

```

import numpy as np

from collections import Counter

def load_train_data():

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_feature = np.vstack([train_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

train_label = np.hstack([train_label, temp_label])

return train_feature, train_label

def load_test_data():

for i in range(10):

if i==0:

test_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_label = np.array([i]*test_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_feature = np.vstack([test_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

test_label = np.hstack([test_label, temp_label])

return test_feature, test_label

train_feature, train_label = load_train_data()

test_feature, test_label = load_test_data()

import numpy as np

from collections import Counter

class kNN(object):

def __init__(self, k=1):

self._train_data = None

self._target_data = None

self._k = k

def fit(self, train_data, target_data):

self._train_data = train_data

self._target_data = target_data

def predict(self, x):

distances = np.array([np.linalg.norm(p - x) for p in self._train_data])

nearest_indices = distances.argsort()[:self._k]

nearest_labels = self._target_data[nearest_indices]

c = Counter(nearest_labels)

return c.most_common(1)[0][0]

predicted_labels

test_feature.shape

predicted_label

```

## ここまでのまとめ

```

import numpy as np

from collections import Counter

from sklearn.metrics import accuracy_score

class kNN(object):

def __init__(self, k=1):

self._train_data = None

self._target_data = None

self._k = k

def fit(self, train_data, target_data):

self._train_data = train_data

self._target_data = target_data

def predict(self, x):

distances = np.array([np.linalg.norm(p - x) for p in self._train_data])

nearest_indices = distances.argsort()[:self._k]

nearest_labels = self._target_data[nearest_indices]

c = Counter(nearest_labels)

return c.most_common(1)[0][0]

def load_train_data():

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_feature = np.vstack([train_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

train_label = np.hstack([train_label, temp_label])

return train_feature, train_label

def load_test_data():

for i in range(10):

if i==0:

test_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_label = np.array([i]*test_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_feature = np.vstack([test_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

test_label = np.hstack([test_label, temp_label])

return test_feature, test_label

def calc_accuracy(train_feature, train_label, test_feature, test_label, k=1):

model = kNN(k)

model.fit(train_feature, train_label)

predicted_labels = []

for feature in test_feature:

predicted_label = model.predict(feature)

predicted_labels.append(predicted_label)

return accuracy_score(test_label, predicted_labels)

train_feature, train_label = load_train_data()

test_feature, test_label = load_test_data()

calc_accuracy(train_feature, train_label, test_feature, test_label, k=1)

calc_accuracy(train_feature, train_label, test_feature, test_label, k=5)

```

# 交差検証

```

n_split = 5

def load_train_data_cv(n_split):

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

group_feature = np.split(train_feature, n_split)

group_label = np.split(train_label, n_split)

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

temp_group_feature = np.split(temp_feature, n_split)

temp_label = np.array([i]*temp_feature.shape[0])

temp_group_label = np.split(temp_label, n_split)

for m in range(n_split):

group_feature[m] = np.vstack([group_feature[m], temp_group_feature[m]])

group_label[m] = np.hstack([group_label[m], temp_group_label[m]])

return group_feature, group_label

group_feature, group_label = load_train_data_cv(5)

len(group_feature)

group_feature[0].shape

group_label[0].shape

group_label[0][999]

group_feature.pop(2)

temp = np.vstack(group_feature)

temp.shape

```

`pop`はよくなさそう

```

temp = group_feature.copy()

temp.pop(2)

temp1 = np.vstack(temp)

print(temp1.shape)

print(len(group_feature))

def cross_validation(n_split=5, params=[1,2,3,4,5,10,20]):

n_params = len(params)

score_list = np.zeros(n_params)

group_feature, group_label = load_train_data_cv(n_split)

for j in range(n_params):

for i in range(n_split):

temp_group_feature = group_feature.copy()

temp_test_feature = temp_group_feature.pop(i)

temp_train_feature = np.vstack(temp_group_feature)

temp_group_label = group_label.copy()

temp_test_label = temp_group_label.pop(i)

temp_train_label = np.hstack(temp_group_label)

score_list[j] += calc_accuracy(temp_train_feature, temp_train_label, temp_test_feature, temp_test_label, k=params[j])

opt_param = params[np.argmax(score_list)]

print(score_list)

return opt_param

cross_validation(n_split=5, params=[1,3,5])

test = np.array([1,2,3,4,5])

np.split(test, 5)

test = [1,2,3,4]

test.pop(2)

test

test = [4.838, 4.837, 4.825]

for i in test:

print(i/5)

```

# まとめ

```

import numpy as np

from collections import Counter

from sklearn.metrics import accuracy_score

class kNN(object):

def __init__(self, k=1):

self._train_data = None

self._target_data = None

self._k = k

def fit(self, train_data, target_data):

self._train_data = train_data

self._target_data = target_data

def predict(self, x):

distances = np.array([np.linalg.norm(p - x) for p in self._train_data])

nearest_indices = distances.argsort()[:self._k]

nearest_labels = self._target_data[nearest_indices]

c = Counter(nearest_labels)

return c.most_common(1)[0][0]

def load_train_data():

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_feature = np.vstack([train_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

train_label = np.hstack([train_label, temp_label])

return train_feature, train_label

def load_test_data():

for i in range(10):

if i==0:

test_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_label = np.array([i]*test_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_feature = np.vstack([test_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

test_label = np.hstack([test_label, temp_label])

return test_feature, test_label

def calc_accuracy(train_feature, train_label, test_feature, test_label, k=1):

model = kNN(k)

model.fit(train_feature, train_label)

predicted_labels = []

for feature in test_feature:

predicted_label = model.predict(feature)

predicted_labels.append(predicted_label)

return accuracy_score(test_label, predicted_labels)

def load_train_data_cv(n_split=5):

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

group_feature = np.split(train_feature, n_split)

group_label = np.split(train_label, n_split)

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

temp_group_feature = np.split(temp_feature, n_split)

temp_label = np.array([i]*temp_feature.shape[0])

temp_group_label = np.split(temp_label, n_split)

for m in range(n_split):

group_feature[m] = np.vstack([group_feature[m], temp_group_feature[m]])

group_label[m] = np.hstack([group_label[m], temp_group_label[m]])

return group_feature, group_label

def cross_validation(n_split=5, params=[1,2,3,4,5,10,20]):

n_params = len(params)

score_list = np.zeros(n_params)

group_feature, group_label = load_train_data_cv(n_split)

for j in range(n_params):

for i in range(n_split):

temp_group_feature = group_feature.copy()

temp_test_feature = temp_group_feature.pop(i)

temp_train_feature = np.vstack(temp_group_feature)

temp_group_label = group_label.copy()

temp_test_label = temp_group_label.pop(i)

temp_train_label = np.hstack(temp_group_label)

score_list[j] += calc_accuracy(temp_train_feature, temp_train_label, temp_test_feature, temp_test_label, k=params[j])/n_split

opt_param = params[np.argmax(score_list)]

print(score_list)

return opt_param

def main():

k_opt = cross_validation(n_split=5, params=[1,2,3,4,5,10,20])

train_feature, train_label = load_train_data()

test_feature, test_label = load_test_data()

score = calc_accuracy(train_feature, train_label, test_feature, test_label, k=k_opt)

print(score)

main()

```

|

github_jupyter

|

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.cm as cm

from collections import defaultdict

data = np.loadtxt('data/digit_test0.csv', delimiter=',')

data.shape

data[0]

img = data[0].reshape(16,16)

plt.imshow(img, cmap='gray')

plt.show()

digit = np.array([[1]] * 200)

digit.shape

np.array(data, digit)

np.array([data, [[0]]*200])

test = np.array([1,2,2,3,2,4,5,4])

from collections import Counter

c = Counter(test)

c.most_common(1)[0][0]

import numpy as np

from collections import Counter

class kNN(object):

def __init__(self, k=1):

self._train_data = None

self._target_data = None

self._k = k

def fit(self, train_data, target_data):

self._train_data = train_data

self._target_data = target_data

def predict(self, x):

distances = np.array([np.linalg.norm(p - x) for p in self._train_data])

nearest_indices = distances.argsort()[:self._k]

nearest_labels = self._target_data[nearest_indices]

c = Counter(nearest_labels)

return c.most_common(1)[0][0]

def load_train_data():

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_feature = np.vstack([train_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

train_label = np.hstack([train_label, temp_label])

return train_feature, train_label

def load_test_data():

for i in range(10):

if i==0:

test_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_label = np.array([i]*test_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_feature = np.vstack([test_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

test_label = np.hstack([test_label, temp_label])

return test_feature, test_label

train_feature, train_label = load_train_data()

train_feature.shape

train_label.shape

train_label[1900]

test_feature, test_label = load_test_data()

test_label.shape

model = kNN()

model.fit(train_feature, train_label)

model._train_data.shape

from sklearn.metrics import accuracy_score

predicted_labels = []

for feature in test_feature:

predicted_label = model.predict(feature)

predicted_labels.append(predicted_label)

accuracy_score(test_label, predicted_labels)

len(predicted_labels)

accuracy_score(test_label, predicted_labels)

def calc_accuracy(train_feature, train_label, test_feature, test_label):

model = kNN()

model.fit(train_feature, train_label)

predicted_labels = []

for feature in test_feature:

predicted_label = model.predict(feature)

predicted_labels.append(predicted_label)

return accuracy_score(test_label, predicted_labels)

calc_accuracy(train_feature, train_label, test_feature, test_label)

import numpy as np

from collections import Counter

def load_train_data():

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_feature = np.vstack([train_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

train_label = np.hstack([train_label, temp_label])

return train_feature, train_label

def load_test_data():

for i in range(10):

if i==0:

test_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_label = np.array([i]*test_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_feature = np.vstack([test_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

test_label = np.hstack([test_label, temp_label])

return test_feature, test_label

train_feature, train_label = load_train_data()

test_feature, test_label = load_test_data()

import numpy as np

from collections import Counter

class kNN(object):

def __init__(self, k=1):

self._train_data = None

self._target_data = None

self._k = k

def fit(self, train_data, target_data):

self._train_data = train_data

self._target_data = target_data

def predict(self, x):

distances = np.array([np.linalg.norm(p - x) for p in self._train_data])

nearest_indices = distances.argsort()[:self._k]

nearest_labels = self._target_data[nearest_indices]

c = Counter(nearest_labels)

return c.most_common(1)[0][0]

predicted_labels

test_feature.shape

predicted_label

import numpy as np

from collections import Counter

from sklearn.metrics import accuracy_score

class kNN(object):

def __init__(self, k=1):

self._train_data = None

self._target_data = None

self._k = k

def fit(self, train_data, target_data):

self._train_data = train_data

self._target_data = target_data

def predict(self, x):

distances = np.array([np.linalg.norm(p - x) for p in self._train_data])

nearest_indices = distances.argsort()[:self._k]

nearest_labels = self._target_data[nearest_indices]

c = Counter(nearest_labels)

return c.most_common(1)[0][0]

def load_train_data():

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_feature = np.vstack([train_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

train_label = np.hstack([train_label, temp_label])

return train_feature, train_label

def load_test_data():

for i in range(10):

if i==0:

test_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_label = np.array([i]*test_feature.shape[0])

else:

temp_feature = np.loadtxt('data/digit_test{}.csv'.format(i), delimiter=',')

test_feature = np.vstack([test_feature, temp_feature])

temp_label = np.array([i]*temp_feature.shape[0])

test_label = np.hstack([test_label, temp_label])

return test_feature, test_label

def calc_accuracy(train_feature, train_label, test_feature, test_label, k=1):

model = kNN(k)

model.fit(train_feature, train_label)

predicted_labels = []

for feature in test_feature:

predicted_label = model.predict(feature)

predicted_labels.append(predicted_label)

return accuracy_score(test_label, predicted_labels)

train_feature, train_label = load_train_data()

test_feature, test_label = load_test_data()

calc_accuracy(train_feature, train_label, test_feature, test_label, k=1)

calc_accuracy(train_feature, train_label, test_feature, test_label, k=5)

n_split = 5

def load_train_data_cv(n_split):

for i in range(10):

if i==0:

train_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

train_label = np.array([i]*train_feature.shape[0])

group_feature = np.split(train_feature, n_split)

group_label = np.split(train_label, n_split)

else:

temp_feature = np.loadtxt('data/digit_train{}.csv'.format(i), delimiter=',')

temp_group_feature = np.split(temp_feature, n_split)

temp_label = np.array([i]*temp_feature.shape[0])

temp_group_label = np.split(temp_label, n_split)

for m in range(n_split):

group_feature[m] = np.vstack([group_feature[m], temp_group_feature[m]])

group_label[m] = np.hstack([group_label[m], temp_group_label[m]])

return group_feature, group_label

group_feature, group_label = load_train_data_cv(5)

len(group_feature)

group_feature[0].shape

group_label[0].shape

group_label[0][999]

group_feature.pop(2)

temp = np.vstack(group_feature)

temp.shape